Abstract

Advancements in satellite sensor technology have enabled access to diverse remote sensing (RS) data from multiple platforms. Hyperspectral Image (HSI) data offers rich spectral detail for material identification, while LiDAR captures high-resolution 3D structural information, making the two modalities naturally complementary. By fusing HSI and LiDAR, we can mitigate the limitations of each and improve tasks like land cover classification, vegetation analysis, and terrain mapping through more robust spectral–spatial feature representation. However, traditional multi-scale feature fusion models often struggle to align features effectively, which can lead to redundant outputs and diminished spatial clarity. To address these issues, we propose the Cross Attention Bridge for HSI and LiDAR (CAB-HL), a novel dual-path framework that employs a multi-stage cross-attention mechanism to guide the interaction between spectral and spatial features. In CAB-HL, features from each modality are refined across three progressive stages using cross-attention modules, which enhance contextual alignment while preserving the distinctive characteristics of each modality. These fused representations are subsequently integrated and passed through a lightweight classification head. Extensive experiments on three benchmark RS datasets demonstrate that CAB-HL consistently outperforms existing state-of-the-art models, confirming its effectiveness in learning deep joint representations for multimodal classification tasks.

1. Introduction

In recent years, the integration of hyperspectral and LiDAR data has become critical for improving land cover classification. Recent innovations, such as joint convolutional networks, have further refined this process, offering significant improvements in classification accuracy and robustness. This multimodal fusion has demonstrated superior performance in applications such as land cover classification and vegetation analysis, particularly by enhancing the ability to distinguish between spectrally similar land cover classes [1]. Meanwhile, with the development of sensor technology, remote sensing imaging methods have become increasingly diverse [2]. Despite the availability of extensive multi-source data, the data from each individual source capture only one or a few aspects of the observed scene, restricting their ability to fully characterize complex terrestrial scenes [3].

Combining HSI and LiDAR data yields enhanced features that are crucial for remote sensing tasks. Deep learning architectures for multimodal fusion have successfully fused spectral and spatial features, increasing the model's robustness to environmental changes and providing accurate and valuable information. The joint use of HSI and LiDAR is therefore steadily becoming a foundation for addressing multidimensional problems in Earth observation.

Different deep learning models have been developed to exploit the characteristics of hyperspectral image (HSI) and LiDAR data for classification. One is the Dual-Coupled CNN-GCN Structure (DCCG), in which a CNN extracts spatial features; this two-way coupling structure captures both spatial and structural information and can classify complex scenes with high precision [4]. Another well-known model is HSLiNets, which uses bidirectional reversed CNN pathways in a dual linear fused space framework to overcome the high dimensionality and redundancy of HSI data and improve classification accuracy [5]. HSI feature extraction modules fall primarily into four groups: convolutional, recurrent, transformer-based, and attention-based methods. Numerous CNN methods employ 2D convolution to acquire local contextual information from pixel-centric data cubes [6,7]. However, these methods pay insufficient attention to spectral signatures and fail to consider the joint spatial–spectral information in HSI. Some researchers employ 2D–3D convolutions to enhance feature extraction modules, producing integrated spatial–spectral feature embeddings that yield promising results in practical applications [8,9]. The Cross-Transformer Feature Fusion Network has two branches that combine convolutional operators for spatial feature extraction with a Transformer for capturing feature dependencies; its cross attention extends the feature interaction between HSI and LiDAR data, yielding better classification results [10]. Finally, EndNet uses an encoder-decoder architecture to decompose the spectral and elevation information from HSI and LiDAR data, enabling the framework to fuse them effectively and achieve better classification [11].
Taken together, these models mark a clear advance in remote sensing classification methodology. Advances in deep learning (DL) offer new ways to overcome the limited feature extraction efficacy of conventional methods. CNNs have achieved considerable success in HSI and LiDAR classification within standard deep learning architectures [12]. Ref. [13] developed a pair of interconnected CNNs. The Two-Branch Multiscale Spectral–Spatial Feature Extraction Network (TBMSSN) shows significant improvements in OA, AA, and Kappa coefficient over existing methods, indicating its effectiveness in hyperspectral image classification [14].

Although several innovative methods have been proposed for HSI and LiDAR fusion and classification, feature extraction is still insufficient. These limitations can be summarized in two parts.

First, CNNs excel at extracting spatial structure and contextual information from high-resolution images, making them a popular backbone design. However, CNNs typically use single-scale patches as inputs and fixed convolutional kernels for feature extraction, whereas different land cover types require different input scales depending on their surrounding distributions. A fixed input scale therefore cannot meet the practical needs of diverse land cover types or support fine-grained classification. Second, a multiscale input can extract land cover information at distinct scales, producing complementary joint features that improve classification accuracy. However, integrating multiscale data creates two obstacles. On the one hand, the contributions of different scales vary, so their weights must be calibrated to improve classification performance. On the other hand, concatenating multiscale features may worsen the dimensionality problem, resulting in poor classification performance. The main contributions of this paper are summarized as follows:

1. Based on cross-attention (CA), we propose the cross-attention bridge (CAB), which exploits the flexibility of CA to combine HSI and LiDAR features, which have different structures, and to adapt the feature fusion dynamically.

2. Because data with different structures can be combined in this way, we propose a multistage fusion module that exploits the complementary advantages of the two modalities by combining features from different semantic levels. The cross-attention module is designed to fuse features from different modalities.

3. Three publicly available datasets are used to evaluate the proposed method, which is compared against many state-of-the-art (SOTA) HSI and LiDAR classification methods. The experimental results demonstrate that CAB-HL performs outstandingly, achieving an accuracy of 99.33% on the Houston2013 dataset and surpassing other sophisticated algorithms by at least 2.5%.

The rest of the paper is organized as follows: Section 2 introduces related work on multistage feature extraction, attention mechanisms, and hyperspectral and LiDAR fusion classification. Section 3 presents the details of the proposed network. Section 4 provides the experimental results. Section 5 details an ablation study. Section 6 concludes this article and outlines future work.

2. Related Work

2.1. Multistage Feature Extraction

Multistage feature extraction allows for a more accurate representation of land cover types, making it a suitable choice for our framework, which integrates both HSI and LiDAR data. Feature extraction and representation, particularly in deep learning, have been studied extensively as the fundamental stage of most computer vision and multimedia processing applications. Effective parameter training in subsequent networks depends especially on the capacity to extract high-quality features [15]. Extracting features at several scales captures distinct information and supports diverse tasks: smaller-scale features yield more localized information, whereas larger scales capture broader spatial context. Multiscale features can therefore describe visual content in more detail and produce better outcomes [16], and multiscale feature extraction has proven highly effective in capturing both fine-grained and broader contextual features from remote sensing data [17].

In remote sensing image processing, prevalent issues include spectral similarity across land cover categories, interleaved edges in complicated scenes, and many singular points within mixed vegetation. Hence, efficiently extracting significant land cover attributes is crucial to the accuracy of the interpretation results. Numerous studies on RS image processing and analysis employ multiscale features: ref. [17] proposed a multiscale spectral–spatial cross-extraction network (MSCEN) for HS image classification; ref. [18] studied hyperspectral image denoising via multiscale adaptive fusion networks (MAFNets); and ref. [19] proposed MashFormer, a multiscale sensing-integrated hybrid detector combining CNN and Transformer to enhance the characterization capability in complex background scenes, which improves the detection of targets with multiscale features. Collaborative classification based on multiscale features has also attracted considerable attention in recent years: ref. [20] proposed a multiscale deep neural network that employs a hierarchical residual architecture integrated with self-calibrated convolution to extract features from diverse receptive fields, thereby increasing the model's capacity to represent multimodal data; ref. [21] proposed Glt-Net, which extracts multiscale local spatial features, performs an adaptive linear weighted fusion of multimodal features, and additionally combines the multiscale features with the spectral features of HS data; and ref. [22] proposed a multiscale pseudo-Siamese network with an attention mechanism (MA-PSNet), which includes a multiscale feature learning module to comprehensively extract features at various scales.

Despite the complementary advantages of multiscale feature input over single-scale features, many challenges persist. Different land cover categories have different scale requirements, so the contribution of multiscale features to classification performance is not uniform. Consequently, the weight of each scale must be calibrated, a laborious and inherently imprecise operation. Moreover, simply cascading multimodal features from various scales may intensify high-dimensional issues, and high-dimensional data can cause the curse of dimensionality when labeled data are scarce, potentially compromising the final classification accuracy of multimodal data. Selecting suitable scale features and using multiscale information without excessively increasing dimensionality are therefore critical challenges.
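To make the dimensionality argument concrete, the following minimal NumPy sketch compares plain concatenation with a softmax-weighted sum over scales. The three 64-dimensional per-scale features and the scale logits are hypothetical values chosen for illustration; in a trained network the logits would be learnable parameters. This is a generic illustration of scale weighting, not the fusion scheme of any cited work or of CAB-HL.

```python
import numpy as np

def concat_fusion(features):
    """Concatenate per-scale feature vectors; the output length grows with the number of scales."""
    return np.concatenate(features, axis=-1)

def weighted_fusion(features, scale_logits):
    """Fuse per-scale features with softmax-normalized scale weights.

    scale_logits stands in for hypothetical learnable parameters, one per scale.
    """
    w = np.exp(scale_logits - np.max(scale_logits))
    w = w / w.sum()                                   # weights are positive and sum to 1
    stacked = np.stack(features, axis=0)              # (num_scales, dim)
    return np.tensordot(w, stacked, axes=1), w        # (dim,), (num_scales,)

rng = np.random.default_rng(0)
feats = [rng.standard_normal(64) for _ in range(3)]  # three hypothetical scales, 64-dim each
print(concat_fusion(feats).shape)                     # (192,)
fused, weights = weighted_fusion(feats, np.array([0.5, 1.0, -0.2]))
print(fused.shape)                                    # (64,)
```

Concatenation grows linearly with the number of scales, while the weighted sum keeps the feature size fixed and exposes each scale's contribution as an explicit weight.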

2.2. Attention Mechanism

The attention mechanism (AM) [23] has been studied in neuroscience for decades. It is an important part of human vision and has become a research hotspot in recent years [24]. The human visual system does not perceive external objects in their entirety; instead, it selectively focuses on significant elements as needed and then synthesizes these components into a comprehensive impression of the observed entities. The AM is significant because it enhances the performance and accuracy of deep neural networks (DNNs) [25]. It assigns different weights to different parts of each input, enabling the model to concentrate on the most important content and thus make more precise decisions. At the same time, AM adds relatively little storage and computational overhead, which is a major reason for its widespread use [26]. The AM has been extensively used in visual tasks to enhance features; for example, ref. [27] proposes a hierarchical CNN and transformer architecture for the joint classification of hyperspectral imagery and LiDAR data. Further attention approaches are described in [28].

The AM has been widely applied in visual tasks because it enhances the network's ability to perceive useful information and focus on the pivotal parts of the features [29]. Woo et al. [30] proposed the convolutional block attention module (CBAM), which enhances features in both the channel and spatial domains. Dosovitskiy et al. [31] presented the Vision Transformer (ViT), which applies the self-attention mechanism of the traditional transformer to extract image features and achieves results comparable to those of CNNs. The success of AM in computer vision has introduced new concepts to RS image processing. The end-to-end residual spectral–spatial attention network (RSSAN) proposed by Zhu et al. [32] uses CBAM to identify the spectral features relevant to target pixels and assign them different weights, yielding more discriminative features and improved classification accuracy. Wang et al. [33] proposed a full-scale linked U-Net based on spatial–spectral joint perceptual attention for fusing HS and multispectral images.
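As an illustration of how attention reweights features, here is a minimal NumPy sketch of the channel branch of a CBAM-style module (the spatial branch is omitted). Average- and max-pooled channel descriptors pass through a shared two-layer MLP and a sigmoid to give per-channel weights. The channel count, reduction ratio, and random weights are hypothetical; a real module would learn the MLP weights.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def shared_mlp(v, w1, w2):
    """Two-layer MLP shared by both pooled descriptors: reduce, ReLU, expand."""
    return w2 @ np.maximum(w1 @ v, 0.0)

def channel_attention(x, w1, w2):
    """CBAM-style channel attention for a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r), where r is the reduction ratio.
    Returns the reweighted map and the per-channel weights in (0, 1).
    """
    avg_desc = x.mean(axis=(1, 2))                    # global average pooling -> (C,)
    max_desc = x.max(axis=(1, 2))                     # global max pooling -> (C,)
    weights = sigmoid(shared_mlp(avg_desc, w1, w2) + shared_mlp(max_desc, w1, w2))
    return x * weights[:, None, None], weights

rng = np.random.default_rng(0)
C, r = 16, 4                                          # hypothetical channels and reduction ratio
x = rng.standard_normal((C, 8, 8))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y, a = channel_attention(x, w1, w2)
print(y.shape)                                        # (16, 8, 8)
```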

2.3. Hyperspectral and LiDAR Fusion Classification

The integration of LiDAR and hyperspectral data has advanced considerably with deep learning models, such as CNNs, that effectively extract spatial and spectral features [34]. Furthermore, ref. [14] introduced a two-branch multiscale network that enhances spectral–spatial feature extraction, providing a solid foundation for multimodal classification tasks. In recent years, many researchers have combined HSI with LiDAR images for classification. Typical networks are the dual-branch CNN [34] and HRWN (hierarchical random walk network). The former uses two branches to extract HSI features and LiDAR image features, respectively. Specifically, 2D and 1D convolution structures are applied in HSI feature extraction to capture spatial and spectral features simultaneously, and a cascade block is used in LiDAR feature extraction for feature reuse. Finally, the features are stacked and passed to the classifier. Building on the two-branch network, HRWN applies a pixel affinity approach to the LiDAR branch. In addition, a hierarchical random walk module is designed in the classifier to fuse the features of different sources and obtain better classification results. Moreover, FusAtNet [35] applies the attention mechanism to land-cover classification: self-attention is used to extract HSI and LiDAR features, and cross-attention then links the multimodal data. The coupled CNN [36] also achieves satisfactory results. It shares parameters when extracting features from different sources, greatly reducing the number of parameters, and uses a decision fusion module to better adapt to the features of multisource data. Different from the above methods, the proposed method adopts a multistage cross-attention fusion approach and achieves better classification performance.

3. Methodology

3.1. Overall Architecture

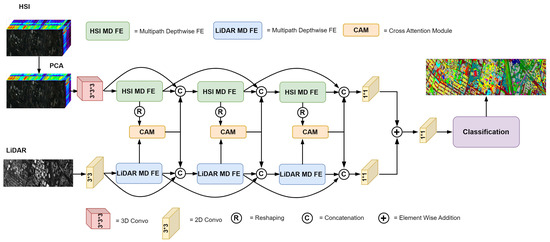

The overall architecture of the proposed CAB-HL model is shown in Figure 1. The framework uses HSI and LiDAR data jointly for classification in a dual-modality manner. The HSI data are first reduced with principal component analysis (PCA) into a compact data cube, which is fed through multipath 3D convolutional layers to extract spectral–spatial features. To extract spatial features effectively, the LiDAR data are passed through multipath depthwise separable 2D convolutional layers. The extracted features from both modalities are then fused by the cross-attention module (CAM) in two stages to enable feature matching and interaction. Finally, the fused features are classified to produce land-cover maps. In this way, the model exploits the synergy between the two modalities, combining the complementary spectral–spatial features of HSI with the spatial features of LiDAR to achieve robust classification. The pipeline consists of the following steps:

Figure 1.

Architecture of the proposed cross-attention bridge for HSI and LiDAR (CAB-HL) model.

- HSI Feature Extraction: A multipath 3D convolutional block is used to extract spectral–spatial features from the HSI data.

- LiDAR Feature Extraction: A multipath depthwise 2D convolutional block processes the LiDAR data to extract spatial features at multiple scales.

- Cross-Attention Fusion: The extracted features from HSI and LiDAR are fused using a cross-attention mechanism in two stages.

- Classification: The fused features are passed through fully connected layers and a softmax activation function to generate class predictions.
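The PCA step at the start of the HSI branch can be sketched as follows. This minimal NumPy version assumes an (H, W, B) cube layout and treats pixels as samples and bands as features; the toy cube and the number of retained components are hypothetical choices (the effect of the number of components on OA is studied in Section 4.4).

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Reduce the spectral dimension of an (H, W, B) hyperspectral cube with PCA.

    Pixels are samples and bands are features. Returns the (H, W, n_components)
    cube and the fraction of variance kept by the retained components.
    """
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b).astype(np.float64)   # (H*W, B), a copy of the data
    pixels -= pixels.mean(axis=0)                     # center each band
    # Rows of vt are the principal axes of the centered pixel matrix.
    _, s, vt = np.linalg.svd(pixels, full_matrices=False)
    reduced = pixels @ vt[:n_components].T            # project onto the leading axes
    kept = (s[:n_components] ** 2).sum() / (s ** 2).sum()
    return reduced.reshape(h, w, n_components), kept

cube = np.random.default_rng(0).standard_normal((20, 30, 144))  # toy cube with 144 bands
reduced, kept = pca_reduce(cube, 30)                  # 30 components: a hypothetical choice
print(reduced.shape)                                  # (20, 30, 30)
```

The projected components are mutually uncorrelated, which is what makes the reduced cube a compact input for the 3D convolutional branch.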

3.2. Multipath Convolutional Blocks (CAB-HL)

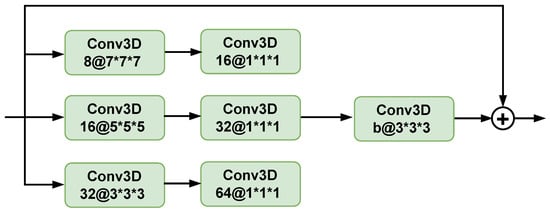

The HSI feature extraction module employs multipath 3D convolutional layers to extract spectral–spatial features at multiple scales, as illustrated in Figure 2. The block uses three parallel pathways to capture multi-scale spectral–spatial characteristics. Each pathway begins with a Conv3D layer with a different kernel size, generating feature maps with 8, 16, and 32 channels, respectively. Further Conv3D layers then refine these features, increasing the channels to 16, 32, and 64 for richer feature representations. The outputs of all pathways are concatenated and integrated by a final Conv3D layer, producing an output with b channels. A skip connection adds the block's input to this output element-wise, facilitating robust spectral–spatial feature learning and fast gradient propagation. The methodology includes the following:

Figure 2.

Structure of the multipath 3D convolutional block for HSI feature extraction in the CAB-HL model.

- Multi-Scale Convolutions: Different kernel sizes are applied to capture features at varying scales.

- Channel Refinement: Pointwise convolutions refine the extracted features by adjusting the number of channels.

- Feature Fusion and Skip Connections: The refined features are concatenated and passed through another 3D convolutional layer. A skip connection adds the input back to the processed features.
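The multipath 3D block described above can be sketched in NumPy as follows. The channel widths (8, 16, 32, refined to 16, 32, 64), the concatenation, and the skip connection follow the text; the kernel sizes (3, 5, 7), the 1 x 1 x 1 refinement and fusion kernels, the ReLU activations, and the random weights are hypothetical assumptions, since the kernel sizes are not given here. The naive convolution loops over kernel offsets and is written for clarity, not speed.

```python
import numpy as np

def conv3d_same(x, w):
    """Naive stride-1 3D cross-correlation with zero 'same' padding (odd kernels).

    x: (C_in, D, H, W); w: (C_out, C_in, kd, kh, kw) -> (C_out, D, H, W).
    """
    c_out, _, kd, kh, kw = w.shape
    _, d, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (kd // 2,) * 2, (kh // 2,) * 2, (kw // 2,) * 2))
    out = np.zeros((c_out, d, h, wd))
    for i in range(kd):
        for j in range(kh):
            for k in range(kw):
                window = xp[:, i:i + d, j:j + h, k:k + wd]
                out += np.einsum("oc,cdhw->odhw", w[:, :, i, j, k], window)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def multipath_3d_block(x, kernel_sizes=(3, 5, 7), widths=(8, 16, 32),
                       refined=(16, 32, 64), seed=0):
    """Three parallel paths (k x k x k conv, then 1 x 1 x 1 refinement), concatenation,
    a 1 x 1 x 1 fusion conv back to the input's b channels, and a skip connection.
    Kernel sizes, activations, and random weights are hypothetical placeholders.
    """
    rng = np.random.default_rng(seed)
    b = x.shape[0]
    paths = []
    for k, c_mid, c_out in zip(kernel_sizes, widths, refined):
        w_multi = rng.standard_normal((c_mid, b, k, k, k)) * 0.05
        w_refine = rng.standard_normal((c_out, c_mid, 1, 1, 1)) * 0.05
        paths.append(relu(conv3d_same(relu(conv3d_same(x, w_multi)), w_refine)))
    merged = np.concatenate(paths, axis=0)            # 16 + 32 + 64 = 112 channels
    w_fuse = rng.standard_normal((b, merged.shape[0], 1, 1, 1)) * 0.05
    return conv3d_same(merged, w_fuse) + x            # element-wise skip connection

x = np.random.default_rng(1).standard_normal((4, 6, 7, 7))  # toy input: b=4, D=6, 7x7 patch
print(multipath_3d_block(x).shape)                    # (4, 6, 7, 7)
```

Because the fusion layer maps back to the input's b channels, the element-wise skip connection is shape-compatible.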

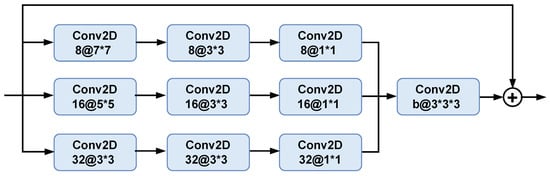

The LiDAR feature extraction module uses multipath depthwise convolutional blocks, as depicted in Figure 3. The block consists of three parallel paths whose Conv2D layers use different kernel sizes to extract multi-scale spatial features, producing feature maps with 8, 16, and 32 channels, respectively. Each pathway then applies a depthwise separable Conv2D layer and a further Conv2D layer to improve computational efficiency and refine the features. The outputs are concatenated and integrated by a Conv2D layer, followed by a skip connection that enhances feature learning and gradient propagation. The methodology includes the following:

Figure 3.

Structure of the multipath depthwise convolutional block for LiDAR feature extraction in the CAB-HL model.

- Multi-Scale Convolutions: Convolutions with different kernel sizes extract features at different spatial scales.

- Depthwise Separable Convolutions: Depthwise convolutions followed by pointwise convolutions refine the spatial features.

- Feature Fusion and Skip Connections: The refined features are concatenated and processed further, with the input added back to preserve the original information.
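The following NumPy sketch illustrates the depthwise separable convolution used in the LiDAR branch and why it is cheaper than a standard convolution. The path widths (8, 16, 32), the concatenation, and the skip connection follow the text; the kernel sizes (3, 5, 7), the 3 x 3 depthwise kernel, the 1 x 1 fusion layer, the ReLU activations, and the random weights are hypothetical assumptions. For brevity, the trailing Conv2D in each pathway is folded into the pointwise step here, which is also an assumption.

```python
import numpy as np

def conv2d_same(x, w):
    """Naive stride-1 2D cross-correlation, zero 'same' padding. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    _, _, kh, kw = w.shape
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (kh // 2,) * 2, (kw // 2,) * 2))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(kh):
        for j in range(kw):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def depthwise_separable(x, w_dw, w_pw):
    """Depthwise k x k conv (one filter per channel, w_dw: (C, k, k)) followed by a
    pointwise 1 x 1 conv that mixes channels (w_pw: (C_out, C))."""
    _, kh, kw = w_dw.shape
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (kh // 2,) * 2, (kw // 2,) * 2))
    dw = np.zeros((x.shape[0], h, wd))
    for i in range(kh):
        for j in range(kw):
            dw += w_dw[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return np.einsum("oc,chw->ohw", w_pw, dw)

def param_counts(c_in, c_out, k):
    """Weights (no biases) of a standard k x k conv vs. a depthwise separable one."""
    return c_in * c_out * k * k, c_in * k * k + c_in * c_out

def multipath_dw_block(x, kernel_sizes=(3, 5, 7), widths=(8, 16, 32), seed=0):
    """Three paths (k x k conv, then 3 x 3 depthwise separable conv), concatenation,
    a 1 x 1 fusion conv back to the input channels, and a skip connection.
    Kernel sizes and random weights are hypothetical placeholders."""
    rng = np.random.default_rng(seed)
    c = x.shape[0]
    paths = []
    for k, width in zip(kernel_sizes, widths):
        f = np.maximum(conv2d_same(x, rng.standard_normal((width, c, k, k)) * 0.1), 0.0)
        f = depthwise_separable(f, rng.standard_normal((width, 3, 3)) * 0.1,
                                rng.standard_normal((width, width)) * 0.1)
        paths.append(np.maximum(f, 0.0))
    merged = np.concatenate(paths, axis=0)                     # 8 + 16 + 32 = 56 channels
    w_fuse = rng.standard_normal((c, merged.shape[0], 1, 1)) * 0.1
    return conv2d_same(merged, w_fuse) + x                     # skip connection

dsm = np.random.default_rng(1).standard_normal((1, 11, 11))    # toy single-band LiDAR DSM patch
print(multipath_dw_block(dsm).shape)                           # (1, 11, 11)
print(param_counts(32, 32, 3))                                 # (9216, 1312)
```

For a 3 x 3 layer with 32 input and 32 output channels, the separable form needs roughly one seventh of the weights, which is the efficiency gain the text refers to.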

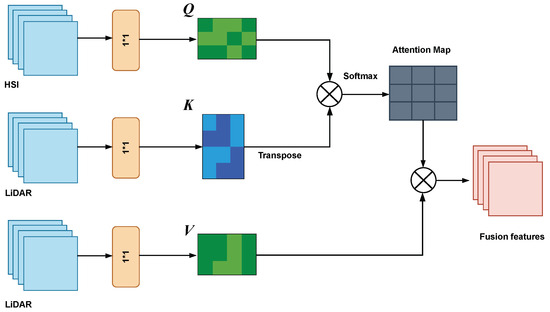

3.3. Cross-Attention Mechanism

The CAM serves as the core of the proposed CAB-HL framework, facilitating effective feature fusion between HSI data and LiDAR data. HSI data, which are inherently 3D, provide detailed spectral–spatial information, while LiDAR data, which are primarily 2D, focus on spatial elevation details. CAM is designed to align and integrate these heterogeneous features by dynamically adjusting attention weights between the modalities, ensuring effective information exchange.

The architecture of CAM, shown in Figure 4, aligns and fuses the HSI and LiDAR features in two stages. For each stage k, the fusion process is defined as follows:

Figure 4.

Cross-attention module.

We first define the inputs and flatten their spatial dimensions into token sequences. The HSI input tensor is denoted as $X_H \in \mathbb{R}^{B \times C_H \times H \times W}$, and the LiDAR input tensor is denoted as $X_L \in \mathbb{R}^{B \times C_L \times H \times W}$. Flattening gives

$$\tilde{X}_H \in \mathbb{R}^{B \times N \times C_H}, \qquad \tilde{X}_L \in \mathbb{R}^{B \times N \times C_L}, \qquad N = H \times W.$$

Here, $B$ is the batch size, $C_H$ and $C_L$ are the channel dimensions of the respective modalities, $H$ and $W$ are the spatial dimensions, and $N$ is the sequence length. In the computational framework of CAM, the query is the core information for generating attention scores. When processing joint HSI and LiDAR data, we posit that the spectral features derived from HSI are more discriminative than spatial and elevation features and therefore warrant higher priority. Spectral information captures fine-grained physical properties and spectral responses of ground objects, making it especially effective for discriminative tasks such as land-cover classification and change detection. In contrast, while the spatial and elevation data provided by LiDAR are critical for characterizing object morphology and structural attributes, their discriminative capacity remains relatively limited. We therefore project $\tilde{X}_H$ to queries and $\tilde{X}_L$ to keys and values:

$$Q = \tilde{X}_H W^Q, \qquad K = \tilde{X}_L W^K, \qquad V = \tilde{X}_L W^V,$$

$$\mathrm{head}_i = \operatorname{softmax}\!\left(\frac{Q_i K_i^{\top}}{\sqrt{d}}\right) V_i, \qquad \mathrm{CAM}(X_H, X_L) = \operatorname{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O,$$

where $W^Q$, $W^K$, and $W^V$ are the projection matrices for the three spaces, respectively. $Q$ represents the queries, $K$ the keys, and $V$ the values; $Q_i$, $K_i$, and $V_i$ are their slices for the $i$-th head, $d$ is the per-head dimension, $h$ is the number of heads, $\mathrm{head}_i$ is the output of the $i$-th head, and $W^O$ is the output transform matrix.
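The cross-attention computation can be sketched in NumPy as follows, with HSI tokens as queries and LiDAR tokens as keys and values. The sketch assumes the 3D HSI features have already been reshaped so that the spectral depth is folded into the channel axis, uses scaled dot-product attention, and omits any residual connections or normalization layers the full model may contain; all sizes and the random projection matrices are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)           # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def split_heads(t, n_heads):
    """(B, N, d_model) -> (B, n_heads, N, d_model // n_heads)."""
    b, n, d_model = t.shape
    return t.reshape(b, n, n_heads, d_model // n_heads).transpose(0, 2, 1, 3)

def cross_attention(x_hsi, x_lidar, w_q, w_k, w_v, w_o, n_heads):
    """Multi-head cross-attention in which HSI tokens query LiDAR tokens.

    x_hsi: (B, C_H, H, W); x_lidar: (B, C_L, H, W); w_q: (C_H, d_model);
    w_k, w_v: (C_L, d_model); w_o: (d_model, d_model).
    Returns (B, N, d_model) outputs and (B, heads, N, N) attention maps.
    """
    b, c_h, h, w = x_hsi.shape
    n = h * w                                         # sequence length N = H * W
    tok_h = x_hsi.reshape(b, c_h, n).transpose(0, 2, 1)                  # (B, N, C_H)
    tok_l = x_lidar.reshape(b, x_lidar.shape[1], n).transpose(0, 2, 1)   # (B, N, C_L)
    q = split_heads(tok_h @ w_q, n_heads)             # queries from HSI
    k = split_heads(tok_l @ w_k, n_heads)             # keys from LiDAR
    v = split_heads(tok_l @ w_v, n_heads)             # values from LiDAR
    d_head = q.shape[-1]
    attn = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(d_head))   # (B, heads, N, N)
    heads = attn @ v                                  # (B, heads, N, d_head)
    concat = heads.transpose(0, 2, 1, 3).reshape(b, n, -1)          # concatenate heads
    return concat @ w_o, attn

rng = np.random.default_rng(0)
B, C_H, C_L, H, W, d_model, n_heads = 2, 30, 16, 5, 5, 32, 4   # hypothetical sizes
x_hsi = rng.standard_normal((B, C_H, H, W))
x_lidar = rng.standard_normal((B, C_L, H, W))
w_q = rng.standard_normal((C_H, d_model)) / np.sqrt(C_H)
w_k, w_v = (rng.standard_normal((C_L, d_model)) / np.sqrt(C_L) for _ in range(2))
w_o = rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)
out, attn = cross_attention(x_hsi, x_lidar, w_q, w_k, w_v, w_o, n_heads)
print(out.shape, attn.shape)                          # (2, 25, 32) (2, 4, 25, 25)
```

Each HSI token receives a convex combination of LiDAR value vectors, so the spectral branch decides where to look while the LiDAR branch supplies what is retrieved.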

3.4. Classification and Optimization

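The fused features from the cross-attention mechanism are passed through fully connected layers for classification. A softmax activation function generates the final class probabilities:

$$\hat{y}_i = \frac{\exp(z_i)}{\sum_{j=1}^{K} \exp(z_j)}, \qquad i = 1, \ldots, K,$$

where $z_i$ is the output logit for class $i$ and $K$ is the number of classes.

To optimize the CAB-HL model, the cross-entropy loss is minimized:

$$\mathcal{L} = -\sum_{i=1}^{K} y_i \log \hat{y}_i,$$

where $y_i$ is the ground truth label, and $\hat{y}_i$ is the predicted probability for class $i$. An Adam optimizer is employed for training.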
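The softmax and cross-entropy computations can be checked numerically with a short NumPy sketch. It uses integer class labels, which is equivalent to the one-hot form of the loss, averages over the batch, and computes the log-softmax with the log-sum-exp shift for numerical stability; it is an illustration, not the training code.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax over class logits of shape (N, K)."""
    z = logits - logits.max(axis=-1, keepdims=True)   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def cross_entropy(logits, labels):
    """Mean cross-entropy for integer labels (N,) and logits (N, K).

    With one-hot targets y, -sum_i y_i * log(p_i) reduces to -log(p_label).
    """
    z = logits - logits.max(axis=-1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))   # log-softmax
    return -log_probs[np.arange(len(labels)), labels].mean()

logits = np.zeros((2, 4))                             # uniform predictions over 4 classes
print(cross_entropy(logits, np.array([0, 3])))       # ln 4, about 1.3863
```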

3.5. Algorithm

The overall training strategy of the CAB-HL framework is outlined in Algorithm 1. This algorithm encapsulates the end-to-end process, including preprocessing, feature extraction, cross-attention fusion, and final classification. Equations (1)–(17) correspond to key steps for spectral–spatial integration of HSI and LiDAR data. Refer to Algorithm 1 for the detailed procedural steps.

Algorithm 1 Multimodal classification pipeline with cross-attention

Require: HSI data, LiDAR data

Ensure: Classified results Y

4. Experimental Results

4.1. Datasets

To assess the effectiveness of the proposed approach, three publicly accessible multisensor remote sensing image classification datasets are utilized as experimental datasets: the Houston2013 dataset, the Trento dataset, and the Augsburg dataset. Comprehensive parameters are shown in Table 1.

Table 1.

Dataset description.

Augsburg dataset: The Augsburg dataset consists of paired HSI and LiDAR DSM data, where the HSI data was collected using the HySpex sensor, and the LiDAR DSM data was obtained with the DLR-3K sensor. This dataset was acquired over Augsburg, Germany, which is an urban environment. The spatial dimensions of the Augsburg dataset are 332 × 485, with a spatial resolution of approximately 30 m. The HSI data includes 180 spectral bands, spanning the wavelength range of 0.4 to 2.5 µm. The LiDAR DSM data provides 3D elevation information for surface features. The detailed number of samples for each category is listed in Table 2.

Table 2.

Classification accuracy description with Augsburg dataset.

Houston2013 dataset: The Houston2013 dataset was captured using the ITRES CASI-1500 sensor over the University of Houston campus and its surrounding urban area in Houston, Texas, USA, in 2012. This dataset includes both HSI and LiDAR DSM data. The spatial dimensions of the dataset are 349 × 1905, with a spatial resolution of approximately 2.5 m. The HSI data consists of 144 spectral bands, covering the wavelength range from 380 to 1050 nm. The LiDAR data provides elevation information for ground features. The land cover is categorized into 15 types: Healthy grass, Stressed grass, Synthetic grass, Trees, Soil, Water, Residential, Commercial, Road, Highway, Railway, Parking Lot 1, Parking Lot 2, Tennis Court, and Running Track. The sample counts for each class are listed in Table 3.

Table 3.

Classification accuracy description with Houston2013 dataset.

Trento Dataset: The Trento dataset is an HSI-LiDAR pair dataset, where the HSI data were collected by an AISA Eagle sensor, and the LiDAR digital surface model (DSM) data were acquired by an Optech ALTM 3100EA sensor. The dataset was captured over a rural area south of the city of Trento, Italy. The Trento dataset has a spatial dimension of 166 × 600 with a spatial resolution of approximately 1 m. The HSI data in the Trento dataset consists of 63 spectral bands, with wavelengths ranging from 420 to 990 nm. The LiDAR DSM data provides elevation information of ground features. The land cover is classified into six categories: Apple trees, Buildings, Ground, Woods, Vineyard, and Roads. The number of samples for each category is provided in Table 4.

Table 4.

Classification accuracy description with Trento dataset.

4.2. Parameter Tuning

In this article, we use Python 3.10 to write our programs and PyTorch 2.1.2 to implement the convolutional neural network components. All tests were carried out on a PC running Windows 10 with an Intel Core i7-10870H CPU and a GeForce RTX 4060 Ti GPU with Max-Q Design.

4.3. Parameter Setting

The experiments evaluate batch sizes of 8, 16, 32, 48, and 64, train for 150 epochs, and use the cross-entropy loss function. The proposed CAB-HL model is implemented in the PyTorch deep learning framework, with all programs developed in Python 3.10. The experiments are executed on a workstation with an Intel Core i5-13490F CPU, 96 GB of RAM, and an NVIDIA GeForce RTX 4060 Ti GPU, running Windows 10. To ensure objective and reproducible evaluation, three commonly adopted metrics are used: overall accuracy (OA), average accuracy (AA), and the Kappa coefficient. These metrics allow a comprehensive comparison between the predicted classification results and the corresponding ground truth maps across all benchmark datasets.
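For reference, the three metrics can be computed from a confusion matrix as in the following NumPy sketch (rows are ground truth, columns are predictions). It assumes every class appears at least once in the ground truth, and the toy labels are hypothetical. AA averages per-class accuracies with equal weight, so a few poorly classified small classes can pull AA well below OA, while Kappa discounts the agreement expected by chance.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the ground-truth class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def oa_aa_kappa(y_true, y_pred, n_classes):
    """Overall accuracy, average (per-class) accuracy, and Cohen's kappa.

    Assumes every class occurs at least once in y_true.
    """
    cm = confusion_matrix(y_true, y_pred, n_classes)
    total = cm.sum()
    oa = np.trace(cm) / total
    aa = (np.diag(cm) / cm.sum(axis=1)).mean()        # mean of per-class accuracies
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total ** 2   # chance agreement
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa

y_true = np.array([0, 0, 0, 0, 1, 1, 2, 2, 2, 2])     # hypothetical labels
y_pred = np.array([0, 0, 0, 1, 1, 1, 2, 2, 0, 2])
oa, aa, kappa = oa_aa_kappa(y_true, y_pred, 3)
print(f"OA={oa:.3f} AA={aa:.3f} Kappa={kappa:.3f}")  # OA=0.800 AA=0.833 Kappa=0.697
```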

4.4. Effect of Patch Size and PCA Components on OA

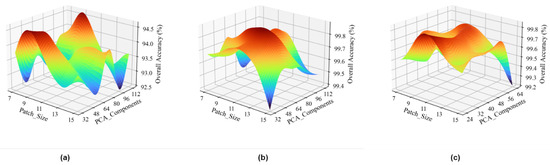

In the proposed method, CAB-HL uses a CNN as its backbone. The feature extraction efficacy of CNNs is considerably affected by the size of the input patches. Smaller patches emphasize fine details such as texture and edges, but overly small patches may neglect contextual information. Larger neighborhood patches incorporate information from nearby pixels, improving the ability to capture contextual associations, but excessively large patches may obscure crucial local information. Choosing the patch size therefore requires balancing local detail preservation against contextual information integration. Figure 5 shows the effect of patch size and the number of PCA components on OA.

Figure 5.

Comparison of different models under various parameter settings: (a) Augsburg. (b) Houston2013. (c) Trento.
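Patch-based CNN inputs are typically formed by cropping a square neighborhood around each labeled pixel. The NumPy sketch below shows one way to do this for several candidate patch sizes; the reflect padding at image borders, the candidate sizes, and the toy image are assumptions, not details given in this section.

```python
import numpy as np

def extract_patch(image, row, col, patch_size):
    """Return the patch_size x patch_size neighborhood centered on pixel (row, col).

    image: (H, W, C). Border pixels use reflect padding (an assumption; zero padding
    is also common). patch_size must be odd so that the pixel sits at the center.
    """
    if patch_size % 2 == 0:
        raise ValueError("patch_size must be odd")
    r = patch_size // 2
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="reflect")
    # After padding, original pixel (row, col) sits at (row + r, col + r).
    return padded[row:row + patch_size, col:col + patch_size, :]

image = np.random.default_rng(0).standard_normal((40, 40, 30))  # toy PCA-reduced cube
for size in (7, 11, 15):                              # hypothetical candidate patch sizes
    patch = extract_patch(image, 0, 0, size)          # corner pixel still gets a full patch
    print(size, patch.shape, size * size, "pixels of context")
```

The amount of context grows quadratically with the patch size, which is why larger patches add neighborhood information quickly but can dilute the center pixel's local detail. In practice the image would be padded once rather than on every call.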

4.5. Learning-Rate Comparison

The learning rate is a crucial hyperparameter in deep learning. It controls how far the network weights move at each update by scaling the gradient of the loss function. An excessively high learning rate may cause parameter updates to overshoot the optimum, so the loss oscillates during training and the network may fail to converge. An excessively low learning rate slows convergence and may leave the parameters trapped in a poor local minimum, preventing the search from reaching better minima that are closer to the global optimum.
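The overshooting behavior can be illustrated with plain gradient descent on the one-dimensional quadratic f(x) = x**2, where each update multiplies x by (1 - 2 * lr). This toy problem is convex, so it shows slow convergence, oscillation, and divergence, but not the local-minimum effect described above; the learning rates are arbitrary illustrative values.

```python
def gradient_descent(lr, x0=1.0, steps=20):
    """Fixed-step gradient descent on f(x) = x**2, whose gradient is 2 * x."""
    x = x0
    for _ in range(steps):
        x = x - lr * 2 * x          # each update multiplies x by (1 - 2 * lr)
    return x

for lr in (0.01, 0.4, 0.9, 1.1):
    # 0.01: slow shrinkage; 0.4: fast convergence;
    # 0.9: sign flips every step but still converges; 1.1: overshoots and diverges
    print(lr, gradient_descent(lr))
```

Any learning rate above 1.0 makes |1 - 2 * lr| exceed 1 here, so every step overshoots the minimum by more than the previous one.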

4.6. Comparison and Analysis of Classification Performance

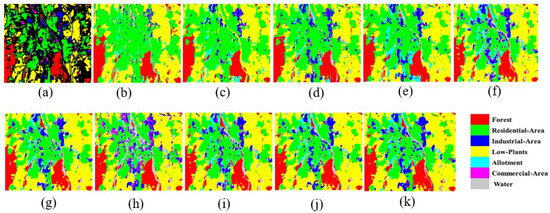

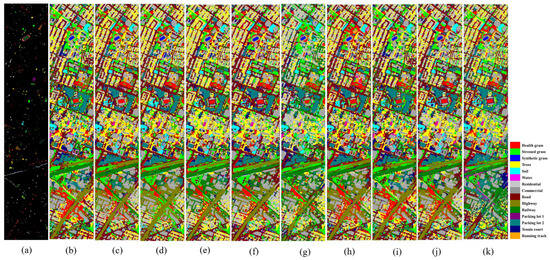

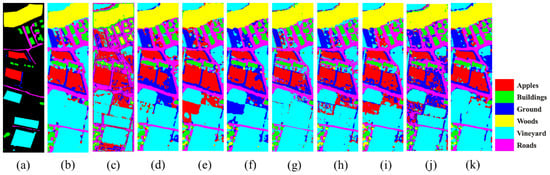

The classification performance of the proposed CAB-HL model is evaluated on three standard benchmark datasets, Augsburg, Trento, and Houston2013, and compared with nine state-of-the-art models: MDL-RS [13], EndNet [11], CALC [39], CCR-Net [40], ExViT [41], DHViT [42], SAL2RN [43], MS2CANet [44], and DSHF [45]. The assessment uses OA, AA, and the Kappa coefficient, together with class-specific accuracies. CAB-HL consistently surpasses all competing methods, with substantial improvements across all datasets, and its OA, AA, and Kappa values illustrate its effectiveness in handling complex spectral–spatial variations. The classification maps (Figure 6, Figure 7 and Figure 8) visually confirm the improved performance of CAB-HL, showing fewer classification errors and better boundary delineation than the alternative models. In Figure 6, Figure 7 and Figure 8, black pixels in the ground truth label image represent background or unlabeled regions, which are intentionally excluded from both training and evaluation. This visualization follows established practice in recent benchmark studies, such as S3F2Net [46], DSHFNet [45] and AM3Net [47], ensuring consistency and fairness in the comparative analysis. Among the competing methods, DSHF ranks as the second-best performer, followed by MS2CANet and SAL2RN, whereas models such as CCR-Net, DHViT, and MDL-RS perform worse because they cannot effectively distinguish spectrally similar land-cover categories. A detailed analysis of the classification results for each dataset is presented below:

Figure 6.

Classification maps obtained by different methods on the Augsburg dataset: (a) Ground-truth map; (b) MDL-RS (89.60%); (c) CALC (98.16%); (d) CCR-Net (88.15%); (e) EndNet (88.52%); (f) ExViT (90.62%); (g) DHViT (85.82%); (h) SAL2RN (91.08%); (i) MS2CANet (91.99%); (j) DSHF (92.88%); (k) Proposed (94.5%).

Figure 7.

Classification maps obtained by different methods on the Houston2013 dataset: (a) Ground-truth map; (b) MDL-RS (89.60%); (c) CALC (98.16%); (d) CCR-Net (88.15%); (e) EndNet (88.52%); (f) ExViT (90.62%); (g) DHViT (85.82%); (h) SAL2RN (91.08%); (i) MS2CANet (91.99%); (j) DSHF (92.88%); (k) Proposed (99.35%).

Figure 8.

Classification maps produced by the compared methods on the Trento dataset: (a) Ground-truth map; (b) MDL-RS (89.60%); (c) CALC (98.16%); (d) CCR-Net (88.15%); (e) EndNet (88.52%); (f) ExViT (90.62%); (g) DHViT (85.82%); (h) SAL2RN (91.08%); (i) MS2CANet (91.99%); (j) DSHF (92.88%); (k) Proposed (99.7%).

4.6.1. Classification Performance on the Augsburg Dataset

The Augsburg dataset comprises natural and artificial land cover types and is challenging because it contains spectrally similar urban structures and vegetation. Table 2 reports the classification performance of each model.

- CAB-HL achieved the highest accuracy, with an OA of 94.5%, AA of 77.12%, and a Kappa coefficient of 92.1%.

- DSHF follows as the second-best model with an OA of 92.88%, benefiting from its spectral–spatial fusion capability but struggling with shadowed urban areas.

- MS2CANet (91.67%) and SAL2RN (89.85%) perform relatively well but fail to fully exploit spatial dependencies in the dataset.

- MDL-RS, EndNet, and DHViT show lower classification accuracy, particularly in urban areas where mixed pixels reduce their effectiveness.

The classification maps in Figure 6 provide a visual confirmation of the superior performance of CAB-HL, where misclassification in urban areas is significantly reduced compared to other models. Competing models exhibit difficulty in differentiating between vegetative and constructed environments, whereas CAB-HL provides more accurate boundary delineation.

4.6.2. Classification Performance on the Houston2013 Dataset

The Houston2013 dataset is widely used in hyperspectral image classification research because its diverse set of urban and vegetation classes makes it a highly challenging benchmark. The scene includes complex urban structures, water bodies, and vegetation, so accurate classification requires effective spectral–spatial learning.

- CAB-HL markedly surpasses all alternative approaches, attaining an OA of 99.35%, an AA of 99.5%, and a Kappa coefficient of 99.3%.

- DSHF ranks second with an OA of 92.88%, although it misclassifies pixels in urban areas with significant spectral mixing.

- MS2CANet (91.99%) and SAL2RN (91.08%) yield strong results but are less consistent across different land cover types.

- MDL-RS and EndNet achieve moderate performance, but their classification maps show more noise and misclassification in vegetated areas.

- CCR-Net and DHViT perform worst, especially in distinguishing the residential, commercial, and road classes, because of spectral overlap.

Figure 7 shows classification maps that underscore the superior performance of CAB-HL, with more precise separation of urban and vegetation areas than the competing models. CAB-HL markedly reduces misclassification in water and urban regions, improving land cover classification accuracy.

4.6.3. Classification Performance on the Trento Dataset

The Trento dataset predominantly consists of agricultural and urban areas, posing difficulties due to the significant spectral resemblance among various land cover categories, especially in vineyards and roadways.

- CAB-HL attains an OA of 99.7%, an AA of 99.6%, and a Kappa coefficient of 99.59%, illustrating its strong ability to identify agricultural and urban areas.

- DSHF (98.3%) and MS2CANet (97.9%) demonstrate competitive efficacy; nevertheless, they inadequately capture fine-grained spatial information, resulting in misclassification within mixed land cover areas.

- SAL2RN (97.6%) and CCR-Net (96.4%) have difficulty distinguishing between the vineyard and ground classes, which lowers their overall classification accuracy.

- DHViT and ExViT perform worst, mostly because of their limited capacity to capture long-range dependencies in agricultural regions.

Figure 8 shows that the classification map produced by CAB-HL closely matches the ground truth, whereas the alternative approaches exhibit considerable classification noise. Competing models misclassify pixels at field boundaries and along road networks, while CAB-HL maintains spatial continuity in agricultural areas.

On all three datasets (Augsburg, Trento, and Houston2013), CAB-HL consistently surpasses current state-of-the-art approaches in terms of OA, AA, and Kappa. We attribute these gains to CAB-HL’s spectral–spatial feature extraction and hierarchical learning strategy, which captures fine-grained details and complex land cover patterns.

The classification maps (Figure 6, Figure 7 and Figure 8) further substantiate the efficacy of our approach, showing a notable decrease in misclassification errors relative to alternative methods. In dense urban regions and agricultural environments in particular, CAB-HL maintains spatial coherence and minimizes classification noise.

These findings highlight the capability of CAB-HL as a strong framework for joint hyperspectral and LiDAR classification, supporting improved land cover mapping for urban planning, agriculture, and environmental monitoring.

4.7. Multiscale Input Patch Size Comparison

Choosing the optimal patch size is critical in image classification: a large patch incorporates superfluous information from neighboring regions, whereas a small patch may omit essential spatial context. In multiscale feature inputs the patch size is especially important, because different patch sizes give the network different spatial views of the scene. Figure 7 depicts the effect of input patch sizes ranging from 2 to 10. The results indicate that 8 is the optimal patch size for the Houston2013 dataset, whereas 6 is optimal for the other two datasets. We attribute this difference to the different spatial scales of the datasets.
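A patch-size sweep of this kind can be sketched as follows. `extract_patch` cuts a square window around a labeled pixel and `select_patch_size` keeps the size with the best validation score; both are hypothetical helpers, not the authors' code. Reflect padding at image borders and the centring rule for even sizes are assumptions, since the text does not state them, and `evaluate` stands in for training and validating CAB-HL at a given patch size. The toy scorer in the usage lines is invented only to exercise the code.

```python
def reflect_index(i, n):
    """Map any integer index into [0, n) by mirror reflection without repeating the edge."""
    if n == 1:
        return 0
    period = 2 * (n - 1)
    i %= period  # Python's % is non-negative for a positive divisor
    return i if i < n else period - i


def extract_patch(image, row, col, patch_size):
    """Cut a patch_size x patch_size window around (row, col) from a 2-D band.

    For even sizes the window cannot be centred exactly; here the centre pixel
    sits at offset patch_size // 2 in both directions (an assumption).
    """
    h, w = len(image), len(image[0])
    half = patch_size // 2
    return [
        [image[reflect_index(row - half + dr, h)][reflect_index(col - half + dc, w)]
         for dc in range(patch_size)]
        for dr in range(patch_size)
    ]


def select_patch_size(candidates, evaluate):
    """Score each candidate size with evaluate(size) and return (best_size, scores)."""
    scores = {size: evaluate(size) for size in candidates}
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    band = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(extract_patch(band, 0, 0, 3))  # corner patch completed by reflection
    # Toy scorer peaked at 6; a real sweep would train and validate the model instead.
    best, _ = select_patch_size(range(2, 11), lambda s: -(s - 6) ** 2)
    print(best)  # -> 6
```

In a multimodal setting the same window would be cut from every HSI band and from the LiDAR raster so that both branches see the same neighborhood.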

4.8. Learning-Rate Comparison

The learning rate is a crucial hyperparameter in training deep networks (Figure 6). It sets the step size with which the network’s weights are updated along the gradient of the loss function. An excessively high learning rate may cause parameter updates to overshoot the optimum, so the loss oscillates during training and the network may fail to converge. An excessively low learning rate slows training and may leave the parameters trapped in a poor local minimum, preventing the search from reaching better minima that are more likely to be global. We evaluated the classification results with learning rates ranging from 0.001 to 0.0001, and the results indicate that 0.0001 is the best setting for all three datasets.
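The overshoot and slow-progress effects can be seen on a one-dimensional toy problem. This sketch is not the CAB-HL training code: it runs plain gradient descent on f(w) = a·w², whose update w ← w·(1 - 2aη) diverges when η > 1/a and shrinks only slowly when η is very small. Because the toy is convex it has no local minima, so it illustrates overshooting and slow convergence but not the local-minimum effect described above. The function name and all parameter values are hypothetical.

```python
def gradient_descent(lr, curvature=1.0, w0=1.0, steps=50):
    """Minimise f(w) = curvature * w**2 with plain gradient descent; return all iterates."""
    w = w0
    history = [w]
    for _ in range(steps):
        grad = 2.0 * curvature * w  # f'(w)
        w -= lr * grad              # equivalent to w <- w * (1 - 2 * curvature * lr)
        history.append(w)
    return history


if __name__ == "__main__":
    for lr in (1.5, 0.1, 0.001):
        # 1.5 > 1/curvature overshoots and diverges; 0.1 converges; 0.001 barely moves.
        print(f"lr={lr}: final |w| = {abs(gradient_descent(lr)[-1]):.3g}")
```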

5. Ablation Study: Evaluating the Impact of Key Components in the CAB-HL Model

In this ablation study, we examine different combinations of input modalities and modules to assess the efficacy of the cross attention module (CAM) in the CAB-HL model. Experiments on three benchmark datasets (Houston2013, Augsburg, and Trento) evaluate how each component affects model performance. Table 5 reports the OA, AA, and Kappa coefficient for configurations built from HSI, LiDAR, and the CAM.

Table 5.

Ablation Study.

Adding the cross attention module (CAM) improves the performance of the CAB-HL model on all datasets. The HSI + LiDAR + CAM configuration yields the highest overall accuracy (OA): 99.91% on Trento, 99.89% on Houston2013, and 99.91% on Augsburg. This indicates that the CAM effectively integrates spatial and spectral information from HSI and LiDAR, enhancing the model’s capacity to capture complementary attributes. The improvement stems from the model’s ability to dynamically focus on the most informative features of both modalities, which strengthens the overall representation.
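The mechanism that lets one modality focus on the most informative features of the other can be sketched as generic scaled dot-product cross-attention [26], in which tokens of one modality form the queries and tokens of the other supply keys and values. This is an illustration of the standard technique, not the exact CAM of CAB-HL: learned query/key/value projections, multiple heads, and the three-stage arrangement are omitted, and the residual two-way exchange in the hypothetical `bidirectional_cross_attention` is an assumed design meant to mirror the goal of preserving each modality’s own features.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention where queries come from one modality and
    keys/values from the other. Returns (outputs, weights); each weights row sums to 1."""
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    dim_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)  # how strongly this query token attends to each key token
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(dim_v)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


def bidirectional_cross_attention(hsi_tokens, lidar_tokens):
    """Hypothetical two-way exchange: HSI queries LiDAR and LiDAR queries HSI.
    The residual sum keeps each branch's own features alongside the borrowed context."""
    hsi_ctx, _ = cross_attention(hsi_tokens, lidar_tokens, lidar_tokens)
    lidar_ctx, _ = cross_attention(lidar_tokens, hsi_tokens, hsi_tokens)
    hsi_out = [[a + b for a, b in zip(x, c)] for x, c in zip(hsi_tokens, hsi_ctx)]
    lidar_out = [[a + b for a, b in zip(x, c)] for x, c in zip(lidar_tokens, lidar_ctx)]
    return hsi_out, lidar_out


if __name__ == "__main__":
    hsi = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy HSI tokens (3 tokens, dim 2)
    lidar = [[1.0, 0.0], [0.0, 1.0]]            # toy LiDAR tokens (2 tokens, dim 2)
    h_out, l_out = bidirectional_cross_attention(hsi, lidar)
    print(len(h_out), len(l_out))  # -> 3 2
```

Each branch keeps its own token count and feature dimension, so the exchange can be repeated stage after stage before the two branches are merged for classification.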

When the CAM is removed and the model fuses HSI and LiDAR features directly, performance drops: OA decreases to 99.57% on Houston2013, 99.54% on Augsburg, and 99.74% on Trento, as shown in the HSI-with-LiDAR row of Table 5. Without the CAM, the model is less able to concentrate on the most pertinent features of each modality, which makes the fusion less effective. Although the model still performs strongly in this setting, the result underscores the importance of attention for feature alignment and integration.
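The size of the CAM’s contribution follows directly from the OA values quoted in the two paragraphs above. The short calculation below uses only those numbers (the other rows of Table 5 are not reproduced here); the dictionary and function names are illustrative.

```python
# OA (%) quoted in the text for fusion with and without the CAM.
oa_with_cam = {"Houston2013": 99.89, "Augsburg": 99.91, "Trento": 99.91}
oa_without_cam = {"Houston2013": 99.57, "Augsburg": 99.54, "Trento": 99.74}


def oa_gain(with_cam, without_cam):
    """Return the OA gain from adding the CAM, in percentage points, per dataset."""
    return {name: round(with_cam[name] - without_cam[name], 2) for name in with_cam}


gains = oa_gain(oa_with_cam, oa_without_cam)
for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} pp")
```

This gives gains of 0.32, 0.37, and 0.17 percentage points for Houston2013, Augsburg, and Trento, respectively. Because both configurations are already close to 100%, the same gains correspond to a large share of the remaining error (for Houston2013, the error falls from 0.43% to 0.11%).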

6. Conclusions

In conclusion, the proposed cross attention bridge for HSI and LiDAR (CAB-HL) framework is a notable step forward in multimodal data fusion for remote sensing applications. By using a multi-stage cross-attention mechanism to guide the interaction between spectral and spatial features, CAB-HL integrates the two sources of information more effectively and overcomes conventional limitations in feature clarity and spatial context. Experimental results on three benchmark datasets demonstrate its efficacy for land cover classification and suggest that it may also benefit related tasks such as vegetation analysis and topographic mapping. These results highlight CAB-HL’s potential to improve resource management and environmental monitoring, opening the door to further advances in remote sensing technology.

Author Contributions

Conceptualization, K.M.H. and Y.L.; Methodology, K.M.H., Y.Z. and A.A.; Validation, K.Z.; Resources, Y.L.; Data curation, A.A.; Writing—original draft, K.M.H.; Writing—review & editing, K.Z. and Y.Z.; Visualization, A.A.; Supervision, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no funding.

Data Availability Statement

Datasets and code are available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xu, H.; Zheng, T.; Liu, Y.; Zhang, Z.; Xue, C.; Li, J. A joint convolutional cross ViT network for hyperspectral and light detection and ranging fusion classification. Remote Sens. 2024, 16, 489.

- Sun, W.; Yang, G.; Chen, C.; Chang, M.; Huang, K.; Meng, X.; Liu, L. Development status and literature analysis of China’s earth observation remote sensing satellites. J. Remote Sens. 2020, 24, 479–510.

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph convolutional networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5966–5978.

- Wang, L.; Wang, X. Dual-coupled CNN-GCN-based classification for hyperspectral and LiDAR data. Sensors 2022, 22, 5735.

- Yang, J.X.; Wang, J.; Sui, C.H.; Long, Z.; Zhou, J. HSLiNets: Hyperspectral Image and LiDAR Data Fusion Using Efficient Dual Linear Feature Learning Networks. arXiv 2024, arXiv:2412.00302.

- Yu, C.; Han, R.; Song, M.; Liu, C.; Chang, C.I. A simplified 2D-3D CNN architecture for hyperspectral image classification based on spatial–spectral fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2485–2501.

- Hao, J.; Dong, F.; Wang, S.; Li, Y.; Cui, J.; Men, J.; Liu, S. Combined hyperspectral imaging technology with 2D convolutional neural network for near geographical origins identification of wolfberry. J. Food Meas. Charact. 2022, 16, 4923–4933.

- Liu, D.; Han, G.; Liu, P.; Yang, H.; Sun, X.; Li, Q.; Wu, J. A novel 2D-3D CNN with spectral-spatial multi-scale feature fusion for hyperspectral image classification. Remote Sens. 2021, 13, 4621.

- Zhao, J.; Wang, G.; Zhou, B.; Ying, J.; Liu, J. Exploring an application-oriented land-based hyperspectral target detection framework based on 3D–2D CNN and transfer learning. EURASIP J. Adv. Signal Process. 2024, 2024, 37.

- Wang, Q.; Zhou, B.; Zhang, J.; Xie, J.; Wang, Y. Joint Classification of Hyperspectral Images and LiDAR Data Based on Dual-Branch Transformer. Sensors 2024, 24, 867.

- Hong, D.; Gao, L.; Hang, R.; Zhang, B.; Chanussot, J. Deep encoder–decoder networks for classification of hyperspectral and LiDAR data. IEEE Geosci. Remote Sens. Lett. 2020, 19, 5500205.

- Ghamisi, P.; Höfle, B.; Zhu, X.X. Hyperspectral and LiDAR data fusion using extinction profiles and deep convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 3011–3024.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More diverse means better: Multimodal deep learning meets remote-sensing imagery classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Ali, A.; Mu, C.; Zhang, Z.; Zhu, J.; Liu, Y. A two-branch multiscale spectral-spatial feature extraction network for hyperspectral image classification. J. Inf. Intell. 2024, 2, 224–235.

- Choi, E.; Lee, C. Optimizing feature extraction for multiclass problems. IEEE Trans. Geosci. Remote Sens. 2001, 39, 521–528.

- Zheng, Q.; Sun, J. Effective point cloud analysis using multi-scale features. Sensors 2021, 21, 5574.

- Gao, H.; Wu, H.; Chen, Z.; Zhang, Y.; Zhang, Y.; Li, C. Multiscale spectral-spatial cross-extraction network for hyperspectral image classification. IET Image Process. 2022, 16, 755–771.

- Pan, H.; Gao, F.; Dong, J.; Du, Q. Multiscale adaptive fusion network for hyperspectral image denoising. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3045–3059.

- Wang, K.; Bai, F.; Li, J.; Liu, Y.; Li, Y. MashFormer: A novel multiscale aware hybrid detector for remote sensing object detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2753–2763.

- Xue, Z.; Yu, X.; Tan, X.; Liu, B.; Yu, A.; Wei, X. Multiscale deep learning network with self-calibrated convolution for hyperspectral and LiDAR data collaborative classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5514116.

- Ding, K.; Lu, T.; Fu, W.; Li, S.; Ma, F. Global–local transformer network for HSI and LiDAR data joint classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5541213.

- Song, D.; Gao, J.; Wang, B.; Wang, M. A multi-scale pseudo-siamese network with an attention mechanism for classification of hyperspectral and LiDAR data. Remote Sens. 2023, 15, 1283.

- Meng, Q.; Zhao, M.; Zhang, L.; Shi, W.; Su, C.; Bruzzone, L. Multilayer feature fusion network with spatial attention and gated mechanism for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6510105.

- Geng, Z.; Guo, M.H.; Chen, H.; Li, X.; Wei, K.; Lin, Z. Is attention better than matrix decomposition? arXiv 2021, arXiv:2109.04553.

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. Adv. Neural Inf. Process. Syst. 2014, 27.

- Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Li, H.C.; Hu, W.S.; Li, W.; Li, J.; Du, Q.; Plaza, A. A3CLNN: Spatial, spectral and multiscale attention ConvLSTM neural network for multisource remote sensing data classification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 33, 747–761.

- He, K.; Sun, W.; Yang, G.; Meng, X.; Ren, K.; Peng, J.; Du, Q. A dual global–local attention network for hyperspectral band selection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5527613.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Zhu, M.; Jiao, L.; Liu, F.; Yang, S.; Wang, J. Residual spectral–spatial attention network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 449–462.

- Wang, X.; Wang, X.; Zhao, K.; Zhao, X.; Song, C. FSL-Unet: Full-scale linked Unet with spatial–spectral joint perceptual attention for hyperspectral and multispectral image fusion. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5539114.

- Xu, X.; Li, W.; Ran, Q.; Du, Q.; Gao, L.; Zhang, B. Multisource remote sensing data classification based on convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2017, 56, 937–949.

- Mohla, S.; Pande, S.; Banerjee, B.; Chaudhuri, S. FusAtNet: Dual attention based spectrospatial multimodal fusion network for hyperspectral and LiDAR classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 92–93.

- Hang, R.; Li, Z.; Ghamisi, P.; Hong, D.; Xia, G.; Liu, Q. Classification of hyperspectral and LiDAR data using coupled CNNs. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4939–4950.

- Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; van Kasteren, T.; Liao, W.; Bellens, R.; Pižurica, A.; Gautama, S.; et al. Hyperspectral and LiDAR data fusion: Outcome of the 2013 GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2405–2418.

- Hong, D.; Hu, J.; Yao, J.; Chanussot, J.; Zhu, X.X. Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model. ISPRS J. Photogramm. Remote Sens. 2021, 178, 68–80.

- Lu, T.; Ding, K.; Fu, W.; Li, S.; Guo, A. Coupled adversarial learning for fusion classification of hyperspectral and LiDAR data. Inf. Fusion 2023, 93, 118–131.

- Wu, X.; Hong, D.; Chanussot, J. Convolutional neural networks for multimodal remote sensing data classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5517010.

- Yao, J.; Zhang, B.; Li, C.; Hong, D.; Chanussot, J. Extended vision transformer (ExViT) for land use and land cover classification: A multimodal deep learning framework. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5514415.

- Xue, Z.; Tan, X.; Yu, X.; Liu, B.; Yu, A.; Zhang, P. Deep hierarchical vision transformer for hyperspectral and LiDAR data classification. IEEE Trans. Image Process. 2022, 31, 3095–3110.

- Li, J.; Liu, Y.; Song, R.; Li, Y.; Han, K.; Du, Q. Sal2RN: A spatial–spectral salient reinforcement network for hyperspectral and LiDAR data fusion classification. IEEE Trans. Geosci. Remote Sens. 2022, 61, 5500114.

- Wang, X.; Zhu, J.; Feng, Y.; Wang, L. MS2CANet: Multiscale spatial–spectral cross-modal attention network for hyperspectral image and LiDAR classification. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5501505.

- Feng, Y.; Song, L.; Wang, L.; Wang, X. DSHFNet: Dynamic scale hierarchical fusion network based on multiattention for hyperspectral image and LiDAR data classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5522514.

- Wang, X.; Song, L.; Feng, Y.; Zhu, J. S3F2Net: Spatial-Spectral-Structural Feature Fusion Network for Hyperspectral Image and LiDAR Data Classification. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4801–4815.

- Wang, J.; Li, J.; Shi, Y.; Lai, J.; Tan, X. AM3Net: Adaptive mutual-learning-based multimodal data fusion network. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5411–5426.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).