BCTDNet: Building Change-Type Detection Networks with the Segment Anything Model in Remote Sensing Images

Abstract

1. Introduction

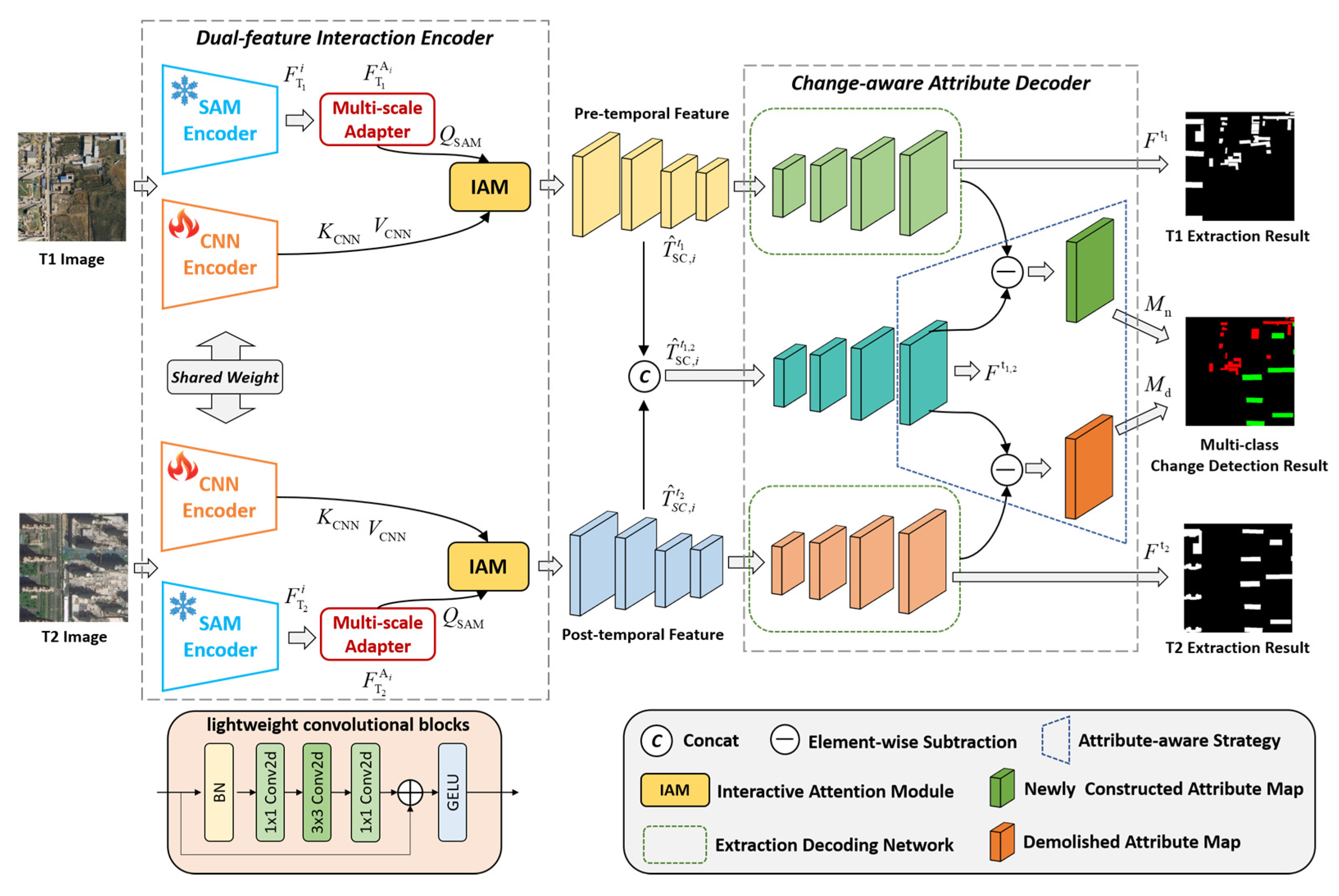

- We present a building change-type detection network, BCTDNet, which utilizes dual-feature interaction and attribute-aware decoding to identify newly constructed and demolished buildings.

- To improve building recognition, we design a dual-feature interaction encoder that uses interactive attention to integrate multi-granularity features from SAM and a CNN. We also develop a change-aware attribute decoder whose attribute-aware strategy explicitly generates separate discriminative maps for newly constructed and demolished buildings, keeping the two change types clearly separated.

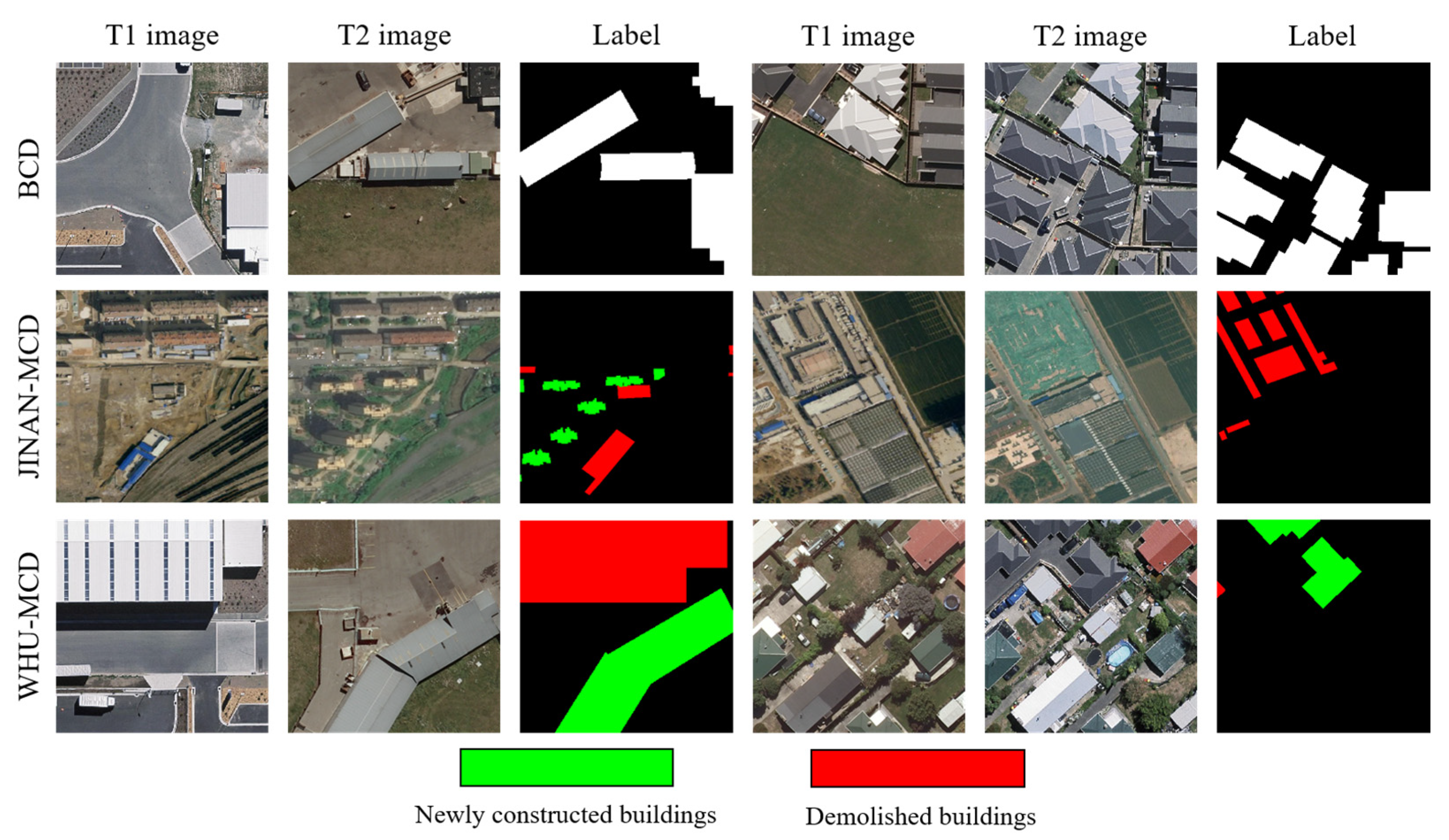

- We construct the JINAN-MCD dataset specifically for the change-type detection task. It covers urban core areas over a six-year period and captures diverse change scenarios. The dataset provides bi-temporal images, extraction labels, and change-type labels, so it supports multi-task training and evaluation.
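To make the relation between extraction labels and change-type labels concrete, the following is a minimal sketch of how a per-pixel change-type map could be derived from two binary building masks. The class ids and the function name `change_type_map` are hypothetical and chosen only for illustration; the dataset's actual label encoding and annotation procedure are not described in this section.

```python
import numpy as np

# Hypothetical class ids (an assumption, not the dataset's documented encoding).
UNCHANGED, NEWLY_CONSTRUCTED, DEMOLISHED = 0, 1, 2


def change_type_map(mask_t1: np.ndarray, mask_t2: np.ndarray) -> np.ndarray:
    """Combine two binary building masks into a per-pixel change-type map."""
    if mask_t1.shape != mask_t2.shape:
        raise ValueError("bi-temporal masks must have the same shape")
    b1 = mask_t1.astype(bool)
    b2 = mask_t2.astype(bool)
    out = np.full(b1.shape, UNCHANGED, dtype=np.uint8)
    out[~b1 & b2] = NEWLY_CONSTRUCTED  # no building at T1, building at T2
    out[b1 & ~b2] = DEMOLISHED         # building at T1, no building at T2
    return out


# Toy 2 x 3 masks: 1 marks a building pixel.
t1 = np.array([[1, 1, 0], [0, 0, 0]])
t2 = np.array([[1, 0, 0], [0, 1, 1]])
labels = change_type_map(t1, t2)
print(labels)
```

Pixels that are building in both masks, or in neither, fall into the unchanged class, which is why a binary change map alone cannot distinguish construction from demolition.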

2. Related Work

2.1. Binary Building Change Detection Methods

2.2. Building Change-Type Detection Methods

3. Methodology

3.1. Architecture Overview

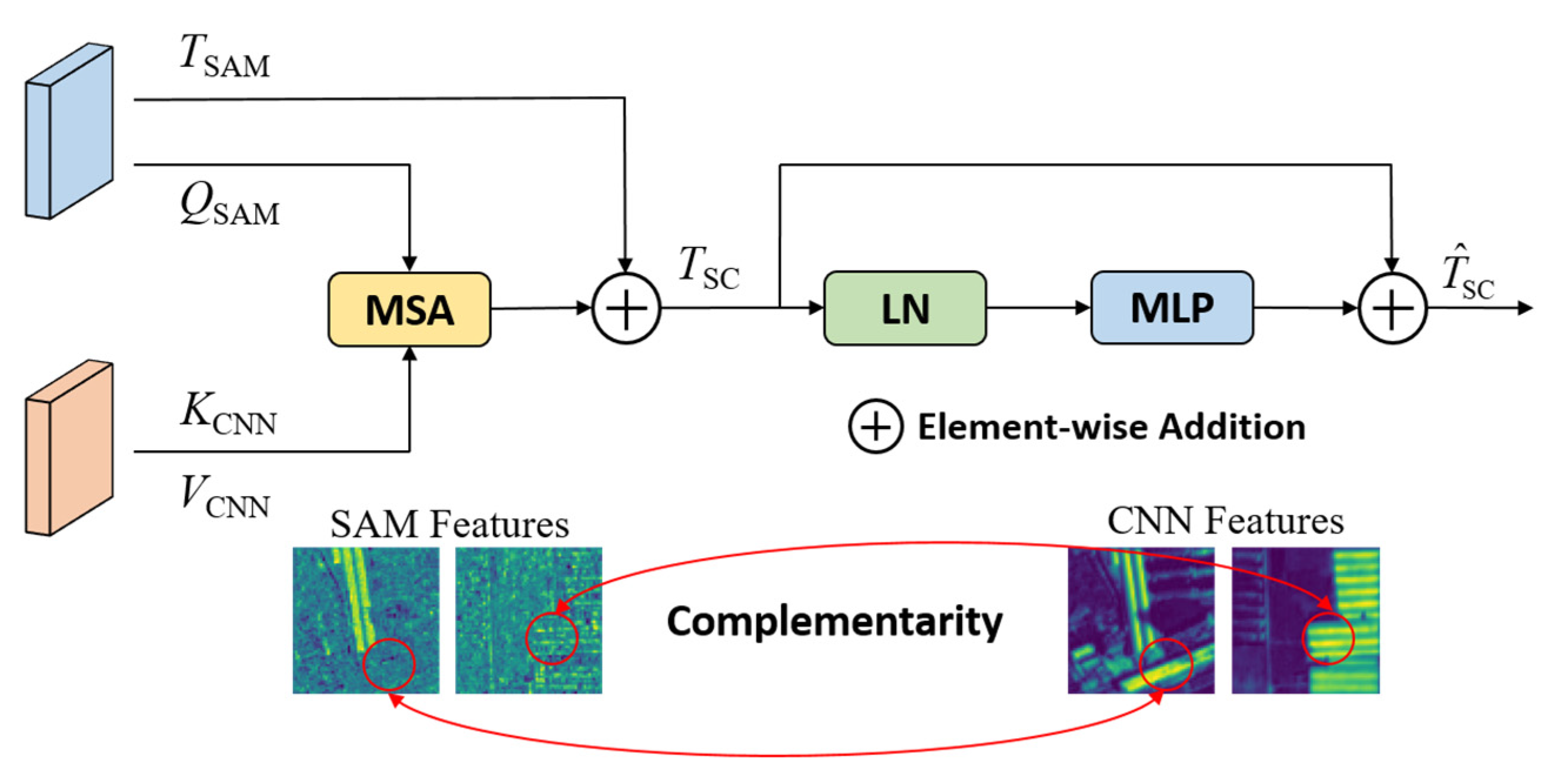

3.2. Dual-Feature Interaction Encoder

3.2.1. Multi-Scale Adapter

3.2.2. Interactive Attention Module
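The ablation tables compare the interactive attention module (IAM) with fusing SAM and CNN features by addition or by concatenation. The sketch below contrasts those three fusion options on token features. It is not the authors' IAM: `fuse_interactive` is a generic, single-head, bidirectional cross-attention without learned projections, and all function names and tensor shapes are assumptions made for illustration.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attend(query_feats: np.ndarray, context_feats: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention from query tokens to context tokens."""
    d = query_feats.shape[-1]
    scores = query_feats @ context_feats.T / np.sqrt(d)  # (N, M)
    return softmax(scores, axis=-1) @ context_feats       # (N, d)


def fuse_addition(sam: np.ndarray, cnn: np.ndarray) -> np.ndarray:
    return sam + cnn


def fuse_concatenation(sam: np.ndarray, cnn: np.ndarray) -> np.ndarray:
    return np.concatenate([sam, cnn], axis=-1)


def fuse_interactive(sam: np.ndarray, cnn: np.ndarray) -> np.ndarray:
    """Generic stand-in for interactive attention (an assumption)."""
    sam_enhanced = sam + cross_attend(sam, cnn)  # SAM tokens query CNN tokens
    cnn_enhanced = cnn + cross_attend(cnn, sam)  # CNN tokens query SAM tokens
    return sam_enhanced + cnn_enhanced


rng = np.random.default_rng(0)
sam_tokens = rng.normal(size=(16, 8))  # 16 tokens, 8 channels (toy sizes)
cnn_tokens = rng.normal(size=(16, 8))

print(fuse_addition(sam_tokens, cnn_tokens).shape)
print(fuse_concatenation(sam_tokens, cnn_tokens).shape)
print(fuse_interactive(sam_tokens, cnn_tokens).shape)
```

Addition and concatenation combine the two streams position by position, whereas the attention variant lets every token in one stream weight all tokens in the other before the streams are merged.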

3.3. Change-Aware Attribute Decoder

3.4. Loss Function

4. Experiment

4.1. Datasets

4.2. Implementation Details

4.3. Evaluation Metrics

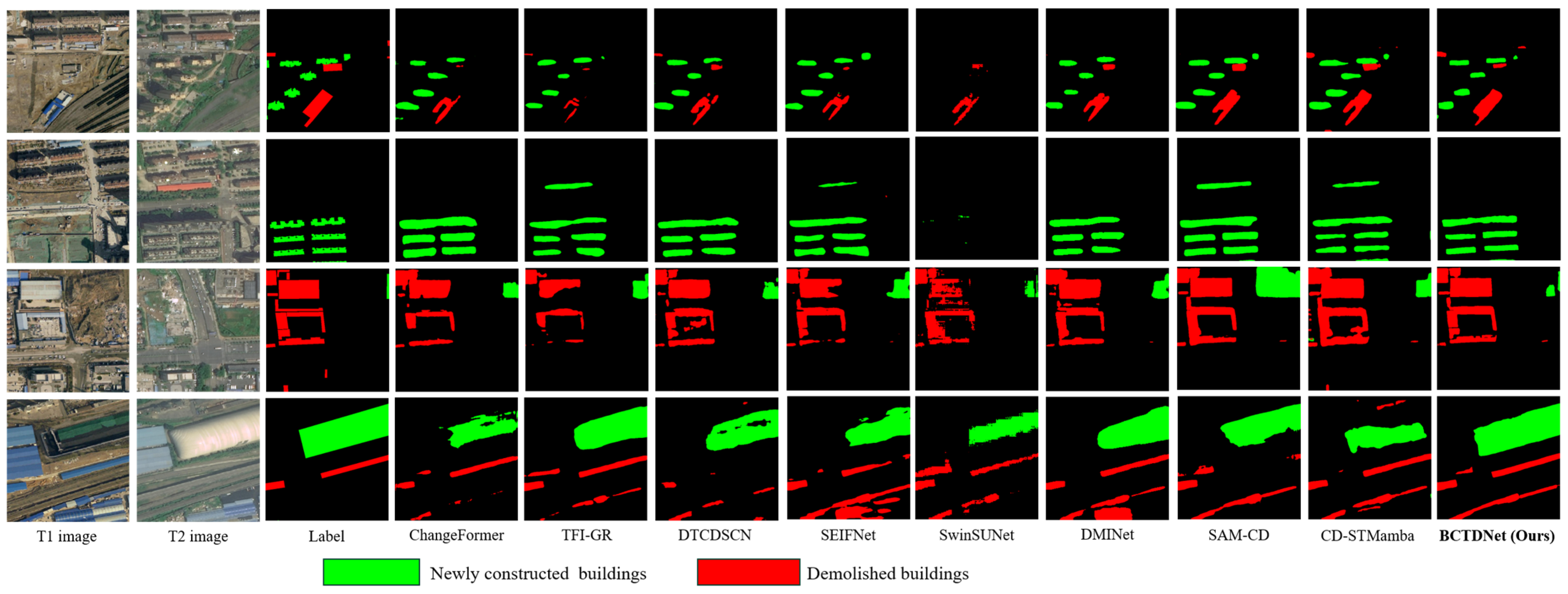

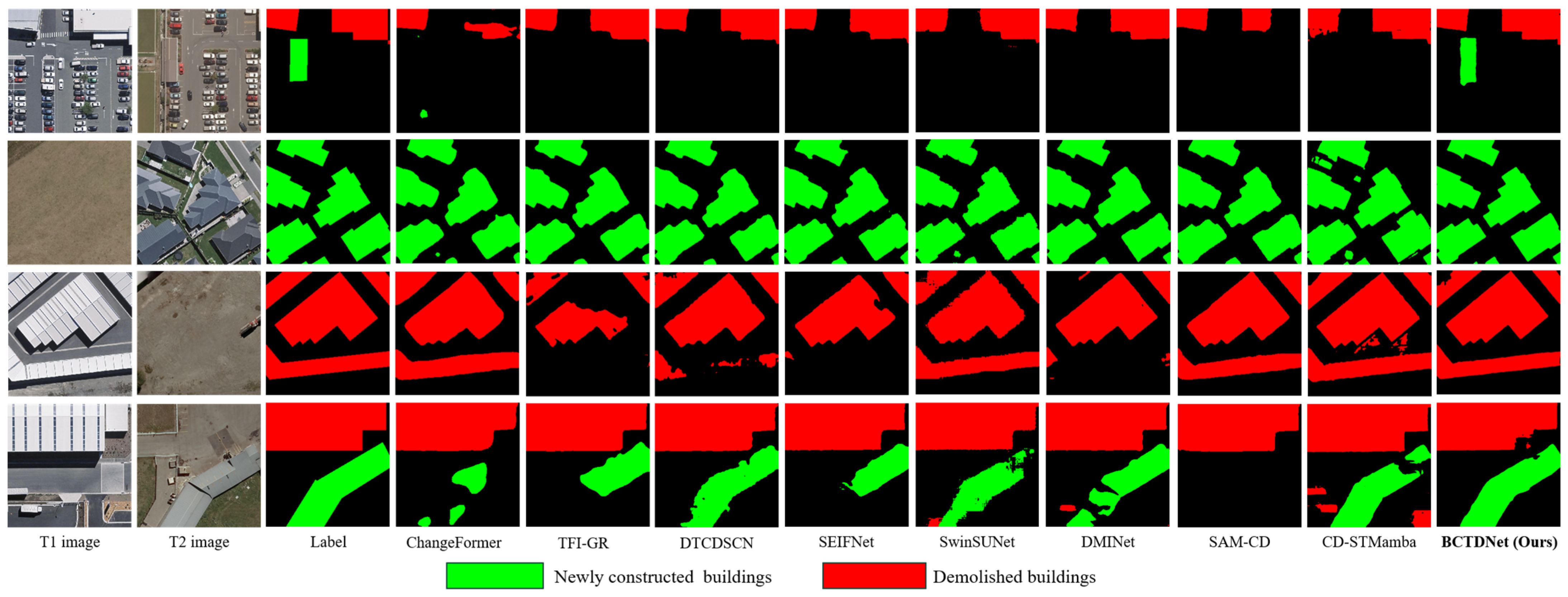
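The results tables report IoU, F1, precision (Pre), and recall (Rec). As a minimal sketch, these can be computed per change type from pixel counts using the standard definitions. The class ids, the function names, and the unweighted averaging over the two change types are assumptions made for illustration, not the authors' evaluation code.

```python
import numpy as np


def binary_metrics(pred, target) -> dict:
    """IoU, F1, precision, and recall for one class from boolean masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.sum(pred & target))
    fp = int(np.sum(pred & ~target))
    fn = int(np.sum(~pred & target))
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"IoU": iou, "F1": f1, "Pre": pre, "Rec": rec}


def change_type_metrics(pred_map, target_map, class_ids=(1, 2)):
    """Per-class metrics plus their unweighted mean (averaging is an assumption)."""
    pred_map = np.asarray(pred_map)
    target_map = np.asarray(target_map)
    per_class = {
        c: binary_metrics(pred_map == c, target_map == c) for c in class_ids
    }
    mean = {
        k: float(np.mean([m[k] for m in per_class.values()]))
        for k in ("IoU", "F1", "Pre", "Rec")
    }
    return per_class, mean


# Toy maps: 0 = unchanged, 1 = newly constructed, 2 = demolished (hypothetical ids).
pred = [[1, 1, 0, 2], [0, 2, 2, 0]]
target = [[1, 0, 0, 2], [0, 2, 0, 0]]
per_class, mean = change_type_metrics(pred, target)
print(per_class)
print(mean)
```

In this toy case each class has one false positive and no false negatives, so recall is 1.0 for both classes while precision and IoU differ with the number of true positives.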

4.4. Experimental Results

4.5. Ablation Studies

4.5.1. The Effectiveness of the Dual-Feature Interaction Encoder

4.5.2. The Impact of the Interactive Attention Module

4.5.3. The Effectiveness of the Attribute-Aware Strategy

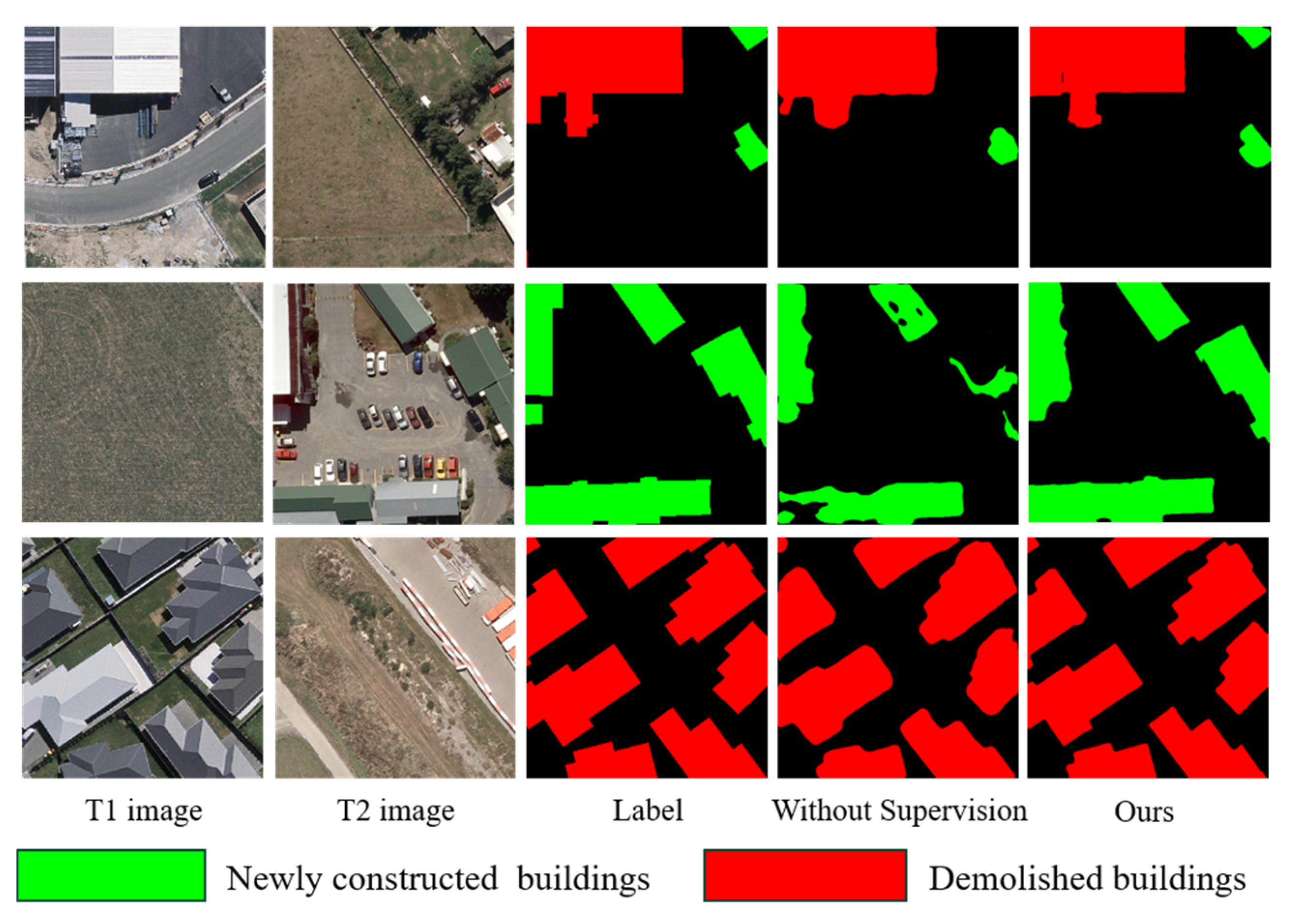

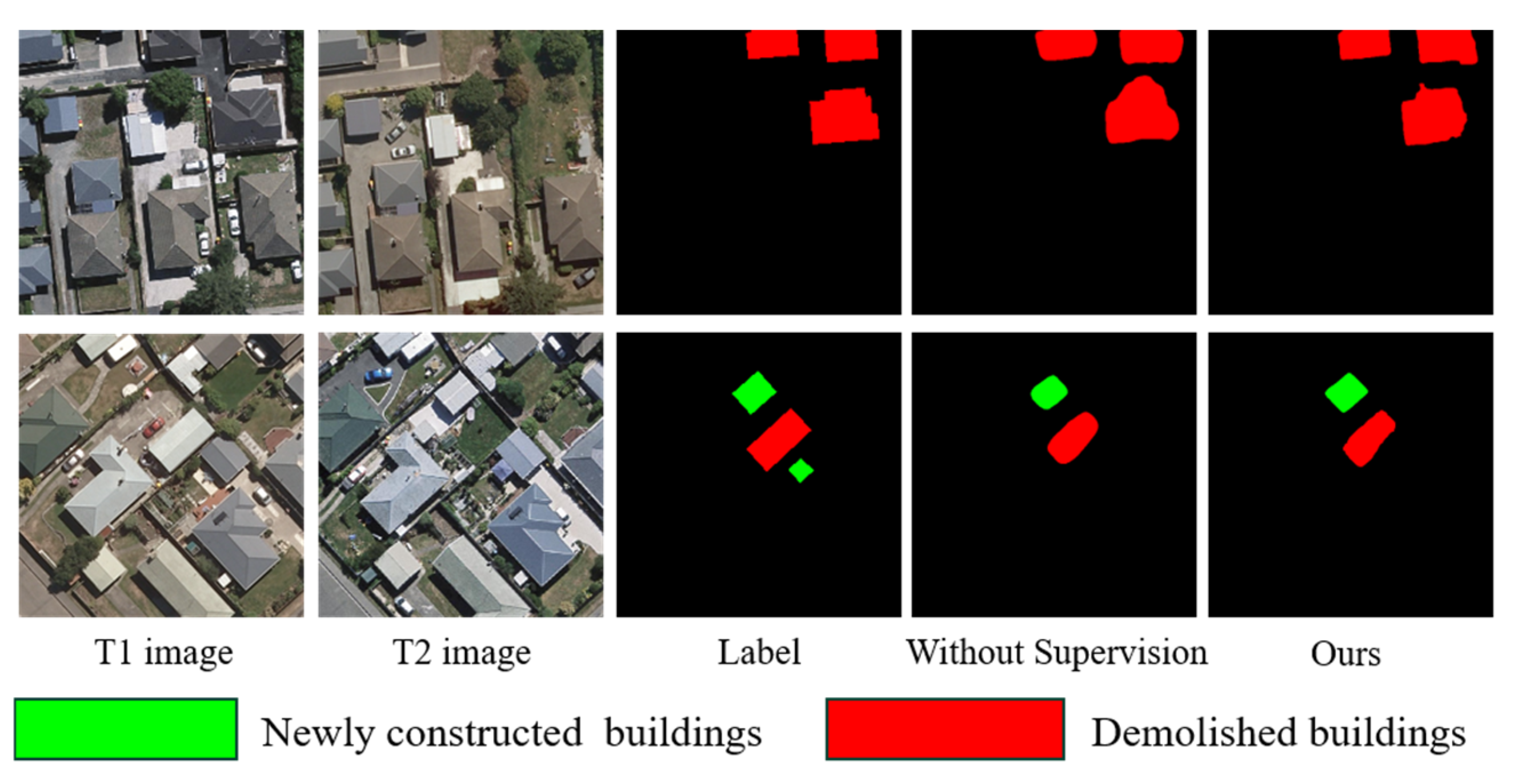

4.5.4. The Impact of the Supervision of Extraction Decoding Networks

5. Discussion

5.1. Visualization Performance in Complex Environments

5.2. Interpretability of Independent Ablation Studies

5.3. Effectiveness of the Temporal Augmentation Strategy on the WHU-MCD Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BCTDNet | building change-type detection network |
| SAM | Segment Anything Model |
| CNN | Convolutional Neural Network |
| BCD | binary change detection |
| FCNs | fully convolutional networks |
| SSMs | state space models |
| IAM | interactive attention module |
| AAS | attribute-aware strategy |
| BN | batch normalization |
| GELU | Gaussian Error Linear Unit |
| RSIs | remote sensing images |
| MLP | Multi-Layer Perceptron |


| Source Image | Coverage | Resolution | Number of Bands | Temporal Subset | Image Size | Train/Test | Change-Type Scale |
|---|---|---|---|---|---|---|---|
| 2017 | 50 km² | 0.5 m/pixel | 3 (RGB) | 2017–2018 | 512 × 512 | 13,639/1608 | Newly constructed buildings (Number: 30,598; Area: 12.59 km²); demolished buildings (Number: 25,616; Area: 8.32 km²) |
| 2018 | | | | 2018–2019 | | | |
| 2019 | | | | 2019–2021 | | | |
| 2021 | | | | 2021–2022 | | | |
| 2022 | | | | 2022–2023 | | | |
| 2023 | | | | 2017–2023 | | | |

| Coverage | Resolution | Number of Bands | Temporal Subset | Image Size | Train/Test | Change-Type Scale |
|---|---|---|---|---|---|---|
| 20.5 km² | 0.3 m/pixel | 3 (RGB) | 2012–2016 | 256 × 256 | 6096/1524 | Newly constructed buildings (Number: 3054; Area: 0.89 km²); demolished buildings (Number: 2577; Area: 0.85 km²) |

| Method | Param. (M) | FLOPs (G) | IoU (JINAN-MCD) | F1 (JINAN-MCD) | Pre (JINAN-MCD) | Rec (JINAN-MCD) | IoU (WHU-MCD) | F1 (WHU-MCD) | Pre (WHU-MCD) | Rec (WHU-MCD) |
|---|---|---|---|---|---|---|---|---|---|---|
| ChangeFormer | 41.03 | 202.79 | 30.59 | 46.80 | 64.20 | 36.90 | 69.36 | 81.82 | 87.24 | 77.08 |
| TFI-GR | 28.37 | 20.37 | 26.84 | 42.32 | 72.48 | 30.02 | 77.73 | 87.39 | 90.26 | 84.72 |
| DTCDSCN | 41.07 | 15.24 | 36.03 | 52.95 | 67.70 | 43.53 | 77.85 | 87.53 | 92.58 | 83.09 |
| SEIFNet | 8.38 | 27.91 | 35.29 | 52.12 | 64.16 | 44.03 | 76.57 | 86.64 | 92.89 | 81.38 |
| SwinSUNet | 43.57 | 12.43 | 22.09 | 35.71 | 52.45 | 27.40 | 81.76 | 89.95 | 90.25 | 89.66 |
| DMINet | 6.76 | 17.43 | 33.39 | 50.04 | 69.81 | 39.07 | 78.42 | 87.85 | 92.43 | 83.78 |
| SAM-CD | 5.49 | 39.06 | 39.63 | 56.69 | 59.07 | 54.89 | 80.60 | 89.24 | 91.67 | 86.95 |
| CD-STMamba | 63.33 | 67.00 | 40.78 | 57.86 | 56.25 | 59.72 | 72.13 | 83.81 | 78.06 | 90.50 |
| BCTDNet (Ours) | 118.14 | 79.20 | 53.42 | 69.81 | 64.50 | 75.90 | 84.47 | 91.57 | 92.95 | 90.04 |

| Method | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | Pre (Newly Constructed Buildings) | Rec (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) | Pre (Demolished Buildings) | Rec (Demolished Buildings) |
|---|---|---|---|---|---|---|---|---|
| ChangeFormer | 28.28 | 44.09 | 57.46 | 35.77 | 32.90 | 49.51 | 70.93 | 38.03 |
| TFI-GR | 25.95 | 41.21 | 64.25 | 30.33 | 27.73 | 43.42 | 80.71 | 29.70 |
| DTCDSCN | 34.29 | 51.07 | 62.94 | 42.97 | 37.76 | 54.82 | 72.45 | 44.09 |
| SEIFNet | 32.79 | 49.39 | 57.53 | 43.27 | 37.78 | 54.84 | 70.70 | 44.79 |
| SwinSUNet | 15.52 | 26.87 | 45.90 | 19.00 | 28.66 | 44.55 | 59.00 | 35.79 |
| DMINet | 31.94 | 48.41 | 64.41 | 38.78 | 34.83 | 51.67 | 75.20 | 39.36 |
| SAM-CD | 36.37 | 53.33 | 51.83 | 54.93 | 42.90 | 60.04 | 66.31 | 54.86 |
| CD-STMamba | 37.52 | 54.56 | 55.04 | 54.09 | 44.05 | 61.16 | 57.47 | 65.34 |
| BCTDNet (Ours) | 53.54 | 70.09 | 66.70 | 73.04 | 53.30 | 69.53 | 62.30 | 78.75 |

| Method | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | Pre (Newly Constructed Buildings) | Rec (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) | Pre (Demolished Buildings) | Rec (Demolished Buildings) |
|---|---|---|---|---|---|---|---|---|
| ChangeFormer | 74.01 | 85.06 | 89.18 | 81.31 | 64.71 | 78.58 | 85.29 | 72.84 |
| TFI-GR | 82.71 | 90.54 | 92.19 | 88.94 | 72.75 | 84.23 | 88.33 | 80.49 |
| DTCDSCN | 80.36 | 89.11 | 91.88 | 86.50 | 75.34 | 85.94 | 93.27 | 79.68 |
| SEIFNet | 81.40 | 89.74 | 92.66 | 87.01 | 71.73 | 83.54 | 93.12 | 75.75 |
| SwinSUNet | 84.33 | 91.50 | 92.79 | 90.24 | 79.19 | 88.39 | 87.71 | 89.08 |
| DMINet | 82.20 | 90.23 | 92.74 | 87.84 | 74.63 | 85.47 | 92.11 | 79.72 |
| SAM-CD | 83.01 | 90.72 | 92.37 | 89.12 | 78.19 | 87.76 | 90.97 | 84.77 |
| CD-STMamba | 73.36 | 84.63 | 77.82 | 92.74 | 70.91 | 82.98 | 78.29 | 88.26 |
| BCTDNet (Ours) | 86.61 | 92.83 | 94.59 | 91.10 | 82.34 | 90.31 | 91.66 | 88.98 |

| Encoder | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| Only CNN | 79.05 | 86.57 | 80.00 | 87.62 | 78.09 | 85.51 |
| Only SAM | 81.32 | 88.60 | 83.15 | 90.00 | 79.49 | 87.20 |
| Our Encoder | 84.47 | 91.57 | 86.61 | 92.83 | 82.34 | 90.31 |

| Feature Fusion | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| Addition | 51.47 | 67.93 | 51.62 | 68.39 | 51.32 | 67.47 |
| Concatenation | 51.34 | 67.85 | 51.83 | 68.27 | 50.86 | 67.42 |
| IAM | 53.42 | 69.81 | 53.54 | 70.09 | 53.30 | 69.53 |

| Feature Fusion | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| Addition | 82.85 | 89.30 | 84.31 | 90.95 | 81.39 | 87.65 |
| Concatenation | 82.00 | 90.08 | 84.81 | 91.78 | 79.20 | 88.39 |
| IAM | 84.47 | 91.57 | 86.61 | 92.83 | 82.34 | 90.31 |

| Module | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| - | 50.32 | 66.86 | 51.19 | 67.72 | 49.27 | 66.01 |
| Multi-task segmentation branches | 51.58 | 68.07 | 52.19 | 68.59 | 50.97 | 67.55 |
| AAS | 53.42 | 69.81 | 53.54 | 70.09 | 53.30 | 69.53 |

| Module | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| - | 81.28 | 89.64 | 84.44 | 91.57 | 78.12 | 87.71 |
| Multi-task segmentation branches | 82.19 | 90.21 | 84.52 | 91.61 | 79.86 | 88.80 |
| AAS | 84.47 | 91.57 | 86.61 | 92.83 | 82.34 | 90.31 |

| Supervising Extraction Decoding Networks | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| - | 51.74 | 66.77 | 51.77 | 66.80 | 51.71 | 66.74 |
| √ | 53.42 | 69.81 | 53.54 | 70.09 | 53.30 | 69.53 |

| Supervising Extraction Decoding Networks | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| - | 83.37 | 89.96 | 86.09 | 90.64 | 80.65 | 89.28 |
| √ | 84.47 | 91.57 | 86.61 | 92.83 | 82.33 | 90.31 |

| Temporal Augmentation Strategy | IoU (Change-Type Detection) | F1 (Change-Type Detection) | IoU (Newly Constructed Buildings) | F1 (Newly Constructed Buildings) | IoU (Demolished Buildings) | F1 (Demolished Buildings) |
|---|---|---|---|---|---|---|
| - | 72.31 | 83.05 | 87.31 | 93.22 | 57.32 | 72.87 |
| √ | 84.47 | 91.57 | 86.61 | 92.83 | 82.34 | 90.31 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Li, J.; Wang, S.; Wan, J. BCTDNet: Building Change-Type Detection Networks with the Segment Anything Model in Remote Sensing Images. Remote Sens. 2025, 17, 2742. https://doi.org/10.3390/rs17152742
