Abstract

The number of CNN application areas is growing, which increases the demand for training data. The research conducted in this work aimed to obtain effective detection models trained only on simplified synthetic objects (SSOs). The research was conducted in inland shallow water areas, and images of bottom objects were obtained using a UAV platform. The work consisted of preparing SSOs, from which composite images were created. On such training data, 120 models based on the YOLO (You Only Look Once) network were obtained. The study confirmed the effectiveness of models created using YOLOv3, YOLOv5, YOLOv8, YOLOv9, and YOLOv10. A comparison was made between versions of YOLO. The influence of the amount of training data, SSO type, and augmentation parameters used in the training process was analyzed. The main parameter of model performance was the F1-score. The calculated statistics of individual models indicate that the most effective networks use partial augmentation and are trained on sets consisting of 2000 SSOs. In addition, increasing the transparency of SSOs increased the diversity of the training data and improved model performance. This research is developmental, and further research should improve the processes of obtaining detection models using deep networks.

1. Introduction

The increasing progress in the development of deep networks has resulted in numerous publications in recent years relating to the use of machine learning [1]. One of the environments in which such work is carried out is shallow water areas. In particular, convolutional neural networks (CNNs) allow for the development of research related to the habitats of marine animals and plants [2,3,4,5,6,7,8]. The use of deep learning allows for the development of techniques related to object detection, as well as improving the quality of images [4,5,9,10]. The ocean is still largely unmapped, and although only about 1% of the ocean is shallower than 10 m, it is still an important area of research [11,12].

Among interesting applications, it is worth mentioning the monitoring of animal populations [2,13,14], detection of their habitat areas [3,4,8], monitoring of underwater plant vegetation [15,16,17], underwater boulder mapping [18,19], and control of water pollution [20,21,22]. When working with artificial intelligence-based networks, one of the leading problems is the provision of large training sets [8,23,24]. The large number of labeled images that must be provided to a deep network has led to the search for alternative solutions. One such solution is the use of synthetic data. This type of data can be obtained in many ways, which can be divided into rule-based simulation and generative AI simulation [25,26,27,28,29,30,31,32].

Many publications refer to the use of synthetic data to obtain neural networks for detection purposes. Synthetic data are created by processes ranging from simple image processing to the complex generation of 3D objects [26,33]. They can also contain generated backgrounds or real images [34]. Extremely realistic synthetic objects are generated, for example, in research related to the inspection of mechanical objects, where the search focuses on details and defects [33,35]. In these studies, the researchers focused on developing a robust system for creating synthetic or composite images. Computer programs such as Blender allowed for the creation of objects nearly identical to real-world objects. Automation allowed for automatic labeling and the introduction of changes related to lighting, positioning, and texture. The primary goal of such solutions is to create reliable datasets.

In contrast to this approach, works on objects in shallow water reservoirs often contain elements related to improving the quality of images. The aquatic environment is an extremely variable and difficult area to work in. The use of remote sensing images is limited by the range of electromagnetic radiation penetration; hence, there are works focusing on the detection of objects from sonar images [12,18,19,27,36,37]. Such images do not provide natural object colors and have limitations in the case of flat objects, and the use of sonar in shallow water areas can be problematic. In the case of traditional photos taken by an unmanned aerial vehicle (UAV), as well as underwater images, there are factors that make it difficult to obtain remote sensing data, such as reflections, refraction and dispersion of light, pollution in the water, and currents that dynamically change the environmental conditions [3,9,10,38,39,40,41,42]. A number of factors, more or less locally diverse, must be taken into account in such studies. In these studies, the influence of water refers to factors such as water waves and depth, which affect the color and shape of the object. Additionally, the images show reflections and bottom sediments obscuring the objects.

In this article, attention was focused on obtaining images using unmanned aerial vehicles (UAVs) and detecting objects in shallow inland waters. The small amount of available training data led to the use of synthetic data. The factors mentioned above, i.e., the dynamics and unpredictability of changes in the aquatic environment, make it very difficult to generate a realistic synthetic object. In this work, the spatial resolution of remote sensing images was also a limitation that influenced the visualization of underwater objects observed from an aerial platform. This is mainly caused by image pixelization and depends on the altitude of the remote sensing platform and the specifications of the optical sensor. These factors led to the proposal to use simplified synthetic objects. Such an SSO visually represents the simplified appearance of the object as captured by a UAV camera, rather than being a complex 3D model that realistically replicates the real one. Simplification allows for faster generation of this type of synthetic data, as well as easier adaptation to the environment in which the detection is carried out.

The main goal of this article was to develop a methodology for creating training datasets with simplified synthetic data of objects located at the bottom of inland water reservoirs, for the purpose of creating detection models based on deep networks. This topic involves certain challenges, limitations, and opportunities that are described later in this work. The partial goals achieved in the work were as follows:

- Development of simplified synthetic objects;

- Assessing the impact of type, quantity, and augmentation of SSOs on detection accuracy;

- Testing and validating the neural network models using real data to ensure their efficiency;

- Analyzing the effectiveness of the CNN model in locating ground control points in the orthoimage;

- Determining the neural network creation process to prepare the model that performs best in detecting the real underwater objects in the shallow water area.

The objects studied in this work were measuring disks used as ground control points (GCPs). These objects served only as experimental data for testing the method of creating composite images with simplified synthetic objects. Other works focus on the detection of GCPs strictly for georeferencing aerial images in land surveying, which was not the aim of this work; moreover, those works used real images of the objects in the detection process [43,44,45].

In this research, YOLO (You Only Look Once) networks were utilized as the detection model. YOLO networks are widely adopted and well documented in the scientific literature. Since the first version was introduced in 2015, YOLO models have evolved rapidly; YOLOv3 was released in 2018, and the latest version, YOLO12, reflects the continued advancement of this technology [46,47,48,49,50,51,52,53,54,55]. To apply the YOLO network to wide-area image data registered in a spatial coordinate system, we used ArcGIS software in the first stage of the study. In particular, this allowed us to automate the preparation of the test set (subdivision into smaller image chips) and then to detect objects within a single, uniform image. Because the software version used implements YOLOv3 due to licensing restrictions, we compared the detection performance of this model with models developed in Python 3.10.12 using YOLOv5, YOLOv6, YOLOv8, YOLOv9, YOLOv10, YOLO11, and YOLO12.

The YOLO series has become a leading framework for real-time object detection and has evolved over the years through successive improvements. Early YOLO versions laid the foundations of the series, primarily from a model design perspective. YOLOv4 and YOLOv5 introduced CSPNet, data augmentation, and multiple feature scales. YOLOv6 further expands on these solutions by introducing BiC and SimCSPSPPF modules for the backbone and neck, as well as anchor-aided training. Subsequently, YOLOv7 introduced E-ELAN (Extended Efficient Layer Aggregation Networks) for improved gradient flow and various “bag-of-freebies” methods. YOLOv8 integrates an efficient C2f block for better feature extraction, while YOLOv9 introduces GELAN for architecture optimization and PGI for improved training. YOLOv10 employs NMS-free training with dual assignments to improve performance, while YOLO11 further reduces latency and improves accuracy by using the C3k2 module and lightweight depthwise separable convolutions in the detection head. YOLO12 introduces an attention-centric architecture that departs from the traditional CNN-based approach while aiming to balance accuracy with real-time performance [54].

The research undertaken has relevance in terms of bridging the data availability gap for underwater object detection models, using simplified synthetic data as an alternative to fully synthetic and real data. The results may find applications in underwater monitoring, underwater archaeology, geographic research, environmental protection, and further development of neural detection methods based on this type of data.

2. Materials and Methods

In this section, the assumptions and parameters of the conducted research are discussed. The research problems resulting from the application of deep learning, discussed in the previous section, shaped the course of the research.

2.1. Research Methodology

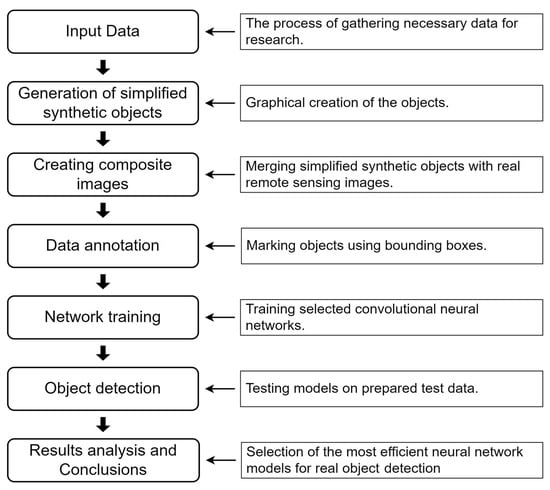

In our research, a series of activities, presented in Figure 1, was carried out with the aim of obtaining ready-made deep learning models for searching for objects at the bottom of water reservoirs. The diagram presents the basic stages of data preparation and processing in the study. Seven main elements have been identified, which constitute the subsections that follow. These subsections discuss the most important elements, concepts, and abbreviations used in this article.

Figure 1.

Flowchart of the process for obtaining underwater object detection models.

2.2. Input Data

The following image data were used for this study:

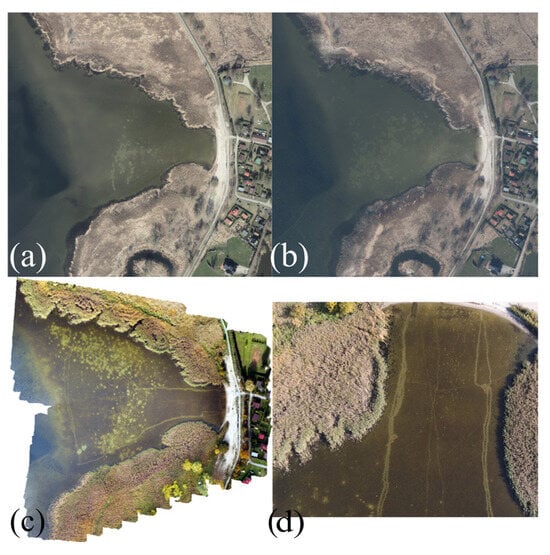

- Orthophotos from the resources of the Polish geoportal [56]: two images showing the same part of Dąbie Lake located in northwestern Poland. The images had a ground resolution of 5 cm and were taken in 2021 and 2022. They show the area of a shallow-water bay in natural colors. These data were the basis for fusion with simplified synthetic objects.

- Set of 129 images from the UAV photogrammetric mission: photos taken in October 2022, showing the previously mentioned area of Dąbie Lake in natural colors. A total of 152 real GCP instances are visible in the entire set of photos. These data served as a test set to check the effectiveness of the detection models.

- Orthophoto from a UAV photogrammetric mission: an orthoimage showing the same bay on the lake with a visible arrangement of 5 ground control points (GCPs). This image, taken in October 2022, had a ground resolution of 4.5 cm and a composition of natural colors. The GCPs detected by the CNN in this image were used to evaluate positional accuracy by calculating the distances between the actual GCP coordinates and the centers of the bounding boxes generated by the neural network. This allowed for a precise measurement of positional accuracy, highlighting the effectiveness of the CNN model in locating ground control points in the orthoimage.

The orthophotos used in the study and an example photo from the test set are shown in Figure 2.

Figure 2.

Raster data compilation used in the research: (a) aerial orthophoto (2021); (b) aerial orthophoto (2022); (c) UAV orthophoto (2022); (d) example of single UAV image (2022).

2.3. Simplified Synthetic Object Generation

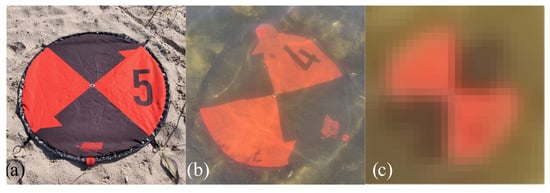

In this study, the generated objects represented the real ground control points (GCPs), which are well-recognizable objects in aerial imagery. The GCP used was a circular disk with a diameter of 1.1 m, featuring a four-field checkerboard pattern in two colors: black and orange. This type of GCP was visible in the UAV images and served as the basis for SSO generation.

The ground control points shown in Figure 3 have a digit visible on one of the fields and a distinct triangular notch in the orange area within the black field. These features of the GCP were not represented in the SSOs (Figure 4). This was because they were created based on the UAV orthophoto image. Due to the resolution and the influence of the water layer, the aforementioned features of the GCP are not visible in the imagery (Figure 3c) and were not reproduced on the SSOs.

Figure 3.

Presentation of a GCP in the field and in a photo from a UAV: (a) GCP located on land; (b) GCP at the bottom of the reservoir (photo taken with a camera); (c) GCP visible in the UAV aerial image (altitude 80 m).

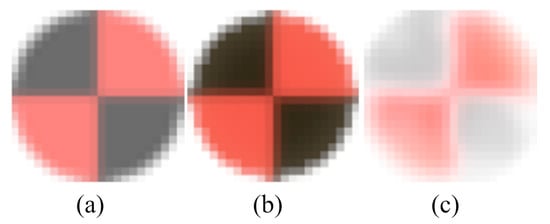

Figure 4.

Three types of simplified synthetic objects used in the study: (a) Type A GCP; (b) Type B GCP; (c) Type C GCP.

The process of generating synthetic ground control points involved using GIMP (2.10.34) and Inkscape (0.92.0 r15299). The object was initially created in Inkscape as four quarters of a circle, two orange and two black, forming a checkerboard pattern, using the Circle tool with no contour and a solid color fill. The colors were picked with the Color Picker tool from the GCP image obtained from the drone.

After exporting the graphic to PNG format, the GCP image resolution was reduced to 22 by 22 pixels, matching the size of the GCP in the UAV images.

The next stage was the preparation of three types of artificial ground control points, which were processed using Opacity tools. Opacity has been set to 80% for all object types. In this way, a Type A GCP was created.

Based on this foundation, a background layer was added under the synthetic GCP with a color similar to the reservoir bed visible in Figure 3c (HTML: a58953), using a circular gradient that brightens outward (HTML: c8b694). This background shows through from under the partially transparent Type A GCP. In this way, a Type B GCP is obtained, which has the lowest transparency.

The last type of GCP was created using GCP Type A as a base and reducing the opacity of the black fields to 65%. A blur was then applied. The resulting objects are presented in Figure 4.
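The same construction can be expressed programmatically. The NumPy sketch below builds 22 × 22 RGBA versions of the three SSO types: a four-quarter checkerboard disk at 80% opacity (Type A), Type A composited over a sandy radial gradient from a58953 to c8b694 (Type B), and Type A with the black fields at 65% opacity followed by a blur (Type C). It is an illustration, not the GIMP/Inkscape workflow used in the study: the orange and black RGB values, the opacity of the gradient layer, and the 3 × 3 box blur are assumptions, because the text does not report them.

```python
import numpy as np

# Hypothetical colors: the study sampled them from the UAV image, values not reported.
ORANGE = np.array([230.0, 120.0, 30.0])
BLACK = np.array([20.0, 20.0, 20.0])
BED_CENTER = np.array([0xA5, 0x89, 0x53], dtype=float)  # a58953, from the text
BED_EDGE = np.array([0xC8, 0xB6, 0x94], dtype=float)    # c8b694, from the text
SIZE = 22  # SSO size in pixels, matching the GCP size in the UAV images


def _grid(size=SIZE):
    """Radius map, disk mask, and mask of the two orange quarters."""
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size]
    dx, dy = x - c, y - c
    r = np.hypot(dx, dy)
    disk = r <= size / 2.0
    orange = (dx >= 0) == (dy < 0)  # diagonal quarters form the checkerboard
    return r, disk, orange


def over(fg, bg):
    """Porter-Duff 'over' for float RGBA arrays (RGB 0-255, alpha 0-1)."""
    fa, ba = fg[..., 3:], bg[..., 3:]
    oa = fa + ba * (1.0 - fa)
    rgb = fg[..., :3] * fa + bg[..., :3] * ba * (1.0 - fa)
    rgb = np.divide(rgb, oa, out=np.zeros_like(rgb), where=oa > 0)
    return np.concatenate([rgb, oa], axis=-1)


def type_a(opacity=0.8, black_opacity=None):
    """Type A: checkerboard disk with uniform 80% opacity."""
    _, disk, orange = _grid()
    rgba = np.zeros((SIZE, SIZE, 4))
    rgba[..., :3] = np.where(orange[..., None], ORANGE, BLACK)
    alpha = np.full((SIZE, SIZE), opacity)
    if black_opacity is not None:
        alpha[~orange] = black_opacity  # used by Type C
    rgba[..., 3] = alpha * disk
    return rgba


def type_b(bg_alpha=0.7):
    """Type B: Type A over a radial gradient that brightens outward.
    bg_alpha is a hypothetical value for the gradient layer's opacity."""
    r, disk, _ = _grid()
    t = np.clip(r / (SIZE / 2.0), 0.0, 1.0)[..., None]
    bg = np.zeros((SIZE, SIZE, 4))
    bg[..., :3] = BED_CENTER * (1.0 - t) + BED_EDGE * t
    bg[..., 3] = bg_alpha * disk
    return over(type_a(), bg)


def box_blur(rgba, k=3):
    """k x k box blur on premultiplied RGBA (GIMP's blur type is not stated)."""
    h, w = rgba.shape[:2]
    pre = np.concatenate([rgba[..., :3] * rgba[..., 3:], rgba[..., 3:]], axis=-1)
    pad = k // 2
    p = np.pad(pre, ((pad, pad), (pad, pad), (0, 0)))
    acc = sum(p[i:i + h, j:j + w] for i in range(k) for j in range(k)) / (k * k)
    alpha = acc[..., 3:]
    rgb = np.divide(acc[..., :3], alpha, out=np.zeros_like(acc[..., :3]), where=alpha > 0)
    return np.concatenate([rgb, alpha], axis=-1)


def type_c():
    """Type C: Type A with black fields at 65% opacity, then blurred edges."""
    return box_blur(type_a(black_opacity=0.65))
```

Compositing the gradient under Type A raises the alpha inside the disk, which matches the description of Type B as the least transparent object, while the blur in Type C spreads a faint alpha just outside the original contour.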

Despite the GCP being a highly characteristic object due to its regular circular shape and color scheme, simple graphic modifications were applied to enhance the quality of training data and illustrate the impact of the water layer above the object.

- GCP Type A: described with the abbreviation Type A or just letter “A”, has partial transparency;

- GCP Type B: described with the abbreviation Type B or just letter “B”, has a gradient that reduces transparency and gives a sandy color;

- GCP Type C: described with the abbreviation Type C or just letter “C”, an object with the highest transparency, especially increased in the black area, and blurred edges.

The simplified synthetic objects vary slightly in their circularity, reflecting the fact that the contours of underwater objects can be blurred by water impurities, vegetation, sediment layers, or light reflections.

2.4. Creating Image Data with SSOs

The creation of images, combining the base layer of orthoimages from 2021 and 2022 with the three types of ground control points, was conducted using Python scripts. The choice of underlying images influenced the diversity of synthetic ground control points due to variations in lighting and color saturation characteristic of these images. The general aim was to generate composite images containing a specific quantity of one type of synthetic GCP. Given that deep learning requires sufficient training data, the study employed four quantities of simplified synthetic GCPs on composite images: 500, 1000, 2000, and 4000. These SSOs were distributed across two images and are summarized in Table 1.

Table 1.

Presentation of a method for creating synthetic images constituting training data.

An example composite image created from GCP Type C with 500 objects on the 2021 orthoimage is presented in Figure 5. Due to the large extent of the terrain, the SSOs are not clearly visible, hence the fragment showing a close-up view of the objects.

Figure 5.

Composite image with zoomed-in area showing random distribution of Type C GCPs.

Composite Image Generation Principles

The algorithm used for creating composite images aimed to expedite the process while adhering to specific assumptions regarding the placement of synthetic GCPs on the image:

- Non-overlapping objects: ground control points must not overlap each other. This rule ensures that the simplified synthetic GCPs mimic the arrangement observed in the UAV imagery, where each GCP is distinctly positioned without overlap.

- Random rotation of objects: each GCP was randomly rotated to simulate real-world variability. This rotation adds diversity to the simplified synthetic GCPs, reflecting the natural variability in the orientation of objects in the environment.

- Objects distributed in water areas: the simplified synthetic ground control points were placed specifically in the water areas of the lake. This condition replicates the detection of underwater objects, ensuring that the synthetic images accurately represent the environment where the GCPs would be detected.

- Inclusion of transparency in synthetic ground control points: simplified synthetic objects were created with transparency, represented by an alpha channel. This transparency allows the underlying orthoimage to influence the appearance of the simplified synthetic GCP, resulting in variations in coloration depending on the underlying surface.

The assumptions adopted for generating simplified synthetic images were aimed at testing the impact of different types of artificial GCPs in the same environment, on the same background data, and taking into account the basic properties of the detected object.
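The four placement rules can be illustrated with a short rejection-sampling sketch: a candidate position is accepted only if the whole sprite lies on water pixels and its center is far enough from every previously placed SSO; the accepted sprite is then randomly rotated and alpha-blended onto the orthoimage. This is an illustrative reconstruction, not the authors' script; the function names, the nearest-neighbour rotation, and the conservative spacing rule (centers at least one sprite diagonal apart) are assumptions.

```python
import math
import random

import numpy as np


def rotate_nearest(sprite, angle_deg):
    """Rotate an RGBA sprite about its center (nearest neighbour, same size)."""
    h, w = sprite.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(angle_deg)
    y, x = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up its source pixel.
    sx = np.cos(a) * (x - cx) + np.sin(a) * (y - cy) + cx
    sy = -np.sin(a) * (x - cx) + np.cos(a) * (y - cy) + cy
    sx, sy = np.rint(sx).astype(int), np.rint(sy).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(sprite)
    out[valid] = sprite[sy[valid], sx[valid]]
    return out


def place_ssos(base, water, sprite, n, seed=0, max_tries=100_000):
    """Paste up to n randomly rotated, non-overlapping SSOs onto water pixels.

    base: H x W x 3 image; water: H x W boolean lake mask;
    sprite: h x w x 4 RGBA array with alpha in [0, 1].
    Returns the composite image and the (row, col) centers of the placed SSOs.
    """
    rng = random.Random(seed)
    out = base.astype(float)
    h, w = sprite.shape[:2]
    min_dist = math.hypot(h, w)  # circumscribed circles of two sprites cannot meet
    centers = []
    for _ in range(max_tries):
        if len(centers) == n:
            break
        r0 = rng.randrange(0, base.shape[0] - h + 1)
        c0 = rng.randrange(0, base.shape[1] - w + 1)
        if not water[r0:r0 + h, c0:c0 + w].all():
            continue  # objects in water: the whole footprint must lie in the lake
        cy, cx = r0 + h / 2.0, c0 + w / 2.0
        if any(math.hypot(cy - y, cx - x) < min_dist for y, x in centers):
            continue  # non-overlapping objects: reject placements that are too close
        rotated = rotate_nearest(sprite, rng.uniform(0.0, 360.0))  # random rotation
        alpha = rotated[..., 3:]
        patch = out[r0:r0 + h, c0:c0 + w]
        # transparency: alpha blending lets the orthoimage show through the SSO
        out[r0:r0 + h, c0:c0 + w] = rotated[..., :3] * alpha + patch * (1.0 - alpha)
        centers.append((cy, cx))
    return out, centers
```

Because the centers are returned, the same run also yields the known SSO coordinates needed later for automatic labeling.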

2.5. Preparation of Training Sequences

At the stage of preparing the training sequences, ArcGIS Pro 3.3.1 software was used along with the required machine learning extensions. The aim was to create datasets for training deep networks on image chips, i.e., small images extracted from a larger source image. The source images in this case were the composite images obtained in the previous stage.

ArcGIS Pro includes a pre-defined tool called Export Training Data For Deep Learning, designed specifically for generating image chips. This tool allows users to define bounding boxes around the objects of interest and assign appropriate labels. In this context, the bounding box was configured as a circle with a diameter of 1.2 m, which encompassed the detected object along with a small margin. The marking process was automated using a script due to the large volume of data and the known coordinates of synthetic ground control points on the generated images.
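Because the synthetic GCP centers are known in map coordinates, labeling reduces to converting each center into a pixel-space box. A minimal sketch, assuming a north-up raster with a known upper-left origin and square pixels (5 cm for the aerial orthophotos), is shown below; `GeoTransform` and `bbox_from_center` are hypothetical names, not part of the ArcGIS Pro API, and the script actually used with Export Training Data For Deep Learning is not given in the text.

```python
from dataclasses import dataclass


@dataclass
class GeoTransform:
    """North-up raster georeferencing (hypothetical helper)."""
    x_origin: float    # map x of the upper-left corner
    y_origin: float    # map y of the upper-left corner
    pixel_size: float  # ground sampling distance in meters, e.g. 0.05


def bbox_from_center(x, y, gt, diameter_m=1.2):
    """Pixel box (xmin, ymin, xmax, ymax) around a circle of the given
    diameter centered at map coordinates (x, y)."""
    col = (x - gt.x_origin) / gt.pixel_size
    row = (gt.y_origin - y) / gt.pixel_size  # image rows increase southward
    half = diameter_m / 2.0 / gt.pixel_size
    return (col - half, row - half, col + half, row + half)
```

At 5 cm resolution the 1.2 m circle corresponds to a box of about 24 × 24 pixels, slightly larger than the 22-pixel SSO, which leaves the small margin mentioned above.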

The Export Training Data For Deep Learning tool in ArcGIS Pro facilitated the extraction of image chips with the specified bounding boxes and labels, providing datasets ready for further processing.

2.6. Network Training

The network training process was carried out with the Train Deep Learning Model tool. At this stage, a large number of hyperparameters related to the learning process, the type of model used, and the hardware can be set.

For the purposes of the experiment, an object detection model based on the convolutional neural network (CNN) architecture called YOLOv3 was used. YOLOv3 was chosen because it is implemented in ArcGIS Pro, the primary platform for conducting the research, which allows easy preparation of training datasets and performs object detection on large images.

YOLOv3 is based on a CNN architecture and is known for its speed and effectiveness in real-time object detection. The model divides the image into a grid and predicts bounding boxes and class probabilities for each grid cell.
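In YOLOv3 this grid prediction is made at three scales (strides of 32, 16, and 8 pixels) with three anchor boxes per cell, as described in the original YOLOv3 design. For the 416 × 416 chips used in this study, the short sketch below computes the grid sizes, the total number of candidate boxes per chip, and the number of output channels per scale for a single class (GCP); the function name is hypothetical.

```python
def yolov3_head_shapes(input_size=416, strides=(32, 16, 8), anchors_per_scale=3, num_classes=1):
    """Grid sizes, total predicted boxes, and output channels per scale for YOLOv3."""
    grids = [input_size // s for s in strides]
    total_boxes = sum(g * g * anchors_per_scale for g in grids)
    # each anchor predicts 4 box offsets + 1 objectness score + one score per class
    channels = anchors_per_scale * (4 + 1 + num_classes)
    return grids, total_boxes, channels


grids, total_boxes, channels = yolov3_head_shapes()
print(grids, total_boxes, channels)  # [13, 26, 52] 10647 18
```

The coarsest grid handles large objects and the finest handles small ones; at this chip size, a 22-pixel GCP is smaller than one 32-pixel cell of the coarsest grid.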

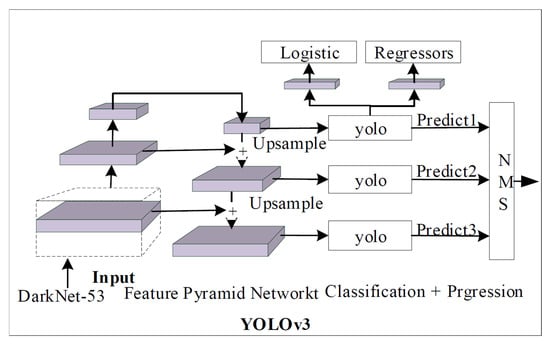

YOLOv3 consists of two main elements, i.e., a detection module and a deep base model (backbone model), which, in this case, is Darknet-53, consisting of 53 convolutional layers. The structure of this convolutional neural network is shown in Figure 6.

Figure 6.

YOLOv3 network framework. Based on figure from “Multi-Scale Ship Detection from SAR and Optical Imagery via A More Accurate YOLOv3” [49].

2.6.1. Description of Network Hyperparameters

In addition to the detection model, the tool offers other settings, including the number of epochs, batch size, learning rate, chip size, data augmentation, and validation set size. The models were trained with the “Stop when model stops improving” option enabled, which automatically ends the training process once the model stops improving. In practice, all models were trained for 60–100 epochs. The learning rate was not specified; hence, the software determined it from the learning curve during the training process. The value determined by the software varied depending on the model and was, on average, a learning rate of slice(0.00006, 0.0006). The chip size was set to 416 × 416 pixels, the validation set size to 10% (default value), and the batch size to 16. The monitored metric was validation loss. Some of these settings resulted from hardware limitations and the computing power of the computer. The device on which the tests were conducted had one GeForce RTX 3070 DUAL 8 GB V2 LHR GPU, an Intel Core i7-13700K (3.40 GHz, 16 total cores, 24 total threads) CPU, and 32 GB (DDR5, 6000 MHz) RAM.
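The text states only that training stopped once the monitored validation loss stopped improving; the exact criterion inside ArcGIS Pro is not given. As an illustration, a common patience-based early-stopping rule is sketched below; the `patience` and `min_delta` values are hypothetical and are not the software's settings.

```python
def stop_epoch(val_losses, patience=5, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None if it never stops early."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            # validation loss improved: remember it and reset the counter
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None
```

For example, with `patience=3` the loss sequence 1.0, 0.8, 0.7, 0.71, 0.72, 0.73 stops at epoch 6, three epochs after the best value.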

2.6.2. Research Series Based on Data Augmentation

In this work, 36 models using the YOLOv3 architecture were created. All models can be divided into 3 series, differing in augmentation parameters:

- Series 1: a set of 12 models for which no additional augmentation parameters were set. This series is marked with the letter “N”.

- Series 2: a set of 12 models in which some of the available augmentation parameters were used: brightness, contrast, and zoom, all with the software's default settings. This series is marked with the letter “P”.

- Series 3: a set of 12 models in which all available augmentation parameters were used: brightness, contrast, zoom, rotate, and crop, all with the software's default settings. This series is marked with the letter “F”.

A total of 36 models were obtained in the study, differing in settings, type of artificial GCP, and the amount of training data. All obtained networks, along with a description of the elements on which they were based, are presented in Table 2.
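The 36 YOLOv3 models form a full factorial design: 3 SSO types × 4 SSO quantities × 3 augmentation series. The sketch below enumerates that grid; the naming pattern (e.g., YC1000P for Type C, 1000 SSOs, partial augmentation) is inferred from the model name used in the results, and the dictionary layout is purely illustrative.

```python
from itertools import product

SSO_TYPES = ("A", "B", "C")
QUANTITIES = (500, 1000, 2000, 4000)
SERIES = {
    "N": (),                                                    # no augmentation
    "P": ("brightness", "contrast", "zoom"),                    # partial
    "F": ("brightness", "contrast", "zoom", "rotate", "crop"),  # full
}


def experiment_grid():
    """One entry per trained model: SSO type x quantity x augmentation series."""
    return [
        {"name": f"Y{t}{q}{s}", "sso_type": t, "n_sso": q, "augment": SERIES[s]}
        for t, q, s in product(SSO_TYPES, QUANTITIES, SERIES)
    ]
```

Each series therefore contains 3 × 4 = 12 models, which matches the series sizes listed above.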

Table 2.

List of prepared detection models.

2.7. Object Detection

The process of object detection was conducted using the Detect Objects Using Deep Learning tool. It utilized the 36 CNN-based models trained during the previous stages to detect objects on unmodified UAV images. The test set for all models consisted of 129 photos from one UAV mission (2022) and an orthophoto from another (2022). There were a total of 152 GCPs to be detected in the set of photos. The test data are described in Section 2.2. The results of this experiment are discussed in Section 3 and summarized in Table 3.

Table 3.

Network detection results on image set.

It should be noted that none of the data used to test the obtained models were part of the training set, which consisted only of composite images. Additionally, in the detection process, the area of each image was limited to the water area using masks, because the aim of the research was to detect shallow-water objects.

In the detection process, parameters that determine how the created models work can be set. The options available in the software and used here were padding, threshold, NMS overlap, batch size, non-maximum suppression, and exclude pad detections. In the study, padding was set to a quarter of the chip size, i.e., 104 pixels, while the threshold value was set to 0.6. NMS overlap refers to the maximum overlap ratio of two objects and was set to 0.1. The batch size was 4 due to hardware limitations. Non-maximum suppression limits the number of duplicate objects, and this setting was enabled in the study. The last parameter used was exclude pad detections, which excludes detections at the edges of image chips.
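A minimal sketch of how the threshold (0.6) and NMS overlap (0.1) settings could act on the raw detections of one image chip is shown below: detections below the confidence threshold are dropped, and a lower-scoring box is discarded if its IoU with an already kept box exceeds 0.1. This is a generic greedy NMS written for illustration, not the ArcGIS implementation, and the function names are hypothetical.

```python
CHIP_SIZE = 416
PADDING = CHIP_SIZE // 4  # 104 pixels, as used in the study


def iou(a, b):
    """Intersection over Union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, threshold=0.6, nms_overlap=0.1):
    """Return indices of detections kept after score thresholding and greedy NMS."""
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    for i in candidates:
        # keep a box only if it overlaps every already-kept box by at most nms_overlap
        if all(iou(boxes[i], boxes[j]) <= nms_overlap for j in keep):
            keep.append(i)
    return keep
```

With an overlap limit as low as 0.1, two boxes around the same disk are almost never both kept, which suits isolated objects such as GCPs that, by design, never overlap.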

3. Results

This section presents the obtained results of the detection models along with the basic method of testing their effectiveness. It also describes the method for evaluating the trained network and assessing its training level.

3.1. Validation and Testing

As mentioned earlier, the Train Deep Learning Model tool allows the percentage of data allocated to the validation set to be specified, which is crucial for assessing the quality of network training. We used 10% of the input data as the validation dataset.

After completing the training process, the Train Deep Learning Model tool provides a model summary, which includes the following metrics:

- Training loss: the average entropy loss function result computed across the training dataset;

- Validation loss: the average entropy loss function result calculated on the validation dataset using the trained model at each epoch;

- Average precision: the ratio of points in the validation data that were correctly classified by the model trained in a given epoch (true positives) to all points in the validation data.

3.2. Verification on Real Data

3.2.1. Performance Indicators of the Developed Models

The accuracy of detected objects was measured using the Compute Accuracy For Object Detection tool. Based on the known locations of the ground-truth bounding boxes and a minimum Intersection over Union (IOU) threshold, the tool counts correct detections, false detections, and missed detections. From these three counts, the precision, recall, F1-score, and AP coefficients are calculated. These parameters can be described as follows:

- Intersection over Union (IOU): a fundamental metric in object detection, quantifying the overlap between the predicted and ground-truth bounding boxes [57];

- True positive (TP): a correct detection of a ground-truth bounding box [57];

- False positive (FP): an incorrect detection of a nonexistent object or a misplaced detection of an existing object [57];

- False negative (FN): an undetected ground-truth bounding box [57];

- Precision: the percentage of correct positive predictions among all positive predictions [57];

- Recall: the percentage of correct positive predictions among all ground truths [57];

- F1-score: the harmonic mean of precision and recall [38];

- AP: the average precision over all recall values between 0 and 1, interpreted as the area under the precision–recall curve.
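For reference, the standard definitions behind the list above can be written as follows, where $B_p$ and $B_{gt}$ are the predicted and ground-truth boxes and $p(r)$ is precision as a function of recall; the exact interpolation used by the ArcGIS tool for AP is not stated in the text.

$$
\mathrm{IoU} = \frac{|B_{p} \cap B_{gt}|}{|B_{p} \cup B_{gt}|}, \qquad
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

$$
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}, \qquad
AP = \int_{0}^{1} p(r)\, dr
$$

The second form of $F_1$ shows directly why it balances FP and FN: both enter the denominator with equal weight.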

The IOU parameter in the Compute Accuracy For Object Detection tool was set to 10%. This means that a detection is counted as correct when the intersection of the predicted and ground-truth bounding boxes is at least 10% of their union. This value was deliberately set low because the study tested detection rather than precise localization, and higher IOU settings could cause some successful detections to be missed.
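The sketch below illustrates how TP, FP, and FN counts, and from them precision, recall, and F1, can be derived at the 10% IoU threshold used here. It assumes greedy one-to-one matching of score-sorted predictions to ground-truth boxes, a common convention; the internal matching rule of the Compute Accuracy For Object Detection tool is not described in the text, and the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate(preds, gts, min_iou=0.1):
    """preds: list of (box, score); gts: list of ground-truth boxes.
    Each ground truth can be matched by at most one prediction."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(gts):
            if j not in matched:
                v = iou(box, gt)
                if v > best_iou:
                    best_iou, best_j = v, j
        if best_j is not None and best_iou >= min_iou:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # unmatched predictions
    fn = len(gts) - tp    # unmatched ground truths
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"TP": tp, "FP": fp, "FN": fn, "precision": precision, "recall": recall, "F1": f1}
```

With `min_iou=0.1`, a 24-pixel box offset by half its width in both directions (IoU of about 0.14) still counts as a TP, whereas at 0.5 it would become an FP plus an FN, which is the effect the low threshold was chosen to avoid.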

In the rest of the article, the F1-score is used as the main parameter of model performance, as it combines precision and recall and thus best represents the relationship between TP, FN, and FP.

3.2.2. Evaluation of Model Effectiveness

The effectiveness of the models on real images was evaluated on two sources of image data. The first was the set of 129 real UAV photos, in which a total of 152 GCP instances could be detected, although only 5 physical GCPs were placed in the study area. UAV photos provide diversity owing to the different viewing angles relative to the ground control points, as well as light reflections from the water surface and its ripples. Table 3 presents a summary of the study performed on the set of 129 photos from the UAV flight (2022).

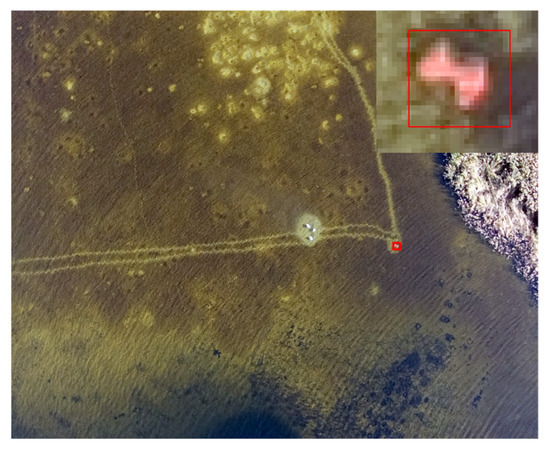

Figure 7 shows one of the photos from the test set. The size of the detected object relative to the image is visible. To better demonstrate how the network frames the detection, a fragment of the image has been enlarged.

Figure 7.

Example of GCP detection in an image from a UAV survey.

3.3. Analysis of the Accuracy of Determining the Location of Detected Objects

The orthophoto, which allows for the measurement of geospatial accuracy and includes five ground control points (GCPs), was used primarily as a source of information on the average detection deviation relative to points with known locations. Based on the obtained bounding boxes and their calculated centers, the distances to the actual centers of the ground control points were determined. Table 4 lists the basic parameters of the detection results for the 36 YOLOv3 models, together with the deviations for individual GCPs (dmean) and the average total deviation of each model (Δdmean). The true positive values indicate how many of the five ground control points each model detected, and thus from how many values the model's average deviation was calculated.
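The deviation computation described above can be sketched as follows, assuming that bounding boxes and GCP coordinates are expressed in the same projected coordinate system in meters and that each detection has already been assigned to its GCP. The function names are hypothetical; the per-model mean over the detected GCPs is meant to mirror the averaging described for Δdmean, and a model with no detections returns None, like the missing values in Table 4.

```python
import math
from statistics import mean


def bbox_center(bbox):
    """Center (x, y) of an (xmin, ymin, xmax, ymax) box."""
    return ((bbox[0] + bbox[2]) / 2.0, (bbox[1] + bbox[3]) / 2.0)


def deviation_cm(bbox, gcp_xy):
    """Planar distance in cm between a box center and a GCP (both in meters)."""
    cx, cy = bbox_center(bbox)
    return 100.0 * math.hypot(cx - gcp_xy[0], cy - gcp_xy[1])


def model_deviation(detections, gcps):
    """detections: {gcp_id: bbox} for the GCPs a model detected (its TPs);
    gcps: {gcp_id: (x, y)} surveyed coordinates.
    Returns per-GCP deviations and their mean, or None if nothing was detected."""
    per_gcp = {k: deviation_cm(b, gcps[k]) for k, b in detections.items()}
    return per_gcp, (mean(list(per_gcp.values())) if per_gcp else None)
```

Because only detected GCPs contribute, models with fewer TPs average over fewer points, which is why the TP count is reported alongside the mean deviation.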

Table 4.

Network detection results on the orthophoto along with deviations on individual GCPs and the calculated average deviation of the 36 YOLOv3 models.

Table 4 shows that the deviation could not be calculated for every model, because some models did not detect any of the GCPs. In total, the models detected 114 of the 180 possible ground control points (36 models × 5 GCPs). The deviation values in the obtained results ranged from 1.03 to 43.26 cm, with a mean of 12.15 cm and a median of 10.71 cm.

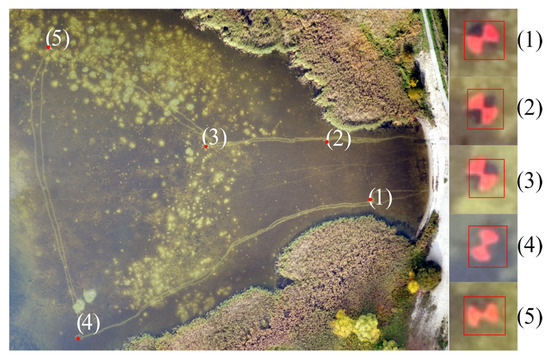

Figure 8 shows the bounding boxes obtained with the YC1000P model, as well as the arrangement of ground control points in the real image. The YC1000P model serves as an example of how the detection models work and was selected based on the data in Table 3.

Figure 8.

Five ground control points on the UAV image with bounding boxes. The nomenclature is consistent with Table 4: (1) GCP#1; (2) GCP#2; (3) GCP#3; (4) GCP#4; (5) GCP#5.

3.3.1. Analysis of GCP Detection on Orthoimage

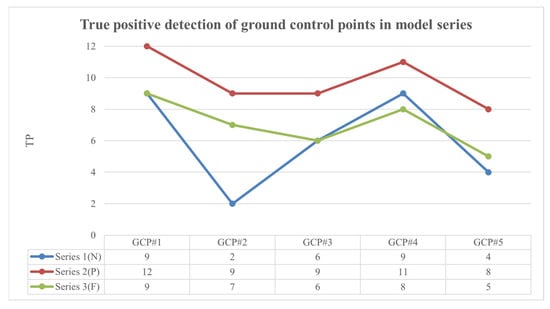

The number of TPs for individual ground control points was compared across each series. Such statistics allow for checking the influence of the applied augmentations on the detection of specific GCPs. They also make it possible to assess whether certain GCPs are detected significantly less often than others, which could be caused by the environment at the location of the objects or by the influence of the water layer above them. This comparison is presented in the graph in Figure 9.

Figure 9.

The influence of the location of ground control points on the number of true positives (TPs). Graph of the curves of three measurement series: series 1(N)—not augmented; series 2(P)—partial augmentation; series 3(F)—full augmentation.

The TP values of the model series show that GCP#1, located closest to the lake shore, was detected most often in all series. Further into the lake, the number of TPs decreases, with one exception: GCP#4, which was also detected considerably more often in all series. This may be due to the high contrast between the object and its background, as this GCP was located on the darkest part of the lake bottom among all the objects. The dark bottom is likely also the reason why this is the only case in which series 1 performed better than series 3.

Partial augmentation also proved to be the most effective approach in this scenario, improving detection accuracy, particularly under challenging conditions.

3.3.2. Analysis of Deviations from Detected Objects

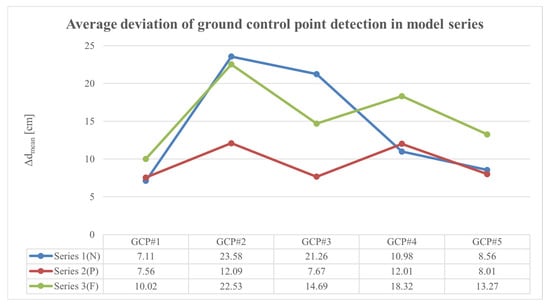

Next, the series of models were compared in terms of the mean deviation they obtained on individual GCPs. The results of this comparison are presented in Figure 10.

Figure 10.

Influence of the position of ground control points on the value of the mean detection deviation of a series of models. Graph of the curves of three measurement series: series 1(N)—not augmented; series 2(P)—partial augmentation; series 3(F)—full augmentation.

The mean deviations in Figure 10 follow a less consistent pattern across the GCPs than the TP values in Figure 9. Figure 10 indicates that GCP#1 has the lowest mean deviation, which is consistent with the results in Figure 9. Interestingly, the least frequently detected ground control point, GCP#5, has the second-best mean deviation.

As with the TP counts, partial augmentation proved the most effective in this scenario, resulting in smaller deviations and more accurate localization of the detected objects.

3.4. Influence of the Size of the Training Set

The study showed an effect of training set size on the models' ability to detect ground control points, although the results differ across the series. In principle, a larger training set provides more data from which a CNN can improve its detection performance, but the results do not indicate a simple positive correlation. Figure 11 shows the effect of training set size on the obtained F1-scores, based on the data in Table 3.

Figure 11.

Chart depicting the F1-score of detection for individual models relative to the length of their training sets, based on data from Table 3.

The F1-score changes shown in Figure 11 vary across models. The mean F1-scores show that the models performed best, on average, when trained on 1000 and 2000 SSOs (0.76 and 0.75, respectively). Large fluctuations in network performance make it difficult to clearly assess the impact of training set size. The F1-score generally increases with the amount of training data but drops for the largest set (4000 SSOs). This may be due to model overfitting, although the training and validation loss curves provided by the software do not indicate it. Potential overfitting may have made the models oversensitive: most models trained on 4000 SSOs produced more FPs than the corresponding models trained on the other three set sizes.

3.5. Influence of the Augmentation Method

The 36 CNN models from Table 3 were filtered to those reaching at least 0.85 in all parameters (precision, recall, F1-score, and AP). The remaining models were sorted by F1-score and are presented in Table 5.
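The detection tables follow the standard count-based definitions: precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = 2PR/(P + R). For YOLOv5 500A in Table A1, for instance, 33/36 ≈ 0.92, 33/152 ≈ 0.22, and F1 ≈ 0.35. The filter-and-sort step can be sketched as follows. This is a minimal illustration with hypothetical names. AP is omitted because it requires confidence-ranked detections rather than counts, so here the threshold is applied only to precision, recall, and F1.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Result:
    name: str
    tp: int
    fp: int
    fn: int

    @property
    def precision(self) -> float:
        return self.tp / (self.tp + self.fp) if self.tp + self.fp else 0.0

    @property
    def recall(self) -> float:
        return self.tp / (self.tp + self.fn) if self.tp + self.fn else 0.0

    @property
    def f1(self) -> float:
        # Equivalent to 2PR / (P + R), written with counts to avoid 0/0.
        denom = 2 * self.tp + self.fp + self.fn
        return 2 * self.tp / denom if denom else 0.0


def top_models(results: List[Result], threshold: float = 0.85) -> List[Result]:
    """Keep models meeting the threshold on every metric, best F1 first."""
    passing = [r for r in results if min(r.precision, r.recall, r.f1) >= threshold]
    return sorted(passing, key=lambda r: r.f1, reverse=True)
```

Of the YOLOv8 Type C models in Table A1, for example, only 2000C and 4000C pass (500C and 1000C fall short on recall), and sorting by F1 places 4000C (294/305) narrowly ahead of 2000C (292/303).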

Table 5.

List of the most effective detection networks.

The requirement of 0.85 in all parameters was met by seven YOLOv3 networks from two research series. The first four models were created with partial augmentation, while the remaining three were created with the full augmentation available in ArcGIS Pro.

The seven selected models constitute 19.4% of all models created, meaning that highly effective models were obtained in roughly one out of five cases. Notably, none of these models was trained without additional augmentation: the best non-augmented model ranks only 11th among all models.

Two conclusions can be drawn from this comparison regarding the impact of augmentation on training datasets with SSOs:

- The augmentation of detection networks positively affects the effectiveness of the obtained models.

- Partial augmentation gives better results for this study.

Although comparisons of the same models across series (the same type of artificial GCP and training set size) vary widely, in some cases augmentation improved model performance by dozens of percentage points. The second conclusion may result from the fact that, in these studies, the additional augmentation could make detection by the neural model less efficient; such an unwanted effect of augmentation may explain the greater number of FP detections. The advantage of augmenting training data is also reflected in Figure 9 and Figure 10: the number of TPs and the average deviation of individual series calculated on the orthophoto clearly indicate that the partially augmented models perform better than the remaining series. Series 2 detects objects most effectively, while series 1 performs worst.

3.6. Influence of the Type of Simplified Synthetic Object

Studies on the effectiveness of YOLOv3 models depending on the type of simplified synthetic object used provide unambiguous results. Figure 11 clearly shows that the F1-score values drop in the middle part of each of the three series of models. The networks trained on GCP Type B have the lowest F1-scores. This is best seen in series 1 (not augmented), where three out of four models trained on Type B have clearly low F1-scores. Among the seven best models presented in Table 5, only one was trained on GCP Type B, and it ranks second to last. The average F1-scores of all 36 models by type of simplified synthetic object are as follows:

- Type A: F1-score coefficient value equal to 0.76;

- Type B: F1-score coefficient value equal to 0.50;

- Type C: F1-score coefficient value equal to 0.82.

The total numbers of TPs detected by the 36 YOLOv3 models, grouped by artificial GCP type, are as follows:

- Type A: 1270;

- Type B: 759;

- Type C: 1478.

The reported F1-scores and true positive (TP) counts allow the simplified synthetic objects to be ranked: Type C is the most effective, and Type B is the least effective. All synthetic objects were designed to represent the ground control points in UAV photos as accurately as possible. Nevertheless, the model results differ drastically depending on the simplified synthetic object. Research should be carried out on a much larger set of synthetic objects to better understand the factors affecting the performance of trained detection networks.

Regarding the preparation of the Type B and C objects, it should be noted that both simplified representations are extensions of Type A. GCP Type A occupies the middle position in terms of the effectiveness of the trained models: the changes made when creating Type B worsened effectiveness, while the modifications in Type C improved it. The main difference between these simplified synthetic objects is transparency: Type B has the lowest transparency, and Type C the highest. Transparency can therefore be crucial for achieving high diversity of training data and can significantly affect the performance of the trained detection networks.
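The role of transparency can be made concrete with the usual alpha-compositing rule. Each composite pixel is I = αO + (1 − α)B for object value O, background value B, and opacity α (one minus transparency). It is an assumption that the SSOs were blended exactly this way. Under this rule, the composite varies with the background by a factor of (1 − α), so a more transparent SSO yields more diverse training samples from the same set of backgrounds. The sketch uses synthetic, hypothetical intensities only to show this scaling.

```python
import numpy as np


def composite(obj: np.ndarray, background: np.ndarray, opacity: float) -> np.ndarray:
    """Standard alpha compositing: opacity 1.0 hides the background entirely."""
    return opacity * obj + (1.0 - opacity) * background


rng = np.random.default_rng(0)
obj = np.full((8, 8), 230.0)                           # hypothetical bright SSO patch
backgrounds = rng.uniform(20.0, 120.0, size=(100, 8, 8))  # hypothetical lake-bottom patches


def appearance_spread(opacity: float) -> float:
    """Standard deviation of the mean composite intensity across backgrounds."""
    means = [composite(obj, bg, opacity).mean() for bg in backgrounds]
    return float(np.std(means))
```

Lowering the opacity from 0.9 to 0.4 multiplies the spread of object appearance across backgrounds by (1 − 0.4)/(1 − 0.9) = 6, which is what `appearance_spread(0.4) / appearance_spread(0.9)` returns up to rounding error.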

3.7. Testing YOLO Models Outside the ArcGIS Environment

To compare the effectiveness of the YOLOv3 implementation available in ArcGIS Pro with newer models, tests were carried out on subsequent versions of YOLO, up to YOLO12, the latest version at the time of the study: YOLOv5, YOLOv6, YOLOv8, YOLOv9, YOLOv10, YOLO11, and YOLO12.

All models used in the Python environment were L-type models, except for YOLOv9, for which no L-type variant is available, so the C-type was used instead. The tests were conducted on a machine with one GeForce RTX 4090 GPU, an Intel Core i7-14900K CPU, and 128 GB of DDR5 5400 MHz RAM. Model training used the same training sets as YOLOv3, again with 10% test and validation sets. In all cases, the chip size was 416 × 416 pixels. The hyperparameters of the model training process are listed in Table 6.

Table 6.

Hyperparameters of YOLO models.

The hyperparameters were intended to reflect as closely as possible those used in series 2 (partial augmentation) in ArcGIS Pro.
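The text does not name the Python framework. However, the model variants it describes (L-type models, with a C-type model substituted for YOLOv9) match the naming used by the Ultralytics package. Assuming Ultralytics was used, model selection could look like the sketch below. The weight and configuration file names are Ultralytics identifiers chosen for illustration, not taken from this paper. The Table 6 hyperparameters are passed in by the caller rather than guessed.

```python
# Assumption: the Ultralytics package; these identifiers are not given in the paper.
WEIGHTS = {
    "YOLOv5": "yolov5lu.pt",
    "YOLOv6": "yolov6l.yaml",  # no pretrained YOLOv6 weights; built from config
    "YOLOv8": "yolov8l.pt",
    "YOLOv9": "yolov9c.pt",    # no L variant, so the C variant is used, as in the text
    "YOLOv10": "yolov10l.pt",
    "YOLO11": "yolo11l.pt",
    "YOLO12": "yolo12l.pt",
}


def train_kwargs(data_yaml: str, **table6_overrides) -> dict:
    """Arguments for YOLO.train(); imgsz matches the 416 x 416 chips.

    Hyperparameters from Table 6 are supplied by the caller as keyword overrides.
    """
    return {"data": data_yaml, "imgsz": 416, **table6_overrides}


def train(version: str, data_yaml: str, **table6_overrides):
    # Imported lazily so that the mapping above can be inspected without Ultralytics.
    from ultralytics import YOLO

    model = YOLO(WEIGHTS[version])
    return model.train(**train_kwargs(data_yaml, **table6_overrides))
```

Calling `train("YOLOv8", "gcp.yaml", epochs=...)` with the values from Table 6 would start one run. Here, `gcp.yaml` is a hypothetical dataset file pointing to the composite images and their 10% validation split.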

As in the ArcGIS Pro studies, masks were used to restrict the test to the water area. The effectiveness of the models was evaluated on a set of 152 real photos from the UAV flight. In total, the set contained 152 detectable GCP instances, corresponding to the five GCPs distributed in the study area. For the newer models, the effectiveness test was conducted on the same images as for YOLOv3, but cropped to 416 × 416 pixels, i.e., the size on which the models were trained.

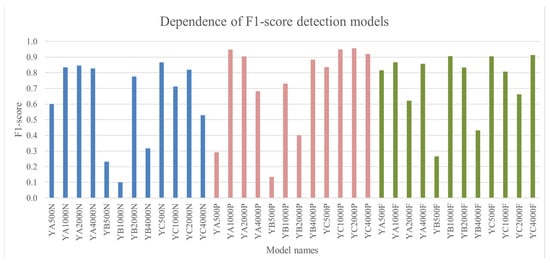

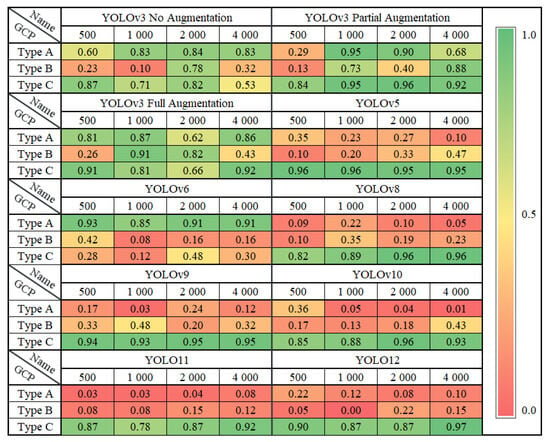

In total, the tests of the seven newer versions of YOLO produced 84 models, whose detection performance is presented in Table A1 in Appendix A. Figure 12 and Figure 13 were created based on the results from Table 3 and Table A1. Figure 12 shows a heat map of the F1-scores of all 120 models created in this study.

Table 7.

Deviations of the bounding box center from the GCP center for the five selected models, with respect to the F1-score.

Figure 12.

Heat map of the F1-scores; values range from 0 (red) to 1 (green).

Figure 13.

Average F1-scores of eight YOLO versions, with values shown for the three best-performing model sets.

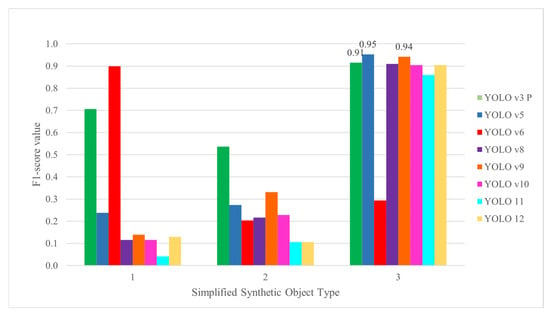

The test shows that newer versions of YOLO do not necessarily detect SSOs much more effectively than YOLOv3. The best YOLOv3 model (YC2000P) obtained an F1-score of 0.96, while among the 84 new models only YOLO12 4000C exceeded this value (0.97), and four models matched it. F1-scores of at least 0.95 were obtained by YOLOv5 (0.95–0.96), YOLOv8 (0.96), YOLOv9 (0.95), and YOLOv10 (0.96).

The compiled results clearly show that SSO Type C is the most effective: it yields much better detection in all YOLO versions except YOLOv6, for which Type A is the most effective, as shown in Figure 13. Note, however, that the YOLOv6 results differ significantly from those of the other YOLO models because of their high sensitivity and very large number of FPs, despite the use of a water-area mask. Averaging the F1-scores of the models trained on Type C SSOs, the three best-performing YOLO versions are YOLOv5 (0.95), YOLOv9 (0.94), and partially augmented (P) YOLOv3 (0.91).
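The per-version averages quoted above can be reproduced from Table A1 by grouping the models by the suffix of their short names (training set size followed by SSO type). This is a minimal sketch. The parsing helper is hypothetical and assumes the naming pattern used in Table A1. Only a subset of rows is copied in.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Tuple

# F1-scores copied from Table A1 (subset: YOLOv5 Type A and C, YOLOv8 and YOLOv9 Type C).
TABLE_A1_F1 = {
    "YOLOv5 500A": 0.35, "YOLOv5 1000A": 0.23, "YOLOv5 2000A": 0.27, "YOLOv5 4000A": 0.10,
    "YOLOv5 500C": 0.96, "YOLOv5 1000C": 0.96, "YOLOv5 2000C": 0.95, "YOLOv5 4000C": 0.95,
    "YOLOv8 500C": 0.82, "YOLOv8 1000C": 0.89, "YOLOv8 2000C": 0.96, "YOLOv8 4000C": 0.96,
    "YOLOv9 500C": 0.94, "YOLOv9 1000C": 0.93, "YOLOv9 2000C": 0.95, "YOLOv9 4000C": 0.95,
}


def parse_name(name: str) -> Tuple[str, int, str]:
    """Split a short name such as 'YOLOv5 500C' into (version, set size, SSO type)."""
    version, code = name.split()
    return version, int(code[:-1]), code[-1]


def mean_f1_by_version(scores: Dict[str, float], sso_type: str = "C") -> Dict[str, float]:
    """Average F1-score per YOLO version, restricted to one SSO type."""
    groups = defaultdict(list)
    for name, f1 in scores.items():
        version, _size, kind = parse_name(name)
        if kind == sso_type:
            groups[version].append(f1)
    return {version: mean(values) for version, values in groups.items()}
```

For the rows included, the Type C averages come out at about 0.955 (YOLOv5), 0.91 (YOLOv8), and 0.94 (YOLOv9), in line with the values given above.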

The 84 models were also used to analyze the accuracy of object localization in a similar way as in Section 3.3. In this case, the location of the bounding box center relative to the GCP was analyzed. This analysis was performed not on the orthophotomap itself but on its 416 × 416 pixel slices. All results are included in Appendix A, Table A2.
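Because the newer models were evaluated on 416 × 416 pixel slices, each detected box center must be shifted by its slice offset before it can be compared with the GCP position on the orthophotomap. This is a minimal sketch under two assumptions not stated in the text: slices are non-overlapping and taken row by row from the top-left corner, and edge slices are zero-padded. The function names are hypothetical.

```python
from typing import Iterator, Tuple

import numpy as np


def tiles(image: np.ndarray, size: int = 416) -> Iterator[Tuple[int, int, np.ndarray]]:
    """Yield (x_offset, y_offset, chip) for non-overlapping size x size chips."""
    h, w = image.shape[:2]
    for y in range(0, h, size):
        for x in range(0, w, size):
            chip = image[y:y + size, x:x + size]
            if chip.shape[0] < size or chip.shape[1] < size:
                # Zero-pad edge chips to the training size (an assumption).
                pad = [(0, size - chip.shape[0]), (0, size - chip.shape[1])]
                pad += [(0, 0)] * (image.ndim - 2)
                chip = np.pad(chip, pad)
            yield x, y, chip


def to_ortho(x_off: int, y_off: int, cx: float, cy: float) -> Tuple[float, float]:
    """Map a box center from chip pixels back to orthophoto pixels."""
    return x_off + cx, y_off + cy
```

For a 900 × 500 pixel image, `tiles` yields six chips, and a box centered at (10.5, 20.0) in the chip with offset (416, 416) maps to (426.5, 436.0) in orthophoto pixels.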

Table 7 is based on the data from Table A2 and presents the five best-performing models among the newer YOLO versions obtained in this work.

Table 7 shows that, with this method of determining the distance deviation, the deviations are 2–3 times smaller than those obtained by the YOLOv3 models with partial augmentation. The calculated values correspond to less than one pixel on an orthophotomap with a spatial resolution of 4.5 cm.
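The recurring per-GCP values in Table A2 (2.25, 3.18, 4.50, 5.03, 6.36, 6.75, 7.12, 8.11, 9.28, and 9.55 cm) coincide, to two decimal places, with the lengths of offsets built from half-pixel steps at the 4.5 cm resolution. This suggests that the center offsets are effectively quantized to half a pixel. That interpretation is an inference from the table, not a statement by the authors; the sketch below only checks the arithmetic.

```python
import math

GSD_CM = 4.5  # orthophoto spatial resolution stated in the text (cm per pixel)


def offset_cm(dx_px: float, dy_px: float, gsd_cm: float = GSD_CM) -> float:
    """Length of a pixel offset (dx, dy) converted to centimeters, rounded to 0.01 cm."""
    return round(math.hypot(dx_px, dy_px) * gsd_cm, 2)


# Offsets built from half-pixel steps (assumed to explain the recurring values).
HALF_PIXEL_OFFSETS = [(0.5, 0.0), (0.5, 0.5), (1.0, 0.0), (1.0, 0.5), (1.0, 1.0),
                      (1.5, 0.0), (1.5, 0.5), (1.5, 1.0), (2.0, 0.5), (1.5, 1.5)]

if __name__ == "__main__":
    for dx, dy in HALF_PIXEL_OFFSETS:
        print(f"({dx}, {dy}) px -> {offset_cm(dx, dy):.2f} cm")
```

Under this reading, a Δdmean below 4.5 cm, such as the averages in Table A2, corresponds to a sub-pixel offset, consistent with the statement above.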

The table again confirms the advantage of SSO Type C. Models trained on other SSO types produce almost no detections, except for the YOLOv6-based models. On the five orthophotomap images, YOLOv6 again shows very high recall but low precision due to its large number of FPs.

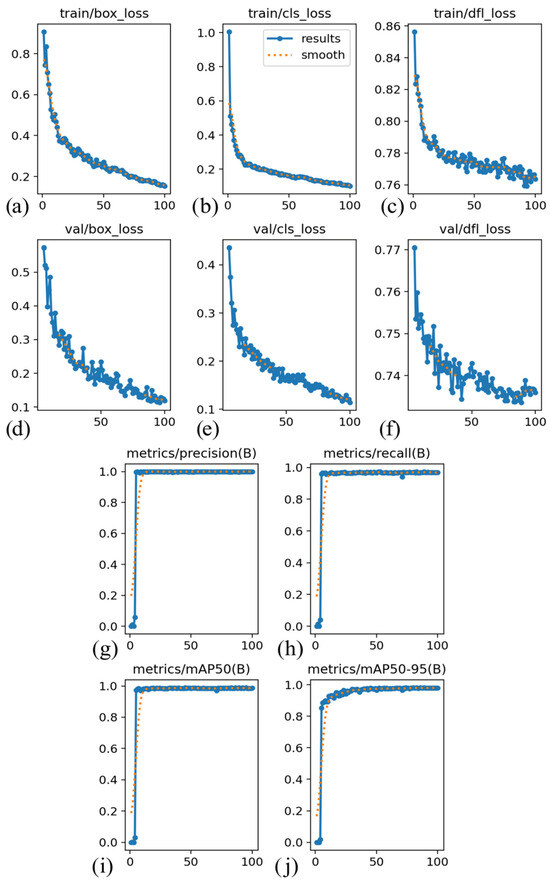

The 84 models reached very high detection rates early in the training process and required only a small number of epochs. An example of the parameters obtained during training is included in Appendix B, Figure A1.

4. Discussion

This research presents a methodology for creating composite images with simplified synthetic objects (SSOs) for object detection. The development of 36 detection models in ArcGIS Pro and 84 in the Python environment confirmed that CNNs trained on these composite images can effectively detect objects on the bottom of shallow inland waters.

The study focused on recreating objects using a graphic form to create SSOs that closely resemble the appearance of objects in UAV images. This concept is well illustrated in Figure 3 and Figure 4, where the synthetic ground control points (GCPs) represent not actual GCPs but their appearance as captured in UAV images. The literature review discusses various methods researchers use to create synthetic objects, including generating 3D models [28,29,30] and adding detected objects from other images to background scenes [31,32,34]. Notable works by Hinterstoisser et al. and Huh et al. illustrate graphic modification techniques such as blurring, light adjustment, and object positioning, which increase training data volume and enhance detection performance across different environments. Unlike these studies, our research focused on creating SSOs that closely resemble the GCPs seen in UAV images, with backgrounds reflecting the actual operational area of the UAV. This approach presents an alternative method of data creation, allowing for quick, practical application without complex, realistic data simulations.

A clear advantage of the proposed method is its ability to precisely locate detected objects, supporting applications in object mapping. This precise localization enhances spatial analysis and broadens the use of SSOs. Deviations of bounding box centers from actual GCP centers were assessed using orthophotos. For the 36 YOLOv3 models, they ranged from 1 to 43 cm, and the top four models had deviations between 8 and 10 cm. The Python versions of YOLO performed better, with deviations ranging from 0 to 30 cm and an average of less than 4 cm across the 84 models. Further performance improvements in the CNN models could potentially improve these results, although at this stage the offsets are only 1–3 pixels relative to the image resolution.

Additionally, the presented synthetic image generation process, automated with Python scripts, enables the creation of large amounts of specialized training data for specific objects and environments. Model performance may decrease when the models are applied to different terrain, but the ease of modifying backgrounds and creating synthetic objects should allow rapid adaptation to new study requirements. This methodology also facilitates the swift development of specialized detection models, which could be beneficial in emergency situations requiring object detection in aquatic environments, including even human detection.

The primary limitation of this method is spatial resolution, which determines the minimum detectable object size. Objects smaller than the spatial resolution may not be captured, and slightly larger objects may lack sufficient pixel coverage for effective deep learning recognition. While UAV images enable the detection of relatively small objects, higher resolutions also increase background complexity, which can degrade detection performance. Additionally, environmental factors, such as changing water and lighting conditions [58], can significantly reduce water transparency and object visibility, hindering or partially obstructing the view of objects for optical sensors. In extreme cases, an object may simply be invisible to the optical system.

These studies showed that YOLOv3, YOLOv5, YOLOv8, YOLOv9, and YOLOv10 are good detectors of the elaborated Type C SSOs. In terms of overall performance, YOLOv5 achieved the highest average value (0.95) and very good GCP detection in the orthophotomap test; in particular, all of its models trained on SSO Type C detected all five GCPs. It is worth noting, however, that YOLOv3 may have a fundamental advantage: it can be implemented under licensing terms acceptable for the software under development. The models achieved high detection rates despite a very rapid training and network adaptation process. This is likely due to the low diversity of the datasets, the size of the object, and its graphical features. This issue should be addressed in future work.

This study specifically targeted the detection of distinctive objects such as ground control points, characterized by their round shape, color pattern, and uniform size. Based on current observations, special attention should also be paid to the influence of the background; therefore, in future work, the training set should be expanded to include more diverse backgrounds. Future research should address other objects, such as water pollution (including plastics), animal habitats, boulders, and various anthropogenic objects. A further challenge will be to explore different training set configurations, e.g., sets composed partly of simplified synthetic data and partly of real data.

Such work would also introduce new challenges related to using alternative types of simplified synthetic data. A promising research avenue could involve generating super-resolution images with generative adversarial networks [59,60,61] as foundational data for real object detection, particularly in shallow water areas, potentially applicable across large areas covered by satellite scenes.

Further research should also focus on developing models based on various YOLO versions, incorporating real-time detection, and using Internet of Things technology [62] to make use of the processed image data. Beyond the YOLO architecture, the research could be extended to other detection models. This would make it possible to determine the effectiveness of the proposed methodology based on simplified synthetic objects with other CNNs and frameworks.

Future research should also investigate the impact of interferences resulting from the aquatic environment (turbidity, changes in water bio-optical properties, and changes in lighting) and the detected object (shape, regularity, texture, and light reflectivity). It will also be important to introduce multi-variant SSOs to increase training datasets and counteract dynamic environmental changes. Future research will allow for better identification of the limitations of the methodology presented in this work and areas for further development.

5. Conclusions

This article describes a method of generating simplified synthetic objects for training YOLO networks. The main focus is on the consistency of the object with its appearance in UAV images. Data variability is obtained through SSO transparency and the influence of the background. Data generation is automated by a processing pipeline that places and rotates the objects automatically and generates their centers, which simplifies labeling. The efficiency and applicability of the method are demonstrated using GCPs. The generated synthetic data were successfully used to train neural networks, taking into account the influence of augmentation.
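The pipeline summarized above (automatic placement, rotation, and generation of object centers for labeling) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' scripts. The disc-shaped SSO with four alternating quadrants, nearest-neighbor rotation, alpha blending with a fixed opacity, and the YOLO text label format (class, normalized center, width, height) are all assumptions, and every function name is hypothetical.

```python
import numpy as np


def make_disc_sso(size: int = 32):
    """Hypothetical GCP-like SSO: a disc split into alternating black/white quadrants."""
    yy, xx = np.mgrid[0:size, 0:size]
    c = (size - 1) / 2.0
    inside = (xx - c) ** 2 + (yy - c) ** 2 <= (size / 2.0) ** 2
    quadrants = (xx < c) ^ (yy < c)  # checkerboard of four quadrants
    rgb = np.repeat(np.where(quadrants, 255.0, 0.0)[..., None], 3, axis=2)
    alpha = inside.astype(np.float32)  # 1 inside the disc, 0 outside
    return rgb, alpha


def rotate_nn(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate about the image center using nearest-neighbor inverse mapping."""
    h, w = img.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    yy, xx = np.mgrid[0:h, 0:w]
    # For every output pixel, find the source pixel it comes from.
    xs = np.rint(np.cos(t) * (xx - cx) + np.sin(t) * (yy - cy) + cx).astype(int)
    ys = np.rint(-np.sin(t) * (xx - cx) + np.cos(t) * (yy - cy) + cy).astype(int)
    valid = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    out = np.zeros_like(img)
    out[valid] = img[ys[valid], xs[valid]]
    return out


def place_sso(background, rgb, alpha, opacity, rng, class_id=0):
    """Paste one randomly rotated and positioned SSO; return the image and a YOLO label."""
    h, w = alpha.shape
    H, W = background.shape[:2]
    angle = rng.uniform(0.0, 360.0)
    rgb_r, alpha_r = rotate_nn(rgb, angle), rotate_nn(alpha, angle)
    x0 = int(rng.integers(0, W - w + 1))
    y0 = int(rng.integers(0, H - h + 1))
    out = background.astype(np.float32)
    a = (opacity * alpha_r)[..., None]
    patch = out[y0:y0 + h, x0:x0 + w]
    out[y0:y0 + h, x0:x0 + w] = a * rgb_r + (1.0 - a) * patch
    # The object center is known from the placement, so no manual annotation is needed.
    xc, yc = (x0 + w / 2.0) / W, (y0 + h / 2.0) / H
    label = f"{class_id} {xc:.6f} {yc:.6f} {w / W:.6f} {h / H:.6f}"
    return np.clip(np.rint(out), 0, 255).astype(np.uint8), label
```

Calling `place_sso` repeatedly with a seeded `np.random.default_rng` on background chips produces composite images together with their label lines. Lowering `opacity` makes each pasted object take on more of the local background.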

Although not all models achieved satisfactory detection results on the UAV image set, the top five models (YOLO12 4000C, YOLOv8 4000C, YOLOv8 2000C, YOLOv5 1000C, and YOLOv5 500C) exhibited very high precision, recall, and F1-score values, all exceeding 0.93. The results of the 120 models presented in this paper show that the best models were created based on SSO Type C, using partial augmentation and a training set of 2000 objects. In the full set of tests, the most effective YOLO version was YOLOv5. Models trained on simplified synthetic object Type C were particularly effective; because of its high transparency, this object depended most on the background image. Statistically, this type achieved twice the detection efficiency of the other SSO types. The research confirms that detection models trained on SSOs more closely resembling the real object are more effective, whereas stronger simplifications reduce effectiveness.

The research indicates that simplified synthetic objects can be used as an alternative to real or fully synthetic data when real images are not available for creating a training dataset. In addition, the proposed method can be used when acquiring real data is too time-consuming or when a detection model must be developed in a short time.

Author Contributions

Conceptualization, D.K. and J.L.; methodology, D.K. and J.L.; software, D.K. and P.A.; validation, D.K. and P.A.; formal analysis, D.K. and J.L.; investigation, D.K., J.L., and P.A.; resources, D.K., J.L., and P.A.; data curation, D.K. and J.L.; writing—original draft preparation, D.K.; writing—review and editing, D.K. and J.L.; visualization, D.K.; supervision, J.L.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research outcome was financed by a subsidy from the Polish Ministry of Education and Science for statutory activities at the Maritime University of Szczecin, grant no. 2/s/KGiH/25.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

The summary of the 84 models obtained in the Python environment is given in Table A1.

Table A1.

Model detection results of newer versions of YOLO on the image set.


| Short Name of the Model | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| YOLOv5 500A | 33 | 3 | 119 | 0.92 | 0.22 | 0.35 |
| YOLOv5 1000A | 20 | 0 | 132 | 1.00 | 0.13 | 0.23 |
| YOLOv5 2000A | 24 | 3 | 128 | 0.89 | 0.16 | 0.27 |
| YOLOv5 4000A | 8 | 0 | 144 | 1.00 | 0.05 | 0.10 |
| YOLOv5 500B | 8 | 1 | 144 | 0.89 | 0.05 | 0.10 |
| YOLOv5 1000B | 17 | 2 | 135 | 0.89 | 0.11 | 0.20 |
| YOLOv5 2000B | 30 | 0 | 122 | 1.00 | 0.20 | 0.33 |
| YOLOv5 4000B | 47 | 3 | 105 | 0.94 | 0.31 | 0.47 |
| YOLOv5 500C | 142 | 2 | 10 | 0.99 | 0.93 | 0.96 |
| YOLOv5 1000C | 144 | 4 | 8 | 0.97 | 0.95 | 0.96 |
| YOLOv5 2000C | 138 | 2 | 14 | 0.99 | 0.91 | 0.95 |
| YOLOv5 4000C | 142 | 6 | 10 | 0.96 | 0.93 | 0.95 |
| YOLOv6 500A | 148 | 20 | 4 | 0.88 | 0.97 | 0.93 |
| YOLOv6 1000A | 148 | 48 | 4 | 0.76 | 0.97 | 0.85 |
| YOLOv6 2000A | 148 | 25 | 4 | 0.86 | 0.97 | 0.91 |
| YOLOv6 4000A | 149 | 26 | 3 | 0.85 | 0.98 | 0.91 |
| YOLOv6 500B | 151 | 423 | 1 | 0.26 | 0.99 | 0.42 |
| YOLOv6 1000B | 151 | 3456 | 1 | 0.04 | 0.99 | 0.08 |
| YOLOv6 2000B | 150 | 1562 | 2 | 0.09 | 0.99 | 0.16 |
| YOLOv6 4000B | 151 | 1620 | 1 | 0.09 | 0.99 | 0.16 |
| YOLOv6 500C | 151 | 781 | 1 | 0.16 | 0.99 | 0.28 |
| YOLOv6 1000C | 149 | 2168 | 3 | 0.06 | 0.98 | 0.12 |
| YOLOv6 2000C | 149 | 323 | 3 | 0.32 | 0.98 | 0.48 |
| YOLOv6 4000C | 149 | 705 | 3 | 0.17 | 0.98 | 0.30 |
| YOLOv8 500A | 7 | 0 | 145 | 1.00 | 0.05 | 0.09 |
| YOLOv8 1000A | 19 | 0 | 133 | 1.00 | 0.13 | 0.22 |
| YOLOv8 2000A | 8 | 1 | 144 | 0.89 | 0.05 | 0.10 |
| YOLOv8 4000A | 4 | 1 | 148 | 0.80 | 0.03 | 0.05 |
| YOLOv8 500B | 8 | 0 | 144 | 1.00 | 0.05 | 0.10 |
| YOLOv8 1000B | 33 | 2 | 119 | 0.94 | 0.22 | 0.35 |
| YOLOv8 2000B | 16 | 3 | 136 | 0.84 | 0.11 | 0.19 |
| YOLOv8 4000B | 20 | 5 | 132 | 0.80 | 0.13 | 0.23 |
| YOLOv8 500C | 110 | 6 | 42 | 0.95 | 0.72 | 0.82 |
| YOLOv8 1000C | 124 | 3 | 28 | 0.98 | 0.82 | 0.89 |
| YOLOv8 2000C | 146 | 5 | 6 | 0.97 | 0.96 | 0.96 |
| YOLOv8 4000C | 147 | 6 | 5 | 0.96 | 0.97 | 0.96 |
| YOLOv9 500A | 14 | 1 | 138 | 0.93 | 0.09 | 0.17 |
| YOLOv9 1000A | 2 | 0 | 150 | 1.00 | 0.01 | 0.03 |
| YOLOv9 2000A | 21 | 1 | 131 | 0.95 | 0.14 | 0.24 |
| YOLOv9 4000A | 10 | 1 | 142 | 0.91 | 0.07 | 0.12 |
| YOLOv9 500B | 30 | 2 | 122 | 0.94 | 0.20 | 0.33 |
| YOLOv9 1000B | 48 | 2 | 104 | 0.96 | 0.32 | 0.48 |
| YOLOv9 2000B | 18 | 8 | 134 | 0.69 | 0.12 | 0.20 |
| YOLOv9 4000B | 30 | 4 | 122 | 0.88 | 0.20 | 0.32 |
| YOLOv9 500C | 144 | 10 | 8 | 0.94 | 0.95 | 0.94 |
| YOLOv9 1000C | 141 | 11 | 11 | 0.93 | 0.93 | 0.93 |
| YOLOv9 2000C | 140 | 3 | 12 | 0.98 | 0.92 | 0.95 |
| YOLOv9 4000C | 144 | 7 | 8 | 0.95 | 0.95 | 0.95 |
| YOLOv10 500A | 34 | 4 | 118 | 0.89 | 0.22 | 0.36 |
| YOLOv10 1000A | 4 | 1 | 148 | 0.80 | 0.03 | 0.05 |
| YOLOv10 2000A | 3 | 2 | 149 | 0.60 | 0.02 | 0.04 |
| YOLOv10 4000A | 1 | 0 | 151 | 1.00 | 0.01 | 0.01 |
| YOLOv10 500B | 14 | 1 | 138 | 0.93 | 0.09 | 0.17 |
| YOLOv10 1000B | 11 | 1 | 141 | 0.92 | 0.07 | 0.13 |
| YOLOv10 2000B | 15 | 1 | 137 | 0.94 | 0.10 | 0.18 |
| YOLOv10 4000B | 42 | 1 | 110 | 0.98 | 0.28 | 0.43 |
| YOLOv10 500C | 118 | 8 | 34 | 0.94 | 0.78 | 0.85 |
| YOLOv10 1000C | 123 | 3 | 29 | 0.98 | 0.81 | 0.88 |
| YOLOv10 2000C | 144 | 5 | 8 | 0.97 | 0.95 | 0.96 |
| YOLOv10 4000C | 139 | 9 | 13 | 0.94 | 0.91 | 0.93 |
| YOLO11 500A | 2 | 1 | 150 | 0.67 | 0.01 | 0.03 |
| YOLO11 1000A | 2 | 0 | 150 | 1.00 | 0.01 | 0.03 |
| YOLO11 2000A | 3 | 0 | 149 | 1.00 | 0.02 | 0.04 |
| YOLO11 4000A | 6 | 1 | 146 | 0.86 | 0.04 | 0.08 |
| YOLO11 500B | 6 | 1 | 146 | 0.86 | 0.04 | 0.08 |
| YOLO11 1000B | 6 | 1 | 146 | 0.86 | 0.04 | 0.08 |
| YOLO11 2000B | 13 | 5 | 139 | 0.72 | 0.09 | 0.15 |
| YOLO11 4000B | 10 | 7 | 142 | 0.59 | 0.07 | 0.12 |
| YOLO11 500C | 118 | 2 | 34 | 0.98 | 0.78 | 0.87 |
| YOLO11 1000C | 138 | 62 | 14 | 0.69 | 0.91 | 0.78 |
| YOLO11 2000C | 119 | 4 | 33 | 0.97 | 0.78 | 0.87 |
| YOLO11 4000C | 137 | 9 | 15 | 0.94 | 0.90 | 0.92 |
| YOLO12 500A | 19 | 2 | 133 | 0.90 | 0.13 | 0.22 |
| YOLO12 1000A | 10 | 2 | 142 | 0.83 | 0.07 | 0.12 |
| YOLO12 2000A | 6 | 1 | 146 | 0.86 | 0.04 | 0.08 |
| YOLO12 4000A | 8 | 0 | 144 | 1.00 | 0.05 | 0.10 |
| YOLO12 500B | 4 | 0 | 148 | 1.00 | 0.03 | 0.05 |
| YOLO12 1000B | 0 | 0 | 152 | 0.00 | 0.00 | 0.00 |
| YOLO12 2000B | 19 | 1 | 133 | 0.95 | 0.13 | 0.22 |
| YOLO12 4000B | 13 | 6 | 139 | 0.68 | 0.09 | 0.15 |
| YOLO12 500C | 128 | 3 | 24 | 0.98 | 0.84 | 0.90 |
| YOLO12 1000C | 121 | 5 | 31 | 0.96 | 0.80 | 0.87 |
| YOLO12 2000C | 124 | 9 | 28 | 0.93 | 0.82 | 0.87 |
| YOLO12 4000C | 147 | 4 | 5 | 0.97 | 0.97 | 0.97 |

The calculations of the deviations of the center position relative to the five GCPs from the orthophotomap obtained for the newer YOLO versions of the models are presented in Table A2.

Table A2.

Network detection results on orthophotomap images along with deviations on individual GCPs and calculated average deviation of 84 YOLOv5–12 models.


| Short Name of the Model | TP | GCP#1 dmean [cm] | GCP#2 dmean [cm] | GCP#3 dmean [cm] | GCP#4 dmean [cm] | GCP#5 dmean [cm] | Δdmean [cm] |

|---|---|---|---|---|---|---|---|

| YOLOv5 500A | 0 | – | – | – | – | – | – |

| YOLOv5 1000A | 1 | – | 30.27 | – | – | – | 30.27 |

| YOLOv5 2000A | 0 | – | – | – | – | – | – |

| YOLOv5 4000A | 0 | – | – | – | – | – | – |

| YOLOv5 500B | 0 | – | – | – | – | – | – |

| YOLOv5 1000B | 0 | – | – | – | – | – | – |

| YOLOv5 2000B | 0 | – | – | – | – | – | – |

| YOLOv5 4000B | 3 | – | 2.25 | 5.03 | – | – | 3.64 |

| YOLOv5 500C | 5 | 3.18 | 2.25 | 5.03 | 8.11 | 3.18 | 4.35 |

| YOLOv5 1000C | 5 | 2.25 | 2.25 | 3.18 | 8.11 | 7.12 | 4.58 |

| YOLOv5 2000C | 5 | 3.18 | 2.25 | 3.18 | 7.12 | 2.25 | 3.60 |

| YOLOv5 4000C | 5 | 3.18 | 2.25 | 3.18 | 8.11 | 2.25 | 3.80 |

| YOLOv6 500A | 5 | 0.00 | 0.00 | 4.50 | 2.25 | 3.18 | 1.99 |

| YOLOv6 1000A | 5 | 3.18 | 2.25 | 7.12 | 5.03 | 2.25 | 3.97 |

| YOLOv6 2000A | 5 | 3.18 | 2.25 | 7.12 | 5.03 | 3.18 | 4.15 |

| YOLOv6 4000A | 5 | 0.00 | 2.25 | 6.36 | 6.75 | 6.75 | 4.42 |

| YOLOv6 500B | 5 | 2.25 | 2.25 | 2.25 | 2.25 | 2.25 | 2.25 |

| YOLOv6 1000B | 5 | 3.18 | 4.50 | 3.18 | 3.18 | 2.25 | 3.26 |

| YOLOv6 2000B | 5 | 3.18 | 2.25 | 5.03 | 3.18 | 2.25 | 3.18 |

| YOLOv6 4000B | 5 | 3.18 | 5.03 | 4.50 | 2.25 | 3.18 | 3.63 |

| YOLOv6 500C | 5 | 3.18 | 6.36 | 2.25 | 3.18 | 3.18 | 3.63 |

| YOLOv6 1000C | 5 | 5.03 | 3.18 | 3.18 | 3.18 | 2.25 | 3.37 |

| YOLOv6 2000C | 5 | 6.36 | 0.00 | 5.03 | 5.03 | 4.50 | 4.19 |

| YOLOv6 4000C | 5 | 4.50 | 2.25 | 3.18 | 5.03 | 4.50 | 3.89 |

| YOLOv8 500A | 0 | – | – | – | – | – | – |

| YOLOv8 1000A | 0 | – | – | – | – | – | – |

| YOLOv8 2000A | 0 | – | – | – | – | – | – |

| YOLOv8 4000A | 0 | – | – | – | – | – | – |

| YOLOv8 500B | 0 | – | – | – | – | – | – |

| YOLOv8 1000B | 0 | – | – | – | – | – | – |

| YOLOv8 2000B | 0 | – | – | – | – | – | – |

| YOLOv8 4000B | 0 | – | – | – | – | – | – |

| YOLOv8 500C | 3 | 3.18 | 2.25 | 4.50 | – | – | 3.31 |

| YOLOv8 1000C | 4 | 3.18 | 2.25 | 2.25 | 8.11 | – | 3.95 |

| YOLOv8 2000C | 5 | 3.18 | 2.25 | 3.18 | 7.12 | 5.03 | 4.15 |

| YOLOv8 4000C | 5 | 2.25 | 2.25 | 2.25 | 5.03 | 3.18 | 2.99 |

| YOLOv9 500A | 0 | – | – | – | – | – | – |

| YOLOv9 1000A | 0 | – | – | – | – | – | – |

| YOLOv9 2000A | 0 | – | – | – | – | – | – |

| YOLOv9 4000A | 0 | – | – | – | – | – | – |

| YOLOv9 500B | 1 | – | 3.18 | – | – | – | 3.18 |

| YOLOv9 1000B | 2 | – | 0.00 | 4.50 | – | – | 2.25 |

| YOLOv9 2000B | 0 | – | – | – | – | – | – |

| YOLOv9 4000B | 1 | – | 2.25 | – | – | – | 2.25 |

| YOLOv9 500C | 4 | 2.25 | 2.25 | 3.18 | 8.11 | – | 3.95 |

| YOLOv9 1000C | 5 | 3.18 | 2.25 | 3.18 | 8.11 | 2.25 | 3.80 |

| YOLOv9 2000C | 5 | 2.25 | 2.25 | 2.25 | 7.12 | 3.18 | 3.41 |

| YOLOv9 4000C | 5 | 2.25 | 2.25 | 3.18 | 7.12 | 2.25 | 3.41 |

| YOLOv10 500A | 0 | – | – | – | – | – | – |

| YOLOv10 1000A | 0 | – | – | – | – | – | – |

| YOLOv10 2000A | 0 | – | – | – | – | – | – |

| YOLOv10 4000A | 0 | – | – | – | – | – | – |

| YOLOv10 500B | 0 | – | – | – | – | – | – |

| YOLOv10 1000B | 0 | – | – | – | – | – | – |

| YOLOv10 2000B | 0 | – | – | – | – | – | – |

| YOLOv10 4000B | 0 | – | – | – | – | – | – |

| YOLOv10 500C | 4 | 2.25 | 2.25 | 3.18 | 7.12 | – | 3.70 |

| YOLOv10 1000C | 3 | 2.25 | – | 2.25 | 9.55 | – | 3.51 |

| YOLOv10 2000C | 5 | 2.25 | 2.25 | 2.25 | 5.03 | 3.18 | 2.99 |

| YOLOv10 4000C | 5 | 3.18 | 2.25 | 2.25 | 8.11 | 3.18 | 3.80 |

| YOLO11 500A | 0 | – | – | – | – | – | – |

| YOLO11 1000A | 0 | – | – | – | – | – | – |

| YOLO11 2000A | 0 | – | – | – | – | – | – |

| YOLO11 4000A | 0 | – | – | – | – | – | – |

| YOLO11 500B | 0 | – | – | – | – | – | – |

| YOLO11 1000B | 0 | – | – | – | – | – | – |

| YOLO11 2000B | 0 | – | – | – | – | – | – |

| YOLO11 4000B | 0 | – | – | – | – | – | – |

| YOLO11 500C | 2 | – | 4.50 | 4.50 | – | – | 4.50 |

| YOLO11 1000C | 5 | 2.25 | 2.25 | 5.03 | 6.36 | 3.18 | 3.82 |

| YOLO11 2000C | 5 | 3.18 | 2.25 | 3.18 | 5.03 | 2.25 | 3.18 |

| YOLO11 4000C | 5 | 3.18 | 2.25 | 3.18 | 7.12 | 3.18 | 3.78 |

| YOLO12 500A | 0 | – | – | – | – | – | – |

| YOLO12 1000A | 0 | – | – | – | – | – | – |

| YOLO12 2000A | 0 | – | – | – | – | – | – |

| YOLO12 4000A | 0 | – | – | – | – | – | – |

| YOLO12 500B | 0 | – | – | – | – | – | – |

| YOLO12 1000B | 0 | – | – | – | – | – | – |

| YOLO12 2000B | 0 | – | – | – | – | – | – |

| YOLO12 4000B | 0 | – | – | – | – | – | – |

| YOLO12 500C | 5 | 3.18 | 4.50 | 3.18 | 7.12 | 9.28 | 5.45 |

| YOLO12 1000C | 4 | 2.25 | 2.25 | 3.18 | 6.36 | – | 3.51 |

| YOLO12 2000C | 5 | 3.18 | 2.25 | 3.18 | 7.12 | 5.03 | 4.15 |

| YOLO12 4000C | 5 | 3.18 | 2.25 | 3.18 | 5.03 | 3.18 | 3.37 |
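In every row, the second column equals the number of filled per-object cells. In most rows, the last column equals the arithmetic mean of those cells, with "–" marking an empty cell. The Python sketch below recomputes that mean from a single table row under this assumption. The helper names `parse_row` and `row_mean` are hypothetical and not part of the original workflow. The displayed per-object values are already rounded to two decimals, so a recomputed mean can differ from the reported one in the last digit.

```python
from statistics import mean
from typing import List, Optional, Tuple

MISSING = "–"  # en dash used in the table for an empty cell

def parse_row(row: str) -> Tuple[str, int, List[float], Optional[float]]:
    """Split one table row into model name, count, per-object values and reported mean."""
    cells = [cell.strip() for cell in row.strip().strip("|").split("|")]
    name, count = cells[0], int(cells[1])
    # Middle cells hold the per-object values; skip the ones marked as missing.
    values = [float(cell) for cell in cells[2:-1] if cell != MISSING]
    reported = None if cells[-1] == MISSING else float(cells[-1])
    return name, count, values, reported

def row_mean(values: List[float]) -> Optional[float]:
    """Arithmetic mean rounded to two decimals, or None when no value is available."""
    return round(mean(values), 2) if values else None

row = "| YOLOv8 2000C | 5 | 3.18 | 2.25 | 3.18 | 7.12 | 5.03 | 4.15 |"
name, count, values, reported = parse_row(row)
print(name, count, row_mean(values), reported)  # prints: YOLOv8 2000C 5 4.15 4.15
```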

Appendix B

Training-phase graphs were generated in the Python environment for each of the 84 models; Figure A1 shows an example for the YOLO12 1000C model.

Figure A1.

YOLO12 1000C network training stage graphs: (a) box loss in subsequent training epochs; (b) classification loss in subsequent training epochs; (c) distribution focal loss in subsequent training epochs; (d) box loss on the validation set in subsequent epochs; (e) classification loss on the validation set in subsequent epochs; (f) distribution focal loss on the validation set in subsequent epochs; (g) precision in subsequent training epochs; (h) recall in subsequent training epochs; (i) mean average precision at an IoU threshold of 0.5 (mAP50) in subsequent training epochs; (j) mean average precision averaged over IoU thresholds from 0.5 to 0.95 (mAP50-95) in subsequent training epochs.
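The precision, recall and mAP curves in the lower panels relate to the summary metrics through simple arithmetic. The sketch below assumes the graphs come from an Ultralytics-style training log. The F1-score, the main performance measure in this study, is the harmonic mean of precision and recall. The stricter mAP metric in such logs, mAP50-95, averages the average precision (AP) over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. It is shown here for a single class; with several classes, the per-class values are averaged again across classes. The function names and the AP values in the example are hypothetical and only illustrate the arithmetic.

```python
from typing import Sequence, Tuple

# COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95 (ten values)
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def map50_and_map50_95(ap_per_threshold: Sequence[float]) -> Tuple[float, float]:
    """Return (mAP50, mAP50-95) for a single class from the AP at each IoU threshold."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    # mAP50 is the AP at the first (loosest) threshold; mAP50-95 is the mean over all ten.
    return ap_per_threshold[0], sum(ap_per_threshold) / len(ap_per_threshold)

# Hypothetical AP values that fall as the IoU threshold becomes stricter
ap = [0.90, 0.88, 0.85, 0.80, 0.74, 0.66, 0.55, 0.42, 0.25, 0.08]
m50, m50_95 = map50_and_map50_95(ap)
print(f"F1 = {f1_score(0.8, 0.6):.3f}, mAP50 = {m50:.2f}, mAP50-95 = {m50_95:.3f}")
# prints: F1 = 0.686, mAP50 = 0.90, mAP50-95 = 0.613
```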

References

- Li, Z.; Wang, Y.; Zhang, N.; Zhang, Y.; Zhao, Z.; Xu, D.; Ben, G.; Gao, Y. Deep Learning-Based Object Detection Techniques for Remote Sensing Images: A Survey. Remote Sens. 2022, 14, 2385.

- Dunstan, A.; Robertson, K.; Fitzpatrick, R.; Pickford, J.; Meager, J. Use of Unmanned Aerial Vehicles (UAVs) for Mark-Resight Nesting Population Estimation of Adult Female Green Sea Turtles at Raine Island. PLoS ONE 2020, 15, e0228524.

- Feng, J.; Jin, T. CEH-YOLO: A Composite Enhanced YOLO-Based Model for Underwater Object Detection. Ecol. Inform. 2024, 82, 102758.

- Sineglazov, V.; Savchenko, M. Comprehensive Framework for Underwater Object Detection Based on Improved YOLOv8. Electron. Control Syst. 2024, 1, 9–15.

- Martin, J.; Eugenio, F.; Marcello, J.; Medina, A. Automatic Sun Glint Removal of Multispectral High-Resolution Worldview-2 Imagery for Retrieving Coastal Shallow Water Parameters. Remote Sens. 2016, 8, 37.

- Cao, N. Small Object Detection Algorithm for Underwater Organisms Based on Improved Transformer. J. Phys. Conf. Ser. 2023, 2637, 012056.

- Mathias, A.; Dhanalakshmi, S.; Kumar, R.; Narayanamoorthi, R. Deep Neural Network Driven Automated Underwater Object Detection. Comput. Mater. Contin. 2022, 70, 5251–5267.

- Dakhil, R.A.; Khayeat, A.R.H. Review on Deep Learning Techniques for Underwater Object Detection. In Proceedings of the Data Science and Machine Learning, Academy and Industry Research Collaboration Center (AIRCC), Copenhagen, Denmark, 17–18 September 2022; pp. 49–63.

- Zheng, M.; Luo, W. Underwater Image Enhancement Using Improved CNN Based Defogging. Electronics 2022, 11, 150.

- Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An Overview of Underwater Vision Enhancement: From Traditional Methods to Recent Deep Learning. J. Mar. Sci. Eng. 2022, 10, 241.

- Mogstad, A.A.; Johnsen, G.; Ludvigsen, M. Shallow-Water Habitat Mapping Using Underwater Hyperspectral Imaging from an Unmanned Surface Vehicle: A Pilot Study. Remote Sens. 2019, 11, 685.

- Grządziel, A. Application of Remote Sensing Techniques to Identification of Underwater Airplane Wreck in Shallow Water Environment: Case Study of the Baltic Sea, Poland. Remote Sens. 2022, 14, 5195.

- Goodwin, M.; Halvorsen, K.T.; Jiao, L.; Knausgård, K.M.; Martin, A.H.; Moyano, M.; Oomen, R.A.; Rasmussen, J.H.; Sørdalen, T.K.; Thorbjørnsen, S.H. Unlocking the Potential of Deep Learning for Marine Ecology: Overview, Applications, and Outlook. ICES J. Mar. Sci. 2022, 79, 319–336.

- Liu, Y.; An, B.; Chen, S.; Zhao, D. Multi-target Detection and Tracking of Shallow Marine Organisms Based on Improved YOLO v5 and DeepSORT. IET Image Process. 2024, 18, 2273–2290.

- Flynn, K.; Chapra, S. Remote Sensing of Submerged Aquatic Vegetation in a Shallow Non-Turbid River Using an Unmanned Aerial Vehicle. Remote Sens. 2014, 6, 12815–12836.

- Taddia, Y.; Russo, P.; Lovo, S.; Pellegrinelli, A. Multispectral UAV Monitoring of Submerged Seaweed in Shallow Water. Appl. Geomat. 2020, 12, 19–34.

- Chabot, D.; Dillon, C.; Shemrock, A.; Weissflog, N.; Sager, E.P.S. An Object-Based Image Analysis Workflow for Monitoring Shallow-Water Aquatic Vegetation in Multispectral Drone Imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 294.

- Feldens, P. Super Resolution by Deep Learning Improves Boulder Detection in Side Scan Sonar Backscatter Mosaics. Remote Sens. 2020, 12, 2284.

- Von Rönn, G.; Schwarzer, K.; Reimers, H.-C.; Winter, C. Limitations of Boulder Detection in Shallow Water Habitats Using High-Resolution Sidescan Sonar Images. Geosciences 2019, 9, 390.

- Román, A.; Tovar-Sánchez, A.; Gauci, A.; Deidun, A.; Caballero, I.; Colica, E.; D’Amico, S.; Navarro, G. Water-Quality Monitoring with a UAV-Mounted Multispectral Camera in Coastal Waters. Remote Sens. 2022, 15, 237.

- Matsui, K.; Shirai, H.; Kageyama, Y.; Yokoyama, H. Improving the Resolution of UAV-Based Remote Sensing Data of Water Quality of Lake Hachiroko, Japan by Neural Networks. Ecol. Inform. 2021, 62, 101276.

- Yan, Y.; Wang, Y.; Yu, C.; Zhang, Z. Multispectral Remote Sensing for Estimating Water Quality Parameters: A Comparative Study of Inversion Methods Using Unmanned Aerial Vehicles (UAVs). Sustainability 2023, 15, 10298.

- Rajpura, P.S.; Bojinov, H.; Hegde, R.S. Object Detection Using Deep CNNs Trained on Synthetic Images. arXiv 2017, arXiv:1706.06782v2.

- Ayachi, R.; Afif, M.; Said, Y.; Atri, M. Traffic Signs Detection for Real-World Application of an Advanced Driving Assisting System Using Deep Learning. Neural Process. Lett. 2020, 51, 837–851.

- Singh, K.; Navaratnam, T.; Holmer, J.; Schaub-Meyer, S.; Roth, S. Is Synthetic Data All We Need? Benchmarking the Robustness of Models Trained with Synthetic Images. arXiv 2024, arXiv:2405.20469v2.

- Greff, K.; Belletti, F.; Beyer, L.; Doersch, C.; Du, Y.; Duckworth, D.; Fleet, D.J.; Gnanapragasam, D.; Golemo, F.; Herrmann, C.; et al. Kubric: A Scalable Dataset Generator. arXiv 2022, arXiv:2203.03570.

- Guerneve, T.; Mignotte, P. Expect the unexpected: A man-made object detection algorithm for underwater operations in unknown environments. In Proceedings of the International Conference on Underwater Acoustics 2024, Institute of Acoustics, Bath, UK, 31 May 2024.

- Hinterstoisser, S.; Pauly, O.; Heibel, H.; Marek, M.; Bokeloh, M. An Annotation Saved Is an Annotation Earned: Using Fully Synthetic Training for Object Instance Detection. arXiv 2019, arXiv:1902.09967.

- Josifovski, J.; Kerzel, M.; Pregizer, C.; Posniak, L.; Wermter, S. Object Detection and Pose Estimation Based on Convolutional Neural Networks Trained with Synthetic Data. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York, NY, USA, 2018; pp. 6269–6276.

- Fabbri, M.; Braso, G.; Maugeri, G.; Cetintas, O.; Gasparini, R.; Osep, A.; Calderara, S.; Leal-Taixe, L.; Cucchiara, R. MOTSynth: How Can Synthetic Data Help Pedestrian Detection and Tracking? In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: New York, NY, USA, 2021; pp. 10829–10839.

- Lin, S.; Wang, K.; Zeng, X.; Zhao, R. Explore the Power of Synthetic Data on Few-Shot Object Detection. arXiv 2023, arXiv:2303.13221.

- Huh, J.; Lee, K.; Lee, I.; Lee, S. A Simple Method on Generating Synthetic Data for Training Real-Time Object Detection Networks. In Proceedings of the 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; IEEE: New York, NY, USA, 2018; pp. 1518–1522.

- Seiler, F.; Eichinger, V.; Effenberger, I. Synthetic Data Generation for AI-Based Machine Vision Applications. Electron. Imaging 2024, 36, IRIACV-276.

- Andulkar, M.; Hodapp, J.; Reichling, T.; Reichenbach, M.; Berger, U. Training CNNs from Synthetic Data for Part Handling in Industrial Environments. In Proceedings of the 2018 IEEE 14th International Conference on Automation Science and Engineering (CASE), Munich, Germany, 20–24 August 2018; IEEE: New York, NY, USA, 2018; pp. 624–629.

- Wang, R.; Hoppe, S.; Monari, E.; Huber, M.F. Defect Transfer GAN: Diverse Defect Synthesis for Data Augmentation. arXiv 2023, arXiv:2302.08366.

- Ma, Q.; Jin, S.; Bian, G.; Cui, Y. Multi-Scale Marine Object Detection in Side-Scan Sonar Images Based on BES-YOLO. Sensors 2024, 24, 4428.

- Zhang, X.; Yang, P.; Cao, D. Synthetic aperture image enhancement with near-coinciding Nonuniform sampling case. Comput. Electr. Eng. 2024, 120, 109818.

- Mohamed, H.; Nadaoka, K.; Nakamura, T. Automatic Semantic Segmentation of Benthic Habitats Using Images from Towed Underwater Camera in a Complex Shallow Water Environment. Remote Sens. 2022, 14, 1818.

- Mohamed, H.; Nadaoka, K.; Nakamura, T. Semiautomated Mapping of Benthic Habitats and Seagrass Species Using a Convolutional Neural Network Framework in Shallow Water Environments. Remote Sens. 2020, 12, 4002.

- Villon, S.; Mouillot, D.; Chaumont, M.; Darling, E.S.; Subsol, G.; Claverie, T.; Villéger, S. A Deep Learning Method for Accurate and Fast Identification of Coral Reef Fishes in Underwater Images. Ecol. Inform. 2018, 48, 238–244.

- Han, F.; Yao, J.; Zhu, H.; Wang, C. Underwater Image Processing and Object Detection Based on Deep CNN Method. J. Sens. 2020, 2020, 6707328.

- Jin, L.; Liang, H. Deep Learning for Underwater Image Recognition in Small Sample Size Situations. In Proceedings of the OCEANS 2017—Aberdeen, Aberdeen, UK, 19–22 June 2017; IEEE: New York, NY, USA, 2017; pp. 1–4.

- Jain, A.; Mahajan, M.; Saraf, R. Standardization of the Shape of Ground Control Point (GCP) and the Methodology for Its Detection in Images for UAV-Based Mapping Applications. In Advances in Computer Vision; Arai, K., Kapoor, S., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2020; Volume 943, pp. 459–476. ISBN 978-3-030-17794-2.

- Chuanxiang, C.; Jia, Y.; Chao, W.; Zhi, Z.; Xiaopeng, L.; Di, D.; Mengxia, C.; Zhiheng, Z. Automatic Detection of Aerial Survey Ground Control Points Based on Yolov5-OBB. arXiv 2023, arXiv:2303.03041.

- Muradás Odriozola, G.; Pauly, K.; Oswald, S.; Raymaekers, D. Automating Ground Control Point Detection in Drone Imagery: From Computer Vision to Deep Learning. Remote Sens. 2024, 16, 794.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 779–788.

- Wang, C.; Wang, Q.; Wu, H.; Zhao, C.; Teng, G.; Li, J. Low-Altitude Remote Sensing Opium Poppy Image Detection Based on Modified YOLOv3. Remote Sens. 2021, 13, 2130.

- Wang, Q.; Shen, F.; Cheng, L.; Jiang, J.; He, G.; Sheng, W.; Jing, N.; Mao, Z. Ship Detection Based on Fused Features and Rebuilt YOLOv3 Networks in Optical Remote-Sensing Images. Int. J. Remote Sens. 2021, 42, 520–536.

- Hong, Z.; Yang, T.; Tong, X.; Zhang, Y.; Jiang, S.; Zhou, R.; Han, Y.; Wang, J.; Yang, S.; Liu, S. Multi-Scale Ship Detection From SAR and Optical Imagery Via A More Accurate YOLOv3. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6083–6101.

- Chen, L.; Shi, W.; Deng, D. Improved YOLOv3 Based on Attention Mechanism for Fast and Accurate Ship Detection in Optical Remote Sensing Images. Remote Sens. 2021, 13, 660.