Effects of Clouds and Shadows on the Use of Independent Component Analysis for Feature Extraction

Abstract

1. Introduction

2. Materials and Methods

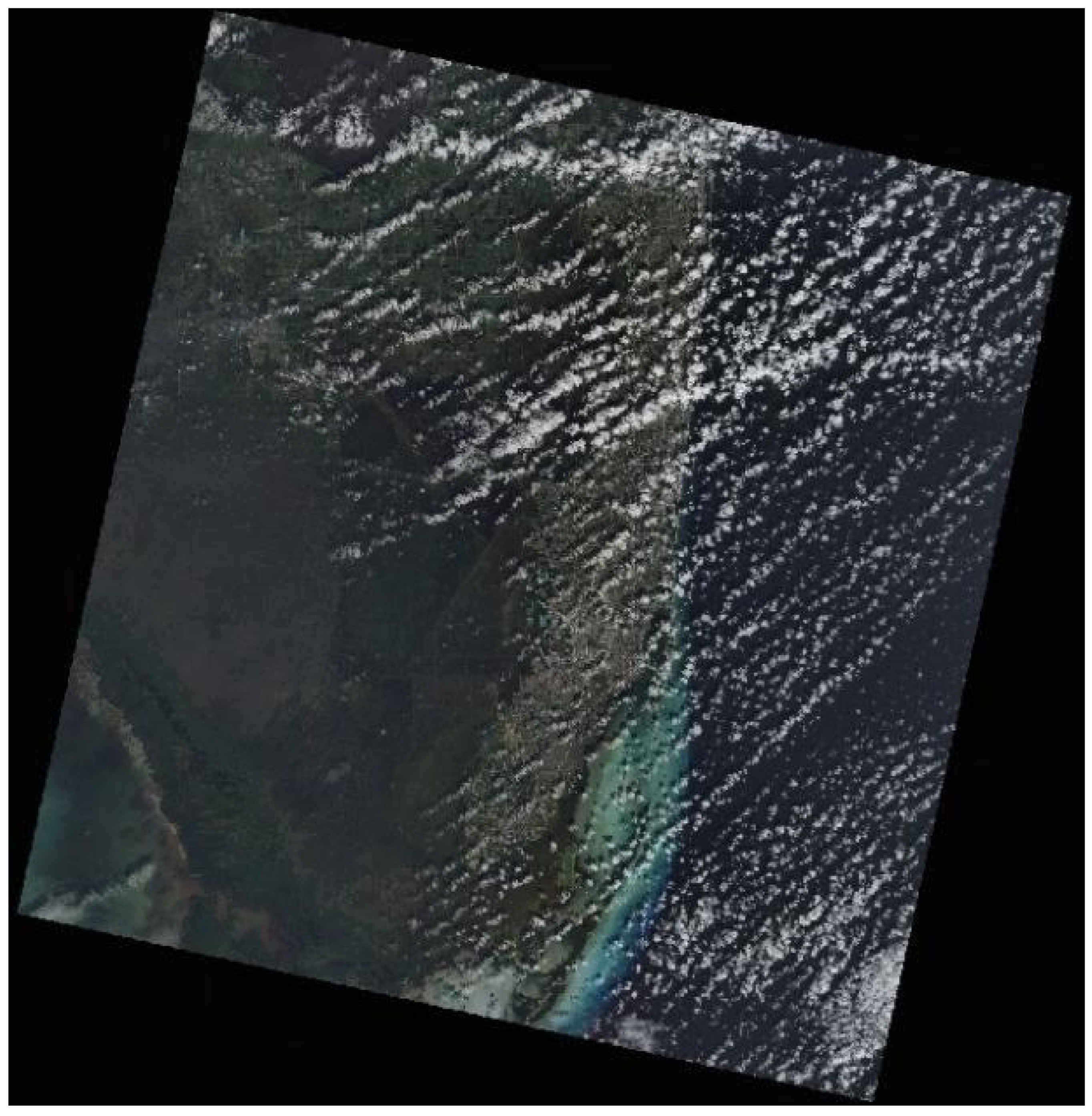

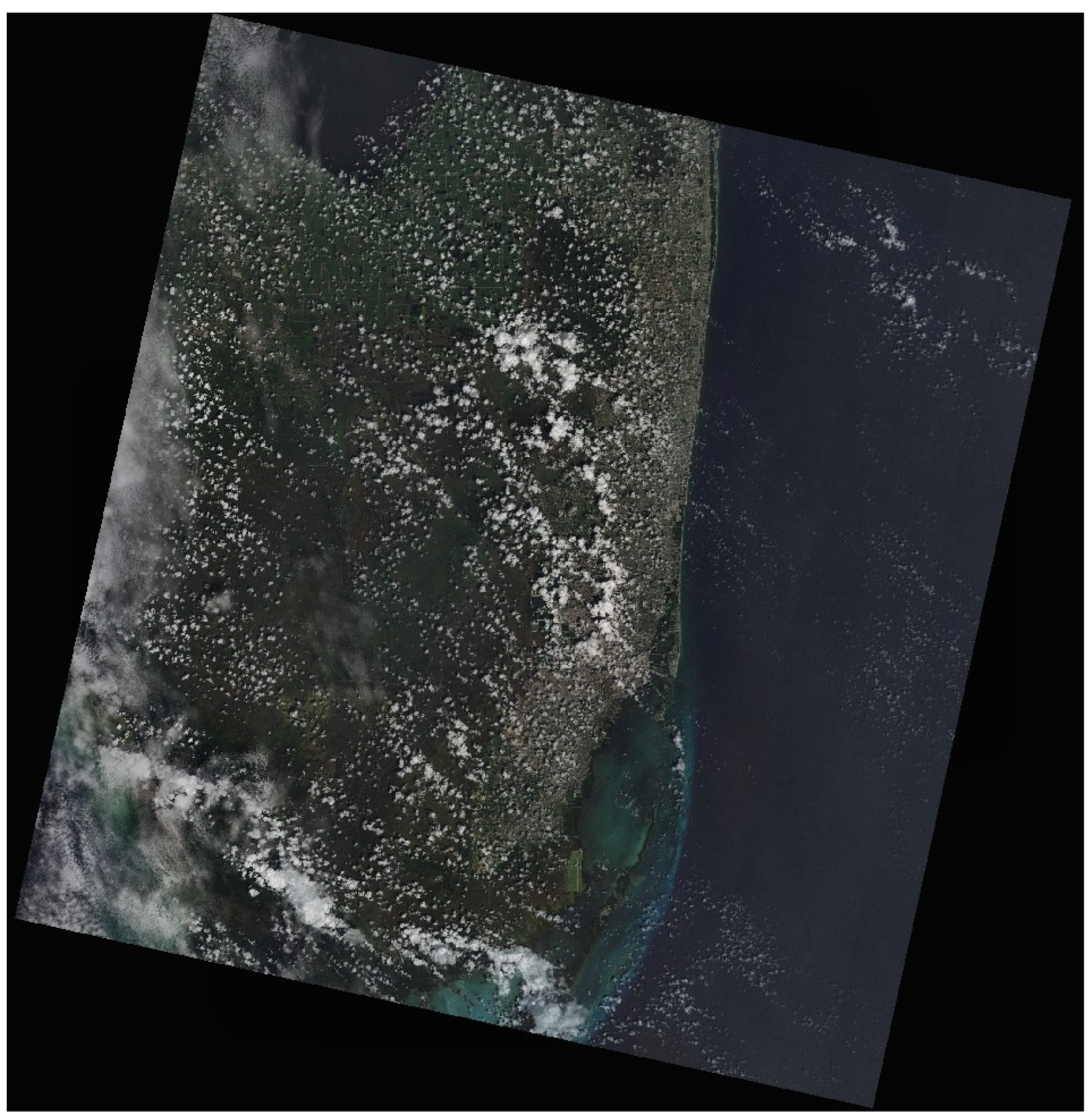

2.1. USGS Landsat-8 Imagery

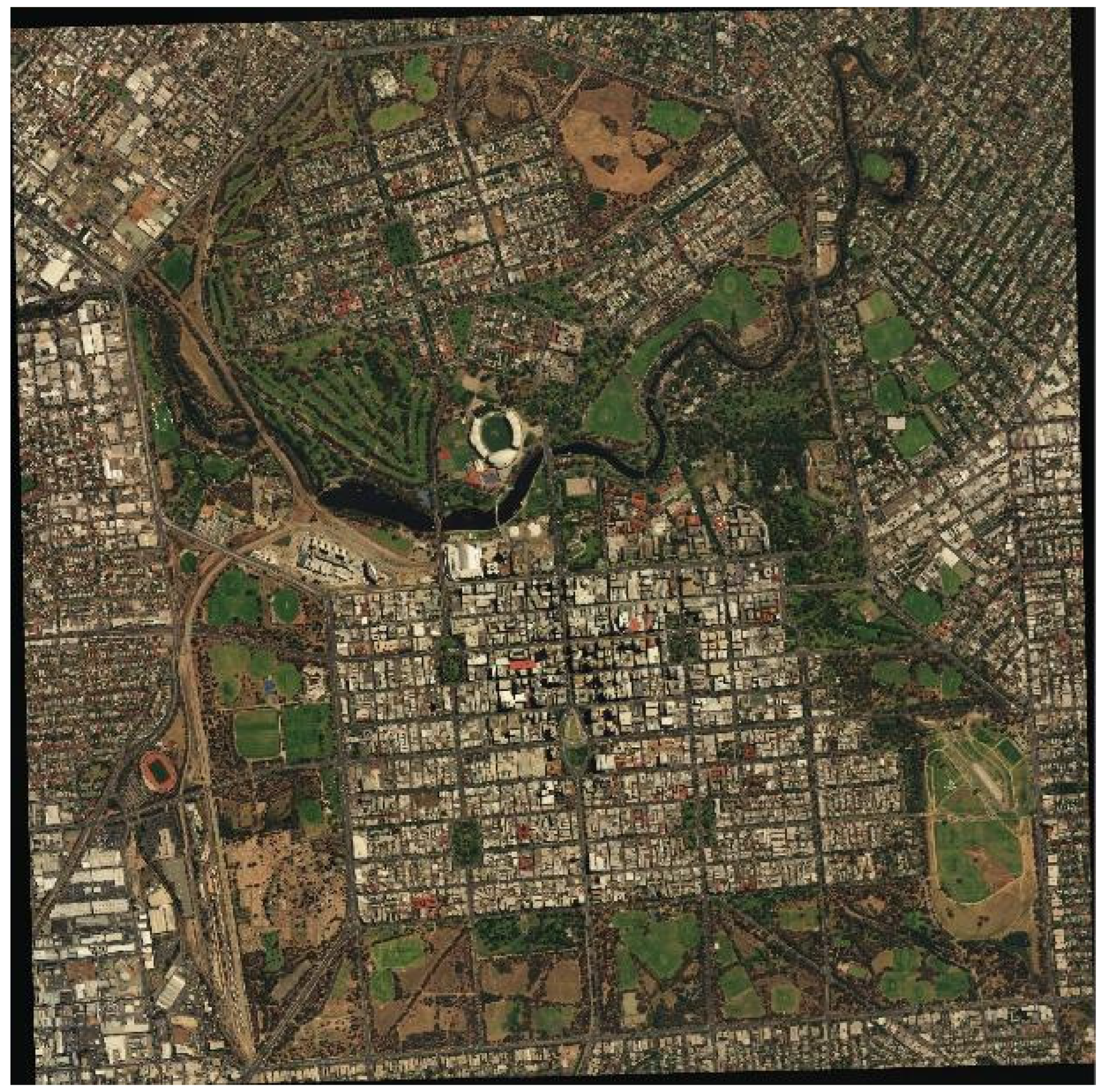

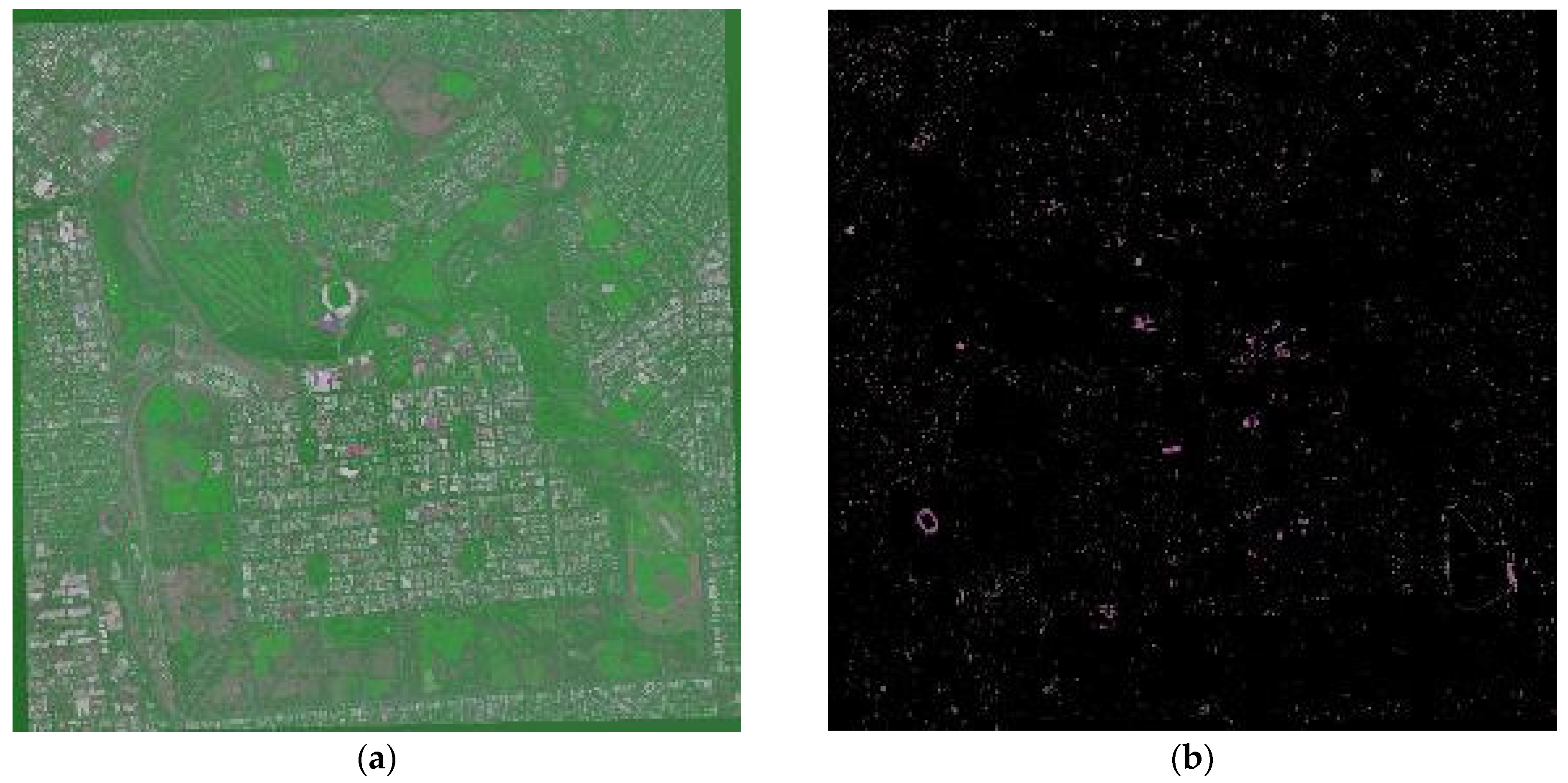

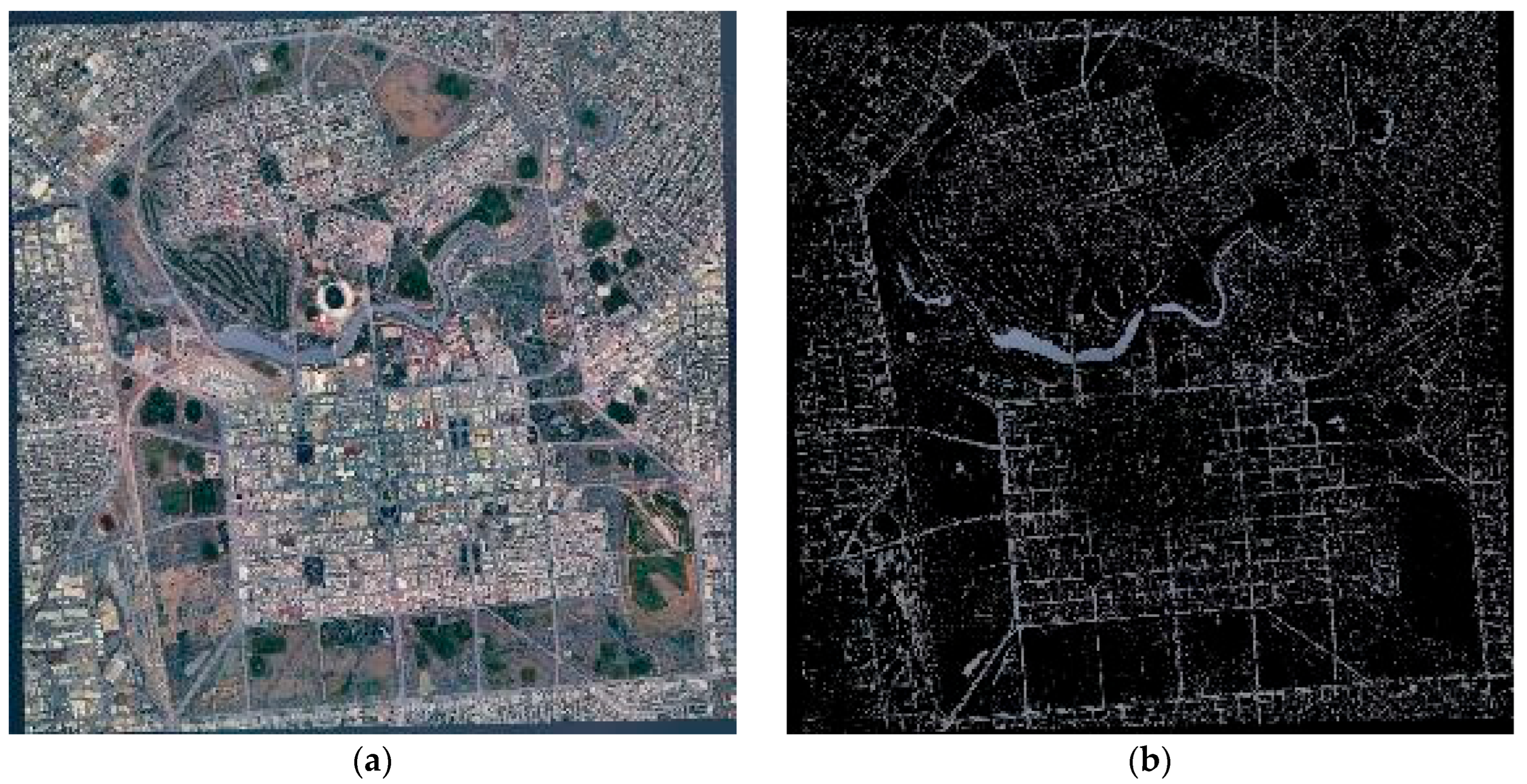

2.2. Apollo Mapping WorldView-3 Imagery

2.3. Independent Component Analysis (ICA) Framework

3. Results

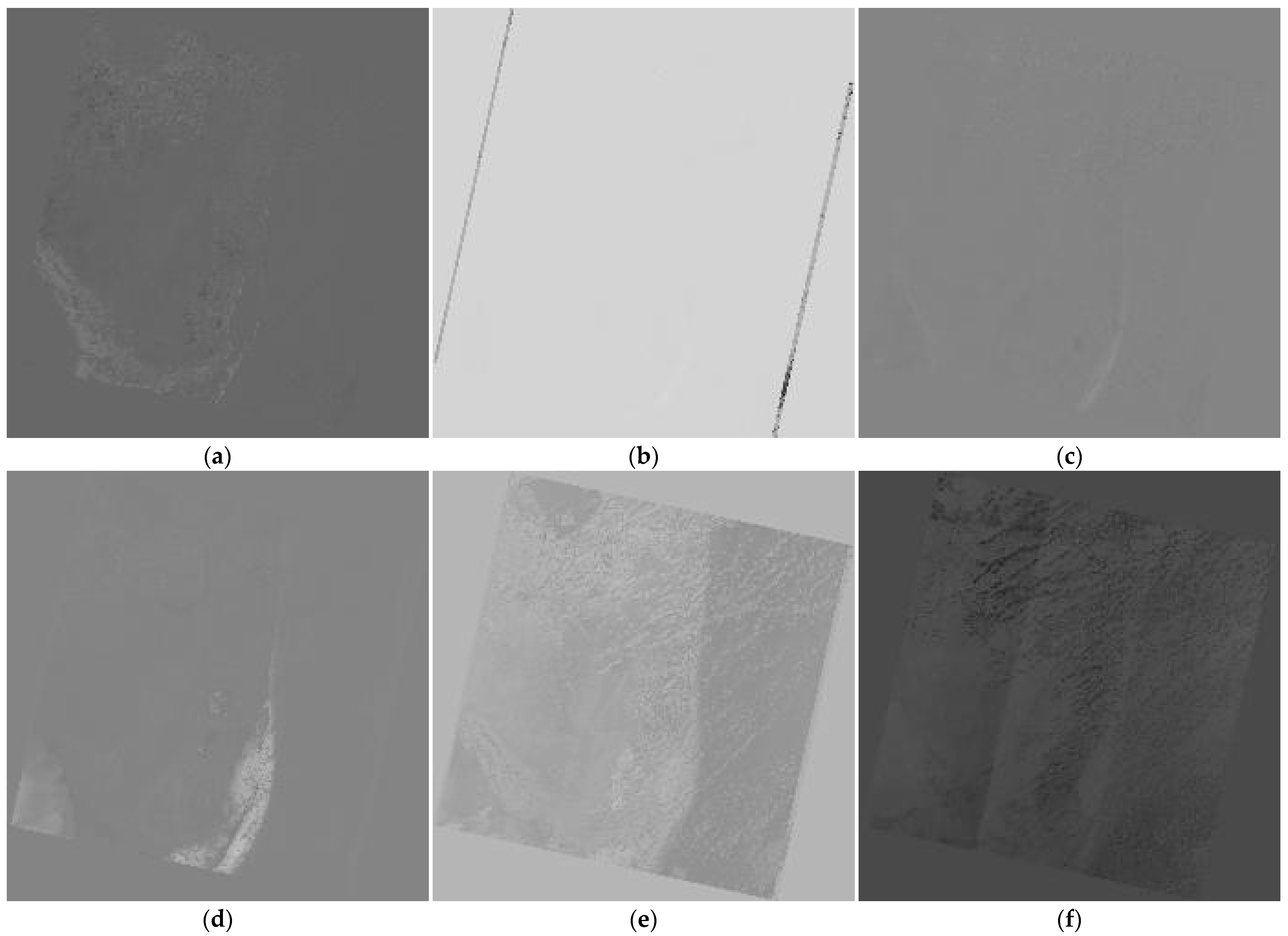

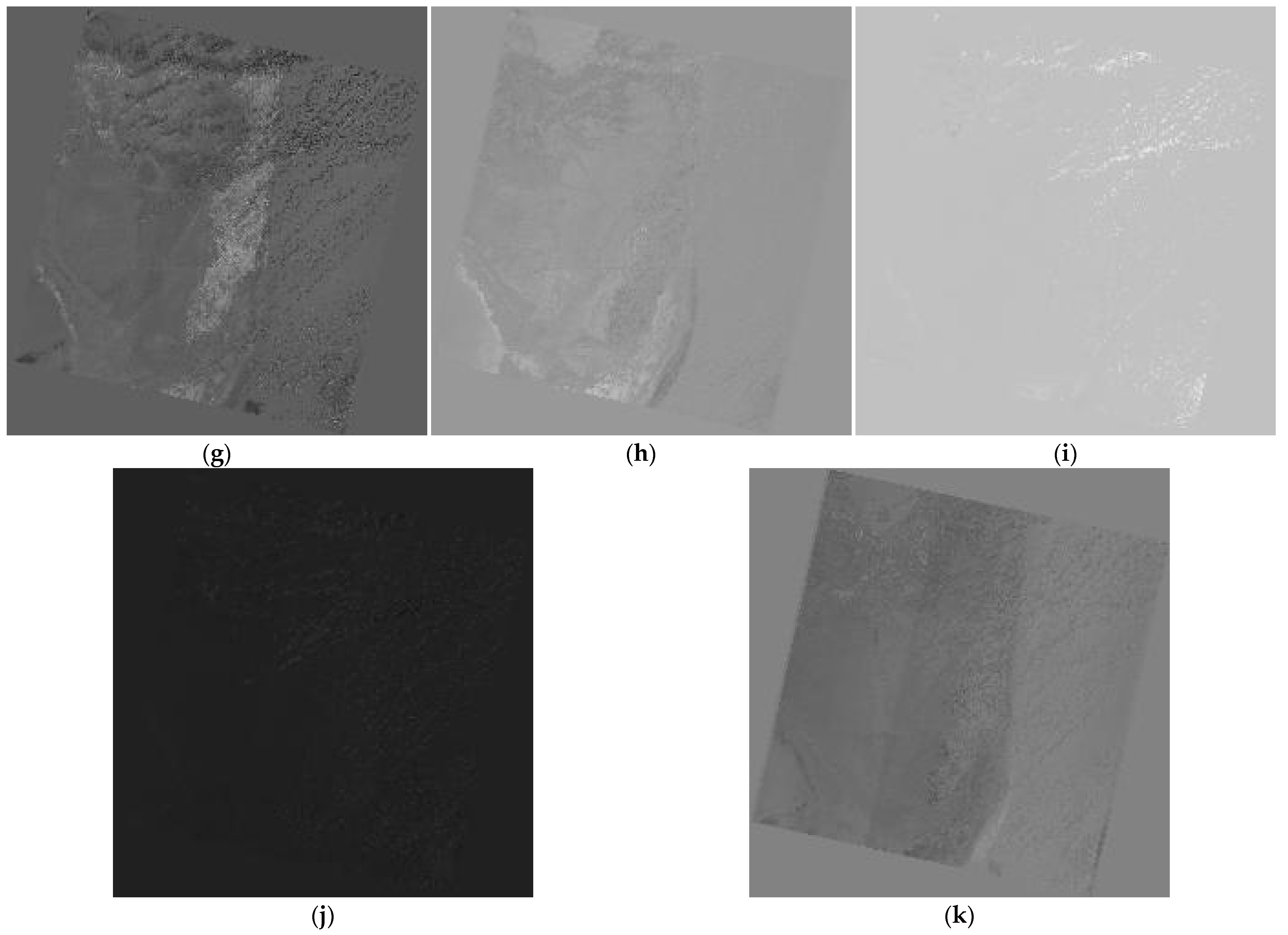

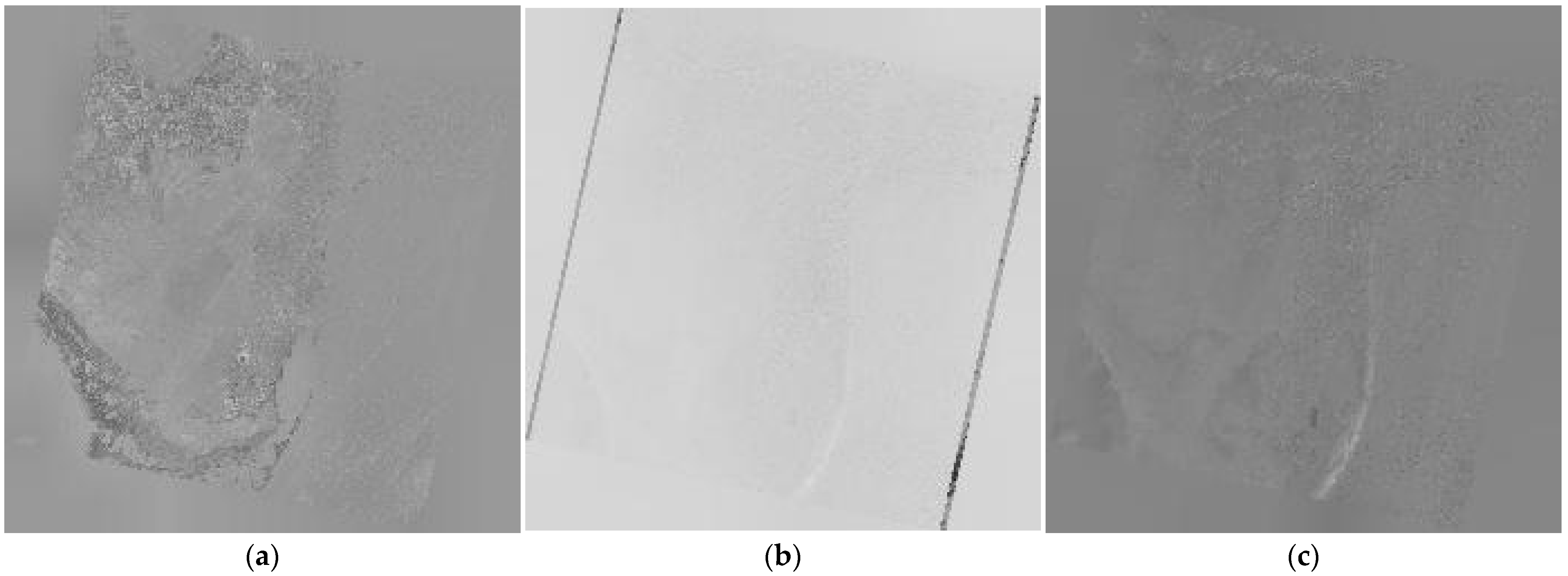

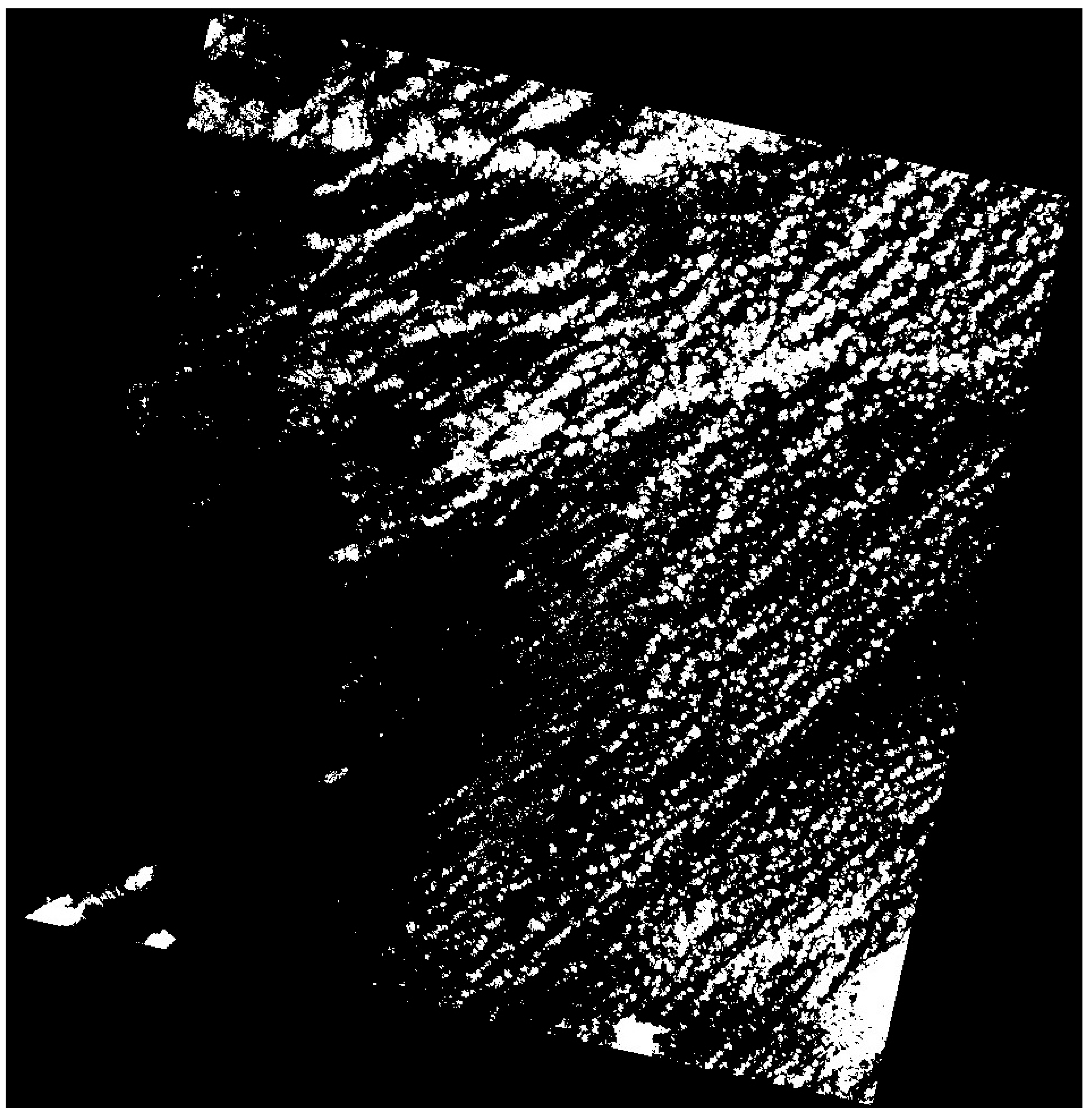

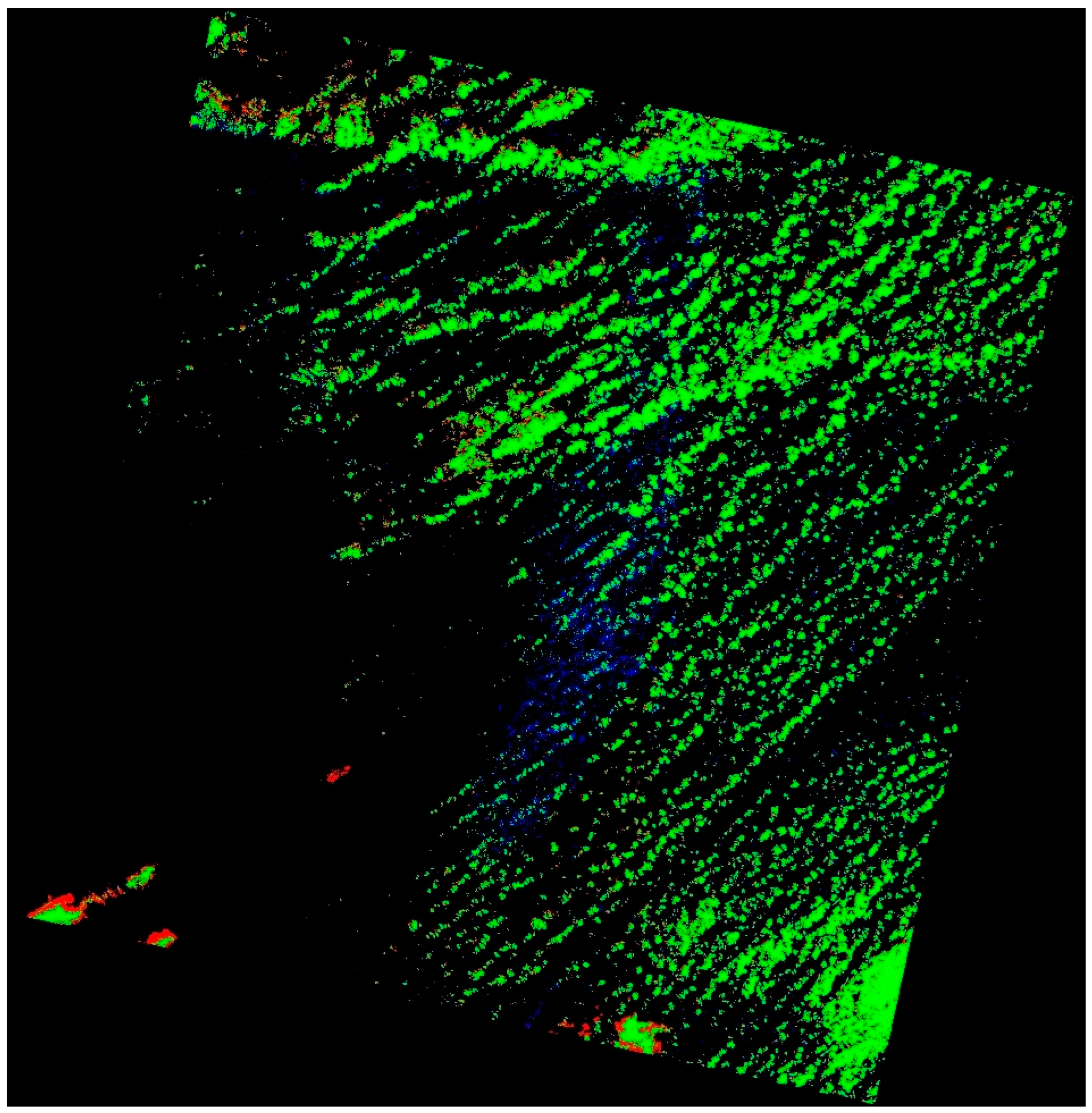

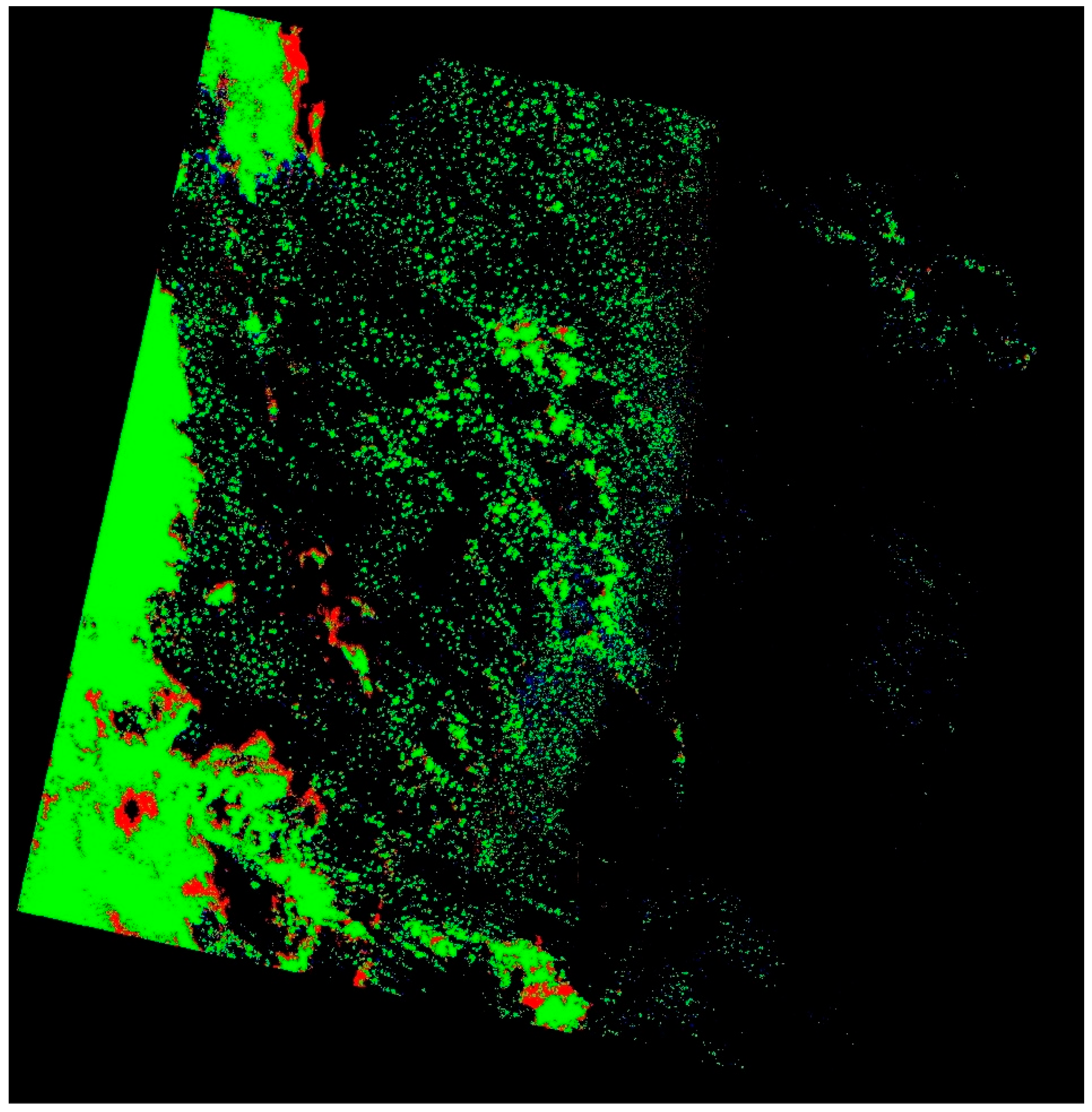

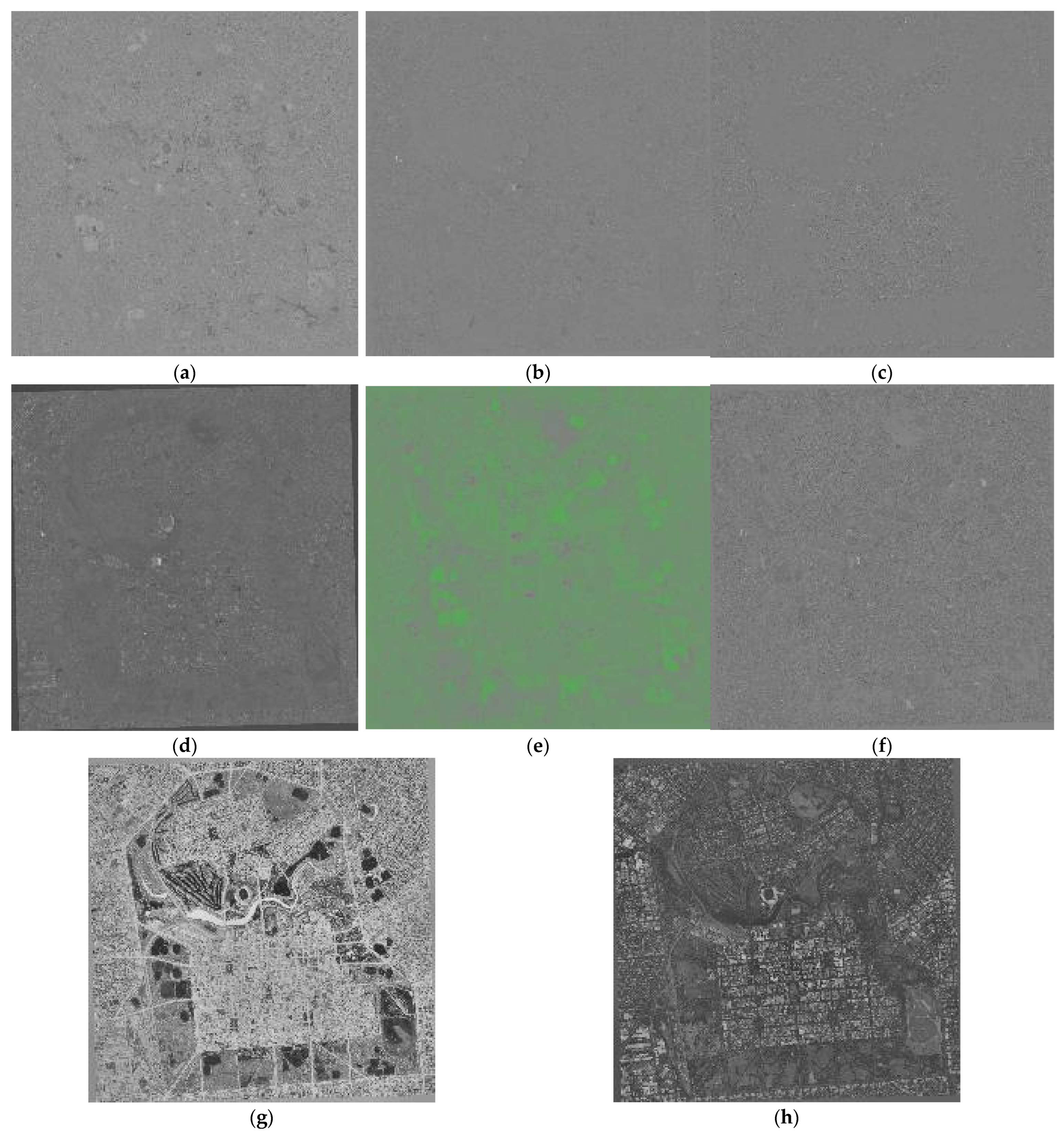

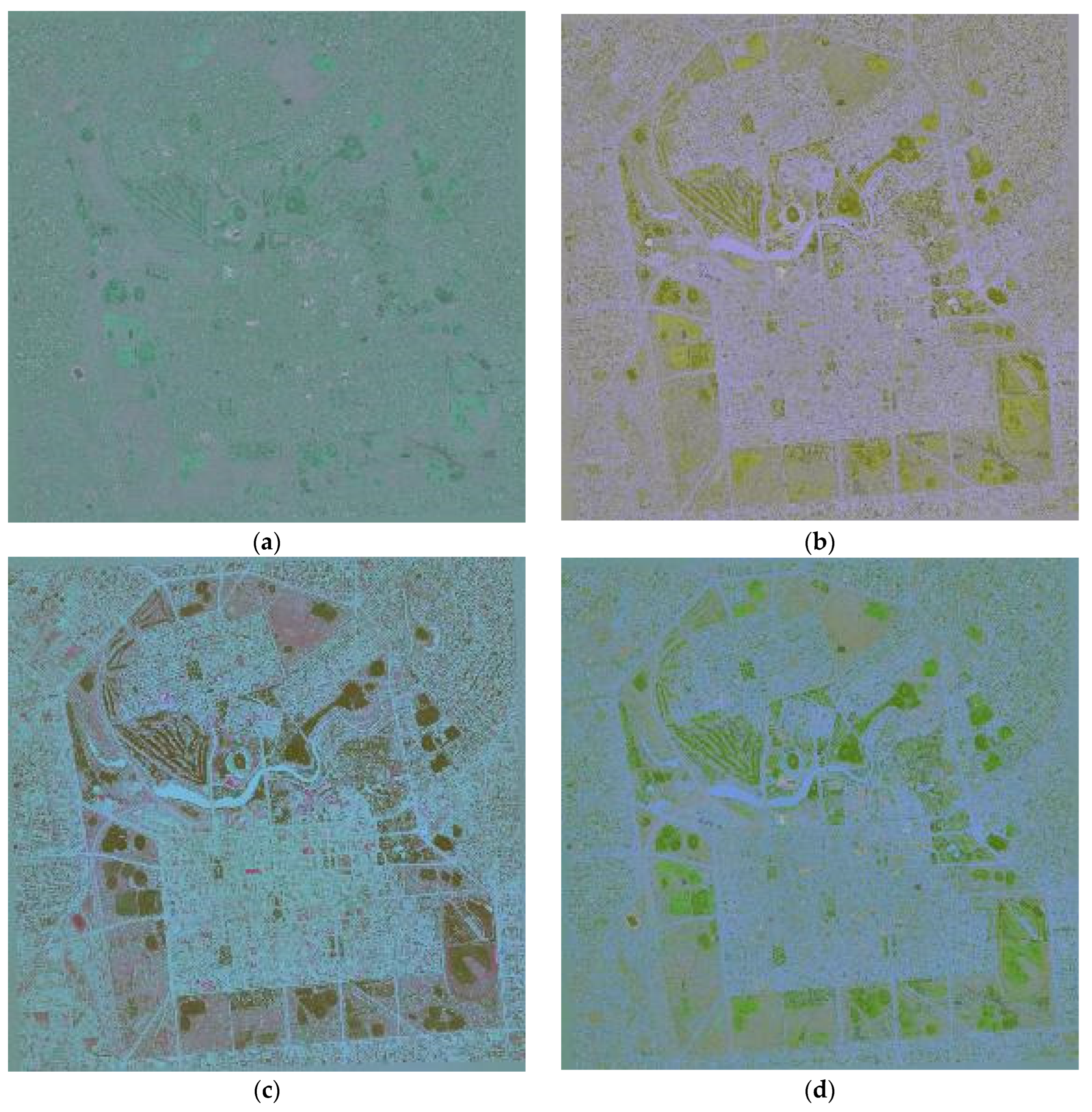

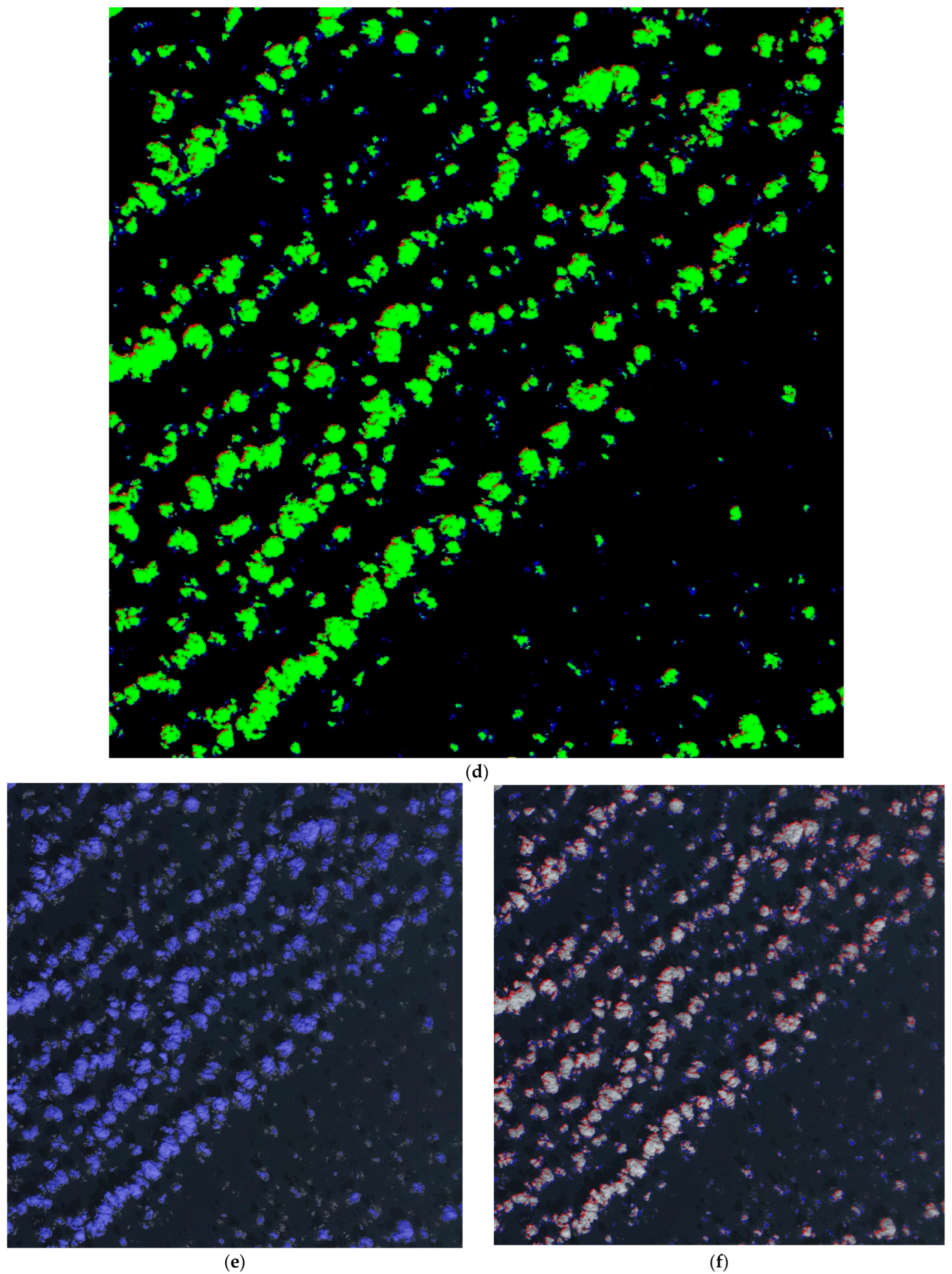

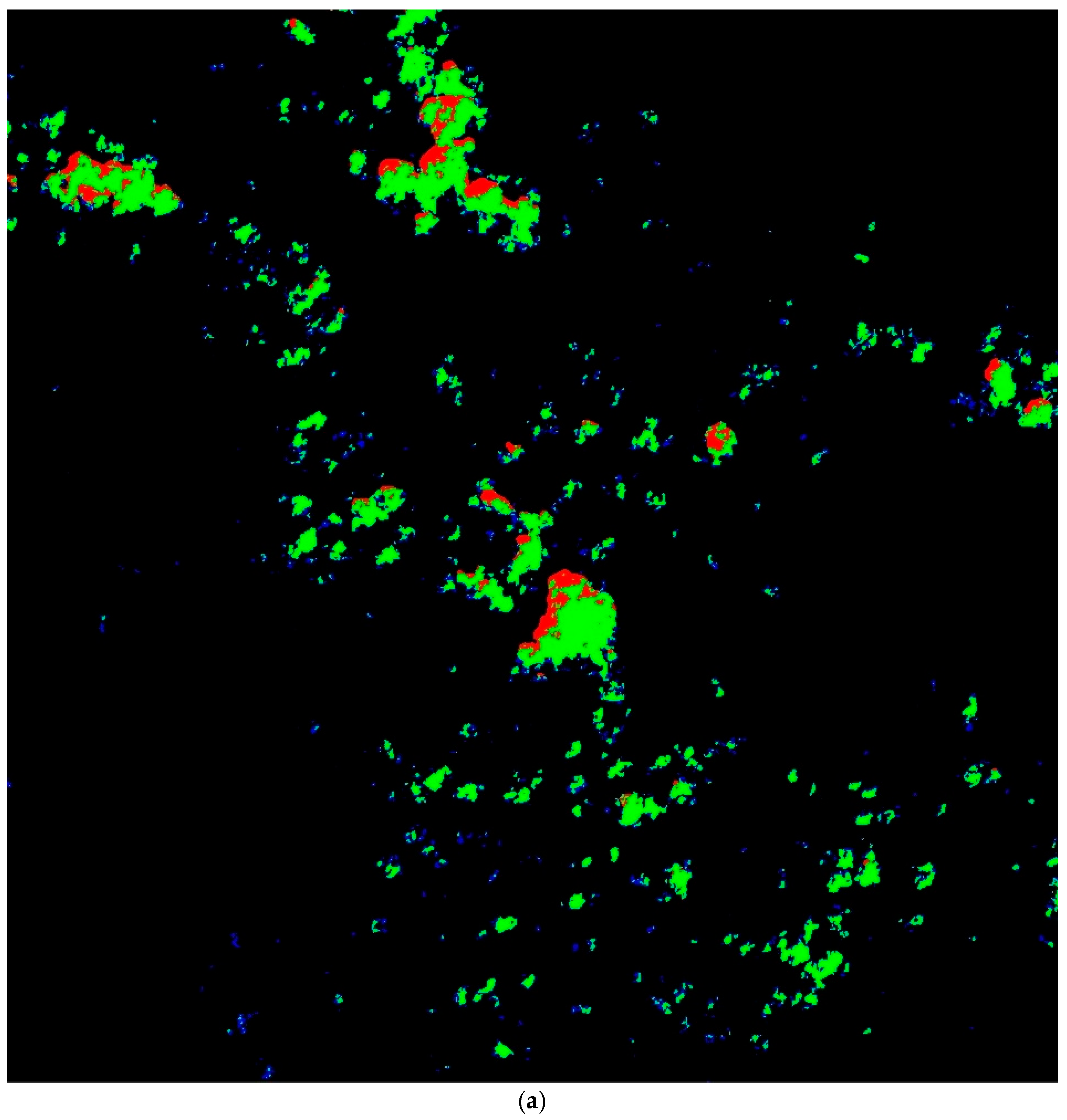

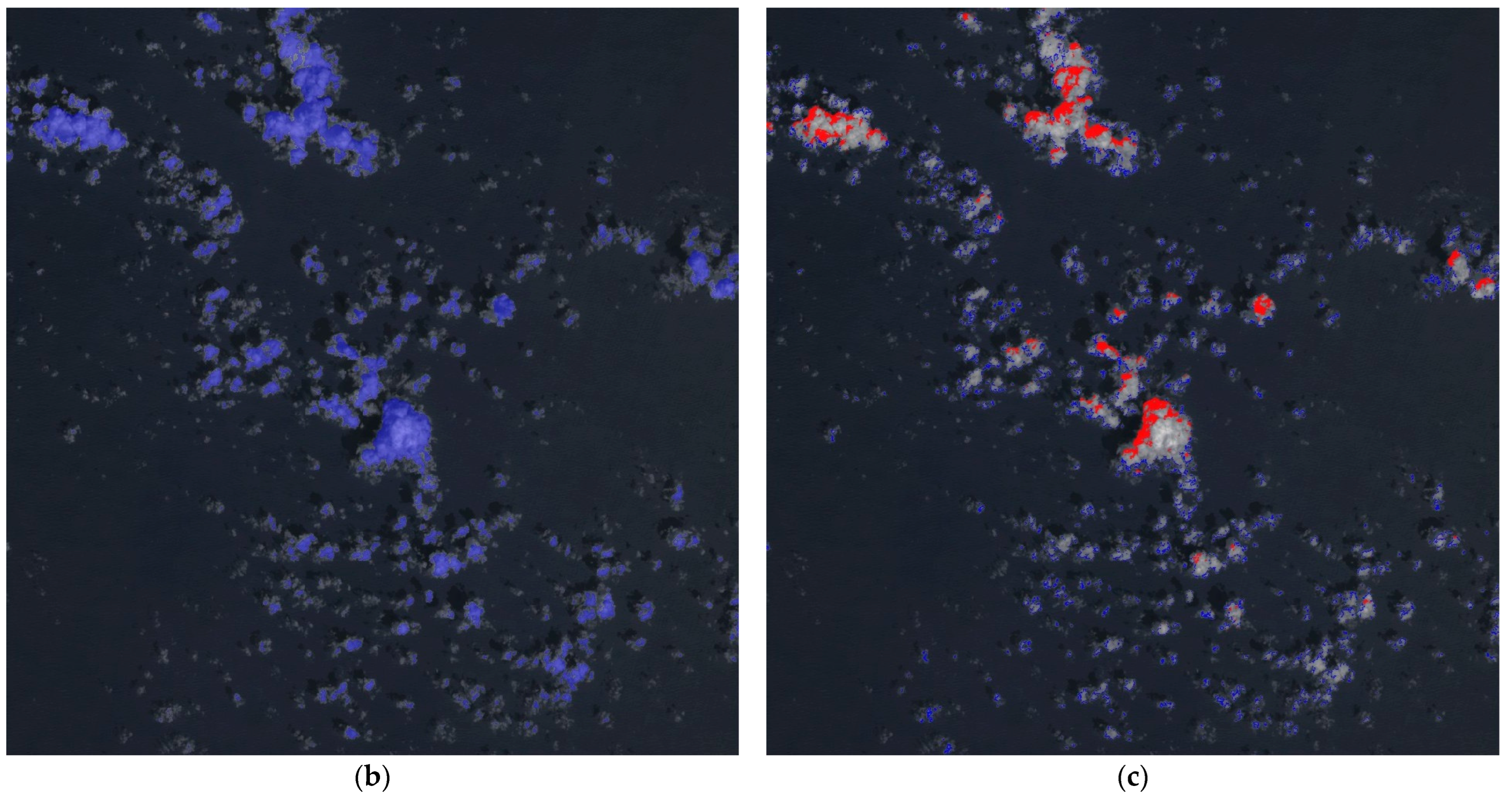

3.1. Landsat-8 Data

Retrospective on Results Across Datasets

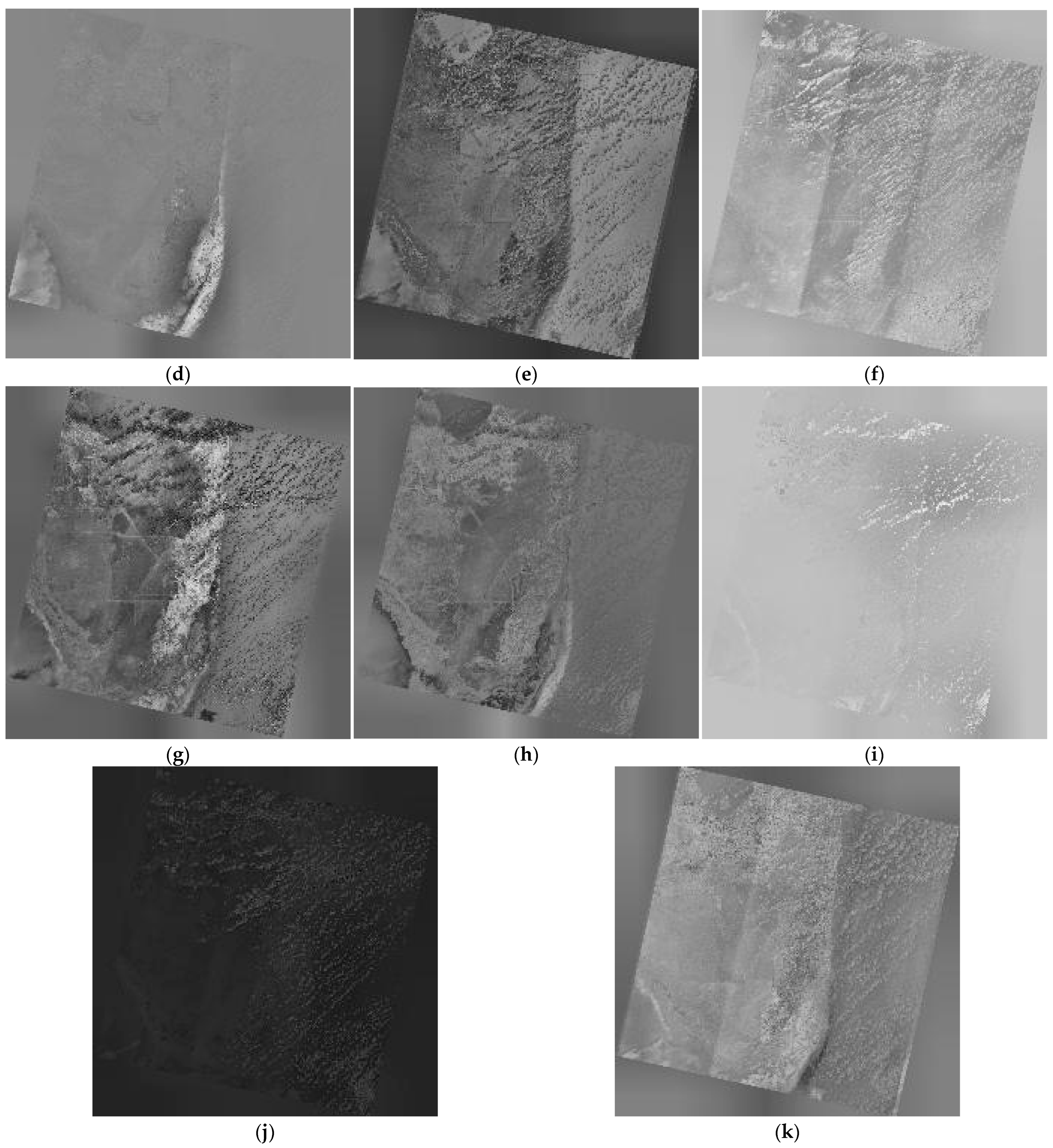

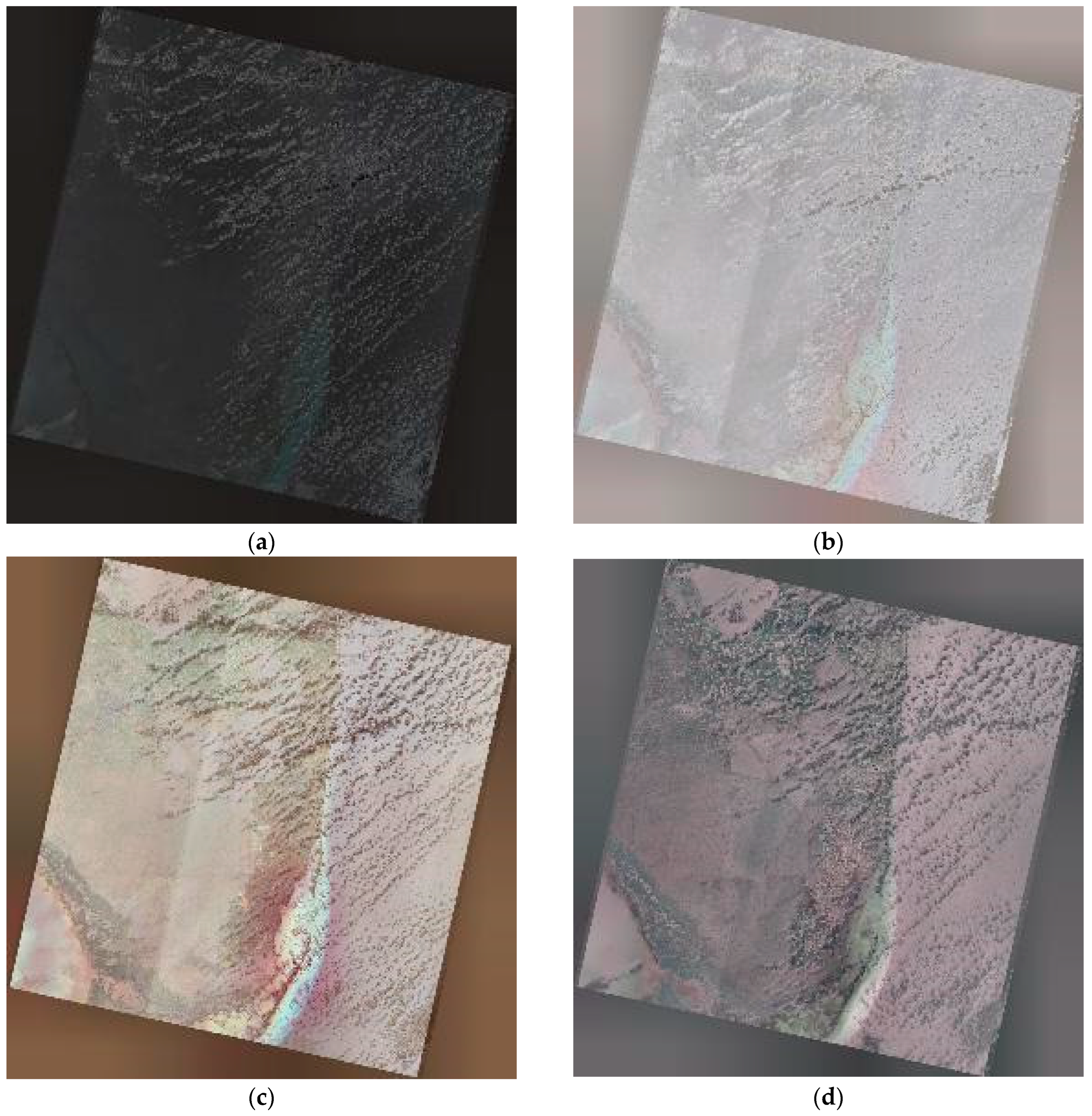

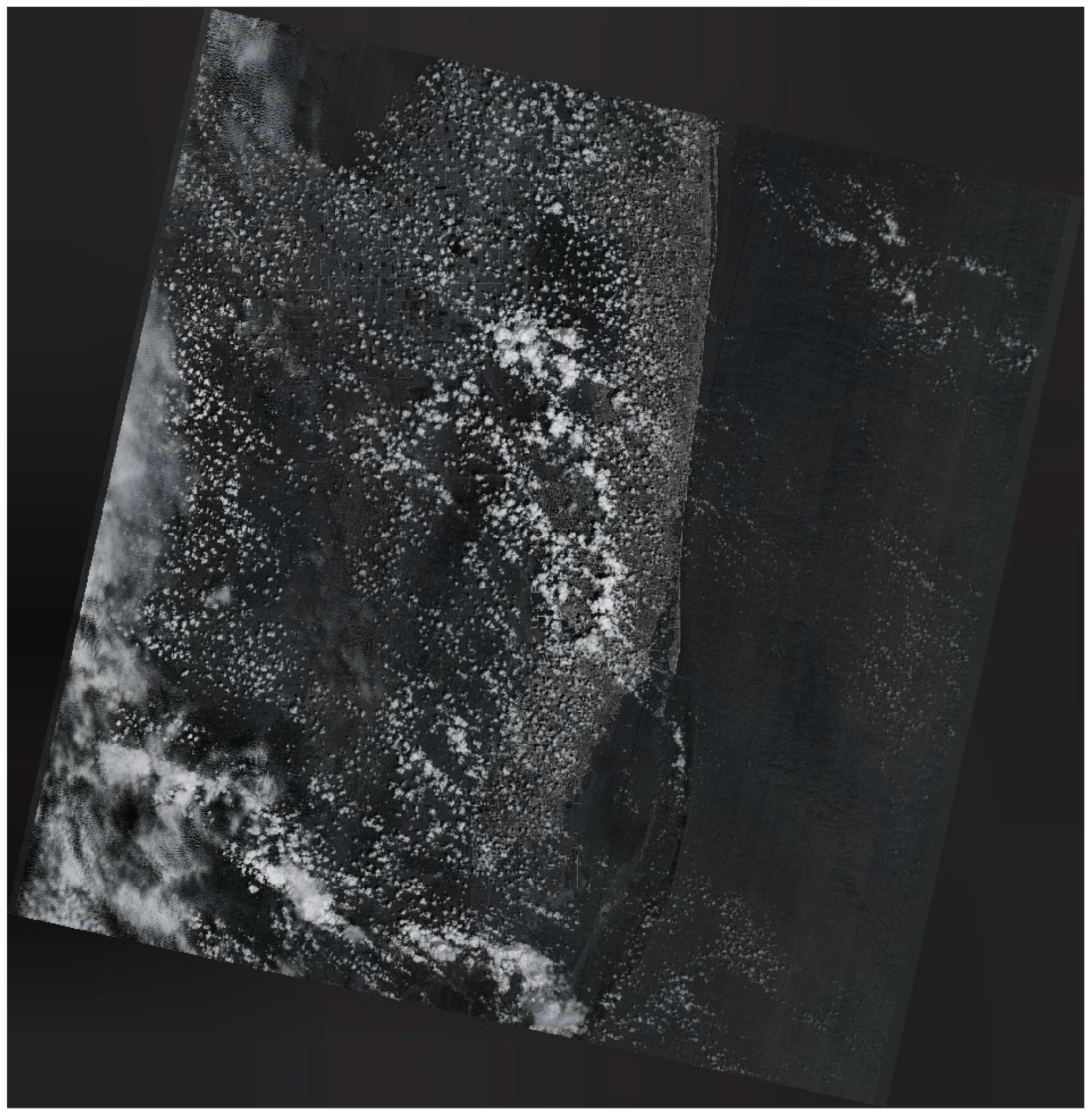

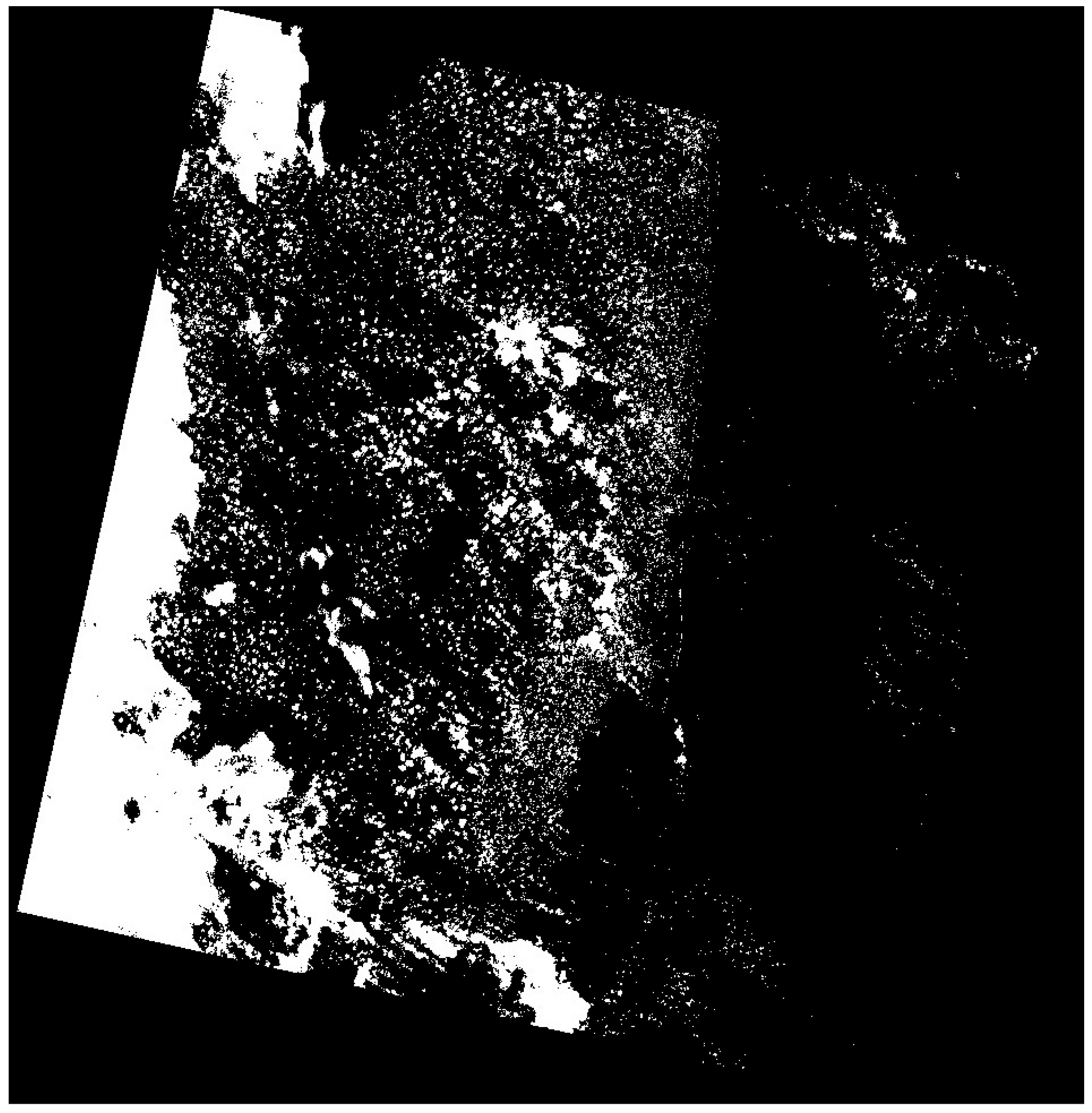

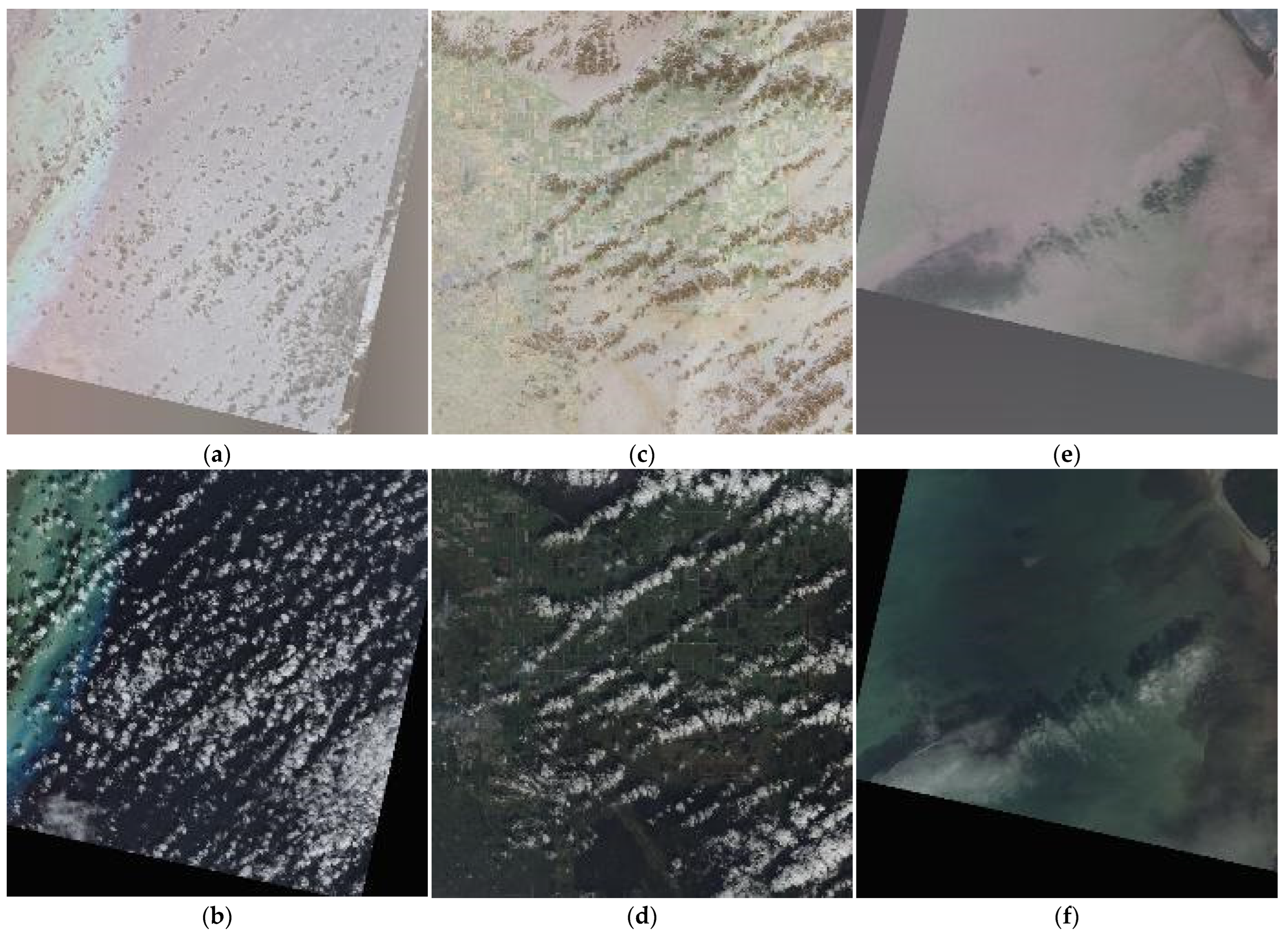

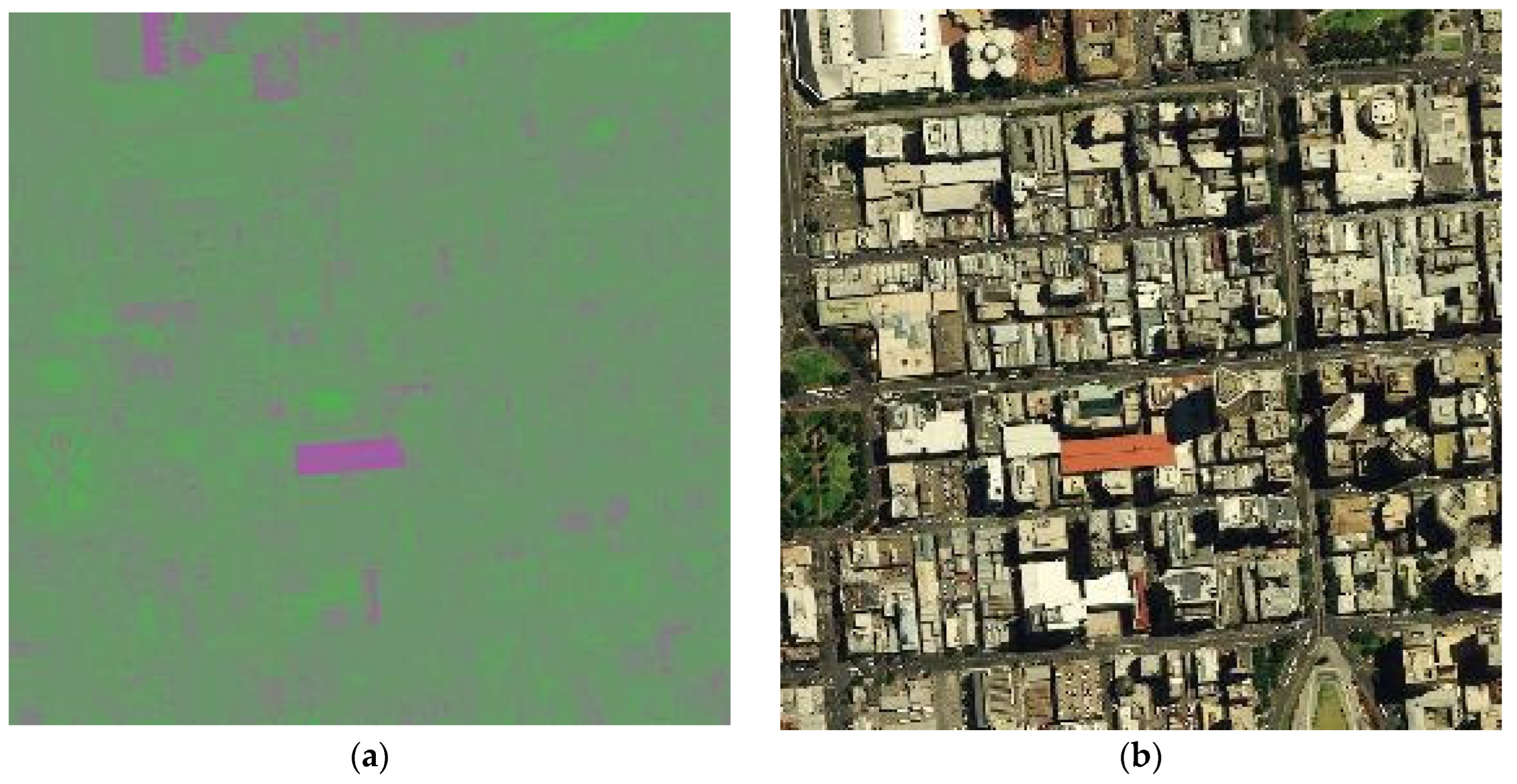

3.2. Results on WorldView-3 Data

4. Discussion

4.1. Landsat-8 Data

4.2. Analysis of WorldView-3 Data

- Yellow boxes: Grassy regions retained green color tones in both the original and reconstructed images.

- Green box: A red track remained distinguishable from adjacent grassy areas.

- Red boxes: Ponds and water bodies were correctly identified, even when their visual appearance mimicked grassy fields, demonstrating ICA’s ability to capture latent spectral distinctions.
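The bullets above compare original imagery with images reconstructed from independent components. As a minimal sketch, assuming a generic symmetric FastICA with a tanh nonlinearity (not necessarily the ICA framework used by the authors), the NumPy code below flattens a multispectral cube into a pixel-by-band matrix, estimates independent components, and rebuilds the image from all or a chosen subset of components. The helper names (`image_to_matrix`, `fast_ica`, `reconstruct`) and the synthetic three-band scene are hypothetical illustrations, not data or code from this study.

```python
import numpy as np


def image_to_matrix(cube):
    """Flatten an (H, W, B) multispectral cube into an (H*W, B) pixel-by-band matrix."""
    h, w, b = cube.shape
    return cube.reshape(h * w, b).astype(float)


def _sym_decorrelate(W):
    """Return (W W^T)^(-1/2) W so the unmixing rows stay orthonormal."""
    s, u = np.linalg.eigh(W @ W.T)
    return (u / np.sqrt(s)) @ u.T @ W


def fast_ica(X, n_components, max_iter=500, tol=1e-6, seed=0):
    """Symmetric FastICA with a tanh nonlinearity (generic sketch).

    X has shape (n_pixels, n_bands). Returns the sources S (n_pixels, k),
    a mixing matrix (n_bands, k) and the band means, with
    X ≈ S @ mixing.T + mean.
    """
    rng = np.random.default_rng(seed)
    mean = X.mean(axis=0)
    Xc = X - mean

    # Whitening: keep the k leading principal axes, scaled to unit variance.
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(eigvals)[::-1][:n_components]
    K = eigvecs[:, order] / np.sqrt(eigvals[order])   # (n_bands, k)
    Z = Xc @ K                                        # whitened data, (n_pixels, k)

    W = _sym_decorrelate(rng.standard_normal((n_components, n_components)))
    n = Z.shape[0]
    for _ in range(max_iter):
        G = np.tanh(Z @ W.T)                          # g(w_i^T z) for every pixel
        # Fixed-point update: w <- E[z g(w^T z)] - E[g'(w^T z)] w, with g' = 1 - tanh^2
        W_new = (G.T @ Z) / n - np.diag((1.0 - G ** 2).mean(axis=0)) @ W
        W_new = _sym_decorrelate(W_new)
        # Converged when every row is unchanged up to sign.
        delta = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0))
        W = W_new
        if delta < tol:
            break

    unmix = K @ W.T                                   # S = Xc @ unmix
    S = Xc @ unmix
    mixing = np.linalg.pinv(unmix).T                  # (n_bands, k)
    return S, mixing, mean


def reconstruct(S, mixing, mean, keep):
    """Rebuild the pixel matrix from the components whose indices are in `keep`."""
    keep = list(keep)
    return S[:, keep] @ mixing[:, keep].T + mean


# Hypothetical synthetic scene: two non-Gaussian sources mixed into three bands.
rng = np.random.default_rng(42)
h, w = 64, 64
s1 = rng.uniform(-1.0, 1.0, size=h * w)   # stand-in "surface" pattern
s2 = rng.laplace(size=h * w)               # stand-in sparse, bright component
true_mix = np.array([[1.0, 0.5], [0.7, 1.2], [0.3, 0.9]])  # bands x sources
cube = (np.column_stack([s1, s2]) @ true_mix.T + [0.2, 0.3, 0.4]).reshape(h, w, 3)

X = image_to_matrix(cube)
S, mixing, mean = fast_ica(X, n_components=2)
X_full = reconstruct(S, mixing, mean, keep=[0, 1])   # all components
X_part = reconstruct(S, mixing, mean, keep=[0])      # one component dropped
print("max reconstruction error:", np.abs(X_full - X).max())
```

Keeping every component reproduces the input up to round-off, while passing a subset to `reconstruct` removes the contribution of the omitted components, which is one way to inspect what each component encodes. Which components correspond to clouds, shadows, water, or vegetation in real imagery would still have to be judged from the data.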

5. ICA-Based Cloud Detection Within the Remote Sensing Landscape

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MSI | Multispectral Images |
| ICA | Independent Component Analysis |
| IC | Independent Component |
| USGS | United States Geological Survey |
| SWIR | Shortwave Infrared |
| TIRS | Thermal Infrared Sensor |
| NIR | Near-Infrared |
| RGB | Red, Green, Blue |
| Pan | Panchromatic |
| SAR | Synthetic Aperture Radar |

References

- Cagnazzo, M.; Poggi, G.; Verdoliva, L. Region-based transform coding of multispectral images. IEEE Trans. Image Process. 2007, 16, 2916–2926.

- Torres, J.; Vazquez, D.; Antelo, T.; Menendez, J.M.; Posse, A.; Alvarez, A.; Munoz, J.; Vega, C.; Del Egido, M. Acquisition and formation of multispectral images of paintings. Opt. Pura Apl. 2012, 45, 201–207.

- Ma, Z.; Ng, M.K. Multispectral Image Restoration by Generalized Opponent Transformation Total Variation. SIAM J. Imaging Sci. 2025, 18, 246–279.

- Wang, Z.; Zhou, D.; Li, X.; Zhu, L.; Gong, H.; Ke, Y. Virtual image-based cloud removal for Landsat images. GISci. Remote Sens. 2023, 60, 2160411.

- Tong, Q.; Wang, L.; Dai, Q.; Zheng, C.; Zhou, F. Enhanced cloud removal via temporal U-Net and cloud cover evolution simulation. Sci. Rep. 2025, 15, 4544.

- Ma, D.; Wu, R.; Xiao, D.; Sui, B. Cloud Removal from Satellite Images Using a Deep Learning Model with the Cloud-Matting Method. Remote Sens. 2023, 15, 904.

- Han, J.; Zhou, Y.; Gao, X.; Zhao, Y. Thin Cloud Removal Generative Adversarial Network Based on Sparse Transformer in Remote Sensing Images. Remote Sens. 2024, 16, 3658.

- Xiong, Q.; Di, L.; Feng, Q.; Liu, D.; Liu, W.; Zan, X.; Zhang, L.; Zhu, D.; Liu, Z.; Yao, X.; et al. Deriving Non-Cloud Contaminated Sentinel-2 Images with RGB and Near-Infrared Bands from Sentinel-1 Images Based on a Conditional Generative Adversarial Network. Remote Sens. 2021, 13, 1512.

- Li, J.; Wu, Z.; Hu, Z.; Li, Z.; Wang, Y.; Molinier, M. Deep Learning Based Thin Cloud Removal Fusing Vegetation Red Edge and Short Wave Infrared Spectral Information for Sentinel-2A Imagery. Remote Sens. 2021, 13, 157.

- Xu, S.; Wang, J.; Wang, J. Fast Thick Cloud Removal for Multi-Temporal Remote Sensing Imagery via Representation Coefficient Total Variation. Remote Sens. 2024, 16, 152.

- Yamazaki, M.; Fels, S. Local Image Descriptors Using Supervised Kernel ICA. IEICE Trans. Inf. Syst. 2009, E92.D, 1745–1751.

- Mitianoudis, N.; Stathaki, T. Pixel-based and region-based image fusion schemes using ICA bases. Inf. Fusion 2007, 8, 131–142.

- Cvejic, N.; Bull, D.; Canagarajah, N. Region-based multimodal image fusion using ICA bases. IEEE Sens. J. 2007, 7, 743–751.

- Boppidi, P.K.R.; Louis, V.J.; Subramaniam, A.; Tripathy, R.K.; Banerjee, S.; Kundu, S. Implementation of fast ICA using memristor crossbar arrays for blind image source separations. IET Circuits Devices Syst. 2020, 14, 484–489.

- U.S. Geological Survey. Landsat 8. Available online: https://www.usgs.gov/landsat-missions/landsat-8 (accessed on 2 June 2025).

- U.S. Geological Survey. Landsat Collection 1. Available online: https://www.usgs.gov/landsat-missions/landsat-collection-1 (accessed on 2 June 2025).

- Apollo Mapping. WorldView-3 Satellite Imagery. Available online: https://apollomapping.com/worldview-3-satellite-imagery (accessed on 3 June 2025).

- Apollo Mapping. Download Free Poster—Sample Satellite Imagery Posters & Wallpapers. Available online: https://apollomapping.com/download-free-poster (accessed on 3 June 2025).

- Google Maps. 25.441914, −80.196061. Available online: https://maps.app.goo.gl/JxzFDrfNX4wMiiA37 (accessed on 2 June 2025).

- Google Maps. CEMEX Doral FEC Aggregates Quarry. Available online: https://maps.app.goo.gl/ingQDJydJnxXSK1e6 (accessed on 2 June 2025).

- Xiong, Q.; Li, G.; Zhu, H.; Liu, Y.; Zhao, Z.; Zhang, X. SAR-to-optical image translation and cloud removal based on conditional generative adversarial networks: Literature survey, taxonomy, evaluation indicators, limits and future directions. Remote Sens. 2023, 15, 1137.

- Jeppesen, J.H.; Jacobsen, R.H.; Andersen, O.B.; Toftegaard, T.S. A cloud detection algorithm for satellite imagery based on deep learning. Remote Sens. Environ. 2019, 229, 247–259.

- Huang, K.H.; Sun, Z.L.; Xiong, Y.; Tu, L.; Yang, C.; Wang, H.T. Exploring factors affecting the performance of neural network algorithm for detecting clouds, snow, and lakes in Sentinel-2 images. Remote Sens. 2024, 16, 3162.

- Shi, Z.; Huang, L.; Wu, F.; Lei, Y.; Wang, H.; Tang, Z. An improved multi-threshold clutter filtering algorithm for W-band cloud radar based on K-means clustering. Remote Sens. 2024, 16, 4640.

- Nguyen, C.; Starek, M.J.; Tissot, P.; Gibeaut, J. Unsupervised clustering method for complexity reduction of terrestrial LiDAR data in marshes. Remote Sens. 2018, 10, 133.

- Prades, J.; Safont, G.; Salazar, A.; Vergara, L. Estimation of the number of endmembers in hyperspectral images using agglomerative clustering. Remote Sens. 2020, 12, 3585.

- Zhu, J.; Cao, S.; Xie, B. Subpixel snow mapping using daily AVHRR/2 data over Qinghai-Tibet Plateau. Remote Sens. 2022, 14, 2844.

- Liu, Y.H.; Wu, S.B.; Zhang, B.; Xiong, S.; Wang, C.S. Accurate deformation retrieval of the 2023 Turkey–Syria earthquakes using multi-track InSAR data and a spatio-temporal correlation analysis with the ICA method. Remote Sens. 2024, 16, 3139.

- King, M.D.; Kaufman, Y.J.; Menzel, W.P.; Tanre, D. Remote sensing of cloud, aerosol, and water vapor properties from the Moderate Resolution Imaging Spectrometer (MODIS). IEEE Trans. Geosci. Remote Sens. 1992, 30, 2–27.

- Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.-C.; Skakun, S.V.; Justice, C.O. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Meraner, A.; Ebel, P.; Zhu, X.X.; Schmitt, M. Cloud removal in Sentinel-2 imagery using a deep residual neural network and SAR-optical data fusion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 333–346.

- Li, Z.; Shen, H.; Weng, Q.; Zhang, Y.; Dou, P.; Zhang, L. Cloud and cloud shadow detection for optical satellite imagery: Features, algorithms, validation, and prospects. ISPRS J. Photogramm. Remote Sens. 2022, 188, 89–108.

Landsat-8 spectral bands:

| Band | Name | Wavelength (µm) | Resolution (m) |
|---|---|---|---|
| 1 | Coastal | 0.430–0.450 | 30 |
| 2 | Blue | 0.450–0.510 | 30 |
| 3 | Green | 0.530–0.590 | 30 |
| 4 | Red | 0.640–0.670 | 30 |
| 5 | NIR | 0.850–0.880 | 30 |
| 6 | SWIR 1 | 1.570–1.650 | 30 |
| 7 | SWIR 2 | 2.110–2.290 | 30 |
| 8 | Pan | 0.500–0.680 | 15 |
| 9 | Cirrus | 1.360–1.380 | 30 |
| 10 | TIRS 1 | 10.600–11.190 | 100 |
| 11 | TIRS 2 | 11.500–12.510 | 100 |

WorldView-3 multispectral bands:

| Band | Name | Wavelength (µm) | Resolution (m) |
|---|---|---|---|
| 1 | Coastal | 0.400–0.450 | 1.24 |
| 2 | Blue | 0.450–0.510 | 1.24 |
| 3 | Green | 0.510–0.580 | 1.24 |
| 4 | Yellow | 0.585–0.625 | 1.24 |
| 5 | Red | 0.630–0.690 | 1.24 |
| 6 | Red Edge | 0.705–0.745 | 1.24 |
| 7 | NIR 1 | 0.770–0.895 | 1.24 |
| 8 | NIR 2 | 0.860–1.040 | 1.24 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bosques-Perez, M.A.; Rishe, N.; Yan, T.; Deng, L.; Adjouadi, M. Effects of Clouds and Shadows on the Use of Independent Component Analysis for Feature Extraction. Remote Sens. 2025, 17, 2632. https://doi.org/10.3390/rs17152632
