Optical and SAR Image Registration in Equatorial Cloudy Regions Guided by Automatically Point-Prompted Cloud Masks

Abstract

1. Introduction

- (1) We introduce an optical and SAR image-matching framework designed specifically for equatorial cloudy regions, which improves geometric alignment accuracy by mitigating cloud interference in the optical imagery;

- (2) We propose a cloud detection method based on a prompt-driven visual segmentation model with automatic prompt-point generation, whose matching performance is comparable to that obtained with manual prompts;

- (3) We conduct experiments in four equatorial regions using imagery from five different satellites, demonstrating that the proposed method maintains sub-pixel alignment accuracy even when cloud coverage in the optical images exceeds 50%.
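Contributions (1) and (2) rest on using a cloud mask to stop cloud-contaminated tie points from degrading optical-SAR alignment, and Section 3.2.2 refers to cloud-mask weighting. This excerpt does not give the paper's adjustment model, so what follows is only a minimal sketch under stated assumptions. It fits a single 2-D affine transform by weighted least squares (our simplification, not the authors' model). The helpers `mask_weights` and `fit_affine_weighted` are hypothetical and give tie points on cloudy pixels a weight of zero.

```python
import numpy as np


def mask_weights(points_xy, cloud_mask, cloud_weight=0.0):
    """Return 1.0 for tie points on clear pixels and `cloud_weight` on cloudy ones.

    points_xy: (N, 2) array of (x, y) pixel coordinates in the optical image.
    cloud_mask: 2-D boolean array, True where the optical pixel is cloud.
    """
    cols = np.clip(np.round(points_xy[:, 0]).astype(int), 0, cloud_mask.shape[1] - 1)
    rows = np.clip(np.round(points_xy[:, 1]).astype(int), 0, cloud_mask.shape[0] - 1)
    return np.where(cloud_mask[rows, cols], cloud_weight, 1.0)


def fit_affine_weighted(src, dst, weights):
    """Weighted least-squares affine transform mapping src -> dst.

    Minimises sum_i w_i * ||dst_i - A @ [x_i, y_i, 1]||^2 and returns A as (2, 3).
    """
    design = np.hstack([src, np.ones((len(src), 1))])  # (N, 3)
    scale = np.sqrt(weights)[:, None]                  # row scaling turns WLS into OLS
    params, *_ = np.linalg.lstsq(design * scale, dst * scale, rcond=None)
    return params.T


# Synthetic check (hypothetical data): the right half of a 100 x 100 optical
# image is cloud, and tie points there carry a systematic 15-pixel error.
rng = np.random.default_rng(0)
true_A = np.array([[1.01, 0.02, 5.0], [-0.01, 0.99, -3.0]])
src = rng.uniform(0, 99, size=(200, 2))
dst = np.hstack([src, np.ones((200, 1))]) @ true_A.T

cloud_mask = np.zeros((100, 100), dtype=bool)
cloud_mask[:, 50:] = True
w = mask_weights(src, cloud_mask)
dst_noisy = dst.copy()
dst_noisy[w == 0] += 15.0  # simulated false matches on cloud

A_masked = fit_affine_weighted(src, dst_noisy, w)
A_unmasked = fit_affine_weighted(src, dst_noisy, np.ones(len(src)))
print(np.round(A_masked, 3))
print(np.round(A_unmasked, 3))
```

With zero weights the cloudy tie points drop out entirely, and the masked fit recovers the true transform while the unmasked fit is biased. Setting `cloud_weight` between 0 and 1 would down-weight rather than discard such points. This excerpt does not state which of the two the paper does.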

2. Related Works

2.1. Multimodal Remote Sensing Image-Matching

2.2. Cloud Detection from Optical Remote Sensing Imagery

3. Materials and Methods

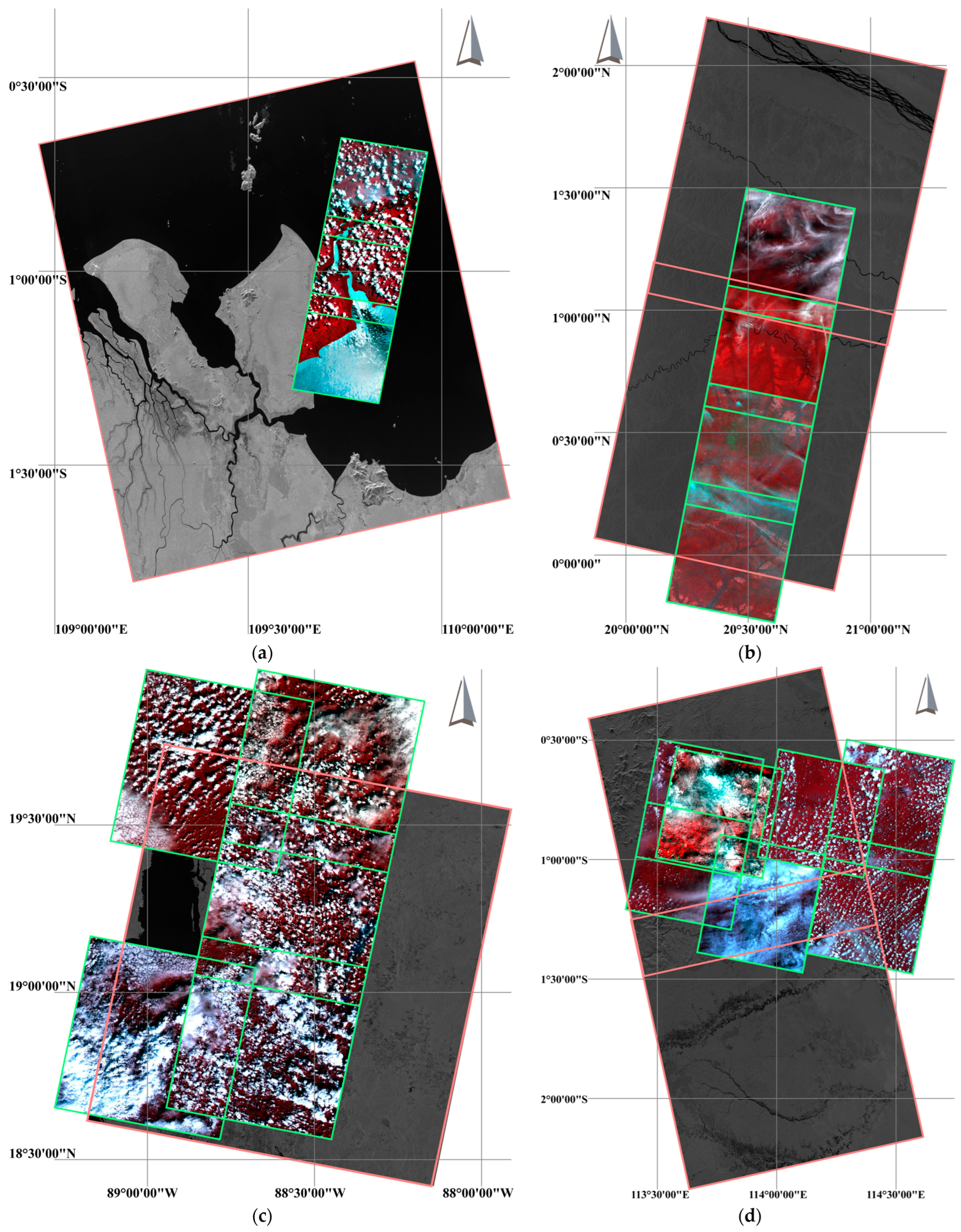

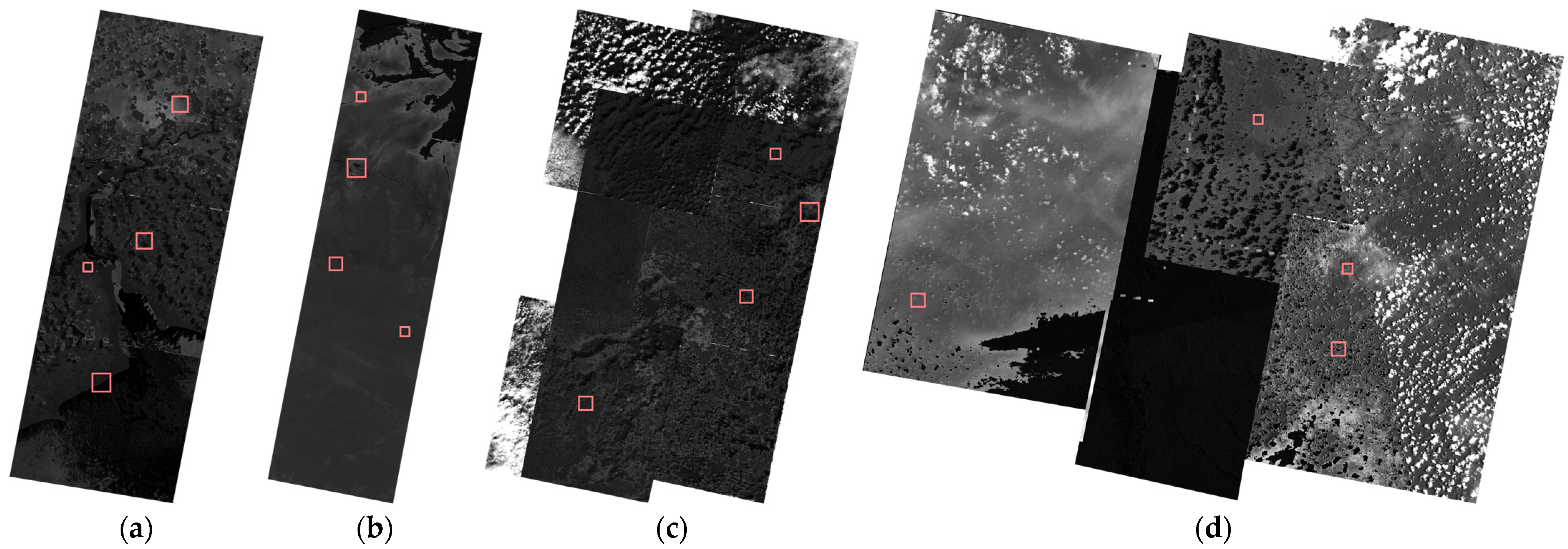

3.1. Datasets

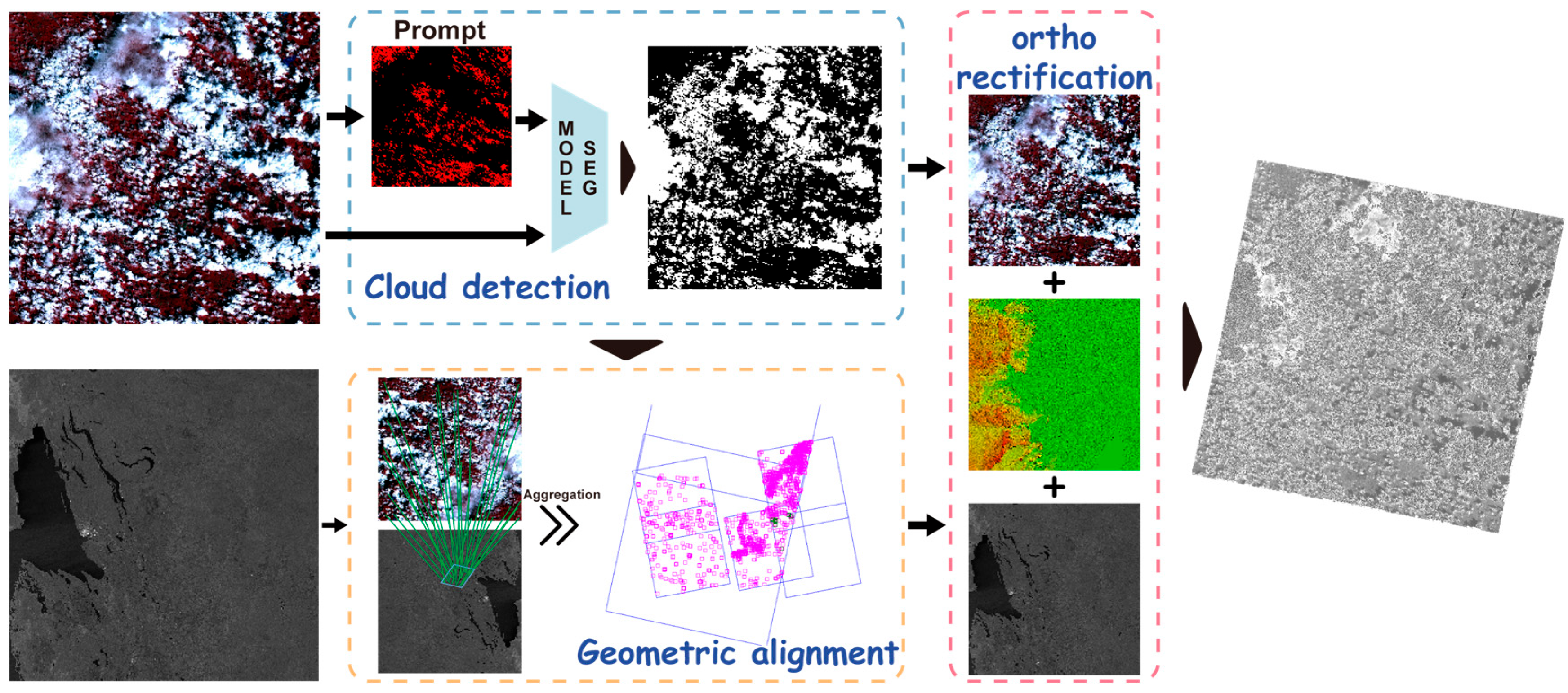

3.2. The Proposed Framework

3.2.1. Cloud Detection with Prompt-Driven Segmentation

3.2.2. Geometric Alignment of Optical-SAR Images with Cloud-Mask Weighting

3.3. Implementation Details

4. Results

4.1. Parameter Study

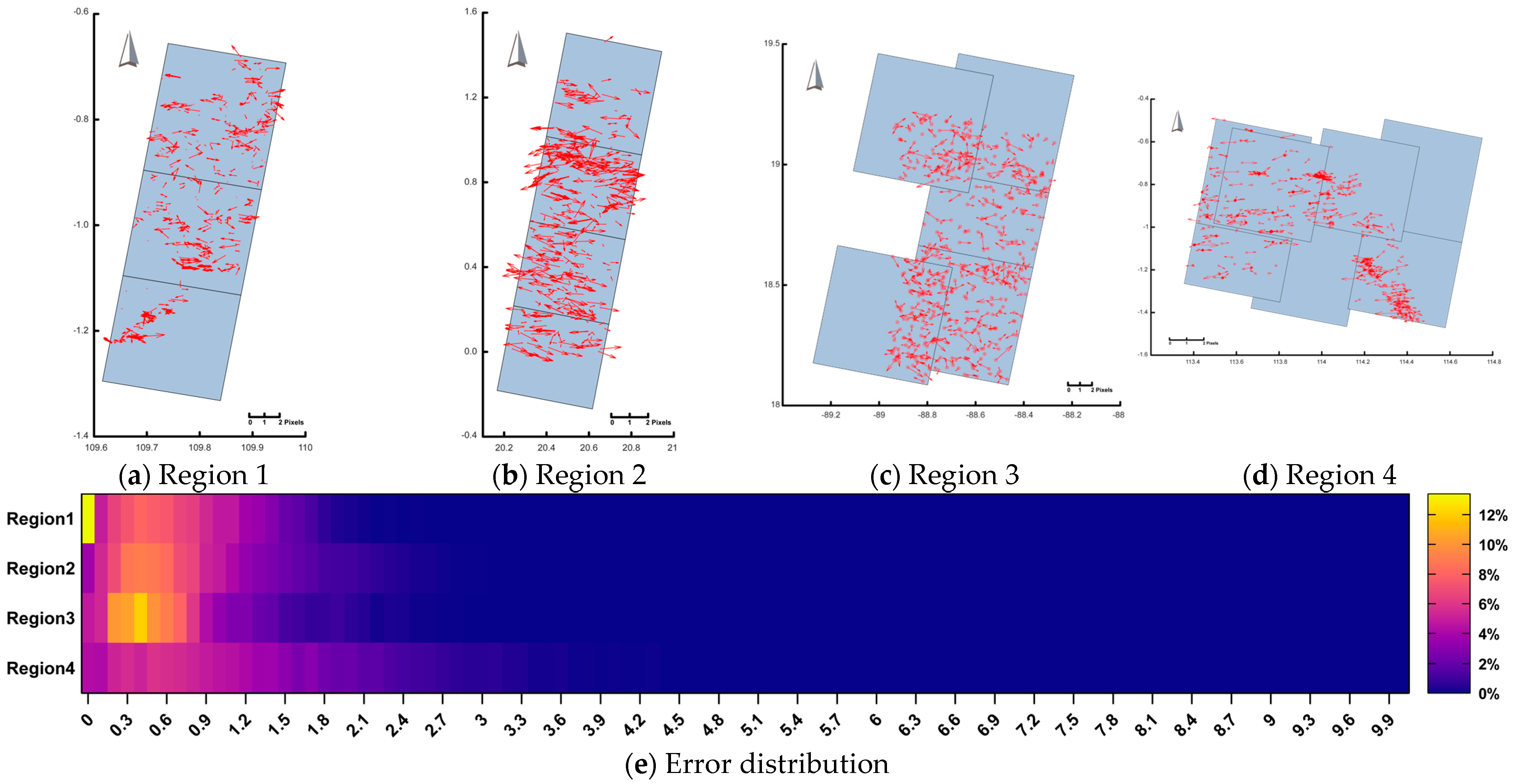

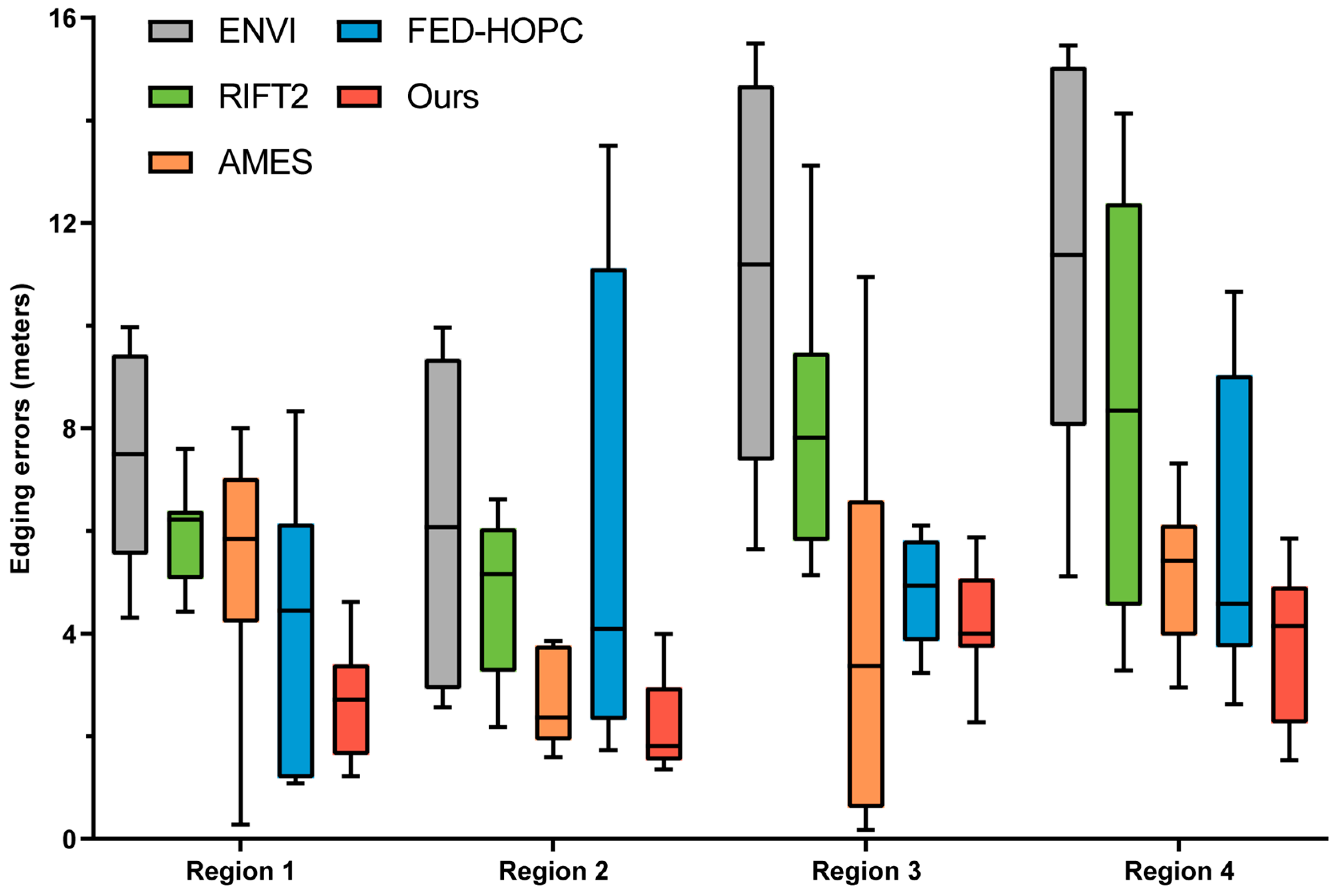

4.2. Quantitative Analysis

4.3. Qualitative Analysis

5. Discussion

5.1. Comparison with Other Matching Strategies

5.2. Ablation Experiments

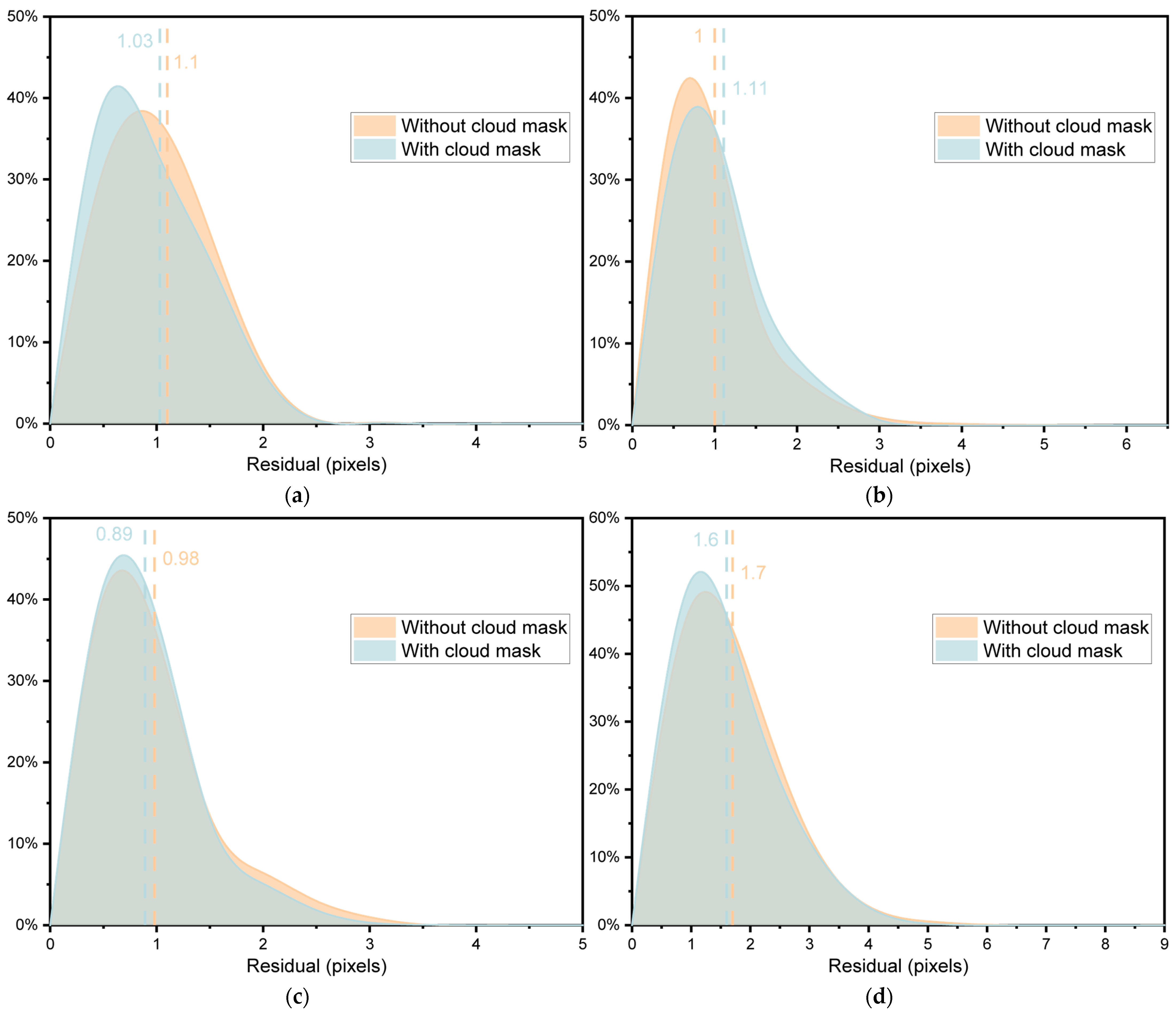

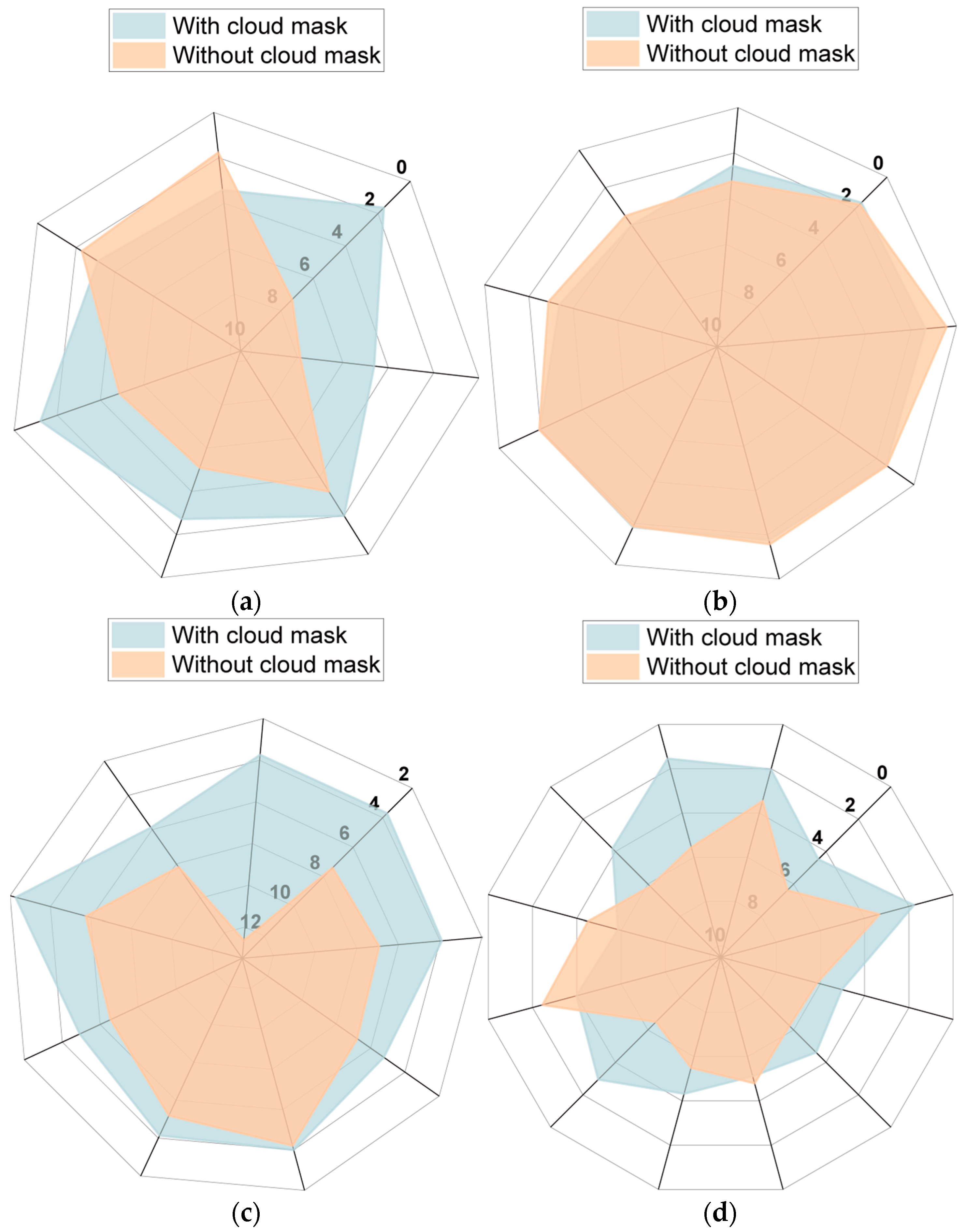

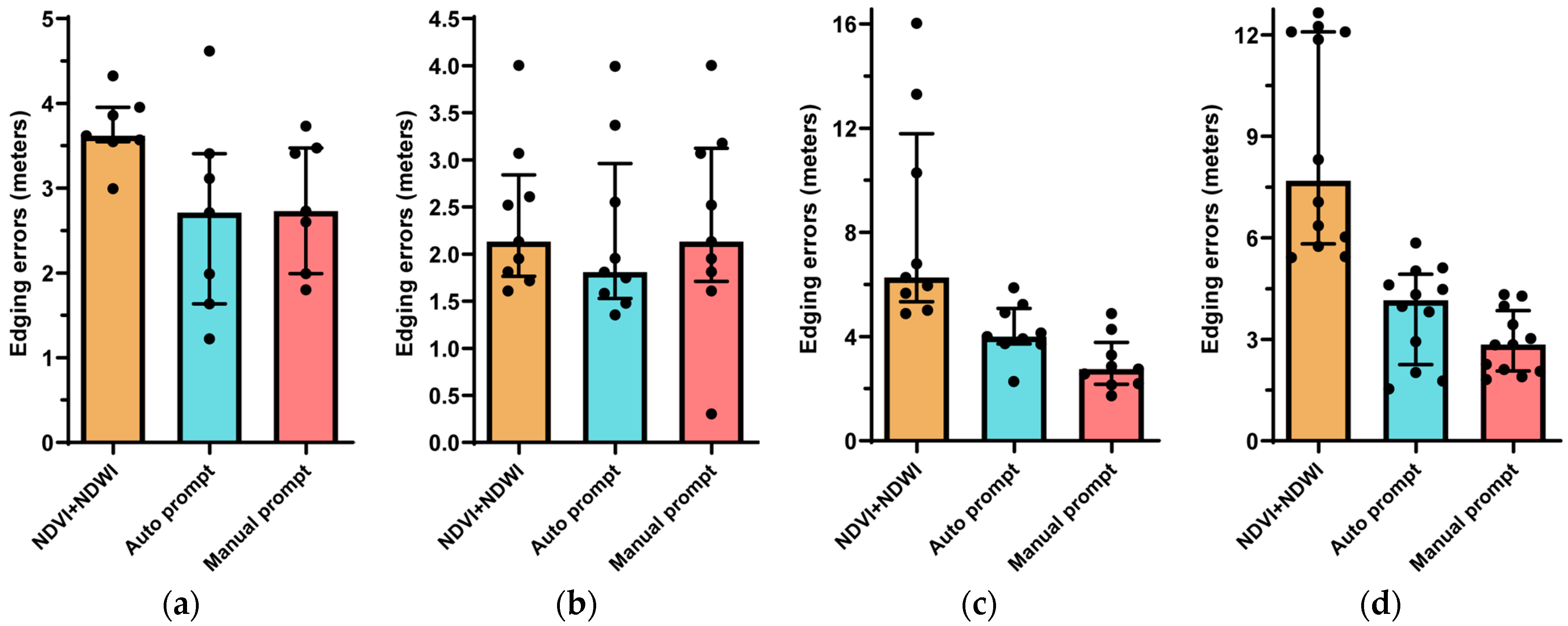

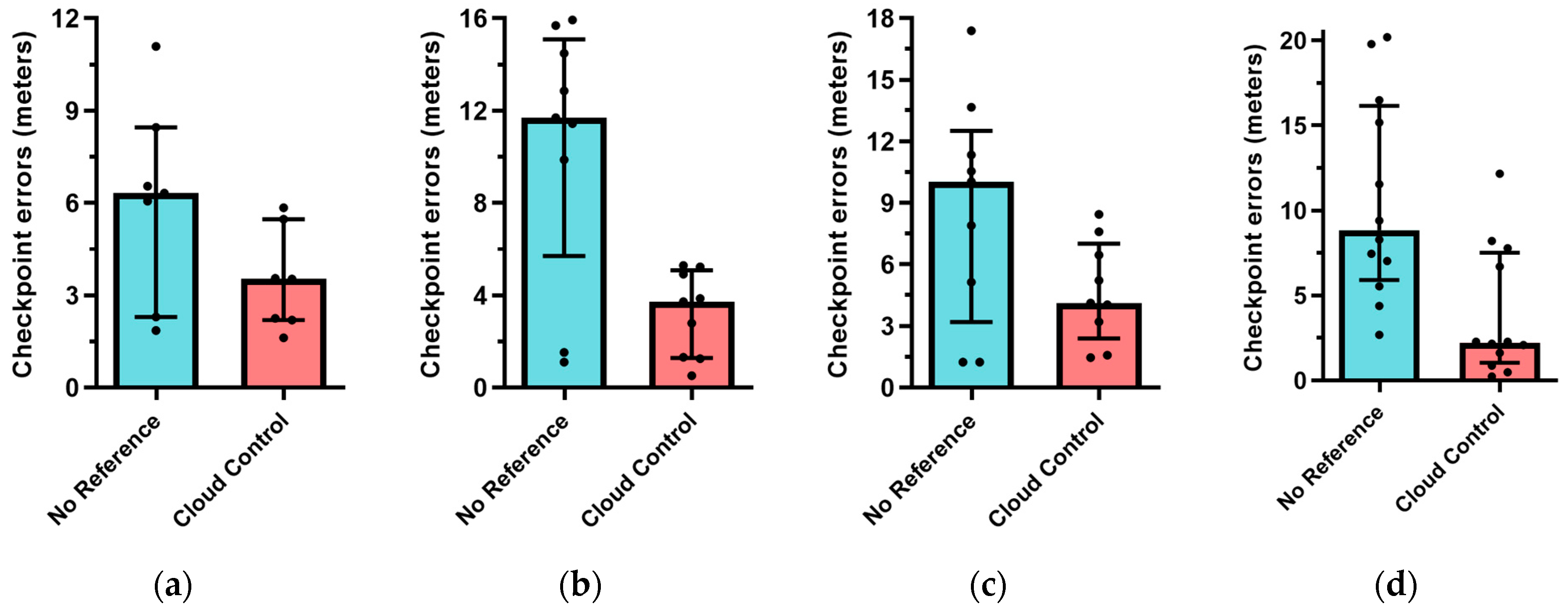

- (a) To quantify the contribution of the cloud masks to the final results, we repeated the entire workflow in all four experimental regions with the cloud-mask constraint deliberately omitted. Comparing tie-point distributions, residual statistics, and orthophoto edging errors between the masked and unmasked runs isolates the effect of the cloud mask on alignment precision and overall orthophoto quality.

- (b) To assess how the cloud-mask generation method affects matching performance, we first generated masks for each experimental region using the NDVI- and NDWI-based cloud detection approach proposed by Huang et al. [27]. We then selected keypoints located over thick clouds to serve as point prompts for SAM-based mask generation. Each set of masks guided the matching and the subsequent orthorectification. The resulting performance metrics are presented in Table 5.
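Ablation (b) contrasts an index-based cloud mask with SAM masks prompted by keypoints over thick clouds. Huang et al.'s exact rules are not reproduced in this excerpt, so the sketch below is hypothetical throughout. The thresholds, the green/NIR form of NDWI, and the brightness cut-off used for "thick" cloud are all assumptions. It first builds a coarse cloud mask from NDVI, NDWI and visible brightness. It then keeps the keypoints that fall on bright cloud pixels and returns them as an `(N, 2)` array of (x, y) coordinates plus an array of foreground labels (1). That is the form Segment Anything's `SamPredictor.predict(point_coords=..., point_labels=...)` accepts. Running SAM itself is left out.

```python
import numpy as np


def index_cloud_mask(blue, green, red, nir, bright_t=0.3, ndvi_t=0.1, ndwi_t=0.1):
    """Coarse cloud mask from reflectance bands (hypothetical thresholds).

    Clouds are assumed bright in the visible bands and spectrally flat, so
    |NDVI| is small; a low NDWI excludes bright water surfaces.
    """
    eps = 1e-6  # avoids division by zero on dark pixels
    ndvi = (nir - red) / (nir + red + eps)
    ndwi = (green - nir) / (green + nir + eps)  # green/NIR form (assumption)
    brightness = (blue + green + red) / 3.0
    return (brightness > bright_t) & (np.abs(ndvi) < ndvi_t) & (ndwi < ndwi_t)


def prompts_from_keypoints(keypoints_xy, cloud_mask, brightness, thick_t=0.5, max_prompts=10):
    """Keep keypoints on bright ("thick") cloud pixels as foreground point prompts."""
    cols = np.round(keypoints_xy[:, 0]).astype(int)
    rows = np.round(keypoints_xy[:, 1]).astype(int)
    h, w = cloud_mask.shape
    inside = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    kp, rows, cols = keypoints_xy[inside], rows[inside], cols[inside]

    thick = cloud_mask[rows, cols] & (brightness[rows, cols] >= thick_t)
    kp, rows, cols = kp[thick], rows[thick], cols[thick]
    order = np.argsort(-brightness[rows, cols], kind="stable")  # brightest first
    coords = kp[order][:max_prompts].astype(np.float32)
    labels = np.ones(len(coords), dtype=np.int64)  # 1 = foreground prompt
    return coords, labels


# Synthetic 20 x 20 scene: vegetation everywhere, plus one uniform cloud block.
blue = np.full((20, 20), 0.05)
green = np.full((20, 20), 0.08)
red = np.full((20, 20), 0.05)
nir = np.full((20, 20), 0.40)
for band in (blue, green, red, nir):
    band[5:15, 5:15] = 0.6

mask = index_cloud_mask(blue, green, red, nir)
brightness = (blue + green + red) / 3.0
keypoints = np.array([[7.0, 7.0], [2.0, 2.0], [12.0, 10.0], [30.0, 30.0]])
coords, labels = prompts_from_keypoints(keypoints, mask, brightness)
```

In this toy scene, the keypoint on vegetation and the one outside the image are dropped, and the two keypoints on the cloud block become prompts.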

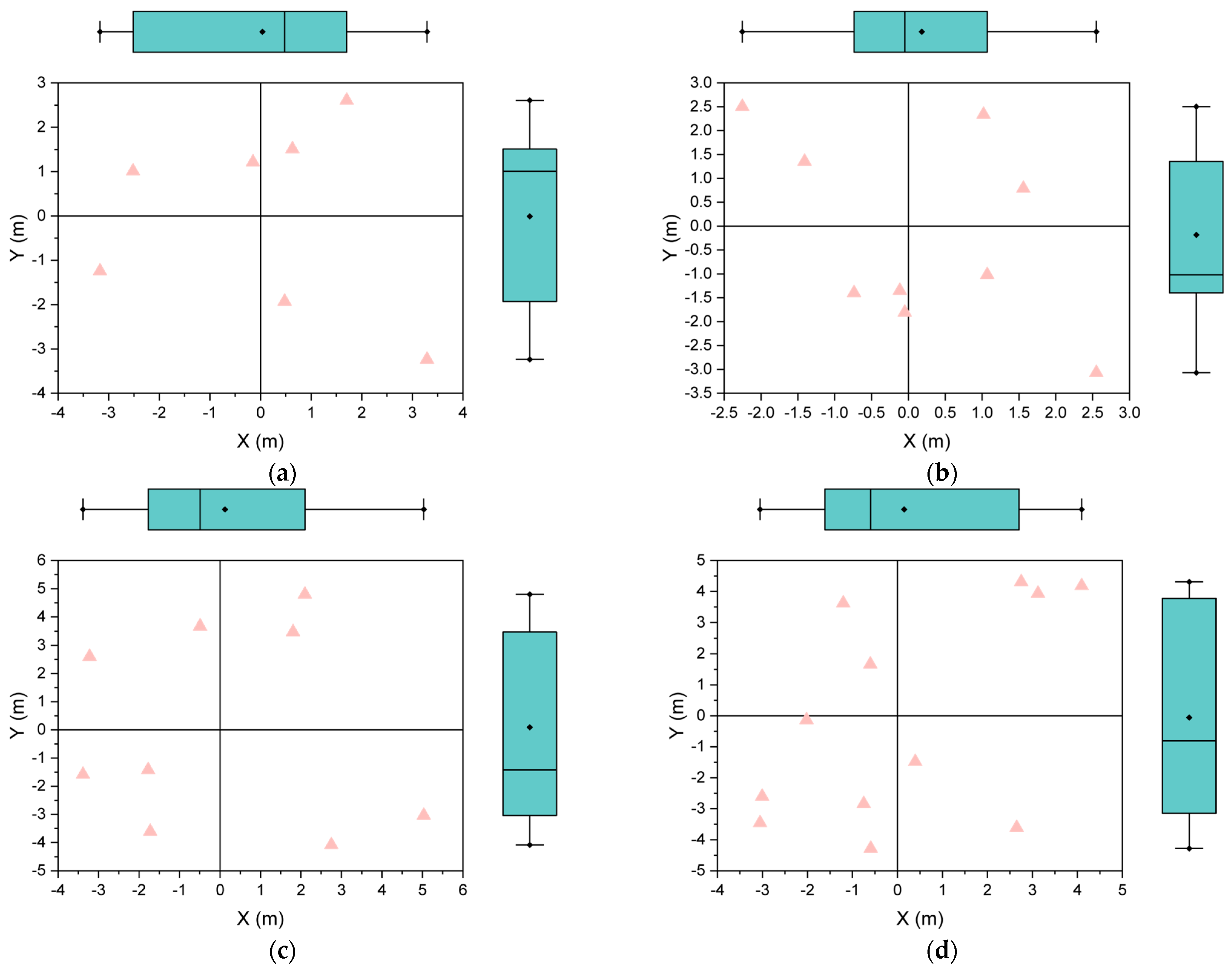

5.3. Evaluation of Absolute Positioning Accuracy

5.4. Limitations and Prospects

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Slough, T.; Kopas, J.; Urpelainen, J. Satellite-Based Deforestation Alerts with Training and Incentives for Patrolling Facilitate Community Monitoring in the Peruvian Amazon. Proc. Natl. Acad. Sci. USA 2021, 118, e2015171118.

- Li, L.; Dong, J.; Njeudeng Tenku, S.; Xiao, X. Mapping Oil Palm Plantations in Cameroon Using PALSAR 50-m Orthorectified Mosaic Images. Remote Sens. 2015, 7, 1206–1224.

- Yin, T.; Guo, W.; Zhu, J.; Wu, Y.; Zhang, B.; Zhou, Z. Underwater Broadband Target Detection by Filtering Scanning Azimuths Based on Features of Subband Peaks. IEEE Sens. J. 2025, 25, 13601–13609.

- Young, A.H.; Knapp, K.R.; Inamdar, A.; Hankins, W.; Rossow, W.B. The International Satellite Cloud Climatology Project H-Series Climate Data Record Product. Earth Syst. Sci. Data 2018, 10, 583–593.

- Mao, K.; Yuan, Z.; Zuo, Z.; Xu, T.; Shen, X.; Gao, C. Changes in Global Cloud Cover Based on Remote Sensing Data from 2003 to 2012. Chin. Geogr. Sci. 2019, 29, 306–315.

- Liu, H.; Koren, I.; Altaratz, O.; Chekroun, M.D. Opposing Trends of Cloud Coverage over Land and Ocean under Global Warming. Atmos. Chem. Phys. 2023, 23, 6559–6569.

- Zhu, J.; Yin, T.; Guo, W.; Zhang, B.; Zhou, Z. An Underwater Target Azimuth Trajectory Enhancement Approach in BTR. Appl. Acoust. 2025, 230, 110373.

- King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and Temporal Distribution of Clouds Observed by MODIS Onboard the Terra and Aqua Satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852.

- Ausherman, D.A.; Kozma, A.; Walker, J.L.; Jones, H.M.; Poggio, E.C. Developments in Radar Imaging. IEEE Trans. Aerosp. Electron. Syst. 1984, AES-20, 363–400.

- Huang, Z.; Zhang, X.; Tang, Z.; Xu, F.; Datcu, M.; Han, J. Generative Artificial Intelligence Meets Synthetic Aperture Radar: A Survey. IEEE Geosci. Remote Sens. Mag. 2025, 62, 2–44.

- Xu, Z.; Tang, B.; Ai, W.; Xie, Z.; Zhu, J. Radar Transceiver Design for Extended Targets Based on Optimal Linear Detector. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 6070–6082.

- Zhu, J.; Song, Y.; Jiang, N.; Xie, Z.; Fan, C.; Huang, X. Enhanced Doppler Resolution and Sidelobe Suppression Performance for Golay Complementary Waveforms. Remote Sens. 2023, 15, 2452.

- Zhang, Y.; Wu, P.; Yao, Y.; Wan, Y.; Zhang, W.; Li, Y.; Yan, X. Multimodal Remote Sensing Image Robust Matching Based on Second-Order Tensor Orientation Feature Transformation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4701314.

- Wang, S.; Han, W.; Huang, X.; Zhang, X.; Wang, L.; Li, J. Trustworthy Remote Sensing Interpretation: Concepts, Technologies, and Applications. ISPRS J. Photogramm. Remote Sens. 2024, 209, 150–172.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep Learning in Remote Sensing Applications: A Meta-Analysis and Review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Huang, M.; Xu, Y.; Qian, L.; Shi, W.; Zhang, Y.; Bao, W.; Wang, N.; Liu, X.; Xiang, X. The QXS-SAROPT Dataset for Deep Learning in SAR-Optical Data Fusion. arXiv 2021, arXiv:2103.08259.

- Zhu, J.; Xie, Z.; Jiang, N.; Song, Y.; Han, S.; Liu, W.; Huang, X. Delay-Doppler Map Shaping through Oversampled Complementary Sets for High-Speed Target Detection. Remote Sens. 2024, 16, 2898.

- Lei, Z.; Feng, Y.; Xi, M.; Tong, X.; Wang, J.; Xie, H.; Xu, X.; Wang, C.; Jin, Y.; Liu, S. High-Precision Geometric Calibration Model for Spaceborne SAR Using Geometrically Constrained GCPs. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Xu, Z.; Tang, B.; Ai, W.; Zhu, J. Relative Entropy Based Jamming Signal Design against Radar Target Detection. IEEE Trans. Signal Process. 2025, 73, 1200–1215.

- Jiang, X.; Ma, J.; Xiao, G.; Shao, Z.; Guo, X. A Review of Multimodal Image Matching: Methods and Applications. Inf. Fusion 2021, 73, 22–71.

- Li, L.; Han, L.; Liu, M.; Gao, K.; He, H.; Wang, L.; Li, J. SAR–Optical Image Matching with Semantic Position Probability Distribution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-Like Algorithm for SAR Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 453–466.

- Cui, S.; Ma, A.; Zhang, L.; Xu, M.; Zhong, Y. MAP-Net: SAR and Optical Image Matching via Image-Based Convolutional Network With Attention Mechanism and Spatial Pyramid Aggregated Pooling. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image Matching from Handcrafted to Deep Features: A Survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Shang, H.; Letu, H.; Xu, R.; Wei, L.; Wu, L.; Shao, J.; Nagao, T.M.; Nakajima, T.Y.; Riedi, J.; He, J.; et al. A Hybrid Cloud Detection and Cloud Phase Classification Algorithm Using Classic Threshold-Based Tests and Extra Randomized Tree Model. Remote Sens. Environ. 2024, 302, 113957.

- Ni, Z.; Wu, M.; Lu, Q.; Huo, H.; Wu, C.; Liu, R.; Wang, F.; Xu, X. A Review of Research on Cloud Detection Methods for Hyperspectral Infrared Radiances. Remote Sens. 2024, 16, 4629.

- Huang, F.; Wang, X.; Nie, G.; Yan, J.; Li, X.; Tian, J.; Zhu, C.; Li, Q.; Tian, Q. Optical Remote Sensing Cloud Detection and Extraction Method in Tropical and Subtropical Vegetation Region. Remote Sens. Nat. Resour. 2024, 1–10. Available online: https://link.cnki.net/urlid/10.1759.P.20240827.1731.014 (accessed on 25 April 2025).

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023.

- Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.M.; Shum, H.-Y. Mask DINO: Towards a Unified Transformer-Based Framework for Object Detection and Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; IEEE: New York, NY, USA, 2023; pp. 3041–3050.

- Wang, J.; Liu, Z.; Zhao, L.; Wu, Z.; Ma, C.; Yu, S.; Dai, H.; Yang, Q.; Liu, Y.; Zhang, S.; et al. Review of Large Vision Models and Visual Prompt Engineering. Meta-Radiol. 2023, 1, 100047.

- Zhu, B.; Zhou, L.; Pu, S.; Fan, J.; Ye, Y. Advances and Challenges in Multimodal Remote Sensing Image Registration. IEEE J. Miniat. Air Space Syst. 2023, 4, 165–174.

- Xiong, Q.; Fang, S.; Peng, Y.; Gong, Y.; Liu, X. Feature Matching of Multimodal Images Based on Nonlinear Diffusion and Progressive Filtering. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7139–7152.

- Li, J.; Hu, Q.; Ai, M. RIFT: Multi-Modal Image Matching Based on Radiation-Variation Insensitive Feature Transform. IEEE Trans. Image Process. 2020, 29, 3296–3310.

- Li, J.; Shi, P.; Hu, Q.; Zhang, Y. RIFT2: Speeding-up RIFT with A New Rotation-Invariance Technique. arXiv 2023, arXiv:2303.00319.

- Yao, Y.; Zhang, Y.; Wan, Y.; Liu, X.; Yan, X.; Li, J. Multi-Modal Remote Sensing Image Matching Considering Co-Occurrence Filter. IEEE Trans. Image Process. 2022, 31, 14.

- Fischer, S.; Šroubek, F.; Perrinet, L.; Redondo, R.; Cristóbal, G. Self-Invertible 2D Log-Gabor Wavelets. Int. J. Comput. Vis. 2007, 75, 231–246.

- Arróspide, J.; Salgado, L. Log-Gabor Filters for Image-Based Vehicle Verification. IEEE Trans. Image Process. 2013, 22, 2286–2295.

- Jevnisek, R.J.; Avidan, S. Co-Occurrence Filter. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21 July 2017; pp. 3816–3824.

- Cui, S.; Ma, A.; Wan, Y.; Zhong, Y.; Luo, B.; Xu, M. Cross-Modality Image Matching Network with Modality-Invariant Feature Representation for Airborne-Ground Thermal Infrared and Visible Datasets. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Liao, Y.; Tao, P.; Chen, Q.; Wang, L.; Ke, T. Highly Adaptive Multi-Modal Image Matching Based on Tuning-Free Filtering and Enhanced Sketch Features. Inf. Fusion 2024, 112, 102599.

- Gao, T.; Lan, C.; Huang, W.; Wang, S. SFA-Net: A SAM-Guided Focused Attention Network for Multimodal Remote Sensing Image Matching. ISPRS J. Photogramm. Remote Sens. 2025, 223, 188–206.

- Xiang, Y.; Jiao, N.; Wang, F.; You, H. A Robust Two-Stage Registration Algorithm for Large Optical and SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Ye, Y.; Yang, C.; Gong, G.; Yang, P.; Quan, D.; Li, J. Robust Optical and SAR Image Matching Using Attention-Enhanced Structural Features. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Xiang, Y.; Wang, X.; Wang, F.; You, H.; Qiu, X.; Fu, K. A Global-to-Local Algorithm for High-Resolution Optical and SAR Image Registration. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–20.

- Ma, J.; Zhao, J.; Jiang, J.; Zhou, H.; Guo, X. Locality Preserving Matching. Int. J. Comput. Vis. 2019, 127, 512–531.

- Bian, J.; Lin, W.-Y.; Matsushita, Y.; Yeung, S.-K.; Nguyen, T.-D.; Cheng, M.-M. GMS: Grid-Based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21 July 2017; IEEE: New York, NY, USA, 2017; pp. 2828–2837.

- Cavalli, L.; Larsson, V.; Oswald, M.R.; Sattler, T.; Pollefeys, M. AdaLAM: Revisiting Handcrafted Outlier Detection. arXiv 2020, arXiv:2006.04250.

- Lindenberger, P.; Sarlin, P.-E.; Pollefeys, M. LightGlue: Local Feature Matching at Light Speed. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1 October 2023; IEEE: New York, NY, USA, 2023; pp. 17581–17592.

- Liu, J.; Li, X. Geometrized Transformer for Self-Supervised Homography Estimation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1 October 2023; IEEE: New York, NY, USA, 2023; pp. 9522–9531.

- Xia, Y.; Jiang, J.; Lu, Y.; Liu, W.; Ma, J. Robust Feature Matching via Progressive Smoothness Consensus. ISPRS J. Photogramm. Remote Sens. 2023, 196, 502–513.

- Huang, J.; Li, H.; Gong, Y.; Fan, F.; Ma, Y.; Du, Q.; Ma, J. Robust Feature Matching via Graph Neighborhood Motion Consensus. IEEE Trans. Multimed. 2024, 26, 9790–9803.

- Lu, Y.; Ma, J.; Mei, X.; Huang, J.; Zhang, X.-P. Feature Matching via Topology-Aware Graph Interaction Model. IEEE/CAA J. Autom. Sin. 2024, 11, 113–130.

- Wu, P.; Yao, Y.; Zhang, W.; Wei, D.; Wan, Y.; Li, Y.; Zhang, Y. MapGlue: Multimodal Remote Sensing Image Matching. arXiv 2025, arXiv:2503.16185.

- Ye, Y.; Wang, Q.; Zhao, H.; Teng, X.; Bian, Y.; Li, Z. Fast and Robust Optical-to-SAR Remote Sensing Image Registration Using Region-Aware Phase Descriptor. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and Robust Matching for Multimodal Remote Sensing Image Registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

- Liao, Y.; Xi, K.; Fu, H.; Wei, L.; Li, S.; Xiong, Q.; Chen, Q.; Tao, P.; Ke, T. Refining Multi-Modal Remote Sensing Image Matching with Repetitive Feature Optimization. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104186.

- Mahajan, S.; Fataniya, B. Cloud Detection Methodologies: Variants and Development—A Review. Complex Intell. Syst. 2020, 6, 251–261.

- Aybar, C.; Mateo-García, G.; Acciarini, G.; Růžička, V.; Meoni, G.; Longépé, N.; Gómez-Chova, L. Onboard Cloud Detection and Atmospheric Correction with Efficient Deep Learning Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19518–19529.

- Foga, S.; Scaramuzza, P.L.; Guo, S.; Zhu, Z.; Dilley, R.D.; Beckmann, T.; Schmidt, G.L.; Dwyer, J.L.; Joseph Hughes, M.; Laue, B. Cloud Detection Algorithm Comparison and Validation for Operational Landsat Data Products. Remote Sens. Environ. 2017, 194, 379–390.

- Gupta, R.; Panchal, P. Advancement of Cloud Detection Algorithm in Satellite Images with Application to Color Models. In Proceedings of the 2015 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 8–10 January 2015; IEEE: New York, NY, USA, 2015; pp. 1–6.

- Tian, P.; Guang, Q.; Liu, X. Cloud Detection from Visual Band of Satellite Image Based on Variance of Fractal Dimension. J. Syst. Eng. Electron. 2019, 30, 485–491.

- Liu, K.; Liao, Y.; Yang, K.; Xi, K.; Chen, Q.; Tao, P.; Ke, T. Efficient Radiometric Triangulation for Aerial Image Consistency across Inter and Intra Variances. Int. J. Appl. Earth Obs. Geoinf. 2024, 130, 103911.

- Jeppesen, J.H.; Jacobsen, R.H.; Inceoglu, F.; Toftegaard, T.S. A Cloud Detection Algorithm for Satellite Imagery Based on Deep Learning. Remote Sens. Environ. 2019, 229, 247–259.

- Li, Y.; Wu, Y.; Li, J.; Sun, A.; Zhang, N.; Liang, Y. A Machine Learning Algorithm Using Texture Features for Nighttime Cloud Detection from FY-3D MERSI L1 Imagery. Remote Sens. 2025, 17, 1083.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 3149–3157.

- Ravi, N.; Gabeur, V.; Hu, Y.-T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. SAM 2: Segment Anything in Images and Videos. arXiv 2024, arXiv:2408.00714.

- Zou, X.; Yang, J.; Zhang, H.; Li, F.; Li, L.; Wang, J.; Wang, L.; Gao, J.; Lee, Y.J. Segment Everything Everywhere All at Once. arXiv 2023, arXiv:2304.06718.

- Zhang, J.; Yang, X.; Jiang, R.; Shao, W.; Zhang, L. RSAM-Seg: A SAM-Based Approach with Prior Knowledge Integration for Remote Sensing Image Semantic Segmentation. Remote Sens. 2025, 17, 590.

- Gao, B. NDWI—A Normalized Difference Water Index for Remote Sensing of Vegetation Liquid Water from Space. Remote Sens. Environ. 1996, 58, 257–266.

- Carlson, T.N.; Ripley, D.A. On the Relation between NDVI, Fractional Vegetation Cover, and Leaf Area Index. Remote Sens. Environ. 1997, 62, 241–252.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN Revisited, Revisited: Why and How You Should (Still) Use DBSCAN. ACM Trans. Database Syst. 2017, 42, 1–21.

- Ye, Y.; Shan, J.; Bruzzone, L.; Shen, L. Robust Registration of Multimodal Remote Sensing Images Based on Structural Similarity. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2941–2958.

- Zhang, Z.; Tao, P. An Overview on “Cloud Control” Photogrammetry in Big Data Era. J. Geod. Geoinf. Sci. 2017, 46, 1238–1248.

| | Region 1 Optical | Region 1 SAR | Region 2 Optical | Region 2 SAR | Region 3 Optical | Region 3 SAR | Region 4 Optical | Region 4 SAR |
|---|---|---|---|---|---|---|---|---|
| Satellite | GF-07 | GF-03B | ZY-303 | GF-03C | ZY-302 | GF-03C | ZY-303 | GF-03C |
| Resolution | 0.67 m | 4.45 m | 2.12 m | 4.34 m | 2.12 m | 4.45 m | 2.12 m | 4.34 m |
| Location | 109.5°E, 1.1°S | 109.5°E, 1.1°S | 20.6°E, 0.9°N | 20.6°E, 0.9°N | 88.6°W, 18.6°N | 88.6°W, 18.6°N | 114.0°E, 1.3°S | 114.0°E, 1.3°S |
| Number of images | 3 | 1 | 4 | 2 | 5 | 1 | 7 | 2 |
| Cloud cover | 33.61% | / | 27.96% | / | 52.77% | / | 43.27% | / |
| Capture time | 2022-04 | 2022-08 | 2025-01 | 2025-02 | 2024-01 | 2024-03 | 2024-03 | 2023-07 |

| Region 1 | Region 2 | Region 3 | Region 4 |
|---|---|---|---|
| 3.52 | 1.21 | 1.35 | 2.26 |
| 3.48 | 1.52 | 1.31 | 2.06 |
| 3.50 | 1.61 | 1.39 | 1.97 |
| 3.49 | 1.68 | 1.38 | 1.96 |

| | Region 1 | Region 2 | Region 3 | Region 4 |
|---|---|---|---|---|
| Tie-point number | 9386 | 16,703 | 7734 | 10,826 |
| Adjustment accuracy (pixels) | 3.48 | 1.52 | 1.31 | 2.06 |
| Number of check points | 7 | 9 | 9 | 12 |
| Edging error (meters) | 2.88 | 2.37 | 4.31 | 4.02 |
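Contribution (3) states that alignment stays sub-pixel, yet the adjustment accuracy in the results table above ranges from 1.31 to 3.48 pixels. The excerpt does not say which pixel size the claim uses. One possible reading is the edging error expressed in SAR pixels, using the SAR resolutions from the dataset table. That reading is our assumption, not a statement from the paper. The arithmetic below performs this conversion for both sensors and re-expresses only the published numbers.

```python
# Region order 1-4. Edging errors (m) from the results table; SAR and optical
# resolutions (m) from the dataset table.
edging_error_m = [2.88, 2.37, 4.31, 4.02]
sar_resolution_m = [4.45, 4.34, 4.45, 4.34]
optical_resolution_m = [0.67, 2.12, 2.12, 2.12]

# Edging error divided by ground sampling distance = error in pixels.
sar_ratios = [e / s for e, s in zip(edging_error_m, sar_resolution_m)]
optical_ratios = [e / o for e, o in zip(edging_error_m, optical_resolution_m)]

for region, (r_sar, r_opt) in enumerate(zip(sar_ratios, optical_ratios), start=1):
    print(f"Region {region}: {r_sar:.2f} SAR pixels, {r_opt:.2f} optical pixels")
```

Under that reading, all four regions fall below one SAR pixel, while every region exceeds one optical pixel. The sub-pixel statement would therefore hold only against the coarser SAR grid.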

| Region | Tie-Point Number (without cloud mask) | Adjustment Accuracy (Pixels, without cloud mask) | Edging Error (Meters, without cloud mask) | Tie-Point Number (with cloud mask) | Adjustment Accuracy (Pixels, with cloud mask) | Edging Error (Meters, with cloud mask) |
|---|---|---|---|---|---|---|
| Region 1 | 9490 | 3.59 | 5.13 | 9386 | 3.48 | 2.88 |
| Region 2 | 24,320 | 1.46 | 2.26 | 16,703 | 1.52 | 2.37 |
| Region 3 | 8316 | 1.43 | 7.42 | 7734 | 1.31 | 4.31 |
| Region 4 | 10,371 | 2.17 | 5.25 | 10,826 | 2.06 | 4.02 |
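To make ablation (a) concrete, the relative changes implied by the with/without cloud-mask comparison can be computed directly. The short script below only re-expresses the published numbers and adds no new results. The helper name `pct_change` is our own.

```python
# Values from the with/without cloud-mask comparison, regions 1-4.
tie_without = [9490, 24320, 8316, 10371]
tie_with = [9386, 16703, 7734, 10826]
edge_without_m = [5.13, 2.26, 7.42, 5.25]
edge_with_m = [2.88, 2.37, 4.31, 4.02]


def pct_change(before, after):
    """Relative change from `before` to `after`, in percent (negative = decrease)."""
    return [100.0 * (a - b) / b for b, a in zip(before, after)]


tie_change = pct_change(tie_without, tie_with)
edge_change = pct_change(edge_without_m, edge_with_m)
for region, (t, e) in enumerate(zip(tie_change, edge_change), start=1):
    print(f"Region {region}: tie points {t:+.1f}%, edging error {e:+.1f}%")
```

By this arithmetic, the cloud mask lowers the edging error by roughly 23–44% in Regions 1, 3 and 4. In Region 2 the edging error rises by about 5%, and the mask also removes about 31% of the tie points there.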

| Mask source | Metric | Region 1 | Region 2 | Region 3 | Region 4 |
|---|---|---|---|---|---|
| NDVI + NDWI | Tie-point number | 9334 | 23,812 | 2562 | 3346 |
| | Adjustment accuracy (pixels) | 3.54 | 1.96 | 1.50 | 2.30 |
| | Edging error (meters) | 3.72 | 2.49 | 9.08 | 9.27 |
| Auto prompt | Tie-point number | 9386 | 16,703 | 7734 | 10,826 |
| | Adjustment accuracy (pixels) | 3.48 | 1.52 | 1.31 | 2.06 |
| | Edging error (meters) | 2.88 | 2.37 | 4.31 | 4.02 |
| Manual prompt | Tie-point number | 9496 | 26,709 | 9380 | 11,912 |
| | Adjustment accuracy (pixels) | 2.96 | 1.17 | 1.28 | 1.85 |
| | Edging error (meters) | 2.90 | 1.98 | 3.12 | 3.04 |
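Ablation (b) claims that automatic prompts perform comparably to manual ones. One simple way to summarise the mask comparison is the mean edging error per mask source across the four regions. Averaging across regions with different resolutions is our simplification, not a metric used in the paper.

```python
from statistics import mean

# Edging errors (m) per mask source from the mask comparison, regions 1-4.
edging_error_m = {
    "NDVI + NDWI": [3.72, 2.49, 9.08, 9.27],
    "Auto prompt": [2.88, 2.37, 4.31, 4.02],
    "Manual prompt": [2.90, 1.98, 3.12, 3.04],
}
mean_error = {name: mean(vals) for name, vals in edging_error_m.items()}

# Print from best (lowest mean error) to worst.
for name, value in sorted(mean_error.items(), key=lambda kv: kv[1]):
    print(f"{name}: mean edging error {value:.3f} m")
```

On these averages the automatic prompts fall between the two alternatives. They trail manual prompts by about 0.6 m and improve on the index-based mask by about 2.7 m.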

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liao, Y.; Li, S.; Gao, M.; Li, S.; Qin, W.; Xiong, Q.; Lin, C.; Chen, Q.; Tao, P. Optical and SAR Image Registration in Equatorial Cloudy Regions Guided by Automatically Point-Prompted Cloud Masks. Remote Sens. 2025, 17, 2630. https://doi.org/10.3390/rs17152630

Liao Y, Li S, Gao M, Li S, Qin W, Xiong Q, Lin C, Chen Q, Tao P. Optical and SAR Image Registration in Equatorial Cloudy Regions Guided by Automatically Point-Prompted Cloud Masks. Remote Sensing. 2025; 17(15):2630. https://doi.org/10.3390/rs17152630

Chicago/Turabian Style: Liao, Yifan, Shuo Li, Mingyang Gao, Shizhong Li, Wei Qin, Qiang Xiong, Cong Lin, Qi Chen, and Pengjie Tao. 2025. "Optical and SAR Image Registration in Equatorial Cloudy Regions Guided by Automatically Point-Prompted Cloud Masks" Remote Sensing 17, no. 15: 2630. https://doi.org/10.3390/rs17152630

APA Style: Liao, Y., Li, S., Gao, M., Li, S., Qin, W., Xiong, Q., Lin, C., Chen, Q., & Tao, P. (2025). Optical and SAR Image Registration in Equatorial Cloudy Regions Guided by Automatically Point-Prompted Cloud Masks. Remote Sensing, 17(15), 2630. https://doi.org/10.3390/rs17152630