Estimation of Leaf, Spike, Stem and Total Biomass of Winter Wheat Under Water-Deficit Conditions Using UAV Multimodal Data and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

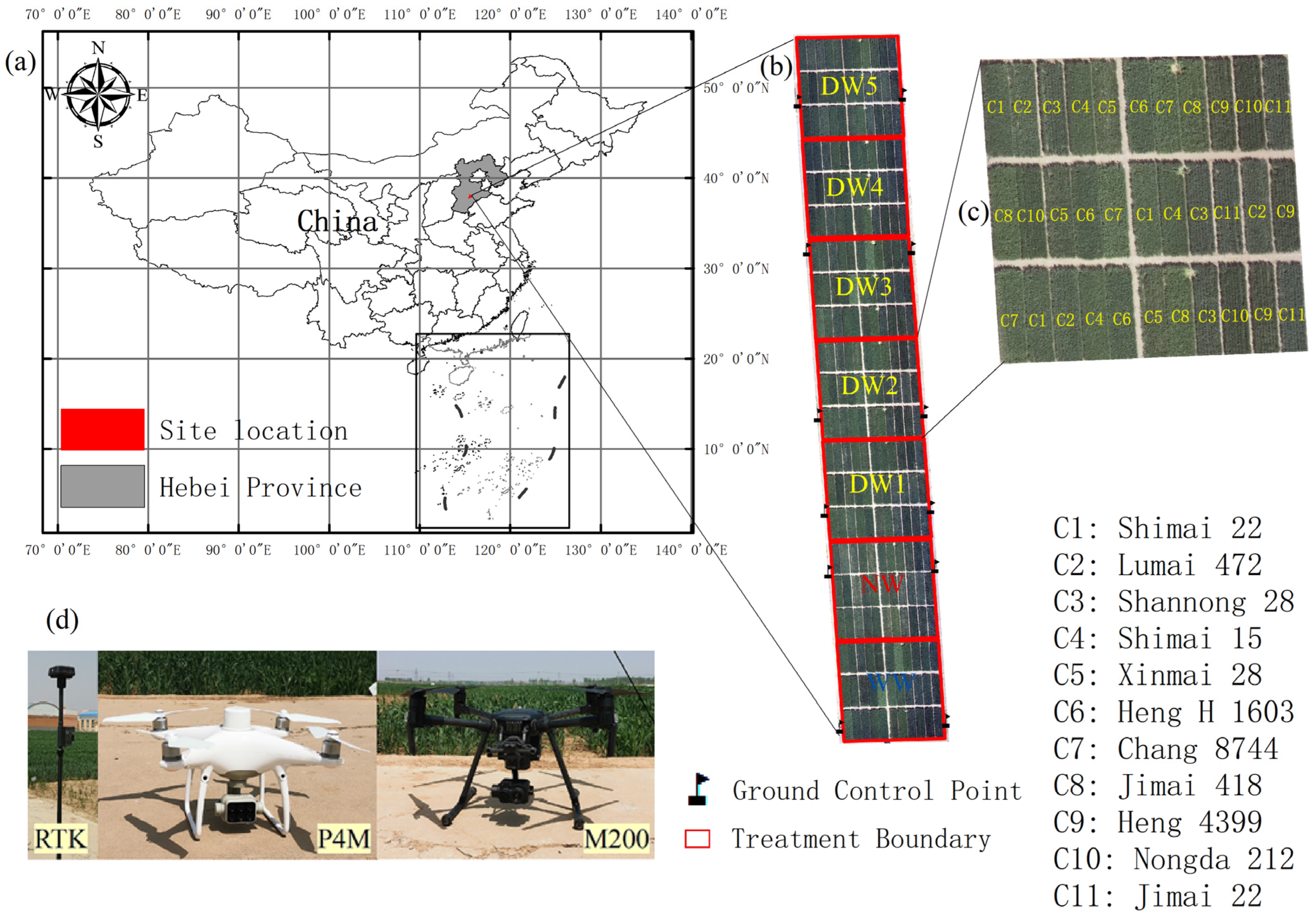

2.1. Experimental Design

2.2. Data Acquisition

2.3. Image Processing

2.4. Calculation of the Leaf, Spike, Stem, and Total Biomass

2.5. Canopy Feature Extraction and Selection

2.6. Modeling and Evaluation

3. Results

3.1. Measured Biomass of Winter Wheat

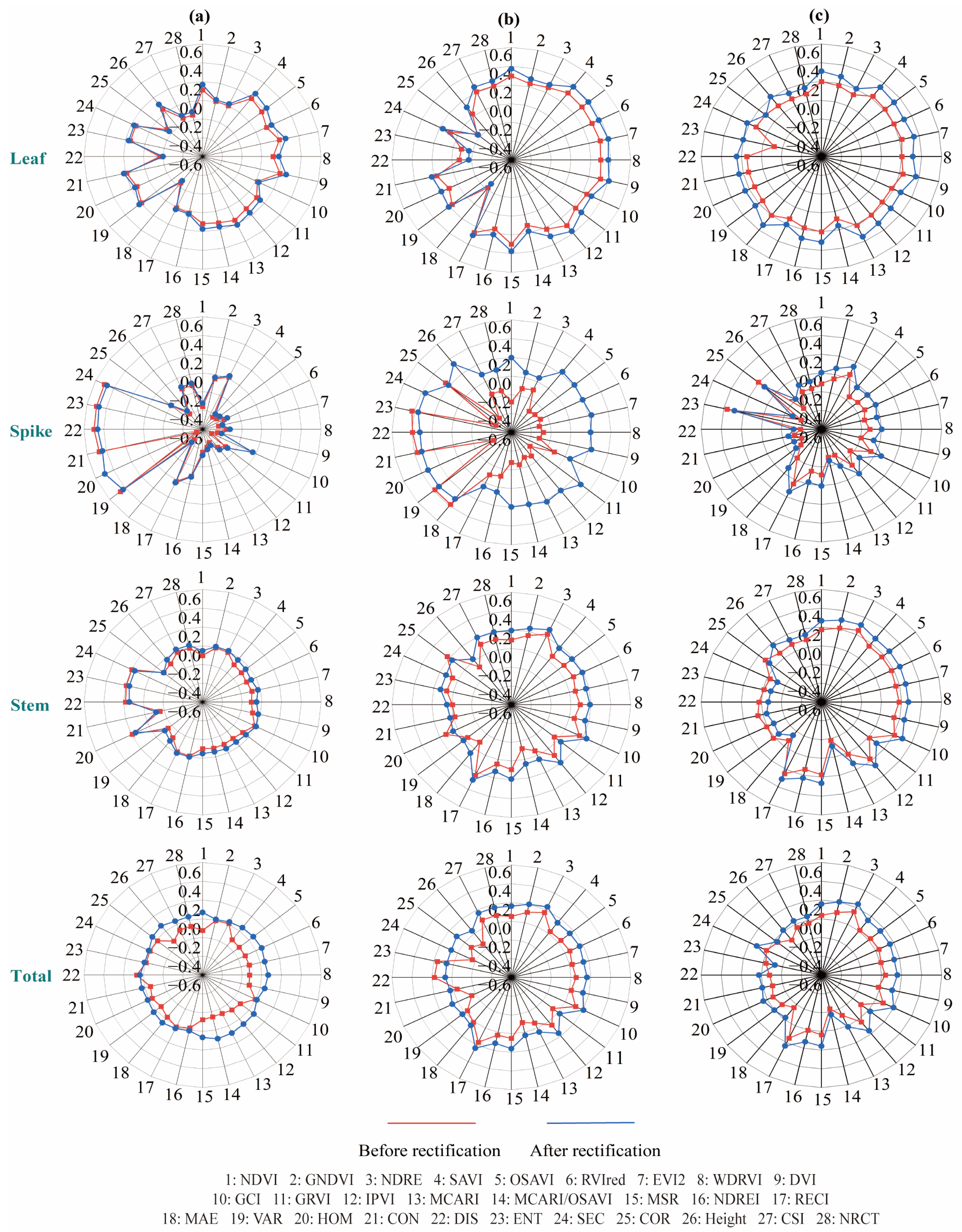

3.2. Correlation Between Biomass Before and After Rectification and Indices

3.3. Evaluation of Leaf, Spike, Stem, and Total Biomass

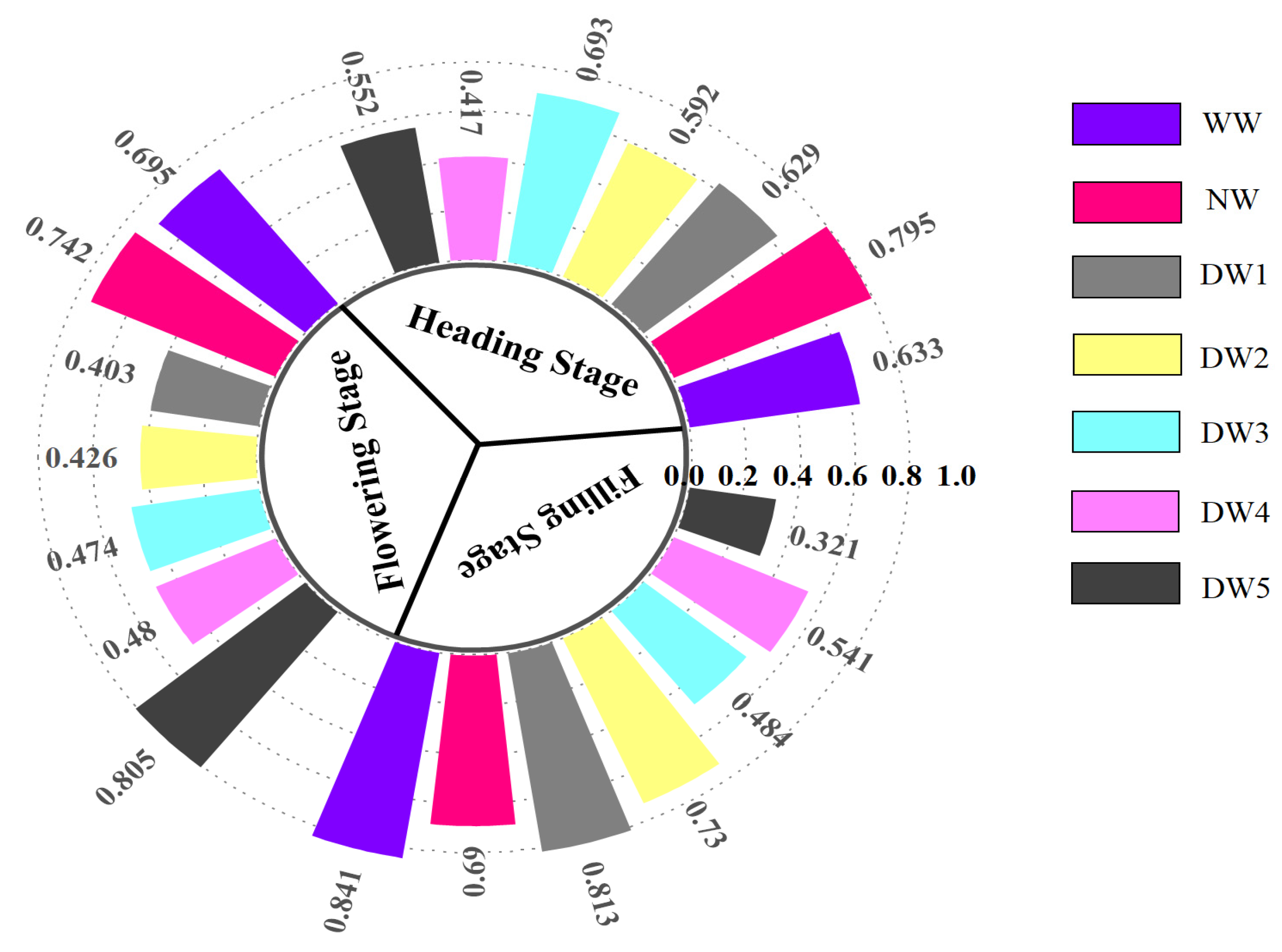

3.4. Evaluation of Leaf Biomass Under Different Treatments

4. Discussion

4.1. The Rectified Biomass Improved the Representation of the Plot

4.2. Comparison of Leaf, Spike, Stem and Total Biomass Results

4.3. Comparison of Different Machine Learning Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhao, Y.; Han, S.; Zheng, J.; Xue, H.; Li, Z.; Meng, Y.; Li, X.; Yang, X.; Li, Z.; Cai, S.; et al. ChinaWheatYield30m: A 30 m annual winter wheat yield dataset from 2016 to 2021 in China. Earth Syst. Sci. Data 2023, 15, 4047–4063.
2. Fang, Q.; Zhang, X.; Shao, L.; Chen, S.; Sun, H. Assessing the performance of different irrigation systems on winter wheat under limited water supply. Agric. Water Manag. 2018, 196, 133–143.
3. Becker, E.; Schmidhalter, U. Evaluation of Yield and Drought Using Active and Passive Spectral Sensing Systems at the Reproductive Stage in Wheat. Front. Plant Sci. 2017, 8, 379.
4. Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.
5. Uto, K.; Seki, H.; Saito, G.; Kosugi, Y. Characterization of Rice Paddies by a UAV-Mounted Miniature Hyperspectral Sensor System. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 851–860.
6. Hu, X.; Caturegli, L.; Corniglia, M.; Gaetani, M.; Grossi, N.; Magni, S.; Migliazzi, M.; Angelini, L.; Mazzoncini, M.; Silvestri, N.; et al. Unmanned Aerial Vehicle to Estimate Nitrogen Status of Turfgrasses. PLoS ONE 2016, 11, e0158268.
7. Liu, Y.; Liu, S.; Li, J.; Guo, X.; Wang, S.; Lu, J. Estimating biomass of winter oilseed rape using vegetation indices and texture metrics derived from UAV multispectral images. Comput. Electron. Agric. 2019, 166, 105026.
8. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.
9. Zhang, J.; Tian, H.; Wang, D.; Li, H.; Mouazen, A.M. A Novel Approach for Estimation of Above-Ground Biomass of Sugar Beet Based on Wavelength Selection and Optimized Support Vector Machine. Remote Sens. 2020, 12, 620.
10. Zolkos, S.G.; Goetz, S.J.; Dubayah, R. A meta-analysis of terrestrial aboveground biomass estimation using lidar remote sensing. Remote Sens. Environ. 2013, 128, 289–298.
11. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.
12. Liu, Y.; Fan, Y.; Feng, H.; Chen, R.; Bian, M.; Ma, Y.; Yue, J.; Yang, G. Estimating potato above-ground biomass based on vegetation indices and texture features constructed from sensitive bands of UAV hyperspectral imagery. Comput. Electron. Agric. 2024, 220, 108918.
13. Ma, J.; Liu, B.; Ji, L.; Zhu, Z.; Wu, Y.; Jiao, W. Field-scale yield prediction of winter wheat under different irrigation regimes based on dynamic fusion of multimodal UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103293.
14. Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2018, 20, 611–629.
15. Atkinson Amorim, J.G.; Schreiber, L.V.; de Souza, M.R.Q.; Negreiros, M.; Susin, A.; Bredemeier, C.; Trentin, C.; Vian, A.L.; de Oliveira Andrades-Filho, C.; Doering, D.; et al. Biomass estimation of spring wheat with machine learning methods using UAV-based multispectral imaging. Int. J. Remote Sens. 2022, 43, 4758–4773.
16. Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: A comparison with traditional machine learning algorithms. Precis. Agric. 2022, 24, 92–113.
17. Zhu, W.; Sun, Z.; Peng, J.; Huang, Y.; Li, J.; Zhang, J.; Yang, B.; Liao, X. Estimating Maize Above-Ground Biomass Using 3D Point Clouds of Multi-Source Unmanned Aerial Vehicle Data at Multi-Spatial Scales. Remote Sens. 2019, 11, 2678.
18. Yue, J.; Yang, H.; Yang, G.; Fu, Y.; Wang, H.; Zhou, C. Estimating vertically growing crop above-ground biomass based on UAV remote sensing. Comput. Electron. Agric. 2023, 205, 107627.
19. Vahidi, M.; Shafian, S.; Thomas, S.; Maguire, R. Pasture Biomass Estimation Using Ultra-High-Resolution RGB UAVs Images and Deep Learning. Remote Sens. 2023, 15, 5714.
20. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.
21. De Rosa, D.; Basso, B.; Fasiolo, M.; Friedl, J.; Fulkerson, B.; Grace, P.R.; Rowlings, D.W. Predicting pasture biomass using a statistical model and machine learning algorithm implemented with remotely sensed imagery. Comput. Electron. Agric. 2021, 180, 105880.
22. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Jin, X.; Song, X.; Yang, H.; Yang, G. Estimation of Potato Above-Ground Biomass Based on Vegetation Indices and Green-Edge Parameters Obtained from UAVs. Remote Sens. 2022, 14, 5323.
23. Wei, L.; Yang, H.; Niu, Y.; Zhang, Y.; Xu, L.; Chai, X. Wheat biomass, yield, and straw-grain ratio estimation from multi-temporal UAV-based RGB and multispectral images. Biosyst. Eng. 2023, 234, 187–205.
24. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Fan, Y.; Chen, R.; Bian, M.; Ma, Y.; Song, X.; Yang, G. Improved potato AGB estimates based on UAV RGB and hyperspectral images. Comput. Electron. Agric. 2023, 214, 108260.
25. Liu, Y.; Feng, H.; Yue, J.; Li, Z.; Yang, G.; Song, X.; Yang, X.; Zhao, Y. Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 2022, 198, 107089.
26. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.
27. Næsset, E.; McRoberts, R.E.; Pekkarinen, A.; Saatchi, S.; Santoro, M.; Trier, Ø.D.; Zahabu, E.; Gobakken, T. Use of local and global maps of forest canopy height and aboveground biomass to enhance local estimates of biomass in miombo woodlands in Tanzania. Int. J. Appl. Earth Obs. Geoinf. 2020, 93, 102138.
28. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.
29. Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.
30. Wan, L.; Zhu, J.; Du, X.; Zhang, J.; Han, X.; Zhou, W.; Li, X.; Liu, J.; Liang, F.; He, Y.; et al. A model for phenotyping crop fractional vegetation cover using imagery from unmanned aerial vehicles. J. Exp. Bot. 2021, 72, 4691–4707.
31. Yan, G.; Li, L.; Coy, A.; Mu, X.; Chen, S.; Xie, D.; Zhang, W.; Shen, Q.; Zhou, H. Improving the estimation of fractional vegetation cover from UAV RGB imagery by colour unmixing. ISPRS J. Photogramm. Remote Sens. 2019, 158, 23–34.
32. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.
33. Saunders, C.; Stitson Mo, W.J.; Bottou, L.S.B.; Smola, A. Support Vector Machine—Reference Manual; Department of Computer Science, Royal Holloway, University of London: Amsterdam, The Netherlands, 1998.
34. Ding, S.F.; Jia, W.K.; Su, C.Y.; Zhang, L.W.; Shi, Z.Z. Neural Network Research Progress and Applications in Forecast. In Proceedings of the 5th International Symposium on Neural Networks, Beijing, China, 24–28 September 2008; pp. 783–793.
35. Wu, Y.; Ma, J.; Zhang, W.; Sun, L.; Liu, Y.; Liu, B.; Wang, B.; Chen, Z. Rapid evaluation of drought tolerance of winter wheat cultivars under water-deficit conditions using multi-criteria comprehensive evaluation based on UAV multispectral and thermal images and automatic noise removal. Comput. Electron. Agric. 2024, 218, 108679.
36. Jiang, J.; Zheng, H.; Ji, X.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Ehsani, R.; Yao, X. Analysis and Evaluation of the Image Preprocessing Process of a Six-Band Multispectral Camera Mounted on an Unmanned Aerial Vehicle for Winter Wheat Monitoring. Sensors 2019, 19, 747.
37. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS, Section A. In Proceedings of the NASA Goddard Space Flight Center 3d ERTS-1 Symposium, Greenbelt, MD, USA, 1 January 1974; Volume 1.
38. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.
39. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.
40. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.
41. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.
42. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.
43. Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.
44. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, 8.
45. Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.
46. Hassan, M.A.; Yang, M.; Rasheed, A.; Jin, X.; Xia, X.; Xiao, Y.; He, Z. Time-Series Multispectral Indices from Unmanned Aerial Vehicle Imagery Reveal Senescence Rate in Bread Wheat. Remote Sens. 2018, 10, 809.
47. Daughtry, C.S.T.; Walthall, C.L.; Kim, M.S.; de Colstoun, E.B.; McMurtrey, J.E. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.
48. Crippen, R.E. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73.
49. Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 2014, 22, 229–242.
50. Su, H.; Sheng, Y.; Du, P.; Chen, C.; Liu, K. Hyperspectral image classification based on volumetric texture and dimensionality reduction. Front. Earth Sci. 2015, 9, 225–236.
51. Das, S.; Christopher, J.; Apan, A.; Choudhury, M.R.; Chapman, S.; Menzies, N.W.; Dang, Y.P. Evaluation of water status of wheat genotypes to aid prediction of yield on sodic soils using UAV-thermal imaging and machine learning. Agric. For. Meteorol. 2021, 307, 108477.
52. Salmeron, R.; Garcia, C.B.; Garcia, J. Variance Inflation Factor and Condition Number in multiple linear regression. J. Stat. Comput. Simul. 2018, 88, 2365–2384.
53. Wang, Z.; Ma, Y.; Chen, P.; Yang, Y.; Fu, H.; Yang, F.; Raza, M.A.; Guo, C.; Shu, C.; Sun, Y.; et al. Estimation of Rice Aboveground Biomass by Combining Canopy Spectral Reflectance and Unmanned Aerial Vehicle-Based Red Green Blue Imagery Data. Front. Plant Sci. 2022, 13, 903643.
54. Derraz, R.; Melissa Muharam, F.; Nurulhuda, K.; Ahmad Jaafar, N.; Keng Yap, N. Ensemble and single algorithm models to handle multicollinearity of UAV vegetation indices for predicting rice biomass. Comput. Electron. Agric. 2023, 205, 107621.
55. Zhang, Y.; Xia, C.; Zhang, X.; Cheng, X.; Feng, G.; Wang, Y.; Gao, Q. Estimating the maize biomass by crop height and narrowband vegetation indices derived from UAV-based hyperspectral images. Ecol. Indic. 2021, 129, 107985.
56. Wang, L.a.; Zhou, X.; Zhu, X.; Dong, Z.; Guo, W. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219.
57. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2022, 24, 187–212.
58. Aghighi, H.; Azadbakht, M.; Ashourloo, D.; Shahrabi, H.S.; Radiom, S. Machine Learning Regression Techniques for the Silage Maize Yield Prediction Using Time-Series Images of Landsat 8 OLI. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4563–4577.
59. Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving Soybean Leaf Area Index from Unmanned Aerial Vehicle Hyperspectral Remote Sensing: Analysis of RF, ANN, and SVM Regression Models. Remote Sens. 2017, 9, 309.

| Treatment | Supplemental Irrigation Schedule | Growth Stage Irrigated | Replicates |
|---|---|---|---|
| T1 | 3 April 2021 + 3 May 2021 | Jointing + flowering | 3 |
| T2 | None | No irrigation | 3 |
| T3 | 29 November 2020 | Wintering | 3 |
| T4 | 10 March 2021 | Green-up | 3 |
| T5 | 3 April 2021 | Jointing | 3 |
| T6 | 10 April 2021 | 7 days after jointing | 3 |
| T7 | 18 April 2021 | 14 days after jointing | 3 |

| Vegetation Index | Name | Formula | References |
|---|---|---|---|
| NDVI | Normalized difference vegetation index | (NIR − R)/(NIR + R) | [37] |
| GNDVI | Green normalized difference vegetation index | (NIR − G)/(NIR + G) | [38] |
| SAVI | Soil adjusted vegetation index | 1.5 × (NIR − R)/(NIR + R + 0.5) | [39] |
| OSAVI | Optimized soil adjusted vegetation index | (NIR − R)/(NIR + R + 0.16) | [40] |
| RVI | Ratio vegetation index | NIR/R | [41] |
| EVI2 | Two-band enhanced vegetation index | 2.5 × (NIR − R)/(NIR + 2.4 × R + 1) | [42] |
| WDRVI | Wide dynamic range vegetation index | (0.12 × NIR − R)/(0.12 × NIR + R) | [43] |
| DVI | Difference vegetation index | NIR − R | [41] |
| GCI | Green chlorophyll index | NIR/G − 1 | [44] |
| RECI | Red-edge chlorophyll index | NIR/RE − 1 | [44] |
| GRVI | Green–red vegetation index | (G − R)/(G + R) | [41] |
| NDRE | Normalized difference red-edge | (NIR − RE)/(NIR + RE) | [45] |
| NDREI | Normalized difference red-edge index | (RE − G)/(RE + G) | [46] |
| MCARI | Modified chlorophyll absorption in reflectance index | ((RE − R) − 0.2 × (RE − G)) × (RE/R) | [47] |
| MCARI/OSAVI | MCARI/OSAVI | MCARI/OSAVI | [47] |
| IPVI | Infrared percentage vegetation index | NIR/(NIR + R) | [48] |
| MSR | Modified simple ratio | (NIR/R − 1)/(√(NIR/R) + 1) | [49] |

NIR, R, G, and RE denote reflectance in the near-infrared, red, green, and red-edge bands, respectively.
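
The index formulas tabulated above can be evaluated directly from band reflectances. The following is a minimal sketch in plain Python, assuming the standard published definitions of each index and per-plot mean reflectances in the near-infrared, red, green, and red-edge bands. The function name, argument names, and the sample reflectances are hypothetical and are not taken from the study.

```python
import math


def vegetation_indices(nir: float, red: float, green: float, red_edge: float) -> dict:
    """Compute a subset of the tabulated indices from band reflectances (0-1 scale)."""
    ratio = nir / red                                   # RVI, reused by MSR
    osavi = (nir - red) / (nir + red + 0.16)            # standard OSAVI form
    mcari = ((red_edge - red) - 0.2 * (red_edge - green)) * (red_edge / red)
    return {
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),
        "OSAVI": osavi,
        "RVI": ratio,
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1),
        "DVI": nir - red,
        "GCI": nir / green - 1,
        "RECI": nir / red_edge - 1,
        "GRVI": (green - red) / (green + red),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "MCARI/OSAVI": mcari / osavi,
        "IPVI": nir / (nir + red),
        "MSR": (ratio - 1) / (math.sqrt(ratio) + 1),
    }


# Illustrative reflectances only; these are not measurements from the experiment.
indices = vegetation_indices(nir=0.45, red=0.05, green=0.08, red_edge=0.20)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In practice these would be computed per pixel on the calibrated reflectance rasters and then averaged within each plot; the scalar version here only illustrates the arithmetic.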

| Growth Stage | Sector | Number of Samples | Minimum | Maximum | Mean | Standard Deviation | Coefficient of Variation (%) |
|---|---|---|---|---|---|---|---|
| Heading | Leaf | 231 | 1.955 | 5.417 | 3.404 | 0.714 | 20.97 |
| Heading | Spike | 231 | 0.921 | 5.684 | 2.604 | 0.833 | 31.98 |
| Heading | Stem | 231 | 3.613 | 14.79 | 8.611 | 2.097 | 24.35 |
| Heading | Total | 231 | 7.976 | 24.662 | 14.619 | 3.268 | 22.35 |
| Flowering | Leaf | 231 | 1.824 | 6.699 | 3.726 | 0.936 | 25.13 |
| Flowering | Spike | 231 | 1.547 | 8.01 | 3.761 | 1.205 | 32.05 |
| Flowering | Stem | 231 | 5.115 | 21.209 | 10.491 | 2.773 | 26.43 |
| Flowering | Total | 231 | 9.161 | 34.59 | 18.007 | 4.522 | 25.11 |
| Filling | Leaf | 231 | 1.784 | 4.963 | 3.141 | 0.636 | 20.26 |
| Filling | Spike | 231 | 4.675 | 17.74 | 10.224 | 2.692 | 26.33 |
| Filling | Stem | 231 | 4.345 | 14.886 | 9.399 | 2.157 | 22.95 |
| Filling | Total | 231 | 11.599 | 35.594 | 22.763 | 4.923 | 21.63 |
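
The coefficient of variation in the last column of the table above is the standard deviation expressed as a percentage of the mean. A minimal sketch, assuming the sample standard deviation (n − 1 denominator) was used, which the extracted text does not confirm; the function name and the three-value sample are hypothetical.

```python
import statistics


def cv_percent(values):
    """Coefficient of variation (%) = standard deviation / mean * 100."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # assumption: sample SD with n - 1 denominator
    return sd / mean * 100


# Hypothetical biomass values, for illustration only.
cv_example = cv_percent([2.0, 4.0, 6.0])

# Cross-check using the rounded summary statistics reported for
# heading-stage leaf biomass (standard deviation 0.714, mean 3.404).
cv_from_summary = 0.714 / 3.404 * 100

print(f"example CV: {cv_example:.2f}%")
print(f"heading-stage leaf CV from summary values: {cv_from_summary:.2f}%")
```

The value recomputed from the rounded mean and standard deviation can differ from the tabulated one in the last digit, because the table was presumably derived from unrounded data.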

| Stage | Sector | Indicators |
|---|---|---|
| Heading | Leaf | , GRVI, MCARI/OSAVI, MAE(), VAR(), CON(), COR(), HOM() |
| Heading | Spike | NDVI, GRVI, MCARI/OSAVI, MAE(), VAR(), HOM(), COR(), Height |
| Heading | Stem | MAE(), VAR(), DIS(), SEM(), COR() |
| Heading | Total | / |
| Flowering | Leaf | GRVI, NDREI, RECI, MAE(), VAR(), DIS(), SEM(), COR(), CSI |
| Flowering | Spike | NDVI, MCARI/OSAVI, MAE(), VAR(), SEM(), COR(), Height, NRCT |
| Flowering | Stem | DVI, GCI, RECI, COR(), CSI |
| Flowering | Total | DVI, GCI, RECI, CSI |
| Filling | Leaf | DVI, GRVI, MCARI/OSAVI, VAR(), HOM(), ENT(), COR(), Height, CSI |
| Filling | Spike | MCARI/OSAVI, RECI, CON(), ENT(), Height |
| Filling | Stem | DVI, MCARI/OSAVI, MAE(), SEM(), Height, CSI |
| Filling | Total | IPVI, MCARI/OSAVI, NDREI |

| Flight Date | Model | Sector | Train R² | Train RMSE | Train MAE | Test R² | Test RMSE | Test MAE |
|---|---|---|---|---|---|---|---|---|
| April 28 | RF | Leaf | 0.552 | 0.097 | 0.073 | 0.605 | 0.09 | 0.068 |
| April 28 | RF | Spike | 0.493 | 0.106 | 0.08 | 0.493 | 0.106 | 0.081 |
| April 28 | RF | Stem | 0.381 | 0.307 | 0.226 | 0.327 | 0.377 | 0.271 |
| April 28 | RF | Total | / | / | / | / | / | / |
| April 28 | SVM | Leaf | 0.419 | 0.109 | 0.08 | 0.575 | 0.079 | 0.062 |
| April 28 | SVM | Spike | 0.56 | 0.09 | 0.071 | 0.572 | 0.091 | 0.069 |
| April 28 | SVM | Stem | 0.116 | 0.37 | 0.277 | 0.114 | 0.4 | 0.302 |
| April 28 | SVM | Total | / | / | / | / | / | / |
| April 28 | NN | Leaf | 0.55 | 0.094 | 0.072 | 0.66 | 0.08 | 0.055 |
| April 28 | NN | Spike | 0.548 | 0.098 | 0.069 | 0.654 | 0.098 | 0.072 |
| April 28 | NN | Stem | 0.303 | 0.333 | 0.269 | 0.276 | 0.339 | 0.278 |
| April 28 | NN | Total | / | / | / | / | / | / |
| May 12 | RF | Leaf | 0.653 | 0.106 | 0.076 | 0.709 | 0.114 | 0.091 |
| May 12 | RF | Spike | 0.578 | 0.137 | 0.107 | 0.666 | 0.154 | 0.117 |
| May 12 | RF | Stem | 0.615 | 0.336 | 0.258 | 0.616 | 0.302 | 0.242 |
| May 12 | RF | Total | 0.442 | 0.604 | 0.463 | 0.445 | 0.676 | 0.516 |
| May 12 | SVM | Leaf | 0.526 | 0.129 | 0.105 | 0.557 | 0.117 | 0.099 |
| May 12 | SVM | Spike | 0.371 | 0.167 | 0.128 | 0.458 | 0.154 | 0.125 |
| May 12 | SVM | Stem | 0.149 | 0.452 | 0.343 | 0.13 | 0.52 | 0.39 |
| May 12 | SVM | Total | 0.144 | 0.733 | 0.543 | 0.076 | 0.854 | 0.681 |
| May 12 | NN | Leaf | 0.659 | 0.102 | 0.073 | 0.65 | 0.116 | 0.072 |
| May 12 | NN | Spike | 0.557 | 0.14 | 0.102 | 0.572 | 0.139 | 0.109 |
| May 12 | NN | Stem | 0.468 | 0.355 | 0.29 | 0.551 | 0.401 | 0.328 |
| May 12 | NN | Total | 0.21 | 0.74 | 0.59 | 0.179 | 0.665 | 0.54 |
| May 21 | RF | Leaf | 0.57 | 0.087 | 0.064 | 0.588 | 0.071 | 0.066 |
| May 21 | RF | Spike | 0.454 | 0.343 | 0.266 | 0.449 | 0.373 | 0.296 |
| May 21 | RF | Stem | 0.436 | 0.304 | 0.233 | 0.473 | 0.332 | 0.288 |
| May 21 | RF | Total | 0.331 | 0.742 | 0.591 | 0.258 | 0.725 | 0.563 |
| May 21 | SVM | Leaf | 0.507 | 0.086 | 0.076 | 0.466 | 0.094 | 0.08 |
| May 21 | SVM | Spike | 0.245 | 0.403 | 0.302 | 0.237 | 0.441 | 0.356 |
| May 21 | SVM | Stem | 0.378 | 0.306 | 0.246 | 0.419 | 0.352 | 0.282 |
| May 21 | SVM | Total | 0.094 | 0.849 | 0.698 | 0.102 | 0.856 | 0.707 |
| May 21 | NN | Leaf | 0.621 | 0.078 | 0.053 | 0.648 | 0.065 | 0.046 |
| May 21 | NN | Spike | 0.458 | 0.348 | 0.269 | 0.458 | 0.34 | 0.243 |
| May 21 | NN | Stem | 0.454 | 0.3 | 0.249 | 0.432 | 0.301 | 0.249 |
| May 21 | NN | Total | 0.193 | 0.787 | 0.653 | 0.213 | 0.873 | 0.77 |
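
The three accuracy metrics reported in the table above can be computed from measured and predicted biomass as sketched below. This is a minimal plain-Python illustration, assuming R² is the coefficient of determination (1 − SS_res/SS_tot); the extracted text does not state which definition was used. The function name and the sample values are hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, and MAE for paired measured and predicted values."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)               # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    return {
        "R2": 1 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in residuals) / n,
    }


# Hypothetical measured and predicted values, for illustration only.
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
print(metrics)
```

RMSE and MAE carry the units of the biomass measurements, so they are comparable across models for one sector but not across sectors with different biomass ranges.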


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Zhang, W.; Wu, Y.; Ma, J.; Zhang, Y.; Liu, B. Estimation of Leaf, Spike, Stem and Total Biomass of Winter Wheat Under Water-Deficit Conditions Using UAV Multimodal Data and Machine Learning. Remote Sens. 2025, 17, 2562. https://doi.org/10.3390/rs17152562
