Local Information-Driven Hierarchical Fusion of SAR and Visible Images via Refined Modal Salient Features

Abstract

1. Introduction

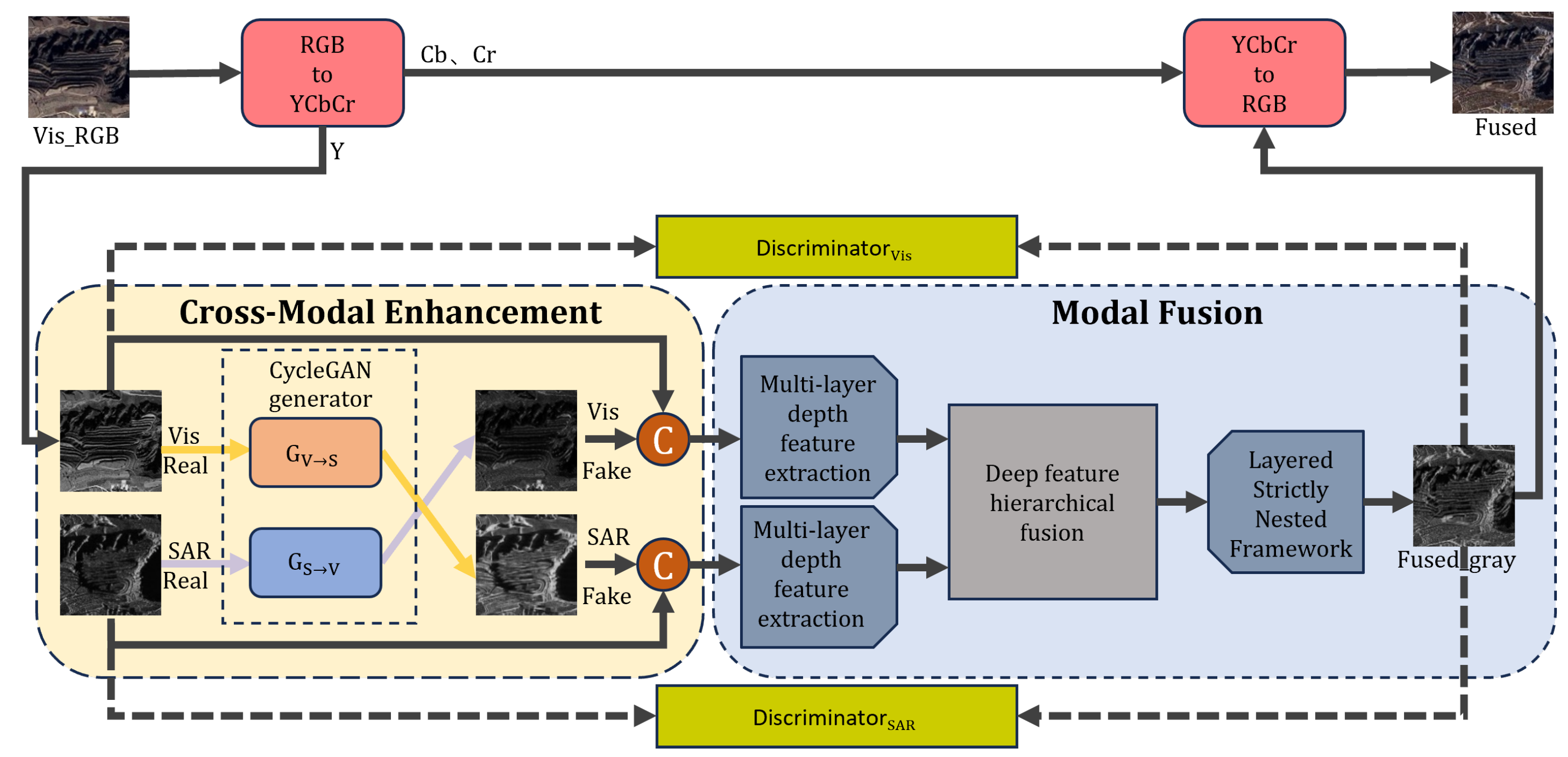

- To mitigate the effect of limited data quality on fusion-network training, we propose a two-stage hierarchical fusion paradigm for SAR and visible images. In the cross-modal enhancement stage, CycleGAN generators synthesize pseudo-modal images, which promotes interaction between the SAR and visible modalities and improves cross-modal consistency. In the fusion stage, unlike conventional decomposition-based methods, we introduce a computer-vision-guided hierarchical decomposition that explicitly refines modality-salient features and processes complementary characteristics strictly layer by layer.

- To fully exploit complementary modality information while accounting for both the properties of hierarchical features and the high dynamic range of SAR images, we construct a topology in which differentiated feature extraction and fusion branches target distinct hierarchical features, and we devise a Layered Strictly Nested Framework (LSNF). This hierarchical differentiation improves the learning of complementary cross-modal features between the visible and SAR modalities.

- To address detail degradation and edge blurring during fusion, we formulate a GWPL that guides pixel-level optimization using the spatial variation of feature saliency, thereby bridging global structural constraints with local detail preservation and enhancing detail representation in the fused results.
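As a rough illustration of the idea in the last contribution, the sketch below weights a per-pixel error by local gradient magnitude, so that errors on edges cost more than errors in flat regions. It is not the paper's GWPL definition, which this section does not state. The function names, the use of central differences, and the weight range [1, 2] are all assumptions made for this example.

```python
import numpy as np


def gradient_magnitude(img):
    """Per-pixel gradient magnitude using central differences (np.gradient)."""
    gy, gx = np.gradient(img.astype(np.float64))
    return np.sqrt(gx ** 2 + gy ** 2)


def gradient_weighted_pixel_loss(fused, source, eps=1e-8):
    """Weighted mean absolute error between `fused` and `source`.

    Hypothetical weighting (an assumption, not the paper's formula):
    each pixel gets weight 1 + g / max(g), where g is the gradient
    magnitude of `source`, so weights lie in [1, 2].
    """
    g = gradient_magnitude(source)
    w = 1.0 + g / (g.max() + eps)
    diff = np.abs(fused.astype(np.float64) - source.astype(np.float64))
    return float(np.sum(w * diff) / np.sum(w))


# Usage: a vertical step edge; the same error is injected once in a flat
# region and once on the edge.
source = np.zeros((8, 8))
source[:, 4:] = 1.0

fused_flat = source.copy()
fused_flat[0, 0] += 0.1   # error far from the edge

fused_edge = source.copy()
fused_edge[0, 3] += 0.1   # error on the edge

loss_flat = gradient_weighted_pixel_loss(fused_flat, source)
loss_edge = gradient_weighted_pixel_loss(fused_edge, source)
```

With this weighting, `loss_edge` is larger than `loss_flat` although the injected error has the same size. This is the behavior a detail-preserving pixel loss aims for.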

2. Materials and Methods

2.1. CycleGAN Generators for Cross-Modal Enhancement

2.2. Multi-Layer Deep Feature Extraction

2.3. Deep Feature Hierarchical Fusion

2.4. Layered Strictly Nested Framework (LSNF)

2.5. Discriminator Structure

2.6. Loss Function

2.6.1. Loss Function of the Generator

2.6.2. Content Loss

2.6.3. Adversarial Loss

2.6.4. Loss Function of the Discriminator

3. Experimental Results

3.1. Experimental Setup

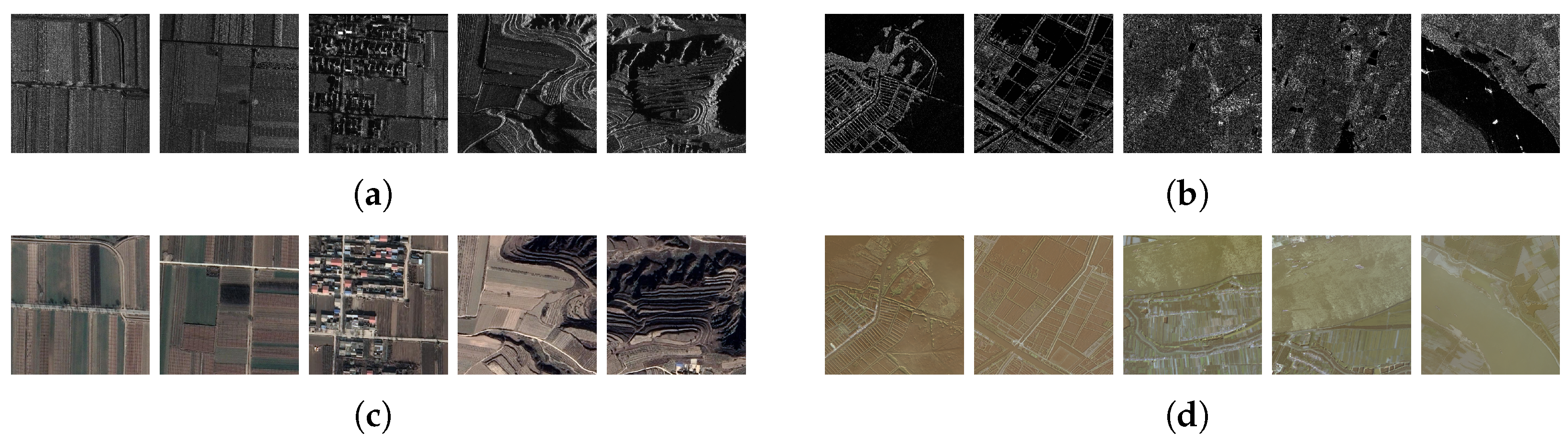

3.1.1. Datasets

3.1.2. Metrics

- Information-Based:

  - Entropy (EN) quantifies the amount of information contained in an image, with higher values indicating richer information diversity.

  - The standard deviation (SD) reflects the contrast and intensity distribution of the fused image, where a higher SD suggests a better utilization of the dynamic range.

  - The edge-based metric (Qabf) evaluates the edge information transferred from source images to the fused result, with higher values implying superior edge preservation.

- Image-Feature-Based:

  - The average gradient (AG) measures the sharpness and texture details of an image; higher AG values correspond to richer gradient information.

  - The spatial frequency (SF) characterizes the overall activity level of the image details, where an elevated SF indicates enhanced edge and texture representation.

- Structural-Similarity-Based:

  - The Structural Similarity Index (SSIM) assesses the structural consistency between the fused and source images, with values closer to one denoting minimal structural distortion.

- Human Perception-Inspired:

  - Visual information fidelity (VIF) quantifies perceptual similarity through natural scene statistics and human visual system modeling, where higher scores align better with human visual expectations.
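The four no-reference metrics above (EN, SD, AG, SF) can be sketched in a few lines of NumPy. The code below follows their common textbook definitions for a single 8-bit grayscale image. The exact conventions used in the paper's evaluation (bin count, difference scheme, border handling) are not stated in this section, so those choices are assumptions. Qabf, SSIM, and VIF also need the source images and are omitted here.

```python
import numpy as np


def entropy(img, bins=256):
    """Shannon entropy (in bits) of the gray-level histogram of an 8-bit image."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]                      # skip empty bins (0 * log 0 := 0)
    return float(-(p * np.log2(p)).sum())


def standard_deviation(img):
    """Standard deviation of pixel intensities."""
    return float(np.std(img.astype(np.float64)))


def average_gradient(img):
    """Mean of sqrt((gx^2 + gy^2) / 2) using forward differences."""
    f = img.astype(np.float64)
    gx = f[:-1, 1:] - f[:-1, :-1]     # horizontal difference
    gy = f[1:, :-1] - f[:-1, :-1]     # vertical difference
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))


def spatial_frequency(img):
    """sqrt(RF^2 + CF^2), where RF and CF are the RMS row and column differences."""
    f = img.astype(np.float64)
    rf = np.sqrt(np.mean((f[:, 1:] - f[:, :-1]) ** 2))
    cf = np.sqrt(np.mean((f[1:, :] - f[:-1, :]) ** 2))
    return float(np.sqrt(rf ** 2 + cf ** 2))


# Usage on two toy images: a flat image and a 0/255 checkerboard.
flat = np.full((4, 4), 128, dtype=np.uint8)
checker = (np.indices((4, 4)).sum(axis=0) % 2 * 255).astype(np.uint8)

for name, image in (("flat", flat), ("checkerboard", checker)):
    print(name, entropy(image), standard_deviation(image),
          average_gradient(image), spatial_frequency(image))
```

All four metrics are zero for the flat image and increase with contrast and local activity, which is why higher values are read as richer detail in the tables. None of them references the source images, so they should be interpreted alongside Qabf, SSIM, and VIF.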

3.1.3. Implementation Details

3.2. Comparison with State-of-the-Art Methods

3.2.1. Qualitative Comparison

3.2.2. Quantitative Comparison

3.3. Ablation Studies

3.3.1. Improved ResNet-50 and [−1, 1] Normalization

3.3.2. Fusion Method Combining the CBAM Attention Mechanism and Addition

3.3.3. Layered Strictly Nested Framework (LSNF)

3.3.4. Densely Connected Atrous Spatial Pyramid Pooling (DenseASPP) Module

3.3.5. Modal Cross-Enhancement with CycleGAN Generators

3.3.6.

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

2. Ma, J.; Ma, Y.; Li, C. Infrared and Visible Image Fusion Methods and Applications: A Survey. Inf. Fusion 2019, 45, 153–178.

3. Franceschetti, G.; Lanari, R. Synthetic Aperture Radar Processing; CRC Press: Boca Raton, FL, USA, 2018.

4. Kulkarni, S.C.; Rege, P.P. Pixel level fusion techniques for SAR and optical images: A review. Inf. Fusion 2020, 59, 13–29.

5. Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

6. Liu, Y.; Liu, S.; Wang, Z. A General Framework for Image Fusion Based on Multi-Scale Transform and Sparse Representation. Inf. Fusion 2015, 24, 147–164.

7. Yang, B.; Li, S. Multi-Focus Image Fusion and Restoration with Sparse Representation. IEEE Trans. Instrum. Meas. 2010, 59, 884–892.

8. Harsanyi, J.C.; Chang, C.I. Hyperspectral Image Classification and Dimensionality Reduction: An Orthogonal Subspace Projection Approach. IEEE Trans. Geosci. Remote Sens. 1994, 32, 779–785.

9. Han, J.; Pauwels, E.J.; De Zeeuw, P. Fast Saliency-Aware Multi-Modality Image Fusion. Neurocomputing 2013, 111, 70–80.

10. Ma, J.; Chen, C.; Li, C.; Huang, J. Infrared and Visible Image Fusion via Gradient Transfer and Total Variation Minimization. Inf. Fusion 2016, 31, 100–109.

11. Lian, Z.; Zhan, Y.; Zhang, W.; Wang, Z.; Liu, W.; Huang, X. Recent Advances in Deep Learning-Based Spatiotemporal Fusion Methods for Remote Sensing Images. Sensors 2025, 25, 1093.

12. Zhang, Y.; Liu, Y.; Sun, P.; Yan, H.; Zhao, X.; Zhang, L. IFCNN: A General Image Fusion Framework Based on Convolutional Neural Network. Inf. Fusion 2020, 54, 99–118.

13. Qi, B.; Zhang, Y.; Nie, T.; Yu, D.; Lv, H.; Li, G. A Novel Infrared and Visible Image Fusion Network Based on Cross-Modality Reinforcement and Multi-Attention Fusion Strategy. Expert Syst. Appl. 2025, 264, 125682.

14. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A Generative Adversarial Network for Infrared and Visible Image Fusion. Inf. Fusion 2019, 48, 11–26.

15. Qu, L.; Liu, S.; Wang, M.; Li, S.; Yin, S.; Qiao, Q.; Song, Z. TransFuse: A Unified Transformer-Based Image Fusion Framework Using Self-Supervised Learning. arXiv 2022, arXiv:2201.07451.

16. Vese, L.A.; Osher, S.J. Modeling Textures with Total Variation Minimization and Oscillating Patterns in Image Processing. J. Sci. Comput. 2003, 19, 553–572.

17. Ye, Y.; Zhang, J.; Zhou, L.; Li, J.; Ren, X.; Fan, J. Optical and SAR Image Fusion Based on Complementary Feature Decomposition and Visual Saliency Features. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5205315.

18. Ye, Y.; Liu, W.; Zhou, L.; Peng, T.; Xu, Q. An Unsupervised SAR and Optical Image Fusion Network Based on Structure-Texture Decomposition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4028305.

19. Song, W.; Li, S.; Fang, L.; Lu, T. Hyperspectral Image Classification with Deep Feature Fusion Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3173–3184.

20. Li, Z.; Huang, L.; He, J. A Multiscale Deep Middle-level Feature Fusion Network for Hyperspectral Classification. Remote Sens. 2019, 11, 695.

21. Aslam, M.A.; Salik, M.N.; Chughtai, F.; Ali, N.; Dar, S.H.; Khalil, T. Image Classification Based on Mid-Level Feature Fusion. In Proceedings of the 2019 15th International Conference on Emerging Technologies (ICET), Peshawar, Pakistan, 2–3 December 2019; pp. 1–6.

22. Kong, Y.; Hong, F.; Leung, H.; Peng, X. A Fusion Method of Optical Image and SAR Image Based on Dense-UGAN and Gram–Schmidt Transformation. Remote Sens. 2021, 13, 4274.

23. Liu, Y.; Chen, X.; Wang, Z.; Wang, Z.J.; Ward, R.K.; Wang, X. Deep Learning for Pixel-Level Image Fusion: Recent Advances and Future Prospects. Inf. Fusion 2018, 42, 158–173.

24. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

25. Yuqiang, F. Research on Mid-level Feature Learning Methods and Applications for Image Target Recognition. Ph.D. Thesis, National University of Defense Technology, Changsha, China, 2015. (In Chinese).

26. Marr, D. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information; MIT Press: Cambridge, MA, USA, 1982.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

28. Yao, Z.; Fang, L.; Yang, J.; Zhong, L. Nonlinear Quantization Method of SAR Images with SNR Enhancement and Segmentation Strategy Guidance. Remote Sens. 2025, 17, 557.

29. Glorot, X.; Bordes, A.; Bengio, Y. Deep Sparse Rectifier Neural Networks. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics (AISTATS), Reykjavic, Iceland, 22–25 April 2014; pp. 315–323.

30. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier Nonlinearities Improve Neural Network Acoustic Models. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 30, p. 3.

31. Zhang, Y.; Li, X.; Chen, W.; Zang, Y. Image Classification Based on Low-Level Feature Enhancement and Attention Mechanism. Neural Process. Lett. 2024, 56, 217.

32. Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. DenseASPP for Semantic Segmentation in Street Scenes. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3684–3692.

33. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3–19.

34. Li, H.; Wu, X.J.; Durrani, T. NestFuse: An Infrared and Visible Image Fusion Architecture Based on Nest Connection and Spatial/Channel Attention Models. IEEE Trans. Instrum. Meas. 2020, 69, 9645–9656.

35. Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; LNCS; Springer: Cham, Switzerland, 2018; Volume 11045, pp. 3–11.

36. Clarke, M.R.B. Pattern Classification and Scene Analysis; Wiley: Hoboken, NJ, USA, 1974.

37. Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss Functions for Image Restoration with Neural Networks. IEEE Trans. Comput. Imaging 2017, 3, 47–57.

38. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

39. Ge, L.; Dou, L. G-Loss: A Loss Function with Gradient Information for Super-Resolution. Optik 2023, 280, 170750.

40. Li, J.; Zhang, J.; Yang, C.; Liu, H.; Zhao, Y.; Ye, Y. Comparative Analysis of Pixel-Level Fusion Algorithms and a New High-Resolution Dataset for SAR and Optical Image Fusion. Remote Sens. 2023, 15, 5514.

41. Li, X.; Zhang, G.; Cui, H.; Hou, S.; Wang, S.; Li, X.; Chen, Y.; Li, Z.; Zhang, L. MCANet: A Joint Semantic Segmentation Framework of Optical and SAR Images for Land Use Classification. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102638.

42. Liu, Z.; Blasch, E.; Xue, Z.; Zhao, J.; Laganière, R.; Wu, W. Objective Assessment of Multiresolution Image Fusion Algorithms for Context Enhancement in Night Vision: A Comparative Study. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 94–109.

43. Zhang, X.; Ye, P.; Xiao, G. VIFB: A Visible and Infrared Image Fusion Benchmark. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 468–478.

44. Ma, J.; Liang, P.; Yu, W.; Chen, C.; Guo, X.; Wu, J.; Jiang, J. Infrared and Visible Image Fusion via Detail Preserving Adversarial Learning. Inf. Fusion 2020, 54, 85–98.

45. Li, H.; Wu, X.J. DenseFuse: A Fusion Approach to Infrared and Visible Images. IEEE Trans. Image Process. 2019, 28, 2614–2623.

46. Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-Aware Dual Adversarial Learning and a Multi-Scenario Multi-Modality Benchmark to Fuse Infrared and Visible for Object Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5792–5801.

47. Huang, Z.; Liu, J.; Fan, X.; Liu, R.; Zhong, W.; Luo, Z. ReCoNet: Recurrent Correction Network for Fast and Efficient Multi-Modality Image Fusion. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 539–555.

48. Zhang, H.; Ma, J. SDNet: A Versatile Squeeze-and-Decomposition Network for Real-Time Image Fusion. Int. J. Comput. Vis. 2021, 129, 2761–2785.

49. Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5906–5916.

50. Xie, X.; Zhang, X.; Tang, X.; Zhao, J.; Xiong, D.; Ouyang, L.; Yang, B.; Zhou, H.; Ling, B.W.K.; Teo, K.L. MACTFusion: Lightweight Cross Transformer for Adaptive Multimodal Medical Image Fusion. IEEE J. Biomed. Health Inform. 2024, 29, 3317–3328.

51. Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and Visible Image Fusion Based on Visual Saliency Map and Weighted Least Square Optimization. Infrared Phys. Technol. 2017, 82, 8–17.

52. Zhou, Z.; Wang, B.; Li, S.; Dong, M. Perceptual Fusion of Infrared and Visible Images Through a Hybrid Multi-Scale Decomposition with Gaussian and Bilateral Filters. Inf. Fusion 2016, 30, 15–26.

53. Zhao, J.; Liu, Y.; Wang, L.; Zhang, W.; Chen, H. A Comprehensive Review of Image Fusion Techniques: From Pixel-Based to Deep Learning Approaches. IEEE Trans. Image Process. 2021, 30, 1–15.

| Method | EN | Qabf | SD | AG | SF | SSIM | VIF |
|---|---|---|---|---|---|---|---|
| WLS [51] | 6.992 | 0.539 | 35.601 | 12.086 | 32.839 | 0.967 | 0.499 |
| Hybrid-MSD [52] | 7.018 | 0.565 | 38.034 | 12.867 | 34.572 | 0.978 | 0.543 |
| VSFF [17] | 7.333 | 0.44 | 49.279 | 13.126 | 33.263 | 0.919 | 0.49 |
| FusionGAN [14] | 6.855 | 0.314 | 36.152 | 7.188 | 17.926 | 0.325 | 0.477 |
| DenseFuse [45] | 6.853 | 0.408 | 30.961 | 8.498 | 22.092 | 0.914 | 0.442 |
| ResNetFusion [44] | 6.537 | 0.57 | 32.044 | 11.384 | 32.698 | 0.872 | 0.7 |
| SDNet [48] | 6.434 | 0.445 | 27.009 | 9.94 | 27.274 | 0.903 | 0.418 |
| TarDal [46] | 6.261 | 0.178 | 24.679 | 5.186 | 13.143 | 0.656 | 0.294 |
| ReCoNet [47] | 6.461 | 0.338 | 40.681 | 8.044 | 17.008 | 0.781 | 0.42 |
| CDDFuse [49] | 7.197 | 0.618 | 41.234 | 12.546 | 32.799 | 0.929 | 0.651 |
| MACTFusion [50] | 7.271 | 0.561 | 41.735 | 12.2 | 32.58 | 0.914 | 0.484 |
| Ours | 7.118 | 0.62 | 40.277 | 13.244 | 34.402 | 0.966 | 0.638 |

| Method | EN | Qabf | SD | AG | SF | SSIM | VIF |
|---|---|---|---|---|---|---|---|
| WLS [51] | 6.775 | 0.662 | 39.67 | 15.69 | 42.722 | 0.733 | 0.545 |
| Hybrid-MSD [52] | 6.687 | 0.688 | 48.527 | 16.854 | 46.773 | 0.947 | 0.655 |
| VSFF [17] | 6.241 | 0.202 | 24.767 | 7.881 | 23.634 | 0.784 | 0.465 |
| FusionGAN [14] | 7.223 | 0.569 | 47.873 | 17.242 | 44.19 | 0.471 | 0.434 |
| DenseFuse [45] | 6.582 | 0.562 | 34.942 | 11.539 | 31.36 | 0.807 | 0.518 |
| ResNetFusion [44] | 6.21 | 0.74 | 48.291 | 16.812 | 47.194 | 0.954 | 0.834 |
| SDNet [48] | 6.457 | 0.655 | 36.128 | 14.322 | 38.646 | 0.682 | 0.512 |
| TarDal [46] | 6.386 | 0.272 | 36.766 | 8.185 | 21.446 | 0.574 | 0.319 |
| ReCoNet [47] | 6.398 | 0.204 | 25.028 | 7.902 | 16.805 | 0.649 | 0.309 |
| CDDFuse [49] | 6.849 | 0.749 | 51.654 | 18.777 | 51.274 | 0.783 | 0.837 |
| MACTFusion [50] | 7.027 | 0.62 | 41.183 | 17.893 | 54.201 | 0.651 | 0.441 |
| Ours | 7.036 | 0.73 | 52.443 | 19.541 | 52.833 | 0.845 | 0.744 |

| Exp | Configuration | EN | AG | SF | SD | VIF | Parameter Quantity |
|---|---|---|---|---|---|---|---|
| I | Leaky ReLU with normalization to [−1, 1] → ReLU with normalization to [0, 1] | 7.111 | 12.497 | 32.011 | 38.71 | 0.561 | 27,939,735 |
| II | 3 CBAM with 2 Addition → 5 CBAM | 7.046 | 12.481 | 32.99 | 38.266 | 0.621 | 30,726,257 |
| III | 3 CBAM with 2 Addition → 5 Addition | 7.057 | 12.568 | 32.819 | 38.299 | 0.595 | 27,762,769 |
| IV | LSNF → Nest Connection | 7.068 | 12.536 | 32.637 | 38.83 | 0.61 | 35,372,257 |
| V | with DenseASPP → without DenseASPP | 7.064 | 12.762 | 33.643 | 39.009 | 0.621 | 27,737,841 |
| VI | with CycleGAN generators → without CycleGAN generators | 7.099 | 12.969 | 33.709 | 39.671 | 0.635 | 27,938,865 |
| VII | → | 7.276 | 11.724 | 29.807 | 42.982 | 0.578 | 27,939,441 |
| Ours | | 7.118 | 13.244 | 34.402 | 40.277 | 0.638 | 27,939,441 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, Y.; Jiang, L.; Li, J.; Liu, S.; Liu, Z. Local Information-Driven Hierarchical Fusion of SAR and Visible Images via Refined Modal Salient Features. Remote Sens. 2025, 17, 2466. https://doi.org/10.3390/rs17142466
