Abstract

To enhance the generalization of networks and avoid redundant training efforts, cross-image hyperspectral anomaly detection (HAD) based on deep learning has been gradually studied in recent years. Cross-image HAD aims to perform anomaly detection on unknown hyperspectral images after a single training process on the network, thereby improving detection efficiency in practical applications. However, the existing approaches may require additional supervised information or stacking of networks to improve model performance, which may impose high demands on data or hardware in practical applications. In this paper, a simple and lightweight unsupervised cross-image HAD method called Variational Joint Discrimination Network (VJDNet) is proposed. We leverage the reconstruction and distribution representation ability of the variational autoencoder (VAE), learning the global and local discriminability of anomalies jointly. To integrate these representations from the VAE, a probability distribution joint discrimination (PDJD) module is proposed. Through the PDJD module, the VJDNet can directly output the anomaly score mask of pixels. To further facilitate the unsupervised paradigm, a sample pair generation module is proposed, which is able to generate anomaly samples and background representation samples tailored for the cross-image HAD task. The experimental results show that the proposed method is able to maintain the detection accuracy with only a small number of parameters.

1. Introduction

Hyperspectral images typically consist of hundreds of continuous spectral bands, usually ranging from visible to mid-infrared [,]. Due to the rich information contained in the high spectral dimension, hyperspectral images can sensitively distinguish different landforms and objects according to their materials [,]. Therefore, hyperspectral images are often used to discover anomalies in scenes, such as airplanes, tanks, or other objects in backgrounds like airports or forests []. The task of identifying anomalous objects from hyperspectral backgrounds is called hyperspectral anomaly detection (HAD), which has a wide range of practical applications [,].

For HAD, a major challenge is that the prior information of anomalies is usually unknown, and therefore it is usually regarded as an unsupervised task [,]. Moreover, several characteristics distinguish anomalies from the backgrounds in hyperspectral images []. Anomalous targets are usually composed of materials such as metal, plastic, and paint, occupy a small proportion of remote sensing scenes, and have spectral characteristics that differ significantly from the environment (soil, vegetation, cement, water, etc.). Based on these characteristics, many statistics-based methods were proposed in early research [,,]. The Reed–Xiaoli (RX) detector is one of the most classic methods []. It assumes that the background follows a multivariate Gaussian distribution and detects anomalies by calculating the Mahalanobis distance between the pixel under test and the background estimated from the surrounding pixels. Subsequently, some improved RX detectors were proposed, such as the local RX [] and the kernel RX algorithm [].

However, for complex regions, the background may not be accurately estimated with statistical models. To remove such limitations, many other methods have been proposed, such as representation-based methods and tensor decomposition-based methods [,,,]. Collaborative representation detection (CRD) [] is a representation-based method that assumes a background pixel can be represented by its surrounding pixels while anomalous pixels cannot. Methods based on low-rank and sparse representation (LRASR) exploit the low-rank characteristics of the background [,]. In order to further model background and anomaly information, an HAD method based on potential anomaly and background dictionary construction (PAB-DC) was proposed []. Shen et al. [] introduced the saliency prior and utilized framelet decomposition to maintain the sparsity and piecewise smoothness of background representation. Total variation regularization is also commonly used in tensor-based HAD methods due to its ability to maintain piecewise smoothness [,]. Feng et al. [] combined tensor ring decomposition with TV regularization, exploiting both the low-rank property and piecewise smoothness in the spatial and spectral dimensions. These methods achieve good detection performance through the representation and decomposition of hyperspectral images. However, for different hyperspectral images, different dictionaries and representations need to be constructed, making the parameters difficult to apply in unknown hyperspectral images.

In recent years, with the rapid development of deep neural networks, numerous deep learning-based HAD methods have been proposed, such as deep belief network (DBN) [], convolutional neural network (CNN) [,], generative adversarial network (GAN) [,], long short-term memory (LSTM) [], and autoencoder (AE) [,]. Many methods utilize AEs to reconstruct hyperspectral images and distinguish anomalies from the background through reconstruction errors. These methods are usually based on an assumption that the number of anomalous pixels is significantly smaller than the number of backgrounds. Therefore, the model tends to learn the reconstruction criterion of the background, while anomalies cannot be reconstructed well [,,]. By introducing a convolutional autoencoder, Wang et al. proposed Auto-AD [], reconstructing hyperspectral images from random noise. Gao et al. proposed a blind-spot architecture that uses surrounding pixels to reconstruct the central pixel, thereby suppressing the reconstruction of anomalies [,]. Liu et al. introduced enhanced separation training to address the identical mapping problem in the reconstruction process [,]. Cheng et al. [] introduced an alternating optimization strategy to reduce the effects of anomalies during optimization. Wu et al. [] proposed a Transformer-based autoencoder that enhances the representation of global spatial information. Liu et al. [] combined low-rank representation models with self-supervised learning, using deep neural networks to generate pseudo-anomalies. Chen et al. [] proposed a spectral diffusion model to further infer the potential background of the anomalous regions. Variational autoencoders (VAEs) have also been used in anomaly detection in recent years []. The VAE implements regularization constraints on the encoded latent space features, so it can obtain latent space features with regular distribution, thereby reducing the overfitting of the model. Lei et al. 
[] introduced the VAE into HAD, leveraging the strong representation capability of the VAE to extract inherent spectral features from high-dimensional spectral vectors. Subsequently, some improved methods were introduced to the VAE to further enhance HAD performance, such as 3D convolution [], graph regularization [], manifold learning, and multi-head self-attention []. Yu et al. [], combining the Chebyshev neighborhood, discriminated anomalies based on the latent layer probability distribution of pixels. Since the latent layer constraint can reduce the interference of anomalous samples on learning background reconstruction, the variational inference method was also introduced into a GAN to obtain hyperspectral image reconstruction in a generative strategy [].

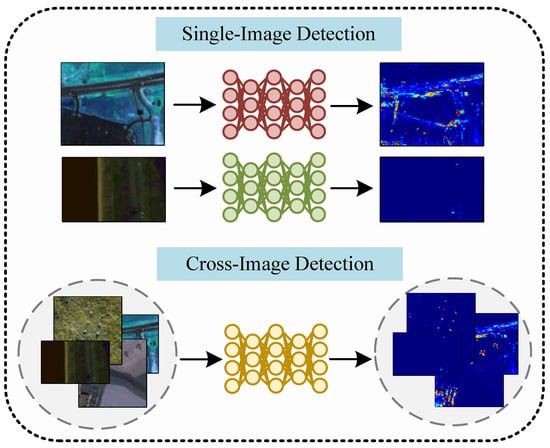

These deep learning methods mostly learn from a single hyperspectral image in an unsupervised manner and detect anomalies in the current image. They require training a specific network for each hyperspectral image, and the knowledge learned from one hyperspectral image is difficult to extend to other images. Therefore, the detection efficiency is likely to be reduced. To address these issues, in recent years, many studies have begun exploring HAD across images. Li et al. used a CNN to learn the similarity between pixels and their surroundings through classification labels and discriminated if the central pixel is anomalous []. Based on relation learning, Huyan et al. [] introduced VLAD pooling to project different image features into the same space. Li et al. [] first proposed an unsupervised cross-image HAD model that can directly generate an anomaly map in one step. Ma et al. [] proposed a statistical offset module that enables the model to adapt to hyperspectral images in different domains. To extract spatial context information, AETNet was proposed, which can learn reconstruction rules directly from a set of hyperspectral images []. As shown in Figure 1, these cross-image HAD methods do not need to be optimized for each unknown image. However, the current cross-image HAD methods still face the following issues.

Figure 1.

Schematic of the comparison of single-image and cross-image detection. Most current HAD methods are performed on single images and require optimization for each image. In contrast, after a unified training process, cross-image methods only require a forward-propagation process to perform detection on each unknown image.

- (1) Knowledge transfer misalignment. During the model training process, additional supervised information may be required, such as classification labels. However, the background may contain various categories of land cover in HAD, which might be classified as negative samples in category classification, thereby affecting the performance of the model.

- (2) Implicit modeling. The existing cross-image HAD methods mostly learn features of background and anomalies in an implicit manner, insufficiently considering the distribution differences between the background and anomalies for reconstruction or discrimination.

- (3) High computational complexity. In order to maintain performance, most existing cross-image HAD methods require stacking of networks, such as CNNs or Transformers, resulting in extensive parameters and hardware requirements.

To address the above issues, a simple and lightweight cross-image HAD model is proposed in this paper. Like other cross-image HAD methods, once it is trained, only one forward propagation is required to obtain the detection map for any new image, without additional optimization. Based on the assumption that background pixels in hyperspectral images far outnumber anomalies, we use artificially generated anomalies and original pixels to train a binary classification network that performs HAD without ground truth labels. After sampling from the central pixel and the surrounding pixels, the VJDNet combines global and local information through a siamese VAE-based model to discriminate anomalies. From the global perspective, by learning the probability of pixels being reconstructed, the model can identify anomalies across the whole image as pixels with a low reconstruction probability, i.e., pixels that are difficult to reconstruct. To prevent the model from overfitting to specific images, we use a relatively broad reconstruction probability rather than the precise spectral angle distances as the learning objective. From the local perspective, by encoding the distributions of pixels and their surroundings, the model can identify anomalies that are significantly different from the surrounding pixels based on local information. To integrate global and local information, we propose a probability distribution joint discrimination (PDJD) module, which can directly obtain the anomaly score of the input pixels. Furthermore, we propose separate generation methods for anomaly samples and surrounding background representations, considering the stability and cross-image generalization of the generated samples. Anomaly samples are generated through dynamic weights to ensure their diversity and their difference from the original pixels. On the other hand, in the original image, the surrounding pixels might contain anomalous pixels. 
Therefore, we establish a distribution for the surrounding pixels and sample from it to obtain representations. Compared with the existing cross-image HAD methods, the proposed VJDNet can be implemented with just a few fully connected layers, making it highly lightweight while maintaining good detection performance and scalability. The main contributions of this paper can be summarized as follows.

- (1) A lightweight cross-image HAD network based on a siamese VAE named VJDNet is proposed. The network is able to learn global and local distributions from samples of multiple hyperspectral images and detect anomalies in unknown hyperspectral images after one unsupervised training process. Compared with other advanced unsupervised cross-image HAD methods, our proposed method achieves comparable or even superior performance while utilizing a lightweight network structure.

- (2) A probability distribution joint discrimination (PDJD) module is proposed, which can simultaneously consider reconstruction probabilities and distribution differences to distinguish anomalies. The PDJD module can combine the information of the pixel itself and the information of the surroundings to achieve more accurate anomaly detection.

- (3) We propose a dynamic sample pair generation strategy. This generative module balances the stability and randomness of training samples, allowing the model to learn more diverse representations and better adapt to unknown hyperspectral images.

The remainder of this paper is organized as follows. Section 2 provides a brief overview of the related works covered in this paper, specifically introducing VAEs and cross-image HAD. Section 3 provides a detailed explanation of the proposed method. Section 4 presents the experimental results and analysis, validating the effectiveness of the proposed method. Finally, a conclusion is given in Section 5.

2. Related Work

2.1. Variational Autoencoder

The variational autoencoder (VAE) is an encoder–decoder-structured network that can be used for generation tasks []. It differs from the autoencoder (AE) in that the AE learns a sample-to-sample reconstruction, while the VAE aims to learn probability distributions. Given a sample $x$, the VAE assumes that it can be generated from an unobservable continuous random variable $z$. To learn this generation process, the model aims to maximize a conditional probability $p_\theta(x \mid z)$ that conforms to a multivariate Gaussian distribution, where $\theta$ represents the parameters of the decoder. To obtain the latent variable $z$, the approximate posterior probability $q_\phi(z \mid x)$ is computed by the encoder, where $\phi$ represents the parameters of the encoder. In order to ensure the generation ability and prevent degeneration into an ordinary AE, the VAE usually uses a KL divergence as the latent layer constraint to make the distribution $q_\phi(z \mid x)$ close to the prior probability $p(z)$ of the latent variable $z$. Finally, the VAE can be trained by maximizing the evidence lower bound (ELBO). For each data point $x$, the ELBO is defined as

$$\mathcal{L}(\theta, \phi; x) = -D_{KL}\left(q_\phi(z \mid x) \,\|\, p(z)\right) + \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]$$

Generally, the standard normal distribution is used as the prior probability distribution. Consequently, for each sample $x$, the VAE can encode it into a multivariate distribution approximating the standard normal distribution. By sampling from the distribution in the latent space, the VAE can generate new samples with a similar distribution to the input sample.

For anomaly detection tasks, the conditional probability produced by the decoder can be used to represent the probability of a pixel being reconstructed in the hyperspectral image, thus serving as the anomaly score [,]. Some other works use the mean squared error (MSE) loss and the spectral angle distance (SAD) loss instead of reconstruction probabilities to facilitate efficient post-processing []. In this paper, owing to the good robustness and generalization of the VAE, we build the cross-image HAD method on it and explore its joint representation and discriminative capabilities between different hyperspectral images.

2.2. Cross-Image Hyperspectral Anomaly Detection

In the past two years, cross-image hyperspectral anomaly detection (HAD) has become prominent among researchers. In previous studies, most deep learning-based HAD methods were trained and tested on the same hyperspectral image. That is, for each hyperspectral image, an individual model needs to be trained. This results in the need to store a large number of parameters when dealing with a large number of hyperspectral images. Consequently, cross-image HAD methods have been gradually explored. However, there are differences in scenes or sensors between hyperspectral images, which is a significant challenge for cross-image HAD.

The earliest methods introduced pixel-level classification labels, learning whether pixels are similar based on their categories and those of their surroundings []. This approach simplified anomaly detection into a binary classification task of “similar” or “dissimilar” between pixels, making the model easier to transfer. Based on this idea, AUD-Net introduces VLAD pooling to project pixels of different images to the same space []. In addition, a memory module was proposed to predict the feature of the central pixel through surrounding features. Pixels that are difficult to predict have a greater probability of being anomalous. These methods have verified the effectiveness of cross-image HAD. However, they all require the introduction of additional classification labels, which means there are still limitations on the data. Ma et al. proposed an unsupervised background suppression model and designed a statistical offset module that enables the model to generalize to other images []. However, it relies on existing HAD methods such as RX for detection. The Transferred Direct Detection (TDD) model [] and Anomaly Enhancement Transformation Network (AETNet) [] are both unsupervised HAD methods. The TDD model simulates and constructs some anomalies in the image, allowing the model to learn discriminative features using the labels of these “anomalies”. On the other hand, AETNet overlays masks on the image, causing the model to reconstruct the covered parts from surrounding pixels. Through this approach, the model will reconstruct anomalous pixels as background during the implementation, resulting in reconstruction errors. Most of these existing cross-image HAD methods require stacking large networks to achieve good performance. Therefore, we conduct research from the perspectives of local distribution and global reconstruction probability, aiming to design a lightweight cross-image HAD model with good performance.

3. Methodology

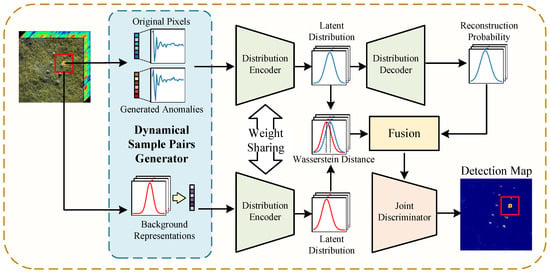

In this paper, we propose a siamese variational autoencoder (VAE)-based cross-image hyperspectral anomaly detection model, named Variational Joint Discrimination Network (VJDNet). After training with multiple hyperspectral images, the VJDNet can perform anomaly detection on other hyperspectral images not involved in the training process. The overall structure of the VJDNet is shown in Figure 2. It mainly consists of three parts: sample pair generation module, siamese VAE module, and probability-distribution joint discrimination (PDJD) module. First, the VJDNet dynamically constructs a series of background–center pixel sample pairs. The background representation is extracted by dual windows and resampled according to the distribution model, while the central pixel mutates into an anomaly according to a preset probability. After obtaining such sample pairs, we use two weight-shared VAEs to represent their latent distribution and the reconstruction probability of the central pixel. Finally, the PDJD module combines the reconstruction probability of the central pixel and the difference in latent distribution between the background and central pixels to determine whether the central pixel is an anomaly.

Figure 2.

Overview schematic of the proposed VJDNet. During the training phase, the KL divergence loss and the reconstruction loss constrain the latent distribution and the reconstruction probability, respectively, while the classification loss constrains the output of the joint discriminator. During the inference phase, the anomaly score of each pixel is output from the joint discriminator.

3.1. Dynamical Generation of Sample Pairs

In the sample pair generation module, we first divide the input hyperspectral image into image patches according to the inner and outer sizes of the dual window. For the model to learn discriminative features between anomalies and non-anomalies, we mutate the central pixel into an anomaly with a preset probability. Then, we model the distribution of the surrounding pixels between the inner and outer windows and sample representative surrounding background pixels from the modeled distribution.

Consider N image patches, which may come from different hyperspectral images, each with spectral dimension c after dimensionality reduction. In this work, we use principal component analysis (PCA) to reduce the dimension of hyperspectral images and achieve dimensional unification between images. Each image patch contains two elements: the central pixel and the surrounding pixel set, i.e., the pixels lying between the inner and outer windows. The number of surrounding pixels follows from the dual window sizes as the number of pixels in the outer window minus the number of pixels in the inner window. For square inner and outer windows with side lengths $w_{in}$ and $w_{out}$, this number is

$$w_{out}^{2} - w_{in}^{2}$$
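
As an illustration of the dual-window sampling described above, the following is a minimal Python sketch, not the authors' implementation. The function names, the nested-list image layout, the square odd-sized windows, and the border handling (out-of-image neighbors are simply skipped) are all assumptions made for this example; the window sizes 3 and 7 in the usage note are illustrative values only.

```python
def surrounding_offsets(w_in, w_out):
    """Offsets (dy, dx) of pixels inside the outer window but outside the inner one.

    Assumes square windows with odd side lengths centred on the same pixel.
    """
    if w_in % 2 == 0 or w_out % 2 == 0 or w_in >= w_out:
        raise ValueError("expected odd side lengths with w_in < w_out")
    r_in, r_out = w_in // 2, w_out // 2
    return [
        (dy, dx)
        for dy in range(-r_out, r_out + 1)
        for dx in range(-r_out, r_out + 1)
        if max(abs(dy), abs(dx)) > r_in  # keep only the ring between the windows
    ]


def extract_patch(image, row, col, w_in, w_out):
    """Return (central_pixel, surrounding_pixels) for one location.

    `image` is a nested list indexed as image[row][col] -> spectrum (list of floats).
    Offsets that fall outside the image are skipped (an assumed border policy).
    """
    height, width = len(image), len(image[0])
    surround = [
        image[row + dy][col + dx]
        for dy, dx in surrounding_offsets(w_in, w_out)
        if 0 <= row + dy < height and 0 <= col + dx < width
    ]
    return image[row][col], surround
```

For an interior pixel with illustrative window sizes 3 and 7, `extract_patch` returns 7 × 7 − 3 × 3 = 40 surrounding pixels; near the image border the set is smaller under the assumed border policy.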

For the central pixel, we generate anomalies based on a preset probability. That is, in one anomaly generation process, each central pixel is selected as an anomaly with this probability. Anomalies can be generated by shuffling the spectral order of the original pixels []. However, for samples after PCA dimension reduction, shuffling the dimensions may destroy the data structure at the level of feature space. Therefore, we design an anomaly generation method based on weight vectors. Once a pixel is selected, a random weight vector is drawn from a Gaussian distribution, and its negative entries are set to 1. The selected center pixel is then multiplied element-wise by this weight vector. As a result, the values in some dimensions of the generated anomalies remain unchanged, while the values in the remaining dimensions are randomly enlarged or reduced, resulting in features different from the original pixels. This approach generates anomalies that are similar to the original pixels in some dimensions, so the decision boundary is constrained by similar but not identical samples rather than by significantly different samples, which enhances the discriminability of the model. After the center pixel is obtained, the corresponding binary label y is also generated. When y = 1, the corresponding center pixel has been selected as a generated anomaly. Conversely, when y = 0, the center pixel remains unchanged and is regarded as a normal sample.
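
The weight-based anomaly generation can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the Gaussian parameters `mean=0.0` and `std=1.0` are placeholder values rather than the settings used in this work, and the label convention (1 for a generated anomaly, 0 for an unchanged pixel) follows the description above.

```python
import random


def make_anomaly(pixel, rng, mean=0.0, std=1.0):
    """Scale a pixel by Gaussian weights whose negative entries are reset to 1.

    `mean` and `std` are placeholder values, not settings taken from the paper.
    """
    weights = [rng.gauss(mean, std) for _ in pixel]
    weights = [w if w >= 0.0 else 1.0 for w in weights]  # negative weight -> 1
    return [x * w for x, w in zip(pixel, weights)], weights


def generate_center_sample(pixel, p_anomaly, rng):
    """Return (sample, y): y = 1 for a generated anomaly, y = 0 for an unchanged pixel."""
    if rng.random() < p_anomaly:
        anomaly, _ = make_anomaly(pixel, rng)
        return anomaly, 1
    return list(pixel), 0
```

Dimensions whose weight was reset to 1 keep their original value, while the others are scaled up or down, which mirrors the "similar but not identical" behavior described in the text.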

For the surrounding pixel set, we first model its distribution. Many previous works have assumed that the background of hyperspectral images can be represented by a multivariate Gaussian distribution. Because of the dual window, the surrounding pixels are limited to a smaller scope, so their distribution is generally more concentrated than that of the whole image. Therefore, we model the surrounding pixels as a univariate Gaussian distribution on each reduced spectral dimension, where the mean and standard deviation of each dimension are estimated from the surrounding pixels of the image patch.

We sample from this distribution to obtain the sampled background pixels and then take their mean as the background representation of the image patch. This approach keeps the background representation representative and close to the mean value of the surrounding pixels while also introducing some randomness to enhance the network’s generalization and resistance to interference. To make the trained model more adaptable for transfer between images, we use a dynamic approach where anomaly and background generation are performed once per training epoch. This approach enriches the diversity of samples and reduces the overfitting of the model on training hyperspectral images.

During the inference stage, we use the mean of the surrounding pixels as the background representation. This is because the inference process is only performed once, so, compared to the training process, it is necessary to reduce randomness to ensure the stability of the detection results. Since most of the background representations generated during training are close to the mean of the surrounding pixels, the model can achieve good detection performance during the inference process as well.
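
The background representation for training and inference can be sketched as below. This is a hedged example rather than the reference implementation: the function names are hypothetical, the use of the population standard deviation is an assumption, and passing `rng=None` is simply this sketch's way of switching to the deterministic inference behavior. The default of 10 samples matches the number of background representation samples reported in the implementation details.

```python
import random
import statistics


def fit_surrounding_gaussians(surrounding):
    """Per-dimension mean and standard deviation of the surrounding pixels."""
    dims = list(zip(*surrounding))  # one tuple of values per spectral dimension
    means = [statistics.fmean(d) for d in dims]
    stds = [statistics.pstdev(d) for d in dims]  # population std: an assumption
    return means, stds


def background_representation(surrounding, rng=None, n_samples=10):
    """Training: mean of `n_samples` draws from the fitted per-dimension Gaussians.

    Inference (rng is None): the plain mean of the surrounding pixels.
    """
    means, stds = fit_surrounding_gaussians(surrounding)
    if rng is None:
        return means
    draws = [[rng.gauss(m, s) for m, s in zip(means, stds)] for _ in range(n_samples)]
    return [statistics.fmean(d) for d in zip(*draws)]
```

Averaging several draws keeps the training-time representation near the per-dimension mean while still varying from epoch to epoch, which is the trade-off between stability and randomness discussed above.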

3.2. Model Architecture

The network structure consists of three parts: distribution encoder, distribution decoder, and probability-distribution joint discriminator. The encoder and decoder together form a VAE structure. To keep the model as lightweight as possible, we use several fully connected structures to build the network. We use Leaky ReLU as the activation layer in the model to ensure non-zero output of neurons, avoid gradient vanishing, and enhance the generalization capability of the network. It is worth noting that, during the encoding and decoding process of background representation, we fixed the parameters of the network. That is, the encoder and decoder only learn the distribution representation and reconstruction probability of the central pixel and share the weights for the forward-propagation process of the background representation. The reason is that the background representation is obtained by resampling from the background distribution, and we hope that the VAE part can maximize the reconstruction probability of real pixels as much as possible.

3.2.1. Distribution Encoder

The distribution encoder consists of three fully connected layers, with a Batch Normalization layer and an activation layer between every two layers. We use a siamese structure to construct the encoder, which can encode the input sample pairs into latent distribution representations separately. Therefore, the input layer size of the encoder is the same as the sample spectral size. The last layer size is twice the latent size, which is used to represent the mean value and standard deviation of the latent distribution, where is the latent-layer size. The encoding process can be represented as

where and are the weight and bias of the encoder , respectively. Then, the latent layer distribution of the center pixel and the latent layer distribution of the background representation can be represented as

For the latent distribution representation, we refer to the latent layer constraint of the VAE and use KL divergence as the loss function to make it close to the standard normal distribution; that is,
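
For a diagonal Gaussian latent distribution, the KL divergence to the standard normal distribution has a well-known closed form. The sketch below assumes that the encoder outputs a mean and a (positive) standard deviation per latent dimension, as described above, and that the divergence is summed over dimensions; how the loss is aggregated over samples in the actual model is not specified here, and the function name is hypothetical.

```python
import math


def kl_to_standard_normal(mu, sigma):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions.

    Per dimension: 0.5 * (mu^2 + sigma^2 - 1) - ln(sigma), with sigma > 0.
    """
    return sum(
        0.5 * (m * m + s * s - 1.0) - math.log(s)
        for m, s in zip(mu, sigma)
    )
```

The value is zero exactly when the latent distribution already equals the standard normal distribution and grows as the mean moves away from 0 or the standard deviation moves away from 1.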

3.2.2. Distribution Decoder

Similar to the encoder, the distribution decoder also consists of three fully connected layers, with a Batch Normalization layer and an activation layer between every two fully connected layers. Before decoding, the VAE performs M sampling from the latent distribution to obtain latent sample representations . Then, the decoder reconstructs the latent sample representations into corresponding reconstruction distributions. Therefore, the input size of the decoder is the same as the latent size, while the output size is twice the sample spectral size, used to represent the mean and the standard deviation of the reconstruction distribution. For each latent-layer sample representation , similar to the encoding process, the reconstruction process can be represented as

where and are the weight and bias of the decoder , respectively. Since we only use the center pixels to optimize the weights of the VAE module, the background representation does not participate in the reconstruction decoding process. Then, the reconstruction distribution of the central pixel can be represented as

We use the logarithmic probability of the center pixel in the reconstruction distribution as the reconstruction probability and take the average over the number of latent samples. The final reconstruction probability can be represented as

The objective of the VAE is to maximize the reconstruction probability; thus, the reconstruction loss can be represented as
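
A minimal sketch of the reconstruction probability follows, assuming a diagonal Gaussian reconstruction distribution and reparameterized latent sampling. The `decoder` argument stands for any callable that maps a latent sample to the mean and standard deviation of the reconstruction distribution; it and the function names are hypothetical stand-ins, not the network used in this work. The default of 10 latent samples matches the value of M reported in the implementation details.

```python
import math
import random


def gaussian_log_prob(x, mu, sigma):
    """Log-density of x under a diagonal Gaussian, summed over dimensions."""
    return sum(
        -0.5 * math.log(2.0 * math.pi) - math.log(s) - 0.5 * ((xi - m) / s) ** 2
        for xi, m, s in zip(x, mu, sigma)
    )


def sample_latent(mu, sigma, rng):
    """Reparameterised draw z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


def reconstruction_log_prob(x, z_mu, z_sigma, decoder, rng, n_latent=10):
    """Average log-probability of x over `n_latent` decoded latent samples.

    `decoder` is any callable z -> (mu, sigma) of the reconstruction distribution.
    """
    total = 0.0
    for _ in range(n_latent):
        rec_mu, rec_sigma = decoder(sample_latent(z_mu, z_sigma, rng))
        total += gaussian_log_prob(x, rec_mu, rec_sigma)
    return total / n_latent
```

Under these assumptions, a reconstruction loss that maximizes the reconstruction probability would simply be the negative of the returned value.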

3.2.3. Probability-Distribution Joint Discriminator

To jointly utilize the relationship between pixels with global hyperspectral images and with local surrounding pixels, we propose a probability-distribution joint discriminator (PDJD) module. First, we calculate the distance between the latent distributions of the central pixel and of the background representation to measure the difference between the central pixel and its surroundings. In this work, we use Wasserstein distance to measure the distance between latent layer distributions. Wasserstein distance measures the minimum cost of transforming one distribution into another, effectively reflecting their distance regardless of whether there is overlap between distributions. Since the dimensions are independent of each other, the distribution distance can be expressed as
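
For two Gaussians with diagonal covariance, the 2-Wasserstein distance has a simple closed form that decomposes over dimensions. The sketch below uses that form as one plausible instance of the distance described above; whether this work uses the 2-Wasserstein distance specifically, or keeps a per-dimension vector instead of a single scalar, is an assumption of this example, and the function name is hypothetical.

```python
import math


def wasserstein2_diag(mu_a, sigma_a, mu_b, sigma_b):
    """2-Wasserstein distance between two diagonal Gaussians.

    W2^2 = sum_k (mu_a[k] - mu_b[k])^2 + (sigma_a[k] - sigma_b[k])^2
    """
    squared = sum(
        (ma - mb) ** 2 + (sa - sb) ** 2
        for ma, sa, mb, sb in zip(mu_a, sigma_a, mu_b, sigma_b)
    )
    return math.sqrt(squared)
```

Unlike the KL divergence, this quantity stays finite and informative even when the two distributions barely overlap, which is the property motivating its use here.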

Then, the distribution distance d and the reconstruction probability are concatenated to obtain the joint measurement representation r. We use a classification network to discriminate whether the represented central pixel belongs to an anomaly based on the joint measurement representation. The classification network consists of three fully connected layers, with an activation layer between each two layers. Finally, we use a softmax layer to obtain the probability of being classified as background or anomaly, where the probability of being classified as anomaly can serve as the output anomaly score. We use cross-entropy loss as the classification loss , which can be represented as

where is the binary classification label, indicating that the i-th sample is a background sample or a generated anomaly sample. is the joint measurement representation of the i-th sample. Finally, introducing a balancing parameter , the overall loss function of the model is

After the discriminator, we apply an n-th power operation to the output. In this work, the exponent n is set to 3 to suppress the background while keeping the integrity of the anomalous regions.
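
The final scoring step can be illustrated with a short sketch. The ordering of the two logits as (background, anomaly) and the function name are assumptions of this example; the exponent of 3 is the value stated above.

```python
import math


def anomaly_score(logits, exponent=3):
    """Softmax over (background, anomaly) logits, then raise the anomaly probability to a power."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    p_anomaly = exps[1] / sum(exps)
    return p_anomaly ** exponent
```

Because the anomaly probability lies in [0, 1], raising it to the third power shrinks small and mid-range scores much more than scores close to 1, which suppresses the background while leaving confident anomaly responses largely intact.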

4. Experiments

4.1. Dataset Description

To verify the cross-image anomaly detection capabilities of the VJDNet, six different datasets are used in this work. Their specific details are as follows.

- (1) San Diego Dataset []: This dataset was collected by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS, Jet Propulsion Laboratory, Pasadena, CA, USA) sensor in San Diego, CA, USA. The original data size is , with a resolution of 3.5 m per pixel, containing 224 spectral bands ranging from 400 to 2500 nm. For anomaly detection, 35 bands with low quality are removed, and a subset of is selected, containing 143 anomalous pixels.

- (2) Cri Dataset []: This dataset was collected by the Nuance Cri (PerkinElmer, Waltham, MA, USA) hyperspectral sensor, containing 46 bands ranging from 650 to 1100 nm. The image size is , containing 1254 anomalous pixels.

- (3) HYDICE Dataset []: This dataset was collected by the Hyperspectral Digital Imagery Collection Experiment (HYDICE, Naval Research Laboratory, Washington, DC, USA) sensor, containing 175 bands after removing water vapor absorption bands. The image size is , with 1 m per pixel as the spatial resolution. There are 21 anomalous pixels representing cars and roofs in the image.

- (4) WHU-Hi-River Dataset []: This dataset was collected by the Nano-Hyperspec (Headwall Photonics, Bolton, MA, USA) visible and near-infrared UAV-borne hyperspectral sensor, containing 135 bands ranging from 400 to 1000 nm. The image size is , with 6 cm per pixel as the spatial resolution. There are 21 anomalous pixels representing plastic plates in the image.

- (5) Airport–Beach–Urban (ABU) Dataset []: This dataset contains 13 hyperspectral scenes, including airport, beach, and urban. They were collected by the AVIRIS sensor and the Reflective Optics System Imaging Spectrometer (ROSIS-03, Deutsches Zentrum für Luft- und Raumfahrt, Oberpfaffenhofen, Germany) sensor. The images are or in size, with different spatial resolutions and numbers of spectral bands.

- (6) HAD-100 Dataset []: This is a large-scale hyperspectral dataset containing 260 training images and 100 test images, collected by the AVIRIS-NG sensor. The test images are in size, with spatial resolutions ranging from 1.9 m to 8.4 m.

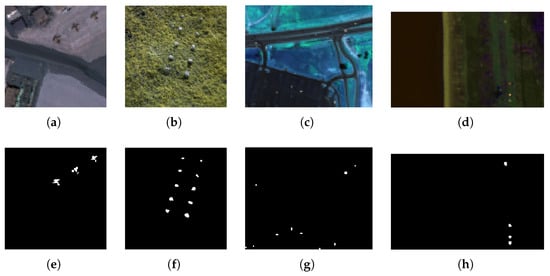

The pseudo-color images and ground truths of the four individual datasets used for testing are shown in Figure 3, and some detailed information is listed in Table 1.

Figure 3.

Pseudo-color images and ground truths of the four datasets used for testing. (a) Pseudo-color of San Diego dataset. (b) Pseudo-color of Cri dataset. (c) Pseudo-color of HYDICE dataset. (d) Pseudo-color of WHU-Hi-River dataset. (e) Ground truth of San Diego dataset. (f) Ground truth of Cri dataset. (g) Ground truth of HYDICE dataset. (h) Ground truth of WHU-Hi-River dataset.

Table 1.

Detailed information of the datasets.

4.2. Implementation Details

In this work, the input hyperspectral images are normalized and scaled to the range [0, 1]. Then, to keep the input sample size consistent, we use principal component analysis (PCA) to reduce the spectral dimension to 30. In the sample pair generation module, the inner window size is set to , while the outer window size is . For cross-image detection, the anomaly generation probability is set to 0.2; for single-image detection, it is set to 0.5. The number of background representation samples is 10 each time, and the number of latent-layer samples M is also set to 10. In the network, the latent size is set to 20. The balancing parameter is set to 1. The whole model is trained with the Adam optimizer with a learning rate of , and the batch size is set to 64.
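The dual-window neighborhood used by the sample pair generation module can be illustrated with a minimal sketch. It assumes the conventional dual-window layout, in which the surrounding pixels are those inside the outer window but outside the inner window; the function names and the window sizes 3 and 7 used in the usage note are hypothetical placeholders, not the settings of this paper.

```python
def dual_window_offsets(inner, outer):
    """Return (dy, dx) offsets inside the outer window but outside the inner one."""
    if inner % 2 == 0 or outer % 2 == 0 or inner >= outer:
        raise ValueError("window sizes must be odd with inner < outer")
    r_in, r_out = inner // 2, outer // 2
    return [
        (dy, dx)
        for dy in range(-r_out, r_out + 1)
        for dx in range(-r_out, r_out + 1)
        if max(abs(dy), abs(dx)) > r_in  # skip the inner (guard) window
    ]


def surrounding_pixels(image, row, col, inner, outer):
    """Collect the spectra in the ring around (row, col).

    `image` is a nested list indexed as image[row][col] -> spectrum.
    Positions that fall outside the image are skipped (an assumption;
    padding or mirroring would be equally plausible).
    """
    height, width = len(image), len(image[0])
    ring = []
    for dy, dx in dual_window_offsets(inner, outer):
        r, c = row + dy, col + dx
        if 0 <= r < height and 0 <= c < width:
            ring.append(image[r][c])
    return ring
```

With the placeholder sizes 3 and 7, an interior pixel has 49 - 9 = 40 surrounding pixels, while a corner pixel keeps only the part of the ring that lies inside the image.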

For cross-image anomaly detection, we first train the VJDNet on 13 images of the ABU dataset, and then verify the detection performance on the remaining four individual datasets. To reduce the computational cost, we randomly select 3500 pixels and their surroundings from each image as training samples. Afterward, these samples are randomly shuffled and sent to the network for learning. In this way, the VJDNet achieves simultaneous training on multiple hyperspectral images. For the HAD-100 dataset, we randomly select 1000 pixels and their surroundings from each training image. After training the network, detection is performed on all images in the test set.
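The multi-image sampling procedure described above can be sketched as follows. This is only an illustration under stated assumptions: the helper name `sample_training_pixels` is hypothetical, each image is represented just by its (height, width), and the 100 x 100 size in the usage note is a placeholder rather than a value taken from the datasets.

```python
import random


def sample_training_pixels(image_shapes, per_image, seed=0):
    """Draw pixel positions from several images and shuffle them together.

    Returns a list of (image_id, row, col) tuples. Positions are drawn
    without replacement inside each image, then mixed across images so
    that every batch can contain samples from different scenes.
    """
    rng = random.Random(seed)
    samples = []
    for image_id, (height, width) in enumerate(image_shapes):
        n = min(per_image, height * width)  # guard against very small images
        flat = rng.sample(range(height * width), n)
        samples.extend((image_id, idx // width, idx % width) for idx in flat)
    rng.shuffle(samples)
    return samples
```

For example, `sample_training_pixels([(100, 100)] * 13, 3500)` yields 13 x 3500 = 45,500 center positions; the surroundings of each position would then be gathered before the sample is passed to the network.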

4.3. Comparative Methods and Evaluation Metrics

In the comparative experiment, we compared the VJDNet with 11 methods: RX [], CRD [], LRASR [], 2S-GLRT [], Auto-AD [], GT-HAD [], MSNet [], CNND [], AUD-Net [], AET-Net [], and BSDM []. Among them, RX and 2S-GLRT are statistics-based methods, while CRD and LRASR are representation-based methods. In deep learning methods, Auto-AD, MSNet, and CNND are CNN-based methods, GT-HAD and AUD-Net are Transformer-based methods, AET-Net is a method combining CNN and Transformer, and BSDM is a method based on Denoising Diffusion Probabilistic Models (DDPMs).

For Auto-AD, the experiment is conducted only on single-image detection. For cross-image detection by GT-HAD, we concatenate the training images into a large image to train the network and then perform inference on the four test images. For CNND and AUD-Net, since they require classification labels for supervised training, the experiment is conducted only for cross-image detection. We use the pre-trained weights on their default hyperspectral classification dataset for testing. For other methods, similarly to the proposed VJDNet, the hyperspectral image is first normalized, and the spectral dimension is reduced to 30 by PCA. For CRD and 2S-GLRT, the settings of the dual-window size are the same as those of the proposed VJDNet. Other experimental settings are consistent with those in the original paper.

To evaluate the performance of the model, the receiver operating characteristic (ROC) curves are plotted based on the relationship between the true positive rate , the false positive rate , and the threshold . Then, their areas under the curve (AUCs) are calculated as the quantitative metric. Moreover, we also compare the number of parameters, FLOPs, and inference times of the methods to verify the lightweightness of the VJDNet.
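As an illustration of the ROC-based metric, the sketch below sweeps the threshold over the anomaly scores and integrates the detection rate against the false alarm rate with the trapezoidal rule. The function names are hypothetical, labels are assumed to be 0 (background) or 1 (anomaly), and the quadratic-time threshold sweep is chosen for readability rather than speed.

```python
def roc_points(scores, labels):
    """Return (threshold, true positive rate, false positive rate) tuples.

    The threshold runs over every distinct score from high to low; a
    pixel is declared anomalous when its score is >= the threshold.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both anomaly and background pixels are required")
    points = []
    for tau in sorted(set(scores), reverse=True):
        detected = [y for s, y in zip(scores, labels) if s >= tau]
        tp = sum(detected)
        fp = len(detected) - tp
        points.append((tau, tp / n_pos, fp / n_neg))
    return points


def auc_pd_pf(scores, labels):
    """Area under the ROC curve (detection rate versus false alarm rate)."""
    pts = roc_points(scores, labels)
    pf = [0.0] + [p[2] for p in pts]  # start the curve at the origin
    pd = [0.0] + [p[1] for p in pts]
    return sum(
        (pf[i + 1] - pf[i]) * (pd[i] + pd[i + 1]) / 2 for i in range(len(pts))
    )
```

The threshold-based areas mentioned in the text can be obtained from the same `roc_points` output by integrating either rate over the threshold instead, provided the scores are first normalized to [0, 1].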

4.4. Comparative Results on Single-Image Detection

We first verified the anomaly detection performance of the VJDNet by training and testing on the same hyperspectral image. The experimental results are shown in Table 2, in which River abbreviates the WHU-Hi-River dataset. As can be seen from the table, the proposed VJDNet does not achieve the best performance on any single dataset but performs consistently across datasets and thus achieves the highest mean AUROC.

Table 2.

AUC(, ) for different methods of single-image detection.

As shown in the table, CRD performs strongly on the HYDICE dataset but does not perform as well on the Cri dataset. Similarly, LRASR and 2S-GLRT also perform poorly on the Cri dataset. This may be because the spectral range and the number of bands of the Cri dataset differ significantly from those of the other datasets. On the other hand, Auto-AD achieves the best performance on the San Diego dataset but suffers a large performance drop on the WHU-Hi-River dataset. The proposed VJDNet demonstrates good detection performance on all four datasets, which differ in sensor, resolution, and data size, thus showing its universality.

4.5. Comparative Results on Cross-Image Detection

4.5.1. Comparison on Four Individual Datasets

To verify the performance of the VJDNet on cross-image HAD, we compared it with different methods for cross-image detection. Among these, traditional methods such as RX, CRD, LRASR, and 2S-GLRT do not perform cross-image detection, so their results are the same as in single-image detection. CNND and AUD-Net are cross-image HAD methods based on classification labels, so they are first trained on their default classification datasets and then perform anomaly detection on the four test datasets. The remaining methods are first trained on the 13 images of the ABU dataset and then tested on the four datasets. The AUC scores for each method are shown in Table 3. Similar to the results of single-image detection, the VJDNet shows balanced performance on the various datasets and achieves the highest mean AUC(, ) score. In addition, the VJDNet also achieves the best detection performance on the San Diego dataset. From the results of AUC(, ) and AUC(, ), although the VJDNet does not show significant advantages in background suppression, it has excellent sensitivity to anomalies, thereby achieving the highest AUC(, ) scores on most datasets. This sensitivity preserves the ability of the VJDNet to distinguish between anomalies and background.

Table 3.

AUC scores for different methods of cross-image detection on four individual datasets.

Compared with Table 2, we find that, on most datasets, the cross-image detection performs better than single-image detection. This may be because overfitting problems are more likely to occur in single-image detection, while, in cross-image detection, the network can learn richer information about anomalies and backgrounds by training on multiple images, thereby improving detection performance.

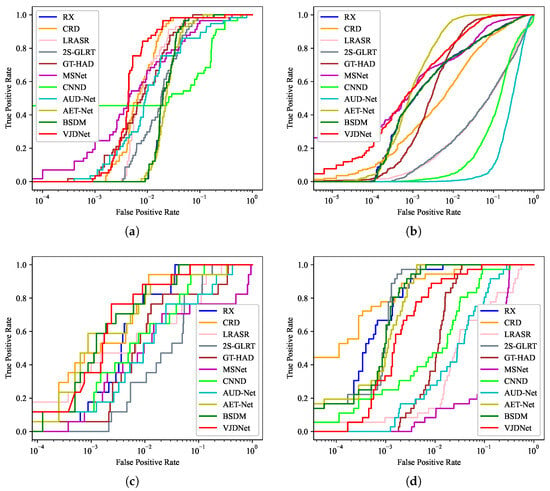

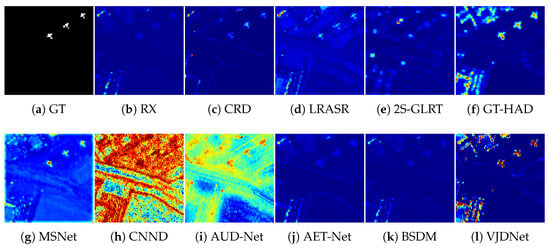

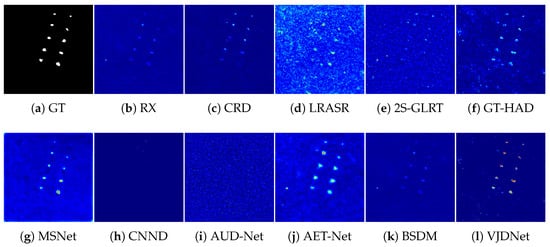

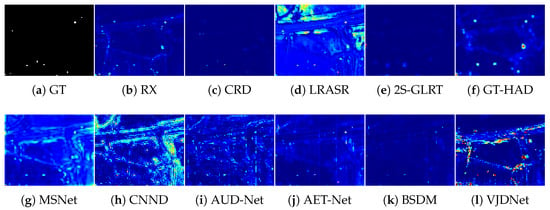

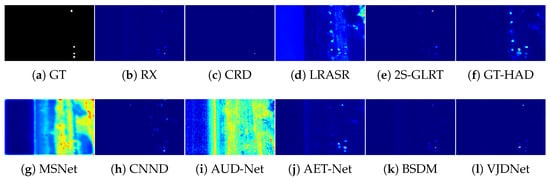

The ROC curves of different methods on various datasets are shown in Figure 4. It can be seen that, on the San Diego dataset, the VJDNet can quickly achieve a high true positive rate. On other datasets, the performance of the VJDNet can reach a similar level to other methods. We present the detection maps of cross-image detection for different methods in Figure 5, Figure 6, Figure 7 and Figure 8. It can be seen from the detection map that, compared to other methods, the VJDNet can greatly enhance the activation of anomalies.

Figure 4.

ROC curves of true positive rate and false positive rate for different methods. (a) San Diego dataset. (b) Cri dataset. (c) HYDICE dataset. (d) WHU-Hi-River dataset.

Figure 5.

Cross-image detection maps on San Diego dataset for different methods.

Figure 6.

Cross-image detection maps on Cri dataset for different methods.

Figure 7.

Cross-image detection maps on HYDICE dataset for different methods.

Figure 8.

Cross-image detection maps on WHU-Hi-River dataset for different methods.

4.5.2. Comparison on HAD-100 Dataset

To further validate the cross-image detection performance and efficiency of the VJDNet, we conducted comparative experiments on the large-scale dataset HAD-100. Similar to the experiments on individual datasets, we first trained on all images in the training set, then performed detection on the 100 test images. We compared the AUC scores and efficiency metrics of different methods, as shown in Table 4. The FLOPs were calculated on a image, while the inference time represents the average time taken for detection on one image.

Table 4.

AUC scores, parameters, FLOPs, and inference times for different methods of cross-image detection on HAD-100 dataset.

The experimental results show that the proposed VJDNet achieves the best detection performance on large-scale datasets compared to non-deep-learning methods and other cross-image methods. Although CNND has higher sensitivity to anomalies than the VJDNet, it also has the highest mAUC (, ) score, resulting in weaker discrimination between background and anomalies. In terms of model lightweightness and detection efficiency, while the VJDNet’s inference time is slightly higher than RX and AET-Net, it achieves the lowest number of parameters and FLOPs. In general, the VJDNet exhibits remarkable generalization and stability while also featuring a lightweight network structure.

4.6. Ablation Studies

4.6.1. Effectiveness of the PDJD Module

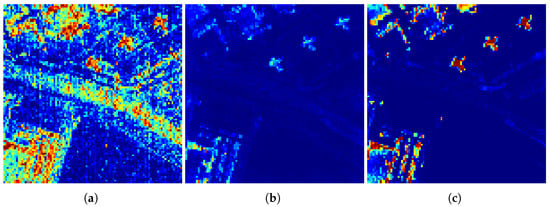

In this section, we validate the effectiveness of the proposed PDJD module. The PDJD module combines the global reconstruction probability and the local distribution difference to jointly discriminate anomalies. Therefore, we compare the detection results of the PDJD module with methods using single discriminative information. Each method is first trained on the ABU dataset and then tested on the remaining four datasets. The detection results on the four datasets are shown in Table 5. In the table, the “Probability” method uses pixel reconstruction probability as the anomaly score, which can be regarded as the basic VAE method. The “Distribution” method uses the Wasserstein distance between the latent distribution of the central pixel and the background representation as the anomaly score. To further demonstrate the performance of the VJDNet visually, we use the San Diego dataset as an example and compare the detection map after exponentiation of different methods.

Table 5.

AUROC scores for the proposed method with different anomaly discrimination modules.

The results in Table 5 show that the proposed method using the PDJD module achieved the best performance on three datasets and on the mean score, and was only slightly inferior to the distribution-discriminant method on the WHU-Hi-River dataset. The visualization results on the San Diego dataset are shown in Figure 9. It can be observed that, compared to the method using only global reconstruction probability information, the PDJD module suppresses background activation by measuring differences with the surroundings. Compared to the method using only local distribution difference information, the PDJD module significantly enhances the anomaly activation. Therefore, the proposed VJDNet can balance background suppression and anomaly enhancement and achieve good detection results on different datasets.

Figure 9.

Detection maps on San Diego dataset for the proposed method with different anomaly discrimination modules. (a) Probability. (b) Distribution. (c) PDJD.
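To make the "Distribution" score more concrete, the following sketch computes a Wasserstein distance between two latent distributions. It is written under assumptions that the text above does not fix: both distributions are taken to be Gaussians with diagonal covariance (the usual VAE parameterization), and the 2-Wasserstein distance is used, which then has the closed form $W_2^2 = \lVert \mu_a - \mu_b \rVert_2^2 + \lVert \sigma_a - \sigma_b \rVert_2^2$. The function name is hypothetical.

```python
import math


def w2_diag_gaussians(mu_a, sigma_a, mu_b, sigma_b):
    """2-Wasserstein distance between two diagonal Gaussians.

    mu_* are the latent means and sigma_* the latent standard deviations
    (not variances), each given as a sequence of equal length.
    """
    if not (len(mu_a) == len(sigma_a) == len(mu_b) == len(sigma_b)):
        raise ValueError("all inputs must have the same latent size")
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_a, mu_b))
    std_term = sum((a - b) ** 2 for a, b in zip(sigma_a, sigma_b))
    return math.sqrt(mean_term + std_term)
```

Under these assumptions, a central pixel whose latent mean and spread both match the background representation receives a score of zero, and the score grows as either the mean or the spread drifts away from the surroundings.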

4.6.2. Effectiveness of the Dynamical Sample Pair Generator

To verify the effectiveness of the dynamic generation procedure, we compare four combinations of training sample pairs: static anomalies with static surroundings, static anomalies with dynamic surroundings, dynamic anomalies with static surroundings, and dynamic anomalies with dynamic surroundings. For static anomalies, we converted 20 percent of samples into anomalies as preprocessing rather than doing so every iteration. For static surroundings, we used the mean of surrounding pixels instead of the sampling process. The experimental results of the four combinations are shown in Table 6. The WHU-Hi-River dataset is abbreviated as River.

Table 6.

AUROC scores for the proposed method with different sample pair generation procedures.

As seen from the results, although the static generation procedure can already perform effective detection, using the dynamic generation approach further improves the overall detection performance. On the San Diego and WHU-Hi-River datasets in particular, the dynamic generation of both anomalies and surroundings significantly improves the detection results. For the HYDICE dataset, the dynamic generation of anomalies does not improve the detection performance significantly. This may be because the HYDICE dataset has relatively complex backgrounds, while dynamic anomalies enhance the discriminability of the network, thus slightly increasing the probability of classifying background pixels as anomalies.
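The difference between static and dynamic anomaly generation can be sketched as follows. The sketch only tracks which samples are selected for conversion; the conversion itself is omitted, and all function names are hypothetical. The static variant fixes 20 percent of the samples once as preprocessing, whereas the dynamic variant redraws the decision for every sample in every epoch.

```python
import random


def static_anomaly_indices(n_samples, fraction, seed=0):
    """Pick a fixed subset once, before training starts."""
    rng = random.Random(seed)
    return set(rng.sample(range(n_samples), round(n_samples * fraction)))


def dynamic_anomaly_indices(indices, prob, rng):
    """Redraw the anomaly decision independently for every sample."""
    return {i for i in indices if rng.random() < prob}


def count_distinct_anomalies(n_samples, n_epochs, prob, dynamic, seed=0):
    """Count how many distinct samples are ever selected during training."""
    rng = random.Random(seed)
    fixed = static_anomaly_indices(n_samples, prob, seed)
    seen = set()
    for _ in range(n_epochs):
        if dynamic:
            chosen = dynamic_anomaly_indices(range(n_samples), prob, rng)
        else:
            chosen = fixed  # the same subset is reused in every epoch
        seen |= chosen
    return len(seen)
```

With 1000 samples and 10 epochs, the static variant only ever converts the same 200 samples, while the dynamic variant touches most of the training set over time, which is one plausible reading of why it exposes the network to more varied sample pairs.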

4.7. Parameter Analysis

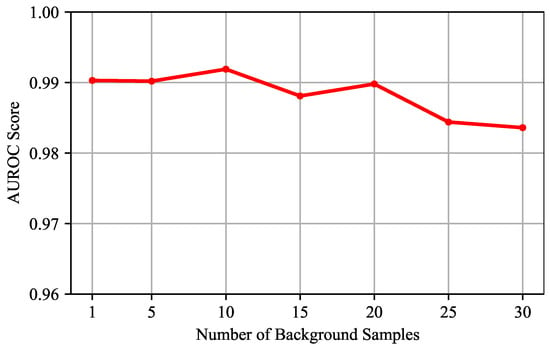

In this section, we conduct experiments on the hyperparameter settings in the VJDNet, including the number of background representation samples, the anomaly generation probability, and the balance parameter in Equation (17). When one hyperparameter is evaluated, the remaining hyperparameters follow the settings in Section 4.2.

- (1)

- Evaluation of the Number of Background Representation Samples: We conduct experiments with the number of background representation samples set to 1, 5, 10, 15, 20, 25, and 30. The averages of the results on the four datasets are shown in Figure 10. The results show that, when the number of samples is less than 20, the detection performance remains relatively stable. However, as the number grows beyond that, the AUROC score gradually decreases. This may be because, with more samples, the background representation is more likely to be close to the mean of the surrounding pixels, thereby reducing diversity and weakening the generalization of the network. When the number of samples is set to 10, the detection performance is the best, and the generalization and stability of the network are balanced.

Figure 10. AUROC scores of different numbers of background representation samples .

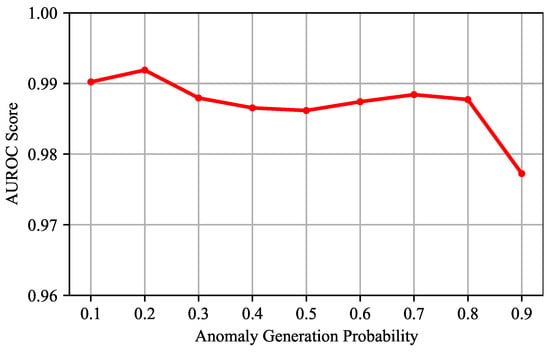

- (2)

- Evaluation of the Anomaly Generation Probability: We conduct experiments on different values of the anomaly generation probability in the range of [0.1, 0.9]. The averages of the results on the four datasets are shown in Figure 11. The results show that the detection performance is the best when the probability is 0.2. The VJDNet achieves good results over a range of values, but the detection performance declines when the probability reaches 0.9. It is worth noting that network training may become unstable when the probability is large. In this case, the detection performance may drop significantly as the number of training epochs increases. This is another reason why we chose a small anomaly generation probability.

Figure 11. AUROC scores of different anomaly generation probability values .

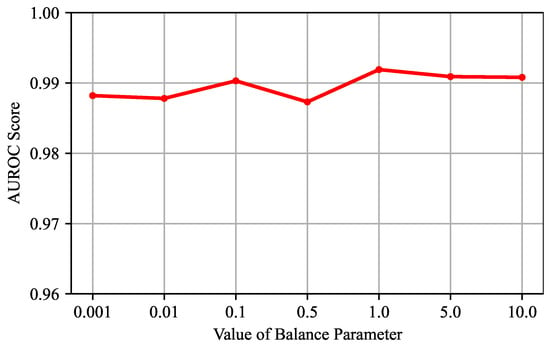

- (3)

- Evaluation of the Balance Parameter: We conduct experiments with the balance parameter set to 0.001, 0.01, 0.1, 0.5, 1, 5, and 10. The averages of the results on the four datasets are shown in Figure 12. The results show that the performance of the VJDNet is not sensitive to the value of the balance parameter. The detection performance is the best when the parameter is set to 1; therefore, we use this value in the experiments.

Figure 12. AUROC scores of different balance parameter values .

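The explanation given for the number of background representation samples can be checked with a small simulation. It rests on an assumption that this section does not confirm: the background representation is taken to be the average of the spectra drawn at random from the surrounding pixels. Under that assumption, drawing more samples pulls every representation toward the mean of the surroundings, so the spread across repeated draws shrinks. The function names and the single-band toy data are hypothetical.

```python
import random
import statistics


def background_representation(surrounding, k, rng):
    """Average k spectra drawn at random (with replacement)."""
    picks = [rng.choice(surrounding) for _ in range(k)]
    n_bands = len(picks[0])
    return [sum(p[b] for p in picks) / k for b in range(n_bands)]


def spread_of_representations(surrounding, k, trials=2000, seed=0):
    """Standard deviation of the first band over repeated draws."""
    rng = random.Random(seed)
    values = [
        background_representation(surrounding, k, rng)[0] for _ in range(trials)
    ]
    return statistics.pstdev(values)


if __name__ == "__main__":
    ring = [[float(i)] for i in range(40)]  # toy single-band surroundings
    for k in (1, 10, 30):
        print(k, round(spread_of_representations(ring, k), 2))
```

The spread falls roughly with the square root of the number of samples, which matches the intuition that a large value makes the dynamic surroundings behave almost like the static mean.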

5. Conclusions

To maintain the detection performance in unknown images, the existing cross-image HAD methods often require additional supervised information or network stacking to enhance representation capabilities. However, this may impose high demands on data or hardware in practical applications. To address these shortcomings, we proposed a simple and unsupervised lightweight cross-image HAD method named VJDNet. Leveraging the reconstruction and distribution representation capabilities of the VAE, the VJDNet combines global and local information to jointly learn the pixel’s reconstruction probability and its distribution difference from surrounding pixels, thereby effectively identifying anomalies in unknown hyperspectral images. In addition, a sample pair generation module was proposed to generate anomalous pixels and surrounding background representations suitable for cross-image HAD tasks, balancing stability and generalization. We verified the single-image detection and cross-image detection performance of the VJDNet. The experimental results show that the VJDNet is able to achieve good detection performance while containing only a small number of parameters. The VJDNet also has good scalability and is expected to further improve cross-image HAD performance via more advanced networks or generating more representative anomalies and background representations.

Author Contributions

Conceptualization, G.W. and L.J.; methodology, S.W.; software, J.G.; validation, J.G.; formal analysis, P.C. and X.C.; investigation, X.C.; data curation, P.C.; writing—original draft preparation, S.W.; writing—review and editing, X.Z.; supervision, X.Z.; project administration, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62276197, the Shaanxi Province Innovation Capability Support Plan under Grant 2023-CX-TD-09, the Natural Science Basic Research Program of Shaanxi (Program No. 2025JC-YBQN-795), and the Fundamental Research Funds for the Central Universities under Grant ZYTS25205.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HAD | Hyperspectral anomaly detection |
| AE | Autoencoder |
| VAE | Variational autoencoder |
| CNN | Convolutional neural network |
| PDJD | Probability distribution joint discrimination |
| MSE | Mean squared error |
| PCA | Principal component analysis |
| ROC | Receiver operating characteristic curve |
| AUROC | Area under the ROC curve |

References

- Hu, X.; Xie, C.; Fan, Z.; Duan, Q.; Zhang, D.; Jiang, L.; Wei, X.; Hong, D.; Li, G.; Zeng, X.; et al. Hyperspectral Anomaly Detection Using Deep Learning: A Review. Remote Sens. 2022, 14, 1973.

- Manolakis, D.; Shaw, G. Detection algorithms for hyperspectral imaging applications. IEEE Signal Process. Mag. 2002, 19, 29–43.

- Landgrebe, D. Hyperspectral image data analysis. IEEE Signal Process. Mag. 2002, 19, 17–28.

- Willett, R.M.; Duarte, M.F.; Davenport, M.A.; Baraniuk, R.G. Sparsity and Structure in Hyperspectral Imaging: Sensing, Reconstruction, and Target Detection. IEEE Signal Process. Mag. 2014, 31, 116–126.

- Lei, J.; Fang, S.; Xie, W.; Li, Y.; Chang, C.I. Discriminative Reconstruction for Hyperspectral Anomaly Detection with Spectral Learning. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7406–7417.

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Barbedo, J.G.A. A review on the combination of deep learning techniques with proximal hyperspectral images in agriculture. Comput. Electron. Agric. 2023, 210, 107920.

- Chalapathy, R.; Chawla, S. Deep Learning for Anomaly Detection: A Survey. arXiv 2019, arXiv:1901.03407.

- Cheng, X.; Zhang, M.; Lin, S.; Li, Y.; Wang, H. Deep Self-Representation Learning Framework for Hyperspectral Anomaly Detection. IEEE Trans. Instrum. Meas. 2024, 73, 1–16.

- Jiang, T.; Li, Y.; Xie, W.; Du, Q. Discriminative Reconstruction Constrained Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4666–4679.

- Matteoli, S.; Veracini, T.; Diani, M.; Corsini, G. Models and Methods for Automated Background Density Estimation in Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2837–2852.

- Matteoli, S.; Diani, M.; Theiler, J. An Overview of Background Modeling for Detection of Targets and Anomalies in Hyperspectral Remotely Sensed Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2317–2336.

- Chiang, S.S.; Chang, C.I.; Ginsberg, I. Unsupervised target detection in hyperspectral images using projection pursuit. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1380–1391.

- Reed, I.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Kwon, H.; Der, S.Z.; Nasrabadi, N.M. Adaptive anomaly detection using subspace separation for hyperspectral imagery. Opt. Eng. 2003, 42, 3342–3351.

- Kwon, H.; Nasrabadi, N. Kernel RX-algorithm: A nonlinear anomaly detector for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 388–397.

- Tao, R.; Zhao, X.; Li, W.; Li, H.C.; Du, Q. Hyperspectral Anomaly Detection by Fractional Fourier Entropy. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4920–4929.

- Geng, X.; Sun, K.; Ji, L.; Zhao, Y. A high-order statistical tensor based algorithm for anomaly detection in hyperspectral imagery. Sci. Rep. 2014, 4, 6869.

- Zhang, X.; Wen, G.; Dai, W. A Tensor Decomposition-Based Anomaly Detection Algorithm for Hyperspectral Image. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5801–5820.

- Qin, H.; Shen, Q.; Zeng, H.; Chen, Y.; Lu, G. Generalized Nonconvex Low-Rank Tensor Representation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- Li, W.; Du, Q. Collaborative Representation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1463–1474.

- Xu, Y.; Wu, Z.; Li, J.; Plaza, A.; Wei, Z. Anomaly Detection in Hyperspectral Images Based on Low-Rank and Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1990–2000.

- Zhang, X.; Ma, X.; Huyan, N.; Gu, J.; Tang, X.; Jiao, L. Spectral-Difference Low-Rank Representation Learning for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10364–10377.

- Huyan, N.; Zhang, X.; Zhou, H.; Jiao, L. Hyperspectral Anomaly Detection via Background and Potential Anomaly Dictionaries Construction. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2263–2276.

- Shen, X.; Liu, H.; Nie, J.; Zhou, X. Matrix Factorization with Framelet and Saliency Priors for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–13.

- Cheng, T.; Wang, B. Total Variation and Sparsity Regularized Decomposition Model with Union Dictionary for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1472–1486.

- Sun, S.; Liu, J.; Chen, X.; Li, W.; Li, H. Hyperspectral Anomaly Detection with Tensor Average Rank and Piecewise Smoothness Constraints. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8679–8692.

- Feng, M.; Chen, W.; Yang, Y.; Shu, Q.; Li, H.; Huang, Y. Hyperspectral Anomaly Detection Based on Tensor Ring Decomposition with Factors TV Regularization. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Ma, N.; Peng, Y.; Wang, S.; Leong, P.H.W. An Unsupervised Deep Hyperspectral Anomaly Detector. Sensors 2018, 18, 693.

- Li, W.; Wu, G.; Du, Q. Transferred Deep Learning for Anomaly Detection in Hyperspectral Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 597–601.

- Fu, X.; Jia, S.; Zhuang, L.; Xu, M.; Zhou, J.; Li, Q. Hyperspectral Anomaly Detection via Deep Plug-and-Play Denoising CNN Regularization. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9553–9568.

- Jiang, T.; Xie, W.; Li, Y.; Lei, J.; Du, Q. Weakly Supervised Discriminative Learning with Spectral Constrained Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6504–6517.

- Zhu, D.; Du, B.; Zhang, L. EDLAD: An Encoder-Decoder Long Short-Term Memory Network-Based Anomaly Detector for Hyperspectral Images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4412–4415.

- Bati, E.; Çalışkan, A.; Koz, A.; Alatan, A.A. Hyperspectral anomaly detection method based on auto-encoder. Proc. Image Signal Process. Remote. Sens. XXI 2015, 9643, 96430N.

- Cao, L.L.; Huang, W.B.; Sun, F.C. Building feature space of extreme learning machine with sparse denoising stacked-autoencoder. Neurocomputing 2016, 174, 60–71.

- Xia, Y.; Cao, X.; Wen, F.; Hua, G.; Sun, J. Learning Discriminative Reconstructions for Unsupervised Outlier Removal. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1511–1519.

- Fan, G.; Ma, Y.; Mei, X.; Fan, F.; Huang, J.; Ma, J. Hyperspectral Anomaly Detection with Robust Graph Autoencoders. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Huyan, N.; Zhang, X.; Quan, D.; Chanussot, J.; Jiao, L. Cluster-Memory Augmented Deep Autoencoder via Optimal Transportation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Wang, S.; Wang, X.; Zhang, L.; Zhong, Y. Auto-AD: Autonomous Hyperspectral Anomaly Detection Network Based on Fully Convolutional Autoencoder. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Gao, L.; Wang, D.; Zhuang, L.; Sun, X.; Huang, M.; Plaza, A. BS3LNet: A New Blind-Spot Self-Supervised Learning Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–18.

- Wang, D.; Zhuang, L.; Gao, L.; Sun, X.; Huang, M.; Plaza, A. BockNet: Blind-Block Reconstruction Network with a Guard Window for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Liu, H.; Su, X.; Shen, X.; Chen, L.; Zhou, X. BiGSeT: Binary Mask-Guided Separation Training for DNN-based Hyperspectral Anomaly Detection. arXiv 2023, arXiv:2307.07428.

- Liu, H.; Su, X.; Shen, X.; Zhou, X. MSNet: Self-Supervised Multiscale Network with Enhanced Separation Training for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

- Wu, Z.; Wang, B. Transformer-Based Autoencoder Framework for Nonlinear Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Liu, Y.; Jiang, K.; Xie, W.; Zhang, J.; Li, Y.; Fang, L. Hyperspectral anomaly detection with self-supervised anomaly prior. Neural Netw. 2025, 187, 107294.

- Chen, W.; Zhi, X.; Jiang, S.; Huang, Y.; Han, Q.; Zhang, W. DWSDiff: Dual-Window Spectral Diffusion for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–17.

- An, J.; Cho, S. Variational autoencoder based anomaly detection using reconstruction probability. Spec. Lect. IE 2015, 2, 1–18.

- Zhang, J.; Xu, Y.; Zhan, T.; Wu, Z.; Wei, Z. Anomaly Detection in Hyperspectral Image Using 3D-Convolutional Variational Autoencoder. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 12–16 July 2021; pp. 2512–2515.

- Wei, J.; Zhang, J.; Xu, Y.; Xu, L.; Wu, Z.; Wei, Z. Hyperspectral Anomaly Detection Based on Graph Regularized Variational Autoencoder. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Jiang, H. A Manifold Constrained Multi-Head Self-Attention Variational Autoencoder Method for Hyperspectral Anomaly Detection. In Proceedings of the 2021 International Conference on Electronic Information Technology and Smart Agriculture (ICEITSA), Huaihua, China, 10–12 December 2021; pp. 11–17.

- Yu, S.; Li, X.; Chen, S.; Zhao, L. Exploring the Intrinsic Probability Distribution for Hyperspectral Anomaly Detection. Remote Sens. 2022, 14, 441.

- Wang, Z.; Wang, X.; Tan, K.; Han, B.; Ding, J.; Liu, Z. Hyperspectral anomaly detection based on variational background inference and generative adversarial network. Pattern Recogn. 2023, 143, 109795.

- Huyan, N.; Zhang, X.; Quan, D.; Chanussot, J.; Jiao, L. AUD-Net: A Unified Deep Detector for Multiple Hyperspectral Image Anomaly Detection via Relation and Few-Shot Learning. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 6835–6849.

- Li, J.; Wang, X.; Wang, S.; Zhao, H.; Zhong, Y. One-Step Detection Paradigm for Hyperspectral Anomaly Detection via Spectral Deviation Relationship Learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Ma, J.; Xie, W.; Li, Y.; Fang, L. BSDM: Background Suppression Diffusion Model for Hyperspectral Anomaly Detection. arXiv 2023, arXiv:2307.09861.

- Li, Z.; Wang, Y.; Xiao, C.; Ling, Q.; Lin, Z.; An, W. You Only Train Once: Learning a General Anomaly Enhancement Network with Random Masks for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–18.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

- Zhang, Y.; Du, B.; Zhang, L.; Wang, S. A Low-Rank and Sparse Matrix Decomposition-Based Mahalanobis Distance Method for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1376–1389.

- Wang, S.; Wang, X.; Zhong, Y.; Zhang, L. Hyperspectral Anomaly Detection via Locally Enhanced Low-Rank Prior. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6995–7009.

- Kang, X.; Zhang, X.; Li, S.; Li, K.; Li, J.; Benediktsson, J.A. Hyperspectral Anomaly Detection with Attribute and Edge-Preserving Filters. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5600–5611.

- Liu, J.; Hou, Z.; Li, W.; Tao, R.; Orlando, D.; Li, H. Multipixel Anomaly Detection with Unknown Patterns for Hyperspectral Imagery. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 5557–5567.

- Lian, J.; Wang, L.; Sun, H.; Huang, H. GT-HAD: Gated Transformer for Hyperspectral Anomaly Detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 3631–3645.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).