Abstract

Aerial image object detection faces significant challenges due to notable scale variations, numerous small objects, complex backgrounds, illumination variability, motion blur, and densely overlapping objects, placing stringent demands on both accuracy and real-time performance. Although Transformer-based real-time detection methods have achieved remarkable performance by effectively modeling global context, they typically emphasize non-local feature interactions while insufficiently utilizing high-frequency local details, which are crucial for detecting small objects in aerial images. To address these limitations, we propose a novel VMC-DETR framework designed to enhance the extraction and utilization of high-frequency texture features in aerial images. Specifically, our approach integrates three innovative modules: (1) the VHeat C2f module, which employs a frequency-domain heat conduction mechanism to fine-tune feature representations and significantly enhance high-frequency detail extraction; (2) the Multi-scale Feature Aggregation and Distribution Module (MFADM), which utilizes large convolution kernels of different sizes to robustly capture effective high-frequency features; and (3) the Context Attention Guided Fusion Module (CAGFM), which ensures precise and effective fusion of high-frequency contextual information across scales, substantially improving the detection accuracy of small objects. Extensive experiments and ablation studies on three public aerial image datasets validate that our proposed VMC-DETR framework effectively balances accuracy and computational efficiency, consistently outperforming state-of-the-art methods.

1. Introduction

Aerial image object detection [1,2] plays a vital role in remote sensing, supporting various modern applications including drone-assisted detection, satellite remote sensing analysis, and smart city monitoring. It also significantly impacts critical areas like agricultural development [3,4], environmental monitoring [5,6], disaster assessment [7,8], and military reconnaissance [9,10], providing essential support for efficient and accurate remote sensing data analysis. However, aerial image detection inherently presents enormous challenges due to significant size variations in high-resolution imagery, abundant small objects, interference from complex backgrounds, illumination variability, motion blur, and dense overlaps among objects. These characteristics impose stringent requirements on both detection accuracy and real-time inference performance [11].

Compared with traditional object-detection methods relying on manual features or shallow learning models, deep learning methods have demonstrated remarkable superiority in modeling accuracy due to their powerful feature representation capabilities [1,12]. Among recent deep-learning techniques, Transformer-based approaches [13] have rapidly become research hotspots thanks to their outstanding performance in global feature modeling and capturing long-distance dependencies, and are commonly referred to as Detection Transformer (DETR) in the context of object detection. Compared with CNN-based methods, Transformer architectures can more effectively capture global context information, thus significantly improving accuracy in scenarios involving occlusions and small object detection. In particular, Zhao et al. introduced RT-DETR [14,15], demonstrating the possibility of achieving real-time detection with DETR architectures.

Although Transformer modules in DETR-based methods excel at modeling non-local dependencies, their detection performance degrades significantly in aerial remote sensing scenarios. This performance gap stems from two structural limitations: (1) the inadequate capacity of CNN-based backbones to extract fine-grained local features, leading to weak initial representations of small or densely packed objects; and (2) the restricted ability of existing feature aggregation and fusion strategies to preserve and enhance these details across scales, resulting in further information degradation during multi-level integration. These challenges are further exacerbated by the fact that most state-of-the-art DETR models are trained and evaluated on natural image datasets, which differ fundamentally from aerial imagery in object scale, texture distribution, and especially in the frequency domain.

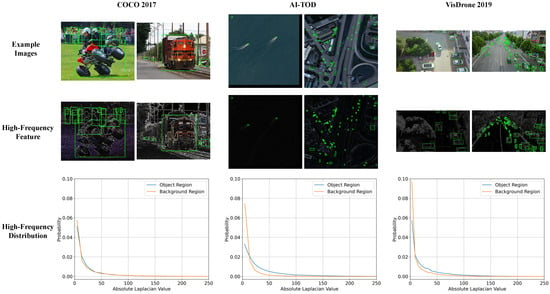

As illustrated in Figure 1, aerial images often contain numerous small, densely packed objects and exhibit concentrated high-frequency textures, whereas natural images (e.g., COCO val2017 [16]) feature more distinct object boundaries and smoother variations. The Laplacian-based frequency analysis highlights this discrepancy across datasets, including AI-TOD val [17] and VisDrone val2019 [18], confirming the unique high-frequency properties of aerial imagery. These characteristics expose real-time DETR frameworks to several practical challenges: (1) Existing studies have shown that conventional CNN-based feature extractors (e.g., ResNet [19], C3 [20], C2f [21]) tend to emphasize low-to-mid frequency components [22] and may introduce artifacts [23], hindering accurate localization of small targets. (2) The effectiveness of high-frequency extraction is highly sensitive to convolutional kernel sizes [24], and modules like ASFF [25], PAFPN [26], and BIFPN [27] fail to capture multi-scale fine details comprehensively. (3) Prior research has also indicated that simplistic fusion strategies (e.g., direct concatenation or linear weighting [28,29]) tend to mix high-frequency signals across scales indiscriminately, leading to feature aliasing and the loss of distinct texture cues critical for accurate modeling.

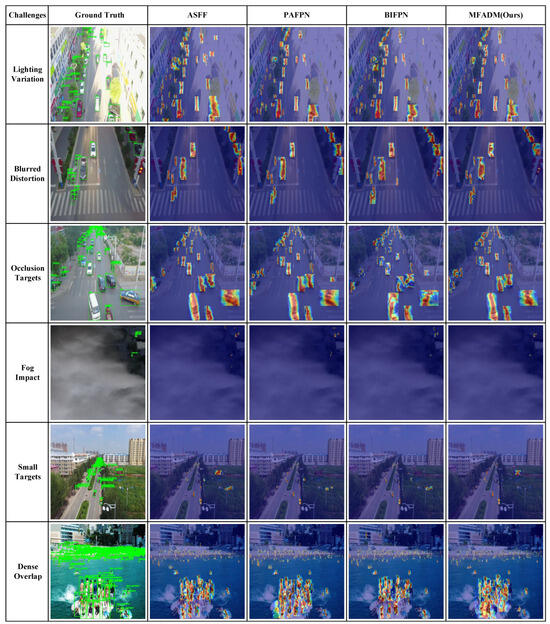

Figure 1.

Comparison of frequency component distributions in object and background regions between natural and remote sensing images. The object regions annotated in the ground truth labels are marked by green rectangular boxes. High-frequency features are extracted using the Laplacian operator. The high-frequency feature maps are obtained by extracting frequency-domain responses separately from the R, G, and B channels, where brighter colors indicate stronger high-frequency components. The frequency distribution plots are computed from grayscale images, and larger x-axis values correspond to higher-frequency features.
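The Laplacian-based analysis described in this caption can be approximated in a few lines. The sketch below is an illustration only, not the authors' analysis script: it assumes a 4-neighbour 3 × 3 Laplacian, edge-replicated borders, and a normalized histogram of absolute responses, and the function names `laplacian_response` and `high_freq_histogram` are hypothetical.

```python
import numpy as np


def laplacian_response(gray):
    """Absolute 4-neighbour Laplacian response of a 2-D grayscale image.

    Borders are handled by edge replication (an assumption of this sketch).
    """
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    # Sum of the four direct neighbours minus four times the centre pixel.
    lap = (
        g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
        - 4.0 * g[1:-1, 1:-1]
    )
    return np.abs(lap)


def high_freq_histogram(gray, bins=32):
    """Normalized histogram of Laplacian magnitudes.

    Larger bin positions correspond to stronger high-frequency content.
    """
    resp = laplacian_response(gray)
    upper = float(resp.max()) if resp.max() > 0 else 1.0
    hist, edges = np.histogram(resp, bins=bins, range=(0.0, upper))
    return hist / hist.sum(), edges


if __name__ == "__main__":
    # A smooth ramp (low frequency) versus a pixel-level checkerboard (high frequency).
    ramp = np.tile(np.arange(16, dtype=float), (16, 1))
    checker = (np.indices((16, 16)).sum(axis=0) % 2).astype(float)
    print("ramp mean response:   ", laplacian_response(ramp).mean())
    print("checker mean response:", laplacian_response(checker).mean())
```

For an RGB image, the same function could be applied to each channel separately to obtain per-channel maps, and to the grayscale image to obtain the distribution plot.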

These observations reveal a core scientific challenge: the mismatch in high-frequency feature distributions between natural and aerial images, coupled with the inherent architectural limitations of CNN-based frameworks, results in a performance bottleneck for existing real-time DETR models in aerial object detection. To address these critical limitations, this work proposes a novel framework, VMC-DETR, which is inspired by the recent RT-DETR architecture and advancements in lightweight module design [30,31,32]. It explicitly enhances the extraction, aggregation, and fusion of high-frequency texture information, and incorporates three carefully designed modules: the VHeat C2f Module, the Multi-scale Feature Aggregation and Distribution Module (MFADM), and the Context Attention-Guided Fusion Module (CAGFM). Specifically, the main contributions of this work are as follows:

- VHeat C2f, which introduces frequency-domain heat conduction into the backbone. It enhances local high-frequency detail extraction, solving the problem of blurred edges and weak features in small and densely packed objects.

- MFADM, which employs large and diverse depthwise convolutions for multi-scale feature aggregation. It selectively preserves informative high-frequency features of small objects across scales, balancing detail sensitivity and redundancy in aerial images.

- CAGFM, which employs a lightweight attention mechanism to integrate contextual information across scales. It refines the representation of small and overlapping targets, improving detection accuracy in complex aerial scenes.

- Extensive comparisons with state-of-the-art real-time DNN-based methods on benchmark remote sensing datasets AI-TOD, VisDrone-2019, and TinyPerson across small object-detection tasks demonstrate that the proposed VMC-DETR framework achieves outstanding detection performance while maintaining real-time inference speed.

2. Related Work

Relevant prior work includes one-stage and two-stage object-detection algorithms for aerial image object detection, as well as DETR-based algorithms.

2.1. CNN-Based One-Stage Object-Detection Methods

One-stage object-detection algorithms have shown high detection efficiency in aerial image object detection and have achieved a series of improvements in recent studies. For example, EL-YOLO [33] designs a sparsely connected progressive feature pyramid network and a cross-space learning multi-head attention mechanism based on YOLOv5 [20], which eliminates cross-layer interference during feature fusion and enhances contextual connections between object scales. It is deployed on an embedded platform, demonstrating the model’s real-time performance. FCOSR [34] introduces a novel label assignment strategy tailored to the characteristics of aerial images, significantly improving the detection of overlapping objects and enhancing the model’s robustness in complex scenes. Drone-TOOD [35] introduces an explicit visual center module in the neck network to capture local object information, boosting the model’s capability to detect dense objects and achieving outstanding performance in aerial image object detection in crowded scenes. SDSDet [36] proposes a neighborhood erasing module that optimizes the learning of multi-scale features and improves detection accuracy by preventing redundant gradient feedback between adjacent scales.

However, these one-stage methods usually focus on optimizing the multi-scale feature fusion strategy of the feature pyramid and improving the label assignment strategy. Despite these advances, they do not make full use of the feature extraction stage and fail to distinguish edge information between objects and backgrounds effectively. This weak foundation not only limits the object feature information that can be used in the subsequent feature fusion and detection stages but also allows excessive background information to interfere when fusing contextual feature information. As a result, they still struggle to flexibly and effectively handle high-density aerial images with complex backgrounds, and the detection results remain suboptimal. These limitations highlight the need to pave the way for subsequent feature fusion through more effective feature extraction and refinement of small object features, thus shaping our research direction for VMC-DETR to address the shortcomings of these aerial object-detection methods.

2.2. CNN-Based Two-Stage Object-Detection Methods

Two-stage object-detection algorithms have stronger feature extraction capabilities in aerial image object detection and have achieved a series of optimizations in recent studies. For example, TARDet [37] introduces a feature refinement module and an aligned convolution module, which are used to aggregate and enhance contextual information, respectively, achieving outstanding performance in the task of rotational object detection in aerial images with large-scale variations. AFOD [38] integrates spatial and channel attention modules into the Faster R-CNN network, enhancing the network’s ability to perceive object features in remote sensing images and improving detection accuracy in complex scenes. Another work [39] incorporates a dynamic detection head into the Oriented R-CNN framework, effectively addressing the problem of object occlusion in aerial images. MSA R-CNN [40] proposes an enhanced feature extraction method that optimizes the feature processing process and reduces information loss in the FPN, thereby improving the detection performance of multi-scale objects.

However, these two-stage methods typically come with high computational costs and time complexity, especially when processing high-resolution aerial images. This limits their real-time performance, making them less suitable for time-sensitive applications. While recent studies introduce modules that enhance feature extraction and contextual awareness, these improvements often increase computational demands. These issues highlight the need for detection methods to balance object feature richness and computational overhead, which leads us to design VMC-DETR as a lightweight yet effective aerial object detector.

2.3. Transformer-Based One-Stage Object-Detection Methods

DETR (DEtection TRansformer) and its variants are one-stage object detectors, as they directly predict objects from image features without relying on a region proposal stage. We therefore discuss them separately from the CNN-based methods above while treating them as one-stage methods. The DETR series of object-detection algorithms has the advantage of global information modeling in aerial image object detection and has made breakthroughs in many aspects in recent studies. For example, OVA-DETR [41], inspired by the concept of text alignment, introduces a region-text contrastive loss and a bidirectional vision-language fusion module to address the limitations of DETR models in open-world object detection. QETR [42] incorporates query alignment and a scale controller to enhance the ability of local queries to capture object information, thereby improving detection performance in aerial images. Another work [43] based on DINO designs a backbone network that combines CNN and ViT, leveraging the local feature extraction capabilities of CNNs and the global modeling strengths of ViTs, thus enhancing the network’s ability to extract both global and local features. AODet [44] first uses RoI to remove background areas that do not contain objects, then employs a Transformer to integrate the contextual information of the foreground regions, enabling effective detection in aerial images with sparse objects.

However, these DETR-based methods often struggle with slow convergence and poor handling of high-frequency details and multi-scale features, limiting their effectiveness in aerial images with densely occluded objects. Although recent work introduces improvements such as region-text contrastive loss, query alignment, and hybrid backbones combining CNN and ViT, these approaches still have difficulty processing fine-grained features across different scales efficiently. Additionally, their reliance on large-scale global modeling makes it challenging to detect small, overlapping objects in complex scenes. These limitations emphasize the need for VMC-DETR to not only make full use of information about objects of different scales in aerial images to more accurately detect objects with dense occlusions but also to improve the convergence speed of the detector for practical deployment.

3. Method

3.1. The Overall Architecture of VMC-DETR

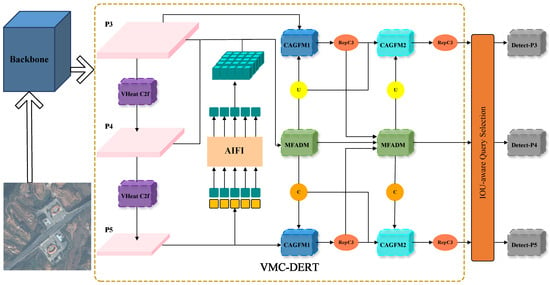

VMC-DETR is a one-stage object-detection framework. Its overall architecture is shown in Figure 2. In the feature extraction stage, VMC-DETR employs a C2f backbone network based on visual heat conduction operations [45] to refine the feature map. This backbone strengthens the model's ability to capture the high-frequency features that represent fine-grained details, improving its effectiveness in handling small-scale objects. After features are extracted from the input image, they are passed to the attention-based intrascale feature interaction (AIFI) module [14], which, as its name indicates, applies attention-based interaction among features within a single scale. This module enriches the contextual information passed on to the neck, thus providing robust feature representations that are essential for accurate detection.

Figure 2.

The overall architecture of the proposed VMC-DETR framework consists mainly of three modules: the VHeat C2f module, which is based on visual heat conduction; the MFADM, a multi-scale feature aggregation distribution module; and the CAGFM, a contextual attention guided fusion module, where CAGFM1 refers to the dual-branch CAGFM and CAGFM2 refers to the triple-branch CAGFM. The remaining modules include U, which handles upsampling operations; C, responsible for downsampling using ADown [46]; AIFI, an attention-based intrascale feature interaction module; and RepC3 [14], designed for reparameterization convolution.

In the neck network, VMC-DETR leverages two MFADM blocks. These modules operate at the P4 layer, aggregating and distributing features from the P3, P4, and P5 layers to improve multi-scale feature fusion. By using the MFADM modules, VMC-DETR is able to achieve efficient integration of features at different resolutions, which is crucial for detecting objects of varying sizes. Additionally, four CAGFM blocks replace standard feature fusion methods in the neck. These CAGFM modules use contextual attention mechanisms to guide the fusion of features, enhancing the model’s ability to leverage the rich contextual information present in the aerial images.

Finally, the VMC-DETR framework employs an IoU-aware query selection mechanism for label matching and detection. This process ensures that the final detection results are not only precise but also robust against overlapping objects and complex scenarios. By incorporating these advanced modules, VMC-DETR provides a powerful and efficient framework for aerial object detection, capable of handling both small-scale and large-scale objects with high accuracy.

3.2. Visual Heat Conduction C2f Module

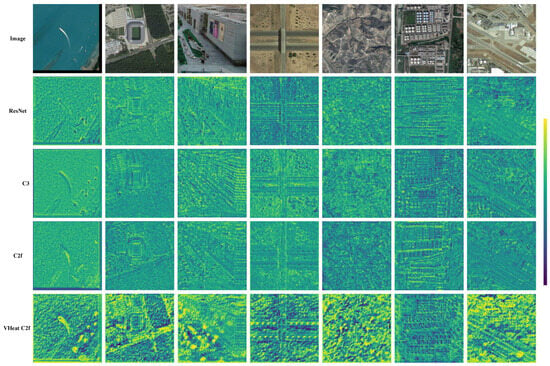

In the task of object detection in aerial images, traditional backbone networks exhibit notable limitations in extracting high-frequency features that convey critical detail cues. Figure 3 illustrates the feature maps of ResNet [19], C3 [20], C2f [21], and the proposed VHeat C2f backbone network across various scenarios. Observations indicate that ResNet performs relatively poorly in capturing the details of small objects in high-resolution aerial images, resulting in blurred object edges and details in the feature maps. C3 improves upon ResNet by better capturing edges and textures, but is more susceptible to interference in scenes with densely arranged or overlapping objects, leading to boundary confusion among objects. Compared with C3, C2f further enhances the distribution and capture of features, generating feature maps with more intricate details. However, in complex backgrounds, C2f has limited noise suppression capabilities and remains susceptible to background interference.

Figure 3.

Visual comparison of feature maps from traditional backbone networks (ResNet, C3, and C2f) and the proposed VHeat C2f backbone on aerial images from various categories and scenes. The colormap encodes the strength of feature responses, where yellow corresponds to high response values, often associated with salient texture or edge information, while dark green represents weaker responses. Variations in response strength allow for intuitive observation of how different backbone networks capture high-frequency features with varying levels of precision and accuracy.

These deficiencies can be partially attributed to the intrinsic properties of high-frequency features. Characterized by fine textures and sharp edges, high-frequency components are inherently difficult for CNNs to capture accurately, particularly in complex aerial scenes. Papyan et al. [47] demonstrated that CNNs can be interpreted as learning convolutional sparse-coded representations, implying that the receptive field size of convolutional kernels significantly influences their ability to extract high-frequency information. Lin et al. [22] further observed that in classification and detection tasks, convolutional kernels larger than 1 × 1 in ResNet tend to prioritize medium-to-low frequency texture learning. Consequently, the 1 × 1 convolutions employed in modules such as C3 and C2f can forcibly extract high-frequency features. However, Tomen et al. [24] pointed out that overly small kernels may introduce severe high-frequency artifacts, ultimately reducing the robustness of the extracted high-frequency representations. This observation is consistent with the phenomenon illustrated in Figure 3.

To address these challenges comprehensively, we introduce the VHeat [45] module to improve the robustness of the C2f backbone by fine-tuning features in the frequency domain [30,48], significantly improving its capacity to handle high-frequency features and manage the complexities associated with aerial image object-detection tasks during feature extraction.

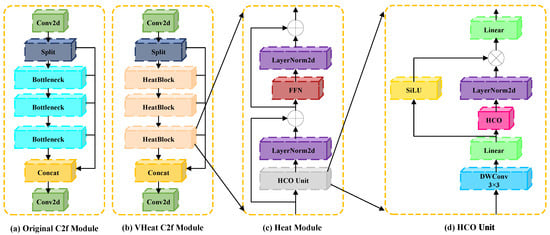

Figure 4 presents the detailed implementation of the proposed VHeat C2f module. In the original C2f module, the numbers of channels at P3, P4, and P5 are 256, 512, and 1024, respectively. The Bottleneck block, composed of simple convolutions, is replaced by the Heat block from the VHeat module, which enhances the backbone’s capability to extract and utilize high-frequency texture features that are critical for precise object detection.

Figure 4.

Detailed architecture of the VHeat C2f module used in the backbone network. (a) Original C2f module, which consists of a convolutional layer, a split-branch structure, and three sequential Bottleneck blocks followed by concatenation. (b) Modified VHeat C2f module, where Bottleneck blocks are replaced with HeatBlocks to enhance high-frequency feature learning. (c) Heat Module, which introduces frequency-domain processing with residual connections and normalization layers. (d) HCO Unit, which performs the heat conduction operation using discrete cosine transforms, simulating spatial-frequency energy propagation. Here, ⊗ denotes the Hadamard product and ⊕ represents element-wise addition. HCO in (d) stands for Heat Conduction Operation.

3.2.1. Heat Block

The Heat block is the core unit of the VHeat C2f module, consisting of a series of feature enhancement and information processing steps. It begins with a heat conduction operation, which takes the input feature map and the learnable frequency embeddings as inputs to simulate the physical heat diffusion process, dynamically extracting the spatial and frequency information of the feature map. This is followed by two layer-normalization operations, a feedforward layer, and two residual connections to further enhance the framework’s feature representation capabilities.

3.2.2. Heat Conduction Operator Unit

The Heat Conduction Operator (HCO) unit primarily simulates the heat conduction process. It performs a series of operations on the input features, including convolution, frequency mapping, and weighting, to achieve fine-grained feature adjustments. First, a depthwise convolution (DWConv [49]) is applied, followed by a linear layer, to extract the local spatial information from the feature map while retaining the channel features. Then, the feature map is split into two parts: one for the heat conduction operation and the other for frequency embedding. The HCO is grounded in the general solution of the heat equation, expressed in the spatial domain through the inverse Fourier transform ($\mathcal{F}^{-1}$), as shown in Equation (1).

$$u(x, y, t) = \mathcal{F}^{-1}\left(\tilde{f}(\omega_x, \omega_y)\, e^{-k(\omega_x^2 + \omega_y^2)t}\right) \tag{1}$$

Here, $\tilde{f}(\omega_x, \omega_y)$ is the Fourier transform of the initial temperature distribution $u(x, y, 0)$, and $(\omega_x, \omega_y)$ are the spatial frequencies.

The two-dimensional temperature distribution ($u(x, y, t)$) is extended to the channel dimension, with the input and output being $U(x, y, c, 0)$ and $U(x, y, c, t)$, respectively. Based on Equation (1), this concept is applied to the field of computer vision, and the resulting formula can be expressed as Equation (2).

$$U^{t} = \mathcal{F}^{-1}\left(\mathcal{F}(U^{0})\, e^{-k(\omega_x^2 + \omega_y^2)t}\right) \tag{2}$$

Among them, $U^{t}$ represents $U(x, y, c, t)$, and $U^{0}$ represents $U(x, y, c, 0)$. Since visual images are generally rectangular, HCO replaces the two-dimensional discrete Fourier transform (2D DFT) and the two-dimensional inverse discrete Fourier transform (2D IDFT) with the two-dimensional discrete cosine transform (2D DCT) and the two-dimensional inverse discrete cosine transform (2D IDCT), as shown below:

$$U^{t} = \mathrm{IDCT}_{2D}\left(\mathrm{DCT}_{2D}(U^{0})\, e^{-k(\omega_x^2 + \omega_y^2)t}\right) \tag{3}$$

Here, $\mathrm{DCT}_{2D}$ and $\mathrm{IDCT}_{2D}$ represent the discrete cosine transform and inverse discrete cosine transform, respectively. The HCO unit is similar to the self-attention mechanism in ViT [50], dynamically propagating energy to capture object features within the image. In HCO, the cosine weight matrix required for $\mathrm{DCT}_{2D}$ is first initialized to transform the feature map from the spatial domain to the frequency domain, together with the attenuation matrix that simulates the diffusion effect in the heat conduction process. In the frequency domain, HCO regards the object pixels in the image as heat sources according to the heat diffusion formula $e^{-k(\omega_x^2 + \omega_y^2)t}$, and weights the frequency components of the feature map to propagate information. Among them, the frequency value embeddings (FVEs) are a learnable shared parameter, similar to the absolute position embedding in ViT, which is used to adjust the frequency attenuation in heat conduction. The parameters k and t are both fixed hyperparameters, which are jointly controlled by FVEs and the output image resolution (1600 between P3 and P4 layers, 400 between P4 and P5 layers). k is used to control the attenuation rate of heat conduction, and t is used to simulate the time change during heat conduction.

HCO can be regarded as an adaptive filter. Representative objects, which correspond to high-frequency components, accumulate more energy and reach higher temperatures, whereas irrelevant objects and backgrounds, which correspond to low-frequency components, do the opposite. In addition, adjacent areas of the image have similar features, so HCO can continuously propagate heat-source information to enhance boundary and contour extraction in high-frequency regions and suppress irrelevant features in low-frequency regions. After the heat diffusion formula is applied, the transformed feature map is returned to the spatial domain through the inverse transform (2D IDCT). The time complexity of the HCO operation is $O(N^{1.5})$, where N is the number of input image patches. Because HCO’s frequency-domain filtering acts on all patches of the image at once rather than computing pairwise similarities between patches, it is less complex than the ViT self-attention mechanism, whose complexity is $O(N^{2})$. Finally, the feature map produced by HCO is fused with the frequency embedding feature map, and a nonlinear activation (SiLU) is applied to produce the final output.
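The core of the heat conduction operation (transform to the frequency domain with a cosine weight matrix, attenuate each frequency, transform back) can be sketched as follows. This is a minimal illustration under stated assumptions, not the VHeat implementation: `k` and `t` are plain scalars rather than values derived from the FVEs, the frequencies are assumed to be `pi * n / N`, and the DWConv, linear layer, frequency-embedding branch, and SiLU are omitted. The names `dct_matrix` and `heat_conduction` are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix C of shape (n, n): X = C @ x and x = C.T @ X."""
    freq = np.arange(n)[:, None]   # frequency index (rows)
    pos = np.arange(n)[None, :]    # spatial index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * pos + 1) * freq / (2 * n))
    c[0, :] /= np.sqrt(2.0)        # rescale the DC row so that C @ C.T = I
    return c


def heat_conduction(u0, k=1.0, t=1.0):
    """Apply IDCT2D(DCT2D(u0) * exp(-k * (wx^2 + wy^2) * t)) to a (C, H, W) array.

    k and t are scalars here (an assumption of this sketch).
    """
    _, h, w = u0.shape
    ch, cw = dct_matrix(h), dct_matrix(w)
    # Assumed discretization of the DCT basis frequencies.
    wy = np.pi * np.arange(h) / h
    wx = np.pi * np.arange(w) / w
    decay = np.exp(-k * t * (wy[:, None] ** 2 + wx[None, :] ** 2))
    # 2-D DCT as two matrix products, broadcast over the channel axis.
    spectrum = ch @ u0 @ cw.T
    # Attenuate each frequency, then return to the spatial domain.
    return ch.T @ (spectrum * decay) @ cw


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.standard_normal((3, 8, 12))
    out = heat_conduction(feat, k=0.5, t=2.0)
    print(out.shape)
```

With the transform written as matrix products, an H × W map with N = HW positions costs on the order of HW(H + W) multiplications per channel, which is N^1.5 for a square map and is in line with the complexity stated above.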

As the comparison in Figure 3 shows, the VHeat C2f module significantly improves the quality of high-frequency feature extraction and compensates for the shortcomings of the traditional backbone networks. Where ResNet blurs the details of small objects, VHeat C2f refines object-region features through HCO, generating clearer edges and richer textures that make small objects easier to distinguish. Where C3 confuses boundaries in high-density or overlapping object scenes, VHeat C2f uses energy propagation to sharpen the distinction between object boundaries and adapt to complex scenes. Where C2f struggles to suppress background noise, VHeat C2f filters it in the frequency domain, improving the overall quality of the feature maps. It does so with a lower time complexity of $O(N^{1.5})$, and its stronger adaptability and robustness allow it to better cope with the diverse needs of aerial image object-detection tasks.

3.3. Multi-Scale Feature Aggregation and Distribution Module

At present, many mainstream and well-established multi-scale feature fusion methods exhibit strong performance in natural image object-detection tasks but perform suboptimally when applied to aerial imagery. This performance gap is primarily due to the unique aerial perspective, where the extraction of abundant high-frequency cues is beneficial for detecting dense and tiny objects. However, modules such as VHeat C2f extract high-frequency features indiscriminately, and these redundant or irrelevant high-frequency components have been shown to compromise the robustness of detection networks [51,52], leading to a high rate of false positives. As shown in Figure 5, we visualize the heatmaps of ASFF [25], PAFPN [26], BIFPN [27], and the proposed MFADM under six key challenges in aerial object detection. It can be observed that the first three methods exhibit varying degrees of missed detections and false positives, indicating their limited effectiveness in handling such extreme aerial scenarios.

Figure 5.

Comparison of heatmap activations under typical challenges in aerial object detection using different multi-scale feature fusion methods, including ASFF, PAFPN, BIFPN, and the proposed MFADM. The colormap highlights feature response intensity, where red and yellow regions indicate strong attention and blue denotes weak response. The green bounding boxes in the Ground Truth column denote annotated object locations. Despite minor visual overlap, all target areas remain clearly identifiable and do not hinder scientific interpretation.

Specifically, both ASFF and BIFPN utilize weighted feature fusion mechanisms, which tend to overreact to high-frequency regions. For example, ASFF frequently misclassifies streetlights, traffic lights, noise artifacts, and trees as valid objects, while BIFPN often falsely detects streetlights, building windows, traffic lights, wall graffiti, road centerlines, and trees. Additionally, both methods tend to miss larger objects within densely populated scenes. This behavior proves beneficial when high-frequency information is limited, as the fusion mechanism can enhance detection accuracy. However, when paired with modules like VHeat C2f that extract abundant high-frequency features, this tendency leads to a surge in false positives.

In contrast, PAFPN, which does not adopt a weighted feature fusion strategy, demonstrates a lower false-detection rate. Nonetheless, it still occasionally misidentifies high-frequency regions such as wall textures, signal lights, streetlights, and trees as objects. This may be due to the use of relatively small convolutional kernels in PAFPN, which limits its ability to effectively filter high-frequency features across different levels [53].

To overcome the limitations of these classic multi-scale feature fusion approaches in aerial object detection, and to better leverage the spatial and semantic information extracted by the VHeat-enhanced C2f backbone, we propose the Multi-scale Feature Aggregation and Distribution Module (MFADM). This module integrates the strengths of both PAFPN and PKI Modules [31], leveraging large convolutional kernels of varying sizes to capture rich contextual information across hierarchical feature maps. Moreover, MFADM ensures precise and efficient propagation of high-frequency information at every detection scale. As a result, it significantly improves the accuracy of anchor box localization and object classification. The implementation details of MFADM are illustrated in Figure 6.

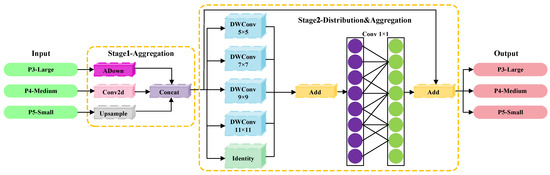

Figure 6.

The detailed process of the MFADM module: ADown denotes the downsampling module of YOLOv9, DWConv represents the depthwise convolution, and Identity refers to the identity mapping.

3.3.1. The First Feature Aggregation Stage

MFADM can process input features of different scales from P3, P4, and P5 using a customized feature aggregation module. For the 80 × 80 feature map of the P3 layer, we employ the ADown module from YOLOv9c and YOLOv9e within the YOLOv9 [46] family. This module integrates maximum pooling, average pooling, and standard convolution operations. Compared to ordinary 2D convolution downsampling, it retains more original information and provides more comprehensive object features for subsequent operations. For the 40 × 40 feature map of the P4 layer and the 20 × 20 feature map of the P5 layer, standard 2D convolution and upsampling are used to adjust the number of channels, which are then concatenated with the P3 layer along the channel dimension.
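The shape bookkeeping of this first aggregation stage can be sketched as follows. The code is a simplified stand-in rather than the actual MFADM: the learned convolutions (including those inside ADown and the channel-adjusting convolutions) are omitted, average and max pooling are applied to separate halves of the P3 channels as a rough ADown-like substitute, nearest-neighbour upsampling is assumed for P5, and the channel counts in the usage example are arbitrary. All function names are hypothetical.

```python
import numpy as np


def pool2x2(x, mode):
    """Non-overlapping 2x2 pooling of a (C, H, W) array with even H and W."""
    c, h, w = x.shape
    blocks = x.reshape(c, h // 2, 2, w // 2, 2)
    if mode == "avg":
        return blocks.mean(axis=(2, 4))
    return blocks.max(axis=(2, 4))


def downsample_p3(p3):
    """ADown-like stand-in: average-pool one half of the channels, max-pool the other."""
    half = p3.shape[0] // 2
    return np.concatenate(
        [pool2x2(p3[:half], "avg"), pool2x2(p3[half:], "max")], axis=0
    )


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def aggregate(p3, p4, p5):
    """Bring P3 and P5 to the P4 resolution and concatenate along channels."""
    return np.concatenate([downsample_p3(p3), p4, upsample2x(p5)], axis=0)


if __name__ == "__main__":
    p3 = np.zeros((4, 80, 80))    # 80 x 80 map, as at the P3 layer
    p4 = np.zeros((8, 40, 40))    # 40 x 40 map, as at the P4 layer
    p5 = np.zeros((16, 20, 20))   # 20 x 20 map, as at the P5 layer
    print(aggregate(p3, p4, p5).shape)
```

The only property this sketch is meant to show is that all three inputs end up on the 40 × 40 grid of the P4 layer before channel-wise concatenation.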

3.3.2. Feature Distribution and Second Aggregation Stage

After the first feature aggregation stage of MFADM, we introduce the PKI Module from PKINet to perform the feature distribution operation. The PKI Module employs depthwise convolutions of sizes 5 × 5, 7 × 7, 9 × 9, and 11 × 11 in parallel (the selection of kernel sizes will be discussed in Section 5.2.3), along with an identity mapping, to transform the global information of the extracted features into various forms of local information for distribution. A 1 × 1 convolution is then used for channel fusion. Finally, a residual connection is established with the feature information prior to distribution, allowing the network to retain both global and local feature information of objects at different scales across each layer. The complete formula for the DWConv module, including depthwise convolutions and the 1 × 1 convolution for channel fusion, is expressed as follows:

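$$Y = W_{1 \times 1} * \Big( X + \sum_{k \in \{5, 7, 9, 11\}} W_k * X \Big)$$

where $X$ is the aggregated input feature, $W_k$ represents the weights of the depthwise convolution with kernel size $k$, ∗ denotes the convolution operation, and $W_{1 \times 1}$ is the 1 × 1 convolution used for channel fusion.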
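To make the distribution step concrete, the following minimal pure-Python sketch applies parallel depthwise kernels plus an identity branch to a single channel. It rests on several assumptions that the text does not confirm: the branches are summed before fusion (as in PKINet), box filters stand in for learned kernels, and with a single channel the 1 × 1 fusion reduces to one scalar weight (`fuse_weight`, a hypothetical name).

```python
from typing import List, Sequence

Matrix = List[List[float]]


def depthwise_conv2d(x: Matrix, kernel: Matrix) -> Matrix:
    """Zero-padded 'same' 2D convolution of one channel with one odd-sized kernel."""
    k = len(kernel)
    pad = k // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    ii, jj = i + di - pad, j + dj - pad
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += kernel[di][dj] * x[ii][jj]
            out[i][j] = acc
    return out


def box_kernel(k: int) -> Matrix:
    # Placeholder for learned depthwise weights: a normalized k x k averaging filter.
    return [[1.0 / (k * k)] * k for _ in range(k)]


def multi_kernel_distribute(
    x: Matrix,
    kernel_sizes: Sequence[int] = (5, 7, 9, 11),
    fuse_weight: float = 1.0,
) -> Matrix:
    h, w = len(x), len(x[0])
    # Identity branch.
    mixed = [row[:] for row in x]
    # Parallel depthwise branches, summed with the identity branch (assumption).
    for k in kernel_sizes:
        branch = depthwise_conv2d(x, box_kernel(k))
        for i in range(h):
            for j in range(w):
                mixed[i][j] += branch[i][j]
    # Single-channel stand-in for the 1 x 1 fusion, then the residual connection.
    return [[fuse_weight * mixed[i][j] + x[i][j] for j in range(w)] for i in range(h)]


feature = [[1.0] * 13 for _ in range(13)]
distributed = multi_kernel_distribute(feature)
print(distributed[6][6], distributed[0][0])
```

On a constant input, interior positions see every kernel fully inside the map, while border positions respond more weakly because of zero padding; larger kernels are affected over a wider band.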

Finally, the heat map comparison in Figure 5 shows that MFADM addresses the shortcomings of the other methods. Under changing lighting, MFADM combines depthwise convolutions of different kernel sizes with identity mapping to prevent an excessive response at any single scale and to adaptively suppress interference caused by strong light. Under motion blur, MFADM retains more of the semantic information of the extracted object features during downsampling with ADown and combines it with the feature distribution performed by depthwise convolutions of different kernel sizes, which enhances the model’s ability to identify blurred objects. For densely overlapping objects, MFADM uses convolution kernels of different sizes to enlarge the receptive field and capture both the global and local features of dense objects. Its aggregation and distribution strategy dynamically adjusts the contribution of features at different layers, reducing background sensitivity.

3.4. Contextual Attention Guided Fusion Module

In aerial image object detection, feature fusion plays a critical role in enhancing the performance of multi-scale object detection. High-frequency features extracted from different scales carry rich information and are highly sensitive to spatial variations [54,55]. However, conventional feature fusion methods typically rely on simple concatenation or linear weighting operations. Such simplistic strategies often lead to the blending or compression of essential high-frequency cues, resulting in the loss of contextual information, redundancy, and insufficient exploitation of multi-scale representations. These issues adversely affect detection accuracy, especially for small objects.

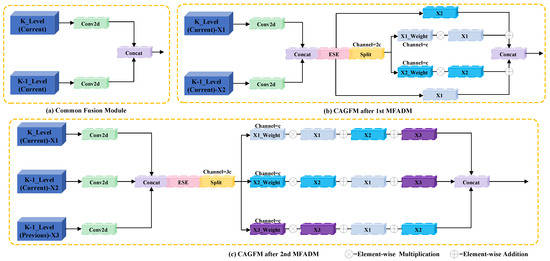

To address these issues, we propose the Context Attention Guided Fusion Module (CAGFM), as illustrated in Figure 7. The pseudocode of CAGFM for aerial image processing within the VMC-DETR framework is provided in Algorithm 1. By effectively preserving and integrating high-frequency contextual information, CAGFM significantly enhances the robustness and precision of multi-scale feature fusion.

Figure 7.

Specific details of the CAGFM module implementation: ESE stands for the effective squeeze-and-excitation attention mechanism. (a) Standard feature fusion operation, (b) dual-branch CAGFM, (c) triple-branch CAGFM. The inputs are feature maps from different scales or stages, and the weights applied to them are the channel-wise attention weights computed via the contextual attention mechanism (ESE [56]), which adjust the contribution of each feature map in the fusion process.

| Algorithm 1 Applying CAGFM for Aerial Image Processing in VMC-DETR Framework |
| --- |
| 1: Input: Aerial image dataset with images of size H × W, number of epochs N |
| 2: Output: Enhanced feature maps of the P3 and P5 layers after contextual attention guided fusion |
| 3: for each epoch from 1 to N do |
| 4: Load each image and extract feature maps at the P3, P4, and P5 scales, each with its own number of channels |
| 5: Step 1: Dual-Branch CAGFM on the P3 Layer |
| 6: if the numbers of channels of P3 and P4 do not match after the first MFADM then |
| 7: Adjust the channels of P3 and P4 to a common number via Conv |
| 8: end if |
| 9: Concatenate P3 and P4 along the channel dimension |
| 10: Apply ESE attention to the concatenated features, refining them by global mean and adaptive gating |
| 11: Split the attention output into weighted components for the P3 and P4 layers |
| 12: Fuse the weighted features |
| 13: Step 2: Dual-Branch CAGFM on the P5 Layer |
| 14: Repeat Step 1 for P5 and P4 to obtain the fused P5 features, focusing on larger object regions in complex scenes |
| 15: Step 3: Triple-Branch CAGFM on the P3 Layer |
| 16: if the numbers of channels of the three inputs (two taken after the second MFADM and one after the first MFADM) do not match then |
| 17: Adjust the channels of the three inputs to a common number via Conv |
| 18: end if |
| 19: Concatenate the three inputs along the channel dimension |
| 20: Apply ESE attention to the concatenated features, enhancing small object features by computing the channel mean and scaling |
| 21: Split the attention output into weighted components for the three inputs |
| 22: Fuse the weighted features |
| 23: Step 4: Triple-Branch CAGFM on the P5 Layer |
| 24: Repeat Step 3 for the P5 inputs to obtain the fused P5 features, focusing on broader contextual elements |
| 25: Store the enhanced feature maps for the current image |
| 26: end for |
| 27: return Enhanced feature maps for all dataset images used in the final detection stage |

3.4.1. Dual-Branch CAGFM

The dual-branch CAGFM is applied to the P5 and P3 layers, respectively, after the first MFADM module, where the corresponding features in these two layers are fused. For the P5 layer, small object detection in aerial images is a critical challenge. Since the P5 layer contains more small-scale features and has a higher number of channels, it is essential to fully utilize the features of this layer. Therefore, in the dual-branch CAGFM, we fuse the P5 and P4 layers during the current fusion stage. To ensure the complete transmission of information from the P5 layer, the number of channels in the P4 layer is first increased to match that of the P5 layer, aligning the feature dimensions required for the fusion operation. This strategy preserves rich multi-scale information and enhances the accuracy of small object detection. For the P3 layer, to strike a balance between detection accuracy and computational efficiency, the fusion process reduces the number of channels in the P4 feature map to match that of the P3 layer. In this manner, the dual-branch CAGFM effectively utilizes features of different scales during fusion while avoiding excessive computational overhead in the P3 layer, thereby optimizing the overall performance of the network.

Subsequently, the Effective Squeeze-and-Excitation (ESE [32]) mechanism is employed as an attention module to capture global contextual information from the input features. The core idea of ESE is to dynamically adjust the channel-wise importance of the feature map through a channel attention mechanism, thereby enhancing key channels and suppressing redundant ones. Specifically, the input feature map $X \in \mathbb{R}^{C \times H \times W}$ first undergoes a global average pooling operation $F_{gap}$, which compresses the spatial dimensions to $1 \times 1$ and retains only the channel-wise information:

$$z = F_{gap}(X) = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} X_{:, i, j}$$

The obtained channel vector $z$ is fed into a fully connected layer, whose weight is $W_{fc} \in \mathbb{R}^{C \times C}$, where $C$ is the size of the intermediate dimension. Subsequently, the normalized channel attention weight $s$ is generated by the Sigmoid activation function $\sigma$:

$$s = \sigma(W_{fc}\, z)$$

Finally, we perform the element-wise multiplication operation ⊗ on the generated channel attention weight $s$ and the original feature map $X$ to obtain the enhanced feature map $\tilde{X}$:

$$\tilde{X} = s \otimes X$$

This process not only preserves the semantic information between channels but also enhances the overall performance of the framework through dynamic weighting. Compared with the traditional squeeze-and-excitation (SE [56]) attention, ESE uses only one fully connected layer, which both reduces the loss of channel information and improves computational efficiency. After the ESE attention mechanism, the attention weights are split so that each layer can adaptively adjust the weight of its input feature map. Finally, after interactive fusion, the two feature maps are weighted according to this weight mapping, and the weighted features are added and concatenated to form a new output feature. For more details, please refer to the following section on the Triple-Branch CAGFM.
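The three ESE steps described above (global average pooling, a single fully connected layer, and Sigmoid gating) can be sketched in pure Python as follows. This is an illustrative sketch rather than the authors' implementation: feature maps are nested lists indexed as [channel][row][column], the `bias` term is an assumption (the text only mentions a weight), and the weights are supplied by hand instead of being learned.

```python
import math
from typing import List, Tuple

Tensor3 = List[List[List[float]]]  # indexed as [channel][row][column]


def global_avg_pool(x: Tensor3) -> List[float]:
    """Average each channel over its spatial positions."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def ese_attention(
    x: Tensor3, weight: List[List[float]], bias: List[float]
) -> Tuple[Tensor3, List[float]]:
    """Return the channel-reweighted feature map and the per-channel gates."""
    pooled = global_avg_pool(x)
    channels = len(pooled)
    # Single fully connected layer (C -> C) followed by a Sigmoid gate.
    gates = [
        sigmoid(sum(weight[o][i] * pooled[i] for i in range(channels)) + bias[o])
        for o in range(channels)
    ]
    # Broadcast each gate over the spatial positions of its channel.
    out = [[[gates[c] * v for v in row] for row in x[c]] for c in range(channels)]
    return out, gates


feature = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[2.0, 2.0], [2.0, 2.0]],  # channel 1
]
# Hand-picked weights for illustration only.
reweighted, gates = ese_attention(feature, [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0])
print(gates)
```

Because only one fully connected layer is used, the gate for each channel is a direct function of the pooled channel statistics, without the channel-reducing bottleneck of the original SE block.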

3.4.2. Triple-Branch CAGFM

A triple-branch CAGFM is deployed at both the P3 and P5 layers. Although the two modules do not share parameters, their inputs and outputs are represented using the same variables for notational convenience.

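Specifically, the triple-branch CAGFM integrates features from three different layers ($F_1$, $F_2$, and $F_3$). Rather than computing channel-wise attention weights separately for each layer, the CAGFM computes the attention jointly over the concatenation of all three feature maps, $\mathrm{Concat}(F_1, F_2, F_3)$, as formulated below:

$$A_i = \mathrm{Split}\big(\sigma\big(W \, F_{gap}(\mathrm{Concat}(F_1, F_2, F_3))\big),\ i\big), \quad i \in \{1, 2, 3\}$$

where $A_i$ denotes the attention weights for layer $i$, $W$ represents the learnable parameters, $F_{gap}$ is global average pooling, and $\sigma$ is the Sigmoid function. The function Split(·, i) extracts the portion of the concatenated attention vector that corresponds to $F_i$ based on its channel allocation.

Then, the weighted feature fusion across three scales is explicitly formulated as follows:

$$\hat{F}_i = A_i \otimes F_i$$

where $\hat{F}_i$ is the fused feature at the current object scale $i$.

Finally, the three enhanced features are concatenated to form $F_{cat}$, which is then processed by the RepC3 module before being passed to the detection head:

$$F_{cat} = \mathrm{Concat}(\hat{F}_1, \hat{F}_2, \hat{F}_3), \qquad F_{out} = \mathrm{RepC3}(F_{cat})$$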
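The joint-attention idea of the triple-branch CAGFM (concatenate, compute one attention vector, split it by channel allocation, reweight each branch, and concatenate again) can be sketched as below. This is a simplified reading under stated assumptions: the branches already share a spatial size, fusion is plain channel-wise reweighting, the `bias` term and function names are hypothetical, and the RepC3 stage is omitted.

```python
import math
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # indexed as [channel][row][column]


def channel_gates(x: Tensor3, weight: List[List[float]], bias: List[float]) -> List[float]:
    """ESE-style gates: global average pooling, one linear layer, Sigmoid."""
    means = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    return [
        1.0 / (1.0 + math.exp(-(sum(w * m for w, m in zip(weight[o], means)) + bias[o])))
        for o in range(len(means))
    ]


def cagfm_fuse(
    features: Sequence[Tensor3], weight: List[List[float]], bias: List[float]
) -> Tensor3:
    sizes = {(len(f[0]), len(f[0][0])) for f in features}
    if len(sizes) != 1:
        raise ValueError("all branches must share the same spatial size")
    # 1) Concatenate every branch along the channel dimension.
    stacked = [ch for f in features for ch in f]
    # 2) Compute one attention vector jointly over all channels.
    gates = channel_gates(stacked, weight, bias)
    # 3) Split the gates by each branch's channel allocation and reweight it.
    fused: Tensor3 = []
    start = 0
    for f in features:
        branch_gates = gates[start:start + len(f)]
        start += len(f)
        fused.extend(
            [[g * v for v in row] for row in ch] for g, ch in zip(branch_gates, f)
        )
    # 4) The reweighted branches stay concatenated for the next stage.
    return fused


branch_a = [[[1.0, 1.0]]]                # 1 channel
branch_b = [[[2.0, 2.0]], [[4.0, 4.0]]]  # 2 channels
branch_c = [[[8.0, 8.0]]]                # 1 channel
zero_weight = [[0.0] * 4 for _ in range(4)]
fused = cagfm_fuse([branch_a, branch_b, branch_c], zero_weight, [0.0] * 4)
print(len(fused), fused[3][0])
```

Computing the gates over the concatenated tensor lets the weight given to one branch depend on the statistics of the other branches, which is the difference from applying attention to each branch in isolation.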

4. Experiments and Results

To validate the effectiveness of the proposed VMC-DETR framework, we adopt RT-DETR with a ResNet-18 backbone (RT-DETR-R18 [14]) as the baseline. This framework employs the same AIFI and RepC3 modules in the neck and maintains a comparable level of computational complexity. We conduct both comparative and ablation experiments on three aerial image datasets—AI-TOD, VisDrone-2019, and TinyPerson—and visualize representative detection results to facilitate comprehensive analysis.

4.1. Datasets

The first dataset we use is the AI-TOD [17] dataset, which was proposed by Wuhan University in 2020. It is an aerial image dataset, where 87.7% of the objects are smaller than 32 × 32 pixels. The mean and standard deviation of the absolute size are 12.8 pixels and 5.9 pixels, respectively. These values are much smaller than those found in other natural image and aerial image datasets. The AI-TOD dataset contains eight categories, namely airplanes (Air), bridges (Bri), persons (Per), ships (Shi), storage-tanks (Sto), swimming pools (Swi), vehicles (Veh), and windmills (Win). The dataset consists of images with 800 × 800 pixels and contains 700,621 labeled objects. It is split into 11,214 images for training and 2804 images for testing.

The second dataset we use is the VisDrone-2019 [18] dataset, which is released by the AISKYEYE team at Tianjin University. This dataset is designed for object detection in UAV-captured images of diverse remote sensing scenes, including urban areas, residential regions, and rural environments. The VisDrone-2019 dataset presents challenges such as dense object distribution, scale variation, and complex backgrounds. The dataset contains ten categories: awning-tricycle (Awn), bicycle (Bic), bus (Bus), car (Car), motorcycle (Mot), pedestrian (Ped), people (Peo), tricycle (Tri), truck (Tru), and van (Van). In total, the dataset consists of 8629 images with a variety of weather conditions, lighting, and viewpoints. It is divided into three subsets: 6471 images for training, 548 images for validation, and 1610 images for testing.

The third dataset we use is the TinyPerson [57] dataset, proposed by the University of the Chinese Academy of Sciences in 2019. This dataset is constructed using high-resolution aerial images, originally gathered from various websites. The researchers extract frames from videos captured at different seaside locations at 50-frame intervals, removing duplicates to compile a diverse collection of aerial scenes. The TinyPerson dataset is characterized by very small human objects, significant aspect ratio variations, and dense distributions, all within complex seaside environments. It includes two categories: people on the sea (Sp), such as swimmers or surfers, and people on earth (Ep), including beachgoers. In total, the dataset comprises 1610 images and 72,651 labeled instances of people. For our experiments, we use 794 images for training and 816 images for testing.

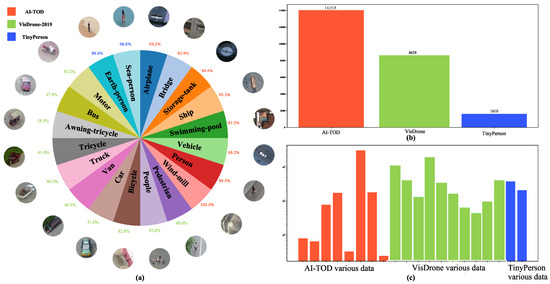

Figure 8 summarizes the detailed statistics of the three datasets, including object categories, the number of images, instance counts, and other relevant attributes. In addition, following the MS COCO [16] definition of small objects (i.e., objects smaller than 32 × 32 pixels), we compute the proportion of small objects within each category across the three datasets. This information is also shown in Figure 8, enabling a more intuitive analysis, in the subsequent experiments, of the VMC-DETR framework’s detection performance on small objects in aerial images.
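As a small illustration of the small-object statistic, the sketch below counts boxes whose area is below 32 × 32 pixels, which is how the MS COCO evaluation defines small objects. The function name and the (width, height) box format are assumptions chosen for the example, not the authors' tooling.

```python
from typing import Iterable, Tuple


def small_object_ratio(boxes: Iterable[Tuple[float, float]], max_side: int = 32) -> float:
    """Fraction of (width, height) boxes whose area is below max_side squared."""
    boxes = list(boxes)
    if not boxes:
        return 0.0
    threshold = max_side * max_side
    small = sum(1 for width, height in boxes if width * height < threshold)
    return small / len(boxes)


# Hypothetical boxes for one category: areas 100, 1600, 960, and 1023 pixels.
example_boxes = [(10, 10), (40, 40), (16, 60), (31, 33)]
print(small_object_ratio(example_boxes))
```

Note that the criterion is area-based, so an elongated box such as 16 × 60 still counts as small even though one side exceeds 32 pixels.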

Figure 8.

(a) Object category names, image examples, and the proportion of small objects across the three datasets. (b) The number of images in each of the three datasets. (c) The number of instances for each object category across the three datasets.

4.2. Experimental Setup

The hardware configuration of our experimental environment includes an Intel(R) Xeon(R) CPU E5-2682 v4 @ 2.50 GHz 64-core processor and a single NVIDIA GeForce RTX 3090 GPU with 24 GB of video memory. The software environment includes Ubuntu 20.04.3 LTS, Python 3.8.16, and PyTorch 1.13.1. Table 1 and Table 2 provide detailed information on the hyperparameter configuration and data augmentation techniques used throughout the experimental process.

Table 1.

The hyperparameters and their corresponding values used in the experiments. Adam denotes the adaptive moment estimation optimizer, and IoU represents intersection over union.

Table 2.

Data augmentation techniques and their application ratios used in the experiment.

To evaluate the performance of the framework, we use precision (Pre), recall (Rec), mean average precision (mAP), frames per second (FPS), giga floating-point operations (GFLOPs), inference time per image (IT), and model memory usage (MU) as evaluation metrics. The formulas for the first three evaluation metrics are as follows:

$$\mathrm{Pre} = \frac{TP}{TP + FP}, \qquad \mathrm{Rec} = \frac{TP}{TP + FN}, \qquad \mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $TP$, $FP$, and $FN$ represent the numbers of true positives, false positives, and false negatives, respectively; $N$ denotes the total number of object categories; and $AP_i$ is the average precision for the i-th category, computed as follows:

$$AP_i = \int_0^1 P_i(r)\, dr$$

where $P_i(r)$ denotes the precision-recall curve for class i as a function of recall r.
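A minimal sketch of these three metrics is given below. It assumes that each detection has already been matched against the ground truth at a fixed IoU threshold, and it integrates the precision-recall curve with all-point interpolation; the evaluation toolkits used in the experiments may use a different interpolation scheme (for example, COCO-style sampled recall points), so the numbers are illustrative only.

```python
from typing import List, Sequence, Tuple


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(detections: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """Area under the precision-recall curve for one class.

    detections: (confidence, is_true_positive) pairs, already matched to ground truth.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0 or not detections:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing in recall (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles under the envelope.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


# Hypothetical class with two ground-truth objects and three ranked detections.
ap_example = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
print(precision(8, 2), recall(8, 8), ap_example)
```

mAP50 corresponds to running this computation with matches decided at an IoU threshold of 0.5, while mAP50:95 averages the result over IoU thresholds from 0.5 to 0.95.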

4.3. Quantitative Evaluation of Detection Results

We compare against mainstream CNN-based and Transformer-based object detection algorithms from the past three years, including the DDOD, TOOD, DAB-DETR, DINO, RTMDet, LD, ConvNeXt, Gold-YOLO, YOLOv9, and YOLOv10 detection frameworks. Specifically, we employ the “Medium” models from the YOLO series and other models with ResNet-50 as the backbone network to conduct comparative experiments on three aerial image datasets. Several recent methods such as RingMoE [11], QETR [58], and OVA-DETR [59] focus on different problem settings, including multi-modal fusion, open-vocabulary detection, and large-scale pretraining, and are therefore not directly comparable to our work, which emphasizes single-modality input, lightweight architecture, and real-time performance. We exclude them from our comparative experiments, while still acknowledging their contributions in Section 1 and Section 2.

Table 3 presents the results of our comparative experiments on the AI-TOD aerial image dataset. The proposed VMC-DETR framework achieves the best overall performance, with mAP50 and mAP50:95 scores of 45.6% and 19.3%, respectively. Compared to other mainstream detection frameworks such as DDOD, TOOD, and ConvNeXt, VMC-DETR significantly outperforms them—for instance, achieving gains of 11.4%, 17.4%, and 20.1% in mAP50, respectively. Furthermore, VMC-DETR obtains the highest accuracy in five of the eight object categories and demonstrates superior capability in detecting densely overlapping targets such as “Veh” (69.7%) and “Sto” (79.3%). This performance is largely attributed to the proposed VHeat C2f module, which draws inspiration from the heat conduction mechanism to refine feature maps and enhance object-level feature representation. As a result, VMC-DETR is better equipped to distinguish between foreground and background in complex aerial scenes, outperforming other frameworks in the feature extraction stage.

Table 3.

Comparison experiments with current mainstream methods on the AI-TOD dataset. Each object category and mAP are expressed as percentages (%), IT represents the inference time for a single image (ms), and MU denotes peak memory usage during model runtime (MB). Bold numbers indicate the best results among all compared methods.

In terms of computational efficiency and real-time processing, VMC-DETR ranks fourth in both FPS and GFLOPs, with a single image processing time of 9.2 ms. Although it slightly lags behind the YOLO series models, it outperforms other CNN and Transformer-based models, demonstrating excellent detection accuracy while maintaining high computational efficiency and real-time processing capabilities. This meets the real-time performance requirements of one-stage object detection algorithms. Additionally, the VMC-DETR framework uses 302 MB of memory, making it compatible with the memory constraints of most edge computing devices and positioning it as a lightweight model.

Table 4 presents the results of our comparative experiments on the VisDrone-2019 drone dataset. Our proposed VMC-DETR framework achieves the best performance, with mAP50 and mAP50:95 scores of 45.9% and 27.9%, respectively. Compared to the YOLOv9 and YOLOv10 medium models proposed in 2024, the VMC-DETR framework is higher by 3.9% in mAP50 and by 2.7% and 2.6% in mAP50:95, respectively. Notably, it demonstrates the highest accuracy not only for small objects like “Peo” (47.9%) but also for large objects such as “Van” (49.9%), while maintaining strong performance across other categories. We attribute this outcome to the integration of MFADM in the neck network. By aggregating and distributing multi-scale features across the P3, P4, and P5 layers, MFADM enhances the VMC-DETR framework’s detection accuracy for objects of various sizes, enabling it to consistently outperform other mainstream methods in complex aerial imagery.

Table 4.

Comparison experiments with current mainstream methods on the VisDrone-2019 dataset. All values are expressed as percentages (%). Bold numbers indicate the best results for each metric across all methods.

Table 5 presents the results of our comparative experiments on the TinyPerson dataset. Our proposed VMC-DETR framework achieves the best performance, with mAP50 and mAP50:95 scores of 25.4% and 7.5%, respectively, surpassing the best Transformer-based method, DINO, by 1.1% and 0.7%. Additionally, the VMC-DETR framework achieves the highest accuracy in the categories “Ep” (19.3%) and “Sp” (31.5%), demonstrating its effectiveness in handling tiny objects in aerial images. We attribute this performance to the integration of the CAGFM. By utilizing an optimized feature fusion strategy and attention mechanism, CAGFM guides and enhances the fused features, allowing the framework to fully leverage the contextual information in the image. This capability improves the detection accuracy of small objects in aerial images.

Table 5.

Comparison experiments with current mainstream methods on the TinyPerson dataset. All values are expressed as percentages (%). Bold numbers indicate the best performance for each metric across all methods.

On all three datasets, VMC-DETR exhibits a strong trade-off between detection accuracy, speed, and memory footprint. It maintains top-tier performance against both anchor-based and anchor-free models, including Transformer-based (e.g., DAB-DETR, DINO) and CNN-based one-stage (e.g., RTMDet, YOLOv9-M, YOLOv10-M) detectors, highlighting its generalization ability under varied aerial scenarios.

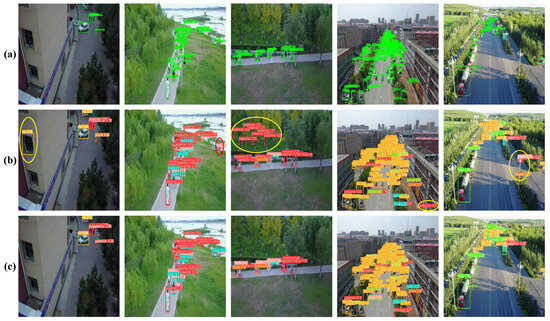

4.4. Visualization of Detection Results

Figure 9 shows the visualization results of all comparison methods on the AI-TOD dataset. For clarity, detection errors are highlighted using red and yellow circles. Red circles indicate missed detections, where actual objects in the scene are not detected, leading to false negatives. Yellow circles, on the other hand, indicate false detections, where the model identifies objects that do not exist in the scene, resulting in false positives.

Figure 9.

Visualization of all comparison methods on the AI-TOD dataset. (a) shows the ground truth bounding boxes. (b–h) are detection results visualized using MMDetection version 3.3.0, while (i–l) are generated using Ultralytics version 8.0.201. Red circles highlight missed detections, and yellow circles mark false positive detections.

As shown in Figure 9, our framework (l) produces results that are nearly identical to the ground truth (a), demonstrating the high accuracy and stability of our approach in object detection in aerial images. In contrast, other methods (shown in (b) to (k)) exhibit varying degrees of error. Notably, the image is taken at night, where objects are densely distributed and overlapping, and all object instances are small, factors that pose significant challenges in aerial object detection. Our method addresses these issues. Specifically, VHeat C2f enhances the feature extraction capability, enabling clearer distinction between objects and backgrounds under low-light conditions. MFADM expands the receptive field through multi-scale depthwise convolution kernels to capture dense object regions, while CAGFM enhances the weight of small objects through contextual attention and adaptive fusion. As a result, the VMC-DETR framework reduces both false detections and missed detections, offering more reliable and robust detection performance compared to other advanced frameworks.

Figure 10 presents the visualization results on the VisDrone-2019 dataset, comparing our framework (c) with the baseline (b). Similar to before, red circles indicate missed detections, and yellow circles represent false positives. In this dataset, baseline methods often miss multiple objects or generate false detections in crowded urban scenes, especially along roadsides where occlusions from trees, vehicles, and buildings are frequent. In contrast, our framework accurately detects both large and small vehicles as well as partially occluded pedestrians and cyclists. This improvement is largely due to the MFADM module, which incorporates the ADown structure to enhance the model’s capacity to distinguish objects in complex spatial hierarchies. These visual results further confirm the robustness of VMC-DETR in dense urban environments with challenging occlusion and clutter.

Figure 10.

Comparison of visualization effects with baseline methods on the VisDrone-2019 dataset, implemented using Ultralytics version 8.0.201. (a) shows the ground truth annotations, (b) presents detection results from the baseline model, and (c) displays the results of our proposed method. Yellow circles highlight missed detections or false classifications made by the baseline method.

5. Analysis and Discussion

5.1. Module Contribution Analysis of VMC-DETR

To verify the contribution of each module in the VMC-DETR framework, we conduct an ablation study on three datasets: AI-TOD, VisDrone-2019, and TinyPerson. The modules involved include VHeat C2f, MFADM, and CAGFM. Below, we provide a detailed analysis of the results.

5.1.1. Effectiveness Validation on AI-TOD Dataset

The results in Table 6 show that the VMC-DETR framework achieves a significant performance improvement on the AI-TOD dataset, with final mAP50 and mAP50:95 scores of 45.6% and 19.3%, respectively, compared to 40.7% and 16.8% for the baseline model. Introducing the VHeat C2f module alone raises mAP50 from 40.7% to 42.2% and Rec from 42.0% to 44.1%. This result demonstrates the effectiveness of VHeat C2f in addressing the challenges posed by varying object scales and motion blur in the AI-TOD dataset. Through a heat conduction mechanism, VHeat C2f enables precise spatial adjustments in the feature map, enhancing the model’s ability to distinguish objects at different scales and capture finer details in blurred conditions.

Table 6.

Ablation experiment results on the AI-TOD dataset. All values are expressed as percentages (%). Bold numbers indicate the best performance for each metric across all variants.

Further combining VHeat C2f with CAGFM or MFADM yields additional performance gains. For instance, the combination of VHeat C2f and CAGFM achieves a Pre of 61.3% and an mAP50 of 42.5%, highlighting the ability of the contextual attention mechanism to fuse key information in complex backgrounds. In the AI-TOD dataset, background regions greatly outnumber object regions. CAGFM focuses on the object area through contextual clues, identifies the object location, and filters out background noise. In addition, the multi-scale feature fusion and distribution mechanism of MFADM enables the model to handle changes in object details caused by varying fog conditions, improving the VMC-DETR framework’s ability to resist light interference. Together, the modules in the VMC-DETR framework enhance the model’s robustness in tackling diverse challenges within the AI-TOD dataset.

5.1.2. Effectiveness Validation on VisDrone-2019 Dataset

The results in Table 7 indicate that the VMC-DETR framework performs exceptionally well on the VisDrone-2019 dataset, achieving final mAP50 and mAP50:95 scores of 45.9% and 27.9%, respectively, significantly surpassing the baseline model’s scores of 39.6% and 23.7%. Introducing the MFADM alone raises Rec from 37.9% to 38.2% and mAP50 from 39.6% to 40.0%. This demonstrates MFADM’s advantage in handling object overlap and occlusion issues in the VisDrone-2019 dataset. Through multi-scale feature aggregation and distribution, MFADM enables flexible feature allocation across scales, enhancing the model’s ability to distinguish densely packed and occluded objects.

Table 7.

Ablation experiment results on the VisDrone-2019 dataset. All values are expressed as percentages (%). Bold numbers indicate the best performance for each metric across all ablation variants.

Combining MFADM with VHeat C2f or CAGFM further boosts model performance. For example, the combination of MFADM and CAGFM achieves an mAP50 of 42.9%, showcasing the synergy between contextual attention and multi-scale feature fusion. In the VisDrone-2019 dataset, small objects are densely packed, and some images are significantly affected by lighting conditions. CAGFM improves the model’s ability to locate small objects in complex lighting environments by focusing on the contextual information around the small objects. Additionally, the VHeat C2f module enhances the model’s detail recognition when addressing lighting variations and dense distributions. With all three modules combined, the VMC-DETR framework demonstrates robust detection performance, effectively tackling the challenges of density, occlusion, and lighting variations.

5.1.3. Effectiveness Validation on TinyPerson Dataset

The results in Table 8 show that the VMC-DETR framework achieves a significant performance improvement on the TinyPerson dataset, with final mAP50 and mAP50:95 scores of 25.4% and 7.6%, respectively, representing a clear improvement over the baseline model’s scores of 22.0% and 6.6%. Using the CAGFM module alone increases Pre from 37.6% to 39.4% and mAP50 from 22.0% to 23.2%. This result highlights CAGFM’s ability to fuse information effectively in the complex scenes of the TinyPerson dataset. By leveraging contextual attention, CAGFM enhances the model’s capacity to perceive and fuse the features of small and overlapping objects, allowing for more accurate object recognition within aerial images.

Table 8.

Ablation experiment results on the TinyPerson dataset. All values are expressed as percentages (%). Bold numbers indicate the best performance in each column.

Further combining CAGFM with VHeat C2f or MFADM leads to additional performance gains. For example, the combination of CAGFM and VHeat C2f achieves an mAP50 of 24.4%, demonstrating the synergy between fine-grained feature extraction and contextual attention. In the TinyPerson dataset, small objects are often densely packed within complex backgrounds. The VHeat C2f module, through its heat conduction mechanism, effectively captures detailed features, while MFADM’s multi-scale feature fusion and distribution mechanism improves the model’s adaptability to overlapping and variably sized objects. With all three modules working in tandem, the VMC-DETR framework achieves comprehensive enhancements in detecting overlapping objects within complex scenes on the TinyPerson dataset.

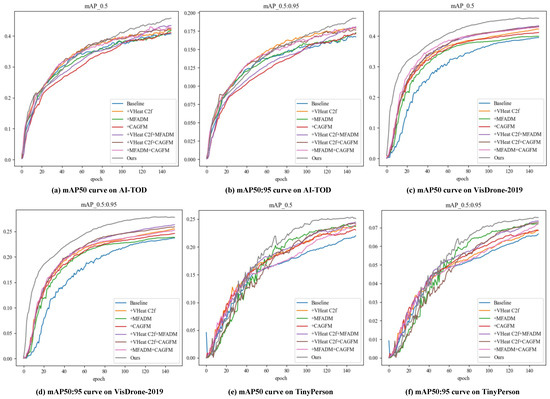

5.1.4. Discussion on Module Contributions

The ablation study clearly shows that each module in the VMC-DETR framework contributes to improving detection performance. The VHeat C2f module effectively enhances the framework’s ability to capture detailed object features, MFADM ensures efficient feature fusion across different scales, and CAGFM leverages contextual information to further strengthen the fused features. The combination of these modules achieves superior performance across all datasets, especially for small and densely overlapping objects in complex aerial images. The mAP curves in Figure 11 show the mAP trends for different modules across the three datasets, providing an intuitive illustration of the incremental improvements brought by each module, and highlighting the effectiveness and necessity of each component in achieving optimal performance.

Figure 11.

mAP curves from ablation experiments on the AI-TOD, VisDrone-2019, and TinyPerson datasets, showing the performance impact of each module.

However, the VMC-DETR framework also has certain limitations. Although VMC-DETR is optimized for aerial imagery datasets, it may struggle to adapt to non-aerial or highly diverse scenes. The reliance on multi-scale feature fusion and attention mechanisms could also make the framework sensitive to hyperparameter settings and model configurations, potentially reducing its generalizability to other tasks.

5.2. Module Configurations Analysis in VMC-DETR

To ensure the completeness of the experiment and thoroughly examine the impact of the internal design details of the proposed module on performance, we conduct ablation experiments on the three modules of the VMC-DETR framework using the AI-TOD, VisDrone-2019, and TinyPerson datasets.

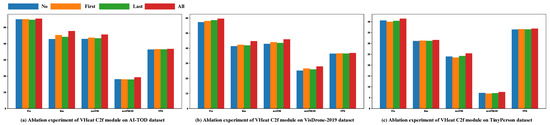

5.2.1. Ablation Study on the Design of the VHeat C2f Module

As shown in Figure 12, in terms of Pre, Rec, mAP50, and mAP50:95 metrics, the “All” configuration, which uses the VHeat C2f module in all positions, shows varying degrees of improvement over the “No” configuration, which uses only the standard C2f module, across all three datasets. This demonstrates that the VHeat C2f module effectively enhances the extraction of feature information for small objects in aerial images by refining feature maps. Additionally, the FPS results indicate that the computational complexity of using the VHeat C2f module is nearly identical to that of the standard C2f module, showing that the VHeat C2f module improves detection accuracy while maintaining the model’s inference speed.

Figure 12.

Effect of varying frequency and placement of the VHeat C2f module across the AI-TOD, VisDrone-2019, and TinyPerson datasets. “First” indicates that the VHeat C2f module is used only between the P3 and P4 layers, “Last” indicates that it is used only between the P4 and P5 layers, “No” indicates that the standard C2f module is used in both locations, and “All” indicates that the VHeat C2f module is used in both locations.

For the AI-TOD and VisDrone-2019 datasets, the “First” configuration (the VHeat C2f module between P3 and P4) performs better than the “Last” configuration (the VHeat C2f module between P4 and P5). This suggests that for aerial image data with complex scenes and large scale variations, using the VHeat C2f module at a lower level strengthens the spatial distribution and fine-grained high-frequency features of the object earlier, allowing more irrelevant background information to be filtered out for subsequent layers. For the TinyPerson dataset, the results of “Last” and “No” are nearly identical, while “First” scores lower than “No” on most metrics except Rec. This indicates that for extremely small objects, it remains challenging to extract low-level feature information effectively, as these objects rely more on high-level semantic information with richer channels. Using the VHeat C2f module at a single position therefore has limited impact, and better results are achieved when it is used in combination with other configurations.

5.2.2. Ablation Study on the First Aggregation Stage of MFADM

According to the ablation experiment results in Table 9, ADown, used in the first feature aggregation stage of MFADM, achieves the best overall performance in terms of Pre, Rec, mAP50, and mAP50:95 across the three datasets. Specifically, mAP50 and mAP50:95 are improved by 0.5%, 0.5%, 0.8%, 0.7%, 0.8%, and 0.4% on the three datasets, respectively, compared to the second-best Conv2d. Although the Rec values on the AI-TOD and TinyPerson datasets are 0.4% lower than those of Conv2d, this has minimal impact on the overall strong performance of the ADown module.

Table 9.

Ablation experiments on the AI-TOD, VisDrone-2019, and TinyPerson datasets using different downsampling methods in the first feature aggregation stage of the MFADM module, including Interpolation, AvgPool2d, Conv2d, and ADown. Bold numbers indicate the best performance in each column.

In addition, for aerial images with densely overlapping small objects, interpolation downsampling and average pooling have poor overall effects. This is because the absence of convolution operations leads to excessive loss of spatial structural information, making it difficult for the model to distinguish between objects and backgrounds, and preventing accurate object localization, ultimately resulting in reduced accuracy. Overall, the ADown module offers distinct advantages in processing aerial images. By preserving the smoothness of downsampled features, it retains more multi-scale feature information through a rich and diverse combination of convolution and pooling operations.

Finally, the FPS of the ADown module is similar to that of other methods, indicating that there is no significant increase in computational overhead, and it maintains high efficiency. Therefore, downsampling using ADown in the first stage of MFADM is necessary for the overall VMC-DETR framework.

5.2.3. Ablation Study on the Distribution and Second Aggregation Stage of MFADM

We conduct ablation experiments on the distribution operation in the third stage of MFADM using depthwise convolution kernel combinations with different numbers, strides, and sizes. The experimental results are shown in Table 10. The (5, 7, 9, 11) depthwise convolution kernel combination used in our MFADM performs the best across all three accuracy indicators on the three datasets.

Table 10.

Ablation experiments on three datasets for different numbers, strides, and sizes of depthwise convolution kernels used in the distribution operation in the third stage of the MFADM module. Bold numbers indicate the best performance in each column.

The fusion strategy using four depthwise convolution kernels consistently outperforms the one using only three kernels on all evaluation metrics. This suggests that employing a greater number of depthwise convolution kernels enables more effective and comprehensive integration of high-frequency object features across multiple scales. In contrast, the (3, 7, 11, 15) kernel combination with a stride of 4 performs poorly on all datasets. Compared with the (5, 7, 9, 11) setting, the mAP50 drops by 2.3%, 1.7%, and 2.7% on the respective datasets. These results indicate that using an excessively large stride during the distribution process can lead to the loss of important high-frequency details, particularly around object boundaries, thus weakening the model’s ability to localize objects accurately.

Moreover, using overly small depthwise convolution kernels (e.g., 3 × 3) may lead to missed features of large-scale objects due to the limited receptive field. At the same time, high-frequency features extracted with small kernels tend to be more vulnerable to interference and exhibit poor robustness. In contrast, employing excessively large kernels (e.g., 13 × 13 or 15 × 15) often results in the loss of critical high-frequency cues essential for accurate detection, retaining primarily mid- and low-frequency components. Both scenarios ultimately compromise detection accuracy.

This observation is consistent with findings from adversarial attack studies on deep neural networks, which indicate that the extraction of high-frequency features is highly sensitive to the choice of convolution kernel size [24,53,69]. To address this issue, MFADM adopts a multi-scale depthwise convolution kernel combination of (5, 7, 9, 11), which serves as a compromise strategy. This design effectively balances sensitivity to fine-grained high-frequency details and robustness against noise and perturbations, thereby achieving optimal detection performance.

Regarding computational complexity, increasing the kernel size and the number of kernels inevitably introduces additional computational overhead. However, the overall impact on inference efficiency remains minimal, making this trade-off acceptable for practical applications.

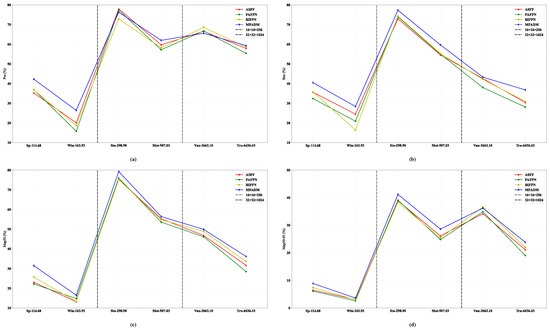

5.2.4. Ablation Study on MFADM and Classic Multi-Scale Feature Fusion Methods

We conduct ablation experiments on the proposed MFADM and classic multi-scale feature fusion strategies, including ASFF, PAFPN, and BIFPN. To ensure the exclusivity of MFADM as the sole variable in the ablation experiments, we use VHeat C2f as the backbone network and replace the normal concatenation operation in the neck with CAGFM when evaluating the other three methods.

In addition, to clearly demonstrate the experimental effects of different methods on objects of varying sizes, we use two thresholds, an area of 16 × 16 = 256 pixels and an area of 32 × 32 = 1024 pixels, to categorize object sizes. The objects are divided into three groups: extremely small objects with an area less than 256 pixels, small objects with an area between 256 and 1024 pixels, and medium objects with an area greater than 1024 pixels. Additionally, two object categories are selected from each group to display experimental results: Sp from the TinyPerson dataset, Win and Sto from the AI-TOD dataset, and Mot, Van, and Tru from the VisDrone-2019 dataset.
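The size grouping described above can be sketched as a small helper. This is an illustration only: the function name `size_group` is hypothetical, and the treatment of areas exactly equal to a threshold is an assumption, since the text does not specify it.

```python
# Area thresholds taken from the text: 16 x 16 = 256 and 32 x 32 = 1024 pixels.
EXTREMELY_SMALL_MAX_AREA = 16 * 16
SMALL_MAX_AREA = 32 * 32


def size_group(area: float) -> str:
    """Map an object's pixel area to one of the three size groups.

    Assumption: an area exactly on a threshold falls into the "small" group.
    """
    if area < EXTREMELY_SMALL_MAX_AREA:
        return "extremely small"
    if area <= SMALL_MAX_AREA:
        return "small"
    return "medium"


# Average instance areas reported for two of the selected categories.
print(size_group(298.90))   # "Sto" from AI-TOD
print(size_group(3663.10))  # "Van" from VisDrone-2019
```

Under this grouping, “Sto” falls into the small group and “Van” into the medium group, matching how the two categories are described in the analysis of Figure 13.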

Figure 13 shows the experimental results, demonstrating that MFADM achieves significant advantages in two key metrics: Rec and mAP50. Across six object categories of varying sizes, covering extremely small, small, and medium objects, MFADM consistently delivers the best performance on these two metrics. This highlights the effectiveness and robustness of MFADM’s ADown-based feature fusion and multi-scale large-kernel depthwise convolution distribution strategy in aerial image object detection.

Figure 13.

The comparison between the proposed MFADM and classic multi-scale feature fusion strategies on aerial image object-detection datasets is presented. The vertical axes in (a), (b), (c), and (d) represent the performance metrics of Pre, Rec, mAP50, and mAP50:95, respectively, while the horizontal axes indicate the average area of object instances in pixels.

Despite its strong overall performance in terms of Pre and mAP50:95 across most categories, MFADM exhibits suboptimal results on the small object class “Sto” (area: 298.90 pixels) and the medium object class “Van” (area: 3663.10 pixels), with “Van” even ranking last in the Pre metric. This suggests that although MFADM excels in detecting smaller objects, it still faces limitations when handling medium or larger objects. A potential reason lies in the design of the feature distribution stage, where multiple large-sized depthwise convolutions are applied. While this design effectively filters high-frequency features of extremely small and small objects, it may also lead to the excessive attenuation of limited high-frequency details in larger objects, ultimately resulting in significant bounding box regression loss.

In general, aerial image object detection mainly focuses on detecting small objects. MFADM performs well on extremely small and small objects and addresses the shortcomings of several classic multi-scale feature fusion methods in some extreme cases, as shown in Figure 5.

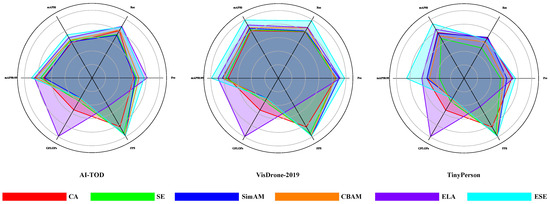

5.2.5. Ablation Study on the Design of CAGFM

We conduct experiments on the AI-TOD, VisDrone-2019, and TinyPerson datasets, using various attention mechanisms with similar computational complexity for context-guided fusion in CAGFM: CA [70], SE [56], SimAM [71], CBAM [72], ELA [73], and ESE [32], the mechanism adopted in this paper. The results are shown in Figure 14. CAGFM with the ESE attention mechanism achieves the best results on the mAP50 and mAP50:95 metrics across all three datasets, with the most notable effect on the VisDrone-2019 dataset. A likely reason is that ESE highlights the object regions of various categories in the image more effectively, thereby enhancing the quality of the feature fusion process, especially for small object detection in the dense scenes with varying illumination found in the VisDrone-2019 dataset.

Figure 14.

Effect of different attention mechanisms in CAGFM on context-guided fusion, evaluated through ablation experiments across the AI-TOD, VisDrone-2019, and TinyPerson datasets. Higher Pre, Rec, mAP50, and mAP50:95 indicate greater model accuracy, higher FPS represents faster inference, and lower GFLOPs imply a more lightweight model.

In addition, for the GFLOPs metric, ESE is relatively lightweight among the six attention mechanisms and is significantly lower than ELA, which ranks second in accuracy. This suggests that ESE reduces redundant operations through effective parameter-sharing mechanisms and efficient feature selection strategies. Compared to other mainstream attention mechanisms, it maintains a lightweight model structure while effectively enhancing contextual information interaction between multi-scale objects in aerial images.

5.3. Small Object Detection Performance Analysis

Due to the frequent occurrence of densely distributed and low-resolution objects in aerial imagery, this study focuses on the task of small object detection. As illustrated in Figure 8, and following the MS COCO definition, objects smaller than 32 × 32 pixels are categorized as small objects. To better evaluate the effectiveness of the proposed VMC-DETR in this context, we analyze its performance on three benchmark datasets: AI-TOD (as shown in Table 3), VisDrone-2019 (as shown in Table 4), and TinyPerson (as shown in Table 5).

In the AI-TOD dataset, more than 87.7% of objects are considered small, with a mean object size of only 12.8 pixels. VMC-DETR achieves the highest AP in small-object dominant categories such as Air, Per, and Veh, with scores of 42.0%, 30.7%, and 69.7%, respectively, indicating strong robustness in dense aerial scenes.

The VisDrone-2019 dataset contains a high proportion of small targets in categories like Peo, Ped, and Mot. In these categories, our method outperforms other baselines with up to 47.9% AP on Peo, clearly demonstrating improved sensitivity to small-scale object features.

The TinyPerson dataset consists almost entirely of extremely small human instances. VMC-DETR achieves AP scores of 19.3% and 31.5% for the Ep and Sp categories, outperforming the best baseline method by 1.4% and 1.0%, respectively.

These results affirm that the high-frequency feature enhancement and multi-scale context-aware design of VMC-DETR are particularly beneficial for small object detection, which is critical for real-world aerial applications.

5.4. Computational Complexity Analysis

The proposed VMC-DETR framework introduces three modules—VHeat C2f, MFADM, and CAGFM—each designed to enhance performance while maintaining computational efficiency. This section provides a theoretical analysis of their complexity characteristics. The actual effectiveness of this design is quantitatively demonstrated in Table 3 and through the ablation experiments presented in this section.

VHeat C2f Module: By integrating frequency-domain heat conduction into the backbone, the module leverages a heat conduction operator (HCO) based on the discrete cosine transform (DCT). This operator has a time complexity of $O(N^{1.5})$, where N denotes the number of spatial locations. This is more efficient than traditional attention mechanisms ($O(N^{2})$), and the frequency filtering is applied selectively to balance precision and overhead.
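As a rough illustration of frequency-domain heat conduction, the following pure-Python sketch transforms a single-channel map with a 2D DCT, damps each frequency component exponentially, and transforms back. It is not the VHeat C2f implementation: the function names are hypothetical, and the fixed diffusivity `k`, the time `t`, and the frequency scaling `pi * p / H` are assumptions chosen for clarity.

```python
import math


def dct_matrix(n):
    """Orthonormal DCT-II matrix C (n x n), so that C @ C^T is the identity."""
    rows = []
    for u in range(n):
        scale = math.sqrt((1.0 if u == 0 else 2.0) / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * u / (2 * n))
                     for i in range(n)])
    return rows


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def heat_conduction(u0, k=1.0, t=1.0):
    """Diffuse an H x W map u0 (nested lists) in the DCT domain.

    k (diffusivity) and t (time) are illustrative constants, not learned values.
    """
    h, w = len(u0), len(u0[0])
    ch, cw = dct_matrix(h), dct_matrix(w)
    # Forward 2D DCT as two matrix products: Ch @ u0 @ Cw^T.
    spec = matmul(matmul(ch, u0), transpose(cw))
    # Exponential decay: higher spatial frequencies are damped more strongly.
    for p in range(h):
        for q in range(w):
            wy = math.pi * p / h
            wx = math.pi * q / w
            spec[p][q] *= math.exp(-k * (wx * wx + wy * wy) * t)
    # Inverse 2D DCT: Ch^T @ spec @ Cw.
    return matmul(matmul(transpose(ch), spec), cw)


smoothed = heat_conduction([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], k=1.0, t=1.0)
```

Written as matrix products, each transform costs roughly H·W·(H + W) multiply-adds, which grows as N^1.5 for a square map with N = H·W locations, compared with the N^2 pairwise interactions of full self-attention. The zero-frequency component is left untouched, so the mean of the map is preserved while fine detail is attenuated according to `k` and `t`.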

MFADM Module: The MFADM utilizes depthwise separable convolutions with large kernels (5 × 5 to 11 × 11) to capture multi-scale spatial context. Depthwise convolutions significantly reduce computational load compared to standard convolutions, and their parallel arrangement introduces only marginal cost while substantially expanding the receptive field.
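The cost argument for depthwise convolutions can be checked with a simple multiply-accumulate (MAC) count. The sketch below is illustrative only: the helper `conv_macs` is hypothetical, the feature-map size and channel count are made-up values rather than settings from the paper, and stride 1 with "same" padding is assumed.

```python
def conv_macs(h, w, c_in, c_out, k, depthwise=False):
    """Multiply-accumulate count of one k x k convolution on an h x w map.

    Assumes stride 1 and 'same' padding, and ignores bias terms.
    """
    if depthwise:
        # One k x k filter per input channel, with no mixing across channels.
        return h * w * c_in * k * k
    # Standard convolution: every output channel reads every input channel.
    return h * w * c_in * c_out * k * k


H, W, C = 40, 40, 256  # hypothetical feature-map size and channel count

# Four parallel large-kernel depthwise branches, as in the (5, 7, 9, 11) setting.
parallel_depthwise = sum(conv_macs(H, W, C, C, k, depthwise=True)
                         for k in (5, 7, 9, 11))
# A single standard 3 x 3 convolution for comparison.
standard_3x3 = conv_macs(H, W, C, C, 3)

print(parallel_depthwise, standard_3x3)
```

Per spatial location and channel, the four depthwise branches together need 25 + 49 + 81 + 121 = 276 MACs, whereas one standard 3 × 3 convolution with 256 output channels needs 9 × 256 = 2304. The count covers only the depthwise part; any pointwise (1 × 1) convolutions that follow would add their own cost.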

CAGFM Module: The CAGFM applies a lightweight attention mechanism, ESE (Effective Squeeze-and-Excitation), which uses a single fully connected layer without dimensional reduction. This module avoids expensive multi-head attention and maintains linear complexity relative to channel count, adding negligible burden to the overall model.
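The channel-attention pattern described here (global pooling, one fully connected layer that keeps the channel dimension, then a gate) can be sketched in pure Python as follows. This is a minimal illustration, not the CAGFM code: the function name is hypothetical, the weights are passed in explicitly, and the plain sigmoid gate is an assumption made for simplicity.

```python
import math


def ese_attention(x, weight, bias):
    """Channel attention with a single C -> C fully connected layer.

    x: feature map as nested lists with shape C x H x W.
    weight: C x C matrix, bias: length-C vector (both illustrative inputs).
    """
    c = len(x)
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: one FC layer that keeps all C channels, then a sigmoid gate.
    gates = []
    for o in range(c):
        z = bias[o] + sum(weight[o][i] * pooled[i] for i in range(c))
        gates.append(1.0 / (1.0 + math.exp(-z)))
    # Rescale: multiply each channel by its gate value.
    return [[[v * gates[o] for v in row] for row in x[o]] for o in range(c)]


feat = [[[1.0, 2.0], [3.0, 4.0]],
        [[0.0, 1.0], [1.0, 0.0]]]
zeros_w = [[0.0, 0.0], [0.0, 0.0]]
out = ese_attention(feat, zeros_w, [0.0, 0.0])  # every gate is sigmoid(0) = 0.5
```

With zero weights and biases, every gate equals 0.5 and each channel is simply halved; with trained weights, the gates would emphasize some channels over others before fusion.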

In summary, all modules are constructed based on lightweight design principles, with their computational complexity strictly controlled to ensure real-time inference capability. Naturally, the integration of multiple modules introduces additional computational overhead, as shown in Table 3. VMC-DETR achieves 36.8 FPS, 70.5 GFLOPs, 9.2 IT, and 302 MU, which are slightly worse than those of the baseline model and the YOLO series in terms of real-time performance and memory efficiency. Nevertheless, VMC-DETR strikes a well-considered balance between detection accuracy and computational efficiency. It is compatible with mainstream edge computing platforms such as the Raspberry Pi 4B and NVIDIA Jetson Nano, making it suitable for real-time aerial applications under resource-constrained conditions, although it may encounter limitations on lower-end smartphones or older mobile devices.