Using an Area-Weighted Loss Function to Address Class Imbalance in Deep Learning-Based Mapping of Small Water Bodies in a Low-Latitude Region

Abstract

1. Introduction

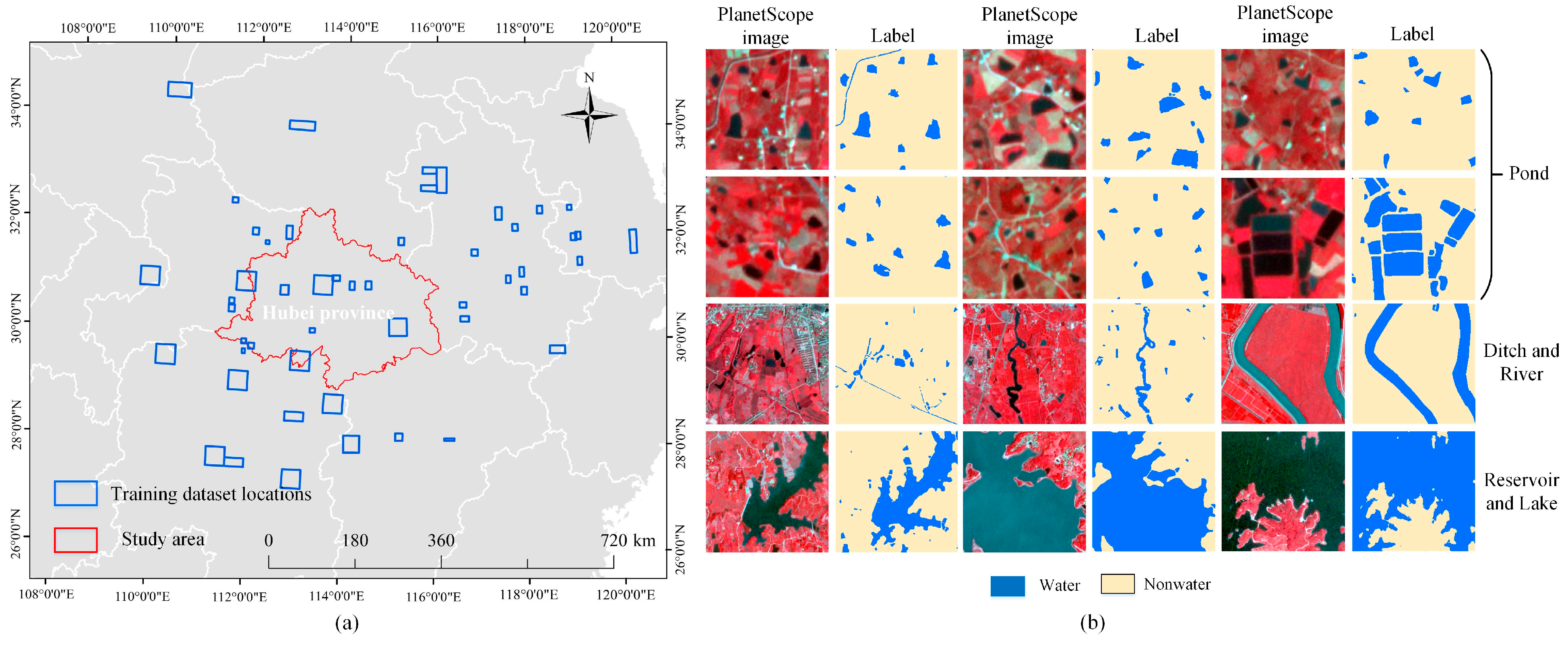

2. Study Area and Data

2.1. Study Area

2.2. Data

2.2.1. PlanetScope

2.2.2. Construction of Training Dataset for Deep-Learning Models

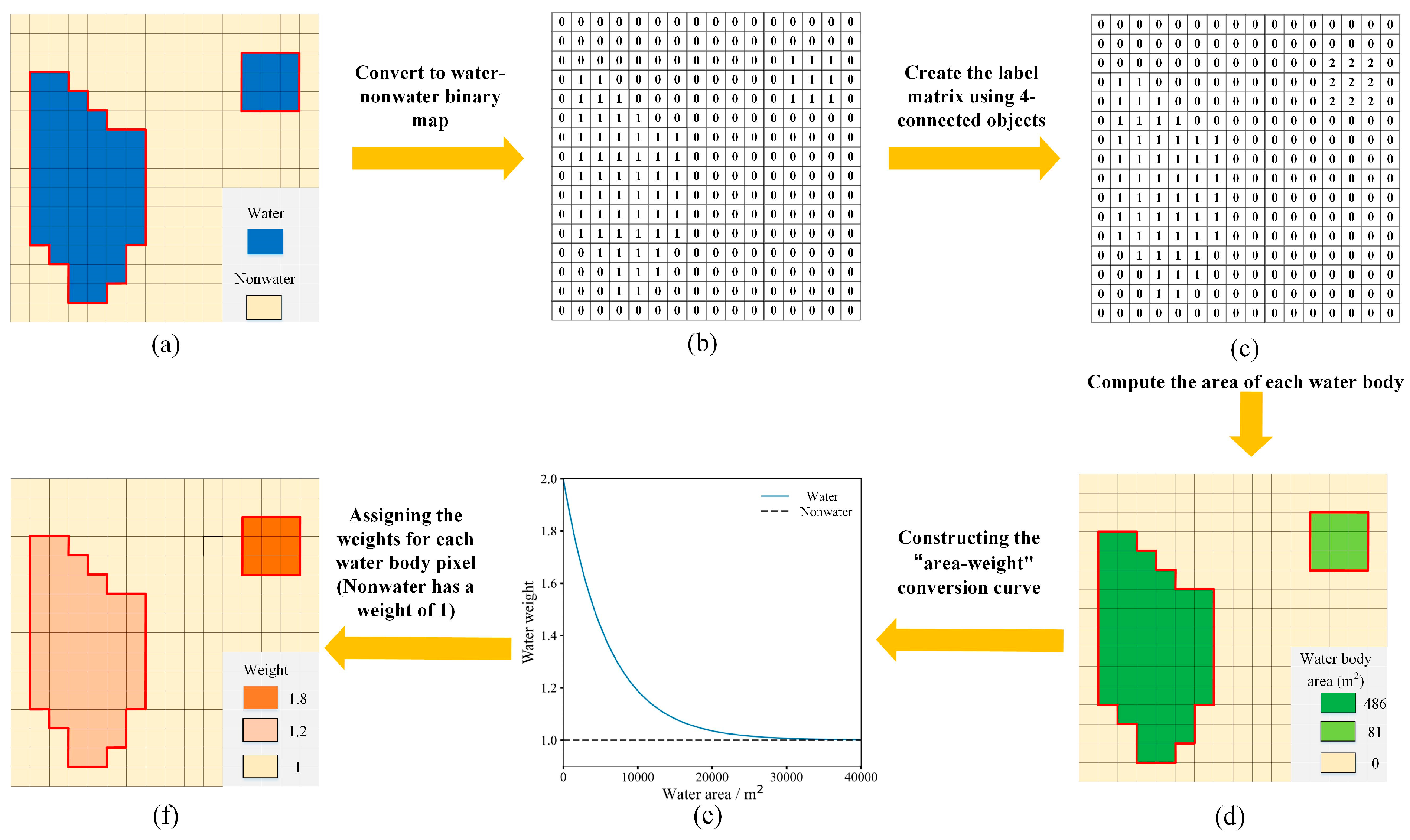

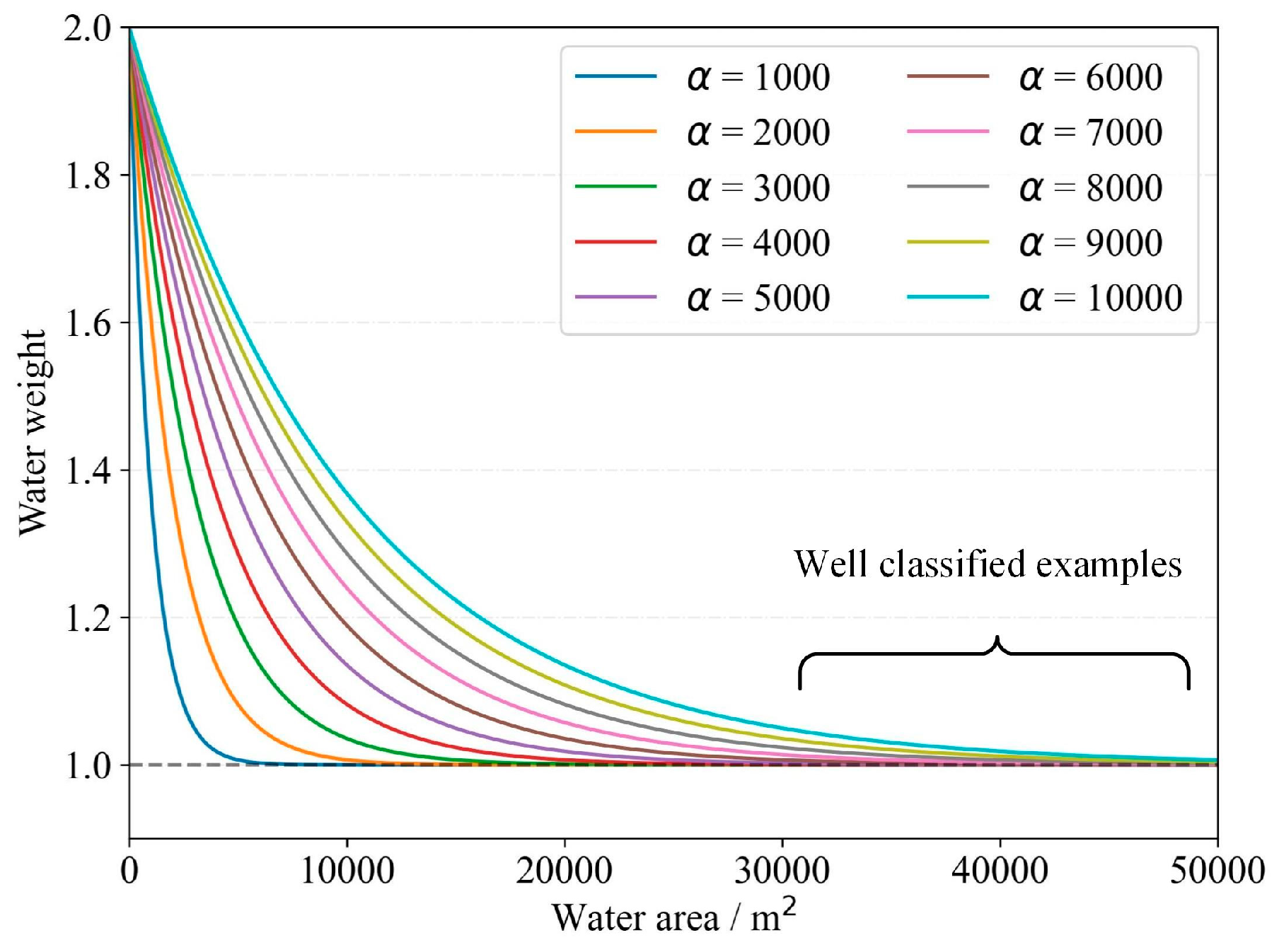

3. Methods

4. Experiments

4.1. Small Area Experiment Using UAV for Validation

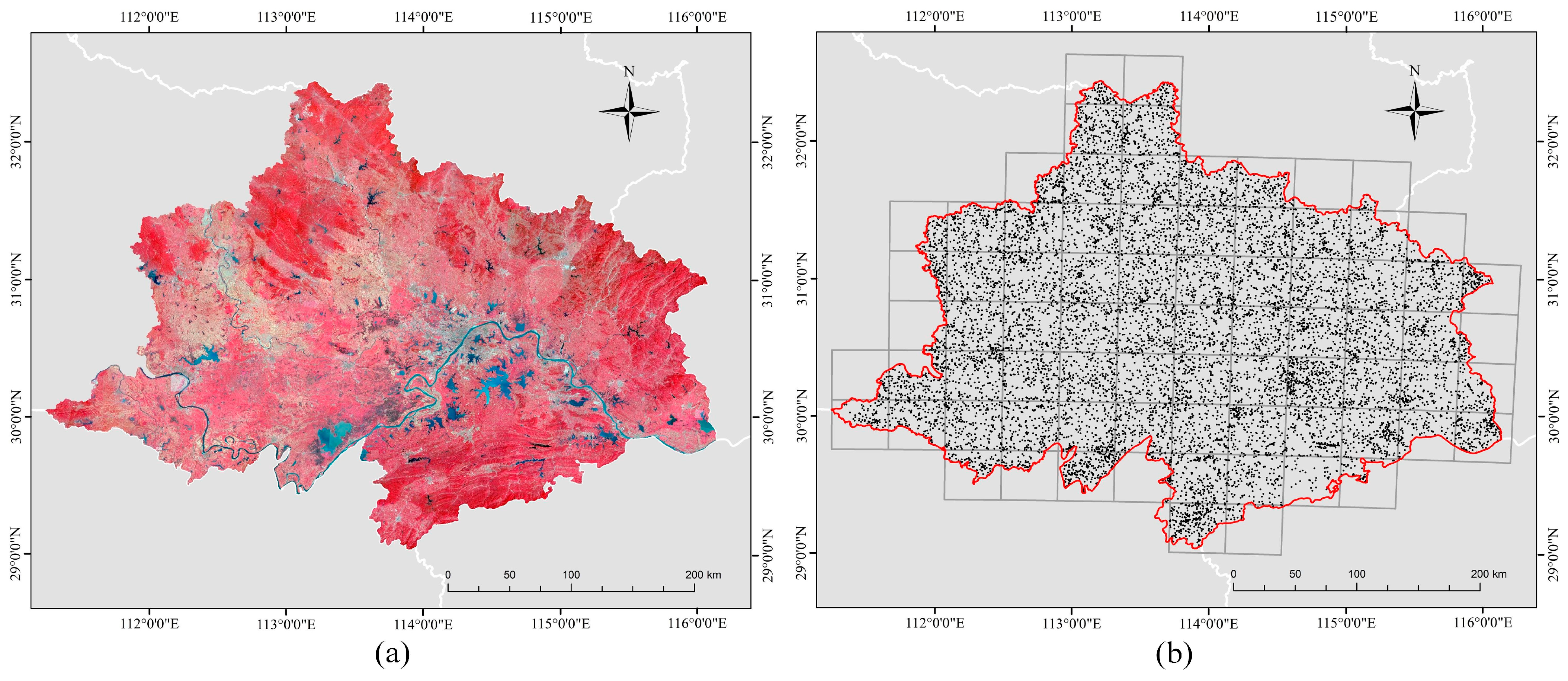

4.2. Large Area Experiment Using Random Sample Points from Google Earth for Validation

4.3. Comparators and Model Parameter Settings

4.4. Accuracy Assessment

5. Results

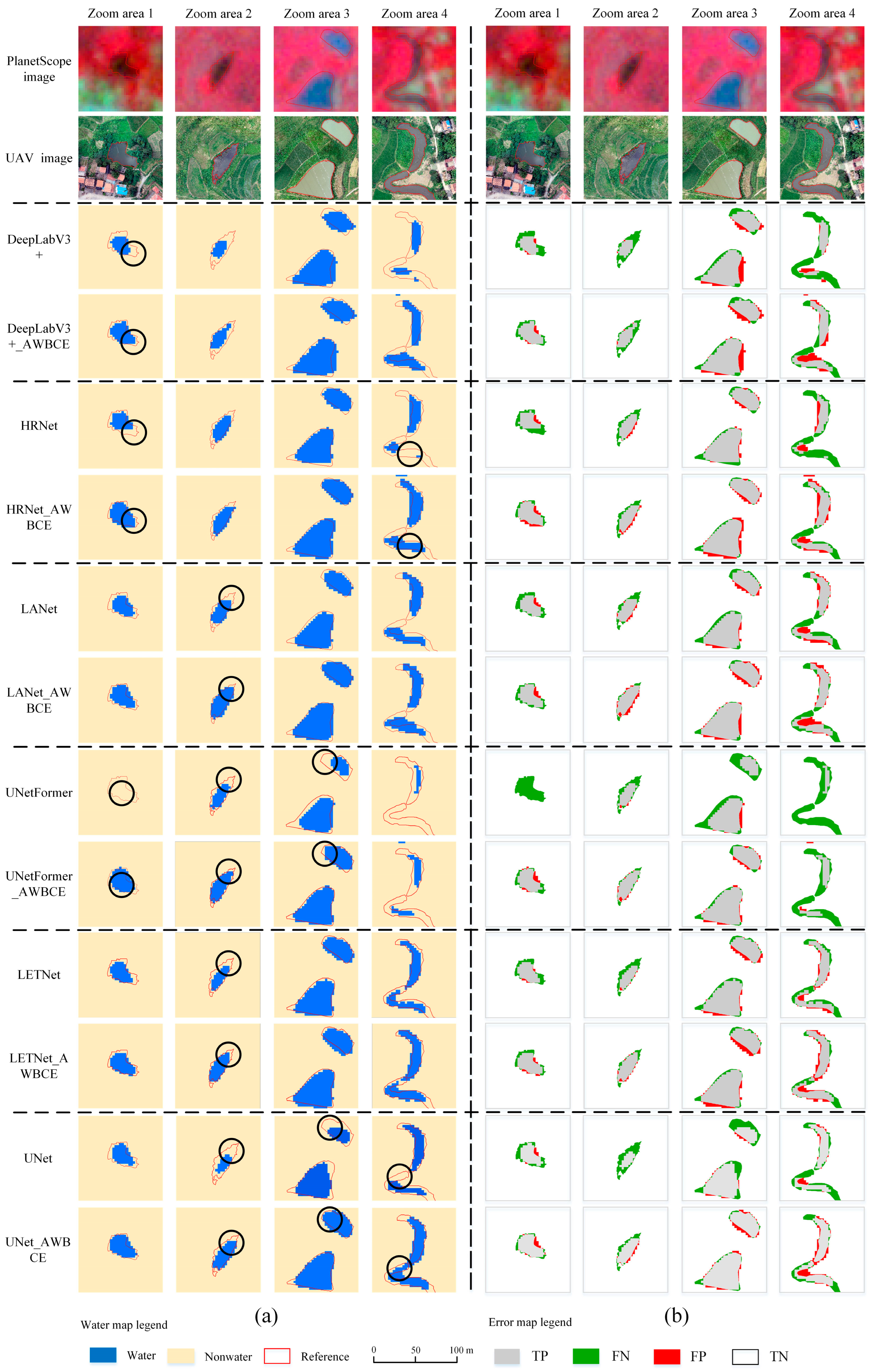

5.1. Results of the Small Area Experiment Using UAV for Validation

5.1.1. Results of Different Deep-Learning Models with Classic BCE Loss and with the Proposed AWBCE Loss in the Small Area Experiment

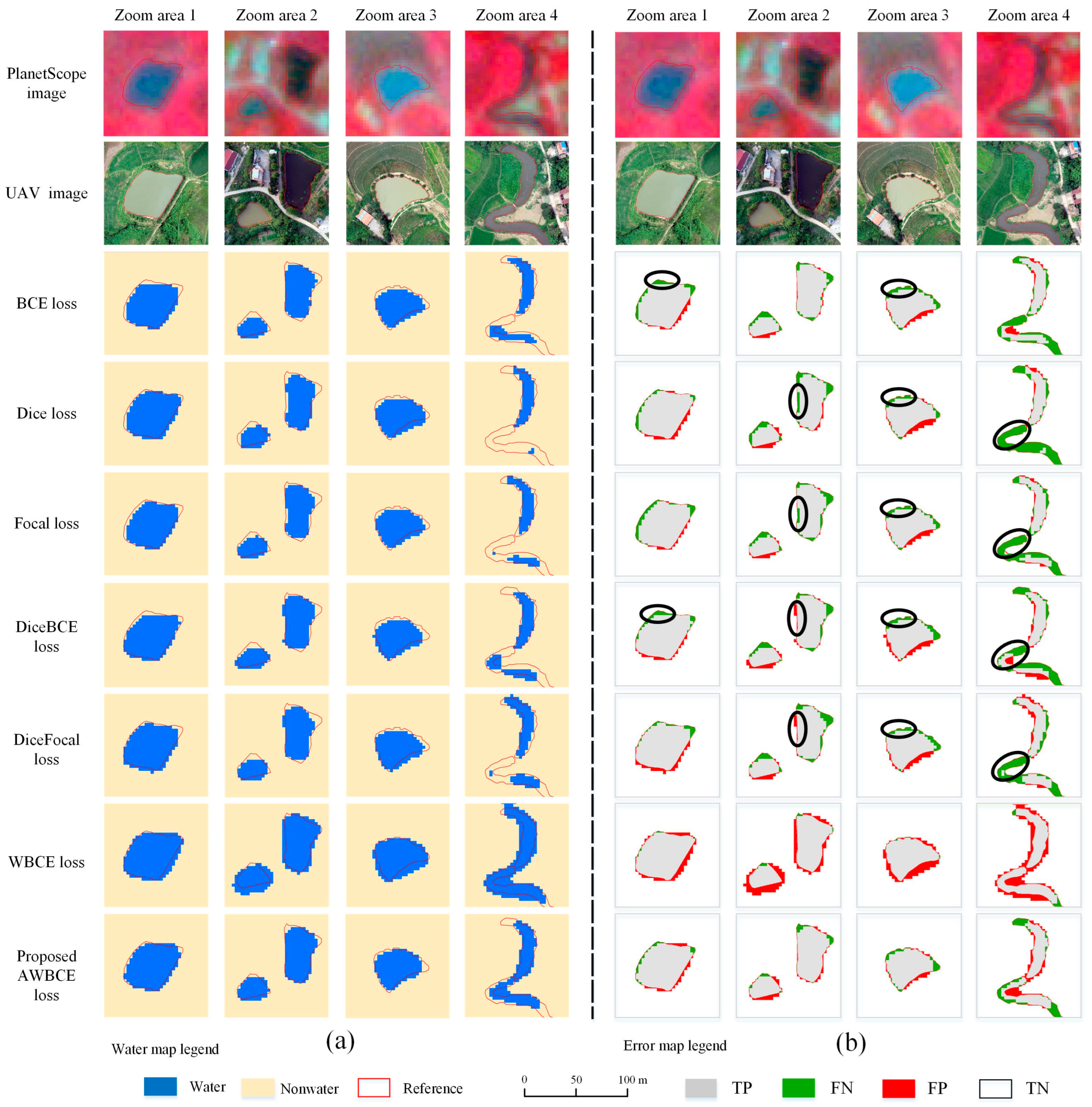

5.1.2. Comparison of Different Loss Functions for Addressing the Class Imbalance Problem in the Small Area Experiment

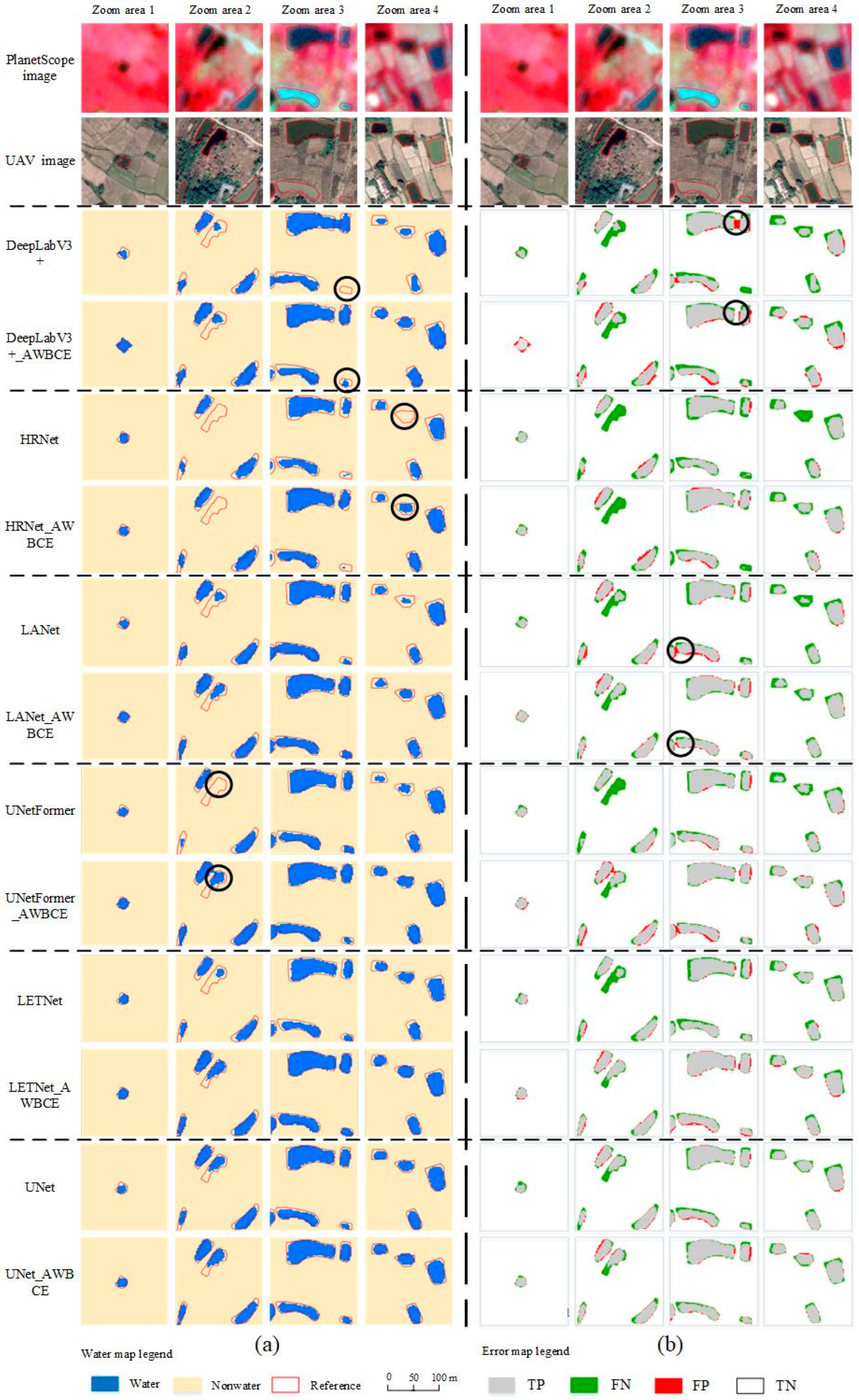

5.2. Large Area Experiment

5.2.1. Results of Different Deep-Learning Models with and Without the Proposed AWBCE Loss in the Large Area Experiment

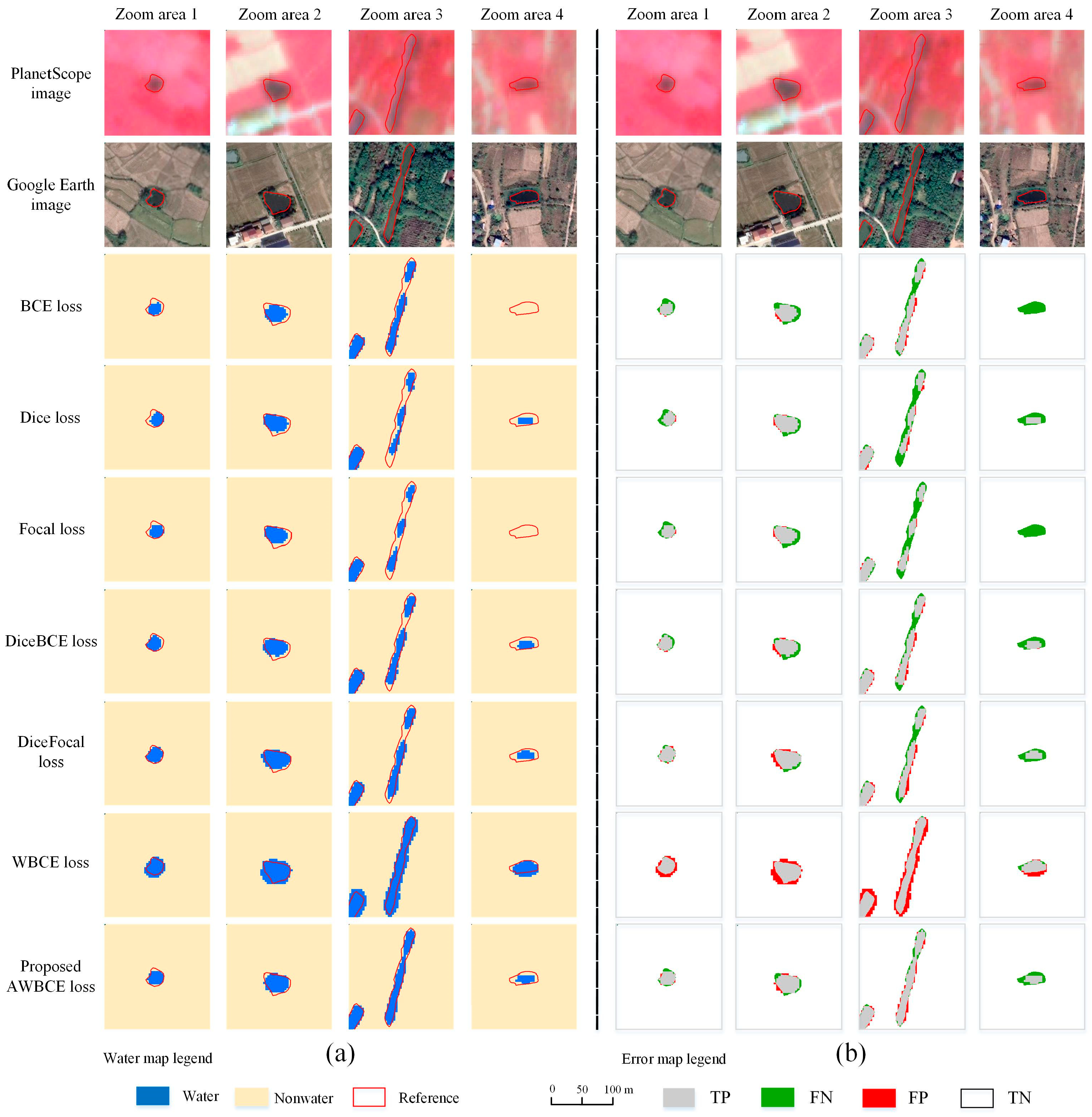

5.2.2. Comparison of Different Loss Functions for Addressing the Class Imbalance Problem in the Large Area Experiment

6. Discussion

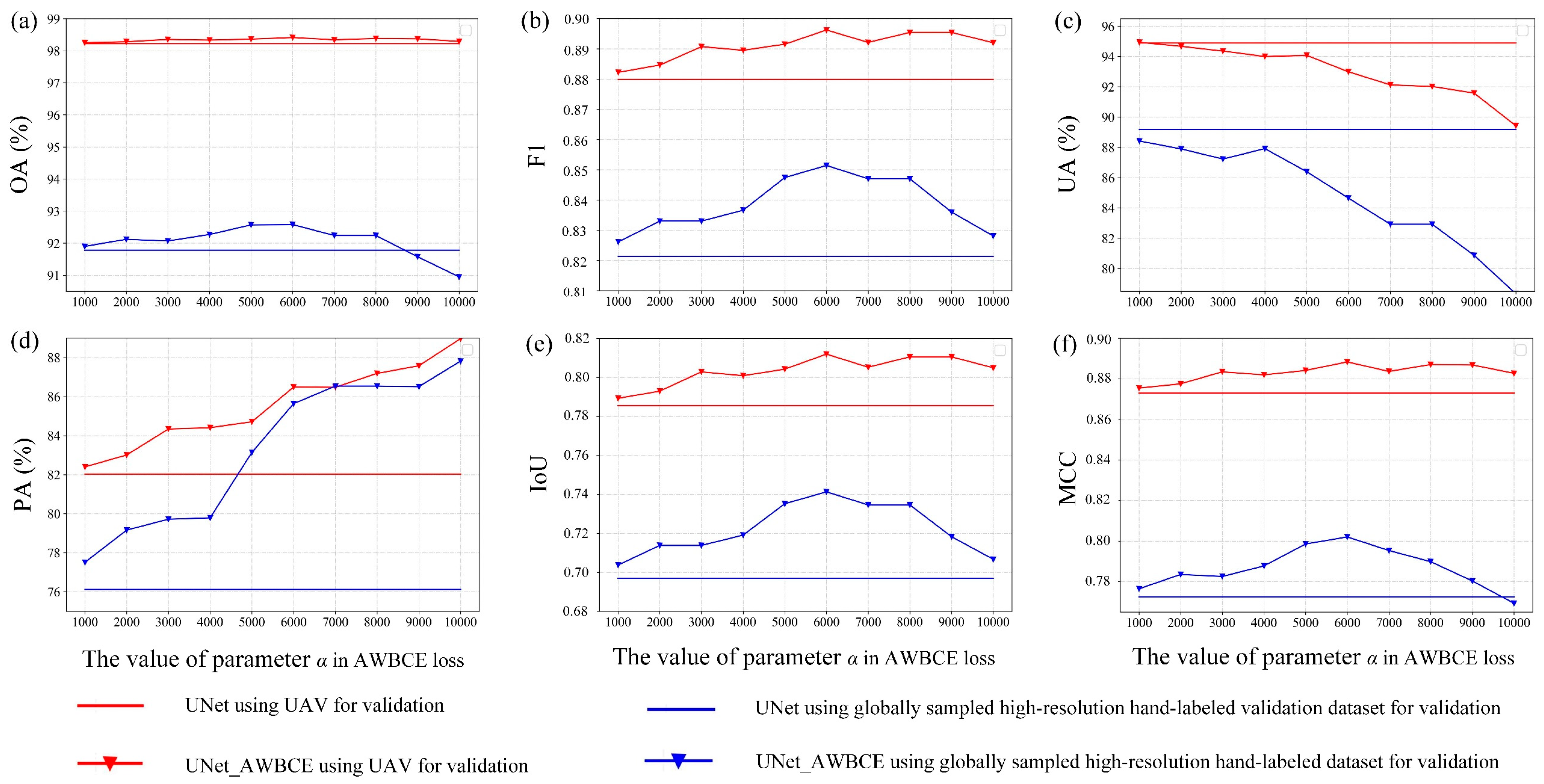

6.1. The Impact of Model Parameters

6.2. Reliability and Stability Analysis of the Models

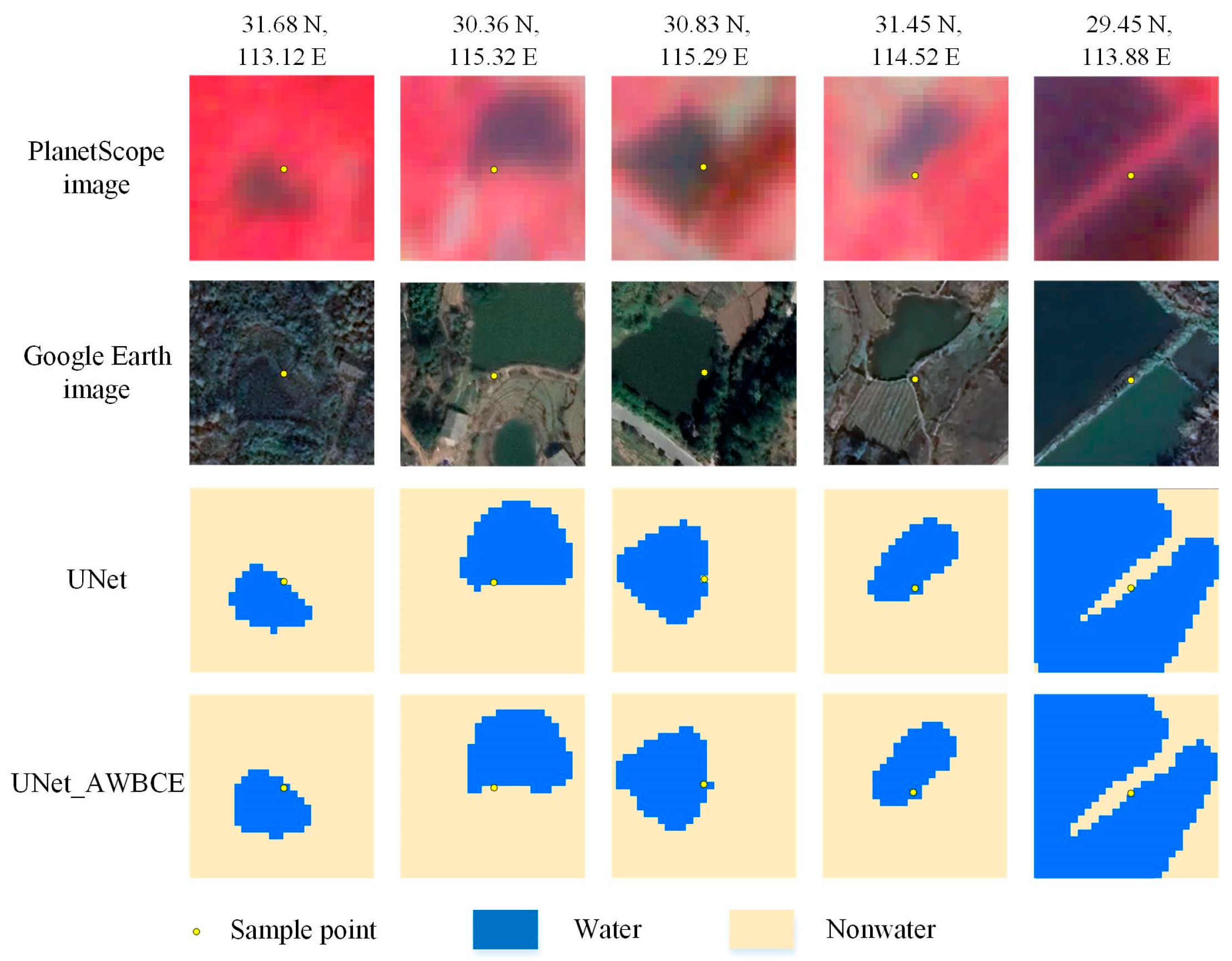

6.3. Migration Experiments

6.4. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Training | Validation | Small Area Experiment: Input | Small Area Experiment: Water Mask | Large Area Experiment: Input | Large Area Experiment: Water Mask |
|---|---|---|---|---|---|---|
| Number of image patches | 24,664 | 2177 | 1 | 1 | 1 | - |
| Image size (in pixels) | 512 × 512 | 512 × 512 | 1007 × 1043 | Approximately 120,000 × 125,000 | 120,840 × 125,160 | 12,518 random sample points |
| Spatial resolution | 3 m | 3 m | 3 m | 0.025 m | 3 m | <1 m |
| Data source | PlanetScope | PlanetScope | PlanetScope | UAV | PlanetScope | Google Earth image |

| Models | OA (%) | UA (%) | PA (%) | F1 | IoU | MCC |
|---|---|---|---|---|---|---|
| DeepLabV3+ | 97.9 | 91.2 | 81.7 | 0.862 | 0.757 | 0.852 |
| DeepLabV3+_AWBCE | 98.0 | 89.7 | 84.6 | 0.871 | 0.771 | 0.861 |
| HRNet | 98.0 | 91.6 | 82.9 | 0.870 | 0.770 | 0.861 |
| HRNet_AWBCE | 98.1 | 88.1 | 88.7 | 0.884 | 0.792 | 0.874 |
| LANet | 98.0 | 89.3 | 85.1 | 0.872 | 0.772 | 0.861 |
| LANet_AWBCE | 98.3 | 89.9 | 88.4 | 0.892 | 0.804 | 0.882 |
| UNetFormer | 98.1 | 94.8 | 81.1 | 0.874 | 0.776 | 0.867 |
| UNetFormer_AWBCE | 98.2 | 88.9 | 88.6 | 0.888 | 0.798 | 0.878 |
| LETNet | 98.0 | 92.0 | 82.1 | 0.868 | 0.766 | 0.859 |
| LETNet_AWBCE | 98.3 | 90.8 | 87.5 | 0.891 | 0.804 | 0.882 |
| UNet | 98.2 | 94.3 | 82.8 | 0.882 | 0.789 | 0.874 |
| UNet_AWBCE | 98.4 | 93.0 | 86.5 | 0.896 | 0.812 | 0.888 |
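
The accuracy columns in the table above (OA, UA, PA, F1, IoU, MCC) are assumed here to follow their standard confusion-matrix definitions for the water class. The following is a minimal sketch under that assumption; the function name `metrics_from_counts` and the pixel counts are hypothetical and are not taken from the experiments.

```python
import math

def metrics_from_counts(tp, fp, fn, tn):
    """Standard binary-map accuracy metrics, with water as the positive class."""
    total = tp + fp + fn + tn
    oa = (tp + tn) / total        # overall accuracy
    ua = tp / (tp + fp)           # user's accuracy (precision)
    pa = tp / (tp + fn)           # producer's accuracy (recall)
    f1 = 2 * ua * pa / (ua + pa)  # harmonic mean of UA and PA
    iou = tp / (tp + fp + fn)     # intersection over union
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )                             # Matthews correlation coefficient
    return {"OA": oa, "UA": ua, "PA": pa, "F1": f1, "IoU": iou, "MCC": mcc}

# Hypothetical counts for a water-sparse scene: 100 water pixels out of 1000.
m = metrics_from_counts(tp=80, fp=10, fn=20, tn=890)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

With these toy counts OA is 0.970 while IoU is about 0.727, which illustrates why OA alone says little about a minority class such as small water bodies.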

| Loss function | OA (%) | UA (%) | PA (%) | F1 | IoU | MCC |
|---|---|---|---|---|---|---|
| BCE loss | 98.2 | 94.3 | 82.8 | 0.882 | 0.789 | 0.874 |
| Dice loss | 98.2 | 92.9 | 84.1 | 0.883 | 0.790 | 0.875 |
| Focal loss | 98.3 | 95.0 | 83.4 | 0.888 | 0.799 | 0.882 |
| DiceBCE loss | 98.2 | 91.8 | 85.0 | 0.883 | 0.790 | 0.874 |
| DiceFocal loss | 98.3 | 92.3 | 85.4 | 0.887 | 0.797 | 0.879 |
| WBCE loss | 98.0 | 83.6 | 93.0 | 0.880 | 0.786 | 0.871 |
| Proposed AWBCE loss | 98.4 | 93.0 | 86.5 | 0.896 | 0.812 | 0.888 |
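
Because F1 is the harmonic mean of UA and PA, and single-class IoU equals F1 / (2 - F1), the last three accuracy columns can be cross-checked against the first ones. The sketch below does this for the "Proposed AWBCE loss" row; the helper names are hypothetical, and the check assumes the table uses these standard definitions.

```python
def f1_from_ua_pa(ua, pa):
    """Harmonic mean of user's and producer's accuracy (fractions in [0, 1])."""
    return 2 * ua * pa / (ua + pa)

def iou_from_f1(f1):
    """For a single class computed on one confusion matrix, IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)

# UA = 93.0% and PA = 86.5% from the "Proposed AWBCE loss" row.
f1 = f1_from_ua_pa(0.930, 0.865)
iou = iou_from_f1(f1)
print(round(f1, 3), round(iou, 3))  # prints 0.896 0.812
```

Both values agree with the F1 and IoU reported in that row.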

| Models | OA (%) | UA (%) | PA (%) | F1 | IoU | MCC |
|---|---|---|---|---|---|---|
| DeepLabV3+ | 95.9 | 98.9 | 92.8 | 0.958 | 0.919 | 0.920 |
| DeepLabV3+_AWBCE | 96.0 | 98.4 | 93.6 | 0.959 | 0.921 | 0.921 |
| HRNet | 95.9 | 99.2 | 92.6 | 0.958 | 0.919 | 0.921 |
| HRNet_AWBCE | 96.4 | 98.9 | 93.8 | 0.963 | 0.928 | 0.929 |
| LANet | 97.0 | 98.7 | 95.2 | 0.969 | 0.941 | 0.940 |
| LANet_AWBCE | 97.1 | 98.7 | 95.5 | 0.971 | 0.943 | 0.943 |
| UNetFormer | 96.0 | 99.0 | 92.9 | 0.959 | 0.921 | 0.922 |
| UNetFormer_AWBCE | 97.4 | 98.7 | 96.1 | 0.974 | 0.950 | 0.949 |
| LETNet | 96.4 | 99.1 | 93.7 | 0.963 | 0.929 | 0.930 |
| LETNet_AWBCE | 97.0 | 98.0 | 96.0 | 0.970 | 0.942 | 0.941 |
| UNet | 96.1 | 98.8 | 93.2 | 0.959 | 0.922 | 0.923 |
| UNet_AWBCE | 97.6 | 99.0 | 96.2 | 0.976 | 0.953 | 0.953 |

| Loss function | OA (%) | UA (%) | PA (%) | F1 | IoU | MCC |
|---|---|---|---|---|---|---|
| BCE loss | 96.1 | 98.8 | 93.2 | 0.959 | 0.922 | 0.923 |
| Dice loss | 96.7 | 99.5 | 94.0 | 0.966 | 0.935 | 0.936 |
| Focal loss | 96.4 | 99.6 | 93.2 | 0.963 | 0.928 | 0.930 |
| DiceBCE loss | 96.3 | 99.4 | 93.3 | 0.962 | 0.927 | 0.928 |
| DiceFocal loss | 97.2 | 99.2 | 95.2 | 0.972 | 0.945 | 0.945 |
| WBCE loss | 97.1 | 97.3 | 96.8 | 0.971 | 0.943 | 0.941 |
| Proposed AWBCE loss | 97.6 | 99.0 | 96.2 | 0.976 | 0.953 | 0.953 |
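
For orientation, three of the baseline losses named in the table above (BCE, WBCE, and Dice) have widely used textbook forms. The sketch below implements those generic forms over flat lists of per-pixel probabilities. It is an illustration under stated assumptions, not the configuration used in the experiments: the function names, the `pos_weight` value of 9.0, and the `smooth` term are all hypothetical. The proposed AWBCE loss is not sketched because its formulation is not given in this section.

```python
import math

def bce(probs, labels, pos_weight=1.0, eps=1e-7):
    """Mean binary cross-entropy; pos_weight > 1 up-weights water (label 1) pixels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)

def dice_loss(probs, labels, smooth=1.0):
    """Soft Dice loss: 1 - (2 * overlap + smooth) / (sum(p) + sum(y) + smooth)."""
    overlap = sum(p * y for p, y in zip(probs, labels))
    return 1 - (2 * overlap + smooth) / (sum(probs) + sum(labels) + smooth)

# Toy patch: one water pixel among nine background pixels.
labels = [1] + [0] * 9
probs = [0.3] + [0.1] * 9  # the single water pixel is under-predicted

plain = bce(probs, labels)                     # unweighted BCE
weighted = bce(probs, labels, pos_weight=9.0)  # hypothetical class weight
dice = dice_loss(probs, labels)
print(f"BCE={plain:.3f}  WBCE={weighted:.3f}  Dice={dice:.3f}")
```

In this toy case the missed water pixel contributes little to the plain BCE average but dominates the weighted version, which is the general motivation for class-weighted losses under imbalance.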

| Date | Date | Date | Date |
|---|---|---|---|
| 26 May 2017 | 8 April 2018 | 25 August 2019 | 30 November 2020 |
| 17 July 2017 | 7 June 2018 | 4 March 2020 | 6 May 2021 |
| 23 July 2017 | 28 June 2018 | 20 March 2020 | 7 May 2021 |
| 2 January 2018 | 8 September 2018 | 26 April 2020 | 16 June 2021 |
| 12 January 2018 | 4 October 2018 | 20 May 2020 | 30 July 2021 |
| 28 March 2018 | 14 March 2019 | 10 October 2020 | 26 September 2021 |
| 1 April 2018 | 6 April 2019 | 24 October 2020 | 17 November 2021 |
| 7 April 2018 | 24 August 2019 | 10 November 2020 | 29 November 2021 |

| Accuracy | Fold1 | Fold2 | Fold3 | Fold4 | Fold5 | Ave. | St. dev. |
|---|---|---|---|---|---|---|---|
| OA (%) | 99.2 | 99.1 | 99.1 | 99.1 | 99.2 | 99.1 | 0.049 |
| UA (%) | 94.2 | 94.7 | 94.0 | 94.3 | 95.1 | 94.5 | 0.393 |
| PA (%) | 93.3 | 93.0 | 93.2 | 93.2 | 93.1 | 93.2 | 0.102 |
| F1 | 0.938 | 0.938 | 0.936 | 0.937 | 0.941 | 0.938 | 0.002 |
| IoU | 0.883 | 0.883 | 0.879 | 0.882 | 0.888 | 0.883 | 0.003 |
| MCC | 0.933 | 0.933 | 0.931 | 0.933 | 0.936 | 0.933 | 0.002 |
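
The "Ave." and "St. dev." columns above can be recomputed from the five fold values. The sketch below does so for the three percentage rows, assuming the population standard deviation (dividing by the number of folds). That assumption is inferred from the reported numbers, not stated in this section, and the inputs are the already-rounded table entries, so the check is approximate.

```python
import statistics

# Per-fold values copied from the table above (already rounded to one decimal).
folds = {
    "OA (%)": [99.2, 99.1, 99.1, 99.1, 99.2],
    "UA (%)": [94.2, 94.7, 94.0, 94.3, 95.1],
    "PA (%)": [93.3, 93.0, 93.2, 93.2, 93.1],
}

# Mean and population standard deviation for each accuracy measure.
summary = {
    name: (round(statistics.mean(values), 1), round(statistics.pstdev(values), 3))
    for name, values in folds.items()
}

for name, (mean, std) in summary.items():
    print(f"{name}: mean={mean}, st. dev.={std}")
```

Under that assumption the recomputed values match the table for all three rows; using the sample standard deviation (`statistics.stdev`) would give slightly larger spreads.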

| Site | Location | Image Size (in pixels) | Resolution | Data Source |
|---|---|---|---|---|
| 1 | 30°53′~30°58′N, 113°6′~113°11′E | 2578 × 3223 | 3 m | PlanetScope |
| 2 | 30°19′~30°25′N, 113°23′~113°29′E | 3335 × 3334 | 3 m | PlanetScope |
| 3 | 31°15′~31°17′N, 112°35′~112°38′E | 1667 × 1667 | 3 m | PlanetScope |

| Loss function | OA (%) | UA (%) | PA (%) | F1 | IoU | MCC |
|---|---|---|---|---|---|---|
| BCE loss | 98.2 | 94.5 | 77.0 | 0.848 | 0.737 | 0.844 |
| Dice loss | 98.2 | 93.6 | 78.0 | 0.851 | 0.741 | 0.846 |
| Focal loss | 98.3 | 93.2 | 79.4 | 0.857 | 0.750 | 0.851 |
| DiceBCE loss | 98.2 | 91.4 | 79.7 | 0.851 | 0.741 | 0.844 |
| DiceFocal loss | 98.2 | 94.1 | 77.0 | 0.847 | 0.735 | 0.842 |
| WBCE loss | 97.8 | 78.9 | 91.4 | 0.847 | 0.734 | 0.838 |
| Proposed AWBCE loss | 98.5 | 93.5 | 82.8 | 0.878 | 0.783 | 0.872 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, P.; Foody, G.; Zhang, Y.; Wang, Y.; Wang, X.; Li, S.; Shen, L.; Du, Y.; Li, X. Using an Area-Weighted Loss Function to Address Class Imbalance in Deep Learning-Based Mapping of Small Water Bodies in a Low-Latitude Region. Remote Sens. 2025, 17, 1868. https://doi.org/10.3390/rs17111868
