A Texture-Enhanced Deep Learning Network for Cloud Detection of GaoFen/WFV by Integrating an Object-Oriented Dynamic Threshold Labeling Method and Texture-Feature-Enhanced Attention Module

Abstract

1. Introduction

- (1) Traditional threshold-based and texture analysis-based image processing methods primarily rely on analyzing the spectral characteristics of clouds and other objects in the image. These methods set different thresholds for various spectral channels in RS imagery to detect clouds. However, they often misclassify large areas of bright ground surface and snow as clouds [9].

- (2) Machine learning methods based on handcrafted physical features use manually selected features such as image texture and brightness. These methods demonstrate good detection accuracy and robustness but are less accurate on thin clouds than on thick clouds [10].

- (3) Deep learning methods based on convolutional neural networks have been widely used for cloud detection and have achieved excellent performance [8]. These methods design different network architectures to extract hierarchical features for cloud detection. However, most of them require large, precise, pixel-level annotated datasets, which are time-consuming and expensive to create because clouds are diverse in type, irregular in geometric structure, and uneven in spectral characteristics.

- (4)

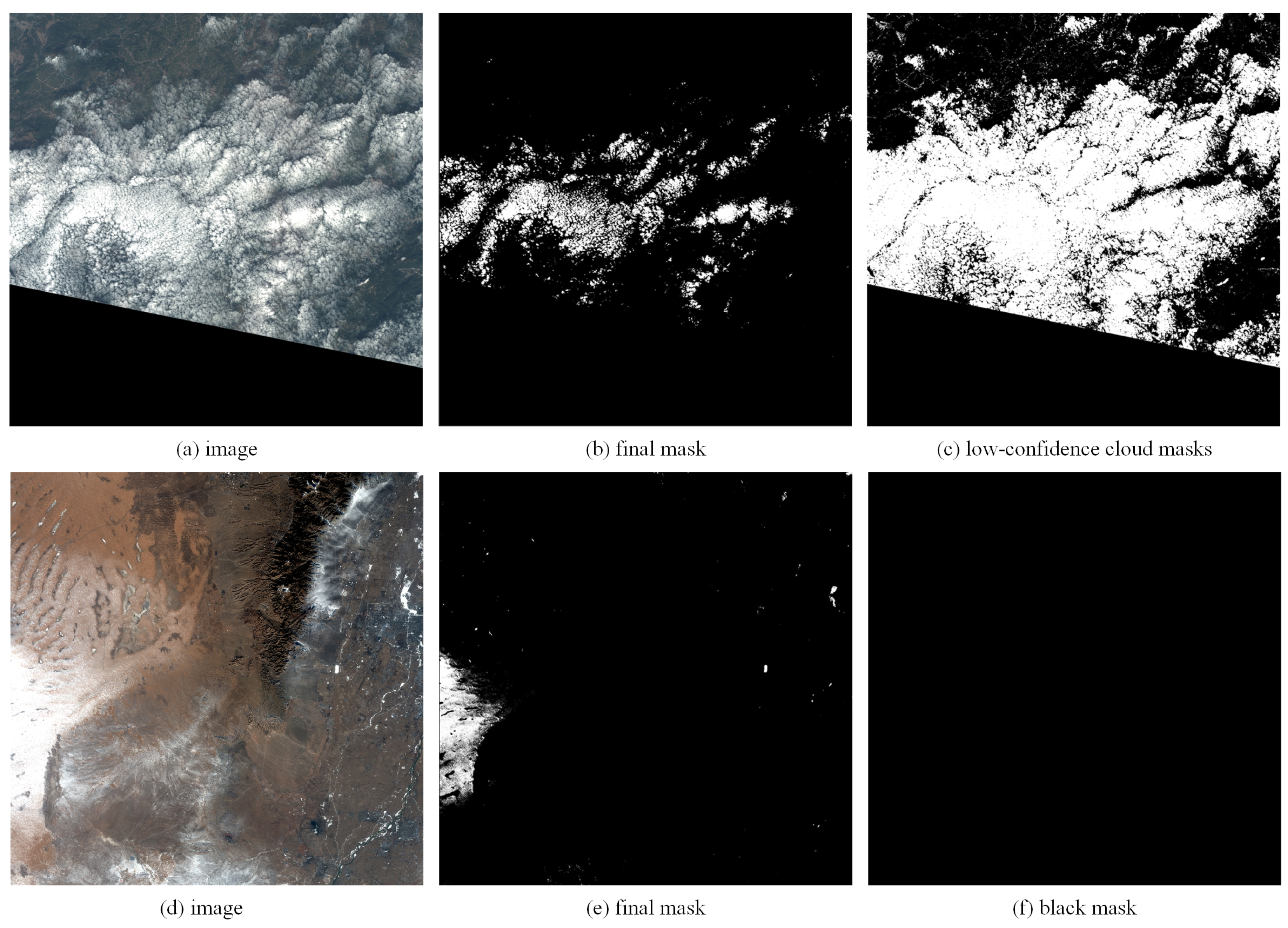

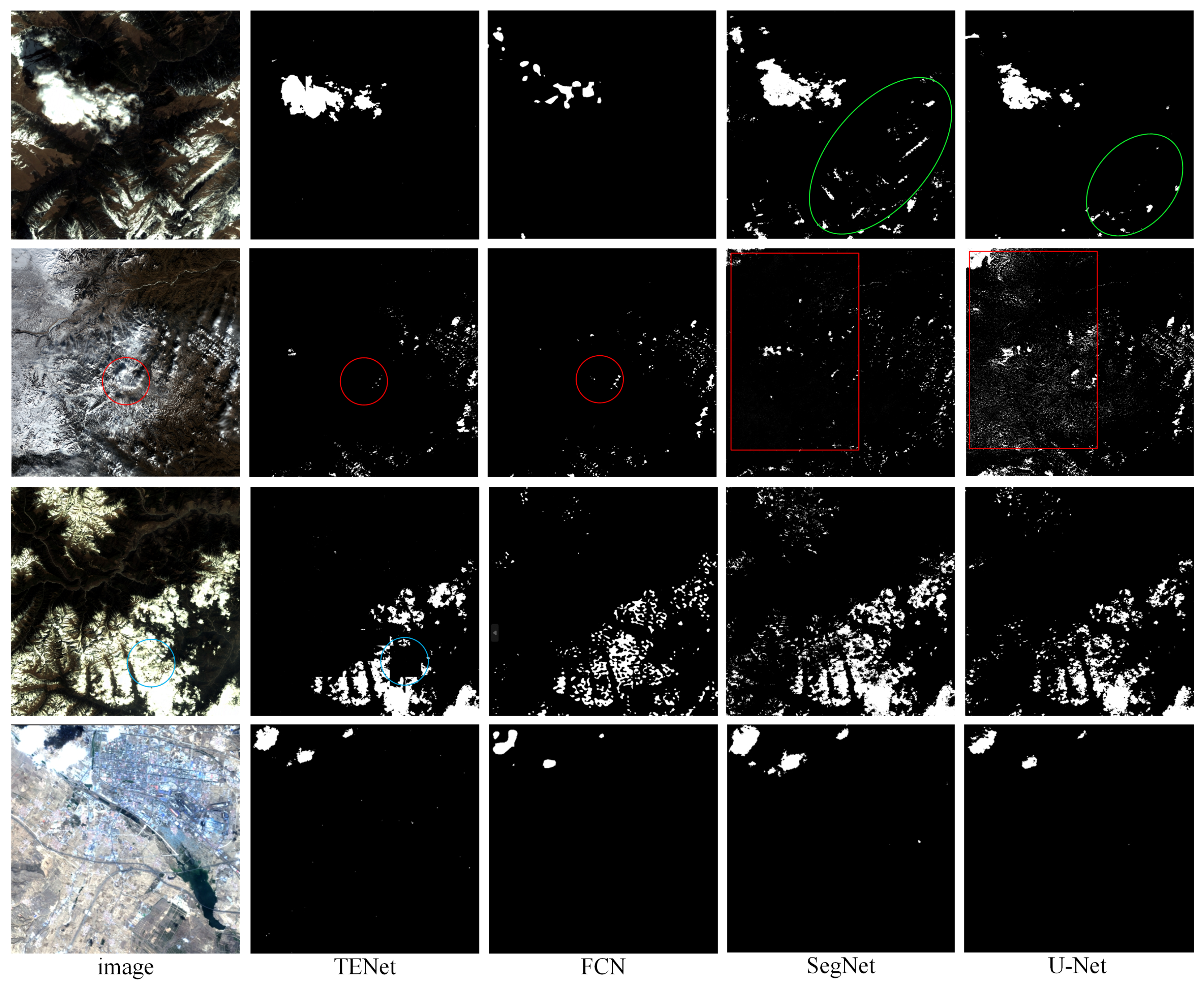

- Weakly supervised cloud detection (WDCD) methods have gained widespread attention to address the annotation burden. However, these methods’ performance based on physical properties is not ideal in complex cloud scenes and mixed ice/snow environments. Since the training samples are scarce for these challenging surfaces, the WDCD methods have low accuracy for certain specific scenes, such as snow-covered mountains, snow-covered land, deserts, saline–alkali land, and urban bright surfaces, as shown in Figure 1. As a result, weakly supervised deep learning (DL) models trained on these inaccurate datasets often produce poor predictions in such scenes.
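The failure mode described in item (1) can be illustrated with a toy example. The sketch below applies a fixed per-band threshold to three made-up reflectance pixels (cloud, snow, vegetation) in the four GF-1 WFV bands. The threshold values, the pixel values, and the function name `fixed_threshold_cloud_mask` are all hypothetical and are not taken from the paper; the point is only that a bright snow pixel passes the same test as a cloud pixel.

```python
import numpy as np

# Hypothetical per-band reflectance thresholds (blue, green, red, NIR).
# These values are illustrative only and do not come from the paper.
BAND_THRESHOLDS = np.array([0.30, 0.30, 0.30, 0.30])


def fixed_threshold_cloud_mask(image: np.ndarray,
                               thresholds: np.ndarray = BAND_THRESHOLDS) -> np.ndarray:
    """Flag a pixel as cloud when every band exceeds its threshold.

    image: reflectance array of shape (bands, H, W).
    Returns a boolean mask of shape (H, W).
    """
    return np.all(image > thresholds[:, None, None], axis=0)


# Toy pixels (made-up reflectances): cloud, snow, vegetation.
pixels = np.array([
    [0.60, 0.60, 0.60, 0.60],  # cloud: bright in all bands
    [0.80, 0.80, 0.80, 0.70],  # snow: also bright in all bands
    [0.05, 0.08, 0.06, 0.40],  # vegetation: dark in visible, bright in NIR
])
scene = pixels.T[:, None, :]   # reshape to (bands, H=1, W=3)

mask = fixed_threshold_cloud_mask(scene)
print(mask)  # the snow pixel is flagged as cloud together with the true cloud pixel
```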

- (1) We develop an adaptive, object-oriented framework with hybrid attention mechanisms for WDCD.

- (2) We propose an automated method to generate large-scale pseudo-cloud annotations without human intervention, which improves labeling efficiency and substantially increases the number of samples available for challenging surfaces.

- (3) We design a downsampling module based on the Haar wavelet transform to strengthen multi-dimensional attention mechanisms (textural, spatial, and channel-wise) by learning complex cloud features, enabling accurate identification of thin cloud regions with subtle spectral characteristics while excluding bright backgrounds.

- (4) Extensive experiments on the four-band GF-1 dataset demonstrate the method’s effectiveness.

The remainder of this paper is organized as follows: Section 2 reviews related work; Section 3 details the proposed framework; Section 4 presents the GF-1 dataset and experimental analysis; Section 5 summarizes the key findings and discusses potential directions for future improvement.
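To make contribution (3) more concrete, the following is a minimal sketch of one level of 2D Haar wavelet downsampling, assuming a `(C, H, W)` NumPy array with even height and width. The function name `haar_downsample` and the ordering of the sub-bands are illustrative choices rather than the paper's implementation, and the attention layers that would consume these features are not shown.

```python
import numpy as np


def haar_downsample(x: np.ndarray) -> np.ndarray:
    """One-level orthonormal 2D Haar transform used as a downsampling step.

    x: feature map of shape (C, H, W) with even H and W.
    Returns an array of shape (4 * C, H // 2, W // 2) holding, in order,
    the low-pass band and the three detail bands stacked on the channel axis.
    """
    if x.ndim != 3 or x.shape[1] % 2 or x.shape[2] % 2:
        raise ValueError("expected shape (C, H, W) with even H and W")

    # The four pixels of every non-overlapping 2x2 block.
    a = x[:, 0::2, 0::2]  # top-left
    b = x[:, 0::2, 1::2]  # top-right
    c = x[:, 1::2, 0::2]  # bottom-left
    d = x[:, 1::2, 1::2]  # bottom-right

    low = (a + b + c + d) / 2.0         # local average (scaled)
    diff_cols = (a - b + c - d) / 2.0   # difference between left and right columns
    diff_rows = (a + b - c - d) / 2.0   # difference between top and bottom rows
    diff_diag = (a - b - c + d) / 2.0   # diagonal difference

    return np.concatenate([low, diff_cols, diff_rows, diff_diag], axis=0)


features = np.arange(32, dtype=float).reshape(2, 4, 4)
out = haar_downsample(features)
print(out.shape)  # (8, 2, 2)
```

Because the transform is orthonormal, the four sub-bands together preserve the energy of the input, so spatial resolution is halved without discarding information; the three detail bands carry the local texture that pooling or strided convolution would average away. In a network, a learned 1×1 convolution would typically follow to mix the stacked sub-bands; that layer is omitted here.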

2. Related Work

2.1. Cloud Detection in RS Imagery

2.2. Wavelet Transform and Feature Enhancement Methods in CNNs

3. Methodology

3.1. Overview

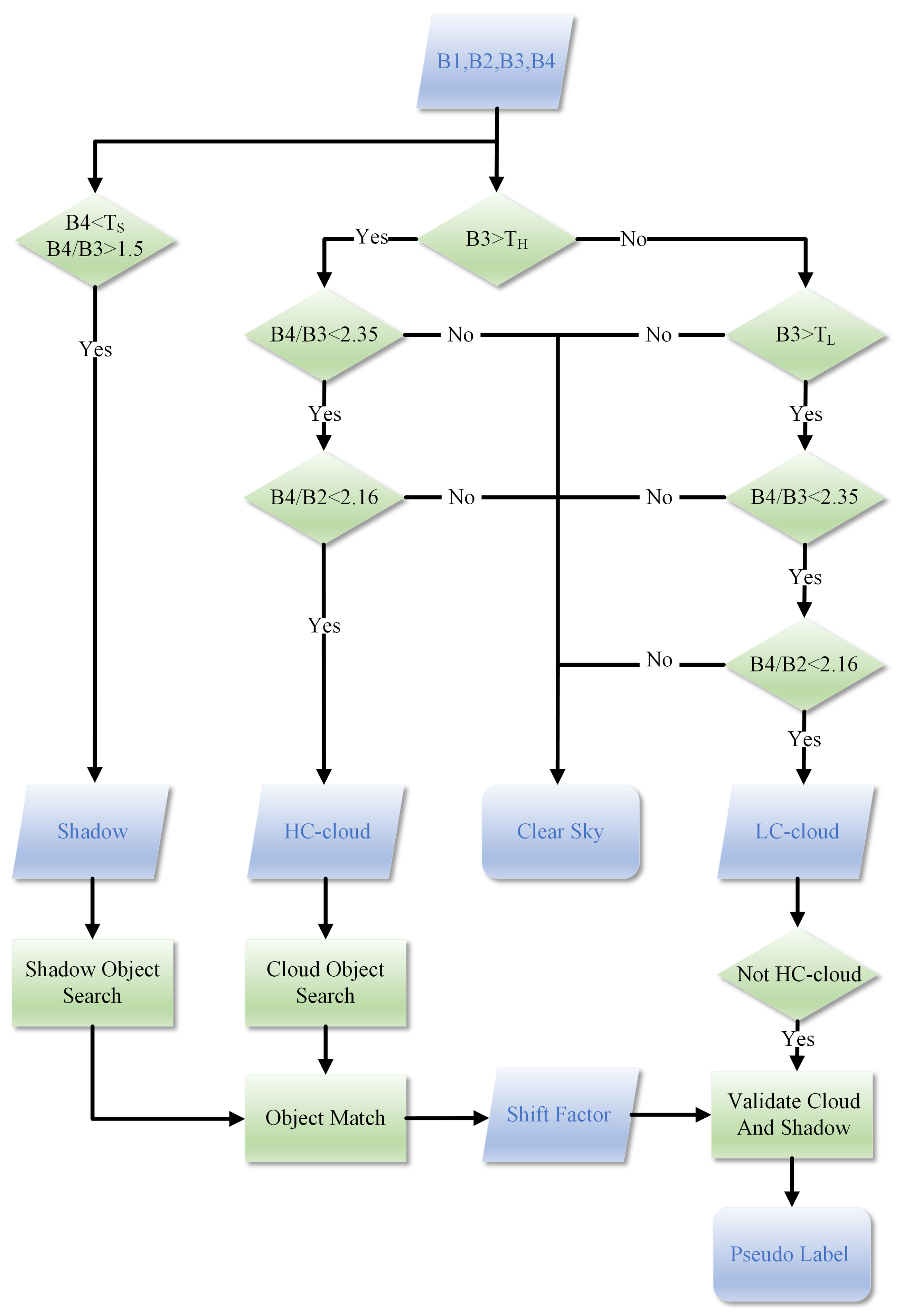

3.2. Object-Oriented Dynamic Threshold Pseudo-Cloud Pixel Labeling Method

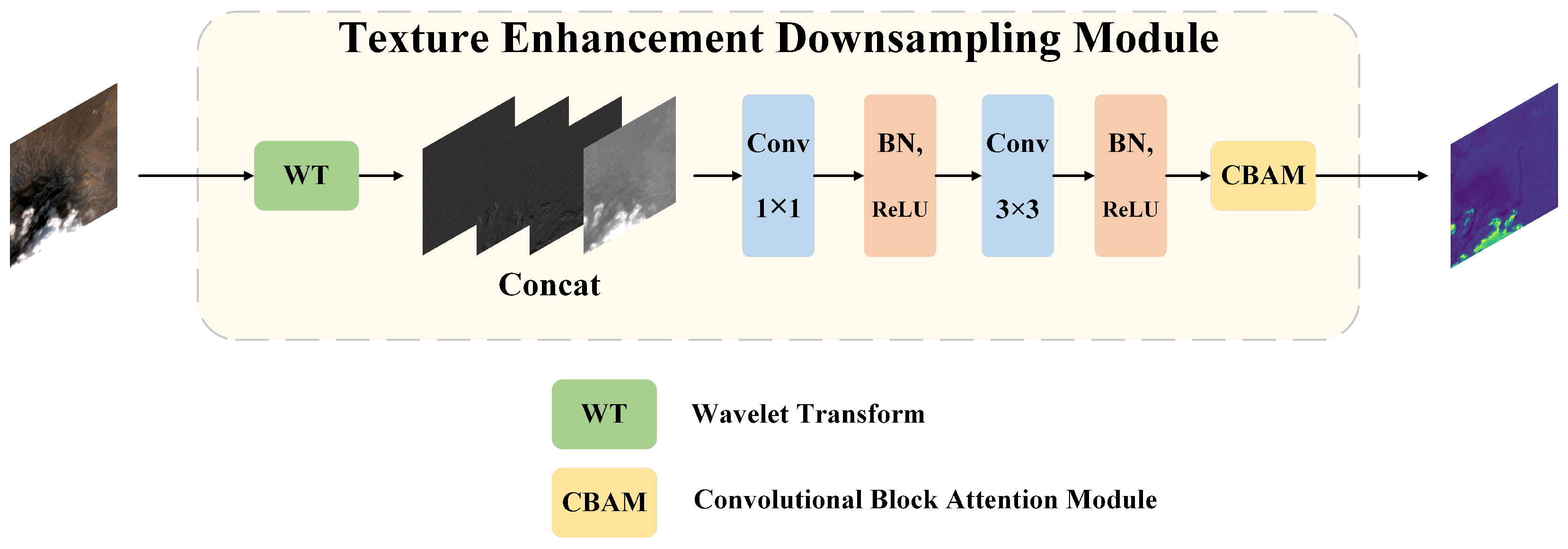

3.3. Texture-Enhanced Downsampling Module

3.4. Loss Function

4. Experiment and Analysis

4.1. Dataset

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Ablation Experiments

4.5. Comparison

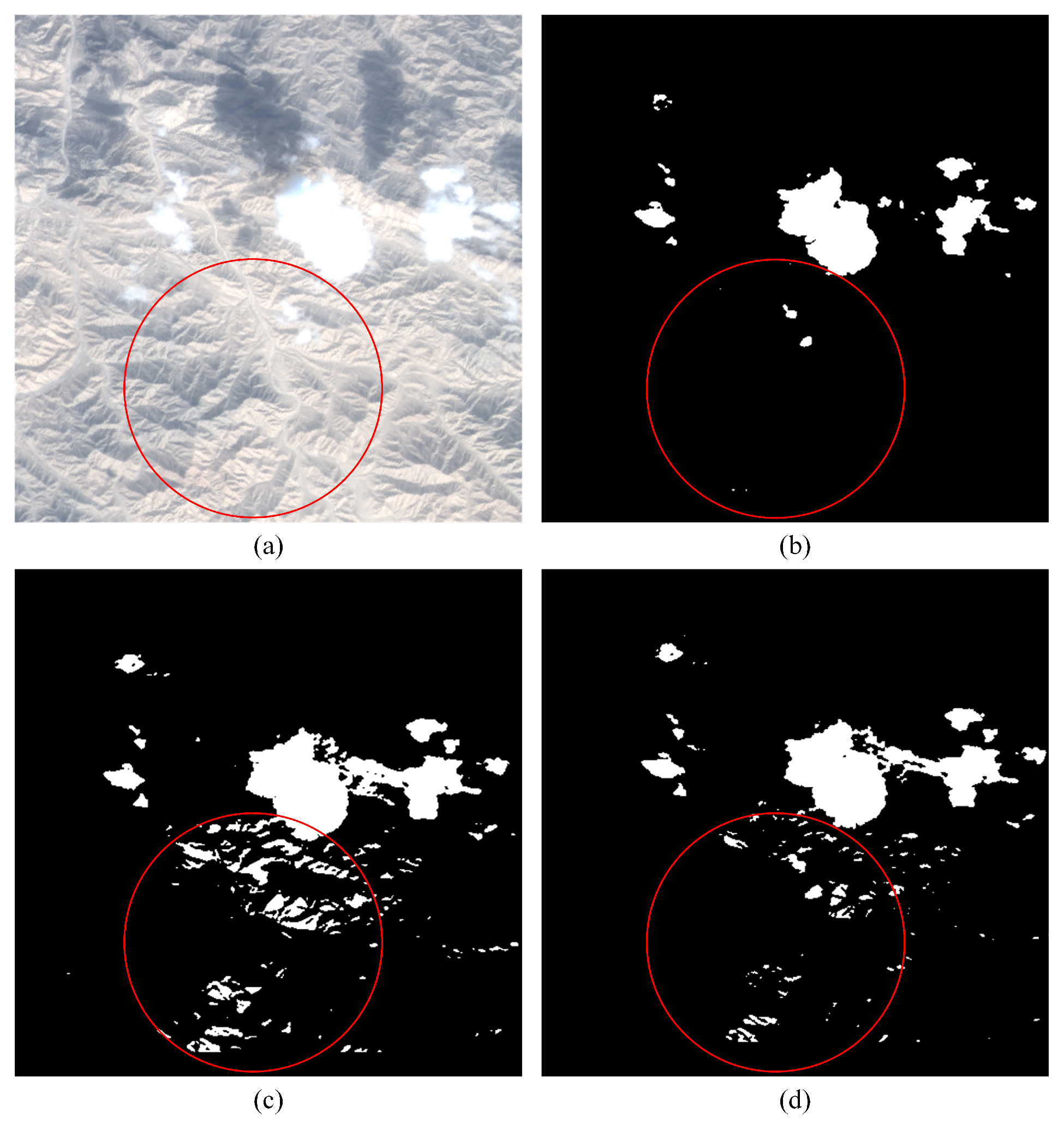

4.6. Challenging Image Analysis

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, J.; Pei, Y.; Zhao, S.; Xiao, R.; Sang, X.; Zhang, C. A review of remote sensing for environmental monitoring in China. Remote Sens. 2020, 12, 1130.

- Wang, X. Remote sensing applications to climate change. Remote Sens. 2023, 15, 747.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Wellmann, T.; Lausch, A.; Andersson, E.; Knapp, S.; Cortinovis, C.; Jache, J.; Scheuer, S.; Kremer, P.; Mascarenhas, A.; Kraemer, R.; et al. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landsc. Urban Plan. 2020, 204, 103921.

- Simpson, J.J.; Stitt, J.R. A procedure for the detection and removal of cloud shadow from AVHRR data over land. IEEE Trans. Geosci. Remote Sens. 1998, 36, 880–897.

- Chen, Y.; Tao, F. Potential of remote sensing data-crop model assimilation and seasonal weather forecasts for early-season crop yield forecasting over a large area. Field Crop. Res. 2022, 276, 108398.

- Gawlikowski, J.; Ebel, P.; Schmitt, M.; Zhu, X.X. Explaining the effects of clouds on remote sensing scene classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9976–9986.

- Mahajan, S.; Fataniya, B. Cloud detection methodologies: Variants and development—A review. Complex Intell. Syst. 2020, 6, 251–261.

- Liu, J.; Wang, X.; Guo, M.; Feng, R.; Wang, Y. Shadow Detection in Remote Sensing Images Based on Spectral Radiance Separability Enhancement. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 3438–3449.

- Wang, Z.; Zhao, L.; Meng, J.; Han, Y.; Li, X.; Jiang, R.; Chen, J.; Li, H. Deep learning-based cloud detection for optical remote sensing images: A Survey. Remote Sens. 2024, 16, 4583.

- Irish, R.R.; Barker, J.L.; Goward, S.N.; Arvidson, T. Characterization of the Landsat-7 ETM+ automated cloud-cover assessment (ACCA) algorithm. Photogramm. Eng. Remote Sens. 2006, 72, 1179–1188.

- Zhu, Z.; Wang, S.; Woodcock, C. Improvement and expansion of the Fmask algorithm: Cloud, cloud shadow, and snow detection for Landsats 4–7, 8, and Sentinel 2 images. Remote Sens. Environ. 2015, 159, 269–277.

- Zhong, B.; Chen, W.; Wu, S.; Hu, L.; Luo, X.; Liu, Q. A cloud detection method based on relationship between objects of cloud and cloud-shadow for Chinese moderate to high resolution satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4898–4908.

- Xu, G.; Liao, W.; Zhang, X.; Li, C.; He, X.; Wu, X. Haar wavelet downsampling: A simple but effective downsampling module for semantic segmentation. Pattern Recognit. 2023, 143, 109819.

- Li, Z.; Shen, H.; Li, H.; Xia, G.; Gamba, P.; Zhang, L. Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery. Remote Sens. Environ. 2017, 191, 342–358.

- Tian, M.; Chen, H.; Liu, G. Cloud detection and classification for S-NPP FSR CRIS data using supervised machine learning. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: New York, NY, USA, 2019; pp. 9827–9830.

- Li, J.; Wu, Z.; Hu, Z.; Jian, C.; Luo, S.; Mou, L.; Zhu, X.X.; Molinier, M. A lightweight deep learning-based cloud detection method for Sentinel-2A imagery fusing multiscale spectral and spatial features. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–19.

- Zhang, J.; Wu, J.; Wang, H.; Wang, Y.; Li, Y. Cloud detection method using CNN based on cascaded feature attention and channel attention. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–17.

- Wu, X.; Shi, Z.; Zou, Z. A geographic information-driven method and a new large scale dataset for remote sensing cloud/snow detection. ISPRS J. Photogramm. Remote Sens. 2021, 174, 87–104.

- Chen, Y.; Weng, Q.; Tang, L.; Liu, Q.; Fan, R. An automatic cloud detection neural network for high-resolution remote sensing imagery with cloud–snow coexistence. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Li, X.; Yang, X.; Li, X.; Lu, S.; Ye, Y.; Ban, Y. GCDB-UNet: A novel robust cloud detection approach for remote sensing images. Knowl.-Based Syst. 2022, 238, 107890.

- Zhu, X.; Helmer, E.H. An automatic method for screening clouds and cloud shadows in optical satellite image time series in cloudy regions. Remote Sens. Environ. 2018, 214, 135–153.

- Buttar, P.K.; Sachan, M.K. Semantic segmentation of clouds in satellite images based on U-Net++ architecture and attention mechanism. Expert Syst. Appl. 2022, 209, 118380.

- Chan, L.; Hosseini, M.S.; Plataniotis, K.N. A comprehensive analysis of weakly-supervised semantic segmentation in different image domains. Int. J. Comput. Vis. 2021, 129, 361–384.

- Wang, S.; Chen, W.; Xie, S.M.; Azzari, G.; Lobell, D.B. Weakly supervised deep learning for segmentation of remote sensing imagery. Remote Sens. 2020, 12, 207.

- Zhou, Y.; Wang, H.; Yang, R.; Yao, G.; Xu, Q.; Zhang, X. A novel weakly supervised remote sensing landslide semantic segmentation method: Combining CAM and cycleGAN algorithms. Remote Sens. 2022, 14, 3650.

- Liu, Y.; Li, Q.; Li, X.; He, S.; Liang, F.; Yao, Z.; Jiang, J.; Wang, W. Leveraging physical rules for weakly supervised cloud detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–18.

- Ma, H.; Liu, D.; Xiong, R.; Wu, F. iWave: CNN-based wavelet-like transform for image compression. IEEE Trans. Multimed. 2019, 22, 1667–1679.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. arXiv 2018, arXiv:1810.12348.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Baaziz, N.; Abahmane, O.; Missaoui, R. Texture feature extraction in the spatial-frequency domain for content-based image retrieval. arXiv 2010, arXiv:1012.5208.

- Ross, T.Y.; Dollár, G. Focal loss for dense object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2980–2988.

- Zhong, B.; Yang, A.; Liu, Q.; Wu, S.; Shan, X.; Mu, X.; Hu, L.; Wu, J. Analysis ready data of the Chinese GaoFen satellite data. Remote Sens. 2021, 13, 1709.

| Components | Challenge | Non-Challenge | Total |
|---|---|---|---|
| Only Negative | 58 | 28 | 86 |
| Both Included | 115 | 274 | 389 |
| Total | 173 | 302 | 475 |

| Methods | OA (%) | F1 (%) | mIoU (%) | Kappa (%) |
|---|---|---|---|---|
| Baseline | 91.35 | 75.09 | 75.08 | 69.86 |
| CAM+U-Net | 96.94 | 91.09 | 90.00 | 89.25 |
| CBAM+U-Net | 96.71 | 90.38 | 89.29 | 88.40 |
| TENet | 96.98 | 91.21 | 90.13 | 89.39 |

| Methods | OA (%) | F1 (%) | mIoU (%) | Kappa (%) | Params (MB) | FLOPs (G) |
|---|---|---|---|---|---|---|
| SegNet | 91.09 | 75.18 | 74.97 | 69.76 | 7.37 | 15.84 |
| U-Net | 91.35 | 75.09 | 75.08 | 69.86 | 13.40 | 48.69 |
| FCN | 96.40 | 89.70 | 88.53 | 87.52 | 134.29 | 99.78 |
| TENet | 96.98 | 91.21 | 90.13 | 89.39 | 11.3 | 45.03 |
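The accuracy columns in the tables above (OA, F1, mIoU, and the kappa coefficient) can all be derived from a binary cloud/non-cloud confusion matrix. The sketch below uses the standard definitions of overall accuracy, F1 score, mean IoU over the two classes, and Cohen's kappa; it assumes these match the definitions used in the paper, which are not reproduced in this section, and the function name `cloud_metrics` is hypothetical.

```python
def cloud_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard binary segmentation metrics from pixel counts.

    tp: cloud pixels predicted as cloud
    fp: non-cloud pixels predicted as cloud
    fn: cloud pixels predicted as non-cloud
    tn: non-cloud pixels predicted as non-cloud
    Assumes both classes occur in the reference and in the prediction,
    so that no denominator is zero.
    """
    n = tp + fp + fn + tn

    oa = (tp + tn) / n                      # overall accuracy
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)

    iou_cloud = tp / (tp + fp + fn)
    iou_clear = tn / (tn + fp + fn)
    miou = (iou_cloud + iou_clear) / 2      # mean IoU over the two classes

    # Agreement expected by chance, from the row and column totals.
    pe = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    kappa = (oa - pe) / (1 - pe)            # Cohen's kappa

    return {"OA": oa, "F1": f1, "mIoU": miou, "Kappa": kappa}


# Made-up pixel counts, for illustration only.
scores = cloud_metrics(tp=40, fp=10, fn=10, tn=40)
print({name: round(100 * value, 2) for name, value in scores.items()})
```

Kappa corrects overall accuracy for chance agreement, which is why it can sit well below OA when one class dominates the scene.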

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhong, B.; Tang, X.; Luo, X.; Wu, S.; Ao, K. A Texture-Enhanced Deep Learning Network for Cloud Detection of GaoFen/WFV by Integrating an Object-Oriented Dynamic Threshold Labeling Method and Texture-Feature-Enhanced Attention Module. Remote Sens. 2025, 17, 1677. https://doi.org/10.3390/rs17101677
