Derivation of 3D Coseismic Displacement Field from Integrated Azimuth and LOS Displacements for the 2018 Hualien Earthquake

Abstract

1. Introduction

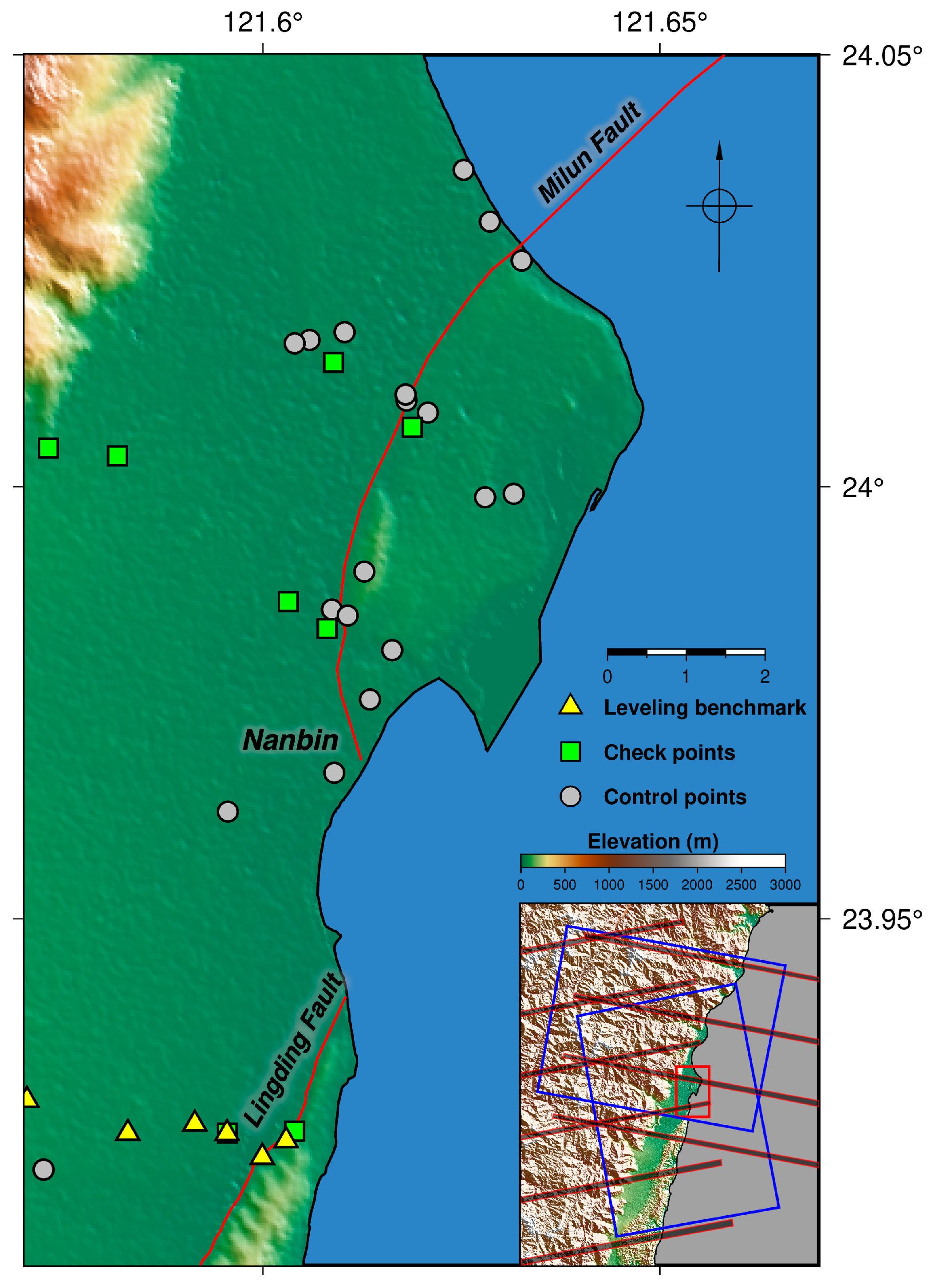

2. Data and Materials

2.1. SAR Images

2.2. GPS and Leveling Data

3. Methods

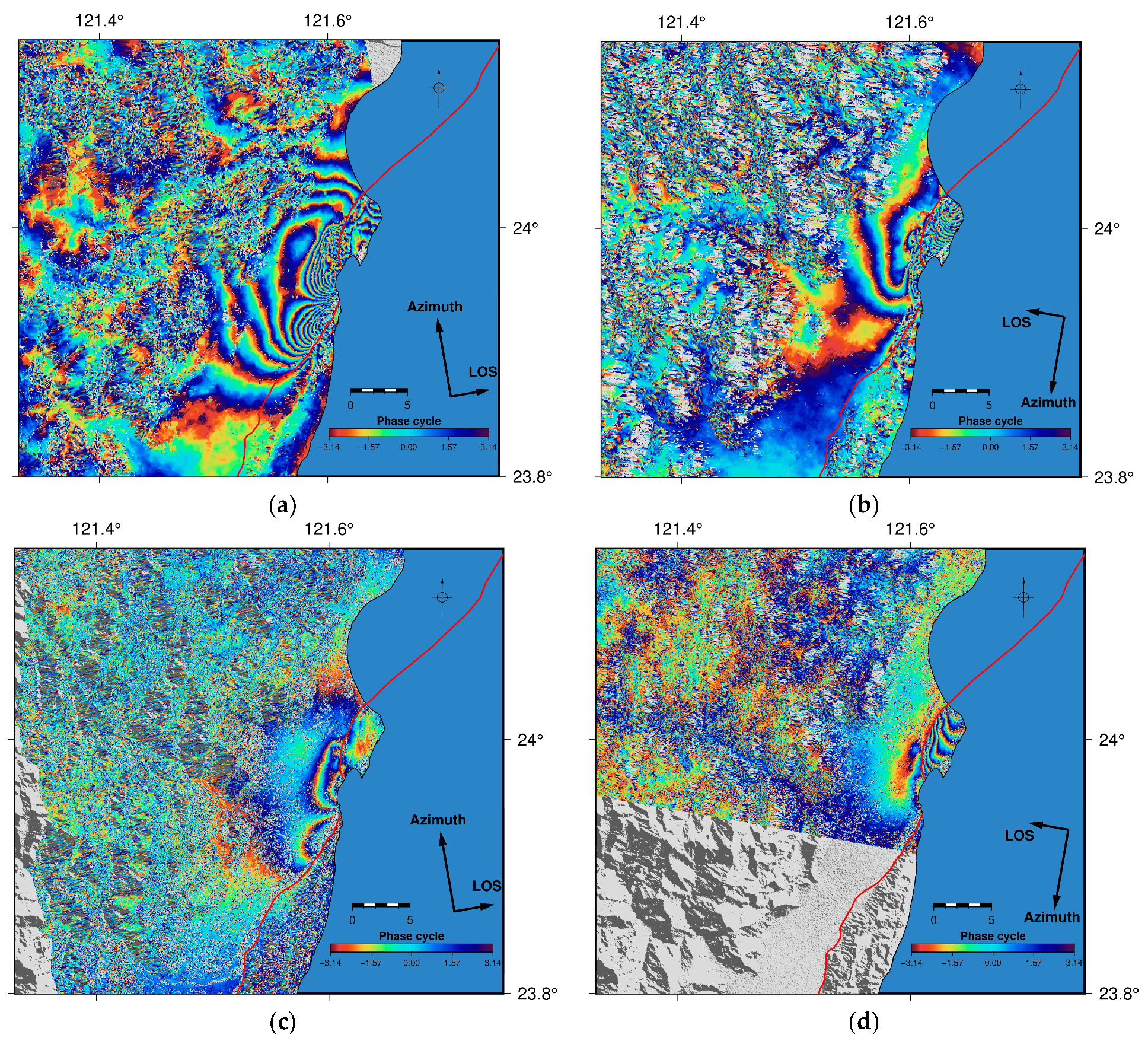

3.1. SAR-Based Surface Displacement Retrieval

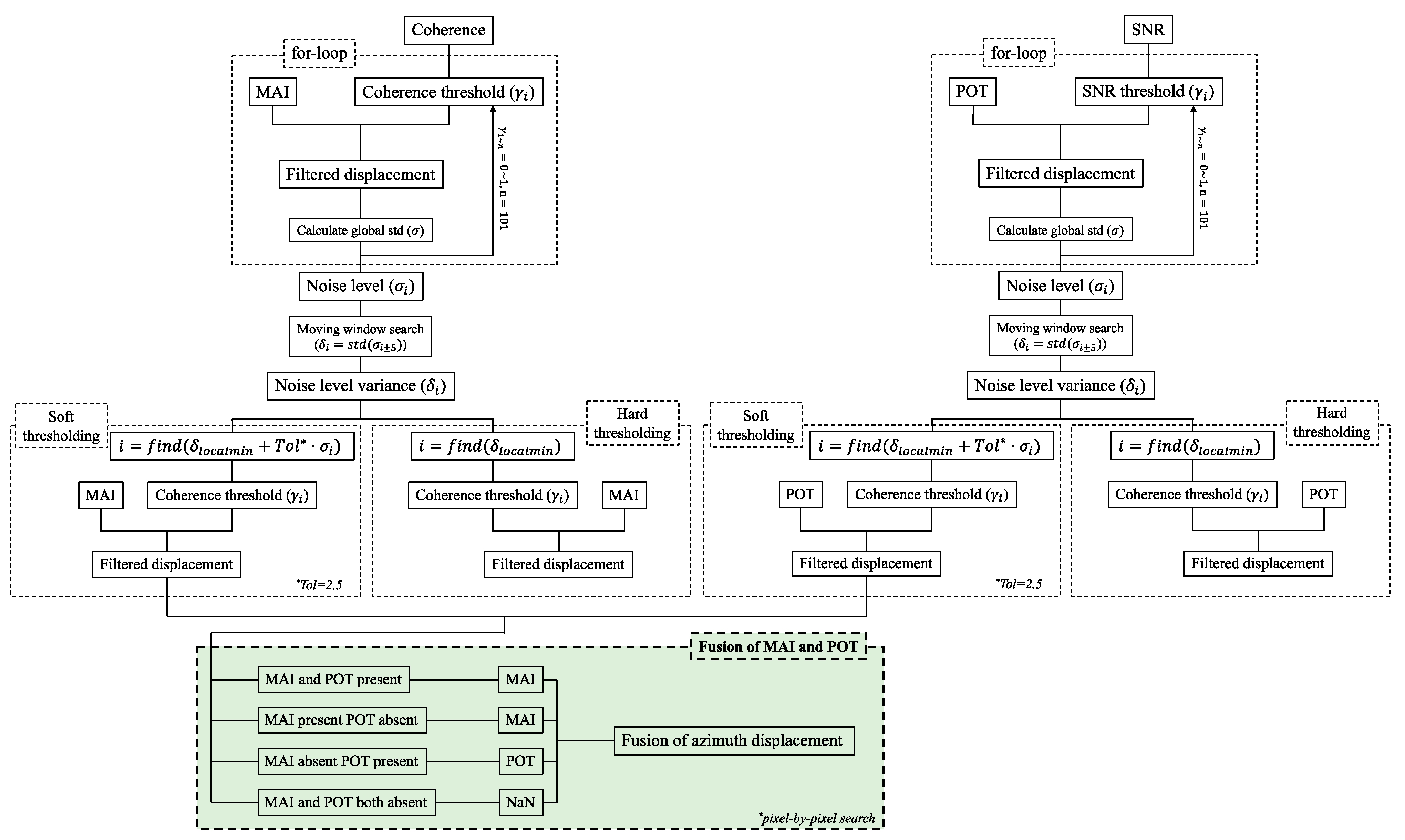

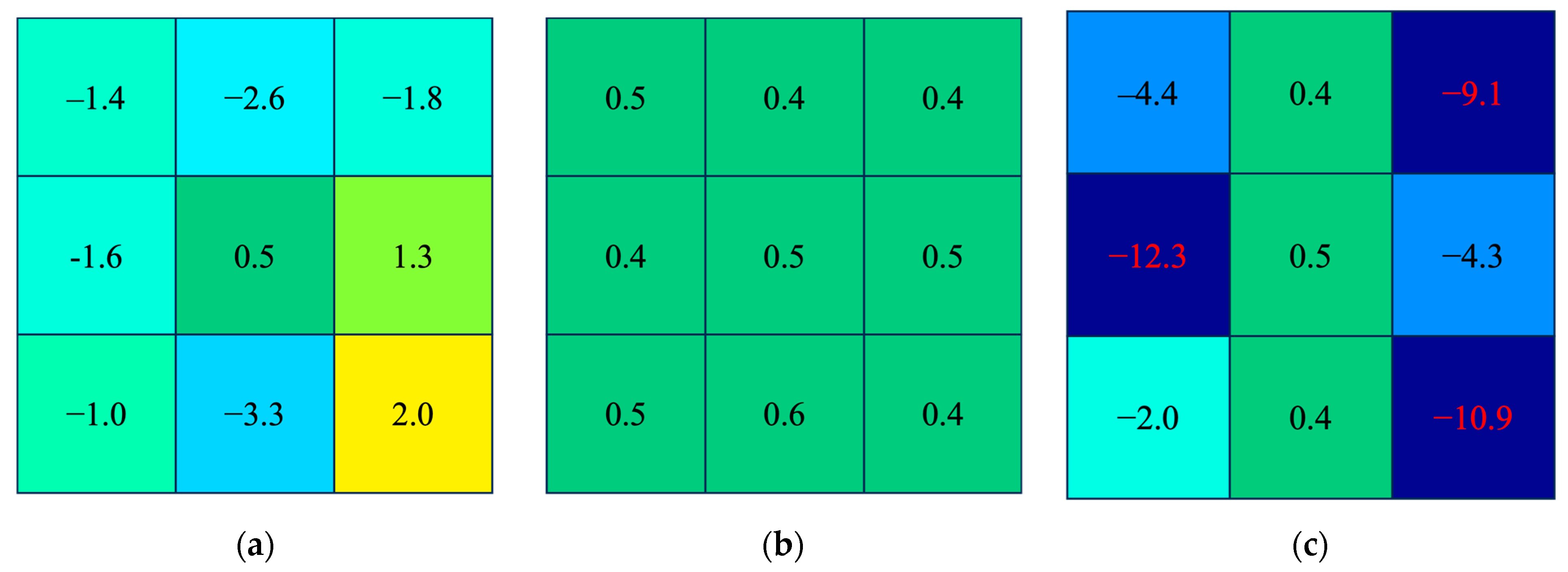

3.2. Azimuth Displacement Integration

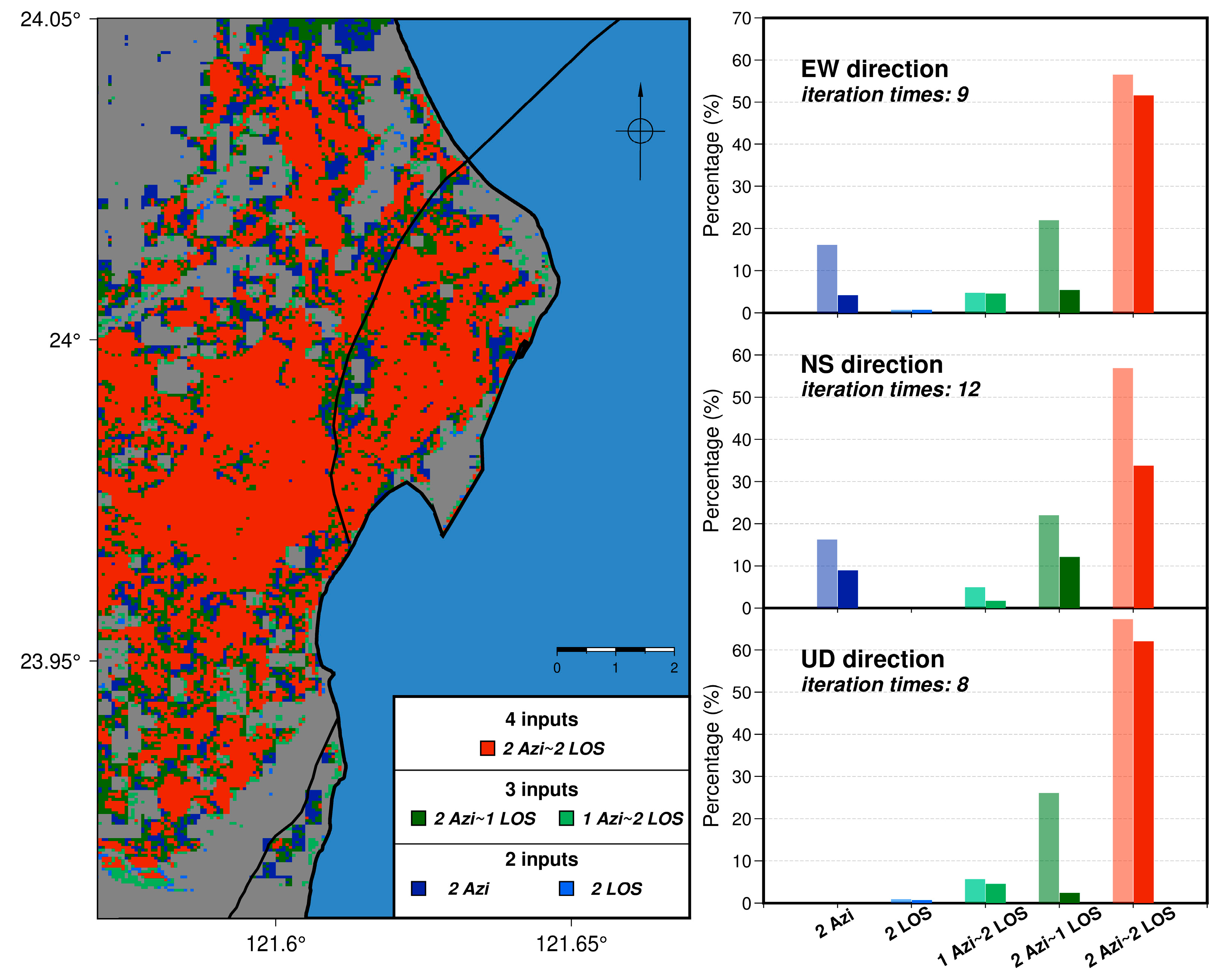

3.3. 3D Displacement Inversion

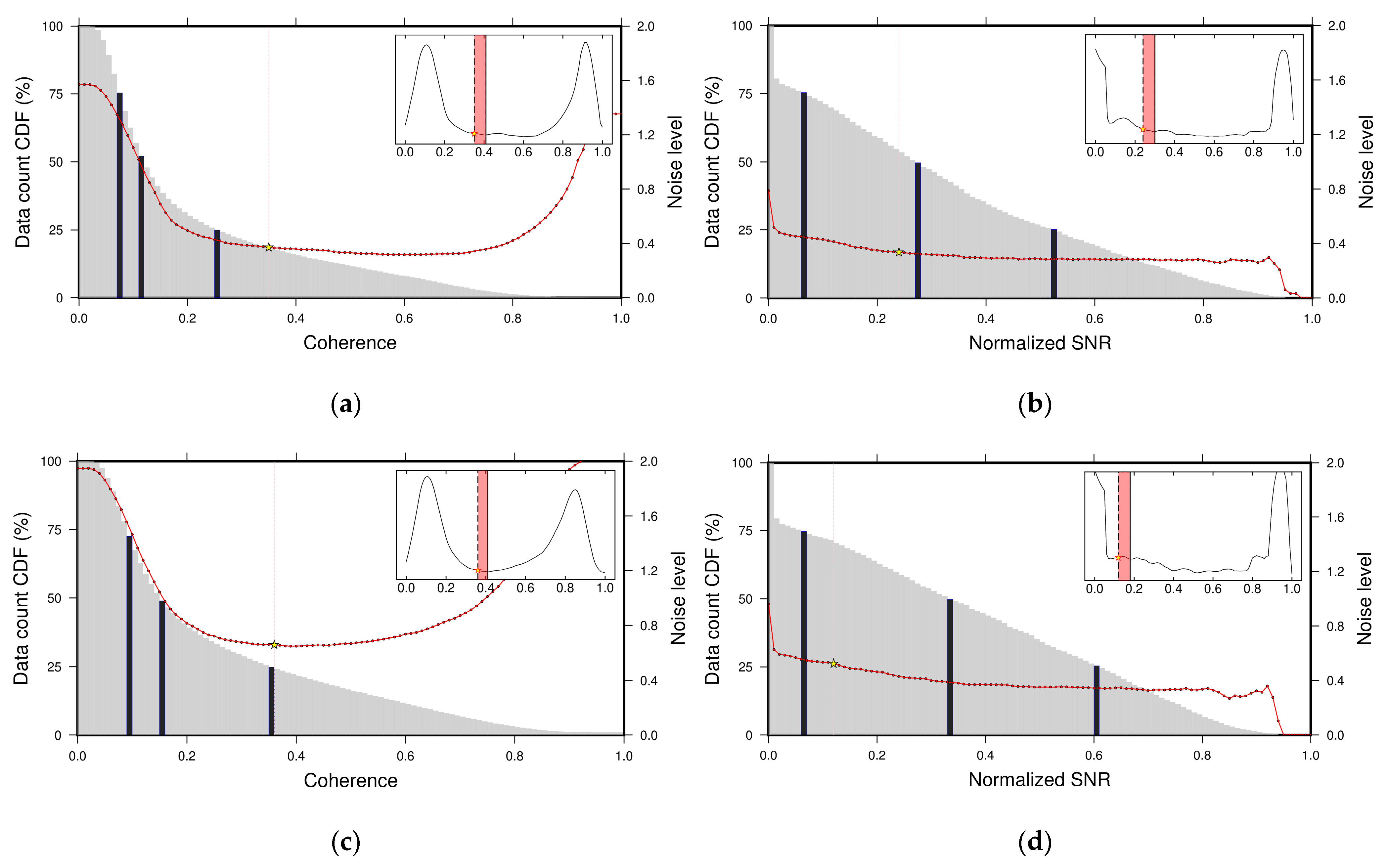

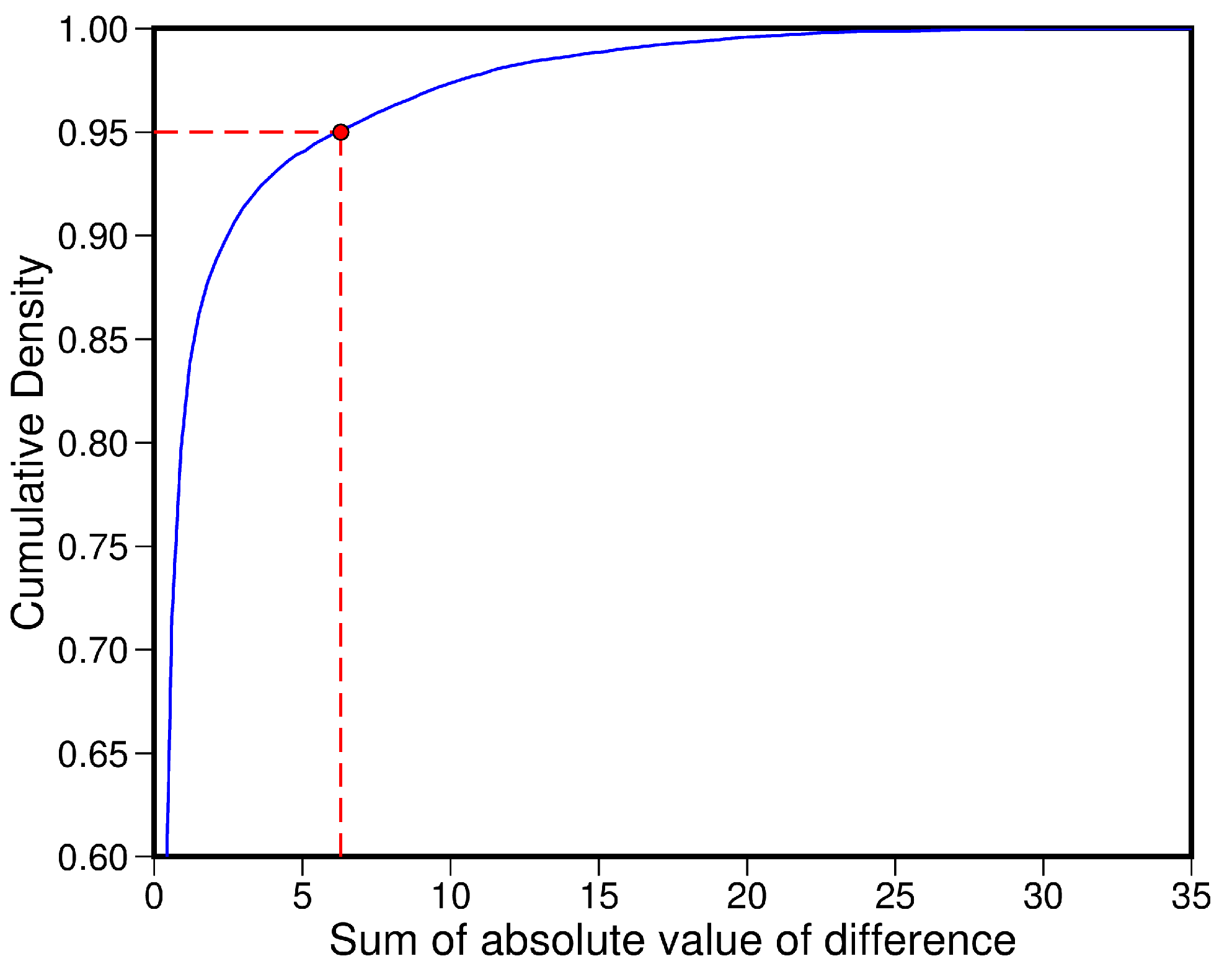

3.4. Denoising Criterion

4. Results

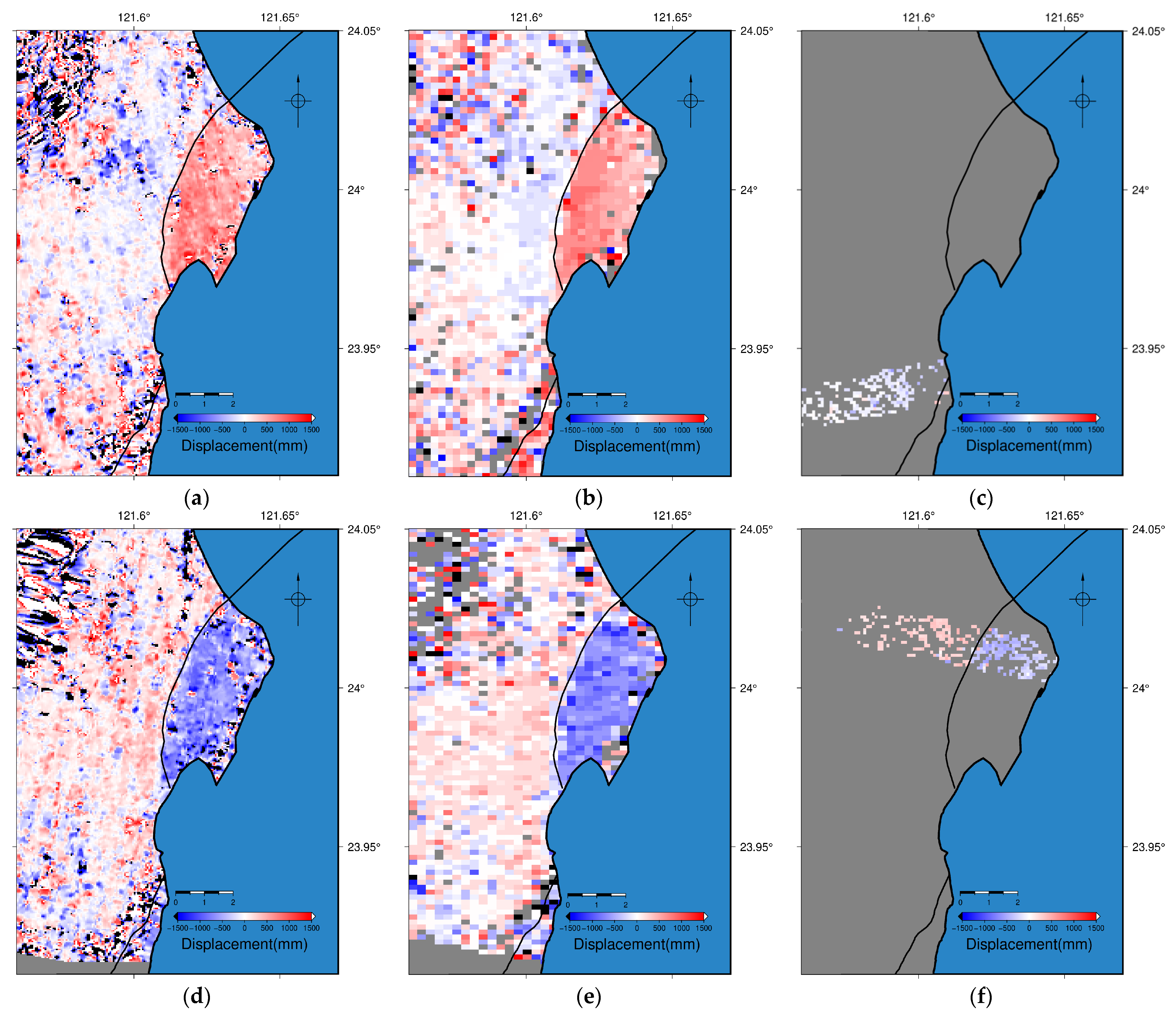

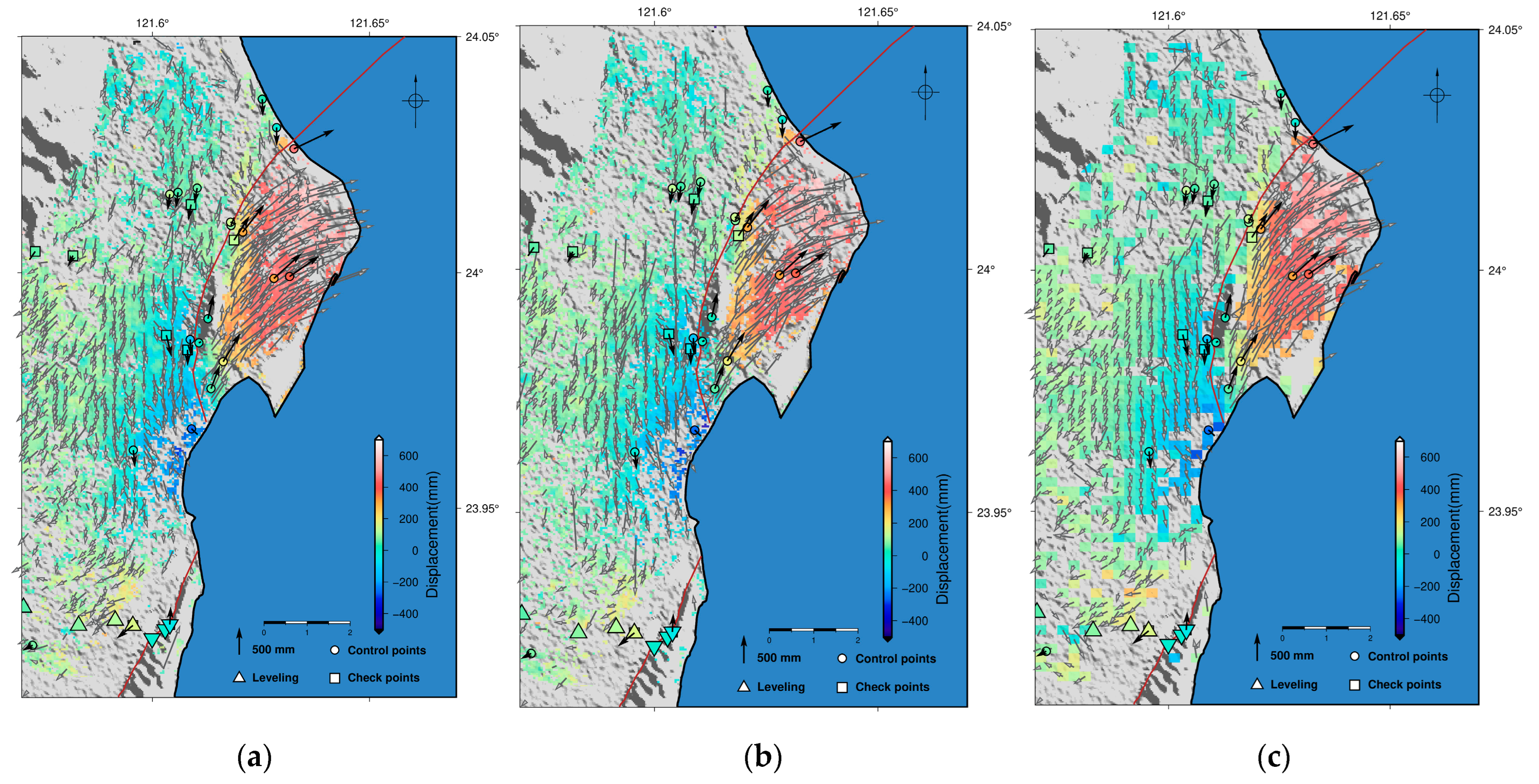

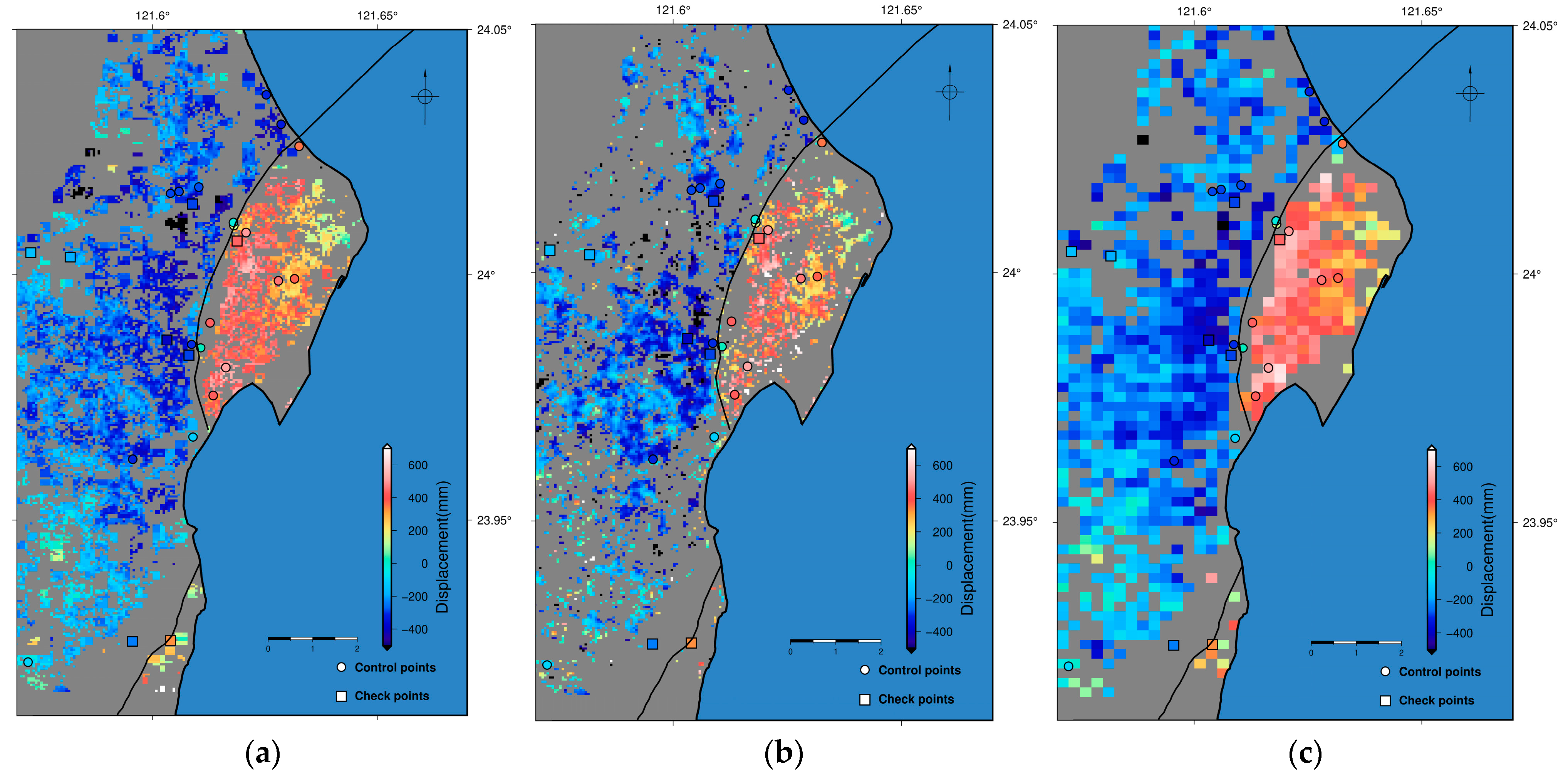

4.1. SAR-Derived Surface Displacement

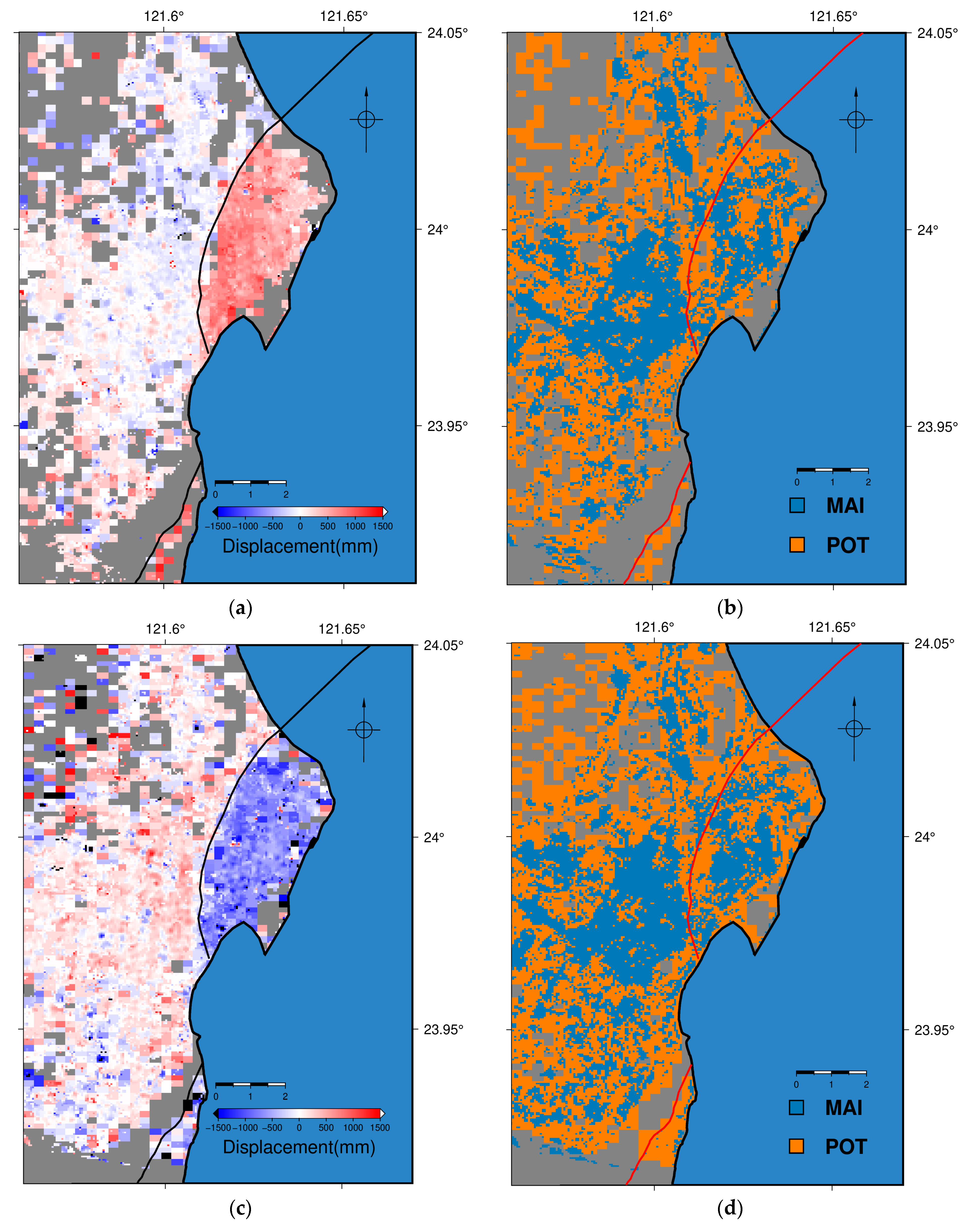

4.2. Integrated Azimuth Displacement

4.3. 3D Displacement Field

5. Discussion

5.1. Comparison between Different Versions of 3D Displacement Fields

5.2. Performance of Denoising

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Satellite | Orbit | Reference Date | Aligned Date | Scan Mode | Wavelength |
|---|---|---|---|---|---|
| Sentinel-1 | Ascending | 3 February 2018 | 15 February 2018 | TOPS | C-band |
| Sentinel-1 | Descending | 5 February 2018 | 11 February 2018 | TOPS | C-band |
| ALOS-2 | Ascending | 5 November 2016 | 10 February 2018 | Stripmap-HBQ ¹ | L-band |
| ALOS-2 | Descending | 18 June 2017 | 11 February 2018 | Stripmap-FBD ² | L-band |

| Version | EW Error ¹ | NS Error ¹ | UD Error ¹ | X Spacing ² | Y Spacing ² |
|---|---|---|---|---|---|
| DInSAR + Integrated azimuth displacement (1) | 2.2 | 9.5 | 4.3 | 61.6 | 46.2 |
| DInSAR + MAI (2) | 2.0 | 19.8 | 2.7 | 61.6 | 46.2 |
| DInSAR + POT (3) | 48.6 | 11.6 | 3.2 | 279.0 | 212.7 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, L.-C.J.; Chuang, R.Y.; Lu, C.-H.; Ching, K.-E.; Chen, C.-L. Derivation of 3D Coseismic Displacement Field from Integrated Azimuth and LOS Displacements for the 2018 Hualien Earthquake. Remote Sens. 2024, 16, 1159. https://doi.org/10.3390/rs16071159
