1. Introduction

Synthetic aperture radar (SAR) is widely mounted on aerial platforms such as airplanes because its weather-independent, day-and-night sensing capability yields high-resolution images over long distances [1,2,3,4,5]. It has been applied in various fields, such as environmental monitoring, ground surveying, and target detection. Classic SAR imaging modes include the traditional stripmap mode, the high-resolution spotlight mode, and the large-scene scanning mode, each with advantages and disadvantages regarding resolution and coverage [6,7,8,9]. A new imaging mode called widefield staring SAR (WFS-SAR) has been introduced to overcome the limitations of the classic modes; it can offer sub-meter-resolution images over large-swath scenes [10,11,12]. WFS-SAR is mainly implemented as an extension of the spotlight mode and can be deployed on platforms with linear or maneuvering trajectories [13,14,15]. With appropriate beam shaping and control, a larger detection area can be obtained while maintaining high-resolution imaging. The WFS-SAR mode is therefore well suited to applications that demand high-resolution imaging over a wide area.

This paper considers the more complex and general case of WFS-SAR imaging with a maneuvering trajectory. As the spatial detection area expands and the time-frequency support region grows, frequency domain imaging algorithms based on approximations in the transform domain, such as the range-Doppler algorithm (RDA) [16,17], the chirp-scaling algorithm (CSA) [18,19], and the nonlinear CSA (NCSA) [20,21,22], leave a significant residual phase error that degrades imaging performance. Although time domain imaging algorithms such as the back-projection algorithm (BPA) and its modifications can be used for SAR imaging with any trajectory and working mode [23,24,25], they place strict requirements on the navigation and digital signal processing systems, which makes them difficult to apply in real-time processing. The wavenumber domain polar format algorithm (PFA) is a typical spotlight SAR imaging method that eliminates spectrum aliasing through Deramp processing and achieves two-dimensional focusing through wavenumber spectrum resampling [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40]. In addition, the implicit keystone transform in PFA partly alleviates the range cell migration (RCM) caused by radar platform jitter and target motion, so the RCM can still be corrected effectively even with low inertial navigation accuracy or moving targets [28]. Therefore, PFA has inherent advantages in high-resolution imaging and can be extended to WFS-SAR imaging.

However, PFA has a serious deficiency: its derivation relies on a planar wavefront assumption that does not hold in practice [29,30]. The echo received by the radar antenna actually has a spherical wavefront, and the neglected wavefront curvature leads to significant distortion and defocusing in the final image. The degree of distortion and defocusing increases with the distance from the reference position used in the PFA processing. This spatial variability makes the error difficult to compensate through autofocusing and affine transformation [31]. Therefore, an essential step in PFA is to analyze and correct the wavefront curvature error (WCE).

A significant body of literature has been dedicated to the wavefront curvature phenomenon. Doren [32,33] first analyzed the wavefront curvature error and obtained an analytical expression of the WCE phase through an ideal model. Mao [28,34,35] derived a WCE phase suitable for level flight and diving motion and obtained good refocusing results in simulation experiments. The method presented in [36], which introduces a quadratic approximation of the differential slant range and derives the WCE phase through variable substitution, is simple and easy to understand but has a non-negligible error in the high-resolution, widefield SAR mode [37]. These methods assume that the radar platform moves along an ideal linear trajectory. When the platform maneuvers along a complex trajectory, the inevitable acceleration alters the azimuth modulation of the echo and causes image defocusing. Therefore, Deng [38,39] adopted an acceleration error compensation strategy combined with WCE correction, which enables imaging processing for the maneuvering platform. However, the literature mentioned above considers only the impact of the quadratic component of the WCE on image quality. As the resolution improves and the scene expands, the higher-order phase error gradually increases and degrades the azimuth focusing performance. In addition, because the two-dimensional wavenumber domain variables are coupled, the higher-order phase error also affects the envelope of the scattering point. Therefore, in WFS-SAR imaging that combines high resolution with large-swath scenes, it is necessary to derive an accurate WCE phase in the two-dimensional wavenumber domain and to analyze and evaluate the neglected higher-order phase error specifically.

In terms of WCE correction, the authors of [38] propose a local joint compensation method for spatially variant phase error and geometric distortion, which compensates for the WCE phase point by point while performing geometric correction. Although this method offers high accuracy in error compensation, it increases the computational complexity of the algorithm and is difficult to integrate with motion compensation methods. Spatially variant inverse filtering [29,40] is a classic method for correcting the WCE that exploits the gradual variation of the phase error. By dividing the image into sub-images and compensating for the phase error in the transform domain, the method keeps the residual error within the allowable range and yields a refocused SAR image at the cost of a minimal increase in computational complexity.

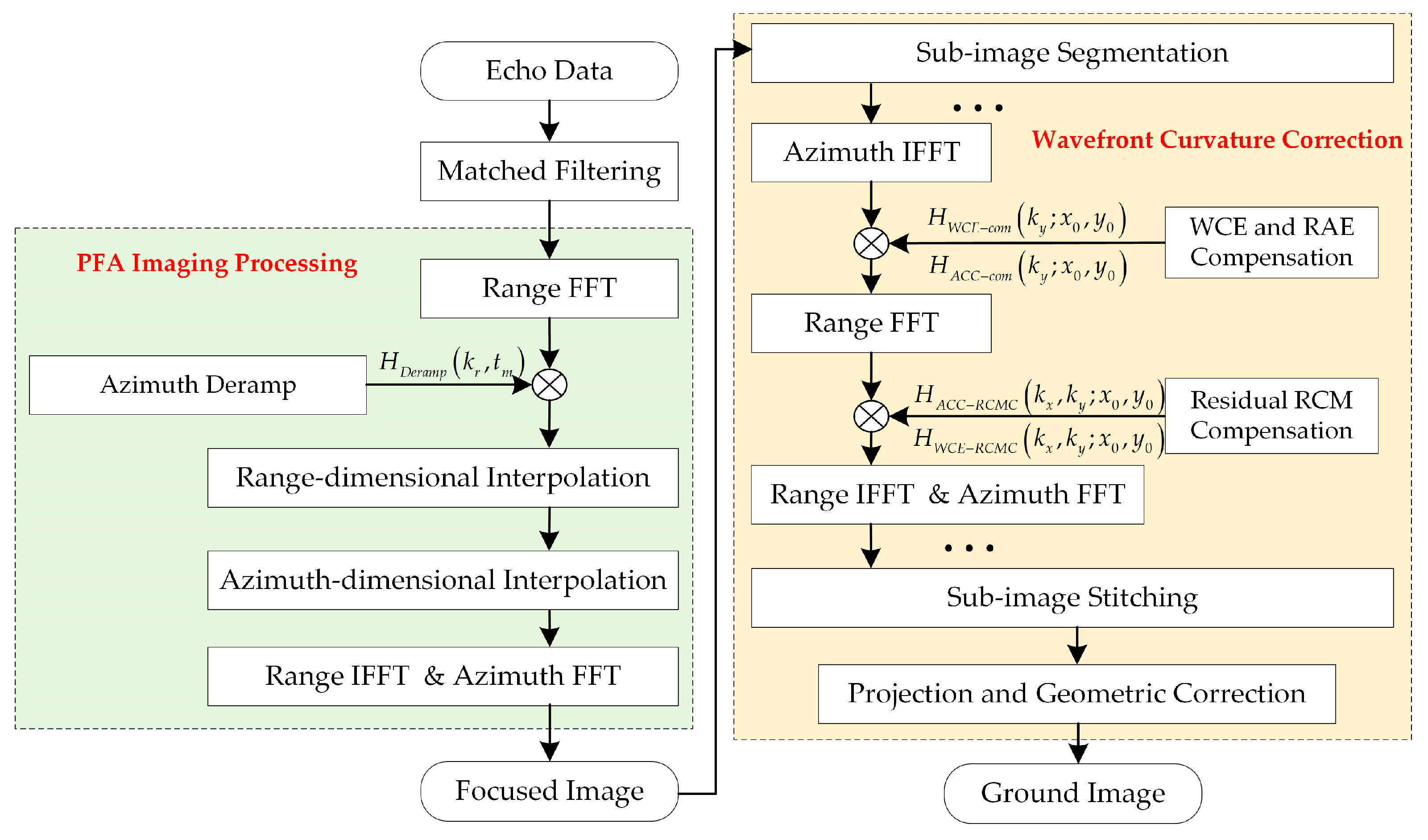

To address WFS-SAR imaging with a maneuvering trajectory, this paper proposes an extended polar format algorithm with joint envelope and phase error correction. First, we establish a geometric model for maneuvering trajectory SAR imaging and construct the instantaneous slant range to obtain the baseband echo signal model. After acceleration compensation, azimuth Deramp and two-dimensional resampling are performed to obtain a coarse-focused image on the slant range plane. Next, to eliminate the image defocusing and distortion caused by the wavefront curvature phenomenon, we establish a mapping between the geometry space and the wavenumber space, and derive the WCE phase and the residual acceleration error (RAE) phase through the Maclaurin series. On this basis, a joint envelope and phase correction function is proposed, and the phase error is accurately compensated through a sub-image processing strategy within spatially variant inverse filtering. Finally, we obtain a well-focused and distortion-free ground scene image through projection and geometric correction.

Compared with the existing algorithms, the main advantages of the proposed algorithm can be summarized as follows:

We associate the geometry space with wavenumber space based on the defined observation angle and derive an accurate analytical expression for the wavenumber domain signal.

We derive the WCE phase and RAE phase in polynomial form through the Maclaurin series, and evaluate the influence of the cubic phase error component on the scattering point envelope and azimuth focusing in WFS-SAR imaging on a maneuvering platform.

Relying on spatially variant inverse filtering, we improve the traditional WCE phase compensation function and construct a new RCM recalibration function for joint envelope and phase error correction, which yields a well-focused maneuvering-platform WFS-SAR image at the cost of a slight increase in computational complexity.

This paper is organized as follows: In Section 2, a geometric model for WFS-SAR imaging with maneuvering trajectory is constructed, and the coarse-focused image is obtained through PFA imaging processing. Section 3 describes the proposed method for WCE correction in detail. The results of simulation experiments used to evaluate the proposed method are given in Section 4. Finally, the conclusions are drawn in Section 5.

3. Joint Envelope and Phase Error Correction

The planar wavefront assumption was adopted for the PFA imaging processing in the previous section, whereas in reality the echo received by the radar antenna has a spherical wavefront. Therefore, a phase error exists at every point other than the scene reference position. This error is a coupled function of the scattering point position and the two-dimensional wavenumber variable, and it increases with the displacement of the scattering point and the width of the wavenumber spectrum [37].

For WFS-SAR imaging with high resolution and a large scene, the WCE degrades the quality of the focused image in two ways. The first is a displacement of the focusing position of the scattering point. The component of the WCE phase that is linear in the two-dimensional wavenumber variable shifts the scattering point away from its true position, and because the shift varies with that position, the overall image is geometrically distorted. The second is a deterioration of the focusing of the scattering point. The quadratic and higher-order components of the WCE phase in the two-dimensional wavenumber variable worsen the azimuth focusing result. Because these components are also spatially variant, the defocusing becomes more apparent as the scattering point moves away from the reference position.
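The two effects described above can be illustrated with a one-dimensional toy example. The sketch below is not the paper's signal model: it assumes a flat, unit-amplitude wavenumber spectrum, and the names `focus`, `peak_offset`, the 20-sample shift, and the 30 rad band-edge value are illustrative choices. It only shows that a linear phase moves the focused peak while a quadratic phase spreads it.

```python
import numpy as np

def focus(phase_error):
    """Return |impulse response| of a flat spectrum carrying `phase_error` (rad)."""
    spectrum = np.exp(1j * np.asarray(phase_error))
    # The spectrum is stored with zero wavenumber at the center, so undo the
    # centering before the inverse FFT and re-center the focused response.
    response = np.fft.ifft(np.fft.ifftshift(spectrum))
    return np.abs(np.fft.fftshift(response))

n = 512
k = np.arange(n) - n // 2        # centered wavenumber sample index
u = k / (n // 2)                 # wavenumber normalized to [-1, 1)

ideal = focus(np.zeros(n))                  # no phase error
shifted = focus(-2 * np.pi * 20 * k / n)    # linear phase: peak moves by 20 samples
blurred = focus(30.0 * u ** 2)              # quadratic phase: 30 rad at the band edge

def peak_offset(response):
    """Peak position in samples relative to the image center."""
    return int(np.argmax(response)) - n // 2
```

Comparing `peak_offset(ideal)` with `peak_offset(shifted)` shows the displacement, and comparing `ideal.max()` with `blurred.max()` shows the loss of peak energy caused by defocusing.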

Therefore, correcting the wavefront curvature phenomenon is the most crucial step in PFA-based WFS-SAR imaging processing. According to the analysis above, the WCE is related to the radar spatial sampling and varies with the spatial position of the scattering points. To compensate for the WCE accurately, spatial location information is needed from both the radar and the scattering points. However, in the focusing domain, there is no wavenumber variable that can characterize the radar sampling information. Conversely, in the wavenumber domain, the wavenumber spectra of the scattering points overlap, making it impossible to handle the spatial variability of the scattering point positions. This contradiction presents a significant challenge to the WCE correction.

In this section, the WCE phase is divided into a defocusing component and a distortion component, which are compensated by spatially variant inverse filtering and projection distortion correction, respectively.

3.1. Derivation of the Wavenumber Domain Signal

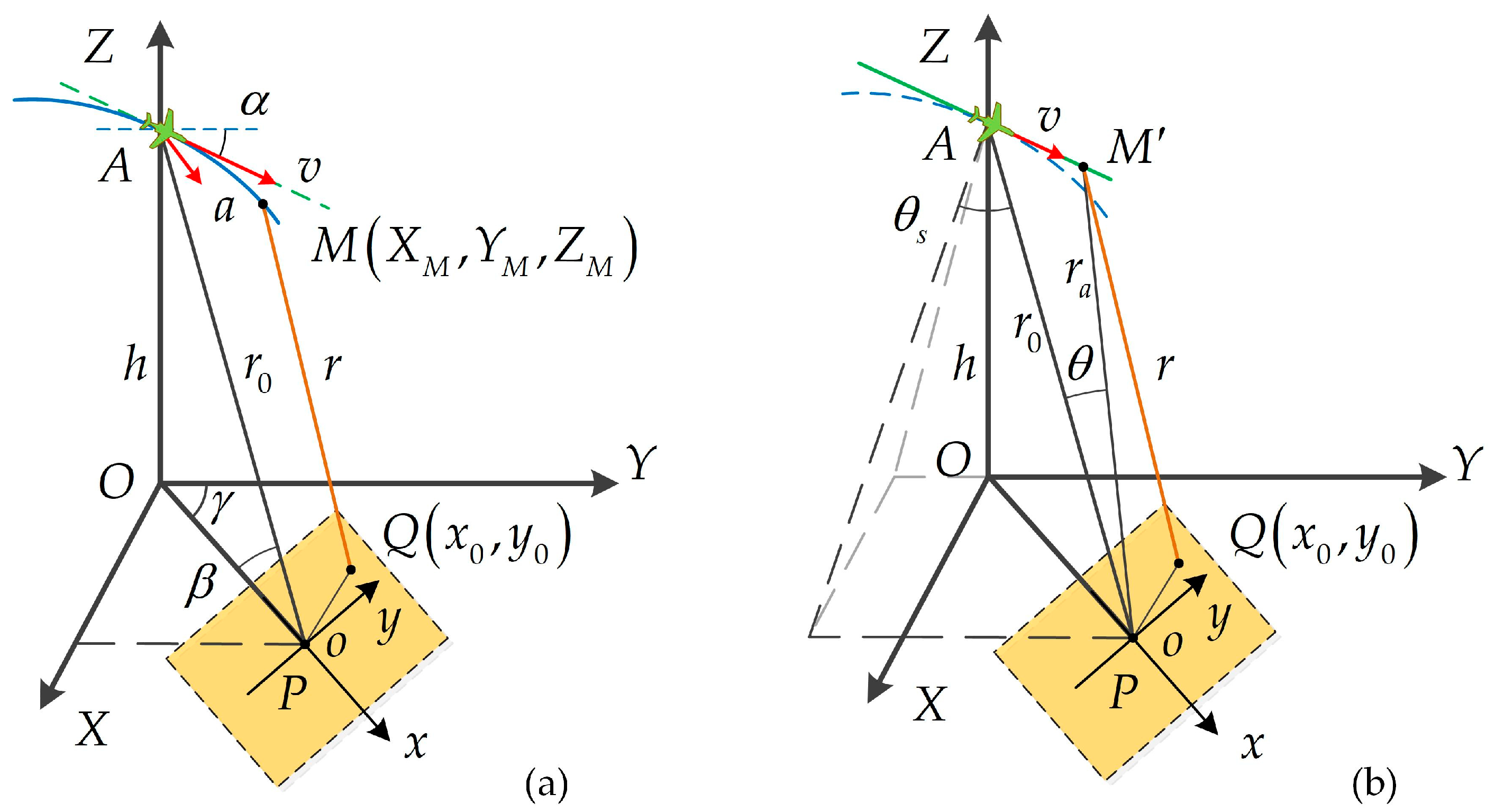

Before performing the WCE correction, it is necessary to derive the analytical expression for the wavenumber domain signal with the Cartesian coordinate format. Due to the two-dimensional resampling being implemented in coarse-focused imaging, it is difficult to obtain the WCE phase directly through variable substitution. However, by investigating the observation angle

, we can express it as the arcsine value of the ratio of the lateral displacement within the instantaneous center slant range

, as given in Equation (10). Simultaneously, it can also be expressed as the arctangent value of the ratio of the two-dimensional wavenumber variables, as shown in

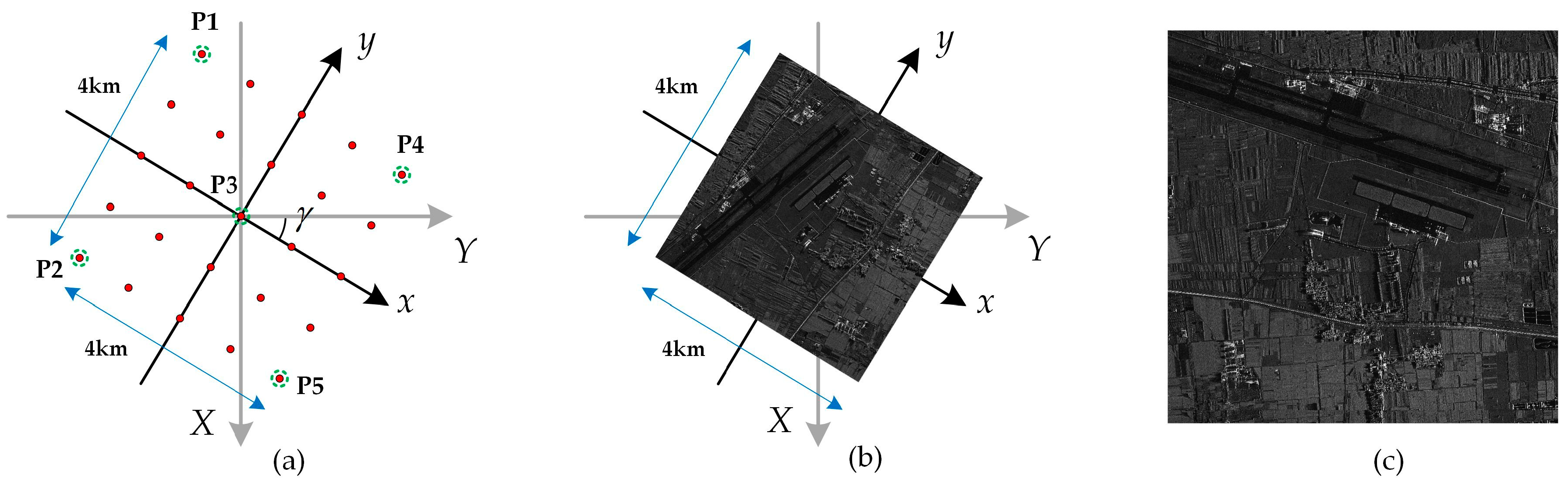

Figure 2a.

Therefore, we can associate the variables in the geometry space and the wavenumber space through the observation angle, and obtain the differential phase through this relationship.
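The role of the observation angle as a bridge between the two spaces can be sketched numerically. The example below is a simplified illustration, not the paper's derivation: it assumes a side-looking, straight-line geometry with a fixed closest range `r0`, and it assumes the angle is the arctangent of the azimuth wavenumber over the range wavenumber. All function and variable names are hypothetical.

```python
import numpy as np

def angle_from_geometry(x, r0):
    """Observation angle as arcsin(lateral displacement / center slant range)."""
    r_center = np.hypot(r0, x)          # instantaneous center slant range
    return np.arcsin(x / r_center)

def angle_from_wavenumber(k_a, k_r):
    """Observation angle as arctan(azimuth wavenumber / range wavenumber)."""
    return np.arctan2(k_a, k_r)

def displacement_from_wavenumber(k_a, k_r, r0):
    """Platform displacement implied by a wavenumber sample (side-looking case)."""
    # tan(theta) = x / r0 in this simplified geometry, and tan(theta) = k_a / k_r.
    return r0 * k_a / k_r

r0 = 10_000.0                 # closest range in metres (illustrative value)
x = 300.0                     # platform displacement in metres (illustrative value)
k_r = 400.0                   # range wavenumber in rad/m (illustrative value)
k_a = k_r * x / r0            # azimuth wavenumber seen at the same angle

theta_geometry = angle_from_geometry(x, r0)
theta_wavenumber = angle_from_wavenumber(k_a, k_r)
x_recovered = displacement_from_wavenumber(k_a, k_r, r0)
```

In this simplified case both expressions give the same angle, so a geometric quantity such as the platform displacement can be rewritten in terms of the wavenumber variables. The squinted, maneuvering geometry of Figure 2b needs the additional terms derived in the text.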

The imaging slant range plane is shown in Figure 2b. We establish a right-handed coordinate system XPY with point P as the origin and the beam pointing direction AP at the azimuth middle moment as the X-axis. Assume that, at an arbitrary moment, the radar moves to point

, and the displacement of the radar platform relative to the azimuth middle moment is

. Then, we extend the instantaneous center slant range

in reverse and intersect with the

Y-axis parallel line passing through point

A at point

B. We set

AB as

,

BP as

, and

as

, where

and

represent the position displacement and instantaneous center slant range of the radar platform relative to the azimuth middle moment in the side-looking mode, and

represents the difference between the instantaneous center slant range in the side-looking mode and squint mode.

Combining Equations (16) and (17), the opposite side

and the hypotenuse

in triangle

can be obtained, respectively, based on geometric relationships:

Next, according to the law of sines, the three angles and the three sides are related in triangle

, and we can represent the other two edges through the known

:

where

is a factor, which yields:

We use

to represent the position displacement of the radar platform and obtain a new instantaneous slant range

in the two-dimensional wavenumber domain.

Similarly, the new instantaneous center slant range

is obtained by subtracting

from

.

Therefore, the accurate differential phase in the wavenumber domain can be rewritten as:

A common way to handle such a complex expression is to approximate it by a power series [41,42]. Thus, we expand

with respect to the two-dimensional wavenumber variable using the Maclaurin series to obtain the differential phase in polynomial form.

where

represents the order of the Maclaurin expansion, and

,

, and

are all natural numbers,

represents the Maclaurin expansion coefficient, which yields:

where i! denotes the factorial of i. Among these terms, the linear term in the wavenumber variables determines the focusing position of the scattering point, while the quadratic and higher-order terms constitute the defocusing phase caused by the wavefront curvature phenomenon.
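For reference, a generic bivariate Maclaurin expansion of the kind described above can be written as follows. The symbols $\Phi$, $k_x$, $k_y$, $a_{m,n}$, and $N$ are placeholders chosen for this sketch and are not necessarily the paper's notation; $k_x$ and $k_y$ are assumed to be wavenumber offsets measured from the expansion point, and Equations (26) and (27) may group the terms differently.

```latex
\Phi(k_x, k_y) \approx \sum_{i=0}^{N} \; \sum_{m+n=i} a_{m,n}\, k_x^{m}\, k_y^{n},
\qquad
a_{m,n} = \frac{1}{m!\,n!}
\left.\frac{\partial^{\,m+n}\Phi}{\partial k_x^{m}\,\partial k_y^{n}}\right|_{k_x=0,\;k_y=0}
```

In this form, the terms with $m+n=1$ locate the scattering point, and the terms with $m+n\geq 2$ make up the defocusing phase.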

3.2. Compensation for Wavefront Curvature Error

The quadratic and higher-order components in the Maclaurin series expansion of the differential phase represent the defocusing phase caused by the wavefront curvature phenomenon, which is the phase error that must be compensated in the inverse filtering.

The existing literature [31,34] considers only the quadratic phase error (QPE) and neglects the higher-order phase components, an approach that has been shown to satisfy the requirements of conventional PFA imaging processing. However, the phase error depends on the position of the scattering points and the width of the wavenumber spectrum. As the imaging scene expands and the resolution improves, the impact of the higher-order phase error gradually increases. Therefore, it is essential to quantify the cubic phase error (CPE) and evaluate its effect on WFS-SAR imaging processing.

According to the Maclaurin series expansion shown in Equations (26) and (27), we can obtain the quadratic and cubic phase error coefficients of the differential phase

concerning the wavenumber variable

:

where:

where

represents the distance from the radar at the azimuth middle moment to an arbitrary point in the target coordinate system.

We can easily observe that only , , and exhibit non-zero values in the quadratic and cubic phase error coefficients. Among them, represents the quadratic term of the azimuth wavenumber, which is the main factor causing azimuth defocusing and the most crucial term in the WCE compensation. Additionally, represents the coupling term between the linear component of the range wavenumber and the quadratic component of the azimuth wavenumber, which reflects the variation of the range envelope with the azimuth position and is essentially the residual RCM caused by the WCE. Moreover, denotes the cubic term of the azimuth wavenumber, which is usually disregarded in conventional PFA imaging processing. However, its influence on azimuth focusing necessitates further evaluation for WFS-SAR imaging with high-resolution and large scenes.

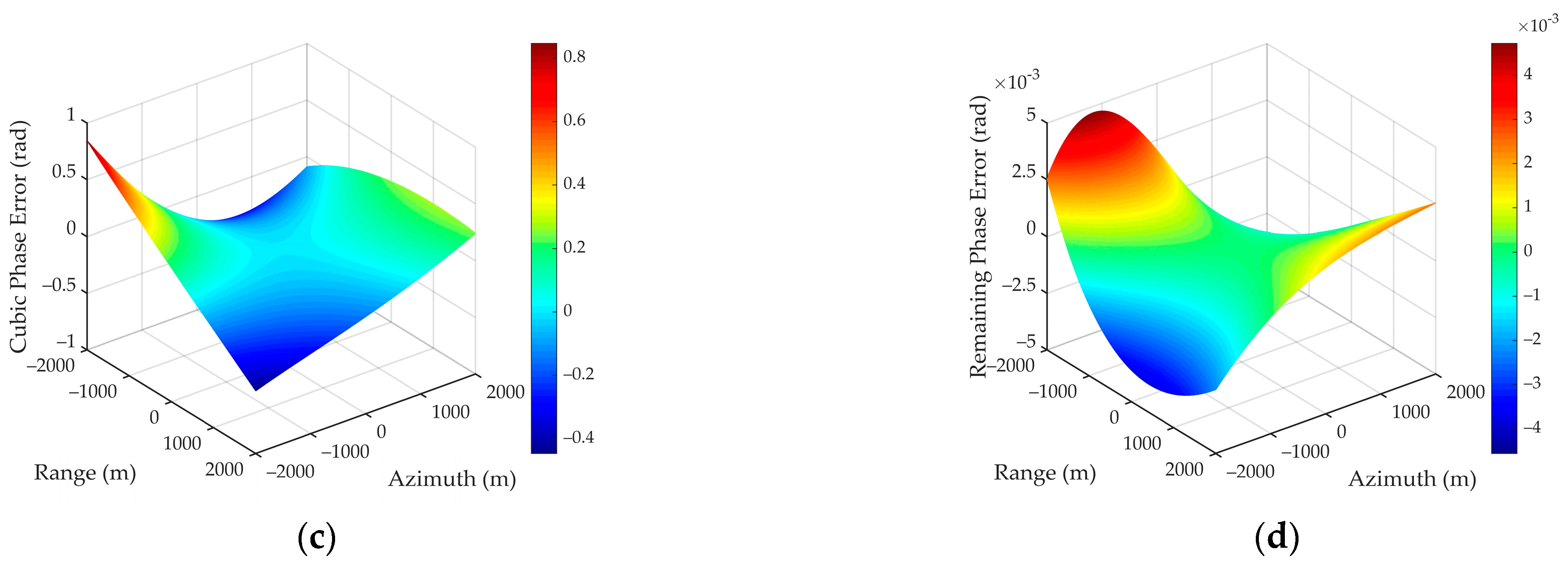

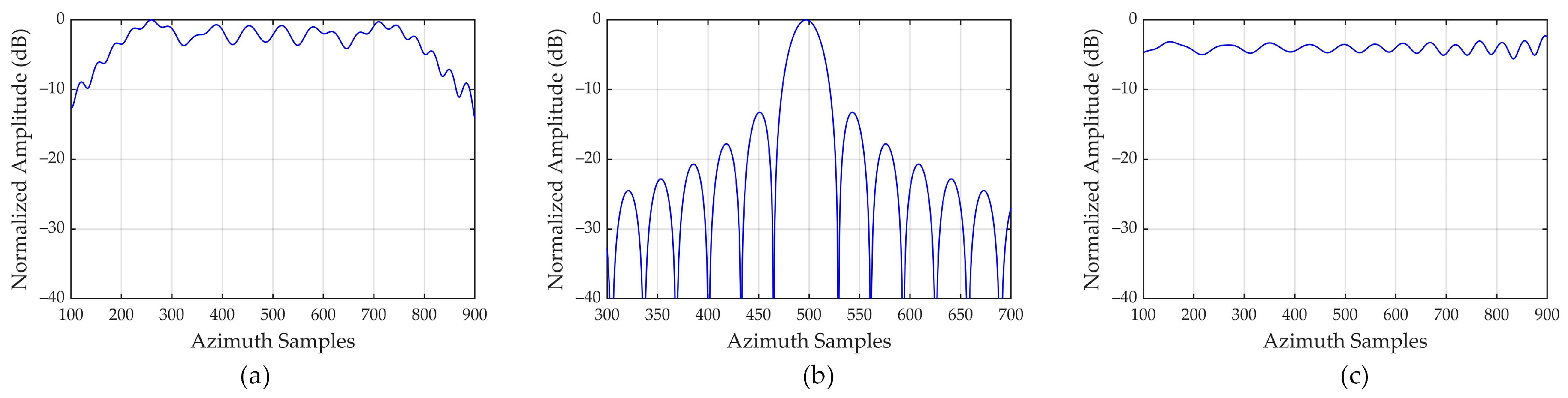

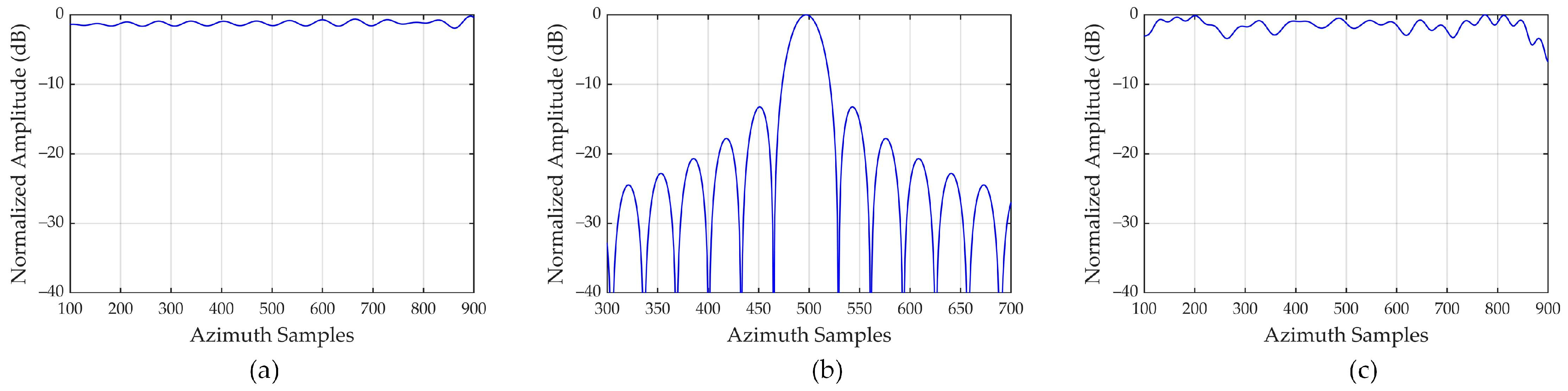

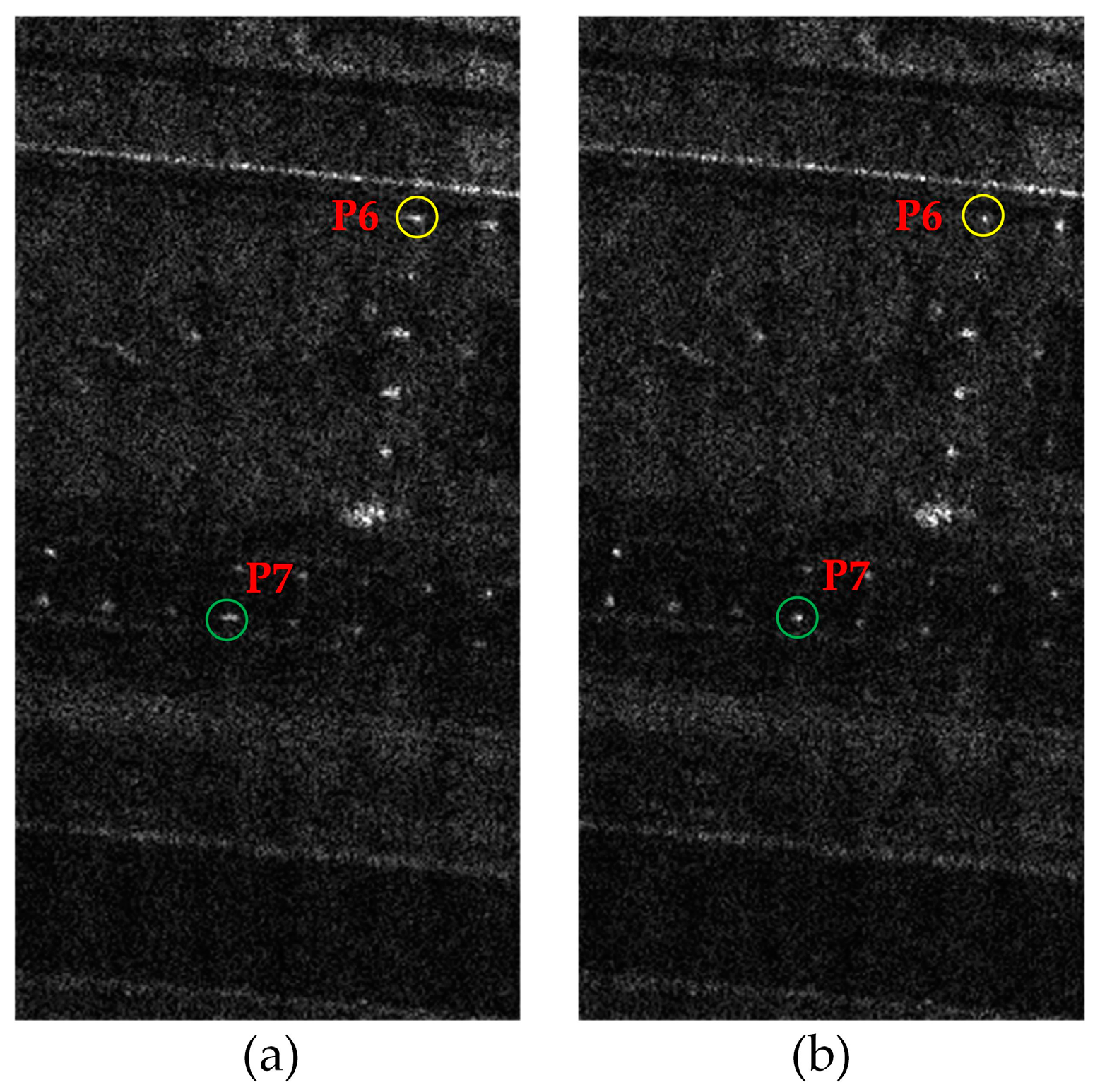

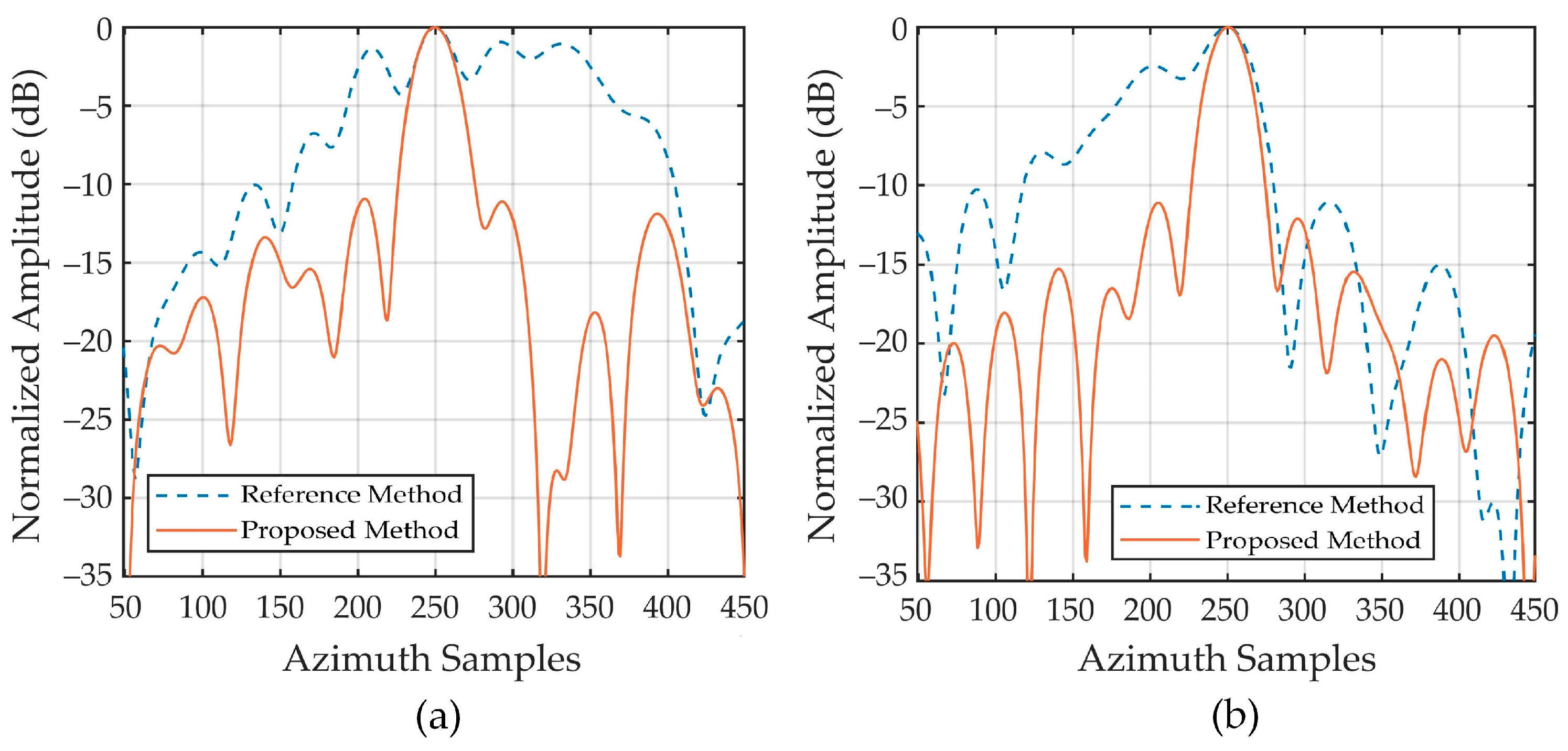

To show the magnitude of the different error components intuitively, a simulation experiment is performed with the typical parameters listed in Table 1. The simulation results are given in Figure 3. The quadratic phase error caused by

exceeds 30 rad in Figure 3a, which is undoubtedly the primary issue to be addressed. The residual RCM caused by

is less than 0.05 m, as shown in Figure 3b, which is negligible for a sampling interval of 0.3 m, since half or a quarter of the sampling interval is usually used as the error threshold. Furthermore, the cubic phase error caused by

exceeds

in Figure 3c, which makes it necessary to compensate for this term, as

or even

is generally considered an acceptable error margin. Figure 3d shows the remaining phase error beyond the quadratic and cubic terms, which is less than 0.005 rad and therefore small enough to be neglected.

Therefore, we must compensate for the quadratic and cubic phase errors caused by the WCE to ensure azimuth focusing performance, while the residual RCM can be temporarily ignored. Based on these results, a WCE compensation function is constructed.

This equation can achieve an accurate compensation for the WCE of the scattering point at

in the imaging scene.

Once the error compensation function is available, the next question is how to apply the WCE compensation to the coarse-focused image. Because the error varies gradually with the position of the scattering points, spatially variant inverse filtering is a typical and efficient wavefront curvature correction strategy. We first divide the coarse-focused image into sub-images so that the variation of the phase error within each sub-image stays below the chosen threshold. Each sub-image is then transformed into the azimuth wavenumber domain, and a WCE compensation function is constructed with the sub-image center as the reference. After the error compensation, the sub-image is transformed back to the focusing domain to obtain an accurately focused sub-image. Finally, the refocused image is obtained by stitching the sub-images. Note that the focus of this paper is the impact of the higher-order WCE components on imaging performance when PFA is used for WFS-SAR imaging, so the sub-image segmentation and stitching methods are not elaborated here.
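The sub-image procedure described above can be sketched as follows. This is a minimal illustration under simplifying assumptions, not the paper's implementation: the sub-images do not overlap, the azimuth wavenumber axis is normalized, and `phase_error` is a hypothetical callback standing in for the compensation phase evaluated at the sub-image center.

```python
import numpy as np

def svif_refocus(image, block, phase_error):
    """Block-wise azimuth inverse filtering of a coarse-focused image.

    image:       2-D complex array, axis 0 = range, axis 1 = azimuth
    block:       sub-image size in pixels (same in both dimensions)
    phase_error: hypothetical callback (r_center, a_center, k) -> phase in rad,
                 where k is the normalized azimuth wavenumber of the sub-image
    """
    n_r, n_a = image.shape
    out = np.zeros((n_r, n_a), dtype=complex)
    for r0 in range(0, n_r, block):
        for a0 in range(0, n_a, block):
            sub = image[r0:r0 + block, a0:a0 + block]
            # The sub-image center serves as the reference scatterer position.
            r_c = r0 + sub.shape[0] // 2
            a_c = a0 + sub.shape[1] // 2
            k = np.fft.fftfreq(sub.shape[1])
            # Focusing domain -> azimuth wavenumber domain.
            spectrum = np.fft.fft(sub, axis=1)
            # Remove the phase error predicted for the reference position.
            spectrum = spectrum * np.exp(-1j * phase_error(r_c, a_c, k))
            # Back to the focusing domain, then stitch into the output image.
            out[r0:r0 + block, a0:a0 + block] = np.fft.ifft(spectrum, axis=1)
    return out
```

A call such as `svif_refocus(coarse_image, 32, my_phase)` returns an image of the same size. In practice, overlapping sub-images and a careful choice of block size would be needed to avoid seams, which this sketch ignores.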

3.3. Compensation for Residual Acceleration Error

In the previous section, we use the origin of the target coordinate system to uniformly compensate for high-order motion, and the residual error can be expressed as:

It is easy to know that

is also an error related to the position of scattering points. According to the characteristics of the echo signal, the RAE phase in the range wavenumber domain can be expressed as:

As the resolution improves and the imaging area expands, the RAE phase

gradually increases and cannot be ignored. Therefore, it is imperative to analyze and compensate for the phase error in the WFS-SAR imaging mode.

Since the RAE phase varies with the position of the scattering point in the same way as the WCE phase, we analyze it with the same method. Based on the correspondence between the geometry space and the wavenumber space established earlier, the RAE phase is mapped to the wavenumber domain and expanded as a Maclaurin series to obtain the residual acceleration error in polynomial form.

where:

where:

Based on the results shown above, it can be concluded that the RAE phase and the WCE phase have similar spatially variant characteristics since only the values of , , and are not equal to zero in all expansion terms. Among them, represents the quadratic term of the azimuth wavenumber, which is the most crucial term in the RAE phase compensation. Here, represents the coupling term between the linear component of the range wavenumber and the quadratic component of the azimuth wavenumber, which reflects the residual RCM brought by acceleration, and its impact needs further evaluation. Additionally, represents the cubic term of the azimuth wavenumber, which theoretically has a smaller value. However, for the WFS-SAR mode with more stringent imaging conditions, its impact on azimuth focusing performance also needs to be further evaluated.

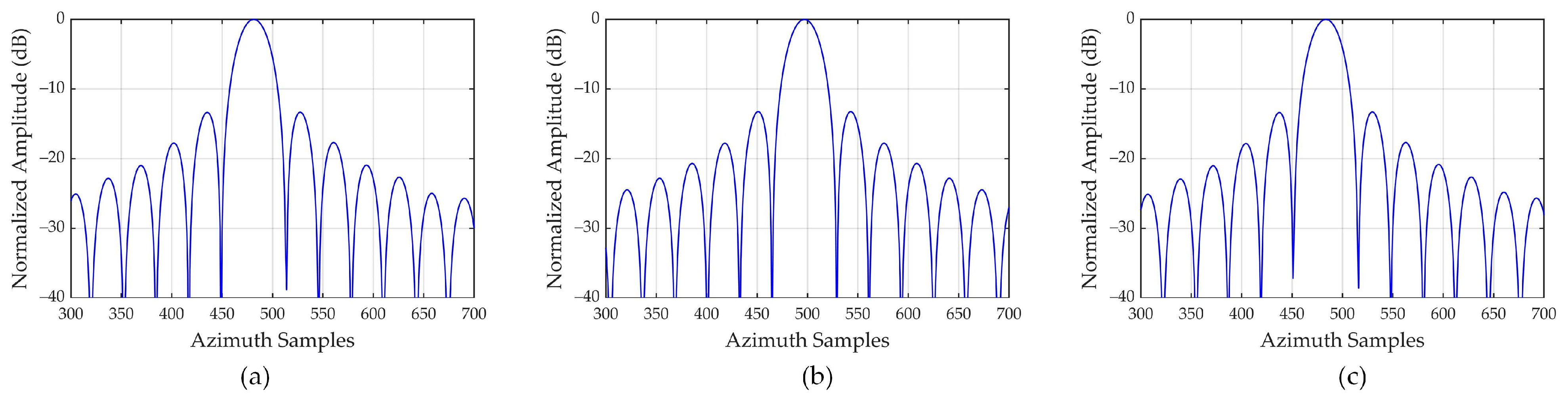

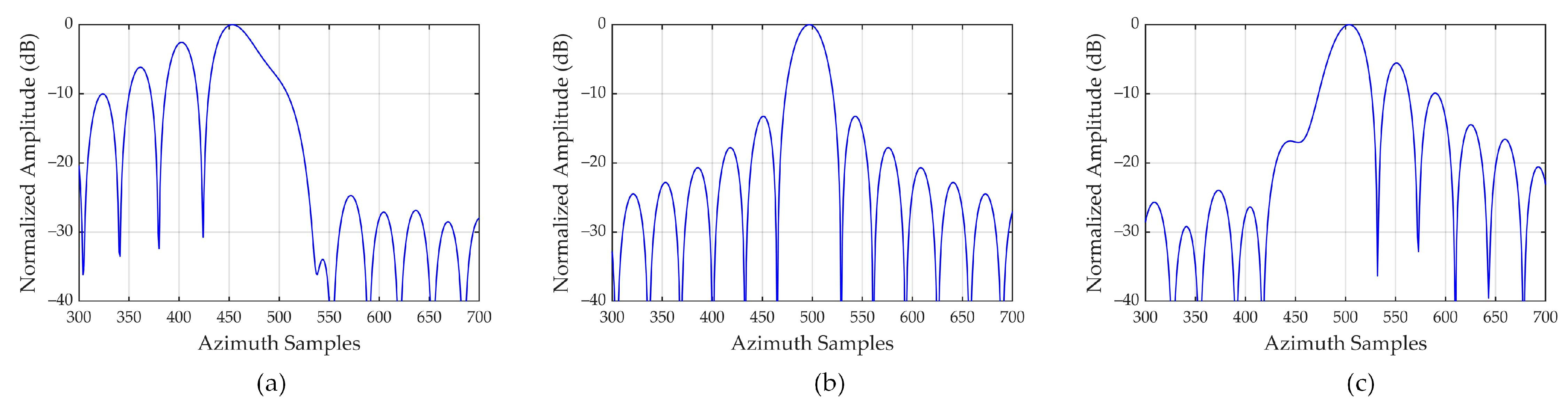

Next, we simulate the phase error caused by the residual acceleration. The parameters are listed in Table 1, and the simulation results are given in Figure 4. In Figure 4a, the quadratic phase error caused by

exceeds 160 rad; it is the main component of the RAE, and its value even exceeds that of the WCE. The residual RCM caused by

in Figure 4b exceeds 0.2 m, which is above the error threshold of half the sampling interval. Thus, further correction of the residual RCM is also required. In addition, the phase error caused by

exceeds 5 rad, as shown in Figure 4c, so this term cannot be omitted from the error compensation. Figure 4d shows the remaining higher-order phase error beyond the quadratic and cubic components, which can be ignored given its maximum value of approximately 0.05 rad.
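The threshold comparisons quoted for Figures 3b and 4b can be reproduced with a few lines of arithmetic. This sketch uses only numbers stated in the text (residual RCM of 0.05 m and 0.2 m, sampling interval of 0.3 m); the function name and the `fraction` parameter are illustrative.

```python
def rcm_needs_correction(residual_rcm_m, sampling_interval_m, fraction=0.5):
    """Return True when the residual RCM exceeds `fraction` of a range cell."""
    return residual_rcm_m > fraction * sampling_interval_m

# WCE-induced residual RCM, checked against the stricter quarter-cell threshold.
wce_rcm_flag = rcm_needs_correction(0.05, 0.3, fraction=0.25)

# RAE-induced residual RCM, checked against the half-cell threshold.
rae_rcm_flag = rcm_needs_correction(0.2, 0.3, fraction=0.5)
```

The first flag is false and the second is true, which matches the conclusion that only the acceleration-induced residual RCM requires recalibration.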

According to the simulation results, we construct a residual acceleration error compensation function and a range cell migration recalibration function:

It is easy to find that the RAE phase shown in Equation (36) can be universally processed in the spatially variant inverse filtering together with the WCE phase. The RCM shown in Equation (37) must be corrected by transforming the sub-image into the two-dimensional wavenumber domain, which is also an essential processing module in WFS-SAR imaging.

Furthermore, since the residual acceleration compensation already requires processing in the two-dimensional wavenumber domain, we can also correct the RCM caused by the WCE while performing the RCM recalibration, which improves the robustness and generality of our method. The recalibration function can be expressed as:

3.4. Projection and Geometric Correction

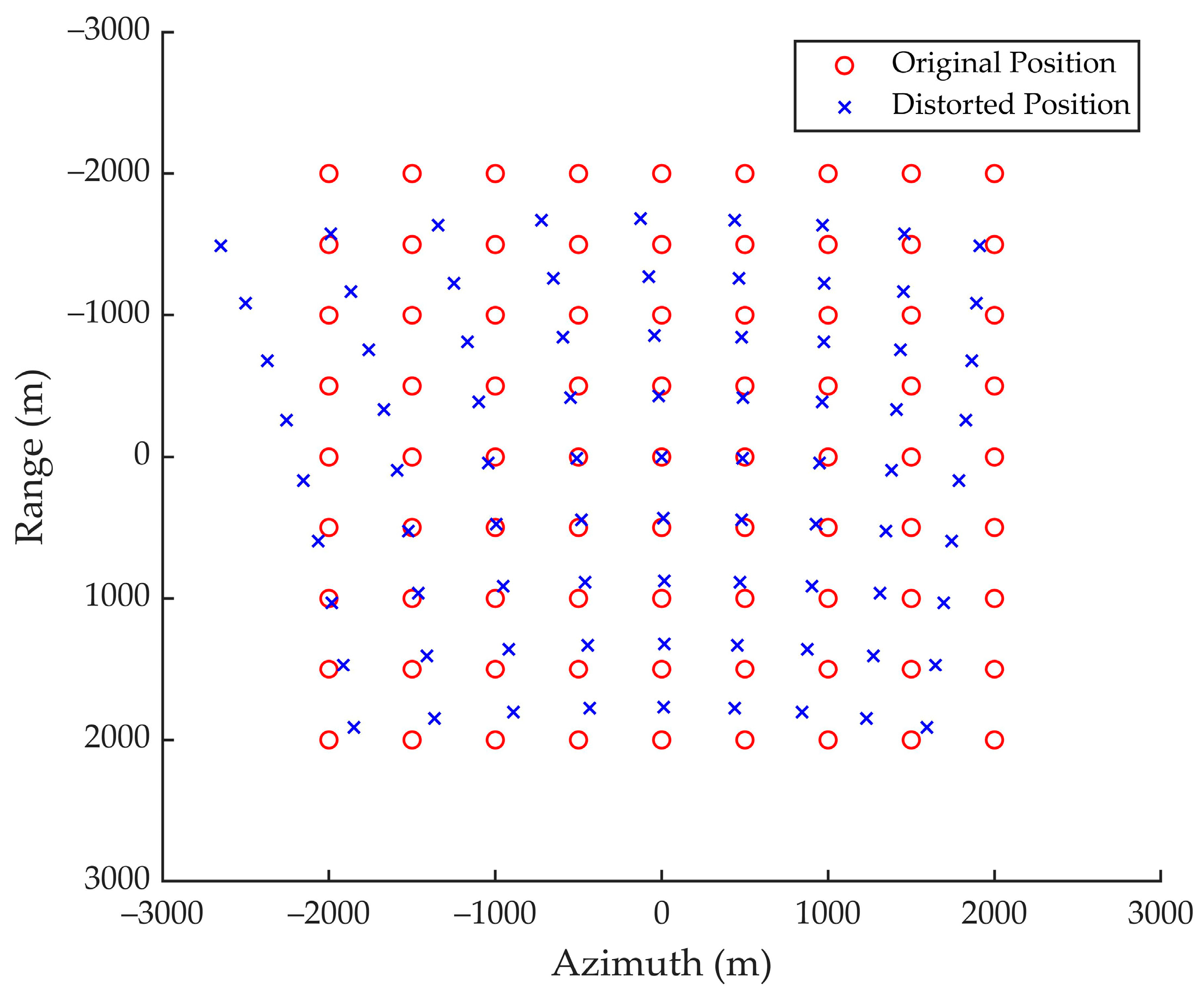

In the previous part, the WCE and RAE compensation functions are derived, and the strategy of inverse filtering based on sub-image processing is explained. Next, we will analyze the problem of the scattering point position distortion caused by wavefront curvature phenomena in detail.

As mentioned earlier, in the Maclaurin series expansion of the differential phase, the linear term in the wavenumber variable represents the focusing position of the scattering point. The exact analytical expression for the focusing position can be obtained through Equation (27).

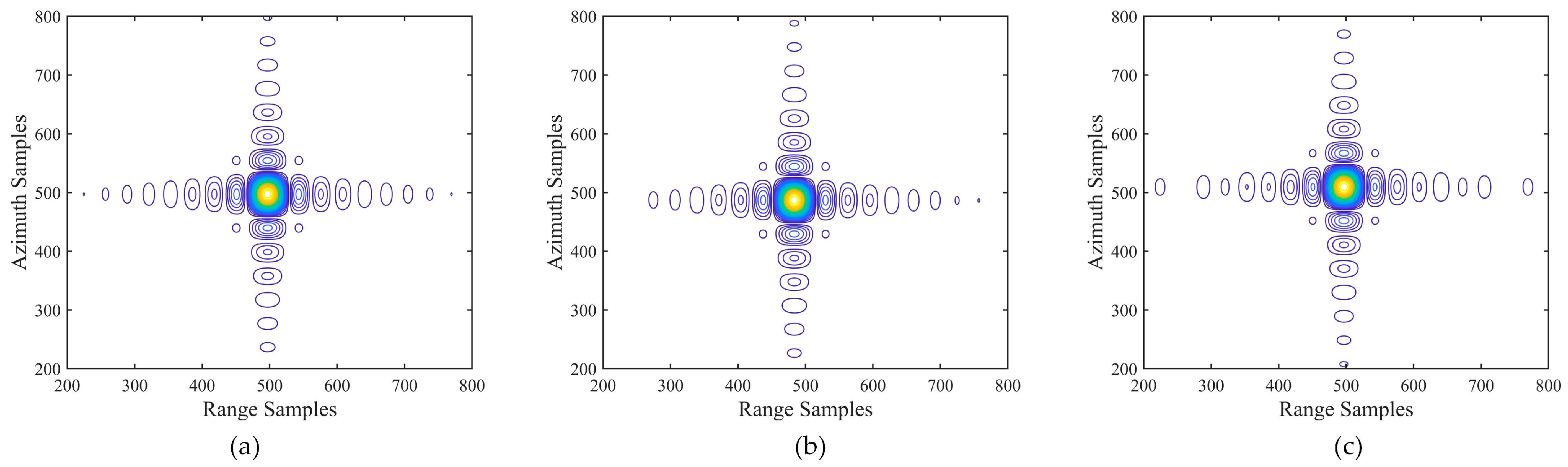

The equations above indicate that the scattering point located at

in the target coordinate system is focused at

after PFA imaging processing, which is the position distortion caused by the WCE. To present the position distortion more intuitively, we conduct a simple simulation using a point array, with the parameters also given in Table 1.

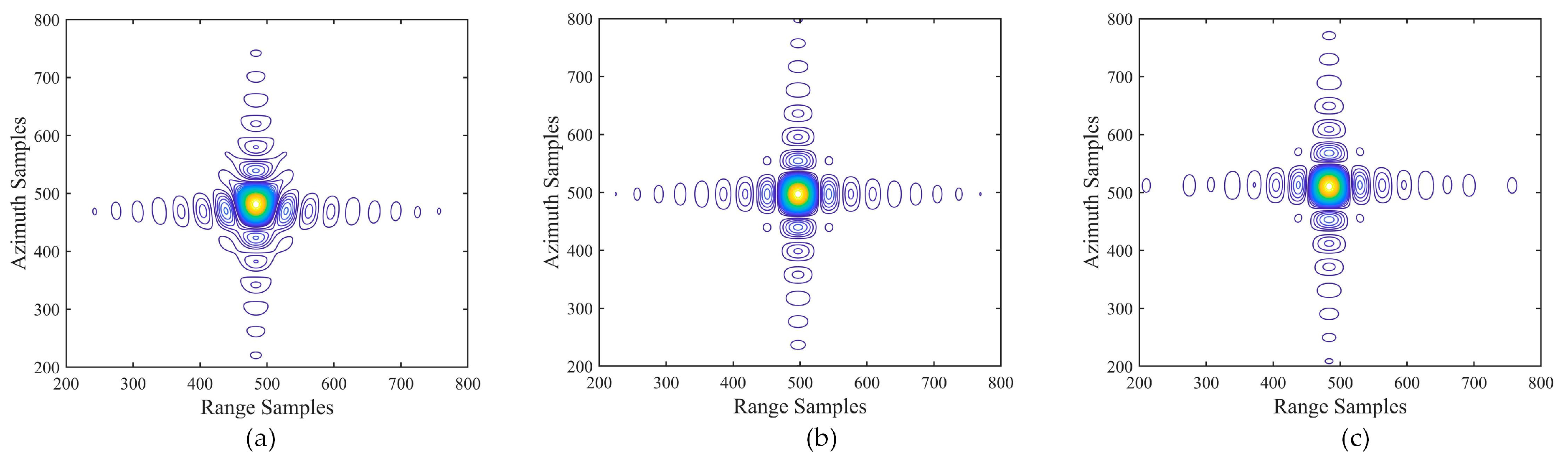

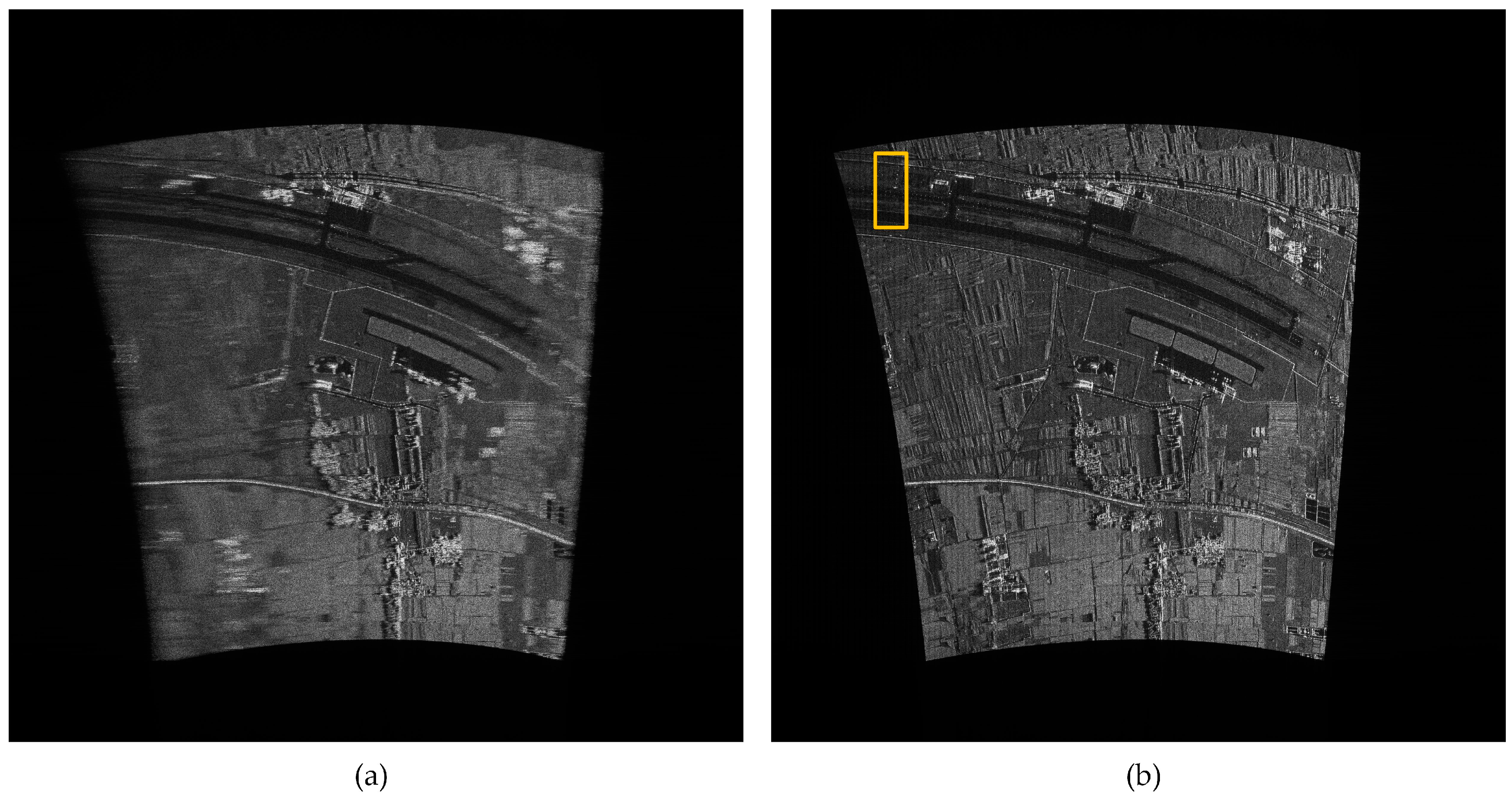

The simulation result in Figure 5 shows that the image distortion in the slant range plane increases with the displacement of the scattering point. This changes the geometric shape and the relative positions within the imaging area, so the entire scene appears as an irregular sector.

Since the actual terrain features cannot be accurately presented in the focused image on the slant range plane, projection and distortion correction are necessary. We adopt reverse projection and locate the scattering point position through the distortion relationship shown in Equations (39) and (40), which enables the retrieval of amplitude and phase information [29,39]. The procedure is as follows.

Step 1: Form a pixel grid in the target coordinate system based on a fixed resolution interval and number of image points.

Step 2: For an arbitrary point within the pixel grid, calculate its distorted position on the focused image using Equations (39) and (40).

Step 3: Take a data block of size M around the distorted position as the reference and obtain the amplitude and phase information through interpolation.

Step 4: Traverse all grid points to obtain a well-focused ground plane image without distortion.

We can adopt different interpolation kernels according to accuracy requirements in practice, and select the size M of the data block based on the interpolation kernel.
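The four steps above can be sketched as a reverse-mapping resampler. This is an illustrative simplification, not the paper's implementation: `distortion_map` is a hypothetical stand-in for Equations (39) and (40), positions are expressed directly in pixel units, and bilinear interpolation (a 2 x 2 data block) replaces whatever higher-order kernel would be chosen in practice.

```python
import numpy as np

def bilinear_sample(img, rows, cols):
    """Bilinearly interpolate complex `img` at fractional pixel positions."""
    r0 = np.clip(np.floor(rows).astype(int), 0, img.shape[0] - 2)
    c0 = np.clip(np.floor(cols).astype(int), 0, img.shape[1] - 2)
    # Fractional offsets; positions outside the image are clamped to the edge.
    fr = np.clip(rows - r0, 0.0, 1.0)
    fc = np.clip(cols - c0, 0.0, 1.0)
    top = (1 - fc) * img[r0, c0] + fc * img[r0, c0 + 1]
    bottom = (1 - fc) * img[r0 + 1, c0] + fc * img[r0 + 1, c0 + 1]
    return (1 - fr) * top + fr * bottom

def geometric_correction(focused, distortion_map):
    """Resample `focused` onto a regular output grid by reverse projection.

    distortion_map: hypothetical callback (rows, cols) -> (rows_d, cols_d)
                    giving, for each true grid position, the distorted
                    position at which that point appears in `focused`.
    """
    n_r, n_c = focused.shape
    # Step 1: regular pixel grid in the target coordinate system.
    rows, cols = np.meshgrid(np.arange(n_r, dtype=float),
                             np.arange(n_c, dtype=float), indexing="ij")
    # Step 2: distorted position of every grid point.
    rows_d, cols_d = distortion_map(rows, cols)
    # Steps 3 and 4: interpolate amplitude and phase for all grid points.
    return bilinear_sample(focused, rows_d, cols_d)
```

Because the mapping runs from the output grid back to the focused image, every output pixel receives exactly one value and no holes appear, which is the usual motivation for reverse rather than forward projection.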

The processing flow of this algorithm is shown in Figure 6.

3.5. Computational Complexity

For engineering applications, we need to analyze the computational complexity of the proposed algorithm. It is well known that the calculation amount of N-point FFT/IFFT is , the calculation amount of N-point complex phase multiplication is , and the calculation amount of N-point M-kernel sinc interpolation is .

In the coarse-focused processing of PFA, the algorithm involves two instances of FFT/IFFT in the range dimension, one instance of FFT/IFFT in the azimuth dimension, one instance of phase multiplication, and two instances of interpolation. Supposing

and

denote the range and azimuth dimension sampling number, respectively, the computational complexity of coarse-focused processing can be expressed as:

It is consistent with the computational burden of traditional interpolation-based PFA.
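The operation count of the coarse-focusing stage can be tallied as in the sketch below. The per-operation costs are assumptions, since the expressions are not reproduced in this text: 5N log2 N floating-point operations for an N-point FFT/IFFT, 6N for N complex multiplications, and 2(2M - 1)N for N-point interpolation with an M-point sinc kernel, which are commonly quoted figures in the SAR literature. The function names and the example sizes are illustrative.

```python
import math

def fft_flops(n):
    """Assumed cost of one n-point FFT or IFFT."""
    return 5 * n * math.log2(n)

def multiply_flops(n):
    """Assumed cost of n complex phase multiplications."""
    return 6 * n

def sinc_interp_flops(n, kernel):
    """Assumed cost of n-point interpolation with a `kernel`-point sinc kernel."""
    return 2 * (2 * kernel - 1) * n

def coarse_focus_flops(n_r, n_a, kernel):
    """Two range FFT/IFFTs, one azimuth FFT/IFFT, one multiply, two interpolations."""
    range_ffts = 2 * n_a * fft_flops(n_r)      # each azimuth line holds n_r range samples
    azimuth_ffts = 1 * n_r * fft_flops(n_a)    # each range line holds n_a azimuth samples
    phase_multiply = multiply_flops(n_r * n_a)
    interpolations = 2 * sinc_interp_flops(n_r * n_a, kernel)
    return range_ffts + azimuth_ffts + phase_multiply + interpolations

total = coarse_focus_flops(1024, 1024, kernel=8)
```

Under these assumptions the FFT stages dominate the cost for short kernels, and the two interpolation stages grow in relative weight as the kernel length increases.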

In the proposed algorithm, we compensate for the WCE and RAE through spatially variant inverse filtering. Assuming that the range and azimuth dimension points of the sub-image are both

, and considering half of the azimuth dimension overlap width, the computational complexity of the joint envelope and phase error correction can be expressed as:

Therefore, the computational complexity of the proposed algorithm can be calculated by

.

Although the proposed algorithm increases the computational burden somewhat compared to others, it can precisely focus the entire scene in WFS-SAR imaging with high resolution and a large scene. Therefore, this additional cost is acceptable in practice.