1. Introduction

Synthetic aperture radar (SAR) is widely applied in many fields due to its all-weather and all-day capabilities [

1,

2,

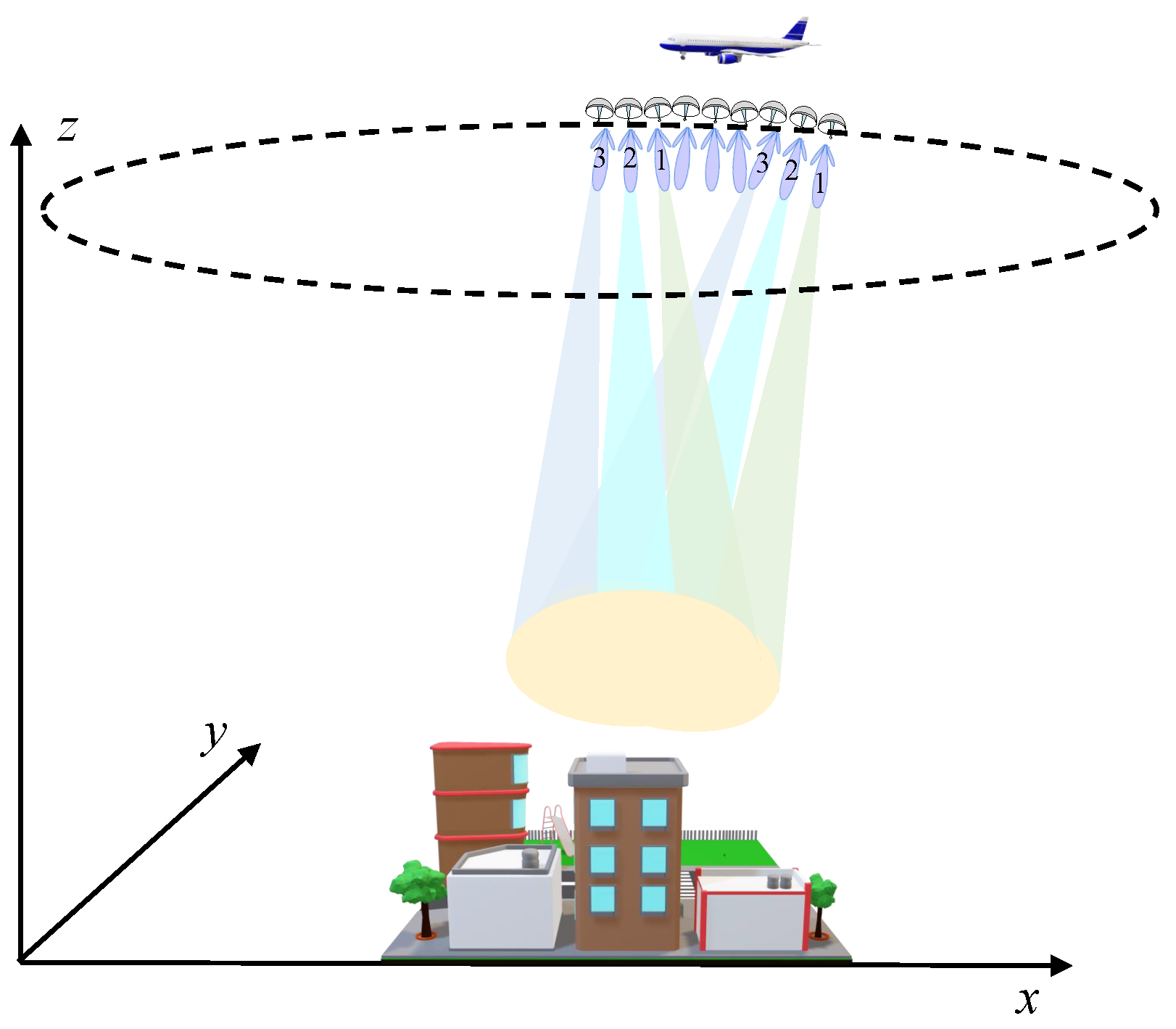

3]. Circular synthetic aperture radar (CSAR) has attracted widespread attention due to its unique geometric observation perspective. CSAR is a SAR operating mode that was proposed in the 1990s [

4,

5]. CSAR makes a circular trajectory in the air through the radar platform, and the radar beam always illuminates the scene to achieve all-round observation of the target. The long-time circular synthetic aperture allows CSAR to achieve plane resolution at a sub-wavelength level, eliminating the dependence on system bandwidth [

6]. Additionally, CSAR has the potential for three-dimensional imaging [

7], breaking through the two-dimensional limitations of traditional SAR and mitigating or even eliminating issues such as shadows, geometric deformation, and overlap. With these unique advantages, CSAR holds significant potential for applications in high-precision mapping, ground object classification, target recognition, and more [

8,

9,

10,

11,

12,

13]. As a result, CSAR has emerged as a prominent area of research within the SAR field.

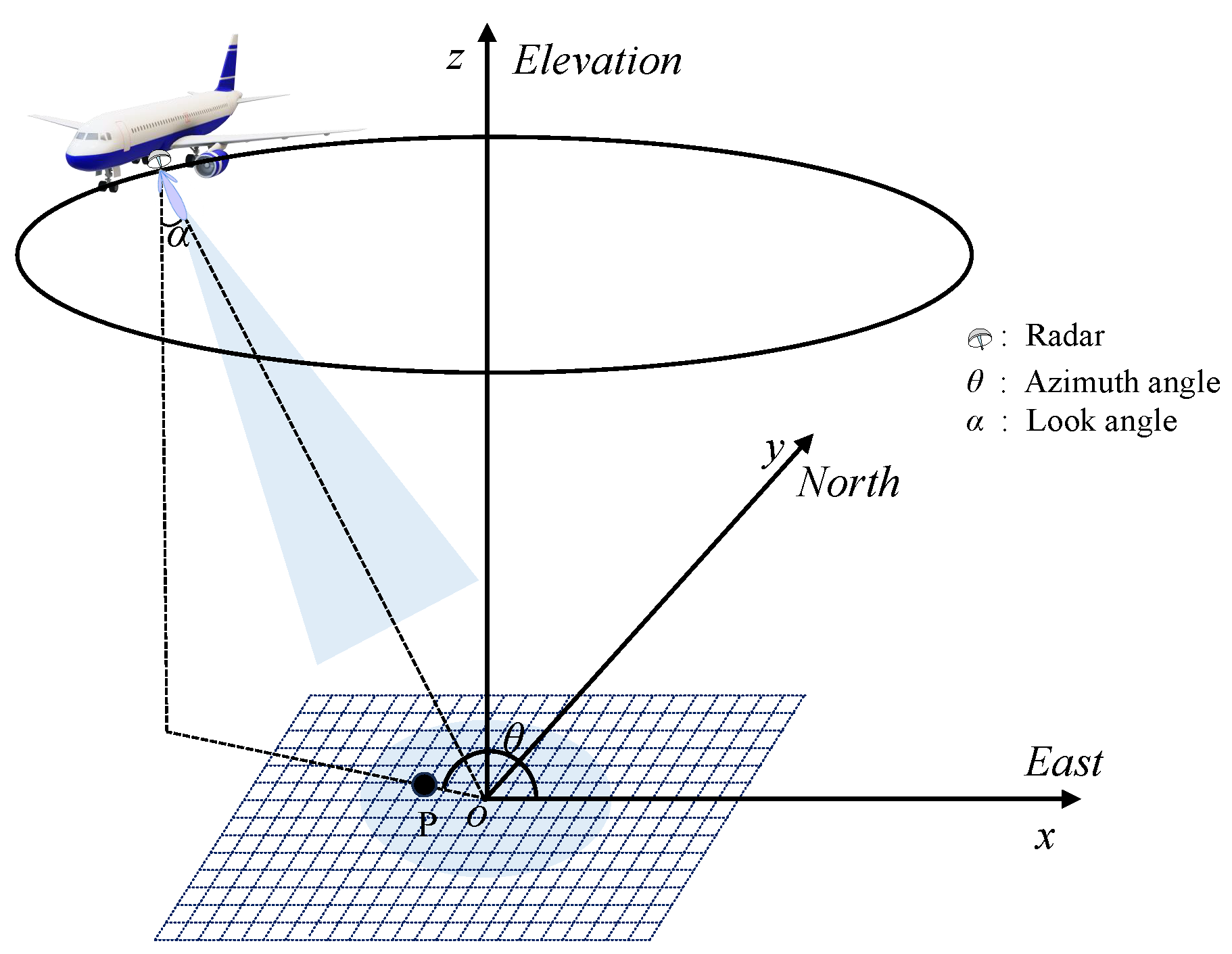

The advantages of CSAR are closely tied to its 360-degree observation capability. However, the limited spotlight region is one of the factors that hamper the development of CSAR. The spotlight region is affected by many factors, such as pulse repetition frequency, beam width, and range ambiguity [14]. In 2014, Ponce et al. [15] conducted theoretical and technical research on Multiple-Input Multiple-Output (MIMO) CSAR, applying MIMO technology to CSAR to achieve high-resolution imaging of large scenes. In 2021, the Aerospace Information Research Institute, Chinese Academy of Sciences (AIRCAS) conducted research on multi-beam CSAR technology to expand the spotlight area of CSAR.

CSAR offers clear advantages over traditional linear SAR. However, owing to the intricate motion trajectory of CSAR, traditional imaging algorithms designed for linear trajectories are no longer applicable. Currently, CSAR imaging algorithms can be broadly categorized into two types: time-domain imaging algorithms and frequency-domain imaging algorithms. Time-domain imaging algorithms, such as the cross-correlation algorithm [16], the confocal projection algorithm [6,17], and the backprojection (BP) algorithm [18,19], have been applied to CSAR. Notably, the BP algorithm stands out due to its versatility in handling various imaging geometries, although it is hampered by high computational complexity and low efficiency. Frequency-domain imaging algorithms, on the other hand, achieve focused imaging through Fourier transforms in the frequency domain. Examples include the polar format algorithm (PFA) [20] and the wavefront reconstruction algorithm proposed by Soumekh [5]. Nevertheless, these algorithms have limited adaptability to diverse scenes and involve complex implementation processes [21]. Furthermore, their inherent approximations can affect the imaging accuracy of high-resolution CSAR.
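To make the cost of the BP algorithm concrete, the following minimal Python sketch backprojects range-compressed echoes onto a flat grid. It is an illustration under simplifying assumptions, not the implementation used in this work: the function names `backproject` and `simulate_point_target`, the flat scene at z = 0, the linear range interpolation, and the ideal sinc range response are all assumptions introduced here. The loop visits every pixel for every pulse, which is where the high computational complexity noted above comes from.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def backproject(rc, range_axis, platform_xyz, grid_x, grid_y, fc):
    """Backproject range-compressed, basebanded echoes onto a flat z = 0 grid.

    rc            : (n_pulses, n_range) complex range-compressed data
    range_axis    : (n_range,) slant range of each range bin in metres, ascending
    platform_xyz  : (n_pulses, 3) antenna position for each pulse
    grid_x, grid_y: 1-D pixel coordinates in metres
    fc            : carrier frequency in Hz
    """
    gx, gy = np.meshgrid(grid_x, grid_y)
    image = np.zeros(gx.shape, dtype=complex)
    k = 4.0 * np.pi * fc / C  # two-way wavenumber
    for pulse, (px, py, pz) in zip(rc, platform_xyz):
        # Slant range from this pulse position to every pixel.
        r = np.sqrt((gx - px) ** 2 + (gy - py) ** 2 + pz ** 2).ravel()
        # Linear interpolation of the echo at those ranges (zero outside the swath).
        sample = (np.interp(r, range_axis, pulse.real, left=0.0, right=0.0)
                  + 1j * np.interp(r, range_axis, pulse.imag, left=0.0, right=0.0))
        # Undo the two-way propagation phase, then accumulate coherently.
        image += (sample * np.exp(1j * k * r)).reshape(gx.shape)
    return image


def simulate_point_target(target_xyz, platform_xyz, range_axis, fc, range_res):
    """Ideal range-compressed response of one point scatterer (sinc envelope)."""
    r0 = np.linalg.norm(platform_xyz - np.asarray(target_xyz), axis=1)
    envelope = np.sinc((range_axis[None, :] - r0[:, None]) / range_res)
    return envelope * np.exp(-1j * 4.0 * np.pi * fc / C * r0)[:, None]
```

Feeding `simulate_point_target` output for a circular flight path into `backproject` should place the image peak at the simulated target position. The cost grows with the product of the number of pulses and the number of pixels.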

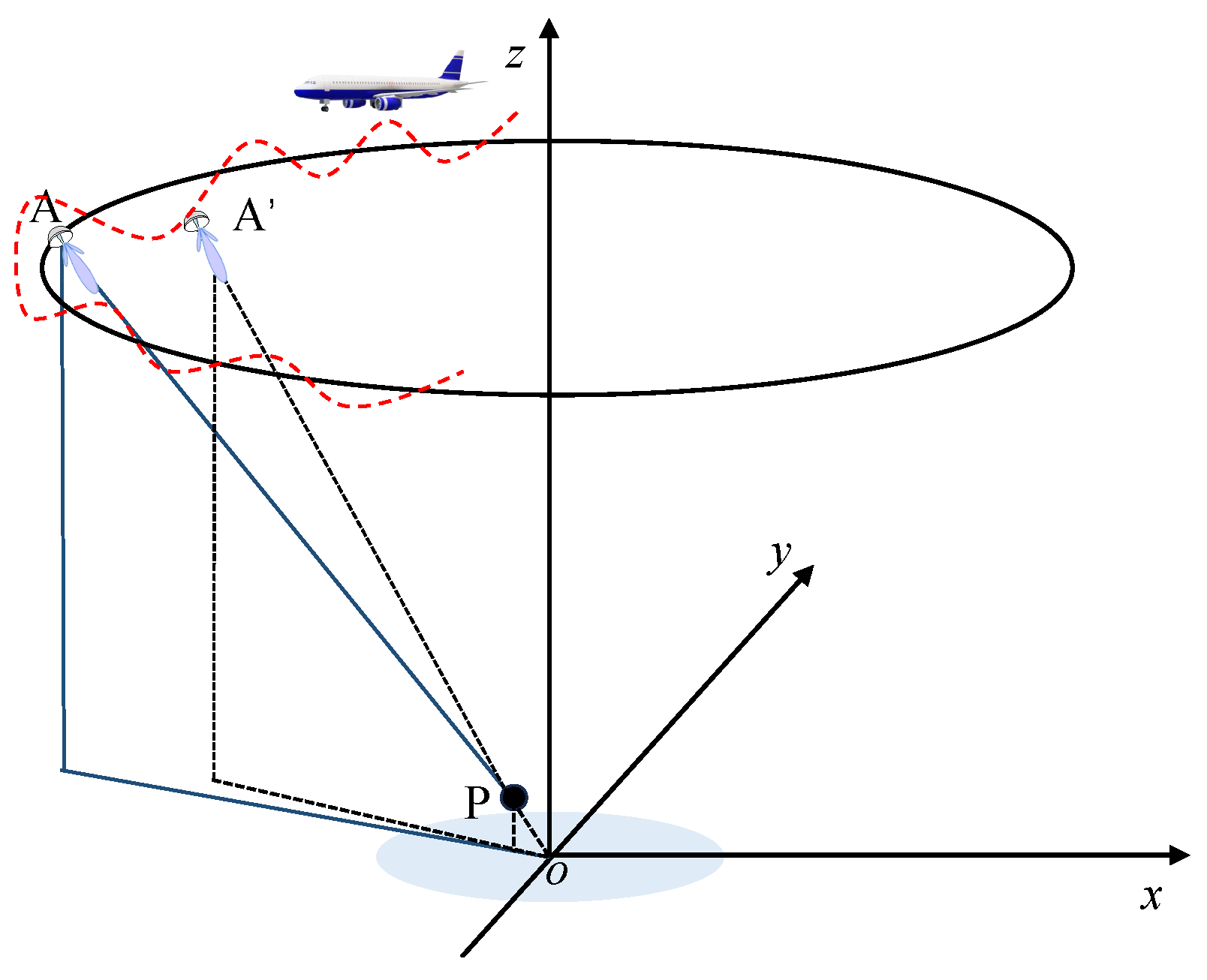

For airborne SAR platforms, imaging errors can be introduced due to airflow disturbances and carrier mechanical vibrations [

22]. The trajectory of CSAR is intricate, and the synthetic aperture time is prolonged, making the impact of motion errors significant. Unlike traditional linear SAR, CSAR motion compensation does not require aligning with an ideal trajectory. It only needs to correct slant-range errors and phase errors arising from trajectory measurement inaccuracies and other factors [

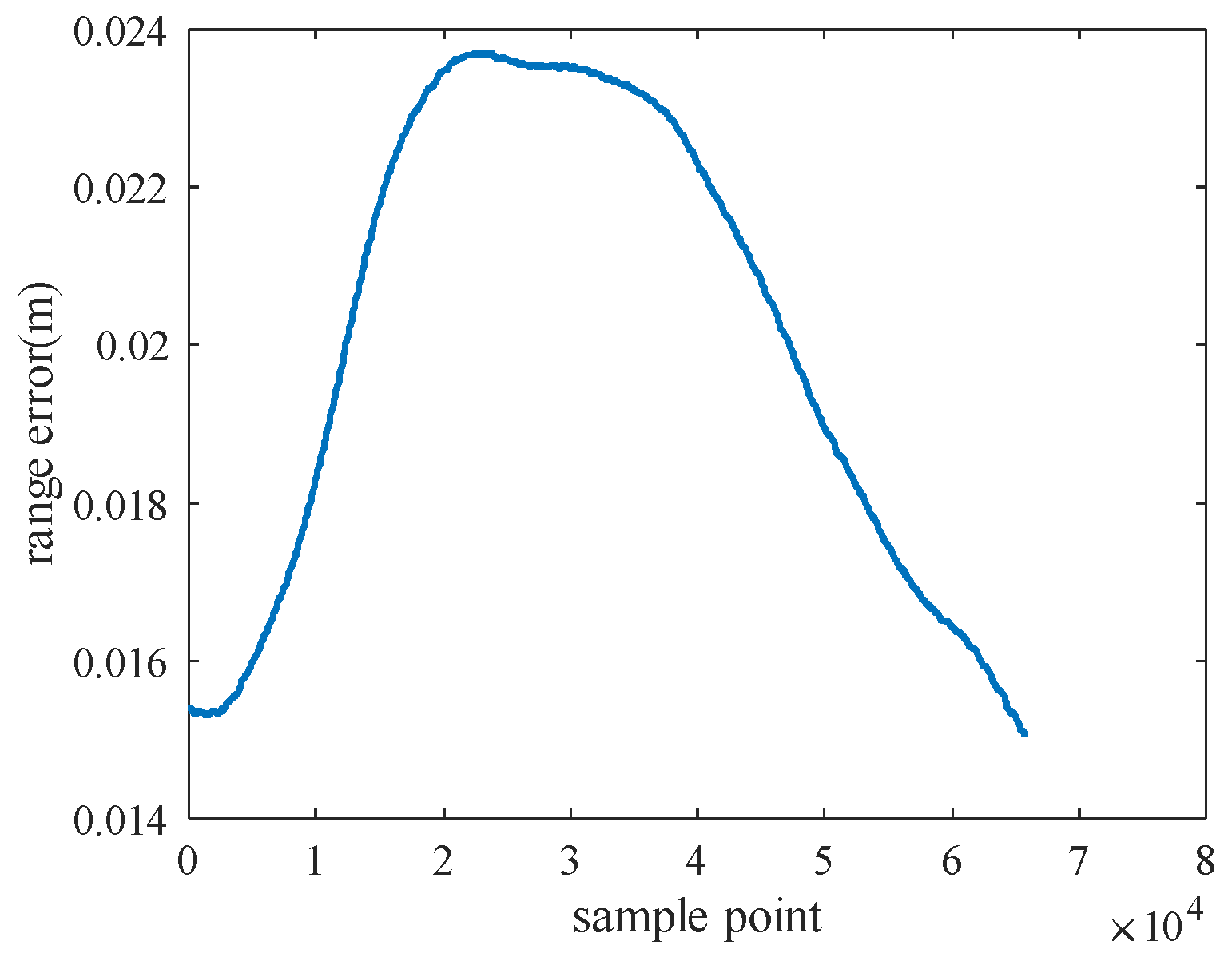

23]. The accuracy of the navigation system plays a crucial role in obtaining high-resolution CSAR images. To achieve superior imaging quality, the trajectory measurement errors need to be controlled within

of the wavelength [

24]. Additionally, CSAR faces challenges in motion compensation due to the strong coupling between the range and azimuth, along with the complexity of slant-range expressions. These factors contribute to the increased difficulty in motion compensation for CSAR.

In 2011, Lin et al. [25] introduced a CSAR focusing algorithm based on echo generation. This method performs reverse echo generation by extracting the phase error of point targets after coarse imaging to achieve motion compensation. In 2013, Zhou et al. [22] conducted a detailed analysis of the impact of three-dimensional position errors of the radar on the envelope and phase of the echo. In 2015, Zeng et al. [26] proposed a high-resolution SAR autofocus algorithm in polar format to address the insufficient inertial navigation accuracy for spotlight SAR, thereby achieving high-resolution imaging. In the same year, Zhang et al. [27] presented a CSAR autofocus algorithm based on sub-aperture synthesis to address the loss of imaging resolution caused by variations in target scattering coefficients with observation angle. In 2016, Luo et al. [28] introduced an extended factorized geometrical autofocus algorithm for time-domain CSAR. This approach combines factorized geometrical autofocus (FGA) with fast factorized backprojection (FFBP) processing, relying on gradually changing trajectory parameters to obtain a clear image. In 2017, Wang et al. [29] introduced a sub-bandwidth phase-gradient autofocus method to accomplish motion compensation for high-resolution imaging. In 2018, Kan et al. [30] quantitatively analyzed the peak-drop coefficient of the target in the presence of motion errors and used it to assess the impact of motion errors on imaging quality. In 2023, Li et al. [31] proposed an autofocus method that uses the Prewitt operator and particle swarm optimization (PSO) to optimize the autofocus process and enhance imaging quality.

In general, current motion compensation methods for CSAR can be broadly categorized into two types: those that rely on a high-precision Position and Orientation System (POS), and those based on echo data. However, relying on a POS for motion compensation is generally challenging, as it is difficult to meet the accuracy requirements of high-resolution CSAR [24,32,33]. Motion compensation based on echo data, on the other hand, involves estimating the motion-induced errors in the echo signal, which can be a complex process and is susceptible to interference from clutter signals [7]. The long synthetic aperture in CSAR introduces more complex motion errors than in linear SAR, making it difficult to directly apply classical autofocus algorithms to CSAR [22]. Therefore, specialized motion correction algorithms are needed to address the unique challenges of CSAR.

Currently, research on CSAR motion compensation primarily investigates the impact of radar positioning errors on imaging, and studies that compensate for motion errors using acceleration data remain scarce. In fact, the degree of deformation experienced by the lever arm connecting the Inertial Measurement Unit (IMU) and the Global Positioning System (GPS) antenna is proportional to the acceleration, so errors caused by deformation can be determined by analyzing the acceleration information. The contribution of this paper is to analyze the relationship between the acceleration of the airborne platform and the motion error and to propose a high-resolution CSAR motion compensation technique based on the platform’s acceleration information. After the system parameters are calibrated, the method uses acceleration data to directly calculate the motion error caused by deformation. In contrast to traditional methods, there is no need to employ point targets to calibrate the phase error of each scene: point targets are needed only to calibrate the system’s deformation error parameters. Afterwards, the phase errors of different scenes or different sub-apertures can be calculated directly from the acceleration. This method reveals a correlation between the acceleration and the residual phase errors and reduces the need for point targets. Finally, the effectiveness of the method is verified through airborne flight experiments. The results demonstrate that the algorithm significantly improves the peak sidelobe ratio (PSLR) and integrated sidelobe ratio (ISLR) of the target, thereby achieving high-quality imaging for CSAR.

This article is structured as follows. Section 2 introduces the signal model of multi-beam CSAR and the motion error analysis of CSAR. In Section 3, a high-resolution CSAR motion compensation algorithm is proposed, utilizing the acceleration information of the carrier platform. In Section 4, we introduce the airborne flight experiments and data acquisition. The proposed algorithm is validated through simulation and experimental data in Section 5. These results are further discussed and analyzed in Section 6, where the relationship between the acceleration information and residual phase error is explored. Conclusions are given in Section 7.

3. Method

This paper conducts two-dimensional imaging of multi-beam CSAR data and proposes a motion compensation algorithm for CSAR based on acceleration information from the airborne platform. The algorithm uses radial acceleration data from the POS to address phase errors stemming from deformation. First, azimuth reconstruction and coarse imaging of the multi-beam CSAR data are performed. Ground calibrators within the image are then chosen to extract the deformation errors, and the acceleration is used to estimate and calibrate the system’s deformation error parameters. Finally, with the calibrated system parameters, the deformation error of any imaging scene can be computed from the acceleration and used for phase compensation. The proposed method is divided into two main parts: data preprocessing and phase error compensation based on acceleration information. The primary processing flow is outlined as follows:

(1) The raw echo data undergo range pulse compression, and the pulse-compressed data are extracted at intervals to obtain multi-channel data.

(2) The data are converted into the azimuth frequency domain, and the Doppler center frequency is estimated. Subsequently, the data undergo interpolation and de-ambiguity processing.

(3) The delay factor in the multi-channel data is compensated to achieve time-domain alignment, and the multi-channel signal is then reconstructed as described in Section 2.1.

(4) The BP algorithm is employed for two-dimensional imaging. Calibrators are selected to mark the deformation error in the two-dimensional frequency domain.

(5) Acceleration information is calculated from the POS, the phase error is linearly estimated from the acceleration, and the system deformation error coefficient K is calibrated.

(6) Using the system parameter K calibrated in the previous step, the phase error requiring compensation is calculated from the acceleration, and error compensation is performed in the frequency domain to obtain the focused image.

The overall flowchart is illustrated in Figure 4.
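The first step of the flow, range pulse compression, can be sketched as FFT-based matched filtering. The snippet below is a minimal illustration that assumes a linear frequency-modulated (LFM) transmit pulse; the function names `lfm_chirp` and `range_compress` and all parameter values are hypothetical and are not taken from the experimental system.

```python
import numpy as np


def lfm_chirp(bandwidth, pulse_width, fs):
    """Baseband LFM replica sampled at fs (all quantities in SI units)."""
    t = np.arange(-pulse_width / 2.0, pulse_width / 2.0, 1.0 / fs)
    chirp_rate = bandwidth / pulse_width
    return np.exp(1j * np.pi * chirp_rate * t ** 2)


def range_compress(echo, replica):
    """Matched-filter every row of `echo` with `replica`.

    The FFT length is padded so the circular correlation equals the linear
    one; output sample d is the correlation at a delay of d samples.
    """
    n = echo.shape[-1] + replica.size - 1
    spectrum = np.fft.fft(echo, n, axis=-1) * np.conj(np.fft.fft(replica, n))
    return np.fft.ifft(spectrum, axis=-1)[..., : echo.shape[-1]]
```

For an echo that contains a copy of the replica starting at sample d, the magnitude of the compressed output peaks at index d. The multi-channel extraction in the same step then amounts to taking every N-th compressed pulse for each beam.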

The complete algorithm for data preprocessing is given in Algorithm 1.

| Algorithm 1 Multi-beam CSAR data preprocessing. |
| --- |
| Input: Radar raw echo. |
| Initialization: The raw echo is processed using pulse compression. Define the beam center pointing angle, the radar system parameters, the azimuth channel number N, and the reference channel. |
| Step 1: The multi-beam CSAR data after pulse compression are extracted to obtain N sub-channel data; |
| Step 2: The autocorrelation method is used to estimate the Doppler center frequency of each channel; |
| Step 3: The Doppler ambiguity m is calculated according to the oblique angle of the antenna beam center, and the ambiguity is resolved to obtain the unambiguous Doppler center frequency; |
| Step 4: The multi-channel data are zero-padded in the frequency domain so that the data of each channel are equal in length to the data before extraction; |
| Step 5: The multiple sets of data are interpolated, and the delay phase factor is compensated; |
| Step 6: The multi-channel data are accumulated according to Formula (4), and an azimuth inverse Fourier transform is performed to obtain the reconstructed time-domain pulse-compressed data; |
| Step 7: Time-domain two-dimensional imaging is performed using the BP algorithm. |
| Output: CSAR two-dimensional images. |
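Steps 1 to 3 of Algorithm 1 can be sketched in a few lines. The following is a minimal illustration, assuming the beams are interleaved pulse by pulse and using the standard lag-one correlation estimator as the autocorrelation method; the function names `deinterleave`, `doppler_centroid`, and `resolve_ambiguity`, and the idea of passing in an expected centroid predicted from the beam pointing, are assumptions introduced here rather than the exact procedure of this work.

```python
import numpy as np


def deinterleave(pulses, n_beams):
    """Split pulse-interleaved data (n_pulses, n_range) into one array per beam."""
    usable = (pulses.shape[0] // n_beams) * n_beams  # drop an incomplete last cycle
    return [pulses[b:usable:n_beams] for b in range(n_beams)]


def doppler_centroid(channel, prf):
    """Correlation estimate of the baseband Doppler centroid in Hz.

    The phase of the lag-one azimuth autocorrelation is proportional to the
    centroid, so the result is only known modulo `prf`.
    """
    lag_one = np.sum(channel[1:] * np.conj(channel[:-1]))
    return prf * np.angle(lag_one) / (2.0 * np.pi)


def resolve_ambiguity(f_baseband, f_expected, prf):
    """Choose the ambiguity number m so that f_baseband + m * prf is closest to f_expected."""
    m = int(np.round((f_expected - f_baseband) / prf))
    return m, f_baseband + m * prf
```

Here `prf` is the pulse repetition frequency of a single channel after extraction, and `f_expected` stands for a coarse centroid predicted from the beam geometry. The estimate only needs to be accurate to within half a PRF for the rounding to select the correct m.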

Consider an image that contains the phase error, and let its spectrum be obtained through a two-dimensional FFT. For a target at a given position, under ideal conditions, the two-dimensional spectral phase includes only the initial phase of the target and the linear phase related to the target position. Neglecting the initial phase and the envelope of the transmitted signal spectrum gives the ideal spectrum of Equation (11).

The phase difference between the measured spectrum and this ideal spectrum is the phase error. From Equation (7), it can be observed that the phase error at the central wavenumber primarily influences imaging. Therefore, the residual phase is evaluated at the central wavenumber.

The time-domain image obtained through the BP algorithm exhibits periodic blurring (aliasing) in the spectrum when the imaging grid is relatively coarse, i.e., when it does not satisfy the grid spacing required for full-aperture imaging. Therefore, deblurring is necessary before establishing the time-frequency relationship between the two-dimensional wavenumber domain and the angular wavenumber domain. The central frequencies of the two-dimensional spectrum of the radar signal along the two wavenumber axes can be expressed in terms of the look angle of the radar and the radar signal carrier frequency. Under full-aperture conditions, the plane resolution of CSAR is determined by the carrier frequency and the signal bandwidth B. For a given imaging grid spacing, the frequency range obtained in each of the two wavenumber directions is fixed by that spacing, and the blur period is described by an integer blur number in each wavenumber direction. Finally, the residual phase at the central frequency is extracted from the frequency-domain image.
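The deblurring step rests on the sampling theorem: an image sampled with grid spacing d has a spectrum that repeats every 2π/d in wavenumber, so a centre wavenumber outside the baseband is folded back by an integer number of periods. The sketch below illustrates this relationship only. It does not reproduce Equation (14), and the function names are hypothetical.

```python
import numpy as np


def fft_wavenumber_axis(n, spacing):
    """Wavenumber axis (rad/m) of an n-point, fftshift-ed FFT for samples `spacing` metres apart."""
    return 2.0 * np.pi * np.fft.fftshift(np.fft.fftfreq(n, d=spacing))


def blur_number(k_center, spacing):
    """Number of whole spectral periods between baseband and the true centre wavenumber."""
    return int(np.round(k_center * spacing / (2.0 * np.pi)))


def deblurred_axis(n, spacing, k_center):
    """Shift the aliased FFT axis by blur_number periods so that it is centred near k_center."""
    period = 2.0 * np.pi / spacing
    return fft_wavenumber_axis(n, spacing) + blur_number(k_center, spacing) * period
```

For a two-dimensional image the same functions would be applied independently along each axis, using the centre wavenumber component in that direction. The sketch assumes the signal band does not straddle the edge of a spectral period; that case needs additional handling.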

However, there is no direct relationship between the azimuth time and the phase error, so the phase error must be extracted for each sub-aperture. Sometimes there is no suitable isolated point target in the scene, and the phase error is difficult to obtain directly; a more adaptive method is therefore needed. Radial acceleration can, to some extent, reflect the degree of deformation of the lever arm between the IMU and GPS, and hence the motion errors. This paper therefore proposes a CSAR motion compensation method based on acceleration information, which uses the acceleration to estimate the phase error of each sub-aperture and then compensates for it to obtain the focused image. The complete algorithm is given in Algorithm 2.

| Algorithm 2 CSAR motion compensation algorithm based on acceleration. |
| --- |
| Input: Two-dimensional CSAR images. |
| Initialization: Select the calibrator and apply windowing to reduce clutter and interference from nearby targets, obtaining the windowed image data. |
| Step 1: Perform a two-dimensional Fourier transform on the windowed data to move to the frequency domain; |
| Step 2: Deblur in the frequency domain; |
| Step 3: Extract the phase at the central wavenumber and unwrap the phase; |
| Step 4: Remove the linear phase to extract the residual phase errors; obtain the residual motion errors based on Equation (12); |
| Step 5: Calculate the radial acceleration using POS data; |
| Step 6: Fit the residual motion error to the radial acceleration using the least-squares method to determine the system parameter K; |
| Step 7: Using the system parameter K obtained in Step 6, calculate the phase error from the acceleration and compensate for it to obtain the compensated spectrum; |
| Step 8: Perform a two-dimensional inverse Fourier transform on the compensated spectrum to obtain the motion-compensated image; |
| Step 9: For a new scene, after transforming to the two-dimensional frequency domain, repeat Steps 7 and 8 to obtain the compensated focused image. |
| Output: Two-dimensional CSAR image after motion compensation. |
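Steps 6 and 7 of Algorithm 2 can be sketched as an ordinary least-squares fit followed by a phase prediction. The snippet below is a minimal illustration under stated assumptions: the residual phase is converted to a slant-range error with the usual two-way factor 4π/λ (standing in for Equation (12), which is not reproduced here), the fit includes a constant offset b in addition to the coefficient K, and the sign convention of the compensation phase is chosen arbitrarily. The function names are hypothetical.

```python
import numpy as np


def phase_to_range_error(phase, wavelength):
    """Two-way propagation: a slant-range error dr produces a phase of 4*pi*dr/wavelength."""
    return phase * wavelength / (4.0 * np.pi)


def calibrate_k(radial_accel, range_error):
    """Least-squares fit of range_error ~ K * radial_accel + b; returns (K, b)."""
    design = np.column_stack([radial_accel, np.ones_like(radial_accel)])
    (k, b), *_ = np.linalg.lstsq(design, range_error, rcond=None)
    return k, b


def compensation_phase(radial_accel, k, b, wavelength):
    """Phase to add to the data so that the acceleration-predicted error is cancelled."""
    return -4.0 * np.pi * (k * radial_accel + b) / wavelength
```

Once K and b have been calibrated on a scene with a calibrator, `compensation_phase` needs only the radial acceleration of a new scene or sub-aperture, which mirrors Step 9.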

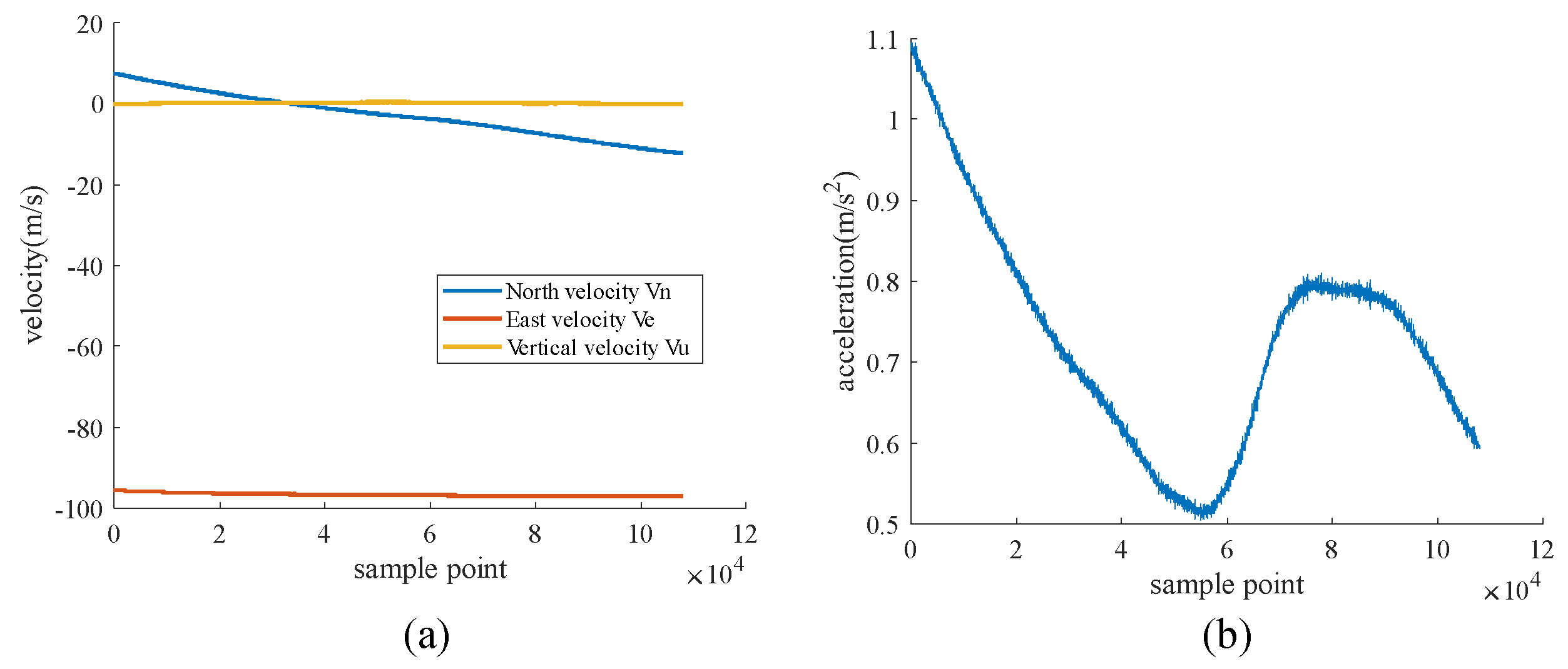

4. Airborne Experiments and Data Acquisition

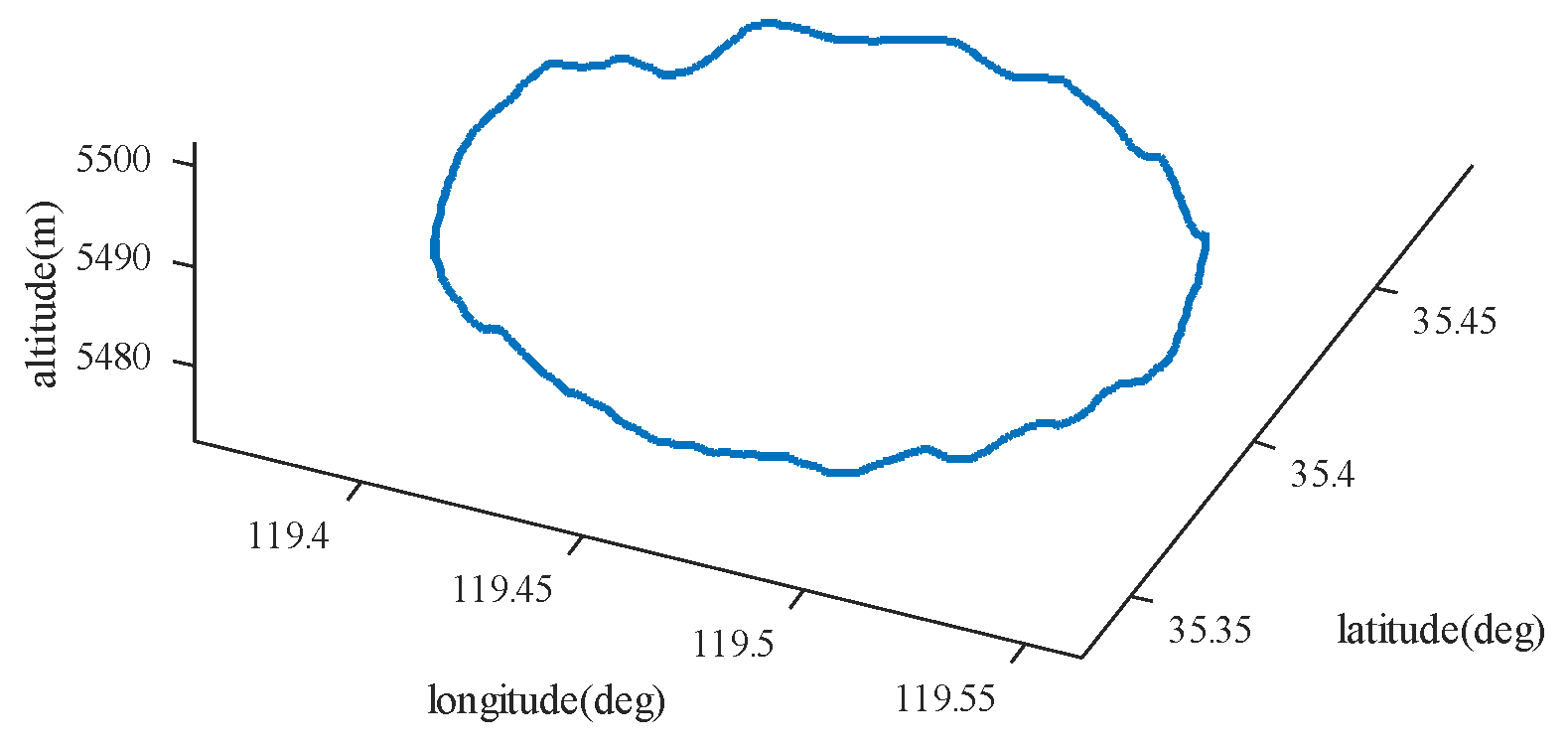

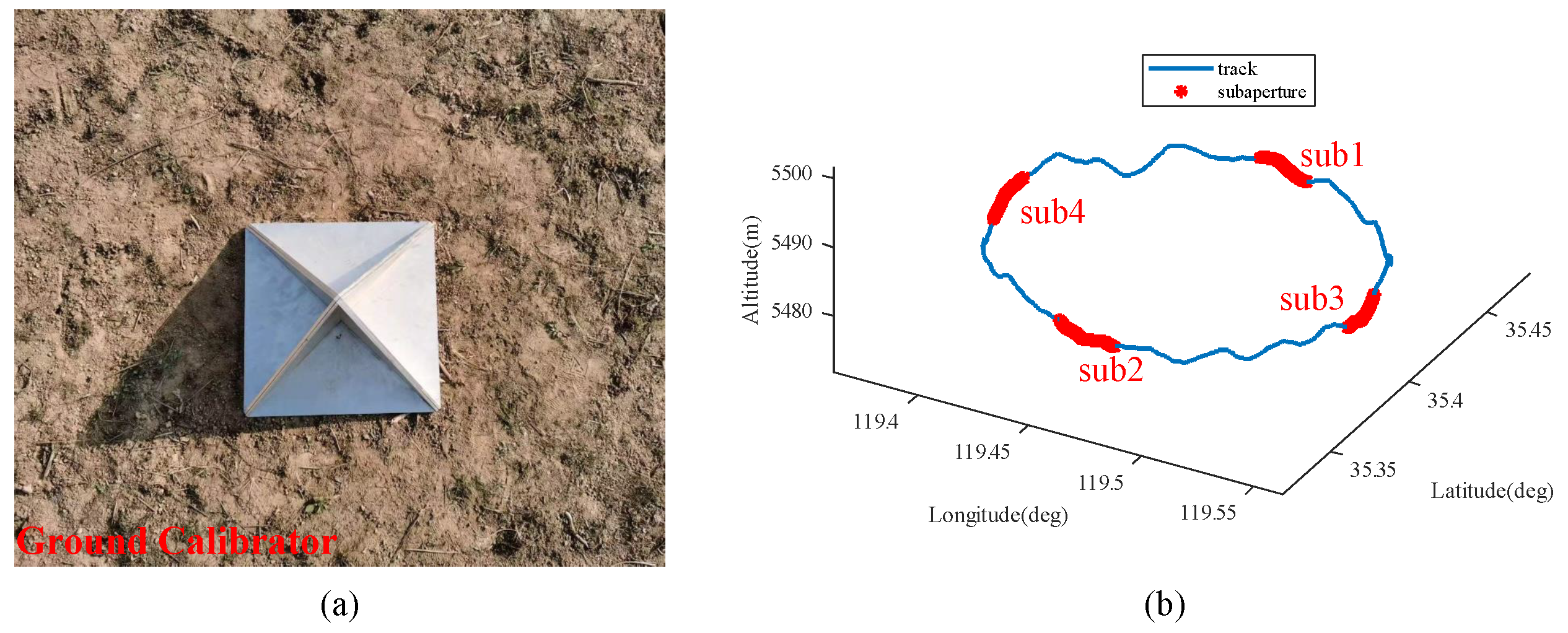

In 2021, AIRCAS conducted airborne multi-beam CSAR flight experiments in Rizhao, Shandong, China. The experimental system is shown in Figure 5. The GPS antenna is located on top of the aircraft, and the phased-array radar, closely integrated with the IMU, is positioned on the belly of the aircraft. The system is configured with six beam positions that are scanned in angle. The aircraft flies counterclockwise at an altitude of 5500 m, with the radar operating in left-looking mode in the X-band. Table 1 provides the configuration parameters of the airborne multi-beam CSAR experimental system. Figure 6 illustrates the flight trajectory of the radar platform.

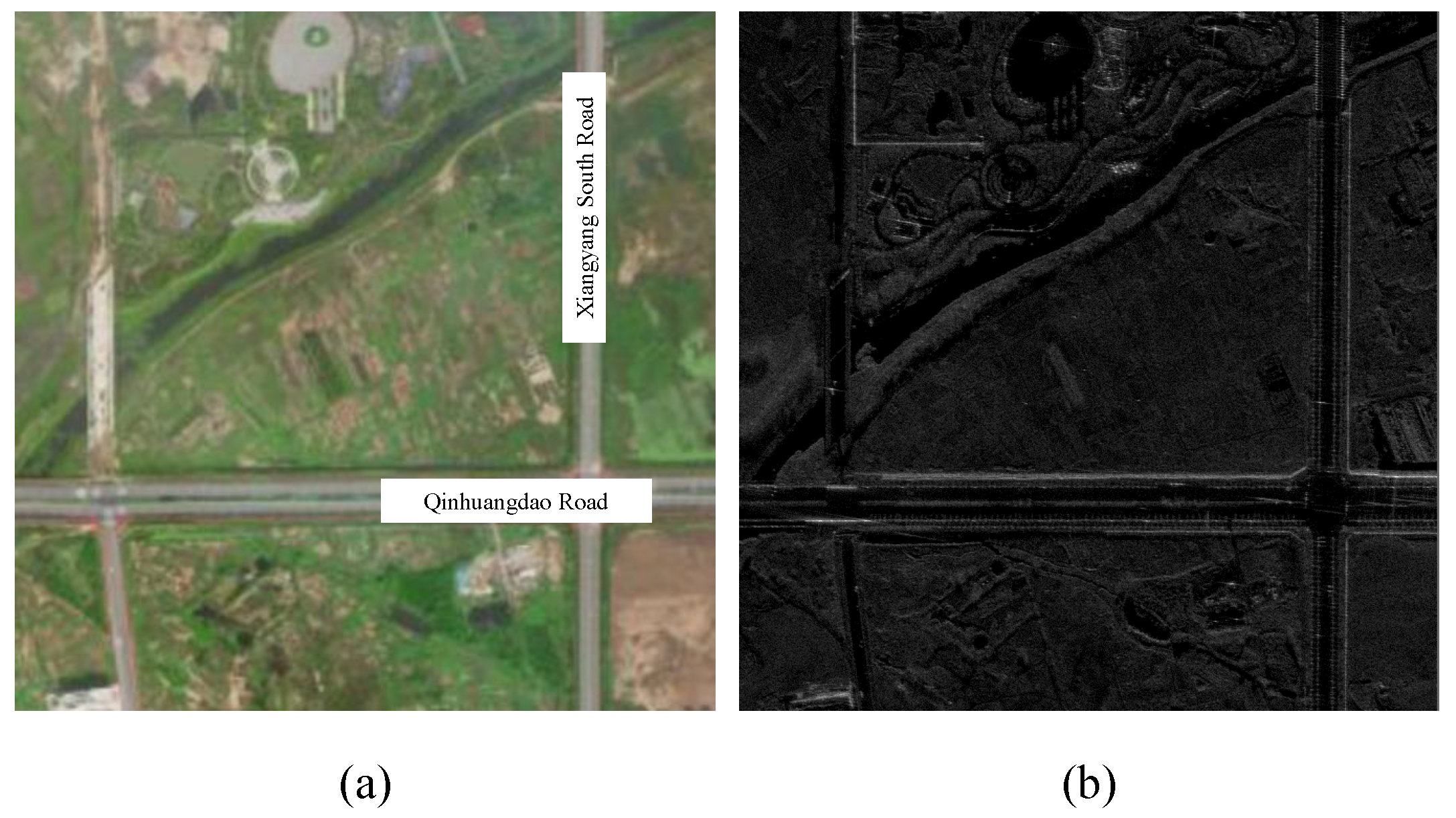

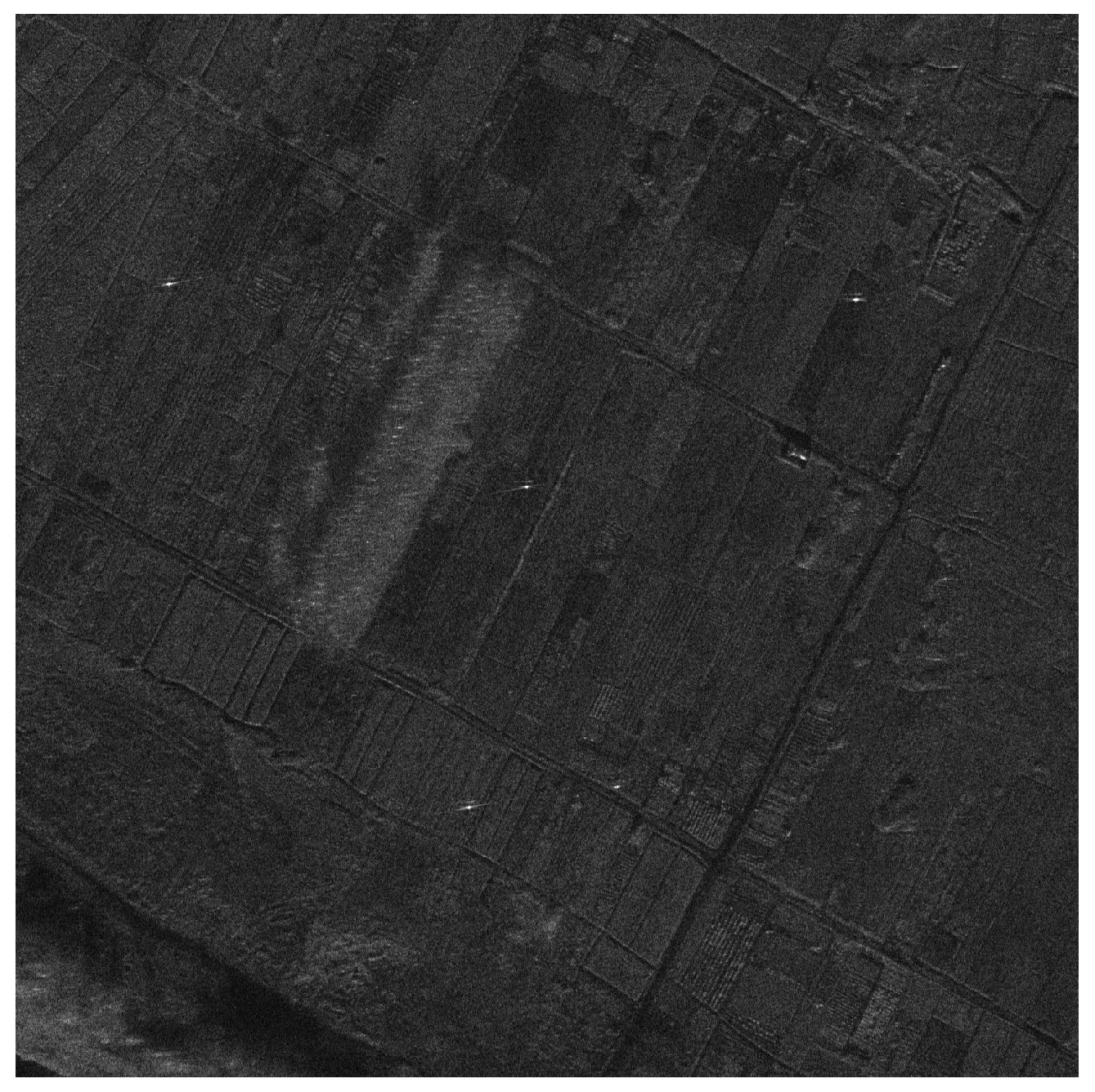

Figure 7 shows an optical image and a SAR image of the imaging area.

Before imaging, it is necessary to reconstruct the azimuth signal of the obtained multi-beam CSAR. This involves deinterleaving the data after pulse compression to extract multi-beam data, numbering channel 1 to channel 6, respectively. To estimate the center frequency of the signal in the baseband, the autocorrelation method is employed. Additionally, the Doppler ambiguity is resolved by considering the beam-pointing relationship. The multi-channel data are then deblurred and interpolated in the frequency domain, ensuring that the data length matches that of the original data and compensating for the delay phase factor. Subsequently, the multi-channel data are combined into a single-channel signal, with attention given to the energy balance during spectrum splicing. As shown in

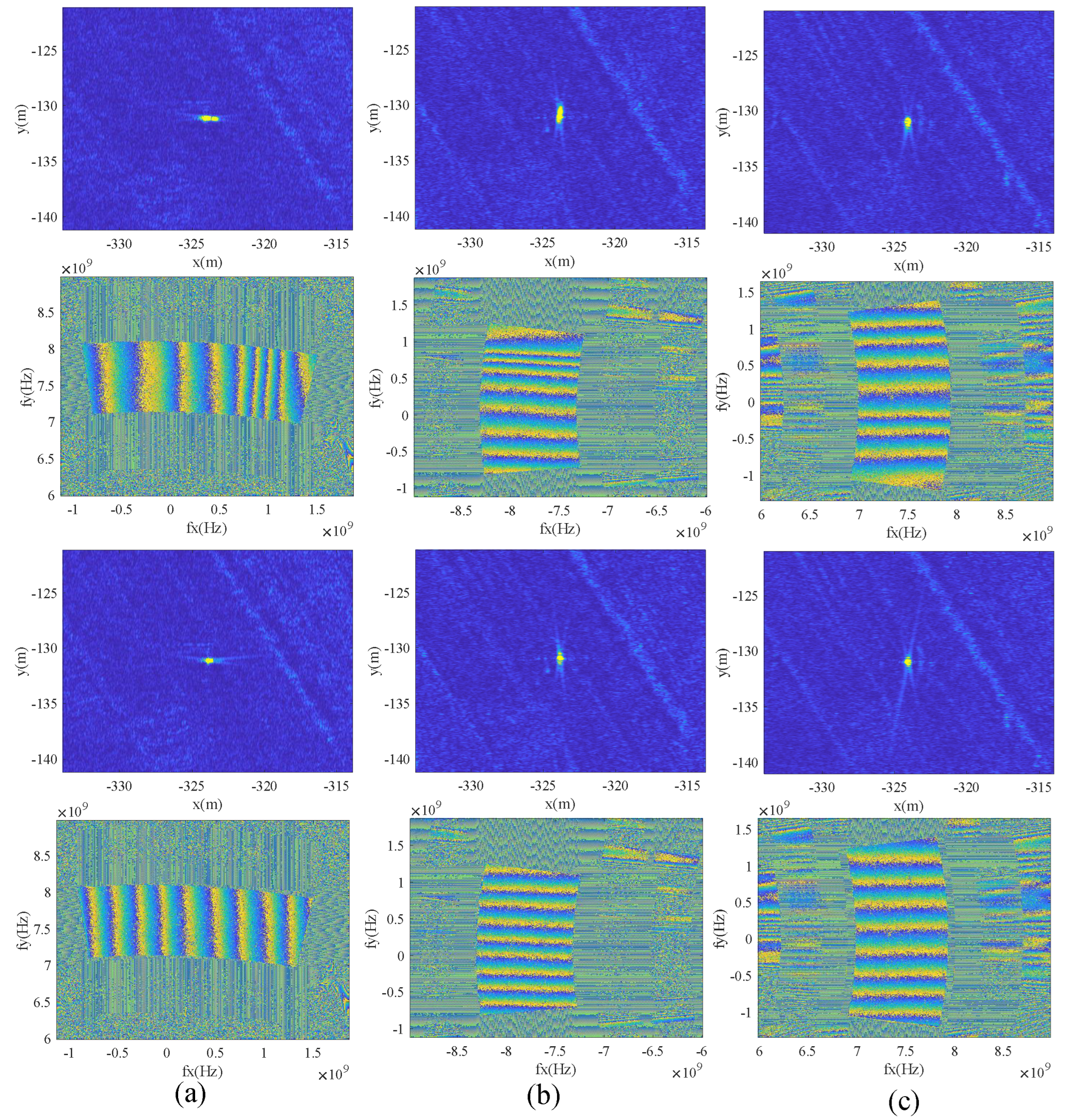

Figure 8, four sub-apertures with varying angles are chosen for data preprocessing, leading to the generation of reconstructed signals and imaging results. The sub-aperture length is set to 6000 pulses. The reconstructed data can be imaged, but they still have the residual phase error. Therefore, it is necessary to study CSAR motion compensation technology based on acceleration information.

6. Discussion

The deformation error of the lever arm exhibits a strong correlation with acceleration. Consequently, this paper introduces a CSAR motion compensation method based on acceleration information. The method first uses point targets to calibrate the system parameters and then calculates the deformation error that requires compensation at each position from the calibrated parameters and the acceleration. Once the system parameters are calibrated, there is no longer a need to employ a point target to estimate the phase error for new scenes and new sub-apertures, which removes the dependence on point targets after calibration. The method proposed in this paper has been validated through airborne flight experiments, and the experimental results are presented in Section 5.

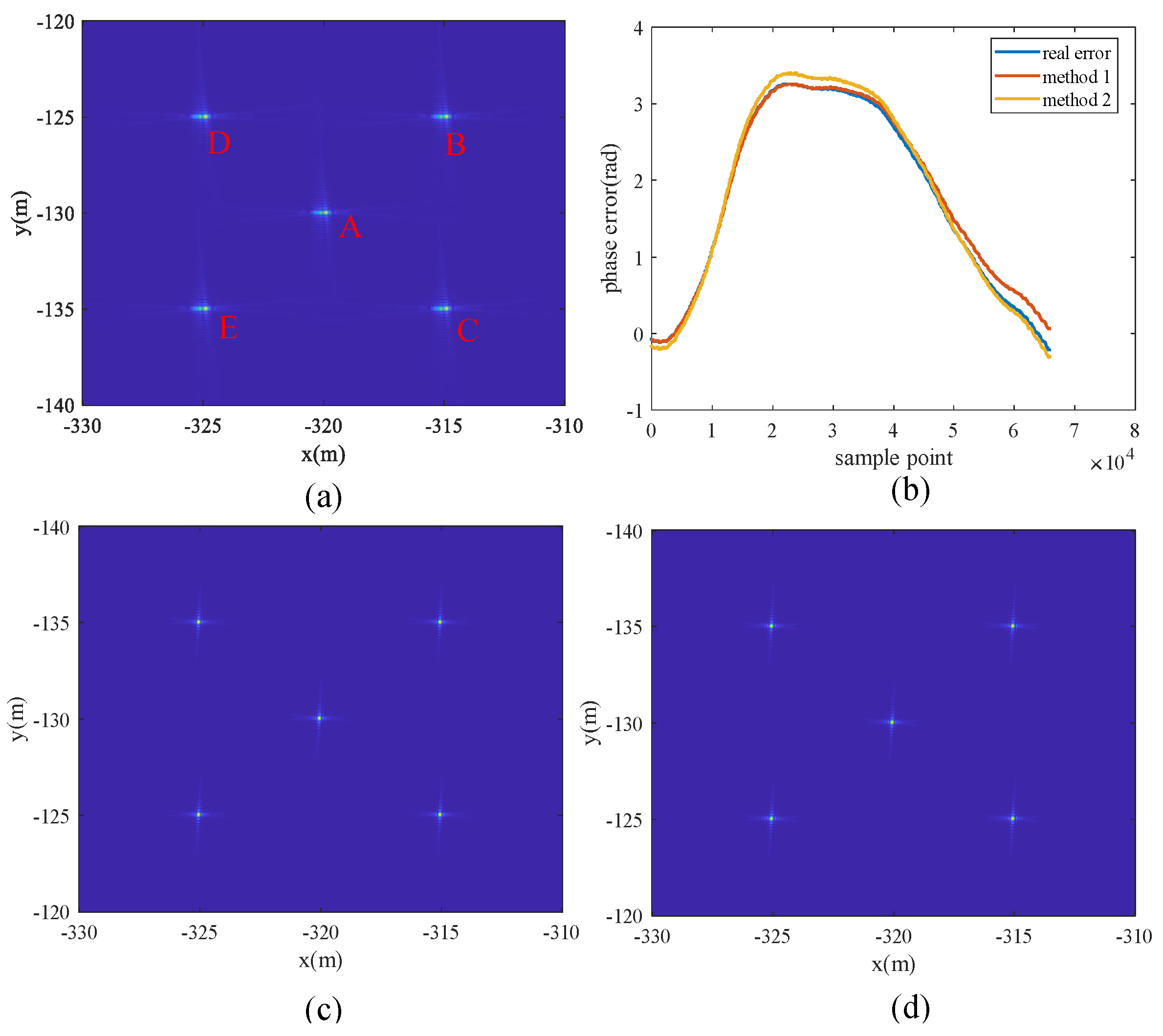

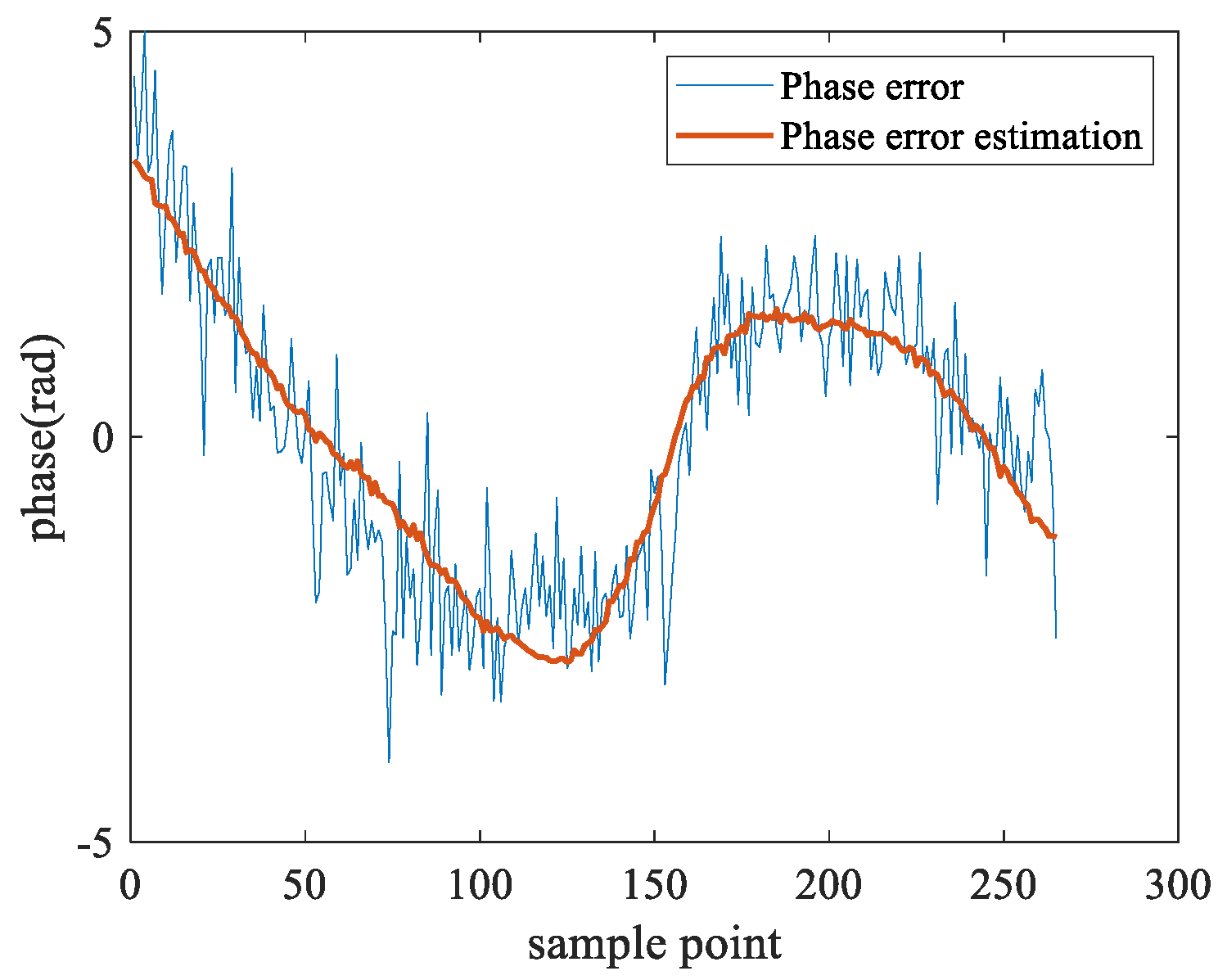

Firstly, we reconstruct the azimuth signal of multi-beam CSAR based on Algorithm 1. Subsequently, motion compensation based on the acceleration information is executed, following the steps outlined in Algorithm 2. As depicted in

Figure 12, the selected calibrator (or isolated strong point) is windowed in the image domain, and the synthetic aperture size is set to 15∼20 deg. Equation (

14) is employed for deblurring, resulting in a frequency spectrum phase, as illustrated in

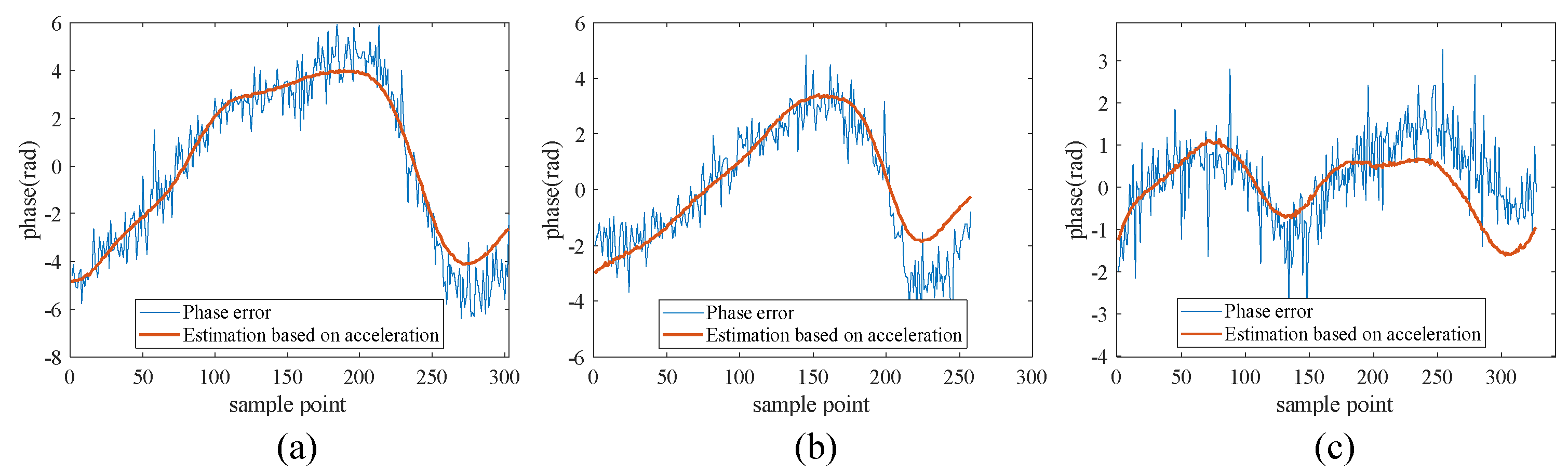

Figure 12d. For a target, its frequency spectrum phase should only contain an initial phase and a linear phase, with the phase uniformly varying. We extract the phase at the center frequency, and after unwrapping the phase and removing the linear phase, we obtain a residual phase error. This phase error is challenging to express directly in an equation for compensation during the CSAR imaging process. There is a correlation between the phase error and radial acceleration. The parameter relationship between the acceleration information and phase error can be estimated from a sub-aperture, as shown in

Figure 15. This allows us to calculate the phase error compensation required at various azimuth times based on the acceleration information. One source of error is the frequent changes in the flight attitude of CSAR. Coupled with the non-rigid connection between the IMU and GPS, the deformation error of the lever arm becomes significant. The variations in acceleration serve as an indicator of the degree of lever arm deformation. Consequently, utilizing acceleration to estimate residual motion errors is a rational and effective approach.
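The unwrap-and-detrend step described above can be sketched as follows. This is a minimal illustration, assuming the spectrum has already been deblurred and sampled along the arc at the central wavenumber, and modelling the ideal point-target phase as a plane in the two wavenumber coordinates plus a constant. The function name `residual_phase` and its arguments are hypothetical.

```python
import numpy as np


def residual_phase(arc_samples, kx, ky):
    """Unwrap the spectral phase along an arc and remove its best-fit linear part.

    arc_samples : complex spectrum values at the central wavenumber, ordered in azimuth
    kx, ky      : wavenumber coordinates (rad/m) of those samples
    """
    phase = np.unwrap(np.angle(arc_samples))
    # Ideal point target: phase = a * kx + b * ky + c, where (a, b) depend on
    # the target position and c is the initial phase.
    basis = np.column_stack([kx, ky, np.ones_like(kx)])
    coeffs, *_ = np.linalg.lstsq(basis, phase, rcond=None)
    return phase - basis @ coeffs
```

Because the linear part is fitted from the data itself, any component of the true error that looks like a target shift or a constant phase is removed together with it. The returned residual is therefore the part of the error that defocuses the target rather than displacing it.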

Figure 18 illustrates the estimated results for sub-apertures in various directions. The radial acceleration information aligns well with the residual motion error. Figure 17 and Figure 19 show the motion compensation results for four directions. After compensation, the target’s spectral phase becomes uniform, retaining only the linear phase associated with the target position, and the target point is focused. Further analysis of the target’s azimuth response reveals a significant improvement in the peak sidelobe ratio and integrated sidelobe ratio, confirming the effectiveness of the proposed method. Notably, this paper introduces acceleration information into CSAR motion compensation and verifies its application in high-resolution CSAR imaging.
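For reference, the two quality metrics can be computed from a one-dimensional cut through the point-target response as sketched below. The sketch assumes the common definitions, with the main lobe taken between the first nulls on either side of the peak, and it assumes the cut is long enough to contain sidelobes. The function name `pslr_islr` is hypothetical, and other main-lobe conventions exist.

```python
import numpy as np


def pslr_islr(profile):
    """Return (PSLR, ISLR) in dB for a 1-D cut through a point-target response."""
    power = np.abs(np.asarray(profile)) ** 2
    peak = int(np.argmax(power))
    # Walk outwards from the peak to the first local minimum on each side.
    left = peak
    while left > 0 and power[left - 1] < power[left]:
        left -= 1
    right = peak
    while right < power.size - 1 and power[right + 1] < power[right]:
        right += 1
    main_lobe = power[left:right + 1]
    side_lobes = np.concatenate([power[:left], power[right + 1:]])
    pslr = 10.0 * np.log10(side_lobes.max() / power[peak])
    islr = 10.0 * np.log10(side_lobes.sum() / main_lobe.sum())
    return pslr, islr
```

For an unweighted sinc response, the PSLR computed this way is close to the well-known value of about -13.3 dB. Uncompensated phase errors raise both ratios, so lower values after compensation indicate better focusing.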