Probabilistic Estimation of Tropical Cyclone Intensity Based on Multi-Source Satellite Remote Sensing Images

Abstract

1. Introduction

1.1. Motivation and Background

- We introduce a novel network for probabilistic estimation of tropical cyclone (TC) intensity from multi-source satellite remote sensing images, marking the first application of uncertainty estimation to TC intensity estimation.

- Experimental results on the constructed MTCID dataset demonstrate that our model achieves performance comparable to current mainstream networks in deterministic TC intensity estimation and provides reliable probability estimates.

- Probability and interval estimates of TC intensity help decision makers better assess the danger posed by a TC, and help governments and emergency agencies at all levels adopt timely and reasonable warning measures to minimize the impact of disasters.

1.2. Related Work

1.2.1. Estimates of TC Intensity

1.2.2. Uncertainty Research

2. Materials and Methods

2.1. Introduction to the Dataset

2.1.1. Source of Data

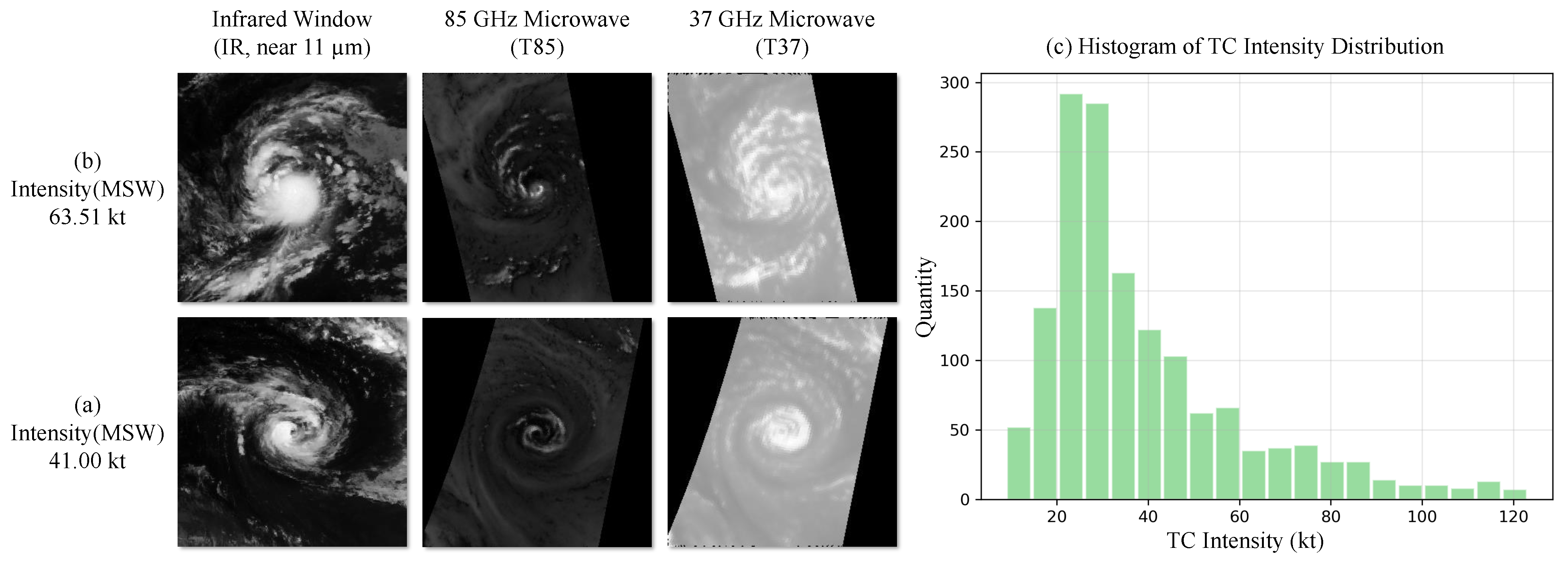

- HURSAT-B1 provides the infrared images of TCs. It covers global TCs from 1978 to 2015 at a spatial resolution of 8 km and a temporal interval of 3 h. In this study, its IRWIN (infrared window, near 11 µm) channel supplies the infrared images of the MTCID dataset.

- HURSAT-MW provides microwave images of TCs from passive microwave observations. The dataset contains a total of 2412 TC microwave images from 1987 to 2009, with the same spatial resolution as HURSAT-B1. In this study, the 37 GHz (T37) and 85 GHz (T85) channels serve as the microwave images of the MTCID dataset. This selection follows [11], which found the 85–92 GHz range to be highly influential in its model, while including 37 GHz provided only a marginal benefit.
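HURSAT-B1 supplies an infrared frame every 3 h, whereas the microwave images come from polar-orbiting overpasses at irregular times, so each microwave image has to be matched to an infrared frame before the channels can be combined into one sample. The pairing rule is not described here, so the following is only a sketch under assumptions: it matches each microwave timestamp to the nearest infrared timestamp of the same storm and drops matches farther apart than a hypothetical `tolerance` of 1.5 h (half the infrared interval). The helper name `pair_nearest` is illustrative.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def pair_nearest(ir_times, mw_times, tolerance=timedelta(hours=1, minutes=30)):
    """Match each microwave (MW) timestamp to the nearest infrared (IR) timestamp.

    Hypothetical helper: assumes both lists belong to the same storm and that
    ir_times is sorted in ascending order. Returns (mw_index, ir_index) pairs;
    MW images with no IR frame within `tolerance` are dropped.
    """
    pairs = []
    for j, t in enumerate(mw_times):
        k = bisect_left(ir_times, t)  # index of the first IR frame at or after t
        # The nearest IR frame is either the one just before t or the one at/after t.
        candidates = [i for i in (k - 1, k) if 0 <= i < len(ir_times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda i: abs(ir_times[i] - t))
        if abs(ir_times[best] - t) <= tolerance:
            pairs.append((j, best))
    return pairs


# Usage: IR frames every 3 h; one MW overpass near 04:10 and one far from any IR frame.
ir = [datetime(2005, 8, 28, h) for h in (0, 3, 6, 9)]
mw = [datetime(2005, 8, 28, 4, 10), datetime(2005, 8, 28, 13, 0)]
print(pair_nearest(ir, mw))  # [(0, 1)]
```

Binary search keeps the matching at O(log n) per microwave image, which matters little for one storm but helps when all storms are processed in a single pass.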

2.1.2. Construction of the MTCID Dataset

- The dataset contains numerous images with black patches that carry no information about the TC and could complicate the learning process. Hence, we compute the proportion of invalid pixels in each image and eliminate images in which this proportion exceeds 40%.

- Because polar-orbiting meteorological satellites capture microwave data in a striped pattern, images whose scan coverage is less than 60% of the image frame are discarded directly. Moreover, each pixel value is a floating-point number between 0 and 350, and empty values are filled with the maximum value (350, consistent with the background value).

- The data are intrinsically long-tailed, containing many more low- and medium-intensity TCs than high-intensity ones; Figure 1c illustrates the intensity distribution of the TC dataset. To balance the dataset and alleviate this long-tail effect, we apply data augmentation, generating additional high-intensity TC samples through operations such as random rotation and added noise. Figure 2 illustrates the augmentation methods employed.
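The three construction steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: invalid infrared pixels are assumed to be NaN or non-positive, missing microwave pixels are assumed to be NaN, rotations are restricted to multiples of 90°, and the noise level (`noise_std`), the oversampling threshold (`threshold_kt`, here 64 kt), and the number of extra copies (`copies`) are hypothetical values. All function names are illustrative.

```python
import numpy as np

FILL_VALUE = 350.0  # maximum pixel value, also used as the background value


def invalid_ratio(ir_img):
    """Fraction of IR pixels treated as invalid (assumption: NaN, inf, or <= 0)."""
    cleaned = np.nan_to_num(ir_img, nan=0.0, posinf=0.0, neginf=0.0)
    return float((cleaned <= 0).mean())


def keep_ir(ir_img, max_invalid=0.40):
    """Discard IR images in which more than 40% of pixels are invalid."""
    return invalid_ratio(ir_img) <= max_invalid


def keep_mw(mw_img, min_coverage=0.60):
    """Discard MW images whose scanned area covers less than 60% of the frame."""
    return float(np.isfinite(mw_img).mean()) >= min_coverage


def fill_missing(mw_img):
    """Fill empty MW pixels with 350 and keep all values inside [0, 350]."""
    filled = np.where(np.isfinite(mw_img), mw_img, FILL_VALUE)
    return np.clip(filled, 0.0, FILL_VALUE)


def augment(img, rng, noise_std=2.0):
    """Random 90-degree rotation of a 2-D image plus additive Gaussian noise."""
    rotated = np.rot90(img, k=int(rng.integers(0, 4)))
    noisy = rotated + rng.normal(0.0, noise_std, size=rotated.shape)
    return np.clip(noisy, 0.0, FILL_VALUE)


def oversample(images, labels_kt, threshold_kt=64.0, copies=2, seed=0):
    """Append `copies` augmented versions of every sample at or above threshold_kt."""
    rng = np.random.default_rng(seed)
    out_x, out_y = list(images), list(labels_kt)
    for img, y in zip(images, labels_kt):
        if y >= threshold_kt:
            for _ in range(copies):
                out_x.append(augment(img, rng))
                out_y.append(y)
    return out_x, out_y
```

Restricting rotations to multiples of 90° keeps pixel values exact; arbitrary-angle rotation (for example with `scipy.ndimage.rotate`) would require interpolation and a choice of fill value for the exposed corners.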

2.2. TC Intensity Probability Estimation Network

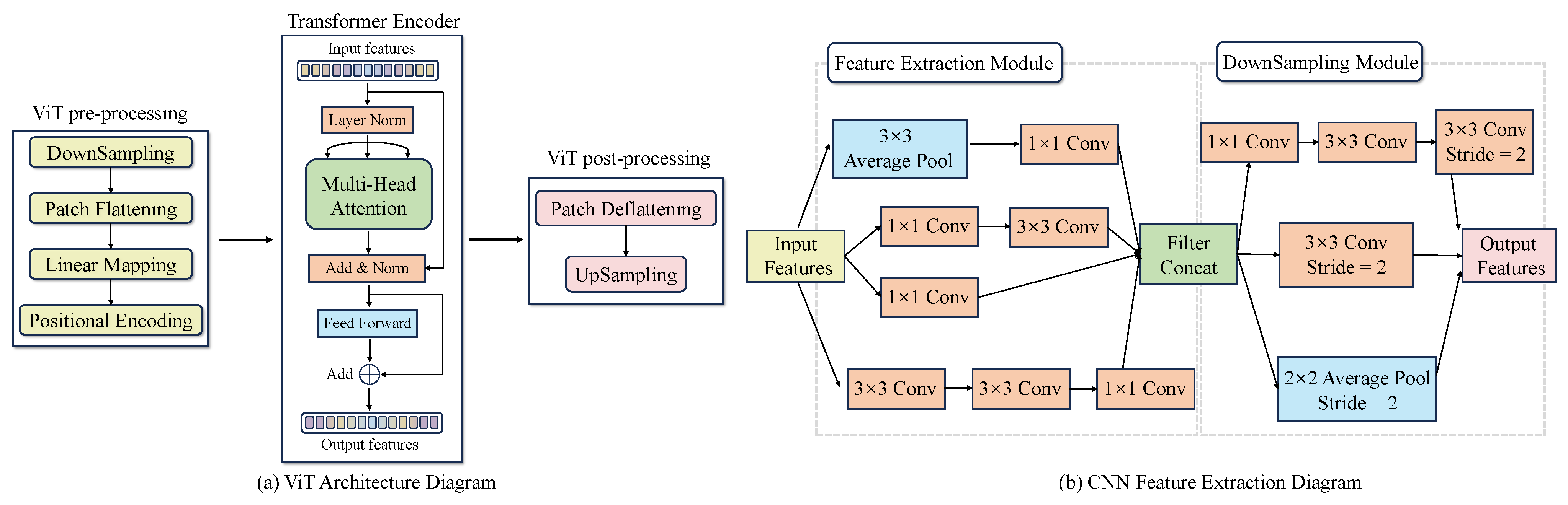

2.2.1. Dual-Branch Self-Attention Encoder

2.2.2. Feature Fusion and Intensity Estimation

2.2.3. Loss Function

3. Experimental Results

3.1. Experimental Setup and Evaluation Metrics

3.1.1. Deterministic Estimation Metrics

3.1.2. Probabilistic Estimation Evaluation Metrics

3.2. Ablation Experiment Results

3.2.1. Module Ablation Experiment for MTCIE

3.2.2. Ablation Experiment for Multiple Source Image Inputs

3.3. Deterministic Estimation Experiments

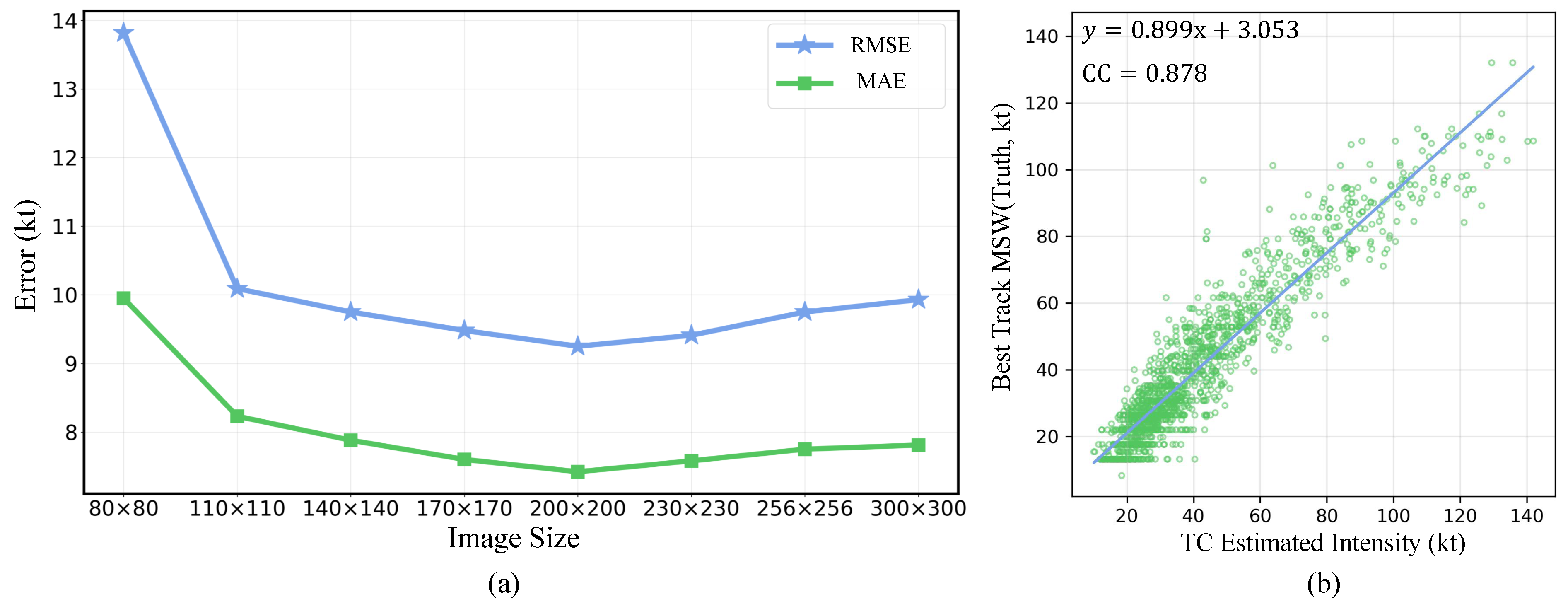

3.3.1. Input Image Size Comparative Experiment

3.3.2. Test Dataset Quantitative Evaluation Experiment

3.3.3. Comparative Experiments in Deterministic Estimation

3.4. Probability Estimation Experiments

3.4.1. Comparative Experiment of Probability Estimation

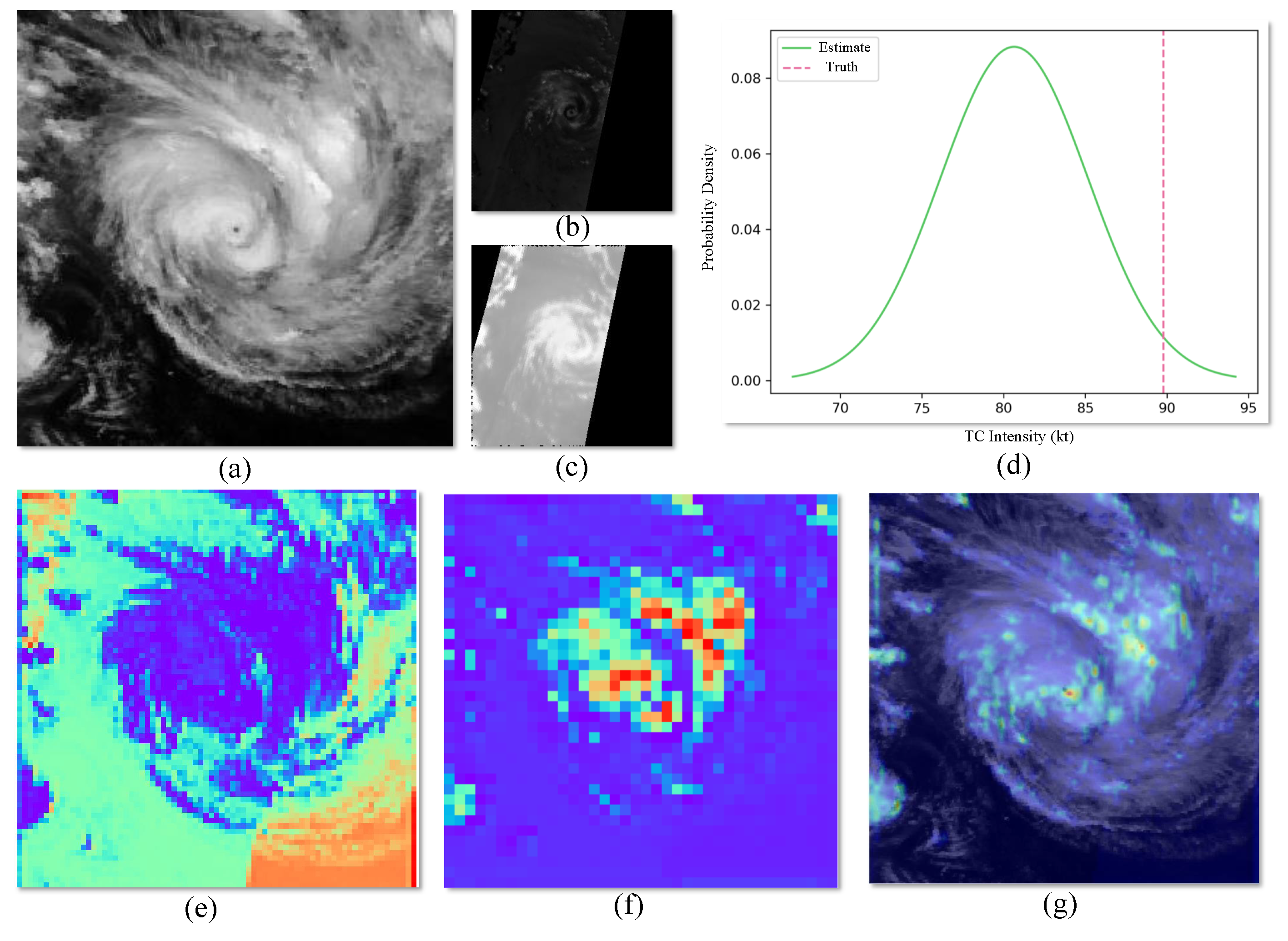

3.4.2. Individual Case Experiment

4. Discussion

4.1. Misestimation Analysis

4.2. Limitations

- The quality and size of the dataset need improvement. The amount of data obtained through pairing is limited, and the paired images exhibit some misalignment. Additionally, the dataset distribution is highly uneven and displays a significant long-tail effect. While data augmentation alleviates this issue to some extent, more data of higher quality and with a more balanced distribution are still required.

- The estimation performance for high-intensity TCs is unsatisfactory. Although the probabilistic estimates enlarge the uncertainty so that the true values still fall within the estimated range, both deterministic and probabilistic estimation could be improved by incorporating more data on, and expert knowledge of, high-intensity TCs.

- Although the probabilistic estimates cover the majority of true intensities, the estimated intervals are relatively wide, leaving room for improvement in practical applications.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Dominguez, C.; Magaña, V. The role of tropical cyclones in precipitation over the tropical and subtropical North America. Front. Earth Sci. 2018, 6, 19.

2. Swain, D. Tropical cyclones and coastal vulnerability: Assessment and mitigation. Geospat. Technol. Land Water Resour. Manag. 2022, 103, 587–621.

3. Velden, C.; Harper, B.; Wells, F.; Beven, J.L.; Zehr, R.; Olander, T.; Mayfield, M.; Guard, C.; Lander, M.; Edson, R.; et al. The Dvorak tropical cyclone intensity estimation technique: A satellite-based method that has endured for over 30 years. Bull. Am. Meteorol. Soc. 2006, 87, 1195–1210.

4. Yang, Y.; Zhang, J.; Bao, Z.; Ao, T.; Wang, G.; Wu, H.; Wang, J. Evaluation of multi-source soil moisture datasets over central and eastern agricultural area of China using in situ monitoring network. Remote Sens. 2021, 13, 1175.

5. Mohammadpour, P.; Viegas, C. Applications of Multi-Source and Multi-Sensor Data Fusion of Remote Sensing for Forest Species Mapping. Adv. Remote Sens. For. Monit. 2022, 255–287.

6. Shao, Z.; Pan, Y.; Diao, C.; Cai, J. Cloud detection in remote sensing images based on multiscale features-convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4062–4076.

7. Panegrossi, G.; D’Adderio, L.P.; Dafis, S.; Rysman, J.F.; Casella, D.; Dietrich, S.; Sanò, P. Warm Core and Deep Convection in Medicanes: A Passive Microwave-Based Investigation. Remote Sens. 2023, 15, 2838.

8. Qiu, S.; Zhao, H.; Jiang, N.; Wang, Z.; Liu, L.; An, Y.; Zhao, H.; Miao, X.; Liu, R.; Fortino, G. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 2022, 80, 241–265.

9. Wang, C.; Zheng, G.; Li, X.; Xu, Q.; Liu, B.; Zhang, J. Tropical cyclone intensity estimation from geostationary satellite imagery using deep convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

10. Dawood, M.; Asif, A.; Minhas, F.u.A.A. Deep-PHURIE: Deep learning based hurricane intensity estimation from infrared satellite imagery. Neural Comput. Appl. 2020, 32, 9009–9017.

11. Wimmers, A.; Velden, C.; Cossuth, J.H. Using deep learning to estimate tropical cyclone intensity from satellite passive microwave imagery. Mon. Weather Rev. 2019, 147, 2261–2282.

12. Heming, J.T.; Prates, F.; Bender, M.A.; Bowyer, R.; Cangialosi, J.; Caroff, P.; Coleman, T.; Doyle, J.D.; Dube, A.; Faure, G.; et al. Review of recent progress in tropical cyclone track forecasting and expression of uncertainties. Trop. Cyclone Res. Rev. 2019, 8, 181–218.

13. Feng, K.; Ouyang, M.; Lin, N. Tropical cyclone-blackout-heatwave compound hazard resilience in a changing climate. Nat. Commun. 2022, 13, 4421.

14. Marks, F.D.; Shay, L.K.; Barnes, G.; Black, P.; Demaria, M.; McCaul, B.; Mounari, J.; Montgomery, M.; Powell, M.; Smith, J.D.; et al. Landfalling tropical cyclones: Forecast problems and associated research opportunities. Bull. Am. Meteorol. Soc. 1998, 79, 305–323.

15. Martin, J.D.; Gray, W.M. Tropical cyclone observation and forecasting with and without aircraft reconnaissance. Weather Forecast. 1993, 8, 519–532.

16. Maral, G.; Bousquet, M.; Sun, Z. Satellite Communications Systems: Systems, Techniques and Technology; John Wiley & Sons: Hoboken, NJ, USA, 2020.

17. Velden, C.; Hawkins, J. Satellite observations of tropical cyclones. In Global Perspectives on Tropical Cyclones: From Science to Mitigation; World Scientific: Singapore, 2010; pp. 201–226.

18. Lee, J.; Im, J.; Cha, D.H.; Park, H.; Sim, S. Tropical cyclone intensity estimation using multi-dimensional convolutional neural networks from geostationary satellite data. Remote Sens. 2019, 12, 108.

19. Clement, M.; Snell, Q.; Walke, P.; Posada, D.; Crandall, K. TCS: Estimating gene genealogies. In Proceedings of the Parallel and Distributed Processing Symposium, International; IEEE Computer Society: Washington, DC, USA, 2002; Volume 2, p. 7.

20. Pradhan, R.; Aygun, R.S.; Maskey, M.; Ramachandran, R.; Cecil, D.J. Tropical cyclone intensity estimation using a deep convolutional neural network. IEEE Trans. Image Process. 2017, 27, 692–702.

21. Jiang, W.; Hu, G.; Wu, T.; Liu, L.; Kim, B.; Xiao, Y.; Duan, Z. DMANet_KF: Tropical Cyclone Intensity Estimation Based on Deep Learning and Kalman Filter From Multi-Spectral Infrared Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4469–4483.

22. Gawlikowski, J.; Tassi, C.R.N.; Ali, M.; Lee, J.; Humt, M.; Feng, J.; Kruspe, A.; Triebel, R.; Jung, P.; Roscher, R.; et al. A survey of uncertainty in deep neural networks. Artif. Intell. Rev. 2023, 56, 1513–1589.

23. Gal, Y.; Ghahramani, Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1050–1059.

24. Yang, J.Y.; Wang, J.D.; Zhang, Y.F.; Cheng, W.J.; Li, L. A heuristic sampling method for maintaining the probability distribution. J. Comput. Sci. Technol. 2021, 36, 896–909.

25. Jiang, S.H.; Liu, X.; Wang, Z.Z.; Li, D.Q.; Huang, J. Efficient sampling of the irregular probability distributions of geotechnical parameters for reliability analysis. Struct. Saf. 2023, 101, 102309.

26. Kamran, M. A probabilistic approach for prediction of drilling rate index using ensemble learning technique. J. Min. Environ. 2021, 12, 327–337.

27. Yang, Y.; Hong, W.; Li, S. Deep ensemble learning based probabilistic load forecasting in smart grids. Energy 2019, 189, 116324.

28. Sun, J.; Wu, S.; Zhang, H.; Zhang, X.; Wang, T. Based on multi-algorithm hybrid method to predict the slope safety factor–stacking ensemble learning with Bayesian optimization. J. Comput. Sci. 2022, 59, 101587.

29. Mercer, A.; Grimes, A. Atlantic tropical cyclone rapid intensification probabilistic forecasts from an ensemble of machine learning methods. Procedia Comput. Sci. 2017, 114, 333–340.

30. Tolwinski-Ward, S.E. Uncertainty quantification for a climatology of the frequency and spatial distribution of North Atlantic tropical cyclone landfalls. J. Adv. Model. Earth Syst. 2015, 7, 305–319.

31. Bonnardot, F.; Quetelard, H.; Jumaux, G.; Leroux, M.D.; Bessafi, M. Probabilistic forecasts of tropical cyclone tracks and intensities in the southwest Indian Ocean basin. Q. J. R. Meteorol. Soc. 2019, 145, 675–686.

32. Mohapatra, M.; Bandyopadhyay, B.; Nayak, D. Evaluation of operational tropical cyclone intensity forecasts over north Indian Ocean issued by India Meteorological Department. Nat. Hazards 2013, 68, 433–451.

33. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; Volume 68, pp. 770–778.

35. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

36. Phillips, J.D. Sources of nonlinearity and complexity in geomorphic systems. Prog. Phys. Geogr. 2003, 27, 1–23.

37. Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? Adv. Neural Inf. Process. Syst. 2017, 30.

38. Zhang, R.; Liu, Q.; Hang, R. Tropical cyclone intensity estimation using two-branch convolutional neural network from infrared and water vapor images. IEEE Trans. Geosci. Remote Sens. 2019, 58, 586–597.

39. Zhang, C.J.; Wang, X.J.; Ma, L.M.; Lu, X.Q. Tropical cyclone intensity classification and estimation using infrared satellite images with deep learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2070–2086.

40. Dietterich, T.G. Ensemble methods in machine learning. In Proceedings of the International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

41. Koenker, R.; Hallock, K.F. Quantile regression. J. Econ. Perspect. 2001, 15, 143–156.

42. Efron, B.; Tibshirani, R.J. An Introduction to the Bootstrap; CRC Press: Boca Raton, FL, USA, 1994.

| Baseline | ViT | IFEM | FFM | MAE↓ (kt) | RMSE↓ (kt) |
|---|---|---|---|---|---|
| ✓ | | | | 8.34 | 10.48 |
| ✓ | ✓ | | | 7.93 | 9.83 |
| ✓ | ✓ | ✓ | | 7.51 | 9.35 |
| ✓ | ✓ | ✓ | ✓ | 7.42 | 9.25 |

| IR | T37 | T85 | MAE↓ (kt) | RMSE↓ (kt) |
|---|---|---|---|---|
| ✓ | | | 7.95 | 9.87 |
| | ✓ | | 12.48 | 14.35 |
| | | ✓ | 10.67 | 12.62 |
| ✓ | ✓ | | 7.84 | 9.74 |
| ✓ | | ✓ | 7.51 | 9.38 |
| ✓ | ✓ | ✓ | 7.42 | 9.25 |

| Category | Range of Wind Speed (kt) | Sample Size | MAE↓ (kt) | RMSE↓ (kt) |
|---|---|---|---|---|
| TD | ≤33.25 | 478 | 6.67 | 8.47 |
| TS | 33.45–47.45 | 332 | 6.72 | 8.58 |
| STS | 47.64–63.32 | 256 | 7.61 | 9.47 |
| TY | 63.52–80.47 | 198 | 8.17 | 10.03 |
| STY | 80.73–98.79 | 157 | 9.05 | 11.14 |
| Super TY | ≥99.13 | 88 | 10.68 | 12.75 |
| Average | | | 7.42 | 9.25 |
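The per-category errors above amount to computing MAE and RMSE within intensity bins. The sketch below assumes that samples are binned by their reference (true) intensity and, because the table's ranges leave small gaps (for example between 33.25 and 33.45 kt), assigns each sample to a half-open interval running from one category's lower bound to the next category's lower bound. The names `CATEGORIES` and `metrics_by_category` are hypothetical.

```python
import numpy as np

# (name, lower bound in kt, upper bound in kt); lower bounds follow the table,
# upper bounds are the next category's lower bound (half-open bins [lo, hi)).
CATEGORIES = [
    ("TD", 0.0, 33.45),
    ("TS", 33.45, 47.64),
    ("STS", 47.64, 63.52),
    ("TY", 63.52, 80.73),
    ("STY", 80.73, 99.13),
    ("Super TY", 99.13, np.inf),
]


def mae_rmse(err):
    """Mean absolute error and root-mean-square error of an error array."""
    return float(np.mean(np.abs(err))), float(np.sqrt(np.mean(err ** 2)))


def metrics_by_category(y_true, y_pred):
    """Return {category: (sample size, MAE, RMSE)} plus an overall 'Average' row."""
    y_true = np.asarray(y_true, dtype=float)
    err = np.asarray(y_pred, dtype=float) - y_true
    rows = {}
    for name, lo, hi in CATEGORIES:
        mask = (y_true >= lo) & (y_true < hi)
        if mask.any():  # skip categories with no samples
            rows[name] = (int(mask.sum()), *mae_rmse(err[mask]))
    rows["Average"] = (len(err), *mae_rmse(err))
    return rows
```

Computed this way, the overall MAE equals the sample-weighted mean of the per-category MAEs, whereas the overall RMSE is the square root of the sample-weighted mean of the squared per-category RMSEs.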

| Models | Data | MAE↓ (kt) | RMSE↓ (kt) | References |
|---|---|---|---|---|
| DeepMicroNet | MINT | - | 10.60 | [11] |
| TCIENet | IR, WV | 7.84 | 9.98 | [38] |
| Deep-PHURIE | IR | 7.96 | 8.94 | [10] |
| TCICENet | IR | 6.67 | 8.60 | [39] |
| DMANet_KF | IR1, IR2, IR3, IR4 | 6.19 | 7.82 | [21] |
| MTCIE | IR, T37, T85 | 7.42 | 9.25 | Ours |

| Models | MAE↓ (kt) | RMSE↓ (kt) | CRPS↓ | PICP↑ | MWP↓ |
|---|---|---|---|---|---|
| MC Dropout | 9.74 | 11.39 | 2.18 | 0.487 | 0.235 |
| DE | 8.81 | 10.60 | 3.43 | 0.916 | 0.781 |
| QR | 11.35 | 13.26 | - | 0.258 | 0.574 |
| Bootstrap | 7.83 | 9.87 | 1.76 | 0.445 | 0.139 |
| Ours | 7.42 | 9.25 | 2.45 | 0.958 | 0.925 |
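The probabilistic columns of the table above (CRPS, PICP, and MWP) can be computed as sketched below. Their exact definitions are not given in this section, so the sketch rests on assumptions: each prediction is treated as a Gaussian with mean `mu` and standard deviation `sigma`, which admits a closed-form CRPS; the prediction interval is the central interval `mu ± 1.96 sigma` (about 95% for a Gaussian); PICP is the fraction of true intensities that fall inside their intervals; and MWP is taken to be the interval width divided by the true intensity, averaged over samples. All function names are hypothetical.

```python
import math

import numpy as np

# Element-wise error function without a SciPy dependency.
_erf = np.vectorize(math.erf, otypes=[float])


def gaussian_crps(y, mu, sigma):
    """Mean closed-form CRPS of N(mu, sigma^2) forecasts against observations y."""
    y, mu, sigma = (np.asarray(a, dtype=float) for a in (y, mu, sigma))
    z = (y - mu) / sigma
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))           # standard normal CDF
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)  # standard normal PDF
    crps = sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))
    return float(np.mean(crps))


def central_interval(mu, sigma, z=1.96):
    """Central prediction interval; z = 1.96 gives ~95% coverage for a Gaussian."""
    mu, sigma = np.asarray(mu, dtype=float), np.asarray(sigma, dtype=float)
    return mu - z * sigma, mu + z * sigma


def picp(y, lower, upper):
    """Prediction interval coverage probability (higher is better)."""
    y = np.asarray(y, dtype=float)
    return float(np.mean((y >= lower) & (y <= upper)))


def mwp(y, lower, upper):
    """Assumed definition: mean of interval width / |true intensity| (lower is better)."""
    y = np.asarray(y, dtype=float)
    return float(np.mean((upper - lower) / np.abs(y)))
```

PICP alone can be raised simply by widening the intervals, so it is read together with MWP; CRPS summarizes both calibration and sharpness in a single score.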

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, T.; Yang, K.; Li, X.; Peng, S.; Meng, F. Probabilistic Estimation of Tropical Cyclone Intensity Based on Multi-Source Satellite Remote Sensing Images. Remote Sens. 2024, 16, 606. https://doi.org/10.3390/rs16040606
