A Multi-Level SAR-Guided Contextual Attention Network for Satellite Images Cloud Removal

Abstract

1. Introduction

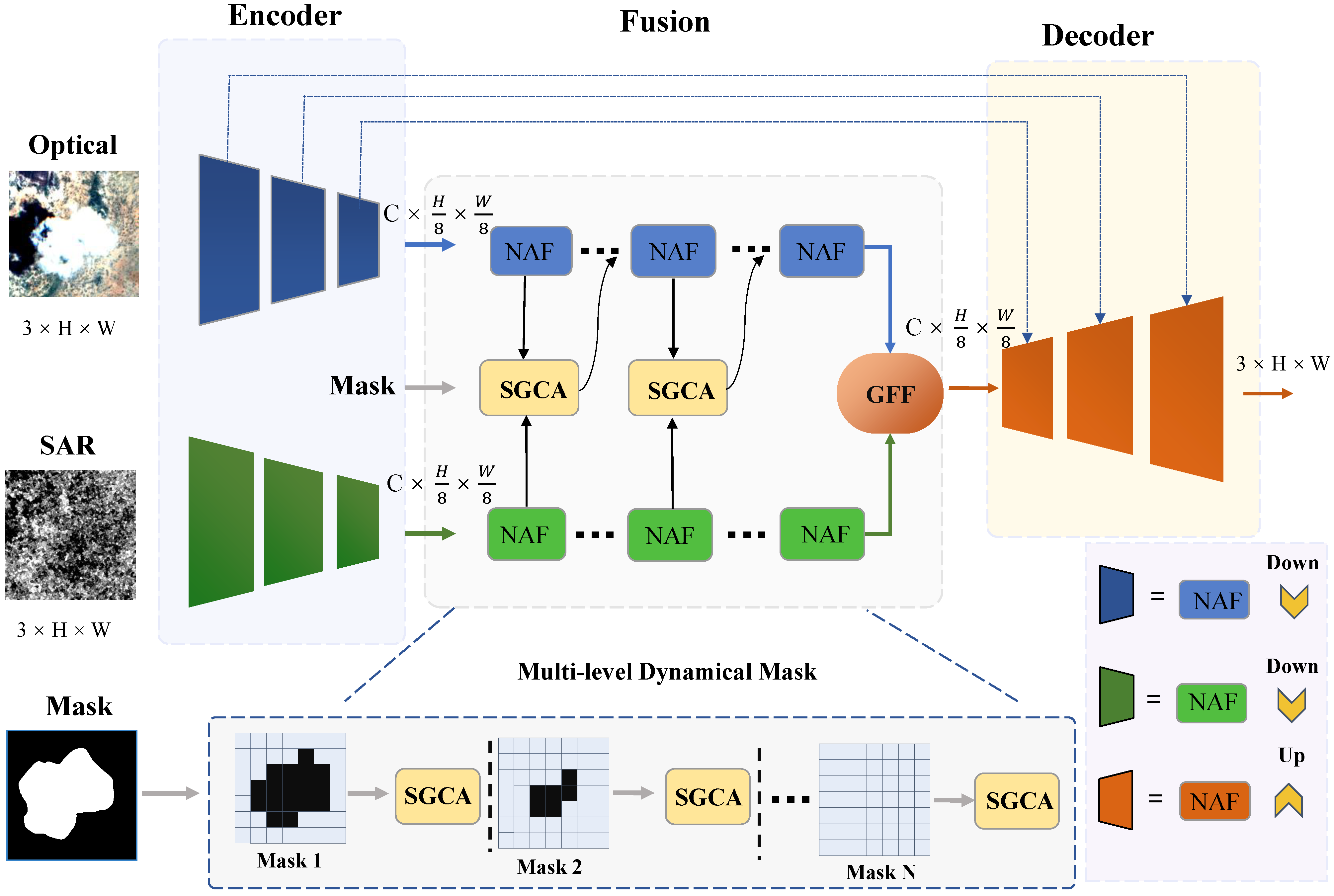

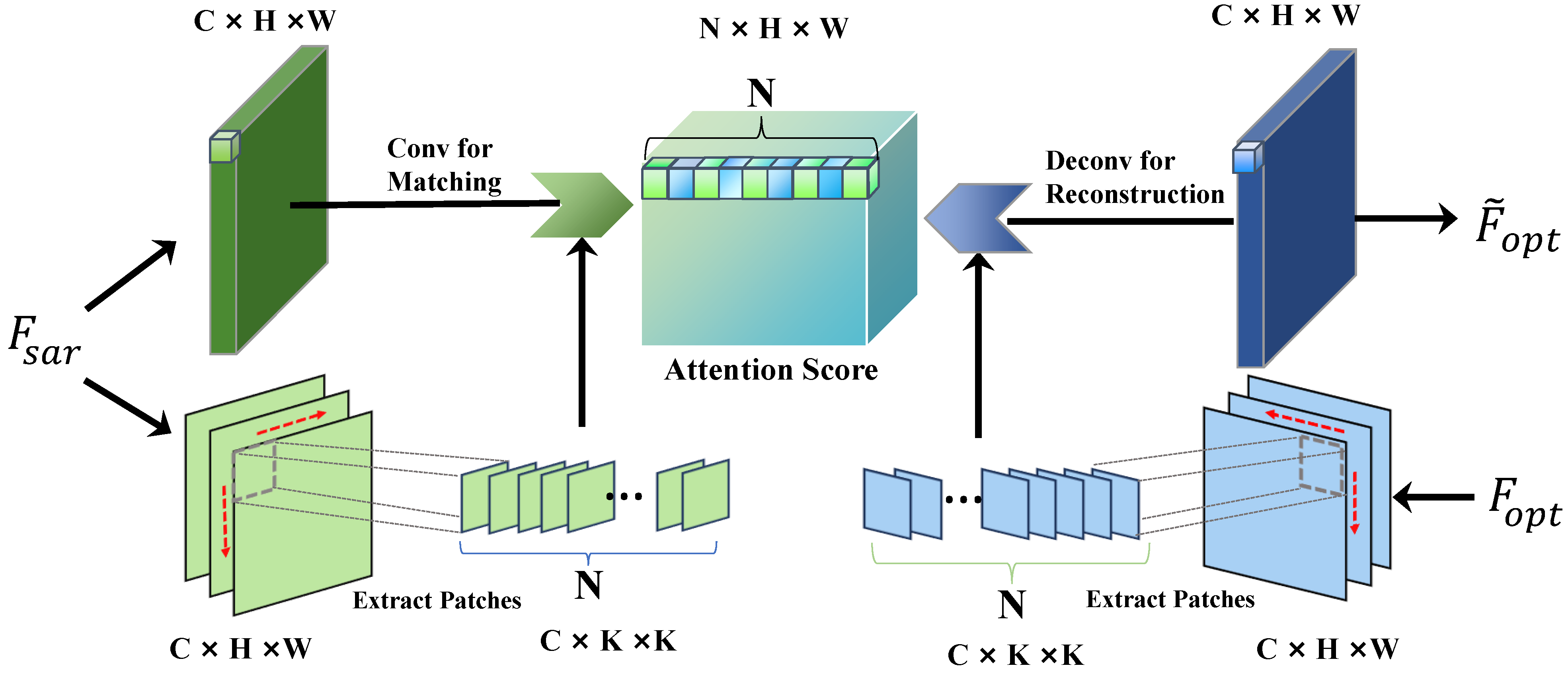

- To exploit the reliable global contextual information carried by SAR features, a SAR-Guided Contextual Attention (SGCA) module is designed. The module uses SAR features to guide the modeling of global interactions between contexts, so that restored regions stay consistent with the remaining cloud-free regions.

- Furthermore, to prevent interference from cloud-covered and cloud-shadow regions during cloud removal and to restore large cloudy regions gradually, we adopt a Multi-Level Dynamical Mask (MDM) strategy.

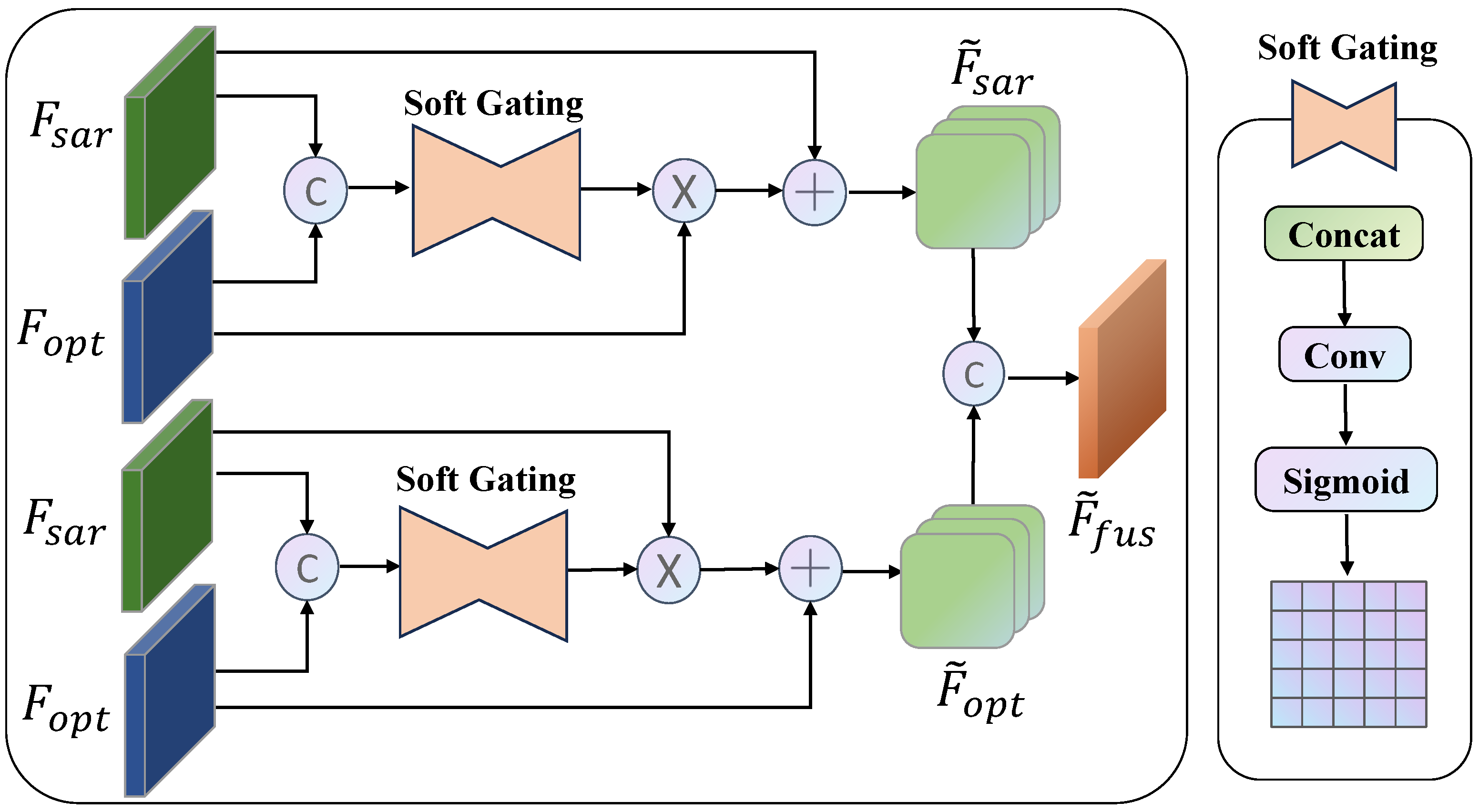

- Finally, we introduce the Gated Feature Fusion (GFF) module to transfer complementary information between the two modalities, so that the SAR and optical features enhance each other.
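The three components listed above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The flattened `(N, C)` feature layout, the cosine-similarity scoring, the 4-neighbour mask growth rule, the gate weights `w_s` and `w_o`, and every function name are assumptions introduced here purely for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sar_guided_attention(sar, opt, valid, scale=10.0):
    """Fill cloudy optical features using similarities measured on SAR features.

    sar, opt: (N, C) features at N spatial positions (hypothetical layout).
    valid:    (N,) bool, True where the optical position is cloud-free.
              At least one position must be valid.
    """
    # Assumption: similarity is scored on SAR only, since SAR is not occluded by clouds.
    s = sar / (np.linalg.norm(sar, axis=1, keepdims=True) + 1e-8)
    scores = scale * (s @ s.T)                           # (N, N) cosine similarities
    scores = np.where(valid[None, :], scores, -np.inf)   # cloudy keys cannot contribute
    attn = softmax(scores, axis=1)
    filled = attn @ opt                                  # weighted sum of cloud-free features
    # Cloud-free positions keep their own optical features.
    return np.where(valid[:, None], opt, filled)


def grow_mask(valid2d):
    """One level of mask update (assumed rule): a position becomes valid
    if it or any of its 4 neighbours is already valid."""
    p = np.pad(valid2d, 1, constant_values=False)
    return (p[1:-1, 1:-1] | p[:-2, 1:-1] | p[2:, 1:-1]
            | p[1:-1, :-2] | p[1:-1, 2:])


def gated_fusion(sar, opt, w_s, w_o):
    """Two-way gated exchange; w_s and w_o are hypothetical (2C, C) learned weights."""
    joint = np.concatenate([sar, opt], axis=1)
    g_s = sigmoid(joint @ w_s)   # how much SAR information flows into the optical features
    g_o = sigmoid(joint @ w_o)   # how much optical information flows into the SAR features
    return sar + g_o * opt, opt + g_s * sar


# Usage on random toy features.
rng = np.random.default_rng(0)
sar_feat = rng.normal(size=(6, 4))
opt_feat = rng.normal(size=(6, 4))
cloud_free = np.array([True, True, False, True, False, True])

restored = sar_guided_attention(sar_feat, opt_feat, cloud_free)
sar_out, opt_out = gated_fusion(sar_feat, restored,
                                np.zeros((8, 4)), np.zeros((8, 4)))
```

In this sketch the masking happens on the key side of the attention, which keeps cloudy positions from influencing the result, and repeated calls to `grow_mask` shrink the cloudy region level by level. Whether the paper's modules follow these exact rules is not stated in this section.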

2. Related Works

2.1. SAR-Based Multi-Source Cloud Removal

2.2. Attention-Based Method

3. Method

3.1. Overall Pipeline

3.2. SAR-Guided Contextual Attention

3.3. Multi-Level Dynamical Mask

3.4. Gated Feature Fusion

3.5. Loss Functions

4. Experiments

4.1. Datasets and Metrics

4.2. Implementation Details

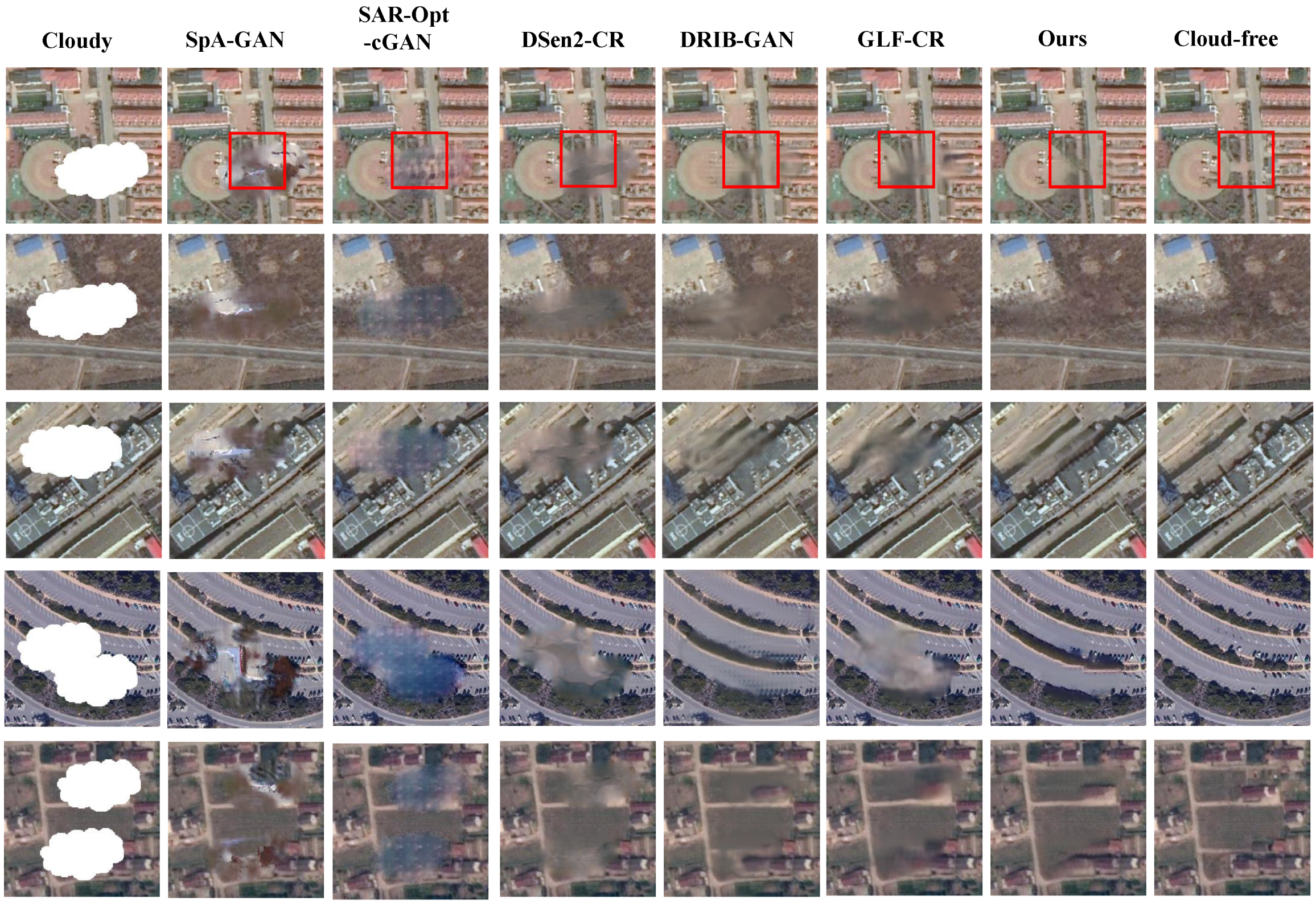

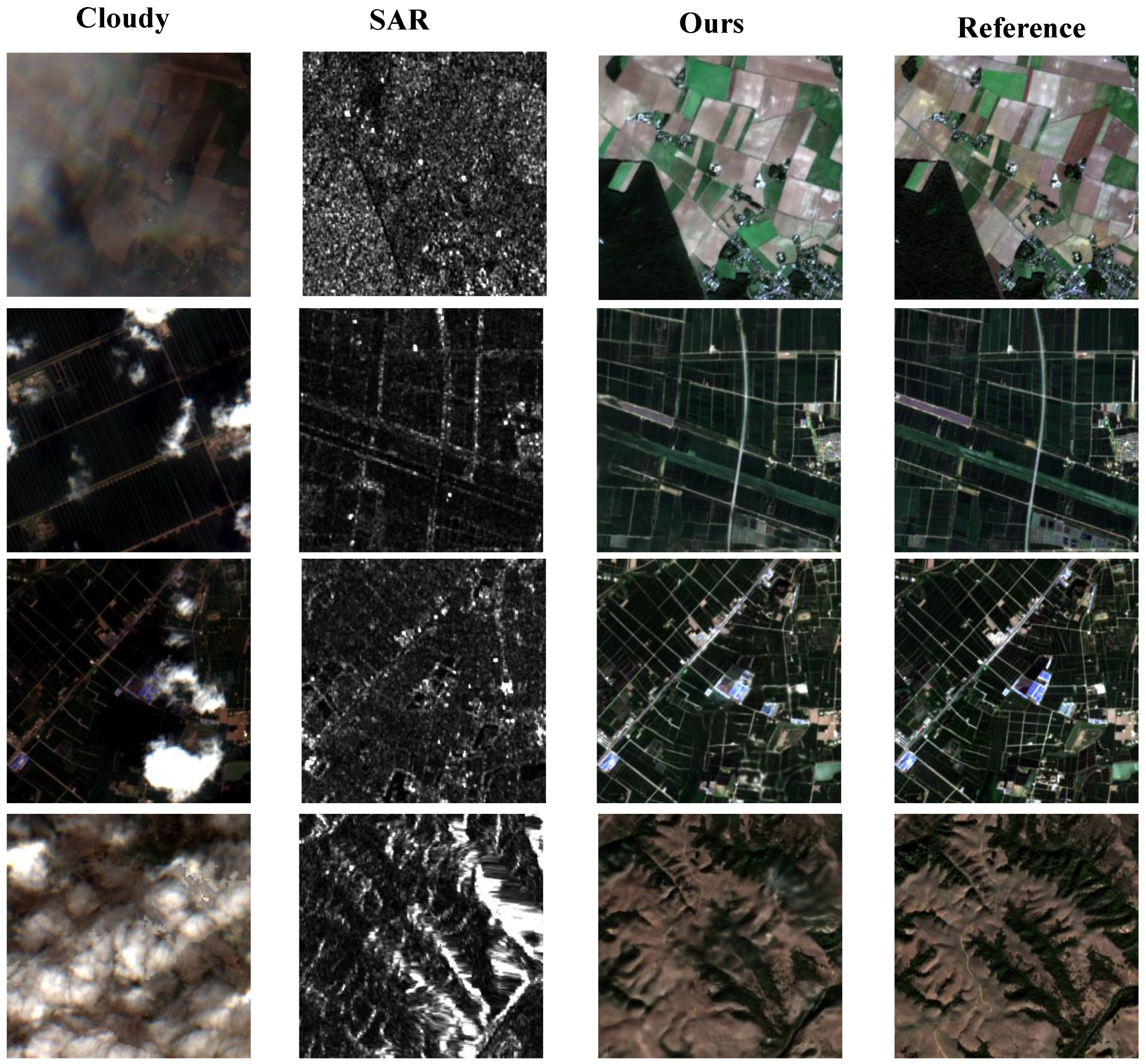

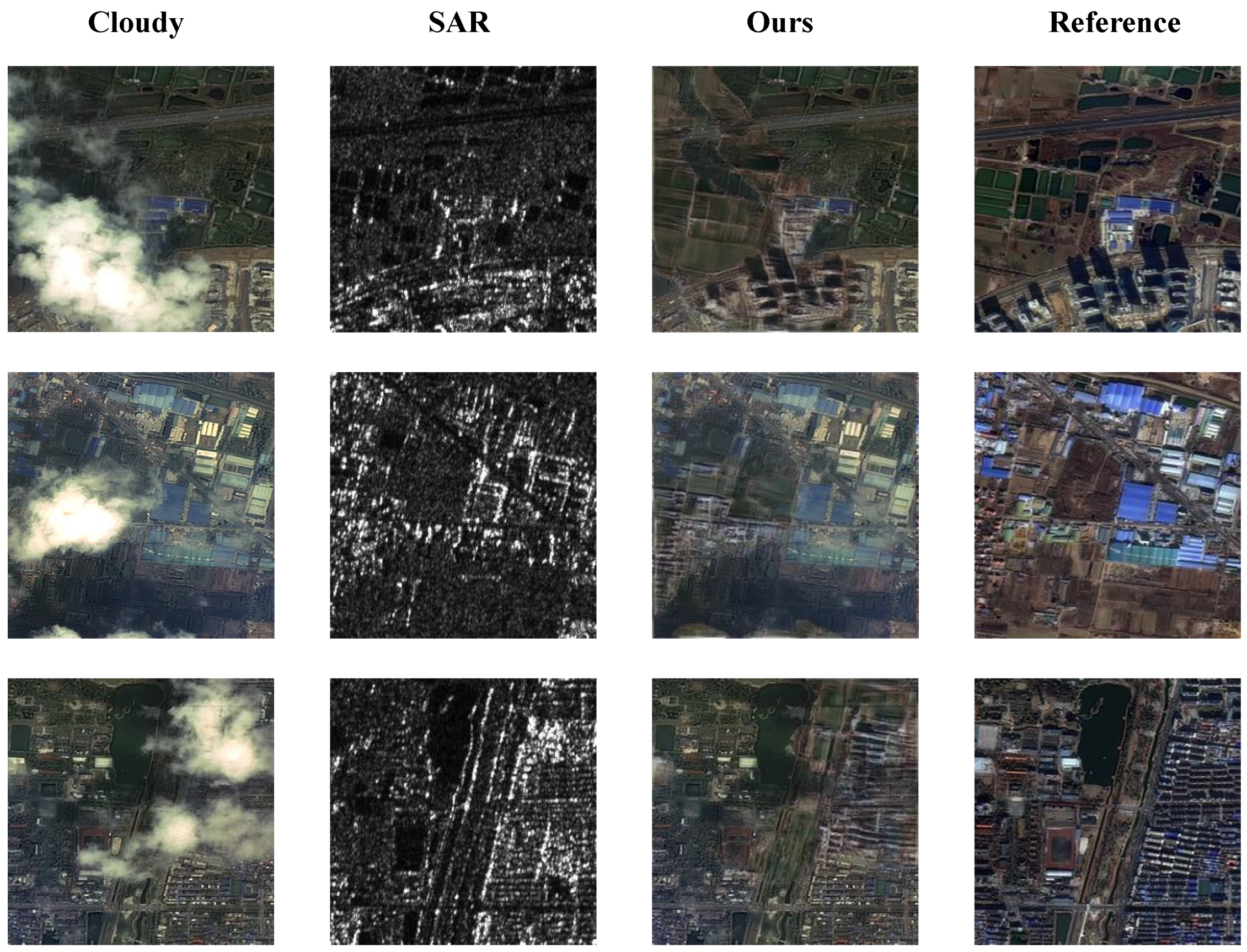

4.3. Comparisons with State-of-the-Art Methods

5. Discussion

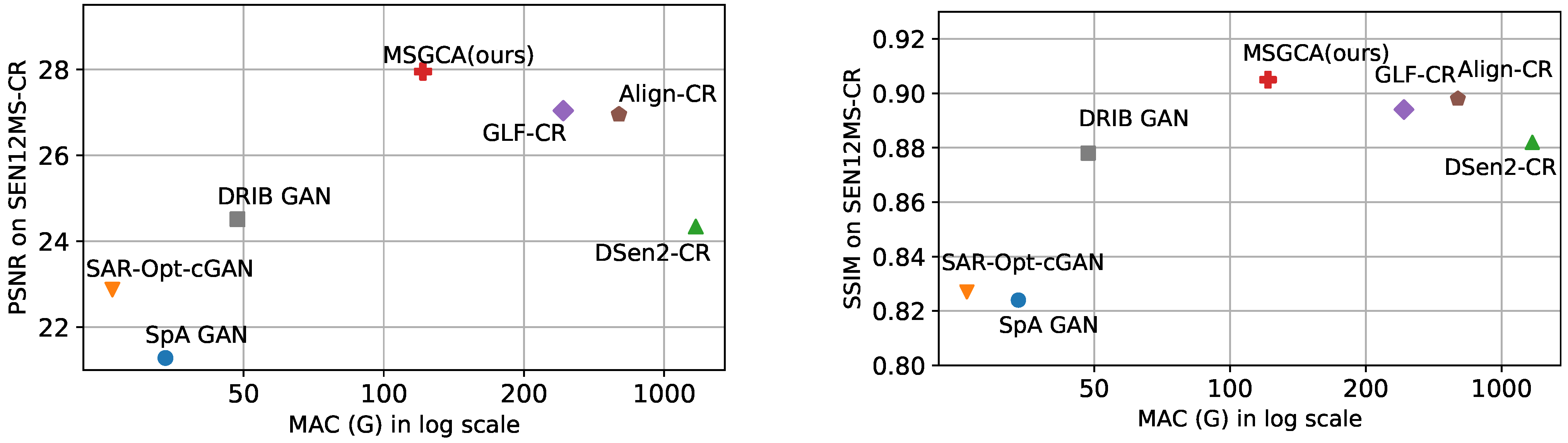

5.1. Analysis of Computational Cost

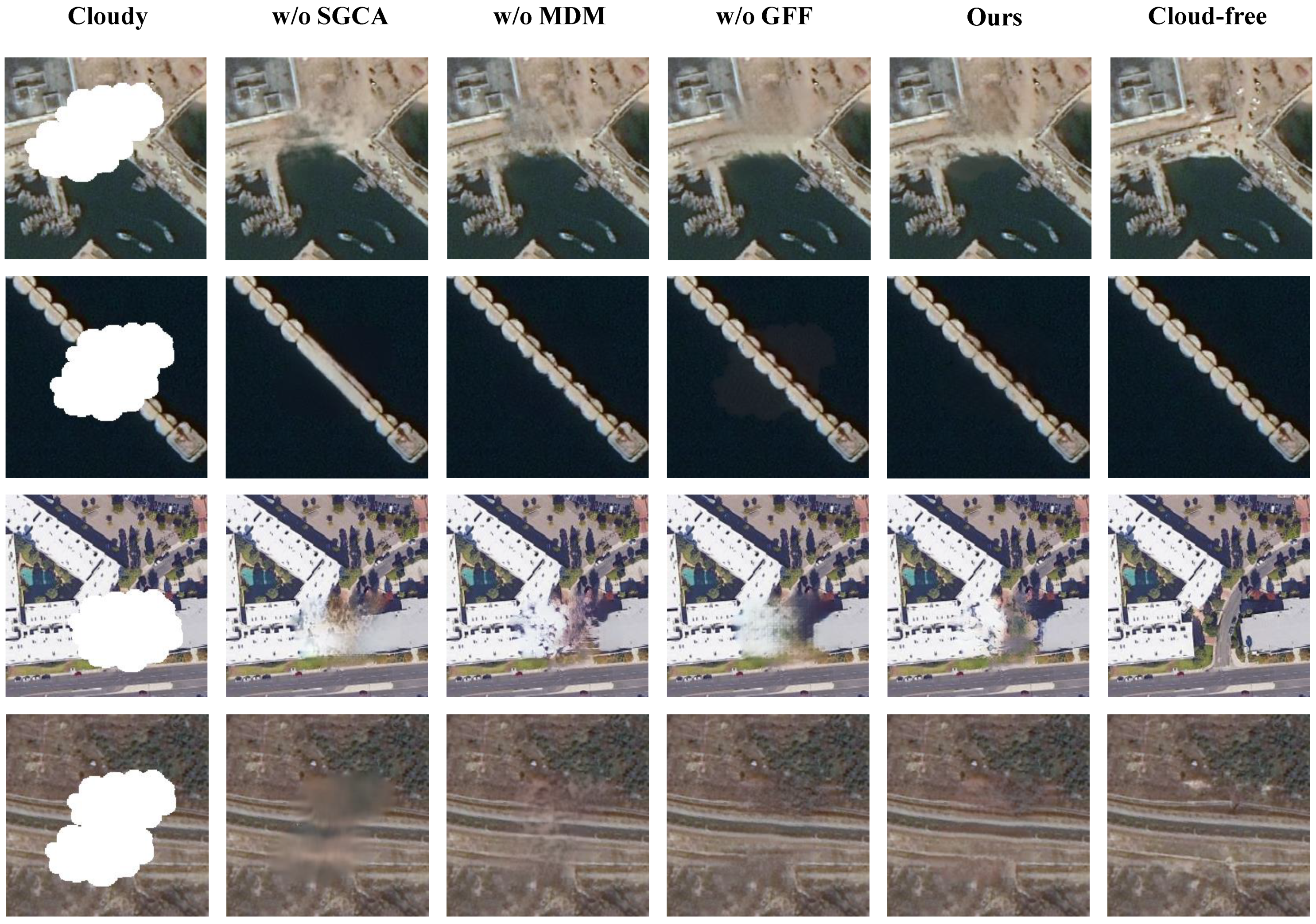

5.2. Ablation Study

5.3. Limitations and Shortcomings

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lin, Z.; Leng, B. SSN: Scale Selection Network for Multi-Scale Object Detection in Remote Sensing Images. Remote Sens. 2024, 16, 3697.

2. Mo, N.; Zhu, R. Semi-Supervised Subcategory Centroid Alignment-Based Scene Classification for High-Resolution Remote Sensing Images. Remote Sens. 2024, 16, 3728.

3. Liu, G.; Li, C.; Zhang, S.; Yuan, Y. VL-MFL: UAV Visual Localization Based on Multi-Source Image Feature Learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5618612.

4. Shen, J.; Huo, C.; Xiang, S. Siamese InternImage for Change Detection. Remote Sens. 2024, 16, 3642.

5. King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and temporal distribution of clouds observed by MODIS onboard the Terra and Aqua satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852.

6. Shen, H.; Li, X.; Cheng, Q.; Zeng, C.; Yang, G.; Li, H.; Zhang, L. Missing information reconstruction of remote sensing data: A technical review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 61–85.

7. Li, W.; Li, Y.; Chan, J.C.W. Thick Cloud Removal with Optical and SAR Imagery via Convolutional-Mapping-Deconvolutional Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2865–2879.

8. Zhang, C.; Li, W.; Travis, D. Gaps-fill of SLC-off Landsat ETM+ satellite image using a geostatistical approach. Int. J. Remote Sens. 2007, 28, 5103–5122.

9. Maalouf, A.; Carré, P.; Augereau, B.; Fernandez-Maloigne, C. A bandelet-based inpainting technique for clouds removal from remotely sensed images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2363–2371.

10. Shen, H.; Zhang, L. A MAP-based algorithm for destriping and inpainting of remotely sensed images. IEEE Trans. Geosci. Remote Sens. 2008, 47, 1492–1502.

11. Criminisi, A.; Pérez, P.; Toyama, K. Region filling and object removal by exemplar-based image inpainting. IEEE Trans. Image Process. 2004, 13, 1200–1212.

12. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

13. Xu, M.; Jia, X.; Pickering, M.; Jia, S. Thin cloud removal from optical remote sensing images using the noise-adjusted principal components transform. ISPRS J. Photogramm. Remote Sens. 2019, 149, 215–225.

14. Lv, H.; Wang, Y.; Shen, Y. An empirical and radiative transfer model based algorithm to remove thin clouds in visible bands. Remote Sens. Environ. 2016, 179, 183–195.

15. Enomoto, K.; Sakurada, K.; Wang, W.; Fukui, H.; Matsuoka, M.; Nakamura, R.; Kawaguchi, N. Filmy cloud removal on satellite imagery with multispectral conditional generative adversarial nets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 48–56.

16. Li, J.; Wu, Z.; Hu, Z.; Zhang, J.; Li, M.; Mo, L.; Molinier, M. Thin cloud removal in optical remote sensing images based on generative adversarial networks and physical model of cloud distortion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 373–389.

17. Lin, C.H.; Tsai, P.H.; Lai, K.H.; Chen, J.Y. Cloud removal from multitemporal satellite images using information cloning. IEEE Trans. Geosci. Remote Sens. 2012, 51, 232–241.

18. Chen, J.; Jönsson, P.; Tamura, M.; Gu, Z.; Matsushita, B.; Eklundh, L. A simple method for reconstructing a high-quality NDVI time-series data set based on the Savitzky–Golay filter. Remote Sens. Environ. 2004, 91, 332–344.

19. Li, X.; Shen, H.; Zhang, L.; Zhang, H.; Yuan, Q.; Yang, G. Recovering quantitative remote sensing products contaminated by thick clouds and shadows using multitemporal dictionary learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7086–7098.

20. Bamler, R. Principles of synthetic aperture radar. Surv. Geophys. 2000, 21, 147–157.

21. Meraner, A.; Ebel, P.; Zhu, X.X.; Schmitt, M. Cloud Removal in Sentinel-2 Imagery Using a Deep Residual Neural Network and SAR-optical Data Fusion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 333–346.

22. Zeng, C.; Shen, H.; Zhang, L. Recovering missing pixels for Landsat ETM+ SLC-off imagery using multi-temporal regression analysis and a regularization method. Remote Sens. Environ. 2013, 131, 182–194.

23. Xu, F.; Shi, Y.; Ebel, P.; Yang, W.; Zhu, X.X. Multimodal and Multiresolution Data Fusion for High-Resolution Cloud Removal: A Novel Baseline and Benchmark. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

24. Zou, X.; Li, K.; Xing, J.; Zhang, Y.; Wang, S.; Jin, L.; Tao, P. DiffCR: A Fast Conditional Diffusion Framework for Cloud Removal From Optical Satellite Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

25. Grohnfeldt, C.; Schmitt, M.; Zhu, X. A Conditional Generative Adversarial Network to Fuse Sar and Multispectral Optical Data for Cloud Removal from Sentinel-2 Images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, IEEE, Valencia, Spain, 22–27 July 2018; pp. 1726–1729.

26. Gao, J.; Yuan, Q.; Li, J.; Zhang, H.; Su, X. Cloud Removal with Fusion of High Resolution Optical and SAR Images Using Generative Adversarial Networks. Remote Sens. 2020, 12, 191.

27. Darbaghshahi, F.N.; Mohammadi, M.R.; Soryani, M. Cloud Removal in Remote Sensing Images Using Generative Adversarial Networks and SAR-to-Optical Image Translation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–9.

28. Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Generative Image Inpainting with Contextual Attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5505–5514.

29. Zeng, Y.; Lin, Z.; Lu, H.; Patel, V.M. CR-Fill: Generative Image Inpainting with Auxiliary Contextual Reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 14164–14173.

30. Yi, Z.; Tang, Q.; Azizi, S.; Jang, D.; Xu, Z. Contextual Residual Aggregation for Ultra High-Resolution Image Inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7508–7517.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

32. Xu, F.; Shi, Y.; Ebel, P.; Yu, L.; Xia, G.S.; Yang, W.; Zhu, X.X. GLF-CR: SAR-enhanced cloud removal with global–local fusion. ISPRS J. Photogramm. Remote Sens. 2022, 192, 268–278.

33. Han, S.; Wang, J.; Zhang, S. Former-CR: A Transformer-Based Thick Cloud Removal Method with Optical and SAR Imagery. Remote Sens. 2023, 15, 1196.

34. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

35. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

36. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

37. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166.

38. Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. Rs-mamba for large remote sensing image dense prediction. arXiv 2024, arXiv:2404.02668.

39. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the Computer Vision–ECCV, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2022; pp. 17–33.

40. Li, W.; Lin, Z.; Zhou, K.; Qi, L.; Wang, Y.; Jia, J. MAT: Mask-Aware Transformer for Large Hole Image Inpainting. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10748–10758.

41. Guo, X.; Yang, H.; Huang, D. Image Inpainting via Conditional Texture and Structure Dual Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 14134–14143.

42. Huang, M.; Xu, Y.; Qian, L.; Shi, W.; Zhang, Y.; Bao, W.; Wang, N.; Liu, X.; Xiang, X. The QXS-SAROPT Dataset for Deep Learning in SAR-Optical Data Fusion. arXiv 2021, arXiv:2103.08259.

43. Pan, H. Cloud Removal for Remote Sensing Imagery via Spatial Attention Generative Adversarial Network. arXiv 2020, arXiv:2009.13015.

44. Xu, F.; Shi, Y.; Ebel, P.; Yang, W.; Zhu, X.X. High-resolution cloud removal with multi-modal and multi-resolution data fusion: A new baseline and benchmark. arXiv 2023, arXiv:2301.03432.

45. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

| Dataset | Mode | Data Source | Band | Patch Size | Resolution Rg. × Az. (m) | Train/Val Number |
|---|---|---|---|---|---|---|
| SEN12MS-CR | Optical | Sentinel-2 | 13 bands | 256 | 1 × 1 | 101,615/8623 |
| | SAR | Sentinel-1 | VV, VH | | | |
| QXS-SAROPT | Optical | Google Earth | RGB | 256 | 1 × 1 | 16,000/4000 |
| | SAR | GaoFen-3 | single | | | |
| Flood-Zhengzhou | Optical | Gaofen-1 | RGB | 512 | 1 × 1 | 1865/467 |
| | SAR | Gaofen-3 | HH | | | |

| Method | Params | MAC | QXS-SAROPT PSNR | QXS-SAROPT SSIM | QXS-SAROPT MAE | SEN12MS-CR PSNR | SEN12MS-CR SSIM | SEN12MS-CR MAE |
|---|---|---|---|---|---|---|---|---|
| SpA GAN (2020) [43] | 0.42 | 33.96 | 21.28 | 0.824 | 0.0355 | 24.86 | 0.753 | 0.0444 |
| SAR-Opt-cGAN (2018) [25] | 170.0 | 26.12 | 22.87 | 0.827 | 0.0323 | 25.29 | 0.759 | 0.0441 |
| DSen2-CR (2020) [21] | 18.9 | 1238.0 | 24.34 | 0.882 | 0.0245 | 27.37 | 0.870 | 0.0319 |
| DRIB GAN (2022) [27] | 37.05 | 42.69 | 24.51 | 0.878 | 0.0286 | - | - | - |
| GLF-CR (2022) [32] | 14.65 | 243.0 | 27.04 | 0.894 | 0.0171 | 29.07 | 0.885 | 0.0266 |
| Align-CR (2023) [44] | 43.51 | 447.7 | 26.95 | 0.898 | 0.0161 | - | - | - |
| MSGCA-Net (Ours) | 63.3 | 121.4 | 27.95 | 0.905 | 0.0153 | 29.2 | 0.905 | 0.0215 |
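For readers unfamiliar with the PSNR and MAE columns, the following sketch shows the standard definitions of the two metrics. It assumes images scaled to the range [0, 1] (`data_range=1.0`). The normalization actually used for the reported numbers is not given in this section, and the function names are illustrative. SSIM is omitted because it involves a windowed computation that does not fit a short example.

```python
import numpy as np


def mae(pred, target):
    """Mean absolute error between two images of the same shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(pred - target)))


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


# Toy example: a prediction that is off by 0.1 at every pixel.
reference = np.zeros((4, 4))
prediction = np.full((4, 4), 0.1)
example_mae = mae(prediction, reference)
example_psnr = psnr(prediction, reference)
```

Higher PSNR and lower MAE both indicate a reconstruction closer to the cloud-free reference.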

| Method | PSNR | SSIM | MAE |
|---|---|---|---|
| w/o SGCA | 25.96 | 0.880 | 0.0178 |
| w/o MDM | 27.11 | 0.890 | 0.0168 |
| w/o GFF | 27.42 | 0.898 | 0.0166 |
| Ours | 27.95 | 0.905 | 0.0163 |
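The relative contribution of each module can be read off the ablation table by subtracting each variant's PSNR from that of the full model. The short script below does only this arithmetic on the values copied from the table. The dictionary and function names are illustrative.

```python
# PSNR values (dB) copied from the ablation table above.
ablation_psnr = {
    "w/o SGCA": 25.96,
    "w/o MDM": 27.11,
    "w/o GFF": 27.42,
    "Ours": 27.95,
}


def psnr_drop(results, full="Ours"):
    """PSNR lost (in dB) when each module is removed from the full model."""
    return {
        name: round(results[full] - value, 2)
        for name, value in results.items()
        if name != full
    }


drops = psnr_drop(ablation_psnr)
# drops == {"w/o SGCA": 1.99, "w/o MDM": 0.84, "w/o GFF": 0.53}
largest = max(drops, key=drops.get)  # "w/o SGCA"
```

By this measure, removing SGCA costs the most PSNR (1.99 dB), followed by MDM (0.84 dB) and GFF (0.53 dB).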

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, G.; Qiu, J.; Yuan, Y. A Multi-Level SAR-Guided Contextual Attention Network for Satellite Images Cloud Removal. Remote Sens. 2024, 16, 4767. https://doi.org/10.3390/rs16244767
