Estimation of Urban Tree Chlorophyll Content and Leaf Area Index Using Sentinel-2 Images and 3D Radiative Transfer Model Inversion

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Sites

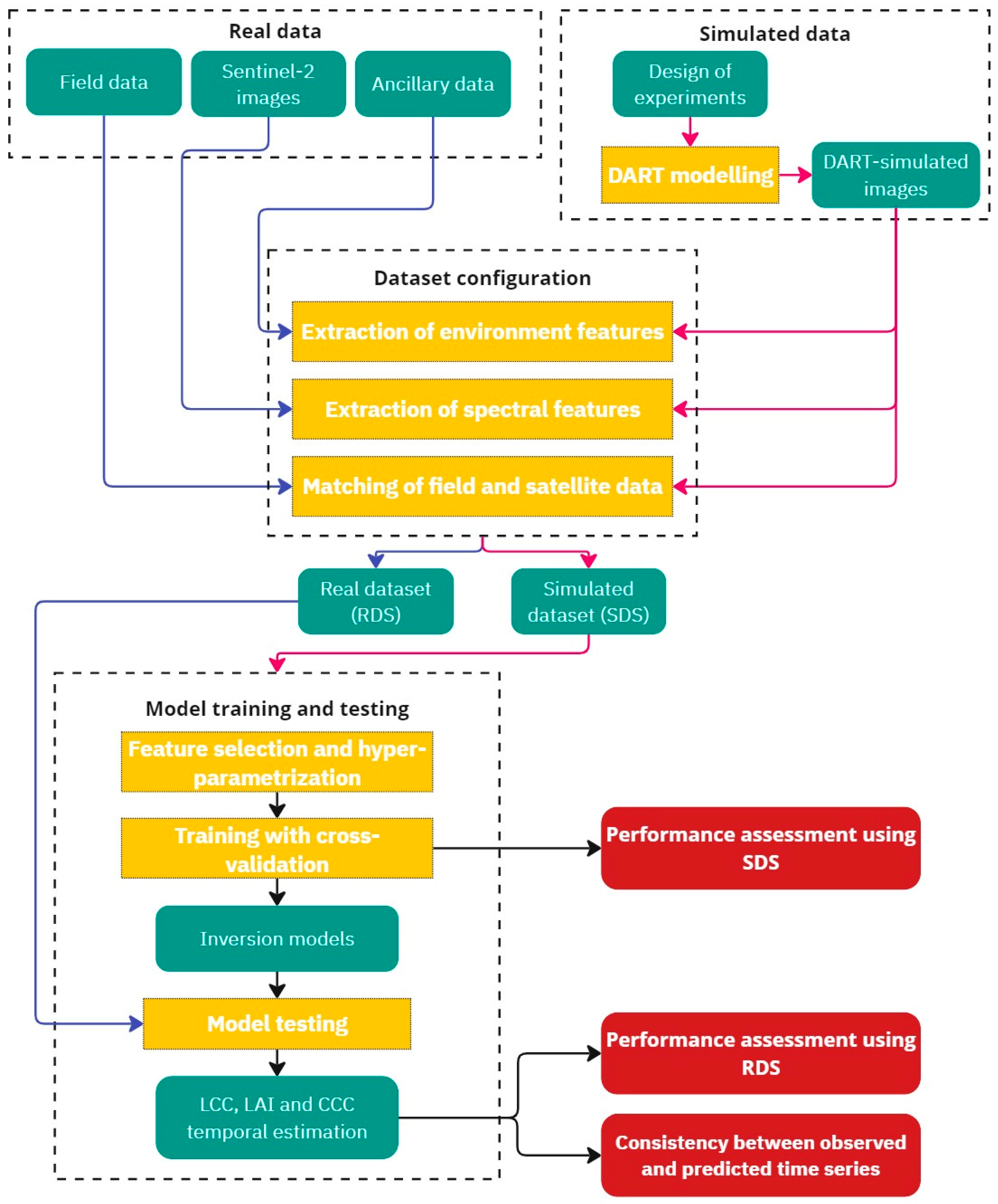

2.2. Methodological Framework

2.3. Real Data

2.3.1. Field Data

- LCC was measured using a Dualex leaf-clip (FORCE A, Orsay, France). Two leaves were collected in each cardinal direction on the edge of the crown and as high up as possible using a lopper. Two Dualex measurements were taken per leaf. Mean LCC per tree, which equaled the mean of all 16 Dualex readings on a given date, was calculated for the eight dates. We used the Dualex device rather than the widely used SPAD and CCM-200 chlorophyll meters, as it responds linearly to increasing chlorophyll content rather than non-linearly. An equation developed for dicot species was used to retrieve LCC from the Dualex reading [49]:

- LAD was measured using a canopy analyzer (LAI-2200, LiCor, Lincoln, NE, USA). The measurement protocol was adapted according to the user manual and the protocol of Wei et al. [50].

| Field Date | Sentinel-2 Date | Image Lag Time (Days) | LCC: AC | LCC: FR | LCC: PL | LCC: QR | LAD: AC | LAD: FR | LAD: PL | LAD: QR |
|---|---|---|---|---|---|---|---|---|---|---|
| 27 April 2021 | 23 April 2021 | −4 | A | A | A | A | P | A | P | A |
| 11 May 2021 | 6 May 2021 | −5 | P | P | P | P | P | P | P | P |
| 2 June 2021 | 31 May 2021 | −2 | P | P | P | P | P | P | P | P |
| 23 June 2021 | 15 June 2021 | −8 | P | P | P | P | P | P | P | P |
| 21 July 2021 | 20 July 2021 | −1 | P | P | P | P | P | P | P | P |
| 17 August 2021 | 14 August 2021 | −3 | P | P | P | P | P | P | P | P |
| 1 September 2021 | 5 September 2021 | 4 | P | P | P | P | P | P | P | P |
| 20 September 2021 | 13 September 2021 | −7 | P | P | P | P | A | P | P | P |
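The image lag time in the table above can be reproduced as the signed difference between the Sentinel-2 acquisition date and the field date. The following is a minimal sketch; the function name `image_lag_days` and the `CAMPAIGNS` list are illustrative and not taken from the study's code.

```python
from datetime import date

# (field date, Sentinel-2 acquisition date) pairs copied from the table above.
CAMPAIGNS = [
    (date(2021, 4, 27), date(2021, 4, 23)),
    (date(2021, 5, 11), date(2021, 5, 6)),
    (date(2021, 6, 2), date(2021, 5, 31)),
    (date(2021, 6, 23), date(2021, 6, 15)),
    (date(2021, 7, 21), date(2021, 7, 20)),
    (date(2021, 8, 17), date(2021, 8, 14)),
    (date(2021, 9, 1), date(2021, 9, 5)),
    (date(2021, 9, 20), date(2021, 9, 13)),
]


def image_lag_days(field_date, s2_date):
    """Signed lag in days; negative when the image precedes the field visit."""
    return (s2_date - field_date).days


lags = [image_lag_days(field, s2) for field, s2 in CAMPAIGNS]
print(lags)  # [-4, -5, -2, -8, -1, -3, 4, -7]
```

With this sign convention, the largest absolute lag between a field visit and its matched image is 8 days (23 June 2021).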

2.3.2. Sentinel-2 Data

2.3.3. Ancillary Data

2.4. Simulated Data

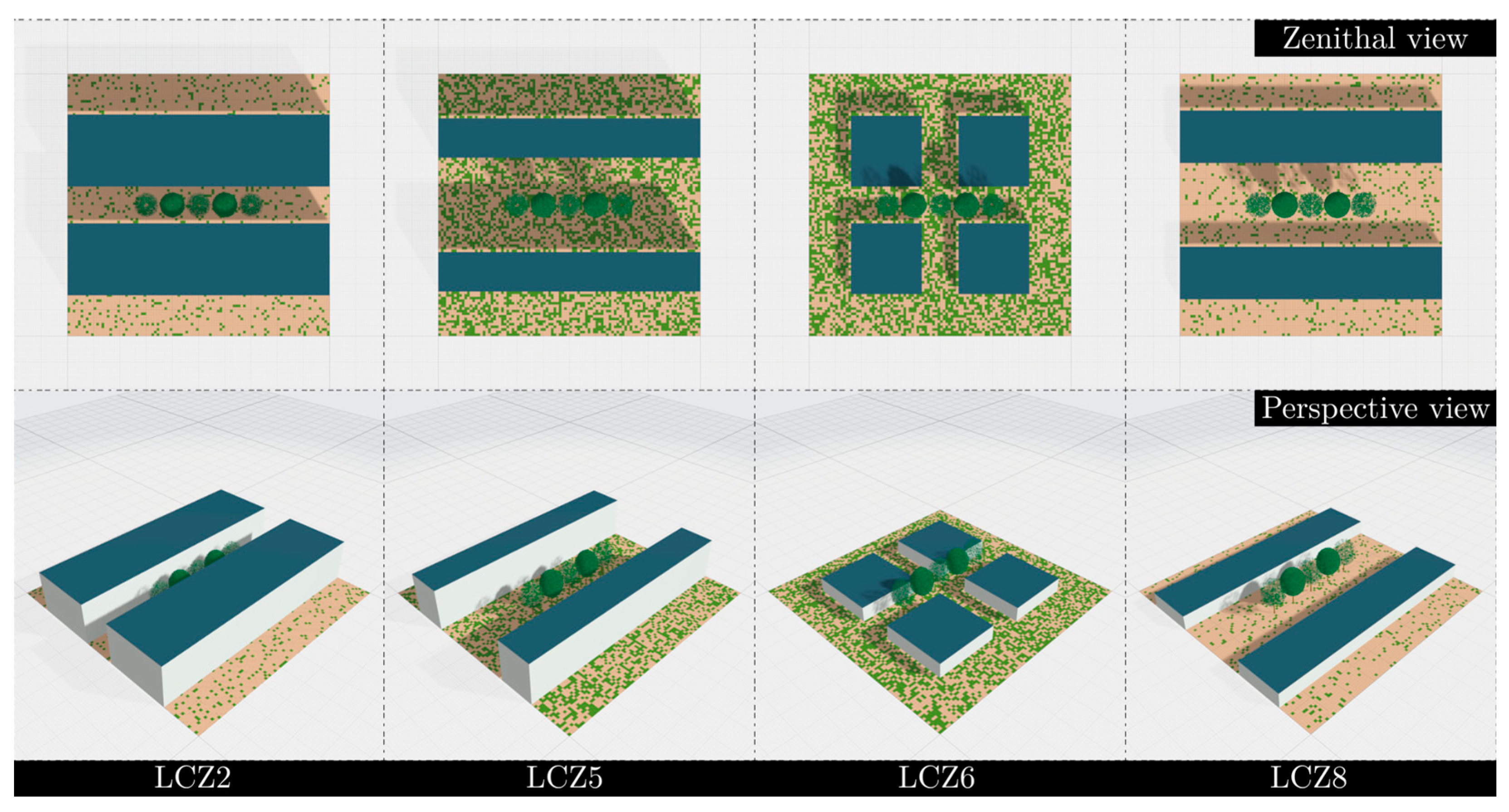

2.4.1. 3D Urban Scene Modelling

2.4.2. DART Parametrization and Simulation

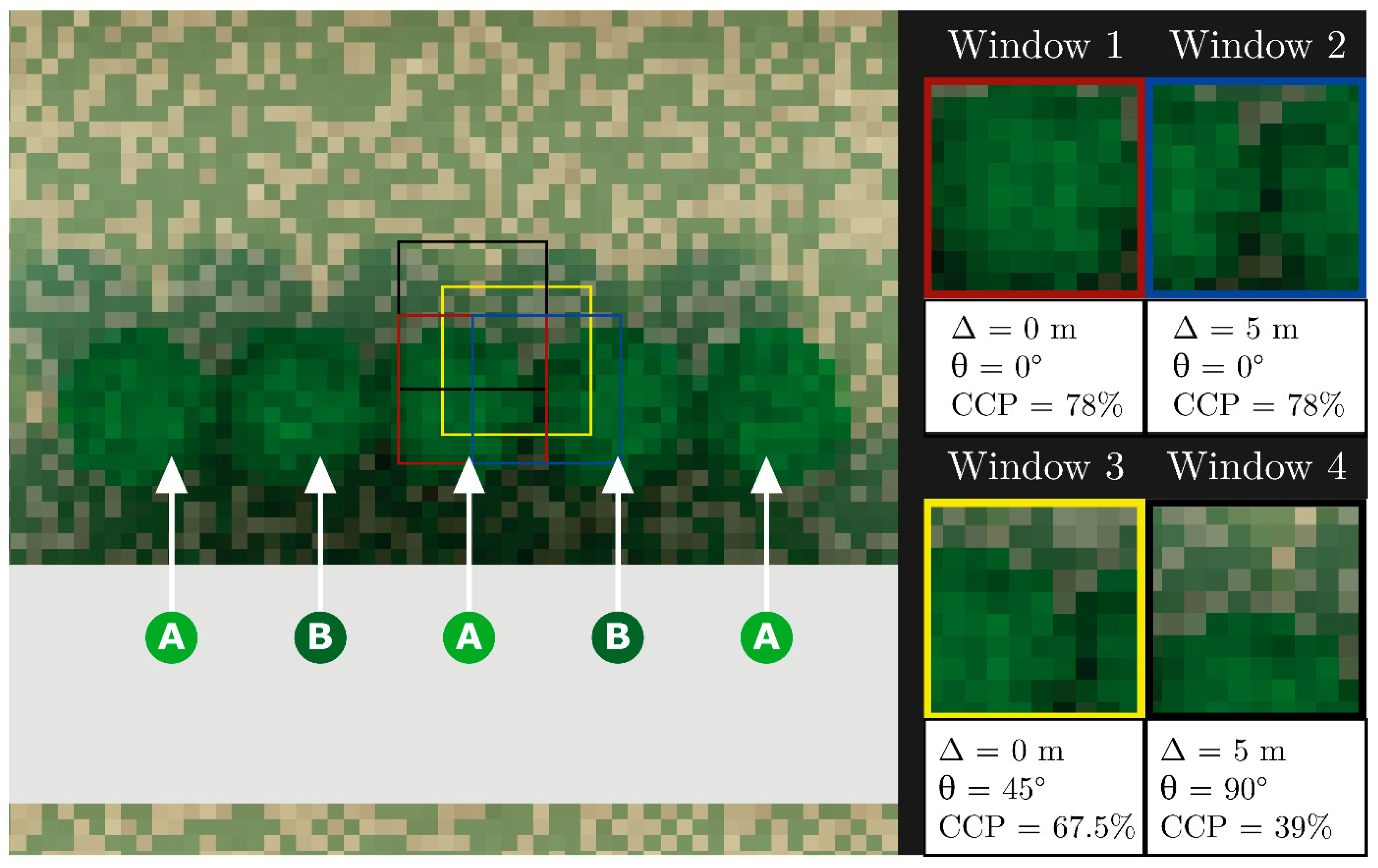

2.4.3. Spatial Window Extraction

2.5. Dataset Configuration

2.5.1. Environmental Feature Extraction

- Green proportion (Pgreen): the percentage of the pixel covered by underlying vegetation, which strongly influences reflectance when the tree canopy does not cover the entire pixel and/or the tree has low LAI;

- Shadow proportion (Pshadow): the percentage of the pixel covered by shadow, which also strongly influences reflectance, particularly in the near-infrared S2 band B08 as the solar angle decreases;

- Canopy cover pixel (CCP): the percentage of the pixel covered by tree canopy, which indicates the percentage of pixel purity.

- Pgreen was calculated at the pixel scale by intersecting the S2 grid and the grass-extent layer;

- Pshadow was calculated using a raster layer of potential direct incoming solar radiation (kWh/m²) at 1 m resolution, derived from the DSM using the Potential Incoming Solar Radiation algorithm (Terrain Analysis; Lighting, Visibility) in SAGA software v7.8.2 [60]. Pixels with > 0 kWh/m² were classified as non-shadow (0), while those with 0 kWh/m² were classified as shadow (1). This binary layer was then aggregated to the S2 grid resolution (10 m);

- For CCP calculation, the S2 grid and the tree-crown extent were spatially intersected. Finally, CCP was calculated at the pixel scale based on this spatial intersection.
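The Pshadow procedure described above (thresholding a 1 m radiation raster, then aggregating to the 10 m S2 grid) can be sketched as follows. This is an illustrative reimplementation in plain Python, not the SAGA workflow itself; the function names and the toy raster are hypothetical, and coding shadow as 1 is an assumption made here so that the block mean gives a shadow percentage.

```python
def shadow_mask(radiation):
    """Classify each 1 m cell: 1 = shadow (0 kWh/m2), 0 = non-shadow (> 0 kWh/m2)."""
    return [[1 if value == 0 else 0 for value in row] for row in radiation]


def aggregate_percentage(mask, block=10):
    """Percentage of flagged cells inside each block x block window."""
    n_rows, n_cols = len(mask), len(mask[0])
    if n_rows % block or n_cols % block:
        raise ValueError("raster size must be a multiple of the block size")
    result = []
    for r0 in range(0, n_rows, block):
        out_row = []
        for c0 in range(0, n_cols, block):
            flagged = sum(
                mask[r][c]
                for r in range(r0, r0 + block)
                for c in range(c0, c0 + block)
            )
            out_row.append(100.0 * flagged / (block * block))
        result.append(out_row)
    return result


# Toy 10 m x 20 m raster: in the left S2 pixel, 3 of 10 columns are shaded;
# the right S2 pixel is fully lit.
radiation = [[0.0] * 3 + [1.2] * 17 for _ in range(10)]
p_shadow = aggregate_percentage(shadow_mask(radiation))
print(p_shadow)  # [[30.0, 0.0]]
```

If the grass-extent and tree-crown layers were rasterized to the same 1 m grid, the same block aggregation would approximate Pgreen and CCP, although the text describes a vector intersection for those two features.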

2.5.2. Spectral Feature Extraction

2.5.3. Spatial Allocation for Field-Satellite Data Matching

2.6. Machine Learning Regression Algorithms: Building Strategy and Training

2.7. Validation

- Coefficient of determination (R²): The coefficient of determination measures the proportion of the variance in the dependent variable that is explained by the regression model. It ranges from 0 to 1, where 1 indicates a perfect fit of the model to the observed data.

- Root mean squared error (RMSE): RMSE is a measure of model accuracy that calculates the square root of the mean of the squares of the differences between predictions and observations, thus indicating the mean deviation between them.

- Symmetric mean absolute percentage error (SMAPE): SMAPE equals the percentage difference between predictions and observations while accounting for their scales. In this study, it provided an intuitive interpretation and allowed model performances for the three vegetation traits to be compared.

- Bias: Bias equals the mean difference between predictions and observations, which indicates a model’s tendency to overestimate or underestimate the dependent variable.

- Bias standard deviation (BSD): The standard deviation of the bias measures the distribution of prediction errors around the mean bias, which indicates the variability in differences between predictions and observations.
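The five metrics listed above can be computed as in the sketch below. Several choices are assumptions rather than details confirmed by the text: R² is taken as the squared Pearson correlation (which stays within 0 to 1), SMAPE uses the mean of the absolute observed and predicted values as the denominator, and BSD uses the sample standard deviation (n − 1). The function name and the example values are hypothetical.

```python
import math


def validation_metrics(obs, pred):
    """Return R2, RMSE, SMAPE (%), bias and bias standard deviation."""
    n = len(obs)
    errors = [p - o for o, p in zip(obs, pred)]
    mean_obs = sum(obs) / n
    mean_pred = sum(pred) / n

    # Squared Pearson correlation between observations and predictions.
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(obs, pred))
    ss_obs = sum((o - mean_obs) ** 2 for o in obs)
    ss_pred = sum((p - mean_pred) ** 2 for p in pred)
    r2 = (cov / math.sqrt(ss_obs * ss_pred)) ** 2

    rmse = math.sqrt(sum(e * e for e in errors) / n)
    smape = 100.0 / n * sum(
        abs(p - o) / ((abs(o) + abs(p)) / 2) for o, p in zip(obs, pred)
    )
    bias = sum(errors) / n
    bsd = math.sqrt(sum((e - bias) ** 2 for e in errors) / (n - 1))
    return {"r2": r2, "rmse": rmse, "smape": smape, "bias": bias, "bsd": bsd}


# Hypothetical observed and predicted trait values.
observed = [30.0, 35.0, 40.0, 45.0]
predicted = [32.0, 34.0, 43.0, 45.0]
metrics = validation_metrics(observed, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because SMAPE is expressed as a percentage, it can be compared across traits with different units, which is the role the text assigns to it.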

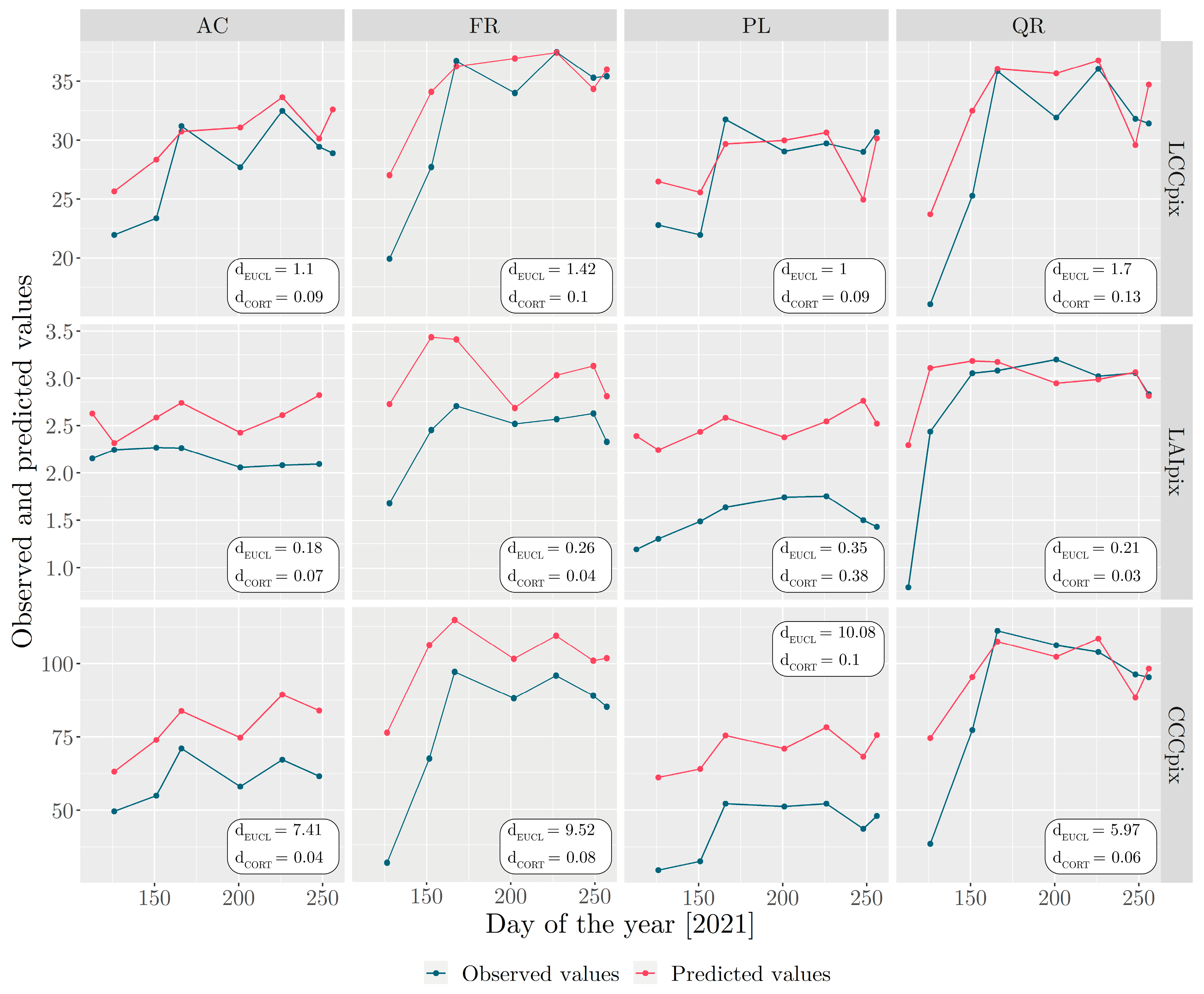

- dEUCL, based on the Euclidean distance between values observed at the same points in time

- dCOR, based on Pearson correlation between the two series [86]

- dCORT, which combines a conventional measure of proximity between observed values with the temporal correlation between the two series, so that both the values and their dynamic behaviour are compared [87].
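The three dissimilarities can be sketched in a few lines. The formulas below follow the definitions commonly associated with these measures (dCOR as sqrt(2(1 − ρ)), and dCORT as the Euclidean distance weighted by 2 / (1 + exp(k · CORT))); treating these as the exact variants used in the study, and the tuning value k = 2, are assumptions. The function names and series values are illustrative only.

```python
import math


def d_eucl(x, y):
    """Euclidean distance between values observed at the same time points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def d_cor(x, y):
    """Correlation-based dissimilarity; clamped to avoid tiny negative values."""
    return math.sqrt(max(0.0, 2.0 * (1.0 - pearson(x, y))))


def cort(x, y):
    """Temporal correlation: cosine similarity of first-order differences."""
    dx = [b - a for a, b in zip(x, x[1:])]
    dy = [b - a for a, b in zip(y, y[1:])]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx)) * math.sqrt(sum(b * b for b in dy))
    return num / den


def d_cort(x, y, k=2.0):
    """Euclidean distance modulated by temporal correlation (k is assumed)."""
    return 2.0 / (1.0 + math.exp(k * cort(x, y))) * d_eucl(x, y)


# Hypothetical observed and estimated seasonal series for one tree.
observed = [20.0, 35.0, 42.0, 40.0, 28.0]
estimated = [22.0, 33.0, 45.0, 41.0, 30.0]
print(d_eucl(observed, estimated), d_cor(observed, estimated), d_cort(observed, estimated))
```

When two series rise and fall together, CORT approaches 1 and the weighting shrinks the Euclidean distance, so dCORT rewards agreement in temporal behaviour as well as in values.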

3. Results

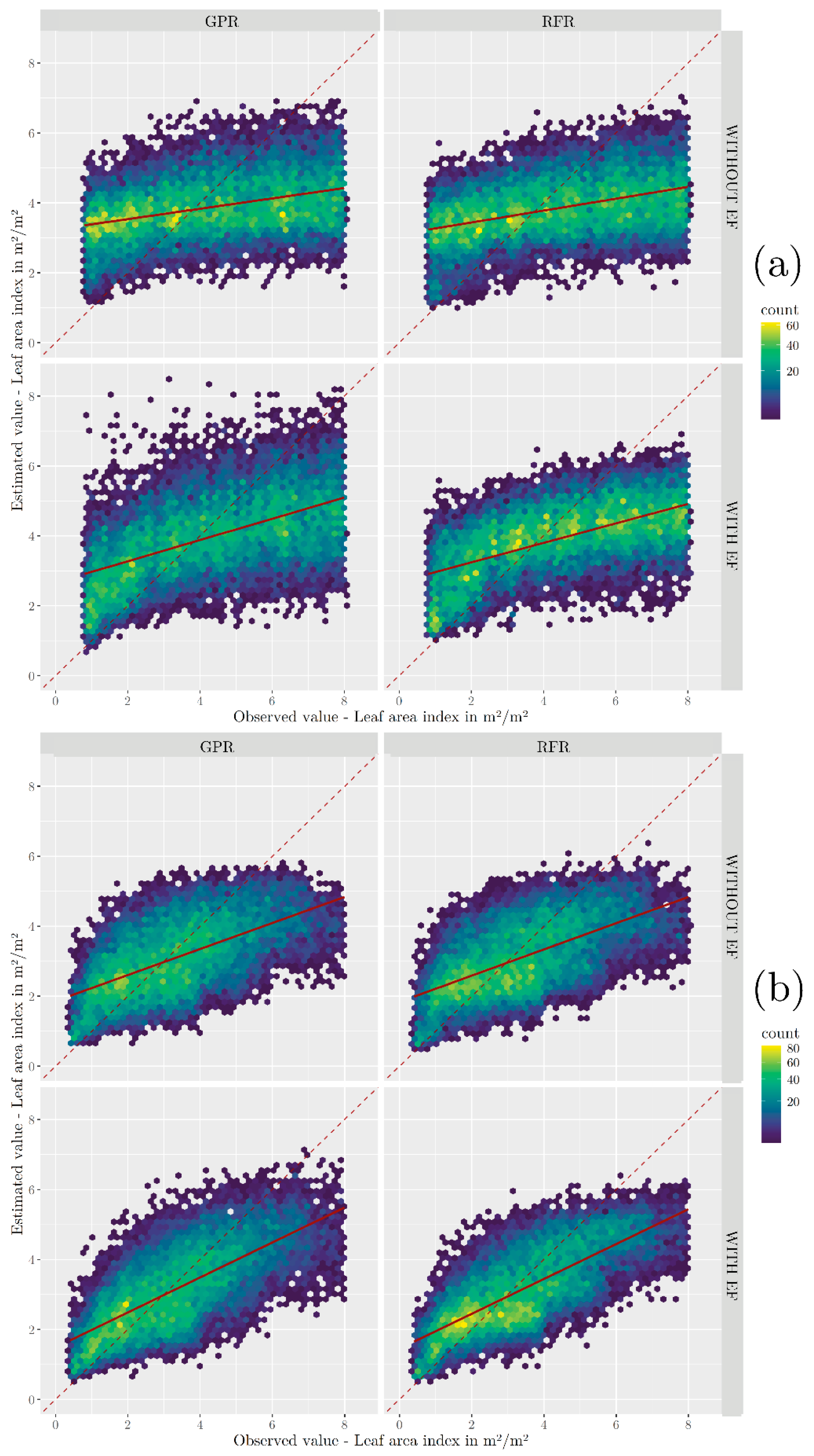

3.1. Accuracy of LCC, LAI, and CCC Estimation Using the Simulated Dataset

3.2. Accuracy of LCC, LAI, and CCC Estimation Using the Real Dataset

3.2.1. Overall Accuracy Assessment and Consistency between Cross-Validation and Validation Using the Real Dataset

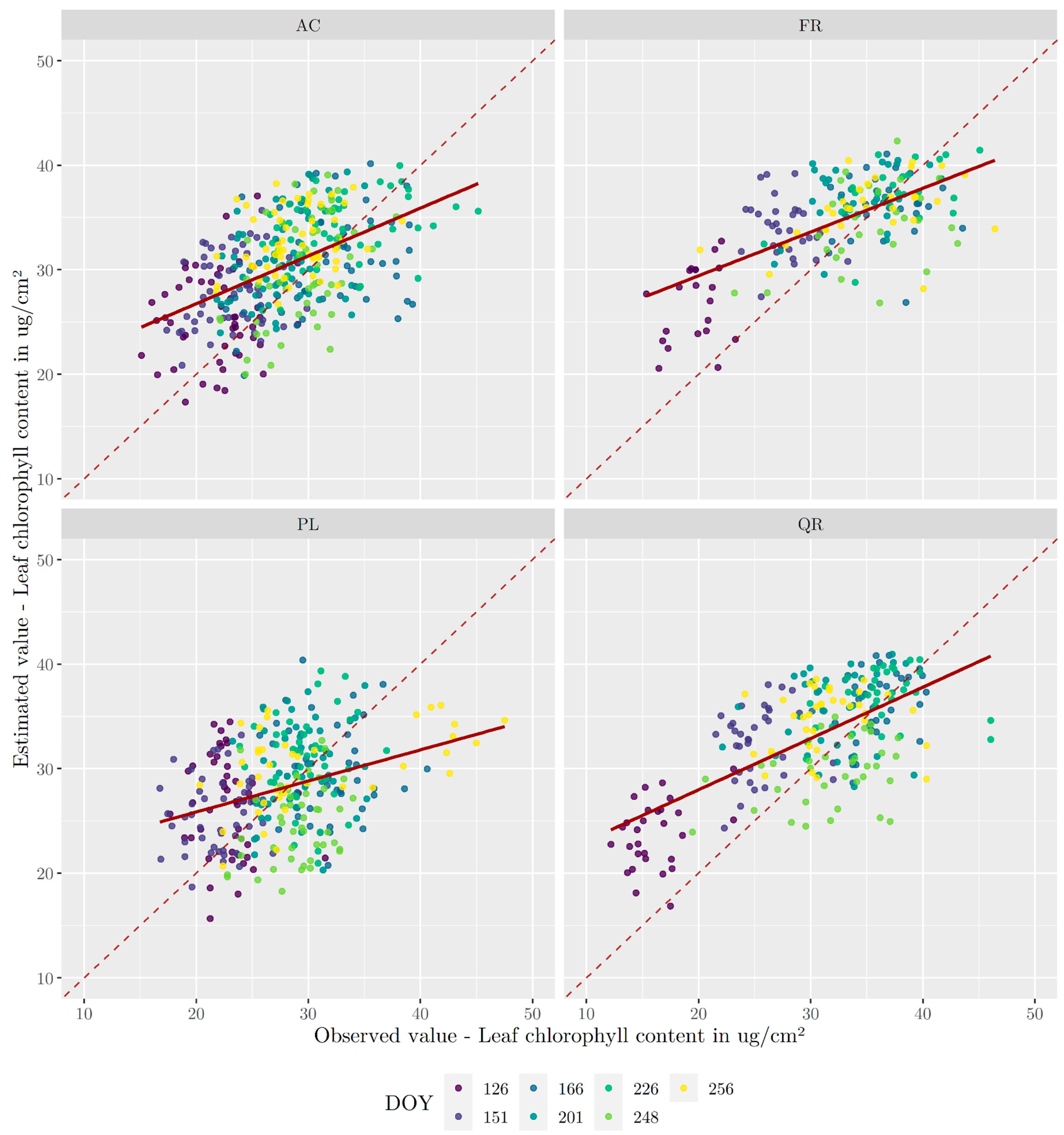

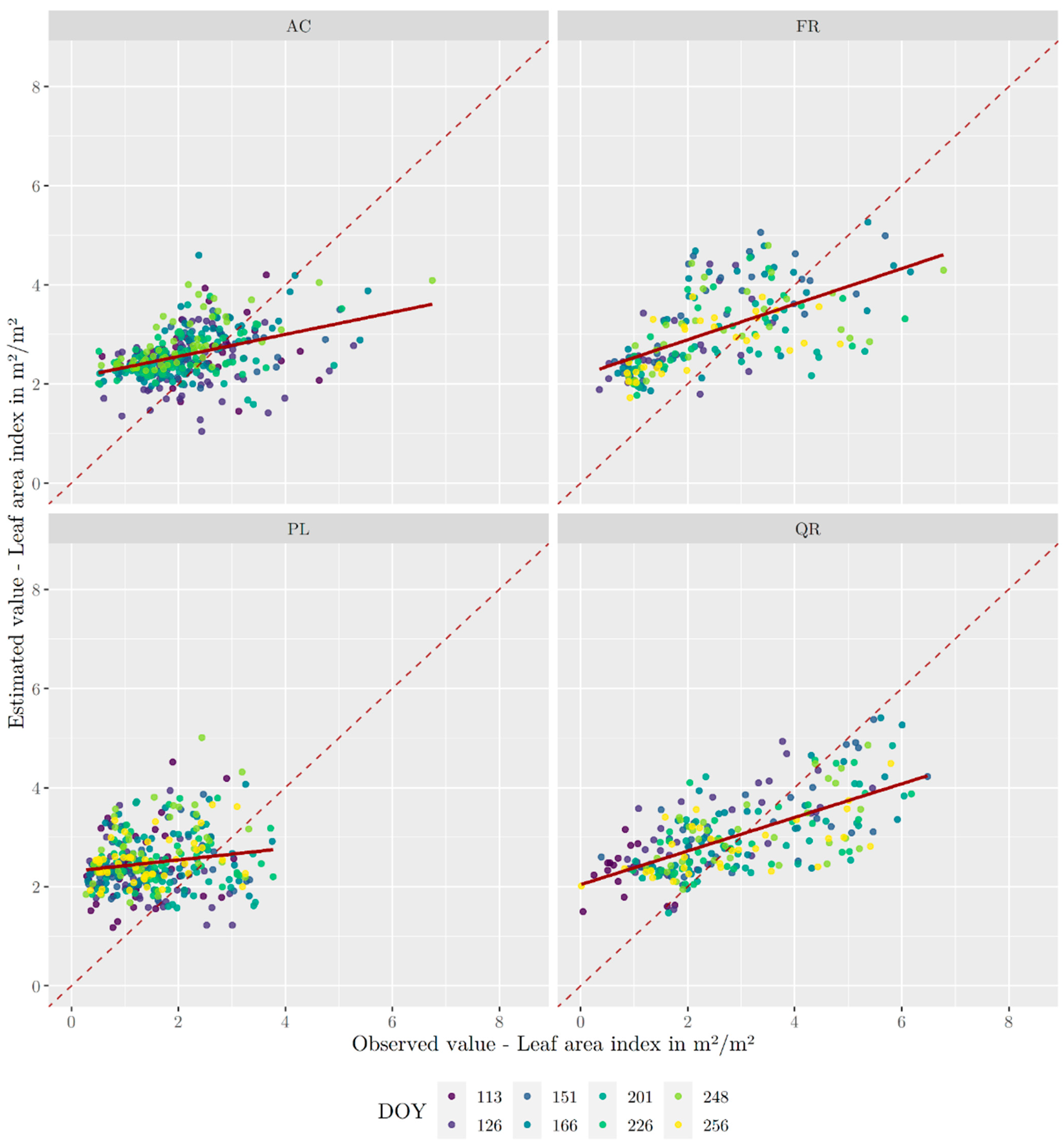

3.2.2. Accuracy of LCC, LAI, and CCC Estimation by Tree Species

3.3. Consistency between Estimated and Observed Time Series

4. Discussion

4.1. Overall Performance of Vegetation Trait Estimation

4.2. DART Modelling

4.3. Environment Features

4.4. Spatial Allocation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Description of DART Input Parameters

Appendix A.1. Tree-Exogenous Parameters

Appendix A.1.1. Sensor Settings, Direction Input Parameter, and Atmosphere

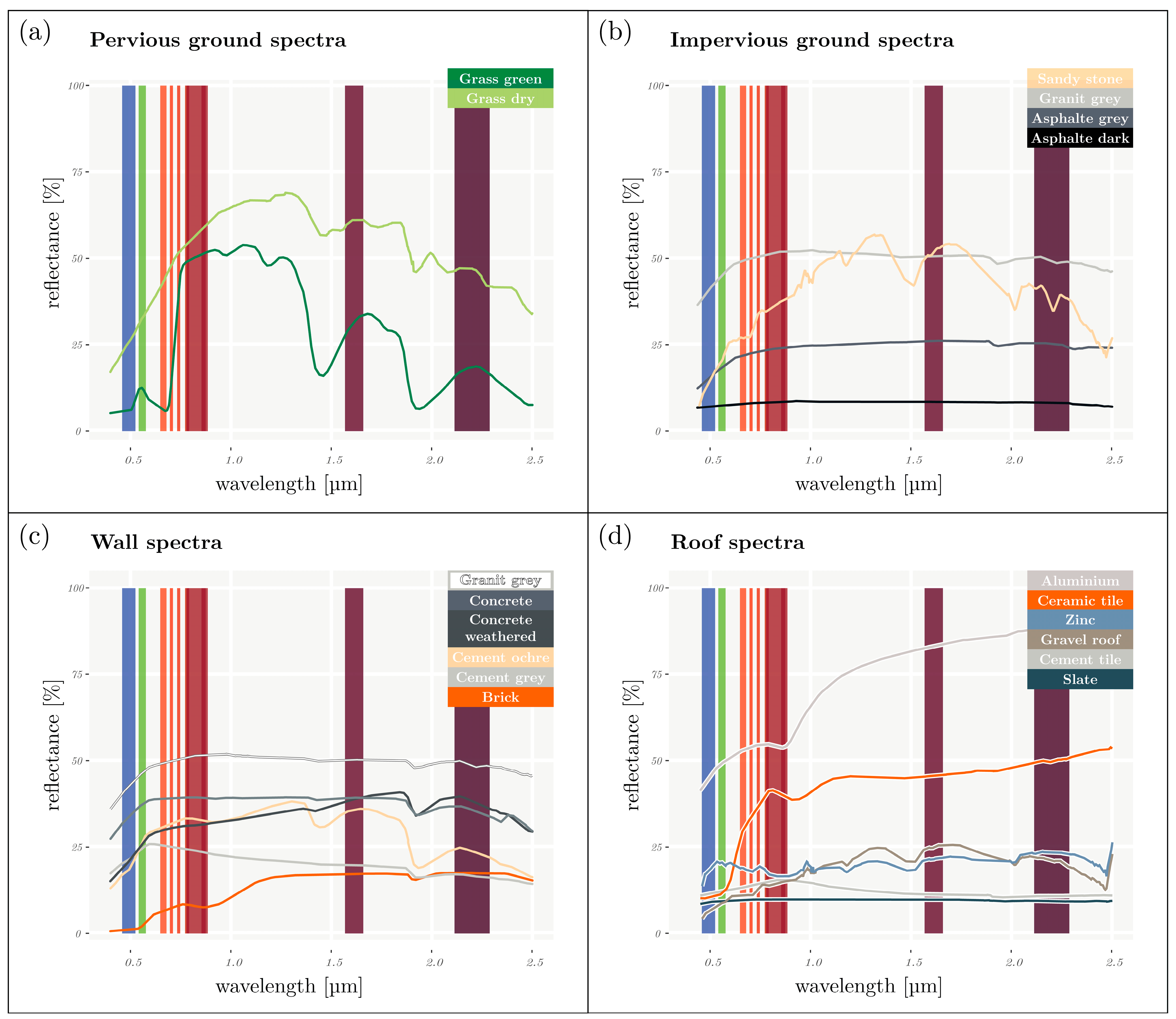

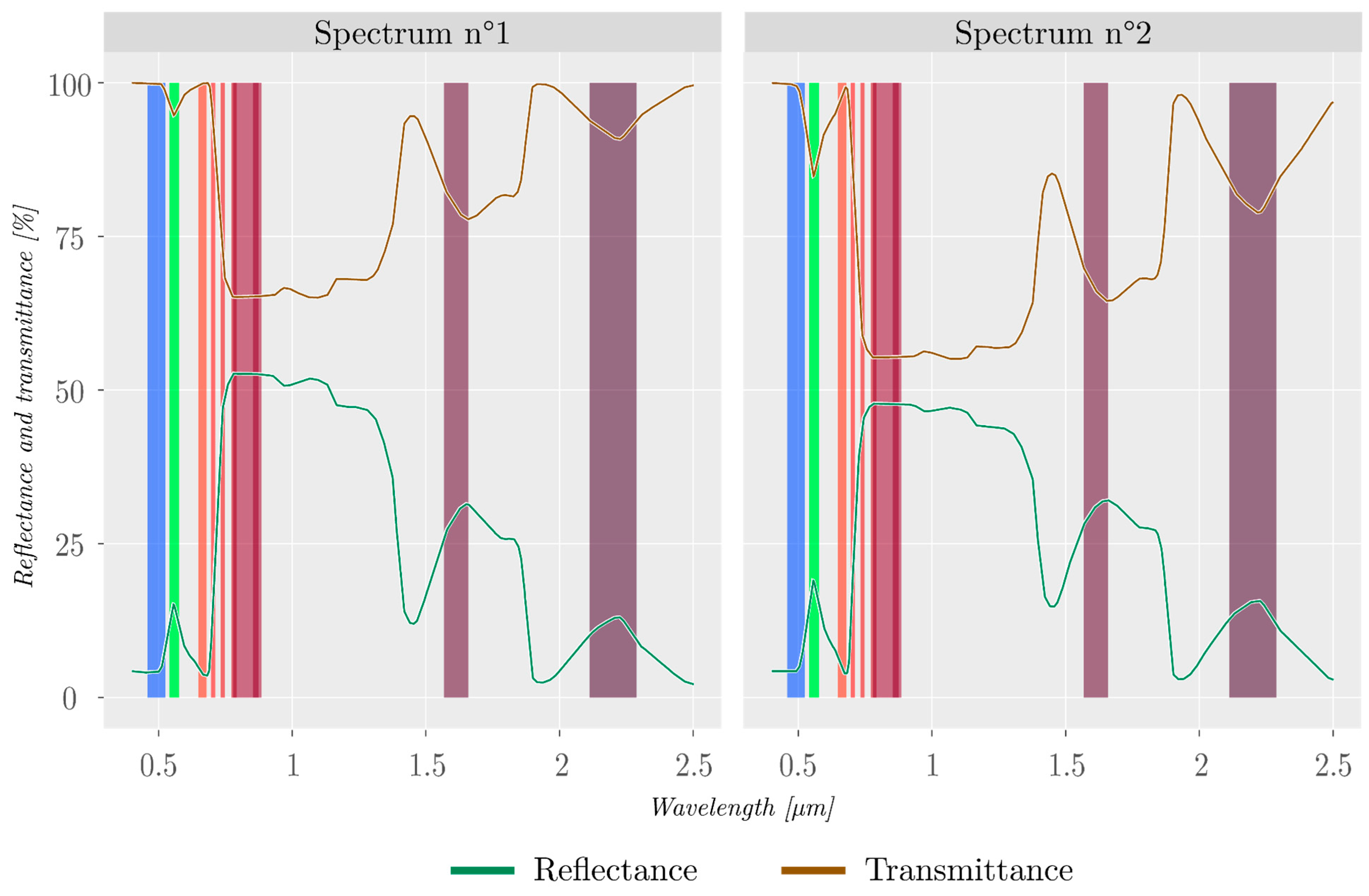

Appendix A.1.2. Spectral Library

Appendix A.1.3. Earth Scene and Tree Planting Context

Appendix A.2. Tree-Endogenous Parameters

Appendix A.2.1. Tree Structural Parameters

| Parameter | Value [m] |
|---|---|
| Tree height | 15 |
| Trunk height under the crown | 4 |
| Trunk height in the crown | 6 |
| Trunk diameter | 0.4 |

Appendix A.2.2. Leaf Parameters

Appendix B. Cross-Validation Scatterplots

References

1. Xu, F.; Yan, J.; Heremans, S.; Somers, B. Pan-European Urban Green Space Dynamics: A View from Space between 1990 and 2015. Landsc. Urban Plan. 2022, 226, 104477.

2. Bolund, P.; Hunhammar, S. Ecosystem Services in Urban Areas. Ecol. Econ. 1999, 29, 293–301.

3. Andersson-Sköld, Y.; Thorsson, S.; Rayner, D.; Lindberg, F.; Janhäll, S.; Jonsson, A.; Moback, U.; Bergman, R.; Granberg, M. An Integrated Method for Assessing Climate-Related Risks and Adaptation Alternatives in Urban Areas. Clim. Risk Manag. 2015, 7, 31–50.

4. Nowak, D.J.; Crane, D.E. Carbon Storage and Sequestration by Urban Trees in the USA. Environ. Pollut. 2002, 116, 381–389.

5. Andersson, E.; Barthel, S.; Ahrné, K. Measuring Social–Ecological Dynamics Behind the Generation of Ecosystem Services. Ecol. Appl. 2007, 17, 1267–1278.

6. Wolf, K.L.; Lam, S.T.; McKeen, J.K.; Richardson, G.R.A.; van den Bosch, M.; Bardekjian, A.C. Urban Trees and Human Health: A Scoping Review. Int. J. Environ. Res. Public Health 2020, 17, 4371.

7. Czaja, M.; Kołton, A.; Muras, P. The Complex Issue of Urban Trees—Stress Factor Accumulation and Ecological Service Possibilities. Forests 2020, 11, 932.

8. Sæbø, A.; Borzan, Ž.; Ducatillion, C.; Hatzistathis, A.; Lagerström, T.; Supuka, J.; García-Valdecantos, J.L.; Rego, F.; Van Slycken, J. The Selection of Plant Materials for Street Trees, Park Trees and Urban Woodland. In Urban Forests and Trees: A Reference Book; Konijnendijk, C., Nilsson, K., Randrup, T., Schipperijn, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 257–280. ISBN 978-3-540-27684-5.

9. Ma, B.; Hauer, R.J.; Östberg, J.; Koeser, A.K.; Wei, H.; Xu, C. A Global Basis of Urban Tree Inventories: What Comes First the Inventory or the Program. Urban For. Urban Green. 2021, 60, 127087.

10. Hilbert, D.; Roman, L.; Koeser, A.; Vogt, J.; van Doorn, N. Urban Tree Mortality: A Literature Review. Arboric. Urban For. AUF 2019, 45, 167–200.

11. Neyns, R.; Canters, F. Mapping of Urban Vegetation with High-Resolution Remote Sensing: A Review. Remote Sens. 2022, 14, 1031.

12. García-Pardo, K.A.; Moreno-Rangel, D.; Domínguez-Amarillo, S.; García-Chávez, J.R. Remote Sensing for the Assessment of Ecosystem Services Provided by Urban Vegetation: A Review of the Methods Applied. Urban For. Urban Green. 2022, 74, 127636.

13. Mattila, H.; Valev, D.; Havurinne, V.; Khorobrykh, S.; Virtanen, O.; Antinluoma, M.; Mishra, K.B.; Tyystjärvi, E. Degradation of Chlorophyll and Synthesis of Flavonols during Autumn Senescence—the Story Told by Individual Leaves. AoB Plants 2018, 10, ply028.

14. Croft, H.; Chen, J.M.; Luo, X.; Bartlett, P.; Chen, B.; Staebler, R.M. Leaf Chlorophyll Content as a Proxy for Leaf Photosynthetic Capacity. Glob. Change Biol. 2017, 23, 3513–3524.

15. Liu, C.; Liu, Y.; Lu, Y.; Liao, Y.; Nie, J.; Yuan, X.; Chen, F. Use of a Leaf Chlorophyll Content Index to Improve the Prediction of Above-Ground Biomass and Productivity. PeerJ 2019, 6, e6240.

16. Filella, I.; Penuelas, J. The Red Edge Position and Shape as Indicators of Plant Chlorophyll Content, Biomass and Hydric Status. Int. J. Remote Sens. 1994, 15, 1459–1470.

17. Ramos-Montaño, C. Vehicular Emissions Effect on the Physiology and Health Status of Five Tree Species in a Bogotá, Colombia Urban Forest. Rev. Biol. Trop. 2020, 68, 1001–1015.

18. Talebzadeh, F.; Valeo, C. Evaluating the Effects of Environmental Stress on Leaf Chlorophyll Content as an Index for Tree Health. IOP Conf. Ser. Earth Environ. Sci. 2022, 1006, 012007.

19. Peñuelas, J.; Rutishauser, T.; Filella, I. Phenology Feedbacks on Climate Change. Science 2009, 324, 887–888.

20. Duncan, W.G. Leaf Angles, Leaf Area, and Canopy Photosynthesis. Crop Sci. 1971, 11, 482–485.

21. Halme, E.; Pellikka, P.; Mõttus, M. Utility of Hyperspectral Compared to Multispectral Remote Sensing Data in Estimating Forest Biomass and Structure Variables in Finnish Boreal Forest. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101942.

22. Granero-Belinchon, C.; Adeline, K.; Briottet, X. Impact of the Number of Dates and Their Sampling on a NDVI Time Series Reconstruction Methodology to Monitor Urban Trees with Venμs Satellite. Int. J. Appl. Earth Obs. Geoinf. 2021, 95, 102257.

23. Verrelst, J.; Rivera, J.P.; Veroustraete, F.; Muñoz-Marí, J.; Clevers, J.G.P.W.; Camps-Valls, G.; Moreno, J. Experimental Sentinel-2 LAI Estimation Using Parametric, Non-Parametric and Physical Retrieval Methods—A Comparison. ISPRS J. Photogramm. Remote Sens. 2015, 108, 260–272.

24. Combal, B.; Baret, F.; Weiss, M.; Trubuil, A.; Macé, D.; Pragnère, A.; Myneni, R.; Knyazikhin, Y.; Wang, L. Retrieval of Canopy Biophysical Variables from Bidirectional Reflectance: Using Prior Information to Solve the Ill-Posed Inverse Problem. Remote Sens. Environ. 2003, 84, 1–15.

25. Zarco-Tejada, P.J.; Hornero, A.; Beck, P.S.A.; Kattenborn, T.; Kempeneers, P.; Hernández-Clemente, R. Chlorophyll Content Estimation in an Open-Canopy Conifer Forest with Sentinel-2A and Hyperspectral Imagery in the Context of Forest Decline. Remote Sens. Environ. 2019, 223, 320–335.

26. Brown, L.A.; Ogutu, B.O.; Dash, J. Estimating Forest Leaf Area Index and Canopy Chlorophyll Content with Sentinel-2: An Evaluation of Two Hybrid Retrieval Algorithms. Remote Sens. 2019, 11, 1752.

27. Ali, A.M.; Darvishzadeh, R.; Skidmore, A.; Gara, T.W.; Heurich, M. Machine Learning Methods’ Performance in Radiative Transfer Model Inversion to Retrieve Plant Traits from Sentinel-2 Data of a Mixed Mountain Forest. Int. J. Digit. Earth 2021, 14, 106–120.

28. Amin, E.; Verrelst, J.; Rivera-Caicedo, J.P.; Pipia, L.; Ruiz-Verdú, A.; Moreno, J. Prototyping Sentinel-2 Green LAI and Brown LAI Products for Cropland Monitoring. Remote Sens. Environ. 2021, 255, 112168.

29. de Sá, N.C.; Baratchi, M.; Hauser, L.T.; van Bodegom, P. Exploring the Impact of Noise on Hybrid Inversion of PROSAIL RTM on Sentinel-2 Data. Remote Sens. 2021, 13, 648.

30. Jacquemoud, S.; Baret, F. PROSPECT: A Model of Leaf Optical Properties Spectra. Remote Sens. Environ. 1990, 34, 75–91.

31. Féret, J.-B.; Gitelson, A.A.; Noble, S.D.; Jacquemoud, S. PROSPECT-D: Towards Modeling Leaf Optical Properties through a Complete Lifecycle. Remote Sens. Environ. 2017, 193, 204–215.

32. Verhoef, W. Light Scattering by Leaf Layers with Application to Canopy Reflectance Modeling: The SAIL Model. Remote Sens. Environ. 1984, 16, 125–141.

33. Atzberger, C. Development of an Invertible Forest Reflectance Model: The INFOR-Model. In A Decade of Trans-European Remote Sensing Cooperation, Proceedings of the 20th EARSeL Symposium, Dresden, Germany, 14–16 June 2000; University of Trier: Trier, Germany, 2000; Volume 14, pp. 39–44.

34. Jacquemoud, S.; Verhoef, W.; Baret, F.; Bacour, C.; Zarco-Tejada, P.J.; Asner, G.P.; François, C.; Ustin, S.L. PROSPECT + SAIL Models: A Review of Use for Vegetation Characterization. Remote Sens. Environ. 2009, 113, S56–S66.

35. Darvishzadeh, R.; Wang, T.; Skidmore, A.; Vrieling, A.; O’Connor, B.; Gara, T.W.; Ens, B.J.; Paganini, M. Analysis of Sentinel-2 and RapidEye for Retrieval of Leaf Area Index in a Saltmarsh Using a Radiative Transfer Model. Remote Sens. 2019, 11, 671.

36. Wan, L.; Ryu, Y.; Dechant, B.; Lee, J.; Zhong, Z.; Feng, H. Improving Retrieval of Leaf Chlorophyll Content from Sentinel-2 and Landsat-7/8 Imagery by Correcting for Canopy Structural Effects. Remote Sens. Environ. 2024, 304, 114048.

37. Sinha, S.K.; Padalia, H.; Dasgupta, A.; Verrelst, J.; Rivera, J.P. Estimation of Leaf Area Index Using PROSAIL Based LUT Inversion, MLRA-GPR and Empirical Models: Case Study of Tropical Deciduous Forest Plantation, North India. Int. J. Appl. Earth Obs. Geoinf. 2020, 86, 102027.

38. Gastellu-Etchegorry, J.-P.; Lauret, N.; Yin, T.; Landier, L.; Kallel, A.; Malenovsky, Z.; Bitar, A.A.; Aval, J.; Benhmida, S.; Qi, J.; et al. DART: Recent Advances in Remote Sensing Data Modeling with Atmosphere, Polarization, and Chlorophyll Fluorescence. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2640–2649.

39. Wang, Y.; Kallel, A.; Yang, X.; Regaieg, O.; Lauret, N.; Guilleux, J.; Chavanon, E.; Gastellu-Etchegorry, J.-P. DART-Lux: An Unbiased and Rapid Monte Carlo Radiative Transfer Method for Simulating Remote Sensing Images. Remote Sens. Environ. 2022, 274, 112973.

40. Zhen, Z.; Benromdhane, N.; Kallel, A.; Wang, Y.; Regaieg, O.; Boitard, P.; Landier, L.; Chavanon, E.; Lauret, N.; Guilleux, J.; et al. DART: A 3D Radiative Transfer Model for Urban Studies. In Proceedings of the 2023 Joint Urban Remote Sensing Event (JURSE), Heraklion, Greece, 17–19 May 2023; pp. 1–4.

41. Landier, L.; Gastellu-Etchegorry, J.P.; Al Bitar, A.; Chavanon, E.; Lauret, N.; Feigenwinter, C.; Mitraka, Z.; Chrysoulakis, N. Calibration of Urban Canopies Albedo and 3D Shortwave Radiative Budget Using Remote-Sensing Data and the DART Model. Eur. J. Remote Sens. 2018, 51, 739–753.

42. Makhloufi, A.; Kallel, A.; Chaker, R.; Gastellu-Etchegorry, J.-P. Retrieval of Olive Tree Biophysical Properties from Sentinel-2 Time Series Based on Physical Modelling and Machine Learning Technique. Int. J. Remote Sens. 2021, 42, 8542–8571.

43. Stewart, I.D.; Oke, T.R. Local Climate Zones for Urban Temperature Studies. Bull. Am. Meteorol. Soc. 2012, 93, 1879–1900.

44. Aslam, A.; Rana, I.A. The Use of Local Climate Zones in the Urban Environment: A Systematic Review of Data Sources, Methods, and Themes. Urban Clim. 2022, 42, 101120.

45. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

46. Rasmussen, C.E. Gaussian Processes in Machine Learning. In Advanced Lectures on Machine Learning: ML Summer Schools 2003, Canberra, Australia, 2–14 February 2003, Tübingen, Germany, 4–16 August 2003; Revised Lectures; Bousquet, O., von Luxburg, U., Rätsch, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 63–71. ISBN 978-3-540-28650-9.

47. INSEE (French National Institute of Statistics and Economic Studies). Population Census 2020; INSEE: Montrouge, France, 2022.

48. Haut Conseil pour le Climat en Bretagne. Le changement Climatique En Bretagne. Bulletin, 2023; pp. 10–11.

49. Casa, R.; Castaldi, F.; Pascucci, S.; Pignatti, S. Chlorophyll Estimation in Field Crops: An Assessment of Handheld Leaf Meters and Spectral Reflectance Measurements. J. Agric. Sci. 2015, 153, 876–890.

50. Wei, S.; Yin, T.; Dissegna, M.A.; Whittle, A.J.; Ow, G.L.F.; Yusof, M.L.M.; Lauret, N.; Gastellu-Etchegorry, J.-P. An Assessment Study of Three Indirect Methods for Estimating Leaf Area Density and Leaf Area Index of Individual Trees. Agric. For. Meteorol. 2020, 292–293, 108101.

51. Sain, T.L.; Nabucet, J.; Sulmon, C.; Pellen, J.; Adeline, K.; Hubert-moy, L. A Spatio-Temporal Dataset for Ecophysiological Monitoring of Urban Trees. Data Brief 2024, 57, 111010.

52. Copernicus Browser. Available online: https://browser.dataspace.copernicus.eu/ (accessed on 7 October 2024).

53. Stumpf, A.; Michéa, D.; Malet, J.-P. Improved Co-Registration of Sentinel-2 and Landsat-8 Imagery for Earth Surface Motion Measurements. Remote Sens. 2018, 10, 160.

54. Zhao, C.; Weng, Q.; Wang, Y.; Hu, Z.; Wu, C. Use of Local Climate Zones to Assess the Spatiotemporal Variations of Urban Vegetation Phenology in Austin, Texas, USA. GIScience Remote Sens. 2022, 59, 393–409.

55. Demuzere, M.; Bechtel, B.; Middel, A.; Mills, G. Mapping Europe into Local Climate Zones. PLOS ONE 2019, 14, e0214474.

56. Gascon, F.; Gastellu-Etchegorry, J.-P.; Lefevre-Fonollosa, M.-J.; Dufrene, E. Retrieval of Forest Biophysical Variables by Inverting a 3-D Radiative Transfer Model and Using High and Very High Resolution Imagery. Int. J. Remote Sens. 2004, 25, 5601–5616.

57. Miraglio, T.; Adeline, K.; Huesca, M.; Ustin, S.; Briottet, X. Joint Use of PROSAIL and DART for Fast LUT Building: Application to Gap Fraction and Leaf Biochemistry Estimations over Sparse Oak Stands. Remote Sens. 2020, 12, 2925.

58. Feret, J.-B.; François, C.; Asner, G.P.; Gitelson, A.A.; Martin, R.E.; Bidel, L.P.R.; Ustin, S.L.; le Maire, G.; Jacquemoud, S. PROSPECT-4 and 5: Advances in the Leaf Optical Properties Model Separating Photosynthetic Pigments. Remote Sens. Environ. 2008, 112, 3030–3043.

59. Baudin, M.; Dutfoy, A.; Iooss, B.; Popelin, A.-L. OpenTURNS: An Industrial Software for Uncertainty Quantification in Simulation. In Handbook of Uncertainty Quantification; Ghanem, R., Higdon, D., Owhadi, H., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 2001–2038. ISBN 978-3-319-12385-1.

60. Conrad, O.; Bechtel, B.; Bock, M.; Dietrich, H.; Fischer, E.; Gerlitz, L.; Wehberg, J.; Wichmann, V.; Böhner, J. System for Automated Geoscientific Analyses (SAGA) v. 2.1.4. Geosci. Model Dev. 2015, 8, 1991–2007.

61. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-Based Plant Height from Crop Surface Models, Visible, and near Infrared Vegetation Indices for Biomass Monitoring in Barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

62. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

63. Woebbecke, D.M.; Meyer, G.E.; Bargen, V.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

64. Penuelas, J.; Baret, F.; Filella, I. Semi-Empirical Indices to Assess Carotenoids/Chlorophyll a Ratio from Leaf Spectral Reflectance. Photosynthetica 1995, 31, 221.

65. Peñuelas, J.; Inoue, Y. Reflectance Indices Indicative of Changes in Water and Pigment Contents of Peanut and Wheat Leaves. Photosynthetica 1999, 36, 355–360.

66. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the NASA Scientific and Technical publications, Greenbelt, MD, USA, 1 January 1974.

67. Kaufman, Y.J.; Tanre, D. Atmospherically Resistant Vegetation Index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

68. Liu, H.Q.; Huete, A. A Feedback Based Modification of the NDVI to Minimize Canopy Background and Atmospheric Noise. IEEE Trans. Geosci. Remote Sens. 1995, 33, 457–465.

69. Son, N.-T.; Chen, C.-F.; Chen, C.-R.; Guo, H.-Y. Classification of Multitemporal Sentinel-2 Data for Field-Level Monitoring of Rice Cropping Practices in Taiwan. Adv. Space Res. 2020, 65, 1910–1921.

70. Rondeaux, G.; Steven, M.; Baret, F. Optimization of Soil-Adjusted Vegetation Indices. Remote Sens. Environ. 1996, 55, 95–107.

71. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral Vegetation Indices and Novel Algorithms for Predicting Green LAI of Crop Canopies: Modeling and Validation in the Context of Precision Agriculture. Remote Sens. Environ. 2004, 90, 337–352.

72. Gitelson, A.; Merzlyak, M.N. Quantitative Estimation of Chlorophyll-a Using Reflectance Spectra: Experiments with Autumn Chestnut and Maple Leaves. J. Photochem. Photobiol. B Biol. 1994, 22, 247–252.

73. Pasqualotto, N.; Delegido, J.; Van Wittenberghe, S.; Rinaldi, M.; Moreno, J. Multi-Crop Green LAI Estimation with a New Simple Sentinel-2 LAI Index (SeLI). Sensors 2019, 19, 904.

74. Lymburner, L. Estimation of Canopy-Average Surface-Specific Leaf Area Using Landsat TM Data. Photogramm. Eng. Remote Sens. 2000, 66, 183–192.

75. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated Narrow-Band Vegetation Indices for Prediction of Crop Chlorophyll Content for Application to Precision Agriculture. Remote Sens. Environ. 2002, 81, 416–426.

76. Wu, C.; Niu, Z.; Tang, Q.; Huang, W. Estimating Chlorophyll Content from Hyperspectral Vegetation Indices: Modeling and Validation. Agric. For. Meteorol. 2008, 148, 1230–1241.

77. Qian, B.; Ye, H.; Huang, W.; Xie, Q.; Pan, Y.; Xing, N.; Ren, Y.; Guo, A.; Jiao, Q.; Lan, Y. A Sentinel-2-Based Triangular Vegetation Index for Chlorophyll Content Estimation. Agric. For. Meteorol. 2022, 322, 109000.

78. Shi, T.; Xu, H. Derivation of Tasseled Cap Transformation Coefficients for Sentinel-2 MSI At-Sensor Reflectance Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4038–4048.

79. Fang, F.; Im, J.; Lee, J.; Kim, K. An Improved Tree Crown Delineation Method Based on Live Crown Ratios from Airborne LiDAR Data. GIScience Remote Sens. 2016, 53, 402–419.

80. Dyer, M.E.; Burkhart, H.E. Compatible Crown Ratio and Crown Height Models. Can. J. For. Res. 1987, 17, 572–574.

81. Holdaway, M.R. Modeling Tree Crown Ratio. For. Chron. 1986, 62, 451–455.

82. Guo, A.; Ye, H.; Li, G.; Zhang, B.; Huang, W.; Jiao, Q.; Qian, B.; Luo, P. Evaluation of Hybrid Models for Maize Chlorophyll Retrieval Using Medium- and High-Spatial-Resolution Satellite Images. Remote Sens. 2023, 15, 1784.

83. Garrigues, S.; Lacaze, R.; Baret, F.; Morisette, J.T.; Weiss, M.; Nickeson, J.E.; Fernandes, R.; Plummer, S.; Shabanov, N.V.; Myneni, R.B.; et al. Validation and Intercomparison of Global Leaf Area Index Products Derived from Remote Sensing Data. J. Geophys. Res. Biogeosciences 2008, 113.

84. Misra, G.; Cawkwell, F.; Wingler, A. Status of Phenological Research Using Sentinel-2 Data: A Review. Remote Sens. 2020, 12, 2760.

85. Montero, P.; Vilar, J.A. TSclust: An R Package for Time Series Clustering. J. Stat. Softw. 2015, 62, 1–43.

86. Golay, X.; Kollias, S.; Stoll, G.; Meier, D.; Valavanis, A.; Boesiger, P. A New Correlation-Based Fuzzy Logic Clustering Algorithm for FMRI. Magn. Reson. Med. 1998, 40, 249–260.

87. Douzal, A.; Nagabhushan, P. Adaptive Dissimilarity Index for Measuring Time Series Proximity. Adv. Data Anal. Classif. 2007, 1, 5–21.

88. Delegido, J.; Van Wittenberghe, S.; Verrelst, J.; Ortiz, V.; Veroustraete, F.; Valcke, R.; Samson, R.; Rivera, J.P.; Tenjo, C.; Moreno, J. Chlorophyll Content Mapping of Urban Vegetation in the City of Valencia Based on the Hyperspectral NAOC Index. Ecol. Indic. 2014, 40, 34–42.

89. Degerickx, J.; Roberts, D.A.; McFadden, J.P.; Hermy, M.; Somers, B. Urban Tree Health Assessment Using Airborne Hyperspectral and LiDAR Imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 26–38.

90. Wu, K.; Chen, J.; Yang, H.; Yang, Y.; Hu, Z. Spatiotemporal Variations in the Sensitivity of Vegetation Growth to Typical Climate Factors on the Qinghai–Tibet Plateau. Remote Sens. 2023, 15, 2355.

91. Zhen, Z.; Gastellu-Etchegorry, J.-P.; Chen, S.; Yin, T.; Chavanon, E.; Lauret, N.; Guilleux, J. Quantitative Analysis of DART Calibration Accuracy for Retrieving Spectral Signatures Over Urban Area. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10057–10068.

92. Adeline, K.R.M.; Paparoditis, N.; Briottet, X.; Gastellu-Etchegorry, J.-P. Material Reflectance Retrieval in Urban Tree Shadows with Physics-Based Empirical Atmospheric Correction. In Proceedings of the Joint Urban Remote Sensing Event 2013, Sao Paulo, Brazil, 21–23 April 2013; pp. 279–283.

93. Dissegna, M.A.; Yin, T.; Wu, H.; Lauret, N.; Wei, S.; Gastellu-Etchegorry, J.-P.; Grêt-Regamey, A. Modeling Mean Radiant Temperature Distribution in Urban Landscapes Using DART. Remote Sens. 2021, 13, 1443.

94. Zhang, Q.; Wang, D.; Gastellu-Etchegorry, J.-P.; Yang, J.; Qian, Y. Impact of 3-D Structures on Directional Effective Emissivity in Urban Areas Based on DART Model. Build. Environ. 2023, 239, 110410.

95. Houborg, R.; McCabe, M.; Cescatti, A.; Gao, F.; Schull, M.; Gitelson, A. Joint Leaf Chlorophyll Content and Leaf Area Index Retrieval from Landsat Data Using a Regularized Model Inversion System (REGFLEC). Remote Sens. Environ. 2015, 159, 203–221.

96. Houborg, R.; McCabe, M.F. A Hybrid Training Approach for Leaf Area Index Estimation via Cubist and Random Forests Machine-Learning. ISPRS J. Photogramm. Remote Sens. 2018, 135, 173–188.

97. Zurita-Milla, R.; Laurent, V.C.E.; van Gijsel, J.A.E. Visualizing the Ill-Posedness of the Inversion of a Canopy Radiative Transfer Model: A Case Study for Sentinel-2. Int. J. Appl. Earth Obs. Geoinf. 2015, 43, 7–18.

98. Baret, F.; Buis, S. Estimating Canopy Characteristics from Remote Sensing Observations: Review of Methods and Associated Problems. In Advances in Land Remote Sensing: System, Modeling, Inversion and Application; Liang, S., Ed.; Springer: Dordrecht, The Netherlands, 2008; pp. 173–201. ISBN 978-1-4020-6450-0.

99. Schiefer, F.; Schmidtlein, S.; Kattenborn, T. The Retrieval of Plant Functional Traits from Canopy Spectra through RTM-Inversions and Statistical Models Are Both Critically Affected by Plant Phenology. Ecol. Indic. 2021, 121, 107062.

100. Fernández-Guisuraga, J.M.; Suárez-Seoane, S.; Quintano, C.; Fernández-Manso, A.; Calvo, L. Comparison of Physical-Based Models to Measure Forest Resilience to Fire as a Function of Burn Severity. Remote Sens. 2022, 14, 5138.

101. Atzberger, C.; Richter, K. Spatially Constrained Inversion of Radiative Transfer Models for Improved LAI Mapping from Future Sentinel-2 Imagery. Remote Sens. Environ. 2012, 120, 208–218.

102. Rivera, J.P.; Verrelst, J.; Leonenko, G.; Moreno, J. Multiple Cost Functions and Regularization Options for Improved Retrieval of Leaf Chlorophyll Content and LAI through Inversion of the PROSAIL Model. Remote Sens. 2013, 5, 3280–3304.

103. Liu, S.; Brandt, M.; Nord-Larsen, T.; Chave, J.; Reiner, F.; Lang, N.; Tong, X.; Ciais, P.; Igel, C.; Pascual, A.; et al. The Overlooked Contribution of Trees Outside Forests to Tree Cover and Woody Biomass across Europe. Sci. Adv. 2023, 9, eadh4097.

104. Wright, M.N.; Ziegler, A.; König, I.R. Do Little Interactions Get Lost in Dark Random Forests? BMC Bioinform. 2016, 17, 145.

105. Atmosphere, U.S. US Standard Atmosphere; National Oceanic and Atmospheric Administration: Silver Spring, MD, USA, 1976.

106. Yu, K.; Van Geel, M.; Ceulemans, T.; Geerts, W.; Ramos, M.M.; Sousa, N.; Castro, P.M.L.; Kastendeuch, P.; Najjar, G.; Ameglio, T.; et al. Foliar Optical Traits Indicate That Sealed Planting Conditions Negatively Affect Urban Tree Health. Ecol. Indic. 2018, 95, 895–906.

107. Chianucci, F.; Pisek, J.; Raabe, K.; Marchino, L.; Ferrara, C.; Corona, P. A Dataset of Leaf Inclination Angles for Temperate and Boreal Broadleaf Woody Species. Ann. For. Sci. 2018, 75, 50.

| Name | Description | Resolution/Altimetric Precision | Source |
|---|---|---|---|
| Digital terrain model | Spatial raster representing the elevation of the surface of bare Earth, free of natural and built features | 0.5 m/0.2 m | Opendata Rennes Métropole |
| Digital surface model | Spatial raster representing the elevation of the Earth's surface, including natural and built features | 0.5 m/0.2 m | Opendata Rennes Métropole |
| Orthophotographs | Optical visible orthophotographs of Rennes Métropole acquired in 2021 | 0.05 m/- | Opendata Rennes Métropole |
| Tree-crown extent | Spatial vector of tree-crown extent | - | Manual digitization of orthophotographs |
| Grass extent | Spatial vector of grass extent | - | OpenStreetMap (“landuse” key and “grass” value) |

| Parameter | LCZ 2 | LCZ 5 | LCZ 6 | LCZ 8 |
|---|---|---|---|---|
| Height of roughness elements [m] | 18.0 | 18.0 | 6.5 | 6.5 |
| Aspect ratio | 1.375 | 0.525 | 0.525 | 0.200 |
| Fraction of area in buildings [%] | 55 | 30 | 30 | 40 |

| DART Section | Parameter Name | Category | Type | Values and Range |
|---|---|---|---|---|
| Global settings | Light propagation mode | exogenous | F | Bi-directional (DART-Lux) |
| Sensor settings | Spectral bands | exogenous | F | According to Sentinel-2 sensor |
| | Zenithal angle | exogenous | F | 2.8 [°] |
| | Azimuth angle | exogenous | F | 182 [°] |
| | Spatial resolution | exogenous | F | 1 [m] |
| Direction input parameter | Hour | exogenous | F | 11:07 UTC |
| | Day | exogenous | F | Day 15 of each month |
| | Month | exogenous | V | From March to November |
| Atmosphere | Atmosphere model | exogenous | F | USSTD76 |
| | Aerosol properties | exogenous | F | Urban type; aerosol optical depth = 1 |
| Scene optical properties | Roof | exogenous | V | See Appendix A |
| | Wall | exogenous | V | See Appendix A |
| | Impervious ground | exogenous | V | See Appendix A |
| | Pervious ground | exogenous | V | See Appendix A |
| Earth scene | Dimensions | exogenous | F | 100 × 100 [m] |
| | Latitude | exogenous | F | 48.1° N |
| | Longitude | exogenous | F | 1.68° W |
| Tree planting conditions | Distance to nearest building | exogenous | V | LCZ2 and LCZ6: 5.0–6.5 [m]; LCZ5 and LCZ8: 6–16 [m] |
| | Tree exposure | exogenous | V | Shady side or sunny side |
| | Street orientation ¹ | exogenous | V | 0, 45, 90 and 135 [°] |
| | Percentage of grass on the ground ² | exogenous | V | 0–100 [%] |
| Tree | Tree-crown diameter | endogenous | V | 10 and 12 [m] |
| | Other geometric parameters | endogenous | F | See Appendix A |
| | Leaf angle distribution | endogenous | V | Plagiophile and planophile |
| | Leaf area density (LAD) | endogenous | V | 0.1 and 1.2 [m²/m³] |
| Leaf | Clumping factor | endogenous | V | 0–50 [%] |
| | Structure coefficient (N) | endogenous | V | 1.1–2.3 [arbitrary unit] |
| | Leaf chlorophyll content (Cab) | endogenous | V | 5–60 [μg/cm²] |
| | Carotenoid content (Car) | endogenous | V | 2.5–25 [μg/cm²] |
| | Brown pigment | endogenous | F | 0 [arbitrary unit] |
| | Anthocyanin | endogenous | F | 0 [μg/cm²] |
| | Equivalent water thickness | endogenous | V | 0.004–0.024 [cm] |
| | Dry matter content | endogenous | V | 0.002–0.014 [g/cm²] |

| Index | Abbrev. | Equation (with S2 Band Names) | Ref. |
|---|---|---|---|
| Red-green-blue vegetation index | RGBVI | | [61] |
| Green leaf index | GLI | | [62] |
| Normalized green-blue difference index | NGBDI | | [63] |
| Structure insensitive pigment index | SIPI | | [64,65] |
| Normalized difference vegetation index | NDVI | | [66] |
| Atmospherically resistant vegetation index | ARVI | | [67] |
| Enhanced vegetation index | EVI | | [68,69] |
| Optimized soil adjusted vegetation index | OSAVI | | [70] |
| Modified chlorophyll absorption in reflectance index 2 | MCARI2 | See reference | [71] |
| Red-edge normalized difference vegetation index | NDVIRE | | [72] |
| Sentinel-2 LAI index | SELI | | [73] |
| Mixed leaf area index vegetation index | MixLAIVI | | [74] |
| Transformed chlorophyll absorption in reflectance index | TCARI | | [75] |
| TCARI/OSAVI | CCII | | [71,75,76] |
| Sentinel-2-based triangular vegetation index | STVI | See equation in [77] | [77] |
| Greenness component of Sentinel-2 tasseled cap transformation | TCT_G | See equation in [78] | [78] |
| Brightness component of Sentinel-2 tasseled cap transformation | TCT_B | See equation in [78] | [78] |
| Wetness component of Sentinel-2 tasseled cap transformation | TCT_W | See equation in [78] | [78] |
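Several of the simpler indices listed above have widely used literature definitions. The following is a minimal sketch of four of them (NDVI, NGBDI, GLI and RGBVI), assuming Sentinel-2 bands B2, B3, B4 and B8 stand for blue, green, red and near-infrared reflectance. The band assignment and the function names are assumptions for illustration; the band choices used in this study may differ.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, (a - b) / denom, np.nan)


def vegetation_indices(b2, b3, b4, b8):
    """Compute four common indices from surface reflectance.

    Assumed band roles (not confirmed by the source table):
    b2 = blue, b3 = green, b4 = red, b8 = near infrared.
    """
    blue, green, red, nir = (np.asarray(x, dtype=float) for x in (b2, b3, b4, b8))
    return {
        # NDVI = (NIR - R) / (NIR + R)
        "NDVI": normalized_difference(nir, red),
        # NGBDI = (G - B) / (G + B)
        "NGBDI": normalized_difference(green, blue),
        # GLI = (2G - R - B) / (2G + R + B)
        "GLI": normalized_difference(2.0 * green, red + blue),
        # RGBVI = (G^2 - B*R) / (G^2 + B*R)
        "RGBVI": normalized_difference(green ** 2, blue * red),
    }


if __name__ == "__main__":
    # Hypothetical reflectance values for a single vegetated pixel.
    for name, value in vegetation_indices(0.04, 0.08, 0.05, 0.45).items():
        print(f"{name}: {float(value):.3f}")
```

The inputs can be scalars or whole image arrays of equal shape, since every operation is element-wise.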

| Property | Name | Unit | Equation | Description |
|---|---|---|---|---|
| Tree height | Htree | m | - | Tree height, given by DSM-DTM, according to the crown centroid or corresponding to DART input |
| Tree crown height | Hcrown | m | $H_{crown} = \frac{2}{3} H_{tree}$ | Crown height, assumed to equal 2/3 of the tree height |
| Tree crown ellipsoid semi-axes (b, c) | b, c | m | - | Semi-axes of the tree crown (considered as an ellipsoid), calculated from 2D crown-delineation polygons or corresponding to DART input |
| Tree crown volume | Vcrown | m³ | | Crown volume, calculated as that of an ellipsoid |
| Tree crown area | Acrown | m² | - | Area projected onto the ground by the crown |
| Tree LAD | LADtree | m²/m³ | - | Leaf area density, measured with LAI-2200 or corresponding to DART input |
| Tree LAI | LAItree | m²/m² | | Leaf area index of the tree |
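The chain from tree height to tree LAI described in the table above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the vertical semi-axis of the ellipsoid is assumed to be half the crown height, the projected crown area is assumed to be the ellipse π·b·c, and tree LAI is assumed to be LAD multiplied by crown volume and divided by projected crown area. The function name `tree_lai` is hypothetical.

```python
import math


def tree_lai(h_tree, b, c, lad):
    """Derive crown geometry and LAI for one tree.

    h_tree : tree height [m]
    b, c   : horizontal semi-axes of the crown ellipsoid [m]
    lad    : leaf area density [m2/m3]
    """
    # Crown height is taken as 2/3 of the tree height (stated in the table).
    h_crown = 2.0 / 3.0 * h_tree
    # ASSUMPTION: the vertical semi-axis is half the crown height.
    a = h_crown / 2.0
    # Volume of an ellipsoid with semi-axes a, b, c.
    v_crown = 4.0 / 3.0 * math.pi * a * b * c
    # ASSUMPTION: the ground projection of the crown is the ellipse pi*b*c.
    a_crown = math.pi * b * c
    # ASSUMPTION: LAI = total leaf area (LAD * volume) per unit projected area.
    lai = lad * v_crown / a_crown
    return {"h_crown": h_crown, "v_crown": v_crown, "a_crown": a_crown, "lai": lai}


if __name__ == "__main__":
    # Hypothetical tree: 18 m tall, 10 m crown diameter, LAD of 0.5 m2/m3.
    print(tree_lai(18.0, 5.0, 5.0, 0.5))
```

Under these assumptions the horizontal semi-axes cancel, so LAI reduces to LAD × (2/3) × Hcrown; for the hypothetical tree this gives 0.5 × 8 = 4.0 m²/m².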

| Property | Name | Unit | Equation | Description |
|---|---|---|---|---|
| Pixel area | Apix | m² | - | Constant area for a pixel of 10 m resolution (100 m²) |
| Intersection area tree-pixel | Aintertree | m² | - | Intersection area between a given tree and a given pixel. A tree can overlap several pixels and vice versa. |
| Total canopy area | TCA | m² | | Total canopy area for a given pixel |
| Canopy cover pixel | CCP | % | | Percentage of canopy cover in the pixel |
| Percentage of canopy cover | PCCPtree | % | | Percentage of canopy in the pixel for a given tree |
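As an illustration of the pixel-level quantities above, the sketch below computes the total canopy area and the canopy cover of one pixel. It assumes that TCA is the sum of the tree-pixel intersection areas and that CCP is TCA expressed as a percentage of the pixel area; the function name `canopy_cover` is hypothetical.

```python
PIXEL_AREA_M2 = 100.0  # 10 m x 10 m Sentinel-2 pixel


def canopy_cover(intersection_areas_m2, pixel_area_m2=PIXEL_AREA_M2):
    """Return (TCA in m2, CCP in %) for one pixel.

    intersection_areas_m2 : iterable with one tree-pixel intersection
                            area per tree overlapping the pixel.
    """
    # ASSUMPTION: total canopy area is the plain sum of intersection areas.
    tca = float(sum(intersection_areas_m2))
    # ASSUMPTION: canopy cover is TCA relative to the pixel area.
    ccp = 100.0 * tca / pixel_area_m2
    return tca, ccp


if __name__ == "__main__":
    # Hypothetical pixel overlapped by two crowns (30 m2 and 20 m2).
    print(canopy_cover([30.0, 20.0]))
```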

| Trait | Name | Unit | Equation | Description |
|---|---|---|---|---|
| LAI tree | LAItree | m²/m² | | Leaf area index for the tree |
| LCC tree | LCCtree | μg/cm² | - | Dualex leaf-clip reading for a given tree or corresponding to DART input. |
| CCC tree | CCCtree | μg/m² | | Canopy chlorophyll content |
| LAI pixel | LAIpix | m²/m² | | Weighted sum of LAItree in the pixel. n equals the number of trees intersecting the pixel. |
| LCC pixel | LCCpix | μg/cm² | | Leaf chlorophyll content at the pixel scale |
| CCC pixel | CCCpix | μg/m² | | Weighted sum of CCCtree in the pixel. n equals the number of trees intersecting the pixel. |
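The aggregation from tree-scale to pixel-scale traits can be sketched as below. The weights are an assumption: each tree is weighted by the fraction of the pixel area it intersects. Two further assumptions are that canopy chlorophyll content is the product of LCC and LAI with no unit conversion applied, and that pixel-scale LCC is the ratio of pixel CCC to pixel LAI. The function name and dictionary keys are hypothetical.

```python
def pixel_traits(trees, pixel_area_m2=100.0):
    """Aggregate tree traits to one pixel.

    trees : list of dicts with keys
            "a_inter" (tree-pixel intersection area, m2),
            "lai" (tree LAI) and "lcc" (tree LCC).
    """
    lai_pix = 0.0
    ccc_pix = 0.0
    for tree in trees:
        # ASSUMPTION: weight = share of the pixel area covered by this tree.
        weight = tree["a_inter"] / pixel_area_m2
        # ASSUMPTION: CCC_tree = LCC_tree * LAI_tree (no unit conversion).
        ccc_tree = tree["lcc"] * tree["lai"]
        lai_pix += weight * tree["lai"]
        ccc_pix += weight * ccc_tree
    # ASSUMPTION: pixel LCC is the LAI-weighted mean chlorophyll content.
    lcc_pix = ccc_pix / lai_pix if lai_pix > 0 else float("nan")
    return {"lai_pix": lai_pix, "lcc_pix": lcc_pix, "ccc_pix": ccc_pix}


if __name__ == "__main__":
    # Hypothetical pixel with two overlapping trees.
    trees = [
        {"a_inter": 30.0, "lai": 4.0, "lcc": 30.0},
        {"a_inter": 20.0, "lai": 2.0, "lcc": 20.0},
    ]
    print(pixel_traits(trees))
```

With area-fraction weights, a pixel that is only partly covered by crowns gets a lower LAI than the trees it contains, which is the qualitative pattern expected when the pixel mean falls below the tree mean.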

| Vegetation Trait | Tree-Scale Min | Tree-Scale Mean | Tree-Scale Max | Pixel-Scale Min | Pixel-Scale Mean | Pixel-Scale Max |
|---|---|---|---|---|---|---|
| Leaf chlorophyll content [µg/cm²] | 11.4 | 28.9 | 49.3 | 11.6 | 28.9 | 48.3 |
| Leaf area index [m²/m²] | 0.94 | 3.78 | 8.24 | 0.01 | 2.36 | 7.92 |
| Canopy chlorophyll content [µg/m²] | 11 | 111 | 279 | 1 | 71 | 289 |

| Target Variable | SA Method | Environment Features | MLRA | R² | RMSE | SMAPE | BIAS | BSD |
|---|---|---|---|---|---|---|---|---|
| LCC | Tree | Yes | GPR | 0.68 | 5.95 | 16% | 0.55 | 5.93 |
| | Tree | Yes | RFR | 0.71 | 5.74 | 16% | 0.74 | 5.69 |
| | Tree | No | GPR | 0.67 | 6.08 | 17% | 0.55 | 6.06 |
| | Tree | No | RFR | 0.69 | 5.87 | 16% | 0.69 | 5.83 |
| | Pixel | Yes | GPR | 0.79 | 4.38 | 12% | 0.25 | 4.38 |
| | Pixel | Yes | RFR | 0.82 | 4.16 | 11% | 0.46 | 4.14 |
| | Pixel | No | GPR | 0.77 | 4.57 | 13% | 0.28 | 4.56 |
| | Pixel | No | RFR | 0.80 | 4.34 | 12% | 0.43 | 4.32 |
| LAI | Tree | Yes | GPR | 0.29 | 1.80 | 37% | 0.36 | 1.76 |
| | Tree | Yes | RFR | 0.35 | 1.77 | 36% | 0.49 | 1.7 |
| | Tree | No | GPR | 0.13 | 2.01 | 43% | 0.47 | 1.96 |
| | Tree | No | RFR | 0.17 | 1.98 | 41% | 0.56 | 1.90 |
| | Pixel | Yes | GPR | 0.51 | 1.2 | 31% | 0.21 | 1.18 |
| | Pixel | Yes | RFR | 0.56 | 1.15 | 30% | 0.27 | 1.12 |
| | Pixel | No | GPR | 0.37 | 1.36 | 35% | 0.27 | 1.33 |
| | Pixel | No | RFR | 0.39 | 1.36 | 34% | 0.30 | 1.32 |
| CCC | Tree | Yes | GPR | 0.48 | 58.58 | 37% | 12.14 | 57.31 |
| | Tree | Yes | RFR | 0.52 | 58.73 | 37% | 16.16 | 56.47 |
| | Tree | No | GPR | 0.40 | 62.93 | 42% | 14.19 | 61.31 |
| | Tree | No | RFR | 0.44 | 62.13 | 41% | 16.47 | 59.91 |
| | Pixel | Yes | GPR | 0.64 | 36.88 | 30% | 6.24 | 36.35 |
| | Pixel | Yes | RFR | 0.68 | 36.39 | 29% | 8.59 | 35.37 |
| | Pixel | No | GPR | 0.56 | 40.94 | 34% | 7.78 | 40.20 |
| | Pixel | No | RFR | 0.58 | 40.84 | 33% | 9.09 | 39.82 |
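The error metrics in the table above can be reproduced with a few lines of code. The sketch below assumes that BIAS is the mean of predicted minus observed values, that BSD is the standard deviation of the errors, and that SMAPE uses the common form mean(2|p − o| / (|p| + |o|)). These definitions are assumptions rather than the authors' stated formulas, although the tabulated values are consistent with the identity RMSE² = BIAS² + BSD² (for example, 0.55² + 5.93² is approximately 5.95²). Function and key names are hypothetical.

```python
import numpy as np


def error_metrics(observed, predicted):
    """Return RMSE, BIAS, BSD and SMAPE (in %) for paired samples."""
    obs = np.asarray(observed, dtype=float)
    pred = np.asarray(predicted, dtype=float)
    err = pred - obs  # ASSUMPTION: sign convention is predicted - observed
    rmse = float(np.sqrt(np.mean(err ** 2)))
    bias = float(np.mean(err))
    # ASSUMPTION: BSD is the population standard deviation of the errors.
    bsd = float(np.std(err))
    # ASSUMPTION: symmetric MAPE with (|p| + |o|) / 2 in the denominator.
    smape = float(100.0 * np.mean(2.0 * np.abs(err) / (np.abs(pred) + np.abs(obs))))
    return {"rmse": rmse, "bias": bias, "bsd": bsd, "smape": smape}


if __name__ == "__main__":
    # Hypothetical observed and predicted values.
    m = error_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 41.0])
    print(m)
    # The decomposition RMSE^2 = BIAS^2 + BSD^2 holds exactly with np.std (ddof=0).
    print(np.isclose(m["rmse"] ** 2, m["bias"] ** 2 + m["bsd"] ** 2))
```

Splitting RMSE into BIAS and BSD separates systematic offset from scatter, which helps when comparing rows where RMSE is similar but the sign or size of the bias differs.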

| Target Variable | SA Method | Environment Features | MLRA | R² | RMSE | SMAPE | BIAS | BSD |
|---|---|---|---|---|---|---|---|---|
| LCC | Tree | Yes | GPR | 0.24 | 7.66 | 22% | −1.34 | 7.55 |
| | Tree | Yes | RFR | 0.30 | 5.83 | 16% | −1.57 | 5.61 |
| | Tree | No | GPR | 0.24 | 7.48 | 22% | −1.26 | 7.38 |
| | Tree | No | RFR | 0.29 | 6.01 | 17% | −1.87 | 5.72 |
| | Pixel | Yes | GPR | 0.27 | 7.46 | 21% | −1.78 | 7.25 |
| | Pixel | Yes | RFR | 0.33 | 5.64 | 16% | −1.87 | 5.32 |
| | Pixel | No | GPR | 0.24 | 7.45 | 21% | −1.71 | 7.25 |
| | Pixel | No | RFR | 0.32 | 5.87 | 17% | −2.29 | 5.40 |
| LAI | Tree | Yes | GPR | 0.02 | 1.94 | 43% | −0.28 | 1.92 |
| | Tree | Yes | RFR | 0.03 | 1.59 | 36% | −0.43 | 1.54 |
| | Tree | No | GPR | 0.04 | 1.54 | 35% | −0.29 | 1.51 |
| | Tree | No | RFR | 0.04 | 1.43 | 33% | −0.28 | 1.40 |
| | Pixel | Yes | GPR | 0.12 | 1.63 | 57% | −0.87 | 1.38 |
| | Pixel | Yes | RFR | 0.29 | 1.18 | 47% | −0.58 | 1.03 |
| | Pixel | No | GPR | 0.25 | 1.47 | 53% | −0.90 | 1.17 |
| | Pixel | No | RFR | 0.22 | 1.30 | 49% | −0.69 | 1.1 |
| CCC | Tree | Yes | GPR | 0.12 | 57.11 | 43% | −10.94 | 56.08 |
| | Tree | Yes | RFR | 0.13 | 49.22 | 39% | −12.85 | 47.54 |
| | Tree | No | GPR | 0.16 | 49.77 | 38% | −10.86 | 48.59 |
| | Tree | No | RFR | 0.13 | 48.84 | 38% | −13.23 | 47.03 |
| | Pixel | Yes | GPR | 0.27 | 51.00 | 58% | −29.01 | 41.97 |
| | Pixel | Yes | RFR | 0.46 | 36.44 | 49% | −18.79 | 31.23 |
| | Pixel | No | GPR | 0.31 | 48.99 | 56% | −29.73 | 38.96 |
| | Pixel | No | RFR | 0.32 | 42.94 | 54% | −24.26 | 35.45 |

| Target Variable | Species | R² | RMSE | SMAPE | BIAS | BSD |
|---|---|---|---|---|---|---|
| LCC | AC | 0.26 | 5.48 | 0.16 | −2.45 | 4.91 |
| | FR | 0.41 | 5.45 | 0.14 | −2.07 | 5.06 |
| | PL | 0.11 | 5.66 | 0.16 | −0.36 | 5.66 |
| | QR | 0.45 | 5.99 | 0.17 | −2.81 | 5.29 |
| LAI | AC | 0.17 | 0.95 | 0.36 | −0.42 | 0.85 |
| | FR | 0.36 | 1.26 | 0.47 | −0.61 | 1.10 |
| | PL | 0.03 | 1.34 | 0.65 | −0.98 | 0.92 |
| | QR | 0.43 | 1.15 | 0.37 | −0.18 | 1.14 |
| CCC | AC | 0.28 | 31.07 | 0.42 | −17.75 | 25.53 |
| | FR | 0.5 | 41.05 | 0.48 | −21.54 | 35.03 |
| | PL | 0.1 | 37.74 | 0.64 | −26.42 | 26.99 |
| | QR | 0.55 | 36.26 | 0.38 | −6.96 | 35.66 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Le Saint, T.; Nabucet, J.; Hubert-Moy, L.; Adeline, K. Estimation of Urban Tree Chlorophyll Content and Leaf Area Index Using Sentinel-2 Images and 3D Radiative Transfer Model Inversion. Remote Sens. 2024, 16, 3867. https://doi.org/10.3390/rs16203867