1. Introduction

Roads are a critical aspect of man-made infrastructure, playing a pivotal role in urban planning, change detection, traffic management, disaster assessment, and various other scenarios. Furthermore, in the age of urban expansion and rapid advancements in remote sensing technology, high-resolution remote sensing imagery is emerging as a valuable resource for road information extraction, thereby revolutionizing cartography and geospatial studies [1]. Current high-precision road maps are traditionally generated through labor-intensive and costly methods such as vehicle-mounted mobile surveys and airborne Light Detection and Ranging (LiDAR). With the advent of high-resolution satellite remote sensing technology, the utilization of remotely sensed data for extracting road information has gained prominence.

The proliferation of multisensor and multispectral Earth observation satellites has introduced a diverse array of image data. Each sensor type brings its unique advantages, influencing the choice of data for specific applications. Currently, road extraction from remote sensing data employs various sources, including smartphone Global Positioning System (GPS) data, synthetic aperture radar (SAR) images, LiDAR data, and optical images, among others [2].

SAR stands out for its all-weather, day-and-night imaging capability and rich information content (amplitude, phase, polarization), making it a viable choice for road extraction. SAR-based road extraction algorithms can be categorized into semi-automatic and fully automatic methods. Semi-automatic approaches involve human-computer interaction and include the snake model, particle filter, template matching, mathematical morphology, and the extended Kalman filter [3,4,5,6,7]. In contrast, fully automatic methods require no human intervention and encompass techniques such as dynamic programming, Markov Random Field (MRF) models, Genetic Algorithms (GAs), and fuzzy connectedness [8,9,10,11]. Furthermore, the advent of deep learning has introduced a new paradigm, exemplified by Henry’s use of the Fully Convolutional Neural Network (FCNN) model for road segmentation in TerraSAR images in 2018, Zhang’s automatic discrimination method based on a deep neural network (DNN) for roads from dual-polarimetric (VV and VH) Sentinel-1 SAR imagery in 2019 [12], and the use in reference [13] of Copernicus Sentinel-1 SAR satellite data in a deep learning model based on the U-Net architecture for image segmentation in 2022. In 2023, reference [14] proposed an improved DeepLabv3+ network for the semantic segmentation of SAR images.

However, the distinct imaging characteristics of SAR data pose unique challenges for road extraction. SAR images are susceptible to coherent speckle noise and other phenomena that hinder image interpretation, ultimately affecting road feature extraction. Moreover, SAR-based road extraction is confused by features that look similar to roads in the images, such as ridges, which reduces the robustness of road recognition. Deep learning-based methods, although powerful, are data-dependent and require substantial training samples, with road recognition effectiveness closely tied to the quality of the SAR images.

On the other hand, optical images offer rich feature information, including spectral, geometric, and textural features, enhancing feature decoding capabilities. Various road extraction methods have been developed for optical images, such as template-matching-based object detection, knowledge-based methods, object-based image analysis (OBIA), and machine learning-based object detection [15]. Template-matching-based methods, one of the earliest detection methods, rely on template generation and similarity metrics, encompassing rigid and deformable template matching [16,17]. Knowledge-based methods transform target detection into a hypothesis testing problem, incorporating geometric and contextual knowledge [18,19]. OBIA-based methods efficiently merge shape, texture, geometry, and contextual semantic features, including image segmentation and target classification [20]. Machine learning-based algorithms perform object detection through classifier learning, primarily influenced by feature extraction, fusion, and classifier training [21,22,23].

Nonetheless, optical imagery encounters limitations such as cloud cover, fog, and diurnal variations, rendering it unsuitable for real-time ground observation in certain situations, particularly in emergency relief operations. Additionally, optical imagery may result in fragmented road segments due to occlusions, shadows, and other factors, impeding the extraction of geometric and shape features.

To maximize the benefits of different sensors and optimize road extraction outcomes, the fusion of multi-source remote sensing images offers a promising solution. Depending on the level of data abstraction and the stage of remote sensing data fusion, fusion can be categorized into three levels: pixel-level, feature-level, and decision-level fusion [24]. Pixel-level fusion integrates the original image data directly and encompasses methods like the Intensity Hue Saturation (IHS) transform, the Principal Component Analysis (PCA) transform, and the Brovey transform [25,26,27]. Feature-level fusion combines extracted features, involving clustering and Kalman filtering methods [28,29]. Decision-level fusion represents the highest level of data fusion, offering decision support for specific objectives, including Support Vector Machine (SVM), the PCA transform, and the Brovey transform, among others [30,31].

However, methods for road extraction based on the fusion of multi-source remote sensing images remain underexplored, representing a pivotal research area. In 2021, reference [32] developed a United U-Net (UU-Net) for fusing optical and synthetic aperture radar data for road extraction, subsequently training and evaluating it on a large-scale multi-source road extraction dataset. In 2022, reference [33] introduced SDG-DenseNet, a novel deep learning network, and a decision-level optical and SAR data fusion method for road extraction. In various other remote sensing domains, leveraging the advantages of multi-source remote sensing data for land cover recognition has become prevalent. In 2023, reference [34] proposed a method that integrates multispectral and radar data, employing a deep neural network pipeline to analyze available remote sensing observations across different dates. In another study conducted in 2023 [35], various feature parameters were extracted from GF-2 panchromatic and multispectral sensor (PMS) and GF-3 SAR data. Additionally, a canopy height model (CHM) derived from Unmanned Aerial Vehicle (UAV) LiDAR data was utilized as observational data for mangrove height estimation. This approach accurately estimated the heights of mangrove species.

In summary, many existing road extraction methods that rely on a single sensor share a significant limitation: they struggle to cope with coherent speckle noise, sudden disturbances such as building occlusion, and the influence of numerous interfering features with characteristics similar to roads. This limitation hampers their applicability to the extraction of roads in large-scale complex terrain.

To address the challenge of road extraction in ultra-large-scale and complex scenarios, this paper integrates the penetrating abilities of high-resolution SAR remote sensing imagery and the rich spectral information of multispectral images. We propose a decision-level fusion approach for SAR and multispectral images, making the following key contributions:

- (1) DEM-aided road extraction in complex terrain scenes: To enable road extraction from high-resolution SAR images in large-scale composite scenes, we focus on a common feature in SAR images that closely resembles roads: mountain ridges. In addition to features such as magnitude, direction, contrast, and the multi-angle template matching of roads in SAR images, we incorporate DEM data. We exploit the fact that roads exhibit significantly gentler slopes and lower variance compared to ridges, thus enhancing the robustness of road extraction in composite scenes.

- (2) A road optimization method based on the rotational adaptive median filter and graph theory: To address challenges such as optical image occlusion and interference segments introduced during the fusion of multi-source remote sensing images, we introduce a rotatable, road-shaped median filter. Using convolution kernels with various orientations, this filter fills in broken and small-area road segments during network reconstruction: we identify matching regions in the candidate road image and apply median judgments to bridge broken areas. To remove interfering features, we use the topological and connectivity characteristics of the road network, modeled with graph theory, to optimize the network and exclude isolated and non-connected candidate roads.

By integrating the capabilities of various sensors and fusing their data, our approach aims to make road extraction in complex scenarios more accurate and more robust.

2. Methods

In order to extend the remote sensing image road extraction algorithm to large-scale complex terrain scenes, this paper amalgamates the advantages of various sensors and employs a decision-level fusion of multi-source remote sensing images. This approach facilitates road extraction in large-scale complex scenes while prioritizing robustness. The methodology comprises the following four primary steps. (1) High-resolution SAR road extraction: this step focuses on multi-scale and multi-feature fusion for road extraction from SAR images, aided by DEM. (2) Multispectral image road extraction: here, spectral features and the Normalized Difference Water Index (NDWI) are harnessed to identify candidate road regions, subsequently refined using a road template matching algorithm. (3) Multi-source remote sensing image fusion: this stage emphasizes decision-level fusion, unifying information from diverse sensors to enhance road extraction. (4) Road optimization based on rotational adaptive median filter and graph theory: an optimization strategy is introduced, incorporating a rotational adaptive median filter and graph theory to enhance the road network’s topological and connectivity characteristics.

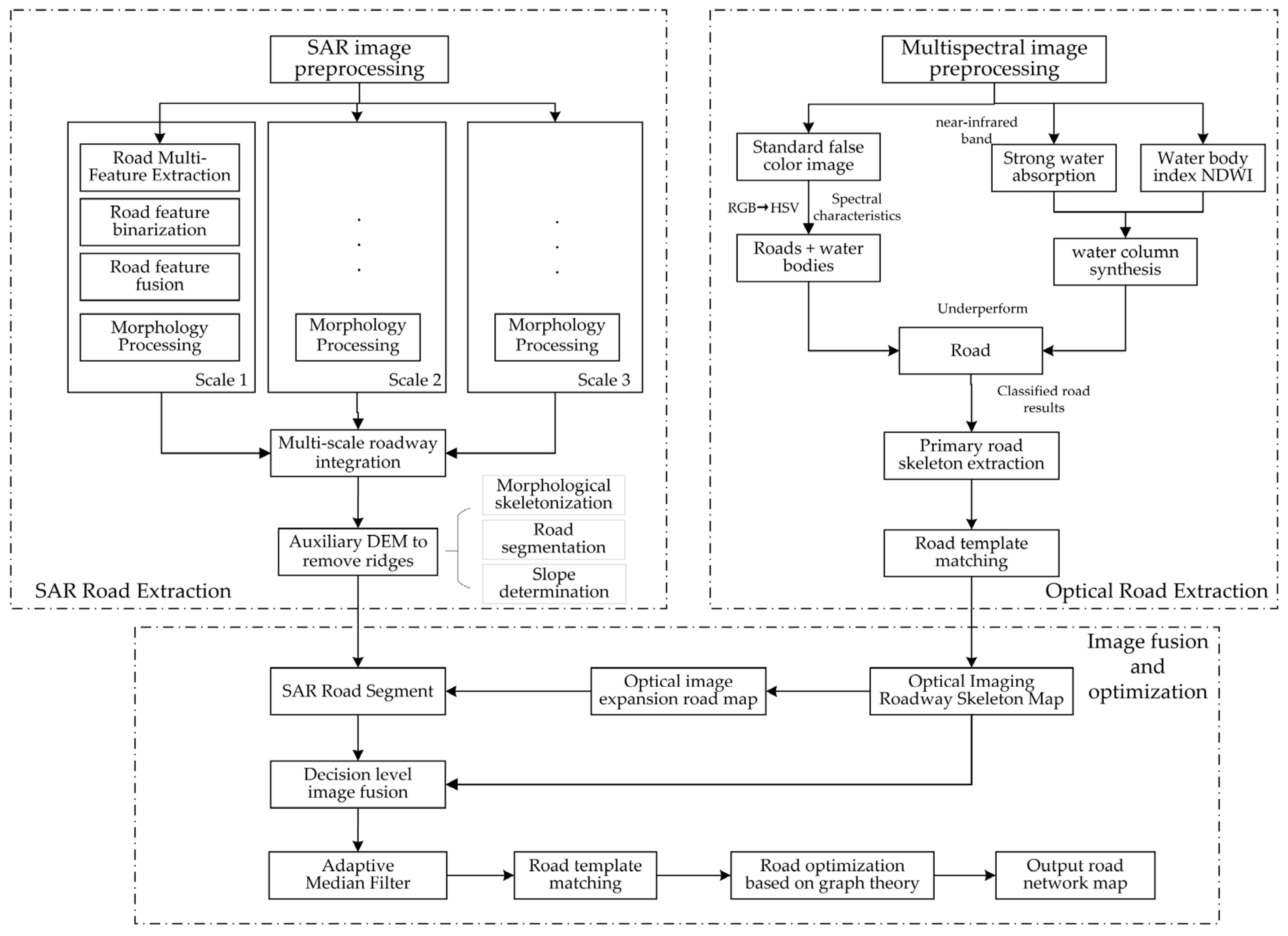

These four steps give an overview of the method’s components; the subsequent sections describe each step in detail. The overall structure of the proposed method is shown in Figure 1.

2.1. Large-Scale Complex Scene Road Extraction from SAR Images with the Assistance of DEM

Historically, road extraction from high-resolution SAR remote sensing images relied on geometric features, texture attributes, and the detection of bi-parallel lines, complemented by radiometric properties. However, this approach had inherent limitations. As SAR image road extraction algorithms extend to large-scale complex terrains, a significant challenge emerges—the differentiation between roads and ridges. This challenge arises due to the similarity in feature information between ridges and roads, encompassing radiation, geometry, double-parallel-line characteristics, and closely proximate width. Consequently, traditional road extraction methods face difficulty in effectively discerning between the two.

Figure 2 illustrates typical interference features encountered in large-scale scenes, where the orange-boxed section represents interference from ridges.

This paper introduces an SAR image road extraction method tailored for large-scale complex terrain using assisted DEM data. The approach involves a multi-step process, including image preprocessing, feature extraction through multi-angle template matching, feature binarization and fusion, and morphological optimization using Advanced Land Observing Satellite (ALOS) DEM assistance.

Given the intricate nature of terrain in large-scale and complex settings, conventional filtering methods may exhibit limitations, particularly in handling noise or blurring. To address this challenge, this paper adopts a multi-view processing method to enhance feature discernment. The feature extraction method based on multi-angle template matching exploits the multi-angle directional characteristics of roads by introducing a rectangular filter template $T$ with a variable orientation. The SAR image $I$ is convolved in turn with each rotated filter template, and the road features are calculated from the convolution results. The angle $\theta_i$ of the convolution template and the convolution process are defined, respectively, as follows [36]:

$$\theta_i = i \cdot \frac{180^\circ}{N}, \qquad P_{\theta_i} = I \otimes T_{\theta_i},$$

where $\theta_i$ is the angle between the direction of the filter template and the horizontal axis; $N$ is the number of angles to be traversed, i.e., the number of times template matching is performed in different directions; and $i$ is the index of the current direction, which determines the angle of the filter template. $T_{\theta_i}$ denotes the template obtained by rotating the underlying rectangular template $T$ by the angle $\theta_i$, which is then convolved with the SAR image to obtain the pixel information $P_{\theta_i}$ at each angle.

In this paper, the number of angles $N$ is set to 18, i.e., the filter template is rotated through 18 different angles at an interval of 10°. The road direction feature is then defined from the per-angle convolution results, following [37]. From it, the minimum radiance feature of each pixel and the contrast feature of the road are obtained.

The direction and contrast features of the roads are thus obtained by traversing all $N$ selected directions. Leveraging the topological and continuous properties of roads, regions that do not meet the area threshold for a given angle are eliminated. The direction and contrast features are then binarized and fused, yielding the traditional binarized road result image [37].
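The multi-angle matching step can be illustrated with a minimal sketch. It is not the authors' implementation: it replaces the rectangular template with a 1-pixel-wide line, uses the mean along that line as the response, and takes the lowest response over all angles as the minimum radiance feature. The function names and the `half_len` parameter are assumptions introduced here for illustration.

```python
import math


def template_angles(n_angles=18):
    """Template orientations in degrees, evenly spaced over 180 degrees."""
    step = 180.0 / n_angles
    return [i * step for i in range(n_angles)]


def line_offsets(theta_deg, half_len):
    """(dy, dx) offsets of a 1-pixel-wide line template at angle theta.

    Assumption: a thin line stands in for the paper's rectangular template.
    Row indices grow downward, hence the minus sign on dy.
    """
    t = math.radians(theta_deg)
    offsets = []
    for r in range(-half_len, half_len + 1):
        off = (round(-r * math.sin(t)), round(r * math.cos(t)))
        if off not in offsets:  # rounding can map two samples to one pixel
            offsets.append(off)
    return offsets


def direction_and_min_response(image, n_angles=18, half_len=3):
    """Per pixel: the angle with the lowest mean response, and that mean."""
    h, w = len(image), len(image[0])
    best = [[math.inf] * w for _ in range(h)]
    direction = [[0.0] * w for _ in range(h)]
    for theta in template_angles(n_angles):
        offsets = line_offsets(theta, half_len)
        for y in range(h):
            for x in range(w):
                # Samples falling outside the image are simply skipped.
                vals = [image[y + dy][x + dx] for dy, dx in offsets
                        if 0 <= y + dy < h and 0 <= x + dx < w]
                mean = sum(vals) / len(vals)
                if mean < best[y][x]:
                    best[y][x] = mean
                    direction[y][x] = theta
    return direction, best
```

With `n_angles=18` the angles step by 10°, matching the setting reported in the text. Choosing the minimum response reflects the fact that roads usually appear dark in SAR imagery; the contrast feature is left out because its definition is not recoverable from this section.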

Building upon the functional characteristics of roads as artificial structures, which exhibit significantly gentler slopes and lower variance compared to ridges, this study utilizes ALOS World 3D—30 m data and the original SAR imagery for image registration and reprojection. This process generates a two-dimensional elevation matrix that is pixel-wise paired with the SAR imagery.

Subsequently, morphological methods are employed to identify road nodes in the binarized road result image $B$ and to segment the roads into $n$ road segments:

$$E_k = B_k \odot H, \qquad k = 1, 2, \ldots, n,$$

where $n$ represents the number of road segments, $B_k$ denotes the $k$-th segmented road in the traditional binarized road result image, $H$ is the registered elevation matrix, and $E_k$ is obtained by the element-wise (dot) product, so that its non-zero elements are the elevations of the pixels in the $k$-th road segment.

Finally, the variance of all non-zero elements in $E_k$ is calculated, and linear regression is applied, giving the variance and slope of each candidate road segment. A slope threshold is set according to the ‘Highway Engineering Technical Standards’ [38], and slope and variance threshold judgments are applied to each candidate road segment. This process effectively distinguishes roads from ridges, enhancing the robustness of road extraction in large-scale complex terrain.
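A minimal sketch of the slope and variance test follows. It assumes the elevations of a segment have already been sampled in order along the segment at a fixed pixel spacing. The threshold arguments `max_slope` and `max_variance` are hypothetical placeholders, since the concrete values from the standard [38] are not given here.

```python
import statistics


def segment_slope_and_variance(elevations, pixel_size_m):
    """Least-squares slope (rise over run) and population variance.

    Assumption: `elevations` lists the DEM heights of one candidate segment,
    ordered along the segment, one value per pixel of size `pixel_size_m`.
    """
    n = len(elevations)
    if n < 2:
        raise ValueError("need at least two elevation samples")
    xs = [i * pixel_size_m for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(elevations) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, elevations))
    return sxy / sxx, statistics.pvariance(elevations)


def is_road(elevations, pixel_size_m, max_slope, max_variance):
    """Keep a segment only if both its slope and its variance are small."""
    slope, variance = segment_slope_and_variance(elevations, pixel_size_m)
    return abs(slope) <= max_slope and variance <= max_variance
```

The variance check matters for symmetric ridges: a profile such as `[100, 130, 160, 130, 100]` has a fitted slope of zero but a large elevation variance, so the slope test alone would not reject it.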

This comprehensive methodology ensures accurate road extraction in complex terrains and diverse environments, offering robust results for large-scale terrain.

2.2. Road Extraction from Multispectral Remote Sensing Images

Given the challenges in efficiently and accurately extracting road information from remote sensing images, especially in complex terrains, this study harnesses the rich spectral features of GF-2 multispectral imagery (4.0 m resolution). We strategically combine different spectral bands to highlight specific aspects of road information.

2.2.1. Spectral Features

Spectral features are derived from luminance observations of the same feature point in different image bands, forming a multidimensional random vector $X$:

$$X = [x_1, x_2, \ldots, x_n]^{T},$$

where $n$ represents the total number of bands in the image, and $x_i$ denotes the luminance value of the feature point in the $i$-th band image.

To enhance feature identification, single-band remote sensing images can be colorized by assigning different colors based on luminance layers. For example, water bodies, which exhibit strong absorption in the infrared band and appear dark, are assigned a low brightness value, highlighting them in a distinct color. Similarly, sandy areas with high reflectance are identified by higher brightness values. Understanding these spectral characteristics provides a better means to obtain feature category images.

Color synthesis is a crucial aspect of remote sensing image display, based on additive color synthesis principles. When selecting specific bands and assigning them to red, green, and blue primary colors, color images are created. The choice of the best synthesis scheme depends on the application’s specific objectives, aiming to maximize information retained after synthesis while minimizing information correlation between bands.

2.2.2. Water Index NDWI

The Water Index (WI) is a valuable tool in remote sensing for detecting and categorizing water bodies in digital images. A crucial component of this is the NDWI [39], which utilizes reflectance in the near-infrared (NIR) and green light bands. It is calculated using the following formula:

$$\mathrm{NDWI} = \frac{\mathrm{Green} - \mathrm{NIR}}{\mathrm{Green} + \mathrm{NIR}},$$

where $\mathrm{Green}$ refers to the green light band, and $\mathrm{NIR}$ refers to the near-infrared band. The threshold value for $\mathrm{NDWI}$ typically falls within the range of 0 to 1. NDWI capitalizes on the strong absorption characteristics of water bodies in the near-infrared band, which exhibit minimal reflection, and the robust reflectivity of vegetation. This approach effectively extracts water information while mitigating interference from vegetation. However, in urban environments with image shadows caused by buildings, the WI may encounter challenges when distinguishing water from soil and building features during water information extraction.
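As a small illustration, the NDWI can be computed per pixel and thresholded into a water mask. The default threshold of 0.0 and the convention of returning 0.0 when both bands are zero are assumptions made for this sketch, not values taken from the paper.

```python
def ndwi(green, nir):
    """Normalized Difference Water Index for a single pixel."""
    denom = green + nir
    if denom == 0:
        return 0.0  # assumption: treat empty pixels as neutral
    return (green - nir) / denom


def water_mask(green_band, nir_band, threshold=0.0):
    """True where NDWI exceeds the threshold (likely water).

    Bands are given as equally sized lists of rows.
    """
    return [[ndwi(g, n) > threshold for g, n in zip(g_row, n_row)]
            for g_row, n_row in zip(green_band, nir_band)]
```

Pixels flagged in the mask can then be removed from the candidate road regions, which is how the index is used to suppress water bodies that are spectrally similar to roads.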

In our study, we adopt the standard false-color synthesis scheme, which maps the NIR, red, and green bands to red, green, and blue, respectively. This creates a standard false-color image that contains road information. We then extract the road-specific spectral information, gradually reducing its saturation, and apply segmentation to identify candidate road regions. To ensure the precision of road spectral information extraction, we use the NDWI to mitigate the influence of features that are spectrally similar to roads, such as water bodies. Finally, the pixel width range of the candidate roads is determined from the spatial resolution of the multispectral image and the width range of the trunk road. A slender, road-shaped template is selected, and an adaptive rotating sliding window is employed to match it against the multispectral image. A threshold is then applied to the matching result to obtain the road network, from which the main road information is extracted.

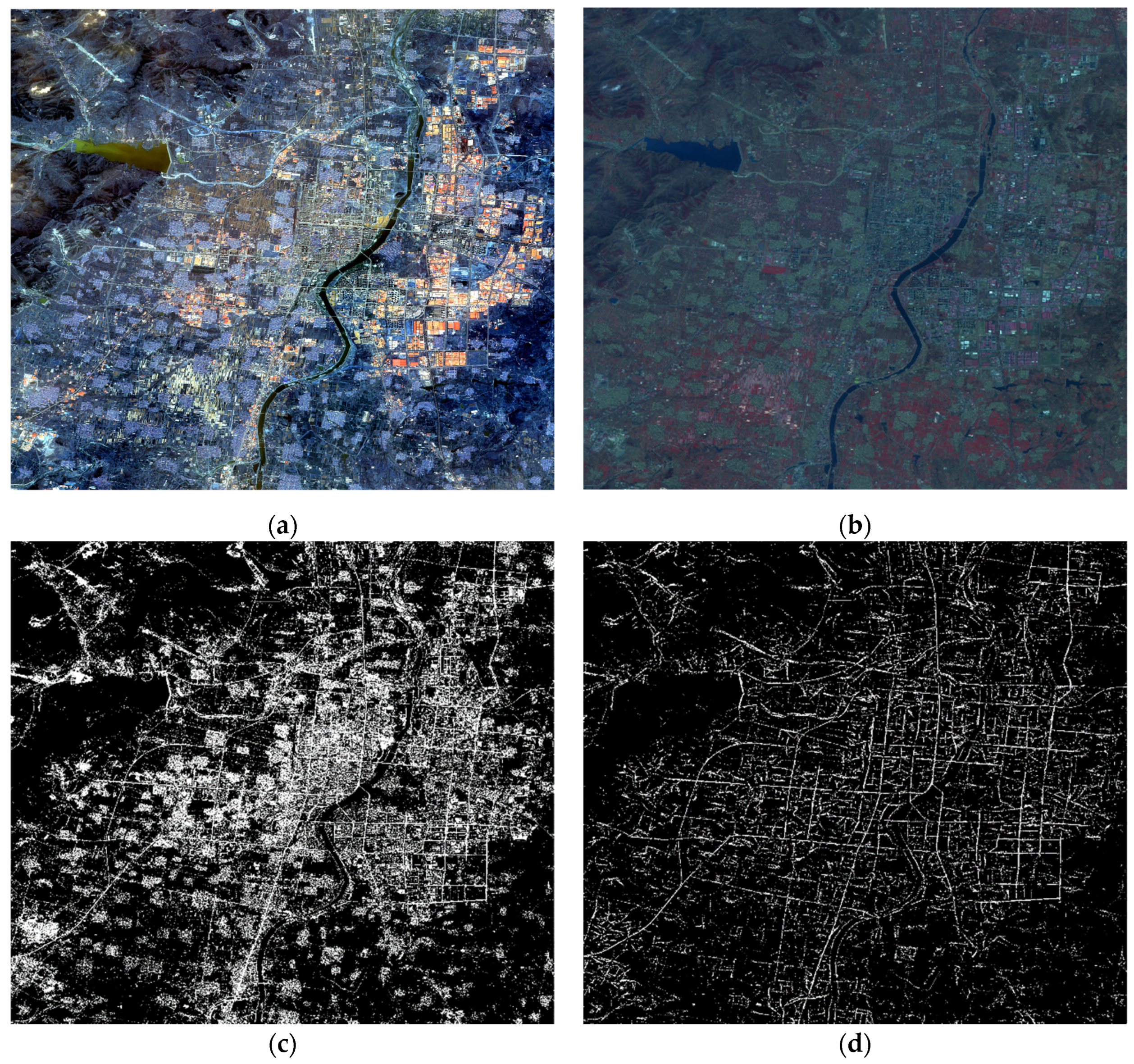

Figure 3 shows the road extraction results for multispectral images: Figure 3a is the input multispectral image, Figure 3b is the standard false-color image of Figure 3a, Figure 3c is the set of candidate roads obtained from the spectral features and the NDWI, and Figure 3d shows the backbone candidate roads obtained using the template matching method.

This comprehensive methodology enhances road extraction by incorporating spectral information and NDWI, providing a more accurate representation of road features in complex terrain and diverse environmental conditions.

2.3. Multi-Source Remote Sensing Image Fusion Based on Decision Layer

SAR remote sensing images and optical images each possess unique advantages and inherent limitations in road imaging. While individual sensor utilization often yields favorable outcomes, such an approach remains constrained by specific sensor limitations. SAR images offer simple feature information but are vulnerable to noise, whereas optical images are susceptible to various factors like clouds, fog, buildings, and vegetation cover. This variability often causes discontinuities in road areas within the original images, impacting road extraction. To address these limitations, this paper employs the fusion of multi-source remote sensing images for road extraction.

In the preprocessing of remote sensing image fusion, three primary methods are commonly employed: pixel-level fusion, feature-level fusion, and decision-level fusion [40].

Pixel-level fusion integrates data after precise alignment and preliminary processing. While it retains much of the original image data, it might be less efficient for large datasets and could be susceptible to various forms of noise and contamination. Feature-level fusion merges multiple road features extracted from different images, retaining feature information while avoiding unnecessary noise. However, it demands significant computational resources. The decision-level fusion method combines road recognition results from multi-source images based on independent classifications from each sensor. This approach requires fewer multi-source satellite data points, delivers enhanced anti-interference capabilities, and accommodates variations in shooting time, angle, and location.

The registration process between SAR images and multispectral images involves several key steps. Firstly, SAR images undergo orthorectification to eliminate parallax caused by ground elevation differences. Subsequently, the ‘Georeferencing’ tool in ArcGIS is employed to register the SAR images, multispectral images, and DEM, achieving cross-source alignment. Following this, image reprojection is applied to ensure consistent image resolution across the remote sensing sources, with a post-reprojection resolution of 3.0 m. Lastly, the corresponding overlapping regions are cropped to create a candidate road dataset.

In this paper, due to disparities in imaging angles and shooting times between SAR and multispectral images, the pixel-level and feature-level fusion methods might not be feasible. Therefore, the decision-level fusion method is chosen to fuse SAR and multispectral remote sensing images. The overlap of the $k$-th road segment is computed from the two extraction results, where $O_k$ represents the degree of overlap between the SAR image and the optical image, $S_k$ represents the $k$-th segment of the SAR image road extraction result, $S$ signifies the SAR image road extraction result, $M$ indicates the road extraction result of multispectral images, and $\odot$ denotes the dot-multiplication (element-wise) operation. The overlap threshold $T$, guided by empirical evidence and validation, is set to 0.15.

Consequently, the SAR road segments whose degree of overlap meets the threshold are retained. Building upon this, the final result of multi-source remote sensing image fusion is obtained by overlaying the retained SAR segments on the optical image road extraction result $M$.
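The decision-level fusion rule can be sketched as follows. The overlap equation itself is not recoverable from the text above, so this sketch assumes that the overlap of a SAR segment is the fraction of its pixels that are also road pixels in the optical result, and that segments are kept when this fraction is at least the threshold. Representing segments as sets of `(row, col)` pixels is also an assumption made for brevity.

```python
def overlap_ratio(sar_segment, optical_roads):
    """Fraction of a SAR segment's pixels also marked as road optically.

    Assumption: normalizing by the segment size; the paper's exact
    normalization is not recoverable from the text.
    """
    if not sar_segment:
        return 0.0
    return len(sar_segment & optical_roads) / len(sar_segment)


def fuse(sar_segments, optical_roads, threshold=0.15):
    """Overlay SAR segments that pass the overlap test on the optical result."""
    fused = set(optical_roads)
    for segment in sar_segments:
        if overlap_ratio(segment, optical_roads) >= threshold:
            fused |= segment
    return fused
```

A SAR segment that shares only a quarter of its pixels with the optical result is still kept under the 0.15 threshold, so it can extend an optical road across a stretch that was occluded in the optical image, while a SAR segment with no optical support is discarded.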

As a result, this study accomplishes the fusion of road extraction from multi-source remote sensing images by leveraging the optical image road extraction method, considering the results of road extraction from SAR images comprehensively. This approach effectively overcomes scenarios involving missing roads due to lighting and other occlusions, thereby enhancing the robustness of the road extraction algorithm.

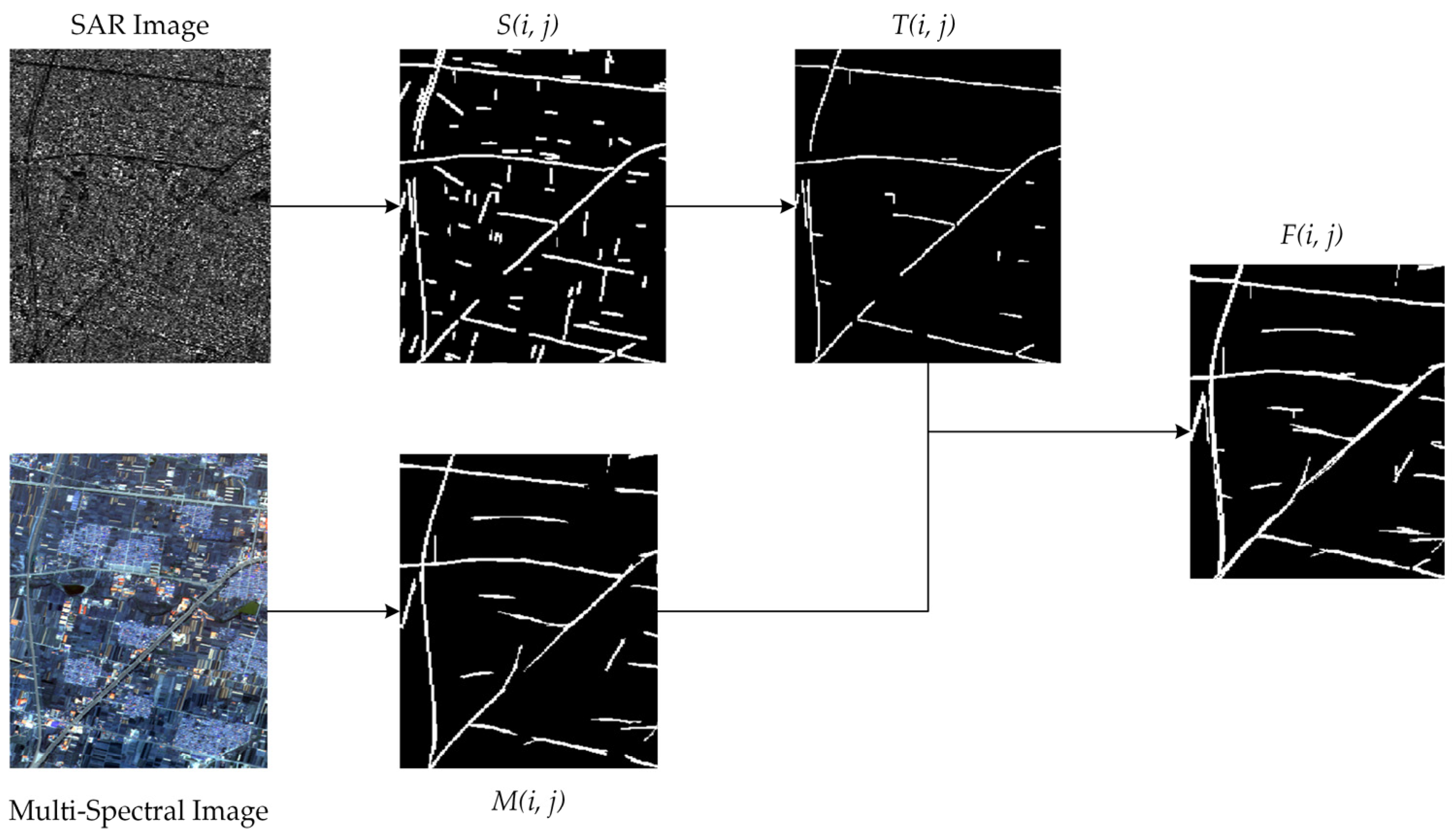

Figure 4 outlines the comprehensive implementation process.

2.4. Rotational Adaptive Median Filter and Graph Theory

Road extraction based on multi-source remote sensing fusion effectively mitigates issues related to buildings and vegetation shadows in optical images, as well as feature insufficiency and coherent speckle noise in SAR images. However, this decision-level fusion method occasionally introduces misjudged roads into the results, trading some precision for recall. Addressing these challenges and enhancing the road extraction process are crucial.

Two persistent problems occur in the road extraction results from multispectral and SAR fusion. Firstly, despite the overlap calculation between road extraction results from SAR images and optical images, some road segments fail to meet the requirements, leading to discontinuities in roads and the introduction of small branch roads. Secondly, decision-level image fusion often struggles with road-like interfering segments that meet area and length conditions and are challenging to manage using conventional mathematical morphology methods.

To tackle these issues, two improvement methods are proposed.

The first method introduces an adaptive rotated median filter optimizer. The median filter, commonly employed as a denoising nonlinear filter, stands out for its immunity to outliers and its ability to address salt-and-pepper noise in images. Notably, the median filter offers various implementations, including the adaptive median filter (AMF), Progressive Switching Median Filter (PSMF), vector directional filter, and others, primarily used as an image preprocessing method [41]. This technique operates by substituting the target noise pixel with the median of its local neighborhood, determined by the filter window’s size, which defines the area surrounding the target pixel and the number of pixels within this region. The process can be expressed as follows:

$$\mathrm{Med} = \begin{cases} x_{(n+1)/2}, & n \text{ odd}, \\ \tfrac{1}{2}\left(x_{n/2} + x_{n/2+1}\right), & n \text{ even}, \end{cases}$$

where $x_1, x_2, \ldots, x_n$ is the ascending or descending sequence of neighboring pixels and $n$ is the number of pixels in the neighborhood. According to the above equation, the result of median filtering depends on the median value of the corresponding neighborhood pixels and is not affected by outliers. Taking advantage of this property, we construct an adaptive median filter optimizer for road shapes with the aim of filling and connecting the fine broken areas after the fusion of multi-source remote sensing images, and at the same time deleting some fine non-trunk roads. The specific computation is as follows:

Here, $\theta$ represents the angle between the direction of the median filter template and the horizontal axis, and $i$ is the index of the current direction. The rotated template is obtained by rotating the rectangular template, Med, by the angle $\theta$. Applying the rotated template to the fused image yields a median filtering result for each angle. Subsequently, the results of median filter optimization across different directions undergo a logical ‘or’ operation, thereby achieving the matching of the median filter across multiple angles.
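The rotated median filter with a logical ‘or’ across angles can be sketched on a binary road map. This is an illustration under simplifying assumptions: the road-shaped template is a 1-pixel-wide line, pixels outside the image count as background, and the number of angles and the `half_len` window parameter are hypothetical choices.

```python
import math
import statistics


def line_offsets(theta_deg, half_len):
    """(dy, dx) offsets of a thin line-shaped window at angle theta."""
    t = math.radians(theta_deg)
    offsets = []
    for r in range(-half_len, half_len + 1):
        off = (round(-r * math.sin(t)), round(r * math.cos(t)))
        if off not in offsets:
            offsets.append(off)
    return offsets


def rotated_median_or(binary, n_angles=4, half_len=2):
    """Median-filter a 0/1 image with a rotated line window, OR over angles."""
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for i in range(n_angles):
        offsets = line_offsets(i * 180.0 / n_angles, half_len)
        for y in range(h):
            for x in range(w):
                # Zero padding: samples outside the image count as background.
                vals = [binary[y + dy][x + dx]
                        if 0 <= y + dy < h and 0 <= x + dx < w else 0
                        for dy, dx in offsets]
                # A pixel is set if the median along ANY orientation is 1.
                if statistics.median_low(vals) == 1:
                    out[y][x] = 1
    return out
```

On a horizontal road with a one-pixel gap, the window aligned with the road sees mostly road pixels, so the median fills the gap. An isolated pixel is outvoted by its background neighbors at every orientation and disappears, which corresponds to the removal of fine non-trunk fragments described above.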

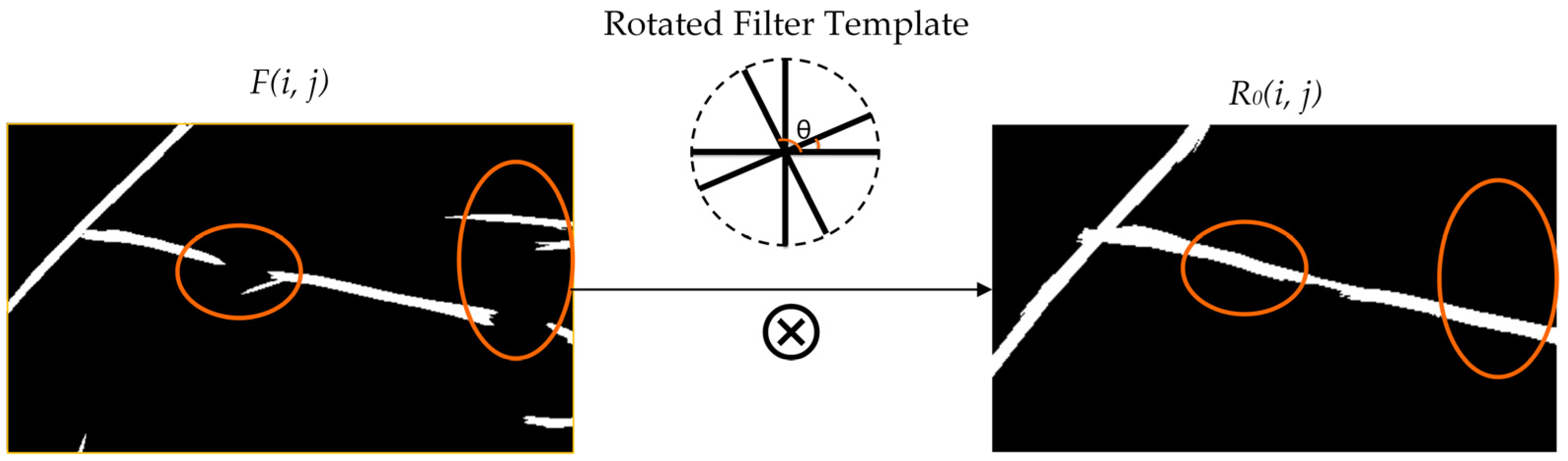

Figure 5 illustrates the specific effects of the adaptive rotational median filter optimization, showing the convolution operations, the rotation angle of the convolution kernel, and, in the orange ellipses, the details that were optimized.

Figure 5 shows how the nonlinear median filter handles abnormal interference noise. Building on this property, this paper introduces a rectangular filter template and adjusts its orientation so that the median filter can repair broken road segments and remove non-trunk branch roads during the extraction process.

Both methods discussed above, the decision-level fusion of multi-source remote sensing images and the adaptive rotational median filter optimization, aim to recover the missing road segments within complex scene features. The focus now turns to identifying non-road areas within the road extraction results, with the intent of reducing mistakenly extracted roads in road recognition.

Following the aforementioned method, we obtained a binary road extraction map. After the optimization via adaptive rotated median filtering, the connected broken road segments were restored, and non-trunk branch regions were eliminated. However, a significant challenge remains in dealing with candidate segments misclassified as trunk roads, especially those that are discrete and falsely identified as main roads. Traditional morphological methods for analyzing segment aspect ratios often prove insufficient in resolving these discrete misclassifications, which resemble roads but are misidentified.

Taking into account the topological and connectivity characteristics inherent in road networks, this study optimizes the road network using principles from graph theory. The core approach treats the road extraction result as a graph structure and decides which nodes to keep based on the graph’s weight relationships. The road graph is first preprocessed using the adaptive median filtering optimization result, and the connectivity criterion is applied to split it into $m$ segments. These segments are treated as the nodes of the graph, each representing a road segment, with the minimum distance between two segments acting as the graph’s weight. The result is an undirected weighted graph $M$, where $M_{ij}$ indicates the minimum distance between the $i$-th and $j$-th road segments. Subsequently, graph theory is applied to exclude disruptive terms: discrete or distant segments are considered interference and are eliminated. The specific calculations are as follows:

$$d_i = \min_{j \neq i} M_{ij}, \qquad \Omega = \{\, i \mid d_i > T_d \,\},$$

where $d_i$ represents the minimum non-diagonal value in row $i$ of the weighted graph $M$, signifying the shortest distance between the $i$-th segmented road and the others. The parameter $T_d$ designates the minimum distance threshold, while the set $\Omega$ encompasses road segments whose minimum distances fail to meet the prescribed condition, often associated with discrepant or misdetected roads. Road segments in $\Omega$, which fail to satisfy the connectivity and topological standards, are excluded, and the remaining segments form the final road extraction outcome.
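A minimal sketch of the graph-based pruning is given below. Segments are represented as lists of `(row, col)` points and the weight between two segments is their minimum Euclidean point-to-point distance. The parameter name `max_gap`, the inclusive comparison, and the choice to keep a lone segment are assumptions made for illustration.

```python
import math


def min_distance(seg_a, seg_b):
    """Smallest Euclidean distance between any two points of two segments."""
    return min(math.dist(p, q) for p in seg_a for q in seg_b)


def prune_isolated(segments, max_gap):
    """Return the indices of segments that have a neighbor within max_gap."""
    n = len(segments)
    # Symmetric weight matrix of the undirected graph (diagonal unused).
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = min_distance(segments[i], segments[j])
            weights[i][j] = weights[j][i] = d
    keep = []
    for i in range(n):
        others = [weights[i][j] for j in range(n) if j != i]
        # Assumption: a lone segment has no neighbor to compare with; keep it.
        nearest = min(others) if others else 0.0
        if nearest <= max_gap:
            keep.append(i)
    return keep
```

The pairwise search is quadratic in the number of points, which is acceptable for a sketch; a full-scene implementation would more likely use a distance transform or a spatial index.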

2.5. Evaluation Metrics

In this paper, the evaluation of road extraction effectiveness relies on the OpenStreetMap (OSM) open-source dataset. The assessment utilizes common road surface metrics, including recall, precision, and F1-score [42,43]. These metrics are vital for assessing the quality of the road extraction model.

Recall: Recall (completeness) measures the proportion of real road pixels that are correctly extracted, reflecting the continuity and integrity of the extracted roads. A higher value indicates improved model performance.

$$\mathrm{Recall} = \frac{TP}{TP + FN},$$

where $TP$ denotes the number of correctly extracted road pixels, and $FN$ denotes the number of road pixels incorrectly identified as non-roads.

Precision: Precision (correctness) represents the proportion of predicted road pixels that are actually real road pixels. It quantifies the model’s ability to avoid false road detections.

$$\mathrm{Precision} = \frac{TP}{TP + FP},$$

where $FP$ denotes the number of non-road pixels incorrectly identified as roads.

F1-score: The F1-score is the harmonic mean of precision and recall, combining both completeness and correctness. It offers a balanced assessment of the model’s performance.

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$
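For reference, the three metrics can be computed from pixel counts as in the short sketch below. Returning 0.0 when a denominator is zero is a convention chosen here, not something specified in the paper.

```python
def road_metrics(tp, fp, fn):
    """Recall, precision, and F1-score from pixel counts.

    tp: road pixels correctly extracted
    fp: non-road pixels extracted as road
    fn: road pixels missed
    """
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return recall, precision, f1
```

Because the F1-score is a harmonic mean, it stays low whenever either recall or precision is low, which is why it is reported alongside the two individual metrics.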

The combination of these metrics provides a comprehensive evaluation of road extraction performance. However, it is important to note that factors such as the time difference between OSM dataset collection and remote sensing image acquisition and the potential imperfections in the OSM dataset for certain urban areas can influence the evaluation. To account for these variations, this paper considers all three metrics and does not rely solely on a single evaluation indicator. Additionally, in cases where OSM road segment coordinates slightly deviate from those in remote sensing images, this paper evaluates the relevant indices by applying appropriate morphological extensions to the real OSM road values.
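The tolerance to small positional offsets can be illustrated with a buffered comparison. The source states only that a morphological extension is applied to the OSM reference; the square structuring element, the `radius` parameter, and the symmetric buffering of the prediction when counting missed pixels are all assumptions of this sketch.

```python
def dilate(pixels, radius):
    """Dilate a set of (row, col) pixels with a square window."""
    return {(r + dr, c + dc)
            for r, c in pixels
            for dr in range(-radius, radius + 1)
            for dc in range(-radius, radius + 1)}


def buffered_counts(predicted, truth, radius=1):
    """TP, FP, FN with a tolerance of `radius` pixels.

    A predicted pixel counts as correct if it lies within the dilated
    reference; a reference pixel counts as missed only if no predicted
    pixel lies within `radius` of it (assumed symmetric relaxation).
    """
    tp = len(predicted & dilate(truth, radius))
    fp = len(predicted) - tp
    fn = len(truth - dilate(predicted, radius))
    return tp, fp, fn
```

With a road predicted one pixel away from the reference centerline, a radius of 0 scores every pixel as wrong, while a radius of 1 scores the same prediction as fully correct. This is the kind of slight coordinate deviation the morphological extension is meant to absorb.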

3. Materials

In remote sensing, data pre-processing is the first step before any image processing. Because satellite images differ in imaging scene, acquisition time, viewing angle, and resolution, the primary objective is to ensure that data from different satellite images represent the same scene and correspond as closely as possible at the pixel level. This alignment is essential for precise subsequent analysis, image fusion, and meaningful comparisons between images.

In this paper, we first align the remote sensing images obtained from different sources, determining the reference points from landmark orientation. We selected high-resolution SAR images from the GF-3 satellite’s ultra-fine stripe (UFS) dataset, optical images from the multispectral band of the GF-2 satellite (comprising four bands: R, G, B, and NIR), and global digital surface model (DSM) data from ‘ALOS World 3D—30 m’. All images are aligned to the WGS 84 coordinate reference system to ensure consistent spatial alignment.

Furthermore, to maximize the alignment of each image, we crop the common area shared by the images, yielding a set of aligned GF-3, ALOS, and GF-2 images, each at its respective resolution. We used ArcGIS’s ‘Georeferencing’ tool for image registration and the GDAL library for image reprojection, ensuring consistent sampling at the same resolution across all images. The results of this pre-processing are presented in Figure 6.
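To make the resampling step concrete, the following sketch shows what bringing a coarse raster onto a finer grid involves. It is a pure-Python nearest-neighbour illustration, not the GDAL workflow used in this paper. It assumes north-up rasters described by geotransforms in the GDAL ordering (origin x, pixel width, 0, origin y, 0, negative pixel height); the function name, the toy elevation values, and the metre-based grid are hypothetical.

```python
import math

def resample_nearest(src, src_gt, dst_gt, dst_shape, nodata=None):
    """Nearest-neighbour resampling of a north-up raster onto a target grid."""
    src_rows, src_cols = len(src), len(src[0])
    dst_rows, dst_cols = dst_shape
    out = [[nodata] * dst_cols for _ in range(dst_rows)]
    for r in range(dst_rows):
        # World y coordinate of the centre of destination row r.
        y = dst_gt[3] + (r + 0.5) * dst_gt[5]
        src_r = math.floor((y - src_gt[3]) / src_gt[5])
        for c in range(dst_cols):
            # World x coordinate of the centre of destination column c.
            x = dst_gt[0] + (c + 0.5) * dst_gt[1]
            src_c = math.floor((x - src_gt[0]) / src_gt[1])
            if 0 <= src_r < src_rows and 0 <= src_c < src_cols:
                out[r][c] = src[src_r][src_c]
    return out

# Toy 2 x 2 elevation grid at 30 m spacing (values are made up).
dsm = [[100.0, 110.0],
       [120.0, 130.0]]
dsm_gt = (0.0, 30.0, 0.0, 60.0, 0.0, -30.0)
# Target grid at 3 m spacing covering the same 60 m x 60 m footprint.
sar_gt = (0.0, 3.0, 0.0, 60.0, 0.0, -3.0)
dsm_on_sar_grid = resample_nearest(dsm, dsm_gt, sar_gt, dst_shape=(20, 20))
```

Each 30 m cell is replicated over a 10 x 10 block of 3 m pixels, so every SAR pixel can look up an elevation at the same row and column index. In practice, a warping utility such as GDAL's would also handle the coordinate transformation and smoother interpolation kernels.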

For this experiment, we selected two sets of large-scale images from Shandong Province and Hubei Province in China. The SAR images, from the GF-3 satellite, have a spatial resolution of 3 m × 3 m, while the optical images from the GF-2 satellite consist of four bands (R, G, B, and NIR) with a spatial resolution of 4 m. Additionally, we used the ‘ALOS World 3D—30 m’ dataset, which provides global digital surface model (DSM) data with a horizontal resolution of 30 m. To validate the algorithms proposed in this paper, we used OpenStreetMap, an open-source road map. The datasets used in this study are described in Table 1.

4. Results and Discussion

To comprehensively validate the effectiveness of the proposed method in this paper, we conducted validation experiments using GF-2 and GF-3 remote sensing image data captured under varying conditions, including different times, scenes, and shooting angles. Based on the geographical locations and scene types of the selected images, we categorized the test images into three distinct groups: composite scenes featuring mountain ridges, densely populated urban areas, and expansive large-scale terrain.

In the following subsections, we analyze and discuss the optimization effects of the proposed method across different scene types. The evaluation covers road extraction from SAR images in complex scenes with the support of DEMs, the fusion of multi-source remote sensing images, and optimization using rotating adaptive median filters and graph theory.

4.1. Complex Scene Road Extraction from SAR Images with the Auxiliary of DEM

To validate the road extraction method for complex terrain in SAR images with the aid of the DEM proposed in this paper, two GF-3 images captured at different times and locations in complex terrains were chosen for testing. The method proposed in this paper is compared with the conventional method [

37], which is based on pixel, geometric, and bi-parallel line features of SAR images, as well as a method based on Directional Grouping and Curve Fitting [

44], as shown in

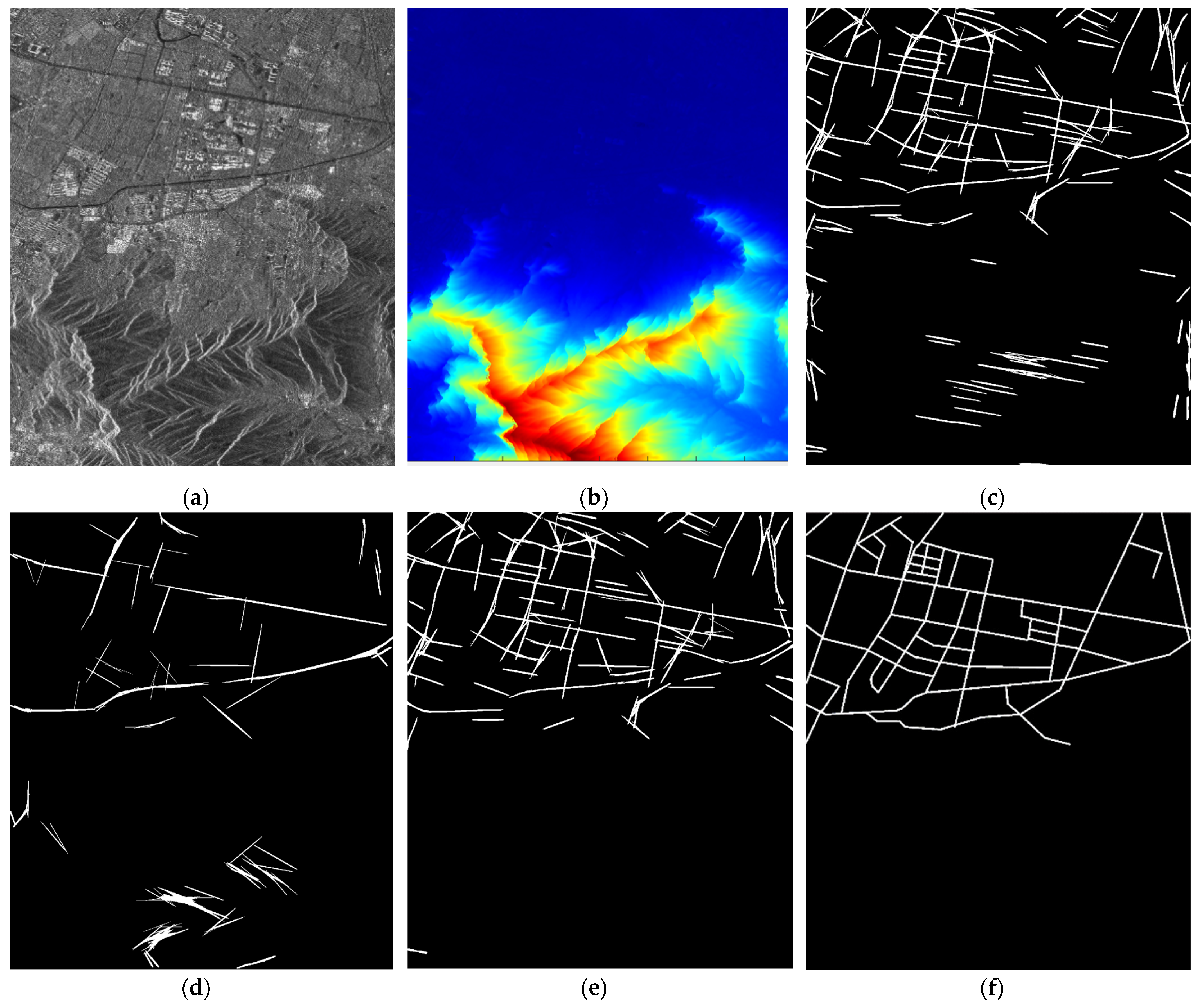

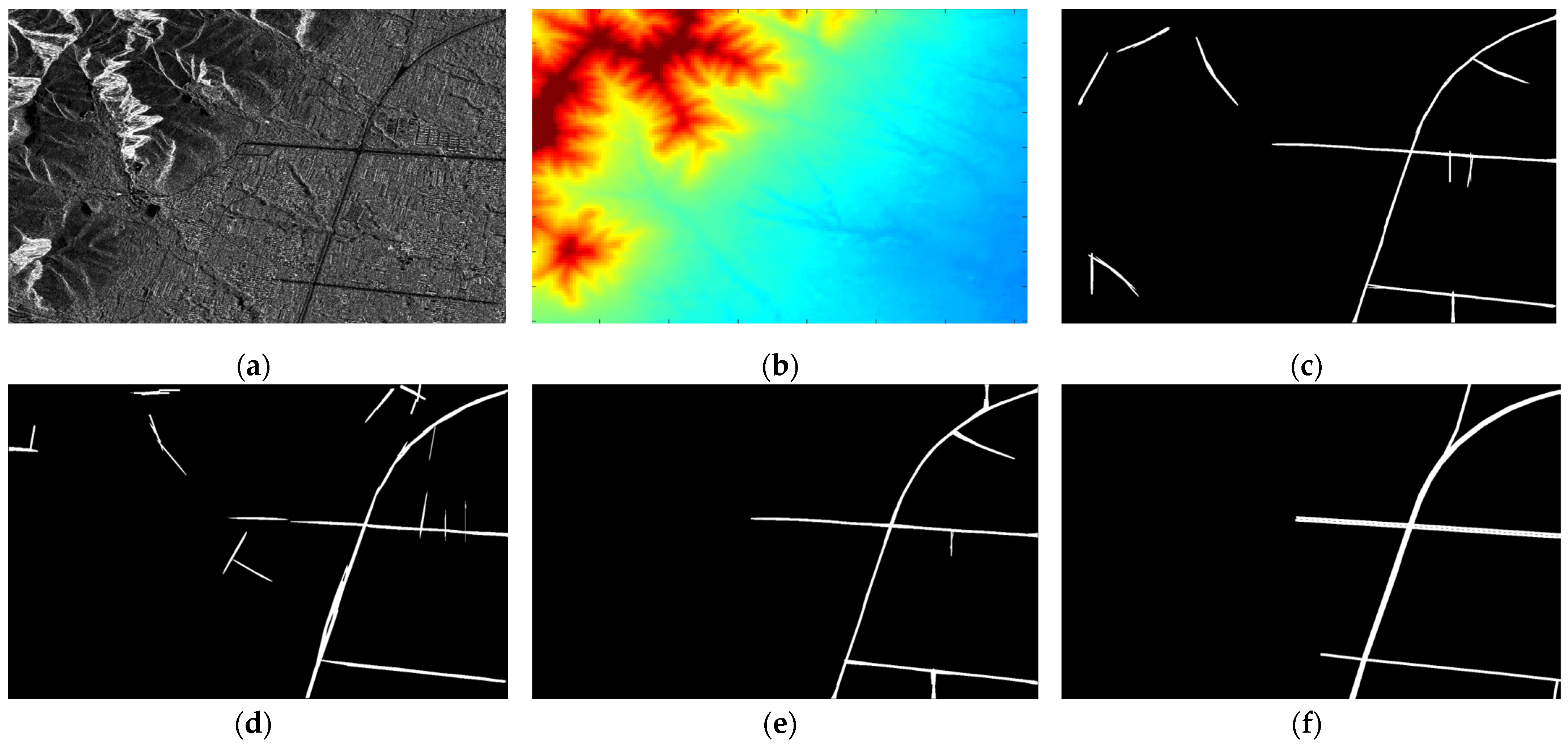

Table 2. The results of the case tests are illustrated in

Figure 7 and

Figure 8:

As observed in the figures, when extracting roads in large-scale complex SAR image areas, relying solely on the intrinsic radiometric features of roads is unsuitable for scenes with complex terrains and interfering elements like mountain ridges (Figure 7c and Figure 8c). This interference leads to unsatisfactory road extraction results.

In this paper, DEM data are integrated into traditional multi-scale multi-feature road extraction, as shown in Figure 7b and Figure 8b, where the color depth represents the elevation. Leveraging the fact that roads typically exhibit gentler slopes than ridges, this method assesses the slope of each candidate road segment. The results, shown in Figure 7e and Figure 8e, demonstrate a significant reduction in the influence of interfering features such as ridges. Specific quantitative evaluation indices are provided in Table 2. Three classical assessment indices, recall, precision, and F1-score, are used to compare the method’s effectiveness quantitatively before and after introducing DEM assistance.
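The slope check on candidate segments can be sketched as below. This is a minimal illustration, assuming that elevations have already been sampled from the DEM at regular intervals along each candidate segment and that a segment is kept when its mean slope stays under a threshold. The function names, the 10° threshold, and the elevation profiles are hypothetical; the slope definition and threshold used in this paper are not restated here.

```python
import math

def mean_slope_deg(elevations, spacing_m):
    """Mean absolute slope, in degrees, between consecutive elevation samples."""
    if len(elevations) < 2:
        return 0.0
    slopes = [
        math.degrees(math.atan(abs(b - a) / spacing_m))
        for a, b in zip(elevations, elevations[1:])
    ]
    return sum(slopes) / len(slopes)

def keep_gentle_segments(profiles, spacing_m, max_slope_deg):
    """Return the names of candidate segments whose mean slope is within the limit."""
    return [
        name for name, elevations in profiles.items()
        if mean_slope_deg(elevations, spacing_m) <= max_slope_deg
    ]

# Elevation samples (metres) every 30 m along two candidate segments (made up).
profiles = {
    "valley_road": [102.0, 103.0, 103.5, 104.0],   # rises 2 m over 90 m
    "ridge_line": [150.0, 175.0, 205.0, 230.0],    # rises 80 m over 90 m
}
kept = keep_gentle_segments(profiles, spacing_m=30.0, max_slope_deg=10.0)
```

Here the gently rising candidate passes the check while the steep one is rejected, which mirrors the intended suppression of ridge-like detections.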

The experimental results indicate that the SAR road extraction method proposed in this paper, with the assistance of the DEM, enhances the algorithm’s robustness in complex remote sensing scenes compared to traditional methods that solely rely on radiometric features. Notably, the proposed method sacrifices a small amount of recall to achieve a significantly higher road extraction precision by optimizing road segment adjudication.

In Figure 7, the recall of the method proposed in this paper is 89.23%, a 2.98% reduction compared to the traditional method. The precision is 76.24%, marking a 15.62% improvement over the traditional method. The final F1-score is 80.94%, representing an 8.75% enhancement compared to the traditional method, and a notable improvement of 17.49% compared to the Directional Grouping and Curve Fitting Method. For Figure 8, the proposed method achieves a recall of 92.26%, an improvement of 0.07% compared to the traditional method. The precision is 89.55%, a significant 32.41% improvement over the traditional method. The F1-score is 90.89%, demonstrating a 20.34% improvement over the traditional method, and an enhanced performance of 11.15% compared to the Directional Grouping and Curve Fitting Method.

4.2. Multi-Source Remote Sensing Image Road Fusion and Result Optimization

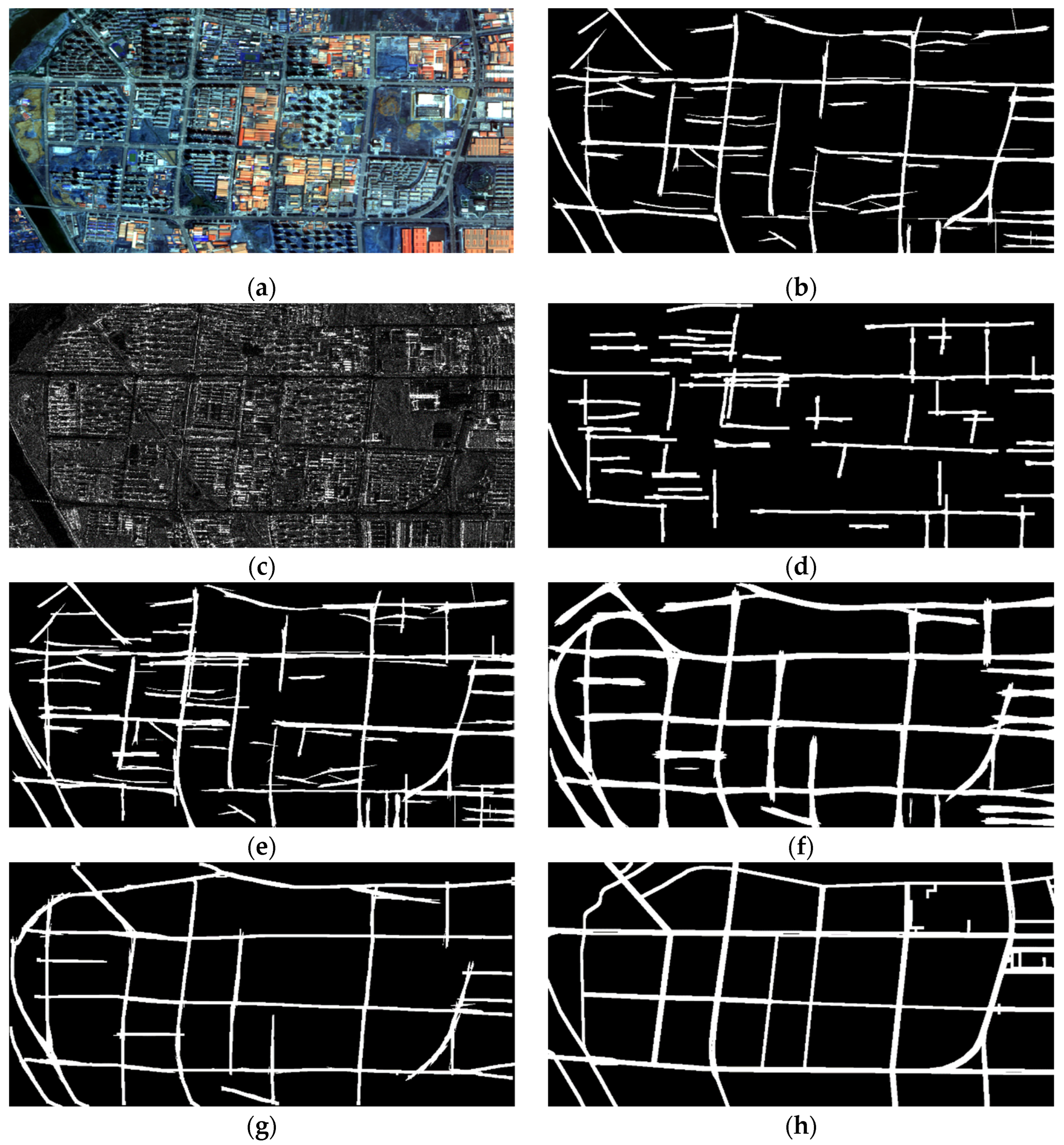

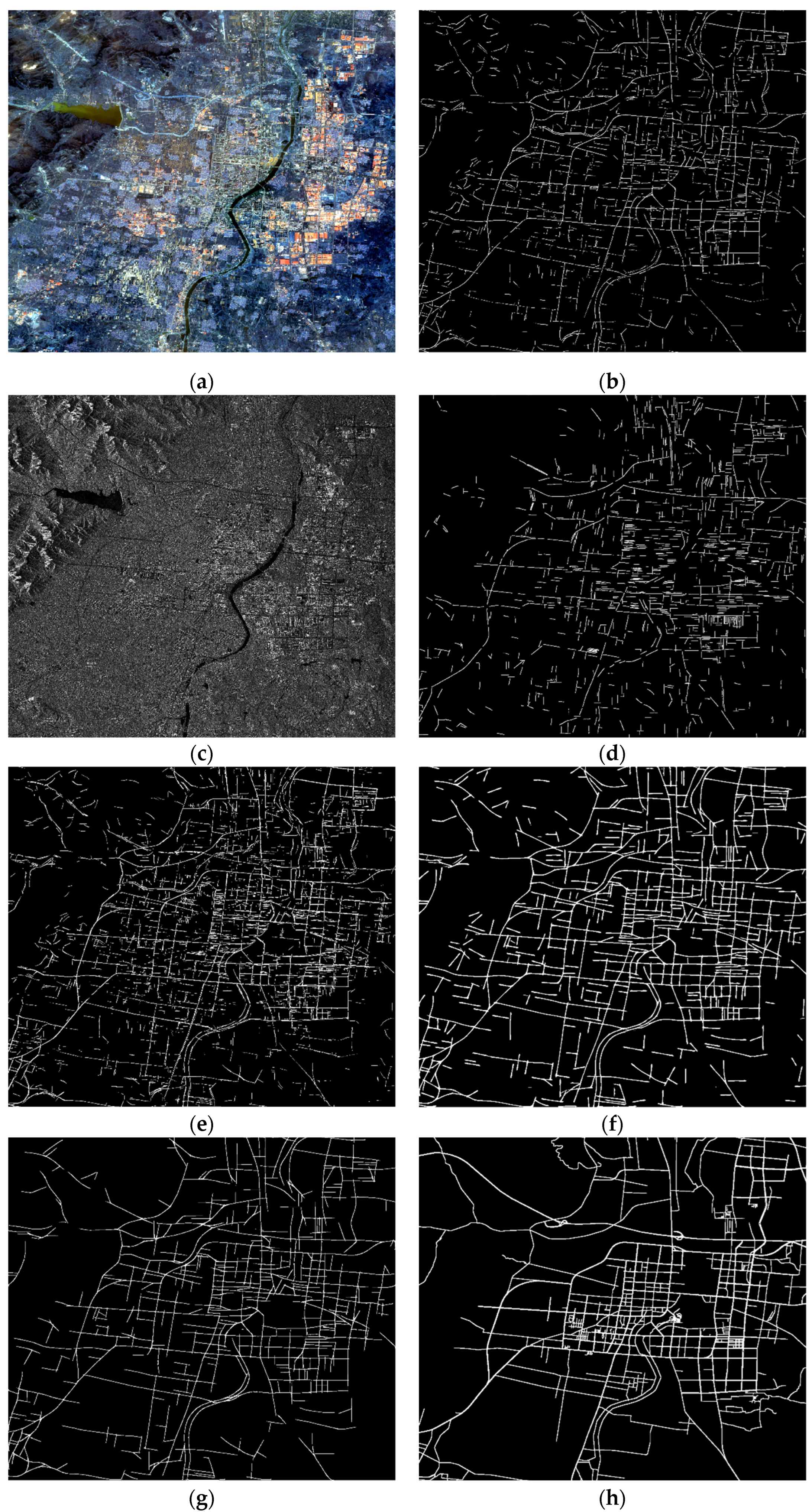

Building upon the use of the DEM to enhance road extraction from SAR remote sensing images, this paper conducted tests on several groups of large-scale complex feature scenes captured by GF-2 and GF-3 satellites at different times and locations. These tests were conducted to verify the reliability of the decision layer fusion of multi-source remote sensing images and the optimization method based on the rotationally adaptive median filter and graph theory.

Figure 9, Figure 10 and Figure 11 display the source images and result maps at different stages of the fusion and optimization process.

The figures illustrate that the fusion of SAR and multispectral images at the decision layer combines the strengths of both sensors. It leverages SAR’s penetrating ability to compensate for challenges posed by buildings and shadow obstructions during optical imaging, resulting in a more comprehensive road extraction. Additionally, the method based on rotational adaptive median filtering effectively fills broken regions and removes small interfering road segments, enhancing road segment continuity. Finally, the optimization method based on graph theory capitalizes on road topology and continuity to eliminate discrete and continuous large interfering road segments.

Table 3 compares the results of road fusion and result optimization for multi-source remote sensing images:

The experimental results reveal variations in the performance of road extraction methods across different remote sensing scenes, influenced by factors such as scene size and complexity. In the context of SAR image road extraction, the metrics of recall, precision, and F1-score exhibit relatively low values, emphasizing the inherent challenges in this domain.

In contrast, road extraction methods based on multispectral remote sensing images generally exhibit superior performance metrics. However, they are susceptible to limitations imposed by cloud cover, lighting conditions, and shadows from structures. To address these challenges comprehensively, this paper takes into account the distinct characteristics and advantages of various sensors.

Considering the continuity and topology of roads, this paper exploits the substantial overlap in characteristics between SAR images and multispectral images for decision-level image fusion. Specifically, the road segments identified in SAR image results and the road results from multispectral images undergo overlap threshold determination. Road segments whose overlap meets the defined threshold are then superimposed onto the multispectral image road map. This innovative approach effectively addresses the issue of missing roads in multispectral images caused by cloud cover, varying lighting conditions, and building shadows. Consequently, it significantly enhances the recall rate of road recognition, even though there may be a slight reduction in precision compared to methods solely reliant on multispectral images. This methodological integration ensures a more robust and comprehensive road extraction process, leveraging the strengths of both SAR and multispectral remote sensing technologies.
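The overlap-threshold fusion described above can be sketched as follows. This is a minimal illustration, assuming the multispectral road result is a set of (row, col) pixels, each SAR road segment is its own set of pixels, and overlap is measured as the fraction of a SAR segment's pixels that coincide with multispectral road pixels. The function name, the `min_overlap` value of 0.5, and the toy masks are hypothetical; the overlap measure and threshold used in this paper are not restated here.

```python
def fuse_roads(optical_roads, sar_segments, min_overlap):
    """Add SAR segments that sufficiently overlap the optical road mask."""
    fused = set(optical_roads)
    for segment in sar_segments:
        segment = set(segment)
        if not segment:
            continue
        # Fraction of this SAR segment already confirmed by the optical result.
        overlap = len(segment & optical_roads) / len(segment)
        if overlap >= min_overlap:
            fused |= segment   # superimpose the whole segment, filling gaps
    return fused

# Optical road along row 0 with a gap at columns 4-6 (e.g. a building shadow).
optical_roads = {(0, c) for c in range(0, 4)} | {(0, c) for c in range(7, 10)}
sar_segments = [
    {(0, c) for c in range(2, 9)},   # bridges the gap, 4 of 7 pixels overlap
    {(5, c) for c in range(0, 5)},   # unrelated detection with no overlap
]
fused = fuse_roads(optical_roads, sar_segments, min_overlap=0.5)
```

The first SAR segment passes the threshold and fills the gap in the optical road, while the second is discarded because nothing in the optical result supports it.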

Specifically, for the scene in Figure 9, the adaptive median filtering optimization yields the highest recall (94.85%), while the graph-theory-based optimization delivers the highest precision (89.49%) and F1-score (91.45%). For the scene in Figure 10, the multi-source image fusion achieves the highest recall (96.80%), although with a slightly lower precision, and the graph-theory-based optimization again achieves the highest precision (76.42%) and F1-score (80.69%). For the scene in Figure 11, multi-source image fusion records the highest recall (85.16%), and the graph-theory-based optimization achieves the highest precision (76.59%) and F1-score (77.36%).

Our proposed optimization approach, encompassing multi-source remote sensing image fusion, adaptive median filtering, and graph theory, significantly enhances precision and F1-score in the context of road recognition, particularly in large-scale complex remote sensing terrain. Furthermore, the introduction of the adaptive rotated median filter and the application of graph theory optimization effectively address challenges related to road continuity and topology. These methods facilitate the connection of disconnected road segments and the removal of discontinuities, resulting in substantial improvements in the continuity and topological properties of the recognized road networks.

In the future, we can consider further image fusion through pixel-level or feature-level integration, provided that the registration accuracy of multi-source images is sufficiently high. By incorporating a more diverse range of remote sensing data from various sources, we can also leverage the distinctive advantages of different sensors, thereby reducing the judgment errors associated with a single sensor. Finally, we can explore the integration of deep learning-based methods for multi-source image fusion analysis, aiming to realize a road extraction algorithm with enhanced precision and robustness.

5. Conclusions

In conclusion, this study presents a comprehensive approach for road extraction from complex remote sensing terrain, with a particular focus on high-resolution SAR images. By introducing the auxiliary DEM-based method and multi-source remote sensing image fusion, coupled with optimization techniques like the adaptive rotated median filter and graph theory, we address the ridge interference in SAR image road extraction and the influences of lighting and building shadows in multispectral images. The proposed methods extend the road extraction algorithm to larger scene scales, enhancing the precision, F1-score, continuity, and topological performance of road extraction in remote sensing images. Notably, the F1-score achieves values of 91.45%, 80.69%, and 77.36% on different test images.

This study not only demonstrates the effectiveness of our proposed methodologies but also highlights their potential applications in real-world scenarios. The decision layer fusion of multi-source remote sensing images, combined with optimization techniques, allows for a well-balanced trade-off between recall and precision. The resulting road extraction exhibits an improved network topology and road recognition quality, making it a valuable contribution to the field of remote sensing and geospatial analysis.

However, the fusion of multi-source remote sensing images demands high image registration accuracy, posing a significant challenge that requires innovative solutions. Furthermore, the integration of additional data sources, such as synthetic aperture sonar images, LiDAR, or aerial imagery, holds promise for further improving the robustness and accuracy of road extraction. This aspect also merits future exploration and research.