Abstract

Accurate detection of transportation objects is pivotal for enhancing driving safety and operational efficiency. In the rapidly evolving domain of transportation systems, the utilization of unmanned aerial vehicles (UAVs) for low-altitude detection, leveraging remotely-sensed images and videos, has become increasingly vital. Addressing the growing demands for robust, real-time object-detection capabilities, this study introduces a lightweight, memory-efficient model specifically engineered for the constrained computational and power resources of UAV-embedded platforms. Incorporating the FasterNet-16 backbone, the model significantly enhances feature-processing efficiency, which is essential for real-time applications across diverse UAV operations. A novel multi-scale feature-fusion technique is employed to improve feature utilization while maintaining a compact architecture through passive integration methods. Extensive performance evaluations across various embedded platforms have demonstrated the model’s superior capabilities and robustness in real-time operations, thereby markedly advancing UAV deployment in crucial remote-sensing tasks and improving productivity and safety across multiple domains.

1. Introduction

Unmanned systems (US), including unmanned ground vehicles (UGVs) and UAVs, are increasingly crucial for driving technological advancements within the transportation sector [1,2,3]. UAVs, providing a bird’s eye view, enhance traditional traffic monitoring methods by collecting real-time data on vehicle speed, distance, and location, thereby supporting efficient traffic management and collision avoidance [4]. Utilizing remote-sensing technologies, UAVs significantly contribute to congestion analysis, vehicle tracking, and safety evaluations in urban traffic systems [5,6]. Equipped with high-resolution cameras and advanced image-processing algorithms, UAVs dynamically monitor and analyze traffic flow, promptly identify bottlenecks, track vehicle trajectories, and assess accident scenes and driver behavior. These capabilities substantially improve traffic management efficiency and road safety [7,8].

For most UAV applications, precise target detection and advanced image-processing algorithms are essential. Equipped with sensors and refined detection algorithms, UAVs can effectively perceive their surroundings, accurately identify targets, and make corresponding decisions [9]. Numerous studies are currently focused on developing lightweight target-detection algorithms tailored for UAV platforms. Notably, improvements to the YOLO series have led to several new approaches, such as [10,11,12]. These studies underscore the diversity and critical importance of UAV target-detection technologies. However, most research is conducted on consumer-grade GPUs, such as the Nvidia RTX 3060, and focuses primarily on improving detection precision without testing on actual UAV computing platforms. Such offline evaluation overlooks the specific computational and resource constraints of UAV platforms. The real-world performance and potential applications of these target-detection algorithms can only be assessed thoroughly through stringent testing and validation on UAV-embedded devices [13].

Balancing the computation resources, detection capabilities, and processing speed is a critical technological issue in UAV application development [14]. Real-time UAV target detection typically relies on embedded devices, which not only serve as the core computing platforms within the UAV systems but also operate under severe payload and power supply constraints. These constraints are essential to ensure prolonged UAV operations and the completion of numerous tasks [4]. Consequently, these devices are required to process significant data volumes in real time while adhering to strict power and size limitations. However, the limited computational capacity and memory resources of embedded devices present considerable challenges for deploying efficient target-detection models [7].

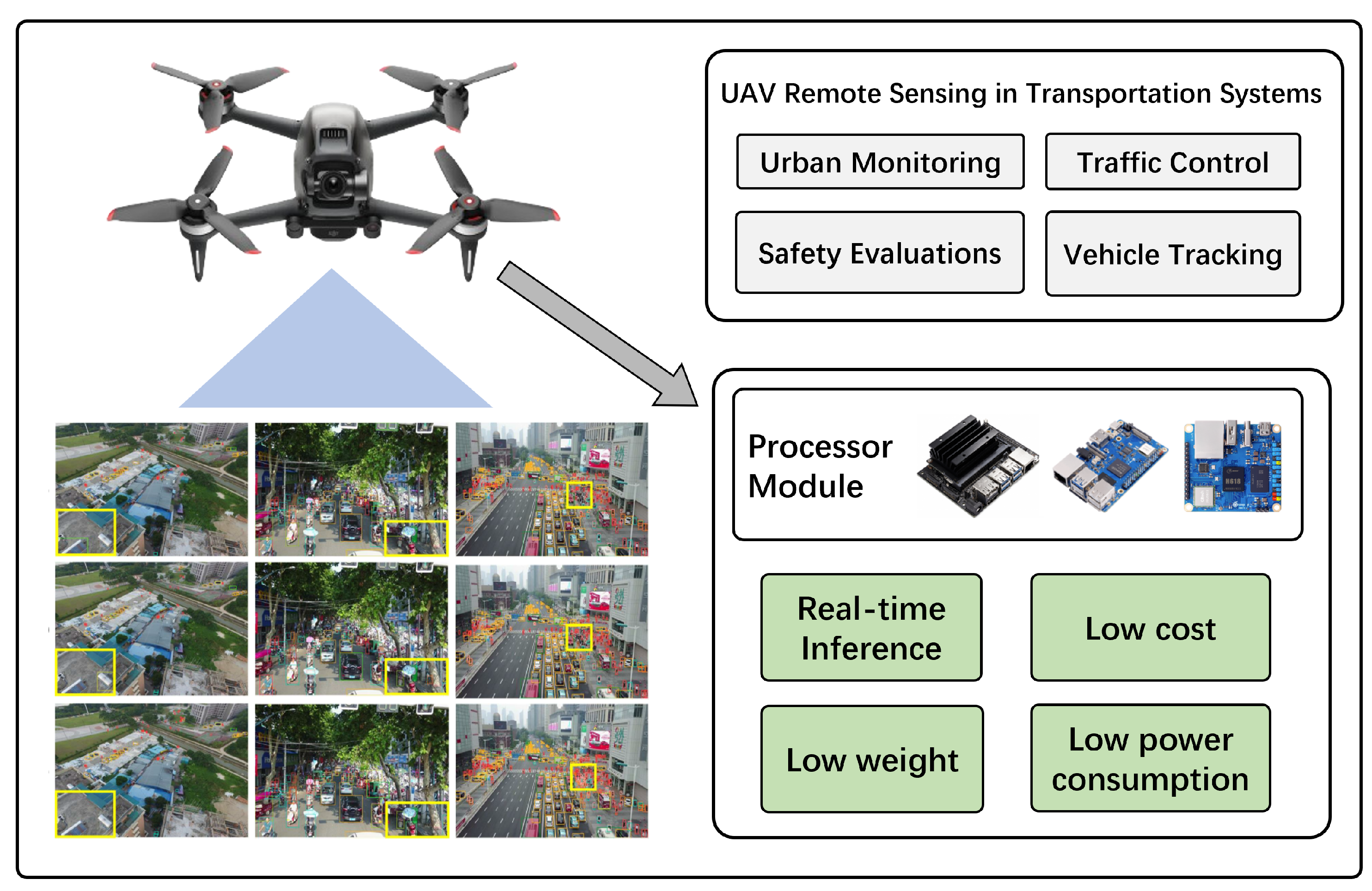

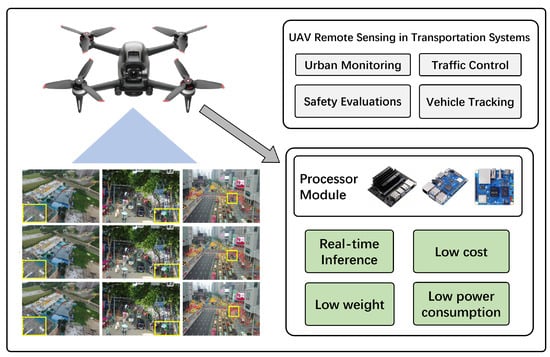

In recent years, comprehensive research has been dedicated to enhancing multi-object-detection capabilities and optimizing inference speeds. Studies such as [15,16] have introduced lightweight target-detection algorithms designed for edge computing platforms. Additionally, research like [9] emphasizes the critical importance of real-time data processing, which is crucial for operational environments requiring rapid decisions. Although there is a noticeable discrepancy in detection precision between embedded devices and high-performance consumer-grade GPUs, the relevance of these algorithms in practical scenarios should not be underestimated. However, current research, such as [15,16], primarily utilizes high-performance artificial-intelligence-embedded platforms like the Nvidia Jetson Nano for experimental validation. While these platforms excel in detection precision, their high cost and power consumption may not be economical for UAV applications. Specifically, the Jetson Nano costs up to 1299 RMB and consumes 10 watts of power. Such high power consumption is particularly detrimental in UAV applications, as it directly affects the drone’s flight endurance. High power demands mean UAVs need to carry larger, heavier batteries, increasing costs and potentially reducing payload capacity and flight duration. Figure 1 showcases the diverse applications of UAVs in remote sensing, and the critical specifications for processor modules in UAVs include capabilities for real-time inference, lightweight architecture, minimal power usage, and cost efficiency [13].

Figure 1.

Illustration of UAV remote sensing applications in transportation systems and the demands for processor module [7,13].

Therefore, exploring more cost-effective and energy-efficient alternative platforms is essential. Extensive research has been undertaken on lightweight target-detection models tailored for cost-effective embedded platforms. Importantly, a variety of state-of-the-art lightweight object-detection models, including YOLO-Fastest v1.1, YOLO-Fastest v2, and FastestDet, have been evaluated [17]. These models have been tested on Rockchip-based embedded platforms, such as RK3566 and RK3568, where they demonstrated robust performance and notable potential for real-time applications. The aforementioned models must maintain a parameter size under 0.5 MB for real-time inference on Rockchip-based platforms. In comparison, the YOLOv8s model suitable for the Jetson Nano features a significantly larger parameter count of 11.2 MB. However, because these methods were not originally designed for UAV-specific applications, a key challenge remains:

- The limited memory of embedded devices leads to frequent memory accesses and data transfers, considerably slowing down the inference process and impacting the real-time perceptual capabilities of UAV systems. Current models lack optimizations for small-memory hardware constraints.

Addressing this issue, this study introduces a memory-efficient, lightweight target-detection model designed specifically for low-cost and memory-constrained embedded platforms. The main contributions are as follows:

- An in-depth analysis of the FasterNet backbone network is conducted, resulting in the reduction of feature-processing dimensionality and the introduction of the FasterNet-16 backbone network. This enhancement enables faster and more efficient feature extraction in target detection.

- Multi-scale feature fusion in the neck module is evaluated, and a strategy utilizing multiple PConv convolutions is developed. This approach ensures optimal feature utilization while maintaining a minimal structural footprint within the target-detection network.

- Based on various datasets and three different embedded platforms, extensive experiments are conducted. The proposed model demonstrates improvements in both detection precision and inference speed on the Rockchip RK3566-embedded platform compared to state-of-the-art methods.

The structure of this paper is organized as follows: Section 2 reviews related work and recent developments in UAV detection systems; Section 3 elaborates on the proposed lightweight object-detection algorithm, detailing the design of the FasterNet-16 backbone and the multi-scale feature-fusion neck module; Section 4 outlines the experimental setup and discusses the results obtained on diverse embedded platforms; Section 5 analyzes these results, highlights the limitations of the current study, and proposes directions for future research; Section 6 concludes the paper.

2. Related Work

2.1. Applications of UAV Remote-Sensing Technology in Transportation Systems

Unmanned aerial vehicles (UAVs) are increasingly being utilized in urban management to enhance the efficiency of various tasks, such as traffic congestion analysis, vehicle tracking [18], and safety evaluations [19]. Equipped with high-resolution cameras and advanced image-processing algorithms, UAVs can capture detailed aerial imagery and real-time data. This capability is crucial for monitoring traffic flow, detecting vehicles, and managing incidents [20]. By providing a bird’s eye view and being deployable in various locations, UAVs enable urban planners to gather comprehensive data on infrastructure usage and traffic patterns [8]. This real-time data collection is essential for effective traffic management and safety evaluations. Additionally, UAVs are used to monitor traffic density, estimate traffic flow parameters, and detect vehicle collisions, thus enhancing overall urban traffic management [21]. This technology empowers urban planners to address traffic congestion, improve road infrastructure, and respond effectively to emergencies [14,22,23].

2.2. Lightweight Object-Detection Methods for Embedded Devices in UAVs

Numerous studies have utilized the Jetson platform for deploying lightweight target-detection models. In 2023, Chen et al. launched the LODNU network, specifically designed for UAVs, demonstrating superior performance in real-time tests on the NVIDIA Jetson [24]. In 2024, Hua et al. introduced UAV-Net 18 using the NVIDIA Jetson Nano. This model enhanced detection precision and reduced complexity through advanced pruning techniques [25]. Concurrently, the YOLOv8n model improved small-target detection on UAVs, with Yue et al. optimizing it for the Jetson TX2, thereby increasing precision and speed in complex UAV-captured scenes [26]. The following year, Fan et al. adapted the Jetson Xavier for their LUD-YOLO model, achieving efficient and suitable target detection for real-time UAV operations [27]. Despite these advancements on high-performance Jetson-based platforms, challenges remain in developing UAV-specific algorithms for more cost-effective, lower-power embedded platforms. Studies like [15,16] have proposed models with about 1 MB of parameters, showing significant improvements. While many models are still tested on relatively resource-rich Jetson-based platforms, general-purpose object-detection methods like YOLO-Fastest [28] and FastestDet [17] have been adapted for constrained embedded platforms. YOLO-Fastest, a highly streamlined version within the YOLO series, drastically reduces the number of convolutional layers and channels. This significant simplification transforms it into an extremely lightweight model, capable of achieving minimal latency and ultra-fast inference speeds, often exceeding 50 FPS [28]. FastestDet enhances real-time, high-precision inference through features like lightweight detection heads and dynamic positive–negative sample distribution [17]. Although these models perform well as general-purpose object-detection solutions on constrained platforms like Rockchip, specific enhancements for UAV applications remain limited.

2.3. Optimization Strategies for Lightweight Model Performance

Frequent memory accesses and data transfers can considerably impede inference speeds, undermining the real-time detection capabilities of unmanned systems [29]. Furthermore, balancing model complexity with detection precision remains a critical challenge; overly simplifying the model can result in substantial performance degradation. In practical applications, real-time performance is a crucial metric for UAV object detection algorithms [30]. To enhance detection speed, researchers have proposed various optimization strategies, such as using lightweight network architectures, pruning, and quantization techniques [31]. For instance, SlimYOLOv3 balances the number of parameters, memory usage, and inference time to achieve real-time object detection [32]. Although depthwise separable convolutions (DSCs) [33] significantly reduce the number of parameters and computational load of CNNs, their deployment on performance-limited embedded devices does not always result in faster operation than conventional convolutions. For example, Chen et al. showed that ShuffleNetV2 runs more slowly on a CPU than ResNet-18 because of the frequent memory accesses required by DSCs [34].

Despite advancements in lightweight models, balancing model complexity with detection precision remains a critical challenge [31,35]. Overly simplified models can suffer from performance degradation, while the high frequency of memory accesses in DSCs can slow down inference speeds. Memory-efficient operators such as PConv offer a promising solution by optimizing computational resources and enhancing real-time detection capabilities [36,37].

3. Design of a Memory-Efficient Lightweight Object-Detection Model

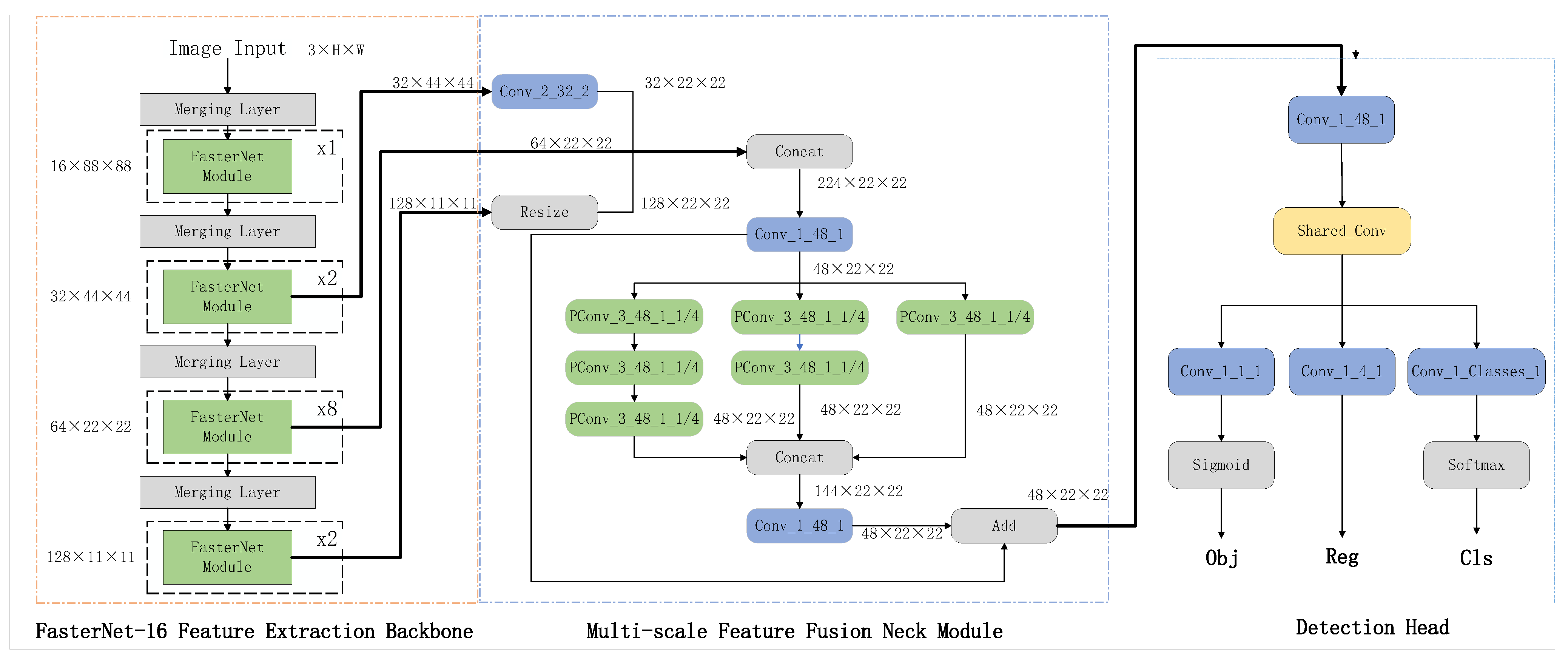

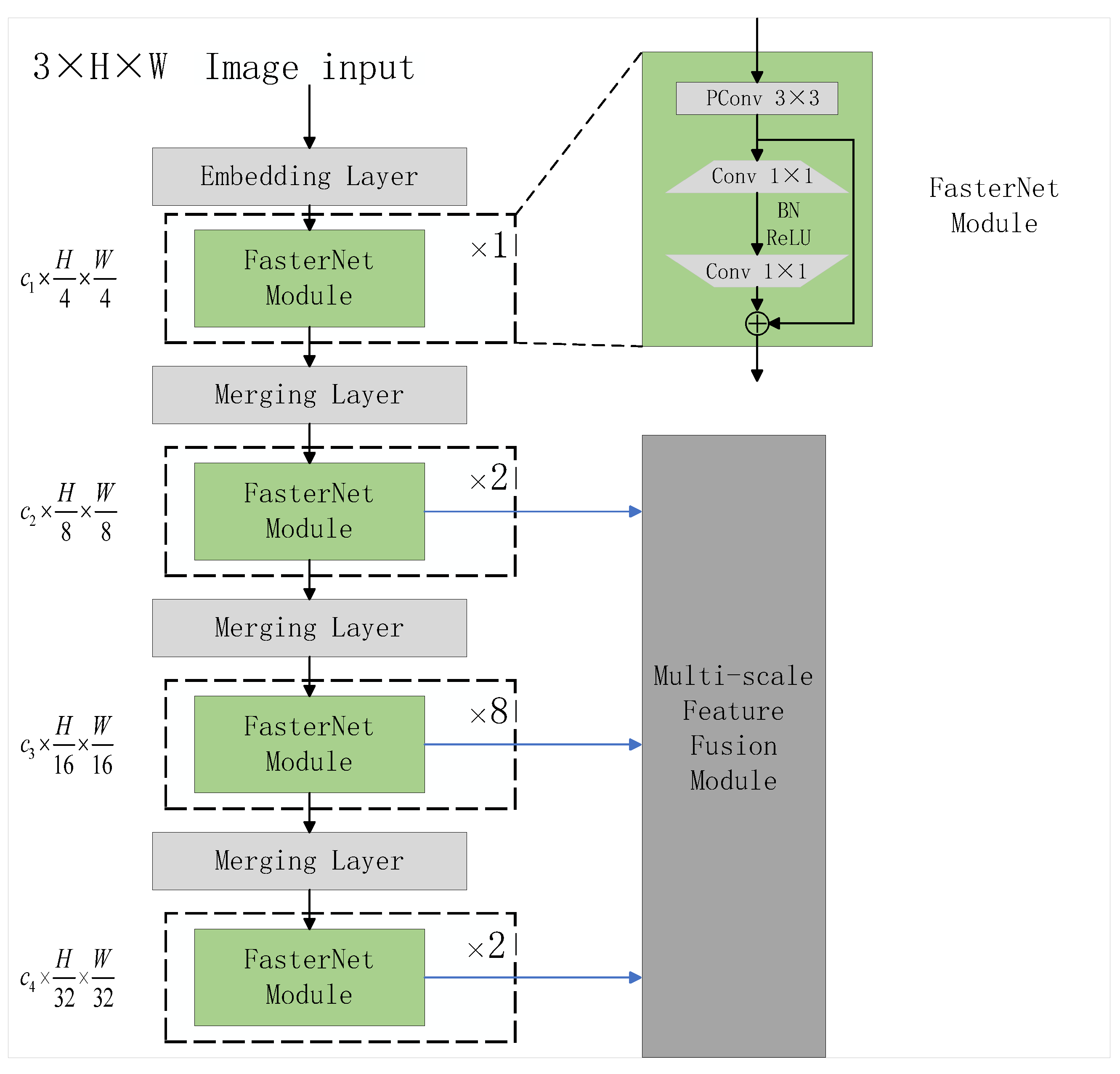

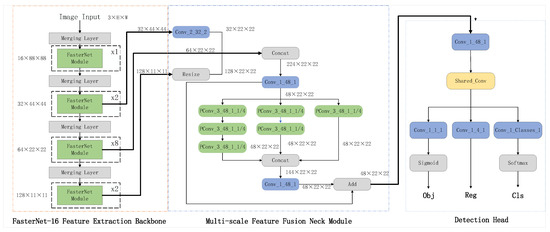

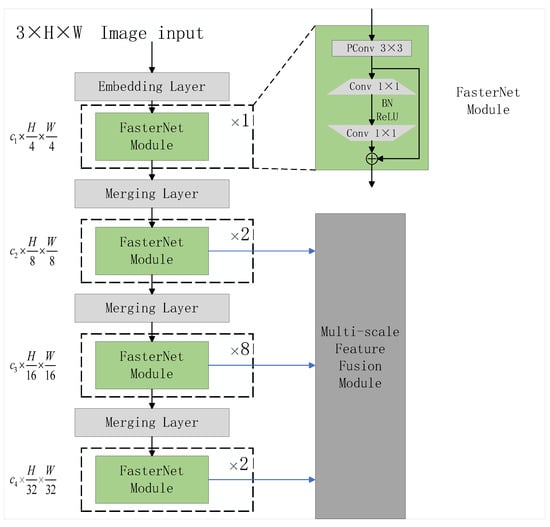

This section details the development of a memory-efficient, lightweight object-detection model, emphasizing the crucial role of memory management in optimizing inference processes. Given that memory requirements often represent a significant bottleneck in lightweight object detection, the goal is to minimize the memory footprint to ensure the model’s efficient operation on embedded devices. The model integrates streamlined designs in both its backbone network and neck module, striking a balance between precision and speed during inference on embedded platforms. The overall architecture of the proposed object-detection model is depicted in Figure 2.

Figure 2.

Overall structure of the proposed model.

3.1. Memory Consumption Analysis

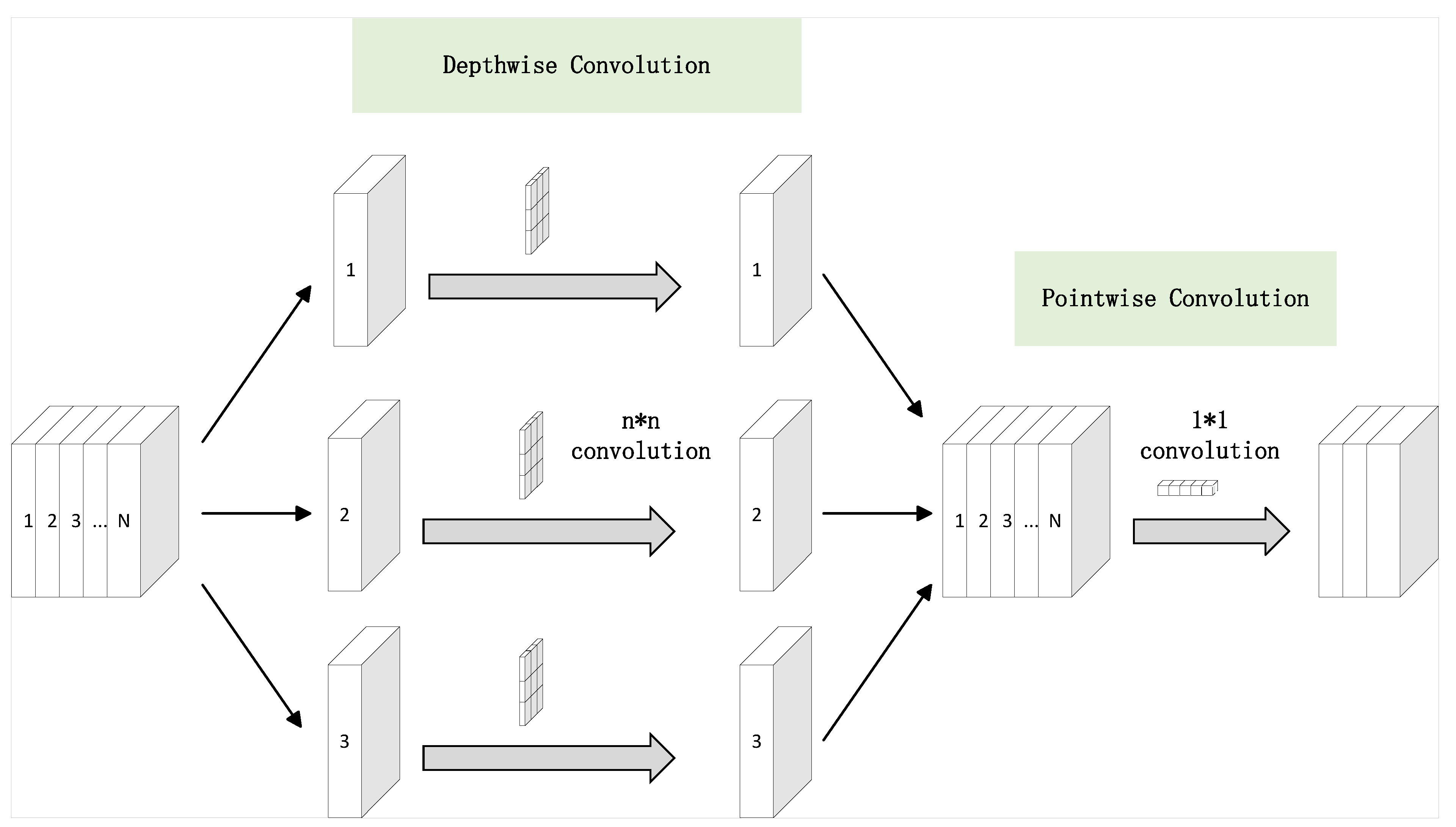

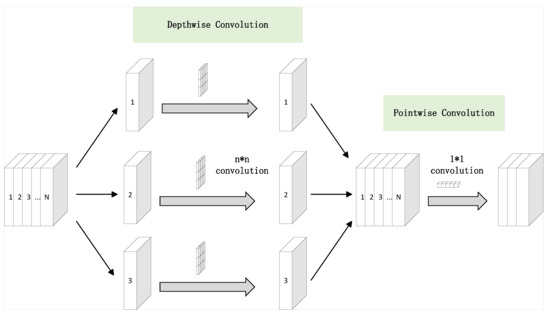

In designing a lightweight object-detection model, addressing memory consumption is paramount for ensuring efficient operation on embedded devices. The approach involves a detailed analysis of the memory usage across various convolution operations. Among the techniques explored, DSC stands out. Originally proposed in 2017 and subsequently implemented in Google’s MobileNet [38], DSC splits standard convolution into depthwise and pointwise convolution, reducing both the computational burden and the parameter count. Depthwise convolution (DC) processes each input channel independently with a convolutional filter, and the results are concatenated as shown in Figure 3. In Figure 3, ‘1, 2, 3…N’ represents the input channels, with each number corresponding to a specific input channel feature map. During depthwise convolution, each channel undergoes a separate convolution operation, rather than being convolved across all channels simultaneously. Subsequently, these processed channel feature maps are combined through pointwise convolution to produce the output feature map.

Figure 3.

Detailed structure of depthwise separable convolution.

In this analysis, parameters, memory, and floating point operations are abbreviated as P, M, and F, respectively, to simplify the presentation of mathematical formulas.

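Consider an input feature map of size $h \times w$, with $c_{in}$ input channels and $c_{out}$ output channels, and a kernel size of $k$. The parameter count and computational cost of depthwise convolution (DC) are computed as follows:

$$P_{DC} = k^2 c_{in}, \qquad F_{DC} = h w k^2 c_{in}$$

For pointwise convolution (PC), the parameter count and computational cost are calculated as:

$$P_{PC} = c_{in} c_{out}, \qquad F_{PC} = h w c_{in} c_{out}$$

Thus, the total parameter count and computational cost for DSC are:

$$P_{DSC} = k^2 c_{in} + c_{in} c_{out}, \qquad F_{DSC} = h w \left(k^2 c_{in} + c_{in} c_{out}\right)$$

Comparing these values with those of standard convolution, $P_{Conv} = k^2 c_{in} c_{out}$ and $F_{Conv} = h w k^2 c_{in} c_{out}$, the overall parameters and computational costs for DSC relative to standard convolution are:

$$\frac{P_{DSC}}{P_{Conv}} = \frac{F_{DSC}}{F_{Conv}} = \frac{1}{c_{out}} + \frac{1}{k^2}$$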

Using a convolution layer with 100 input and output channels and a kernel size of 5, replacing standard convolution with DSC can reduce the parameter count and computational cost to one-twentieth of the original, significantly decreasing the computational resource requirement.
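The one-twentieth figure can be checked numerically. The following Python sketch assumes the usual cost model (bias terms ignored, one multiply-accumulate counted as one operation, and an output feature map of the same $h \times w$ size as the input). The function names and the 56 × 56 feature-map size are illustrative choices, not values from the paper.

```python
def conv_cost(h, w, c_in, c_out, k):
    """Parameters and multiply-accumulates of a standard k x k convolution."""
    params = k * k * c_in * c_out
    flops = h * w * params
    return params, flops


def dsc_cost(h, w, c_in, c_out, k):
    """Depthwise (k x k, one filter per channel) plus pointwise (1 x 1) cost."""
    dw_params = k * k * c_in
    pw_params = c_in * c_out
    params = dw_params + pw_params
    flops = h * w * params
    return params, flops


# Example from the text: 100 input and output channels, 5 x 5 kernel.
# The 56 x 56 spatial size is an arbitrary assumption; it cancels in the ratio.
p_std, f_std = conv_cost(56, 56, 100, 100, 5)
p_dsc, f_dsc = dsc_cost(56, 56, 100, 100, 5)
param_ratio = p_dsc / p_std
flop_ratio = f_dsc / f_std
print(param_ratio, flop_ratio)  # both ratios equal 1/100 + 1/25 = 0.05
```

Because the spatial size multiplies both costs equally, the ratio depends only on the output channel count and the kernel size.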

Although DSC can reduce the number of parameters and computational costs, its performance on embedded devices, which require frequent memory accesses due to per-channel convolution operations, may not necessarily be better than that of standard convolution [34]. Moreover, using DSC can lead to a drop in detection precision [39], necessitating an increase in the number of channels to compensate. For instance, in MobileNetV2, the width of DSC is expanded six times, increasing memory access and ultimately resulting in slower performance compared to standard convolution-based CNN networks. For an $h \times w$ feature map and kernel size $k$, the memory usage of DSC compared to standard convolution can be calculated as follows:

$$M_{DSC} = h w \cdot 2c' + k^2 c' \approx h w \cdot 2c', \qquad M_{Conv} = h w \cdot 2c + k^2 c^2 \approx h w \cdot 2c$$

Here, $c'$, the channel count of the DSC layer, is usually larger than the channel count $c$ of the standard convolution due to the expanded number of channels in the feature map for DSC, potentially leading to higher memory usage for DSC than standard convolution.

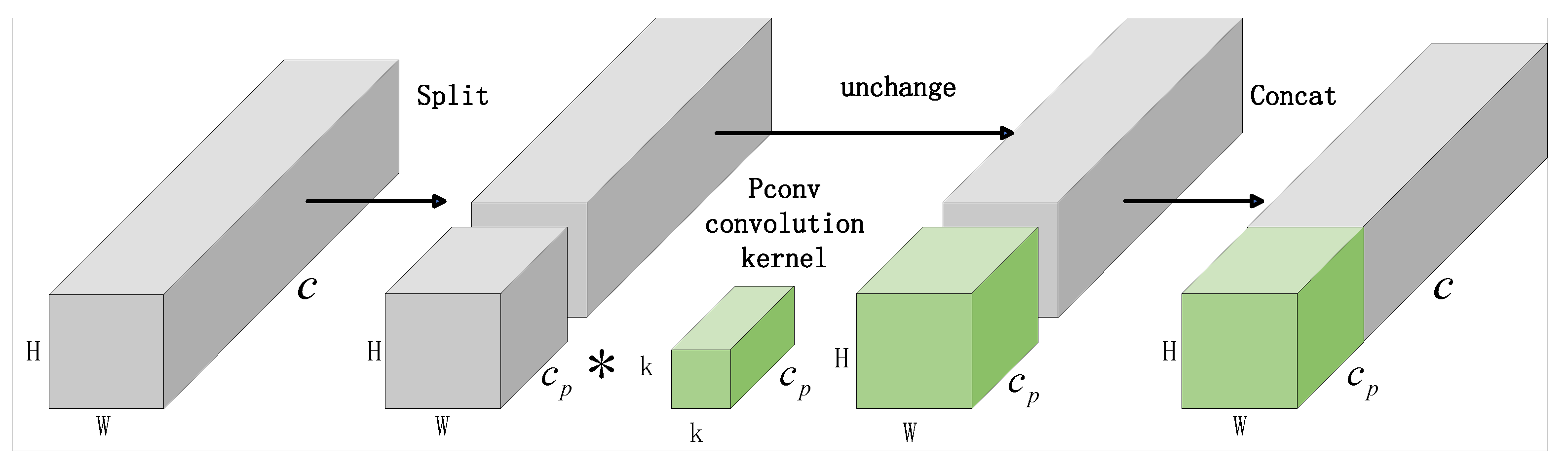

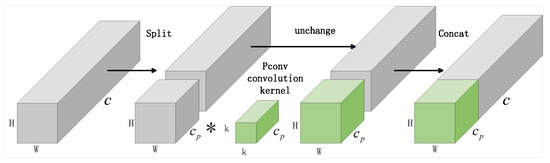

To address these issues, the partial convolution (PConv) is proposed [34]. The basic idea of PConv is to use standard convolution but only perform convolution on a portion of the channels in the feature map, while the remaining channels undergo identity mapping, as shown in Figure 4. Due to redundancy in the channel dimension of features, replacing standard convolution with PConv does not result in significant performance degradation while significantly reducing computational load and memory usage.

Figure 4.

Detailed structure of PConv.
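As a rough illustration of the idea, the pure-Python sketch below applies a standard convolution (stride 1, zero padding) to the first $c_p$ channels of a feature map and passes the remaining channels through unchanged. The function names, the nested-list layout `[channel][row][col]`, and the choice to convolve the first channels rather than some other contiguous subset are assumptions made for illustration. A practical implementation would rely on a deep-learning framework rather than Python loops.

```python
def conv2d_same(x, weight):
    """Standard convolution, stride 1, zero padding so the output keeps h x w.

    x:      [c_in][h][w] nested lists
    weight: [c_out][c_in][k][k] nested lists (k odd)
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    pad = k // 2
    out = []
    for filt in weight:
        plane = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for c in range(c_in):
                    for di in range(k):
                        for dj in range(k):
                            r, s = i + di - pad, j + dj - pad
                            if 0 <= r < h and 0 <= s < w:
                                acc += filt[c][di][dj] * x[c][r][s]
                plane[i][j] = acc
        out.append(plane)
    return out


def pconv(x, weight):
    """Partial convolution: convolve the first c_p channels, copy the rest."""
    c_p = len(weight)  # weight maps c_p channels to c_p channels
    convolved = conv2d_same(x[:c_p], weight)
    untouched = [[row[:] for row in plane] for plane in x[c_p:]]
    return convolved + untouched


# Four 3 x 3 channels; only the first one (c_p = 1, ratio 1/4) is convolved.
x = [[[float(c + 1)] * 3 for _ in range(3)] for c in range(4)]
box = [[[[1.0] * 3 for _ in range(3)]]]  # one 3 x 3 all-ones filter
y = pconv(x, box)
print(y[0][1][1], y[1] == x[1])  # 9.0 True
```

The output keeps the same number of channels as the input, so a PConv layer can replace a standard convolution without changing the shapes seen by the following layers.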

The computational cost and memory usage of PConv are significantly lower than those of standard convolution. Assuming a PConv that convolves $c_p$ of the $c$ channels of an $h \times w$ feature map with kernel size $k$, its computational cost is:

$$F_{PConv} = h w k^2 c_p^2$$

and its memory usage is approximately:

$$M_{PConv} = h w \cdot 2c_p + k^2 c_p^2 \approx h w \cdot 2c_p$$

Assuming $c_p = c/4$ channels for PConv, the computational cost falls to $1/16$ and the memory usage to about $1/4$ of the values for standard convolution.

Consequently, PConv proves superior to DSC in terms of memory efficiency and computational demands, making it an ideal choice for deployment on memory-constrained embedded devices. Thus, PConv has been selected as the primary convolution technique for the feature-extraction network, addressing both the efficiency and effectiveness required for UAV applications.
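The comparison can be made concrete with a short Python sketch. It assumes the common estimates in which memory access is dominated by reading the input and writing the output feature map plus reading the weights, and in which a PConv with a partial ratio of 1/4 convolves $c_p = c/4$ channels. The function names and the layer size (40 × 40, 64 channels, 3 × 3 kernel) are hypothetical, and the sixfold width for the depthwise case mirrors the MobileNetV2 expansion mentioned above.

```python
def conv_flops(h, w, c, k):
    """Multiply-accumulates of a standard k x k convolution with c in/out channels."""
    return h * w * k * k * c * c


def conv_mem(h, w, c, k):
    """Memory accesses: read input, write output, read weights."""
    return h * w * 2 * c + k * k * c * c


def pconv_flops(h, w, c, k, ratio=0.25):
    """Only c_p = ratio * c channels are convolved (c_p in, c_p out)."""
    c_p = int(c * ratio)
    return h * w * k * k * c_p * c_p


def pconv_mem(h, w, c, k, ratio=0.25):
    """Memory accesses of the convolved part; identity channels are not touched."""
    c_p = int(c * ratio)
    return h * w * 2 * c_p + k * k * c_p * c_p


def dwconv_mem(h, w, c_wide, k):
    """Depthwise convolution over an expanded width c_wide."""
    return h * w * 2 * c_wide + k * k * c_wide


# Illustrative layer (assumed values, not taken from the paper).
h, w, c, k = 40, 40, 64, 3
flop_ratio = pconv_flops(h, w, c, k) / conv_flops(h, w, c, k)
mem_ratio = pconv_mem(h, w, c, k) / conv_mem(h, w, c, k)
dw_vs_conv = dwconv_mem(h, w, 6 * c, k) / conv_mem(h, w, c, k)
print(flop_ratio, mem_ratio, dw_vs_conv)
```

Under these assumptions the PConv cost ratio is exactly 1/16, the memory ratio is slightly below 1/4 because the weight term shrinks faster than the feature-map term, and the widened depthwise layer accesses more memory than the standard convolution it replaces.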

3.2. Design of a Lightweight Backbone Network Based on FasterNet

PConv, by processing only a subset of feature channels, significantly reduces memory requirements compared to DSC. Consequently, the FasterNet backbone is integrated with PConv for efficient feature extraction.

Figure 5 illustrates the structure of the FasterNet backbone used in the model, comprising four groups of FasterNet modules. Each feature map initially passes through either an embedded layer (with a 4 × 4 kernel and a stride of 4) or a merging layer (with a 2 × 2 kernel and a stride of 2) before entering the FasterNet modules, facilitating downsampling and channel expansion.

Figure 5.

Structure of the FasterNet feature-extraction backbone.
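The downsampling schedule implied by the embedding layer and the three merging layers can be traced with a few lines of Python. This is a minimal sketch: the function name and the 352 × 352 input size are assumptions, and it relies on each layer using a kernel equal to its stride with no padding, so that the spatial size is simply divided by the stride.

```python
def stage_resolutions(height, width, strides=(4, 2, 2, 2)):
    """Spatial size after the embedding layer (stride 4) and each merging layer (stride 2)."""
    sizes = []
    for s in strides:
        height, width = height // s, width // s
        sizes.append((height, width))
    return sizes


# 352 x 352 is an assumed input size, chosen only because it divides evenly by 32.
sizes = stage_resolutions(352, 352)
print(sizes)  # [(88, 88), (44, 44), (22, 22), (11, 11)]
```

The four groups therefore operate at 1/4, 1/8, 1/16, and 1/32 of the input resolution.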

In the implementation, each FasterNet module comprises a 3 × 3 PConv with padding of 1 and a stride of 1, which convolves only a quarter of the input channels. This is followed by two pointwise convolutions, each with a 1 × 1 kernel and a stride of 1, which modulate the dimensions of the output from the PConv to ensure comprehensive integration and extraction of features. After the first pointwise convolution, the output is processed through a batch normalization (BN) layer followed by a ReLU activation function. The arrangement of FasterNet modules varies across the groups: one module in the first group, two in the second, eight in the third, and two in the fourth. The feature maps generated by the second, third, and fourth groups are then directed to a multi-scale feature-fusion module.

Originally, the FasterNet series included variants like FasterNet-T0/T1/T2 and FasterNet-S/M/L, differing primarily in the number of modules per group and the dimensions of features processed. The configuration of these dimensions, $c_1$, $c_2$, $c_3$, and $c_4$, is critical in determining the parameter count and computational cost. For instance, FasterNet-T0 features dimensions of 40, 80, 160, and 320, with a parameter count of 3.9 M and a computational cost of 0.34 GFLOPs. However, in the context of ultra-lightweight models, these figures are relatively high, with leading models like FastestDet exhibiting parameter counts as low as 0.24 M.
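To see how strongly the group widths drive the parameter count, the sketch below tallies the convolution weights in the FasterNet modules alone. It is an estimate under stated assumptions: a partial ratio of 1/4, an expansion factor of 2 between the two pointwise convolutions (not given in the text), and no biases, BN parameters, embedding or merging layers, or classifier. It therefore does not reproduce the 3.9 M total quoted for FasterNet-T0. The narrower width tuple is a hypothetical configuration used only for comparison.

```python
def module_params(c, partial_ratio=0.25, expansion=2):
    """Weights in one FasterNet module of width c (biases and BN ignored)."""
    c_p = int(c * partial_ratio)
    pconv = 3 * 3 * c_p * c_p           # 3 x 3 PConv on c_p channels
    pw_expand = c * (expansion * c)     # first 1 x 1 convolution
    pw_project = (expansion * c) * c    # second 1 x 1 convolution
    return pconv + pw_expand + pw_project


def backbone_module_params(widths, depths=(1, 2, 8, 2)):
    """Sum over the four groups; embedding and merging layers are left out."""
    return sum(d * module_params(c) for c, d in zip(widths, depths))


t0 = backbone_module_params((40, 80, 160, 320))      # widths quoted for FasterNet-T0
narrow = backbone_module_params((16, 32, 64, 128))   # hypothetical narrower widths
print(t0, narrow, narrow / t0)
```

Since every term is quadratic in the width, scaling all widths by a factor of 0.4 cuts the module weights to 16% of their original count, which is why reducing the feature dimensions is an effective route to an ultra-lightweight backbone.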

To address this shortcoming, the FasterNet structure is refined to create FasterNet-16, a more streamlined model tailored for embedded devices that enhances feature-extraction capabilities without the heavy computational load. Each FasterNet module in FasterNet-16 integrates a 3 × 3 PConv, two 1 × 1 pointwise convolutions, and residual connections to improve feature integration and system resilience.

Each FasterNet module not only includes PConv and pointwise convolutions but also introduces residual connections. Residual connections effectively mitigate the vanishing gradient problem in deep networks, promote feature reuse, and improve training efficiency and performance. As shown in Figure 5, the number of FasterNet modules in each group varies: the first group uses one module, the second group uses two, the third group uses eight, and the fourth group uses two. Ultimately, the multi-scale feature maps extracted by these modules are fed into the multi-scale feature-fusion module for feature fusion.

The detailed structure of the FasterNet-16 backbone is shown in Table 1. In Table 1, “Conv_k_c_s” denotes a standard convolution with kernel size k, output channel count c, and stride s. “PConv_k_c_s_r” represents a partial convolution with an additional parameter r, indicating the proportion of channels that undergo convolution. The table also gives the dimensions of the input feature map and the number of modules in each FasterNet group.

Table 1.

Detailed structure of FasterNet-16 backbone.

3.3. Multi-Scale Feature-Fusion Neck Module Based on PConv

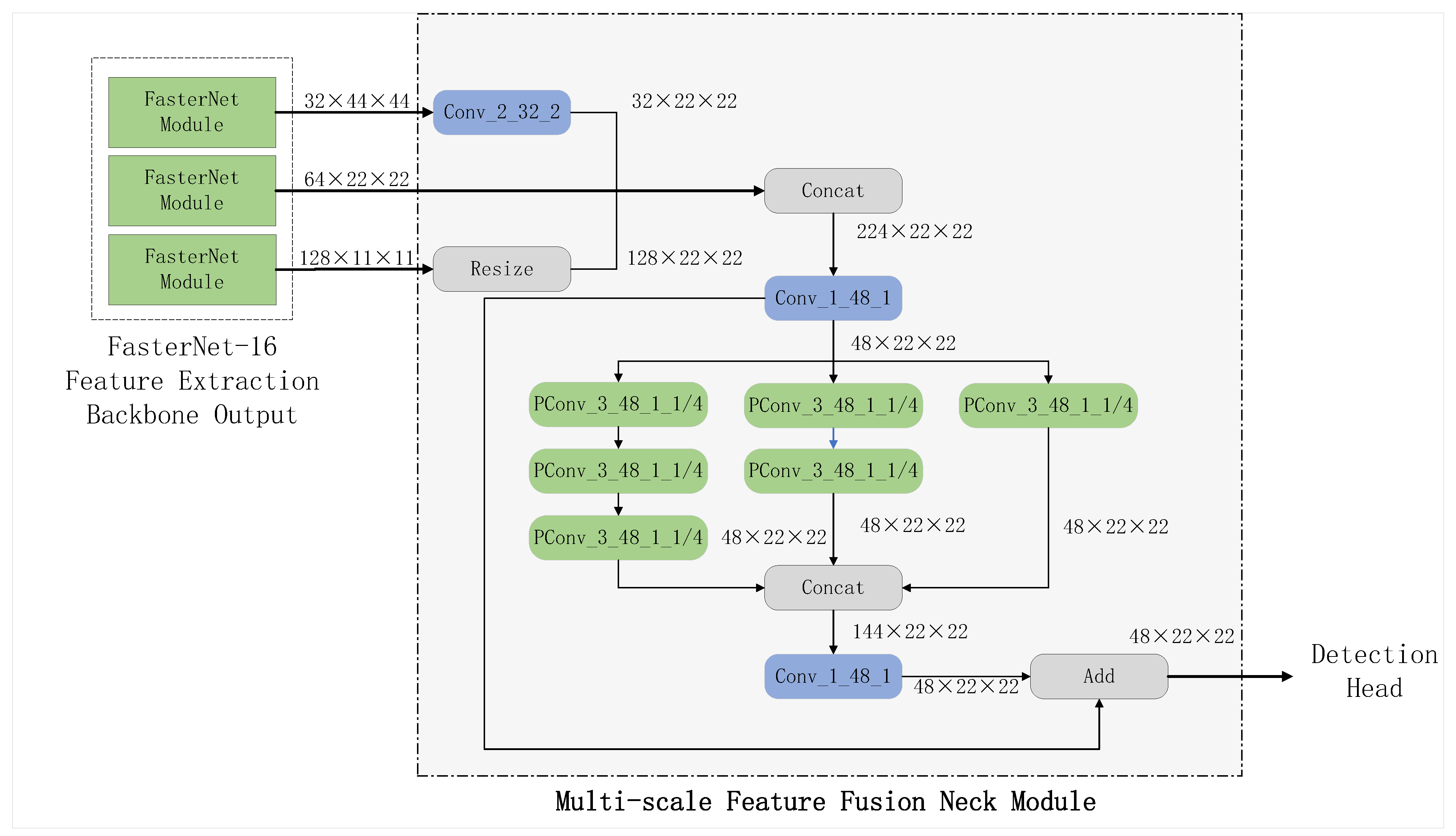

The multi-scale feature information extracted by the FasterNet-16 backbone, specifically the feature maps from the second, third, and fourth groups, generally requires further processing before being fed into the detection head for object classification and bounding box regression. A prevalent approach for feature fusion is the feature pyramid network (FPN). FPN effectively combines multi-scale feature information from both high-resolution shallow layers and semantically rich deep layers, thus enhancing the object-detection model’s efficacy by feeding these integrated feature maps into variously scaled detection heads.

However, in the context of ultra-lightweight object-detection models, the FPN module’s additional convolutional operations for feature fusion can become a computational burden. When integrated with the backbone network, these additional operations significantly impact the total computational cost, especially if the backbone itself is already resource-intensive. To address this, the model adopts a fusion strategy inspired by the YOLOF design, where the output feature maps from FasterNet-16 undergo spatial resolution adjustments and are then concatenated along the channel dimension to achieve efficient multi-scale feature stacking.

The three distinct sets of feature maps produced by FasterNet-16 are harmonized to the same spatial resolution before concatenation. This approach not only ensures computational efficiency but also integrates features across various levels, encapsulating both granular details and deep semantic insights.

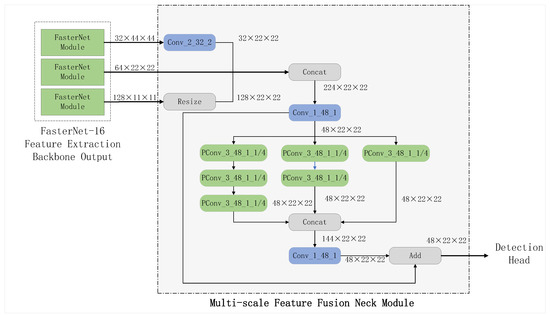

The total dimension of the stacked multi-scale features equals the combined dimensions of the three backbone output feature maps. This novel approach is illustrated in Figure 6 and is designated as the P-Inception structure, which allows for the capturing of diverse scale features within a single feature map, thus enriching feature representation and enhancing detection accuracy.
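The stacking step can be sketched in plain Python as a resize followed by a channel-wise concatenation. Several details here are assumptions rather than facts from the paper: nearest-neighbour resizing, alignment to the middle resolution, the function names, and the channel counts and spatial sizes of the three backbone outputs.

```python
def resize_nearest(plane, out_h, out_w):
    """Nearest-neighbour resize of one [h][w] feature plane (up- or downsampling)."""
    in_h, in_w = len(plane), len(plane[0])
    return [
        [plane[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def stack_features(feature_maps, out_h, out_w):
    """Resize every [c][h][w] map to out_h x out_w and concatenate along channels."""
    stacked = []
    for fmap in feature_maps:
        stacked.extend(resize_nearest(plane, out_h, out_w) for plane in fmap)
    return stacked


def zeros(c, h, w):
    """Helper that builds an all-zero [c][h][w] feature map."""
    return [[[0.0] * w for _ in range(h)] for _ in range(c)]


# Assumed shapes for the outputs of groups 2 to 4 (channels, height, width).
p2, p3, p4 = zeros(32, 44, 44), zeros(64, 22, 22), zeros(128, 11, 11)
# Assumption: align everything to the middle resolution; the text does not name the target.
fused = stack_features([p2, p3, p4], 22, 22)
print(len(fused), len(fused[0]), len(fused[0][0]))  # 224 22 22
```

The stacked map has 32 + 64 + 128 = 224 channels in this example, matching the statement that the total dimension equals the sum of the three backbone output dimensions. No learned parameters are involved in this step.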

Figure 6.

Multi-scale feature-fusion neck module based on PConv.

In the depicted P-Inception module (Figure 6), the central component is the newly designed multi-scale feature-fusion neck module. Following the stacking of the three backbone output feature maps, a 1 × 1 convolution performs dimension transformation before entering a pseudo-inception structure. This structure consists of three groups of PConv convolutions in varying quantities (1, 2, and 3), enabling diverse scale information fusion within a singular layer and maximizing the utilization of the extracted backbone features. Subsequent to the convolutional processing, the outputs of the three PConv groups are merged via another 1 × 1 convolution for further feature fusion and dimension alignment, ensuring that the output dimensions are consistent with those of the input feature map. Ultimately, the output feature map from the P-Inception module is reintegrated with its input via a residual connection, thereby enhancing feature reuse and stability in feature representation.

3.4. Detection Head and Loss Functions

In this research, a unified detection head architecture is employed, referred to as the coupled head, which integrates three key branches through a single convolutional neural network. This head simultaneously predicts class information, bounding box coordinates, and objectness probability, processing the feature maps output by the FasterNet-16 backbone. The design of the coupled head not only ensures efficient operation but also maximizes feature utilization.

To refine the model’s performance further, the following loss functions are implemented:

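1. Classification loss. The classification loss is computed as follows:

   $$L_{cls} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log\left(p_{i,c}\right)$$

   where $N$ is the number of samples, $C$ is the number of classes, $y_{i,c}$ represents the true label of sample $i$ belonging to class $c$, and $p_{i,c}$ represents the predicted probability of sample $i$ belonging to class $c$.

2. Bounding box regression loss. The bounding box regression loss is defined using the smooth L1 loss function:

   $$L_{reg} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{smooth}_{L1}\left(t_i - \hat{t}_i\right)$$

   where $t_i$ represents the true bounding box coordinates and $\hat{t}_i$ represents the predicted bounding box coordinates. The smooth L1 loss function is defined as:

   $$\mathrm{smooth}_{L1}(x) = \begin{cases} 0.5x^2, & |x| < 1 \\ |x| - 0.5, & \text{otherwise} \end{cases}$$

3. Objectness loss. The objectness loss, assessing the likelihood of an object’s presence, is calculated by:

   $$L_{obj} = -\frac{1}{N} \sum_{i=1}^{N} \left[ o_i \log\left(\hat{o}_i\right) + \left(1 - o_i\right) \log\left(1 - \hat{o}_i\right) \right]$$

   where $o_i$ represents the true label indicating whether sample $i$ is an object and $\hat{o}_i$ represents the predicted probability that sample $i$ is an object.

4. Combined loss function. The overall loss, combining the three individual components, is given by:

   $$L = \lambda_{cls} L_{cls} + \lambda_{reg} L_{reg} + \lambda_{obj} L_{obj}$$

   where $\lambda_{cls}$, $\lambda_{reg}$, and $\lambda_{obj}$ are the weights for the classification loss, regression loss, and objectness loss, respectively. These weights balance the contributions of each part of the loss to the total loss.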

By defining and strategically combining these specific loss functions, the coupled head model is optimized for effective training and performance across classification, regression, and objectness tasks, significantly boosting the overall efficacy of the object-detection system.
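The three loss terms and their weighted sum can be written out in a few lines of plain Python. This is a minimal sketch under stated assumptions: cross-entropy for classification, smooth L1 summed over box coordinates and averaged over boxes, binary cross-entropy for objectness, and unit weights as placeholders. The normalization by the number of samples, the small `eps` constant, and the function names are illustrative choices that the text does not specify.

```python
import math


def classification_loss(y_true, p_pred, eps=1e-12):
    """Mean cross-entropy over N samples; rows are one-hot labels / class probabilities."""
    n = len(y_true)
    total = 0.0
    for y_row, p_row in zip(y_true, p_pred):
        total -= sum(y * math.log(p + eps) for y, p in zip(y_row, p_row))
    return total / n


def smooth_l1(x):
    """0.5 * x^2 for |x| < 1, |x| - 0.5 otherwise."""
    return 0.5 * x * x if abs(x) < 1.0 else abs(x) - 0.5


def regression_loss(t_true, t_pred):
    """Smooth L1 summed over box coordinates, averaged over boxes."""
    n = len(t_true)
    return sum(
        smooth_l1(t - t_hat)
        for box, box_hat in zip(t_true, t_pred)
        for t, t_hat in zip(box, box_hat)
    ) / n


def objectness_loss(o_true, o_pred, eps=1e-12):
    """Mean binary cross-entropy."""
    n = len(o_true)
    return -sum(
        o * math.log(p + eps) + (1 - o) * math.log(1 - p + eps)
        for o, p in zip(o_true, o_pred)
    ) / n


def total_loss(l_cls, l_reg, l_obj, w_cls=1.0, w_reg=1.0, w_obj=1.0):
    """Weighted sum of the three terms; the default weights are placeholders."""
    return w_cls * l_cls + w_reg * l_reg + w_obj * l_obj


l_cls = classification_loss([[1, 0], [0, 1]], [[0.9, 0.1], [0.2, 0.8]])
l_reg = regression_loss([[0.0, 0.0, 1.0, 1.0]], [[0.5, 0.0, 1.0, 3.0]])
l_obj = objectness_loss([1, 0], [0.9, 0.2])
loss = total_loss(l_cls, l_reg, l_obj)
print(l_cls, l_reg, l_obj, loss)
```

In the regression example, the coordinate errors of 0.5 and 2 fall on either side of the switch point at 1, so the first is penalized quadratically (0.125) and the second linearly (1.5).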

4. Experimental Results

This section presents the experimental results, structured to evaluate the performance of the proposed model under various settings and conditions. First, the technical specifications and configurations of the embedded devices are detailed. The datasets and metrics that form the basis of the evaluation are then discussed, followed by the methods and benchmarks used for comparative analysis, with emphasis on the adaptability and efficiency of the models in realistic operational environments. Comprehensive performance evaluations offer insights into the models’ capabilities in handling real-world scenarios, particularly their responsiveness and accuracy in dynamic traffic conditions.

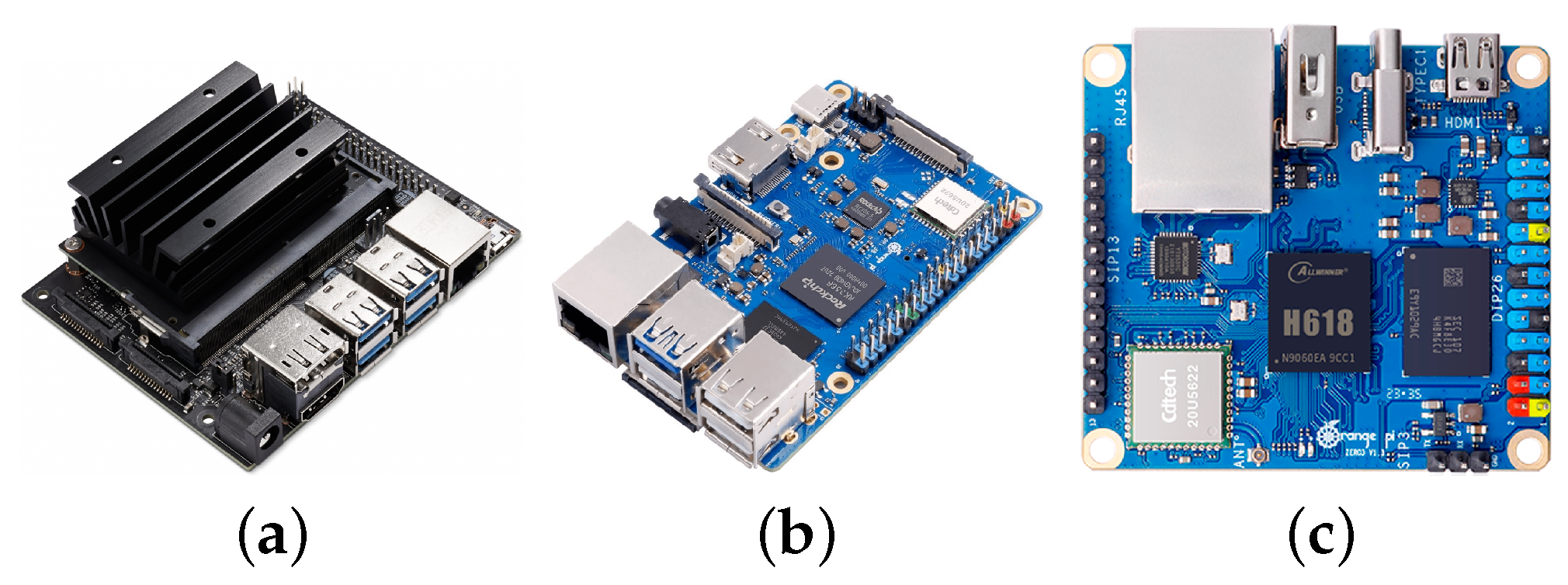

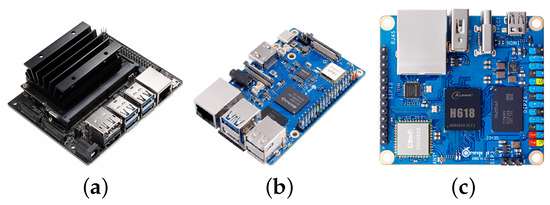

4.1. Embedded Devices

The embedded devices utilized in the experiments are shown in Figure 7. Table 2 provides detailed specifications of all the embedded devices employed in this study. The Rockchip RK3566 served as the primary embedded platform in the experiments to validate the precision of the proposed model and to measure its inference latency with different computing cores engaged. Comparative experiments are also conducted using the Nvidia Jetson Nano, which boasts abundant computing resources, and the AllWinner H618, known for its limited computing capabilities. These experiments underscore the adaptability of the model and its potential across diverse computational resource environments.

Figure 7.

The embedded devices. (a) Nvidia Jetson Nano; (b) Rockchip RK3566; (c) AllWinner H618.

Table 2.

Comparison of the embedded devices.

- Nvidia Jetson Nano: Developed by Nvidia, the Nvidia Jetson Nano is a compact embedded single-board computer tailored for accelerating machine learning and artificial intelligence [40,41,42]. It features a quad-core ARM Cortex-A57 processor and is equipped with 4 GB of memory.

- Rockchip RK3566: The RK3566 is a 64-bit high-performance embedded processor from Rockchip, comprising 4 ARM Cortex-A55 cores that can operate at a maximum frequency of 1.8 GHz [43,44,45]. The specific RK3566 development board utilized in the study is equipped with 2 GB of memory.

- AllWinner H618: Engineered by Zhuhai AllWinner Technology, the H618 is an embedded processor designed for applications in security surveillance and smart connectivity in the internet of things [46,47,48]. It operates on the ARM Cortex-A53 architecture and includes 4 processor cores with a peak frequency of 1.5 GHz. The H618 development board featured in this study is equipped with 1 GB of memory.

4.2. Dataset

Three datasets are employed to validate the performance of the lightweight UAV detection model. Primary evaluations, including precision, latency, and comprehensive performance assessments, are conducted using the VisDrone dataset, a standard in UAV lightweight model testing. The COCO dataset, with its larger objects and greater variety of categories, provides a different perspective to test the model’s generalization capabilities. Finally, the Traffic-Net dataset is utilized to specifically validate the model’s effectiveness in real-world traffic scenarios, demonstrating its practical application in UAV-based traffic management.

- VisDrone Dataset [49]: The VisDrone dataset, utilized for the experiments, is developed by the AISKYEYE team at Tianjin University, China. It consists of 288 video clips with 261,908 frames and 10,209 static images, captured across 14 cities in China under varying conditions using different drone models. This dataset includes over 2.6 million bounding boxes annotating diverse objects such as pedestrians, vehicles, and bicycles in various urban and rural settings. Additional data attributes like scene visibility, object class, and occlusion are provided to enhance the robustness of object-detection algorithms tested.

- COCO Dataset [50]: The COCO dataset offers a rich diversity of 80 common object categories. The images in the COCO dataset come from a wide range of scenes and environments, such as indoor and outdoor settings, urban and rural areas, which enhances the model’s ability to generalize across different contexts. Compared with the VisDrone dataset, the COCO dataset contains more object categories and larger targets, providing a diverse perspective to evaluate the detection model.

- Traffic-Net Dataset [51]: The Traffic-Net dataset contains 4400 images across four classes (accident, dense traffic, fire, and sparse traffic), with 900 images for training and 200 for testing in each class. Designed for training machine-learning models in real-time traffic-condition detection and monitoring, Traffic-Net enables systems to effectively perceive and respond to various traffic scenarios. Applying the model to this dataset evaluates its effectiveness in UAV-based traffic management.

4.3. Evaluation Metrics

The performance evaluation of the detection models focuses on several metrics that are crucial for real-time applications on embedded devices. Frames per second (FPS) measures how many frames the algorithm can process each second, indicating the model’s operational speed. Latency is assessed in milliseconds (ms), with an average taken over ten inference rounds to compensate for any computational fluctuations on edge GPUs. The size of the model is considered by examining the weight file size in megabytes (MB), which influences loading times and memory usage. For detection accuracy, mean average precision (mAP) is utilized, calculating the model’s precision across a range of intersection over union (IoU) thresholds from 0.5 to 0.95 in increments of 0.05. AP50, the area under the precision–recall curve at an IoU threshold of 0.5, provides a specific measure of how well the model discerns true positives from false positives, integrating both precision and recall in its calculation:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

where TP represents true positives, FP false positives, and FN false negatives.
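The quantities behind these accuracy metrics can be illustrated with a minimal Python sketch. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)`; the function names are illustrative and are not taken from the evaluation code used in this study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed layout


def precision(tp: int, fp: int) -> float:
    """Share of predicted boxes that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of ground-truth boxes that are found: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def map_iou_thresholds() -> List[float]:
    """IoU thresholds from 0.5 to 0.95 in steps of 0.05."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

A prediction counts as a true positive at a given threshold when its IoU with a matching ground-truth box reaches that threshold; mAP averages the resulting AP values over the ten thresholds, while AP50 uses only the first.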

4.4. Comparison Methods

For a thorough and relevant comparison, benchmarks from [17], which specialize in lightweight object-detection algorithms on Rockchip platforms, are employed. This includes models like YOLOv5s, YOLOv6n, YOLOx-nano, NanoDet_m, YOLO-fastestv1.1, YOLO-fastestv2, and FastestDet. YOLO-fastestv2 and FastestDet are selected to avoid redundancy and ensure the inclusion of high-performing models. Moreover, the inclusion of the high-performance, high-parameter YOLOv8s model provides a comprehensive assessment of the method against a spectrum of detection capabilities.

4.5. Methods Evaluation

Due to the time-intensive nature of validation precision assessments, which require processing all images in the validation set, validations are performed every 10 epochs. To evaluate the model against comparison methods, two additional models are trained at input resolutions of 352 × 352 and 320 × 320, respectively. This approach allows for assessing the proposed model’s performance across different resolutions and identifying the optimal balance of performance for various application scenarios. The server configuration used for training the models is outlined in Table 3. Training parameter settings are summarized in Table 4.

Table 3.

Experimental hardware and software.

Table 4.

Configuration of training parameters.

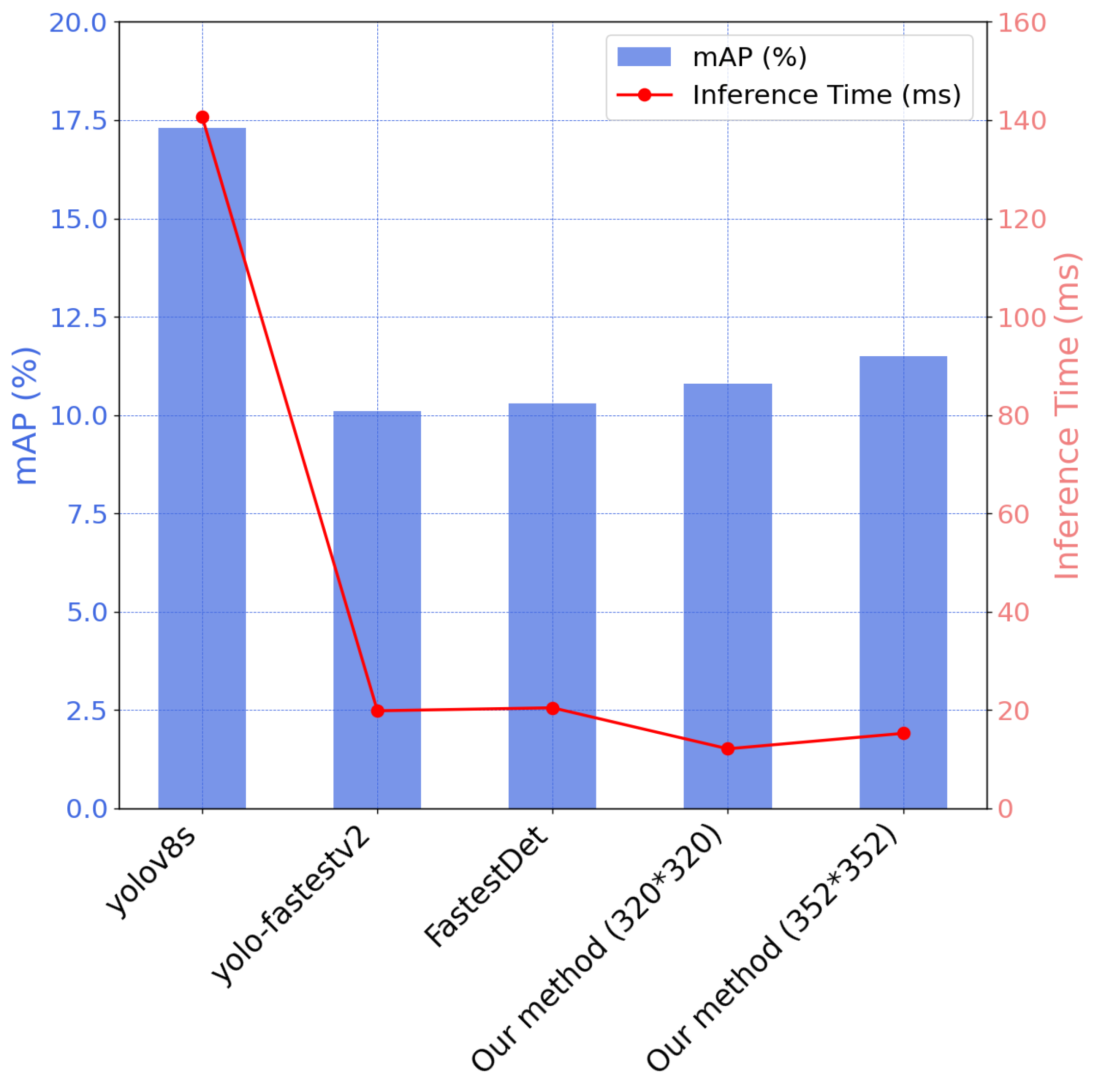

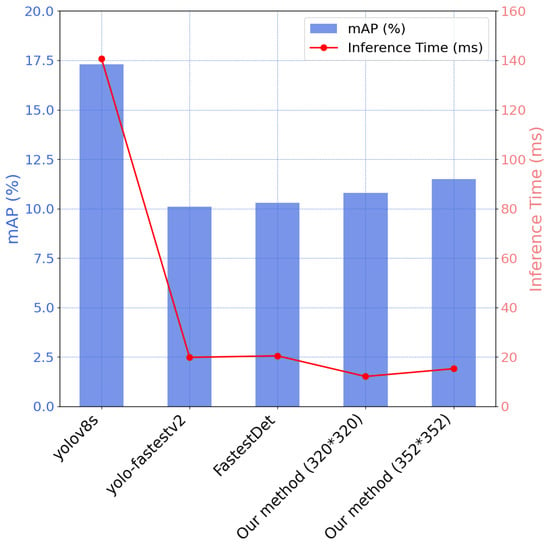

After training, the model is converted to ONNX format and deployed on three different embedded development boards using NCNN for inference testing. The performance comparison with state-of-the-art methods is shown in Table 5 and Figure 8. The inference times reported in Table 5 are obtained by deploying the models on the RK3566 development board using NCNN and averaging the outcomes of 100 inferences with the NCNN benchmark tool. On the RK3566 embedded platform, the proposed object-detection model balances precision and inference speed well, which is crucial for real-time applications in resource-constrained environments. YOLOv8s achieves a higher mAP of 17.3% and AP50 of 31.00%, but at the expense of a much longer inference time (140.72 ms). By comparison, at a trained resolution of 320 × 320, the proposed model attains a mAP of 10.8% and AP50 of 21% with an inference time of only 12.13 ms, more than ten times faster. Increasing the input resolution to 352 × 352 raises the inference time only to 15.27 ms while improving mAP to 11.5% and AP50 to 23.05%. These results highlight the proposed model’s ability to leverage computational resources effectively, making it particularly suitable for embedded systems where both speed of detection and low power consumption are paramount. Furthermore, the model’s parameter count of only 0.4035 M enhances its applicability for real-time object-detection tasks on embedded platforms that demand high efficiency.
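The averaging procedure described above can be sketched in a few lines of Python. This is only an illustration of the idea of averaging latency over repeated runs: the study itself uses the NCNN benchmark tool, and `infer` here is a hypothetical callable standing in for one forward pass. The warm-up count is likewise an assumption.

```python
import time
from statistics import mean
from typing import Callable


def average_latency_ms(infer: Callable[[], object],
                       rounds: int = 100,
                       warmup: int = 5) -> float:
    """Mean wall-clock latency of `infer` in milliseconds over `rounds` runs.

    `warmup` untimed calls are made first so that one-off start-up costs
    (cache fills, lazy allocations) do not skew the average.
    """
    for _ in range(warmup):
        infer()
    samples = []
    for _ in range(rounds):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)
```

Averaging over many rounds smooths out scheduling jitter on a shared embedded CPU, which is why a single timed run is not a reliable latency estimate.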

Table 5.

Comparison of the proposed model with object-detection models on RK3566.

Figure 8.

Comparison of mAP and inference time with object-detection models on RK3566.

The investigation uses the VisDrone dataset to test the object-detection algorithms across the embedded platforms under single-core, dual-core, and quad-core configurations.

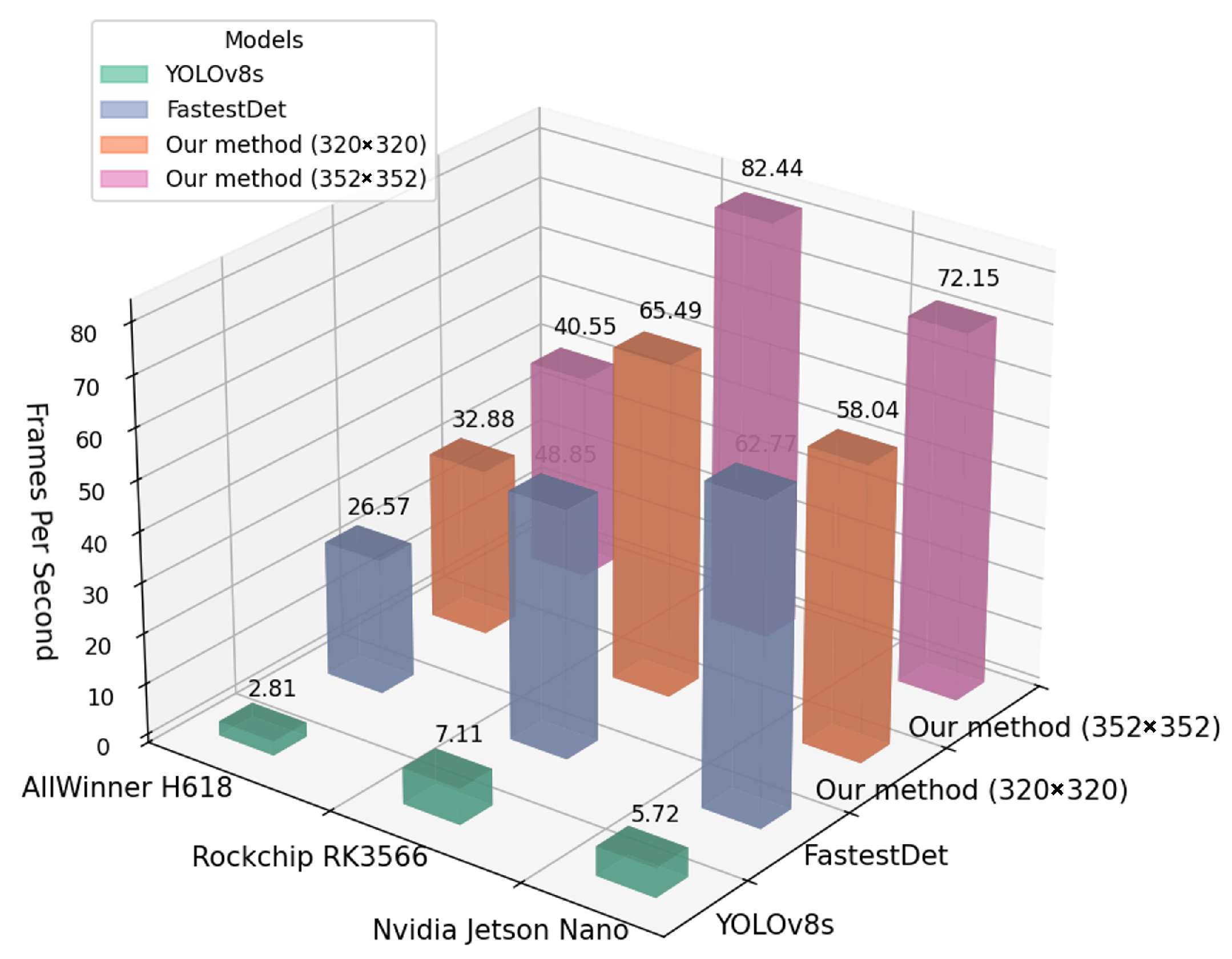

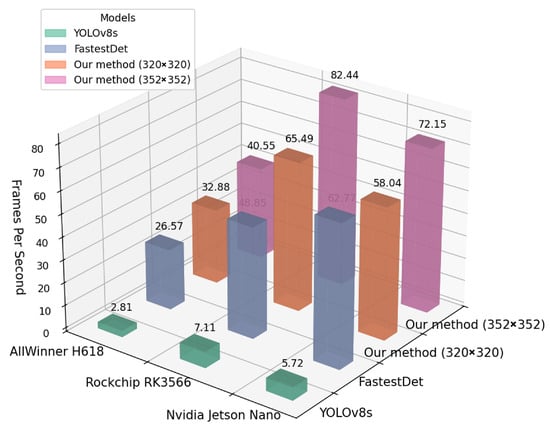

The initial analysis focuses on a quad-core configuration, where the proposed model demonstrates superior performance. Specifically, on the RK3566 platform, the model achieves an impressive 65.49 FPS, and on the H618, it reaches 32.88 FPS, markedly surpassing the FastestDet model, which achieves 48.85 FPS and 26.57 FPS, respectively, under identical conditions. This translates to an enhancement in inference speed of 34.06% for the RK3566 and 23.75% for the H618. Figure 9 distinctly illustrates these performance benefits, showing the proposed model’s higher frame rates across all tested platforms. Such results underscore the proposed model’s optimized processing efficiency, crucial for real-time object-detection tasks.
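The reported speed gains follow directly from the FPS figures in the paragraph above. The short Python sketch below reproduces the arithmetic; the function name is illustrative.

```python
def relative_speedup_pct(fps_new: float, fps_base: float) -> float:
    """Percentage gain in frame rate of `fps_new` over `fps_base`."""
    return (fps_new / fps_base - 1.0) * 100.0


# FPS values quoted in the text (proposed model vs. FastestDet, quad-core).
rk3566_gain = relative_speedup_pct(65.49, 48.85)
h618_gain = relative_speedup_pct(32.88, 26.57)

print(f"RK3566: {rk3566_gain:.2f}%")  # RK3566: 34.06%
print(f"H618:   {h618_gain:.2f}%")    # H618:   23.75%
```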

Figure 9.

Comparison of frames per second on embedded devices (quad-core).

Further evaluations are conducted to assess inference speeds across different computational core configurations on the embedded platforms, as shown in Table 6. Because computing modules in UAV operations typically handle multiple tasks simultaneously, such as flight control and battery management, the allocation of computational resources is pivotal. The proposed model is faster than FastestDet across all processor configurations. Notably, on the Nvidia Jetson Nano and Rockchip RK3566, the proposed model maintains consistent performance, with inference times of no more than 24.78 ms, which corresponds to approximately 40 FPS and thus fulfills the requirements for real-time processing. On the AllWinner H618 platform, the model remains usable even at the lowest configuration, with an inference time of approximately 56.32 ms, or nearly 18 FPS, which indicates real-time processing capability on resource-limited devices.
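Per-frame latency and frame rate are reciprocal, so the inference times quoted above convert to FPS with a one-line calculation. The helper below is a minimal sketch with an illustrative name.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second achievable at a given per-frame latency."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Worst-case latencies quoted in the text.
for name, latency in [("Jetson Nano / RK3566", 24.78), ("H618", 56.32)]:
    print(f"{name}: {latency} ms -> {fps_from_latency_ms(latency):.2f} FPS")
# Jetson Nano / RK3566: 24.78 ms -> 40.36 FPS
# H618: 56.32 ms -> 17.76 FPS
```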

Table 6.

Comparison of the different model inference speeds on embedded devices.

Additionally, the performance of the model is analyzed under varying memory constraints, highlighting its efficiency with a reduced parameter count. The inference times across platforms—from the Jetson Nano to the Rockchip RK3566, and the AllWinner H618—show the proposed model’s adaptability to different memory bandwidths. Specifically, the proposed model records inference times of 17.23 ms, 15.27 ms, and 30.41 ms, respectively, at a resolution of 352 × 352 on a quad-core setup, performing better than FastestDet under similar conditions. This demonstrates lower performance degradation and emphasizes the model’s capability to sustain high efficiency in environments with constrained memory bandwidth.

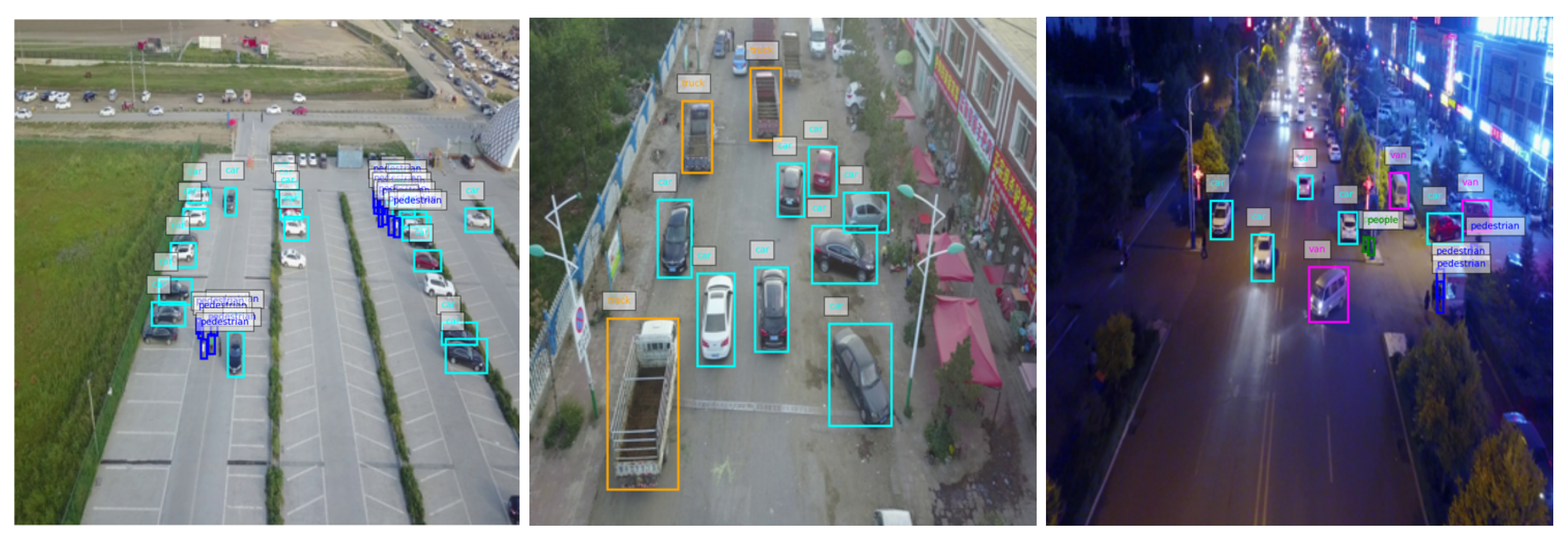

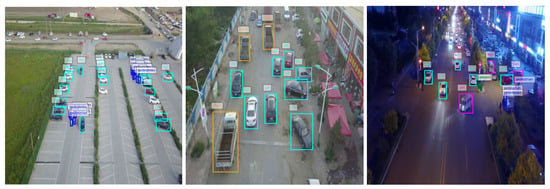

Figure 10 presents visualization samples from the proposed model (352 × 352) applied to the VisDrone dataset. Three trends are visible: detection precision is higher in daytime scenes than in nighttime scenes because of better lighting; larger objects such as vehicles are detected more reliably than smaller ones such as pedestrians; and precision declines under occlusion, where objects overlap or obscure each other. These observations underscore the need for further enhancements in low-light performance and occlusion management to improve the overall robustness of the model.

Figure 10.

Visualization samples of the proposed model (352 × 352) on the VisDrone dataset.

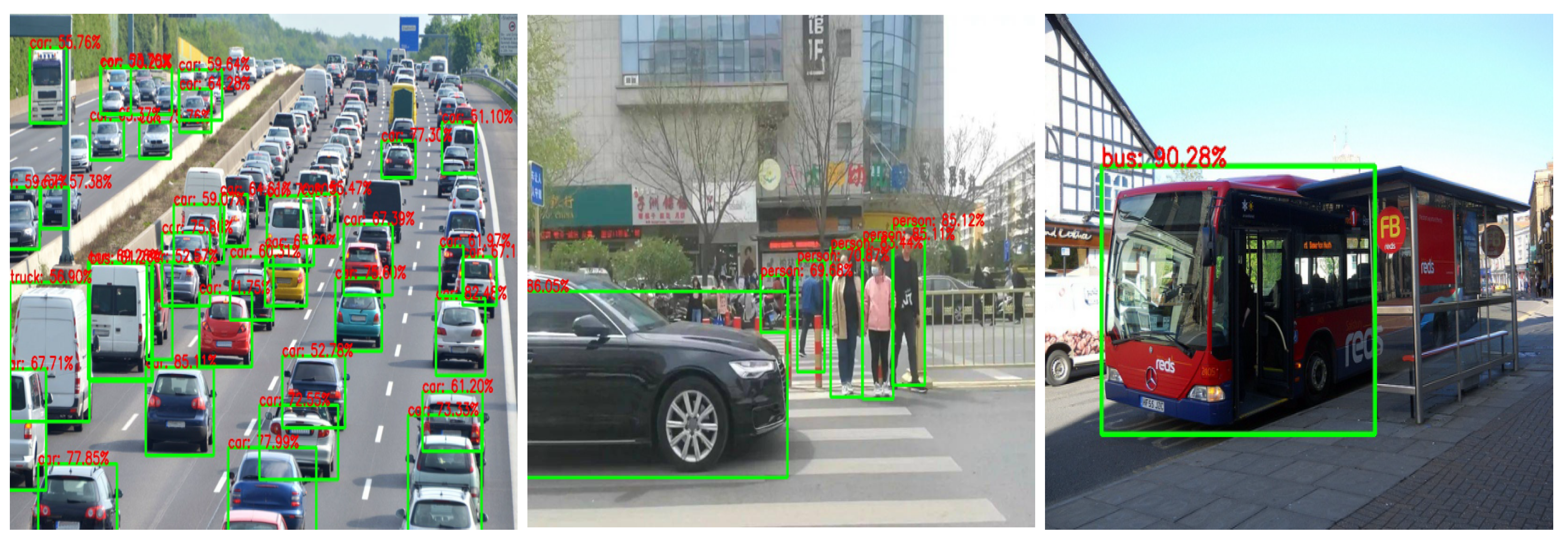

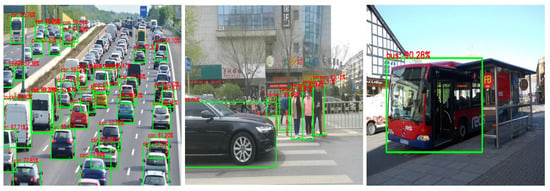

To further validate the model, the evaluation is expanded using the COCO dataset, focusing on three representative scenarios: large-scale traffic flow on highways, mixed pedestrian and vehicle environments, and single-vehicle settings, as shown in Figure 11. Because COCO images are taken from closer viewpoints and contain generally larger targets than VisDrone images, the proposed model detects the majority of objects within these traffic scenes. However, the model still shows room for improvement in detecting smaller, distant objects and in scenarios where objects are heavily occluded.

Figure 11.

Visualization samples of the proposed model (352 × 352) on the COCO dataset.

4.6. Comprehensive Performance Evaluation

To assess the advantages of the proposed model in terms of inference speed, power consumption, and deployment cost on embedded development boards, several key performance metrics are defined. Energy per frame (EPF) is calculated as $\mathrm{EPF} = PC \times T$, where $PC$ is the device power consumption (in watts) and $T$ is the per-frame inference time (in seconds). Cost per frame per second (CPFPS) is calculated as $\mathrm{CPFPS} = C / \mathrm{FPS}$, where $C$ is the device cost (in RMB) and $\mathrm{FPS} = 1/T$. Mean average precision (mAP) directly represents model accuracy: the higher, the better. To comprehensively reflect the model’s advantages in inference speed, power consumption, and deployment cost, a comprehensive performance indicator (CPI) is defined as a weighted average of these factors, with weights $w_1$, $w_2$, and $w_3$ that satisfy $w_1 + w_2 + w_3 = 1$.

Assuming the following data—inference time for the proposed model at 352 resolution on RK3566 is 15.27 ms, RK3566 power consumption is 3.5 W, deployment cost is 299 RMB, and mAP is 11.5%—EPF, CPFPS, and CPI can be calculated using the above formulas.
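As a worked example, the Python sketch below computes EPF and CPFPS from the figures quoted in this section. It assumes EPF is power multiplied by per-frame inference time and CPFPS is device cost divided by FPS. Under that assumption, the 320 × 320 inputs (12.13 ms) reproduce the 0.0425 J and 3.63 RMB values reported for Table 7. CPI is not computed here because the weights are not stated in this section.

```python
def energy_per_frame_j(power_w: float, latency_ms: float) -> float:
    """EPF in joules: device power (W) times per-frame inference time (s)."""
    return power_w * latency_ms / 1000.0


def cost_per_fps_rmb(cost_rmb: float, latency_ms: float) -> float:
    """CPFPS in RMB: device cost divided by achieved frames per second."""
    fps = 1000.0 / latency_ms
    return cost_rmb / fps


# RK3566 figures quoted in the text: 3.5 W, 299 RMB.
POWER_W, COST_RMB = 3.5, 299.0

for label, latency_ms in [("320 x 320", 12.13), ("352 x 352", 15.27)]:
    epf = energy_per_frame_j(POWER_W, latency_ms)
    cpfps = cost_per_fps_rmb(COST_RMB, latency_ms)
    print(f"{label}: EPF = {epf:.4f} J, CPFPS = {cpfps:.2f} RMB")
# 320 x 320: EPF = 0.0425 J, CPFPS = 3.63 RMB
# 352 x 352: EPF = 0.0534 J, CPFPS = 4.57 RMB
```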

The CPI values in Table 7 highlight the trade-offs between inference speed, accuracy, energy efficiency, and cost-effectiveness. The proposed models stand out, particularly the 320 × 320 variant, whose CPI of 34.02 is significantly higher than that of the other models. This result reflects its balanced metrics: an inference time of 12.13 ms, mAP of 10.8%, EPF of 0.0425 J, and CPFPS of 3.63 RMB. The 352 × 352 variant also performs well with a CPI of 27.61, demonstrating a good balance between higher precision (mAP of 11.5%) and efficiency. By contrast, YOLOv8s has the highest mAP but a significantly lower CPI (6.20) because of its high energy and cost per frame. The proposed methods achieve faster inference speeds and are less sensitive to memory bandwidth variations. These results highlight the proposed model’s efficiency in utilizing computational resources, making it well suited to real-time applications on cost-effective embedded platforms where both performance and operational costs are critical.

Table 7.

Comprehensive performance indicators (CPI) for different models.

4.7. UAV Application in Transportation Analysis

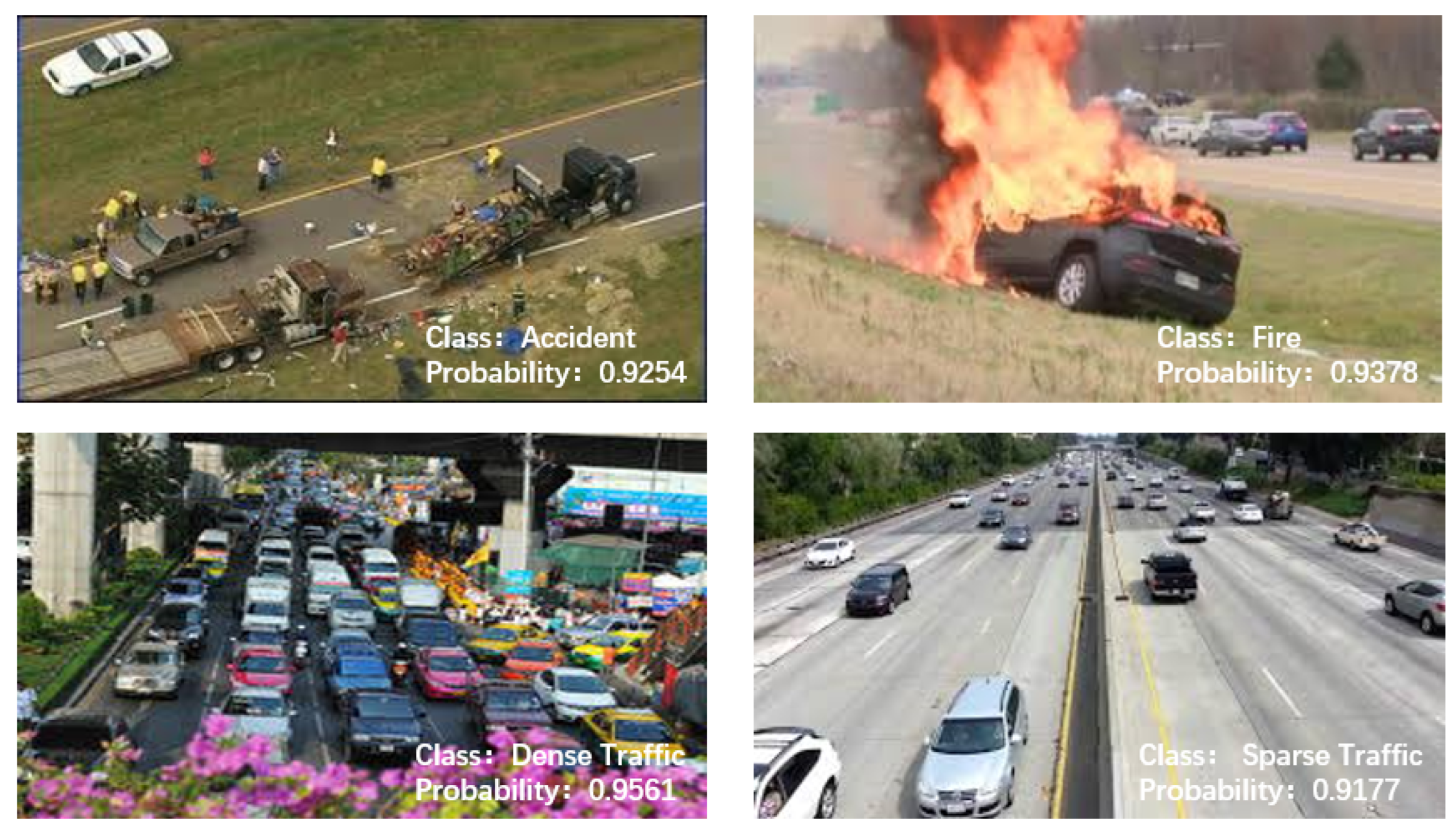

To demonstrate the practical usability of the model in real-world UAV applications, experiments are conducted using the Traffic-Net dataset. Applying the model to Traffic-Net validates its effectiveness in traffic condition monitoring, which is crucial for UAV-based traffic management systems. To align with the traffic condition classification task, the model is retrained while retaining its softmax loss function, which keeps the optimization process consistent. As shown in Figure 12, the proposed model recognizes and classifies the four traffic conditions (dense traffic, sparse traffic, fire incidents, and accidents) with an average latency of 12.53 ms. The high prediction accuracy across these varied scenarios underscores the model’s robustness and its potential for deployment in UAV systems tasked with real-time traffic monitoring. These results indicate that the proposed model can support UAVs in crucial tasks such as traffic surveillance, incident detection, and emergency response, making it a valuable tool for enhancing urban mobility and safety.
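For readers unfamiliar with the softmax loss mentioned above, the following Python sketch shows a generic softmax cross-entropy over the four Traffic-Net classes. It is a textbook formulation and not the authors’ training code; the class order and the example logits are assumptions made for illustration.

```python
import math
from typing import List, Tuple

# Class order is an assumption made for this illustration.
CLASSES = ["accident", "dense traffic", "fire", "sparse traffic"]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: List[float], target_index: int) -> float:
    """Softmax loss: negative log-probability assigned to the true class."""
    return -math.log(softmax(logits)[target_index])


def predict(logits: List[float]) -> Tuple[str, float]:
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


label, prob = predict([0.1, 2.0, -1.0, 0.3])  # hypothetical logits
print(label)  # dense traffic
```

The loss is small when the network assigns high probability to the correct traffic condition and grows as that probability falls, which is what drives retraining on the classification task.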

Figure 12.

Predictions on the Traffic-Net dataset across different traffic scenarios.

5. Discussion

In this paper, the power and cost constraints inherent to UAV platforms are rigorously considered. By utilizing the RK3566 embedded platform, which has significantly lower power consumption and cost than the Nvidia Jetson Nano, efficient object detection is achieved that not only meets but exceeds the performance of state-of-the-art methods like FastestDet and YOLO-FastestV2 in both detection precision and speed. This advancement pushes the boundaries of practical UAV applications, enabling more accessible and effective deployment of UAV systems in real-time scenarios. Specifically, a memory-efficient lightweight object-detection algorithm designed for embedded UAV platforms is presented. The proposed model, based on the FasterNet-16 backbone and PConv convolution, significantly reduces memory bandwidth requirements compared to traditional depthwise separable convolution (DSC), enabling more efficient utilization of limited computational resources in embedded devices and resulting in faster inference speeds. Extensive experiments demonstrate that the model outperforms current state-of-the-art lightweight detection models, such as FastestDet, in terms of inference speed, while maintaining competitive detection accuracy.

Despite these strengths, one of the main limitations of the proposed model is its lower detection precision compared to more complex algorithms running on higher-performance hardware. This trade-off is a direct consequence of the focus on creating a lightweight model that can operate effectively within the constraints of low-power embedded systems. As a result, while the model achieves satisfactory performance in many scenarios, it may not be as precise as more computationally intensive models in detecting smaller or more obscure objects, particularly in complex or cluttered environments.

6. Conclusions and Future Work

In this study, a novel, memory-efficient lightweight object-detection model specifically tailored for embedded UAV platforms in remote sensing is introduced, leveraging the FasterNet-16 backbone and optimized PConv convolution layers. The model significantly reduces memory bandwidth requirements compared to traditional depthwise separable convolutions, enabling more efficient computational resource use in embedded devices. In tests on platforms such as the Nvidia Jetson Nano and Rockchip RK3566, the model achieved a mean average precision (mAP) of 11.5% at an inference time of 15.27 ms for a 352 × 352 resolution on RK3566, significantly outperforming contemporary models like FastestDet and YOLOv8s in inference speed. This result underscores the model’s capability to balance computational efficiency and detection precision effectively for real-time object detection in UAV transportation applications.

In future research, the focus will be on enhancing the model’s performance by addressing the trade-off between accuracy and inference time. This will involve exploring advanced network architectures to achieve better speed and precision, integrating techniques such as hyperparameter tuning and attention mechanisms, and improving robustness across diverse environmental conditions. Additionally, user feedback will be gathered from UAV operators to identify practical challenges and areas for improvement in the model’s usability. In UAV traffic management and urban monitoring, drones must transmit detection results back to communication base stations, making UAV communication a critical area for future research. Ensuring secure communication channels is vital, especially in scenarios involving real-time data transmission and decision-making. The integrity, confidentiality, and availability of communication links between the UAV and its control center must be maintained to prevent malicious attacks such as data interception, spoofing, or jamming [52,53].

Author Contributions

Conceptualization, Z.L. and C.C.; methodology, Z.L.; validation, C.C., Z.H. and Y.C.C.; writing—original draft preparation, Z.L.; writing—review and editing, Z.L., C.C., Z.H., Y.C.C., L.L. and Q.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (62072360, 62172438), the key research and development plan of Shaanxi province (2021ZDLGY02-09, 2023-GHZD-44, 2023-ZDLGY-54), the National Key Laboratory Foundation (2023-JCJQ-LB-007), the Natural Science Foundation of Guangdong Province of China (2022A1515010988), Key Project on Artificial Intelligence of Xi’an Science and Technology Plan (23ZDCYJSGG0021-2022, 23ZDCYYYCJ0008, 23ZDCYJSGG0002-2023), Xidian-UTAR China Malaysia Science and Technology Institute-the Fundamental Research Funds for the Central Universities/XURF-2024-QTZX24089, and the Proof-of-concept fund from Hangzhou Research Institute of Xidian University (GNYZ2023QC0201, GNYZ2024QC004, GNYZ2024QC015).

Data Availability Statement

The data are available from the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xiao, T.; Chen, C.; Pei, Q.; Jiang, Z.; Xu, S. SFO: An adaptive task scheduling based on incentive fleet formation and metrizable resource orchestration for autonomous vehicle platooning. IEEE Trans. Mob. Comput. 2023, 23, 7695–7713.

- Sánchez-Montero, M.; Toscano-Moreno, M.; Bravo-Arrabal, J.; Serón Barba, J.; Vera-Ortega, P.; Vázquez-Martín, R.; Fernandez-Lozano, J.J.; Mandow, A.; García-Cerezo, A. Remote planning and operation of a UGV through ROS and commercial mobile networks. In Proceedings of the Iberian Robotics Conference, Zaragoza, Spain, 23–25 November 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 271–282.

- Liu, Z.; Chen, C.; Wang, Z.; Cong, L.; Lu, H.; Pei, Q.; Wan, S. Automated Vehicle Platooning: A Two-Stage Approach Based on Vehicle-Road Cooperation. IEEE Trans. Intell. Veh. 2024; early access.

- Lyu, X.; Li, X.; Dang, D.; Dou, H.; Wang, K.; Lou, A. Unmanned aerial vehicle (UAV) remote sensing in grassland ecosystem monitoring: A systematic review. Remote Sens. 2022, 14, 1096.

- Butilă, E.V.; Boboc, R.G. Urban traffic monitoring and analysis using unmanned aerial vehicles (UAVs): A systematic literature review. Remote Sens. 2022, 14, 620.

- Liu, Q.; Li, Z.; Yuan, S.; Zhu, Y.; Li, X. Review on vehicle detection technology for unmanned ground vehicles. Sensors 2021, 21, 1354.

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- Zhu, J.; Sun, K.; Jia, S.; Li, Q.; Hou, X.; Lin, W.; Liu, B.; Qiu, G. Urban traffic density estimation based on ultrahigh-resolution UAV video and deep neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2018, 11, 4968–4981.

- Nousi, P.; Mademlis, I.; Karakostas, I.; Tefas, A.; Pitas, I. Embedded UAV real-time visual object detection and tracking. In Proceedings of the 2019 IEEE International Conference on Real-time Computing and Robotics (RCAR), Irkutsk, Russia, 4–9 August 2019; pp. 708–713.

- Luo, X.; Wu, Y.; Wang, F. Target detection method of UAV aerial imagery based on improved YOLOv5. Remote Sens. 2022, 14, 5063.

- Niu, K.; Yan, Y. A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Images. In Proceedings of the 2023 2nd International Conference on Artificial Intelligence and Intelligent Information Processing (AIIIP), Hangzhou, China, 27–29 October 2023; pp. 57–60.

- Zhou, H.; Ma, A.; Niu, Y.; Ma, Z. Small-object detection for UAV-based images using a distance metric method. Drones 2022, 6, 308.

- Tang, G.; Ni, J.; Zhao, Y.; Gu, Y.; Cao, W. A survey of object detection for UAVs based on deep learning. Remote Sens. 2023, 16, 149.

- Amarasingam, N.; Salgadoe, A.S.A.; Powell, K.; Gonzalez, L.F.; Natarajan, S. A review of UAV platforms, sensors, and applications for monitoring of sugarcane crops. Remote Sens. Appl. Soc. Environ. 2022, 26, 100712.

- Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. Lwuavdet: A lightweight uav object detection network on edge devices. IEEE Internet Things J. 2024, 11, 24013–24023.

- Wu, W.; Liu, A.; Hu, J.; Mo, Y.; Xiang, S.; Duan, P.; Liang, Q. EUAVDet: An Efficient and Lightweight Object Detector for UAV Aerial Images with an Edge-Based Computing Platform. Drones 2024, 8, 261.

- Ma, X. FastestDet: Ultra Lightweight Anchor-Free Real-Time Object Detection Algorithm. 2022. Available online: https://github.com/dog-qiuqiu/FastestDet (accessed on 14 July 2022).

- Ahmed, F.; Mohanta, J.; Keshari, A.; Yadav, P.S. Recent advances in unmanned aerial vehicles: A review. Arab. J. Sci. Eng. 2022, 47, 7963–7984.

- Chen, C.; Wang, W.; Liu, Z.; Wang, Z.; Li, C.; Lu, H.; Pei, Q.; Wan, S. RLFN-VRA: Reinforcement Learning-based Flexible Numerology V2V Resource Allocation for 5G NR V2X Networks. IEEE Trans. Intell. Veh. 2024; early access.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle detection from UAV imagery with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6047–6067.

- Zhang, Z.; Zhu, L. A review on unmanned aerial vehicle remote sensing: Platforms, sensors, data processing methods, and applications. Drones 2023, 7, 398.

- Adade, R.; Aibinu, A.M.; Ekumah, B.; Asaana, J. Unmanned Aerial Vehicle (UAV) applications in coastal zone management—A review. Environ. Monit. Assess. 2021, 193, 154.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148.

- Chen, N.; Li, Y.; Yang, Z.; Lu, Z.; Wang, S.; Wang, J. LODNU: Lightweight object detection network in UAV vision. J. Supercomput. 2023, 79, 10117–10138.

- Hua, W.; Chen, Q.; Chen, W. A new lightweight network for efficient UAV object detection. Sci. Rep. 2024, 14, 13288.

- Yue, M.; Zhang, L.; Huang, J.; Zhang, H. Lightweight and Efficient Tiny-Object Detection Based on Improved YOLOv8n for UAV Aerial Images. Drones 2024, 8, 276.

- Fan, Q.; Li, Y.; Deveci, M.; Zhong, K.; Kadry, S. LUD-YOLO: A novel lightweight object detection network for unmanned aerial vehicle. Inf. Sci. 2024, 686, 121366.

- Yang, Z.; Li, Y.; Wang, B.; Ding, S.; Jiang, P. A lightweight sea surface object detection network for unmanned surface vehicles. J. Mar. Sci. Eng. 2022, 10, 965.

- Zhou, H.; Hu, F.; Juras, M.; Mehta, A.B.; Deng, Y. Real-time video streaming and control of cellular-connected UAV system: Prototype and performance evaluation. IEEE Wirel. Commun. Lett. 2021, 10, 1657–1661.

- Ghazali, M.H.M.; Teoh, K.; Rahiman, W. A systematic review of real-time deployments of UAV-based LoRa communication network. IEEE Access 2021, 9, 124817–124830.

- Cao, Z.; Kooistra, L.; Wang, W.; Guo, L.; Valente, J. Real-time object detection based on uav remote sensing: A systematic literature review. Drones 2023, 7, 620.

- Zhang, P.; Zhong, Y.; Li, X. SlimYOLOv3: Narrower, faster and better for real-time UAV applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Chen, J.; Kao, S.h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Chen, C.; Si, J.; Li, H.; Han, W.; Kumar, N.; Berretti, S.; Wan, S. A High Stability Clustering Scheme for the Internet of Vehicles. IEEE Trans. Netw. Serv. Manag. 2024, 21, 4297–4311.

- Ye, T.; Qin, W.; Zhao, Z.; Gao, X.; Deng, X.; Ouyang, Y. Real-time object detection network in UAV-vision based on CNN and transformer. IEEE Trans. Instrum. Meas. 2023, 72, 2505713.

- Cheng, Q.; Wang, H.; Zhu, B.; Shi, Y.; Xie, B. A real-time uav target detection algorithm based on edge computing. Drones 2023, 7, 95.

- Kaiser, L.; Gomez, A.N.; Chollet, F. Depthwise separable convolutions for neural machine translation. arXiv 2017, arXiv:1706.03059.

- Hossain, S.M.M.; Deb, K.; Dhar, P.K.; Koshiba, T. Plant leaf disease recognition using depth-wise separable convolution-based models. Symmetry 2021, 13, 511.

- Mittapalli, P.S.; Tagore, M.; Reddy, P.A.; Kande, G.B.; Reddy, Y.M. Deep learning based real-time object detection on jetson nano embedded gpu. In Microelectronics, Circuits and Systems: Select Proceedings of Micro2021; Springer: Berlin/Heidelberg, Germany, 2023; pp. 511–521.

- Süzen, A.A.; Duman, B.; Şen, B. Benchmark analysis of jetson tx2, jetson nano and raspberry pi using deep-cnn. In Proceedings of the 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 26–28 June 2020; pp. 1–5.

- Kareem, A.A.; Hammood, D.A.; Alchalaby, A.A.; Khamees, R.A. A performance of low-cost nvidia jetson nano embedded system in the real-time siamese single object tracking: A comparison study. In Proceedings of the International Conference on Computing Science, Communication and Security, Gandhingar, India, 6–7 February 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 296–310.

- Kim, J.; Kim, S. Hardware accelerators in embedded systems. In Artificial Intelligence and Hardware Accelerators; Springer: Berlin/Heidelberg, Germany, 2023; pp. 167–181.

- Liu, Q.; Wu, Z.; Wang, J.; Peng, W.; Li, C. Implementation and acceleration of driving behavior detection model based on Rockchip RV1126+ Yolov5s. In Proceedings of the 2024 International Conference on Power Electronics and Artificial Intelligence, Zhuhai, China, 23–25 August 2024; pp. 902–906.

- Pomšár, L.; Brecko, A.; Zolotová, I. Brief overview of Edge AI accelerators for energy-constrained edge. In Proceedings of the 2022 IEEE 20th Jubilee World Symposium on Applied Machine Intelligence and Informatics (SAMI), Poprad, Slovakia, 2–5 March 2022; pp. 461–466.

- Lee, J.K.; Jamieson, M.; Brown, N.; Jesus, R. Test-driving RISC-V Vector hardware for HPC. In Proceedings of the International Conference on High Performance Computing, Hamburg, Germany, 23–25 May 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 419–432.

- Shi, H. Research on the Application of the Internet of Things Based on A-IoT. In Proceedings of the 2022 6th International Seminar on Education, Management and Social Sciences (ISEMSS 2022), Chongqing, China, 15–17 July 2022; Atlantis Press: Dordrecht, The Netherlands, 2022; pp. 1496–1502.

- Brown, N.; Jamieson, M.; Lee, J.K. Experiences of running an HPC RISC-V testbed. arXiv 2023, arXiv:2305.00512.

- Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The vision meets drone object detection in image challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Cao, F.; Chen, S.; Zhong, J.; Gao, Y. Traffic Condition Classification Model Based on Traffic-Net. Comput. Intell. Neurosci. 2023, 2023, 7812276.

- Krichen, M.; Adoni, W.Y.H.; Mihoub, A.; Alzahrani, M.Y.; Nahhal, T. Security challenges for drone communications: Possible threats, attacks and countermeasures. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 9–11 May 2022; pp. 184–189.

- Ko, Y.; Kim, J.; Duguma, D.G.; Astillo, P.V.; You, I.; Pau, G. Drone secure communication protocol for future sensitive applications in military zone. Sensors 2021, 21, 2057.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).