Cadastral-to-Agricultural: A Study on the Feasibility of Using Cadastral Parcels for Agricultural Land Parcel Delineation

Abstract

1. Introduction

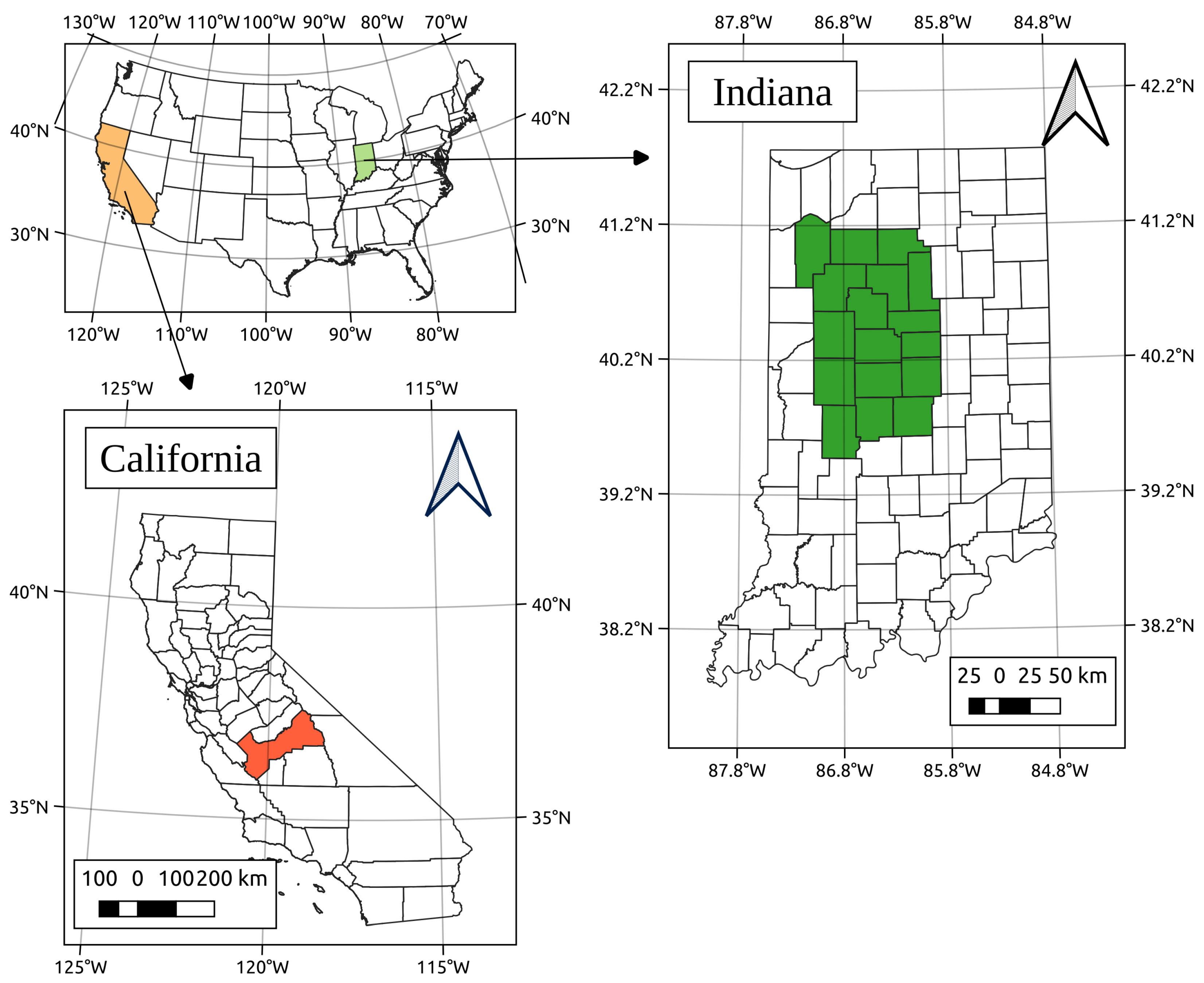

2. Study Area and Material

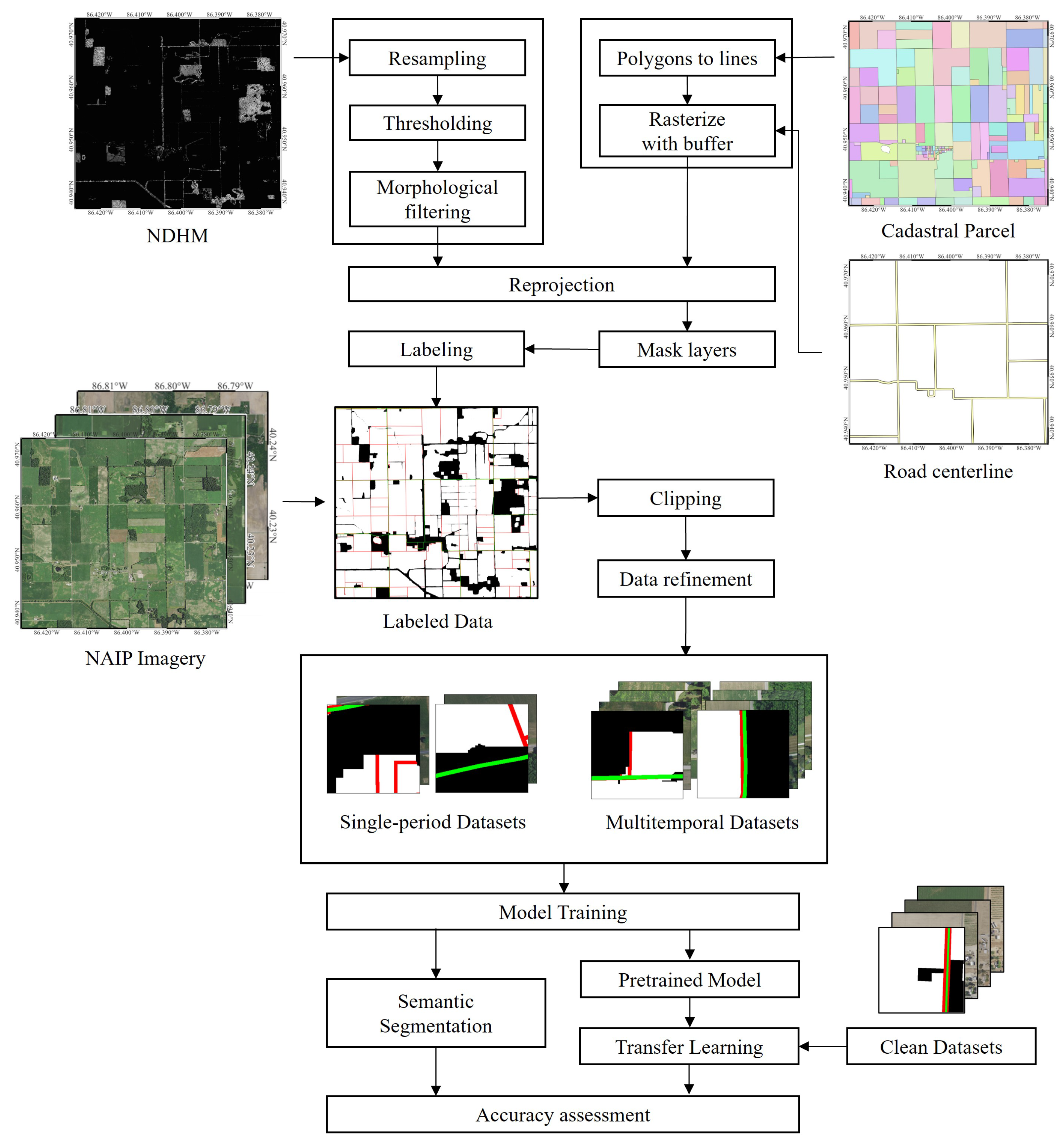

3. The Cad2Ag Framework

3.1. Class Definition for ALP Delineation

- Background: Non-agricultural land;

- Parcel: Agricultural land;

- Road: Road centerlines;

- Buffer: Boundaries between farmlands or between farmlands and other land types.
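The four classes above can be pictured as an integer label map. The sketch below is only an illustration of the class definitions, not the labeling workflow of this study: the integer codes, the `parcel_ids` raster (0 for non-agricultural land, a positive ID per farmland parcel), and the helper `mark_buffer` are all hypothetical. It relabels farmland pixels that touch a different parcel ID as Buffer; roads are left out for brevity.

```python
from enum import IntEnum

import numpy as np


class ALPClass(IntEnum):
    # Hypothetical integer codes; the source does not specify an encoding.
    BACKGROUND = 0
    PARCEL = 1
    ROAD = 2
    BUFFER = 3


def mark_buffer(parcel_ids):
    """Build a class map and relabel farmland pixels on a parcel boundary as BUFFER."""
    class_map = np.where(parcel_ids > 0, ALPClass.PARCEL, ALPClass.BACKGROUND)
    differs = np.zeros(parcel_ids.shape, dtype=bool)
    # Compare vertical and horizontal neighbours and flag both sides of each change.
    vertical = parcel_ids[:-1, :] != parcel_ids[1:, :]
    horizontal = parcel_ids[:, :-1] != parcel_ids[:, 1:]
    differs[:-1, :] |= vertical
    differs[1:, :] |= vertical
    differs[:, :-1] |= horizontal
    differs[:, 1:] |= horizontal
    # Only farmland pixels become BUFFER; non-agricultural pixels stay BACKGROUND.
    class_map[differs & (parcel_ids > 0)] = ALPClass.BUFFER
    return class_map


# Two farmland parcels (IDs 1 and 2) above a strip of non-agricultural land (ID 0).
parcel_ids = np.array([
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [0, 0, 0, 0],
])
labels = mark_buffer(parcel_ids)
print(labels)
```

In this toy raster the Buffer pixels appear both where parcel 1 meets parcel 2 and where either parcel meets non-agricultural land, matching the two cases named in the Buffer definition.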

3.2. Data Labeling Workflow

3.3. Data Refinement

4. Experimental Design

4.1. Dataset Construction and Assessment

4.2. Deep Learning Architecture

4.3. Performance Evaluation across Various Scenarios

4.4. Evaluation Metrics

4.5. Computational Environment

5. Experimental Results

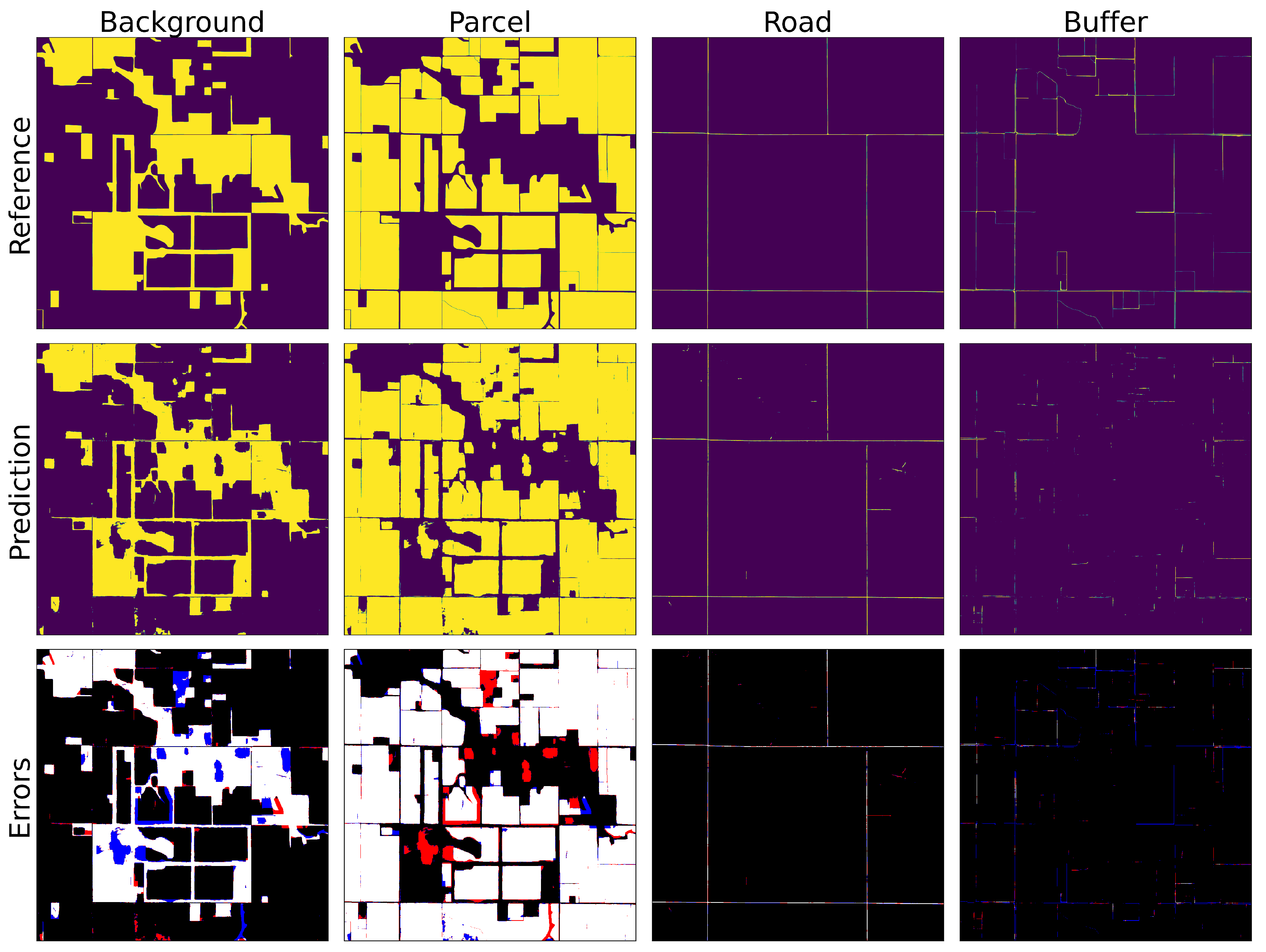

5.1. Generated Labels for Cad2Ag

5.2. Analysis of Single-Temporal Dataset Results

5.3. Evaluating the Impact of Multi-Temporal Data

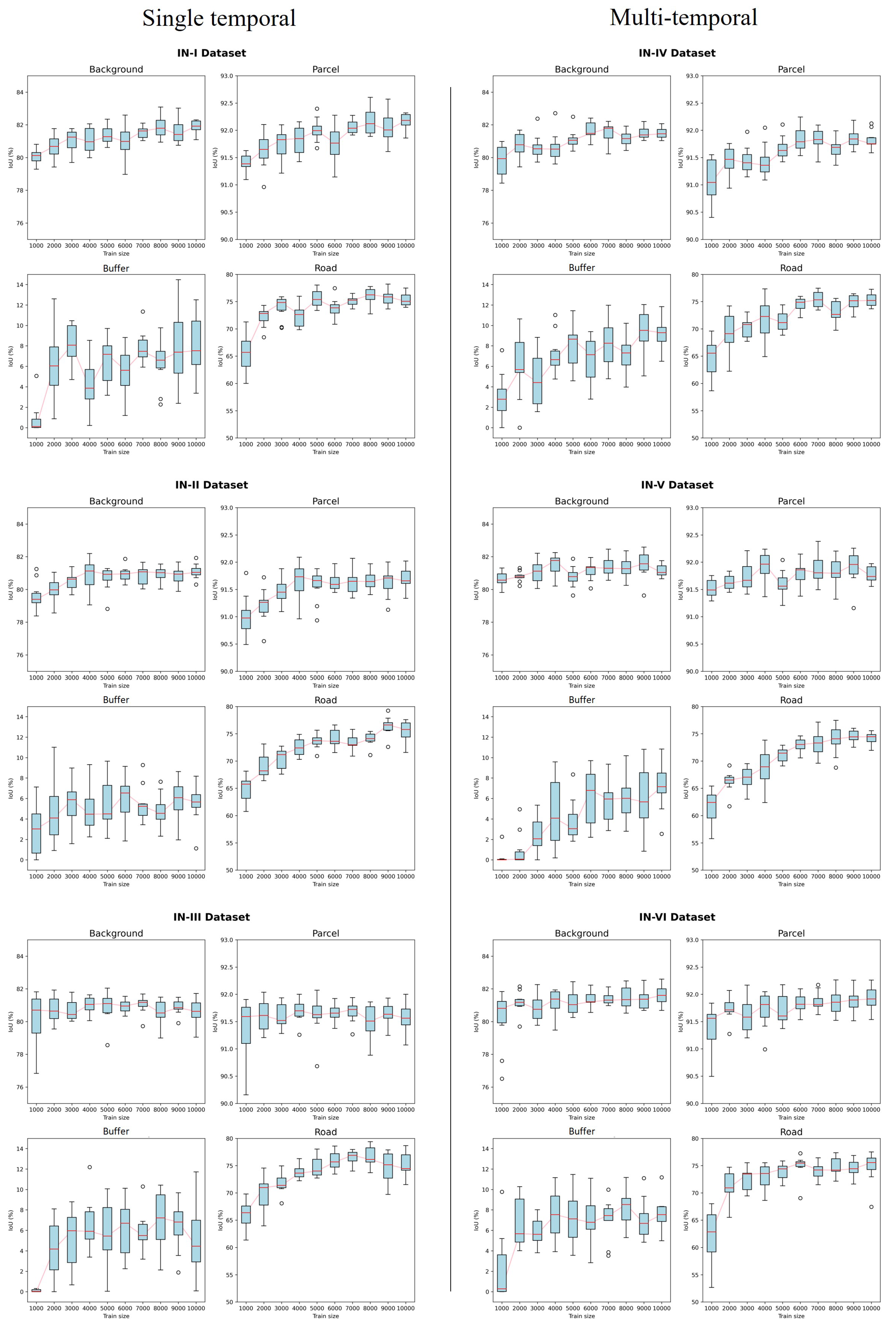

5.4. Evaluating the Impact of Training Data Size

5.5. Evaluating the Impact of Transfer Learning with Clean Labels

6. Discussion

6.1. The Significance of the Study

6.2. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Region | Dataset | Acquisition Date | Tiles | Patch Size | Primary Crop |
|---|---|---|---|---|---|
| Indiana | IN-I | 2016 | 32,000 | 512 × 512 × 4 | Corn |
| Indiana | IN-II | 2018 | 32,000 | 512 × 512 × 4 | Soybeans |
| Indiana | IN-III | 2020 | 32,000 | 512 × 512 × 4 | Soil, soybeans |
| Indiana | IN-IV | 2016 and 2018 | 32,000 | 512 × 512 × 8 | Corn, soybeans |
| Indiana | IN-V | 2016 and 2020 | 32,000 | 512 × 512 × 8 | Corn |
| Indiana | IN-VI | 2016, 2018, and 2020 | 32,000 | 512 × 512 × 12 | Corn, soybeans |
| California | CA-I | 2016, 2018, and 2020 | 200 | 512 × 512 × 12 | Almonds, grapes |
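The patch depths in the table (4, 8, and 12 channels) are consistent with stacking four-band images from one, two, or three acquisition dates along the channel axis. A minimal sketch of that stacking follows, assuming four bands per date and co-registered patches; the function name `stack_dates` and the placeholder arrays are hypothetical and stand in for real imagery.

```python
import numpy as np

BANDS_PER_DATE = 4  # assumption: four spectral bands per acquisition date
PATCH_SIZE = 512


def stack_dates(patches):
    """Concatenate co-registered (H, W, C) patches along the channel axis."""
    if len({p.shape[:2] for p in patches}) != 1:
        raise ValueError("all dates must share the same height and width")
    return np.concatenate(patches, axis=-1)


# Placeholder patches: each one is filled with its acquisition year.
patch_by_year = {
    year: np.full((PATCH_SIZE, PATCH_SIZE, BANDS_PER_DATE), year, dtype=np.float32)
    for year in (2016, 2018, 2020)
}

two_dates = stack_dates([patch_by_year[2016], patch_by_year[2018]])
three_dates = stack_dates([patch_by_year[y] for y in (2016, 2018, 2020)])
print(two_dates.shape)    # (512, 512, 8)
print(three_dates.shape)  # (512, 512, 12)
```

Stacking along the last axis keeps the bands of each date contiguous, so channels 0 to 3 come from the first date and channels 4 to 7 from the second.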

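| Metric | Formula | Equation |
|---|---|---|
| Precision (P) | $P = \dfrac{TP}{TP + FP}$ | (1) |
| Recall (R) | $R = \dfrac{TP}{TP + FN}$ | (2) |
| F1-Score (F1) | $F_1 = \dfrac{2 \cdot P \cdot R}{P + R}$ | (3) |
| Intersection over Union (IoU) | $\mathrm{IoU} = \dfrac{TP}{TP + FP + FN}$ | (4) |

Here TP, FP, and FN denote the true-positive, false-positive, and false-negative pixel counts for a given class.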
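The four metrics in the table above can be computed per class from paired reference and predicted labels. The sketch below uses the standard confusion-matrix definitions, which are assumed here to match the ones in this study; the function name `per_class_metrics` and the toy label sequences are hypothetical.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred, classes):
    """Per-class precision, recall, F1, and IoU from two flat label sequences."""
    pairs = Counter(zip(y_true, y_pred))  # (reference, predicted) -> pixel count
    results = {}
    for c in classes:
        tp = pairs[(c, c)]
        fp = sum(n for (t, p), n in pairs.items() if p == c and t != c)
        fn = sum(n for (t, p), n in pairs.items() if t == c and p != c)
        # Report 0.0 instead of dividing by zero when a class never occurs.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        results[c] = {"P": precision, "R": recall, "F1": f1, "IoU": iou}
    return results


classes = ["Background", "Parcel", "Road", "Buffer"]
y_true = ["Background", "Background", "Parcel", "Parcel", "Road", "Buffer"]
y_pred = ["Background", "Parcel", "Parcel", "Parcel", "Road", "Road"]

scores = per_class_metrics(y_true, y_pred, classes)
for name, values in scores.items():
    print(name, {k: round(v, 2) for k, v in values.items()})
```

In the toy data the single Buffer pixel is predicted as Road, so Buffer scores 0 on every metric while Road keeps full recall but loses precision. The same pattern (a thin class being absorbed by its neighbours) is what low Buffer recall in a results table would indicate.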

| Dataset | Metric | Background | Parcel | Road | Buffer | Macro-Averaged |
|---|---|---|---|---|---|---|
| IN-I | P | 0.95 | 0.94 | 0.81 | 0.36 | 0.77 |
| IN-I | R | 0.86 | 0.98 | 0.91 | 0.10 | 0.71 |
| IN-I | F1 | 0.90 | 0.96 | 0.86 | 0.15 | 0.72 |
| IN-I | IoU | 0.81 | 0.92 | 0.75 | 0.08 | 0.64 |
| IN-II | P | 0.94 | 0.93 | 0.83 | 0.36 | 0.77 |
| IN-II | R | 0.85 | 0.98 | 0.89 | 0.06 | 0.70 |
| IN-II | F1 | 0.90 | 0.96 | 0.86 | 0.10 | 0.70 |
| IN-II | IoU | 0.81 | 0.91 | 0.75 | 0.05 | 0.63 |
| IN-III | P | 0.95 | 0.93 | 0.84 | 0.45 | 0.79 |
| IN-III | R | 0.84 | 0.99 | 0.88 | 0.05 | 0.69 |
| IN-III | F1 | 0.89 | 0.96 | 0.86 | 0.09 | 0.70 |
| IN-III | IoU | 0.80 | 0.91 | 0.75 | 0.04 | 0.63 |
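The Macro-Averaged column is consistent with an unweighted mean of the four per-class scores. A quick arithmetic check using the IN-I rows is sketched below, with values copied from the table; the row between R and IoU is taken to be the F1-score, and the helper name `macro_average` is hypothetical.

```python
# Per-class scores for IN-I (order: Background, Parcel, Road, Buffer),
# paired with the macro-averaged value reported in the same row.
in_i = {
    "P": ([0.95, 0.94, 0.81, 0.36], 0.77),
    "R": ([0.86, 0.98, 0.91, 0.10], 0.71),
    "F1": ([0.90, 0.96, 0.86, 0.15], 0.72),
    "IoU": ([0.81, 0.92, 0.75, 0.08], 0.64),
}


def macro_average(scores):
    """Unweighted mean over classes: every class counts equally."""
    return sum(scores) / len(scores)


deviations = {}
for metric, (scores, reported) in in_i.items():
    mean = macro_average(scores)
    deviations[metric] = abs(mean - reported)
    print(f"{metric}: mean of classes = {mean:.4f}, reported = {reported:.2f}")
```

Every recomputed mean falls within rounding distance of the reported value. Because each class carries equal weight, the low Buffer scores pull the macro average well below the Background, Parcel, and Road scores.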

| Dataset | Metric | Background | Parcel | Road | Buffer | Macro-Averaged |
|---|---|---|---|---|---|---|
| IN-IV | P | 0.95 | 0.93 | 0.84 | 0.41 | 0.78 |
| IN-IV | R | 0.85 | 0.98 | 0.88 | 0.11 | 0.71 |
| IN-IV | F1 | 0.90 | 0.96 | 0.86 | 0.17 | 0.72 |
| IN-IV | IoU | 0.81 | 0.91 | 0.75 | 0.09 | 0.64 |
| IN-V | P | 0.95 | 0.93 | 0.83 | 0.38 | 0.77 |
| IN-V | R | 0.85 | 0.98 | 0.87 | 0.08 | 0.70 |
| IN-V | F1 | 0.90 | 0.96 | 0.85 | 0.13 | 0.71 |
| IN-V | IoU | 0.81 | 0.91 | 0.74 | 0.07 | 0.63 |
| IN-VI | P | 0.95 | 0.93 | 0.83 | 0.38 | 0.77 |
| IN-VI | R | 0.86 | 0.98 | 0.88 | 0.09 | 0.70 |
| IN-VI | F1 | 0.90 | 0.96 | 0.86 | 0.14 | 0.71 |
| IN-VI | IoU | 0.81 | 0.91 | 0.74 | 0.07 | 0.63 |

| Number of Clean Labels | Metric | Background | Parcel | Road | Buffer | Macro-Averaged |
|---|---|---|---|---|---|---|
| 0 Samples (No Fine-tuning) | P | 0.36 | 0.94 | 0.00 | 0.31 | 0.40 |
| 0 Samples (No Fine-tuning) | R | 0.62 | 0.87 | 0.00 | 0.47 | 0.49 |
| 0 Samples (No Fine-tuning) | F1 | 0.45 | 0.91 | 0.00 | 0.37 | 0.43 |
| 0 Samples (No Fine-tuning) | IoU | 0.29 | 0.82 | 0.00 | 0.22 | 0.33 |
| 50 Samples | P | 0.15 | 0.99 | 0.87 | 0.39 | 0.60 |
| 50 Samples | R | 0.89 | 0.54 | 0.52 | 0.64 | 0.65 |
| 50 Samples | F1 | 0.25 | 0.70 | 0.65 | 0.49 | 0.53 |
| 50 Samples | IoU | 0.14 | 0.54 | 0.48 | 0.32 | 0.37 |
| 100 Samples | P | 0.14 | 1.00 | 0.94 | 0.37 | 0.61 |
| 100 Samples | R | 0.92 | 0.51 | 0.32 | 0.65 | 0.60 |
| 100 Samples | F1 | 0.24 | 0.68 | 0.47 | 0.46 | 0.46 |
| 100 Samples | IoU | 0.13 | 0.50 | 0.30 | 0.29 | 0.31 |
| 150 Samples | P | 0.54 | 0.99 | 0.89 | 0.58 | 0.75 |
| 150 Samples | R | 0.85 | 0.92 | 0.76 | 0.87 | 0.85 |
| 150 Samples | F1 | 0.66 | 0.95 | 0.82 | 0.70 | 0.78 |
| 150 Samples | IoU | 0.49 | 0.90 | 0.69 | 0.53 | 0.66 |
| 200 Samples | P | 0.72 | 0.98 | 0.93 | 0.58 | 0.80 |
| 200 Samples | R | 0.77 | 0.95 | 0.68 | 0.90 | 0.83 |
| 200 Samples | F1 | 0.74 | 0.97 | 0.78 | 0.70 | 0.80 |
| 200 Samples | IoU | 0.58 | 0.93 | 0.64 | 0.53 | 0.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, H.S.; Song, H.; Jung, J. Cadastral-to-Agricultural: A Study on the Feasibility of Using Cadastral Parcels for Agricultural Land Parcel Delineation. Remote Sens. 2024, 16, 3568. https://doi.org/10.3390/rs16193568
