Abstract

Convolutional neural networks (CNNs) have significantly advanced the detection of arbitrary-oriented ships in synthetic aperture radar (SAR) images in recent years. However, multi-scale target detection and deployment on satellite-based platforms remain challenging because of the large number of model parameters and high computational complexity. To address these issues, we propose a lightweight method for arbitrary-oriented ship detection in SAR images, named LSR-Det. Specifically, we introduce a lightweight backbone network based on contour guidance, which reduces the number of parameters while maintaining excellent feature extraction capability. Additionally, a lightweight adaptive feature pyramid network is designed to enhance the fusion of ship features across different layers at a low computational cost by incorporating adaptive ship feature fusion modules between the feature layers. To efficiently utilize the fused features, a lightweight rotating detection head is designed that shares convolutional parameters, thereby improving the network’s ability to detect multi-scale ship targets. Experiments conducted on the SAR ship detection dataset (SSDD) and the rotated ship detection dataset (RSDD-SAR) demonstrate that LSR-Det achieves an average precision (AP50) of 98.5% and 97.2%, respectively, with 3.21 G floating point operations (FLOPs) and 0.98 M parameters, outperforming the current popular arbitrary-oriented SAR ship detection methods.

1. Introduction

Synthetic aperture radar (SAR), as a microwave imaging sensor, is widely used in marine monitoring, ocean development, terrain classification, and disaster prevention and control [1] owing to its all-day, all-weather, and long-range imaging capabilities. Ship detection is a crucial aspect of SAR maritime applications [2] and is of great significance for monitoring water transport in coastal areas, managing ports, and ensuring maritime safety and security [3].

The traditional SAR image ship detection methods primarily rely on manual feature extraction, which typically involves stages such as land–sea segmentation, image preprocessing, and target pre-screening. These methods mainly encompass constant false alarm rate (CFAR)-based methods [4], visual-saliency-based methods [5], polarization-decomposition-based methods [6], transform-domain-based methods [7], and global-threshold-based methods [8]. Among these, the CFAR method, which is based on the statistical distribution of sea clutter, is the most widely used. This method detects ship targets by statistically modeling the sea clutter, setting an adaptive threshold, and comparing the pixel’s gray value to be detected with this threshold. However, the CFAR algorithm is less versatile and requires re-modeling the distribution of sea clutter under different conditions. In practical scenarios, the distribution of sea clutter is influenced by numerous factors, such as wind and waves, making it challenging to accurately model. Consequently, the CFAR algorithm struggles to deliver optimal detection results in in-shore complex environments. Thus, the traditional SAR ship detection methods can no longer meet the current detection requirements.
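The thresholding idea behind CFAR can be illustrated with a minimal one-dimensional sketch. It assumes the simple cell-averaging variant, in which the clutter level is estimated as the mean of training cells around the cell under test; the function name, window sizes, and scale factor are hypothetical choices for illustration and are not taken from the cited works.

```python
def ca_cfar_1d(signal, guard=1, train=4, scale=3.0):
    """Cell-averaging CFAR: flag samples above scale * local clutter mean."""
    detections = []
    for i in range(len(signal)):
        # Training cells lie on both sides of the cell under test,
        # separated from it by `guard` cells so the target does not leak in.
        left = signal[max(0, i - guard - train): max(0, i - guard)]
        right = signal[i + guard + 1: i + guard + 1 + train]
        reference = left + right
        if not reference:
            continue
        clutter_mean = sum(reference) / len(reference)
        # The threshold adapts to the local clutter estimate.
        if signal[i] > scale * clutter_mean:
            detections.append(i)
    return detections


# Synthetic clutter with one bright sample at index 5 (illustrative data).
clutter = [1.0, 1.2, 0.9, 1.1, 1.0, 9.0, 1.0, 0.8, 1.1, 1.0, 1.2]
hits = ca_cfar_1d(clutter)
```

The scale factor plays the role of the false alarm rate setting: it would have to be re-derived whenever the assumed clutter distribution changes, which is the limitation noted above.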

In recent years, with the advancement of deep learning technology, target detection algorithms based on convolutional neural networks (CNNs) [9] have developed rapidly and have been applied to SAR image ship detection. Currently, SAR image ship detection methods are primarily divided into two categories: horizontal-bounding-box-based and oriented-bounding-box-based methods. In horizontal-bounding-box-based SAR ship detection, Ke et al. [10] replaced some traditional rigid convolutional kernels in Faster R-CNN [11] with deformable convolutional kernels that adaptively learn additional 2D offsets for the original kernels to better model the shapes of ships, thus improving the detection performance. Wang et al. [12] proposed an improved Faster R-CNN method for SAR ship detection in ports based on the MSER decision criterion, which replaces the threshold decision criterion of Faster R-CNN with the maximally stable extremal regions (MSER) method to re-evaluate the generated region proposals with higher scores, effectively reducing the false alarm rate and improving the detection accuracy. Zhu et al. [13] introduced an improved residual module and deformable convolution in the feature extraction network to enhance the feature extraction capability and redesigned the anchor box regression method to improve the target localization accuracy. Hu et al. [14] designed an anchor-free detection algorithm that balances local and nonlocal attention mechanisms, better utilizing contextual information to extract the semantic information of the image and improve the multi-scale ship detection capability. Li et al. [15] proposed an attention-guided balanced feature pyramid network, which mitigates the effects of complex background clutter and noise on ship detection, enhancing the detection performance for multi-scale ships. Cui et al. [16] introduced the spatial shuffle-group enhance (SSE) module in CenterNet, which extracts stronger semantic features and simultaneously suppresses part of the noise to reduce the false alarms caused by offshore and inland interference. Bai et al. [17] proposed an anchor-free detection network based on feature balancing and united attention, which aggregates and balances the semantic information at different levels of the feature pyramid using a global-context-guided feature balance pyramid (GC-FBP). Zhou et al. [18] proposed a step-by-step feature refinement backbone and pyramid network, which sequentially refines the position and silhouette of ships through a step-by-step spatial information decoupling function to reduce the multi-scale high-level semantic loss of the neighboring feature layers and improve the ship detection performance.

Most of the aforementioned methods employ horizontal bounding boxes (HBBs) for ship detection. However, the ship targets in SAR images exhibit significant directional differences, and using the traditional horizontal bounding boxes to detect inclined ship targets with large aspect ratios introduces more background clutter. In areas such as harbors where ships are densely distributed, the HBBs may overlap with other ship targets around the primary target, resulting in lower detection accuracy. In contrast, oriented bounding box (OBB)-based detectors can provide more precise localization and orientation information for ship targets and are more suitable for ship detection in SAR images, and they have therefore garnered significant attention. For instance, Guo et al. [19] used the upper-left offsets of two horizontal anchor points and an inclination factor to directly infer the coordinates of the four OBB vertices and designed a feature-adaptive module to enhance the target’s feature information. An et al. [20] proposed the DRBox-v2 algorithm, which employs a multilayer anchor box generation strategy and an improved anchor box coding method for ship target detection in SAR images, yielding better detection results. Yang et al. [21] approached the issue from the perspective of feature matching, decoupling the feature optimization processes of different tasks to alleviate the conflicts between the learning objectives, and proposed an improved rotated-box RetinaNet detection algorithm to address the mismatch between the ship targets and algorithm features. Wang et al. [22] combined the multi-scale contextual semantic information fusion (MCSIF) module and scattering points information learning (SPIL) module, proposing a two-stage network that incorporates ship scattering information learning for enhanced detection robustness. Chen et al. [23] designed a nonlocal attention module with a feature-oriented alignment module, solving the drawbacks of feature misalignment in the cascade optimization scheme and balancing the quality of the bounding box prediction with the speed of single-stage algorithms. Xu et al. [24] introduced an attention-weighted feature pyramid network to achieve high-quality semantic interaction and soft feature selection among the ship features of different resolutions and scales and designed a Triangle Distance IoU Loss to generate more accurate bounding boxes while accelerating the model convergence.
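The extra background enclosed by an HBB around an inclined ship can be quantified with simple geometry. The sketch below assumes the ship is an ideal w × h rectangle rotated by an angle θ; the function names and the example dimensions are illustrative only.

```python
import math


def hbb_of_obb(w, h, theta_deg):
    """Width and height of the axis-aligned box enclosing a rotated w x h box."""
    t = math.radians(theta_deg)
    hbb_w = w * abs(math.cos(t)) + h * abs(math.sin(t))
    hbb_h = w * abs(math.sin(t)) + h * abs(math.cos(t))
    return hbb_w, hbb_h


def background_fraction(w, h, theta_deg):
    """Share of the HBB area that is not covered by the oriented box."""
    hbb_w, hbb_h = hbb_of_obb(w, h, theta_deg)
    return 1.0 - (w * h) / (hbb_w * hbb_h)


# A 100 x 20 ship (aspect ratio 5:1) at 0 and 45 degrees.
aligned = background_fraction(100, 20, 0)
inclined = background_fraction(100, 20, 45)
```

Under these assumptions, roughly 72% of the HBB around the 45° ship is background, whereas the OBB encloses none, which illustrates why large-aspect-ratio inclined targets suffer most from horizontal boxes.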

Although the aforementioned SAR ship target detection methods have achieved high detection accuracy, they improve accuracy at the expense of high network complexity, which poses challenges for subsequent application and deployment. In response, some scholars have researched lightweight ship detection models. Chen et al. [25] proposed a dense connection method, integrating the outputs of three different scales through upsampling and cascading operations, fully merging low-resolution features with high-resolution features, and combining network pruning [26] and knowledge distillation operations to construct a high-precision small-scale SAR real-time ship detector. Guo et al. [27] combined depthwise separable convolutions and MobileNet and proposed the depthwise adaptive spatial feature fusion (DSASFF) module, achieving a lightweight, fast, and accurate SAR ship target detection algorithm. Liu et al. [28] introduced the Kullback–Leibler Divergence (KLD) loss function and BRA attention mechanism, enhancing the detection accuracy of small ship targets, and designed the lightweight P-ELAN structure by adjusting the width and depth of the model, reducing the network parameters and saving computational resources.

Although these methods partially address the issue of the high computational complexity of the models, several problems remain. Firstly, detecting ship targets in complex backgrounds is still a challenge. Due to the imaging mechanism of SAR, a certain amount of speckle noise is generated, making it difficult to extract the ship feature information, thereby increasing the difficulty of distinguishing ship targets from nearshore buildings and other interferences. Secondly, the multi-scale differences in the ship target sizes in different images, influenced by the different imaging resolutions of the various SAR working modes and the volume sizes of the ships themselves, increase the detection difficulty.

In summary, addressing the challenge of arbitrary-direction ship target detection with a low computational cost while enhancing the multi-scale detection capability is urgent. To this end, we propose a lightweight anchor-free method for arbitrary-direction ship detection in SAR images, named LSR-Det. Firstly, we introduce a lightweight backbone network (LCGNet) based on contour and spatial information guidance. This network is mainly constructed using the contour-guided feature aggregation module (CGFAM) and the lightweight feature extraction module (LFEM), which efficiently extract ship features from SAR images. Secondly, to improve the ship target feature fusion performance, we design a lightweight adaptive feature pyramid network (LAFPN). By incorporating the adaptive ship feature fusion module (ASFM) into different feature layers of the feature pyramid network (FPN), the network can adaptively learn the subtle features in ship images and suppress the background clutter noise. Finally, we propose a lightweight rotation detection head (LRDHead) network that employs a shared convolutional parameters strategy to address the imbalance in the number of samples from ships of different scales, reducing the computational costs while enhancing the network’s ability to detect multi-scale ship targets in SAR images.

The main contributions of this paper are as follows:

- A lightweight contour-guided backbone network (LCGNet) is designed. The backbone network constructed using the contour-guided feature aggregation module (CGFAM) and lightweight feature extraction module (LFEM) can perform the extraction of SAR image ship features more efficiently and at the same time provide lower computational complexity.

- The lightweight adaptive feature pyramid network (LAFPN) is designed. It improves the model’s ability to perceive ship position information and can achieve the multi-scale feature-adaptive fusion of ship features in SAR images with lower parameters.

- The lightweight rotation detection head (LRDHead) network is designed. The design of shared convolutional parameters can reduce the number of parameters and computational volume of the detection head network and at the same time enhance the multi-scale detection capability of the model.

- The experimental results on the SAR ship detection dataset (SSDD) and rotated ship detection dataset in SAR images (RSDD-SAR) show that our proposed modules are effective, and, compared with the other arbitrary-direction target detectors, LSR-Det obtains higher AP50 and F1 scores with fewer parameters and less computation.

2. Methodology

2.1. Overall Architecture

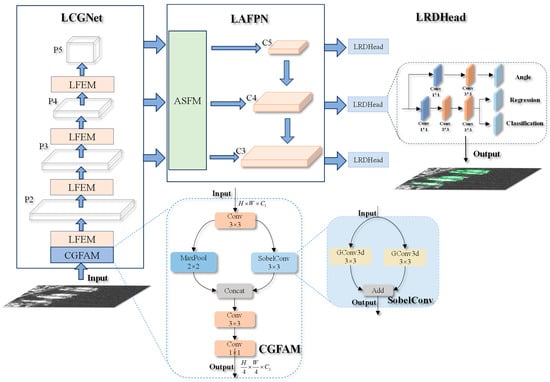

In this paper, we propose an arbitrary-direction ship detection method named LSR-Det, whose overall structure is illustrated in Figure 1. The backbone network is LCGNet, which primarily consists of CGFAM and multiple LFEMs. The feature maps output from the last three stages of the backbone network, denoted as {P3, P4, P5}, are used as inputs to the neck network. The proposed neck network, LAFPN, processes these inputs using ASFMs between adjacent feature layers to produce the output feature maps {C3, C4, C5}. The features in different layers vary in size: the three output feature maps are 1/8, 1/16, and 1/32 of the input image size, respectively, and are used for predicting small, medium, and large ship targets. The proposed head network, LRDHead, is divided into three branches: classification, regression, and angle prediction. It shares convolutional parameters across layers to address the imbalance in sample numbers at different scales, thereby enhancing the network’s multi-scale detection capability. Additionally, the loss function used in the proposed method comprises a classification loss and a rotated bounding box regression loss.

Figure 1. Overall architecture of LSR-Det.
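As a small worked example of the scale relationship described above, the following sketch computes the spatial size of the three output feature maps for the 640 × 640 input used in the experiments. The function name is hypothetical, and integer division is an assumption that holds when the input size is a multiple of 32.

```python
def pyramid_sizes(input_size=640, strides=(8, 16, 32)):
    """Side length of each output feature map for a square input image."""
    return {stride: input_size // stride for stride in strides}


sizes = pyramid_sizes()
```

For a 640 × 640 input, this gives 80 × 80, 40 × 40, and 20 × 20 feature maps, used for small, medium, and large ship targets, respectively.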

2.2. Lightweight Contour-Guided Backbone Network (LCGNet)

Compared to optical images, ship targets in SAR images often exhibit less pronounced feature information, relatively blurred contours, and are prone to interference from nearby shore buildings. Thus, efficiently extracting ship features in SAR images presents a challenging problem. To address this, we propose CGFAM and LFEM and use them to reconstruct CSPDarknet53 by replacing the convolutional blocks in its top layer and a series of CSP modules. We refer to the improved backbone network as LCGNet, as illustrated in Figure 1. LCGNet effectively enhances the feature extraction and information transfer capabilities of the backbone network while reducing the number of parameters and computational load. Further details on CGFAM and LFEM are provided in the following paragraphs.

CGFAM efficiently implements the Sobel operator using 3D group convolution and integrates it with pooling operations, enabling effective extraction of the ship’s contour and spatial information. The structure of CGFAM is illustrated in Figure 1. Initially, the input image features, denoted as $X$, are downsampled using a 3 × 3 convolution with a stride of 2. These features are then divided into two branches: one branch undergoes feature extraction via the Sobel operator, which detects abrupt changes in image intensity and thus effectively captures edge features of the ship target. The other branch performs a pooling operation to extract the spatial features of the ship. The results from these two branches are then concatenated to fuse the edge and spatial information, enhancing the network’s ability to comprehend the ship target. Subsequently, downsampling is performed again using a 3 × 3 convolution with a stride of 2, followed by channel alignment through a 1 × 1 convolution to produce the output P2 layer feature map. Notably, we efficiently construct the Sobel operator using a 3D group convolution, which provides a more computationally economical approach. The entire process can be summarized as follows:

$$
\begin{aligned}
F_1 &= \mathrm{Conv}_{3\times3}(X),\\
F_2 &= \mathrm{Concat}\big(\mathrm{Sobel}(F_1),\ \mathrm{MaxPool}(F_1)\big),\\
P_2 &= \mathrm{Conv}_{1\times1}\big(\mathrm{Conv}_{3\times3}(F_2)\big),
\end{aligned}
$$

where $\mathrm{Conv}_{3\times3}$ and $\mathrm{Conv}_{1\times1}$ denote convolution operations of size 3 × 3 and 1 × 1, $\mathrm{Sobel}$ denotes the Sobel operators, $\mathrm{MaxPool}$ denotes the 2 × 2 max pooling operation, $\mathrm{Concat}$ denotes channel-wise concatenation, and $F_1$ and $F_2$ are intermediate feature maps.
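The two-branch idea of CGFAM (edge response plus pooled spatial response, then concatenation) can be sketched on a single-channel toy image. This is only an illustration under stated assumptions: it uses plain Python loops rather than the 3D group convolution described above, zero padding for the Sobel filters, and a stride-1 max pooling so that both branches keep the same size; all function names are hypothetical.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter3x3(img, kernel):
    """Zero-padded 3x3 cross-correlation that keeps the input size."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += img[rr][cc] * kernel[dr + 1][dc + 1]
            out[r][c] = acc
    return out


def sobel_edges(img):
    """Edge branch: |Gx| + |Gy| highlights abrupt intensity changes."""
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    return [[abs(a) + abs(b) for a, b in zip(ra, rb)] for ra, rb in zip(gx, gy)]


def maxpool2x2_same(img):
    """Spatial branch: 2x2 max pooling, stride 1, windows clipped at the border."""
    h, w = len(img), len(img[0])
    return [[max(img[rr][cc]
                 for rr in range(r, min(r + 2, h))
                 for cc in range(c, min(c + 2, w)))
             for c in range(w)] for r in range(h)]


# Toy image with a vertical edge between columns 1 and 2.
img = [[0, 0, 1, 1] for _ in range(4)]
edge = sobel_edges(img)
pooled = maxpool2x2_same(img)
fused = [edge, pooled]  # channel-wise concatenation of the two branches
```

In this toy case the edge branch responds at the intensity step and stays at zero in the flat region on the left, while the pooled branch preserves the coarse layout of the bright region.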

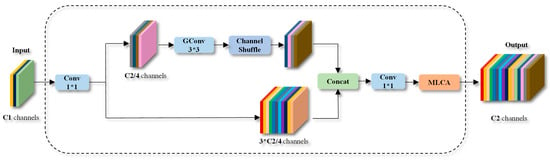

LFEM significantly enhances the computational efficiency of the backbone network by combining group convolution with channel shuffle operations, while maintaining excellent feature extraction capabilities. The structure of LFEM is illustrated in Figure 2. First, the channels of the input image features are transformed via a 1 × 1 convolution. Subsequently, the channels are divided into two branches at a ratio of 1:3. One branch conducts feature extraction through a 3 × 3 group convolution operation, followed by a channel shuffle operation, enhancing feature interaction and information transfer between groups. The other branch is connected to the former branch via a long-hop connection. Moreover, most channel attention mechanisms, such as SE and ECA, only consider the overall relationship between channels and neglect spatial feature information, leading to suboptimal model detection performance. Additionally, some attention mechanisms that do account for spatial feature information incur high computational costs. MLCA [29] addresses these issues by efficiently combining channel and spatial feature information with minimal computational overhead, thereby improving network detection performance. Consequently, we embed MLCA at the tail end to obtain the final output. The entire process can be summarized as follows:

where and denote the top branch and the bottom branch in Figure 2, respectively. denotes a convolution operation of size 1 × 1, denotes a channel shuffle operation, denotes a group convolution operation of size 3 × 3, and denotes the embedded MLCA attention mechanism.
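Two of the building blocks named above, channel shuffle and group convolution, can be illustrated with a short sketch. It assumes the ShuffleNet-style shuffle (reshape to groups × channels-per-group, transpose, flatten) and counts group convolution weights without biases; the function names and the 64-channel example are hypothetical and are not LFEM’s actual configuration.

```python
def channel_shuffle(channels, groups):
    """Interleave channels so each group receives channels from every group."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # View as (groups, per_group), transpose to (per_group, groups), flatten.
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]


def group_conv_params(k, c_in, c_out, groups):
    """Weights of a k x k group convolution (bias ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return k * k * (c_in // groups) * (c_out // groups) * groups


shuffled = channel_shuffle(list(range(6)), groups=2)
dense = group_conv_params(3, 64, 64, groups=1)
grouped = group_conv_params(3, 64, 64, groups=4)
```

With four groups the 3 × 3 convolution needs a quarter of the weights, and the shuffle restores information exchange between groups that the grouping would otherwise block.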

Figure 2. Architecture of LFEM.

LCGNet consists of CGFAM and multiple LFEMs, and further details of LCGNet are provided in Table 1.

Table 1. Details of LCGNet.

2.3. Lightweight Adaptive Feature Pyramid Network (LAFPN)

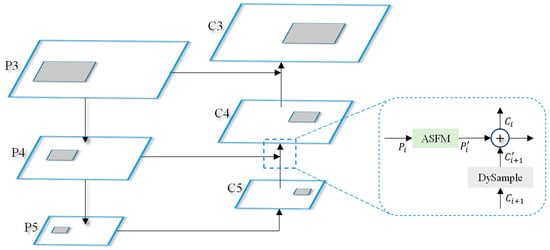

Ship targets in SAR images exhibit multi-scale features. To enhance detection capabilities for multi-scale targets, feature pyramid networks (FPNs) [30] are frequently employed for feature fusion. FPN achieves multi-scale feature integration by connecting high-level and low-level feature maps through top-down pathways and lateral connections, thereby enhancing target detection performance. However, the feature fusion method in FPN is constrained to element-wise addition or concatenation of features along the channel dimension, which fails to fully exploit the complex relationships and complementary information between high-level and low-level features. While other feature fusion networks, such as PAFPN and BiFPN, more fully utilize features in different dimensions, SAR images, compared to optical remote sensing images, have lower resolution and more noticeable scattering noise. The longer propagation paths of structures like PAFPN and BiFPN can lead to a loss of ship texture details, negatively affecting the detection ability of small target ships. Additionally, the more complex structure of these networks increases the computational cost of model training and inference, which does not meet our task requirements. Consequently, to improve multi-scale ship target detection while reducing network complexity, we have designed the lightweight adaptive feature pyramid network (LAFPN) based on FPN. The specific structure of LAFPN is depicted in Figure 3.

Figure 3. Architecture of LAFPN.

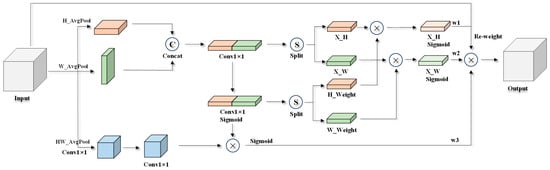

Inspired by [31], we propose the adaptive ship feature fusion module (ASFM). ASFM enhances the model’s capability to discern ship position information, adaptively learns subtle features in the ship image, and suppresses background clutter noise, thereby improving the model’s ability to detect multi-scale ship targets. The workflow schematic of ASFM is illustrated in Figure 4.

Figure 4. Architecture of ASFM.

The initial encoding of the input features is achieved through pooling operations in both horizontal and vertical directions, which can be expressed as

$$
F_v = \mathrm{Pool}_v(X), \qquad F_h = \mathrm{Pool}_h(X),
$$

where $\mathrm{Pool}_v$ and $\mathrm{Pool}_h$ denote the pooling operations in the vertical and horizontal directions, respectively, and $X$ represents the input feature map.

The feature mappings in both directions are then concatenated and reduced in channel dimension through convolution. This can be expressed as

$$
F_c = \mathrm{Conv}\big(\mathrm{Concat}(F_v, F_h)\big),
$$

where $\mathrm{Conv}$ denotes the convolution operation, $\mathrm{Concat}$ represents the concatenation operation, and $F_v$ and $F_h$ denote the feature mappings in the vertical and horizontal directions, respectively.

Subsequently, the feature map is divided back into the original two directions along the spatial dimension. This can be expressed as

$$
(F_v', F_h') = \mathrm{Split}(F_c),
$$

where $\mathrm{Split}$ denotes the splitting operation, and $F_c$ represents the feature map obtained after the concatenation of vertical and horizontal direction features.
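The pooling, concatenation, and splitting steps can be traced on a single-channel toy feature map. This sketch assumes average pooling and omits the channel-reducing convolution between concatenation and splitting; the names `pool_rows` and `pool_cols` are hypothetical and deliberately avoid committing to which of the two is called "vertical" in Figure 4.

```python
def pool_rows(fmap):
    """Average along the width: one value per row (length H)."""
    return [sum(row) / len(row) for row in fmap]


def pool_cols(fmap):
    """Average along the height: one value per column (length W)."""
    h = len(fmap)
    return [sum(fmap[r][c] for r in range(h)) / h for c in range(len(fmap[0]))]


fmap = [[1, 2, 3],
        [4, 5, 6]]  # H = 2, W = 3

per_row = pool_rows(fmap)
per_col = pool_cols(fmap)

# Concatenate both directional encodings along the spatial dimension (H + W).
joined = per_row + per_col

# Split back into the two directions at the original boundary.
split_rows, split_cols = joined[:len(fmap)], joined[len(fmap):]
```

Each directional encoding keeps positional information along one axis while aggregating over the other, which is what allows the module to localize ships along rows and columns separately.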

Since the imaging mechanism of SAR images differs from that of traditional optical images, leading to relatively less feature information about ships and increasing detection difficulty, we introduce an additional convolution for the sigmoid and split operations following the initial convolution. We then multiply the obtained weights with the feature maps from the two branches to enhance detailed information in the vertical and horizontal directions. Finally, after applying the sigmoid function, we obtain the final weights, which can be expressed as

where denotes the sigmoid function, and represent the weights for enhancing feature information in the vertical and horizontal directions, respectively, and and denote the final weights obtained from the first and second branches, respectively.

In addition, it is crucial to consider the interaction information between the original channels of the feature map. Therefore, we introduce another branch that compresses the feature map along the global spatial dimension and performs a global average pooling operation. After a convolution operation, this is multiplied with the weights obtained from the previous two branches, which further refines the global feature information of the ship and effectively captures cross-channel interaction information. Finally, the weight of this branch is obtained through a sigmoid function. This can be expressed as follows:

where denotes the global average pooling operation, represents the feature map obtained from the third branch, and denotes the weights obtained from the third branch.

The final output feature map can be expressed as

Additionally, given that the upsampling operation in FPN is a simple interpolation method, it may reduce the model’s ability to capture fine structures and edge information of ship targets in SAR images. Therefore, we replace the upsampling operation in LAFPN with the DySample [32] operation, an efficient dynamic upsampler. Finally, to minimize computational cost, we set the number of channels per layer in LAFPN to 256.

2.4. Lightweight Rotational Detection Head (LRDHead)

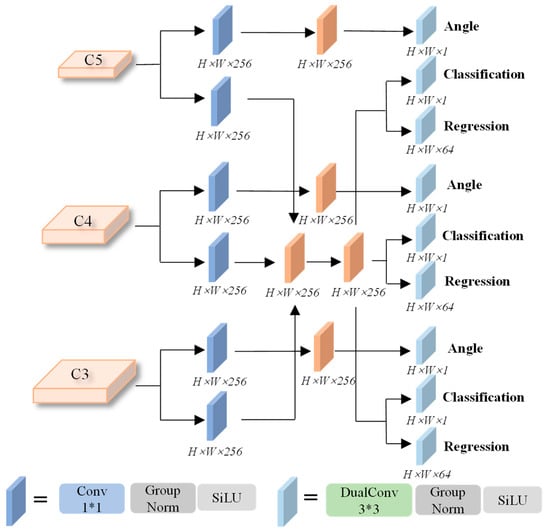

To enhance the detection capability for multi-scale ship targets while reducing computational cost, we have designed a lightweight rotation detection head network. The design of the proposed head network is illustrated in Figure 5. Initially, the feature map passes through a 1 × 1 convolutional block, reducing the number of channels to 256. Subsequently, a 3 × 3 convolutional block processes three different layers of feature maps, and, after a series of operations, the final prediction result is obtained. Unlike conventional detection head networks, our designed head network shares convolutional parameters across different feature layers, addressing the issue of sample imbalance at various ship scales across layers, thereby enhancing multi-scale detection capability and reducing the number of parameters. Another notable distinction from standard horizontal-box detection heads is the inclusion of an additional angle branch. Each layer of the head comprises three branches for classification, regression, and angle tasks.

Figure 5. Architecture of LRDHead.
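The parameter saving from sharing convolutions across feature layers can be estimated with a rough count. The sketch below assumes standard 3 × 3 convolutions with biases, 256 channels, two convolutions per level, and three pyramid levels; these are illustrative assumptions and do not reproduce the actual LRDHead parameter count, which additionally benefits from lightweight convolutions.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights (and optional biases) of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def head_params(levels, shared, k=3, channels=256, convs_per_level=2):
    """Parameters of the stacked convolutions in a detection head."""
    per_level = convs_per_level * conv_params(k, channels, channels)
    # A shared head stores one set of weights, reused at every level.
    return per_level if shared else levels * per_level


unshared = head_params(levels=3, shared=False)
shared = head_params(levels=3, shared=True)
```

Under these assumptions the shared head stores one third of the convolution weights, and the same filters are trained on samples from all three scales rather than only on the targets assigned to one level.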

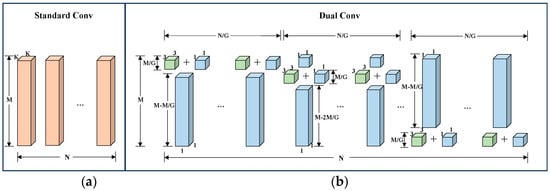

It is worth noting that we implemented the two 3 × 3 convolution blocks using the lightweight convolution DualConv [33], which merges the feature extraction capabilities of a 3 × 3 convolution with the processing efficiency of a 1 × 1 convolution kernel. This approach simultaneously processes the same input feature mapping channels (see Figure 6b), offering fewer parameters compared to standard convolution while enhancing feature utilization. Additionally, to address the issue of inconsistent BatchNorm behavior between the training and inference phases—often resulting in reduced detection accuracy during inference—we use GroupNorm instead of BatchNorm in the convolution module. This design effectively reduces the number of parameters while improving detection accuracy.

Figure 6. Architecture of (a) standard convolution; (b) dual convolution. M is the number of input channels (i.e., the depth of input feature map), N is the number of convolutional filters and also the number of output channels (i.e., the depth of output feature map), K × K is the convolutional kernel size, and G is the number of groups in group convolution.
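Using the symbols M, N, K, and G from Figure 6, the parameter saving of dual convolution can be estimated as follows. This is a sketch under an explicit assumption: DualConv is counted as a K × K group convolution with G groups plus a 1 × 1 convolution over all M input channels, with biases ignored. The function names and the example sizes are hypothetical.

```python
def standard_conv_params(k, m, n):
    """Weights of a standard K x K convolution with M inputs and N filters."""
    return k * k * m * n


def dual_conv_params(k, m, n, g):
    """Assumed DualConv count: K x K group convolution plus 1 x 1 convolution."""
    if m % g or n % g:
        raise ValueError("M and N must be divisible by G")
    return k * k * m * n // g + m * n


standard = standard_conv_params(3, 256, 256)
dual = dual_conv_params(3, 256, 256, g=4)
ratio = dual / standard
```

Under this assumption the ratio equals 1/G + 1/K², so a 3 × 3 dual convolution with four groups needs about 36% of the weights of the standard convolution.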

2.5. Loss Function

The loss function of the proposed method consists of three components and can be expressed as

$$
L = \lambda_1 L_{cls} + \lambda_2 L_{ProbIoU} + \lambda_3 L_{DFL},
$$

where $L_{cls}$ denotes the classification loss, computed with the binary cross-entropy (BCE) loss function. The rotated bounding box regression loss consists of the Probabilistic Intersection-over-Union (ProbIoU) loss function $L_{ProbIoU}$ [34] and the Distribution Focal Loss (DFL) function $L_{DFL}$ [35]. The ProbIoU loss function uses the Hellinger distance [36] to compute the similarity between two oriented bounding boxes, and $\lambda_1$, $\lambda_2$, and $\lambda_3$ denote the balancing weights of $L_{cls}$, $L_{ProbIoU}$, and $L_{DFL}$, respectively.
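The Hellinger-distance idea behind the ProbIoU loss can be sketched as follows. The sketch assumes the usual ProbIoU formulation, in which each oriented box (cx, cy, w, h, θ) is modeled as a 2D Gaussian with variances w²/12 and h²/12 along its axes and the Hellinger distance is obtained from the Bhattacharyya distance between the two Gaussians. The function names are hypothetical, and this is not the implementation used in the experiments.

```python
import math


def box_to_gaussian(cx, cy, w, h, theta):
    """Mean and covariance entries (a, b, c) of the Gaussian for an oriented box."""
    var_w, var_h = w * w / 12.0, h * h / 12.0
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    a = var_w * cos_t ** 2 + var_h * sin_t ** 2   # Sigma[0][0]
    b = var_w * sin_t ** 2 + var_h * cos_t ** 2   # Sigma[1][1]
    c = (var_w - var_h) * cos_t * sin_t           # Sigma[0][1]
    return (cx, cy), (a, b, c)


def hellinger_distance(box1, box2):
    """Hellinger distance in [0, 1] between the Gaussians of two oriented boxes."""
    (x1, y1), (a1, b1, c1) = box_to_gaussian(*box1)
    (x2, y2), (a2, b2, c2) = box_to_gaussian(*box2)
    # Averaged covariance used by the Bhattacharyya distance.
    a, b, c = (a1 + a2) / 2, (b1 + b2) / 2, (c1 + c2) / 2
    det = a * b - c * c
    dx, dy = x1 - x2, y1 - y2
    # Mahalanobis term: d^T Sigma^{-1} d with the 2x2 inverse written out.
    maha = (b * dx * dx - 2 * c * dx * dy + a * dy * dy) / det
    det1 = a1 * b1 - c1 * c1
    det2 = a2 * b2 - c2 * c2
    bd = maha / 8.0 + 0.5 * math.log(det / math.sqrt(det1 * det2))
    return math.sqrt(max(0.0, 1.0 - math.exp(-bd)))


same = hellinger_distance((0, 0, 10, 4, 0.3), (0, 0, 10, 4, 0.3))
far = hellinger_distance((0, 0, 10, 4, 0.0), (100, 0, 10, 4, 0.0))
```

The distance is close to 0 for coinciding boxes and approaches 1 for boxes that do not overlap, so it can serve directly as a regression loss that is differentiable with respect to position, size, and angle.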

3. Experiment

3.1. Datasets and Experimental Settings

For the experimental validation, we use the publicly available SAR ship detection dataset (SSDD+) [37] and RSDD-SAR [38]. The detailed information is shown in Table 2.

Table 2. Detailed descriptions of SSDD+ and RSDD-SAR.

The SSDD+ dataset contains 1160 images with 2587 ships, covering SAR images in multiple resolutions, polarization modes, and various sea surface conditions. The multi-resolution coverage ensures that the training model has sufficient detection performance for multi-scale targets.

The RSDD-SAR dataset includes 7000 images of 512 × 512 pixels, containing a total of 10,263 ship targets, with resolutions ranging from 2 to 20 m. The RSDD-SAR dataset features a rich variety of scenes, including typical scenes of harbors, shipping channels, offshore low-resolution, and offshore high-resolution areas. The images in both datasets are mainly from the RadarSat-2, TerraSAR-X, and Sentinel-1 satellites. These datasets contain a variety of different scenes in different imaging modes and are therefore highly suitable for measuring the multi-scale SAR ship detection performance of the model.

We partitioned the SSDD+ dataset into training and test sets with an 8:2 ratio, and the RSDD-SAR dataset into training and test sets with a 5:2 ratio. In the ablation experiments, stochastic gradient descent (SGD) was used as the optimizer, with the initial learning rate set to 0.01, the number of training epochs set to 300, the batch size set to 16, and the input size set to 640 × 640. For the comparison experiments, the number of training epochs was set to 72, the initial learning rate was set to 0.0025, the weight decay was set to 0.0001, the momentum was set to 0.9, the batch size was set to 8, and the input size was set to 640 × 640. All the experiments were conducted on the same computer, equipped with an RTX 4060 Ti GPU (16 GB) and an Intel Core i5-12400 CPU. The operating system is Windows 10, with Python 3.8 and CUDA 11.7. All the deep learning networks are built on the PyTorch 1.13 framework. In addition, all the comparison experiments were conducted based on the MMRotate-dev-1.0.0 [39] framework.

3.2. Evaluation Indicators

To quantitatively evaluate the performance of the proposed method, we use precision (P), recall (R), average precision (AP), model size, number of parameters, and floating point operations (FLOPs) as evaluation metrics. Precision and recall are calculated as

$$
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN},
$$

where $TP$ (True Positive) represents the count of correctly detected ship samples, $FP$ (False Positive) signifies the count of samples erroneously identified as ships, and $FN$ (False Negative) indicates the count of ship samples missed.

Average precision (AP) is the area enclosed by the precision–recall curve and the coordinate axes. The formula for AP is as follows:

$$
AP = \int_0^1 P(R)\, dR
$$

In addition, the F1 score is the harmonic mean of precision and recall, which is a commonly used comprehensive evaluation metric for detection performance. It is calculated by the following formula:

$$
F1 = \frac{2 \times P \times R}{P + R}
$$
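The metrics above can be computed with a few lines of code. The sketch below uses hypothetical counts and a hypothetical three-point precision–recall curve, and it approximates the AP integral with the trapezoidal rule; detection toolkits often use interpolated precision instead, so the values are illustrative only.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 score from detection counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(recalls, precisions):
    """Area under the precision-recall curve (trapezoidal rule, recalls ascending)."""
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        area += width * (precisions[i] + precisions[i - 1]) / 2.0
    return area


# Hypothetical example: 90 correct detections, 10 false alarms, 30 missed ships.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
ap = average_precision([0.0, 0.5, 1.0], [1.0, 1.0, 0.5])
```

In this example, precision is 0.90 and recall is 0.75, and the F1 score of about 0.82 lies closer to the lower of the two, as expected for a harmonic mean.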

3.3. Ablation Experiments

To validate the effectiveness of the proposed method, we incrementally incorporate its components into the baseline and analyze the results, as shown in Table 3. The baseline network used is YOLOv8n (OBB), which includes an additional angular branch in its head network compared to YOLOv8n [40] to enhance the detection of ship targets from any direction and employs the same training strategy as the proposed method.

Table 3. Results of ablation experiment on the SSDD+ dataset.

Based on the results shown in Table 3, several conclusions can be drawn. Compared to the baseline, the proposed method demonstrates an increase in the AP50 and F1 values by 1.6% and 1.5%, respectively. Additionally, the values of the parameters and FLOPs decrease by 68.0% and 61.5%, respectively, and the model size is only 2.6 M, which indicates the excellent performance of LSR-Det in all aspects.

When the LCGNet is used as the backbone, both AP50 and recall improve, with only a minor decrease in precision, which remains within acceptable limits. Additionally, there is a notable reduction in the model complexity metrics (number of parameters, computation, and model size). This indicates that the LCGNet enhances the feature extraction for ship targets while reducing the network complexity.

When the LAFPN is additionally used as the neck network, the number of model parameters decreases by 54.5% and AP50 increases by 1.3% compared to the baseline. Notably, this improvement is achieved without increasing the model parameters or computational effort, demonstrating the effectiveness of the LAFPN.

After incorporating the LRDHead as the head, all the accuracy metrics improve to varying degrees compared to the baseline. The number of model parameters decreases to 32.0% of the original, the computational amount is only 38.5% of the baseline, and the model size is just 2.6 M. This indicates that the proposed method significantly enhances the detection of multi-scale ships, proving its efficacy in multi-scale ship detection.

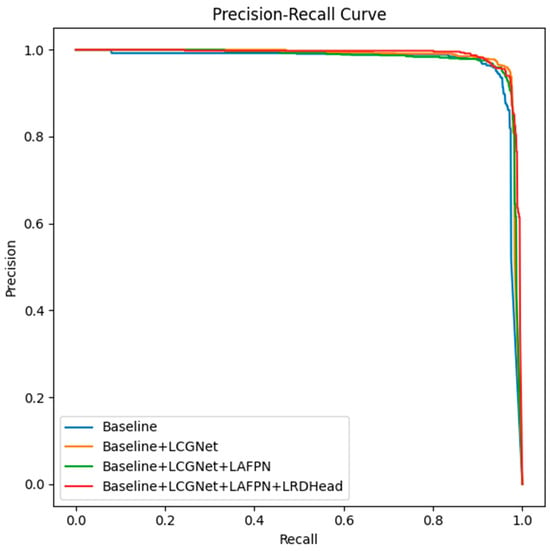

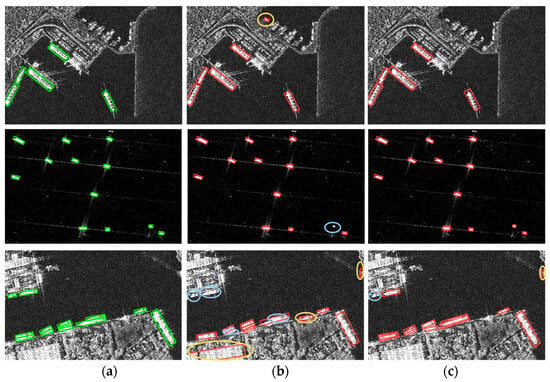

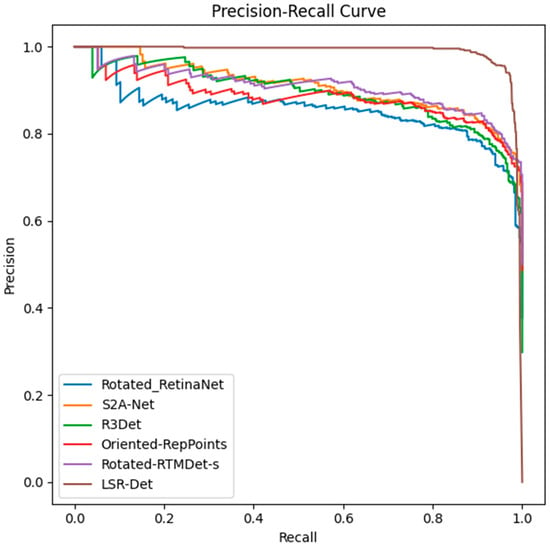

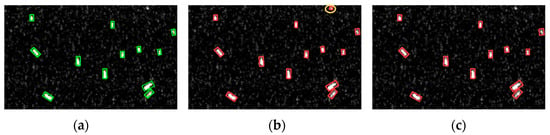

Figure 7 displays the precision-recall curves for the different module combinations, providing a more intuitive representation of the benefits of each module. To further verify the effectiveness of LSR-Det, Figure 8 presents the detection results for the baseline and LSR-Det under various SSDD scenarios, demonstrating that LSR-Det results in fewer false alarms and missed detections, with overall improved detection performance.

Figure 7.

Precision–recall curves when adding different modules.

Figure 8.

Visualization of the detection results. (a) Ground truth. (b) Baseline. (c) LSR-Det. Green: ground truths. Red: detection results. Blue: missed detections. Yellow: false alarms.

3.4. Comparison Experiments

To further evaluate the effectiveness of LSR-Det, this section compares it with several other commonly used arbitrary-oriented target detection methods on the SSDD+ and RSDD-SAR datasets. These methods include Rotated-RetinaNet [41], S2A-Net [42], R3Det [43], Oriented-RepPoints [44], and Rotated-RTMDet-s [45].

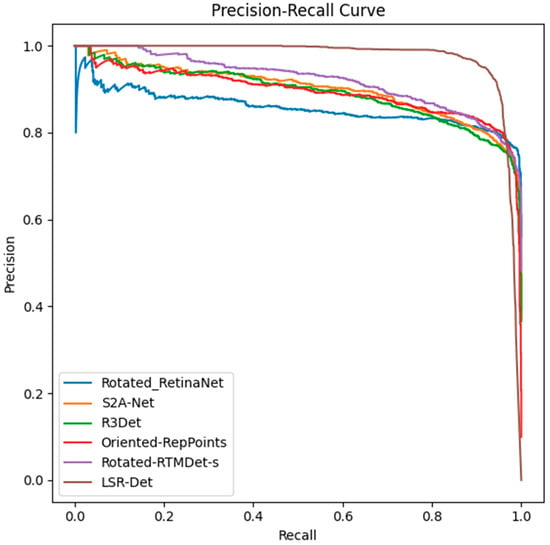

We quantitatively analyzed the aforementioned arbitrary-oriented target detection methods on the SSDD+ dataset, and the detection results are presented in Table 4. As shown in the table, the proposed LSR-Det achieves the highest AP50 and F1 values, 98.5% and 96.2%, respectively. In terms of AP50, LSR-Det surpasses R3Det, S2A-Net, and Rotated-RTMDet-s by 9.1%, 8.1%, and 7.8%, respectively, demonstrating the superior accuracy of the proposed method. Additionally, LSR-Det has significant advantages in terms of the parameters and FLOPs, with values of 0.98 M and 3.2 G. Specifically, the parameters and FLOPs of LSR-Det are only 11.1% and 21.9% of those of the lightweight method Rotated-RTMDet-s. To visually compare the accuracy of these methods, Figure 9 displays the corresponding precision–recall curves, which further illustrate the advantages of LSR-Det.

Table 4.

Comparison with other arbitrary-oriented object detection methods on SSDD+.

Figure 9.

Precision–recall curves of different methods on SSDD+.

To assess the generalizability of the method, we repeated the comparison with the same popular arbitrary-oriented detection methods on the RSDD-SAR dataset. The detection results are shown in Table 5. The proposed LSR-Det achieves the highest AP50 and F1 values, 97.2% and 93.3%, respectively. In terms of AP50, LSR-Det surpasses Oriented-RepPoints, S2A-Net, and Rotated-RTMDet-s by 7.7%, 7.2%, and 7.0%, respectively. Its parameters and FLOPs, 0.98 M and 3.21 G, respectively, also compare favorably with those of the other methods. This indicates that the proposed method balances detection accuracy and model size. As shown in Figure 10, the precision–recall curves of these methods reflect the accuracy advantage of LSR-Det over the other methods more intuitively.

Table 5.

Comparison with other arbitrary-oriented object detection methods on RSDD-SAR.

Figure 10.

Precision–recall curves of different methods on RSDD-SAR.

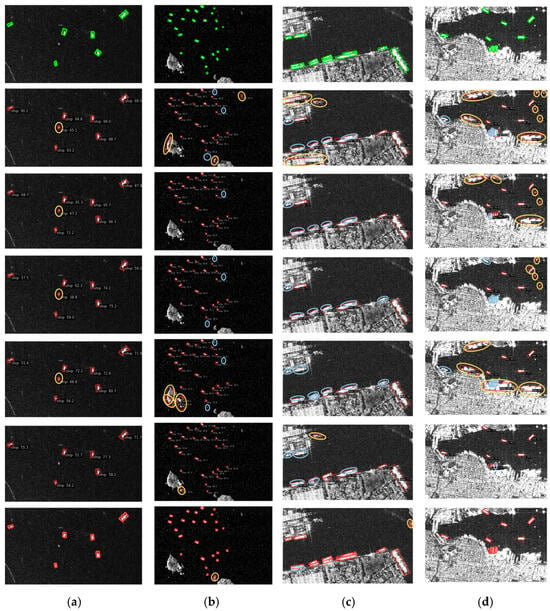

We next qualitatively analyze the different detection methods on four typical scenes selected from the SSDD+ dataset; the results are shown in Figure 11. The figure covers two offshore scenes and two inshore scenes. Figure 11a depicts sparse small ship targets in the open sea, which are incorrectly detected by all the methods except Rotated-RTMDet-s and LSR-Det. In Figure 11b, also an offshore scene, multiple ships appear alongside interfering objects such as islands and reefs, and all the methods suffer from varying degrees of missed detections and false alarms. LSR-Det, in contrast, has only one false alarm, indicating its effectiveness in detecting sparse small targets. Figure 11c shows a dense arrangement of multi-scale ship targets near the shore. All the algorithms exhibit varying degrees of false alarms and missed detections, which illustrates the difficulty of detecting densely arranged inshore ship targets in SAR images. LSR-Det, however, exhibits only minimal false alarms and missed detections. Figure 11d also displays densely arranged ship targets near the shore. All the methods except LSR-Det show false alarms and missed detections, while LSR-Det correctly detects all the ship targets. Together, these detection results demonstrate the strong detection capability of LSR-Det in complex scenes.

Figure 11.

Visualization of the detection results of various methods on SSDD+. (a) Offshore Scene 1. (b) Offshore Scene 2. (c) Inshore Scene 1. (d) Inshore Scene 2. From top to bottom: ground truths, Rotated-RetinaNet, S2A-Net, R3Det, Oriented-RepPoints, Rotated-RTMDet-s, and LSR-Det. The green boxes indicate the ground truths, the red boxes indicate detection results, the blue circles indicate missed detections, and the yellow circles indicate false alarms.

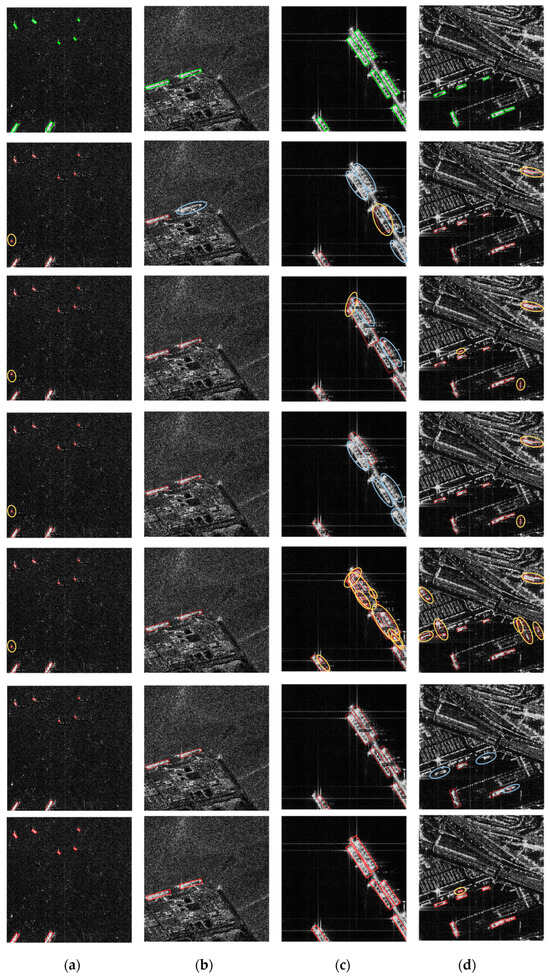

We also conducted a qualitative analysis on the RSDD-SAR dataset, and the visualization is shown in Figure 12. The figure covers one offshore scene and three inshore scenes. Figure 12a presents a low-resolution offshore scene with sparse small ship targets and speckle noise interference. Rotated-RetinaNet, S2A-Net, R3Det, and Oriented-RepPoints all produce false detections for the same target, whereas LSR-Det successfully detects all the targets. This indicates that LSR-Det has superior detection capability and anti-interference ability in offshore scenarios compared to the other methods. Figure 12b shows two large ship targets in an inshore scenario, where only Rotated-RetinaNet has missed detections. In Figure 12c, ships are densely arranged in an inshore scenario, and all the methods except Rotated-RTMDet-s and LSR-Det produce many missed detections and false alarms. Figure 12d shows a complex inshore harbor scene with multi-scale ship targets and many sources of interference. Only LSR-Det correctly detects all the targets, while the other methods exhibit varying degrees of false alarms and missed detections. Together, these detection results demonstrate the strong detection capability and anti-interference ability of LSR-Det across various scenarios.

Figure 12.

Visualization of the detection results of various methods on RSDD-SAR. (a) Offshore Scene 1. (b) Inshore Scene 1. (c) Inshore Scene 2. (d) Inshore Scene 3. From top to bottom: ground truths, Rotated-RetinaNet, S2A-Net, R3Det, Oriented-RepPoints, Rotated-RTMDet-s, and LSR-Det. The green boxes indicate the ground truths, the red boxes indicate detection results, the blue circles indicate missed detections, and the yellow circles indicate false alarms.

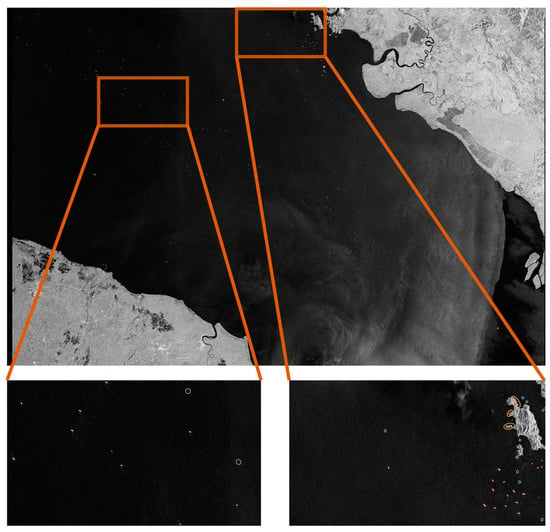

3.5. Experiment on a Large-Scale SAR Image

We use a large-scale SAR image from the LS-SSDD-v1.0 dataset [46] to further validate the robustness of the proposed method. This image measures 24,000 × 16,000 pixels. We test this image using model weights trained on the SSDD dataset, and the results are presented in Figure 13. In the offshore scenario, LSR-Det demonstrates excellent ship detection performance, with only two missed detections. However, in the complex nearshore scenario, the false detection rate for land-based facilities is high. We analyzed the reasons for this issue. Firstly, nearshore environments are complex, with various man-made structures exhibiting characteristics similar to ships. Secondly, the SSDD dataset contains fewer land-based interference scenarios for training, which hinders the model performance. In conclusion, while LSR-Det shows promise, it still has limitations and requires further improvements in detection performance in complex environments, which will be a focus of our future work.
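The text does not describe how the 24,000 × 16,000 pixel image is fed to the network. One common approach for images of this size is to split them into fixed-size tiles, run the detector on each tile, and map the detections back to full-image coordinates. The sketch below only generates the tile windows; the 800-pixel tile size, the zero overlap, and the function names are assumptions for illustration and are not taken from the paper.

```python
from typing import Iterator, Tuple


def tile_origins(length: int, tile: int, overlap: int) -> Iterator[int]:
    """Start offsets of tiles that cover [0, length) with the given overlap."""
    if overlap >= tile:
        raise ValueError("overlap must be smaller than the tile size")
    stride = tile - overlap
    start = 0
    while True:
        if start + tile >= length:
            # Last tile: shift it back so that it ends at the image border.
            yield max(length - tile, 0)
            return
        yield start
        start += stride


def tile_boxes(
    width: int, height: int, tile: int = 800, overlap: int = 0
) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (x0, y0, x1, y1) windows in row-major order."""
    for y in tile_origins(height, tile, overlap):
        for x in tile_origins(width, tile, overlap):
            yield x, y, min(x + tile, width), min(y + tile, height)


# Image size quoted in the text: 24,000 x 16,000 pixels.
tiles = list(tile_boxes(24000, 16000))
print(len(tiles), "tiles; first:", tiles[0], "last:", tiles[-1])
```

Each detection from a tile would then be shifted by that tile's (x0, y0) offset. If a non-zero overlap is used, duplicate detections in the overlapping strips would need to be merged, for example with rotated non-maximum suppression.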

Figure 13.

Visual results of the proposed method on a large-scale SAR image. In the figures, the red boxes indicate detection results, the blue circles indicate missed detections, and the yellow circles indicate false alarms.

4. Discussion

The proposed LSR-Det method comprises three modules: the LCGNet, LAFPN, and LRDHead. This section will discuss the specific impact of each module on LSR-Det, compare the results with other similar structures on the SSDD+ dataset, and provide a visual analysis.

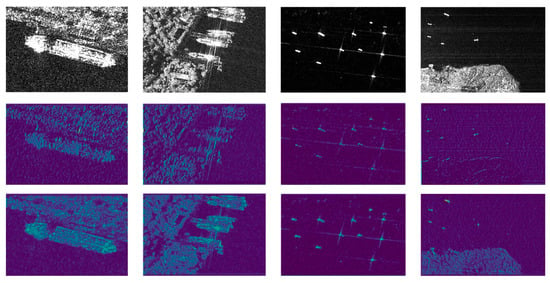

4.1. Effect of LCGNet

A more detailed discussion of the role of the LCGNet follows. To thoroughly evaluate the LCGNet, this paper compares it with other lightweight and commonly used backbone networks, such as EfficientNet [47], MobileNetv3 [48], and GhostNetv2 [49]. The experimental results, shown in Table 6, use PAFPN as the neck network and YOLOv8 (OBB) as the head network. The LCGNet demonstrates superior performance compared to the other lightweight backbones, achieving the highest AP50 and F1 scores of 98.1% and 95.4%, respectively. This superior performance can be attributed to the integration of the LFEM and CGFAM in the backbone network, which effectively extracts the edge and spatial information from ships and enhances the feature interaction and information transfer. Furthermore, the LCGNet’s parameters and FLOPs are 0.77 M and 2.0 G, respectively, which are lower than those of the other lightweight backbones. This efficiency is due to the embedding of multiple LFEM modules and the unique channel branching design of the LFEM, which, along with the group convolution in one of the branches, significantly reduces the computational cost. These experimental results indicate that the LCGNet can more efficiently extract ship features from SAR images. Additionally, we generated feature visualization results for both the LCGNet and the baseline, as shown in Figure 14. The figure illustrates that the LCGNet significantly enhances the focus on ship targets and improves the feature extraction capability.
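The saving from group convolution mentioned above follows from a standard counting argument: with g groups, each output channel only sees 1/g of the input channels, so both the weight count and the multiply-accumulate operations drop by a factor of g. The sketch below works this out for a hypothetical layer. The channel counts, kernel size, feature-map size, and number of groups are illustrative assumptions and are not the actual LFEM configuration.

```python
def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution (bias omitted)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return k * k * (c_in // groups) * c_out


def conv_macs(
    c_in: int, c_out: int, k: int, out_h: int, out_w: int, groups: int = 1
) -> int:
    """Multiply-accumulate operations for one forward pass."""
    return conv_params(c_in, c_out, k, groups) * out_h * out_w


# Hypothetical layer: 64 -> 64 channels, 3 x 3 kernel, 80 x 80 output map.
standard = conv_params(64, 64, 3)
grouped = conv_params(64, 64, 3, groups=4)
print("standard weights:", standard)
print("grouped weights (g = 4):", grouped)
print("reduction factor:", standard / grouped)
print("grouped MACs:", conv_macs(64, 64, 3, 80, 80, groups=4))
```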

Table 6.

Comparison of performance between LCGNet and other backbone networks.

Figure 14.

Feature visualization results. From top to bottom: the original SAR images, the feature visualization results of the baseline, and the feature visualization results of the LCGNet.

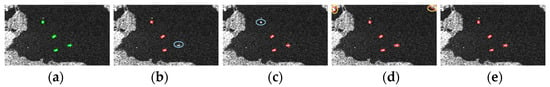

We also visualize the detection results of these backbone networks. Figure 15 depicts sparsely distributed ship targets in an inshore scene from the SSDD+ dataset. The detection results with EfficientNet, MobileNetv3, and GhostNetv2 as the backbone network all exhibit missed detections or false alarms. In contrast, the detection network with the LCGNet as the backbone network correctly detects all the ship targets, further supporting the effectiveness of the LCGNet.

Figure 15.

Visualization of the detection results for different backbone networks. (a) Ground truths. (b) EfficientNet. (c) MobileNetv3. (d) GhostNetv2. (e) LCGNet. Green: ground truths. Red: detection results. Blue: missed detections. Yellow: false alarms.

4.2. Effect of LAFPN

We next examine the LAFPN by comparing it with other commonly used neck networks; the results are shown in Table 7. In each experiment, the LCGNet was used as the backbone network and YOLOv8 (OBB) as the head network. The results indicate that FPN-based variants, such as PAFPN [50] and BiFPN [51], were less effective than the FPN, yielding lower AP50 and F1 values. This reduction in effectiveness may be attributed to the longer propagation paths of structures like PAFPN and BiFPN, which lead to a loss of ship texture details and adversely affect the detection outcomes. The LAFPN, however, outperformed the other neck networks, achieving the highest AP50 and F1 scores. Additionally, the parameters and FLOPs of the LAFPN were lower than those of the other neck networks.

Table 7.

Comparison of performance between LAFPN and other neck networks.

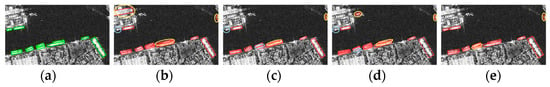

The visualizations of these methods are shown in Figure 16, for which a complex nearshore scene from the SSDD dataset containing densely arranged multi-scale ship targets was selected. The visualization results show that all the methods exhibit varying degrees of false alarms and missed detections, with the LAFPN having the fewest. This highlights the LAFPN’s strong performance in complex scenarios and its ability to achieve high-precision detection at a very low computational cost.

Figure 16.

Visualization of the detection results for different neck networks. (a) Ground truths. (b) PAFPN. (c) BiFPN. (d) FPN. (e) LAFPN. Green: ground truths. Red: detection results. Blue: missed detections. Yellow: false alarms.

4.3. Effect of LRDHead

We further analyze the LRDHead, using the YOLOv8n (OBB) head as the baseline for comparison. The results are shown in Table 8. In each experiment, the LCGNet is used as the backbone and PAFPN as the neck network. The LRDHead outperforms the baseline, improving the AP50 and F1 values by 0.3% and 0.4%, respectively, while significantly reducing the FLOPs and parameters. This improvement stems from the shared convolutional parameter design: sharing the convolutional parameters across the three feature layers enhances the multi-scale detection capability of the network and alleviates the imbalance in the number of ship samples of different scales assigned to different layers. Additionally, the convolution module is built from the lightweight DualConv convolution, which further reduces the number of parameters of the network.
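To illustrate why sharing convolutional parameters across the three feature layers lowers the parameter count, the sketch below compares a head with one independent convolution stack per layer against a head that reuses a single stack on all three layers. The branch layout (two 3 × 3 convolutions followed by a 1 × 1 prediction convolution), the channel width of 64, and the six outputs per location are hypothetical and do not reproduce the actual LRDHead, which additionally uses DualConv. Sharing also presupposes that all three layers have the same channel width.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Parameter count of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def head_branch_params(channels: int, num_outputs: int) -> int:
    """Two 3 x 3 convs plus a 1 x 1 prediction conv (hypothetical layout)."""
    stem = 2 * conv_params(channels, channels, 3)
    predictor = conv_params(channels, num_outputs, 1)
    return stem + predictor


NUM_LEVELS = 3    # three feature layers, as described in the text
CHANNELS = 64     # assumed common channel width of all layers
NUM_OUTPUTS = 6   # assumed outputs per location (illustrative only)

per_level = head_branch_params(CHANNELS, NUM_OUTPUTS)
separate_heads = NUM_LEVELS * per_level   # one stack per feature layer
shared_head = per_level                   # one stack reused on every layer

print("separate heads:", separate_heads)
print("shared head:", shared_head)
print("ratio:", separate_heads / shared_head)
```

Sharing the weights reduces the number of parameters but not the number of operations, since the shared stack is still applied to every feature layer. In addition, because the same weights receive gradients from ships assigned to all three layers, a layer with few training samples still benefits from the samples on the other layers, which is the balancing effect the paragraph above refers to.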

Table 8.

Comparison of performance between LRDHead and baseline.

The visualization of the two methods is shown in Figure 17. We selected a low-resolution offshore scene from the SSDD+ dataset, which contains sparse ship targets with some speckle noise. The baseline produces false alarms, while the network with the LRDHead as the head successfully detects all the ship targets, which qualitatively confirms the effectiveness of the proposed module.

Figure 17.

Visualization of the detection results for different head networks. (a) Ground truths. (b) Baseline. (c) LRDHead. Green: ground truths. Red: detection results. Blue: missed detections. Yellow: false alarms.

5. Conclusions

In this article, we propose a lightweight single-stage anchor-free detector for arbitrary-oriented ship detection in SAR images, named LSR-Det. The detector consists of three main components, the LCGNet, LAFPN, and LRDHead, and achieves high detection accuracy with a low computational cost. The LCGNet is a lightweight contour-guided backbone network that combines an LFEM and CGFAM to efficiently extract the feature information of ship targets while keeping the number of parameters low. The LAFPN integrates an ASFM to adaptively learn the subtle feature details of ship targets in SAR images and suppress the background clutter noise, enhancing the multi-scale ship detection. The LRDHead employs a shared convolutional layer parameter design, which further improves the detection accuracy and reduces the computational cost. The ablation experiments demonstrate the effectiveness of these components. The comparative experimental results on two publicly available datasets, SSDD+ and RSDD-SAR, show that the proposed LSR-Det outperforms the other current mainstream arbitrary-oriented target detectors while maintaining lower computational complexity.

Author Contributions

Conceptualization, F.M. and X.Q.; methodology, F.M.; software, F.M.; validation, F.M.; formal analysis, F.M.; investigation, F.M.; resources, X.Q.; data curation, F.M.; writing—original draft preparation, F.M.; writing—review and editing, X.Q. and H.F.; visualization, F.M.; supervision, X.Q.; project administration, X.Q. and H.F.; funding acquisition, X.Q. and H.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program under Grant 2021YFC3000405.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A Tutorial on Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

- Wei, X.; Zheng, W.; Xi, C.; Shang, S. Shoreline Extraction in SAR Image Based on Advanced Geometric Active Contour Model. Remote Sens. 2021, 13, 642.

- Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A Survey on Deep-Learning-Based Real-Time SAR Ship Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3218–3247.

- Gao, G. Statistical Modeling of SAR Images: A Survey. Sensors 2010, 10, 775–795.

- Xie, T.; Zhang, W.; Yang, L.; Wang, Q.; Huang, J.; Yuan, N. Inshore Ship Detection Based on Level Set Method and Visual Saliency for SAR Images. Sensors 2018, 18, 3877.

- Yang, H.; Cao, Z.; Cui, Z.; Pi, Y. Saliency Detection of Targets in Polarimetric SAR Images Based on Globally Weighted Perturbation Filters. ISPRS J. Photogramm. Remote Sens. 2019, 147, 65–79.

- Schwegmann, C.P.; Kleynhans, W.; Salmon, B.P. Synthetic Aperture Radar Ship Detection Using Haar-Like Features. IEEE Geosci. Remote Sens. Lett. 2017, 14, 154–158.

- Eldhuset, K. An Automatic Ship and Ship Wake Detection System for Spaceborne SAR Images in Coastal Regions. IEEE Trans. Geosci. Remote Sens. 1996, 34, 1010–1019.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Ke, X.; Zhang, X.; Zhang, T.; Shi, J.; Wei, S. SAR Ship Detection Based on an Improved Faster R-CNN Using Deformable Convolution. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3565–3568.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Wang, R.; Xu, F.; Pei, J.; Wang, C.; Huang, Y.; Yang, J.; Wu, J. An Improved Faster R-CNN Based on MSER Decision Criterion for SAR Image Ship Detection in Harbor. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1322–1325.

- Zhu, M.; Hu, G.; Li, S.; Zhou, H.; Wang, S.; Feng, Z. A Novel Anchor-Free Method Based on FCOS + ATSS for Ship Detection in SAR Images. Remote Sens. 2022, 14, 2034.

- Hu, Q.; Hu, S.; Liu, S. BANet: A Balance Attention Network for Anchor-Free Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5222212.

- Li, X.; Li, D.; Liu, H.; Wan, J.; Chen, Z.; Liu, Q. A-BFPN: An Attention-Guided Balanced Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2022, 14, 3829.

- Cui, Z.; Wang, X.; Liu, N.; Cao, Z.; Yang, J. Ship Detection in Large-Scale SAR Images Via Spatial Shuffle-Group Enhance Attention. IEEE Trans. Geosci. Remote Sens. 2021, 59, 379–391.

- Bai, L.; Yao, C.; Ye, Z.; Xue, D.; Lin, X.; Hui, M. A Novel Anchor-Free Detector Using Global Context-Guide Feature Balance Pyramid and United Attention for SAR Ship Detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4003005.

- Zhou, Y.; Wang, S.; Ren, H.; Hu, J.; Zou, L.; Wang, X. Multi-Level Feature-Refinement Anchor-Free Framework with Consistent Label-Assignment Mechanism for Ship Detection in SAR Imagery. Remote Sens. 2024, 16, 975.

- Guo, P.; Celik, T.; Liu, N.; Li, H.-C. Break Through the Border Restriction of Horizontal Bounding Box for Arbitrary-Oriented Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4005505.

- An, Q.; Pan, Z.; Liu, L.; You, H. DRBox-v2: An Improved Detector with Rotatable Boxes for Target Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8333–8349.

- Yang, R.; Pan, Z.; Jia, X.; Zhang, L.; Deng, Y. A Novel CNN-Based Detector for Ship Detection Based on Rotatable Bounding Box in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1938–1958.

- Wang, H.; Liu, S.; Lv, Y.; Li, S. Scattering Information Fusion Network for Oriented Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4013105.

- Chen, S.; Zhang, J.; Zhan, R. R2FA-Det: Delving into High-Quality Rotatable Boxes for Ship Detection in SAR Images. Remote Sens. 2020, 12, 2031.

- Xu, Z.; Gao, R.; Huang, K.; Xu, Q. Triangle Distance IoU Loss, Attention-Weighted Feature Pyramid Network, and Rotated-SARShip Dataset for Arbitrary-Oriented SAR Ship Detection. Remote Sens. 2022, 14, 4676.

- Chen, S.; Zhan, R.; Wang, W.; Zhang, J. Learning Slimming SAR Ship Object Detector Through Network Pruning and Knowledge Distillation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1267–1282.

- Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning Both Weights and Connections for Efficient Neural Networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- Guo, Y.; Chen, S.; Zhan, R.; Wang, W.; Zhang, J. LMSD-YOLO: A Lightweight YOLO Algorithm for Multi-Scale SAR Ship Detection. Remote Sens. 2022, 14, 4801.

- Liu, Y.; Ma, Y.; Chen, F.; Shang, E.; Yao, W.; Zhang, S.; Yang, J. YOLOv7oSAR: A Lightweight High-Precision Ship Detection Model for SAR Images Based on the YOLOv7 Algorithm. Remote Sens. 2024, 16, 913.

- Wan, D.; Lu, R.; Shen, S.; Xu, T.; Lang, X.; Ren, Z. Mixed Local Channel Attention for Object Detection. Eng. Appl. Artif. Intell. 2023, 123, 106442.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 13708–13717.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 6004–6014.

- Zhong, J.; Chen, J.; Mian, A. DualConv: Dual Convolutional Kernels for Lightweight Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 9528–9535.

- Murrugarra-Llerena, J.; Kirsten, L.N.; Zeni, L.F.; Jung, C.R. Probabilistic Intersection-Over-Union for Training and Evaluation of Oriented Object Detectors. IEEE Trans. Image Process. 2024, 33, 671–681.

- Li, X.; Lv, C.; Wang, W.; Li, G.; Yang, L.; Yang, J. Generalized Focal Loss: Towards Efficient Representation Learning for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3139–3153.

- Chung, J.K.; Kannappan, P.L.; Ng, C.T.; Sahoo, P.K. Measures of Distance between Probability Distributions. J. Math. Anal. Appl. 1989, 138, 280–292.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

- Xu, C.; Su, H.; Li, J.; Liu, Y.; Yao, L.; Gao, L.; Yan, W.; Wang, T. RSDD-SAR: Rotated Ship Detection Dataset in SAR Images. J. Radars 2022, 11, 581.

- Zhou, Y.; Yang, X.; Zhang, G.; Wang, J.; Liu, Y.; Hou, L.; Jiang, X.; Liu, X.; Yan, J.; Lyu, C.; et al. MMRotate: A Rotated Object Detection Benchmark Using PyTorch. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 7331–7334.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 7 August 2024).

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Han, J.; Ding, J.; Li, J.; Xia, G.-S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5602511.

- Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024.

- Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented RepPoints for Aerial Object Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1819–1828.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. RTMDet: An Empirical Study of Designing Real-Time Object Detectors. arXiv 2022, arXiv:2212.07784.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sens. 2020, 12, 2997.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

- Tang, Y.; Han, K.; Guo, J.; Xu, C.; Xu, C.; Wang, Y. GhostNetV2: Enhance Cheap Operation with Long-Range Attention. arXiv 2022, arXiv:2211.12905.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).