An Accurate and Robust Multimodal Template Matching Method Based on Center-Point Localization in Remote Sensing Imagery

Abstract

1. Introduction

- We propose a robust multimodal template matching method that recasts template matching as a center-point localization task, which improves matching accuracy.

- We present a novel encoder–decoder Siamese feature extraction network, which enhances the robustness to large-scale variations and reduces the computational complexity.

- We design an adaptive shrinkage cross-correlation method that dynamically removes a proportion of the similar features from the object, improving localization accuracy without introducing additional parameters.

- We build a new multimodal template matching dataset covering practical scenarios in which template matching is affected by rotation, viewing-angle variation, occlusion, and heterogeneity.
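The first contribution above treats matching as locating the template's center inside the search image. The following is a minimal sketch of that idea, assuming plain (unnormalized) cross-correlation of two feature maps followed by an argmax over the response. The function names `cross_correlate` and `locate_center` are hypothetical, and the sketch does not reproduce the paper's network, its adaptive shrinkage step, or its detection head.

```python
import numpy as np


def cross_correlate(search, template):
    """Valid-mode cross-correlation of two feature maps.

    search:   array of shape (C, Hs, Ws)
    template: array of shape (C, Ht, Wt), with Ht <= Hs and Wt <= Ws
    Returns a response map of shape (Hs - Ht + 1, Ws - Wt + 1).
    """
    c, hs, ws = search.shape
    ct, ht, wt = template.shape
    if c != ct:
        raise ValueError("search and template must have the same channel count")
    response = np.empty((hs - ht + 1, ws - wt + 1))
    for i in range(response.shape[0]):
        for j in range(response.shape[1]):
            # Sum of element-wise products over channels and the window.
            response[i, j] = np.sum(search[:, i:i + ht, j:j + wt] * template)
    return response


def locate_center(response, template_hw):
    """Convert the response peak into a center point in search-map coordinates.

    The peak index is the top-left corner of the best-matching window,
    so the center is offset by half the template extent.
    """
    i, j = np.unravel_index(np.argmax(response), response.shape)
    ht, wt = template_hw
    return i + (ht - 1) / 2.0, j + (wt - 1) / 2.0
```

With a 32 × 32 search map and a 16 × 16 template, `cross_correlate` returns a 17 × 17 response, and `locate_center` maps its peak back to a (row, column) center in the search map. A learned detection head would replace the bare argmax in practice.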

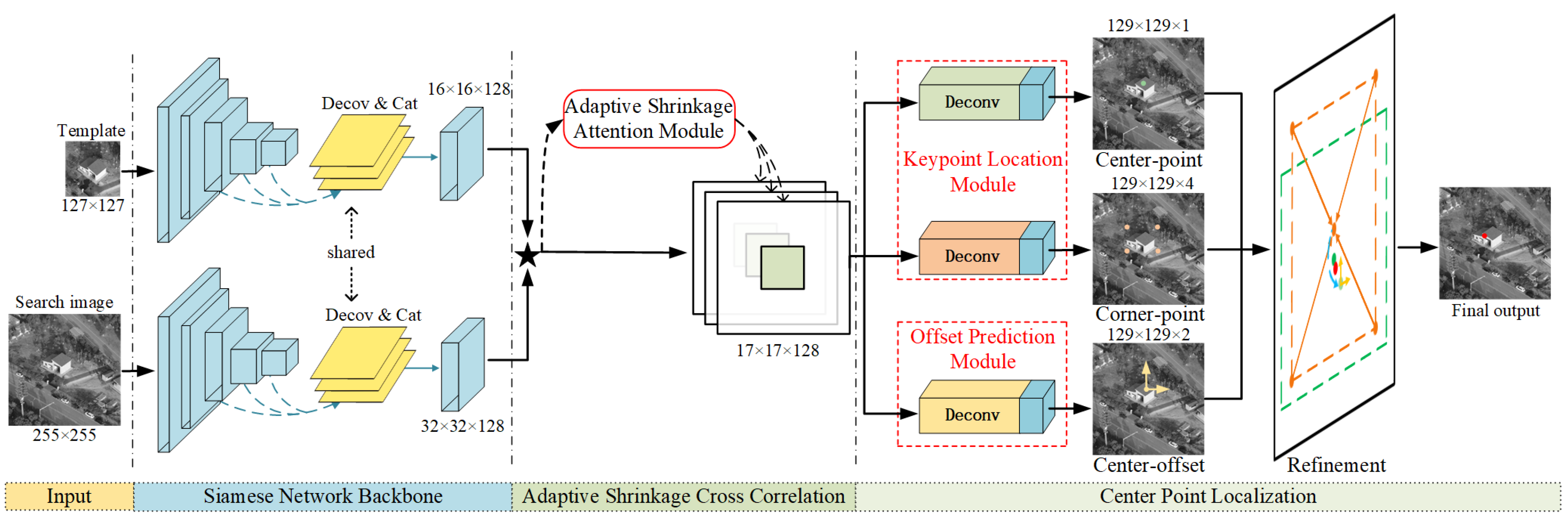

2. Materials and Methods

2.1. Template Matching Methods

2.2. Fully Convolutional Siamese Networks

2.3. Attention Modules

2.4. Proposed Method

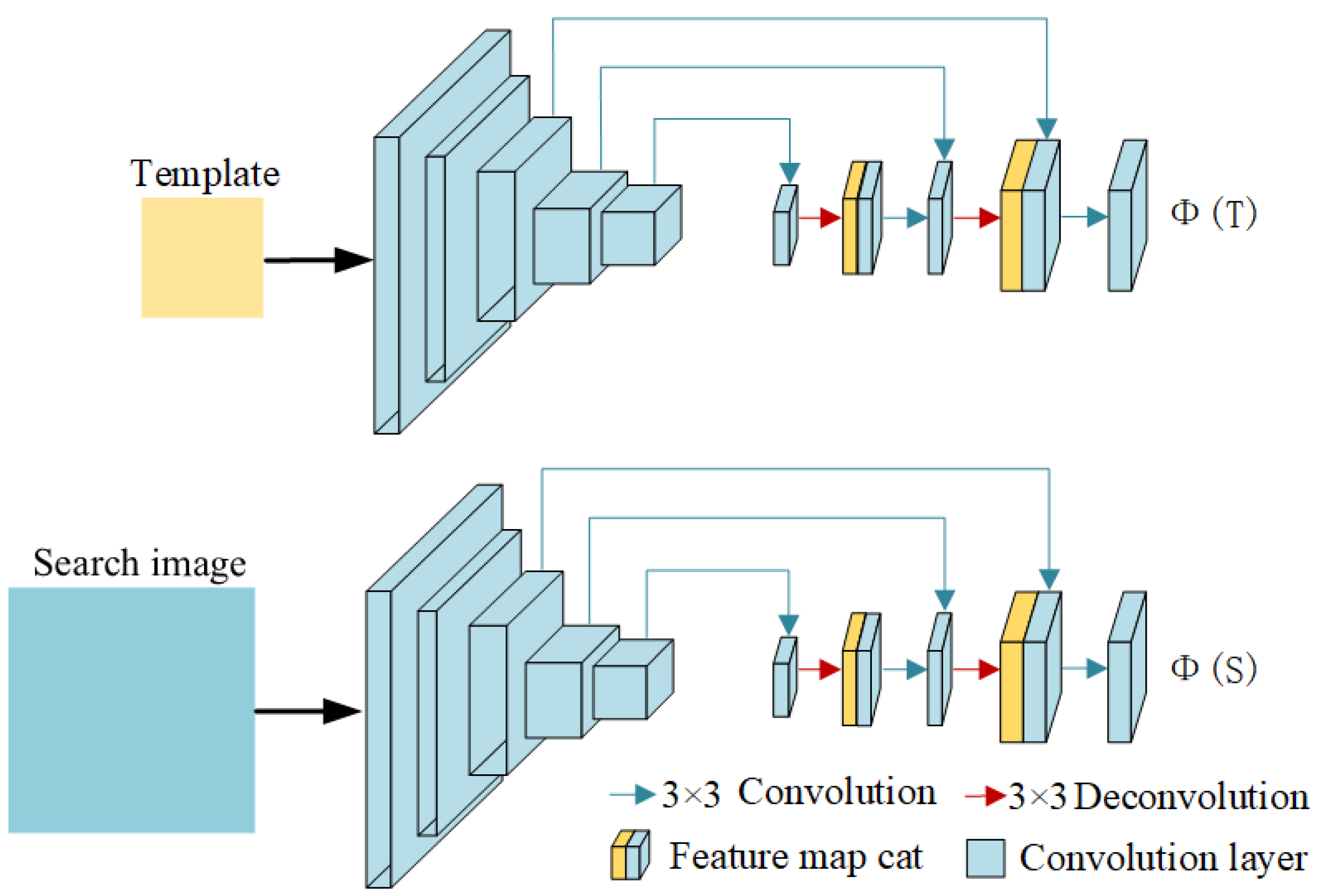

2.4.1. Siamese Network Backbone

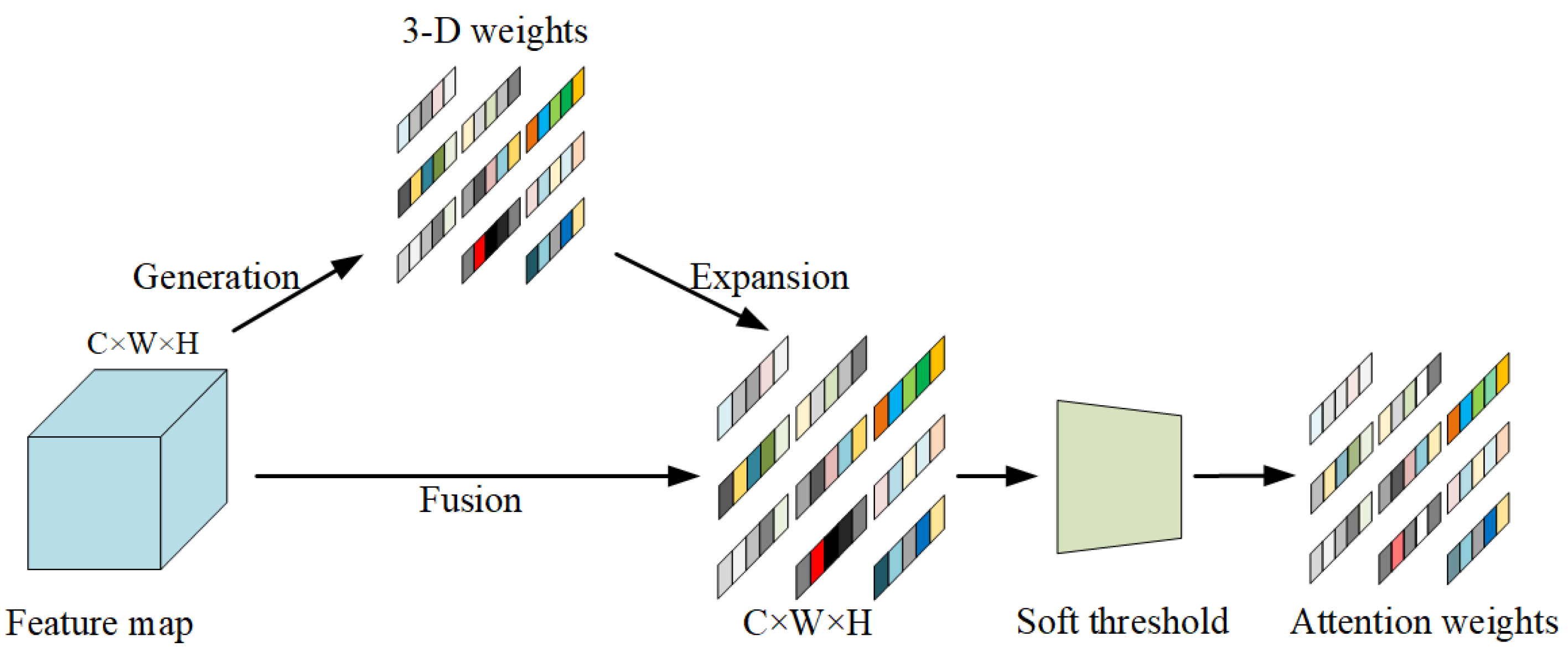

2.4.2. Adaptive Shrinkage Cross-Correlation

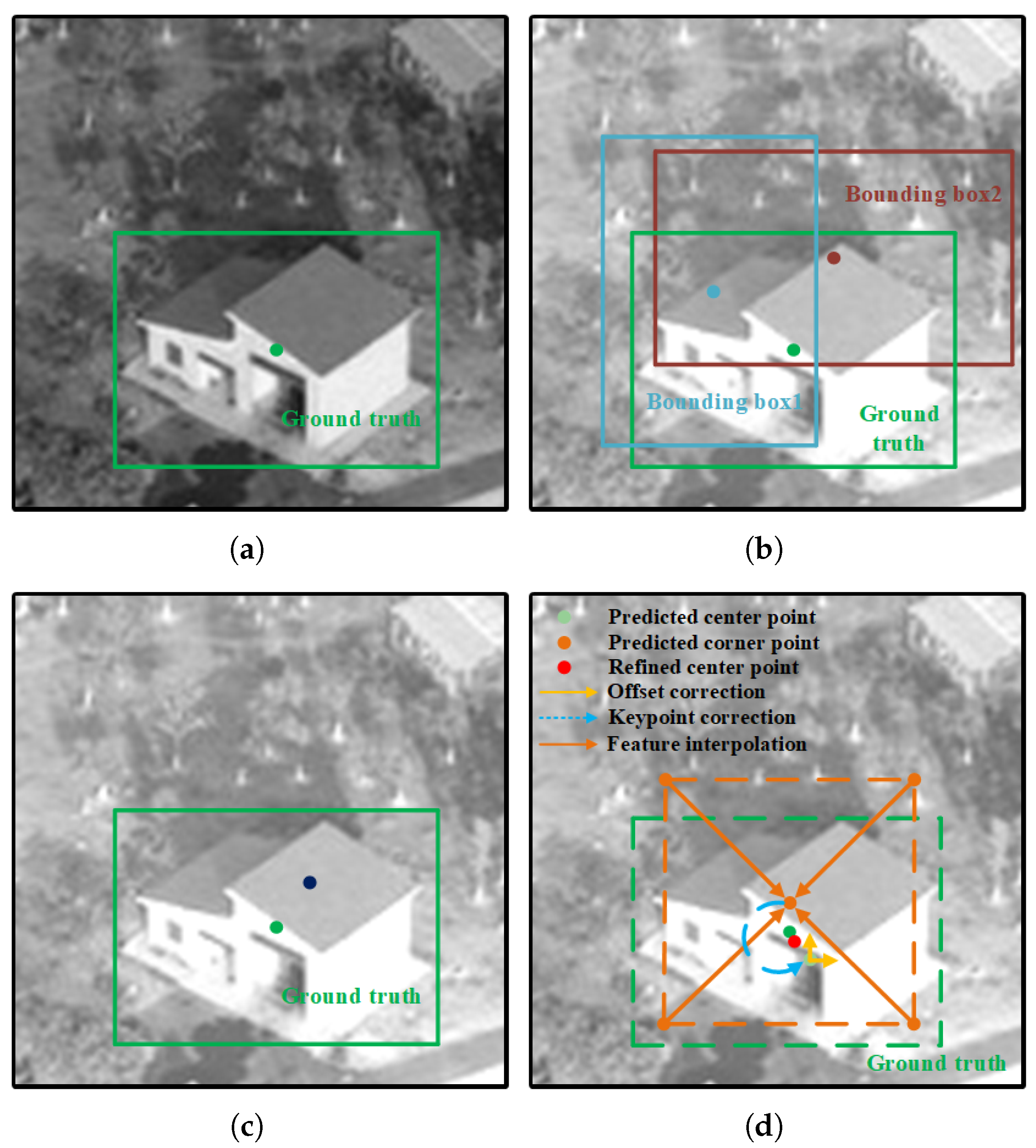

2.4.3. Center-Point Localization

2.4.4. Ground Truth and Loss

Algorithm 1 Center-Point Localization

3. Results

3.1. Implementation Details

3.1.1. Training

3.1.2. Testing

3.1.3. Evaluation Datasets

3.1.4. Evaluation Metrics

3.2. Comparison to State of the Art

3.2.1. Quantitative Evaluation

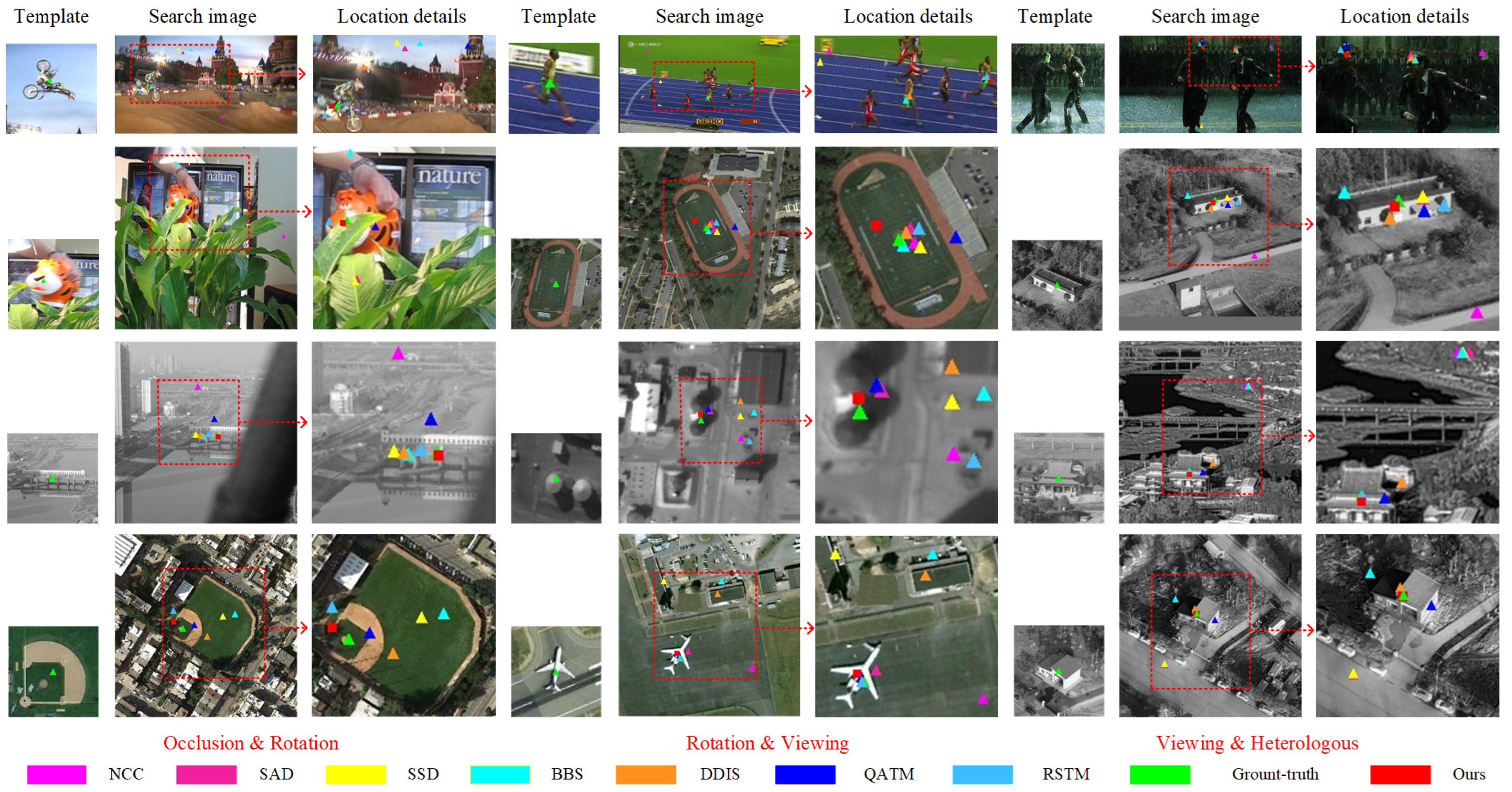

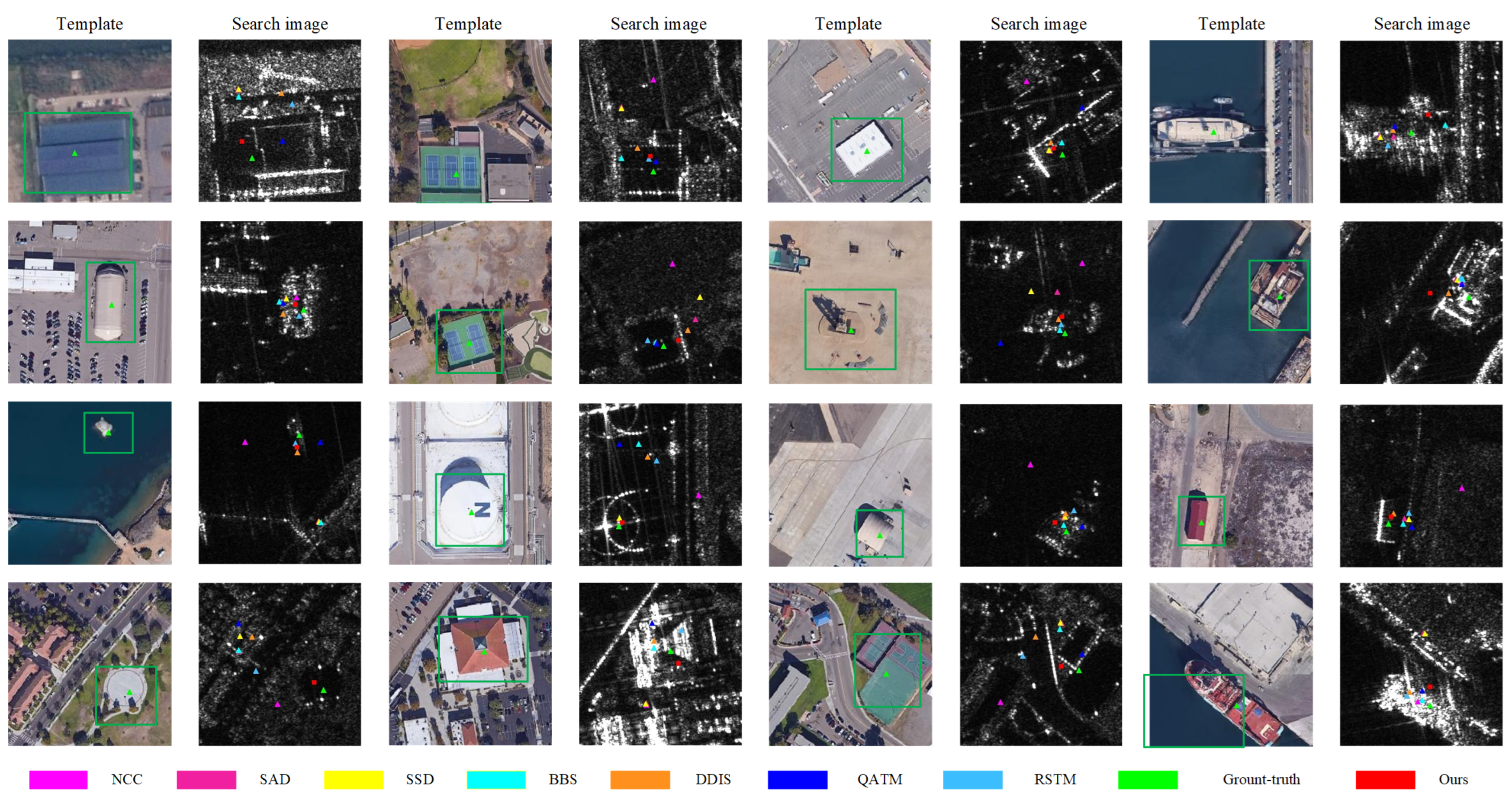

3.2.2. Qualitative Evaluation

3.3. Ablation Study

3.3.1. Ablation Study on Network Framework

3.3.2. Ablation Study on the Fine-Tuning Scheme

3.3.3. Ablation Study on Feature Concatenation Modules

3.3.4. Ablation Study on the Adaptive Shrinkage Attention Module

3.3.5. Ablation Study on the Detection Head Branch

3.3.6. Parameter Sensitivity Analysis

4. Discussion

4.1. The Advantages of Our Method

4.2. Limitations and Potential Improvements

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Block | Backbone | Search Branch Output Size | Template Branch Output Size |
|---|---|---|---|
| conv1, max pool, conv2_x, conv3_x, conv4_x, conv5_x | ResNet50 pretrained model | 8 × 8 | 4 × 4 |
| decode5 | 3 × 3, 128 | 8 × 8 | 4 × 4 |
| decode4 | | 16 × 16 | 8 × 8 |
| decode3 | | 32 × 32 | 16 × 16 |
| xcorr | cross-correlation | 17 × 17 | |
| deconv1 | 3 × 3, 128, | 33 × 33 | |
| deconv2 | 3 × 3, 128, | 65 × 65 | |
| deconv3 | 3 × 3, 128, | 129 × 129 | |
| conv_1, conv_2, conv_3 | 3 × 3, 1; 3 × 3, 1; 3 × 3, 1 | 129 × 129 | |
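The output sizes in the table above can be checked with a little arithmetic. The sketch below assumes that the cross-correlation is computed in valid mode, and that each deconvolution is a transposed convolution with stride 2 and padding 1. The stride and padding are assumptions chosen because they reproduce the listed sizes; the table itself only gives the 3 × 3 kernel and 128 channels. The helper names are hypothetical.

```python
def xcorr_size(search, template):
    """Side length of a valid-mode cross-correlation output."""
    return search - template + 1


def deconv_size(n, kernel=3, stride=2, padding=1, output_padding=0):
    """Side length after a transposed convolution (dilation 1).

    stride=2 and padding=1 are assumed here, not taken from the table.
    """
    return (n - 1) * stride - 2 * padding + kernel + output_padding


# decode3 outputs: 32 x 32 (search branch) and 16 x 16 (template branch).
size = xcorr_size(32, 16)
sizes = [size]
for _ in range(3):  # deconv1, deconv2, deconv3
    size = deconv_size(size)
    sizes.append(size)
print(sizes)
```

Under these assumptions the chain is 32 − 16 + 1 = 17 for the cross-correlation, and each deconvolution maps n to 2n − 1, giving 33, 65, and 129, which matches the table.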

| Method | OTB MCE | OTB SR5 | OTB SR10 | Hard350 MCE | Hard350 SR5 | Hard350 SR10 |
|---|---|---|---|---|---|---|
| SSD | 71.987 | 0.362 | 0.419 | 33.19 | 0.429 | 0.589 |
| NCC | 82.579 | 0.324 | 0.371 | 39.364 | 0.4 | 0.526 |
| SAD | 73.825 | 0.067 | 0.124 | 31.062 | 0.431 | 0.557 |
| BBS | 38.49 | 0.49 | 0.62 | 16.62 | 0.35 | 0.62 |
| DDIS | 26.53 | 0.51 | 0.69 | 13.89 | 0.3 | 0.54 |
| QATM | 29.967 | 0.543 | 0.724 | 12.42 | 0.163 | 0.523 |
| RSTM | 14.191 | 0.495 | 0.629 | 11.116 | 0.489 | 0.751 |
| Ours | 5.263 | 0.79 | 0.924 | 9.92 | 0.5 | 0.82 |
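To make the column headers concrete, here is a minimal sketch of how such metrics are commonly computed. It assumes that MCE is the mean center error in pixels (Euclidean distance between predicted and ground-truth centers), and that SR5 and SR10 are the fractions of test pairs whose center error is at most 5 and 10 pixels. These definitions, the inclusive threshold, and the function names are assumptions for illustration, not taken from the table.

```python
import numpy as np


def center_errors(pred, gt):
    """Euclidean distance between predicted and ground-truth centers.

    pred, gt: sequences of (x, y) points with the same length.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return np.linalg.norm(pred - gt, axis=1)


def mean_center_error(pred, gt):
    """Assumed MCE: average center error over all test pairs."""
    return float(center_errors(pred, gt).mean())


def success_rate(pred, gt, threshold):
    """Assumed SR_t: fraction of pairs with center error <= threshold."""
    return float((center_errors(pred, gt) <= threshold).mean())


# Toy data, not results from the paper.
pred = [(0, 0), (3, 4), (10, 0), (0, 20)]
gt = [(0, 0), (0, 0), (0, 0), (0, 0)]
mce = mean_center_error(pred, gt)
sr5 = success_rate(pred, gt, 5)
sr10 = success_rate(pred, gt, 10)
```

On the toy data the center errors are 0, 5, 10, and 20 pixels. Under these definitions a lower MCE and a higher SR indicate better localization, which is how the table rows are read.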

| Fusion / PFA / Head | MCE | SR5 | SR10 |
|---|---|---|---|
| √ | 7.172 | 0.743 | 0.895 |
| √ | 11.597 | 0.4 | 0.667 |
| √ | 13.086 | 0.371 | 0.648 |
| √ √ | 6.083 | 0.762 | 0.914 |
| √ √ | 9.176 | 0.733 | 0.848 |
| √ √ | 11.925 | 0.429 | 0.648 |
| √ √ √ | 5.263 | 0.79 | 0.924 |

| L3 / L4 / L5 | MCE | SR5 | SR10 |
|---|---|---|---|
| √ √ √ | 61.269 | 0.057 | 0.076 |
| √ | 45.27 | 0.171 | 0.257 |
| √ √ | 39.898 | 0.219 | 0.371 |
| √ √ | 8.119 | 0.743 | 0.857 |
| √ √ | 7.997 | 0.724 | 0.857 |
| √ | 7.053 | 0.743 | 0.886 |
| √ | 5.263 | 0.79 | 0.924 |

| D3 / D4 / D5 / PFA / Center / Corner / Offset | MCE |
|---|---|
| √ √ | 9.287 |
| √ √ | 7.317 |
| √ √ | 13.77 |
| √ √ √ | 7.506 |
| √ √ √ | 7.175 |
| √ √ √ | 7.023 |
| √ √ √ √ | 6.649 |
| √ √ √ √ √ | 6.083 |
| √ √ √ √ √ √ | 5.496 |
| √ √ √ √ √ √ | 5.745 |
| √ √ √ √ √ √ √ | 5.263 |

| | | | MCE (OTB) | MCE (Hard350) |
|---|---|---|---|---|
| 0.5 | 1.0 | 1.0 | 7.27 | 15.099 |
| 1.0 | 0.5 | 1.0 | 7.962 | 11.589 |
| 1.0 | 1.0 | 0.5 | 7.997 | 12.171 |
| 1.0 | 0.5 | 0.5 | 6.45 | 9.521 |
| 0.5 | 1.0 | 0.5 | 6.808 | 10.964 |
| 0.5 | 0.5 | 1.0 | 6.35 | 12.028 |
| 1.0 | 1.0 | 1.0 | 5.263 | 9.92 |

| | 0 | 0.2 | 0.4 | 0.6 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|---|
| MCE | 10.233 | 8.733 | 7.292 | 6.161 | 5.397 | 5.263 | 5.404 |
| SR5 | 0.352 | 0.448 | 0.524 | 0.638 | 0.733 | 0.79 | 0.781 |
| SR10 | 0.648 | 0.714 | 0.781 | 0.829 | 0.914 | 0.924 | 0.924 |

| Method | SSD | NCC | SAD | BBS | DDIS | QATM | RSTM | Ours |
|---|---|---|---|---|---|---|---|---|
| Speed (s/pair) | 1.452 | 0.004 | 2.171 | 24.080 | 2.717 | 1.094 | 0.017 | 0.037 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, J.; Zheng, Y.; Xu, W.; Sun, P.; Bai, S. An Accurate and Robust Multimodal Template Matching Method Based on Center-Point Localization in Remote Sensing Imagery. Remote Sens. 2024, 16, 2831. https://doi.org/10.3390/rs16152831