Abstract

The ability to accurately map tropical wetland dynamics can contribute significantly to a number of areas, including food and water security, protection and enhancement of ecosystems, flood hazard management, and our understanding of natural greenhouse gas emissions. Yet there is currently no tractable solution for mapping tropical forested wetlands at high spatial and temporal resolutions at a regional scale. This means that we lack accurate and up-to-date information about some of the world’s most significant wetlands, including the Amazon Basin. RadWet-L is an automated machine-learning classification technique for mapping both inundated forests and open water using ALOS-2 PALSAR-2 ScanSAR data. We applied and validated RadWet-L for the Amazon Basin. The proposed method is computationally light and transferable across the range of landscape types in the Amazon Basin, allowing, for the first time, regional inundation maps to be produced every 42 days at 50 m resolution over the period 2019–2023. Time series estimates of inundation extent from RadWet-L were significantly correlated with NASA-GFZ GRACE-FO equivalent water thickness (Pearson’s r = 0.96, p < 0.01), USDA G-REALM lake height (Pearson’s r between 0.63 and 0.91, p < 0.01), and in situ river stage measurements (Pearson’s r between 0.78 and 0.94, p < 0.01). Additionally, an evaluation at 11,162 points against the input ScanSAR data revealed spatial and temporal consistency in the approach (F1 score = 0.97). Serial classifications of ALOS-2 PALSAR-2 ScanSAR data by RadWet-L can provide unique insights into the spatio-temporal inundation dynamics within the Amazon Basin. Understanding these dynamics can inform policy on the sustainable use of these wetlands, as well as our understanding of the impacts of inundation dynamics on biodiversity and greenhouse gas budgets.

1. Introduction

Natural wetlands represent a major source of methane, a greenhouse gas (GHG) responsible for about 20% of the warming induced by long-lived GHGs since the Industrial Revolution [1,2]. Recent work has shown that inter- and intra-annual variation in wetland inundation can have significant effects on methane emissions, but this is poorly understood at the global scale, largely due to a lack of accurate data on wetland inundation dynamics [3,4]. Wetlands are also significant ecosystems and arguably some of the most biologically diverse regions on the globe [5,6]. Indeed, recent work showed that a large proportion of tree diversity across the Amazon exists within forested floodplains [6]. Equally, millions of people rely upon wetlands like the Amazon for maintaining fish stocks, agricultural zones, and water resources [7,8]. If we are to protect these important ecosystems, we need to understand how they are changing over time and space [6] and, in particular, how wetlands like those of the Amazon Basin respond to climatic shocks such as El Niño. Satellite Earth Observation is an important tool for mapping and quantifying wetland inundation dynamics at the basin scale.

Given its capacity for wide coverage and repeated observations, satellite remote sensing is the most feasible way to map wetland inundation dynamics. Passive and active microwave systems, GNSS (Global Navigation Satellite System) altimeters, and optical systems have all previously been used at various temporal and spatial scales [9,10,11,12,13,14,15]. Optical remote sensing systems are inherently limited when mapping inundation within tropical wetland ecosystems, as vegetation canopy cover can obscure the signal from the ground surface, making the detection of inundation difficult or impossible. Additionally, optical imagery is affected by cloud cover, which is often persistent in tropical regions, particularly during the wet season. Microwave remote sensing systems are not affected by cloud cover and can image by day and by night. Furthermore, under certain conditions and for certain sensor types, microwave pulses from radar systems can penetrate vegetation canopy cover, enabling the detection of standing water beneath the canopy.

Passive microwave systems are capable of detecting inundation under vegetation canopies due to a reduction in microwave emissivity where surface water is present, but only at a coarse spatial resolution (~25 km) [13,14,15]. Such attempts have mainly employed the Special Sensor Microwave/Imager (SSM/I) and the Special Sensor Microwave Imager Sounder (SSMIS), operated by the US Defense Meteorological Satellite Program, producing the SWAMPS and GIEMS products [13,14] that provide global monthly inundation estimates at 25 km resolution. Satellite EO approaches using thermal imaging [16] or signals from global navigation systems such as CYGNSS [17] can map both open water and inundated vegetation at spatial resolutions of about 1.1 km. These data provide high temporal frequency maps of inundation, revealing important intra- and inter-annual trends. However, their relatively coarse spatial resolution means that hydrological features that may be key to routing water through a wetland system, such as anabranching channels and smaller tributaries, might not be captured [18]. As such, these data cannot be used to understand the hydrological processes that govern wetland inundation dynamics. Efforts have been made to downscale the GIEMS dataset to create the GIEMS-D3 product [15], providing average yearly minimum and maximum global inundation extent at 90 m resolution. Despite the improved spatial resolution, this product lacks the monthly granularity of the original GIEMS product, limiting its use for quantifying temporal dynamics in inundation.

Active Synthetic Aperture Radar (SAR) systems can provide observations of inundation at much higher spatial resolution (i.e., 3–100 m) than passive systems. Open water acts as a specular-like reflector of an incident radar pulse, providing a distinctly low backscatter signal [9,10,11,12,19,20,21,22,23,24,25]. Therefore, SAR imagery has been widely used for mapping open water (i.e., without emergent or floating vegetation), particularly in the context of floodwater mapping [19,20,21,22].

The potential for SAR systems to detect inundation underneath vegetation canopies is due to a double-bounce interaction between the vertical structure of the vegetation and the water surface beneath [9,10,12,24,25,26,27]. In forested wetlands in the Amazon Basin, this mechanism was demonstrated by Rosenqvist et al. [28] by comparing L-band backscatter signals from the JERS-1 system with transects of field observations within seasonally flooded (igapó) forests. The circumstances under which this backscatter mechanism occurs depend on a number of factors but are largely a function of the wavelength and polarisation used by the SAR system and the type of vegetation that is inundated. For instance, C-band (wavelength ~5.5 cm) systems have the potential to detect inundation within herbaceous plant communities [24,25], and L-band (wavelength ~23.5 cm) systems have the potential to detect inundation in forested environments. The double-bounce effect occurs only in the co-polarised channels of radar imagery (HH or VV) [9,10,11,12,14,28,29,30,31,32,33].

Several previous studies have used L-band SAR for mapping inundated forests within the Amazon River Basin, including those using the JERS-1 SAR [10,11,28,34], ALOS PALSAR [12,29,30,31,32,33], and ALOS-2 PALSAR-2 [9,35] systems. These studies have produced inundation maps at various temporal and spatial scales. Attempts to map the entire Amazon River Basin have produced annual dual-season inundation maps [9,10,11,28]. These products typically capture the broader temporal characteristics of inundation (dry and wet season inundation extents). Chapman et al. [12] also examined inundation dynamics on an intra-annual basis, but this was limited to particular sub-catchments of the Amazon River Basin over a relatively short period, considering observations made on six dates between January and August 2007. Intra-annual dynamics have been shown to be important in other large wetland systems, where spatial and temporal dynamics have been linked to global scale circulation patterns [4], enabling delineation of areas vulnerable to drought or flood [24]. To date, there are no high-frequency maps (multiple observations per year) of inundation for the Amazon at the basin scale.

Previous assessments of inundation across the Amazon Basin using L-band SAR have used rule-based or decision-tree approaches: rules defining areas of inundation with reference to a multi-temporal mean backscatter [12], decision-tree classification of multi-temporal backscatter and statistical composite imagery [9], or high- and low-water season composites calculated from imagery acquired during the expected high- and low-water periods [10]. Whilst these products are of great value for ecosystem mapping, they cannot be used to fully assess the inherent inter- and intra-annual inundation dynamics of forested wetlands in response to longer-term changes in rainfall, land use, and water resource use, or to shorter-term shocks resulting from events like El Niño. To achieve this level of temporal granularity (e.g., quarterly or monthly maps of inundation), new approaches are needed that identify inundation on a scene-by-scene basis.

An automated machine-learning approach has been developed that can detect inundation within herbaceous wetlands using serial Sentinel-1 C-band imagery [24,25]. This approach exploits a process-based understanding of seasonal inundation dynamics to automatically define training data for open water and inundated vegetation pixels using a series of rules applied to metrics that characterise the temporal variability of the backscatter signal, as well as the backscatter of the image being classified. This approach, termed RadWet (referred to as RadWet-C in this paper), was designed to detect inundated vegetation through the assumption that seasonal inundation will lead to high variability in backscatter throughout the time series (typically the whole archive of the SAR dataset) and relatively high backscatter during the high-water season (owing to the double-bounce backscatter mechanism) compared to the low-water season [24].

However, RadWet-C can only be used to detect inundation in herbaceous vegetation, because the relatively short C-band wavelength cannot penetrate dense canopies and therefore cannot detect inundation in forested wetlands. The aim of this research is to develop a new approach, termed RadWet-L, that can generate serial maps of inundation within forested wetlands. This paper presents and evaluates the RadWet-L approach for mapping inundation across the entire Amazon River Basin using serial ALOS-2 PALSAR-2 L-band imagery from 2019 to late 2023.

2. Materials and Methods

2.1. Study Area

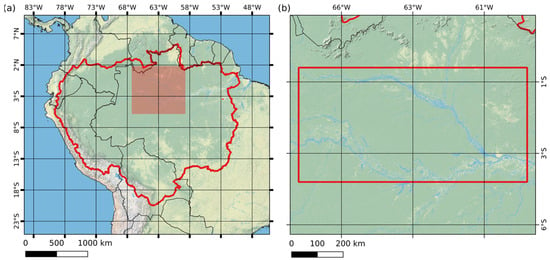

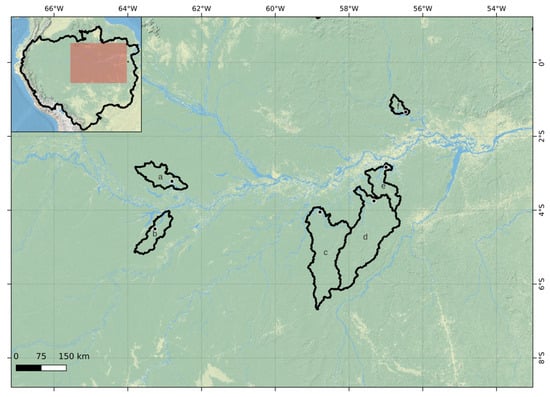

The Amazon River Basin was selected as the study area for the development of the RadWet-L algorithm (Figure 1). The drainage basin covers approximately 5.95 million km², of which close to 10% consists of permanently or seasonally inundated environments [9]. The central part of the basin is dominated by a lowland floodplain with forested (várzea, igapó) wetlands with an evergreen canopy, along with smaller areas of herbaceous or low-vegetation wetlands.

Figure 1.

(a) Map of the Amazon River Basin to which the RadWet-L image classification was applied and evaluated. (b) Area within the Central Amazon Basin (indicated by the red box) where training data was generated, and algorithm testing and development was carried out.

The central part of the basin was chosen for initial RadWet-L algorithm testing and development. The algorithm was subsequently applied to the full basin for assessment of classification performance and comparison with datasets from other studies.

2.2. Datasets

ALOS-2 PALSAR-2 Mosaic Data

The ALOS-2 PALSAR-2 L-band imaging radar, operated by the Japan Aerospace Exploration Agency (JAXA), acquires imagery across the tropical belt in ScanSAR mode with a 350 km swath every 42 days, as per the PALSAR-2 Basic Observation Scenario [36]. The data used in this study were provided through JAXA’s ALOS Kyoto & Carbon (K&C) Initiative [37] in 1 × 1 degree tiles (n = 1794), processed to the CEOS Analysis Ready Data standard [38] and expressed as gamma nought (γ⁰). The HH and HV backscatter and the local incidence angle images are used in the image classification, together with a Normalised Polarisation Difference Index (NPDI) band defined as (HH-HV)/(HH+HV) [23].
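As a minimal sketch of the NPDI band defined above, the index can be computed per pixel with NumPy. This assumes co-registered HH and HV arrays in the same units; the text does not state whether linear γ⁰ or dB values are used, so the function simply applies the formula to whatever it is given. The function name is illustrative.

```python
import numpy as np


def npdi(co_pol, cross_pol):
    """Normalised Polarisation Difference Index: (co - cross) / (co + cross).

    Works for ALOS-2 (HH, HV) or Sentinel-1 (VV, VH) pairs. Assumption: both
    inputs are co-registered arrays in the same units (linear or dB).
    """
    co_pol = np.asarray(co_pol, dtype=np.float64)
    cross_pol = np.asarray(cross_pol, dtype=np.float64)
    denom = co_pol + cross_pol
    out = np.full(denom.shape, np.nan)
    # Pixels where the denominator is zero (e.g. no-data fill) stay NaN.
    np.divide(co_pol - cross_pol, denom, out=out, where=denom != 0)
    return out
```

The same function covers the Sentinel-1 variant, (VV-VH)/(VV+VH), described in Section 2.3.1.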

As all SAR imagery is susceptible to speckle resulting from random signal-target interaction, a 3 × 3 pixel Lee filter was applied to the input imagery to reduce speckle. All available 1 × 1 degree tiles intersecting the Amazon Basin boundary (defined by HydroBASINS) between 2019 and mid-2023 were used for image classification.
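The exact Lee filter formulation used is not stated. The sketch below shows the classic Lee filter for multiplicative speckle, assuming linear-power input and a speckle coefficient of variation of 1/√L for L looks; the `looks` parameter and its default are assumptions, not values from this study.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def lee_filter(img, size=3, looks=1.0):
    """Classic Lee filter for multiplicative speckle on linear-power imagery.

    Assumptions: the input is linear gamma-nought and the speckle squared
    coefficient of variation is 1 / looks.
    """
    img = np.asarray(img, dtype=np.float64)
    # Local mean and variance in a size x size moving window.
    mean = uniform_filter(img, size=size, mode="reflect")
    mean_sq = uniform_filter(img * img, size=size, mode="reflect")
    var = np.maximum(mean_sq - mean * mean, 0.0)

    # Squared coefficient of variation of the local window (ci2) and of speckle (cu2).
    ci2 = np.zeros_like(img)
    np.divide(var, mean * mean, out=ci2, where=mean != 0)
    cu2 = 1.0 / looks

    # Weight -> 0 in homogeneous areas (output = local mean),
    # -> 1 at edges or point targets (output = original pixel).
    ratio = np.full_like(img, np.inf)
    np.divide(cu2, ci2, out=ratio, where=ci2 > 0)
    weight = np.clip(1.0 - ratio, 0.0, 1.0)
    return mean + weight * (img - mean)
```

Because the weight adapts to local heterogeneity, homogeneous areas such as open water are smoothed strongly while sharp boundaries (e.g. river banks) are largely preserved.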

2.3. Auxiliary Datasets

Auxiliary datasets used for training data generation and as independent variables in the classification procedure are summarised in Table 1 and described below.

Table 1.

Summary of datasets used by RadWet-L.

2.3.1. Sentinel-1

To assist in the detection of open water, ESA Sentinel-1 C-band radar imagery was used, provided as monthly median backscatter composites of Radiometrically Terrain Corrected (RTC) data expressed as gamma nought [44].

Sentinel-1 VV, VH, and Normalised Polarisation Difference Index (NPDI) data were generated for the central Amazon region using the Microsoft Planetary Computer [39]. In this case, NPDI is defined as (VV-VH)/(VV+VH).

2.3.2. Slope

The slope angle was calculated from the HydroSheds Hydrologically Conditioned DEM [45] for the whole of the Amazon Basin within Google Earth Engine, and then the data were exported as a GeoTIFF image.
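The slope layer was produced in Google Earth Engine. For readers working outside Earth Engine, a rough NumPy equivalent is sketched below. It assumes a DEM already reprojected to a metric grid (pixel sizes are inputs), and its central-difference scheme may differ slightly from Earth Engine’s own slope algorithm; the function name is illustrative.

```python
import numpy as np


def slope_degrees(dem, pixel_size_x, pixel_size_y):
    """Slope angle in degrees from a DEM using central differences.

    Assumption: the DEM is on a projected grid with pixel sizes in the same
    units as elevation (metres). A DEM in geographic degrees would first
    need its pixel spacing converted to metres.
    """
    dem = np.asarray(dem, dtype=np.float64)
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (columns, x).
    dz_dy, dz_dx = np.gradient(dem, pixel_size_y, pixel_size_x)
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
```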

2.3.3. Height above Nearest Drainage (HAND)

The MERIT Hydro Height Above Nearest Drainage (HAND) [41,46] layer was used as a classification predictor variable. HAND indicates a pixel’s height relative to its nearest drainage point and can be used to determine the likelihood of a pixel experiencing inundation [46]. Pixels with low HAND values are assumed to be more likely to store water and to be susceptible to flooding [46].

The HAND layer, developed from the MERIT DEM and associated hydrological layers, showed an improvement over the HAND layer produced by HydroSheds [41]. Visual analysis of the HydroSheds-derived HAND layer showed anomalies and inaccuracies across the Amazon Basin, and consequently the MERIT HAND layer was used.

2.3.4. Copernicus Global Landcover

The ESA Copernicus Global Land Cover Collection 3 [42], derived from ESA’s Proba-V mission data, was acquired over the Amazon Basin and used to generate a binary mask representing areas considered to be suitable or unsuitable for extracting ‘pure’ inundation training data. Specifically, areas defined as (a) Urban, (b) Herbaceous Vegetation, (c) Cultivated/Managed Vegetation, and (d) Bare/Sparse Vegetation were all considered unsuitable. Conversely, landcover types referring to forest cover, shrubs, or water bodies were considered suitable areas for extracting open water and inundated forest training data.

2.3.5. Global Forest Change

Visual analysis of the Copernicus Global Landcover Collection 3 data showed some underestimation of the extent of shrubland as well as managed and herbaceous vegetation. This underestimation is likely due to deforestation and land use change since the Copernicus Global Landcover layer was completed in 2019. As such, the Global Forest Watch Change [43] loss year data was used to identify areas of deforestation by creating a binary forest loss layer indicating deforestation since 2016. This binary layer was combined with the Copernicus Global Landcover mask to flag recently deforested areas, creating a comprehensive mask of areas unsuitable for extracting inundation training data.
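The combined mask described in Sections 2.3.4 and 2.3.5 can be sketched as a per-pixel Boolean operation. The Copernicus class codes and the encoding of the Global Forest Change loss-year band (0 for no loss, otherwise year minus 2000) below are assumptions to be checked against the respective product documentation; the function name is illustrative.

```python
import numpy as np

# Assumed CGLS-LC100 discrete-classification codes for the classes the text
# lists as unsuitable; verify against the product manual before use.
UNSUITABLE_CGLS_CODES = (
    50,  # Urban / built up
    30,  # Herbaceous vegetation
    40,  # Cultivated and managed vegetation
    60,  # Bare / sparse vegetation
)


def unsuitable_mask(cgls_class, gfc_lossyear, since_year=2016):
    """True where a pixel is unsuitable for extracting inundation training data.

    cgls_class: Copernicus land cover codes; gfc_lossyear: Global Forest Change
    loss-year band, assumed to be 0 for no loss and (year - 2000) otherwise.
    """
    cgls_class = np.asarray(cgls_class)
    gfc_lossyear = np.asarray(gfc_lossyear)
    landcover_unsuitable = np.isin(cgls_class, UNSUITABLE_CGLS_CODES)
    # Binary forest-loss layer: any loss recorded from since_year onwards.
    recent_loss = gfc_lossyear >= (since_year - 2000)
    return landcover_unsuitable | recent_loss
```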

2.4. Class Definitions

The typology for the classification is (a) Inundated Forest, (b) Open Water, and (c) Other Landcover. The following sub-sections describe these classes in more detail.

2.4.1. Inundated Forest

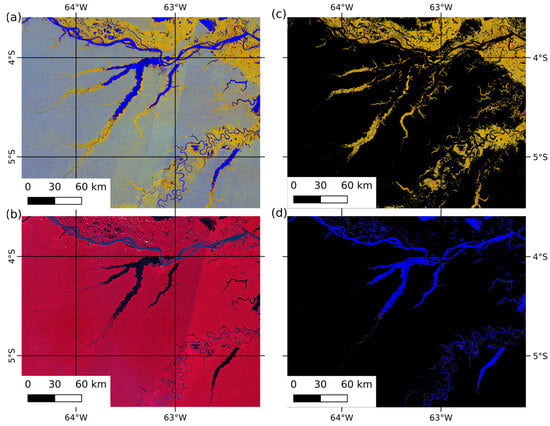

The definition of inundated forest is based on our previous work reported in Rosenqvist et al. [28]. As described earlier, this study revealed an association between field observations of inundation within Amazonian floodplain forests and a double-bounce backscatter signal in co-polarised L-band imagery. Specifically, the incident co-polarised radar pulse first reflects from the water surface and then from the vertical tree structure back to the sensor (or vice versa), resulting in strongly enhanced backscatter in the HH co-polarised channel [28]. This phenomenon has been the basis for several subsequent efforts to map inundation within forested wetlands (notably including the Amazon Basin) [9,10,11,12] and is illustrated in Figure 2.

Figure 2.

(a) ALOS-2 PALSAR-2 ScanSAR false colour composite (HH, HV, NPDI: Normalised Polarisation Difference Index) acquired between 12 and 25 August 2019; (b) reference optical Landsat 8 and Sentinel-2 false colour composite (NIR, Red, Green). Example areas defined as (c) inundated forest and (d) open water through visual interpretation of the ALOS-2 PALSAR-2 ScanSAR data. Images centre on the Coari region of the Amazon Basin.

This double-bounce backscatter mechanism preserves the transmitted polarisation and is therefore observed in the co-polarised channel only, whereas the cross-polarised (HV) channel is almost exclusively sensitive to volume scattering [26]. Following these principles, the NPDI between the HH and HV channels is the main parameter used to detect inundated vegetation in the ALOS-2 PALSAR-2 imagery, as it discriminates inundated forest from dry forest better than either channel in isolation.

2.4.2. Open Water

The incident radar pulse typically undergoes a single specular reflection on a smooth open water surface, resulting in little or no scattering of the signal back towards the SAR antenna. This in turn results in very low backscatter in both the co-polarised (HH) and cross-polarised (HV) channels, as shown in Figure 2d. Wind and rain can roughen the water surface relative to the SAR wavelength and therefore increase its backscatter [47]. However, this increase is predominantly within the HH polarisation and is normally small compared with the backscatter from vegetation [47]. The NPDI is therefore a valuable indicator of open water, as both bands show similarly low backscatter responses.

2.4.3. Other Landcover

Other Landcover is defined as any landcover that is neither inundated forest nor open water and that is not masked out as herbaceous vegetation or urban area by the Copernicus Global Landcover or Global Forest Watch Change masks.

2.5. Training Data Generation

RadWet-L follows a supervised machine-learning classification approach, in which training data is automatically generated in the central Amazon region, in the floodplain between the Negro and Solimões rivers west of their confluence at Manaus (Figure 1b). This region experiences a pronounced seasonal flood pulse and contains large areas of inundated forest and open surface water that vary in extent over the hydrological year. It also contains large areas of the other terrestrial classes, able to provide sufficient training data to be representative of the whole basin.

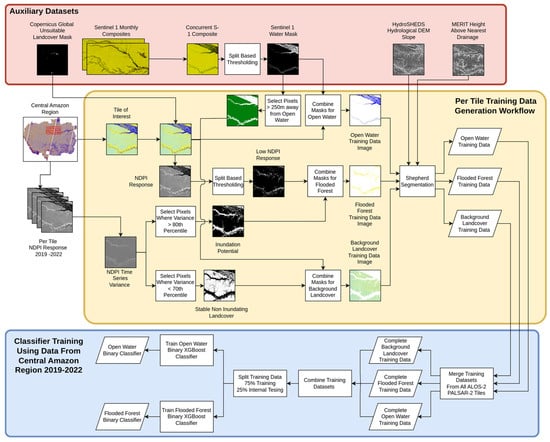

Training data was generated for two binary classifiers. One classifier was trained for the detection of Inundated Forest vs all other landcovers, and a second classifier was trained to map Open Water vs all other landcovers. This approach was adopted following [24], which demonstrated improved classification accuracy compared to a more complex multi-class classification system when applied to Sentinel-1 C-band imagery for classification of open water, inundated herbaceous vegetation, and background dry land in Western Zambia. Figure 3 provides a summary of the workflow for generating the training data as well as an overview of how this is used to train the two classifiers. Figure 4 shows the preparation of ALOS-2 PALSAR-2 tiles and the subsequent application of the trained classifiers. Details of the training and classification procedures are provided in the sections below.

Figure 3.

Flow diagram summarising the RadWet-L automatic training data generation process and classifier training.

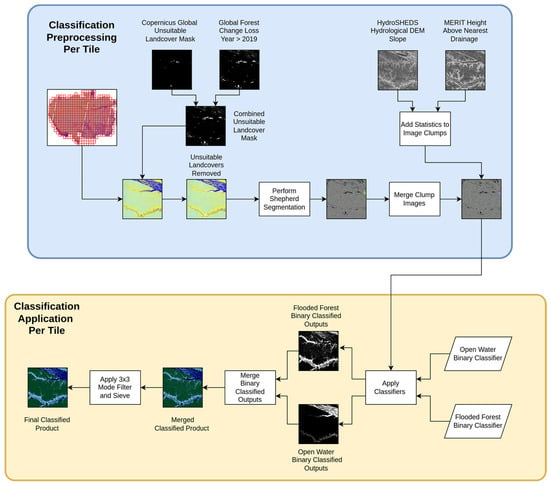

Figure 4.

Flow diagram outlining the data preparation and classifier application for each ALOS-2 PALSAR-2 tile in the Amazon River Basin.

Training data for each class is developed on a per 1 × 1 degree tile basis, for all tiles available over the central Amazon region between 2019 and 2022 (1794 tiles in total) covering a range of hydrological conditions. This comprehensive training dataset ensures that the subsequent classifier is capable of detecting inundation at any time of year within any part of the basin.

Training data was generated for each class by applying a rule-based approach that produces a mask for each class (described below). Shepherd image segmentation [48] was then applied to each class mask. The resulting segments were populated with summary statistics, and the training samples were exported to a Pandas dataframe [49] and stored as an HDF5 file. This object-based approach was used to reduce the influence of the inherent speckle within SAR images: isolated pixels that are particularly bright or dark compared with their surroundings are merged with neighbouring pixels into a single object. It also reduces the computing time required to perform a classification, as there are fewer objects to classify than pixels.

This process was applied to each tile, and for each class producing a structured data table of training samples for each tile. All the training samples from each tile were concatenated and shuffled, producing one larger training dataset that could be used to train and optimise the classifiers.

This approach improves on the original RadWet-C algorithm, which generates training data, trains two binary supervised machine-learning classifiers over 25 replicates each, and applies these classifiers on a per-scene basis [24]. RadWet-L instead generates training data from representative tiles and trains global classifiers that are applied to all subsequent tiles, removing the need to retrain new classifiers for each tile and reducing computational demand.

2.5.1. Open Water Training Data Mask

Open water training data was defined using Sentinel-1 C-band SAR image composites. The comparatively shorter wavelength of the C-band system provides better discrimination of ‘pure’ open water targets as it is more sensitive to ‘contamination’ from emergent vegetation or the edges/banks of water bodies compared to L-band imagery. Equally, C-band imagery is better able to discriminate between open water and other targets that exhibit low backscatter. For instance, in L-band imagery, flat grassland can appear similar to open water, but in C-band imagery, grassland has a higher backscatter than open water.

Monthly Sentinel-1 composites (10 m) were generated and downloaded from the Microsoft Planetary Computer [39]. Monthly median composites were used, rather than single observations, to reduce the influence of rain or wind-roughened water, which can affect the C-band VV backscatter signal. The composites were resampled to 50 m using nearest neighbour resampling to match the resolution and raster grid of the ALOS-2 PALSAR-2 data. Split-based image thresholding [24] was then applied to the VV band to generate a low-backscatter mask. This mask was then applied to the ALOS-2 PALSAR-2 ScanSAR image with the closest matching date to define open water training data. This enabled training data to be generated for a range of periods, representing a range of different hydrological conditions.
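The split-based thresholding of [24] is not specified in detail here, so the following is a simplified stand-in rather than the exact method. The image is divided into blocks; the most heterogeneous blocks (highest standard deviation, taken as a proxy for containing both water and land) are pooled, and an Otsu threshold is computed from them. A helper for picking the composite closest in time to a ScanSAR acquisition is included. Block size, `top_fraction`, and all function names are illustrative assumptions.

```python
import datetime as dt

import numpy as np


def otsu_threshold(values, bins=256):
    """Otsu threshold: maximise the between-class variance of a histogram."""
    values = values[np.isfinite(values)]
    hist, edges = np.histogram(values, bins=bins)
    centres = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist).astype(np.float64)      # pixel count at or below each bin
    w1 = w0[-1] - w0                              # pixel count above each bin
    s0 = np.cumsum(hist * centres)
    m0 = s0 / np.maximum(w0, 1.0)                 # mean of the lower class
    m1 = (s0[-1] - s0) / np.maximum(w1, 1.0)      # mean of the upper class
    between = w0 * w1 * (m0 - m1) ** 2
    # Upper edge of the last bin assigned to the lower class.
    return edges[np.argmax(between) + 1]


def split_based_threshold(img, block=100, top_fraction=0.1, bins=256):
    """Simplified split-based threshold (illustrative, not the exact method of [24])."""
    rows, cols = img.shape
    blocks = [img[r:r + block, c:c + block]
              for r in range(0, rows, block) for c in range(0, cols, block)]
    spread = np.array([np.nanstd(b) for b in blocks])
    n_keep = max(1, int(np.ceil(top_fraction * len(blocks))))
    keep = np.argsort(spread)[-n_keep:]           # most heterogeneous blocks
    pooled = np.concatenate([blocks[i].ravel() for i in keep])
    return otsu_threshold(pooled, bins=bins)


def low_backscatter_mask(vv_db, **kwargs):
    """Open-water candidate mask from a (dB) VV composite, plus the threshold used."""
    threshold = split_based_threshold(vv_db, **kwargs)
    return vv_db < threshold, threshold


def closest_composite(acquired: dt.date, composite_dates) -> dt.date:
    """Pick the composite date nearest to a ScanSAR acquisition date."""
    return min(composite_dates, key=lambda d: abs(d - acquired))
```

Pooling only heterogeneous blocks keeps the histogram bimodal even when water covers a small fraction of the full tile, which is the motivation for splitting the image in the first place.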

2.5.2. Inundated Forest Training Data Mask

Following the conceptual model outlined in Section 2.4.1, training data for the Inundated Forest class can be defined where there has been an increase in HH backscatter (due to the double bounce backscatter mechanism), but no increase in backscatter in the cross-polarised HV channel [28]. As such, time series metrics were used to generate a measure of inundation potential and a rule base was applied to each image tile to extract training data pixels. First, the normalised polarisation difference ratio of the HH and HV channels was calculated (NPDI) [23,24], designed to exploit the differences in HH and HV backscatter associated with a double-bounce backscatter signature.

Inundation potential was defined using the per-pixel NPDI variation through the time series of available ALOS-2 PALSAR-2 data. As per the conceptual model, it is assumed the most variable NPDI pixels through time are a result of seasonal inundation. Consequently, selecting all pixels with a per-pixel NPDI variance greater than the 80th percentile defined an area where inundation would be expected to occur within wet season imagery. The 80th percentile was selected using visual sensitivity analysis, being restrictive enough to select ‘pure’ inundated forest pixels, whilst sampling the range of backscatter characteristics within this class.
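The inundation potential layer can be sketched as a percentile cut on the per-pixel temporal variance of NPDI. This assumes that a tile’s NPDI images are already stacked into a co-registered (time, rows, cols) array with NaN for no-data; the function name is illustrative.

```python
import numpy as np


def inundation_potential(npdi_stack, percentile=80.0):
    """Mask of pixels whose NPDI variance through time exceeds the given percentile.

    npdi_stack: array of shape (time, rows, cols); NaNs are ignored.
    Returns (mask, per_pixel_variance).
    """
    variance = np.nanvar(npdi_stack, axis=0)
    threshold = np.nanpercentile(variance, percentile)
    return variance > threshold, variance
```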

A split-based thresholding technique [24] was applied to the NPDI band of each input ALOS-2 PALSAR-2 tile to calculate a threshold value used to separate areas of low NPDI value (corresponding to inundated forest) from other landcover. This was constrained by the inundation potential layer to ensure that subsequent training data samples include pixels that have a low NPDI value but are also highly variable in backscatter over time.

The sensitivity of a SAR system to inundation beneath a vegetation canopy also depends on the angle of incidence, which varies between 25.7° in the near range and 49.0° in the far range of a PALSAR-2 ScanSAR swath. Owing to the ALOS-2 orbit characteristics, areas in the overlap zones between neighbouring ScanSAR swaths are imaged twice within 5 days, within the same orbit cycle, at incidence angles differing by 10–15°. Smaller (steeper) incidence angles are more suitable than larger (shallower) ones, because the radar pulse has less vegetation canopy to travel through and therefore has a greater chance of obtaining a strong signal from beneath the canopy [26]. Conversely, at larger (shallower) incidence angles, the radar pulse is more susceptible to attenuation before any double-bounce scattering can occur [26]. As such, the incidence angle influences the backscatter values over inundated forests, particularly in the HH channel.

The reduced ability to detect inundated forests at larger incidence angles was addressed by increasing the NPDI threshold calculated by the split-based thresholding technique by 0.1 where the median incidence angle within the ALOS-2 PALSAR-2 ScanSAR tile is greater than 40°.
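Combining the scene threshold, the inundation potential layer, and the incidence-angle adjustment gives the candidate Inundated Forest training mask. The sketch below is a minimal illustration; the optional `unsuitable` argument (for the land cover and forest-loss mask of Sections 2.3.4 and 2.3.5) and all names are assumptions.

```python
import numpy as np


def inundated_forest_training_mask(npdi, npdi_threshold, potential, incidence_deg,
                                   unsuitable=None, angle_limit=40.0, offset=0.1):
    """Candidate Inundated Forest training pixels for one tile.

    npdi_threshold: split-based threshold computed for this tile's NPDI band.
    potential: Boolean inundation potential layer (high NPDI variance).
    """
    # Relax the threshold for tiles imaged mostly at shallow (large) incidence angles.
    if np.nanmedian(incidence_deg) > angle_limit:
        npdi_threshold = npdi_threshold + offset
    # Low NPDI in this scene AND high NPDI variability through the time series.
    mask = (np.asarray(npdi) < npdi_threshold) & np.asarray(potential, dtype=bool)
    if unsuitable is not None:
        mask &= ~np.asarray(unsuitable, dtype=bool)
    return mask
```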

2.5.3. Other Landcover Training Data Mask

Other landcover training data is defined where (i) the NPDI variance through the time series is less than the 70th percentile, (ii) the NPDI value in the scene is less than the threshold derived by the split-based thresholding, and (iii) the pixel is not within 5 pixels of any pixel identified as open water training data. Areas of other landcover are assumed to remain dry all year round (i.e., not seasonally inundated), and consequently their NPDI variance through time remains relatively low. The 70th percentile of NPDI variance was used as a threshold to select temporally stable pixels that do not experience regular inundation. Pixels selected where the NPDI variance is less than the 70th percentile were consistent with field observations of permanently non-inundated areas made in previous work [28] and could therefore be reliably used as training data samples.

A further rule was included where the other landcover training data are deemed not to exist within 5 pixels (250 m) of areas identified to be open water. This reduced the likelihood of areas of open water being included in the other landcover training data if there was an issue with the split-based thresholding applied to the Sentinel-1 VV band missing areas of open water.
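The three rules above can be sketched as follows. Rule (ii) is implemented exactly as worded in the text, the 5-pixel buffer is measured as Euclidean distance (an assumption), and the function name is illustrative.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def other_landcover_training_mask(npdi, npdi_threshold, npdi_variance, open_water,
                                  percentile=70.0, buffer_px=5):
    """Candidate Other Landcover training pixels for one tile."""
    npdi_variance = np.asarray(npdi_variance, dtype=np.float64)
    open_water = np.asarray(open_water, dtype=bool)
    # (i) Temporally stable: NPDI variance below the given percentile.
    stable = npdi_variance < np.nanpercentile(npdi_variance, percentile)
    # (ii) Scene NPDI compared with the split-based threshold, as worded in the text.
    below = np.asarray(npdi) < npdi_threshold
    # (iii) More than buffer_px pixels (5 px = 250 m at 50 m) from open-water training data.
    if open_water.any():
        # Distance from every pixel to the nearest open-water pixel.
        far_from_water = distance_transform_edt(~open_water) > buffer_px
    else:
        far_from_water = np.ones(open_water.shape, dtype=bool)
    return stable & below & far_from_water
```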

2.6. Image Segmentation

Following previous work [24,25], a segment-based image analysis approach was used, as this was shown to mitigate the single-pixel misclassification errors, driven by speckle noise, that occur with a pixel-based classification. ALOS-2 PALSAR-2 ScanSAR data are particularly susceptible to speckle noise due to the relatively low number of looks during data acquisition.

The image segmentation procedure aggregates single pixels into clumps of neighbouring pixels with a similar backscatter response, thereby eliminating isolated pixels with spuriously high or low backscatter values that might be incorrectly classified as Inundated Forest [48].

The Inundated Forest, Open Water, and other landcover training data masks for each tile (1794 in total) within the Central Amazon region underwent image segmentation using the Shepherd Segmentation Algorithm [48] within the Python library RSGISLib [50].

When the Shepherd segmentation is applied to the input ALOS-2 PALSAR-2 tiles, pixels within areas of open water tend to cluster into relatively large clumps owing to the homogeneous backscatter of open water. This resulted in relatively few segments that could be used for training data generation. Furthermore, the pixel statistics from these clumps (e.g., the mean and standard deviation of the HH and HV bands) are not statistically representative of the backscatter properties of open water, which tend to be much more variable (in the HH band). As such, this training data would lead to underestimation of the open water extent. To address this, the HV band was thresholded to create a low HV backscatter image and a high HV backscatter image. These images were then segmented independently, forcing the segmentation algorithm to create many clumps within the low HV backscatter image and better replicating how the training data was derived. The low and high backscatter segmented images were then merged into a single segmented image to be classified.
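The split-then-merge step can be sketched as below. Shepherd segmentation itself is not reproduced: `segment_fn` is a placeholder for it, and the default (connected-component labelling with `scipy.ndimage.label`) is only a hypothetical stand-in so that the label-merging logic can run.

```python
import numpy as np
from scipy import ndimage


def _connected_components(image, mask):
    """Hypothetical stand-in for Shepherd segmentation: label connected mask regions."""
    labels, _ = ndimage.label(mask)
    return labels


def segment_split_by_hv(hv, hv_threshold, segment_fn=_connected_components):
    """Segment low- and high-HV areas independently, then merge into one label image."""
    hv = np.asarray(hv, dtype=np.float64)
    low = hv < hv_threshold
    # Each call only sees its own part of the image; the rest is NaN.
    low_labels = segment_fn(np.where(low, hv, np.nan), low)
    high_labels = segment_fn(np.where(~low, hv, np.nan), ~low)
    # Offset high-HV segment IDs so that IDs stay unique after merging (0 = unlabelled).
    offset = int(low_labels[low].max()) if low.any() else 0
    high_part = np.where(high_labels > 0, high_labels + offset, 0)
    return np.where(low, low_labels, high_part)
```

In practice `segment_fn` would wrap the Shepherd segmentation from RSGISLib; only the merging logic is illustrated here.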

For each segment, the mean and standard deviation of the pixel values were calculated for (a) HH, (b) HV, (c) NPDI, (d) Incidence Angle, (e) Slope, and (f) Height Above Nearest Drainage (MERIT), and added to a Raster Attribute Table as classifier predictor variables. The Raster Attribute Table for each training dataset was exported as a pandas dataframe [50]. The dataframes for each of the ALOS-2 PALSAR-2 tiles were concatenated to generate three training datasets, one for each class. This process generated 12.3 million training samples for Inundated Forest, 3 million for Open Water, and 21 million for Other Landcover. All training samples were standardised (zero mean, unit variance) using the scikit-learn StandardScaler [51,52] so that all predictor variables were on a comparable scale.
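As a minimal sketch of the per-segment statistics, concatenation, and scaling steps, the following uses pandas and scikit-learn directly instead of a Raster Attribute Table. The column naming (e.g. `HH_mean`) and the function names are illustrative assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler


def segment_statistics(labels, bands):
    """Per-segment mean and standard deviation of each band (0 = no segment).

    bands: dict mapping a predictor name (e.g. "HH", "NPDI", "HAND") to an array
    with the same shape as labels. Returns one row per segment.
    """
    flat_labels = np.asarray(labels).ravel()
    valid = flat_labels > 0
    df = pd.DataFrame({name: np.asarray(arr, dtype=np.float64).ravel()[valid]
                       for name, arr in bands.items()})
    df["segment"] = flat_labels[valid]
    stats = df.groupby("segment").agg(["mean", "std"])   # std uses ddof=1
    stats.columns = [f"{band}_{stat}" for band, stat in stats.columns]
    return stats


def build_class_table(per_tile_tables, seed=0):
    """Concatenate per-tile segment tables for one class and shuffle the rows."""
    table = pd.concat(per_tile_tables, ignore_index=True)
    return table.sample(frac=1.0, random_state=seed).reset_index(drop=True)


def standardise(table):
    """Zero-mean, unit-variance scaling of every predictor column."""
    scaler = StandardScaler()
    return scaler.fit_transform(table.to_numpy()), scaler
```

Single-pixel segments get a NaN standard deviation with `ddof=1`. `StandardScaler` ignores NaNs when fitting and passes them through, and XGBoost treats NaN as a missing value.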

2.7. Classification Model Training

The eXtreme Gradient Boosting (XGBoost Version: 1.7.1) classifier was used for this analysis. XGBoost is a boosted tree machine-learning classification algorithm designed to be scaled for use on large datasets and is computationally more efficient than other machine-learning algorithms such as Random Forests [53]. XGBoost is also able to utilise GPU hardware acceleration, increasing model training speed [53].

The XGBoost classifier was used to generate two separate binary classifications: (1) for the detection of Inundated Forest, and (2) for the detection of Open Water. In both cases, all other land cover types are grouped into a single "other landcover" class. For the Inundated Forest classifier, the Open Water and other landcover training datasets were concatenated to create a single negative class (~24.1 million training samples), and the Inundated Forest training data were used as the positive class (~12.3 million samples).

For the Open Water classifier, the training data were prepared in the same way: the Open Water training dataset was used as the positive class, and the other landcover and Inundated Forest training data were concatenated to form the negative class.
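The assembly of the two binary (one-versus-rest) training sets can be sketched with pandas. The class keys, the `target` column and the feature values below are hypothetical placeholders.

```python
import pandas as pd


def one_vs_rest(class_tables, positive):
    """Stack per-class feature tables into X, y for one binary classifier.

    class_tables: dict mapping a class name to a DataFrame of per-segment features.
    positive: the class treated as the positive (1) case; all others become 0.
    """
    frames = []
    for name, table in class_tables.items():
        labelled = table.copy()
        labelled["target"] = int(name == positive)
        frames.append(labelled)
    data = pd.concat(frames, ignore_index=True)
    return data.drop(columns="target"), data["target"]


# Hypothetical per-segment features for the three training datasets
tables = {
    "inundated_forest": pd.DataFrame({"HH_mean": [-4.0, -3.5, -5.0]}),
    "open_water": pd.DataFrame({"HH_mean": [-25.0, -27.0]}),
    "other": pd.DataFrame({"HH_mean": [-8.0, -9.0, -7.5, -10.0, -8.5]}),
}
X_forest, y_forest = one_vs_rest(tables, "inundated_forest")
X_water, y_water = one_vs_rest(tables, "open_water")
```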

Table 2 shows Jeffries–Matusita (JM) distances calculated between the training datasets for the detection of each class. JM distances below 0.5 are generally considered to indicate poor class separability [54]. Consequently, classification predictor variables with a JM distance greater than 0.5 were selected, as shown in bold in Table 2. Some predictor variables with a JM distance below 0.5 were also included where there was a physical reason to do so. For the detection of Inundated Forest, the object mean Incidence Angle was included because the incidence angle of the radar pulse can directly influence the strength of the double-bounce backscatter. Including incidence angle as a predictor variable was expected to allow the XGBoost classifier to learn this interaction.

Table 2.

JM-Distances for each classification predictor variable. Variables used as independent variables in the classifier are shown in bold.

In the case of Open Water detection, the object mean and standard deviation of slope angle were also included, as open water would be expected to occur in areas of very low or zero slope. The standard deviation of HAND for each segment was also included, as pixels within a water object would be expected to have similar HAND values.
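For reference, a per-variable JM distance can be computed under the common assumption that each class is normally distributed, in which case it is derived from the Bhattacharyya distance B as JM = 2(1 - exp(-B)), ranging from 0 to 2. Some texts use the square root of this quantity instead (range 0 to √2); which form underlies Table 2 is not stated here, so the chosen form, the helper names and the synthetic features below are assumptions.

```python
import numpy as np
import pandas as pd


def jm_distance(a, b):
    """Univariate Jeffries-Matusita distance (range 0 to 2), assuming Gaussian classes."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    m1, m2 = a.mean(), b.mean()
    v1, v2 = a.var(), b.var()
    # Bhattacharyya distance between two univariate normal distributions
    bhatt = (m1 - m2) ** 2 / (4.0 * (v1 + v2)) + 0.5 * np.log((v1 + v2) / (2.0 * np.sqrt(v1 * v2)))
    return 2.0 * (1.0 - np.exp(-bhatt))


def select_predictors(positive, negative, threshold=0.5):
    """Columns whose JM distance between the two class tables exceeds the threshold."""
    return [col for col in positive.columns
            if jm_distance(positive[col], negative[col]) > threshold]


# Hypothetical per-segment features for a positive and a negative class
rng = np.random.default_rng(0)
pos = pd.DataFrame({"HV_mean": rng.normal(-14, 1, 500), "slope_mean": rng.normal(2, 1, 500)})
neg = pd.DataFrame({"HV_mean": rng.normal(-22, 1, 500), "slope_mean": rng.normal(2, 1, 500)})
selected = select_predictors(pos, neg)  # ["HV_mean"]
```

A variable with zero variance in either class would make the logarithm undefined, so constant features would need to be screened out first.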

The training data were split, with 75% being used to train the classifier and the remaining 25% reserved for internal model evaluation. Bayesian Optimisation was used to select the optimal hyperparameter set for the XGBoost classifier. The two binary classifiers were saved as trained models and applied to individual input ALOS-2 PALSAR-2 1° × 1° tiles.

2.8. Application of Classifiers

Input ALOS-2 PALSAR-2 tiles were pre-processed as described in Section 2.2, so that they are representative of the training data supplied to the classifier.

2.9. Data Filling

Classifications were undertaken on temporal stacks of ALOS-2 PALSAR-2 data, each representing a ScanSAR observation cycle of 42 days, with 9 such cycles per year. To handle areas of no data caused by missed acquisitions during certain cycles, a post-processing data-filling procedure was performed. Each classified product was examined in turn, and areas of no data were filled with class values based on each pixel's classified value in the preceding and subsequent observation cycles. Where the preceding and subsequent classified outputs had the same pixel value, this value was inserted into the no-data region.

When the preceding and subsequent classified outputs had differing values, the value used for filling was determined by the date of the current observation. The Amazon River Basin typically experiences peak inundation between April and July and peak dry conditions in October–November. Therefore, if the observation with no data was acquired between November and April, the classified value from the preceding observation was used; if it was acquired after April but before November, the classified value from the subsequent observation was used.
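The gap-filling rule in this section can be sketched with NumPy as follows. The integer class codes are hypothetical, and treating November and April as belonging to the "use the preceding value" window is an assumption about how the boundary months were handled.

```python
import numpy as np

NO_DATA = 0  # hypothetical class codes: 0 no data, 1 other, 2 open water, 3 inundated forest
FILL_FROM_PRECEDING = {11, 12, 1, 2, 3, 4}  # November to April, treated as inclusive (assumption)


def fill_gaps(previous, current, following, month):
    """Fill no-data pixels in `current` from the neighbouring observation cycles."""
    filled = current.copy()
    gap = current == NO_DATA
    # Neighbours agree: use the shared value
    agree = gap & (previous == following) & (previous != NO_DATA)
    filled[agree] = previous[agree]
    # Neighbours disagree: choose by the month of the current observation
    disagree = gap & (previous != following)
    source = previous if month in FILL_FROM_PRECEDING else following
    filled[disagree] = source[disagree]  # stays NO_DATA if the chosen neighbour is also missing
    return filled


prev_cycle = np.array([1, 3, 2])
this_cycle = np.array([0, 0, 2])
next_cycle = np.array([1, 1, 3])
filled_january = fill_gaps(prev_cycle, this_cycle, next_cycle, month=1)  # [1, 3, 2]
filled_june = fill_gaps(prev_cycle, this_cycle, next_cycle, month=6)     # [1, 1, 2]
```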

2.10. Evaluation of Classification Performance

Similar to previous attempts to map inundation across the Amazon Basin [9,11,12], a conventional accuracy assessment was not possible because collecting representative ground-truth data over such a large and challenging landscape is infeasible. Equally, unlike studies mapping inundation within herbaceous wetlands, we could not use higher-resolution images as pseudo ground-truth points [55], because the dense forest canopy masks the ground beneath in both optical imagery and higher-resolution radar imagery (i.e., C-band and X-band imagery). Instead, we evaluated the RadWet-L classified outputs by examining a set of random points against the original input ALOS-2 PALSAR-2 imagery. Whether each classification was correct was judged using our knowledge of inundation patterns across the basin, alongside our field observations from previous work [28].

This evaluation was applied to twelve 1° × 1° ALOS-2 PALSAR-2 tiles distributed across the study area, each acquired at a different time during the hydrological year. Each tile was populated with 1000 random points; points falling in areas of no data were removed, giving a total visual evaluation dataset of 11,162 points: 1462 points for Inundated Forest, 569 points for Open Water, and 9131 points for other landcover.
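The point-sampling step can be sketched as follows, assuming a hypothetical no-data code of 0 in the classified tile. Points are drawn with replacement here, so a pixel may occasionally be sampled twice; whether the original points were placed on pixel centres or in map coordinates is not stated, so this is only an approximation.

```python
import numpy as np

NO_DATA = 0  # hypothetical no-data class code


def sample_evaluation_points(class_map, n_points=1000, seed=0):
    """Draw random pixel locations in a tile and drop those that fall on no-data."""
    rng = np.random.default_rng(seed)
    rows = rng.integers(0, class_map.shape[0], size=n_points)
    cols = rng.integers(0, class_map.shape[1], size=n_points)
    keep = class_map[rows, cols] != NO_DATA
    return rows[keep], cols[keep]


# Hypothetical tile whose left half has no data
class_map = np.zeros((100, 100), dtype=int)
class_map[:, 50:] = 1
rows, cols = sample_evaluation_points(class_map)
```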

The resulting counts of true and false positives and negatives were used to calculate accuracy assessment metrics per class. Confidence intervals of the accuracy assessment metrics were generated following the bootstrapping method of Bunting et al. [55] and Murray et al. [56], whereby 1000 iterations, each selecting 50% of the total validation dataset, were used to estimate the variance of the classification evaluation statistics. An area-based confidence interval for open water and inundated forest was then defined for each class from the errors of commission and omission, following the equations of Bunting et al. [55].

This information was subsequently used to report estimated inundation extents with their associated 95% confidence intervals.
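The per-class metrics and the bootstrap interval can be sketched as below. The F1 score, error of omission (FN / (TP + FN)) and error of commission (FP / (TP + FP)) follow their standard definitions; drawing each 50% subsample without replacement and reporting the 2.5th and 97.5th percentiles are assumptions, and the area-based interval equations of Bunting et al. [55] are not reproduced.

```python
import numpy as np


def confusion_counts(y_true, y_pred, cls):
    """True positives, false positives and false negatives for one class versus the rest."""
    tp = int(np.sum((y_pred == cls) & (y_true == cls)))
    fp = int(np.sum((y_pred == cls) & (y_true != cls)))
    fn = int(np.sum((y_pred != cls) & (y_true == cls)))
    return tp, fp, fn


def f1_for_class(y_true, y_pred, cls):
    tp, fp, fn = confusion_counts(y_true, y_pred, cls)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else float("nan")


def omission_commission(y_true, y_pred, cls):
    """Errors of omission FN/(TP+FN) and commission FP/(TP+FP) for one class."""
    tp, fp, fn = confusion_counts(y_true, y_pred, cls)
    return fn / (tp + fn), fp / (tp + fp)


def bootstrap_f1_interval(y_true, y_pred, cls, n_iter=1000, frac=0.5, seed=0):
    """2.5th and 97.5th percentiles of F1 over repeated random 50% subsamples."""
    rng = np.random.default_rng(seed)
    n = len(y_true)
    k = int(round(frac * n))
    scores = np.empty(n_iter)
    for i in range(n_iter):
        idx = rng.choice(n, size=k, replace=False)  # without replacement (assumption)
        scores[i] = f1_for_class(y_true[idx], y_pred[idx], cls)
    return np.percentile(scores, [2.5, 97.5])
```

With the 11,162 evaluation points, each iteration of this sketch would draw 5581 points.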

To supplement this evaluation procedure, we also compared RadWet-L time series estimates of inundation extent against other products that are indicative of inundation at the basin and sub-basin scale (detailed in Sections 2.10.1–2.10.4).

2.10.1. Comparison against NASA-GFZ GRACE-FO

An Amazon Basin-wide comparison was made between RadWet-L classified total wetted area for each ALOS-2 PALSAR-2 orbit cycle and the NASA-GFZ Gravity Recovery and Climate Experiment Follow-On (GRACE-FO) Gravity Mass Anomaly Dataset [57]. Changes in gravity anomaly are strongly controlled by changes in terrestrial water storage, measured as water equivalent thickness [57]. Although these data are provided at a coarse spatial scale (native resolution of 3° × 3°), mass anomaly measurements and water equivalent thickness are calculated as a monthly product for the entire basin region.

To compare the GRACE-FO mass anomaly measurements with the classified inundation extent, the areas of inundated forest and open water were summed into a single total wetted area for each classified orbit cycle. As ALOS-2 PALSAR-2 acquisitions for the whole basin span a period of 14 days, the midpoint between the start and end dates of acquisition was calculated. Where this date was within 10 days of a GRACE-FO measurement, the mass anomaly and classified total wetted area were compared.
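The date matching can be sketched with `pandas.merge_asof`, which pairs each orbit-cycle mid-date with the nearest GRACE-FO date and discards pairs more than 10 days apart; `scipy.stats.pearsonr` then gives the correlation. The column names (`start`, `end`, `wetted_km2`, `date`, `lwe_thickness`) and the values are hypothetical.

```python
import pandas as pd
from scipy.stats import pearsonr


def match_cycles_to_grace(cycles, grace, tolerance_days=10):
    """Pair each orbit cycle with the GRACE-FO value nearest its mid-date.

    cycles: columns 'start', 'end' (datetime64) and 'wetted_km2'.
    grace: columns 'date' (datetime64) and 'lwe_thickness'.
    Pairs more than `tolerance_days` apart are dropped.
    """
    cycles = cycles.copy()
    cycles["mid"] = cycles["start"] + (cycles["end"] - cycles["start"]) / 2
    matched = pd.merge_asof(
        cycles.sort_values("mid"),
        grace.sort_values("date"),
        left_on="mid",
        right_on="date",
        direction="nearest",
        tolerance=pd.Timedelta(days=tolerance_days),
    )
    return matched.dropna(subset=["lwe_thickness"])


cycles = pd.DataFrame({
    "start": pd.to_datetime(["2020-01-01", "2020-02-12", "2020-03-25", "2020-05-06"]),
    "wetted_km2": [1.0, 2.0, 3.0, 4.0],
})
cycles["end"] = cycles["start"] + pd.Timedelta(days=13)
grace = pd.DataFrame({
    "date": pd.to_datetime(["2020-01-16", "2020-02-15", "2020-03-16", "2020-04-16", "2020-05-16"]),
    "lwe_thickness": [10.0, 20.0, 25.0, 35.0, 40.0],
})
matched = match_cycles_to_grace(cycles, grace)
r, p = pearsonr(matched["wetted_km2"], matched["lwe_thickness"])
```

Here the March cycle (mid-date 31 March) is more than 10 days from both neighbouring GRACE-FO dates and is therefore excluded.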

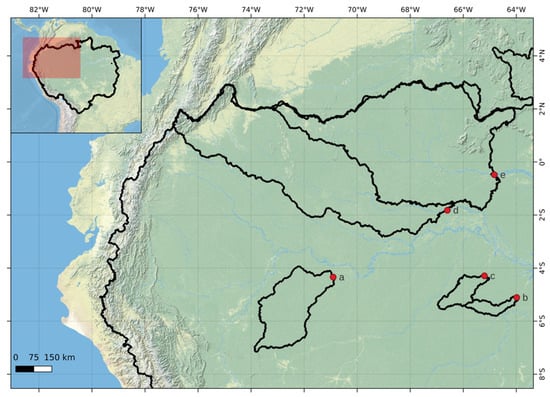

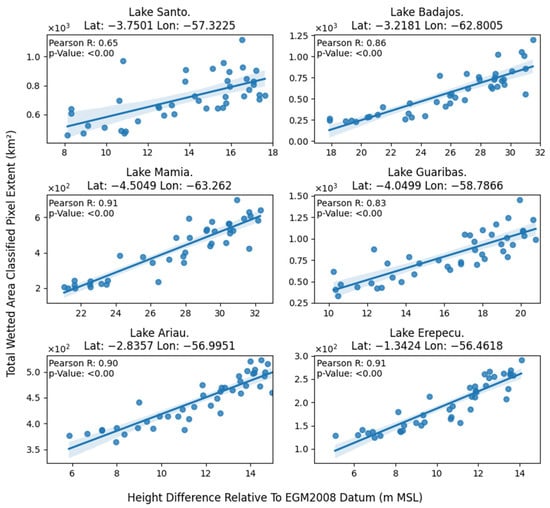

2.10.2. Comparisons against USDA G-REALM

The RadWet-L-derived estimates of inundation extent were compared against water level height data from the USDA Global Reservoirs and Lakes Monitor (G-REALM) lake/reservoir monitoring programme [58] at six locations within the central Amazon region. As G-REALM measures lake levels through radar altimetry, the upslope contributing area of each lake was defined using HydroSHEDS level 6 and 7 sub-basins [45] to enable comparison with the RadWet-L classified products. These contributing areas (Figure 5) were then used to mask classified outputs whose middle ALOS-2 PALSAR-2 ScanSAR acquisition date was within 10 days of a G-REALM lake water height measurement. Pearson correlation was used to quantify the relationship between classified sub-basin total wetted area (the sum of inundated forest and open water) and lake water height.
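Masking a classified output with a contributing area and summing the wetted pixels can be sketched as follows. The class codes are hypothetical, and the fixed 50 m × 50 m pixel area assumes a projected grid; on a geographic (latitude/longitude) grid, pixel area varies with latitude and would need to be computed per row.

```python
import numpy as np

# Hypothetical class codes and pixel size (50 m pixels on a projected grid)
OPEN_WATER = 2
INUNDATED_FOREST = 3
PIXEL_AREA_KM2 = (50.0 * 50.0) / 1e6


def wetted_area_km2(class_map, basin_mask):
    """Total wetted area (open water + inundated forest) inside a contributing-area mask."""
    wet = np.isin(class_map, [OPEN_WATER, INUNDATED_FOREST]) & basin_mask
    return float(wet.sum()) * PIXEL_AREA_KM2


class_map = np.array([[2, 3], [1, 2]])
basin_mask = np.array([[True, True], [True, False]])
area = wetted_area_km2(class_map, basin_mask)  # two wet pixels inside the mask
```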

Figure 5.

Location of G-REALM satellite altimetry measurements on 6 lakes in the Central Amazon and the associated drainage basins defined from HydroSHEDS Level 6 and 7 basin products [45]. (a) Lake Badajos, (b) Lake Mamia, (c) Lake Guaribas, (d) Lake Santo, (e) Lake Ariau, and (f) Lake Erepecu. Basemap ESRI Physical.

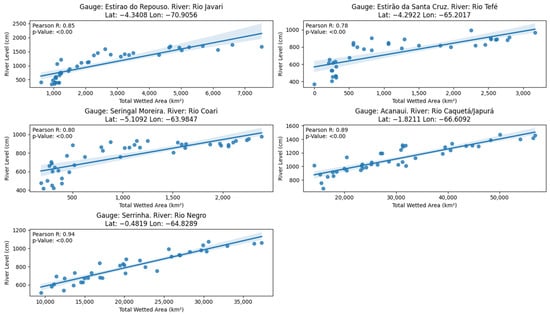

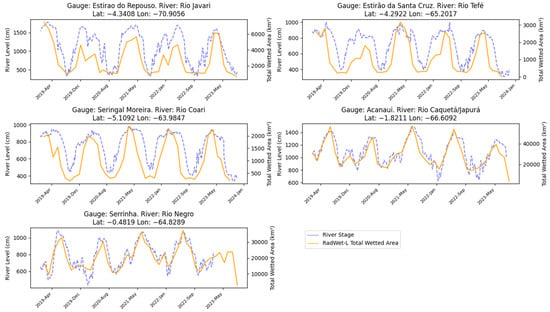

2.10.3. Comparison against In Situ Stage Data

The RadWet-L-derived inundation extent was compared with in situ river stage data at five locations within the Amazon River Basin (Figure 6), following [9]. River stage data were acquired from HIDROWEB, the online data portal of the Brazilian National Water and Sanitation Agency (ANA) [59].

Figure 6.

Locations of river stage gauge stations and associated upslope contributing areas. (a) Estirão Do Repouso, (b) Seringal Moreira, (c) Estirão Da Santa Cruz, (d) Acanaui, and (e) Serrinha. Data provided from [59,60].

These time series provide point measurements of river height between 2019 and 2023. To enable comparison with RadWet-L outputs, the upslope contributing area of each river stage gauge station (defined by the Global Runoff Data Centre [60]) was used to mask each RadWet-L classified output (Figure 6). The total inundated area within each contributing area could then be calculated for direct comparison with river stage height. Pearson correlation was used to quantify the relationship between RadWet-L-derived inundation extent and river stage height on the dates of the ALOS-2 PALSAR-2 image acquisitions.

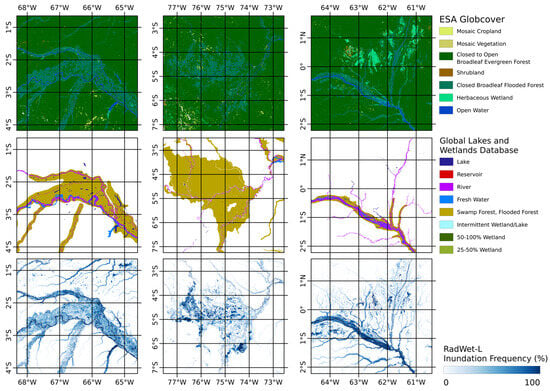

2.10.4. Comparison against Other Mapping Approaches

Previous studies have sought to map the minimum and maximum extent of inundation within the Amazon River Basin using JERS-1 SAR [10,11], ALOS-1 PALSAR-1 ScanSAR [12], and ALOS-2 PALSAR-2 ScanSAR [9] data at comparable spatial resolutions (50–100 m). A direct comparison of mapped minimum and maximum inundation extent was made for the period 2019 to 2023 for the whole Amazon Basin.

Maximum inundation extent was defined as pixels where the number of inundated observations between 2019 and 2023 exceeded the 5th percentile (2 observations) of valid observations (maximum 43). Minimum inundation extent was defined as pixels where the number of inundated observations exceeded the 95th percentile (41 observations) of valid observations (usually 43).

Despite the data-filling procedure, some areas of no data remain for certain observation cycles, usually visible as striping between orbit swaths. In these areas, the number of valid observations through time was less than the 43 of the full time series. Minimum inundation was therefore defined by the 95th percentile of the number of valid observation cycles for each pixel.
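The per-pixel minimum and maximum extent rules can be sketched with NumPy, assuming boolean stacks of shape (cycles, rows, cols). Expressing the thresholds as 5% and 95% of each pixel's own number of valid cycles follows the text; how the observation counts quoted in parentheses were rounded is not stated, so the strict comparison against the unrounded fraction is an assumption.

```python
import numpy as np


def min_max_extent(inundated, valid, low_frac=0.05, high_frac=0.95):
    """Maximum and minimum inundation masks from a stack of classified cycles.

    inundated, valid: boolean arrays of shape (cycles, rows, cols); inundated must be
    False wherever valid is False.
    """
    count = inundated.sum(axis=0)
    n_valid = valid.sum(axis=0)
    has_data = n_valid > 0
    max_extent = has_data & (count > low_frac * n_valid)
    min_extent = has_data & (count > high_frac * n_valid)
    return max_extent, min_extent


# Hypothetical 43-cycle stack for four pixels
inundated = np.zeros((43, 1, 4), dtype=bool)
valid = np.ones((43, 1, 4), dtype=bool)
inundated[:3, 0, 0] = True    # inundated in 3 of 43 cycles
inundated[:42, 0, 1] = True   # inundated in 42 of 43 cycles
inundated[:2, 0, 2] = True    # inundated in 2 of 43 cycles
valid[20:, 0, 3] = False      # only 20 valid cycles (e.g. swath striping)...
inundated[:20, 0, 3] = True   # ...all of them inundated
max_ext, min_ext = min_max_extent(inundated, valid)
```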

Although studies mapping inundation within the Amazon Basin have covered different periods (1996 [10,11], 2006–2010 [12], 2014–2017 [9], and 2019–2023 for this study), comparing mapped extents is still informative: the spatial patterns should be similar, and agreement builds confidence in the mapping approach of this study.

3. Results

3.1. Evaluation of Classification Performance

Table 3 summarises classification performance metrics calculated from a combined error matrix including all pseudo ground-truth points for all classes (derived from visual interpretation of the input ALOS-2 PALSAR-2 imagery combined with field-based observations from previous work [28], as outlined in Section 2.10). The evaluation showed that RadWet-L performs consistently in mapping inundation in the Amazon Basin, with an overall agreement of 97.0% (95% CI: 96.5–97.4%). RadWet-L performed particularly well in the classification of open water, with an F1 score of 0.97 (CI: 0.96–0.99). The main source of error was false positives caused by errors in the "unsuitable" land cover mask (Sections 2.3.4 and 2.3.5), which led to relatively smooth, low-backscatter targets such as grasslands being classified as open water. There was very little confusion between open water and inundated forest. The F1 score for inundated forest was 0.90 (CI: 0.88–0.91). The main source of error was underestimation of inundation extent, indicated by a relatively high error of omission (15.8%). This omission tended to occur at the floodwater edge, where the signal (an increase in backscatter due to inundation) is less pronounced.

Table 3.

Summary of Classifier Performance.

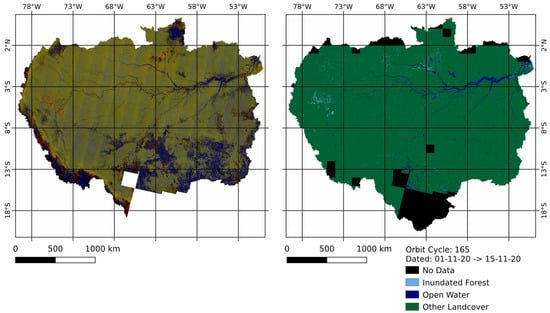

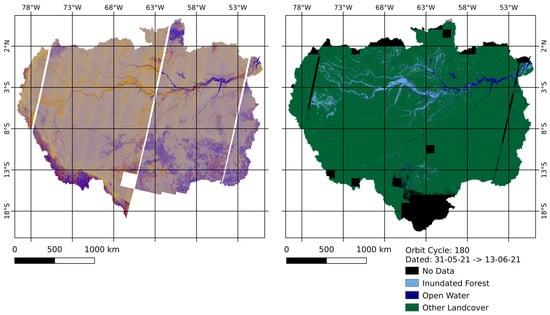

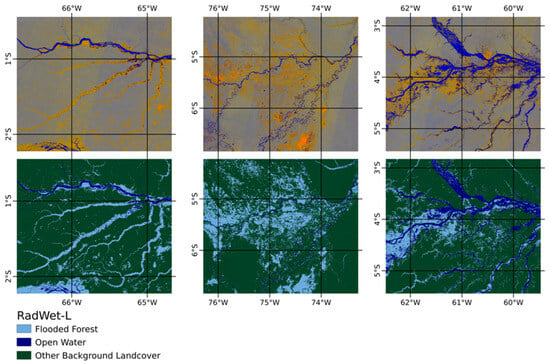

3.2. Classification Outputs

RadWet-L enables high-frequency mapping of inundation using serial L-band radar observations. Figures 7 and 8 show example maps of open water and inundated forest across the Amazon Basin for November 2020 (dry season) and June 2021 (wet season). In addition to providing basin-wide estimates of inundation extent, RadWet-L captures fine-scale hydrological features (Figure 9), revealing inundation patterns surrounding relatively minor tributary channels, as well as complex anabranching patterns and confluences.

Figure 7.

Classified output over the Amazon River Basin from ALOS-2 PALSAR-2 orbit cycle 165, acquired between 01/11/20 and 15/11/20 in low water conditions.

Figure 8.

Classified output over the Amazon River Basin from ALOS-2 PALSAR-2 orbit cycle 180, acquired between 31/05/21 and 13/06/21 in high water conditions.

Figure 9.

Examples of the RadWet-L classified outputs from ALOS-2 PALSAR-2 orbit cycle 180, acquired between 31/05/21 and 13/06/21, demonstrating the ability of RadWet-L to detect fine-scale inundation features.

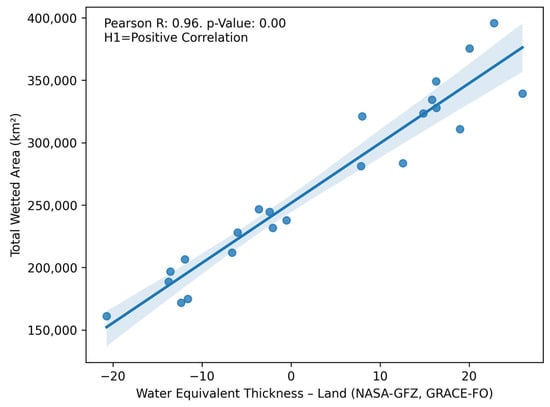

3.3. Comparison with GRACE-FO

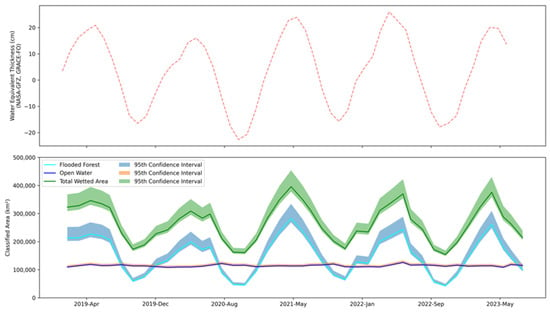

A high correlation (R = 0.96, p < 0.01) was found between RadWet-L mapped inundation extent and GRACE-FO water equivalent thickness [57] at the whole-basin level (Figures 10 and 11). This indicates that RadWet-L captures the temporal fluctuations in inundation over the hydrological year (Figure 11).

Figure 10.

Pearson R correlation between RadWet-L total wetted area classified extent and GRACE-FO Gravity Anomaly [57] where measurement and observation dates are within 10 days.

Figure 11.

Comparison between NASA-GFZ GRACE-FO Gravity Anomaly (Water Equivalent Thickness) [57] from January 2019 to June 2023 (top) and the classified total wetted extent (Inundated Forest and Open Water) between January 2019 and 1st October 2023 as measured by RadWet-L (bottom).

At the basin-wide scale, the extent of open water remains relatively static over the hydrological year (Figure 11). Conversely, inundated forest extent varied considerably, with dry-to-wet season changes in extent of the order of 285%.

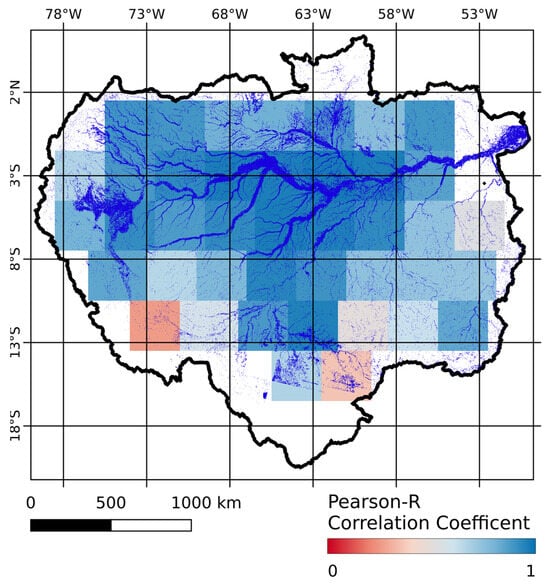

The RadWet-L mapped total wetted area is also highly correlated with GRACE-FO water equivalent thickness across the Amazon River Basin at the GRACE-FO native resolution of 3° × 3° (Figure 12). Figure 12 shows the Pearson's R between RadWet-L and GRACE-FO for each cell across the Amazon Basin, with the 2019–2023 maximum total wetted area overlaid for reference. The correlation is generally high, exceeding 0.7 (p < 0.01) where RadWet-L detects inundation. Areas of lower correlation are usually herbaceous-dominated areas, where the ALOS-2 PALSAR-2 ScanSAR system is unable to map inundation.
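Computing one correlation coefficient per 3° × 3° cell can be vectorised in NumPy, assuming both products have already been aggregated to the same grid and matched in time as arrays of shape (time, rows, cols). This is a sketch of the standard Pearson formula with synthetic inputs, not the code used to produce Figure 12.

```python
import numpy as np


def per_cell_pearson(a, b):
    """Pearson's r along the time axis for every grid cell.

    a, b: arrays of shape (time, rows, cols), e.g. RadWet-L wetted area and
    GRACE-FO water equivalent thickness per cell.
    """
    a_anom = a - a.mean(axis=0)
    b_anom = b - b.mean(axis=0)
    num = (a_anom * b_anom).sum(axis=0)
    den = np.sqrt((a_anom ** 2).sum(axis=0) * (b_anom ** 2).sum(axis=0))
    with np.errstate(invalid="ignore", divide="ignore"):
        # NaN where either series is constant (e.g. no inundation ever mapped)
        return num / den


# Synthetic 5-step series for three cells
t = np.arange(1.0, 6.0)
a = np.zeros((5, 1, 3))
b = np.zeros((5, 1, 3))
a[:, 0, 0], b[:, 0, 0] = t, 2 * t + 1   # perfectly correlated
a[:, 0, 1], b[:, 0, 1] = t, -t          # perfectly anti-correlated
b[:, 0, 2] = t                          # a is constant in this cell
r = per_cell_pearson(a, b)
```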

Figure 12.

Pearson-R correlation coefficient calculated between GRACE-FO and RadWet-L mapped total wetted area at 3° × 3° native GRACE-FO resolution across the Amazon Basin [57]. RadWet-L 2019–2023 maximum total inundation extent is overlaid to show main areas of inundation within the basin.

The annual median date of peak high water measured by RadWet-L was April 27th, with a mean date of peak high water on the 6th of May. Both these estimated dates of peak high water occurred earlier than measured by GRACE-FO, with a difference of 19 and 10 days, respectively.

The annual median date of low water measured by RadWet-L was the 6th of November, with a mean date of the 28th of October. Both are later in the hydrological year than the GRACE-FO estimates, for which the median and mean dates of low water were both the 16th of October.
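Annual median and mean dates of this kind can be derived from a wetted-area time series with pandas, as sketched below. Grouping by calendar year is an assumption; it works for peaks in April to May and troughs in October to November, but a mean day of year would be misleading for extremes that straddle 1 January. The series values are hypothetical.

```python
import pandas as pd


def annual_extreme_day_of_year(extent, kind="max"):
    """Median and mean day of year of each calendar year's maximum (or minimum) extent."""
    by_year = extent.groupby(extent.index.year)
    dates = by_year.idxmax() if kind == "max" else by_year.idxmin()
    doy = pd.Series(pd.DatetimeIndex(dates.to_numpy()).dayofyear)
    return float(doy.median()), float(doy.mean())


extent = pd.Series(
    [1.0, 5.0, 0.0, 1.0, 6.0, 0.0],
    index=pd.to_datetime(["2020-01-15", "2020-04-20", "2020-10-30",
                          "2021-01-15", "2021-05-01", "2021-11-05"]),
)
peak = annual_extreme_day_of_year(extent, "max")    # days 111 and 121
trough = annual_extreme_day_of_year(extent, "min")  # days 304 and 309
```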

3.4. Comparison with G-REALM

At the sub-basin level, RadWet-L inundation extent was highly correlated with water levels recorded by satellite altimetry, with Pearson's R values ranging from 0.83 to 0.91 (p < 0.01) at five of the six sub-basins examined (Figure 13). The correlation for the Rio Marau sub-basin was comparatively low (R = 0.65, p < 0.01).

Figure 13.

Comparison between RadWet-L total wetted area classified outputs with G-REALM satellite altimetry measurements [58] over 6 candidate sub-basins within the larger Amazon Basin.

3.5. Comparison with In Situ River Stage Data

The total wetted area measured by RadWet-L was highly correlated with river stage (Figures 14 and 15), with Pearson's R values between 0.78 and 0.94 (p < 0.01). This gives confidence that RadWet-L captures temporal patterns of inundation. At all gauge stations, the total wetted area measured by RadWet-L closely tracks river stage height (Figure 15). RadWet-L also captured isolated changes in inundation, such as the fall in river stage at the Estirão Do Repouso gauging station in February 2022 and the rise in river stage at the Acanaui gauging station in November 2019.

Figure 14.

Comparison between RadWet-L total wetted area and in situ river stage height [59] at 5 locations within the Amazon River Basin.

Figure 15.

In situ measurements of river stage height [59] at 5 gauging stations within the Amazon River Basin between 2019 and 2023, with RadWet-L total wetted area.

3.6. Comparison with Other Mapping Approaches

At the Amazon Basin scale, RadWet-L applied over 2019–2023 estimated a maximum inundation extent of 655,788 km² (95% CI: 622,996–639,350 km²), of which open water accounts for 170,129 km² (95% CI: 161,622–179,913 km²) and inundated forest for 485,659 km² (95% CI: 461,374–513,588 km²). This overall extent is comparable with those found in previous studies, including Hess et al. [11] (overall inundation extent: 629,453 km², 1996), Chapman et al. [12] (overall inundation extent: 568,064 km², 2006–2010), and Rosenqvist et al. [9] (overall inundation extent: 538,699 km², 2014–2017) (Table 4). Both Hess et al. [11] and Rosenqvist et al. [9] include a Submerged Vegetation class, defined as vegetation that is fully submerged during part of the hydrological year [9]. This land cover was not treated as a separate class in this study and would most likely be classified as open water.

Table 4.

Comparison between RadWet-L-classified maximum and minimum Open Water, Inundated Forest and Total Wetted Area and other comparable studies mapping inundation within the Amazon River Basin.

Compared with other commonly used global wetland products, such as the Global Lakes and Wetlands Database [61,62], and global land cover classifications, such as the European Space Agency GlobCover [42], RadWet-L shows good agreement. However, RadWet-L maps wetland features at a finer spatial scale and identifies areas that both of these products miss (Figure 16).

Figure 16.

Comparison between ESA GlobCover [63] (top), the Global Lakes and Wetlands Database [61,62] (centre), and RadWet-L Inundation Frequency (2019–2023) (bottom).

4. Discussion

RadWet-L is an automated algorithm that can be applied to a time series of imagery and can therefore be used to reconstruct the history of inundation extent within and between hydrological years, including in both wet and dry conditions. For the Amazon Basin, the temporal variations in inundation extent detected over the 2019–2023 study period were closely related to other measurements, such as NASA-GFZ GRACE-FO water equivalent thickness. This indicates that RadWet-L could be used to detect responses to climatological and hydrological events that affect the hydrological regime of the Amazon and its sub-basins, such as strong El Niño and La Niña events.

The classification evaluation reported an overall agreement of 97% (95% CI: 96.5–97.4%) against the input ALOS-2 PALSAR-2 ScanSAR imagery. Most errors were false negatives, specifically, areas of inundated forest wrongly classified as other landcover (i.e., not inundated). These were generally observed at the boundary between inundated and non-inundated areas, where mixed pixels weaken the double-bounce backscatter signal. In many instances, a human interpreter could identify these areas as inundated forest, but only by relying on contextual information, such as proximity to a river channel or tributary, or the shape and landscape setting of the inundated forest feature. This was particularly the case for long, thin features representing small river channels. This contextual knowledge cannot readily be included in the XGBoost classifier used in RadWet-L. Future work should consider Convolutional Neural Network (CNN) deep learning classifiers, which are better suited to capturing contextual information and could improve classification performance [64]. However, it is also important to weigh the costs and benefits of more computationally demanding approaches against our responsibility to provide energy-efficient solutions [65].

Nevertheless, given their complexity, wetland classification maps are unlikely to be perfect and it is therefore important to properly measure and quantify confidence. Following the approach used in the evaluation of the Global Mangrove Watch products [55], we calculated accuracy confidence intervals. In doing so, users can interpret RadWet-L-generated maps and data products in the context of the supplied accuracy scores and related confidence intervals. We recommend that this becomes common practice for remote sensing analysts.

RadWet-L was applied to ALOS-2 PALSAR-2 ScanSAR imagery acquired with a nominal revisit period of 42 days. This temporal granularity is likely to be less suitable for capturing fluctuations in inundation in more rapidly responding catchments, particularly in drier environments where Hortonian overland flow predominates (i.e., rainfall is less likely to infiltrate the soil and more likely to move rapidly into stream and river channels), leading to flashy responses to rainfall events. Additionally, with a 42-day revisit, the peak high or low water extent for a given region may not coincide exactly with an ALOS-2 PALSAR-2 overpass in most years. This is likely to account for some of the differences in the timing of high and low water peaks relative to the monthly GRACE-FO observations. Applying RadWet-L to data from the upcoming NASA-ISRO NISAR mission (L-band and S-band; planned launch in 2024), with a 12-day revisit time, could allow these dynamics to be captured more accurately.

Some satellite observation systems offer higher-frequency mapping of inundation extent. For instance, [17] used the L-band signal from the Cyclone Global Navigation Satellite System (CYGNSS) satellite constellation to provide monthly inundation maps over regions including the Pantanal (Bolivia, Brazil) and Sudd (South Sudan) wetlands. However, at a resolution of 0.01° (∼1111 m), this approach cannot capture fine-scale hydrological features [18]. Conversely, RadWet-L, with the 50 m ALOS-2 PALSAR-2 ScanSAR mapping unit, can map fine-scale features such as headwater rivers, smaller tributary systems, and floodplain features such as the anabranching channels shown in Figure 9. These features play an important role in the movement of water through basins [66], so capturing their inundation dynamics can improve our understanding of the hydrology of regions such as the Amazon Basin. Furthermore, smaller headwaters and tributaries are likely to act as important habitats and refugia for approximately half of the aquatic species in the Amazon, yet they are understudied relative to larger channels [5]; understanding their spatial extent and temporal dynamics can therefore support efforts to conserve and protect these important habitats [5,67].

Many widely used global wetland databases and maps (including the Global Lakes and Wetlands Database and the Global Surface Water system) do not adequately capture the extent of inundated vegetation [4]. RadWet-L is designed to detect both open water and inundated forest. The contribution of inundated forest to the total inundation extent of the Amazon Basin is considerable, representing an estimated 9.5% of inundation in the dry season and 74% of the total observed inundation during the wet season. This proportion of inundated forest detected by RadWet-L for the Amazon Basin is similar to the proportions of inundated vegetation reported in other studies of herbaceous-dominated tropical wetland landscapes using C-band radar, including Western Zambia [24,25] and the Upper Rupununi, Guyana. If tropical wetland inundation is to be mapped comprehensively, inundated herbaceous vegetation must also be taken into account. Future work should consider harmonising information from L- and C-band radar to map both inundated forest and inundated herbaceous vegetation, working towards a comprehensive inundation mapping system that provides accurate inventories of the world’s tropical wetlands.

The ability to map wetland inundation dynamics in large tropical river basins such as the Amazon can transform our ability to monitor wetland ecosystems and biodiversity hotspots. Inundated forests in the Amazon are relatively sparsely populated and therefore remain in a near-natural state, largely untouched by human activity, and are strongly associated with high biodiversity [5,68]. However, they are highly vulnerable to a range of pressures, including land cover change and pollution [5]. By mapping inundation dynamics at high spatial and temporal resolution, RadWet-L can provide vital information on how these important habitats are changing.

Furthermore, the anaerobic conditions experienced during periods of inundation play a substantial role in governing emissions of greenhouse gases such as carbon dioxide and methane. This effect is especially pronounced in inundated vegetation, and tropical regions play a disproportionately large role in global emissions [69]. Indeed, the Amazon floodplain is the largest natural source of methane in the tropics, largely mediated by forested wetlands [70]. RadWet-L could provide unprecedented information on the inundation dynamics of these wetlands and could therefore be used to inform models of greenhouse gas emissions, following an approach similar to that of [17], who used improved inundation maps for regions in East Africa and South America to drive methane emission estimates with the WetCHARTS model [71].

In this study, RadWet-L was calibrated and evaluated using ALOS-2 PALSAR-2 ScanSAR data from 2019 to 2023. Corroboration with GRACE-FO data demonstrates that RadWet-L can depict both intra- and inter-annual variations in inundation, albeit over a relatively short timescale. The next step is to apply RadWet-L to the entire ALOS-2 PALSAR-2 ScanSAR archive, potentially generating data on inundation dynamics from 2014 to the present for a range of wetlands across the tropics. Analysis of historical ALOS PALSAR ScanSAR data would also allow the dynamics of the 2007–2010 period to be included. In doing so, we may be able to unpick the relationship between longer-term inundation dynamics and longer-term climatic factors, including ENSO cycles, and understand how climate change might affect tropical wetlands. For instance, a longer time series could be used to establish the long-term typical inundation extent, specifically the median wet- and dry-season extent. The extent recorded in each year could then be benchmarked against this baseline, revealing the impact of El Niño and La Niña events. This could identify areas with significant "excess" inundation and the resulting flood hazard, or areas particularly affected by drought conditions. Such information can help inform policy to safeguard wetland ecosystems and livelihoods.
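The benchmarking idea can be sketched with pandas. The season windows reuse the typical timing given in Section 2.9 (peak inundation April to July, peak dry conditions October to November); summarising each season by its median within each year, and taking the long-term baseline as the median of those annual values, are assumptions about how such a benchmark might be built. The extents below are hypothetical.

```python
import numpy as np
import pandas as pd

# Season windows follow the typical timing given in Section 2.9 (assumption)
WET_MONTHS = [4, 5, 6, 7]
DRY_MONTHS = [10, 11]


def seasonal_anomalies(extent):
    """Each year's seasonal median extent minus the long-term median of those values."""
    month = extent.index.month
    season = np.select([month.isin(WET_MONTHS), month.isin(DRY_MONTHS)],
                       ["wet", "dry"], default="")
    df = pd.DataFrame({"extent": extent.to_numpy(), "season": season,
                       "year": extent.index.year})
    df = df[df["season"] != ""]  # ignore observations outside both windows
    annual = df.groupby(["year", "season"])["extent"].median().unstack("season")
    baseline = annual.median()  # long-term typical wet- and dry-season extent
    return annual - baseline    # > 0: excess inundation; < 0: drier than typical


idx = pd.to_datetime(["2019-05-15", "2019-10-15", "2019-01-15",
                      "2020-05-15", "2020-10-15",
                      "2021-05-15", "2021-10-15"])
extent = pd.Series([10.0, 5.0, 7.0, 12.0, 5.0, 20.0, 2.0], index=idx)
anomalies = seasonal_anomalies(extent)
```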

5. Conclusions

RadWet-L can map inundation within forested wetlands using time series L-band radar imagery. In this instance, RadWet-L was applied and evaluated using 50 m ALOS-2 PALSAR-2 ScanSAR imagery, at approximately 42-day time steps, providing new insights into the temporal and spatial dynamics in inundation within the Amazon Basin at both inter- and intra-annual scales. This unprecedented granularity is potentially transformational for the way that we monitor forested wetlands for applications in food and water security, the protection of ecosystems, the sustainable use of wetlands, and carbon budgeting.

In this paper, RadWet-L was shown to perform consistently well over the 5.95 million km² area of the Amazon Basin, a region that includes a range of land covers and forest types. As such, RadWet-L may be transferable to other forested wetlands such as the Congo River Basin in Central Africa. In working towards a comprehensive wetland mapping system, future studies should also consider combining L- and C-band radar data, allowing both the forested and herbaceous inundated vegetation dynamics to be mapped.

Author Contributions

Conceptualization, G.O., A.H., P.B. and A.R.; methodology, G.O., A.H., P.B. and A.R.; software, G.O.; validation, G.O.; formal analysis, G.O. and A.H.; investigation, G.O.; resources, G.O. and P.B.; data curation, G.O. and A.R.; writing—original draft preparation, G.O. and A.H.; writing—review and editing, G.O., A.H., P.B. and A.R.; visualization, G.O.; supervision, A.H. and P.B.; project administration, A.H.; funding acquisition, A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Aberystwyth University’s AberDoc Programme and Aberystwyth University, Faculty of Earth and Life Sciences Research Boost award.

Data Availability Statement

Data are available from Zenodo (10.5281/zenodo.10566417) with scripts used for image classification available at GitHub (https://github.com/gro5-AberUni/ALOS-LBand accessed: 30 May 2024).

Acknowledgments

This work has been undertaken in part within the framework of the JAXA Kyoto & Carbon (K&C) Initiative. ALOS-2 PALSAR-2 data have been provided by JAXA EORC.

Conflicts of Interest

Author Ake Rosenqvist is the founder of the company solo Earth Observation (soloEO). The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Peng, S.; Lin, X.; Thompson, R.L.; Xi, Y.; Liu, G.; Hauglustaine, D.; Lan, X.; Poulter, B.; Ramonet, M.; Saunois, M.; et al. Wetland Emission and Atmospheric Sink Changes Explain Methane Growth in 2020. Nature 2022, 612, 477–482.

- Feng, L.; Palmer, P.I.; Parker, R.J.; Lunt, M.F.; Bösch, H. Methane Emissions Are Predominantly Responsible for Record-Breaking Atmospheric Methane Growth Rates in 2020 and 2021. Atmos. Chem. Phys. 2023, 23, 4863–4880.

- Lunt, M.F.; Palmer, P.I.; Lorente, A.; Borsdorff, T.; Landgraf, J.; Parker, R.J.; Boesch, H. Rain-Fed Pulses of Methane from East Africa during 2018–2019 Contributed to Atmospheric Growth Rate. Environ. Res. Lett. 2021, 16, 024021.

- Hardy, A.; Palmer, P.I.; Oakes, G. Satellite Data Reveal How Sudd Wetland Dynamics Are Linked with Globally-Significant Methane Emissions. Environ. Res. Lett. 2023, 18, 074044.

- Junk, W.J.; Soares, M.G.M.; Bayley, P.B. Freshwater Fishes of the Amazon River Basin: Their Biodiversity, Fisheries, and Habitats. Aquat. Ecosyst. Health Manag. 2007, 10, 153–173.

- Householder, J.E.; Wittmann, F.; Schöngart, J.; Piedade, M.T.F.; Junk, W.J.; Latrubesse, E.M.; Quaresma, A.C.; Demarchi, L.O.; Lobo, G.d.S.; de Aguiar, D.P.P.; et al. One Sixth of Amazonian Tree Diversity Is Dependent on River Floodplains. Nat. Ecol. Evol. 2024, 8, 901–911.

- Castello, L.; McGrath, D.G.; Hess, L.L.; Coe, M.T.; Lefebvre, P.A.; Petry, P.; Macedo, M.N.; Renó, V.F.; Arantes, C.C. The Vulnerability of Amazon Freshwater Ecosystems. Conserv. Lett. 2013, 6, 217–229.

- Correa, S.B.; Van Der Sleen, P.; Siddiqui, S.F.; Bogotá-Gregory, J.D.; Arantes, C.C.; Barnett, A.A.; Couto, T.B.A.; Goulding, M.; Anderson, E.P. Biotic Indicators for Ecological State Change in Amazonian Floodplains. Bioscience 2022, 72, 753–768.

- Rosenqvist, J.; Rosenqvist, A.; Jensen, K.; McDonald, K. Mapping of Maximum and Minimum Inundation Extents in the Amazon Basin 2014–2017 with ALOS-2 PALSAR-2 ScanSAR Time-Series Data. Remote Sens. 2020, 12, 1326.

- Hess, L.L.; Melack, J.M.; Affonso, A.G.; Barbosa, C.; Gastil-Buhl, M.; Novo, E.M.L.M. Wetlands of the Lowland Amazon Basin: Extent, Vegetative Cover, and Dual-Season Inundated Area as Mapped with JERS-1 Synthetic Aperture Radar. Wetlands 2015, 35, 745–756.

- Hess, L.L.; Melack, J.M.; Novo, E.M.L.M.; Barbosa, C.C.F.; Gastil, M. Dual-Season Mapping of Wetland Inundation and Vegetation for the Central Amazon Basin. Remote Sens. Environ. 2003, 87, 404–428.

- Chapman, B.; McDonald, K.; Shimada, M.; Rosenqvist, A.; Schroeder, R.; Hess, L. Mapping Regional Inundation with Spaceborne L-Band SAR. Remote Sens. 2015, 7, 5440–5470.

- Prigent, C.; Jimenez, C.; Bousquet, P. Satellite-Derived Global Surface Water Extent and Dynamics over the Last 25 Years (GIEMS-2). J. Geophys. Res. Atmos. 2020, 125, e2019JD030711.

- Jensen, K.; McDonald, K. Surface Water Microwave Product Series Version 3: A Near-Real Time and 25-Year Historical Global Inundated Area Fraction Time Series From Active and Passive Microwave Remote Sensing. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1402–1406.

- Aires, F.; Miolane, L.; Prigent, C.; Pham, B.; Fluet-Chouinard, E.; Lehner, B.; Papa, F. A Global Dynamic Long-Term Inundation Extent Dataset at High Spatial Resolution Derived through Downscaling of Satellite Observations. J. Hydrometeorol. 2017, 18, 1305–1325.

- Muro, J.; Strauch, A.; Heinemann, S.; Steinbach, S.; Thonfeld, F.; Waske, B.; Diekkrüger, B. Land Surface Temperature Trends as Indicator of Land Use Changes in Wetlands. Int. J. Appl. Earth Obs. Geoinf. 2018, 70, 62–71.

- Gerlein-Safdi, C.; Bloom, A.A.; Plant, G.; Kort, E.A.; Ruf, C.S. Improving Representation of Tropical Wetland Methane Emissions With CYGNSS Inundation Maps. Glob. Biogeochem. Cycles 2021, 35, e2020GB006890.

- Di Vittorio, C.A.; Georgakakos, A.P. Land Cover Classification and Wetland Inundation Mapping Using MODIS. Remote Sens. Environ. 2018, 204, 1–17.

- Twele, A.; Cao, W.; Plank, S.; Martinis, S. Sentinel-1-Based Flood Mapping: A Fully Automated Processing Chain. Int. J. Remote Sens. 2016, 37, 2990–3004.

- Martinis, S.; Plank, S.; Ćwik, K. The Use of Sentinel-1 Time-Series Data to Improve Flood Monitoring in Arid Areas. Remote Sens. 2018, 10, 583.

- Martinis, S.; Kersten, J.; Twele, A. A Fully Automated TerraSAR-X Based Flood Service. ISPRS J. Photogramm. Remote Sens. 2015, 104, 203–212.

- Martinis, S.; Twele, A.; Voigt, S. Towards Operational near Real-Time Flood Detection Using a Split-Based Automatic Thresholding Procedure on High Resolution TerraSAR-X Data. Nat. Hazards Earth Syst. Sci. 2009, 9, 303–314.

- Huang, W.; DeVries, B.; Huang, C.; Lang, M.W.; Jones, J.W.; Creed, I.F.; Carroll, M.L. Automated Extraction of Surface Water Extent from Sentinel-1 Data. Remote Sens. 2018, 10, 797.

- Oakes, G.; Hardy, A.; Bunting, P. RadWet: An Improved and Transferable Mapping of Open Water and Inundated Vegetation Using Sentinel-1. Remote Sens. 2023, 15, 1705.

- Hardy, A.; Ettritch, G.; Cross, D.E.; Bunting, P.; Liywalii, F.; Sakala, J.; Silumesii, A.; Singini, D.; Smith, M.; Willis, T.; et al. Automatic Detection of Open and Vegetated Water Bodies Using Sentinel 1 to Map African Malaria Vector Mosquito Breeding Habitats. Remote Sens. 2019, 11, 593.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. SAR-Based Detection of Flooded Vegetation—A Review of Characteristics and Approaches. Int. J. Remote Sens. 2018, 39, 2255–2293.

- Fleischmann, A.S.; Papa, F.; Fassoni-Andrade, A.; Melack, J.M.; Wongchuig, S.; Paiva, R.C.D.; Hamilton, S.K.; Fluet-Chouinard, E.; Barbedo, R.; Aires, F.; et al. How Much Inundation Occurs in the Amazon River Basin? Remote Sens. Environ. 2022, 278, 113099.

- Rosenqvist, Å.; Forsberg, B.R.; Pimentel, T.; Rauste, Y.A.; Richey, J.E. The Use of Spaceborne Radar Data to Model Inundation Patterns and Trace Gas Emissions in the Central Amazon Floodplain. Int. J. Remote Sens. 2002, 23, 1303–1328.

- Arnesen, A.S.; Silva, T.S.F.; Hess, L.L.; Novo, E.M.L.M.; Rudorff, C.M.; Chapman, B.D.; McDonald, K.C. Monitoring Flood Extent in the Lower Amazon River Floodplain Using ALOS/PALSAR ScanSAR Images. Remote Sens. Environ. 2013, 130, 51–61.

- Ferreira-Ferreira, J.; Silva, T.S.F.; Streher, A.S.; Affonso, A.G.; De Almeida Furtado, L.F.; Forsberg, B.R.; Valsecchi, J.; Queiroz, H.L.; De Moraes Novo, E.M.L. Combining ALOS/PALSAR Derived Vegetation Structure and Inundation Patterns to Characterize Major Vegetation Types in the Mamirauá Sustainable Development Reserve, Central Amazon Floodplain, Brazil. Wetl. Ecol. Manag. 2015, 23, 41–59.

- Ovando, A.; Tomasella, J.; Rodriguez, D.A.; Martinez, J.M.; Siqueira-Junior, J.L.; Pinto, G.L.N.; Passy, P.; Vauchel, P.; Noriega, L.; von Randow, C. Extreme Flood Events in the Bolivian Amazon Wetlands. J. Hydrol. Reg. Stud. 2016, 5, 293–308.

- De Resende, A.F.; Schöngart, J.; Streher, A.S.; Ferreira-Ferreira, J.; Piedade, M.T.F.; Silva, T.S.F. Massive Tree Mortality from Flood Pulse Disturbances in Amazonian Floodplain Forests: The Collateral Effects of Hydropower Production. Sci. Total Environ. 2019, 659, 587–598.

- Pinel, S.; Bonnet, M.P.; Da Silva, J.S.; Sampaio, T.C.; Garnier, J.; Catry, T.; Calmant, S.; Fragoso, C.R.; Moreira, D.; Motta Marques, D.; et al. Flooding Dynamics Within an Amazonian Floodplain: Water Circulation Patterns and Inundation Duration. Water Resour. Res. 2020, 56, e2019WR026081.

- Siqueira, P.; Hensley, S.; Shaffer, S.; Hess, L.; McGarragh, G.; Chapman, B.; Freeman, A. A Continental-Scale Mosaic of the Amazon Basin Using JERS-1 SAR. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2638–2644.

- Jensen, K.; McDonald, K.; Podest, E.; Rodriguez-Alvarez, N.; Horna, V.; Steiner, N. Assessing L-Band GNSS-Reflectometry and Imaging Radar for Detecting Sub-Canopy Inundation Dynamics in a Tropical Wetlands Complex. Remote Sens. 2018, 10, 1431.

- Rosenqvist, A.; Shimada, M.; Suzuki, S.; Ohgushi, F.; Tadono, T.; Watanabe, M.; Tsuzuku, K.; Watanabe, T.; Kamijo, S.; Aoki, E. Operational Performance of the ALOS Global Systematic Acquisition Strategy and Observation Plans for ALOS-2 PALSAR-2. Remote Sens. Environ. 2014, 155, 3–12.

- Rosenqvist, A.; Rebelo, L.M.; Costa, M. The ALOS Kyoto & Carbon Initiative: Enabling the Mapping, Monitoring and Assessment of Globally Important Wetlands. Wetl. Ecol. Manag. 2015, 23, 1.

- CEOS Analysis Ready Data. Available online: https://ceos.org/ard/index.html#specs (accessed on 18 January 2024).

- Microsoft Open Source; McFarland, M.; Emanuele, R.; Morris, D.; Augspurger, T. Microsoft/PlanetaryComputer: October 2022. 2022. Available online: https://zenodo.org/records/7261897 (accessed on 5 October 2023).

- Lehner, B.; Verdin, K.; Jarvis, A. New Global Hydrography Derived From Spaceborne Elevation Data. EOS Trans. Am. Geophys. Union 2008, 89, 93–94.

- Yamazaki, D.; Ikeshima, D.; Sosa, J.; Bates, P.D.; Allen, G.H.; Pavelsky, T.M. MERIT Hydro: A High-Resolution Global Hydrography Map Based on Latest Topography Dataset. Water Resour. Res. 2019, 55, 5053–5073.

- Buchhorn, M.; Lesiv, M.; Tsendbazar, N.E.; Herold, M.; Bertels, L.; Smets, B. Copernicus Global Land Cover Layers—Collection 2. Remote Sens. 2020, 12, 1044.

- Hansen, M.C.; Potapov, P.V.; Moore, R.; Hancher, M.; Turubanova, S.A.; Tyukavina, A.; Thau, D.; Stehman, S.V.; Goetz, S.J.; Loveland, T.R.; et al. High-Resolution Global Maps of 21st-Century Forest Cover Change. Science 2013, 342, 850–853.

- Small, D. Flattening Gamma: Radiometric Terrain Correction for SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3081–3093.

- Lehner, B.; Grill, G. Global River Hydrography and Network Routing: Baseline Data and New Approaches to Study the World’s Large River Systems. Hydrol. Process. 2013, 27, 2171–2186.

- Nobre, A.D.; Cuartas, L.A.; Hodnett, M.; Rennó, C.D.; Rodrigues, G.; Silveira, A.; Waterloo, M.; Saleska, S. Height Above the Nearest Drainage—A Hydrologically Relevant New Terrain Model. J. Hydrol. 2011, 404, 13–29.

- Zhou, X.; Chong, J.; Yang, X.; Li, W.; Guo, X. Ocean Surface Wind Retrieval Using SMAP L-Band SAR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 65–74.

- Shepherd, J.D.; Bunting, P.; Dymond, J.R. Operational Large-Scale Segmentation of Imagery Based on Iterative Elimination. Remote Sens. 2019, 11, 658.

- The Pandas Development Team. Pandas-Dev/Pandas: Pandas. Zenodo. 2020. Available online: https://zenodo.org/records/10426137 (accessed on 5 August 2023).

- Bunting, P.; Clewley, D.; Lucas, R.M.; Gillingham, S. The Remote Sensing and GIS Software Library (RSGISLib). Comput. Geosci. 2014, 62, 216–226.

- Sklearn. sklearn.preprocessing.StandardScaler: Scikit-Learn 1.3.2 Documentation. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.StandardScaler.html#sklearn.preprocessing.StandardScaler (accessed on 16 January 2024).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Sen, R.; Goswami, S.; Chakraborty, B. Jeffries-Matusita Distance as a Tool for Feature Selection. In Proceedings of the 2019 International Conference on Data Science and Engineering, ICDSE 2019, Patna, India, 26–28 September 2019; pp. 15–20.

- Bunting, P.; Rosenqvist, A.; Hilarides, L.; Lucas, R.M.; Thomas, N.; Tadono, T.; Worthington, T.A.; Spalding, M.; Murray, N.J.; Rebelo, L.M. Global Mangrove Extent Change 1996–2020: Global Mangrove Watch Version 3.0. Remote Sens. 2022, 14, 3657.

- Murray, N.J.; Phinn, S.R.; DeWitt, M.; Ferrari, R.; Johnston, R.; Lyons, M.B.; Clinton, N.; Thau, D.; Fuller, R.A. The Global Distribution and Trajectory of Tidal Flats. Nature 2018, 565, 222–225.

- Landerer, F.W.; Swenson, S.C. Accuracy of Scaled GRACE Terrestrial Water Storage Estimates. Water Resour. Res. 2012, 48, W04531.

- Birkett, C.M.; Ricko, M.; Beckley, B.D.; Yang, X.; Tetrault, R.L. G-REALM: A Lake/Reservoir Monitoring Tool for Drought Monitoring and Water Resources Management. In Fall Meeting 2017; American Geophysical Union: Washington, DC, USA, 2017; H23P-02.

- Agência Nacional de Águas. HIDROWEB. Available online: https://www.snirh.gov.br/hidroweb/mapa (accessed on 12 March 2024).

- GRDC. Watershed Boundaries of GRDC Stations (GRDC, 2011). Available online: https://grdc.bafg.de/GRDC/EN/02_srvcs/22_gslrs/222_WSB/watershedBoundaries.html (accessed on 18 March 2024).

- WWF. Global Lakes and Wetlands Database GLWD; GLWD Documentation; WWF: Gland, Switzerland, 2004.

- Lehner, B.; Döll, P. Development and Validation of a Global Database of Lakes, Reservoirs and Wetlands. J. Hydrol. 2004, 296, 1–22.

- Arino, O.; Perez, J.R.; Kalogirou, V.; Defourny, P.; Achard, F. Global Land Cover Map for 2009 (GlobCover 2009). ESA Living Planet Symposium. 2010. Available online: https://developers.google.com/earth-engine/datasets/catalog/ESA_GLOBCOVER_L4_200901_200912_V2_3 (accessed on 1 December 2023).