Deep Learning Hyperspectral Pansharpening on Large-Scale PRISMA Dataset

Abstract

1. Introduction

- A new large-scale dataset covering 262,200 km² for the qualitative assessment of deep neural models for HS image pansharpening. Compared with the datasets listed in Table 1, this dataset is considerably larger. It is collected from the PRISMA satellite, preprocessed, and used to retrain current state-of-the-art approaches for image pansharpening.

- An in-depth, statistically relevant comparison, in both quantitative and qualitative terms, of traditional machine-learning-free approaches and current deep learning approaches adapted to HS data, retrained, and tested on the newly proposed large-scale dataset.

| Dataset | Cardinality | Image Size (px) | Type | # of Bands | Wavelength Coverage |
|---|---|---|---|---|---|
| Pavia University | 1 | 610 × 610 | airborne | 103 | 430–838 nm |
| Pavia Center | 1 | 1096 × 1096 | airborne | 102 | 430–860 nm |
| Houston [33,38] | 1 | 349 × 1905 | airborne | 144 | 364–1046 nm |
| Chikusei [39] | 1 | 2517 × 2335 | airborne | 128 | 363–1018 nm |
| AVIRIS Moffett Field [18] | 1 | 37 × 79 | airborne | 224 | 400–2500 nm |
| Garons [18] | 1 | 80 × 80 | airborne | 125 | 400–2500 nm |
| Camargue [18] | 1 | 100 × 100 | airborne | 125 | 400–2500 nm |
| Indian Pines [40] | 1 | 145 × 145 | airborne | 224 | 400–2500 nm |
| Cuprite Mine | 1 | 400 × 350 | airborne | 185 | 400–2450 nm |
| Salinas | 1 | 512 × 217 | airborne | 202 (224) | 400–2500 nm |
| Washington Mall [29] | 1 | 1200 × 300 | airborne | 191 (210) | 400–2400 nm |
| Merced [33] | 1 | 180 × 180 | satellite | 134 (242) | 400–2500 nm |
| Halls Creek [30] | 1 | 3483 × 567 | satellite | 171 (230) | 400–2500 nm |
| OURS based on PRISMA | 190 | 1259 × 1225 | satellite | 203 (230) | 400–2505 nm |

2. Materials and Methods

2.1. Data

2.1.1. Data Cleaning Procedure

- a slight misalignment between the panchromatic image and the VNIR and SWIR cubes (the VNIR and SWIR cubes are assumed to be already aligned with each other);

- the presence of pixels marked as invalid by the Level-2D PRISMA preprocessing.
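The PAN/HS misalignment listed above can be illustrated with a minimal sketch. It assumes that the 5 m PAN image is first averaged down to the 30 m HS grid (the two differ by a factor of 6) and then compared with a single HS band by phase correlation. This is not the authors' procedure: it estimates only an integer shift on the 30 m grid, whereas dedicated tools such as AROSICS [43] perform robust subpixel co-registration. The function names `block_mean` and `phase_correlation_shift` are hypothetical.

```python
import numpy as np


def block_mean(img: np.ndarray, factor: int) -> np.ndarray:
    """Downsample a 2-D image by averaging non-overlapping factor x factor blocks."""
    h, w = img.shape
    h_c, w_c = h - h % factor, w - w % factor  # crop so both sides divide evenly
    blocks = img[:h_c, :w_c].reshape(h_c // factor, factor, w_c // factor, factor)
    return blocks.mean(axis=(1, 3))


def phase_correlation_shift(ref: np.ndarray, moving: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (row, col) translation that maps `ref` onto `moving`."""
    f_ref = np.fft.fft2(ref - ref.mean())
    f_mov = np.fft.fft2(moving - moving.mean())
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # keep only the phase information
    corr = np.abs(np.fft.ifft2(cross_power))  # ideally a single peak at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around).
    shifts = [p - n if p > n // 2 else p for p, n in zip(peak, corr.shape)]
    return int(shifts[0]), int(shifts[1])
```

In practice, the HS band used as reference would be one whose wavelength lies within the PAN range (400–700 nm), and the estimated shift would be applied to the PAN image before tiling; pixels flagged as invalid in the Level-2D product would need to be handled separately.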

2.1.2. Full Resolution and Reduced Resolution Datasets

- Full Resolution (FR): Since no reference images are available at this scale, this dataset cannot be used for model training, only for evaluation. It is made of pairs consisting of the PAN image and the HS cube at their original resolutions.

- Reduced Resolution (RR): This dataset was created both for full-reference evaluation, since it provides reference bands alongside the input HS and PAN images, and for training the deep learning models. It is made of triplets consisting of the degraded PAN image, the degraded HS cube, and the original HS cube used as reference.
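The construction of the RR triplets can be sketched in the spirit of Wald's protocol [49]: both inputs are degraded by the PAN/HS resolution ratio (30 m / 5 m = 6, consistent with 7554 = 6 × 1259 in the PRISMA cube sizes), so the PAN image moves from 5 m to 30 m, the HS cube from 30 m to 180 m, and the original 30 m cube becomes the reference. This is a minimal sketch under stated assumptions: plain block averaging is used as a stand-in for the sensor-matched low-pass filter normally applied before decimation, and the exact degradation used by the authors is not reproduced here. `SCALE`, `degrade`, and `make_rr_triplet` are hypothetical names.

```python
import numpy as np

SCALE = 6  # 30 m (HS) / 5 m (PAN) ground sampling distance ratio


def degrade(img: np.ndarray, factor: int = SCALE) -> np.ndarray:
    """Reduce the resolution of a (bands, H, W) array by block averaging.

    Assumption: block averaging stands in for the sensor-matched
    low-pass filtering usually applied before decimation.
    """
    b, h, w = img.shape
    if h % factor or w % factor:
        raise ValueError("spatial size must be divisible by the scale factor")
    blocks = img.reshape(b, h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(2, 4))


def make_rr_triplet(pan: np.ndarray, hs: np.ndarray):
    """Build a (PAN_RR, HS_RR, reference) triplet from a full-resolution pair.

    pan: (1, 6H, 6W) panchromatic patch at 5 m
    hs:  (N, H, W) hyperspectral patch at 30 m, with H and W divisible by 6
    """
    if pan.shape[1:] != (hs.shape[1] * SCALE, hs.shape[2] * SCALE):
        raise ValueError("PAN must be SCALE times larger than the HS patch")
    pan_rr = degrade(pan)  # 5 m -> 30 m: network input
    hs_rr = degrade(hs)    # 30 m -> 180 m: network input
    return pan_rr, hs_rr, hs  # the original 30 m cube is the reference
```

A network trained on these triplets maps `(pan_rr, hs_rr)` to a 30 m output that is compared with the reference, as in the RR column of the usage table; at FR, the same network receives the 5 m PAN and 30 m HS images and produces a 5 m output.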

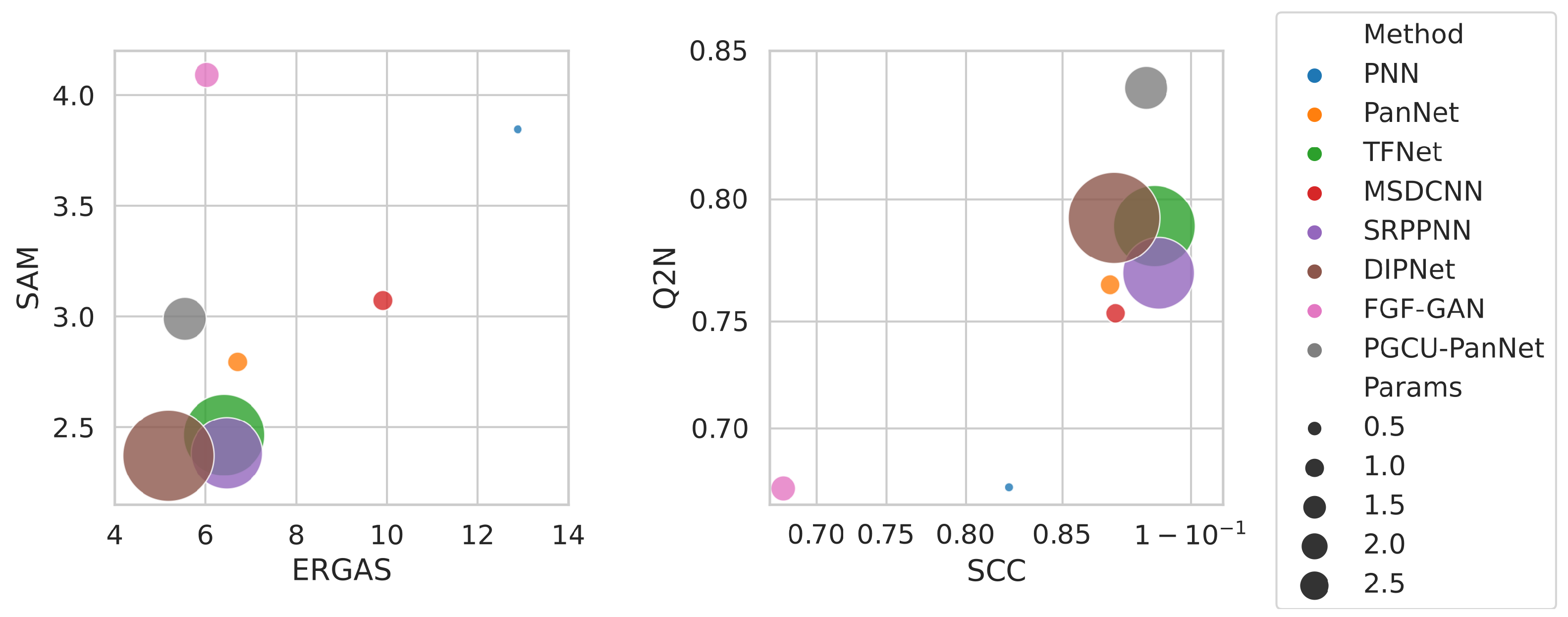

2.2. Reduced Resolution Metrics

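- ERGAS [44] is an error index that provides a global evaluation of the quality of the fused image. It is based on the distance between corresponding bands of the fused and reference images and is computed as:

  $$\mathrm{ERGAS}(x, y) = 100\,\frac{h}{l}\sqrt{\frac{1}{N}\sum_{i=1}^{N}\frac{\mathrm{RMSE}(x_i, y_i)^2}{\mu_{y_i}^2}}, \qquad \mathrm{RMSE}(x_i, y_i) = \sqrt{\frac{1}{m}\sum_{j=1}^{m}\left(x_{i,j} - y_{i,j}\right)^2}$$

  where x and y are the output pansharpened image and the reference, respectively, m is the number of pixels in each band, h and l are the spatial resolutions of the PAN image and of the HS image, respectively, $\mu_{y_i}$ is the mean of the i-th band of the reference, and N is the total number of bands.

- The Spectral Angle Mapper (SAM) [45] measures the absolute value of the angle between two vectors $v$ and $\hat{v}$:

  $$\mathrm{SAM}(v, \hat{v}) = \arccos\left(\frac{\langle v, \hat{v}\rangle}{\lVert v\rVert_2\,\lVert \hat{v}\rVert_2}\right)$$

  where $v$ and $\hat{v}$ are the flattened versions of the two images being compared (the reference and the pansharpened output). A SAM value of zero indicates the absence of spectral distortion, although radiometric distortion may still be present (the two vectors are parallel but have different lengths).

- The Spatial Correlation Coefficient (SCC) [46] is a spatial quality index that measures how similar the high-frequency details of two images are. It is computed as:

  $$\mathrm{SCC}(x, y) = \frac{\sum_{i=1}^{w}\sum_{j=1}^{h}\left(F(x)_{i,j} - \mu_{F(x)}\right)\left(F(y)_{i,j} - \mu_{F(y)}\right)}{\sqrt{\sum_{i=1}^{w}\sum_{j=1}^{h}\left(F(x)_{i,j} - \mu_{F(x)}\right)^2 \sum_{i=1}^{w}\sum_{j=1}^{h}\left(F(y)_{i,j} - \mu_{F(y)}\right)^2}}$$

  where $\mu_{F(x)}$ and $\mu_{F(y)}$ are the means of $F(x)$ and $F(y)$, respectively, and w and h are the width and height of an image. F is a high-pass filter for the extraction of high-frequency details (the original formulation in [46] uses a 3 × 3 Laplacian kernel).

- The last index is a generalization of the Universal Quality Index (Q) defined by Wang and Bovik [47] for an image x and a reference image y:

  $$Q(x, y) = \frac{4\,\sigma_{xy}\,\mu_x\,\mu_y}{\left(\sigma_x^2 + \sigma_y^2\right)\left(\mu_x^2 + \mu_y^2\right)}$$

  Here, $\sigma_{xy}$ is the covariance between x and y, and $\sigma_x$ and $\mu_x$ are the standard deviation and mean of x, respectively ($\sigma_y$ and $\mu_y$ are defined analogously for y). This metric provides an overall evaluation of both the radiometric and spectral distortions in the pansharpened images.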
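The four reduced-resolution metrics described above can be sketched in NumPy as follows. This is a minimal sketch, not the evaluation code behind the reported tables, and it rests on several assumptions: images are `(bands, H, W)` arrays; SAM is computed per pixel and averaged, a common convention in pansharpening toolboxes; SCC uses a 3 × 3 Laplacian as the high-pass filter F and is averaged over bands; and Q is the global single-band form of Wang and Bovik's index, without their sliding window and without the multiband generalization. All function names are illustrative.

```python
import numpy as np

# All images are (bands, H, W) arrays: x = pansharpened output, y = reference.


def ergas(x: np.ndarray, y: np.ndarray, ratio: float = 5 / 30) -> float:
    """ERGAS; `ratio` is h / l (PAN over HS resolution, 5 m / 30 m assumed)."""
    rmse = np.sqrt(np.mean((x - y) ** 2, axis=(1, 2)))  # per-band RMSE over the m pixels
    mu = np.mean(y, axis=(1, 2))                         # per-band mean of the reference
    return float(100 * ratio * np.sqrt(np.mean((rmse / mu) ** 2)))


def sam(x: np.ndarray, y: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle in degrees between the per-pixel spectra of x and y."""
    v_hat = x.reshape(x.shape[0], -1)  # (bands, pixels)
    v = y.reshape(y.shape[0], -1)
    denom = np.maximum(np.linalg.norm(v, axis=0) * np.linalg.norm(v_hat, axis=0), eps)
    cos = np.clip(np.sum(v * v_hat, axis=0) / denom, -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))


def laplacian(band: np.ndarray) -> np.ndarray:
    """3x3 Laplacian (8 at the centre, -1 around it) on the valid interior region."""
    h, w = band.shape
    window_sum = sum(band[1 + di:h - 1 + di, 1 + dj:w - 1 + dj]
                     for di in (-1, 0, 1) for dj in (-1, 0, 1))
    return 9 * band[1:-1, 1:-1] - window_sum  # = 8 * centre - sum of the 8 neighbours


def scc(x: np.ndarray, y: np.ndarray) -> float:
    """Correlation of Laplacian-filtered bands, averaged over bands."""
    values = []
    for xb, yb in zip(x, y):
        fx, fy = laplacian(xb), laplacian(yb)
        fx, fy = fx - fx.mean(), fy - fy.mean()
        values.append(np.sum(fx * fy) / np.sqrt(np.sum(fx ** 2) * np.sum(fy ** 2)))
    return float(np.mean(values))


def uqi(x: np.ndarray, y: np.ndarray) -> float:
    """Global Universal Quality Index Q for two single-band images."""
    mx, my = x.mean(), y.mean()
    sxy = np.mean((x - mx) * (y - my))  # covariance sigma_xy
    return float(4 * sxy * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2)))
```

Because SAM ignores vector length, `sam(y, 2 * y)` is zero up to floating-point error even though the radiometry differs, which matches the remark on radiometric distortion in the SAM description. Likewise, `scc(y, 2 * y + 3)` is 1, since the Laplacian removes constant offsets and correlation ignores scale.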

2.3. Full Resolution Metrics

2.4. Methods

- SRPPNN [56]: The architecture proposed by Cai and Huang [56] is characterized by progressive upsampling, performed through two successive bicubic upscaling operations. We adapted these two operations by changing their scale factors to and , respectively. The rest of the original architecture was left unchanged.

- DIPNet [57]: This model is composed of three main components. The first two are feature extraction branches for the low-frequency and high-frequency details of the panchromatic image, respectively; here, we changed the stride of the second convolutional layer, which reduces the spatial resolution of the features, from 2 to 3, so that the extracted features have the same spatial size as the input bands and can be concatenated with them. The third component is the main branch, which uses the features extracted by the previous components, along with the input images, to perform the actual pansharpening. The main branch can itself be divided into two parts: an upsampling stage and an encoder–decoder structure for signal post-processing. We changed the scale factor of the upsampling module from 2 to 3 and, in the encoder–decoder part, the strides of the central convolutional and deconvolutional layers from 2 to 3.

- FGF-GAN [34]: This model consists of a generative and a discriminative network. To adapt it to the new scale factor, we modified only the discriminator, since the generative network relies on bicubic upsampling, which adapts dynamically to the dimensions of the target image. Specifically, we changed the stride of the first layer from 2 to 3 and added an extra Conv2d-BatchNorm-LeakyReLU block after the third block to accommodate the larger input size during training. The convolutional layer in this new block produces the same number of features as the previous one and uses a kernel of size 3 with stride 2.

- PGCU-PanNet [36]: This approach consists of a module specifically designed for upsampling MS images, which can be combined with existing methods. For training and testing, we chose the version that combines the PGCU module with the PanNet model. To train the model on PRISMA data, we changed the scale factor used by the initial interpolation to and reduced the number of hidden features of the information extractor to 32. The latter modification is necessary because, in the original model, this value is kept equal to the number of input channels (e.g., 4 for MS images), a setting that is infeasible due to memory constraints when working with HS data (203 channels in this case).
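The stride and scale changes described above follow from the 6× resolution ratio between the PRISMA PAN (5 m) and HS (30 m) data: two stride-2 layers shrink a feature map by a factor of 4, whereas strides of 2 and 3 shrink it by 6. The sketch below checks this arithmetic with the standard output-size formulas for convolution and transposed convolution (the same formulas given in the PyTorch `Conv2d`/`ConvTranspose2d` documentation, with dilation 1). The kernel sizes, paddings, and the 120-pixel PAN patch are hypothetical values, not taken from the original architectures.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Output size of a 2-D convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1,
               output_padding: int = 0) -> int:
    """Output size of a 2-D transposed convolution along one spatial axis."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def downsample_chain(size: int, strides: list[int]) -> int:
    """Apply a sequence of 3x3, padding-1 convolutions with the given strides."""
    for s in strides:
        size = conv_out(size, stride=s)
    return size


PAN_PATCH = 120  # hypothetical PAN patch side; the matching HS patch is 120 / 6 = 20

# DIPNet-style branch with strides 2, 2: 120 -> 60 -> 30, only a 4x reduction.
print(downsample_chain(PAN_PATCH, [2, 2]))  # 30
# Adapted branch with strides 2, 3: 120 -> 60 -> 20, matching the HS patch size.
print(downsample_chain(PAN_PATCH, [2, 3]))  # 20
# Encoder-decoder core with stride 3 (kernel 3, no padding): 60 -> 20 -> 60.
print(deconv_out(conv_out(60, kernel=3, stride=3, padding=0), kernel=3, stride=3, padding=0))  # 60
```

With stride 3 at the centre of the encoder–decoder, the transposed convolution restores exactly the spatial size that the strided convolution removed, which is why both strides need to change together.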

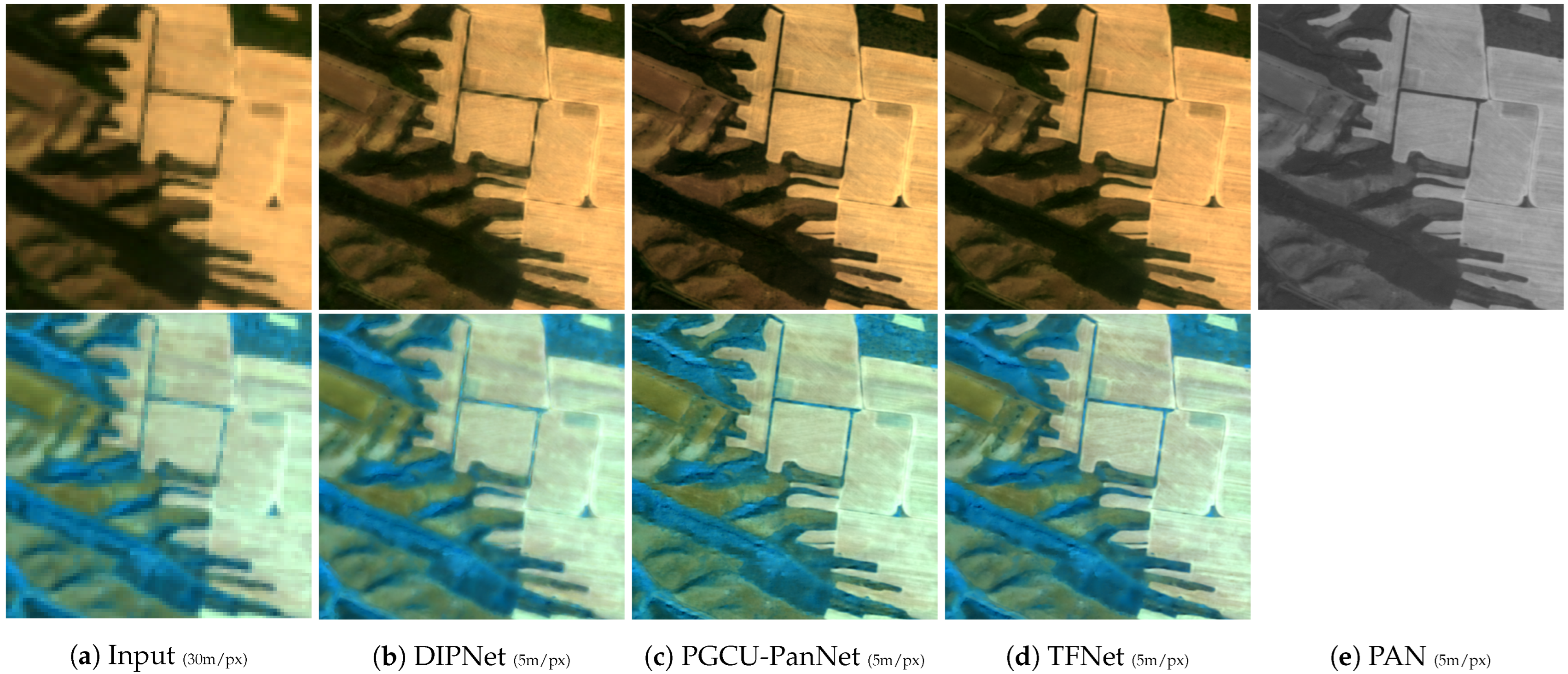

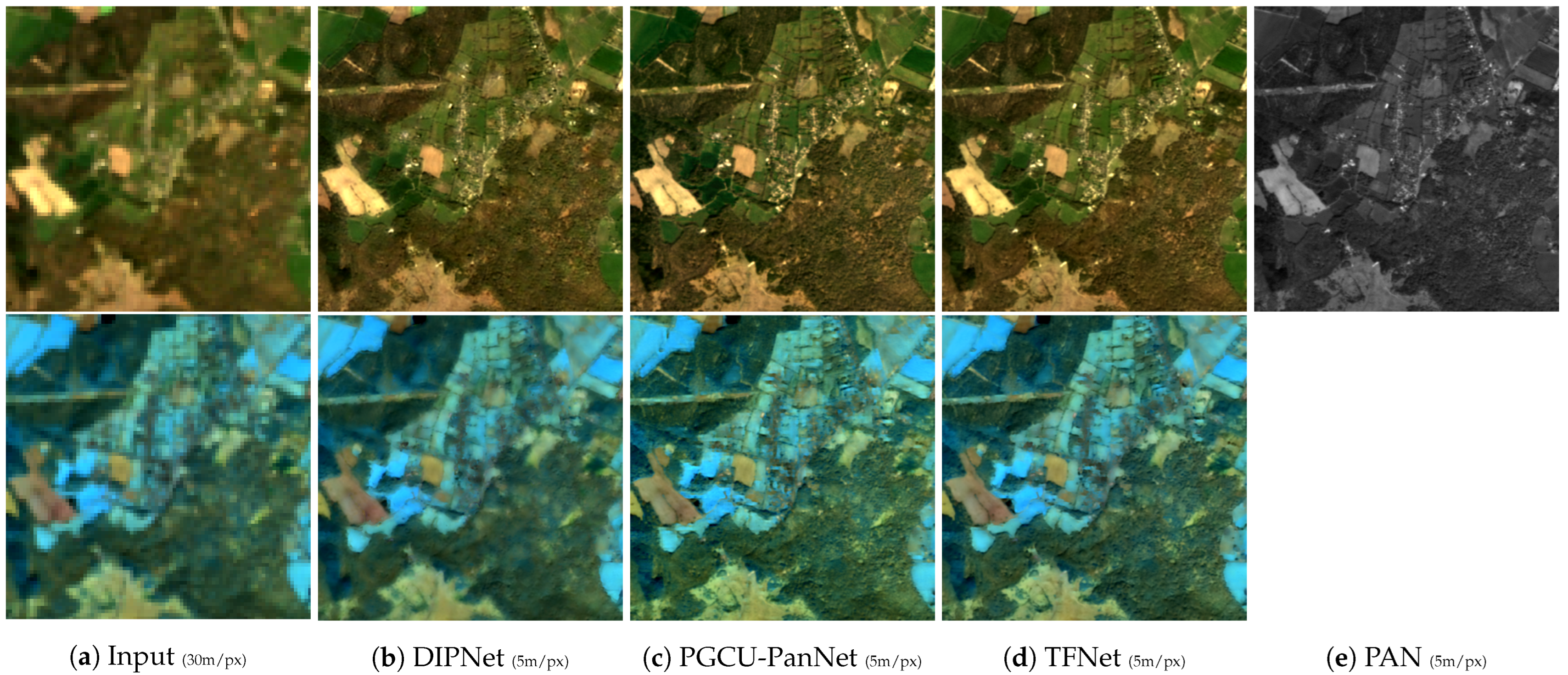

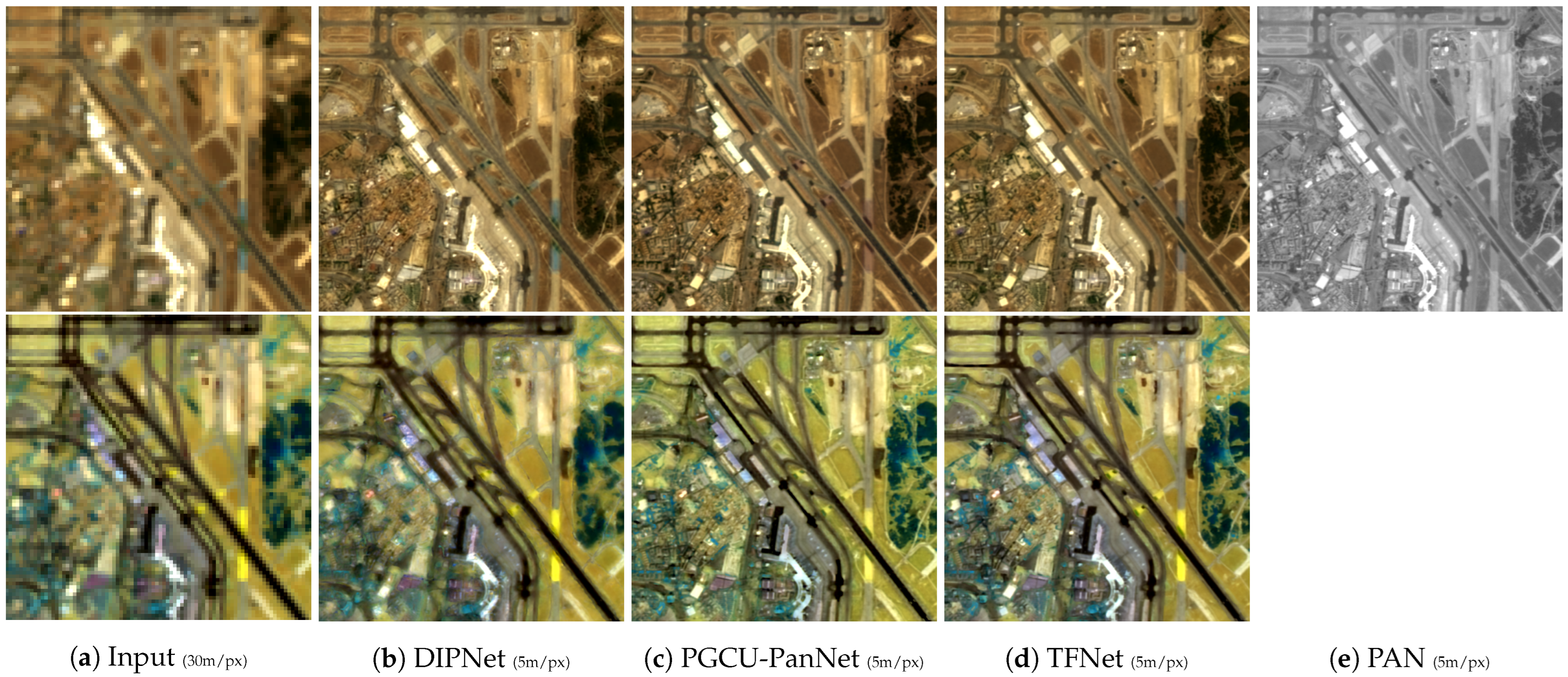

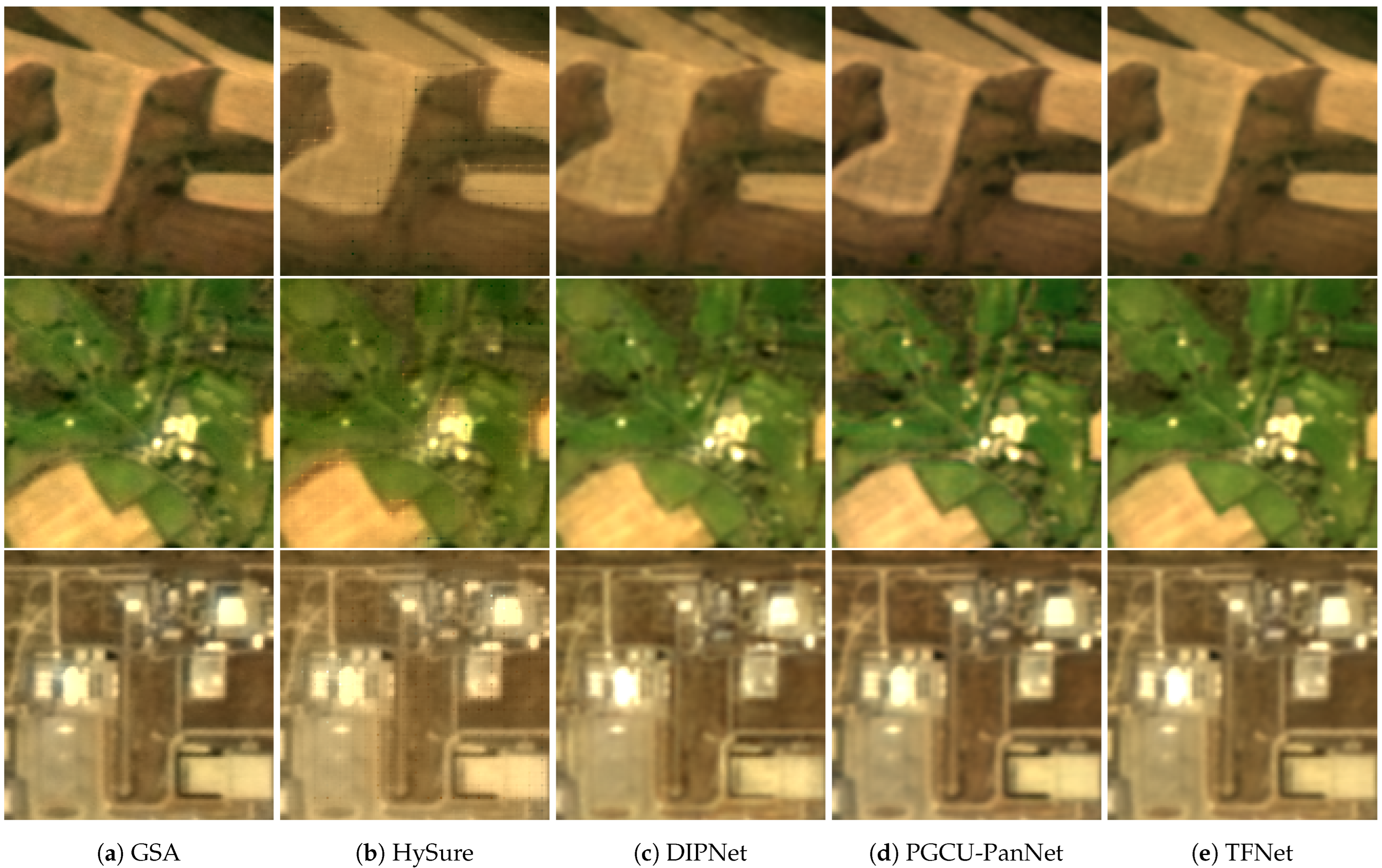

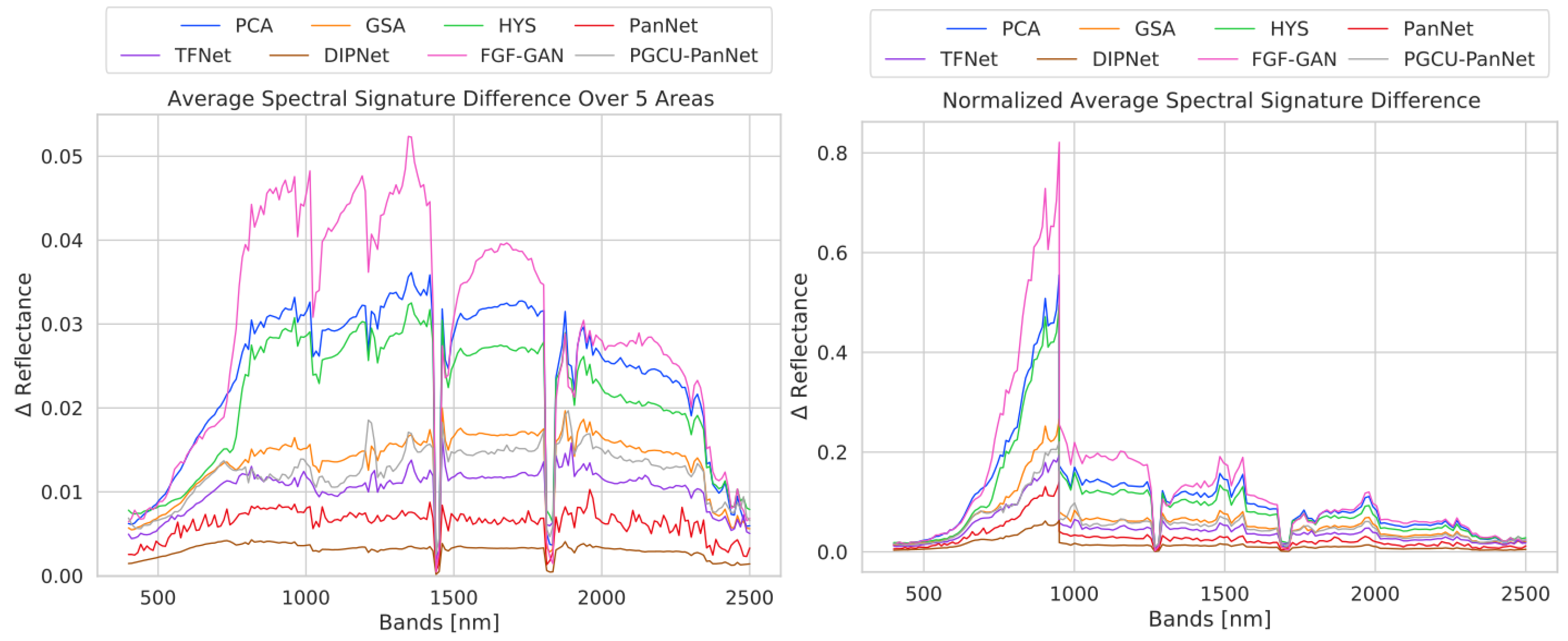

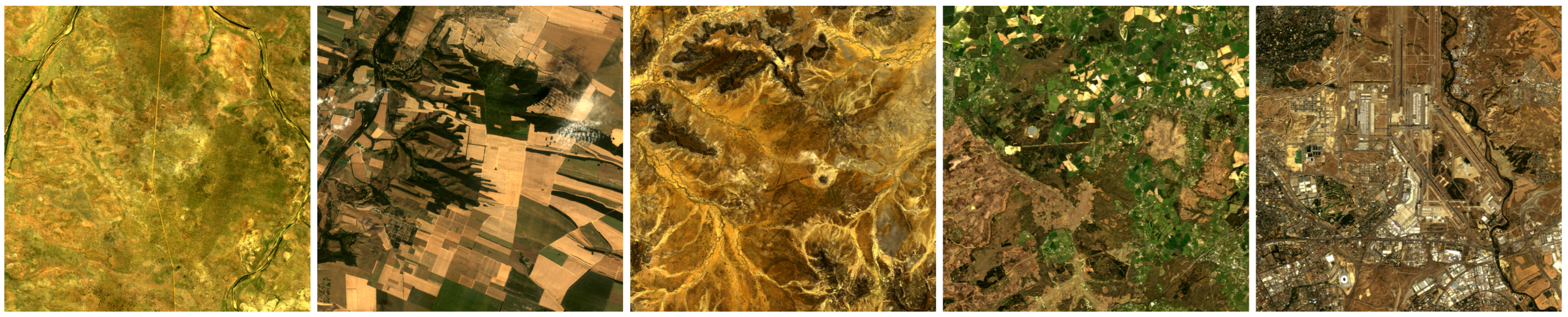

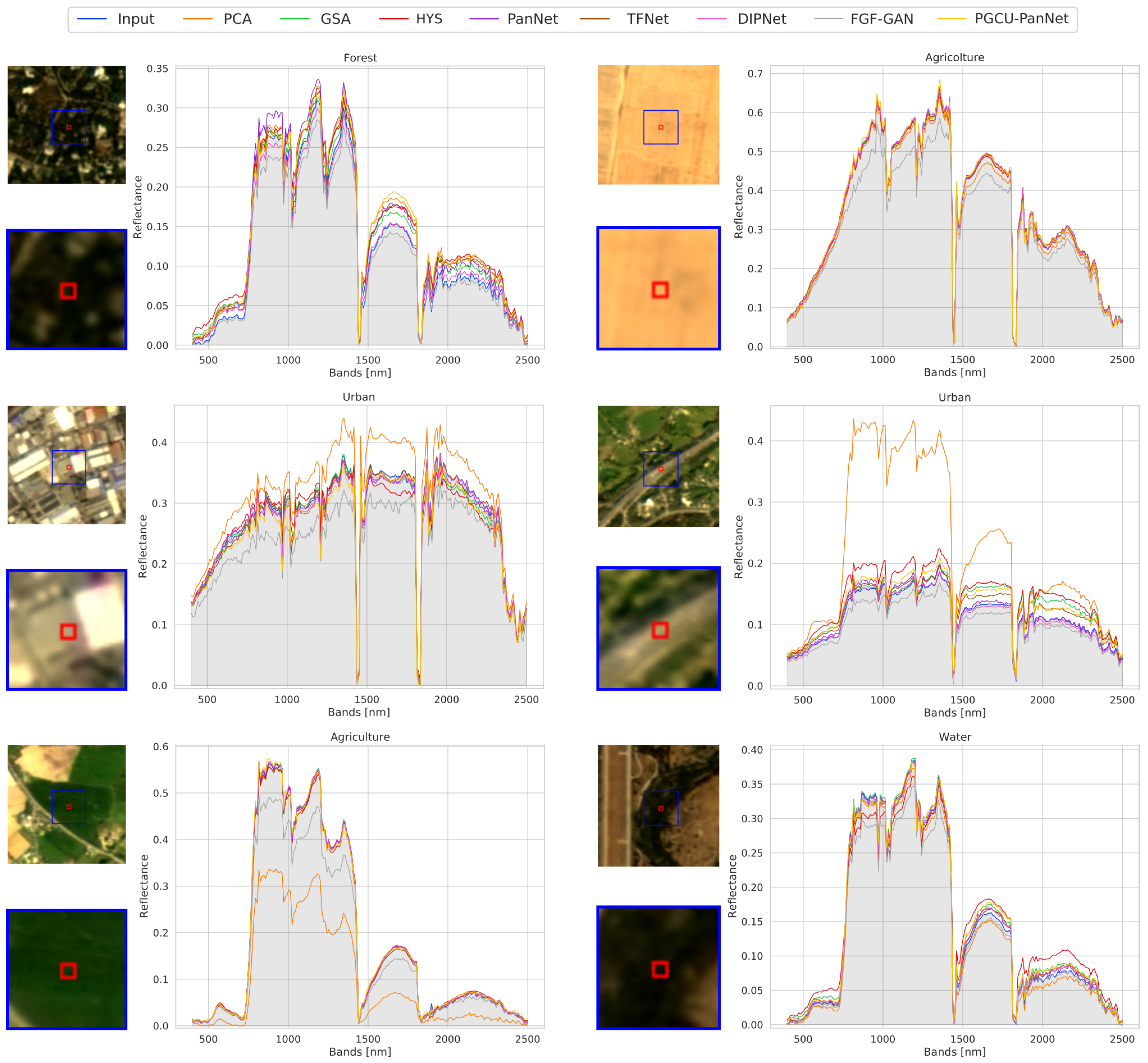

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Salcedo-Sanz, S.; Ghamisi, P.; Piles, M.; Werner, M.; Cuadra, L.; Moreno-Martínez, A.; Izquierdo-Verdiguier, E.; Muñoz-Marí, J.; Mosavi, A.; Camps-Valls, G. Machine learning information fusion in Earth observation: A comprehensive review of methods, applications and data sources. Inf. Fusion 2020, 63, 256–272.

- Barbato, M.P.; Napoletano, P.; Piccoli, F.; Schettini, R. Unsupervised segmentation of hyperspectral remote sensing images with superpixels. Remote Sens. Appl. Soc. Environ. 2022, 28, 100823.

- Iglseder, A.; Immitzer, M.; Dostálová, A.; Kasper, A.; Pfeifer, N.; Bauerhansl, C.; Schöttl, S.; Hollaus, M. The potential of combining satellite and airborne remote sensing data for habitat classification and monitoring in forest landscapes. Int. J. Appl. Earth Obs. Geoinf. 2023, 117, 103131.

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136.

- Wellmann, T.; Lausch, A.; Andersson, E.; Knapp, S.; Cortinovis, C.; Jache, J.; Scheuer, S.; Kremer, P.; Mascarenhas, A.; Kraemer, R.; et al. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landsc. Urban Plan. 2020, 204, 103921.

- Van Westen, C. Remote sensing for natural disaster management. Int. Arch. Photogramm. Remote Sens. 2000, 33, 1609–1617.

- Frick, A.; Tervooren, S. A framework for the long-term monitoring of urban green volume based on multi-temporal and multi-sensoral remote sensing data. J. Geovis. Spat. Anal. 2019, 3, 6.

- Costs, S.T. Trends in Price per Pound to Orbit 1990–2000; Futron Corporation: Bethesda, MD, USA, 2002.

- Jones, H. The recent large reduction in space launch cost. In Proceedings of the 48th International Conference on Environmental Systems, Albuquerque, NM, USA, 8–12 July 2018.

- Okninski, A.; Kopacz, W.; Kaniewski, D.; Sobczak, K. Hybrid rocket propulsion technology for space transportation revisited: Propellant solutions and challenges. FirePhysChem 2021, 1, 260–271.

- Wulder, M.A.; Loveland, T.R.; Roy, D.P.; Crawford, C.J.; Masek, J.G.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Belward, A.S.; Cohen, W.B.; et al. Current status of Landsat program, science, and applications. Remote Sens. Environ. 2019, 225, 127–147.

- Chevrel, M.; Courtois, M.; Weill, G. The SPOT satellite remote sensing mission. Photogramm. Eng. Remote Sens. 1981, 47, 1163–1171.

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 data for land cover/use mapping: A review. Remote Sens. 2020, 12, 2291.

- Apostolopoulos, D.N.; Nikolakopoulos, K.G. SPOT vs. Landsat satellite images for the evolution of the north Peloponnese coastline, Greece. Reg. Stud. Mar. Sci. 2022, 56, 102691.

- Krueger, J.K. CLOSeSat: Perigee-Lowering Techniques and Preliminary Design for a Small Optical Imaging Satellite Operating in Very Low Earth Orbit. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2010.

- Li, S.; Kang, X.; Fang, L.; Hu, J.; Yin, H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2565–2586.

- Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Chavez, P.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

- Tu, T.M.; Huang, P.S.; Hung, C.L.; Chang, C.P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening Through Multivariate Regression of MS+Pan Data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

- Nason, G.P.; Silverman, B.W. The stationary wavelet transform and some statistical applications. In Wavelets and Statistics; Springer: New York, NY, USA, 1995; pp. 281–299.

- Shensa, M.J. The discrete wavelet transform: Wedding the a trous and Mallat algorithms. IEEE Trans. Signal Process. 1992, 40, 2464–2482.

- Burt, P.J.; Adelson, E.H. The Laplacian pyramid as a compact image code. In Readings in Computer Vision; Elsevier: Amsterdam, The Netherlands, 1987; pp. 671–679.

- Liao, W.; Huang, X.; Van Coillie, F.; Gautama, S.; Pižurica, A.; Philips, W.; Liu, H.; Zhu, T.; Shimoni, M.; Moser, G.; et al. Processing of multiresolution thermal hyperspectral and digital color data: Outcome of the 2014 IEEE GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2984–2996.

- Zhang, K.; Zhang, F.; Wan, W.; Yu, H.; Sun, J.; Del Ser, J.; Elyan, E.; Hussain, A. Panchromatic and multispectral image fusion for remote sensing and earth observation: Concepts, taxonomy, literature review, evaluation methodologies and challenges ahead. Inf. Fusion 2023, 93, 227–242.

- He, L.; Zhu, J.; Li, J.; Plaza, A.; Chanussot, J.; Li, B. HyperPNN: Hyperspectral pansharpening via spectrally predictive convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3092–3100.

- He, L.; Zhu, J.; Li, J.; Meng, D.; Chanussot, J.; Plaza, A. Spectral-fidelity convolutional neural networks for hyperspectral pansharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5898–5914.

- Zheng, Y.; Li, J.; Li, Y.; Cao, K.; Wang, K. Deep residual learning for boosting the accuracy of hyperspectral pansharpening. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1435–1439.

- Xie, W.; Lei, J.; Cui, Y.; Li, Y.; Du, Q. Hyperspectral pansharpening with deep priors. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1529–1543.

- He, L.; Xi, D.; Li, J.; Lai, H.; Plaza, A.; Chanussot, J. Dynamic hyperspectral pansharpening CNNs. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–19.

- Zhao, Z.; Zhan, J.; Xu, S.; Sun, K.; Huang, L.; Liu, J.; Zhang, C. FGF-GAN: A lightweight generative adversarial network for pansharpening via fast guided filter. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- He, K.; Sun, J. Fast guided filter. arXiv 2015, arXiv:1505.00996.

- Zhu, Z.; Cao, X.; Zhou, M.; Huang, J.; Meng, D. Probability-based global cross-modal upsampling for pansharpening. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14039–14048.

- Vivone, G.; Garzelli, A.; Xu, Y.; Liao, W.; Chanussot, J. Panchromatic and hyperspectral image fusion: Outcome of the 2022 WHISPERS hyperspectral pansharpening challenge. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 166–179.

- Labate, D.; Safari, K.; Karantzas, N.; Prasad, S.; Shahraki, F.F. Structured receptive field networks and applications to hyperspectral image classification. In Proceedings of the Wavelets and Sparsity XVIII, San Diego, CA, USA, 13–15 August 2019; SPIE: Bellingham, WA, USA, 2019; Volume 11138, pp. 218–226.

- Yokoya, N.; Iwasaki, A. Airborne Hyperspectral Data over Chikusei; Report Number: SAL-2016-5-27; Space Application Laboratory, University of Tokyo: Tokyo, Japan, 2016; Volume 5.

- Baumgardner, M.F.; Biehl, L.L.; Landgrebe, D.A. 220 Band AVIRIS Hyperspectral Image Data Set: June 12, 1992 Indian Pine Test Site 3; Purdue University Research Repository: West Lafayette, IN, USA, 2015; Volume 10, p. 991.

- ASI. PRISMA Algorithm Theoretical Basis Document (ATBD). 2021. Available online: https://prisma.asi.it/missionselect/docs/PRISMA%20ATBD_v1.pdf (accessed on 3 April 2023).

- Potapov, P.; Hansen, M.C.; Pickens, A.; Hernandez-Serna, A.; Tyukavina, A.; Turubanova, S.; Zalles, V.; Li, X.; Khan, A.; Stolle, F.; et al. The global 2000–2020 land cover and land use change dataset derived from the Landsat archive: First results. Front. Remote Sens. 2022, 3, 856903.

- Scheffler, D.; Hollstein, A.; Diedrich, H.; Segl, K.; Hostert, P. AROSICS: An automated and robust open-source image co-registration software for multi-sensor satellite data. Remote Sens. 2017, 9, 676.

- Wald, L. Data Fusion: Definitions and Architectures: Fusion of Images of Different Spatial Resolutions; Presses des MINES: Paris, France, 2002.

- Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the spectral angle mapper (SAM) algorithm. In Proceedings of the JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop, Volume 1: AVIRIS Workshop, Pasadena, CA, USA, 1–5 June 1992.

- Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Arienzo, A.; Vivone, G.; Garzelli, A.; Alparone, L.; Chanussot, J. Full-resolution quality assessment of pansharpening: Theoretical and hands-on approaches. IEEE Geosci. Remote Sens. Mag. 2022, 10, 168–201.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Alparone, L.; Garzelli, A.; Vivone, G. Spatial consistency for full-scale assessment of pansharpening. In Proceedings of the IGARSS 2018, 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 5132–5134.

- Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2014, 53, 3373–3388.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A deep network architecture for pan-sharpening. In Proceedings of the IEEE International Conference on Computer Vision, Piscataway, NJ, USA, 22–29 October 2017; pp. 5449–5457.

- Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

- Liu, X.; Liu, Q.; Wang, Y. Remote sensing image fusion based on two-stream fusion network. Inf. Fusion 2020, 55, 1–15.

- Cai, J.; Huang, B. Super-resolution-guided progressive pansharpening based on a deep convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5206–5220.

- Xie, Y.; Wu, W.; Yang, H.; Wu, N.; Shen, Y. Detail information prior net for remote sensing image pansharpening. Remote Sens. 2021, 13, 2800.

| Cube | Wavelengths Covered (nm) | # of Bands | Resolution (m/px) | Size (pixels) |
|---|---|---|---|---|
| panchromatic | 400–700 | 1 | 5 | 7554 × 7350 |
| VNIR | 400–1010 | 66 | 30 | 1259 × 1225 |
| SWIR | 920–2505 | 174 | 30 | 1259 × 1225 |

| Resolution (m/px) | Usage (FR) | Usage (RR) |
|---|---|---|
| 5 | input | - |
| 30 | - | input |
| 30 | input | reference |
| 180 | - | input |
| 5 | output | - |
| 30 | - | output |

| Method | # of Parameters (M) | ERGAS ↓ | SAM ↓ | SCC ↑ | |
|---|---|---|---|---|---|
| PCA [19] | - | 8.9545 | 4.8613 | 0.6414 | 0.6071 |
| GSA [22] | - | 7.9682 | 4.3499 | 0.6642 | 0.6686 |
| HySure [51] | - | 8.3699 | 4.8709 | 0.5832 | 0.5610 |
| PNN [52] | 0.08 | 12.8840 | 3.8465 | 0.8237 | 0.6702 |
| PanNet [53] | 0.19 | 6.7062 | 2.7951 | 0.8705 | 0.7659 |
| MSDCNN [54] | 0.19 | 9.9105 | 3.0733 | 0.8727 | 0.7537 |
| TFNet [55] | 2.36 | 6.4090 | 2.4644 | 0.8875 | 0.7897 |
| SRPPNN [56] | 1.83 | 6.4702 | 2.3823 | 0.8890 | 0.7708 |
| DIPNet [57] | 2.95 | 5.1830 | 2.3715 | 0.8721 | 0.7929 |
| FGF-GAN [34] | 0.27 | 6.0256 | 4.0922 | 0.6741 | 0.6696 |
| PGCU-PanNet [36] | 0.70 | 5.5432 | 2.9902 | 0.8845 | 0.8386 |

| Method | # of Parameters (M) | | | |
|---|---|---|---|---|
| PCA [19] | - | 0.9411 | 1.5277 | 0.0558 |
| GSA [22] | - | 0.3820 | 0.0016 | 0.6170 |
| HySure [51] | - | 0.4151 | 0.0009 | 0.5843 |
| PNN [52] | 0.08 | 0.3801 | 0.0101 | 0.6136 |
| PanNet [53] | 0.19 | 0.3507 | 0.0203 | 0.6360 |
| MSDCNN [54] | 0.19 | 0.3915 | 0.0068 | 0.6044 |
| TFNet [55] | 2.36 | 0.3552 | 0.0066 | 0.6405 |
| SRPPNN [56] | 1.83 | 0.3948 | 0.0139 | 0.5965 |
| DIPNet [57] | 2.95 | 0.3681 | 0.0348 | 0.6098 |
| FGF-GAN [34] | 0.27 | 0.4024 | 0.0406 | 0.5740 |
| PGCU-PanNet [36] | 0.70 | 0.4101 | 0.0039 | 0.5876 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zini, S.; Barbato, M.P.; Piccoli, F.; Napoletano, P. Deep Learning Hyperspectral Pansharpening on Large-Scale PRISMA Dataset. Remote Sens. 2024, 16, 2079. https://doi.org/10.3390/rs16122079
