Double-Factor Tensor Cascaded-Rank Decomposition for Hyperspectral Image Denoising

Abstract

1. Introduction

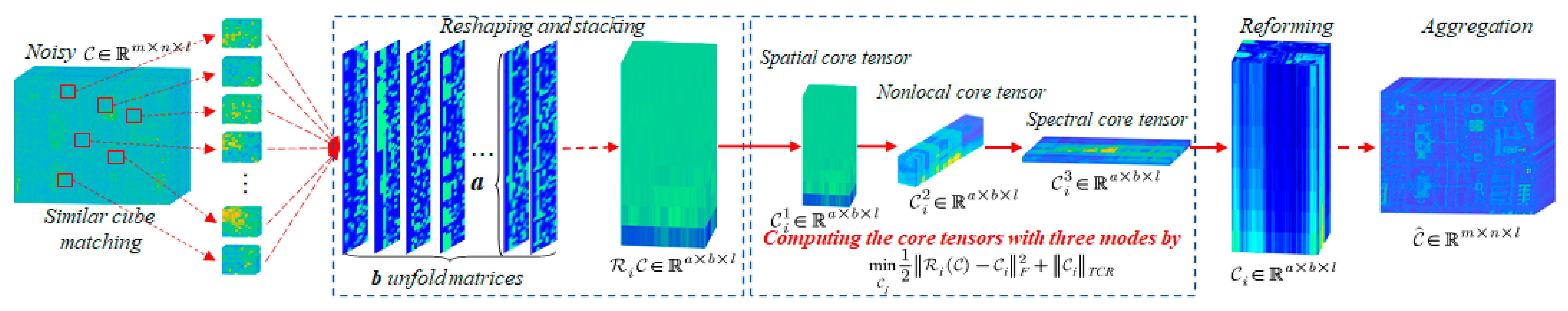

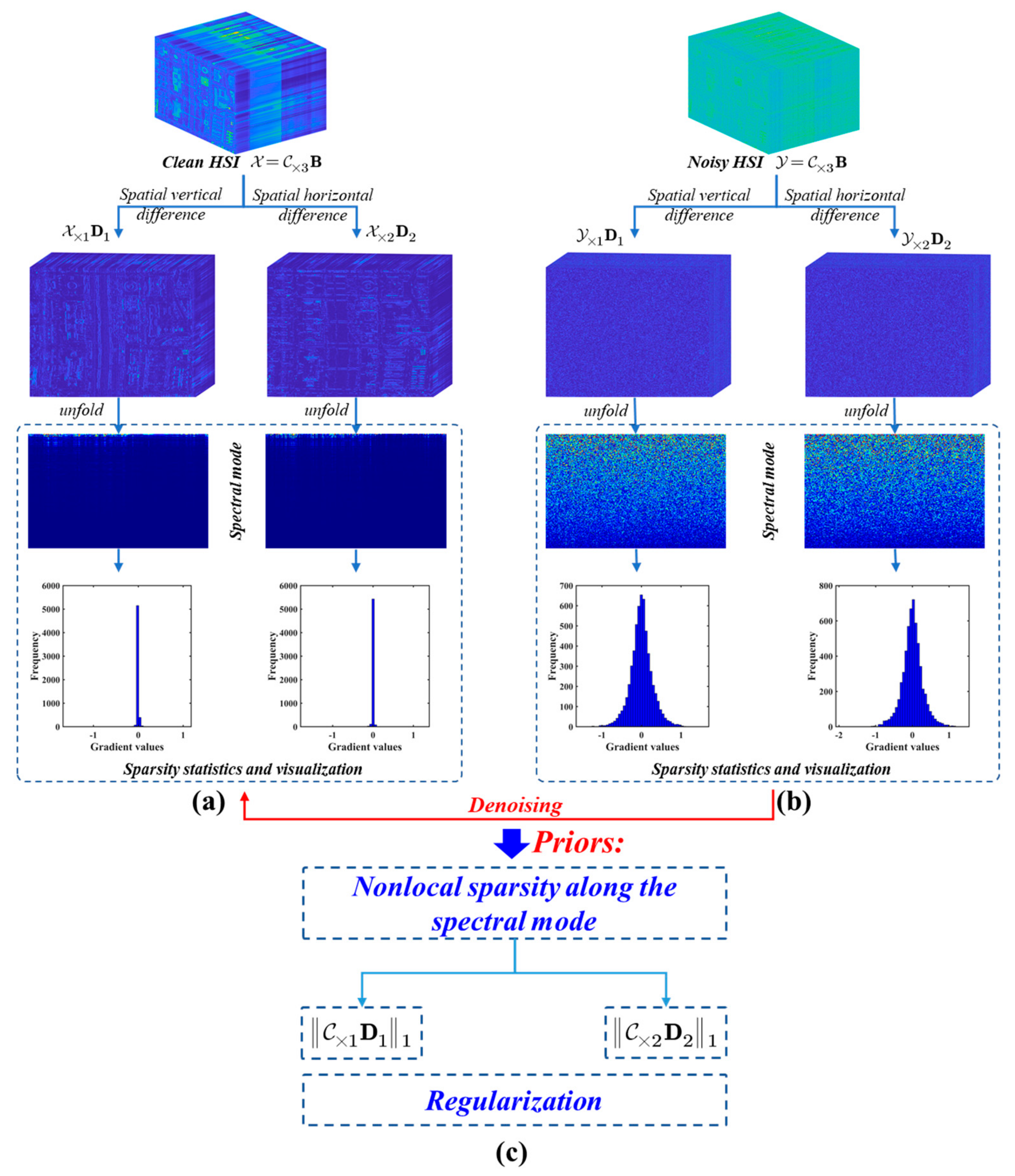

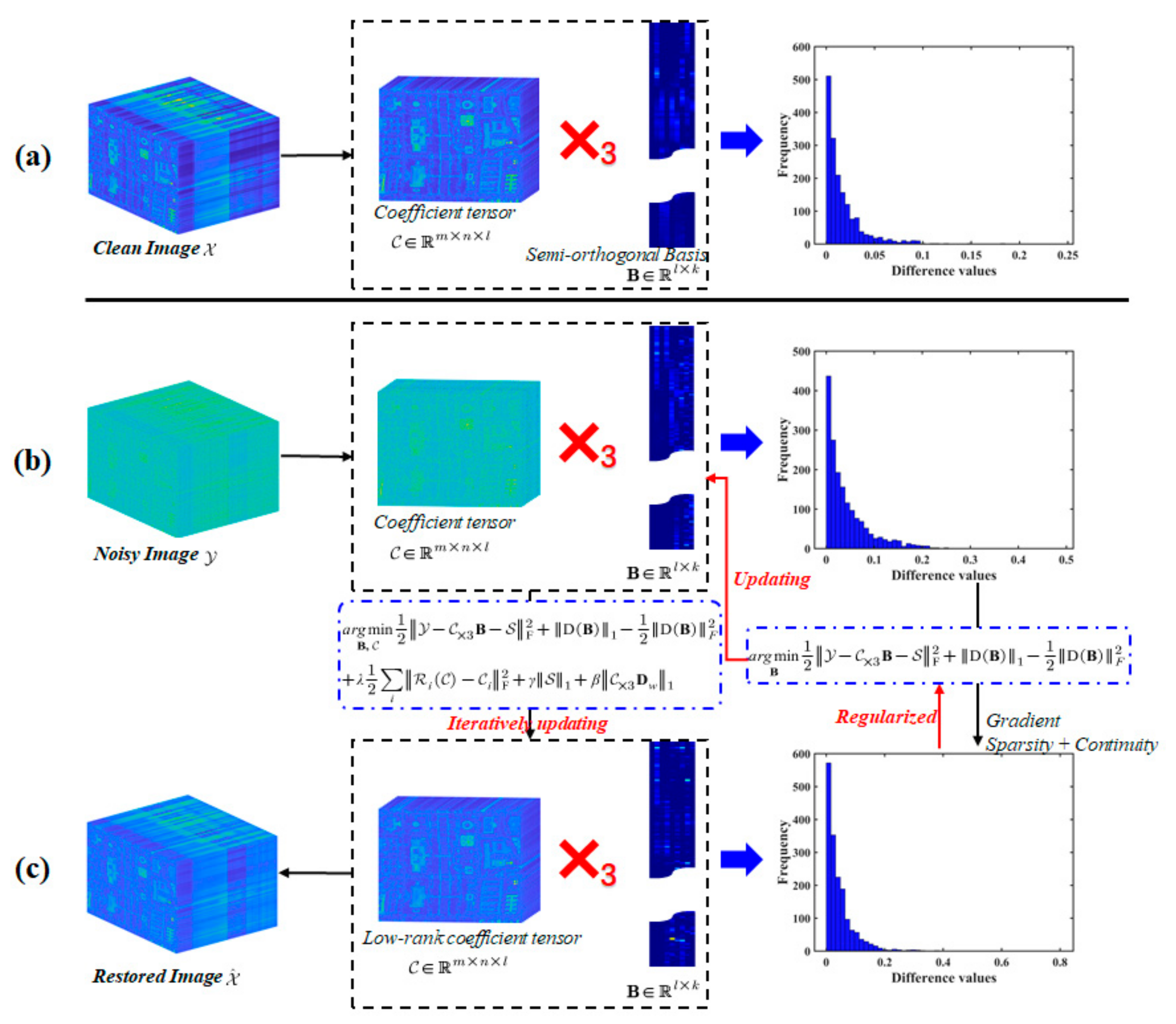

2. HSI Denoising Using Double-Factor-Regularized Tensor Cascaded-Rank Minimization

2.1. DFTCR-Based HSI Denoising Model

2.2. Efficient Alternating Optimization for Solving the Denoising Algorithm

2.2.1. -Subproblem

2.2.2. , , and -Subproblems Based on L1 Norm

2.2.3. -Subproblem

2.2.4. -Subproblem

2.2.5. Updating Lagrangian Multipliers

| Algorithm 1 Proposed algorithm for HSI denoising |

| Input: A rearranged noisy HSI tensor . |

| Initialization: Estimate B0 with SVD, set ; regularization parameters , , and ; Subspace dimension ; Cube matching parameter a, b; Tensor cascaded rank ; Stop criterion , positive scalar , , , , , , and ; maximum iteration . |

| Tensor Low-cascaded-rank decomposition: estimate by Equation (7). |

| While not converged do |

| Sparse noise estimation: calculate by Equation (15); |

| Latent input HSI estimation: calculate by Equation (10); |

| Tensor coefficient learning: calculate by Equation (18); |

| Continuous basis learning: calculate by Equation (24); |

| Auxiliary variables estimation: calculate by Equations (16) and (17); |

| Update Lagrangian multipliers by Equations (25), (26), and (27), respectively; |

| Update penalty scalar: , |

| , ; |

| Check the convergence: or functional energy: |

| ; |

| Update iteration: . |

| End while |

| Output. |
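Two steps of Algorithm 1 have well-known generic forms: the basis initialization by SVD and the L1-regularized sparse noise update, whose closed-form solution is elementwise soft-thresholding. The following is a minimal NumPy sketch of these two building blocks under stated assumptions, not the authors' implementation. The function names, the (height, width, bands) array layout, and the threshold `tau` are illustrative; the exact arguments and threshold used in Equation (15) are not reproduced here and may differ.

```python
import numpy as np


def init_spectral_basis(noisy_hsi, subspace_dim):
    """Return an orthonormal (bands x subspace_dim) basis from a truncated SVD.

    Assumes noisy_hsi has shape (height, width, bands); this layout is an
    illustrative assumption, not taken from the paper.
    """
    h, w, b = noisy_hsi.shape
    # Unfold the cube so that each column is the spectrum of one pixel.
    unfolded = noisy_hsi.reshape(h * w, b).T  # shape: (bands, pixels)
    u, _, _ = np.linalg.svd(unfolded, full_matrices=False)
    # Keep the leading left singular vectors as the spectral basis.
    return u[:, :subspace_dim]


def soft_threshold(x, tau):
    """Elementwise proximal operator of tau * ||x||_1 (soft-thresholding)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)
```

In an alternating scheme of this kind, `soft_threshold` would typically be applied to the current residual with a threshold equal to the sparse-noise regularization weight divided by the penalty scalar; which residual and which scalars enter that call depends on the model's augmented Lagrangian.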

3. Experimentation

3.1. Experiment Setup

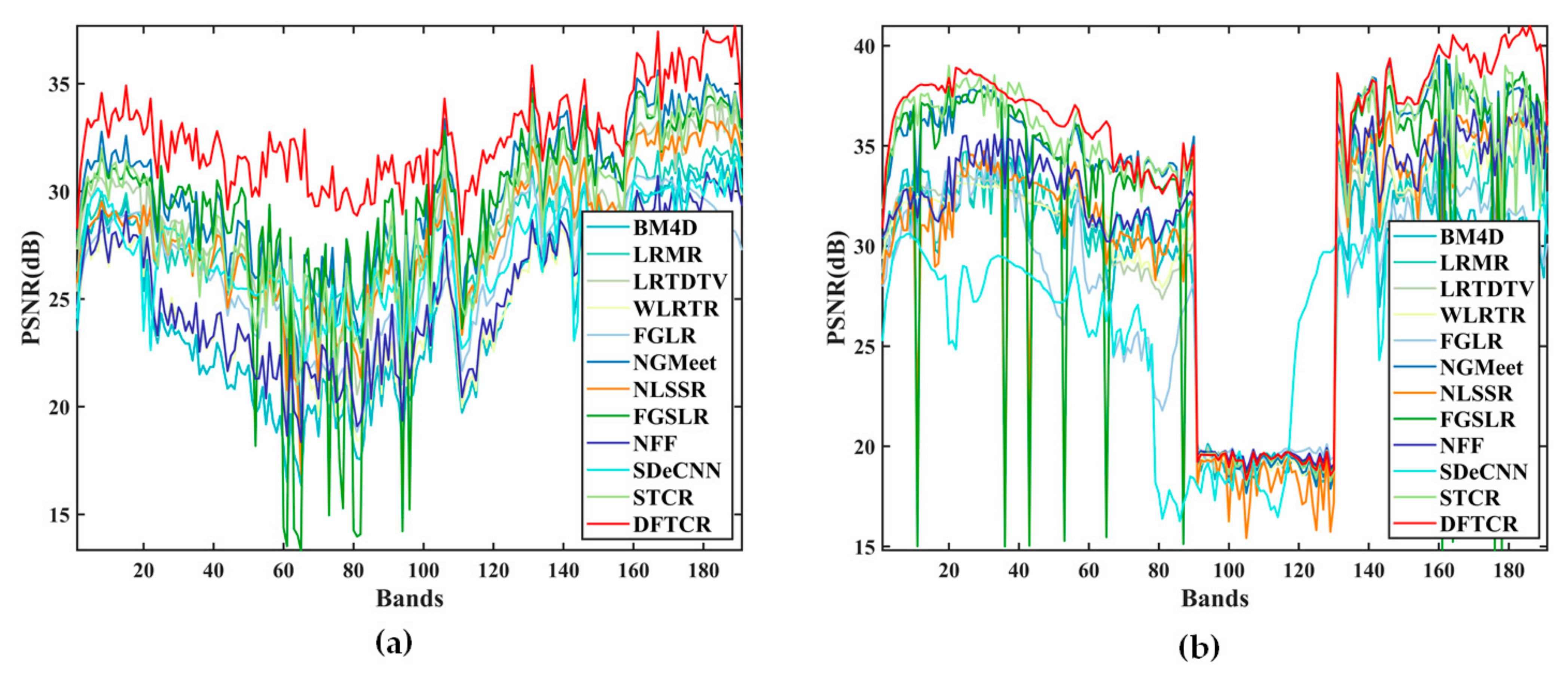

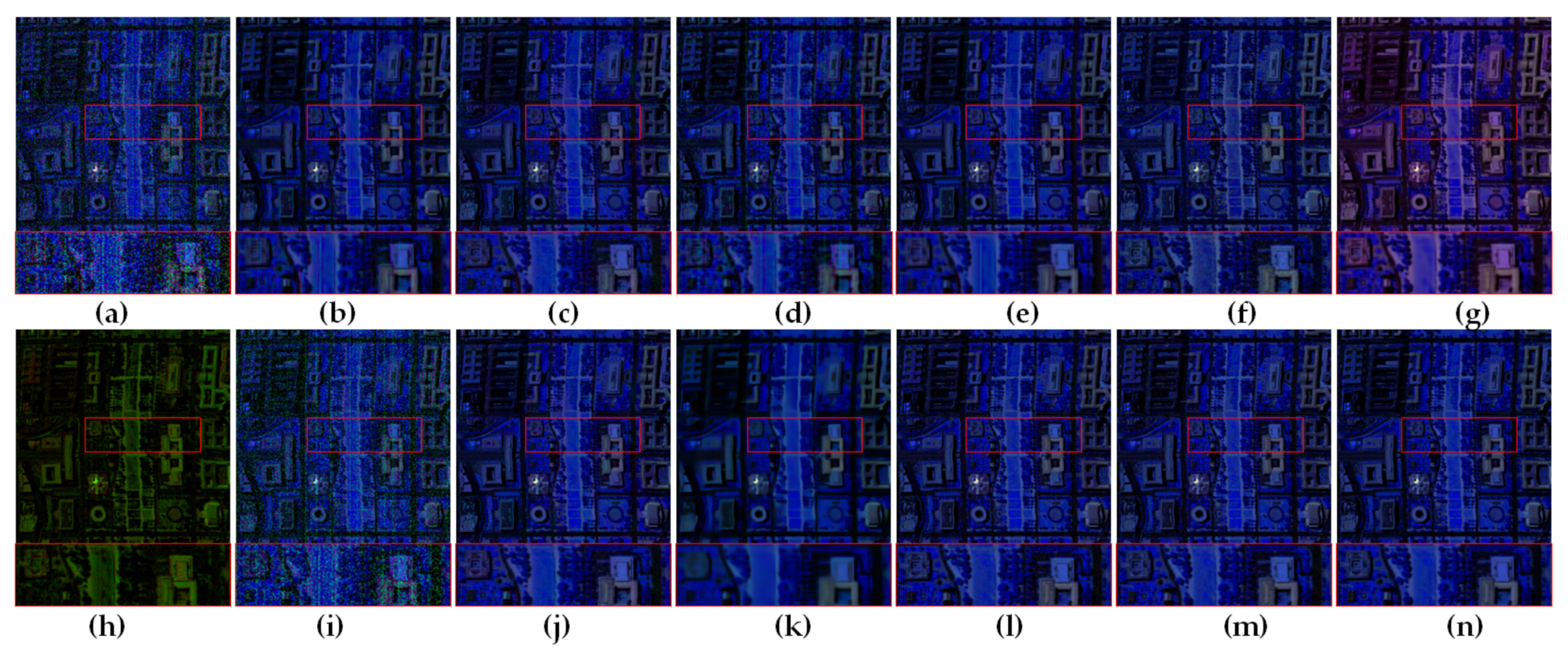

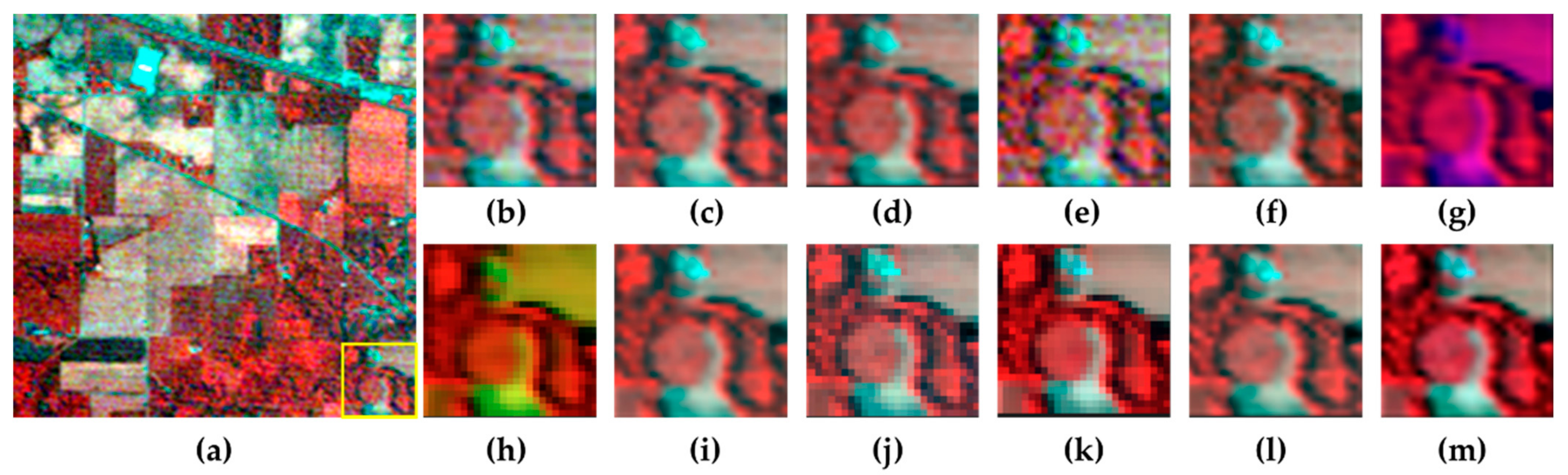

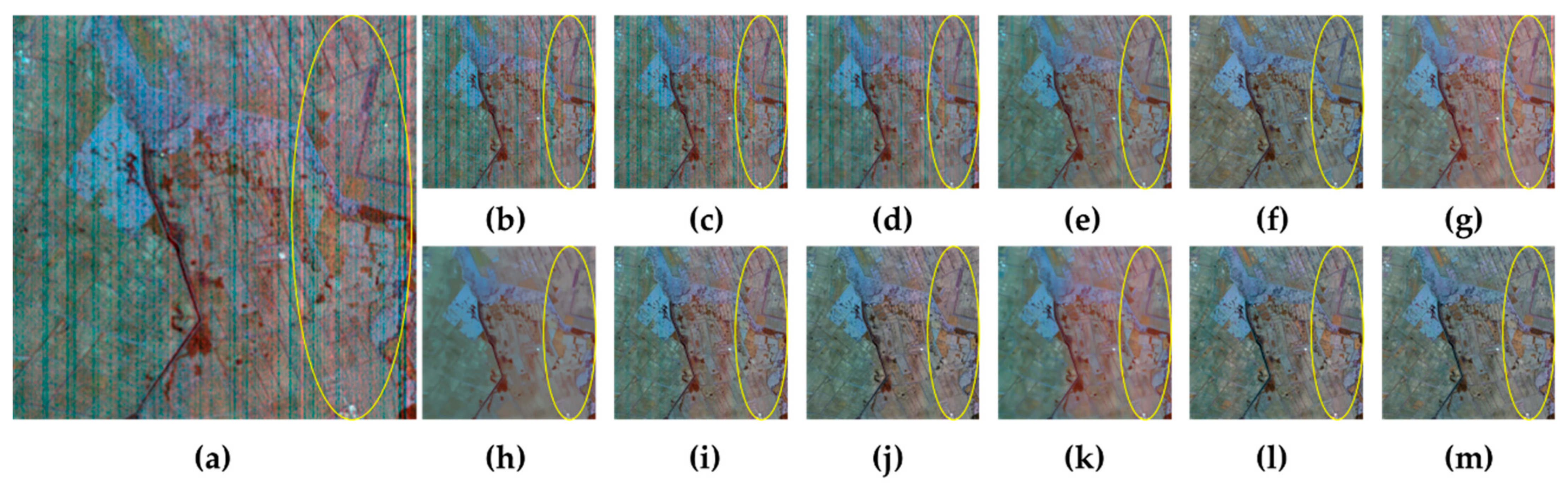

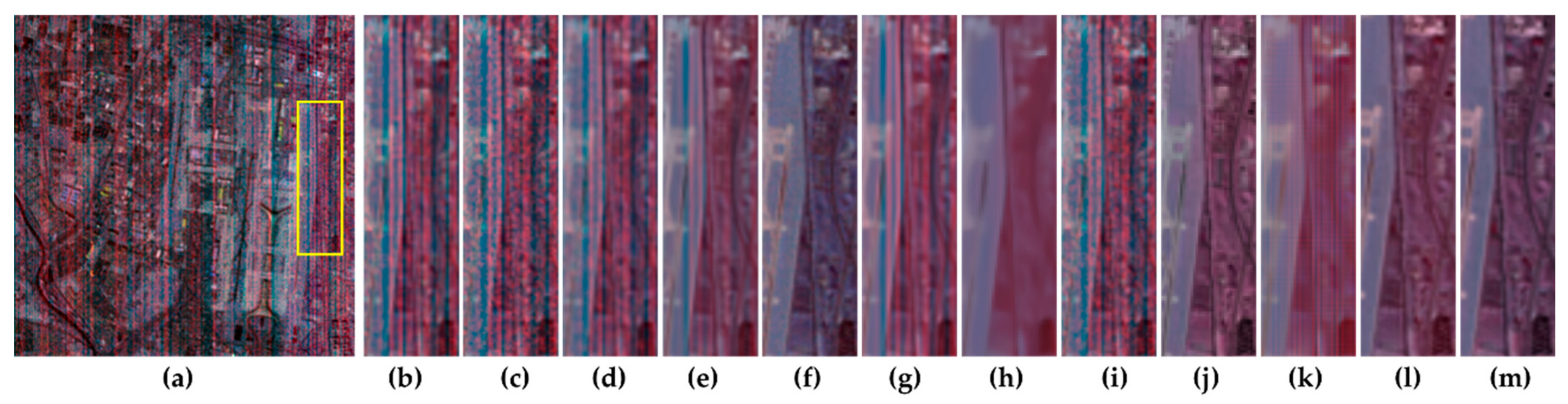

3.2. Denoising Results for Simulated Noisy HSIs

3.3. Denoising Results for Real-World Noisy HSIs

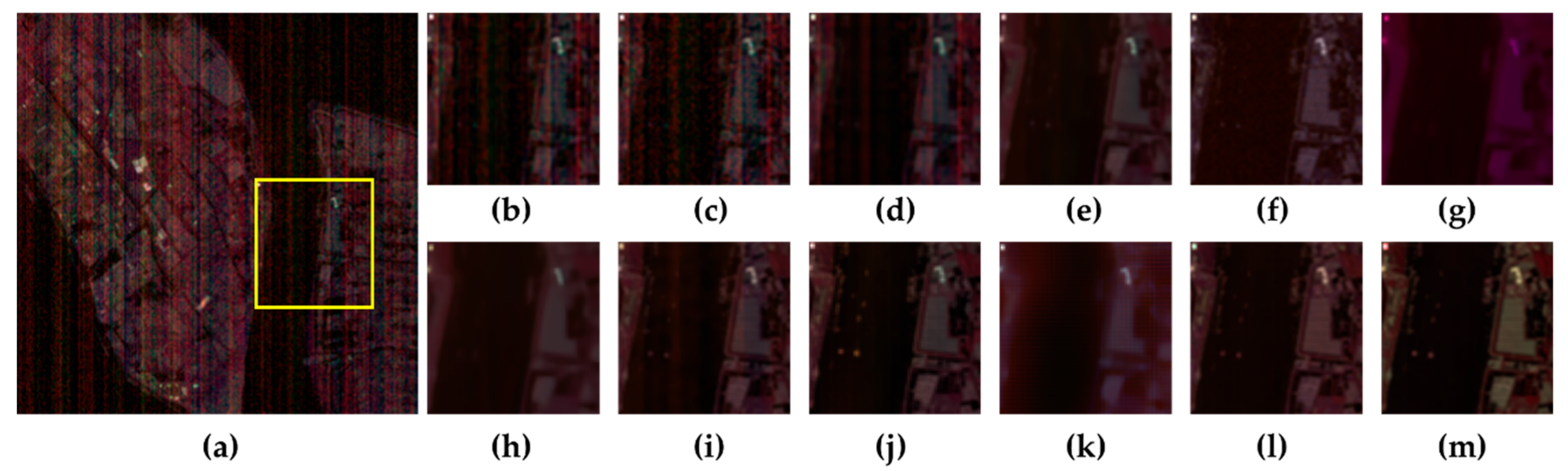

4. Discussion

4.1. Analysis of Computation Complexity

4.2. Ablation Analysis

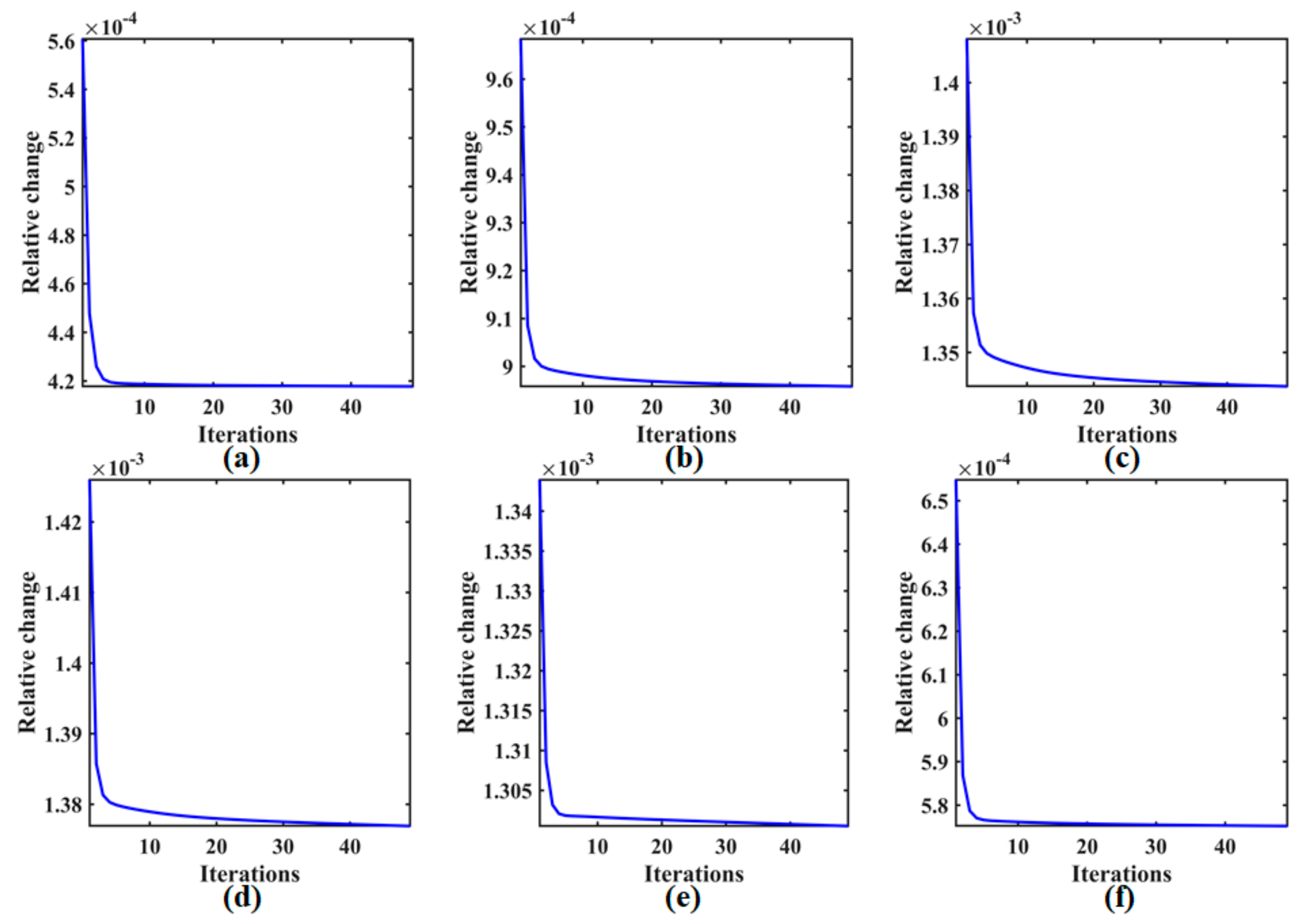

4.3. Convergence Analysis

4.4. Running Time

5. Conclusions and Outlooks

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Case | Index | BM4D | LRMR | LRTDTV | WLRTR | FGLR | NGMeet | NLSSR | NFF | FGSLR | SDeCNN | STCR | DFTCR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Case 1: Gaussian Noise | | | | | | | | | | | | | |
| | PSNR | 31.7828 | 33.1616 | 32.3958 | 32.8736 | 29.9907 | 35.9711 | 35.1194 | 34.3819 | 34.9102 | 28.4516 | 36.9208 | 37.3647 |
| | SSIM | 0.8905 | 0.9235 | 0.9100 | 0.9199 | 0.8828 | 0.9669 | 0.9598 | 0.9436 | 0.9158 | 0.7719 | 0.9624 | 0.9661 |
| | MSAM | 0.1051 | 0.0996 | 0.0828 | 0.0735 | 0.1533 | 0.0606 | 0.0630 | 0.0811 | 0.2037 | 0.1232 | 0.0637 | 0.0600 |
| | PSNR | 28.7382 | 30.1235 | 31.0538 | 30.1217 | 27.9487 | 32.0521 | 32.6969 | 27.7750 | 31.2225 | 28.6010 | 33.0368 | 33.9688 |
| | SSIM | 0.7907 | 0.8648 | 0.8794 | 0.8561 | 0.8216 | 0.9184 | 0.9222 | 0.7533 | 0.8525 | 0.7827 | 0.9082 | 0.9264 |
| | MSAM | 0.1514 | 0.1415 | 0.1118 | 0.1002 | 0.1844 | 0.0888 | 0.1155 | 0.2539 | 0.4114 | 0.1245 | 0.1049 | 0.0915 |
| | PSNR | 26.7505 | 28.0738 | 29.5993 | 28.3815 | 26.5602 | 29.4187 | 27.5070 | 27.750 | 29.0902 | 28.6949 | 30.4437 | 31.6006 |
| | SSIM | 0.6974 | 0.8052 | 0.8374 | 0.7956 | 0.7717 | 0.8522 | 0.7785 | 0.7533 | 0.8188 | 0.7962 | 0.8467 | 0.8790 |
| | MSAM | 0.1963 | 0.1804 | 0.1525 | 0.1243 | 0.2115 | 0.1172 | 0.2852 | 0.2539 | 0.5650 | 0.1292 | 0.1478 | 0.1249 |
| Case 2: Gaussian Noise + Impulse Noise | | | | | | | | | | | | | |
| | PSNR | 29.1526 | 29.8571 | 29.7821 | 30.0378 | 27.9627 | 32.4915 | 29.7650 | 30.8235 | 31.3202 | 26.3835 | 32.9093 | 33.2625 |
| | SSIM | 0.8569 | 0.8925 | 0.8821 | 0.8897 | 0.8583 | 0.9388 | 0.8943 | 0.9126 | 0.8878 | 0.7432 | 0.9305 | 0.9344 |
| | MSAM | 0.2348 | 0.2292 | 0.2283 | 0.2265 | 0.2486 | 0.2220 | 0.2565 | 0.2146 | 0.2745 | 0.2652 | 0.2181 | 0.2205 |
| Case 3: Gaussian Noise + Impulse Noise + Deadlines | | | | | | | | | | | | | |
| | PSNR | 24.6478 | 28.0118 | 28.3779 | 24.9081 | 26.4307 | 29.7875 | 27.9576 | 25.1743 | 29.0582 | 26.5918 | 28.7792 | 32.5335 |
| | SSIM | 0.7126 | 0.8500 | 0.8550 | 0.7386 | 0.8026 | 0.9147 | 0.8379 | 0.7784 | 0.8618 | 0.7251 | 0.8363 | 0.9441 |
| | MSAM | 0.2539 | 0.1574 | 0.1276 | 0.1987 | 0.1990 | 0.0979 | 0.1429 | 0.2072 | 0.1423 | 0.1381 | 0.2591 | 0.0728 |
| Case 4: Gaussian Noise + Impulse Noise + Stripes | | | | | | | | | | | | | |
| | PSNR | 28.6042 | 29.6547 | 29.5840 | 29.7255 | 27.8851 | 32.3462 | 29.6949 | 30.6570 | 31.2862 | 26.4745 | 32.5697 | 32.8969 |
| | SSIM | 0.8420 | 0.8897 | 0.8768 | 0.8851 | 0.8570 | 0.9368 | 0.8922 | 0.9111 | 0.8884 | 0.7433 | 0.9267 | 0.9311 |
| | MSAM | 0.2381 | 0.2303 | 0.2292 | 0.2272 | 0.2493 | 0.2222 | 0.2570 | 0.2149 | 0.2725 | 0.2447 | 0.2187 | 0.2210 |
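The PSNR and MSAM indices reported in the table can be computed along the following lines. This is a hedged sketch of common definitions (band-averaged PSNR and pixel-averaged spectral angle in radians), not necessarily the exact formulas used in the experiments. The function names, the (height, width, bands) layout, the peak value of 1.0, and the small constant `eps` are assumptions made for illustration.

```python
import numpy as np


def mean_psnr(clean, denoised, peak=1.0):
    """Average the per-band PSNR (in dB) over all spectral bands.

    Assumes arrays of shape (height, width, bands) scaled to [0, peak].
    A band with zero error yields an infinite PSNR.
    """
    mse_per_band = np.mean((clean - denoised) ** 2, axis=(0, 1))
    return float(np.mean(10.0 * np.log10(peak ** 2 / mse_per_band)))


def mean_sam(clean, denoised, eps=1e-12):
    """Average spectral angle (radians) between reference and estimated spectra."""
    a = clean.reshape(-1, clean.shape[-1])        # one spectrum per row
    b = denoised.reshape(-1, denoised.shape[-1])
    cos = np.sum(a * b, axis=1) / (
        np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1) + eps
    )
    # Clip to guard against round-off pushing the cosine outside [-1, 1].
    return float(np.mean(np.arccos(np.clip(cos, -1.0, 1.0))))
```

Higher PSNR and lower spectral angle indicate better restoration, which matches how the table entries are compared across methods. SSIM is omitted here because it is usually taken from an existing library implementation rather than written by hand.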

| Cases | Index | STCR | DFTCR |
|---|---|---|---|
| Case 1: Gaussian Noise | | | |
| | PSNR | 36.9208 | 37.3647 |
| | SSIM | 0.9624 | 0.9661 |
| | MSAM | 0.0637 | 0.0600 |
| | PSNR | 33.0368 | 33.9688 |
| | SSIM | 0.9082 | 0.9264 |
| | MSAM | 0.1049 | 0.0915 |
| | PSNR | 30.4437 | 31.6006 |
| | SSIM | 0.8467 | 0.8790 |
| | MSAM | 0.1478 | 0.1249 |
| Case 2: Gaussian Noise + Impulse Noise | | | |
| | PSNR | 32.9093 | 33.2625 |
| | SSIM | 0.9305 | 0.9344 |
| | MSAM | 0.2181 | 0.2205 |
| Case 3: Gaussian Noise + Impulse Noise + Deadlines | | | |
| | PSNR | 28.7792 | 32.5335 |
| | SSIM | 0.8363 | 0.9441 |
| | MSAM | 0.2591 | 0.0728 |
| Case 4: Gaussian Noise + Impulse Noise + Stripes | | | |
| | PSNR | 32.5697 | 32.8969 |
| | SSIM | 0.9267 | 0.9311 |
| | MSAM | 0.2187 | 0.2210 |

| Dataset | BM4D | LRMR | LRTDTV | WLRTR | FGLR | NGMeet |
|---|---|---|---|---|---|---|
| GF-5 | 685.30 | 54.62 | 94.37 | 325.96 | 4.98 | 135.42 |
| WDC | 1008.64 | 61.63 | 117.21 | 382.81 | 6.93 | 135.91 |

| Dataset | FGSLR | STCR | NLSSR | SDeCNN | NFF | DFTCR |
|---|---|---|---|---|---|---|
| GF-5 | 258.00 | 78.03 | 50.33 | 5.37 | 706.30 | 86.51 |
| WDC | 296.92 | 77.48 | 58.11 | 1.90 | 1104.75 | 91.18 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, J.; Pan, C.; Ding, H.; Zhang, Z. Double-Factor Tensor Cascaded-Rank Decomposition for Hyperspectral Image Denoising. Remote Sens. 2024, 16, 109. https://doi.org/10.3390/rs16010109
