Abstract

The specific characteristics of remote sensing images, such as large directional variations, large target sizes, and dense target distributions, make target detection a challenging task. To improve detection performance while preserving real-time detection, this paper proposes a lightweight object detection algorithm based on an attention mechanism and YOLOv5s. First, a depthwise-decoupled head (DD-head) module and a spatial pyramid pooling cross-stage partial GSConv (SPPCSPG) module were constructed to replace the coupled head and the spatial pyramid pooling-fast (SPPF) module of YOLOv5s. Second, a shuffle attention (SA) mechanism was introduced in the head structure to enhance spatial attention and reconstruct channel attention. Third, a content-aware reassembly of features (CARAFE) module was introduced in the up-sampling operation to reassemble feature points with similar semantic information. Finally, a GSConv module was introduced in the neck structure to maintain detection accuracy while reducing the number of parameters. Experimental results on the remote sensing datasets RSOD and DIOR showed improvements of 1.4% and 1.2% in mean average precision over the original YOLOv5s algorithm. The algorithm was also tested on the conventional object detection datasets PASCAL VOC and MS COCO, where it showed improvements of 1.4% and 3.1% in mean average precision. The experiments therefore show that the constructed algorithm outperforms the original network on both remote sensing images and conventional object detection images.

1. Introduction

In the era of big data, due to the development and progress of machine learning technology represented by deep learning, artificial intelligence can better cope with complex environments and tasks through continuous learning and adaptation. By processing and analyzing large-scale and high-dimensional data [1], it can reveal the patterns and regularities behind the data, supporting more accurate predictions and decisions [2,3,4]. By exploring potential patterns in data, new knowledge and innovation can be discovered, supporting the development of various sciences and technologies. Therefore, in recent years, artificial intelligence has been gradually applied to speech recognition, natural language processing, computer vision, and other fields. Within artificial intelligence, computer vision technology has found widespread application in diverse areas such as intelligent security [5], autonomous driving [6], remote sensing monitoring [7,8], medicine and pharmaceuticals [9,10], agriculture [11], intelligent transportation [12], and information security [13]. Computer vision tasks can be categorized into image classification [14], object detection [15], and image segmentation [16]. The core task of object detection is to determine the categories and positions of multiple objects in the image and to give corresponding detection boxes and object categories for each object. Remote sensing images contain a wealth of detailed information, which can intuitively reflect the shape, color, and texture of ground targets. Remote sensing target detection, as a fundamental technique, is widely applied in various fields, such as urban planning [17], land use [18], traffic guidance [19], and military surveillance [20]. With the development of ground observation technology, the scale of high-resolution remote sensing image data has been continuously increasing. 
High-resolution remote sensing images provide higher image quality and more abundant, detailed information, which presents greater opportunities for the development of target detection in the field of remote sensing. Target detection can be divided into two main categories: traditional object detection algorithms and deep learning-based object detection algorithms. Traditional object detection algorithms mainly rely on traditional feature extractors [21] and use sliding windows to generate object candidate regions. Representative algorithms include the Viola–Jones detector (VJ-Det) [22], the Histogram of Oriented Gradient (HOG) detector [23], and the deformable part model detector (DPM) [24]. With the development of deep learning, convolutional neural networks (CNNs) have gradually been applied to object detection tasks. Based on deep learning, object detection technology can use multi-structured network models and powerful training algorithms to adaptively learn high-level semantic information from images. Image features are extracted and fed into a classification network to complete the tasks of object classification and localization, thereby effectively improving the accuracy and efficiency of object detection tasks.

According to the detection principle, target detection algorithms based on deep learning can be divided into two categories: (1) Two-stage target detection algorithms based on candidate regions. Representative algorithms include R-CNN [25], Fast R-CNN [26], and Faster R-CNN [27]. These algorithms first generate candidate boxes [28,29,30], then encode the extracted feature vectors using deep convolutional neural networks [31,32,33], and finally regress the class and location of the target object within each candidate box [34]. The two-stage operation achieves high detection accuracy at the expense of slower speed, which prevents real-time detection. (2) One-stage object detection algorithms based on direct regression, represented by SSD [35] and the YOLO series [36,37,38]. These algorithms abandon the stage of generating candidate bounding boxes and directly output the position and category of the target through regression, which improves the detection speed.

The YOLO series is a classical family of one-stage object detection algorithms. Redmon et al. [39] proposed the YOLO algorithm, which represented a significant breakthrough in real-time object detection. However, the training of each component in YOLO needs to be conducted separately, leading to a slow inference speed. A strategy of jointly training the components was proposed, which not only improved the inference speed but also reduced the complexity of network training while enhancing the detection performance of YOLO. The YOLOv3 algorithm [37] utilizes Darknet-53 as the backbone network and fuses up-sampled feature maps with shallow feature maps to retain the semantic information of small objects and enhance the detection performance of such objects. The YOLOv4 algorithm [38] adopts CSPDarknet53 [40] as the backbone network and introduces Spatial Pyramid Pooling (SPP) [41] to optimize the receptive field of deep feature maps, thereby further improving detection accuracy. Ultralytics released the YOLOv5 algorithm, which incorporates CSPNet as its backbone network. The neck component employs a feature pyramid network (FPN) [42] to enable top-down semantic information transmission, leveraging both low-level features with high resolution and high-level features with semantic information. In addition, the algorithm utilizes a path aggregation network (PAN) [43] for bottom-up localization transmission, which facilitates the propagation of low-level information to the top level. The YOLOv5 family comprises five models (YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x) that differ in network depth and width. The parameter count and performance of the models increase in that order, while the detection speed gradually decreases. The YOLOv5n/s models have a small backbone feature extraction network and are lightweight, but their target bounding box regression is not sufficiently accurate for practical applications. 
The YOLOv5m/l/x models have better detection and recognition performance with increasing network depth and width, but they struggle to meet real-time detection requirements on hardware-limited embedded devices. To address this problem, this paper proposes a lightweight object detection algorithm based on the YOLOv5s network, which combines the shuffle attention (SA) module, the depthwise-decoupled head (DD-head) module, the content-aware reassembly of features (CARAFE) module, the GSConv module, and the spatial pyramid pooling cross-stage partial GSConv (SPPCSPG) module to improve the detection accuracy of the model while meeting real-time requirements. The main contributions and innovations of the constructed model can be summarized as follows:

- (1) In this paper, depthwise convolution is used to replace the standard convolution in the decoupled head module to construct a new detection head, the DD-head, which mitigates the negative impact of the conflict between the classification and regression tasks while reducing the parameter volume of the decoupled head.

- (2) Based on the SPPCSPC module, this paper utilizes the design principle of the GS bottleneck and replaces the CBS module in the SPPCSPC module with the GSConv module to design a lightweight SPPCSPG module, which is introduced into the backbone structure to optimize the YOLOv5s network model.

- (3) The effect of embedding the SA module in the network backbone, neck, and head regions is studied, and the SA module is ultimately embedded in the head region to enhance the spatial attention and channel attention of the feature map, thereby improving the accuracy of multi-scale object detection.

- (4) The CARAFE module is used to replace the nearest neighbor interpolation up-sampling module; it reassembles feature points with similar semantic information in a content-aware manner and aggregates features over a larger receptive field to perform the up-sampling operation.

- (5) In this study, the Conv module in the variety of view-GS cross-stage partial (VoV-GSCSP) module is replaced by the GSConv module to construct a new VoV-GSCSP module that further reduces the model’s parameters. The GSConv module and the improved VoV-GSCSP module are embedded in the neck structure to maintain the model’s detection accuracy while reducing the parameter volume.

Experimental results show that the proposed algorithm outperforms the original YOLOv5s algorithm in multi-scale object detection performance while meeting real-time requirements.

2. Related Work

2.1. Object Detection Algorithms for Remote Sensing Images

Traditional remote sensing image object detection algorithms are based on handcrafted feature design. The detection process typically includes candidate region extraction [44], feature extraction [45], classifier design [46], and post-processing. First, potential target regions are extracted from the input image using candidate region extraction. For each region, features are extracted; then, the extracted features are classified. Finally, post-processing, such as filtering and merging, is applied to all candidate boxes to obtain the final detection results. Candidate region extraction requires the setting of a large number of sliding windows, which results in a high time complexity and a significant amount of redundant computation. Handcrafted features are mainly extracted based on target visual information (such as color [47], texture [48], edges [49], context [50], etc.), giving them strong interpretability. However, handcrafted features have weak feature expression capability, poor robustness, limited adaptability, and are difficult to apply in complex and changing environments.

With the development of deep learning, the deep features extracted by neural networks have stronger semantic representation and discriminative ability. However, due to the characteristics of remote sensing images, such as large image size, significant directional changes, large-scale small targets, dense target distribution, significant scale variations, target blurring, and complex backgrounds, existing detection algorithms cannot achieve satisfactory performance on remote sensing images. To address the problem of large image size, R2-CNN [51] was designed using a lightweight backbone network, Tiny-Net, for feature extraction and used the approach of judging first and then locating to filter out sub-image blocks without targets, thereby reducing the computational burden of subsequent detection and recognition. For the problem of significant directional changes, the approaches of data augmentation [52] or adding rotation-invariant sub-modules [53] are typically used to solve this problem. Cheng et al. [54] explicitly increased rotation-invariant regularizers on CNN features by optimizing a new objective function to force the feature representation of training samples before and after rotation to be closely mapped to achieve rotational invariance. For the small target problem, Yang et al. [55] increased the number and scale of shallow feature pyramids to improve the detection accuracy of small targets and used a dense connection structure to enhance the feature expression ability of small targets. Zhang et al. [56] improved small target detection by up-sampling and enlarging the feature map size of each candidate region in the first stage of the two-stage Faster R-CNN. To address the problem of dense target distribution, DAPNet [57] used an adaptive region generation strategy based on the density of targets in the image. For the problem of significant scale variations, Guo et al. [58] and Zhang et al. 
[59] directly used a multi-scale candidate region network and a multi-scale detector to detect targets of different scales. For the problem of target blurring, Li et al. [60] proposed a dual-channel feature fusion network that can learn local and contextual attributes along two independent paths and fuse features to enhance discriminative power. Finally, for the problem of complex backgrounds, Li et al. [61] extracted multi-scale features and used an attention mechanism to enhance each feature map individually, thereby eliminating the influence of background noise.

2.2. Attention Mechanism

To extract effective information from massive and complex data, researchers have proposed attention mechanisms to obtain the importance differences of each feature map. In the visual system, attention mechanisms are considered as dynamic selection processes that adaptively weigh the features based on their importance differences in the input [62]. Currently, attention mechanisms have achieved good performance in tasks such as image classification [63], object detection [64], semantic segmentation [65], medical image processing [66], super-resolution [67], and multimodal tasks [68]. Attention mechanisms can be classified into the following six categories. (1) Channel attention: In deep neural networks, different channels in various feature maps typically represent different objects. Channel attention adaptively adjusts the weight of each channel to increase the importance of focused objects. Hu et al. [69] were the first to propose the concept of channel attention and to introduce SENet. The SENet module collects global information [70] using squeeze and excitation modules, captures channel relationships, and improves the representation power of the network. (2) Spatial attention: Spatial attention is an adaptive mechanism for selecting spatial regions. The representative algorithms of spatial attention include RAM [71], based on the RNN method; STN, which uses subnetworks to explicitly predict relevant regions [72]; GENet [73], which uses subnetworks implicitly to predict soft masks for selecting important regions; and GCNet [74], which uses a self-attention mechanism [75]. (3) Temporal attention: Temporal attention can be regarded as a dynamic temporal selection mechanism for determining when to focus attention, typically achieved by capturing short-term and long-term cross-frame feature dependencies [76,77]. Li et al. [76] proposed a global–local temporal representation (GLTR) to utilize multi-scale temporal information in video sequences. 
GLTR consists of a dilated temporal pyramid (DTP) for local temporal context learning and a temporal self-attention module for capturing global temporal interaction. (4) Branch attention: Branch attention can be regarded as a dynamic branch selection mechanism; it is often used in conjunction with multi-branch structures. Representative networks include highway networks [78], Selective Kernel (SK) convolution [79], the CondConv operator [80], and dynamic convolution [81]. (5) Channel and spatial attention: Channel and spatial attention combines the advantages of channel and spatial attention and can adaptively select important objects and regions [82]. Based on the ResNet network [83], the Residual Attention Network [84] pioneered research on channel and spatial attention by combining an attention mechanism with residual connections, emphasizing the importance of informative features in the spatial and channel dimensions. Woo et al. [63] proposed the convolutional block attention module (CBAM), which applies channel and spatial attention in sequence; it decouples channel and spatial attention to improve computational efficiency. (6) Spatial and temporal attention: Combining the advantages of spatial and temporal attention, this category can adaptively select important regions and key frames. Song et al. [85] proposed a joint spatial and temporal attention network based on LSTM [86], which enables the adaptive discovery of discriminative features and key frames.

2.3. Multi-Scale Feature Fusion

In object detection tasks, feature maps at different levels represent varying information about the detection targets. High-level feature maps encode semantic information about the objects which can be used for classification, while low-level feature maps encode positional information about the objects which can be used for regression [87]. The YOLO algorithms fuse multiple features obtained from neural networks to extract more information about small targets to improve detection accuracy. The Feature Pyramid Network (FPN) [42] enhances semantic feature representation through a top-down pathway and fuses features with more precise location information. However, FPN fails to propagate accurate localization information from lower-level feature maps to higher-level semantic feature maps, and the feature transfer between non-adjacent layers is limited. In addition, for masks generated for large targets, the redundant and lengthy spatial transfer path hinders the effective integration of high-level and low-level information, leading to information loss. Liu et al. [43] proposed the PANet network, which incorporates a bottom-up pathway enhancement structure and integrates shallow network features with FPN features. To improve upon the suboptimal fusion performance of manually designed feature pyramids, Ghiasi et al. [88] introduced the neural architecture search-feature pyramid network (NAS-FPN), which utilizes neural network architecture search methods to automatically design the feature network. The bidirectional feature pyramid network (BiFPN) [89] improves upon the PANet by introducing contextual [90] and weight information to balance features of different scales, resulting in a larger receptive field and richer semantic information. To address the issue of inconsistent feature scales in pyramid-based methods, Liu et al. 
[91] proposed a data-driven pyramid feature fusion strategy called adaptive spatial feature fusion (ASFF), which enables the network to learn how to directly filter out features from other levels in space to preserve useful information for combination. Subsequent research has shown the effectiveness of BiFPN [92,93,94] and ASPP [95,96,97] in improving the detection performance of YOLO algorithms.

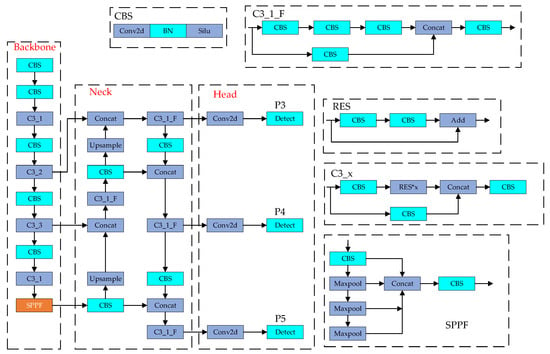

3. YOLOv5 Algorithm

Among the existing object detection algorithms, the YOLOv5 algorithm has gained wide popularity in various applications due to its fast detection speed, high accuracy, and good flexibility. Based on differences in network depth and width, the YOLOv5 family comprises five models: YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. Across this sequence, the number of parameters and the level of performance increase progressively, with a corresponding decrease in detection speed. Considering both detection performance and speed, this research selected the YOLOv5s model, which comprises four main components: input, backbone, neck, and head. Figure 1 illustrates the network structure.

Figure 1. YOLOv5 network structure.

3.1. Input

YOLOv5 performs adaptive image scaling and Mosaic data augmentation, as well as optimized anchor box calculations at the input end for image data [38].

Mosaic is a data augmentation method based on CutMix [98]. By combining four training images into one image and scaling the resulting image to the standard size before training, Mosaic helps the model detect objects outside their normal context. Because each composite image contains four source images, every batch effectively covers four times as many images, which reduces the need for a large mini-batch when estimating the batch normalization mean and variance. Adaptive image scaling is only performed during the model inference stage. First, the scaling ratio is calculated based on the original image and the input network image size. Then, the scaled image size is determined by multiplying the original image size by the scaling ratio. Finally, the image is scaled to fit the input size of the network. In the YOLOv2–4 algorithms [36,37,38], prior box dimensions need to be extracted using K-means clustering [99]. To train on different datasets, a separate program is required to obtain the initial anchor boxes to meet specific size requirements. YOLOv5 embeds adaptive anchor box calculation into its code, which automatically calculates the optimal anchor boxes during each training session based on the dataset.
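The three adaptive scaling steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the YOLOv5 source: the function name `letterbox_shape`, the 640-pixel input size, and the rule of padding the shorter side only up to a multiple of a 32-pixel stride are assumptions made for the example.

```python
def letterbox_shape(orig_w, orig_h, target=640, stride=32):
    """Return (ratio, scaled size, padding) for adaptive image scaling.

    All names and defaults here are illustrative assumptions.
    """
    # Step 1: one scaling ratio for both axes, chosen so the image fits the target.
    ratio = min(target / orig_w, target / orig_h)
    # Step 2: scaled size = original size x ratio.
    new_w, new_h = round(orig_w * ratio), round(orig_h * ratio)
    # Step 3: pad the leftover only up to the next multiple of the stride
    # (assumed rule) instead of padding all the way to a square.
    pad_w = (target - new_w) % stride
    pad_h = (target - new_h) % stride
    return ratio, (new_w, new_h), (pad_w, pad_h)


ratio, scaled, pad = letterbox_shape(1280, 720)
print(ratio, scaled, pad)  # 0.5 (640, 360) (0, 24)
```

Under these assumptions, a 1280 × 720 image is halved to 640 × 360 and padded by 24 pixels in height, giving a 640 × 384 network input instead of a full 640 × 640 square.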

3.2. Backbone

The backbone network primarily extracts feature information from input images. The C3 module and SPPF module are mainly used in the YOLOv5 network. The C3 module reduces model computation and improves inference speed, while the SPPF module extracts multi-scale information from feature maps, which is beneficial for improving model accuracy.

The C3 module consists of three standard convolutional layers and multiple bottleneck modules, the number of which is determined by the parameters specified in the configuration file. The C3 module is the main module for learning residual features. Its structure consists of two branches: one branch uses the specified multiple bottleneck modules stacked and standard convolutional layers, while the other branch only passes through standard convolutional layers. Finally, the two branches are concatenated and passed through standard convolutional layers to output the final feature map.

SPP [41] can fuse feature maps of different scales and sizes by performing fixed-size pooling on feature maps of any scale to obtain a fixed number of features. Then, the pooled features are concatenated to obtain a fixed-length feature map. The principle of the SPPF module is similar to that of SPP, with a slightly different structure. In YOLOv5, SPP applies pooling kernels of three sizes (5 × 5, 9 × 9, and 13 × 13) in parallel and fuses the results with the input feature, which further improves the scale invariance for input images with different scales and aspect ratios. SPPF, in contrast, uses only a 5 × 5 pooling kernel. After the input feature map passes through a standard convolutional layer, it goes through three stacked 5 × 5 pooling layers. The feature produced by each pooling layer is fused with the feature output by the standard convolutional layer to obtain the final feature map. Compared with the SPP module, the computational complexity of the SPPF module is greatly reduced, and the model speed is improved.
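One way to see why SPPF can be cheaper without changing the pooled features is that stacking stride-1 5 × 5 max pooling layers reproduces the outputs of larger kernels. The sketch below checks this on a toy single-channel feature map in plain Python; it omits the convolutional layers and the concatenation, and the helper `max_pool_same` is a hypothetical name introduced for the example.

```python
import random


def max_pool_same(x, k):
    """Stride-1 max pooling over a 2D list; the window is clipped at the
    border, which is equivalent to padding with negative infinity."""
    r = k // 2
    h, w = len(x), len(x[0])
    return [
        [
            max(
                x[a][b]
                for a in range(max(0, i - r), min(h, i + r + 1))
                for b in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


random.seed(0)
fmap = [[random.randint(0, 99) for _ in range(12)] for _ in range(12)]

# SPP-style: three parallel pools with kernel sizes 5, 9, and 13.
spp = [max_pool_same(fmap, k) for k in (5, 9, 13)]

# SPPF-style: a single 5 x 5 pool applied three times in sequence.
p1 = max_pool_same(fmap, 5)
p2 = max_pool_same(p1, 5)
p3 = max_pool_same(p2, 5)

print(spp == [p1, p2, p3])  # True
```

Two stacked 5 × 5 pools cover a 9 × 9 neighborhood and three cover 13 × 13, so the sequential design reuses intermediate results instead of recomputing large windows.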

3.3. Neck

The neck network of YOLOv5 consists of a feature pyramid network (FPN) [42] and a path aggregation network (PAN) [43]. FPN propagates semantic information top-down, combining the semantic information of high-level features with the high-resolution location information of low-level features. PAN, in turn, uses a bottom-up path to propagate low-level information to the top level for better localization. The three sizes of feature maps output by the backbone network are aggregated by the neck network to enhance semantic information and localization features, which helps to improve the ability to detect objects of different sizes.

3.4. Head

As the detection component of the object detection model, the head predicts objects of different sizes by processing multi-scale feature maps. The anchor box mechanism at the head extracts prior box scales through clustering and constrains the predicted box positions.

The model outputs three scale tensors, with the first scale having an eight-fold down-sampling compared to the input image, resulting in a smaller receptive field that preserves high-resolution features from the bottom layers and is beneficial for detecting small objects. The second scale has a 16-fold down-sampling, resulting in a moderately sized receptive field that is beneficial for detecting medium-sized objects. The third scale has a 32-fold down-sampling, resulting in a larger receptive field that is beneficial for detecting large objects.
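As a small worked example of the three output scales, the grid side length at each scale is the input size divided by the down-sampling factor. The 640-pixel input size and the function name `head_grid_sizes` are assumptions for illustration.

```python
def head_grid_sizes(input_size=640, strides=(8, 16, 32)):
    """Map each down-sampling factor to the side length of its output grid."""
    return {s: input_size // s for s in strides}


print(head_grid_sizes())  # {8: 80, 16: 40, 32: 20}
```

With a 640 × 640 input, small objects are predicted on the 80 × 80 grid, medium-sized objects on the 40 × 40 grid, and large objects on the 20 × 20 grid.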

3.5. Loss Function

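The loss function ($Loss$) of YOLOv5 consists of three components, as defined in Equation (1), which covers the loss terms required in object detection: the confidence loss, the class prediction loss, and the bounding box prediction loss [100].

$$Loss = L_{conf} + L_{cls} + L_{loc} \tag{1}$$

where $L_{conf}$ represents the target confidence loss of the model, $L_{cls}$ represents the target class prediction loss of the model, and $L_{loc}$ represents the bounding box loss of the model.

$$L_{conf} = -\lambda_{obj}\sum_{i=1}^{S^{2}}\sum_{j=1}^{B} I_{ij}^{obj}\left[\hat{C}_{i}^{j}\log C_{i}^{j} + \left(1-\hat{C}_{i}^{j}\right)\log\left(1-C_{i}^{j}\right)\right] - \lambda_{noobj}\sum_{i=1}^{S^{2}}\sum_{j=1}^{B} I_{ij}^{noobj}\left[\hat{C}_{i}^{j}\log C_{i}^{j} + \left(1-\hat{C}_{i}^{j}\right)\log\left(1-C_{i}^{j}\right)\right] \tag{2}$$

$$L_{cls} = -\sum_{i=1}^{S^{2}}\sum_{j=1}^{B} I_{ij}^{obj}\sum_{c \in classes}\left[\hat{P}_{i}^{j}(c)\log P_{i}^{j}(c) + \left(1-\hat{P}_{i}^{j}(c)\right)\log\left(1-P_{i}^{j}(c)\right)\right] \tag{3}$$

where $S$ represents the grid size, $B$ represents the number of predicted boxes per grid, and $I_{ij}^{obj}$ represents whether the $j$-th predicted box in the $i$-th grid contains an object. If the overlap between the predicted box and the ground truth box exceeds the threshold, $I_{ij}^{obj}$ is set to 1, indicating the presence of an object to be predicted, and it is included in the calculation of the loss function; otherwise, $I_{ij}^{obj}$ is set to 0. $I_{ij}^{noobj}$ represents whether the $j$-th predicted box in the $i$-th grid contains background. If the overlap between the predicted box and the ground truth box is less than the threshold, $I_{ij}^{noobj}$ is set to 1; otherwise, it is set to 0. $\lambda_{obj}$ and $\lambda_{noobj}$ are balance coefficients used to adjust the balance between the confidence loss in the presence and absence of objects. $C_{i}^{j}$ represents the confidence of the predicted box, $\hat{C}_{i}^{j}$ represents the confidence of the ground truth box, $P_{i}^{j}(c)$ represents the predicted probability of class $c$ when the network detects an object, and $\hat{P}_{i}^{j}(c)$ represents the true probability of class $c$ when the network detects an object.

The YOLOv5 bounding box prediction loss function utilizes the CIOU loss function ($L_{CIoU}$) [101], and its definition is as follows:

$$L_{loc} = L_{CIoU} = 1 - CIoU \tag{4}$$

$$CIoU = IoU - \frac{\rho^{2}\left(b, b^{gt}\right)}{c^{2}} - \alpha v \tag{5}$$

where $IoU$ is the intersection over union of the predicted and ground-truth boxes; $\rho$ represents the Euclidean distance between the centers of the predicted box and the ground-truth box; $c$ represents the diagonal distance of the minimum enclosing region that can simultaneously contain the predicted and ground-truth boxes; $b$, $b^{gt}$, and $\alpha$ respectively denote the predicted box, the ground-truth box, and the weight coefficient; $v$ is used to measure the similarity of aspect ratios; and the formulas for $\alpha$ and $v$ are given by Equations (6) and (7), respectively:

$$\alpha = \frac{v}{\left(1 - IoU\right) + v} \tag{6}$$

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2} \tag{7}$$

where $w^{gt}$ and $h^{gt}$ respectively represent the width and height of the ground-truth box; and $w$ and $h$ respectively represent the width and height of the predicted box.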
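The CIoU bounding box loss described above can be sketched in plain Python for a single pair of boxes. This is a minimal illustration under stated assumptions, not the YOLOv5 implementation: the `(cx, cy, w, h)` box format, the function name `ciou_loss`, and the small `eps` stabilizer are choices made for the example.

```python
import math


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss for two boxes given as (cx, cy, w, h); the format is an assumption."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # Intersection over union, computed from the box edges.
    inter_w = max(0.0, min(px + pw / 2, gx + gw / 2) - max(px - pw / 2, gx - gw / 2))
    inter_h = max(0.0, min(py + ph / 2, gy + gh / 2) - max(py - ph / 2, gy - gh / 2))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Squared distance between the two box centers.
    rho2 = (px - gx) ** 2 + (py - gy) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(px + pw / 2, gx + gw / 2) - min(px - pw / 2, gx - gw / 2)
    enc_h = max(py + ph / 2, gy + gh / 2) - min(py - ph / 2, gy - gh / 2)
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio consistency term v and its weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


same = ciou_loss((5, 5, 4, 2), (5, 5, 4, 2))
apart = ciou_loss((0, 0, 2, 2), (10, 0, 2, 2))
print(same, apart)
```

For identical boxes the loss is essentially zero, while for disjoint boxes the center-distance term pushes it above 1, so the loss still provides a useful signal when the boxes do not overlap.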

4. Improved YOLOv5s Algorithm

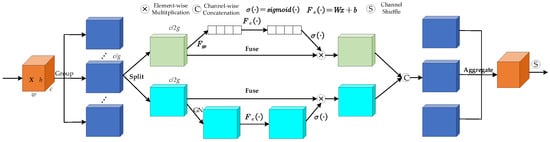

4.1. Shuffle Attention Module

As the hierarchical depth of the network increases, the information extracted from the head of the YOLOv5s network becomes increasingly abstract which will lead to missed or false detection of small objects in the image. In this study, an attention mechanism was incorporated into the YOLOv5s network to address this issue.

The attention mechanism can be mainly divided into spatial attention and channel attention, which are used to capture pixel relationships in space and dependencies between channels, respectively. The combination of these two attention mechanisms, such as in CBAM, can achieve better results but inevitably increases the computational complexity of the model. The SGE attention mechanism module [102] is a classic attention module. Its core idea is to group feature maps, with each group of feature maps representing a semantic feature. By utilizing the similarity between local and global features, the attention mask is generated to guide the spatial distribution of enhanced semantic features. Based on the design concept of the SGE attention mechanism, the shuffle attention (SA) mechanism [103] introduces the channel shuffle operation, which uses both spatial and channel attention mechanisms in parallel, efficiently combining the two. As shown in Figure 2, the SA module first groups the c × h × w feature map obtained by convolution, and the grouped feature map serves as the SA unit. Each SA unit is divided into two parts, with the upper part using the channel attention mechanism and the lower part using the spatial attention mechanism. The processed two parts are stacked by channel numbers to achieve information fusion within the SA unit. Finally, the channel shuffle operation is applied to all SA units to realize information communication between different sub-features and obtain the final output feature map.
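The channel shuffle step at the end of the SA module can be illustrated on a list of channel labels. The sketch uses the common reshape, transpose, and flatten formulation of channel shuffle, which is assumed here rather than taken from the paper; the function name `channel_shuffle` and the labels are hypothetical.

```python
def channel_shuffle(channels, groups):
    """Interleave channels so that neighboring outputs come from different groups."""
    if len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by the group count")
    per_group = len(channels) // groups
    # Equivalent to reshaping to (groups, per_group), transposing, and flattening.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


units = ["a0", "a1", "a2", "b0", "b1", "b2"]  # two SA units with three channels each
print(channel_shuffle(units, groups=2))  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

After the shuffle, channels that originated in different SA units sit next to each other, which is what lets later layers exchange information between sub-features.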

Figure 2. Shuffle attention network structure.

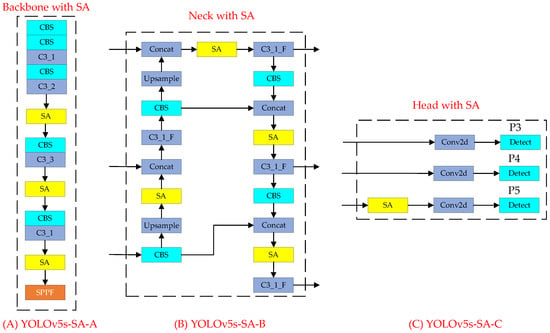

The SA module is plug-and-play and has been applied in some networks. However, there is currently no established theoretical basis for determining where in the YOLOv5s network it is best integrated. The YOLOv5s network can be divided into four modules: input, backbone, neck, and head. The input module only preprocesses images and performs no feature extraction. Therefore, in this study, three fusion network models that incorporate the SA module into the backbone, neck, and head modules of YOLOv5s were designed and named YOLOv5s-SA-A, YOLOv5s-SA-B, and YOLOv5s-SA-C, respectively.

The SA module was embedded into the backbone structure to form the YOLOv5s-SA-A network. The backbone extracts the feature information from images through a relatively deep convolutional network. As the network layers deepen, the resolution of the feature map decreases. The SA module can be used for spatial attention enhancement and channel attention reconstruction of feature maps at different locations. The C3 module aggregates features at different levels. In this study, the SA module was placed after the C3 module. The network structure is shown in Figure 3A. The SA module was embedded into the neck structure to form the YOLOv5s-SA-B network. The FPN and PAN structures in the neck module can transmit semantic information from top to bottom and positional information from bottom to top, thereby enhancing the aggregation of semantic information and positioning features. This module uses four Concat operations to fuse deep and shallow information. Therefore, the SA module was placed after the Concat operation to enhance the spatial attention and channel reconstruction of the fused feature map. The network structure is shown in Figure 3B. The SA module was embedded into the head structure to form the YOLOv5s-SA-C network. The YOLOv5s network predicts targets using three feature maps of different scales. Large targets are predicted on small feature maps, while small targets are predicted on large feature maps. In this study, the SA module was embedded before the prediction head to enhance the spatial attention and channel reconstruction of each feature map. The network structure is shown in Figure 3C.

Figure 3. YOLOv5s network structure integrating shuffle attention module.

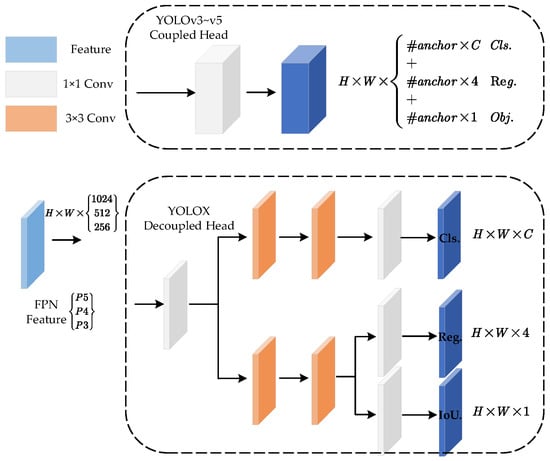

4.2. DD-Head Module

Traditional YOLO algorithms use the coupled head, which utilizes the same convolutional layer for both classification and regression tasks at the head of the network. However, classification and regression tasks have different focuses. Classification is more concerned with the texture of each sample, while regression is more focused on the edge features of object images. Studies [104,105] have pointed out that there is a conflict between classification and regression tasks in object detection, and using a coupled head for both tasks may lower the model’s performance.

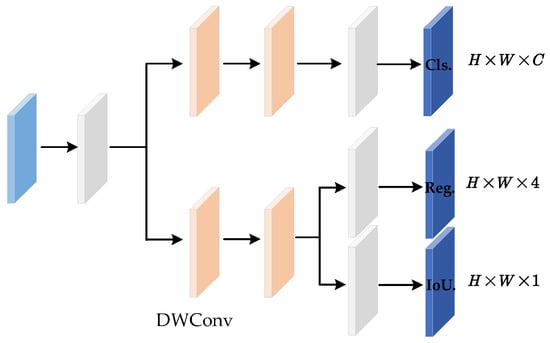

The YOLOX algorithm [106] was the first in the YOLO series to apply the decoupled head module, achieving better results than the coupled head. The network structure of the decoupled head is shown in Figure 4. For the input feature map, the decoupled head first uses a 1 × 1 convolution to reduce its dimensionality, mapping the P3, P4, and P5 feature maps output by the feature fusion network, which have different dimensions, into feature maps with a unified number of channels. Then, two parallel branches are used to perform the object classification and target box coordinate regression tasks. To limit the complexity of the decoupled head and improve model convergence speed, each branch uses two 3 × 3 convolutions. Cls., Reg., and Obj. output values can be obtained through processing, where Cls. represents the category of the target box, Reg. represents the position information of the target box, and Obj. represents whether each feature point contains an object. The final prediction information is obtained by fusing the three output values. In summary, the decoupled head improves model performance by separately addressing classification and regression tasks.

Figure 4.

Decoupled head network structure.
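As a rough illustration of how the Cls., Reg., and Obj. outputs are fused, the following sketch tallies the fused output shape at each detection scale. It assumes one prediction per feature point (as in the anchor-free YOLOX head), the usual strides of 8, 16, and 32 for P3, P4, and P5, a 640 × 640 input, and the four RSOD categories; the function name `head_output_shapes` is hypothetical and no network is built.

```python
# Bookkeeping sketch only: shapes of the fused decoupled-head output.
NUM_CLASSES = 4                          # RSOD has four categories
INPUT_SIZE = 640                         # assumed square input size
STRIDES = {"P3": 8, "P4": 16, "P5": 32}  # assumed strides of the three scales


def head_output_shapes(num_classes, input_size, strides):
    """Return (H, W, channels) of the fused Cls/Reg/Obj output per level."""
    shapes = {}
    for level, stride in strides.items():
        side = input_size // stride
        # Reg: 4 box values, Obj: 1 objectness score, Cls: one score per class
        channels = 4 + 1 + num_classes
        shapes[level] = (side, side, channels)
    return shapes


shapes = head_output_shapes(NUM_CLASSES, INPUT_SIZE, STRIDES)
total_points = sum(h * w for h, w, _ in shapes.values())
for level, shape in shapes.items():
    print(level, shape)
print("feature points:", total_points)
```

An anchor-based head would multiply the number of predictions per feature point by the number of anchors, so the totals here are specific to the stated assumption.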

Although introducing a decoupled head can effectively improve the detection performance of a network, it undoubtedly increases the model’s parameter count and decreases the detection speed. In this study, the 3 × 3 convolution in each branch of the decoupled head network was replaced with a 3 × 3 depthwise convolution [107], reducing the parameter count. The network architecture is shown in Figure 5, which is named the DD-head. The original coupled head in the YOLOv5s model was replaced with the DD-head to mitigate the negative impact of the classification and regression task conflict.

Figure 5.

Depthwise-decoupled head network structure.
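To give a sense of the saving from the depthwise replacement, the sketch below counts the weights of a single 3 × 3 convolution in its standard and depthwise forms. The channel width of 256 is a hypothetical value chosen for illustration, not a figure from this study, and the helper `conv_params` is not part of any library.

```python
def conv_params(c_in, c_out, k, groups=1, bias=False):
    """Weight count of a k x k convolution; groups == c_in gives a depthwise conv."""
    assert c_in % groups == 0 and c_out % groups == 0
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


C = 256  # hypothetical channel width of one head branch
standard = conv_params(C, C, 3)             # every output sees every input channel
depthwise = conv_params(C, C, 3, groups=C)  # each channel is filtered on its own

print("standard 3x3 :", standard)
print("depthwise 3x3:", depthwise)
print("reduction    :", standard // depthwise, "x")
```

The depthwise form does not mix information across channels, which is the trade-off accepted in exchange for the smaller parameter count.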

4.3. Content-Aware Reassembly of Features Module

The YOLOv5s model utilizes the FPN module to achieve top-down semantic information transfer and multi-scale object detection through feature fusion. The multi-scale feature fusion is achieved by nearest-neighbor interpolation up-sampling to unify the feature map size. However, this up-sampling operation presents two limitations: (1) the interpolation up-sampling operation only considers the spatial information of the feature map and ignores its semantic information, resulting in simultaneous up-sampling of target and noise positions; (2) the receptive field of the interpolation up-sampling operation is usually small, leading to insufficient use of global feature information. Another adaptive up-sampling operation is the deconvolution operation; however, it also has two limitations: (1) the deconvolution operator uses the same convolution kernel across the entire feature map, regardless of the underlying information, which limits its ability to respond to local changes; (2) the parameter volume of the deconvolution operation is large, which reduces the detection speed of the network. To address the problem of low semantic correlation in up-sampling in object detection models, this study adopts the Content-Aware Reassembly of Features (CARAFE) module [108] to replace the nearest-neighbor interpolation up-sampling module. The CARAFE module recombines feature points with similar semantic information in a content-aware manner and aggregates features in a larger receptive field to achieve the up-sampling operation.

The up-sampling process of the CARAFE module mainly consists of two steps: up-sampling kernel prediction and content-aware reassembly. Firstly, channel compression is performed by the up-sampling kernel prediction module to reduce the number of input feature channels. Then, the compressed feature map is encoded with content and the reassembly kernel is predicted according to the content of each target location. Finally, the content-aware reassembly module performs a dot product between the reassembly kernel and the corresponding region of the original feature map to complete the up-sampling process.
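The reassembly step can be sketched for a single-channel feature map as follows. This is a simplified illustration under stated assumptions, not the CARAFE implementation: the kernel prediction module is skipped and the raw kernel scores are passed in directly, the up-sampling ratio is 2, the reassembly kernel is 3 × 3, and borders are zero-padded. The function names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a flat list."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def carafe_reassemble(feature, kernel_logits, scale=2, k_up=3):
    """Up-sample an H x W map by content-aware reassembly.

    feature:       H x W nested list.
    kernel_logits: (scale*H) x (scale*W) nested list; each entry is a flat list
                   of k_up*k_up raw scores for that target location (assumed to
                   come from a kernel prediction module, given directly here).
    """
    h, w = len(feature), len(feature[0])
    r = k_up // 2
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for ti in range(h * scale):
        for tj in range(w * scale):
            si, sj = ti // scale, tj // scale          # source location
            weights = softmax(kernel_logits[ti][tj])   # kernel sums to 1
            acc = 0.0
            for n in range(-r, r + 1):
                for m in range(-r, r + 1):
                    i, j = si + n, sj + m
                    if 0 <= i < h and 0 <= j < w:      # zero padding at borders
                        acc += weights[(n + r) * k_up + (m + r)] * feature[i][j]
            out[ti][tj] = acc                          # dot product with the region
    return out


feature = [[1.0, 2.0], [3.0, 4.0]]
# A kernel with nearly all weight on the centre tap reduces the operation to
# nearest-neighbor up-sampling, which makes the behaviour easy to check.
centre_only = [0.0] * 9
centre_only[4] = 50.0
logits = [[centre_only for _ in range(4)] for _ in range(4)]
upsampled = carafe_reassemble(feature, logits)
for row in upsampled:
    print([round(v, 3) for v in row])
```

With content-dependent scores instead of the fixed centre-only kernel, each target location would draw on a differently weighted neighbourhood, which is what distinguishes this operation from interpolation.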

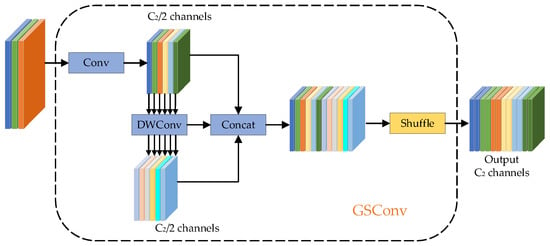

4.4. GSConv Module

Although the introduction of the decoupled head and SPPCSPC modules can improve the detection performance of the YOLOv5s network model, these modules increase the parameter count of the model, which is unfavorable for creating lightweight networks. To design lightweight networks, depthwise separable convolution (DSC) modules are typically used instead of conventional convolutional modules. The advantage of DSC modules is their computational efficiency, as their parameter count and computational workload are approximately one-third of those of traditional convolutional modules. However, during feature extraction, the channel information of the input image is separated in the calculation process, which can result in lower feature extraction and fusion capabilities compared to standard convolutional modules. To effectively utilize the computational efficiency of DSC while ensuring that its detection accuracy reaches the level of standard convolution (SC), the GSConv module [109] was proposed based on SC, DSC, and shuffle modules. The network structure is shown in Figure 6. Firstly, the feature map with C1 channels is split into two parts, where half of the feature map is used for depthwise separable convolution and the remaining part is used for standard convolution. Then, the two feature maps are concatenated along the channel dimension. Shuffle is a channel mixing technique that allows information from the SC module to completely mix with the DSC output by transmitting its feature information across various channels, thus achieving channel information interaction.

Figure 6.

GSConv network structure.
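The effect of the shuffle step on channel order can be shown with a small sketch. It uses a generic two-group channel shuffle (reshape to groups, transpose, flatten) on channel labels rather than tensors; this is an assumption for illustration and may differ in detail from the shuffle used in the GSConv implementation [109].

```python
def channel_shuffle(channels, groups=2):
    """Interleave channel groups: view as (groups, n/groups), transpose, flatten."""
    n = len(channels)
    assert n % groups == 0
    per_group = n // groups
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


sc_out = ["sc0", "sc1", "sc2"]      # channels produced by the SC branch
dsc_out = ["dsc0", "dsc1", "dsc2"]  # channels produced by the DSC branch
mixed = channel_shuffle(sc_out + dsc_out)
print(mixed)  # ['sc0', 'dsc0', 'sc1', 'dsc1', 'sc2', 'dsc2']
```

After the shuffle, every pair of neighbouring channels contains one SC channel and one DSC channel, so a following layer sees information from both branches side by side.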

During the convolution process, the spatial information of the feature map gradually shifts to channels, where the number of channels increases when the width and height of the feature map decrease, resulting in stronger semantic information. However, each spatial compression and channel expansion of the feature map can lead to partial loss of semantic information, which affects the accuracy of object detection. The SC module largely preserves the hidden connections between each channel, which can reduce information loss to some extent, but with high time complexity. In contrast, the DSC module cuts off these hidden connections, resulting in the complete separation of channel information during the calculation process. The GSConv module retains as many connections as possible while maintaining lower time complexity, thereby reducing information loss, achieving faster operations, and unifying SC and DSC.

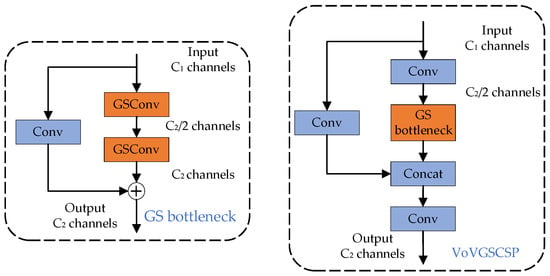

Based on the GSConv module, the network structure of the GS bottleneck and VoV-GSCSP module is shown in Figure 7. Compared with the bottleneck module in the original YOLOv5s network, the GS bottleneck replaces the two 1 × 1 convolutions in the bottleneck module with the GSConv module and adds new skip connections. Therefore, the two branches of the GS bottleneck perform separate convolutions without weight sharing, propagating channel information through different network paths by dividing the number of channels. As a result, the information propagated by the GS bottleneck shows greater correlation and diversity, resulting in more accurate information and reduced computational workload. The VoV-GSCSP module is designed by using the GS bottleneck instead of the bottleneck in the C3 module. In the VoV-GSCSP module, the input feature map is also divided into two parts based on channel numbers. The first part is processed by a convolutional module and features are extracted by stacking the GS bottleneck, while the other part serves as the residual connection and is convolved by a convolutional module. The two feature maps are then concatenated based on channel numbers and passed through a convolutional module for output. The VoV-GSCSP module inherits the advantages of both the GSConv module and the GS bottleneck. With the new skip connection branch, the VoV-GSCSP module has a stronger nonlinear representation, effectively addressing the problem of gradient vanishing. At the same time, the split-channel method of VoV-GSCSP achieves rich gradient combinations, solving the problem of redundant gradient information and improving learning ability [109]. Experimental results have shown that the VoV-GSCSP module not only reduces computational workload but also improves model accuracy [110,111]. In this study, the Conv module in the VoV-GSCSP module was replaced with the GSConv module to further reduce model parameters. 
The GSConv module and the improved VoV-GSCSP module were embedded into the neck structure of the model, so as to maintain detection accuracy while reducing parameter count.

Figure 7.

GS bottleneck and VoV-GSCSP network structure.

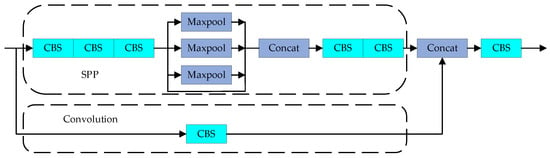

4.5. SPPCSPG Module

The SPPCSPC module [112] is built on the basis of the SPP module and the CSP structure, as shown in Figure 8. The module first divides the features extracted by the C3 module of the backbone into two branches: one processed by the SPP structure and one processed by conventional convolution. The SPP structure consists of four branches, corresponding to max-pooling operations with pool kernel sizes of 1, 5, 9, and 13. These four different pool kernels allow the SPPCSPC structure to handle objects with four different receptive fields, better distinguishing small and large targets. Finally, the outputs of the SPP branch and the conventional convolution branch are merged by Concat to achieve faster speed and higher accuracy.

Figure 8.

Spatial pyramid pooling cross-stage partial conv network structure.
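The four pooling branches can be illustrated on a single-channel map with the sketch below. It assumes stride-1 max pooling with padding of half the kernel size, so that every branch keeps the input resolution and the results can be concatenated; the function names are hypothetical and the convolutions around the SPP structure are omitted.

```python
def max_pool_same(feature, k):
    """k x k max pooling with stride 1; windows are clipped at the borders."""
    h, w = len(feature), len(feature[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [
                feature[a][b]
                for a in range(max(0, i - r), min(h, i + r + 1))
                for b in range(max(0, j - r), min(w, j + r + 1))
            ]
            row.append(max(window))
        out.append(row)
    return out


def spp_branches(feature, kernel_sizes=(1, 5, 9, 13)):
    """Return one pooled map per kernel size; a network would Concat them."""
    return [max_pool_same(feature, k) for k in kernel_sizes]


# A single activation in the centre of a 13 x 13 map spreads over k x k cells,
# which shows how the receptive field grows from branch to branch.
size = 13
feature = [[0.0] * size for _ in range(size)]
feature[6][6] = 1.0
branches = spp_branches(feature)
coverage = [int(sum(map(sum, b))) for b in branches]
print(coverage)  # [1, 25, 81, 169]
```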

Although the SPPCSPC module can improve the detection performance of the model to a certain extent, it also increases the model’s parameter count. Therefore, in this study, a lightweight SPPCSPG module was proposed based on the design principles of the GS bottleneck and the GSConv module. The SPPCSPG module was integrated into the backbone structure to optimize the YOLOv5s network model.

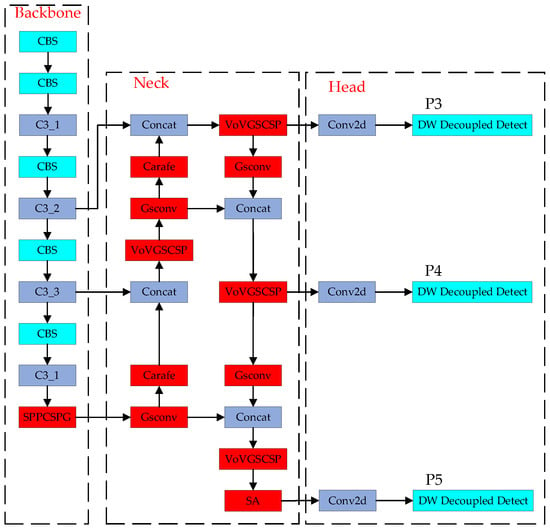

Incorporating the improvements to the YOLOv5s backbone, this study replaced the SPPF module with the SPPCSPG module to enhance detection accuracy. In the neck structure, all Conv modules were replaced with GSConv modules and the improved VoV-GSCSP module was introduced to reduce the parameters and computation brought about by feature pyramid structure upgrades. To address the issue of limited semantic information and receptive fields caused by nearest-neighbor interpolation up-sampling operations in the original network model, this study adopted the CARAFE module to replace the nearest-neighbor interpolation up-sampling module, which reorganizes feature points with similar semantic information in a content-aware way and aggregates features in a larger receptive field to perform up-sampling operations. To address the problem of inaccurate target localization and weak feature expression capability in the original network model, this study introduced the SA attention module in the head module. Finally, to improve the performance metrics of the model, the DD-head was used in the detection layer of the YOLOv5s model to accomplish classification and regression tasks. The improved network model structure is shown in Figure 9.

Figure 9.

Network structure of improved YOLOv5s model.

5. Experimental Results and Analysis

5.1. Experimental Platform and Dataset

The datasets used in the experiments include two remote sensing datasets, the RSOD dataset [113,114] and the DIOR dataset [115], as well as two general target detection datasets, the PASCAL VOC dataset [116,117] and the MS-COCO dataset [118].

The RSOD dataset includes four object categories (aircraft, oil tank, playground, and overpass), with a total of 976 images. The aircraft category includes 446 images, with a total of 4993 targets. The oil tank category includes 165 images, with a total of 1586 targets. The playground category includes 189 images, with a total of 191 targets. The overpass category includes 176 images, with a total of 180 targets. The ratio of the training set to the test set is 4:1.

The DIOR dataset is a large-scale benchmark dataset for object detection in remote sensing images. The dataset includes 23,463 images of different seasons and weather patterns, with a total of 190,288 targets. The unified image size is 800 × 800, with a spatial resolution of 0.5 m to 30 m. The DIOR dataset includes 20 categories: airplane (AL), airport (AT), baseball field (BF), basketball court (BC), bridge (B), chimney (C), dam (D), expressway service area (ESA), expressway toll station (ETS), golf course (GC), ground track field (GTF), harbor (HB), overpass (O), ship (S), stadium (SD), storage tank (ST), tennis court (TC), train station (TS), vehicle (V), and windmill (W). According to the original settings of the DIOR dataset, the numbers of images in the training, validation, and testing sets are 5863, 5862, and 11,738, respectively. This study combines the training set and validation set as the training set.

The PASCAL VOC dataset includes the PASCAL VOC 2007 and 2012 datasets, which can be used for tasks such as image classification, object detection, semantic segmentation, and motion detection. The PASCAL VOC dataset includes a total of 20 common objects in daily life. In this study, the training and validation sets of the PASCAL VOC 2007 and VOC 2012 datasets were used as the model’s training set, while the testing set of VOC 2007 was used as the model’s testing set. The MS-COCO dataset is currently the most challenging target detection dataset, and it includes a total of 80 types of detection objects. The MS-COCO dataset includes more small objects (with an area smaller than 1% of the image) and more densely located objects than the PASCAL VOC dataset.

The experiments were conducted on a system with Ubuntu 18.04, CUDA 11.1, and a GeForce RTX A5000 graphics card. The network development framework used was PyTorch 1.9, and the integrated development environment was PyCharm. Training was uniformly set to 300 epochs, with a batch size of 64.

5.2. Evaluation Metrics

In this study, precision, mean average precision (mAP), and detection frames per second (FPS) were used as performance evaluation metrics for the object detection method. Precision (P) and recall (R) were calculated using Equations (8) and (9), respectively:

$$P = \frac{TP}{TP + FP} \tag{8}$$

$$R = \frac{TP}{TP + FN} \tag{9}$$

where TP represents the number of positive samples that are correctly identified as positive, FP represents the number of negative samples that are incorrectly identified as positive, and FN represents the number of positive samples that are incorrectly identified as negative. By selecting different precision and recall values, the precision-recall (PR) curve can be drawn, and the area under the PR curve is defined as the AP. The mean AP (mAP) is calculated by taking the mean of the AP for all detection categories. The calculation of the performance evaluation metrics AP and mAP is shown in Equations (10) and (11), respectively:

$$AP = \int_{0}^{1} P(r)\,dr \tag{10}$$

$$mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i \tag{11}$$

where P(r) represents the precision value at a certain recall value r, AP_i represents the AP of the i-th detection category, and n represents the number of detection categories.
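The metrics in Equations (8)–(11) can be computed as in the following sketch. It assumes that the detections of one category are already sorted by descending confidence and flagged as true or false positives, and it approximates the area under the PR curve with all-point interpolation (precision made monotonically non-increasing before summing); the interpolation scheme and the function names are assumptions for illustration, not details stated in this study.

```python
def precision(tp, fp):
    """P = TP / (TP + FP); returns 0.0 when there are no detections."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """R = TP / (TP + FN); returns 0.0 when there are no ground truths."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(is_tp, num_gt):
    """Area under the PR curve for detections sorted by descending confidence."""
    tp = fp = 0
    recalls, precisions = [0.0], [0.0]
    for hit in is_tp:
        tp += hit
        fp += not hit
        precisions.append(precision(tp, fp))
        recalls.append(recall(tp, num_gt - tp))
    recalls.append(1.0)
    precisions.append(0.0)
    # Make precision monotonically non-increasing (all-point interpolation).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum(
        (recalls[i + 1] - recalls[i]) * precisions[i + 1]
        for i in range(len(recalls) - 1)
    )


def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy example: category A has 2 ground truths and 3 detections (hit, miss, hit);
# category B has 1 ground truth and 1 correct detection.
ap_a = average_precision([True, False, True], num_gt=2)
ap_b = average_precision([True], num_gt=1)
m_ap = mean_average_precision([ap_a, ap_b])
print(round(ap_a, 4), round(ap_b, 4), round(m_ap, 4))
```

Deciding whether a detection counts as a true positive (for example, by an IoU threshold of 0.5 for mAP@0.5) happens before this step and is outside the sketch.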

5.3. Experimental Results and Analysis

The experimental results are divided into seven parts covering the four datasets: the comparison experiment of SA module embedding, the effect experiment of the DD-head module, the effect experiment of the SPPCSPG module, the RSOD dataset experiment, the DIOR dataset experiment, the PASCAL VOC dataset experiment, and the MS COCO dataset experiment.

5.3.1. Performance Evaluation of the SA Module Embedded Model

To identify the best position for embedding the SA module and to investigate how detection performance changes when the SA module is embedded in different structures of the YOLOv5s network, the three proposed models (YOLOv5s-SA-A, YOLOv5s-SA-B, and YOLOv5s-SA-C) were evaluated on the RSOD dataset. The detection performance of the original YOLOv5s and the improved models was compared, and the experimental results are shown in Table 1.

Table 1.

Performance evaluation of shuffle attention module embedded model.

From Table 1, it can be seen that integrating the SA module does not always improve detection performance. YOLOv5s-SA-A had significantly lower precision, recall, mAP, and FPS than the original YOLOv5s network. YOLOv5s-SA-B showed improved recall, but the other three indicators decreased compared to the original network. YOLOv5s-SA-C had increased mAP, but the other three indicators decreased compared to the original network. The results differ with the embedding position because the feature maps in the neck and head carry richer semantic features and larger receptive fields, which play a crucial role in improving object detection performance. In the backbone module, by contrast, the feature maps retain the shallow texture and contour information of the targets, with poor semantic information; thus, an attention mechanism embedded there cannot effectively learn semantic information. The YOLOv5s-SA-C algorithm is superior to the YOLOv5s-SA-B algorithm in both detection accuracy and speed. Therefore, considering the principle of balancing accuracy and speed, the YOLOv5s-SA-C algorithm was finally chosen as the model for embedding SA modules.

5.3.2. Effect Experiment of the DD-Head Module

Experiments were conducted on the proposed DD-head module, decoupled head module, and coupled head module in the RSOD dataset to investigate the impact of the constructed DD-head module on the detection accuracy and speed of the model.

Table 2 shows that both the decoupled head and the proposed DD-head modules can improve the mean average precision accuracy of the model, with an increase of 0.6% and 0.5%, respectively. Although the mean average precision accuracy of the DD-head module is 0.1% lower than that of the decoupled head module, the DD-head module has 7.05 M fewer parameters and an FPS value that is 11.9 higher.

Table 2.

Performance evaluation of depthwise-decoupled head module embedded model.

5.3.3. Effect Experiment of the SPPCSPG Module

Experiments were conducted on the proposed SPPCSPG module, SPPCSPC module, and SPPF module in the RSOD dataset to study the impact of the constructed SPPCSPG module on the detection accuracy and speed of the model.

Table 3 indicates that both the SPPCSPC module and the proposed SPPCSPG module can improve the mean average precision accuracy of the model, with an increase of 0.3% and 0.5%, respectively. Moreover, the SPPCSPG module has a 0.2% higher mean average precision accuracy than the SPPCSPC module and has 3.5 M fewer parameters. Therefore, based on the evaluation of parameter count and detection accuracy, the proposed SPPCSPG module outperforms the SPPCSPC module.

Table 3.

Performance evaluation of spatial pyramid pooling cross-stage partial with GSConv module embedded model.

5.3.4. Performance Comparison in the RSOD Dataset

The detection performance of the original YOLOv5s model and the constructed model was compared on the RSOD dataset to verify the effectiveness of the constructed model. The results are shown in Table 4, which compares the mean average precision accuracy, the FPS, and the detection accuracy of each category for the two models. It can be seen that the mean average precision accuracy of the model constructed in this article is 96.4%, which is an improvement of 1.4% compared to the original model, and the average precision for the oil tank and playground categories improved by 0.1% and 5.4%, respectively.

Table 4.

RSOD test detection results.

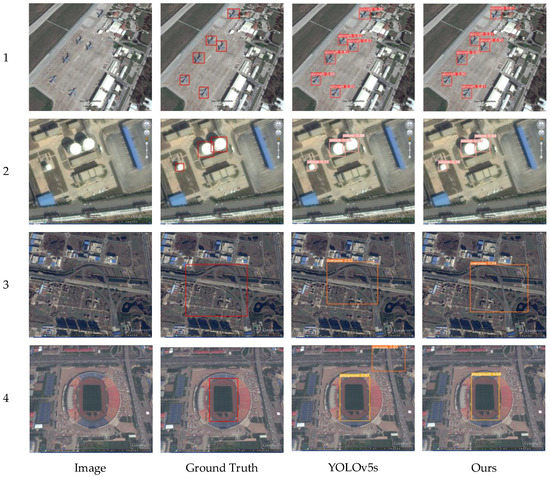

To visually demonstrate the effectiveness of the constructed model, the detection results of each model are shown in Figure 10.

Figure 10.

Visualization results of each model on the RSOD test dataset. The model constructed in this article has a lower false alarm rate (row 4) and a higher recall rate (rows 1, 2, and 3).

As shown in rows 1 to 4 of Figure 10, the detection boxes of the model constructed in this article are closer to the actual boxes of the targets, with a higher recall rate. Row 4 shows that the model constructed in this article has a lower false alarm rate on the overpass. Therefore, the model constructed in this article is more effective than the original model.

5.3.5. Performance Comparison in the DIOR Dataset

In order to further validate and evaluate the effectiveness of the improved YOLOv5s in improving the detection accuracy of the model, experimental comparisons were conducted on the DIOR dataset with other methods specified in the literature. The experimental results are shown in Table 5, with bold values indicating the optimal results in each column.

Table 5.

DIOR test detection results.

From the experimental results in Table 5, it can be seen that compared with HawkNet [119], CANet [120], MFPNet [122], FSoD-Net [123], ASDN [124], MSFC-Net [125], DFPN-YOLO [127], AC-YOLO [128], SCRNet++ [129], MSSNet [130], MDCT [132], and YOLOv5s, the improved algorithm proposed in this paper based on YOLOv5s significantly improves detection accuracy. On the DIOR dataset, the mean average precision accuracy of the improved YOLOv5s network is 1.2% higher than that of the original YOLOv5s network, indicating that the network constructed in this article not only outperforms the original YOLOv5s network on the RSOD dataset but also achieves better performance than the original network on the more complex large-scale remote sensing dataset, DIOR.

Table 6.

Object detection results on PASCAL VOC2007 test dataset.

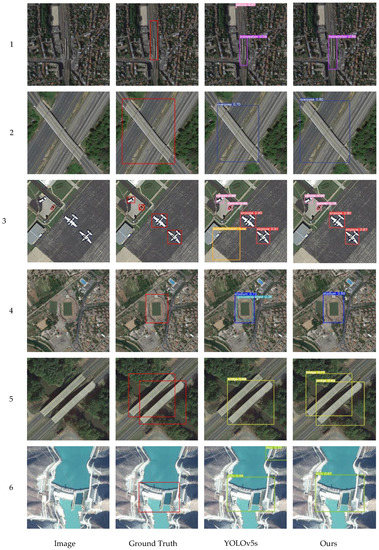

To visually demonstrate the effectiveness of the constructed model, the detection results of each model are shown in Figure 11.

Figure 11.

Visualization results of each model on the DIOR test dataset. The model constructed in this article has a lower false alarm rate (rows 1, 3, 4 and 6) and a higher recall (rows 1, 2, 5 and 6).

As shown in rows 1, 2, 5, and 6 of Figure 11, the detection boxes of the model constructed in this article are closer to the actual boxes of the targets, with a higher recall rate. Rows 1, 3, 4, and 6 show that the model constructed in this article has a lower false alarm rate. Therefore, the model constructed in this article is more effective than the original model.

5.3.6. Performance Comparison in the PASCAL VOC Dataset

To further validate and evaluate the effectiveness of the proposed improvements to YOLOv5s in raising detection accuracy, the proposed algorithm was compared with several advanced object detection algorithms that have emerged in recent years. The training set and test set used in the experiments were consistent with those used in the study. The experimental results are shown in Table 6, where the bold values indicate the best results in each column.

From Table 6, it can be seen that the algorithm proposed in this article meets the real-time requirements, with a mean average precision accuracy of 85.1%, which is 1.4% higher than the original YOLOv5s algorithm. Compared to one-stage target detection algorithms, such as the SSD series algorithm and its improved algorithm, it has advantages in terms of precision and detection speed. Compared to two-stage target detection algorithms, such as the Fast R-CNN algorithm, it has a much higher detection accuracy and detection rate.

To further verify whether the algorithm can effectively improve the accuracy of small target detection, Table 7 compares the accuracy of the improved YOLOv5s and other advanced target detection algorithms in the 20 categories in the PASCAL VOC2007 test set. The results show that compared to the original YOLOv5s algorithm, the improved YOLOv5s algorithm improves the detection accuracy of the model in almost every category, especially for small target categories such as birds.

Table 7.

PASCAL VOC2007 test detection results.

5.3.7. Performance Comparison in the MS COCO Dataset

In order to further demonstrate the advantages of this method in detecting small and dense targets, an experimental comparison between this method and other methods in the literature was conducted on the MS COCO test dataset. From the experimental results in Table 8, it can be seen that compared with R-FCN [150], SSD [35], FESSD [136], YOLOv3 [37], GC-YOLOv3 [137], Mini-YOLOv4-tiny [151], TRC-YOLO [152], Trident-YOLO [153], SLMS-SSD [142], BANet_S [154], STDN [144], SFGNet [149], YOLO-T [146], SL-YOLO [148], and YOLOv5s, the improved algorithm proposed in this article based on YOLOv5s significantly improved detection accuracy. Compared to the original YOLOv5s algorithm, the overall detection accuracy of the algorithm proposed in this article was improved by 3.1%, and the detection accuracy of small, medium, and large targets was improved by 0.2%, 1.9%, and 3.9%, respectively. Experimental results show that the proposed algorithm outperforms the original YOLOv5s algorithm in small target detection, medium target detection, and large target detection.

Table 8.

MS COCO test-dev detection results.

6. Discussion

In this section, the contribution of the constructed module to the proposed network was explored through ablation experiments.

Ablation experiments were conducted on the RSOD dataset to study the effects of the CARAFE, SA attention, SPPCSPG, GSConv, and DD-head modules on both model accuracy and detection speed. These models were trained on the RSOD dataset and tested on an RTX A5000 GPU. The input size of the test images for the ablation experiment was 640 × 640, and the experimental results are presented in Table 9.

Table 9.

Ablation experiment on RSOD dataset.

In Table 9, CARAFE represents the replacement of the nearest-neighbor interpolation up-sampling module in the original YOLOv5s network with the CARAFE module. SA represents the embedding of the SA attention module in the head structure of the YOLOv5s network. SPPCSPG represents the replacement of the SPPF module in the original YOLOv5s network with the SPPCSPG module. GSConv represents the replacement of the Conv module in the neck structure of the YOLOv5s network with the GSConv module and the replacement of the C3 module with the improved VoV-GSCSP module. DD-head represents the replacement of the coupled head module in the original YOLOv5s network with the DD-head module. The presence or absence of a checkmark (√) indicates whether the proposed improvement module was incorporated into the YOLOv5s network.

Model 1 is the original YOLOv5s network, while models 2–10 are the corresponding improved YOLOv5s networks. Analysis of the results in Table 9 shows that embedding the CARAFE, SA attention, SPPCSPG, GSConv, and DD-head modules separately into the original YOLOv5s network can improve the detection accuracy of the network. The detection performance measured by mAP@0.5 is improved by 0.2%, 0.2%, 0.5%, 0.5%, and 0.3%, respectively, compared with the original YOLOv5s network. Analysis of models 7–10 reveals that the combination of multiple improved modules performs better than individual improved modules, indicating that each introduced module contributes to the effective improvement of the model’s detection performance.

In order to verify the improvement effect of the proposed model on the accuracy of general object detection, ablation experiments were conducted on the PASCAL VOC dataset, and the experimental results are shown in Table 10.

Table 10.

Ablation experiment on PASCAL VOC dataset.

Similarly, the results of models 2–6 in Table 10 show that embedding the CARAFE module, SA attention module, SPPCSPG module, GSConv module, and DD-head module separately in the original YOLOv5s network improves the mean average precision accuracy over that of the original YOLOv5s network; mAP@0.5 increased by 0.3%, 0.2%, 1.0%, 0.4%, and 0.5%, respectively. For models 7–10, the results of multiple improved module combinations are better than those of a single improved module, indicating that the introduced modules effectively improve the detection performance of the model.

7. Conclusions

This paper proposes a lightweight target detection algorithm based on YOLOv5s to improve the detection performance of the model while meeting real-time detection requirements. Specifically, this article constructs a DD-head, based on a decoupled head and depthwise convolution, to replace the coupled head of YOLOv5s and mitigate the negative impact of the conflict between classification and regression tasks. An SPPCSPG module based on the SPPCSPC module and GSConv module is constructed to replace the SPPF module of YOLOv5s, which improves the utilization of multi-scale information. An SA attention mechanism is introduced in the head structure to enhance spatial attention and reconstruct channel attention. A CARAFE module is introduced in the up-sampling operation to reassemble feature points with similar semantic information in a content-aware manner and aggregate features in a larger receptive field to fully fuse semantic information. In the neck structure, the GSConv module and the reconstructed VoV-GSCSP module are introduced to maintain detection accuracy while reducing the number of parameters. The experiments show that the constructed algorithm performs better than the original network not only for remote sensing images but also for conventional object detection images. The model built in this research targets detection in remote sensing datasets, and the equipment used is a high-performance GPU. The detection performance of the model on resource-constrained edge computing devices has not been tested. Future work will focus on how to build a real-time detection model for edge computing devices.

Author Contributions

P.L. conducted the research. Q.W. revised the paper and guided the research. J.M. and P.L. were responsible for data collection, creating the figures, and revising the paper. H.Z., J.M., P.L., Q.W. and Y.L. revised and improved the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (No. 42074039) and the Postgraduate Research and Practice Innovation Program of Jiangsu Province (No. SJCX21_0040).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

This research was supported by the National Natural Science Foundation of China and the Postgraduate Research and Practice Innovation Program of Jiangsu Province.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Haq, M.A.; Ahmed, A.; Khan, I.; Gyani, J.; Mohamed, A.; Attia, E.; Mangan, P.; Pandi, D. Analysis of environmental factors using AI and ML methods. Sci. Rep. 2022, 12, 13267.

- Haq, M.A.; Jilani, A.K.; Prabu, P. Deep Learning Based Modeling of Groundwater Storage Change. CMC Comput. Mat. Contin. 2022, 70, 4599–4617.

- Haq, M.A. CDLSTM: A Novel Model for Climate Change Forecasting. CMC Comput. Mat. Contin. 2022, 71, 2363–2381.

- Haq, M.A. SMOTEDNN: A Novel Model for Air Pollution Forecasting and AQI Classification. CMC Comput. Mat. Contin. 2022, 71, 1403–1425.

- Ning, Z.; Sun, S.; Wang, X.; Guo, L.; Wang, G.; Gao, X.; Kwok, R.Y.K. Intelligent resource allocation in mobile blockchain for privacy and security transactions: A deep reinforcement learning based approach. Sci. China Inf. Sci. 2021, 64, 162303.

- Xu, Y.; Wang, H.; Liu, X.; He, H.R.; Gu, Q.; Sun, W. Learning to See the Hidden Part of the Vehicle in the Autopilot Scene. Electronics 2019, 8, 331.

- Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Deep Learning Approaches Applied to Remote Sensing Datasets for Road Extraction: A State-Of-The-Art Review. Remote Sens. 2020, 12, 144.

- Liu, P.; Wang, Q.; Yang, G.; Li, L.; Zhang, H. Survey of Road Extraction Methods in Remote Sensing Images Based on Deep Learning. PFG—J. Photogramm. Remote Sens. Geoinf. Sci. 2022, 90, 135–159.

- Jia, D.; He, Z.; Zhang, C.; Yin, W.; Wu, N.; Li, Z. Detection of cervical cancer cells in complex situation based on improved YOLOv3 network. Multimed. Tools Appl. 2022, 81, 8939–8961.

- Shaheen, H.; Ravikumar, K.; Lakshmipathi Anantha, N.; Uma Shankar Kumar, A.; Jayapandian, N.; Kirubakaran, S. An efficient classification of cirrhosis liver disease using hybrid convolutional neural network-capsule network. Biomed. Signal. Process. Control. 2023, 80, 104152.

- Yang, J.; Guo, X.; Li, Y.; Marinello, F.; Ercisli, S.; Zhang, Z. A survey of few-shot learning in smart agriculture: Developments, applications, and challenges. Plant Methods 2022, 18, 28.

- Lv, Z.; Zhang, S.; Xiu, W. Solving the Security Problem of Intelligent Transportation System with Deep Learning. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4281–4290.

- Shaik, A.S.; Karsh, R.K.; Islam, M.; Laskar, R.H. A review of hashing based image authentication techniques. Multimed. Tools Appl. 2022, 81, 2489–2516.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep learning for remote sensing image classification: A survey. WIREs Data Min. Knowl. Discov. 2018, 8, e1264.

- Fan, D.; Ji, G.; Cheng, M.; Shao, L. Concealed Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 6024–6042.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542.

- Liu, X.; He, J.; Yao, Y.; Zhang, J.; Liang, H.; Wang, H.; Hong, Y. Classifying urban land use by integrating remote sensing and social media data. Int. J. Geogr. Inf. Sci. 2017, 31, 1675–1696.

- Liu, R.; Tao, F.; Liu, X.; Na, J.; Leng, H.; Wu, J.; Zhou, T. RAANet: A Residual ASPP with Attention Framework for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 3109.

- Li, S.; Lyu, D.; Huang, G.; Zhang, X.; Gao, F.; Chen, Y.; Liu, X. Spatially varying impacts of built environment factors on rail transit ridership at station level: A case study in Guangzhou, China. J. Transp. Geogr. 2020, 82, 102631.

- Hu, S.; Fong, S.; Yang, L.; Yang, S.; Dey, N.; Millham, R.C.; Fiaidhi, J. Fast and Accurate Terrain Image Classification for ASTER Remote Sensing by Data Stream Mining and Evolutionary-EAC Instance-Learning-Based Algorithm. Remote Sens. 2021, 13, 1123.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Tang, X.; Zhou, P.; Wang, P. Real-time image-based driver fatigue detection and monitoring system for monitoring driver vigilance. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 4188–4193.

- Alexe, B.; Deselaers, T.; Ferrari, V. Measuring the Objectness of Image Windows. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2189–2202.

- Yap, M.H.; Pons, G.; Martí, J.; Ganau, S.; Sentís, M.; Zwiggelaar, R.; Davison, A.K.; Martí, R. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018, 22, 1218–1226.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Kong, T.; Yao, A.; Chen, Y.; Sun, F. HyperNet: Towards Accurate Region Proposal Generation and Joint Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 845–853.

- Cho, M.; Chung, T.Y.; Lee, H.; Lee, S. N-RPN: Hard Example Learning for Region Proposal Networks. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3955–3959.

- Rao, Y.; Cheng, Y.; Xue, J.; Pu, J.; Wang, Q.; Jin, R.; Wang, Q. FPSiamRPN: Feature Pyramid Siamese Network with Region Proposal Network for Target Tracking. IEEE Access 2020, 8, 176158–176169.

- Zhong, Q.; Li, C.; Zhang, Y.; Xie, D.; Yang, S.; Pu, S. Cascade region proposal and global context for deep object detection. Neurocomputing 2020, 395, 170–177.

- Cai, C.; Chen, L.; Zhang, X.; Gao, Z. End-to-End Optimized ROI Image Compression. IEEE Trans. Image Process. 2020, 29, 3442–3457.

- Shaik, A.S.; Karsh, R.K.; Islam, M.; Singh, S.P.; Wan, S. A Secure and Robust Autoencoder-Based Perceptual Image Hashing for Image Authentication. Wirel. Commun. Mob. Comput. 2022, 2022, 1645658.

- Seferbekov, S.; Iglovikov, V.; Buslaev, A.; Shvets, A. Feature Pyramid Network for Multi-class Land Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 272–273.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wang, C.; Liao, H.M.; Wu, Y.; Chen, P.; Hsieh, J.; Yeh, I. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 13–19 June 2020; pp. 1571–1580.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. arXiv 2018, arXiv:1805.10180.

- Xu, J.; Sun, X.; Zhang, D.; Fu, K. Automatic Detection of Inshore Ships in High-Resolution Remote Sensing Images Using Robust Invariant Generalized Hough Transform. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2070–2074.

- Cucchiara, R.; Grana, C.; Piccardi, M.; Prati, A.; Sirotti, S. Improving shadow suppression in moving object detection with HSV color information. In Proceedings of the ITSC 2001. 2001 IEEE Intelligent Transportation Systems. Proceedings (Cat. No.01TH8585), Oakland, CA, USA, 25–29 August 2001; pp. 334–339.

- Corbane, C.; Najman, L.; Pecoul, E.; Demagistri, L.; Petit, M. A complete processing chain for ship detection using optical satellite imagery. Int. J. Remote Sens. 2010, 31, 5837–5854.

- Li, Z.; Itti, L. Saliency and Gist Features for Target Detection in Satellite Images. IEEE Trans. Image Process. 2011, 20, 2017–2029.

- Brekke, C.; Solberg, A.H.S. Oil spill detection by satellite remote sensing. Remote Sens. Environ. 2005, 95, 1–13.

- Cheng, G.; Han, J.; Guo, L.; Qian, X.; Zhou, P.; Yao, X.; Hu, X. Object detection in remote sensing imagery using a discriminatively trained mixture model. ISPRS J. Photogramm. Remote Sens. 2013, 85, 32–43.

- Hinz, S.; Stilla, U. Car detection in aerial thermal images by local and global evidence accumulation. Pattern Recognit. Lett. 2006, 27, 308–315.

- Pang, J.; Li, C.; Shi, J.; Xu, Z.; Feng, H. R2-CNN: Fast Tiny Object Detection in Large-Scale Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5512–5524.

- Fu, Y.; Wu, F.; Zhao, J. Context-Aware and Depthwise-based Detection on Orbit for Remote Sensing Image. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1725–1730.

- Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Cheng, G.; Han, J.; Zhou, P.; Xu, D. Learning Rotation-Invariant and Fisher Discriminative Convolutional Neural Networks for Object Detection. IEEE Trans. Image Process. 2019, 28, 265–278.

- Yang, X.; Sun, H.; Sun, X.; Yan, M.; Guo, Z.; Fu, K. Position Detection and Direction Prediction for Arbitrary-Oriented Ships via Multitask Rotation Region Convolutional Neural Network. IEEE Access 2018, 6, 50839–50849.

- Zhang, W.; Wang, S.; Thachan, S.; Chen, J.; Qian, Y. Deconv R-CNN for Small Object Detection on Remote Sensing Images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2483–2486.

- Li, L.; Cheng, L.; Guo, X.; Liu, X.; Jiao, L.; Liu, F. Deep Adaptive Proposal Network in Optical Remote Sensing Images Objective Detection. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2651–2654.

- Guo, W.; Yang, W.; Zhang, H.; Hua, G. Geospatial Object Detection in High Resolution Satellite Images Based on Multi-Scale Convolutional Neural Network. Remote Sens. 2018, 10, 131.

- Zhang, X.; Zhu, K.; Chen, G.; Tan, X.; Zhang, L.; Dai, F.; Liao, P.; Gong, Y. Geospatial Object Detection on High Resolution Remote Sensing Imagery Based on Double Multi-Scale Feature Pyramid Network. Remote Sens. 2019, 11, 755.

- Li, K.; Cheng, G.; Bu, S.; You, X. Rotation-Insensitive and Context-Augmented Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2337–2348.

- Li, Q.; Mou, L.; Jiang, K.; Liu, Q.; Wang, Y.; Zhu, X. Hierarchical Region Based Convolution Neural Network for Multiscale Object Detection in Remote Sensing Images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 4355–4358.

- Guo, M.; Xu, T.; Liu, J.; Liu, Z.; Jiang, P.; Mu, T.; Zhang, S.; Martin, R.R.; Cheng, M.; Hu, S. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Woo, S.; Park, J.; Lee, J.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–19.

- Hao, Z.; Wang, Z.; Bai, D.; Tao, B.; Tong, X.; Chen, B. Intelligent Detection of Steel Defects Based on Improved Split Attention Networks. Front. Bioeng. Biotechnol. 2022, 9, 810876.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3141–3149.

- Guan, Q.; Huang, Y.; Zhong, Z.; Zheng, Z.; Zheng, L.; Yang, Y. Diagnose like a Radiologist: Attention Guided Convolutional Neural Network for Thorax Disease Classification. arXiv 2018, arXiv:1801.09927.