TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images

Abstract

1. Introduction

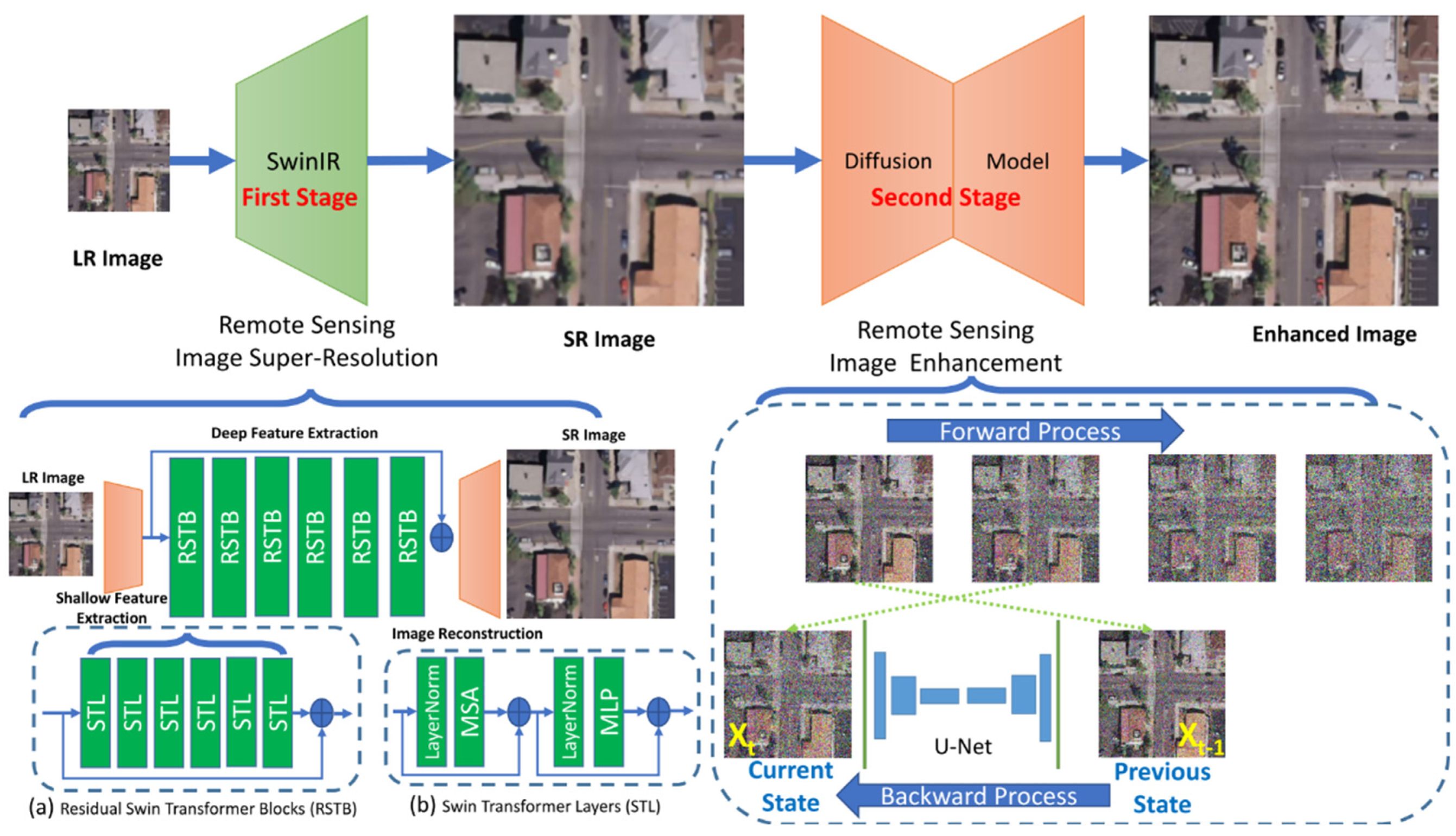

- The development of a two-stage TESR architecture for enhancement and super-resolution that combines a Vision Transformer with a diffusion model;

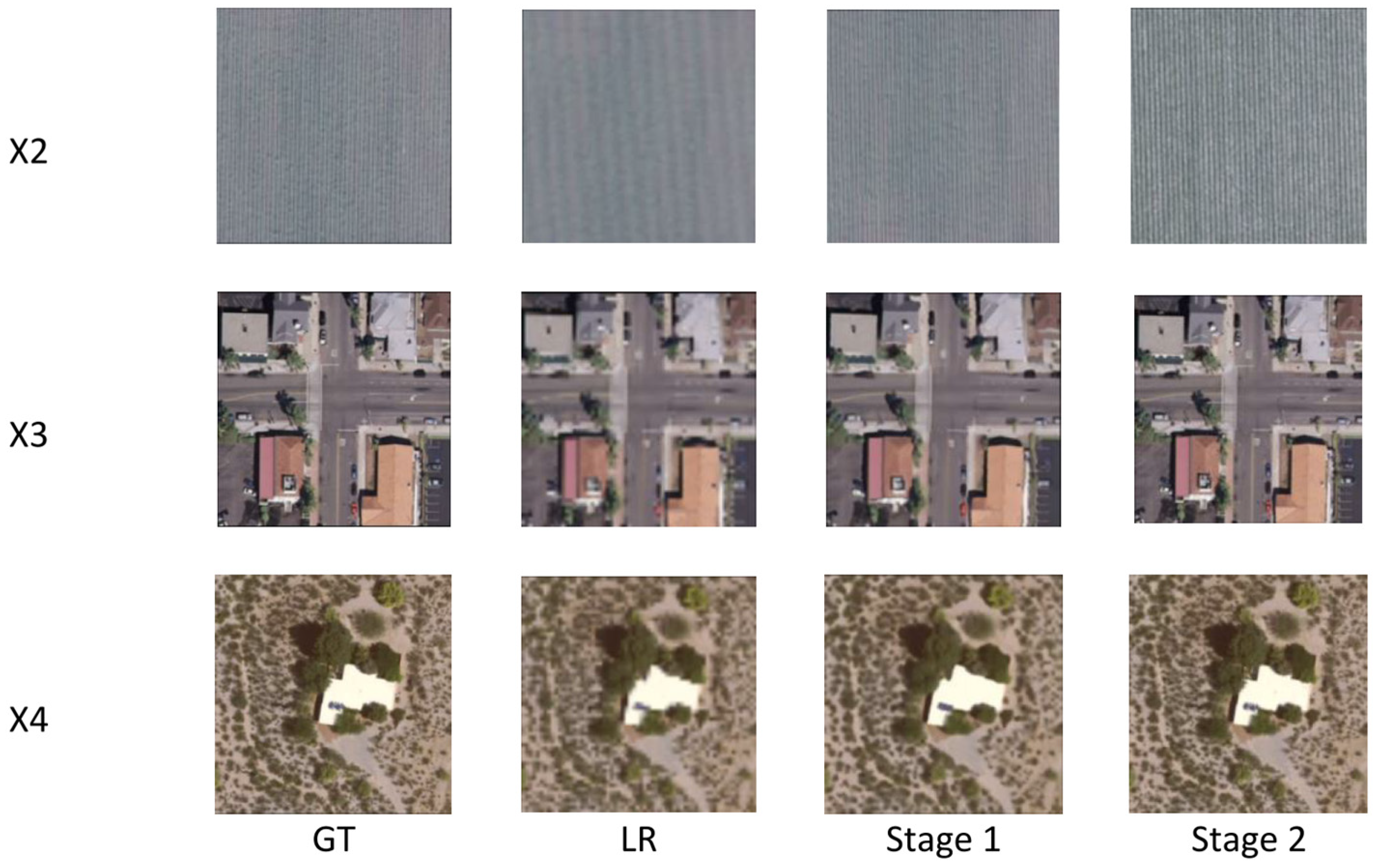

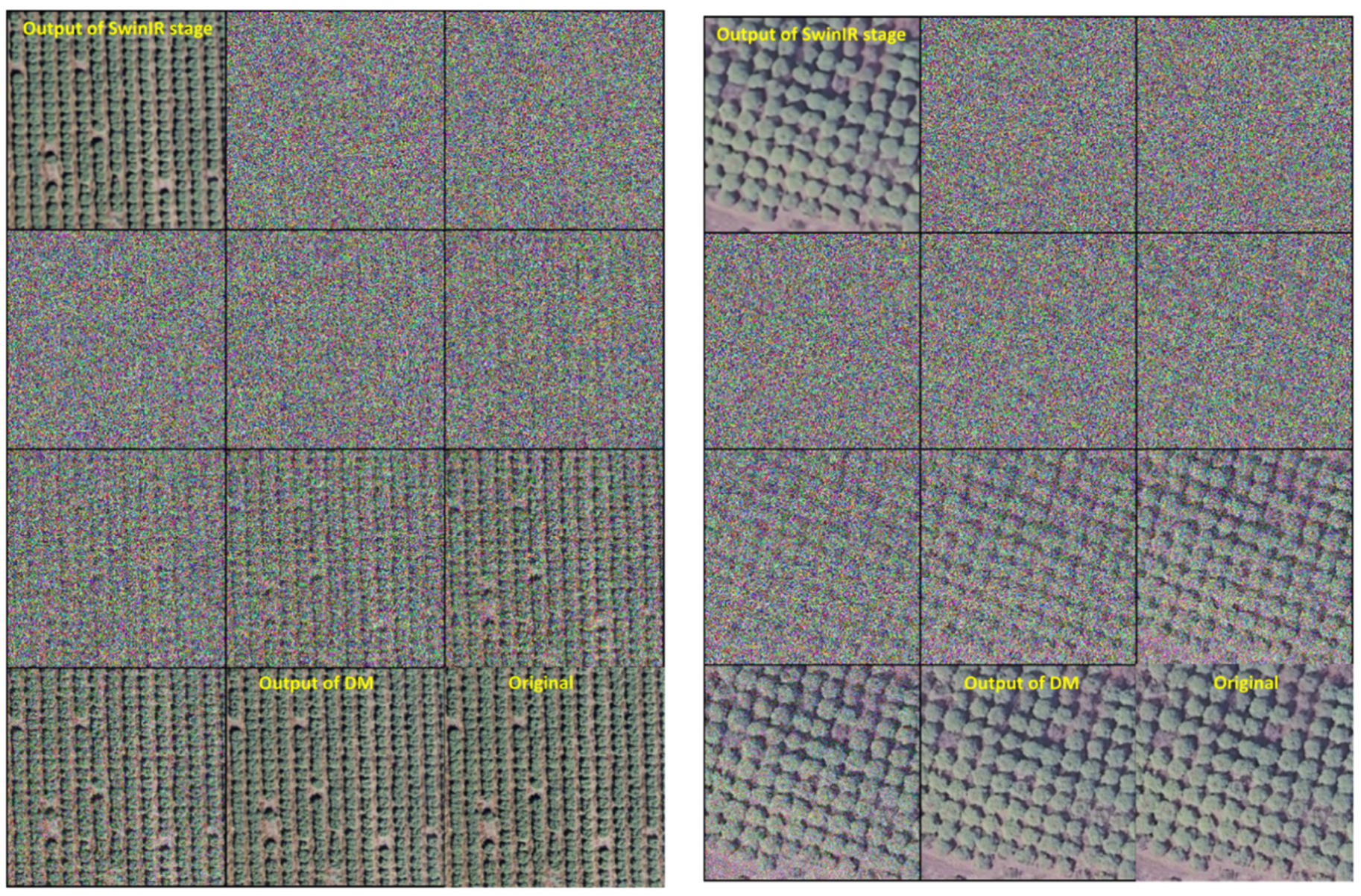

- Showing that the Vision Transformer block is better suited to the super-resolution stage (generating global details), while the diffusion model is better suited to the enhancement stage (enhancing fine details);

- Outperforming competing methods on the super-resolution of remote sensing (RS) images from the UCMerced dataset;

- Demonstrating the effectiveness of the Charbonnier loss for training the TESR model, which underlines its usefulness for super-resolution.
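The Charbonnier loss is a smooth approximation of the L1 loss. For a per-pixel error e it applies ρ(e) = √(e² + ε²): for |e| ≫ ε this behaves like |e|, while for |e| ≪ ε it is approximately ε + e²/(2ε), so it stays differentiable at zero. The NumPy sketch below averages ρ over all pixels. The value ε = 1e-3 is a common choice in the super-resolution literature and is an assumption here, not a value taken from TESR; the function name `charbonnier_loss` is illustrative.

```python
import numpy as np

def charbonnier_loss(pred, target, eps: float = 1e-3) -> float:
    """Mean Charbonnier penalty sqrt((pred - target)^2 + eps^2) over all pixels.
    eps = 1e-3 is an assumed default, not taken from the TESR paper."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))

rng = np.random.default_rng(0)
hr = rng.random((32, 32))                       # hypothetical ground-truth patch in [0, 1]
sr = hr + 0.05 * rng.standard_normal(hr.shape)  # hypothetical network output
l1 = np.mean(np.abs(sr - hr))
# The Charbonnier value is never below the mean absolute error and exceeds it by at most eps.
print(charbonnier_loss(sr, hr), l1)
```

Because the penalty grows linearly for large errors, outlier pixels pull on the gradient less than they would under an MSE loss, which is one common explanation for its use in super-resolution training.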

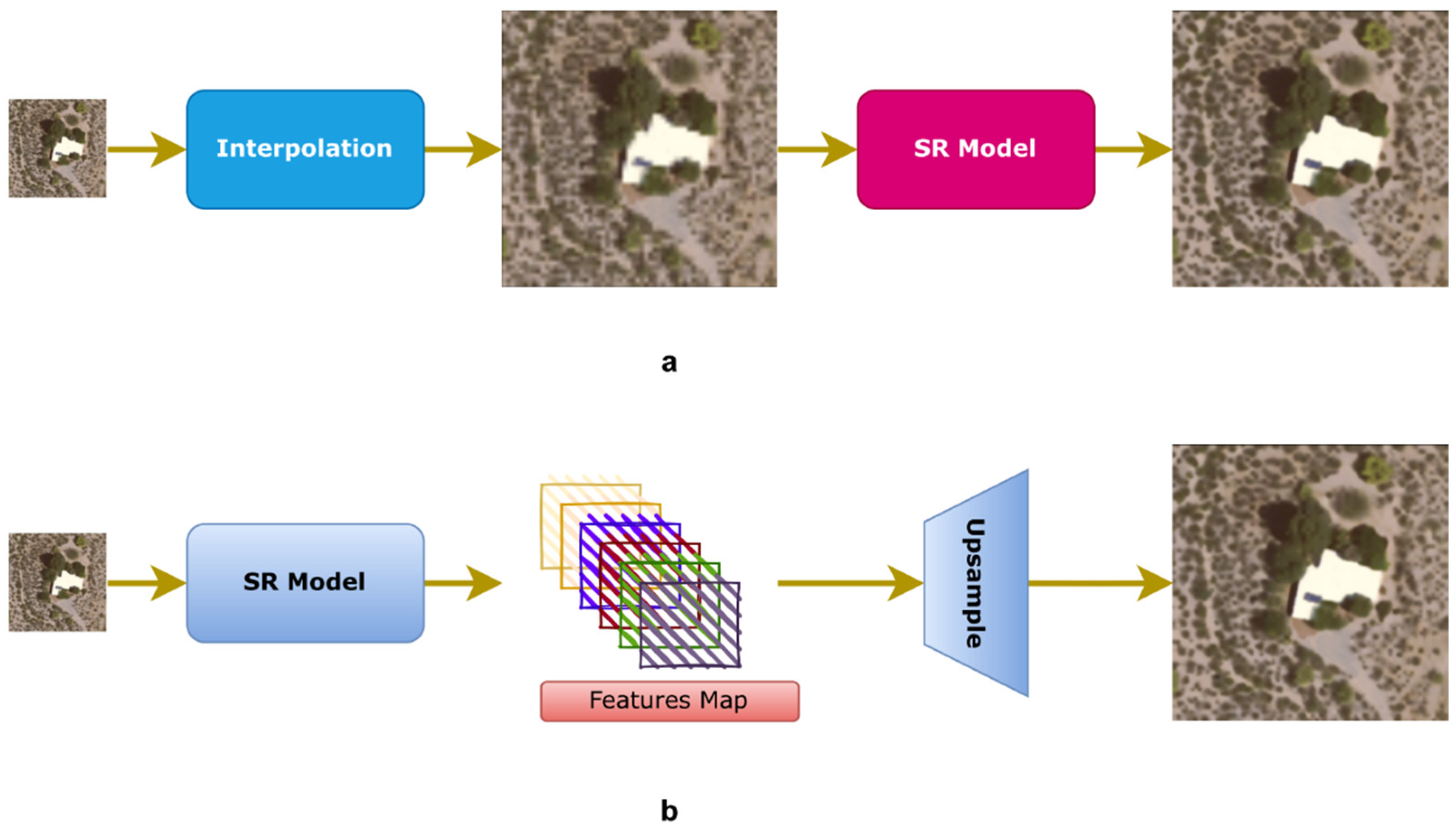

2. Related Work

3. Proposed Methodology

3.1. TESR Architecture

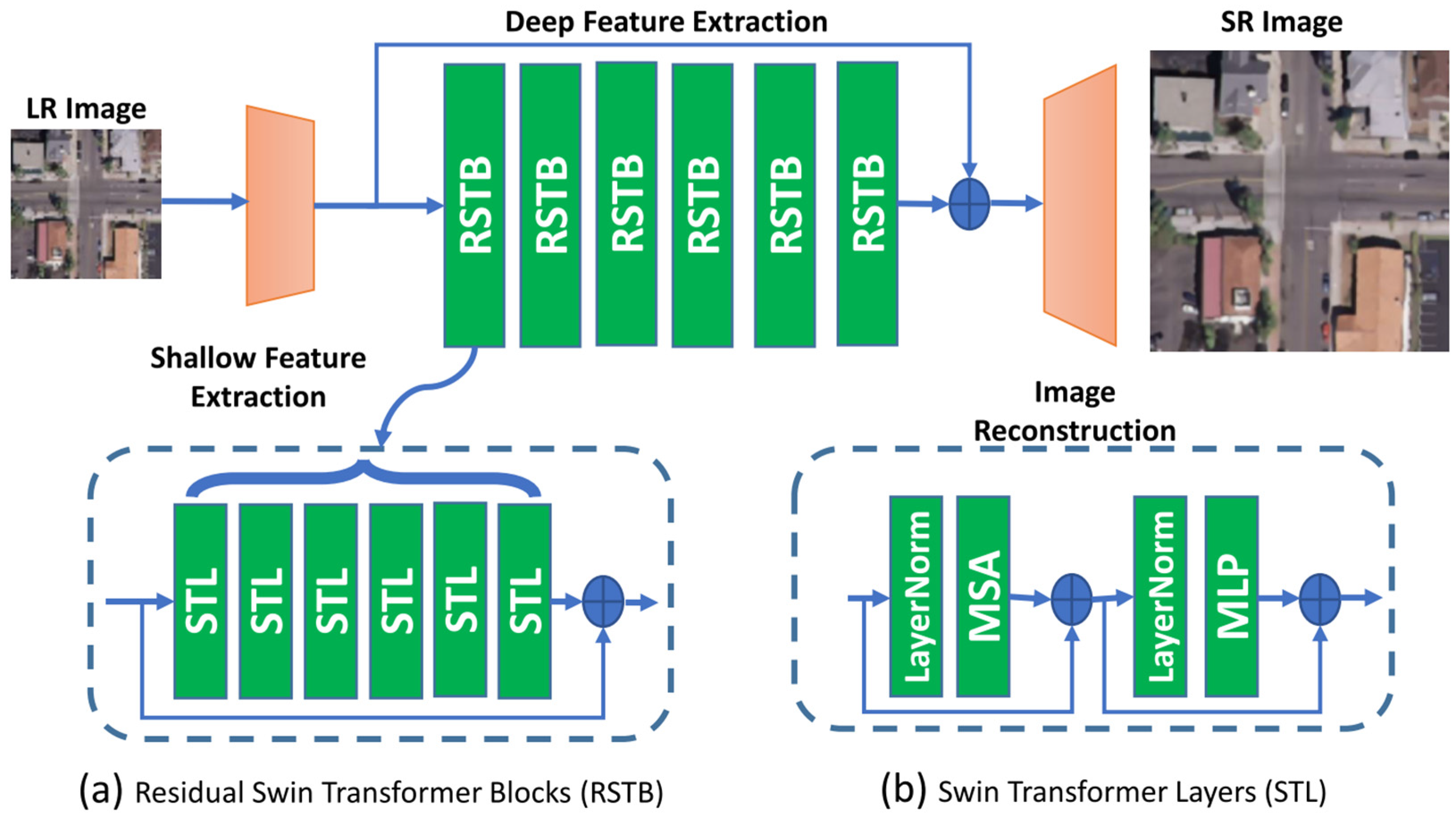
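TESR chains a super-resolution stage (a Vision Transformer; the result tables label it "Deep-Tuning SwinIR") with an enhancement stage (a diffusion model). The sketch below illustrates only this data flow. Both stage functions are hypothetical stand-ins (nearest-neighbour upsampling and unsharp masking) for the trained networks, chosen so the example runs without model weights. It assumes, consistent with its role as an enhancement step, that Stage 2 refines an image at the target resolution, so only Stage 1 changes the spatial size.

```python
import numpy as np

def super_resolution_stage(lr: np.ndarray, scale: int) -> np.ndarray:
    # Hypothetical stand-in for Stage 1 (a trained SwinIR-style network in TESR):
    # nearest-neighbour upsampling by an integer scale factor.
    return np.repeat(np.repeat(lr, scale, axis=0), scale, axis=1)

def box_blur3(img: np.ndarray) -> np.ndarray:
    # 3x3 mean filter with edge replication, used by the placeholder enhancer below.
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / 9.0

def enhancement_stage(sr: np.ndarray, amount: float = 0.5) -> np.ndarray:
    # Hypothetical stand-in for Stage 2 (a diffusion model in TESR):
    # unsharp masking, which boosts fine detail without changing the image size.
    return np.clip(sr + amount * (sr - box_blur3(sr)), 0.0, 1.0)

def tesr_like_pipeline(lr: np.ndarray, scale: int) -> np.ndarray:
    # Two-stage flow: upscale first, then refine at the target resolution.
    return enhancement_stage(super_resolution_stage(lr, scale))

lr = np.random.default_rng(0).random((16, 16))  # hypothetical grayscale LR image in [0, 1]
out = tesr_like_pipeline(lr, scale=4)
print(out.shape)  # (64, 64)
```

Keeping the two stages as separate functions mirrors the paper's design choice of training and evaluating each stage on its own (Stage 1 and Stage 2 columns in the result tables).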

3.1.1. Super-Resolution Stage

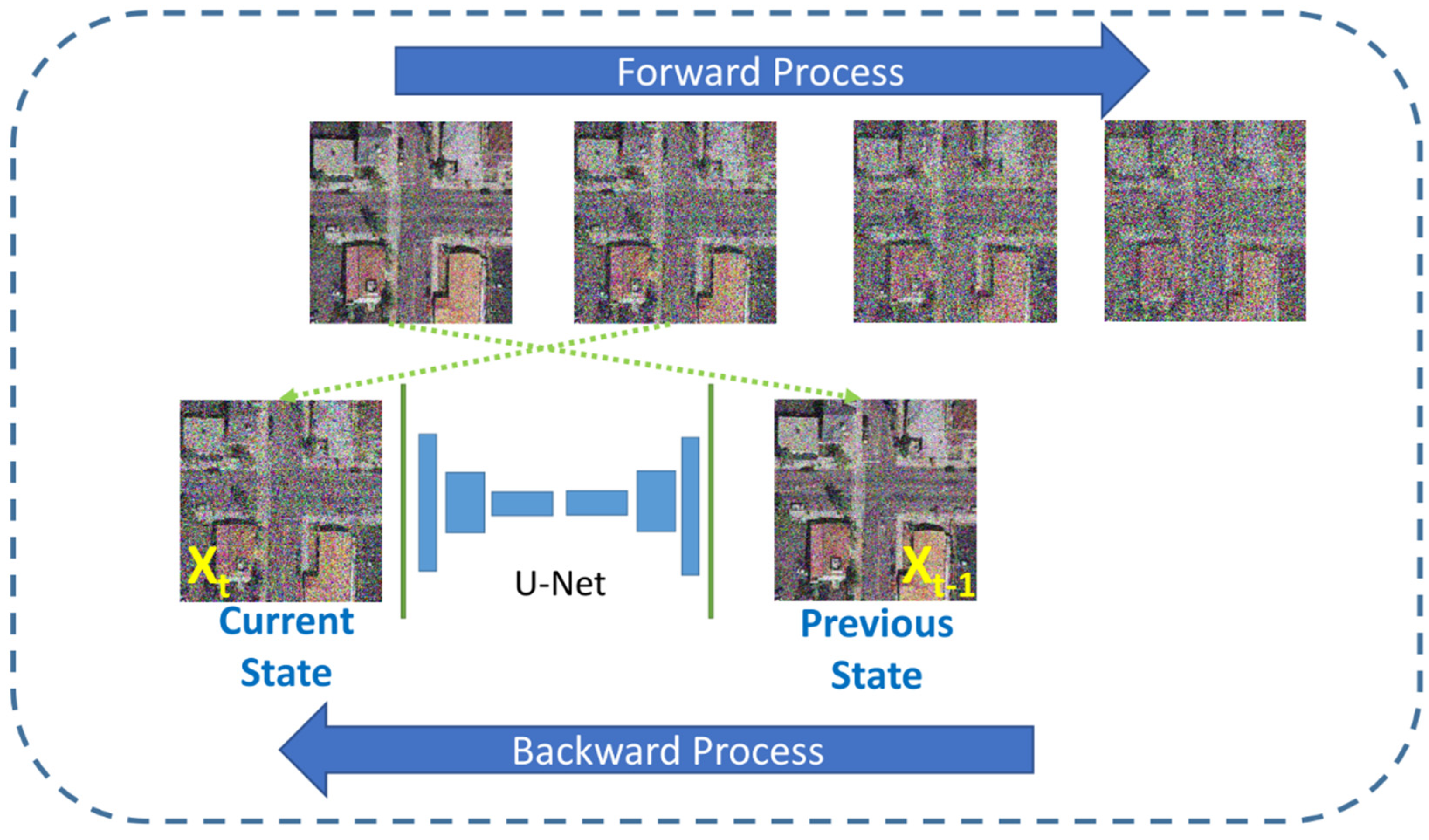
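In SwinIR, which the Stage 1 results build on, the classical super-resolution head upsamples features with sub-pixel convolution (Shi et al. [43]): a convolution outputs C·r² channels, which are then rearranged into a C-channel map that is r times larger in each spatial dimension. Whether TESR keeps this exact upsampler is an assumption here. The NumPy sketch below shows only the rearrangement step (the learnable convolution before it is omitted); the name `pixel_shuffle` is illustrative, and the channel ordering follows PyTorch's `torch.nn.PixelShuffle`.

```python
import numpy as np

def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r)."""
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    # Split channels into (C, r, r): entry [c, i, j] comes from channel c*r*r + i*r + j
    x = x.reshape(c, r, r, h, w)
    # Interleave sub-pixel offsets with spatial axes: (C, H, r, W, r)
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(c, h * r, w * r)

# A x2 upsampling of a 3-channel 4x4 feature map needs 3 * 2 * 2 = 12 input channels.
features = np.arange(12 * 4 * 4, dtype=float).reshape(12, 4, 4)
out = pixel_shuffle(features, 2)
print(out.shape)  # (3, 8, 8)
```

The rearrangement itself has no parameters, so all the learning happens in the low-resolution feature space, which keeps the upsampling head cheap.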

3.1.2. Enhancement Stage
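The enhancement stage uses a diffusion model; the "Deep-Tuning Iterative DM" label in the result tables suggests SR3-style iterative refinement [22], which builds on denoising diffusion probabilistic models (DDPM) [21]. As background only, the sketch below shows the DDPM forward (noising) process in closed form, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, with the linear β schedule (1e-4 to 0.02 over T = 1000 steps) used by Ho et al. During training, a network ε_θ(x_t, t, y) conditioned on an input image y learns to predict ε. In TESR, y would presumably be the Stage 1 output; that conditioning, the schedule and T are assumptions here, and the network itself is not shown.

```python
import numpy as np

def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    # Per-step noise variances (linear schedule from Ho et al., 2020; assumed, not from TESR)
    return np.linspace(beta_start, beta_end, T)

def q_sample(x0: np.ndarray, t: int, alphas_bar: np.ndarray, noise: np.ndarray) -> np.ndarray:
    # Closed-form forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise
    return np.sqrt(alphas_bar[t]) * x0 + np.sqrt(1.0 - alphas_bar[t]) * noise

betas = linear_beta_schedule()
alphas_bar = np.cumprod(1.0 - betas)  # abar_t = product over s <= t of (1 - beta_s)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(16, 16))  # hypothetical HR target scaled to [-1, 1]
t = int(rng.integers(0, len(betas)))        # random timestep, as in DDPM training
noise = rng.standard_normal(x0.shape)       # epsilon: the regression target for eps_theta
x_t = q_sample(x0, t, alphas_bar, noise)
# Training would minimise mean((eps_theta(x_t, t, y) - noise) ** 2) over random t and noise.
```

At inference, the learned ε_θ is applied iteratively from pure noise back to t = 0, which is what makes the refinement "iterative" and also what makes this stage slower than a single forward pass.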

3.2. The Training Algorithm of TESR

3.3. Description of the Training Algorithm

Algorithm 1: TESR

4. Results and Analysis

4.1. Experimental and Analysis Details

- Effectively recovering high-frequency (fine) details in the image, such as edges and texture;

- Preserving structural information in the image, such as the overall shape and layout of objects;

- Enhancing images with complex structures and noise by adapting to the local characteristics of the image;

- Handling images with missing or corrupted pixels by filling in missing data based on the surrounding pixels.

4.2. Performance Evaluation

- Human evaluation: this involves presenting the image to a panel of human judges, who then rate the image based on various subjective criteria;
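The quantitative results in this article are reported as PSNR, SSIM [47] and MS-SSIM [49]. PSNR = 10 log10(MAX²/MSE), where MAX is the data range (255 for 8-bit images); SSIM compares means, variances and the covariance of two images. The sketch below implements PSNR and evaluates the SSIM formula once over the whole image (`global_ssim`, a hypothetical name), whereas the standard index averages it over sliding local windows, so its values will not match the tables. Windowed implementations are available in scikit-image as `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity`. The evaluation protocol behind the reported numbers (color channel, border cropping, data range) is not stated in this section, so those choices here are assumptions.

```python
import numpy as np

def psnr(ref, test, data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    ref = np.asarray(ref, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((ref - test) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

def global_ssim(x, y, data_range: float = 255.0, k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM formula of Wang et al. (2004) evaluated once over the whole image.
    Simplification: the standard index averages this over local windows."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
hr = rng.integers(0, 256, size=(64, 64)).astype(np.float64)     # hypothetical HR image
sr = np.clip(hr + rng.normal(0.0, 5.0, size=hr.shape), 0, 255)  # hypothetical SR output
print(f"PSNR = {psnr(hr, sr):.2f} dB, global SSIM = {global_ssim(hr, sr):.4f}")
```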

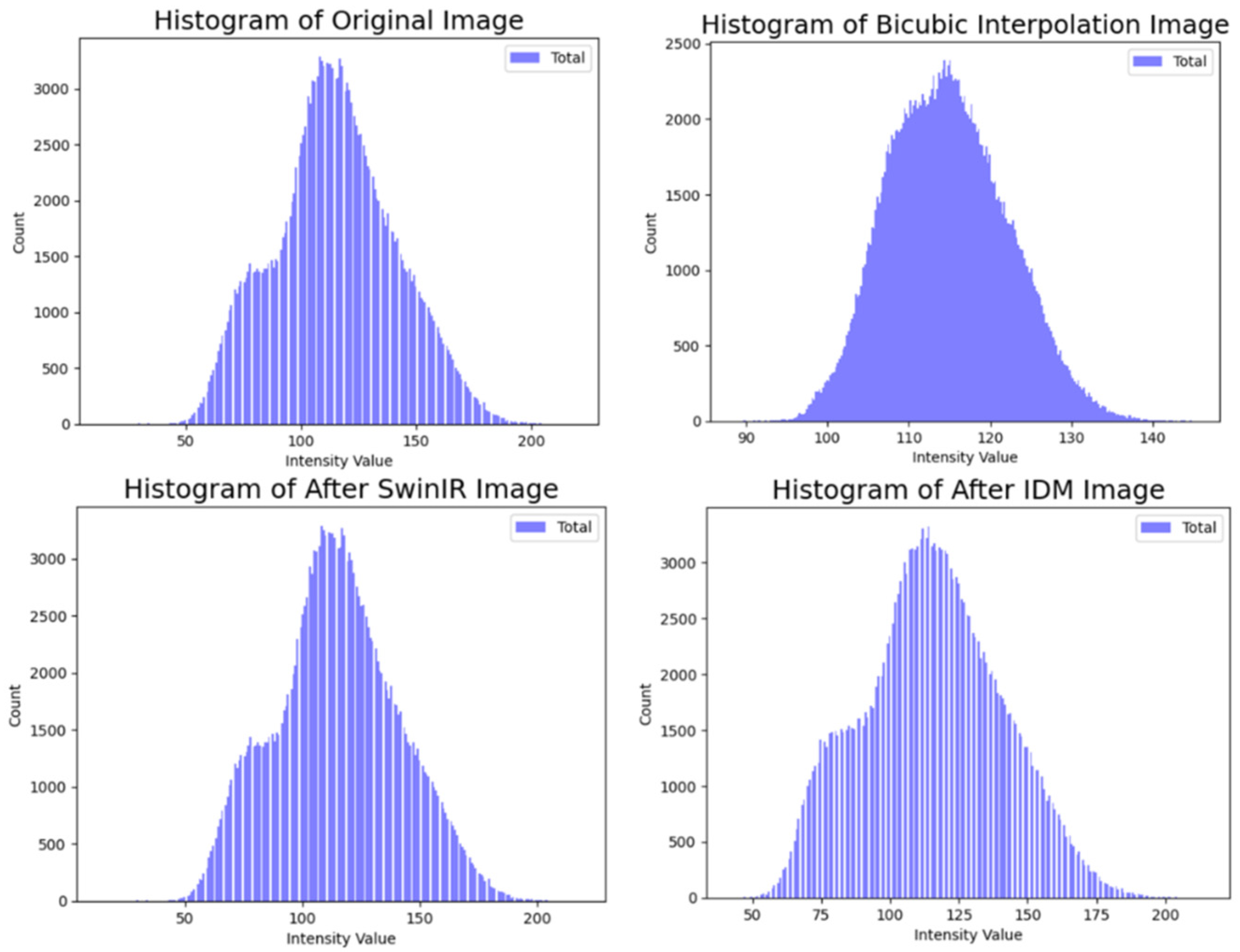

4.3. Results Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Lim, S.B.; Seo, C.W.; Yun, H.C. Digital Map Updates with UAV Photogrammetric Methods. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2015, 33, 397–405.

2. Sun, H.; Sun, X.; Wang, H.; Li, Y.; Li, X. Automatic Target Detection in High-Resolution Remote Sensing Images Using Spatial Sparse Coding Bag-of-Words Model. IEEE Geosci. Remote Sens. Lett. 2012, 9, 109–113.

3. Benjdira, B.; Koubaa, A.; Boulila, W.; Ammar, A. Parking Analytics Framework Using Deep Learning. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 9–11 May 2022; pp. 200–205.

4. Benjdira, B.; Koubaa, A.; Azar, A.T.; Khan, Z.; Ammar, A.; Boulila, W. TAU: A Framework for Video-Based Traffic Analytics Leveraging Artificial Intelligence and Unmanned Aerial Systems. Eng. Appl. Artif. Intell. 2022, 114, 105095.

5. Guo, M.; Liu, H.; Xu, Y.; Huang, Y. Building Extraction Based on U-Net with an Attention Block and Multiple Losses. Remote Sens. 2020, 12, 1400.

6. Tang, Y.; Zhu, M.; Chen, Z.; Wu, C.; Chen, B.; Li, C.; Li, L. Seismic Performance Evaluation of Recycled Aggregate Concrete-Filled Steel Tubular Columns with Field Strain Detected via a Novel Mark-Free Vision Method. Structures 2022, 37, 426–441.

7. Wang, Z.; Jiang, K.; Yi, P.; Han, Z.; He, Z. Ultra-Dense GAN for Satellite Imagery Super-Resolution. Neurocomputing 2020, 398, 328–337.

8. Xiang-Guang, Z. A New Kind of Super-Resolution Reconstruction Algorithm Based on the ICM and the Bicubic Interpolation. In Proceedings of the 2nd 2008 International Symposium on Intelligent Information Technology Application Workshop (IITA 2008 Workshop), Shanghai, China, 21–22 December 2008; pp. 817–820.

9. Khan, A.R.; Saba, T.; Khan, M.Z.; Fati, S.M.; Khan, M.U.G. Classification of Human’s Activities from Gesture Recognition in Live Videos Using Deep Learning. Concurr. Comput. 2022, 34, e6825.

10. Ubaid, M.T.; Saba, T.; Draz, H.U.; Rehman, A.; Ghani, M.U.; Kolivand, H. Intelligent Traffic Signal Automation Based on Computer Vision Techniques Using Deep Learning. IT Prof. 2022, 24, 27–33.

11. Delia-Alexandrina, M.; Nedevschi, S.; Fati, S.M.; Senan, E.M.; Azar, A.T. Hybrid and Deep Learning Approach for Early Diagnosis of Lower Gastrointestinal Diseases. Sensors 2022, 22, 4079.

12. Ran, Q.; Xu, X.; Zhao, S.; Li, W.; Du, Q. Remote Sensing Images Super-Resolution with Deep Convolution Networks. Multimed. Tools Appl. 2020, 79, 8985–9001.

13. Zhu, Y.; Geiß, C.; So, E. Image Super-Resolution with Dense-Sampling Residual Channel-Spatial Attention Networks for Multi-Temporal Remote Sensing Image Classification. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102543.

14. Jiang, K.; Wang, Z.; Yi, P.; Wang, G.; Lu, T.; Jiang, J. Edge-Enhanced GAN for Remote Sensing Image Superresolution. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5799–5812.

15. Xiong, Y.; Guo, S.; Chen, J.; Deng, X.; Sun, L.; Zheng, X.; Xu, W. Improved SRGAN for Remote Sensing Image Super-Resolution Across Locations and Sensors. Remote Sens. 2020, 12, 1263.

16. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

17. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

18. El-Shafai, W.; Ali, A.M.; El-Rabaie, E.S.M.; Soliman, N.F.; Algarni, A.D.; Abd El-Samie, F.E. Automated COVID-19 Detection Based on Single-Image Super-Resolution and CNN Models. Comput. Mater. Contin. 2021, 70, 1141–1157.

19. El-Shafai, W.; Mohamed, E.M.; Zeghid, M.; Ali, A.M.; Aly, M.H. Hybrid Single Image Super-Resolution Algorithm for Medical Images. Comput. Mater. Contin. 2022, 72, 4879–4896.

20. Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep Unsupervised Learning Using Nonequilibrium Thermodynamics. In Proceedings of the 32nd International Conference on Machine Learning (ICML), Lille, France, 7–9 July 2015; PMLR 37, pp. 2256–2265.

21. Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

22. Saharia, C.; Ho, J.; Chan, W.; Salimans, T.; Fleet, D.J.; Norouzi, M. Image Super-Resolution Via Iterative Refinement. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4713–4726.

23. Dhariwal, P.; Nichol, A. Diffusion Models Beat GANs on Image Synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

24. Nichol, A.Q.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; PMLR 139, pp. 8162–8171.

25. Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-Based Generative Modeling through Stochastic Differential Equations. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3 May 2021; Available online: https://openreview.net/forum?id=PxTIG12RRHS (accessed on 15 April 2023).

26. Wolleb, J.; Sandkühler, R.; Bieder, F.; Valmaggia, P.; Cattin, P.C. Diffusion Models for Implicit Image Segmentation Ensembles. Proc. Mach. Learn. Res. 2022, 172, 1336–1348.

27. Baranchuk, D.; Rubachev, I.; Voynov, A.; Khrulkov, V.; Babenko, A. Label-Efficient Semantic Segmentation with Diffusion Models. arXiv 2021, arXiv:2112.03126.

28. Whang, J.; Delbracio, M.; Talebi, H.; Saharia, C.; Dimakis, A.G.; Milanfar, P. Deblurring via Stochastic Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 16293–16303.

29. Yang, Y.; Newsam, S. Bag-of-Visual-Words and Spatial Extensions for Land-Use Classification. In Proceedings of the ACM International Symposium on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

30. Wan, F.; Zhang, X. Super Resolution Reconstruction Algorithm of UAV Image Based on Residual Neural Network. IEEE Access 2021, 9, 140372–140382.

31. González, D.; Patricio, M.A.; Berlanga, A.; Molina, J.M. A Super-Resolution Enhancement of UAV Images Based on a Convolutional Neural Network for Mobile Devices. Pers. Ubiquitous Comput. 2022, 26, 1193–1204.

32. Zhang, D.; Shao, J.; Li, X.; Shen, H.T. Remote Sensing Image Super-Resolution via Mixed High-Order Attention Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5183–5196.

33. Guo, M.; Zhang, Z.; Liu, H.; Huang, Y. NDSRGAN: A Novel Dense Generative Adversarial Network for Real Aerial Imagery Super-Resolution Reconstruction. Remote Sens. 2022, 14, 1574.

34. Xiao, Y.; Zhang, J.; Chen, W.; Wang, Y.; You, J.; Wang, Q. SR-DeblurUGAN: An End-to-End Super-Resolution and Deblurring Model with High Performance. Drones 2022, 6, 162.

35. Li, B.; Qiu, S.; Jiang, W.; Zhang, W.; Le, M. A UAV Detection and Tracking Algorithm Based on Image Feature Super-Resolution. Wirel. Commun. Mob. Comput. 2022, 2022, 6526684.

36. Lei, S.; Shi, Z.; Mo, W. Transformer-Based Multistage Enhancement for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5615611.

37. Tu, J.; Mei, G.; Ma, Z.; Piccialli, F. SWCGAN: Generative Adversarial Network Combining Swin Transformer and CNN for Remote Sensing Image Super-Resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5662–5673.

38. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021; pp. 10012–10022.

39. Liu, J.; Yuan, Z.; Pan, Z.; Fu, Y.; Liu, L.; Lu, B. Diffusion Model with Detail Complement for Super-Resolution of Remote Sensing. Remote Sens. 2022, 14, 4834.

40. Fu, X.; Wang, J.; Zeng, D.; Huang, Y.; Ding, X. Remote Sensing Image Enhancement Using Regularized-Histogram Equalization and DCT. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2301–2305.

41. Ablin, R.; Helen Sulochana, C.; Prabin, G. An Investigation in Satellite Images Based on Image Enhancement Techniques. Eur. J. Remote Sens. 2020, 53 (Suppl. 2), 86–94.

42. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Volume 8689, pp. 818–833.

43. Shi, W.; Caballero, J.; Huszar, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

44. Kingma, D.P.; Welling, M. An Introduction to Variational Autoencoders. Found. Trends® Mach. Learn. 2019, 12, 307–392.

45. Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

46. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 2017, arXiv:1710.10196.

47. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

48. Lin, W.; Jay Kuo, C.C. Perceptual Visual Quality Metrics: A Survey. J. Vis. Commun. Image Represent. 2011, 22, 297–312.

49. Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multi-Scale Structural Similarity for Image Quality Assessment. In Proceedings of the Conference Record of the Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

50. Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image Super-Resolution via Sparse Representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

51. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

52. Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; Volume 9906 LNCS, pp. 391–407.

53. Lei, S.; Shi, Z.; Zou, Z. Super-Resolution for Remote Sensing Images via Local-Global Combined Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

54. Haut, J.M.; Paoletti, M.E.; Fernandez-Beltran, R.; Plaza, J.; Plaza, A.; Li, J. Remote Sensing Single-Image Superresolution Based on a Deep Compendium Model. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1432–1436.

55. Qin, M.; Mavromatis, S.; Hu, L.; Zhang, F.; Liu, R.; Sequeira, J.; Du, Z. Remote Sensing Single-Image Resolution Improvement Using A Deep Gradient-Aware Network with Image-Specific Enhancement. Remote Sens. 2020, 12, 758.

56. Deng, Z.; Cai, Y.; Chen, L.; Gong, Z.; Bao, Q.; Yao, X.; Fang, D.; Yang, W.; Zhang, S.; Ma, L. RFormer: Transformer-Based Generative Adversarial Network for Real Fundus Image Restoration on a New Clinical Benchmark. IEEE J. Biomed. Health Inf. 2022, 26, 4645–4655.

| Scale Factor | Deep-Tuning SwinIR PSNR (dB) | Deep-Tuning SwinIR SSIM | Deep-Tuning Iterative DM PSNR (dB) | Deep-Tuning Iterative DM SSIM |
|---|---|---|---|---|
| ×2 | 34.938 | 0.9232 | 30.256 | 0.90742 |

| Scale Factor | Stage 1 (Deep-Tuning SwinIR) PSNR (dB) | Stage 1 SSIM | Stage 1 MS-SSIM | Stage 2 (Deep-Tuning Iterative DM) PSNR (dB) | Stage 2 SSIM | Stage 2 MS-SSIM |
|---|---|---|---|---|---|---|
| ×2 | 34.938 | 0.9232 | 0.9738 | 35.367 | 0.9449 | 0.9892 |
| ×3 | 30.813 | 0.8784 | 0.9385 | 32.311 | 0.91143 | 0.9731 |
| ×4 | 27.424 | 0.8201 | 0.9278 | 31.951 | 0.90456 | 0.9748 |

| Model | ×2 PSNR (dB)/SSIM | ×3 PSNR (dB)/SSIM | ×4 PSNR (dB)/SSIM |
|---|---|---|---|
| Bicubic | 32.76/0.879 | 27.46/0.7631 | 25.65/0.6725 |
| SC [50] | 32.77/0.9166 | 28.26/0.7971 | 26.51/0.7152 |
| SRCNN [51] | 32.84/0.9152 | 28.66/0.8038 | 26.78/0.7219 |
| FSRCNN [52] | 33.18/0.9196 | 29.09/0.8167 | 26.93/0.7267 |
| LGCNet [53] | 33.48/0.9235 | 29.28/0.8238 | 27.02/0.7333 |
| DCM [54] | 33.65/0.9274 | 29.52/0.8394 | 27.22/0.7528 |
| DGANet-ISE [55] | 33.68/0.9344 | -/- | 27.31/0.7665 |
| TransENet [36] | 34.03/0.9301 | 29.92/0.8408 | 27.77/0.7630 |
| TESR (ours) | 35.367/0.9449 | 32.311/0.91143 | 31.951/0.90456 |
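Because PSNR = 10 log10(MAX²/MSE), a PSNR gain of g dB between two methods evaluated under the same protocol corresponds to an MSE that is 10^(g/10) times lower. The short script below applies this to the PSNR values in the comparison table to find the strongest competing method at each scale and TESR's margin over it. It assumes all listed values were computed under a comparable protocol (data range, channel, cropping), which the table itself does not state.

```python
# PSNR values (dB) copied from the comparison table; None marks a missing entry ("-/-").
competitors = {
    "Bicubic": (32.76, 27.46, 25.65),
    "SC": (32.77, 28.26, 26.51),
    "SRCNN": (32.84, 28.66, 26.78),
    "FSRCNN": (33.18, 29.09, 26.93),
    "LGCNet": (33.48, 29.28, 27.02),
    "DCM": (33.65, 29.52, 27.22),
    "DGANet-ISE": (33.68, None, 27.31),
    "TransENet": (34.03, 29.92, 27.77),
}
tesr = (35.367, 32.311, 31.951)
scales = ("x2", "x3", "x4")

margins = {}
for i, scale in enumerate(scales):
    # Strongest competing method at this scale, skipping missing entries
    best_psnr, best_name = max((v[i], name) for name, v in competitors.items() if v[i] is not None)
    gain_db = tesr[i] - best_psnr
    mse_ratio = 10 ** (gain_db / 10)  # a gain of g dB means the MSE is 10^(g/10) times lower
    margins[scale] = (best_name, round(gain_db, 3), mse_ratio)
    print(f"{scale}: +{gain_db:.3f} dB over {best_name}, i.e. about {mse_ratio:.2f}x lower MSE")
```

The margin grows with the scale factor, which is where the Stage 2 enhancement adds the most over Stage 1 in the stage-wise table as well.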

| Scale Factor | MSE Loss PSNR (dB)/SSIM | Edge Loss PSNR (dB)/SSIM | Perceptual Loss PSNR (dB)/SSIM | Charbonnier Loss PSNR (dB)/SSIM |
|---|---|---|---|---|
| ×2 | 27.662/0.78535 | 22.667/0.60635 | 27.761/0.80892 | 35.367/0.9449 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ali, A.M.; Benjdira, B.; Koubaa, A.; Boulila, W.; El-Shafai, W. TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images. Remote Sens. 2023, 15, 2346. https://doi.org/10.3390/rs15092346

Ali AM, Benjdira B, Koubaa A, Boulila W, El-Shafai W. TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images. Remote Sensing. 2023; 15(9):2346. https://doi.org/10.3390/rs15092346

Chicago/Turabian Style: Ali, Anas M., Bilel Benjdira, Anis Koubaa, Wadii Boulila, and Walid El-Shafai. 2023. "TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images" Remote Sensing 15, no. 9: 2346. https://doi.org/10.3390/rs15092346

APA Style: Ali, A. M., Benjdira, B., Koubaa, A., Boulila, W., & El-Shafai, W. (2023). TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images. Remote Sensing, 15(9), 2346. https://doi.org/10.3390/rs15092346