MBCNet: Multi-Branch Collaborative Change-Detection Network Based on Siamese Structure

Abstract

1. Introduction

1.1. Traditional Methods

1.2. Change-Detection Method in Deep Learning

1.3. Content of This Article

1. A multi-branch collaborative change-detection network based on a Siamese structure is proposed.

2. Previous deep learning approaches do not adequately exploit the different kinds of semantic information in remote-sensing images, which limits their feature-extraction ability. Some more-traditional methods cannot even separate changed regions from invariant ones, let alone identify the changing region. The proposed network uses three branches to extract differential, global, and similar semantic information at multiple levels, and it aggregates these features level by level during upsampling. In this way it distinguishes changed areas from invariant areas while preserving edge details as far as possible.

3. To process the differential, global, and similar semantic information in the three branches, a cross-scale feature-attention module (CSAM), a global semantic filtering module (GSFM), a double-branch information-fusion module (DBIFM), and a similarity-enhancement module (SEM) are proposed. The four modules independently and selectively integrate multi-level difference information.

4. Ablation and comparative experiments were performed on the self-built BTRS-CD dataset and the public LEVIR-CD dataset. The ablation experiments show that each module of MHCNet contributes to the change-detection task. The comparative experiments show that MHCNet achieves the highest MIoU among the compared networks.
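The idea of a Siamese encoder feeding difference and similarity cues can be illustrated with a toy sketch. It is not the network described above: the one-weight `encode` function, the mean absolute difference, and the cosine similarity are all assumptions chosen for illustration, and the global branch and the four modules are omitted.

```python
import math


def encode(image, weight):
    """Toy encoder: scales every channel value by one weight.

    Hypothetical stand-in for a CNN backbone, used only for illustration.
    """
    return [[[weight * value for value in pixel] for pixel in row] for row in image]


def difference_map(feat_a, feat_b):
    """Mean absolute channel difference per pixel (assumed 'difference' cue)."""
    return [
        [sum(abs(a - b) for a, b in zip(pa, pb)) / len(pa) for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(feat_a, feat_b)
    ]


def similarity_map(feat_a, feat_b, eps=1e-8):
    """Cosine similarity per pixel (assumed 'similarity' cue)."""
    result = []
    for row_a, row_b in zip(feat_a, feat_b):
        row = []
        for pa, pb in zip(row_a, row_b):
            dot = sum(a * b for a, b in zip(pa, pb))
            norm = math.sqrt(sum(a * a for a in pa)) * math.sqrt(sum(b * b for b in pb))
            row.append(dot / (norm + eps))
        result.append(row)
    return result


# Two 1x2 "images" with two channels per pixel; only the second pixel changes.
image_t1 = [[[1.0, 0.0], [0.5, 0.5]]]
image_t2 = [[[1.0, 0.0], [0.0, 1.0]]]

# The same weight encodes both dates: this weight sharing is the Siamese part.
shared_weight = 2.0
feat_t1 = encode(image_t1, shared_weight)
feat_t2 = encode(image_t2, shared_weight)

diff = difference_map(feat_t1, feat_t2)
sim = similarity_map(feat_t1, feat_t2)
```

In this toy case the unchanged pixel has zero difference and a similarity close to 1, while the changed pixel has a larger difference and a lower similarity, which is the kind of complementary evidence the branches are meant to provide.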

2. Methods

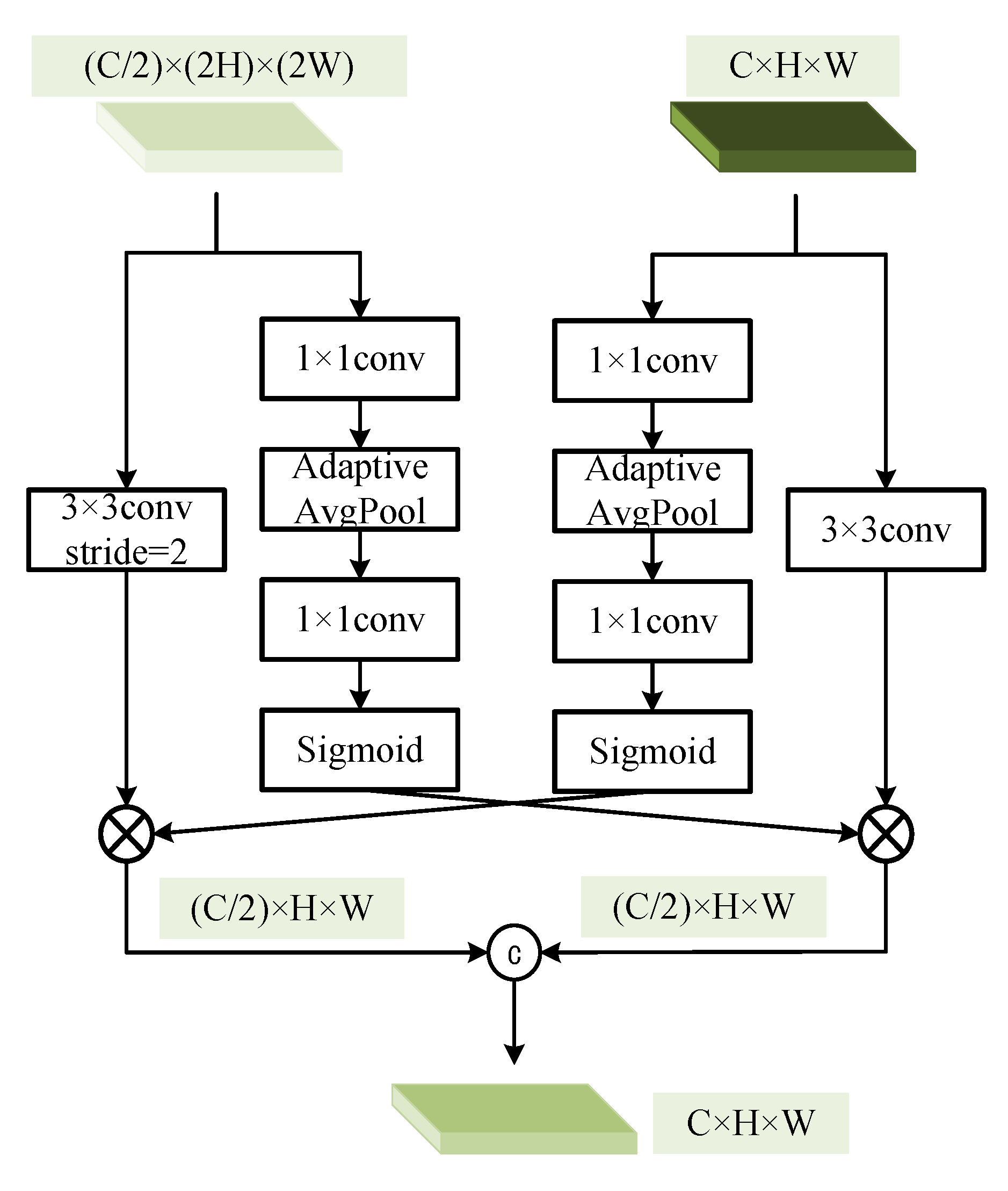

2.1. Cross-Scale Feature-Attention Module (CSAM)

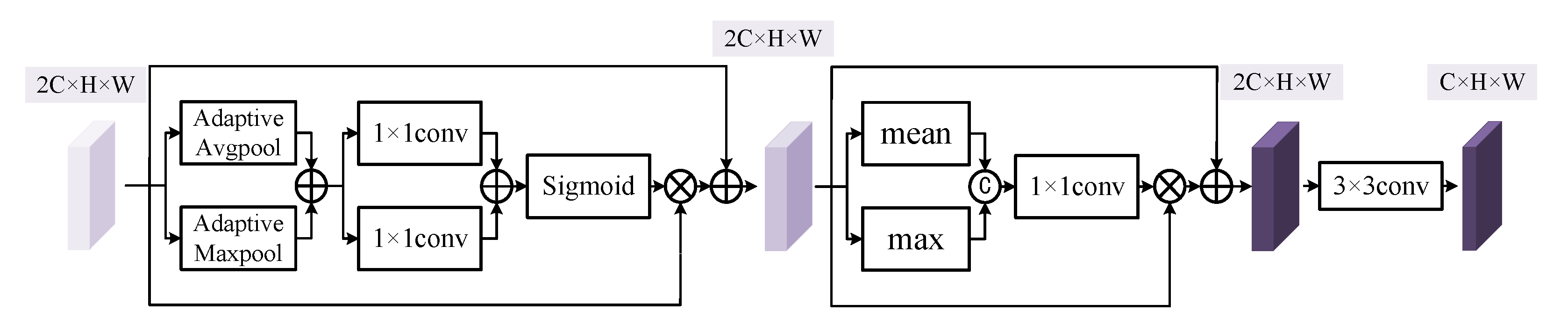

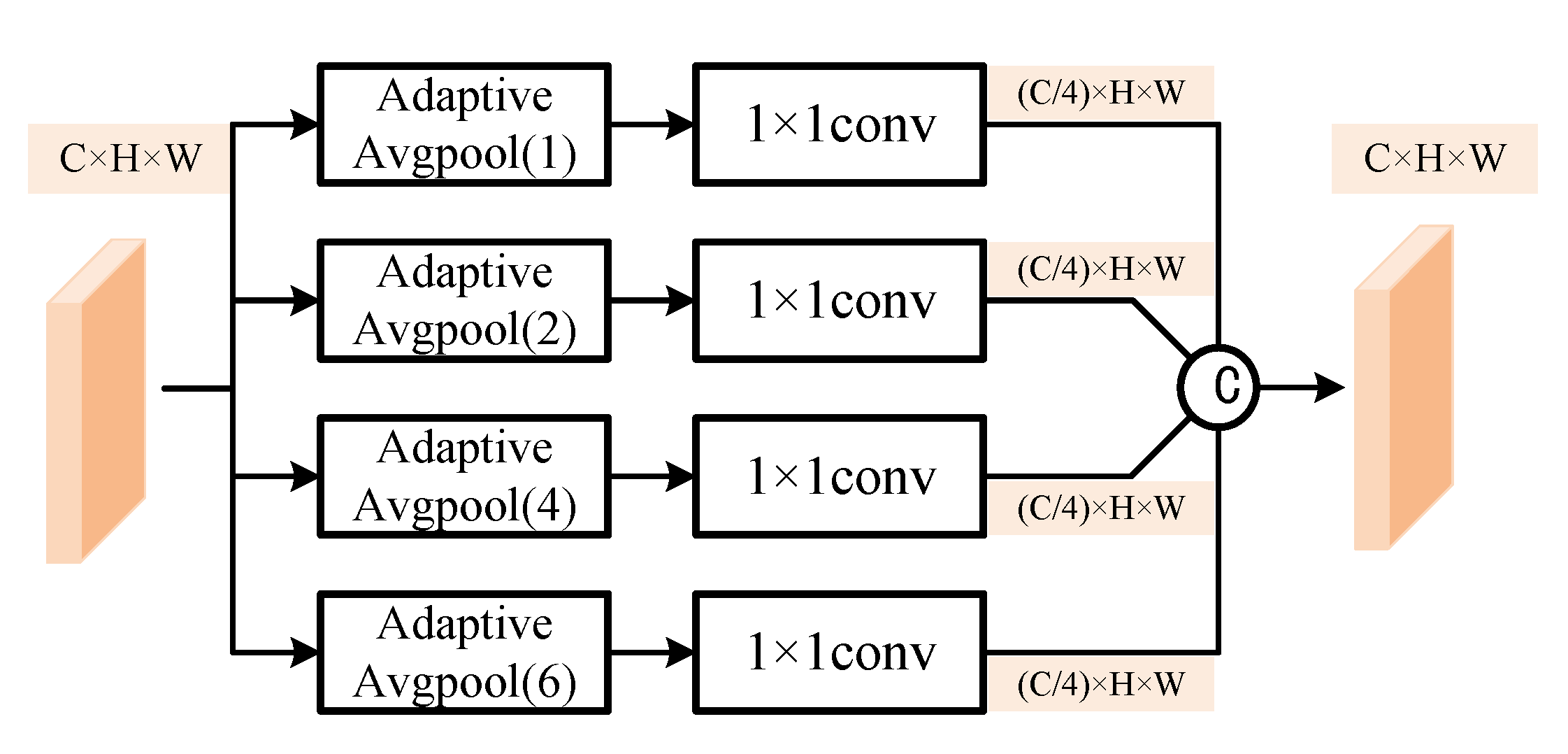

2.2. Global Semantic Filtering Module (GSFM)

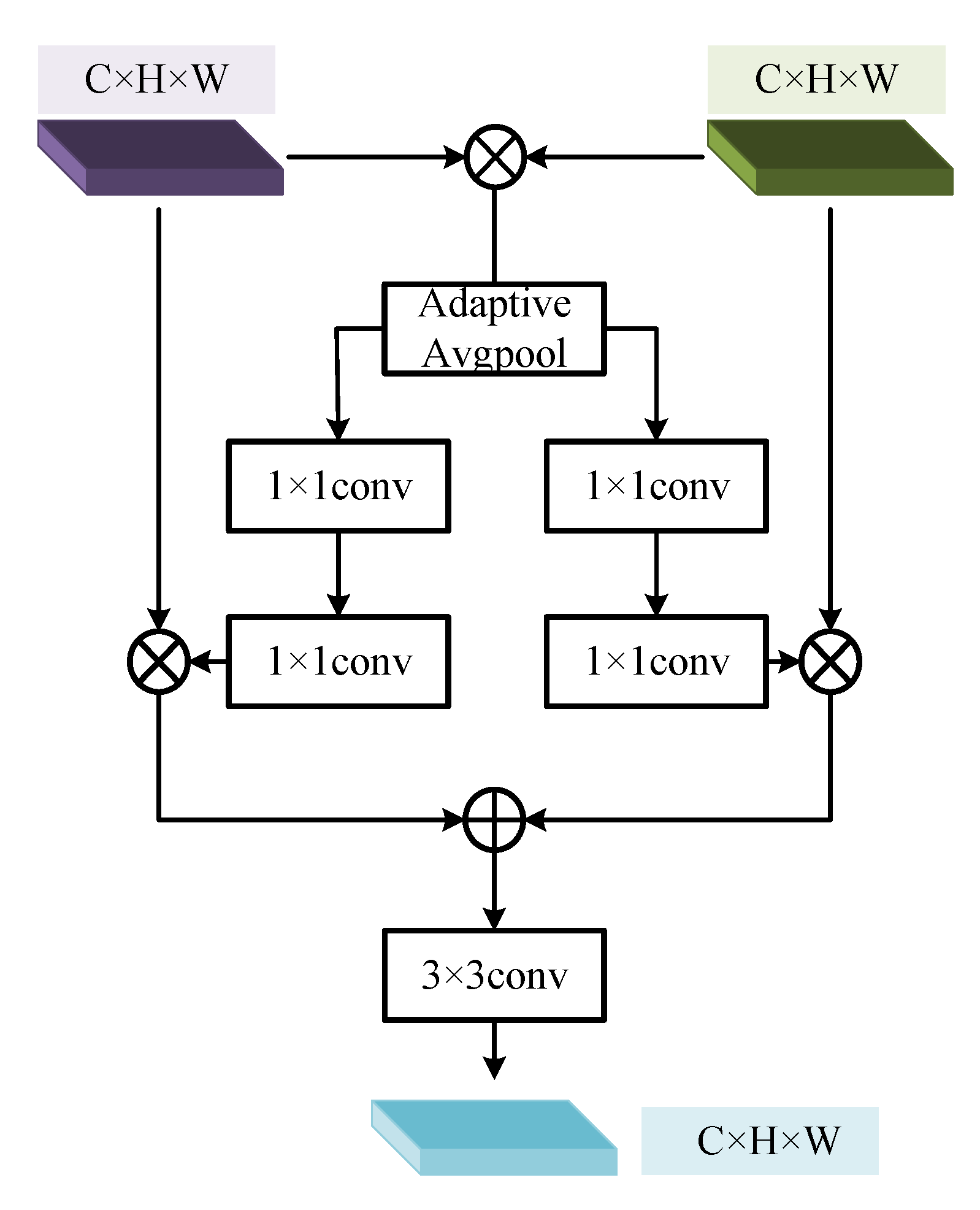

2.3. Double-Branch Information-Fusion Module (DBIFM)

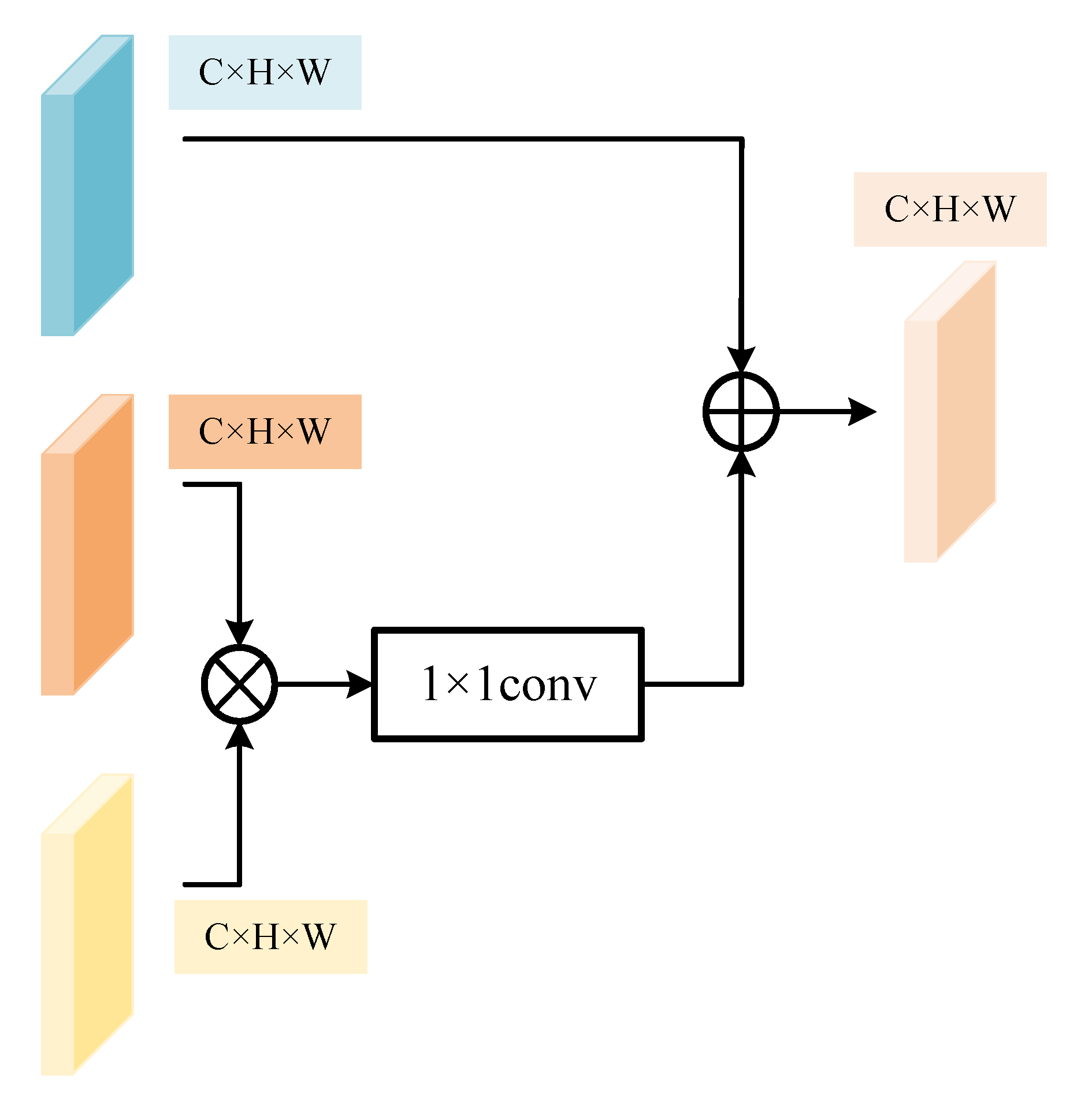

2.4. Similarity-Enhancement Module (SEM)

2.5. Summary at the End of the Chapter

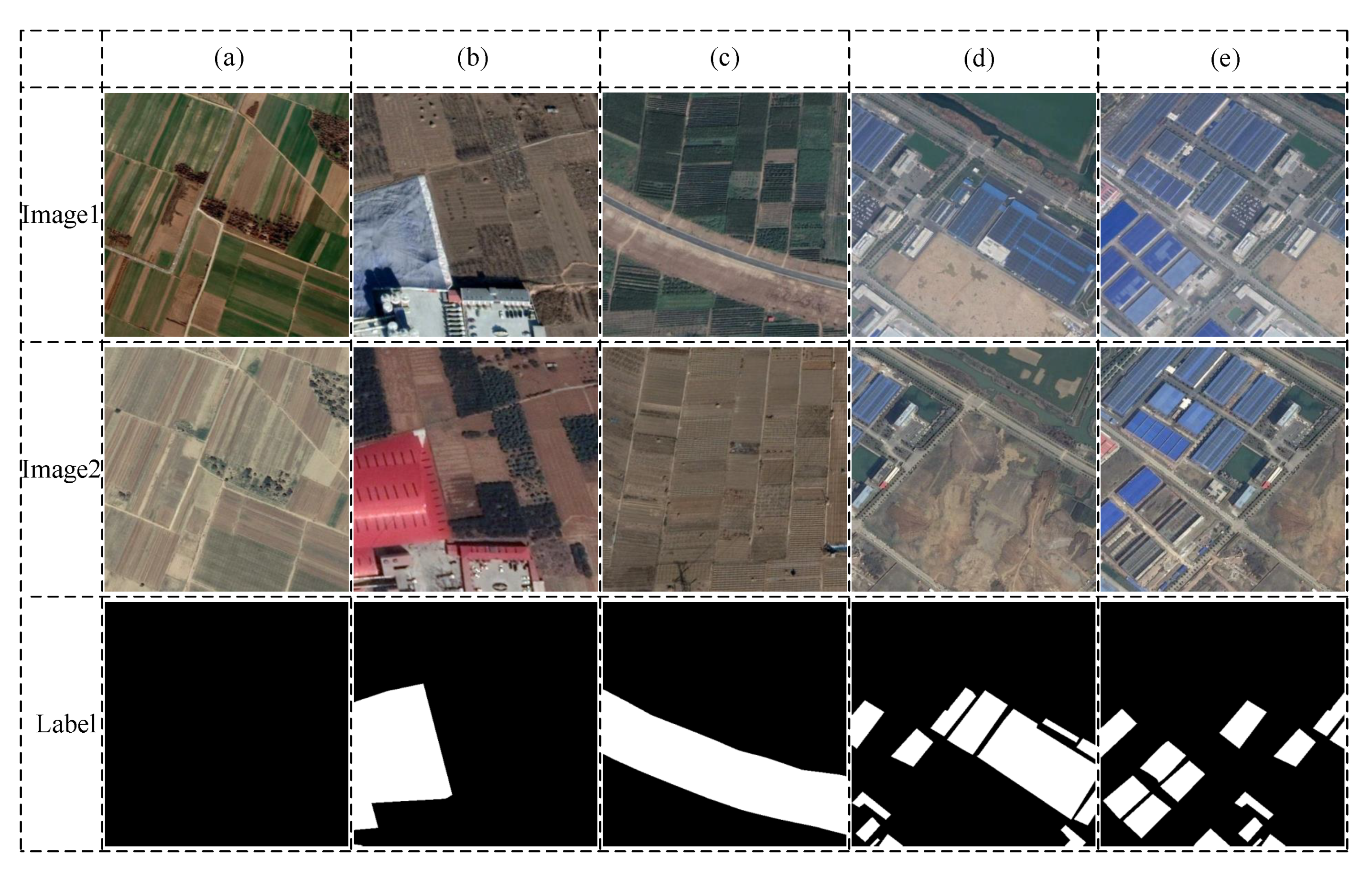

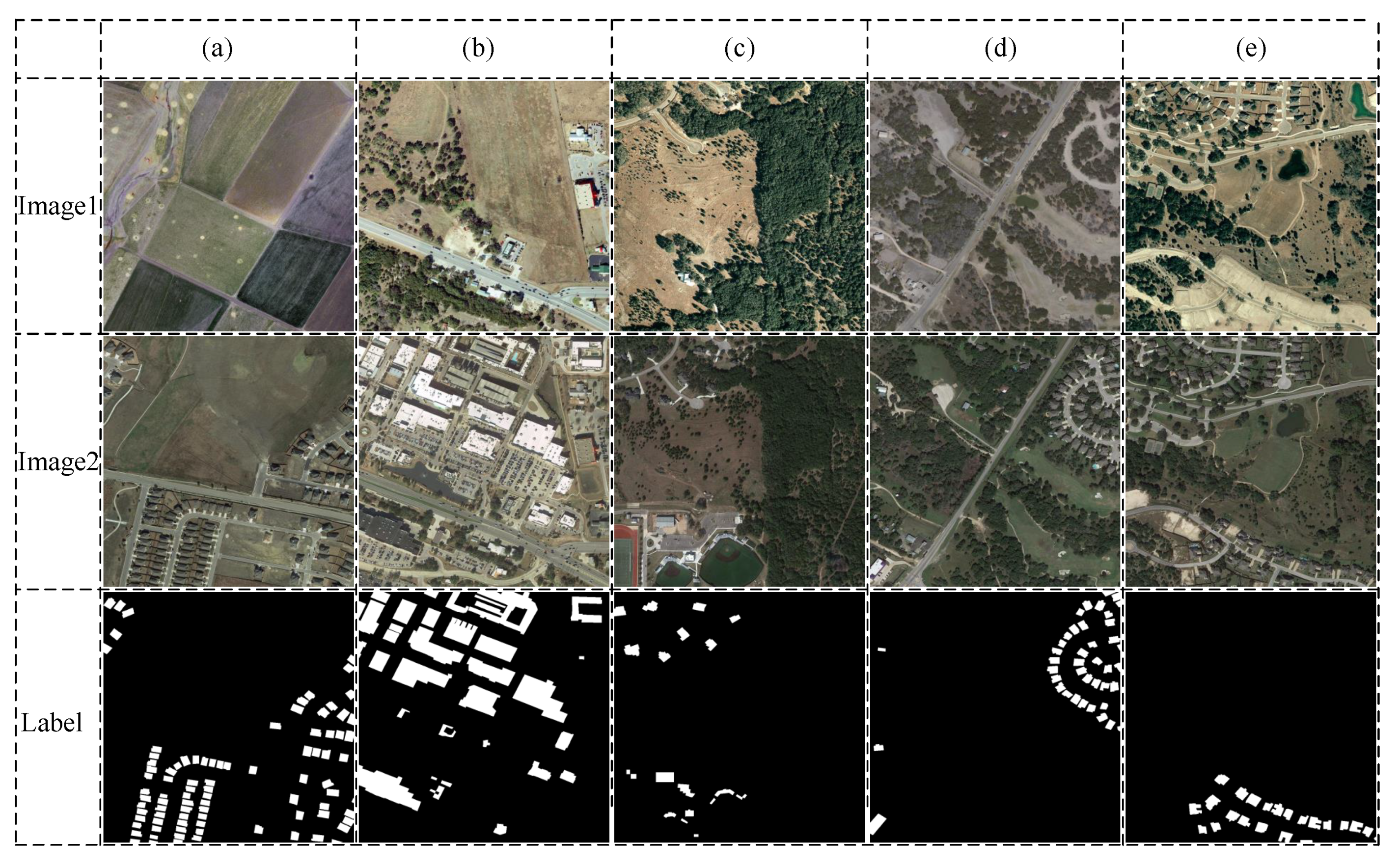

3. Dataset

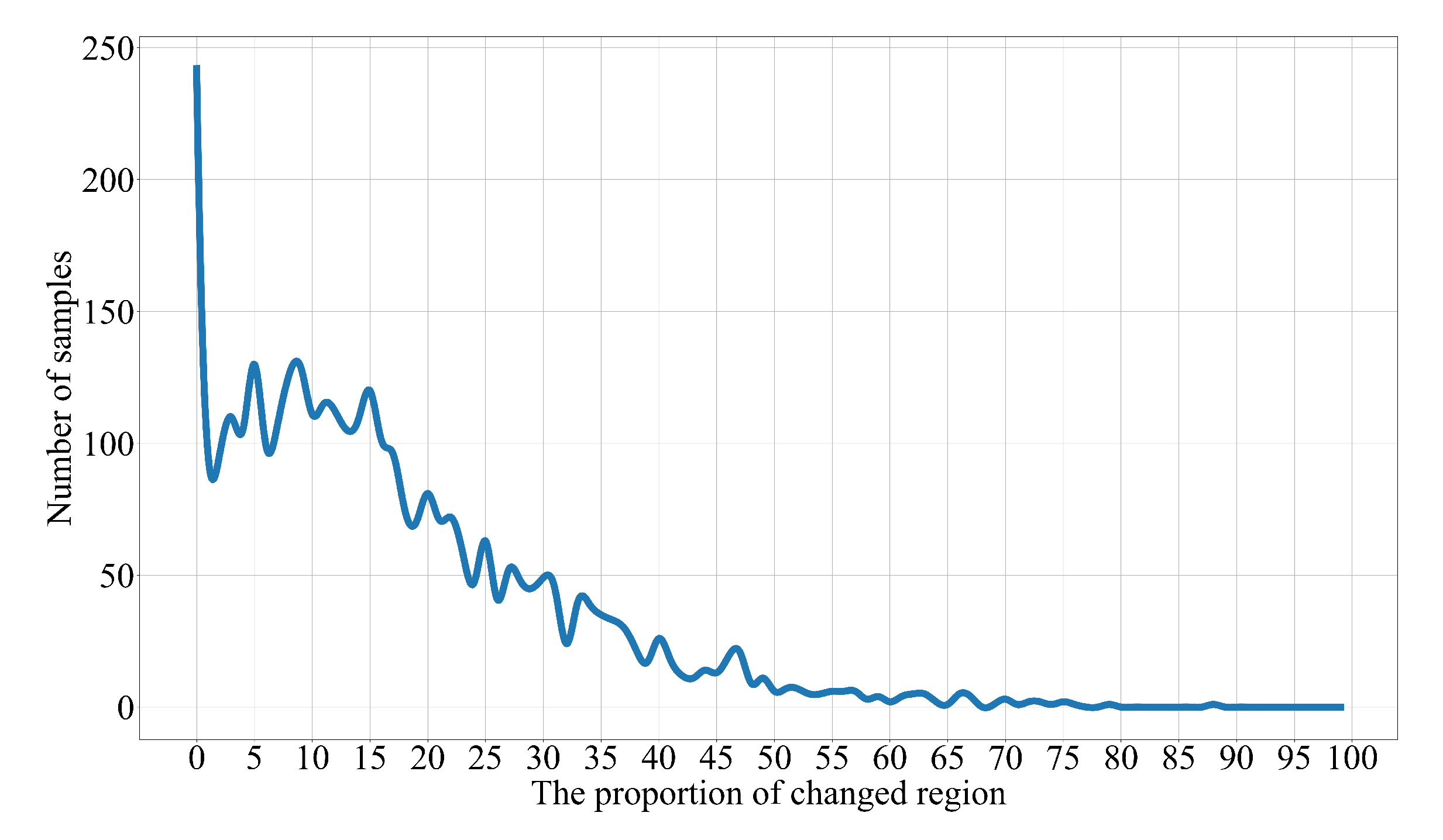

3.1. BTRS-CD Dataset

1. The dataset should contain a large number of changed regions.

2. Human-induced geomorphological changes, such as the expansion of roads into fertile land, the conversion of forests into factories, the expansion and reconstruction of urban buildings, the diversion of rivers, and the return of farmland to forest, produce changed areas in remote-sensing images. The dataset should therefore include as many of these changes as possible.

3. Seasonal geomorphological changes, such as the marked difference between broad-leaved forests in winter and summer or the ebb and flow of rivers, also produce changes in remote-sensing images.

4. The label maps should be produced entirely by manual annotation so that the changed areas are recorded as accurately as possible.

3.2. LEVIR-CD Dataset

1. The dataset covers a time span of 5 to 14 years, so the remote-sensing images include a significant number of areas that have undergone changes.

2. In terms of spatial coverage, the remote-sensing images were collected from more than 20 distinct regions across Texas, encompassing a variety of settings such as residential areas, apartments, vegetated areas, garages, open land, highways, and other locations.

3. The dataset takes into account seasonal and illumination changes, which are crucial factors for developing effective algorithms.

4. Experiment

4.1. Experimental Details

4.2. Selection of Backbone

4.3. Ablation Experiment on the TRS-CD Dataset

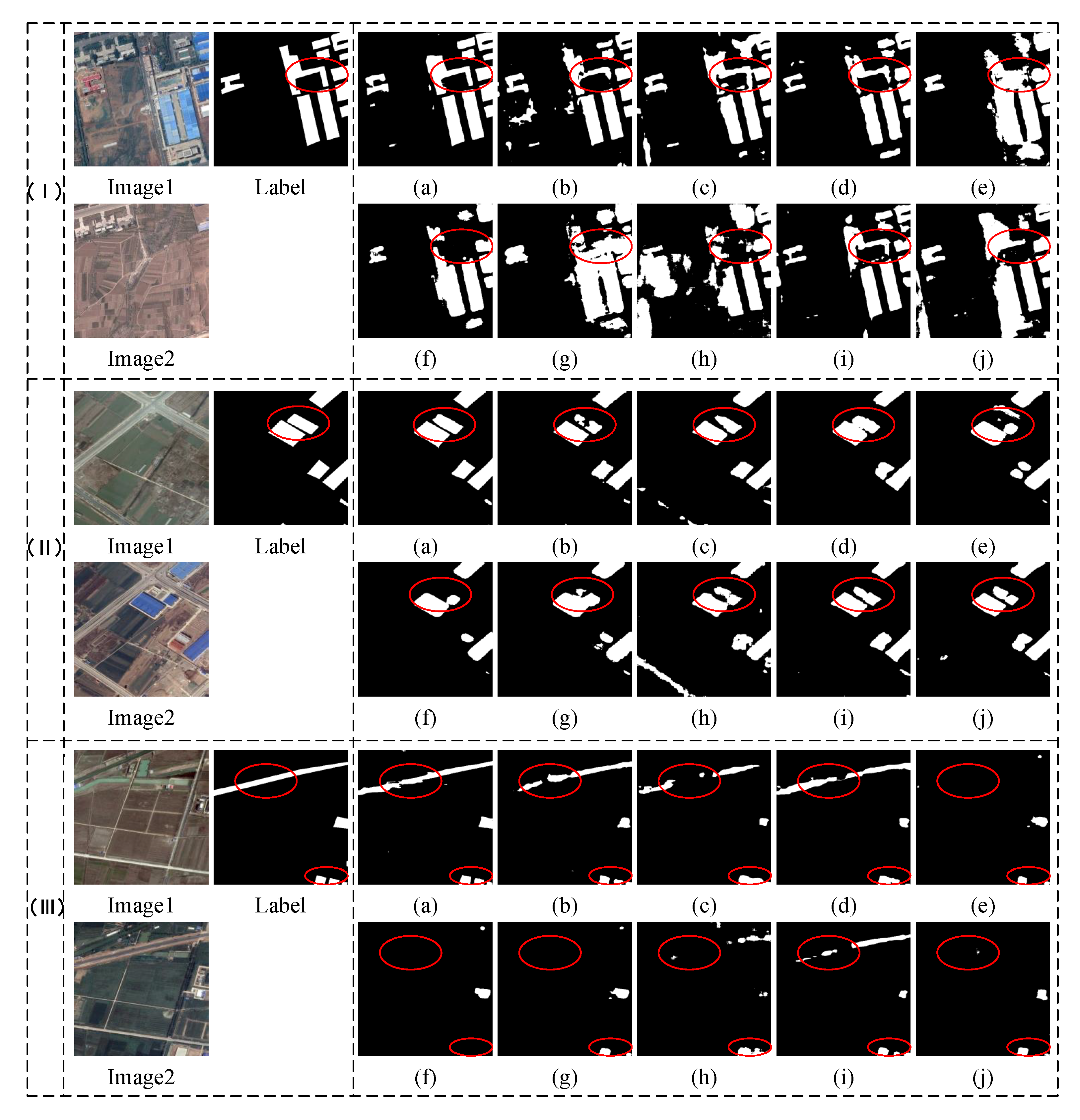

4.4. TRS-CD Dataset Comparison Test

1. The first three rows of the table are deep learning networks designed for semantic segmentation. The best of them, BiSeNet, reaches an MIoU of 81.33%, indicating that change detection is a more complex form of binary semantic segmentation.

2. Among the other networks, FC_DIFF, proposed specifically for change detection in 2018, has the lowest MIoU on the TRS-CD dataset at only 65.87%. In contrast, MFGANnet, proposed in 2022, has the highest at 82.32%. This suggests that change-detection networks have become steadily more effective over time.

3. Our proposed MHCNet achieves an MIoU of 84.36% on the TRS-CD dataset, 2.04 percentage points above the second-best network, MFGANnet. These results demonstrate that MHCNet is more effective than the other compared networks in the challenging task of change detection.

4. MHCNet has more parameters than the other models. This is due to its dual-branch structure, which places a large number of parameters in the backbone network, about three-quarters of the model total. Despite this, the computational complexity of MHCNet is at a medium level, making it a practical option for change-detection tasks.
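The two figures quoted in this list can be checked with simple arithmetic. The sketch below assumes that the MIoU values come from the comparison table containing the 84.36% entry and that the backbone parameter count is the 30.65 M reported in the "Backbone" row of the ablation table; the variable names are illustrative.

```python
# MIoU (%) on the TRS-CD dataset, as listed in the comparison table.
miou = {"MHCNet": 84.36, "MFGANnet": 82.32}

# Margin over the second-best network, in percentage points.
margin_points = round(miou["MHCNet"] - miou["MFGANnet"], 2)

# Parameter counts in millions; the backbone figure is assumed to be
# the "Backbone" row of the ablation table.
backbone_params_m = 30.65
total_params_m = 40.43
backbone_share = backbone_params_m / total_params_m

print(f"margin: {margin_points} points, backbone share: {backbone_share:.1%}")
```

Under these assumptions the margin is 2.04 points and the backbone holds roughly three-quarters of the parameters.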

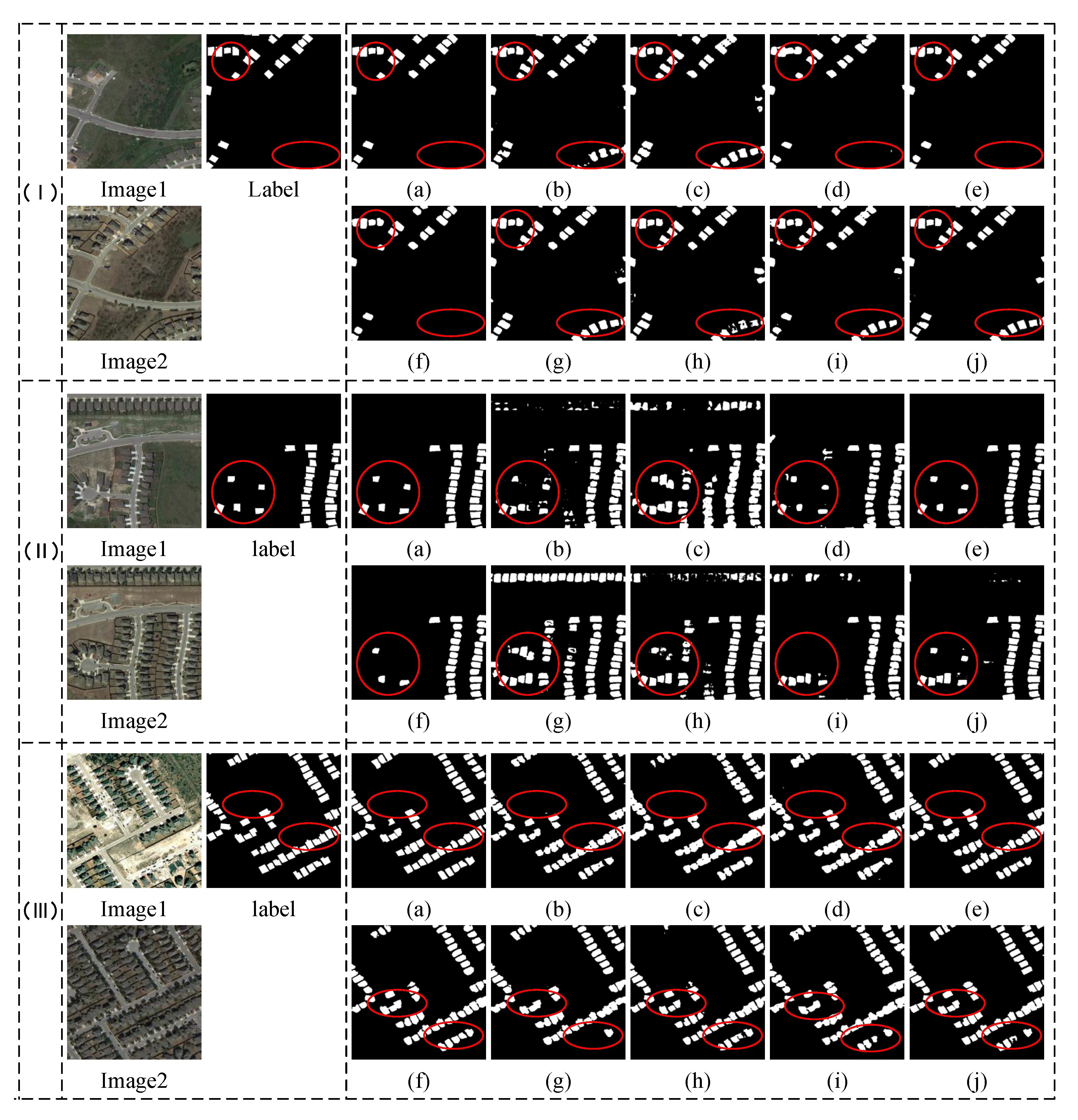

4.5. Comparative Experiments on the LEVIR-CD Dataset

4.6. Cross-Dataset Comparative Experiments

1. Train on the TRS-CD dataset and test on the LEVIR-CD dataset.

2. Train on the LEVIR-CD dataset and test on the TRS-CD dataset.
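The two runs above are simply every ordered (train, test) pair of distinct datasets. A minimal sketch of enumerating them follows; the function name `cross_dataset_runs` is hypothetical, and the actual training and evaluation steps are not shown.

```python
from itertools import permutations


def cross_dataset_runs(datasets):
    """Return every (train, test) pairing in which the two datasets differ."""
    return [{"train": train, "test": test} for train, test in permutations(datasets, 2)]


runs = cross_dataset_runs(["TRS-CD", "LEVIR-CD"])
for run in runs:
    print(f"train on {run['train']}, test on {run['test']}")
```

Enumerating the pairs this way keeps the protocol symmetric if further datasets are added later.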

5. Summary

- 1.

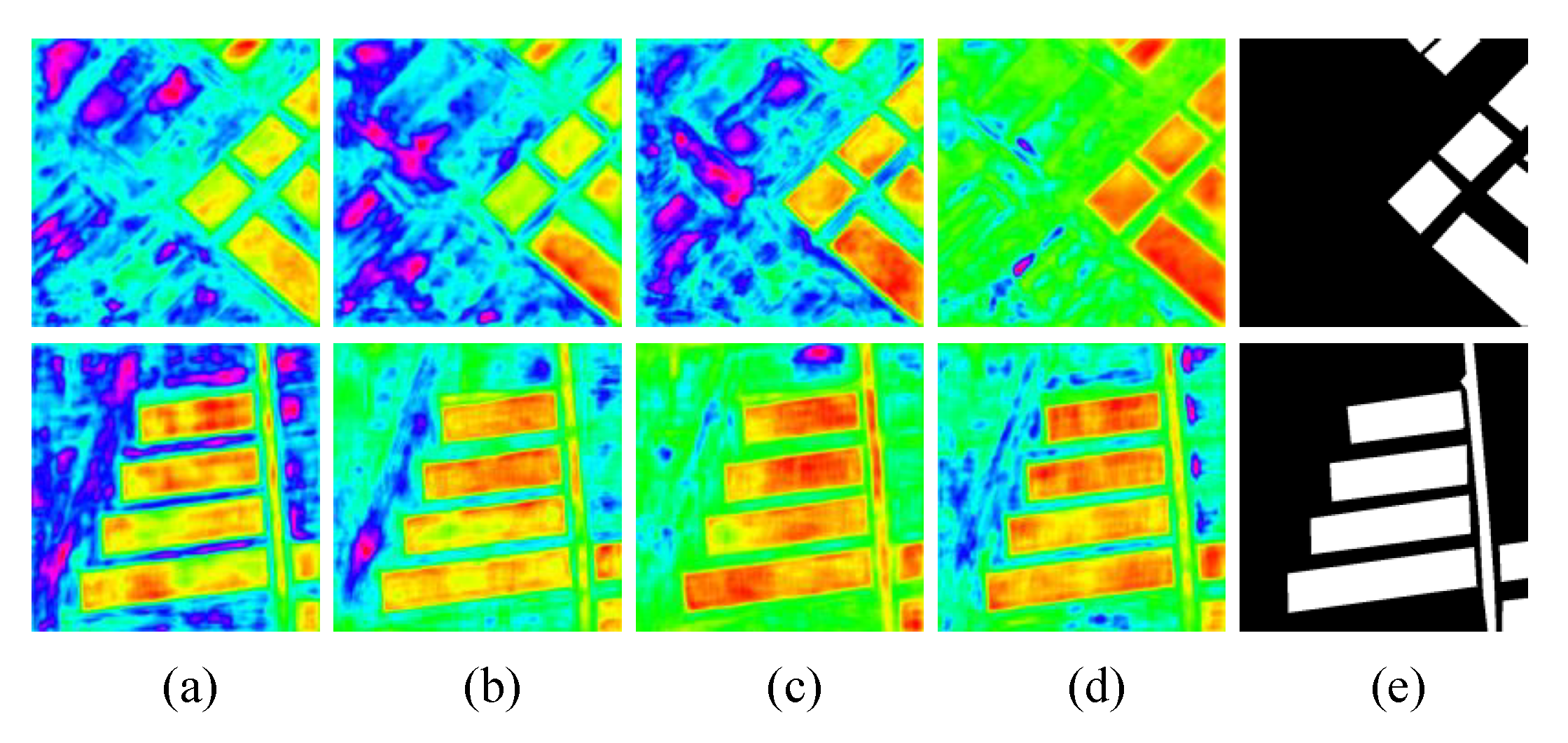

- As can be seen from the comparison graph, MHCNet performs better at handling edge details, such as the shape of the river and adjacent houses, among others.

- 2.

- Other networks have many missed detections, multiple detections, and false detections. MHCNet has few of these problems, and the prediction graph is closest to the real label.

- 3.

- MFGANnet, BiseNet, and TCDNet ranked second, third, and fourth, respectively, on the TRS-CD dataset, but ranked fifth, sixth, and seventh on the LEVIR-CD dataset. In contrast, MCDNet achieves the best results on both the TRS-CD and LEVIR-CD datasets and exhibits superior robustness and generalization.

- 4.

- MHCNet received the best score for every evaluation metric when tested on the cross-dataset. Despite having the largest number of parameters, MHCNet exhibits better generalization performance and does not exhibit significant signs of overfitting, indicating that the model performs quite well.
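The rank comparison between the two datasets can be recomputed from the MIoU columns of the comparison tables. The tables carry no captions in this text, so the sketch assumes that the table containing the 84.36% entry is TRS-CD and that the following one is LEVIR-CD; the helper `rank_by_score` is illustrative.

```python
# MIoU (%) per method; the assignment of tables to datasets is an assumption.
trs_cd = {
    "BiSeNet": 81.33, "FCN8s": 74.49, "UNet": 73.18, "FC_DIFF": 65.87,
    "FC_EF": 66.51, "FC_CONC": 69.61, "ChangeNet": 76.88, "TCDNet": 80.97,
    "MFGANnet": 82.32, "MHCNet": 84.36,
}
levir_cd = {
    "BiSeNet": 83.36, "FCN8s": 84.68, "UNet": 86.25, "FC_DIFF": 85.26,
    "FC_EF": 82.86, "FC_CONC": 86.09, "ChangeNet": 83.74, "TCDNet": 83.63,
    "MFGANnet": 84.73, "MHCNet": 86.92,
}


def rank_by_score(scores):
    """Map each method to its rank, where rank 1 is the highest score."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position + 1 for position, name in enumerate(ordered)}


trs_rank = rank_by_score(trs_cd)
levir_rank = rank_by_score(levir_cd)

for name in ("MHCNet", "MFGANnet", "BiSeNet", "TCDNet"):
    print(f"{name}: TRS-CD rank {trs_rank[name]}, LEVIR-CD rank {levir_rank[name]}")
```

Ranking by a different column, such as ACC, may give a different order, so the metric used should be stated alongside any rank.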

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- He, C.; Wei, A.; Shi, P.; Zhang, Q.; Zhao, Y. Detecting land-use/land-cover change in rural–urban fringe areas using extended change-vector analysis. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 572–585.

- Sommer, S.; Hill, J.; Megier, J. The potential of remote sensing for monitoring rural land use changes and their effects on soil conditions. Agric. Ecosyst. Environ. 1998, 67, 197–209.

- Fichera, C.R.; Modica, G.; Pollino, M. Land Cover classification and change-detection analysis using multi-temporal remote sensed imagery and landscape metrics. Eur. J. Remote Sens. 2012, 45, 1–18.

- Eisavi, V.; Homayouni, S.; Karami, J. Integration of remotely sensed spatial and spectral information for change detection using FAHP. J. Fac. For. Istanb. Univ. 2016, 66, 524–538.

- Ma, Z.; Xia, M.; Lin, H.; Qian, M.; Zhang, Y. FENet: Feature enhancement network for land cover classification. Int. J. Remote Sens. 2023, 44, 1702–1725.

- Gillespie, T.W.; Chu, J.; Frankenberg, E.; Thomas, D. Assessment and prediction of natural hazards from satellite imagery. Prog. Phys. Geogr. 2007, 31, 459–470.

- Dong, L.; Shan, J. A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99.

- Weismiller, R.; Kristof, S.; Scholz, D.; Anuta, P.; Momin, S. Change detection in coastal zone environments. Photogramm. Eng. Remote Sens. 1977, 43, 1533–1539.

- Ke, L.; Lin, Y.; Zeng, Z.; Zhang, L.; Meng, L. Adaptive change detection with significance test. IEEE Access 2018, 6, 27442–27450.

- Rignot, E.J.; Van Zyl, J.J. Change detection techniques for ERS-1 SAR data. IEEE Trans. Geosci. Remote Sens. 1993, 31, 896–906.

- Al Rawashdeh, S.B. Evaluation of the differencing pixel-by-pixel change detection method in mapping irrigated areas in dry zones. Int. J. Remote Sens. 2011, 32, 2173–2184.

- Comber, A.; Fisher, P.; Wadsworth, R. Assessment of a semantic statistical approach to detecting land cover change using inconsistent data sets. Photogramm. Eng. Remote Sens. 2004, 70, 931–938.

- Kesikoglu, M.H.; Atasever, U.; Ozkana, C. Unsupervised change detection in satellite images using fuzzy c-means clustering and principal component analysis. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 7, W2.

- Hay, G.J. Visualizing 3-D Texture: A Three Dimensional Structural Approach to Model Forest Texture. Master’s Thesis, University of Calgary, Calgary, AB, Canada, 1995.

- Shi, X.; Lu, L.; Yang, S.; Huang, G.; Zhao, Z. Object-oriented change detection based on weighted polarimetric scattering difference on polsar images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 149–154.

- Leichtle, T.; Geiß, C.; Wurm, M.; Lakes, T.; Taubenböck, H. Unsupervised change detection in VHR remote sensing imagery—An object-based clustering approach in a dynamic urban environment. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 15–27.

- Desclée, B.; Bogaert, P.; Defourny, P. Forest change detection by statistical object-based method. Remote Sens. Environ. 2006, 102, 1–11.

- Shuai Zhang, L.W. STPGTN–A Multi-Branch Parameters Identification Method Considering Spatial Constraints and Transient Measurement Data. Comput. Model. Eng. Sci. 2023, 136, 2635–2654.

- Hu, K.; Ding, Y.; Jin, J.; Weng, L.; Xia, M. Skeleton Motion Recognition Based on Multi-Scale Deep Spatio-Temporal Features. Appl. Sci. 2022, 12, 1028.

- Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An Overview of Underwater Vision Enhancement: From Traditional Methods to Recent Deep Learning. J. Mar. Sci. Eng. 2022, 10, 241.

- Wang, Z.; Xia, M.; Lu, M.; Pan, L.; Liu, J. Parameter Identification in Power Transmission Systems Based on Graph Convolution Network. IEEE Trans. Power Deliv. 2022, 37, 3155–3163.

- Ding, A.; Zhang, Q.; Zhou, X.; Dai, B. Automatic recognition of landslide based on CNN and texture change detection. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; IEEE: New York, NY, USA, 2016; pp. 444–448.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A general end-to-end 2-D CNN framework for hyperspectral image change detection. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3–13.

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change detection based on deep siamese convolutional network for optical aerial images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

- Zhang, W.; Lu, X. The spectral-spatial joint learning for change detection in multispectral imagery. Remote Sens. 2019, 11, 240.

- Liu, M.; Shi, Q.; Marinoni, A.; He, D.; Liu, X.; Zhang, L. Super-resolution-based change detection network with stacked attention module for images with different resolutions. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–18.

- Hu, K.; Li, M.; Xia, M.; Lin, H. Multi-Scale Feature Aggregation Network for Water Area Segmentation. Remote Sens. 2022, 14, 206.

- Miao, S.; Xia, M.; Qian, M.; Zhang, Y.; Liu, J.; Lin, H. Cloud/shadow segmentation based on multi-level feature enhanced network for remote sensing imagery. Int. J. Remote Sens. 2022, 43, 5940–5960.

- Chen, B.; Xia, M.; Qian, M.; Huang, J. MANet: A multi-level aggregation network for semantic segmentation of high-resolution remote sensing images. Int. J. Remote Sens. 2022, 43, 5874–5894.

- Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

- Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual attentive fully convolutional Siamese networks for change detection in high-resolution satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1194–1206.

- Lu, C.; Xia, M.; Qian, M.; Chen, B. Dual-Branch Network for Cloud and Cloud Shadow Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

- Peng, X.; Zhong, R.; Li, Z.; Li, Q. Optical remote sensing image change detection based on attention mechanism and image difference. IEEE Trans. Geosci. Remote Sens. 2020, 59, 7296–7307.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Zhang, C.; Weng, L.; Ding, L.; Xia, M.; Lin, H. CRSNet: Cloud and Cloud Shadow Refinement Segmentation Networks for Remote Sensing Imagery. Remote Sens. 2023, 15, 1664.

- Qu, Y.; Xia, M.; Zhang, Y. Strip pooling channel spatial attention network for the segmentation of cloud and cloud shadow. Comput. Geosci. 2021, 157, 104940.

- Ma, Z.; Xia, M.; Weng, L.; Lin, H. Local Feature Search Network for Building and Water Segmentation of Remote Sensing Image. Sustainability 2023, 15, 3034.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

- Hu, K.; Weng, C.; Shen, C.; Wang, T.; Weng, L.; Xia, M. A multi-stage underwater image aesthetic enhancement algorithm based on a generative adversarial network. Eng. Appl. Artif. Intell. 2023, 123, 106196.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Daudt, R.C.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: New York, NY, USA, 2018; pp. 4063–4067.

- Varghese, A.; Gubbi, J.; Ramaswamy, A.; Balamuralidhar, P. ChangeNet: A deep learning architecture for visual change detection. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building change detection for remote sensing images using a dual-task constrained deep siamese convolutional network model. IEEE Geosci. Remote Sens. Lett. 2020, 18, 811–815.

- Chu, S.; Li, P.; Xia, M. MFGAN: Multi feature guided aggregation network for remote sensing image. Neural Comput. Appl. 2022, 34, 10157–10173.

- Song, L.; Xia, M.; Weng, L.; Lin, H.; Qian, M.; Chen, B. Axial Cross Attention Meets CNN: Bibranch Fusion Network for Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 32–43.

- Lu, C.; Xia, M.; Lin, H. Multi-scale strip pooling feature aggregation network for cloud and cloud shadow segmentation. Neural Comput. Appl. 2022, 34, 6149–6162.

- Chen, J.; Xia, M.; Wang, D.; Lin, H. Double Branch Parallel Network for Segmentation of Buildings and Waters in Remote Sensing Images. Remote Sens. 2023, 15, 1536.

- Hu, K.; Zhang, E.; Xia, M.; Weng, L.; Lin, H. MCANet: A Multi-Branch Network for Cloud/Snow Segmentation in High-Resolution Remote Sensing Images. Remote Sens. 2023, 15, 1055.

- Gao, J.; Weng, L.; Xia, M.; Lin, H. MLNet: Multichannel feature fusion lozenge network for land segmentation. J. Appl. Remote Sens. 2022, 16, 1–19.

| Backbone | ACC (%) | RC (%) | PR (%) | MIoU (%) |
|---|---|---|---|---|
| VGG16 | 93.96 | 63.83 | 74.63 | 76.70 |
| VGG19 | 93.46 | 65.90 | 69.61 | 75.59 |
| ResNet18 | 95.79 | 73.92 | 82.30 | 83.56 |
| ResNet34 | 96.01 | 75.33 | 82.90 | 84.36 |
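
The column headers ACC, RC, PR, and MIoU are not defined in this text. A minimal sketch of how such scores are commonly computed for binary change detection follows, assuming the standard definitions of accuracy, recall, precision, and mean intersection over union across the changed and unchanged classes; the paper's exact definitions may differ, and the function name is illustrative.

```python
def change_detection_metrics(tp, fp, fn, tn):
    """Compute common binary change-detection scores from pixel counts.

    tp: changed pixels predicted as changed
    fp: unchanged pixels predicted as changed
    fn: changed pixels predicted as unchanged
    tn: unchanged pixels predicted as unchanged
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    # IoU of each class, then the mean over the two classes (assumed MIoU).
    iou_changed = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    iou_unchanged = tn / (tn + fp + fn) if tn + fp + fn else 0.0
    mean_iou = (iou_changed + iou_unchanged) / 2
    return {"ACC": accuracy, "RC": recall, "PR": precision, "MIoU": mean_iou}


# Hypothetical pixel counts for a 1000-pixel prediction map.
scores = change_detection_metrics(tp=60, fp=20, fn=20, tn=900)
print({name: round(value * 100, 2) for name, value in scores.items()})
```

Because unchanged pixels usually dominate, accuracy tends to sit far above recall and precision, which matches the pattern of the values in these tables.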

| Method | ACC (%) | RC (%) | PR (%) | MIoU (%) | Param (M) |
|---|---|---|---|---|---|
| Backbone | 95.53 | 74.09 | 79.85 | 82.77 | 30.65 |
| Backbone + CSAM | 95.71 | 74.55 | 80.63 | 83.14 | 33.45 |
| Backbone + CSAM + GSFM | 95.80 | 74.59 | 81.71 | 83.58 | 36.25 |
| Backbone + CSAM + GSFM + DBIFM | 95.94 | 74.83 | 82.50 | 84.19 | 40.08 |
| Backbone + CSAM + GSFM + DBIFM + SEM | 96.01 | 75.33 | 82.90 | 84.36 | 40.43 |
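
The contribution of each module can be read off this ablation table as the difference between consecutive rows. The sketch below does that subtraction for MIoU and parameter count; the row labels and the helper `incremental_gains` are illustrative, and the numbers are copied from the table.

```python
# (configuration, MIoU in %, parameters in millions), in the order of the table.
ablation = [
    ("Backbone", 82.77, 30.65),
    ("+ CSAM", 83.14, 33.45),
    ("+ GSFM", 83.58, 36.25),
    ("+ DBIFM", 84.19, 40.08),
    ("+ SEM", 84.36, 40.43),
]


def incremental_gains(rows):
    """Return (module, MIoU gain in points, added parameters in M) per step."""
    gains = []
    for (_, prev_miou, prev_params), (name, miou, params) in zip(rows, rows[1:]):
        gains.append((name, round(miou - prev_miou, 2), round(params - prev_params, 2)))
    return gains


gains = incremental_gains(ablation)
for name, miou_gain, extra_params in gains:
    print(f"{name}: +{miou_gain} MIoU points, +{extra_params} M parameters")

total_gain = round(ablation[-1][1] - ablation[0][1], 2)
```

Each step raises MIoU, and the four modules together add 1.59 points over the backbone alone.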

| Method | ACC (%) | RC (%) | PR (%) | MIoU (%) | Param (M) | FLOPs (GMac) |
|---|---|---|---|---|---|---|
| BiSeNet [44] | 95.21 | 71.92 | 78.56 | 81.33 | 22.02 | 22.48 |
| FCN8s [45] | 92.85 | 66.06 | 66.84 | 74.49 | 18.65 | 80.68 |
| UNet [46] | 92.68 | 59.67 | 70.00 | 73.18 | 13.42 | 124.21 |
| FC_DIFF [47] | 91.12 | 39.27 | 74.83 | 65.87 | 11.35 | 19.29 |
| FC_EF [47] | 90.11 | 48.06 | 67.38 | 66.51 | 11.35 | 14.79 |
| FC_CONC [47] | 91.58 | 51.25 | 70.87 | 69.61 | 11.55 | 19.30 |
| ChangeNet [48] | 94.18 | 62.78 | 75.62 | 76.88 | 23.52 | 42.73 |
| TCDNet [49] | 95.07 | 69.98 | 79.31 | 80.97 | 23.28 | 32.65 |
| MFGANnet [50] | 95.54 | 72.40 | 80.09 | 82.32 | 33.53 | 52.82 |
| MHCNet (Ours) | 96.01 | 75.33 | 82.90 | 84.36 | 40.43 | 59.07 |

| Method | ACC (%) | RC (%) | PR (%) | MIoU (%) | Param (M) | FLOPs (GMac) |
|---|---|---|---|---|---|---|
| BiSeNet | 98.04 | 80.49 | 78.74 | 83.36 | 22.02 | 22.48 |
| FCN8s | 98.39 | 79.08 | 83.33 | 84.68 | 18.65 | 80.68 |
| UNet | 98.62 | 81.32 | 84.69 | 86.25 | 13.42 | 124.21 |
| FC_DIFF | 98.46 | 78.84 | 85.72 | 85.26 | 11.35 | 19.29 |
| FC_EF | 97.94 | 80.26 | 78.07 | 82.86 | 11.35 | 14.79 |
| FC_CONC | 98.54 | 79.72 | 86.53 | 86.09 | 11.55 | 19.30 |
| TCDNet | 98.20 | 77.02 | 83.05 | 83.63 | 23.28 | 32.65 |
| ChangeNet | 98.12 | 79.57 | 81.21 | 83.74 | 23.52 | 42.73 |
| MFGANnet | 98.30 | 78.49 | 84.70 | 84.73 | 33.53 | 52.82 |
| MHCNet (Ours) | 98.65 | 81.79 | 86.59 | 86.92 | 40.43 | 59.07 |

| Method | ACC (%) | RC (%) | PR (%) | MIoU (%) | Param (M) | FLOPs (GMac) |
|---|---|---|---|---|---|---|
| BiSeNet | 91.97 | 60.65 | 61.69 | 66.08 | 22.02 | 22.48 |
| FCN8s | 92.08 | 59.97 | 57.53 | 64.25 | 18.65 | 80.68 |
| UNet | 92.28 | 61.44 | 62.48 | 65.55 | 13.42 | 124.21 |
| FC_DIFF | 90.18 | 44.55 | 56.74 | 63.93 | 11.35 | 19.29 |
| FC_EF | 91.74 | 45.19 | 60.72 | 64.95 | 11.35 | 14.79 |
| FC_CONC | 90.46 | 44.85 | 59.11 | 63.86 | 11.55 | 19.30 |
| TCDNet | 90.83 | 63.62 | 63.32 | 66.31 | 23.28 | 32.65 |
| ChangeNet | 91.07 | 54.07 | 56.68 | 62.80 | 23.52 | 42.73 |
| MFGANnet | 92.16 | 61.47 | 64.27 | 67.94 | 33.53 | 52.82 |
| MHCNet (Ours) | 92.44 | 63.30 | 65.12 | 68.71 | 40.43 | 59.07 |

| Method | ACC (%) | RC (%) | PR (%) | MIoU (%) | Param (M) | FLOPs (GMac) |
|---|---|---|---|---|---|---|
| BiSeNet | 88.51 | 55.74 | 58.26 | 65.31 | 22.02 | 22.48 |
| FCN8s | 88.10 | 55.15 | 57.73 | 62.29 | 18.65 | 80.68 |
| UNet | 88.28 | 56.83 | 58.14 | 65.74 | 13.42 | 124.21 |
| FC_DIFF | 88.86 | 53.26 | 52.35 | 65.67 | 11.35 | 19.29 |
| FC_EF | 87.54 | 52.15 | 57.74 | 64.57 | 11.35 | 14.79 |
| FC_CONC | 88.61 | 54.36 | 51.49 | 65.55 | 11.55 | 19.30 |
| TCDNet | 88.48 | 55.57 | 55.84 | 66.24 | 23.28 | 32.65 |
| ChangeNet | 88.23 | 56.09 | 54.70 | 64.10 | 23.52 | 42.73 |
| MFGANnet | 8.39 | 56.17 | 56.31 | 66.34 | 33.53 | 52.82 |
| MHCNet (Ours) | 88.90 | 57.12 | 58.02 | 66.78 | 40.43 | 59.07 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, D.; Weng, L.; Xia, M.; Lin, H. MBCNet: Multi-Branch Collaborative Change-Detection Network Based on Siamese Structure. Remote Sens. 2023, 15, 2237. https://doi.org/10.3390/rs15092237