Combining Drones and Deep Learning to Automate Coral Reef Assessment with RGB Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Context

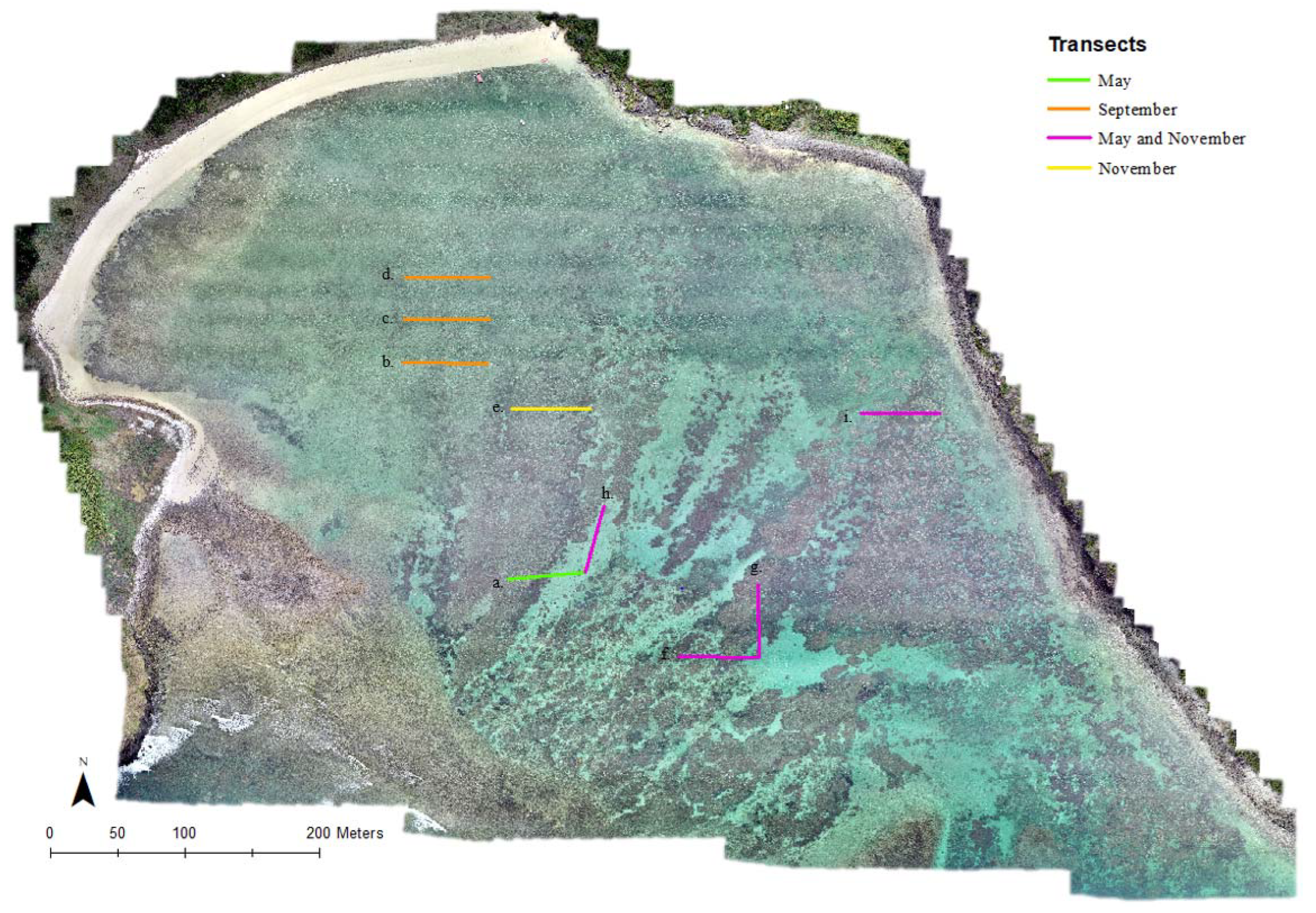

2.2. Data Collection

2.3. Developing Training Data using Object-Based Image Analysis

2.4. Algorithm Pre-Processing

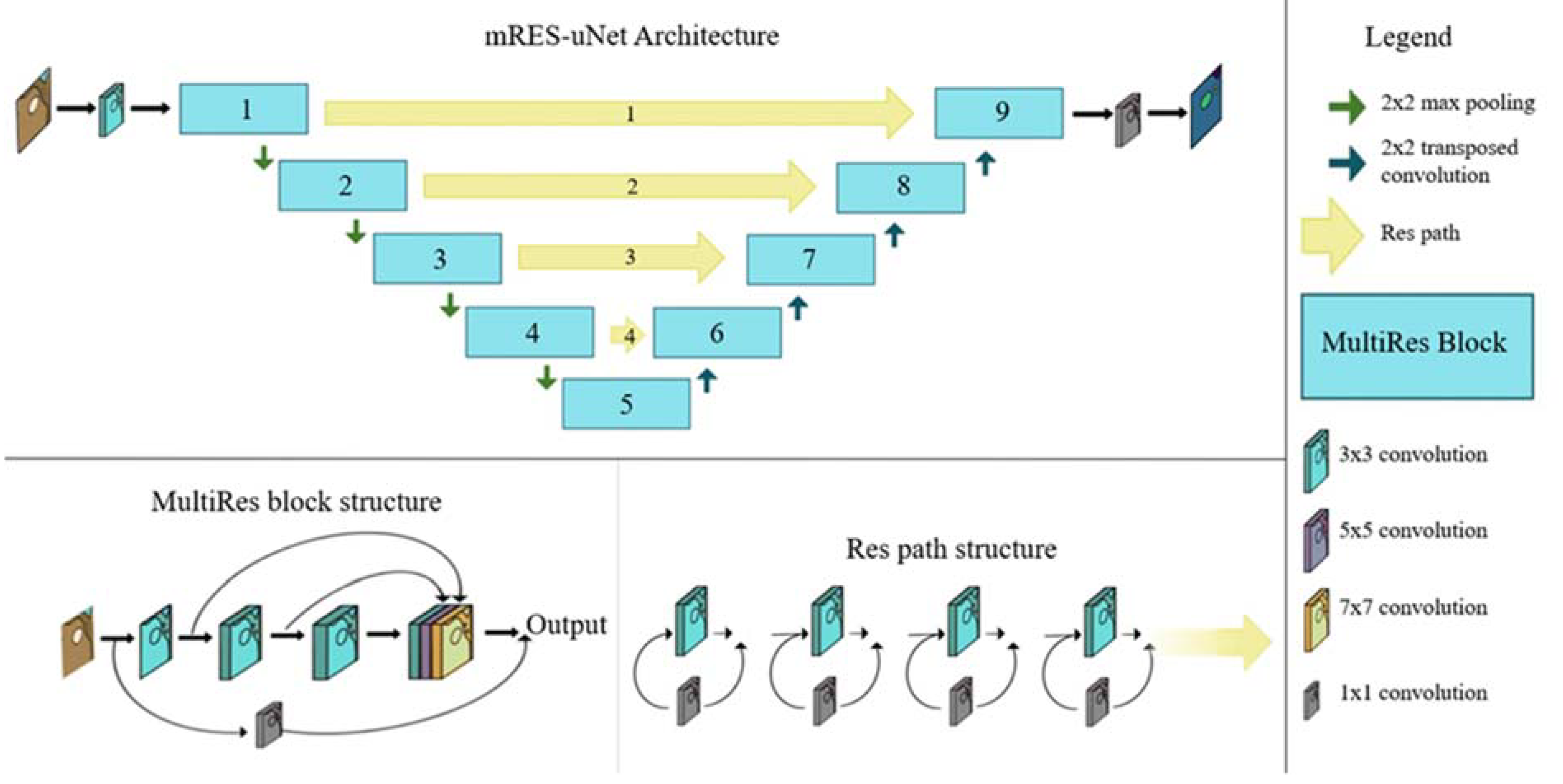

2.5. Developing and Running a Multi-Resolution Neural Network (mRES-uNet)

2.6. Algorithm Training

2.7. Building Temporal Robustness via Transfer Learning

2.8. Test of Model Performance

2.9. Object-Based Bleached Coral Analysis

3. Results

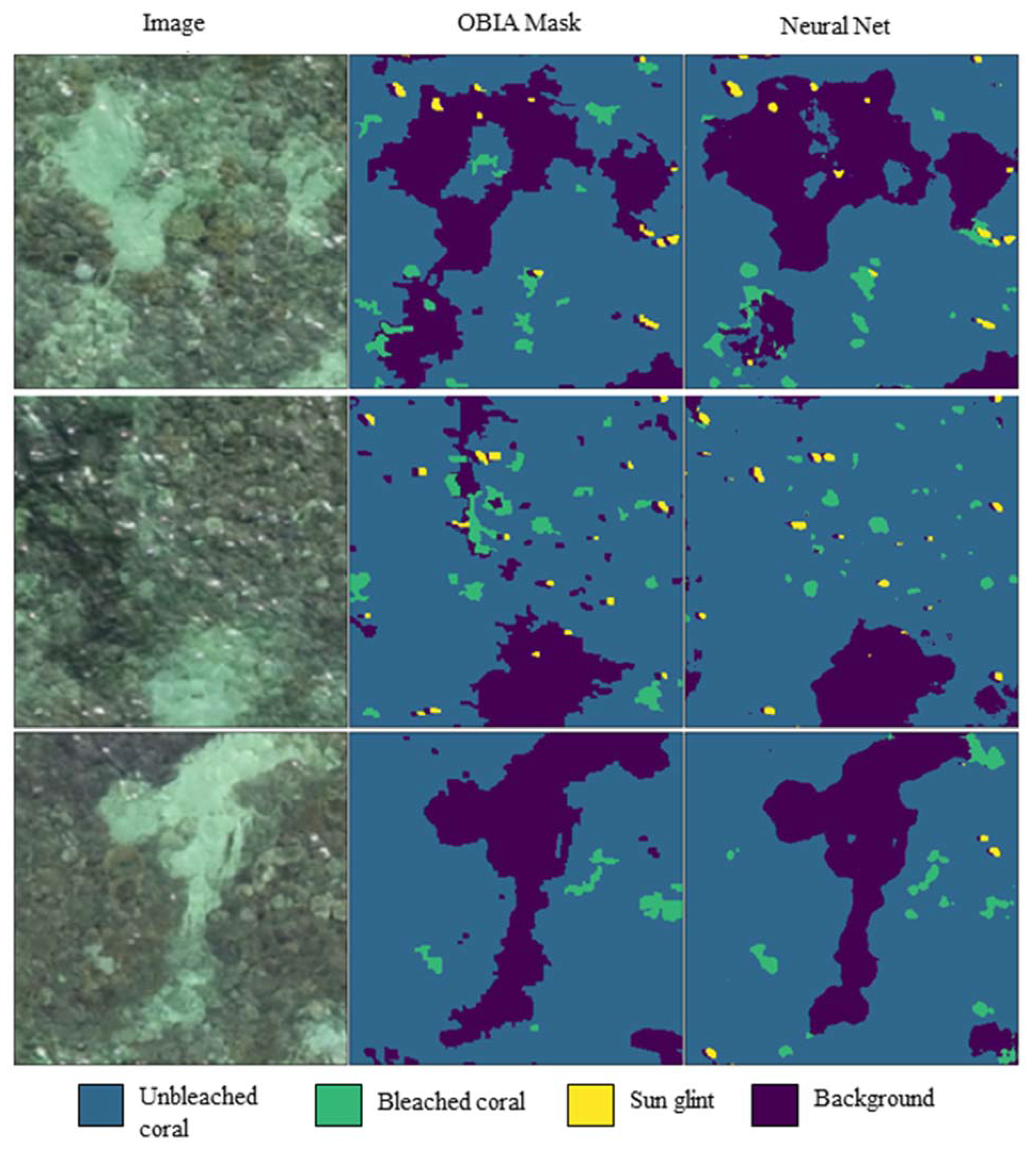

3.1. Mask Validations

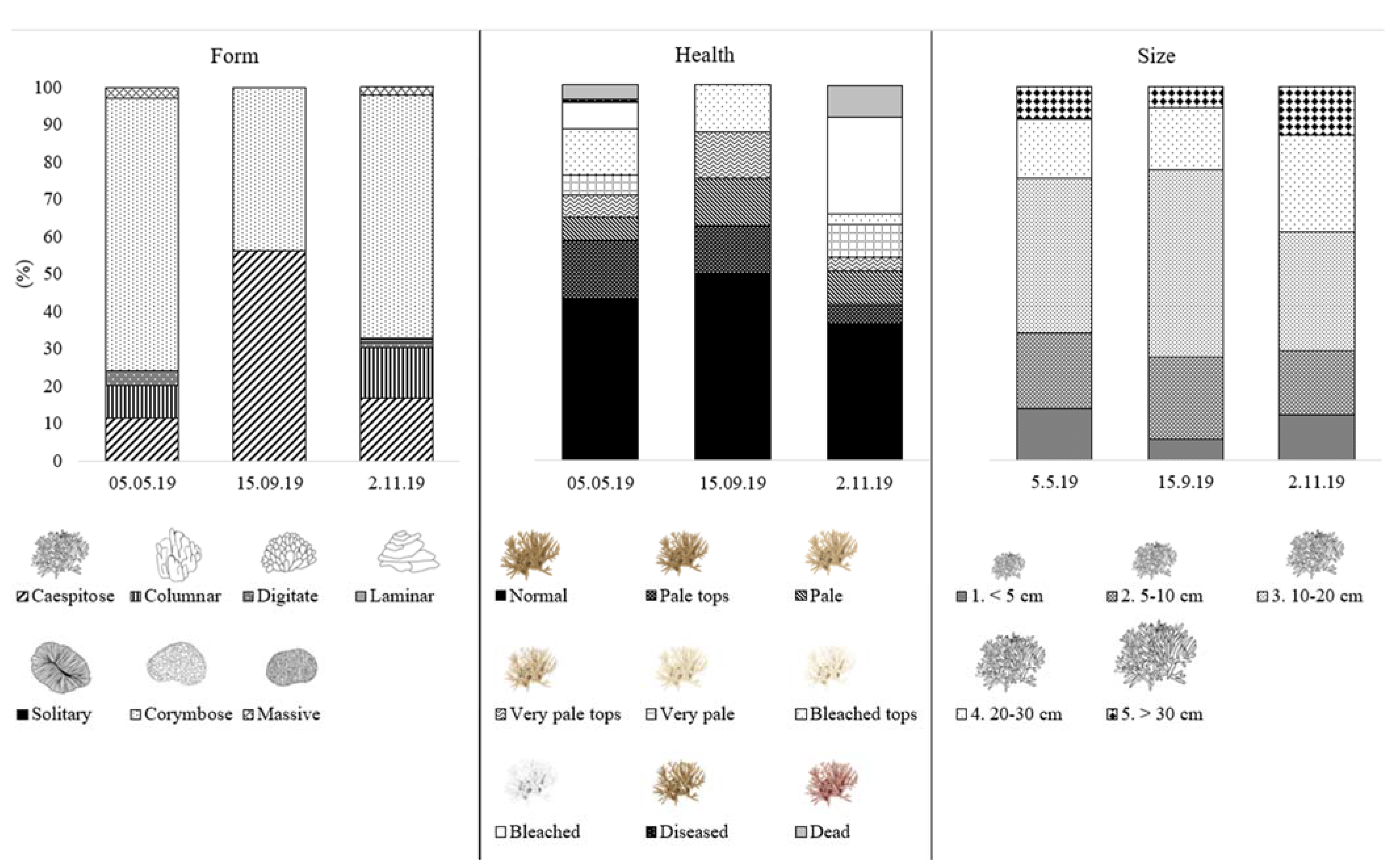

3.2. Ground Truthing in Situ Transects

3.3. Neural Network Performance and Precision

3.4. Object-Based Bleached Coral Analysis and Precision

3.5. Neural Network Classification Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Transect | Correctly Assigned (%) | Incorrectly Assigned (%) |
|---|---|---|
| Transect 1 | 64.46 | 35.54 |
| Transect 2 | 100.00 | 0.00 |
| Transect 3 | 96.94 | 3.06 |
| Transect 4 | 91.94 | 8.06 |
| Transect 5 | 87.86 | 12.14 |

| Metric | Background | Unbleached Coral | Bleached Coral | Sun Glint |
|---|---|---|---|---|
| Jaccard | 0.863 | 0.885 | 0.232 | 0.442 |
| Precision | 0.909 | 0.958 | 0.280 | 0.722 |
| Recall | 0.945 | 0.922 | 0.576 | 0.533 |
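
The Jaccard index, precision, and recall reported per class follow the standard definitions based on true positives (TP), false positives (FP), and false negatives (FN). The sketch below shows one way to derive them from a confusion matrix of pixel counts. The function name `per_class_metrics` and the `toy_counts` values are hypothetical and purely illustrative; they are not the study's data or code.

```python
import numpy as np

CLASSES = ["Background", "Unbleached Coral", "Bleached Coral", "Sun Glint"]


def per_class_metrics(counts):
    """Per-class precision, recall and Jaccard index.

    counts[i, j] = number of pixels predicted as class i whose true class is j
    (rows are predictions, columns are ground truth; an assumed layout).
    """
    counts = np.asarray(counts, dtype=float)
    tp = np.diag(counts)            # predicted i and truly i
    fp = counts.sum(axis=1) - tp    # predicted i, but truly another class
    fn = counts.sum(axis=0) - tp    # truly i, but predicted as another class
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "jaccard": tp / (tp + fp + fn),
    }


# Made-up pixel counts, for illustration only.
toy_counts = [
    [90, 5, 3, 2],
    [8, 80, 6, 1],
    [1, 4, 10, 1],
    [1, 1, 1, 6],
]

metrics = per_class_metrics(toy_counts)
for idx, name in enumerate(CLASSES):
    print(
        f"{name}: precision={metrics['precision'][idx]:.3f}, "
        f"recall={metrics['recall'][idx]:.3f}, "
        f"jaccard={metrics['jaccard'][idx]:.3f}"
    )
```

Because the Jaccard index penalises both false positives and false negatives, it can never exceed the lower of precision and recall for a class, which is consistent with the pattern in the table above.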

| Predicted class (rows) / True class (columns) | Background | Unbleached Coral | Bleached Coral | Sun Glint |
|---|---|---|---|---|
| Background | 0.92 | 0.16 | 0.17 | 0.30 |
| Unbleached coral | 0.07 | 0.83 | 0.30 | 0.02 |
| Bleached coral | 0.01 | 0.01 | 0.52 | 0.02 |
| Sun glint | 0.00 | 0.00 | 0.03 | 0.57 |
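
The columns of the matrix above sum to approximately 1 (between 0.91 and 1.02 as extracted), which suggests that the values are proportions of each true class. Assuming that reading, the sketch below shows how a matrix of raw pixel counts could be normalised by true class. The function name `normalise_by_true_class` and the `toy_counts` values are hypothetical and are not taken from the study.

```python
import numpy as np


def normalise_by_true_class(counts):
    """Divide each column by its total so every true-class column sums to 1.

    counts[i, j] = pixels predicted as class i whose true class is j
    (rows are predictions, columns are ground truth; an assumed layout).
    """
    counts = np.asarray(counts, dtype=float)
    column_totals = counts.sum(axis=0, keepdims=True)
    return counts / column_totals


# Made-up pixel counts, for illustration only.
toy_counts = [
    [90, 5, 3, 2],
    [8, 80, 6, 1],
    [1, 4, 10, 1],
    [1, 1, 1, 6],
]

normalised = normalise_by_true_class(toy_counts)
print(np.round(normalised, 2))
```

Under this normalisation the diagonal entries are the per-class recall, and each off-diagonal entry is the share of a true class that was assigned to a different predicted class.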

| Survey date | Background (%) | Unbleached Coral (%) | Bleached Coral (%) | Sun Glint (%) |
|---|---|---|---|---|
| November 2018 | 49.51 | 48.52 | 1.56 | 0.41 |
| March 2019 | 68.51 | 28.24 | 2.21 | 1.04 |
| May 2019 | 58.00 | 40.73 | 0.97 | 0.30 |
| September 2019 | 27.52 | 64.58 | 6.98 | 0.92 |
| November 2019 | 49.82 | 48.99 | 0.61 | 0.59 |
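
Percentage cover per class, as tabulated above, can be obtained by counting the pixels assigned to each class in a classified mask. The sketch below is a minimal illustration that assumes integer labels 0 to 3 in the order Background, Unbleached Coral, Bleached Coral, Sun Glint. That label encoding, the function name `class_cover_percent`, and the `toy_mask` array are hypothetical and are not the study's data or pipeline.

```python
import numpy as np

CLASS_NAMES = ["Background", "Unbleached Coral", "Bleached Coral", "Sun Glint"]


def class_cover_percent(mask, n_classes=4):
    """Return the percentage of pixels assigned to each class label."""
    mask = np.asarray(mask)
    # bincount tallies how many pixels carry each integer label 0..n_classes-1.
    pixel_counts = np.bincount(mask.ravel(), minlength=n_classes)
    return 100.0 * pixel_counts / pixel_counts.sum()


# Made-up 4 x 4 classified mask, for illustration only.
toy_mask = np.array([
    [0, 0, 1, 1],
    [0, 1, 1, 2],
    [0, 1, 1, 3],
    [0, 0, 1, 1],
])

cover = class_cover_percent(toy_mask)
for name, percent in zip(CLASS_NAMES, cover):
    print(f"{name}: {percent:.2f}%")
```

The percentages sum to 100 by construction, as each row of the table above does.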

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Giles, A.B.; Ren, K.; Davies, J.E.; Abrego, D.; Kelaher, B. Combining Drones and Deep Learning to Automate Coral Reef Assessment with RGB Imagery. Remote Sens. 2023, 15, 2238. https://doi.org/10.3390/rs15092238
