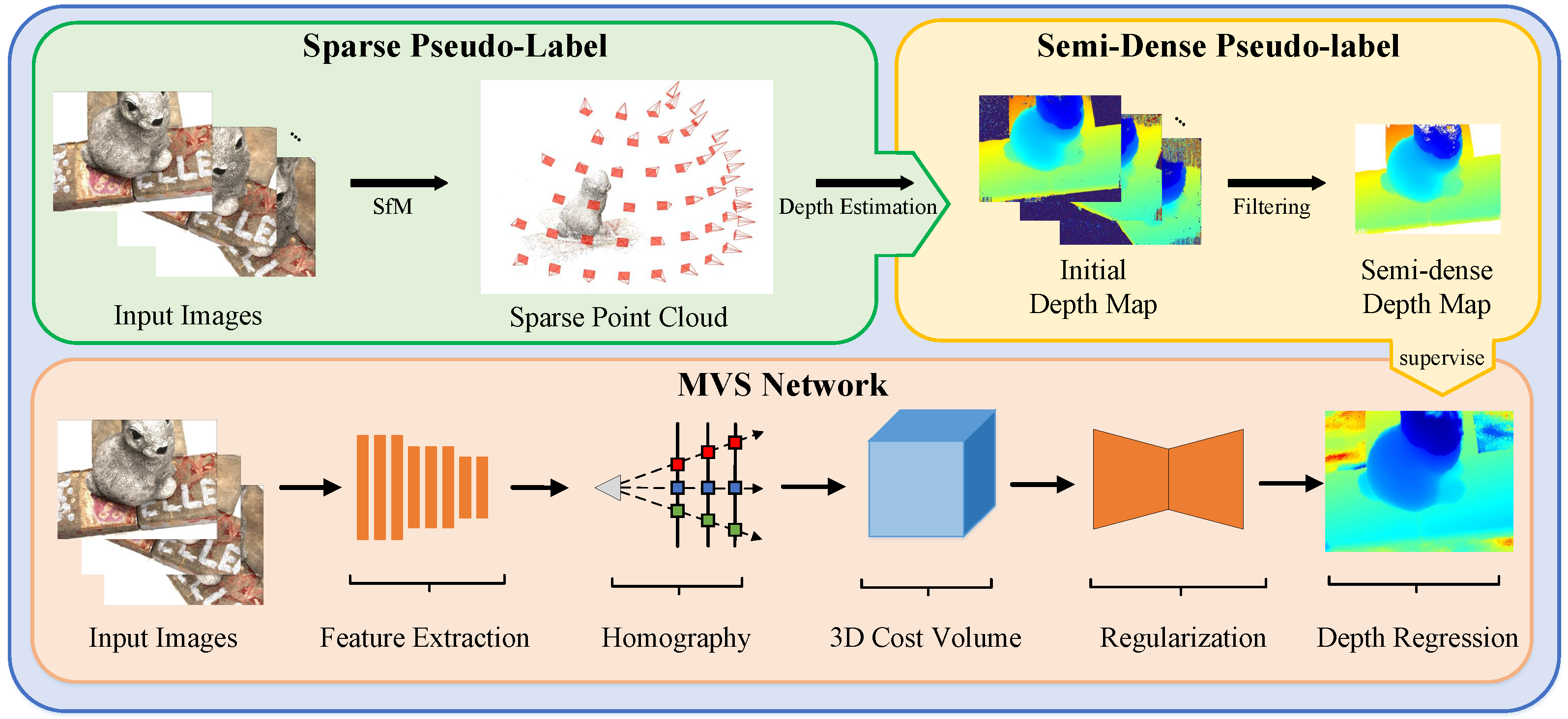

In this section, the performance of the GP-MVS framework is evaluated on the DTU [39] and Tanks and Temples [40] benchmarks. We begin by describing these datasets and providing implementation details. Subsequently, we present the benchmark results on these datasets. Finally, an ablation study showcases the benefits of the proposed pseudo-labels.

4.2. Benchmark Performance

Results on DTU. Our method is evaluated on the DTU test set using the network trained on the DTU training set. As with the supervised backbone CasMVSNet [35], the input is resized to

and five images are used for depth map prediction (one reference image and four source images).

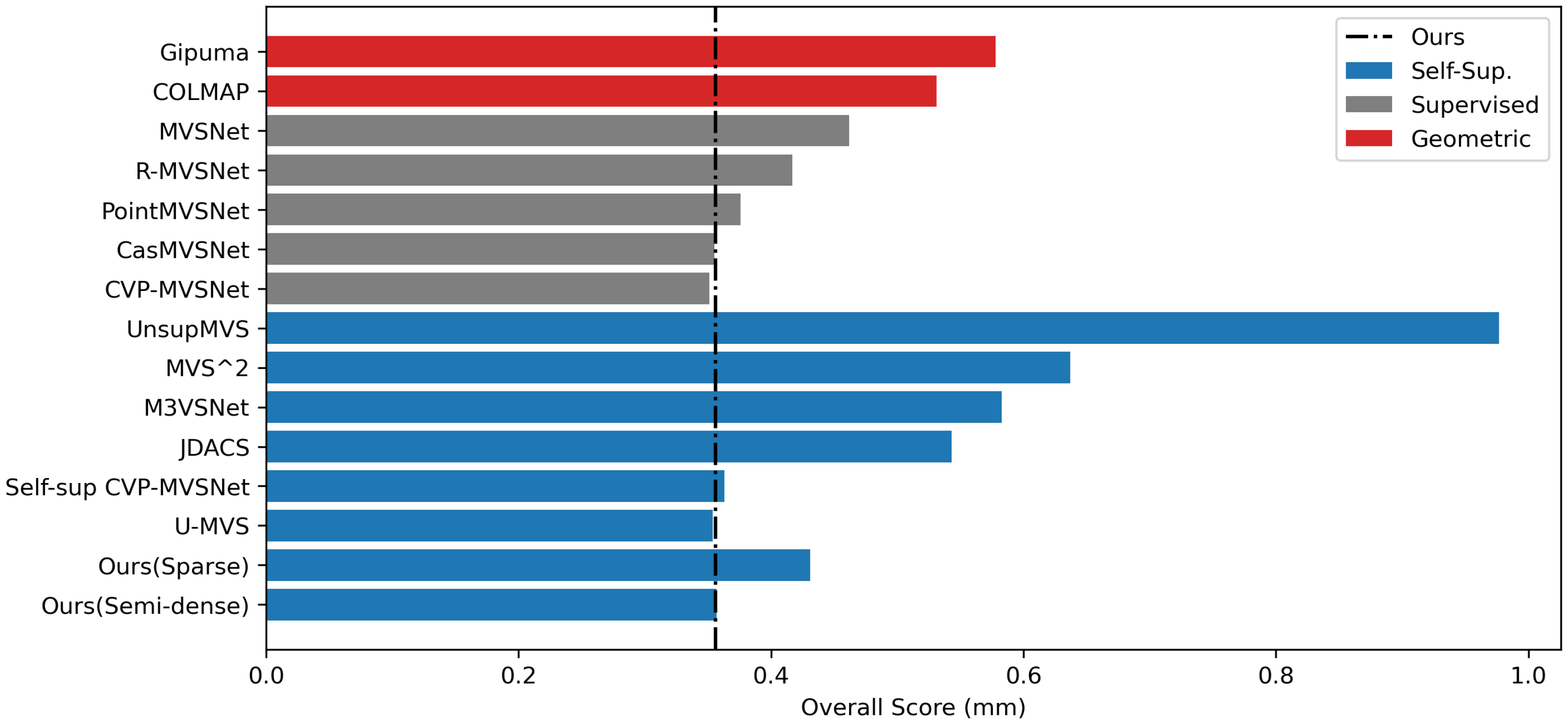

We evaluate the point clouds reconstructed by our method using the overall score. As summarized in Figure 6, our approach with semi-dense depth pseudo-labels performs comparably to self-supervised learning approaches and even outperforms the supervised MVSNet [27], R-MVSNet [31], and Point-MVSNet [34]; its result is roughly on par with those of CasMVSNet [35] and CVP-MVSNet [36].

The quantitative results of various self-supervised MVS methods, including the proposed pseudo-label-based method, are presented in Table 1. Our methods (trained with sparse or semi-dense pseudo-labels) perform better than UnsupMVS [10], MVS² [11], M³VSNet [12], and JDACS [13]. The model trained with our semi-dense depth map pseudo-labels (semi-dense) achieves performance comparable to Self-sup CVP-MVSNet [14] and U-MVS [15]. Note that the pseudo-label generation processes of Self-sup CVP-MVSNet and U-MVS are much more complicated than ours. Self-sup CVP-MVSNet first obtains an initial depth map from the unsupervised model and then iteratively refines it into pseudo-labels through several steps: initial depth estimation from a high-resolution image, consistency-check-based filtering of the estimates, and fusion of the depth from multiple views. U-MVS generates pseudo-labels from a pretrained unsupervised model based on uncertainty, which requires sampling up to 20 times to obtain reliable uncertainty maps for depth filtering.

Table 2 compares the proposed methods with traditional and supervised MVS methods. Our approach surpasses the traditional approaches Gipuma [21] and COLMAP [6]. MVSFormer [37], which builds on CasMVSNet [35], achieves the best performance among the supervised methods on the DTU dataset. Our self-supervised method is comparable to the supervised multi-scale MVS network CVP-MVSNet [14], and the point cloud reconstructed by our method has better completeness. We also compare the proposed self-supervised approach with the backbone network CasMVSNet.

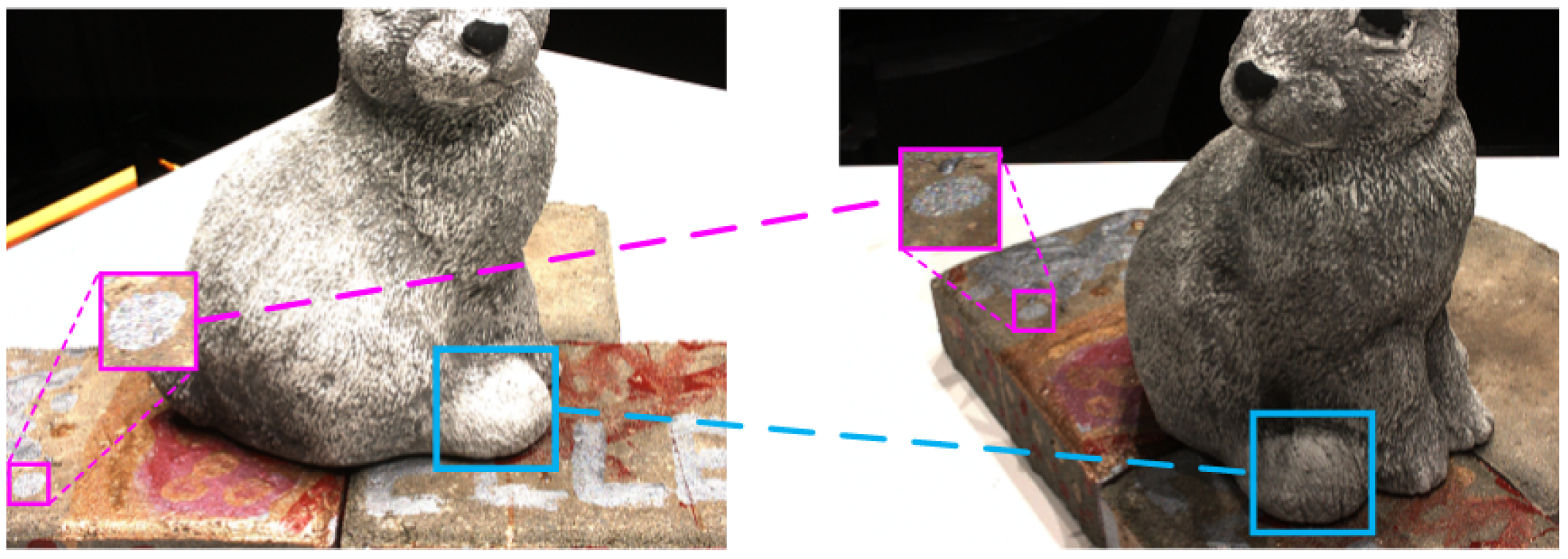

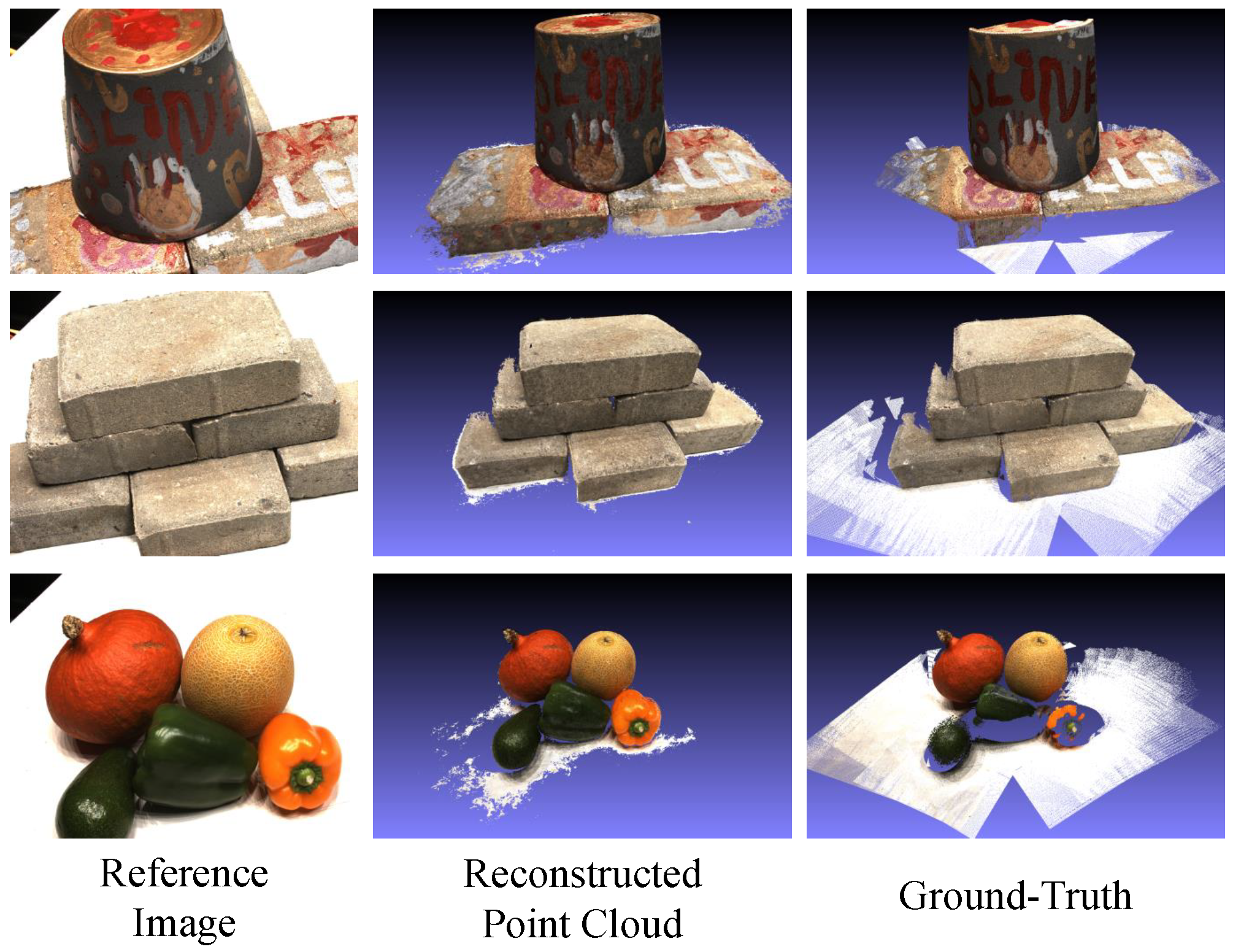

Table 2 presents a numerical comparison of our approach with CasMVSNet on the DTU dataset. Our approach shows slightly lower quantitative results, but the qualitative results in Figure 7 suggest that it can reconstruct 3D point clouds with high accuracy, especially in capturing local details.

Results on Tanks and Temples. To assess the generalization capability of the proposed methods, the models were trained on the DTU dataset and performed an evaluation on the Tanks and Temples dataset, without any fine-tuning. Specifically, five input images were used as an input, with a resolution of

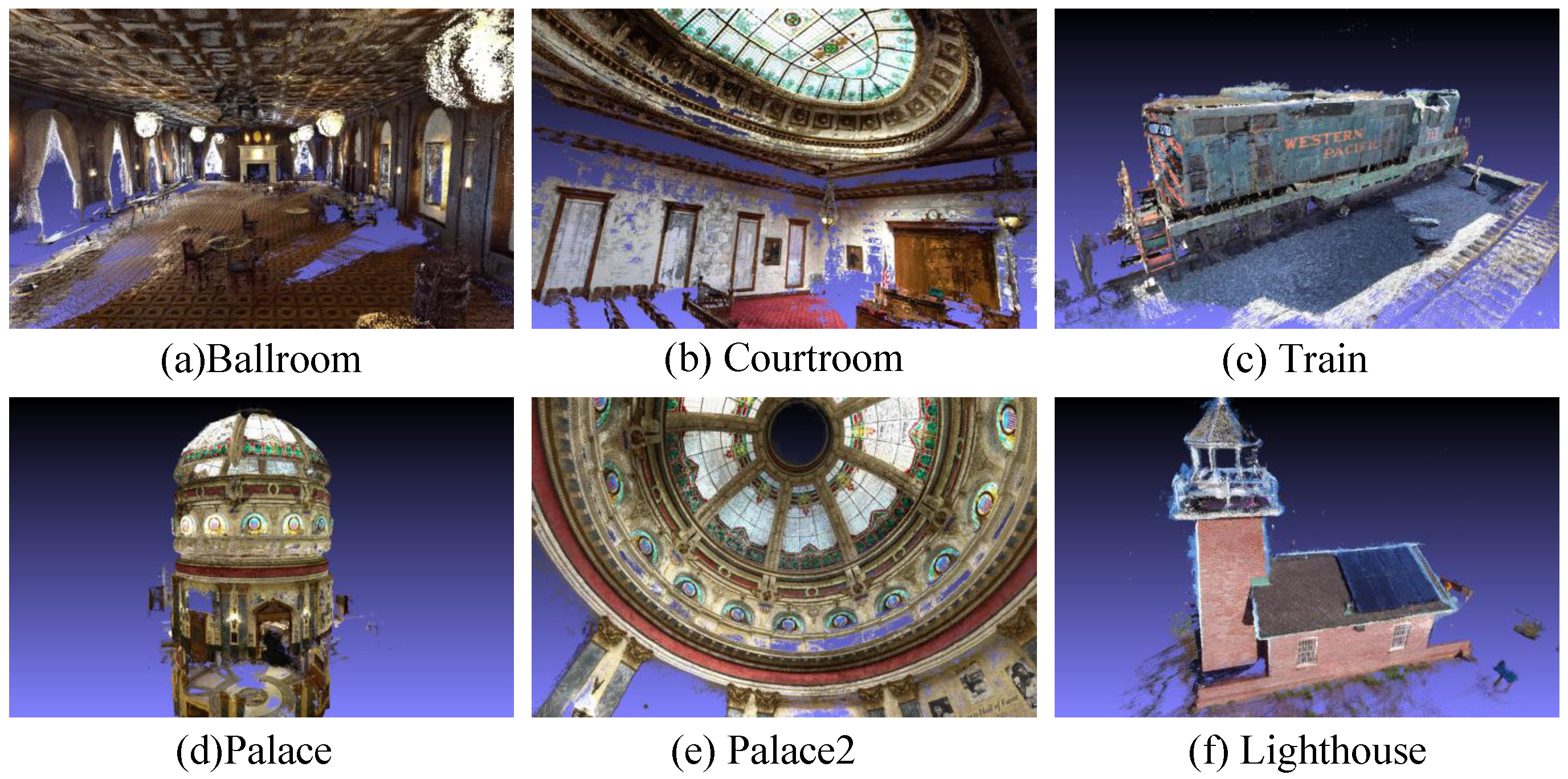

. As displayed in

Table 3, our approach surpasses the traditional methods and supervised methods by a significant margin, which proves that the MVS network supervised with our proposed pseudo-label is effective. Additionally,

Figure 8 illustrates the qualitative results of both subsets. The proposed method can reconstruct denser point clouds with more details, making them more visually appealing.

The advanced set of Tanks and Temples contains challenging scenes. As presented in Table 4, our approach outperforms the other approaches on most evaluation metrics. This indicates that the proposed depth map pseudo-labels, based on the geometry prior, effectively capture the geometric information in the 3D scene. Supervised methods such as the backbone CasMVSNet exhibit limited generalization performance because they overfit the DTU dataset. Thus, even though our method achieves slightly lower reconstruction performance on DTU than the backbone network, the proposed pseudo-labels have the potential to enhance the network's generalization ability.

4.3. Ablation Study

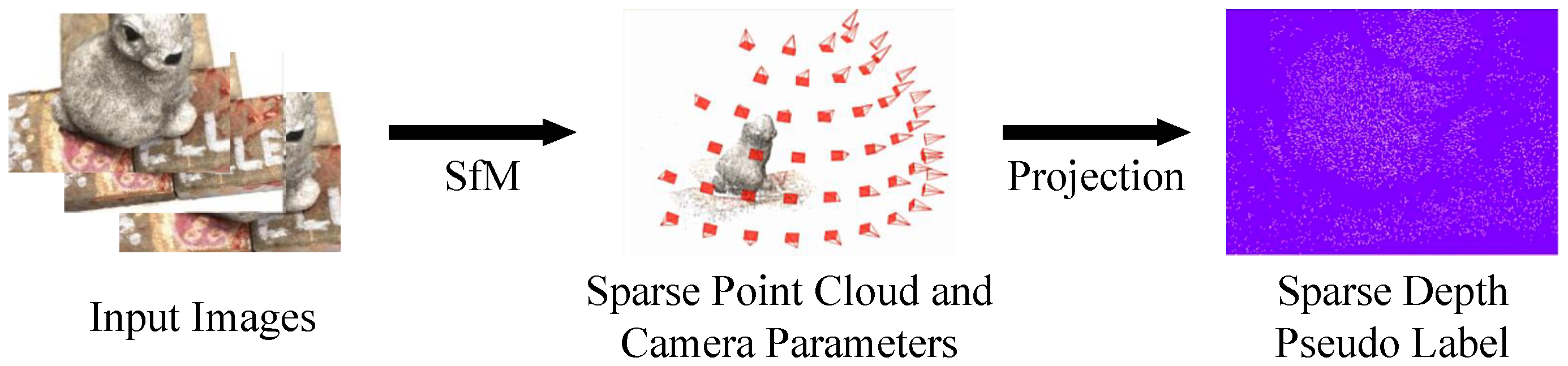

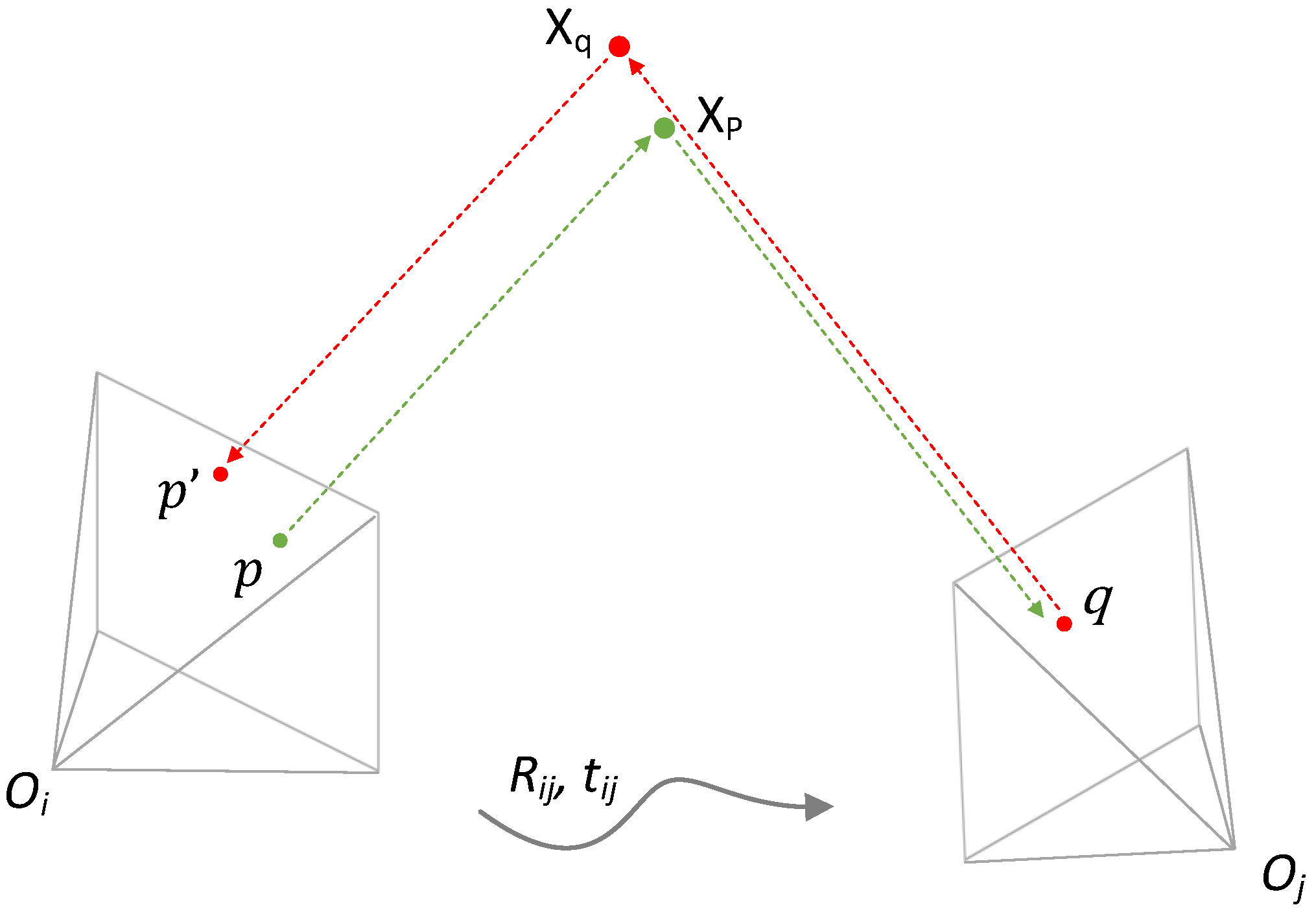

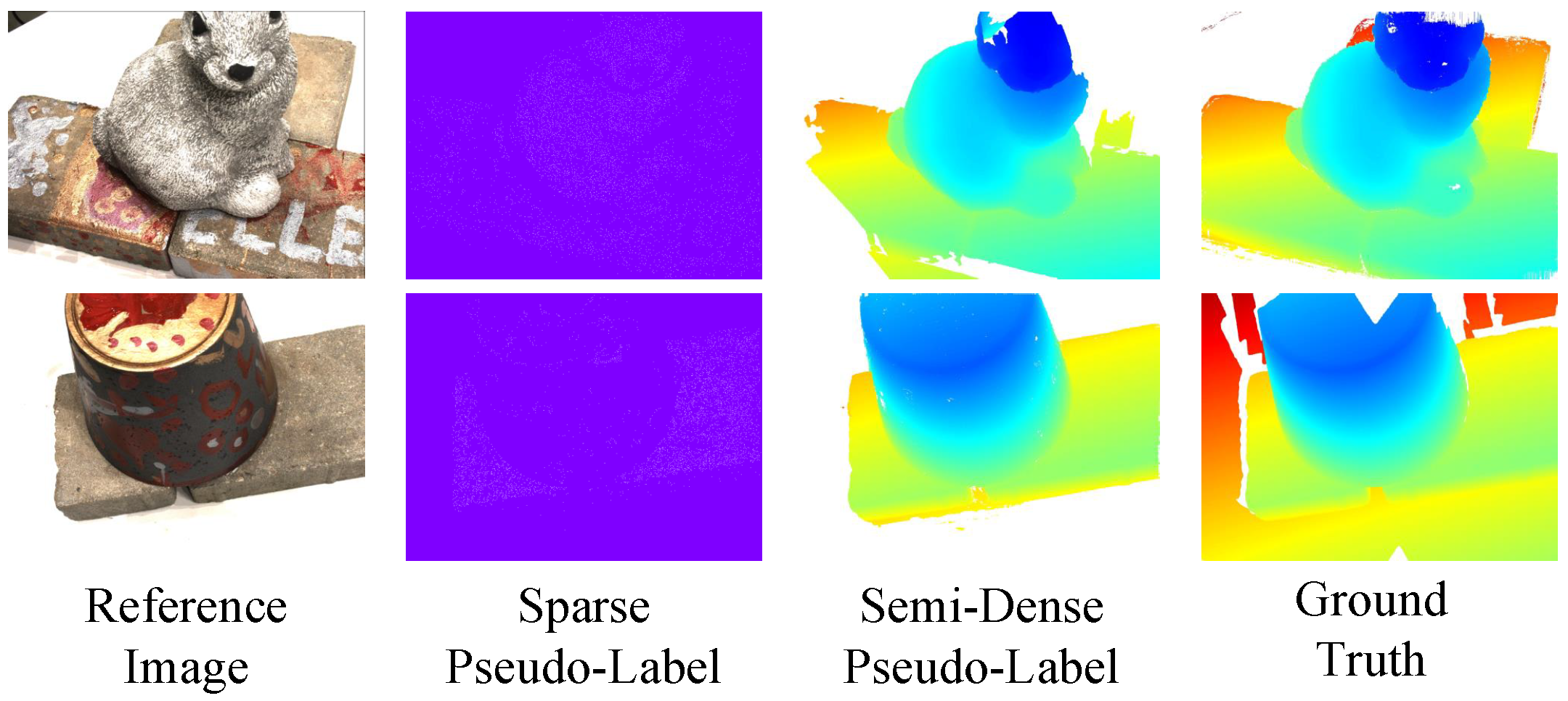

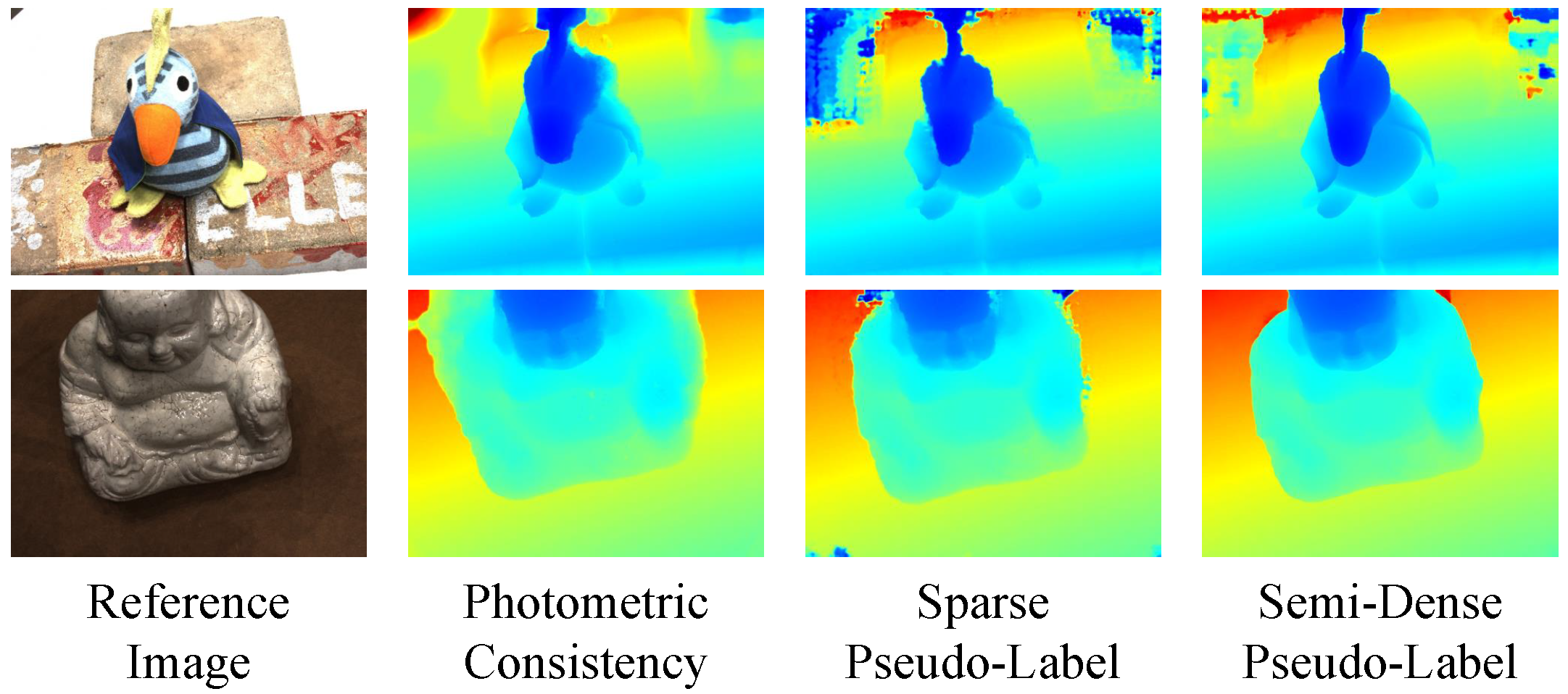

Accuracy of pseudo-labels. Figure 9 visualizes the different pseudo-labels. The white dots in the sparse depth map mark the pixel positions with sparse prior information. The sparse depth map describes only the basic geometric structure of the 3D scene and concentrates on richly textured regions. The semi-dense pseudo-label depth map supervises the foreground area more completely. Comparison with the ground truth shows that the semi-dense pseudo-labels cover the foreground more completely while removing false background estimates. We assess the accuracy of the various pseudo-labels used as supervision on the DTU dataset, with depth prediction accuracy serving as the evaluation metric. In addition, we report the density (the mean percentage of labeled pixels per image) of the different pseudo-labels. Note that the density of the initial depth map without filtering is 100%.
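The two quantities discussed above, density and accuracy within a distance threshold, can be illustrated with a minimal sketch. It rests on assumptions that are not stated in the text: depths are in millimetres, a value of 0 marks an unlabeled or invalid pixel, and accuracy is measured only over labeled pixels that have ground truth. The function name `pseudo_label_stats` is hypothetical, and the actual evaluation protocol may differ.

```python
def pseudo_label_stats(pseudo, gt, threshold_mm=2.0):
    """Return (density %, accuracy %) for one pseudo-label depth map.

    pseudo, gt: 2D lists of depths in mm with the same shape.
    Assumption: a depth <= 0 means "no label" (pseudo) or "no ground truth" (gt).
    """
    total = labeled = evaluated = accurate = 0
    for pseudo_row, gt_row in zip(pseudo, gt):
        for p, g in zip(pseudo_row, gt_row):
            total += 1
            if p <= 0:
                continue  # unlabeled pixel: lowers density only
            labeled += 1
            if g <= 0:
                continue  # no ground truth: cannot judge accuracy here
            evaluated += 1
            if abs(p - g) < threshold_mm:
                accurate += 1
    density = 100.0 * labeled / total if total else 0.0
    accuracy = 100.0 * accurate / evaluated if evaluated else 0.0
    return density, accuracy
```

Under this definition, an unfiltered dense depth map has a density of 100%, and filtering trades density for accuracy by discarding unreliable pixels.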

From Table 5, it can be concluded that the sparse depth map is already highly accurate (86% of its pixels are accurate within 2 mm). However, because so few points are labeled, the sparse pseudo-labels are limited as supervision. The semi-dense pseudo-labels, after the wrong points are removed, have the highest accuracy.

Analysis of Different Supervisions. Table 6 reports the accuracy of depth maps estimated by models trained under different supervision. The network trained with semi-dense depth map pseudo-labels achieves the second-best accuracy, comparable to that of the supervised CasMVSNet, while outperforming the networks based on the photometric consistency loss and the sparse prior loss.

As shown in Figure 10, using the photometric consistency loss as supervision leads to noticeable errors at the boundaries. In contrast, using semi-dense pseudo-labels as the network's supervision allows for more precise depth map predictions, especially at the border between foreground and background.

Table 7 shows the results of point clouds reconstructed by models with different supervisions. We use the overall and the F score under the 1 mm threshold as the evaluation metrics. Methods based on semi-dense pseudo-labels have the best quality.
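For readers unfamiliar with these point cloud metrics, the sketch below shows how an overall score and an F-score at a distance threshold are commonly computed. It assumes the usual conventions: overall is the mean of accuracy and completeness, and the F-score is the harmonic mean of precision and recall. It uses a brute-force nearest-neighbour search and omits the observability masks and outlier handling of the official benchmark tools, so it is illustrative only; the function names are hypothetical.

```python
import math


def nearest_distances(src, dst):
    """Distance from each point in src to its nearest neighbour in dst (brute force)."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def point_cloud_metrics(pred, gt, threshold=1.0):
    """Return (accuracy, completeness, overall, f_score) for two point lists.

    pred, gt: non-empty lists of (x, y, z) tuples in the same unit as threshold.
    """
    d_pred = nearest_distances(pred, gt)  # reconstruction -> ground truth
    d_gt = nearest_distances(gt, pred)    # ground truth -> reconstruction
    accuracy = sum(d_pred) / len(d_pred)
    completeness = sum(d_gt) / len(d_gt)
    overall = (accuracy + completeness) / 2.0
    precision = sum(d < threshold for d in d_pred) / len(d_pred)
    recall = sum(d < threshold for d in d_gt) / len(d_gt)
    if precision + recall == 0:
        f_score = 0.0
    else:
        f_score = 2.0 * precision * recall / (precision + recall)
    return accuracy, completeness, overall, f_score
```

Lower accuracy, completeness, and overall values are better because they are mean distances, whereas a higher F-score is better.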

By comparing the performance of different methods, we aimed to provide further evidence of the effectiveness of our self-supervised approach utilizing pseudo-labels.

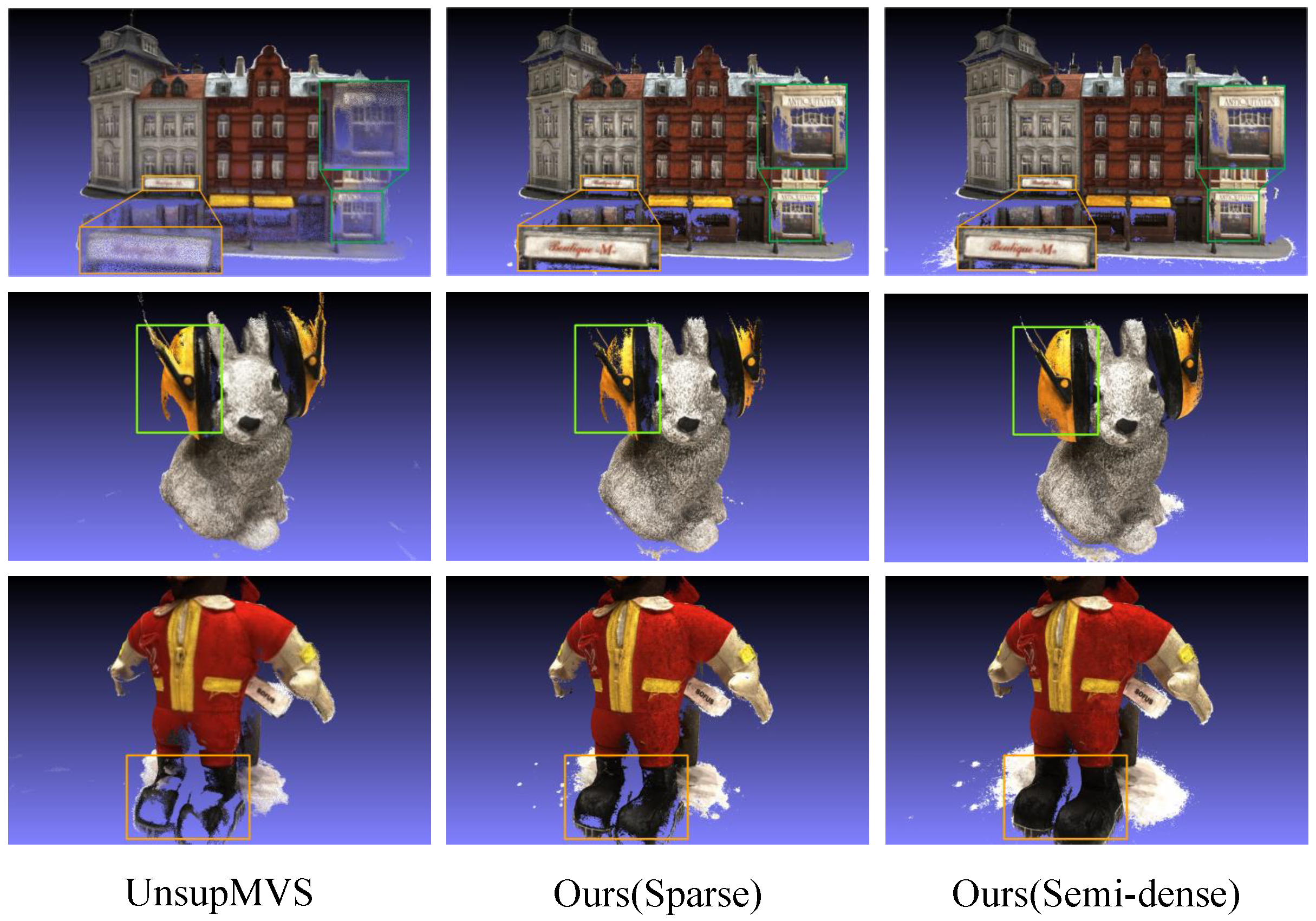

Figure 11 displays the reconstruction results for scan9, scan33, and scan49 in the DTU dataset. UnsupMVS [10] is an unsupervised MVS method based on photometric consistency loss. Compared with the other methods, the self-supervised MVS method based on pseudo-labels produces denser 3D point clouds with more complete local details.

In addition, we compared the point clouds generated by our method with those obtained using traditional methods. The sparse point clouds were generated from the SfM described in Section 3.2. Table 8 shows that although the sparse point cloud has acceptable accuracy, its completeness is compromised by the sparse distribution of points. Notably, our self-supervised method using semi-dense pseudo-labels outperforms the traditional method COLMAP. In addition, when the dense depth map reconstructed by COLMAP is used as a pseudo-label for training, the accuracy is not only better than that of COLMAP itself but also close to that of the best supervised learning method, with even stronger generalization ability. These results highlight the strengths of our proposed pseudo-label approach.

Statistical Analysis. To further show the effectiveness of the proposed semi-dense pseudo-labels, a statistical analysis based on the paired t-test is conducted for CasMVSNet [35] and CasMVSNet [35] combined with semi-dense pseudo-labels. The statistic $t$ of the paired t-test is calculated as:

$$t = \frac{\bar{d} - \mu_d}{s_d / \sqrt{n}}$$

where $\bar{d}$ denotes the sample mean of differences, $\mu_d$ denotes the hypothesized population mean difference, $s_d$ denotes the standard deviation of differences, and $n$ denotes the sample size. The degrees of freedom are $df = n - 1$. The p-value is determined by checking the corresponding threshold table based on the $t$ statistic.
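As a minimal sketch of the paired t statistic described above, the following function computes the statistic and its degrees of freedom from two equally long lists of paired scores. The function name `paired_t_statistic` is hypothetical, and the sketch assumes the sample (n − 1) standard deviation of the differences, which is the standard choice for this test. It does not compute the p-value, which is read from a t-distribution table as stated above.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b, mu_d=0.0):
    """Return (t, degrees_of_freedom) for paired samples.

    scores_a, scores_b: per-scene scores of the two methods, in the same order.
    mu_d: hypothesized population mean difference (0 under the usual null hypothesis).
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are required")
    mean_d = statistics.fmean(diffs)
    s_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if s_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = (mean_d - mu_d) / (s_d / math.sqrt(n))
    return t, n - 1
```

The null hypothesis is rejected when the p-value corresponding to the returned statistic and degrees of freedom falls below the chosen significance level.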

Table 9 shows the results of the paired t-tests for CasMVSNet [35] and CasMVSNet with our semi-dense pseudo-labels on the DTU dataset and the Tanks and Temples dataset. The significance level $\alpha$ is set to 0.05. The p-values for the DTU dataset and the intermediate subset are 0.8260 and 0.2794, respectively, indicating no significant difference between the results of CasMVSNet and CasMVSNet with our semi-dense pseudo-labels on these datasets. This suggests that CasMVSNet with our semi-dense pseudo-labels is competitive with CasMVSNet on these datasets. On the advanced subset, the p-value is 0.0076, indicating a significant difference between the results of the two methods. Therefore, our method significantly outperforms CasMVSNet on this subset.