Giving Historical Photographs a New Perspective: Introducing Camera Orientation Parameters as New Metadata in a Large-Scale 4D Application

Abstract

1. Introduction

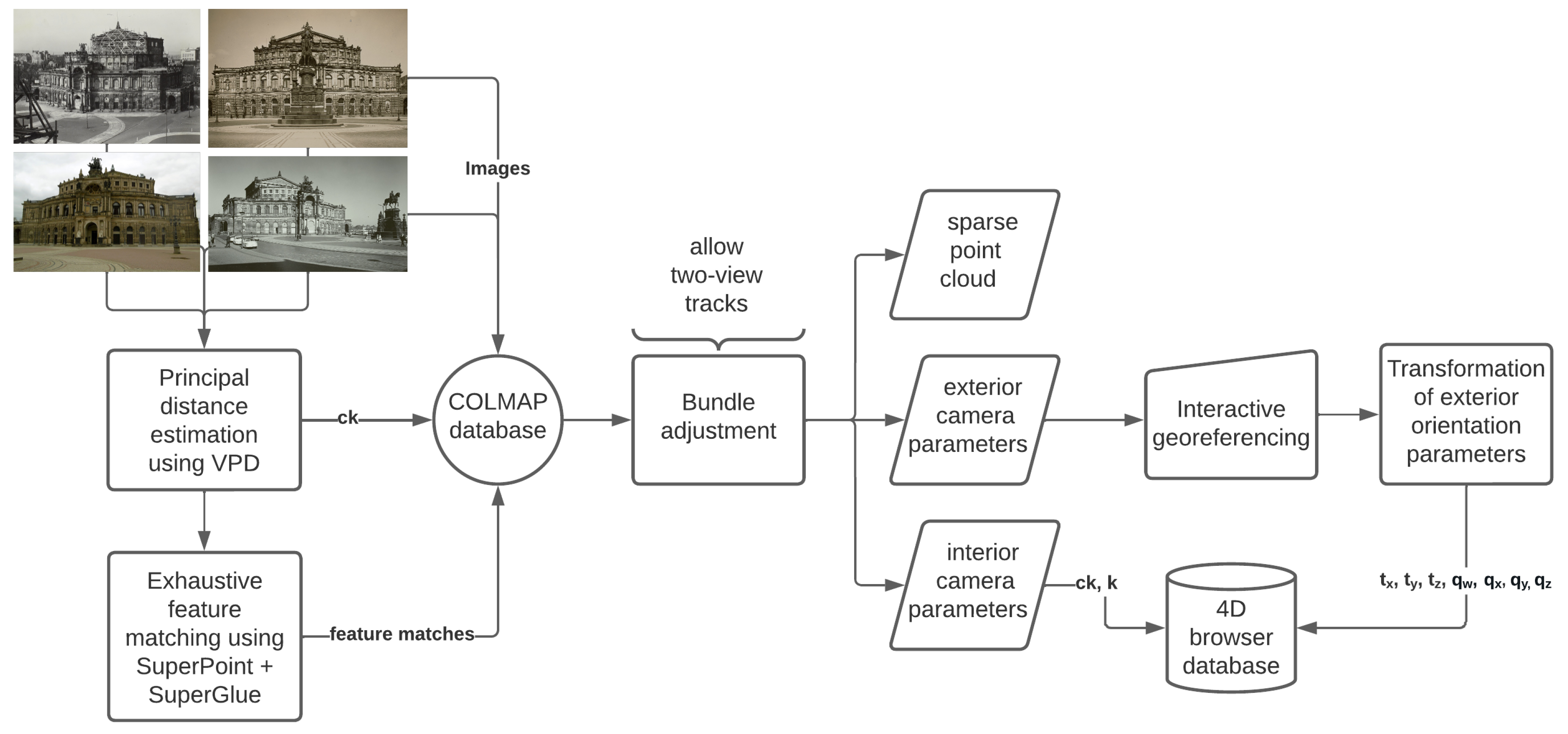

2. Related Research

2.1. Geoprocessing of Historical Photographs

2.2. 3D/4D Research Platforms

3. Materials and Methods

3.1. Data

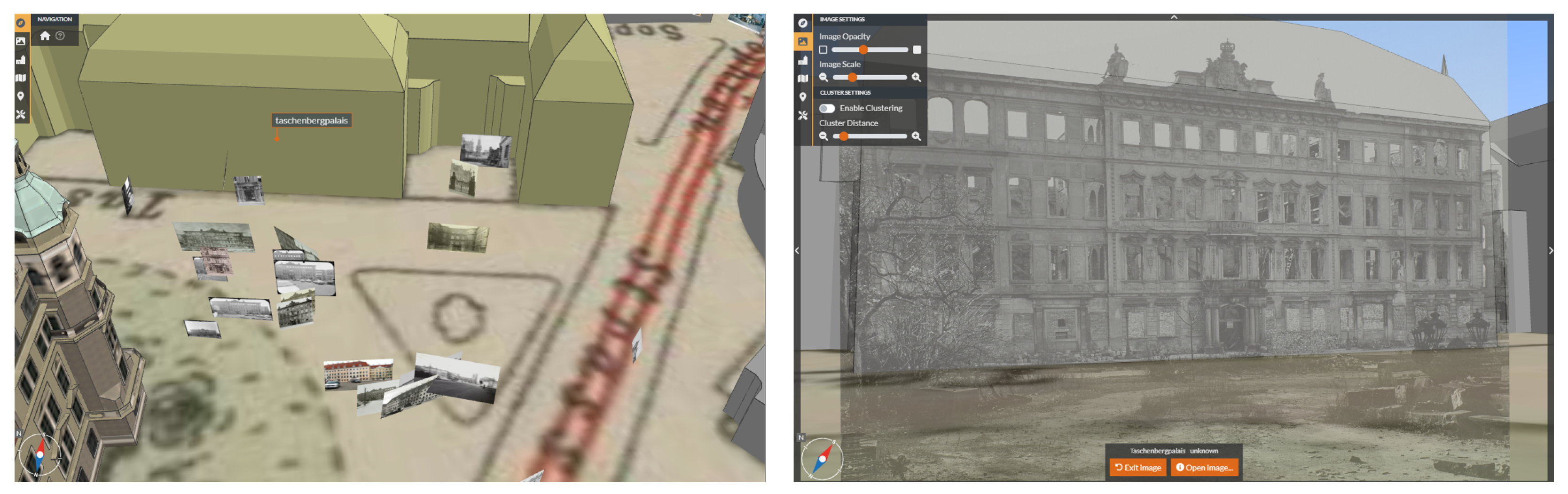

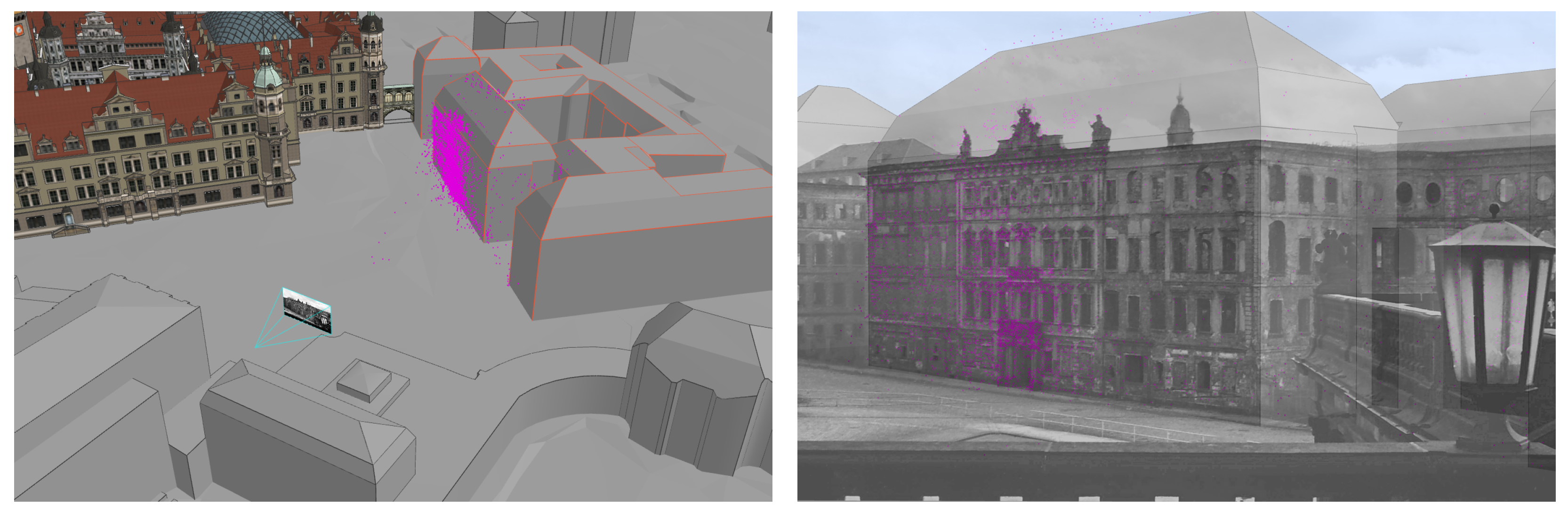

3.2. Structure of the 4D Browser and Its Database

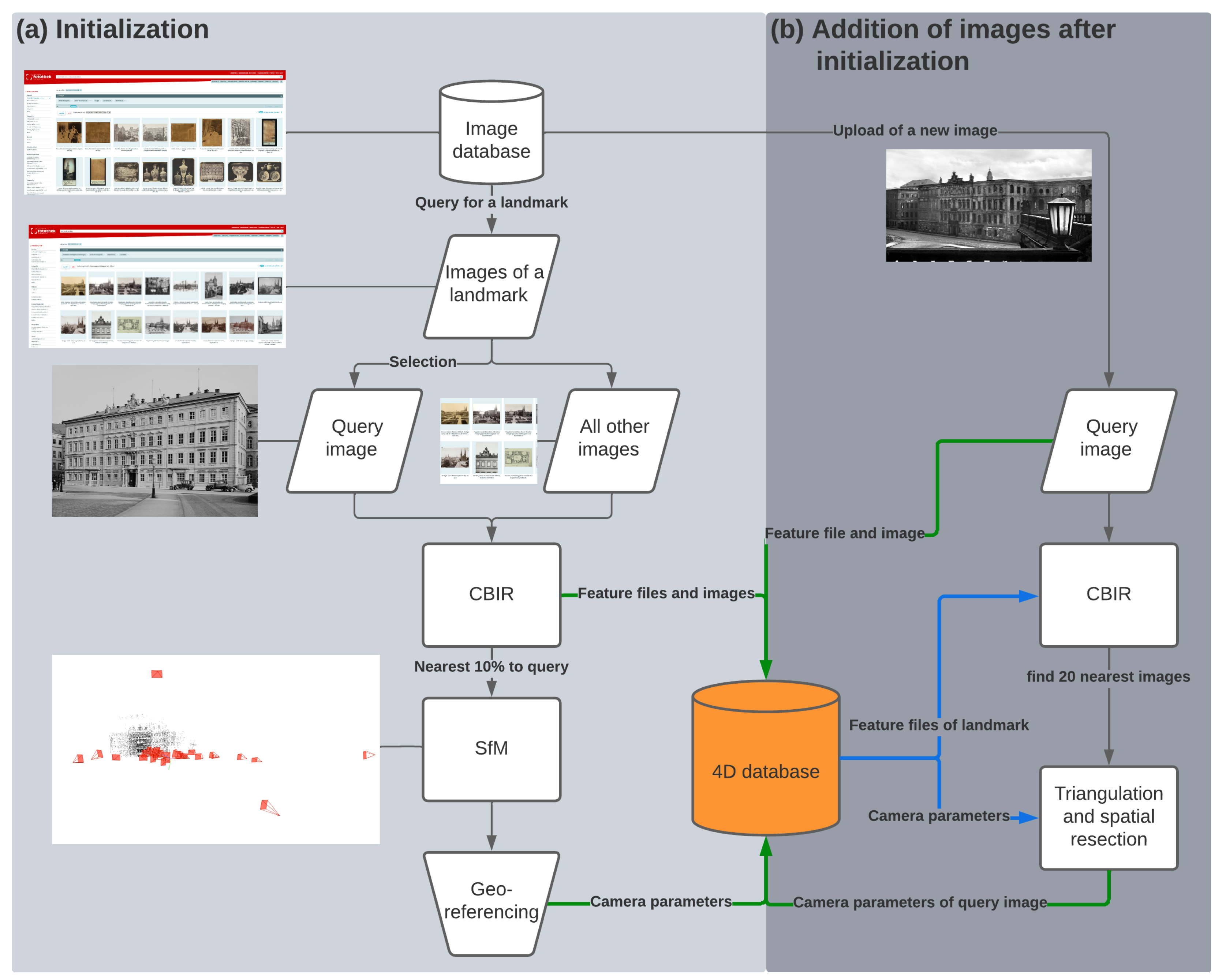

3.3. Initialization of a New Dataset

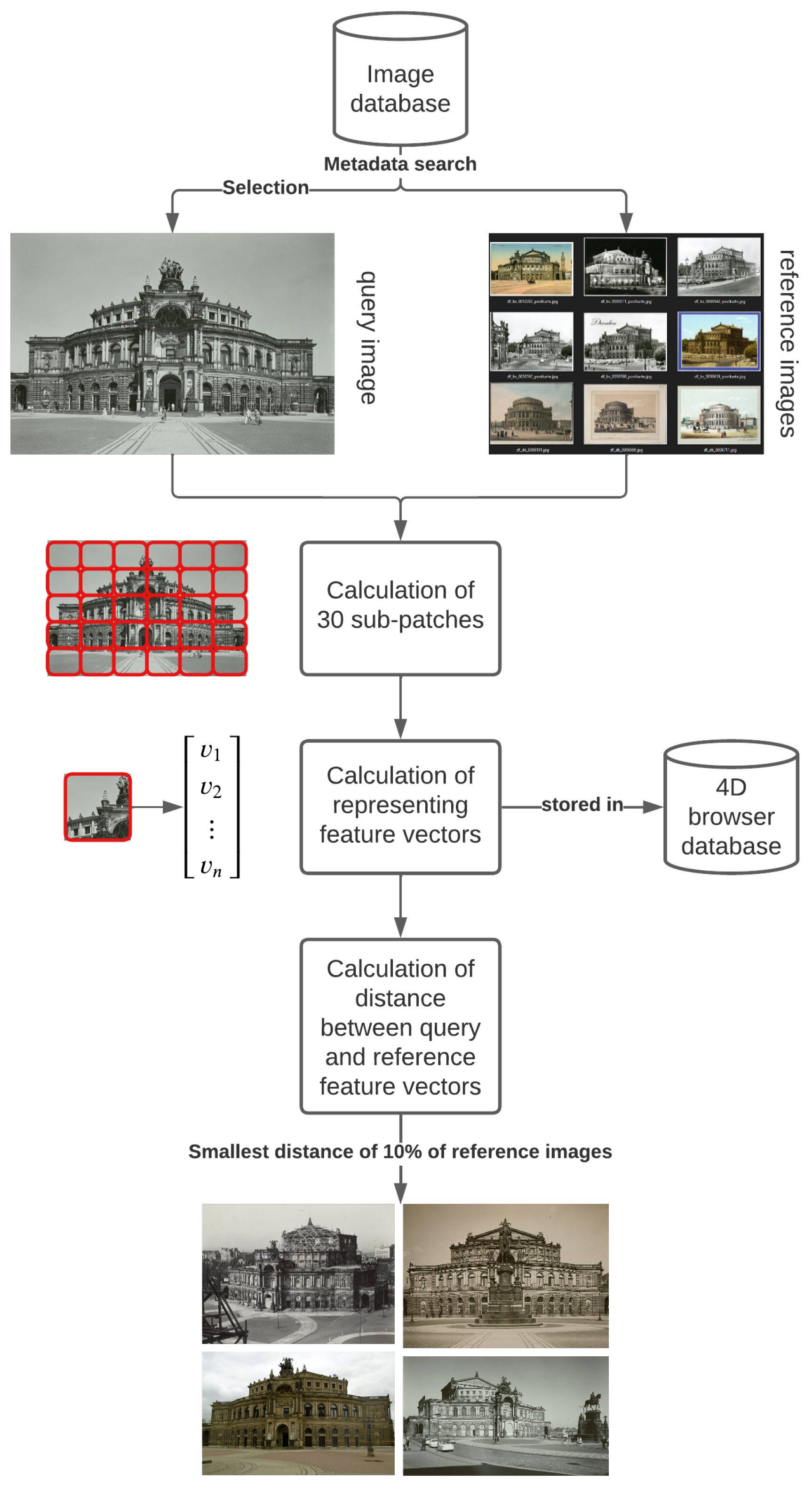

3.3.1. Layer Extraction Approach (LEA)

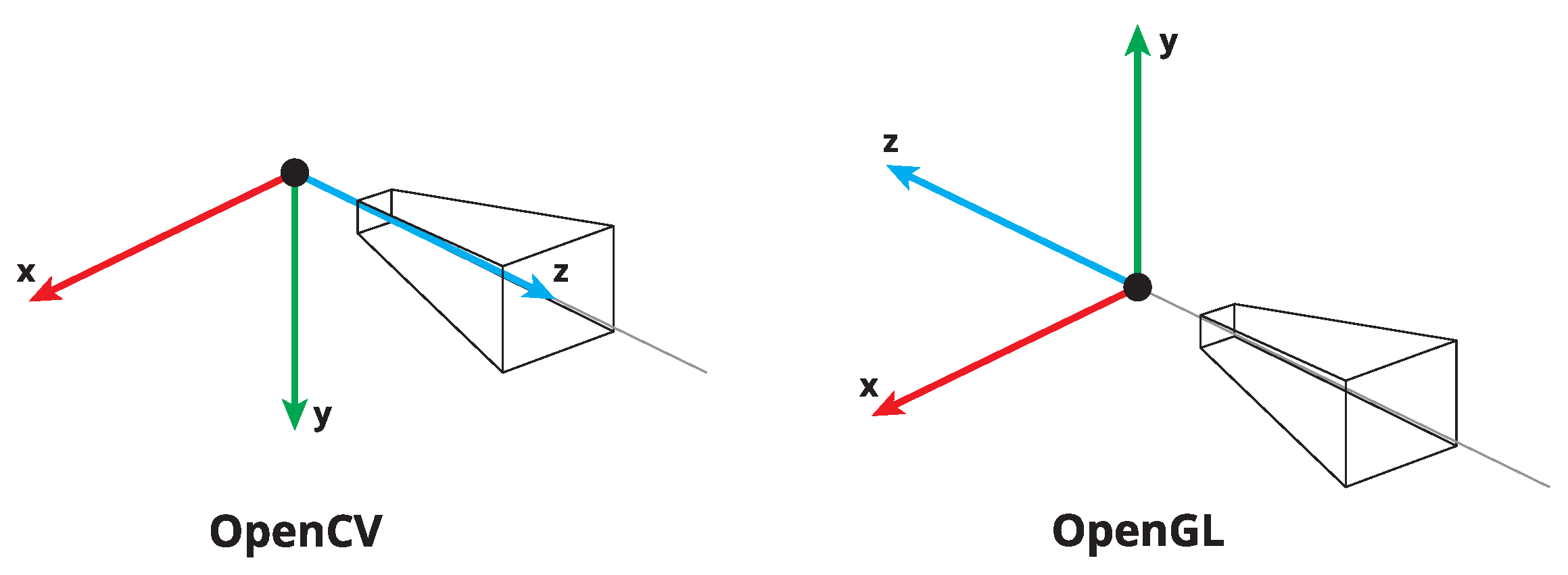

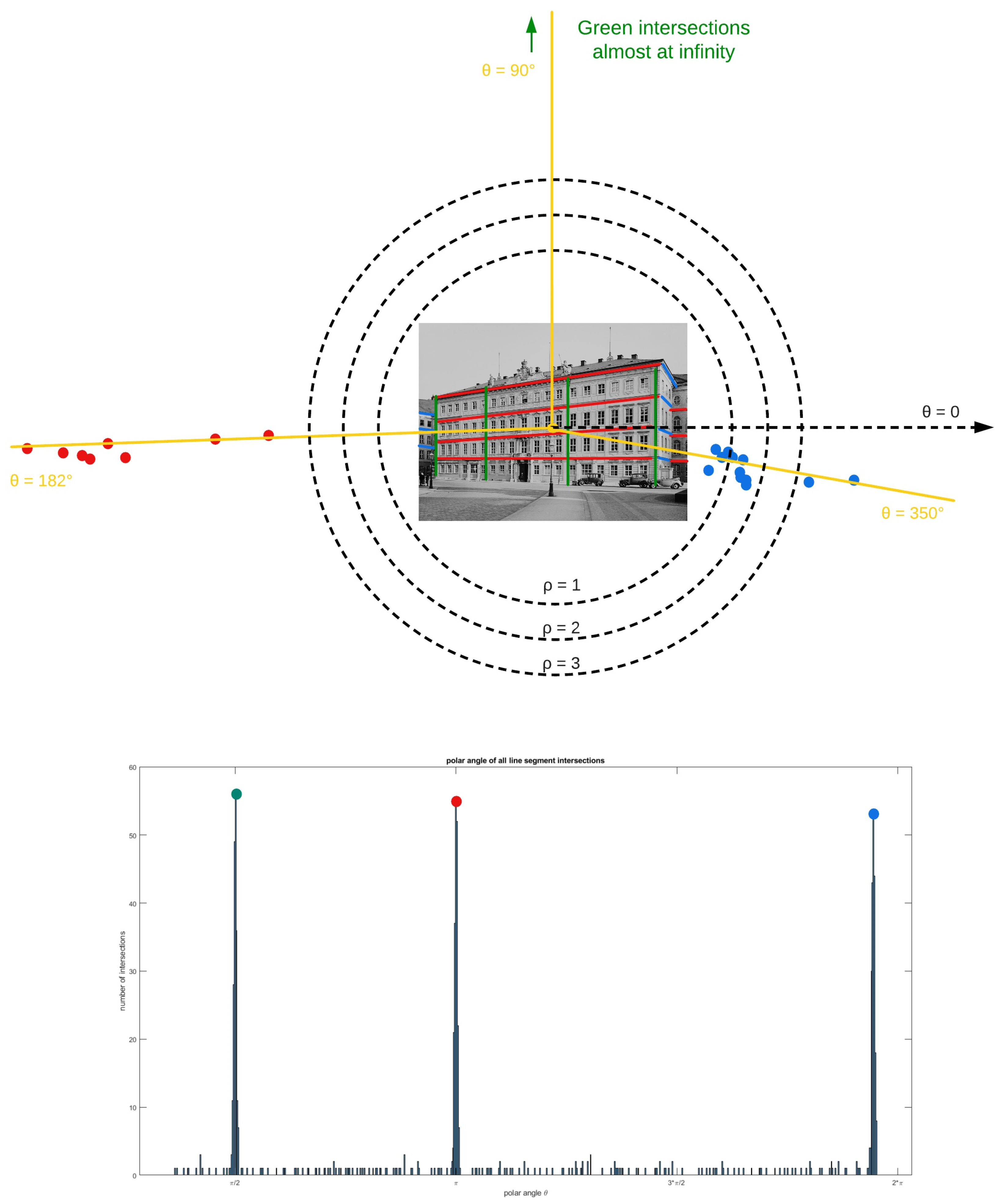

3.3.2. Estimation of Initial Interior Camera Orientation Parameters

3.3.3. Structure-from-Motion for Historical Photographs

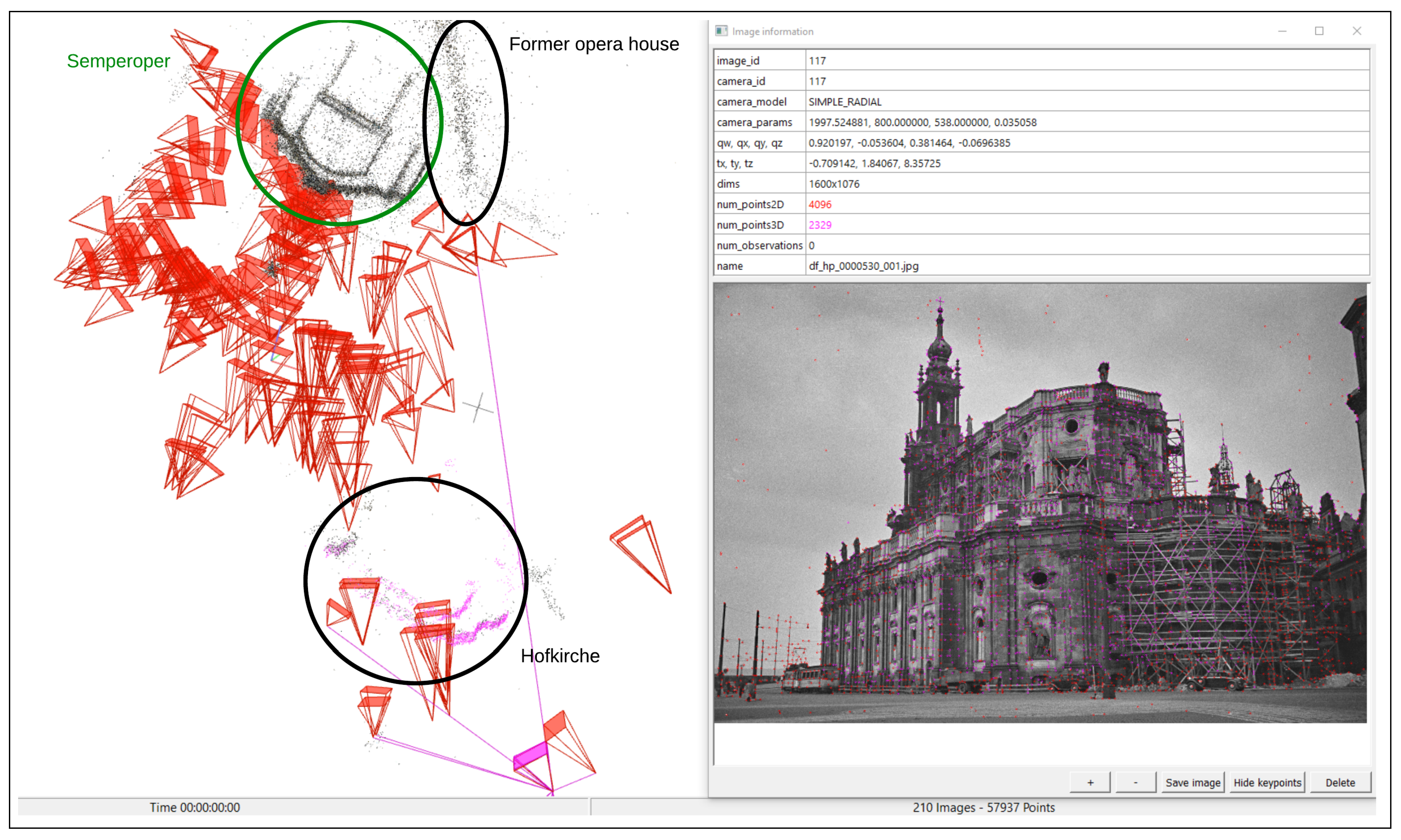

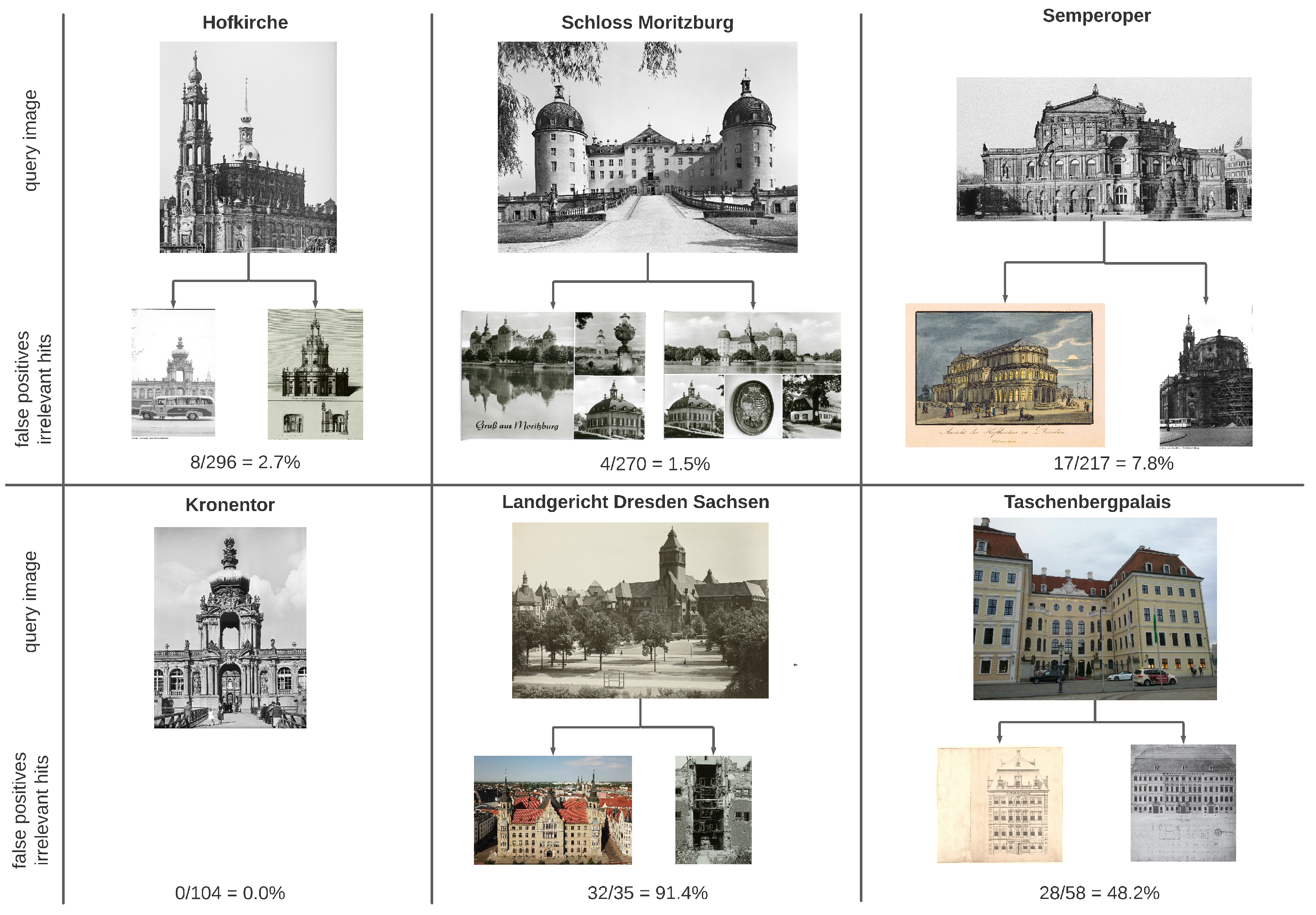

3.4. Data Extension

3.5. Limitations

4. Results

4.1. Taschenbergpalais

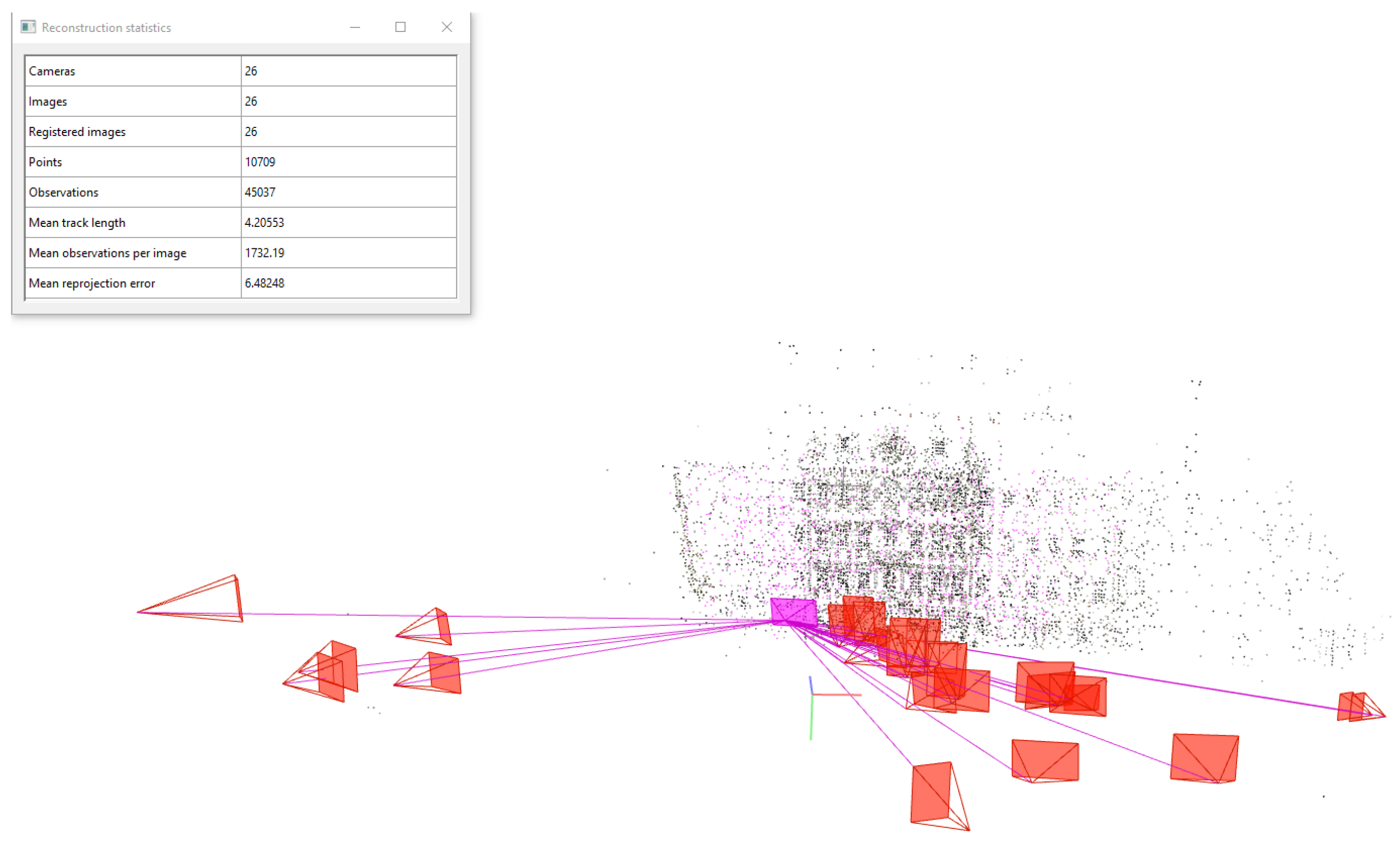

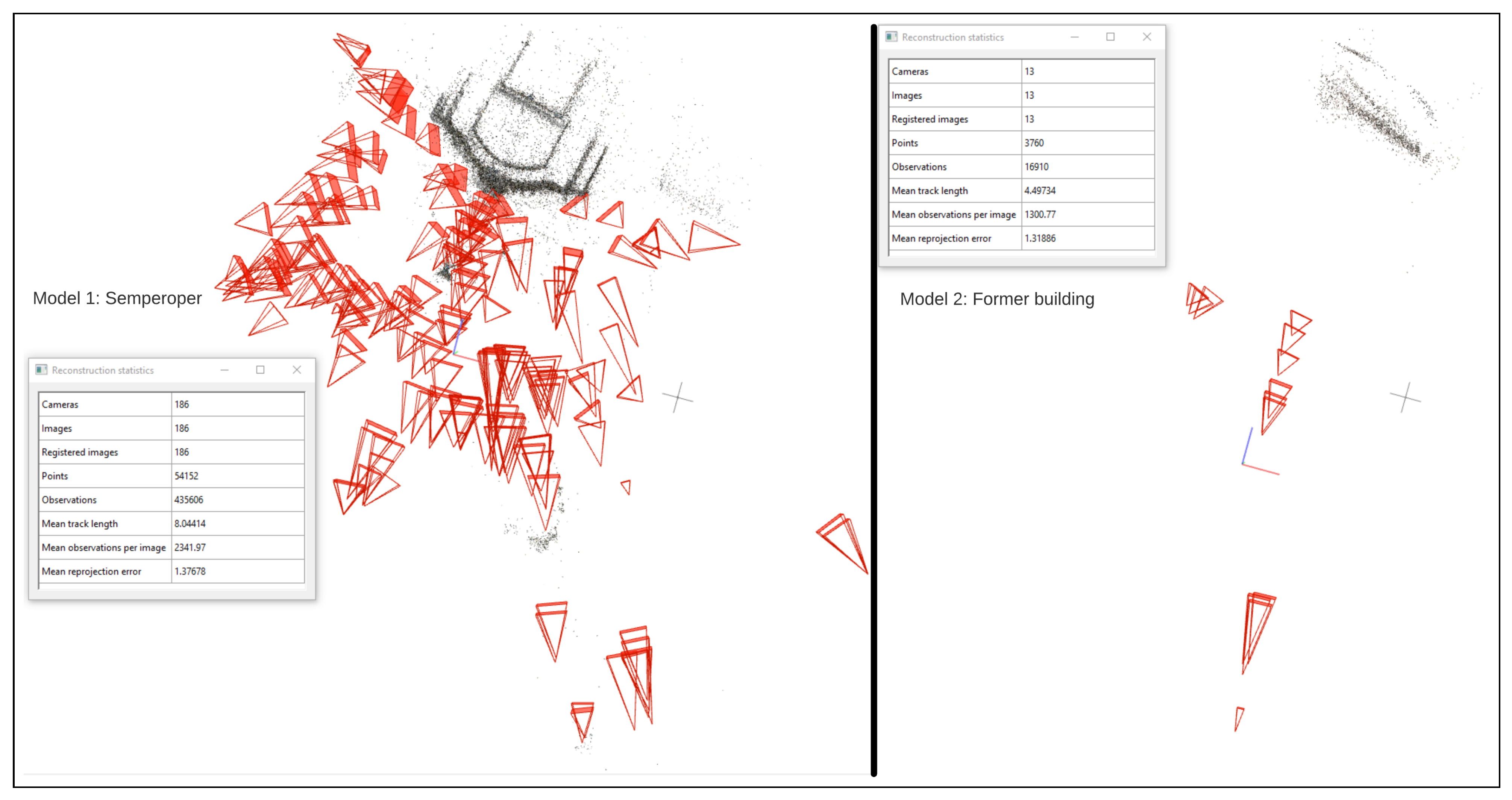

4.2. Semperoper

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 3D | three-dimensional |
| 4D | four-dimensional |
| GIS | geographic information system |
| SfM | Structure-from-Motion |
| CBIR | content-based image retrieval |
| LOD | level of detail |
| SLUB | The Saxon State and University Library Dresden |
| LEA | layer extraction approach |
| CNN | convolutional neural network |
| pkl | pickle file extension |
| kB | kilobytes |
| VPD | vanishing point detection |
| VP | vanishing point |
| hloc | hierarchical localization toolbox |
| TLS | terrestrial laser scanning |

Appendix A


| Relevant Photographs | Relevant Drawings | Other Photographs | Other Drawings |
|---|---|---|---|
| 22 | 8 | 23 | 5 |

| Relevant Photographs | Drawings/Postcards | Other Photographs (Hofkirche) | Former Opera House | Stereo Photos |
|---|---|---|---|---|
| 178 | 8 | 7 | 22 | 2 |

| Dataset | Relevant Photographs | Reconstructed Cameras | Sparse Points | Mean Reprojection Error |
|---|---|---|---|---|
| Hofkirche | 296 | 235 | 56,359 | 1.47 |
| Schloss Moritzburg | 270 | 227 | 26,698 | 1.36 |
| Semperoper | 186 | 186 | 57,937 | 1.35 |
| Kronentor | 104 | 104 | 34,130 | 1.49 |
| Landgericht Dresden Sachsen | 3 | NA | NA | NA |
| Taschenbergpalais | 30 | 26 | 10,709 | 6.48 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Maiwald, F.; Bruschke, J.; Schneider, D.; Wacker, M.; Niebling, F. Giving Historical Photographs a New Perspective: Introducing Camera Orientation Parameters as New Metadata in a Large-Scale 4D Application. Remote Sens. 2023, 15, 1879. https://doi.org/10.3390/rs15071879
