A Dynamic Effective Class Balanced Approach for Remote Sensing Imagery Semantic Segmentation of Imbalanced Data

Abstract

1. Introduction

2. Data

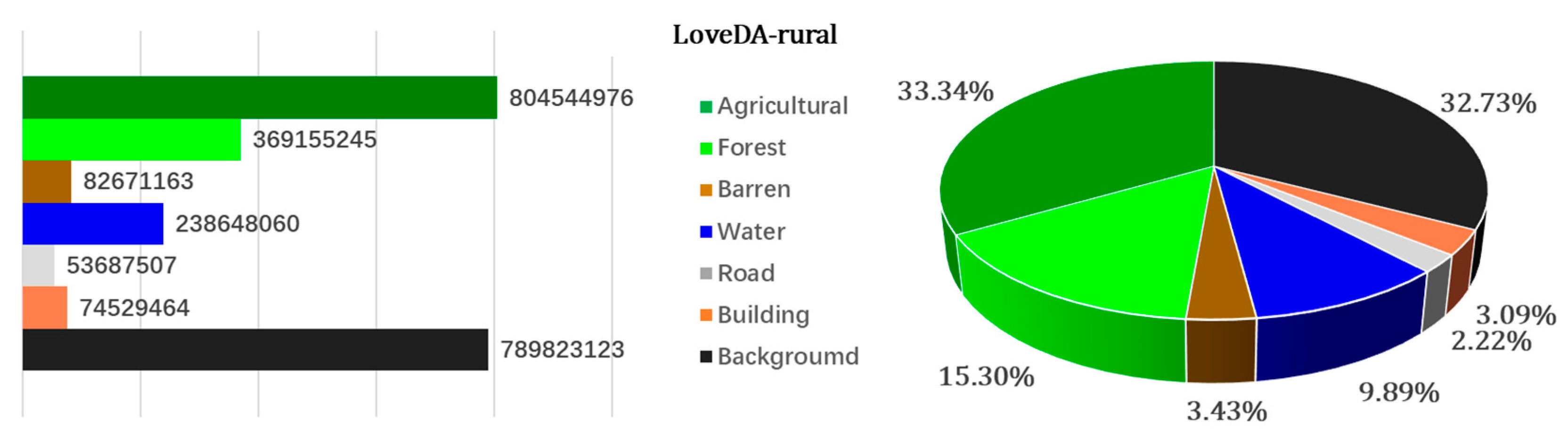

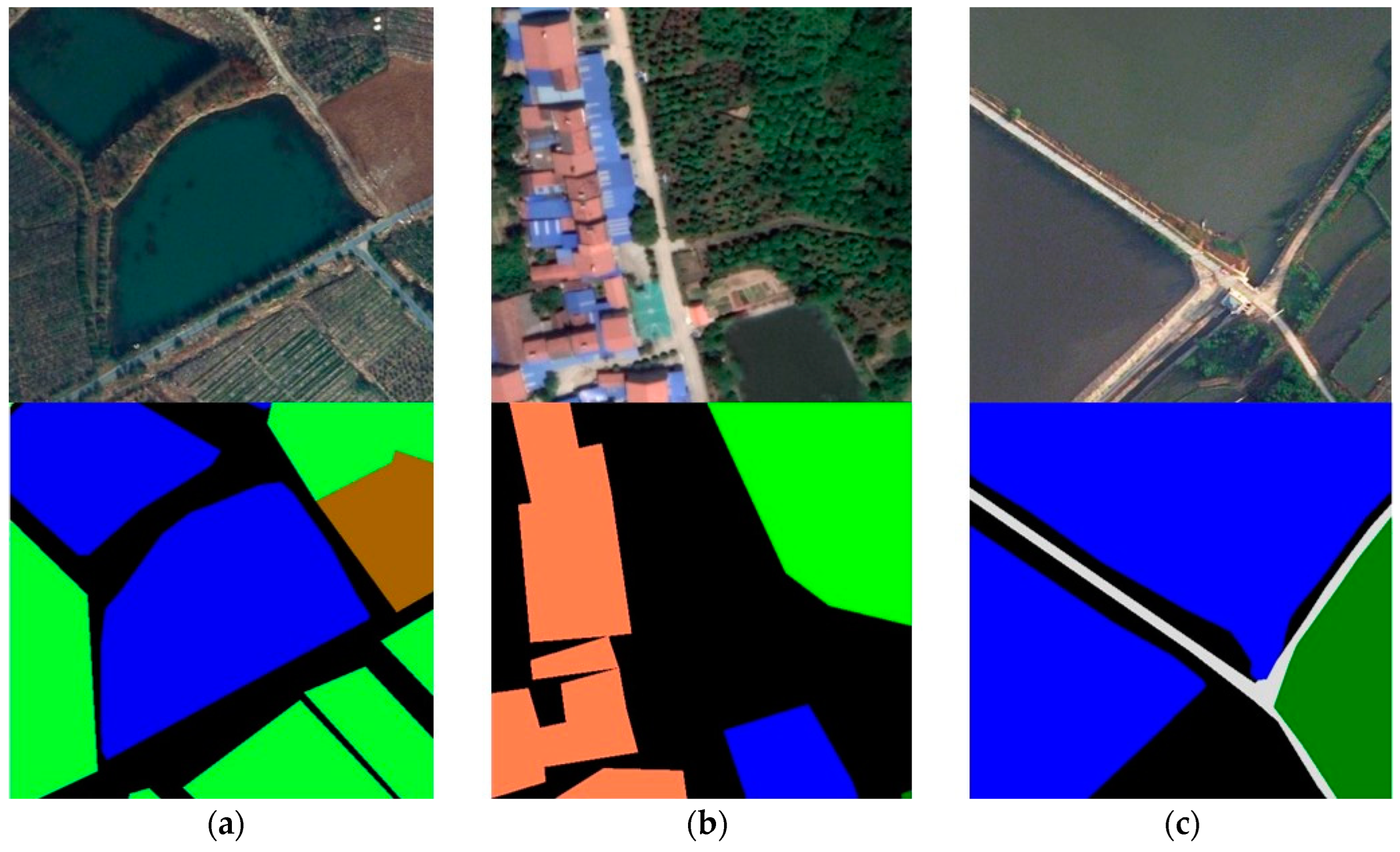

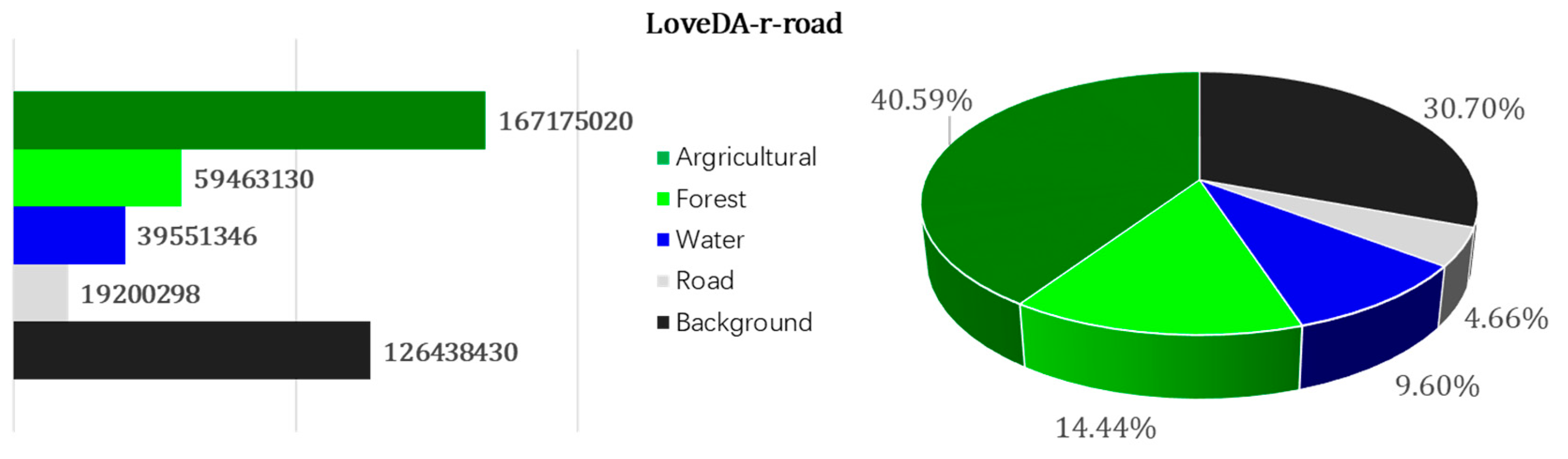

2.1. The LoveDA Dataset

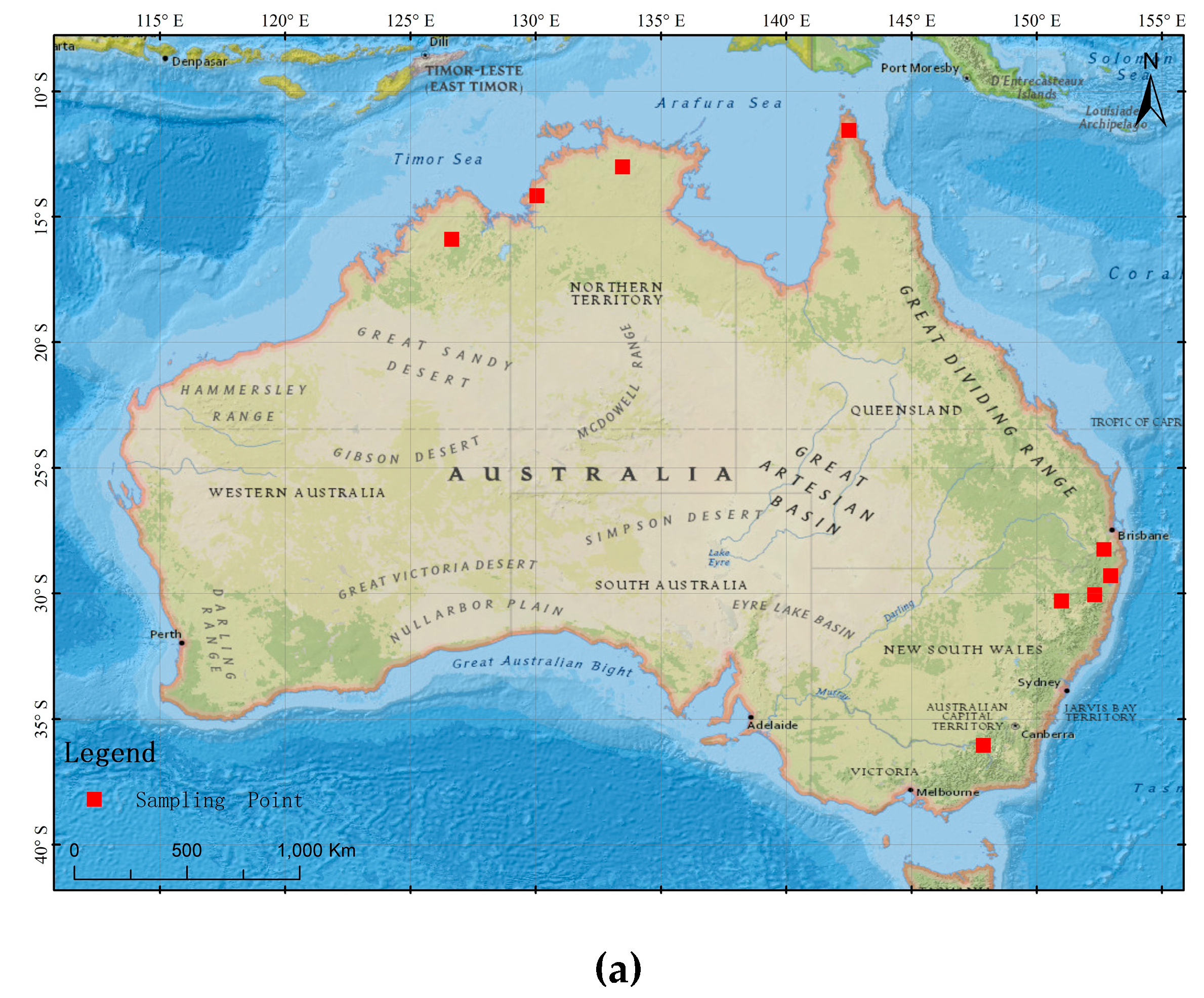

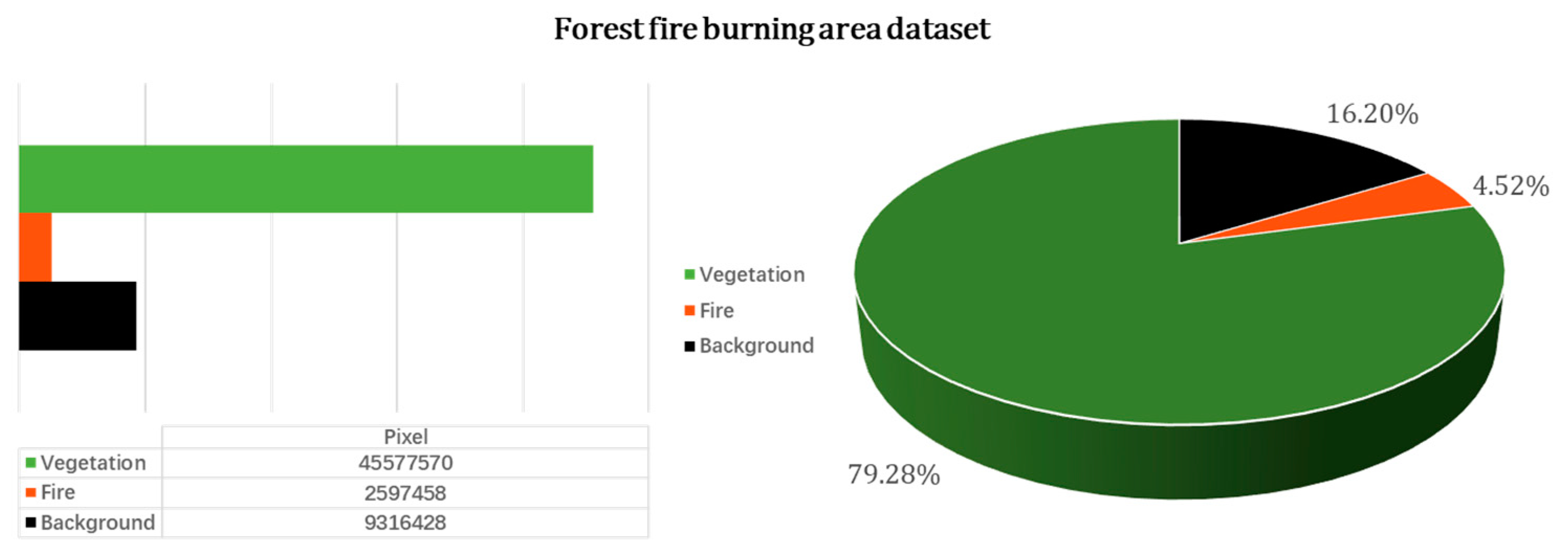

2.2. Landsat-8 Forest Fire Burning Area Images

3. Methods

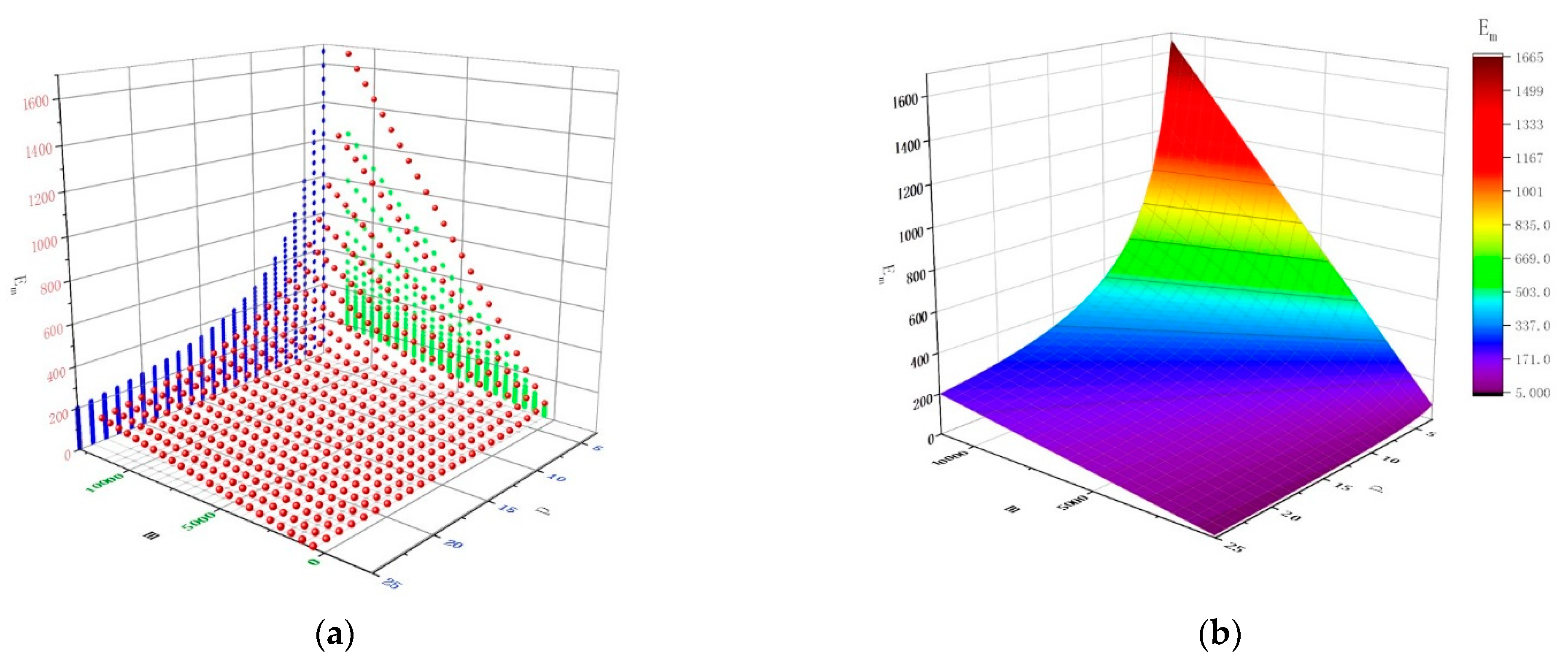

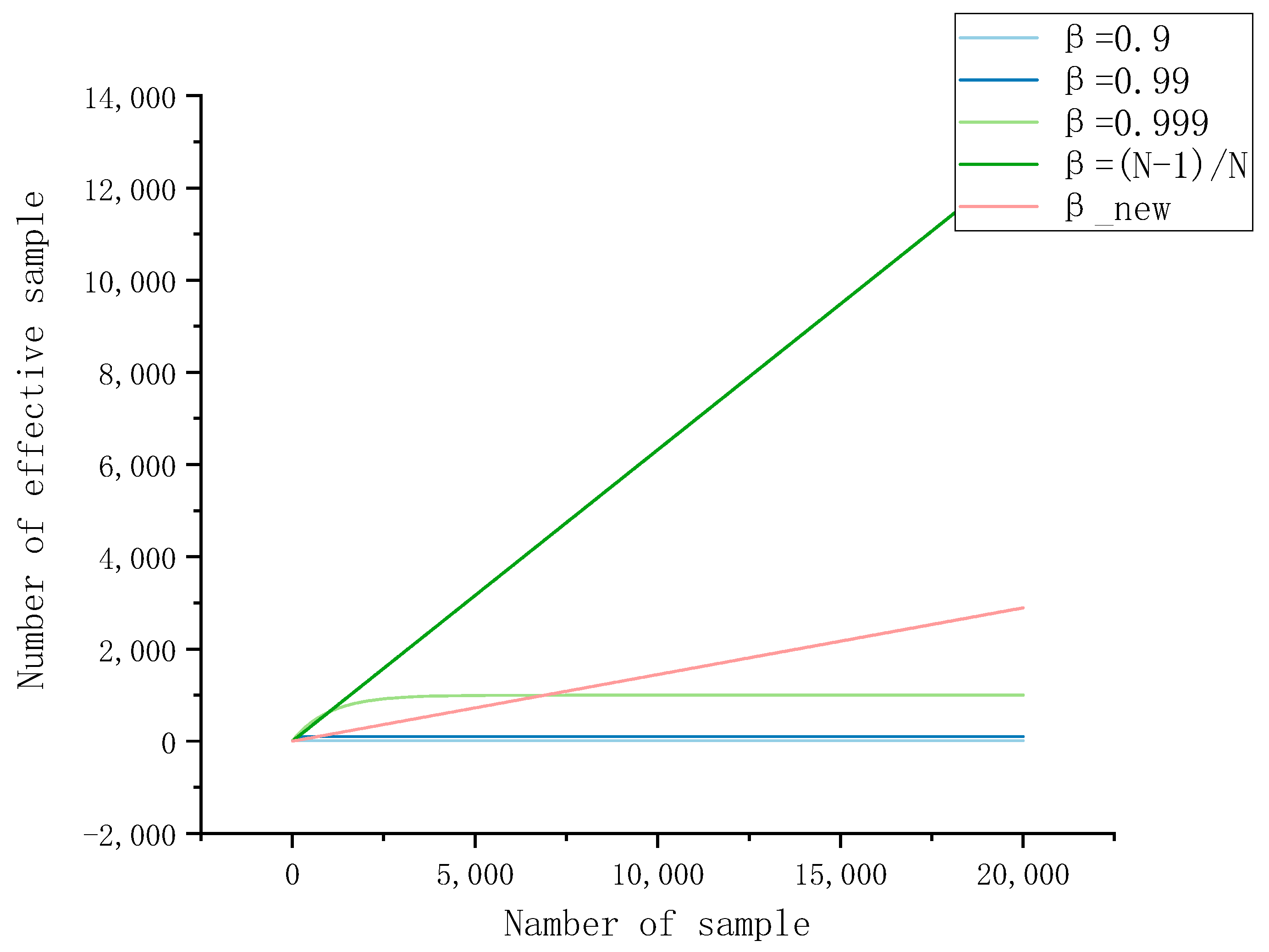

3.1. Effective Sample Space

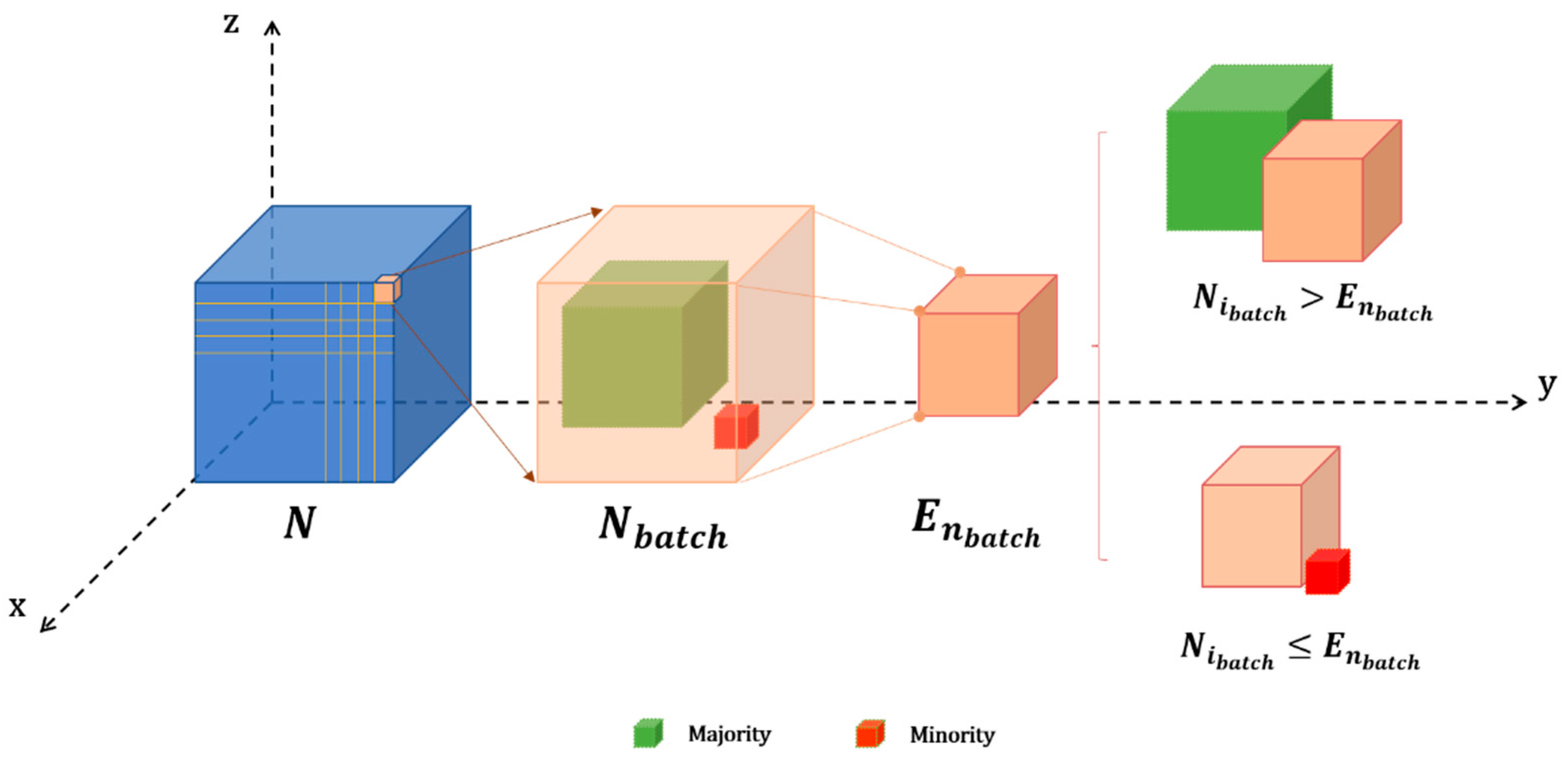

3.2. Dynamic Effective Sample Class Balance (DECB) Weighting Method

4. Results and Discussion

4.1. Environmental Configuration and Parameter Details

4.2. Network Structure and Loss Function

4.3. Evaluation Metrics

4.4. Results in the Loveda Dataset

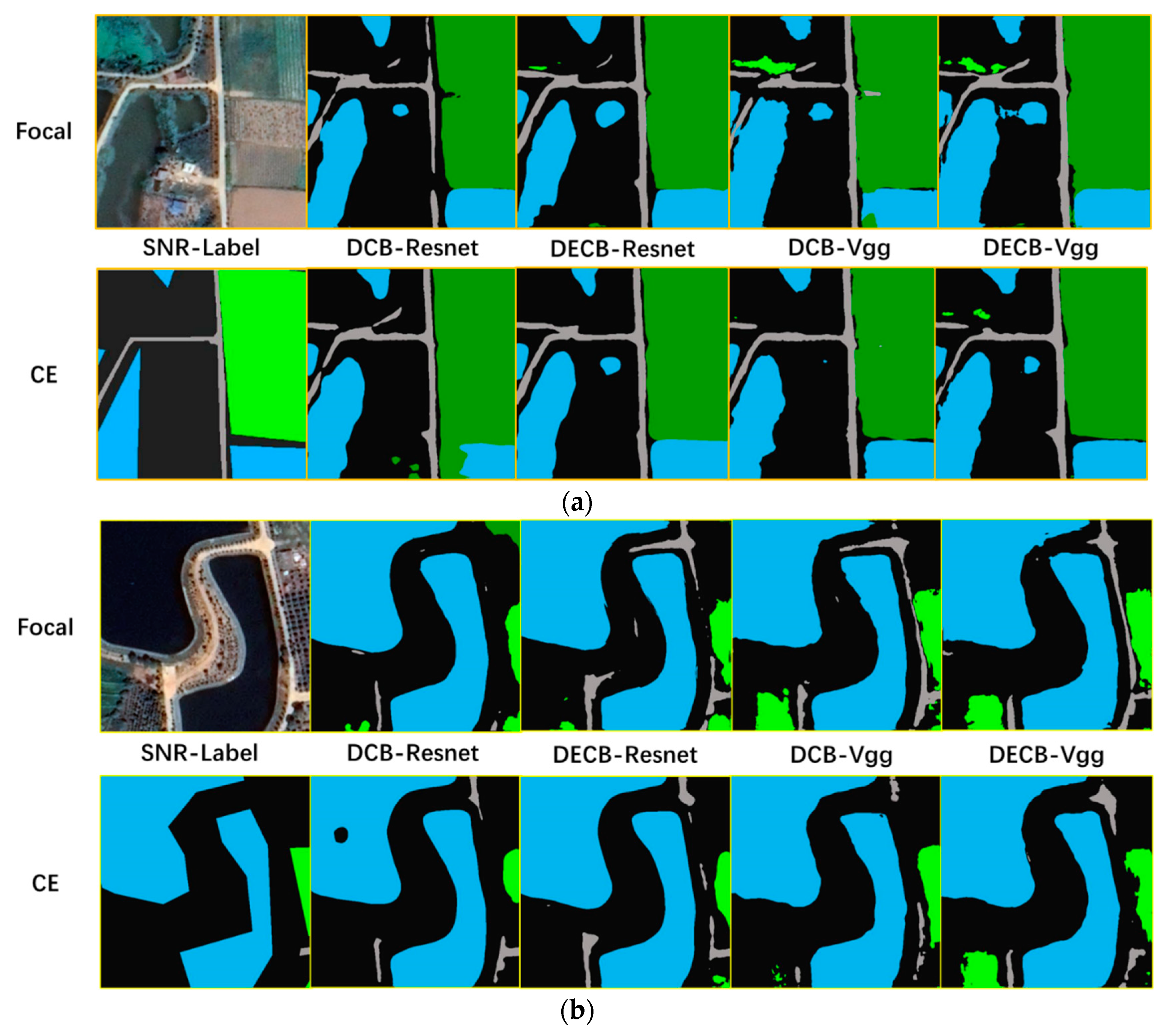

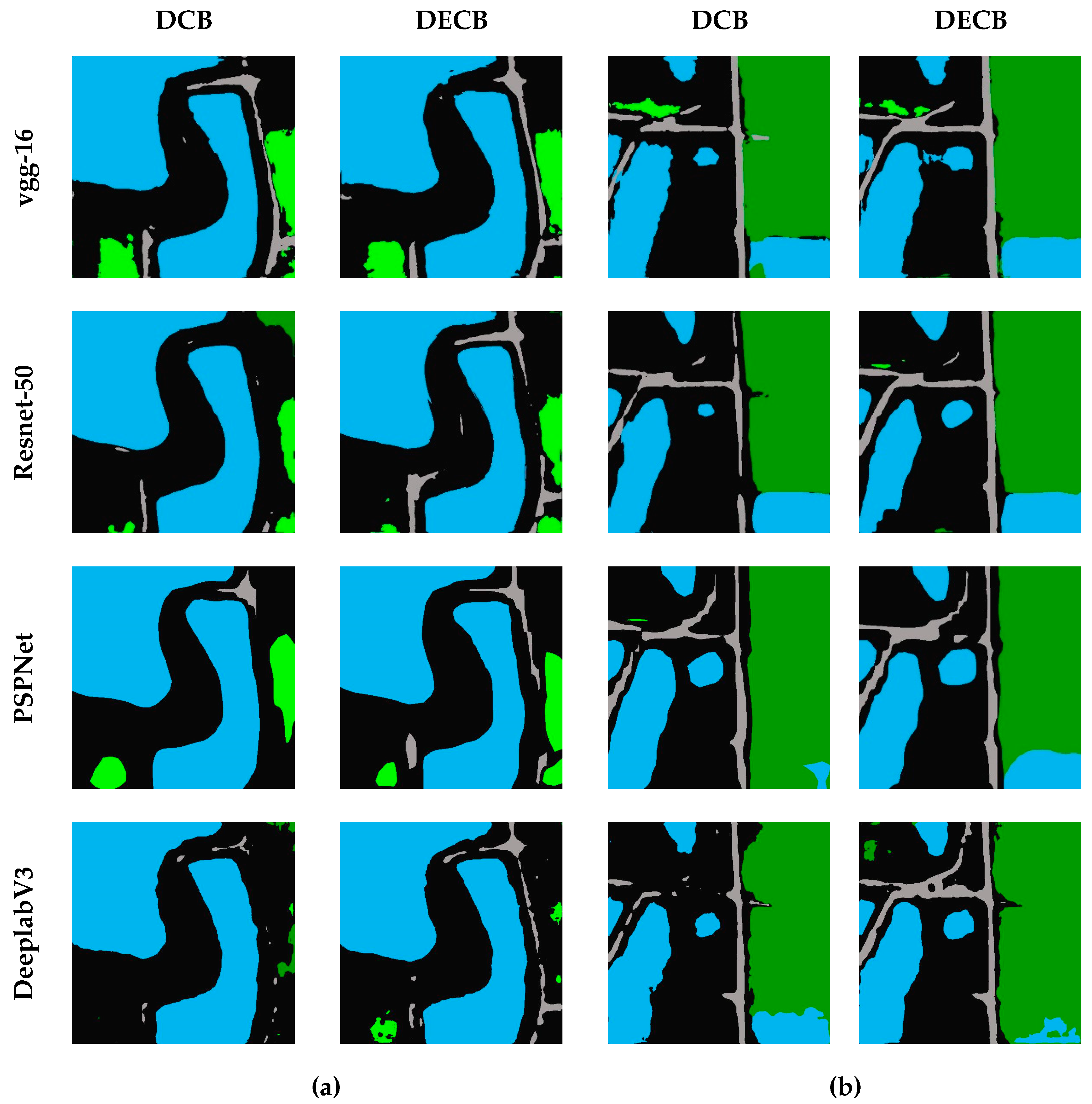

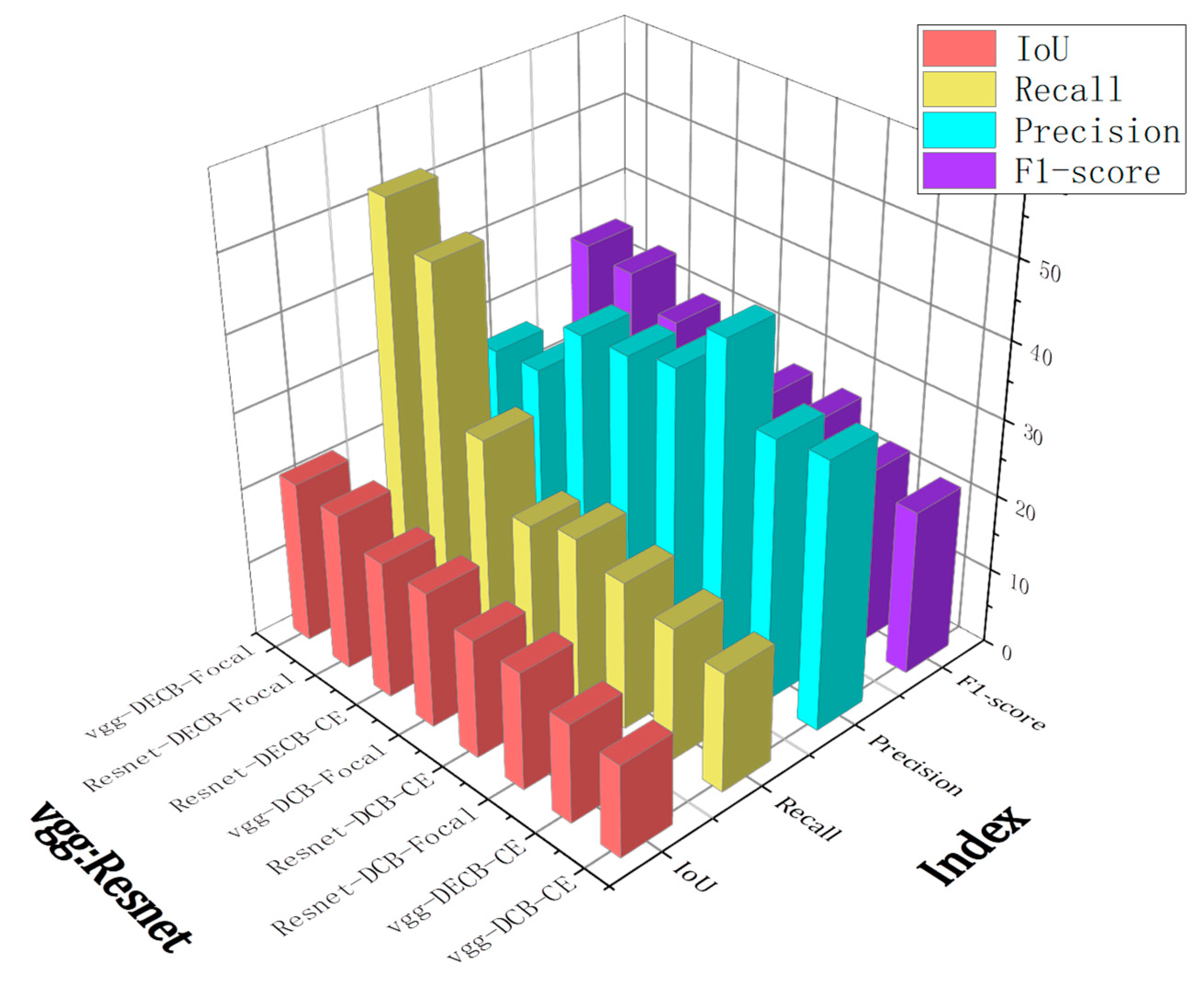

4.5. Results in the Forest Fire Burning Area Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442. [Google Scholar] [CrossRef]

- Johnston, J.; Paugam, R.; Whitman, E.; Schiks, T.; Cantin, A. Remote Sensing of Fire Behavior. Encyclopedia of Wildfire and Wildland-Urban Interface (WUI) Fires; Springer: Berlin/Heidelberg, Germany, 2019. [Google Scholar]

- Ghahreman, R.; Rahimzadegan, M. Calculating net radiation of freshwater reservoir to estimate spatial distribution of evaporation using satellite images. J. Hydrol. 2022, 605, 127392. [Google Scholar] [CrossRef]

- Brinkerhoff, C.B.; Gleason, C.J.; Zappa, C.J.; Raymond, P.A.; Harlan, M.E. Remotely Sensing River Greenhouse Gas Exchange Velocity Using the SWOT Satellite. Glob. Biogeochem. Cycles 2022, 36, e2022GB007419. [Google Scholar] [CrossRef]

- Weng, F.; Yu, X.; Duan, Y.; Yang, J.; Wang, J. Advanced Radiative Transfer Modeling System (ARMS): A New-Generation Satellite Observation Operator Developed for Numerical Weather Prediction and Remote Sensing Applications. Adv. Atmos. Sci. 2020, 37, 3–8. [Google Scholar] [CrossRef]

- Brown, A.; Ferentinos, K. Exploring the Potential of Sentinels-1 & 2 of the Copernicus Mission in Support of Rapid and Cost-effective Wildfire Assessment. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 262–276. [Google Scholar]

- Kotaridis, I.; Lazaridou, M. Remote sensing image segmentation advances: A meta-analysis. ISPRS J. Photogramm. Remote Sens. 2021, 173, 309–322. [Google Scholar] [CrossRef]

- Guo, X.; Chen, Y.; Liu, X.; Zhao, Y. Extraction of snow cover from high-resolution remote sensing imagery using deep learning on a small dataset. Remote Sens. Lett. 2020, 11, 66–75. [Google Scholar] [CrossRef]

- Cheng, G.; Han, J. A Survey on Object Detection in Optical Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28. [Google Scholar] [CrossRef]

- Thenkabail, P. Remotely Sensed Data Characterization, Classification, and Accuracies; CRC Press: Boca Raton, FL, USA, 2015. [Google Scholar]

- Cheng, H.D.; Jiang, X.H.; Sun, Y.; Wang, J. Color image segmentation: Advances and prospects. Pattern Recognit. 2001, 34, 2259–2281. [Google Scholar] [CrossRef]

- Derivaux, S.; Lefèvre, S.; Wemmert, C.; Korczak, J. Watershed Segmentation of Remotely Sensed Images Based on a Supervised Fuzzy Pixel Classification. In Proceedings of the IEEE International Geosciences and Remote Sensing Symposium (IGARSS), Denver, CO, USA, 31 July–4 August 2006. [Google Scholar] [CrossRef]

- Blaschke, T.; Lang, S.; Lorup, E.; Strobl, J.; Zeil, P. Object-Oriented Image Processing in an Integrated GIS/Remote Sensing Environment and Perspectives for Environmental Applications. Environ. Inf. Plan. Politics Public 2000, 2, 555–570. [Google Scholar]

- Blaschke, T.; Burnett, C.; Pekkarinen, A. Image Segmentation Methods for Object-based Analysis and Classification. Remote Sensing Image Analysis: Including the Spatial Domain; Springer: Berlin/Heidelberg, Germany, 2004; pp. 211–236. [Google Scholar]

- Navulur, K. Multispectral Image Analysis Using the Object-Oriented Paradigm; CRC Press: Boca Raton, FL, USA, 2006; pp. 1–155. [Google Scholar]

- Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181. [Google Scholar] [CrossRef]

- Alshehhi, R.; Marpu, P.R. Hierarchical graph-based segmentation for extracting road networks from high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2017, 126, 245–260. [Google Scholar] [CrossRef]

- Wang, Y.; Meng, Q.; Qi, Q.; Yang, J.; Ying, L. Region Merging Considering Within- and Between-Segment Heterogeneity: An Improved Hybrid Remote-Sensing Image Segmentation Method. Remote Sens. 2018, 10, 781. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 833–851. [Google Scholar]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Yan, Z.; Su, Y.; Sun, H.; Yu, H.; Ma, W.; Chi, H.; Cao, H.; Chang, Q. SegNet-based left ventricular MRI segmentation for the diagnosis of cardiac hypertrophy and myocardial infarction. Comput. Methods Programs Biomed. 2022, 227, 107197. [Google Scholar] [CrossRef] [PubMed]

- Xia, W.; Ma, C.; Liu, J.; Liu, S.; Chen, F.; Zhi, Y.; Duan, J. High-Resolution Remote Sensing Imagery Classification of Imbalanced Data Using Multistage Sampling Method and Deep Neural Networks. Remote Sens. 2019, 11, 2523. [Google Scholar] [CrossRef]

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114. [Google Scholar] [CrossRef]

- Feng, W.; Huang, W.; Ye, H.; Zhao, L. Synthetic Minority Over-Sampling Technique Based Rotation Forest for the Classification of Unbalanced Hyperspectral Data. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2651–2654. [Google Scholar]

- Zhang, Y.; Kang, B.; Hooi, B.; Yan, S.; Feng, J. Deep Long-Tailed Learning: A Survey. arXiv 2021, arXiv:2110.04596. [Google Scholar]

- Aggarwal, U.; Popescu, A.; Hudelot, C. Minority Class Oriented Active Learning for Imbalanced Datasets. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milano, Italy, 10–15 January 2021; pp. 9920–9927. [Google Scholar]

- Zhang, C.; Pan, T.-Y.; Chen, T.; Zhong, J.; Fu, W.; Chao, W.-L. Learning with Free Object Segments for Long-Tailed Instance Segmentation. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022. [Google Scholar]

- Buda, M.; Maki, A.; Mazurowski, M. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2017, 106, 249–259. [Google Scholar] [CrossRef]

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284. [Google Scholar] [CrossRef]

- Park, S.; Lim, J.; Jeon, Y.; Choi, J.Y. Influence-Balanced Loss for Imbalanced Visual Classification. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 715–724. [Google Scholar]

- Zhang, S.; Li, Z.; Yan, S.; He, X.; Sun, J. Distribution Alignment: A Unified Framework for Long-tail Visual Recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2361–2370. [Google Scholar]

- Ren, J.; Yu, C.; Sheng, S.; Ma, X.; Zhao, H.; Yi, S.; Li, H. Balanced meta-softmax for long-tailed visual recognition. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 7–8 June 2020; p. 351. [Google Scholar]

- Shu, J.; Xie, Q.; Yi, L.; Zhao, Q.; Zhou, S.; Xu, Z.; Meng, D. Meta-weight-net: Learning an explicit mapping for sample weighting. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; p. 172. [Google Scholar]

- Hossain, M.S.; Betts, J.M.; Paplinski, A.P. Dual Focal Loss to address class imbalance in semantic segmentation. Neurocomputing 2021, 462, 69–87. [Google Scholar] [CrossRef]

- Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-Balanced Loss Based on Effective Number of Samples. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9260–9269. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 2999–3007. [Google Scholar]

- Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation. arXiv 2021, arXiv:2110.08733. [Google Scholar]

- USGS. US Geological Survey. Available online: https://www.usgs.gov/landsat-missions/landsat-8 (accessed on 1 March 2022).

- Wang, Z.; Yang, P.; Liang, H.; Zheng, C.; Yin, J.; Tian, Y.; Cui, W. Semantic Segmentation and Analysis on Sensitive Parameters of Forest Fire Smoke Using Smoke-Unet and Landsat-8 Imagery. Remote Sens. 2022, 14, 45. [Google Scholar] [CrossRef]

- NIFC. National Interagency Fire Center. Available online: https://www.nifc.gov/ (accessed on 1 October 2020).

- DAFF. Department of Agriculture Fisheries and Forestry. Available online: https://www.agriculture.gov.au/ (accessed on 1 October 2020).

- Kganyago, M.; Shikwambana, L. Assessment of the characteristics of recent major wildfires in the USA, Australia and Brazil in 2018–2019 using multi-source satellite products. Remote Sens. 2020, 12, 1803. [Google Scholar] [CrossRef]

- Schroeder, W.; Oliva, P.; Giglio, L.; Quayle, B.; Lorenz, E.; Morelli, F. Active fire detection using Landsat-8/OLI data. Remote Sens. Environ. 2016, 185, 210–220. [Google Scholar] [CrossRef]

- Lu, S.; Gao, F.; Piao, C.; Ma, Y. Dynamic Weighted Cross Entropy for Semantic Segmentation with Extremely Imbalanced Data. In Proceedings of the 2019 International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Dublin, Ireland, 16–18 October 2019; pp. 230–233. [Google Scholar]

- De Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Pinheiro, P.O.; Collobert, R.; Dollár, P. Learning to segment object candidates. In Proceedings of the 28th International Conference on Neural Information Processing Systems—Volume 2, Montreal, Canada, 7–12 December 2015; pp. 1990–1998. [Google Scholar]

- Chinchor, N.A.; Sundheim, B.M. MUC-5 evaluation metrics. In Proceedings of the Fifth Message Understanding Conference (MUC-5), Baltimore, MD, USA, 25–27 August 1993. [Google Scholar]

| Band | Channel | Applications | |

|---|---|---|---|

| 1 | Coastal | 0.433~0.453 | Active fire detection and environmental observation in coastal zones. |

| 2 | Blue | 0.450~0.515 | Visible light spectrum used for geographical identification. |

| 3 | Green | 0.525~0.600 | |

| 4 | Red | 0.630~0.680 | |

| 5 | NIR | 0.845~0.885 | Active fire detection and information extraction of vegetative cover. |

| 6 | SWIR1 | 1.560~1.660 | Active fire detection, vegetation drought detection, and mineral information extraction. |

| 7 | SWIR2 | 2.100~2.300 | Active fire detection, vegetation drought detection, mineral information extraction, and multi-temporal analysis. |

| Batch Size | DCB Weights | DECB Weights | |||||

|---|---|---|---|---|---|---|---|

| 4 | 1048576 | 0.9999934 | 151623.8390 | 150000 | 0.8569 | 21690.3920 | 0.9793 |

| 8 | 2097152 | 0.9999967 | 303247.1785 | 200000 | 0.9046 | 28920.3560 | 0.9862 |

| 12 | 3145728 | 0.9999978 | 454870.5181 | 400000 | 0.8728 | 57840.2134 | 0.9816 |

| 16 | 4194304 | 0.9999984 | 606493.8575 | 600000 | 0.8569 | 86760.0703 | 0.9793 |

| Programming Environment | Auxiliary Library | Hardware Configuration | Other Software |

|---|---|---|---|

| Python3.6.13 | h5py2.10.0 | CPU:InterE5-2620v3@2.4 GHz | Envi5.3.1 |

| torch1.2.0 | GDAL3.0.4 | GPU:NVIDIA TITAN X | ArcGIS PRO |

| CUDA11.6 | opencv4.1.2 | RAM:16 GB | |

| cuDNN8.0.4 | numpy1.17.0 | Numba0.26.0 |

| Name of Dataset | Number of Samples | Initial Learning Rates | Decay Rate | Batch Size | Epoch |

|---|---|---|---|---|---|

| LoveDA-rural | 8884 | 0.96 | 8 | 120 | |

| LoveDA-r-road | 1571 | 0.96 | 12 | 150 | |

| Forest fire burning area | 2022 | 0.96 | 4 | 150 |

| Backbone | Loss-Function | Background | Building | Road | Water | Barren | Forest | Agricultural | Average |

|---|---|---|---|---|---|---|---|---|---|

| vgg-16 | DECB- Focal | 55.89 | 68.06 | 62.74 | 73.59 | 46.92 | 72.51 | 73.35 | 64.72 |

| vgg-16 | DCB-Focal | 56.11 | 67.18 | 61.00 | 73.69 | 47.02 | 73.01 | 73.24 | 64.46 |

| resnet-50 | DECB- Focal | 56.46 | 66.1 | 61.00 | 73.85 | 47.96 | 73.15 | 73.9 | 64.63 |

| resnet-50 | DCB-Focal | 57.03 | 65.07 | 60.27 | 74.69 | 48.03 | 73.18 | 74.24 | 64.64 |

| Backbone | Loss-Function | Background | Road | Water | Forest | Agricultural | Average |

|---|---|---|---|---|---|---|---|

| vgg-16 | DECB-Focal | 53.26 | 68.79 | 70.89 | 66.52 | 72.75 | 66.44 |

| vgg-16 | DCB-Focal | 51.64 | 66.94 | 70.26 | 66.19 | 72.65 | 65.54 |

| resnet-50 | DECB-Focal | 52.94 | 68.88 | 71.06 | 66.94 | 73.33 | 66.63 |

| resnet-50 | DCB-Focal | 51.86 | 67.83 | 71.01 | 66.41 | 73.23 | 66.07 |

| vgg-16 | DECB-CE | 55.1 | 69.79 | 70.85 | 66.82 | 72.98 | 67.11 |

| vgg-16 | DCB-CE | 54.08 | 68.67 | 70.19 | 67.52 | 72.93 | 66.68 |

| resnet-50 | DECB-CE | 55.66 | 68.99 | 71.38 | 66.79 | 73.06 | 67.18 |

| resnet-50 | DCB-CE | 54.97 | 67.42 | 71.01 | 67.41 | 73.66 | 66.89 |

| Network | Weighting Methods | Background | Road | Water | Forest | Agricultural | Average |

|---|---|---|---|---|---|---|---|

| vgg-16 | DECB | 53.26 | 68.79 | 70.89 | 66.52 | 72.75 | 66.44 |

| DCB | 51.64 | 66.94 | 70.26 | 66.19 | 72.65 | 65.54 | |

| Resnet-50 | DECB | 52.94 | 68.88 | 71.06 | 66.94 | 73.33 | 66.63 |

| DCB | 51.86 | 67.83 | 71.01 | 66.41 | 73.23 | 66.07 | |

| PSPNet | DECB | 50.78 | 60.69 | 69.61 | 65.41 | 72.96 | 63.90 |

| DCB | 48.12 | 58.99 | 67.20 | 62.32 | 72.34 | 61.80 | |

| DeeplabV3 | DECB | 50.23 | 60.66 | 64.10 | 62.30 | 72.63 | 61.99 |

| DCB | 46.96 | 60.07 | 62.94 | 62.90 | 71.91 | 60.96 |

| Backbone | Loss-Function | Fire | Vegetation | Background | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| IoU | Recall | Precision | F1-Score | IoU | Recall | Precision | F1-Score | IoU | Recall | Precision | ||

| vgg-16 | DECB-Focal | 21.36 | 51.96 | 26.62 | 35.21 | 93.51 | 97.13 | 96.17 | 96.65 | 78.06 | 88.36 | 87.01 |

| vgg-16 | DCB-Focal | 17.96 | 19.57 | 35.26 | 25.17 | 93.34 | 97.22 | 95.91 | 96.56 | 77.23 | 87.9 | 86.42 |

| resnet-50 | DECB-Focal | 20.72 | 46.77 | 27.11 | 34.32 | 92.55 | 95.18 | 97.1 | 96.13 | 74.12 | 81.3 | 89.36 |

| resnet-50 | DCB-Focal | 15.59 | 19.57 | 43.63 | 27.02 | 92.83 | 97.2 | 95.38 | 96.28 | 75.32 | 88.15 | 83.81 |

| vgg-16 | DECB-CE | 13.14 | 17.51 | 34.46 | 23.22 | 95.2 | 97.47 | 97.61 | 97.54 | 85.95 | 93.97 | 90.97 |

| vgg-16 | DCB-CE | 12.23 | 15.76 | 35.31 | 21.79 | 94.65 | 97.91 | 96.6 | 97.25 | 84.58 | 92.06 | 91.24 |

| resnet-50 | DECB-CE | 18.09 | 27.37 | 34.8 | 30.64 | 94.81 | 97.41 | 97.27 | 97.34 | 84.27 | 94.22 | 88.86 |

| resnet-50 | DCB-CE | 15.72 | 21.53 | 36.81 | 27.17 | 94.7 | 97.78 | 96.78 | 97.28 | 84.22 | 93.2 | 89.74 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, Z.; Zheng, C.; Liu, X.; Tian, Y.; Chen, X.; Chen, X.; Dong, Z. A Dynamic Effective Class Balanced Approach for Remote Sensing Imagery Semantic Segmentation of Imbalanced Data. Remote Sens. 2023, 15, 1768. https://doi.org/10.3390/rs15071768

Zhou Z, Zheng C, Liu X, Tian Y, Chen X, Chen X, Dong Z. A Dynamic Effective Class Balanced Approach for Remote Sensing Imagery Semantic Segmentation of Imbalanced Data. Remote Sensing. 2023; 15(7):1768. https://doi.org/10.3390/rs15071768

Chicago/Turabian StyleZhou, Zheng, Change Zheng, Xiaodong Liu, Ye Tian, Xiaoyi Chen, Xuexue Chen, and Zixun Dong. 2023. "A Dynamic Effective Class Balanced Approach for Remote Sensing Imagery Semantic Segmentation of Imbalanced Data" Remote Sensing 15, no. 7: 1768. https://doi.org/10.3390/rs15071768

APA StyleZhou, Z., Zheng, C., Liu, X., Tian, Y., Chen, X., Chen, X., & Dong, Z. (2023). A Dynamic Effective Class Balanced Approach for Remote Sensing Imagery Semantic Segmentation of Imbalanced Data. Remote Sensing, 15(7), 1768. https://doi.org/10.3390/rs15071768