Abstract

Acquiring road information is important for smart cities and sustainable urban development. In recent years, significant progress has been made in the extraction of urban road information from remote sensing images using deep learning (DL) algorithms. However, due to the complex shape, narrowness, and high span of roads in the images, the results are often unsatisfactory. This article proposes a Seg-Road model to improve road connectivity. The Seg-Road uses a transformer structure to extract the long-range dependency and global contextual information to improve the fragmentation of road segmentation and uses a convolutional neural network (CNN) structure to extract local contextual information to improve the segmentation of road details. Furthermore, a novel pixel connectivity structure (PCS) is proposed to improve the connectivity of road segmentation and the robustness of prediction results. To verify the effectiveness of Seg-Road for road segmentation, the DeepGlobe and Massachusetts datasets were used for training and testing. The experimental results show that Seg-Road achieves state-of-the-art (SOTA) performance, with an intersection over union (IoU) of 67.20%, mean intersection over union (MIoU) of 82.06%, F1 of 91.43%, precision of 90.05%, and recall of 92.85% in the DeepGlobe dataset, and achieves an IoU of 68.38%, MIoU of 83.89%, F1 of 90.01%, precision of 87.34%, and recall of 92.86% in the Massachusetts dataset, which is better than the values for CoANet. Further, it has higher application value for achieving sustainable urban development.

1. Introduction

Roads are an important part of the geographic information system (GIS), and obtaining timely and complete road information plays an important role in digital city construction, public transportation, and unmanned vehicle navigation [1,2]. In recent years, with rapid developments in remote sensing technology, remote sensing images have been greatly improved in terms of spatial and spectral resolution, etc. Further, extracting roads from high-resolution images has gradually become a research hotspot [3]. However, manual road extraction methods are not only time-consuming but are also susceptible to subjective human factors [4,5]. With the massive amount of satellite remote sensing data generated every day, it is not feasible to rely entirely on manual intervention for road extraction. Therefore, a fast and automated road information extraction method for remote sensing images is an urgent requirement [6,7,8]. Significant research on road extraction has been conducted using remote sensing images, and many methods have been developed with varying extraction accuracies. These traditional methods can be divided into two categories, depending on the extraction task. The first kind relies on expert knowledge, road geometric and shape features, extracting the road skeleton through template matching, and knowledge-driven algorithms. This type of method has the disadvantages of high computational complexity and low automation capabilities. The second kind involves detecting all the road areas in the remote sensing image by using object-oriented concepts, such as graph-based segmentation and support vector machines (SVMs), to obtain road information. This type of method is subject to the issues of building shadow obscuration and uneven road grayscale variation, resulting in the existence of a large number of road breaks, coupled with the complex shapes of roads in remote sensing images and different scales, resulting in poor road information extraction.

To achieve fast and accurate road segmentation, road extraction based on deep learning (DL) is becoming an efficient and automated solution. However, in satellite remote sensing images, roads have a large span and are narrow, which usually leads to poor overall segmentation. Mosinska et al. argue that the use of pixel-wise losses, such as binary cross-entropy, affects the topological structure of the road distribution in the road segmentation task, and propose a loss based on higher-order topological features of linear structures to improve the overall segmentation accuracy of the model [9]. Bastani et al. concluded that the output of convolutional neural networks (CNNs) usually has high noise, which makes it difficult to obtain an accurate final result, and proposed RoadTracer [10]. RoadTracer adds an iterative post-processing measure to the CNN prediction to improve the accuracy of the model for road segmentation in remote sensing images from an a priori perspective. Zhou et al. used the encoder–decoder structure and proposed D-LinkNet [11], which uses dilated convolution to enlarge the overall receptive field of the model without reducing the resolution of the feature map, and achieved an IoU of 64.66% on the DeepGlobe dataset. Tan et al. argued that using a conventional iterative algorithm to process the prediction results of the model leads to too much focus on local information [12], yielding suboptimal results, and therefore proposed a point-based iterative graph exploration scheme to enhance the model’s perception of global information and ultimately improve the overall segmentation results. Vasu et al. argue that conventional CNN-based methods usually use pixel-wise losses for optimization [13], which cannot preserve the global connectivity of roads, and propose the use of adversarial learning to improve the segmentation of roads in remotely sensed images. Mei et al. proposed the use of a strip convolution module to capture long-range contextual information from different directions to improve the connectivity of segmentation results [14] and obtained high performance on the DeepGlobe dataset. Cao et al. argue that, for road segmentation in remote sensing images, the network needs to extract rich long-range dependencies, which is difficult for CNNs with small convolutional kernels; they therefore propose CSANet, based on a cross-scale axial attention mechanism, to achieve higher road segmentation accuracy [15]. Liu et al. proposed a CNN based on masked regions to automatically detect and segment small cracks in asphalt pavements at the pixel level [16]. Yuan et al. suggest that the accuracy of road segmentation in remote sensing images is mainly affected by the loss function and propose an attention loss function named GapLoss, which can improve the segmentation results of many current models [17]. Sun et al. propose a lightweight semantic segmentation model that incorporates attention mechanisms to obtain the predictions of the model [18]. Lian et al. proposed a DeepWindow model, guided by a CNN-based decision function, which uses a sliding window to track the road network directly from the images instead of relying on prior road segmentation [19]. Wang et al. proposed a model based on ResNet101 for pothole detection in pavement [20], and Tardy et al. proposed an automated approach based on 3D point cloud technology for road inventory [21]. Chen et al. provided a dataset consisting of optical remote sensing images and corresponding high-quality labels for road extraction [22].

The development direction of semantic segmentation in recent years has ranged from the first end-to-end CNN-based semantic segmentation algorithm fully convolutional network (FCN) to today’s transformer-based segmentation algorithms, such as SegFormer. In comparison to CNN-based methods, transformer-based methods usually use self-attention to enhance the global information extraction ability of the model and, thus, achieve better segmentation results [23,24,25]. To solve the issue of poor road segmentation in remote sensing images, such as fragmentation [12,26,27,28], this study proposes a Seg-Road segmentation model. The main contributions are as follows:

- (1) By comparing existing models, this article proposes the Seg-Road, which achieves state-of-the-art (SOTA) performance on the DeepGlobe dataset, with an IoU of 67.20%. Furthermore, Seg-Road also achieves an IoU of 68.38% on the Massachusetts dataset.

- (2) The Seg-Road proposed in this paper combines transformer- and CNN-based models [29,30,31,32], which improves the global information perception [33,34] and local information [35,36,37,38] extraction capabilities.

- (3) This paper proposes the use of PCS for improving the fragmentation of road segmentation in remote sensing images and analyses the general shortcomings of the current model and future research directions.

2. Data and Methods

2.1. Data Collection

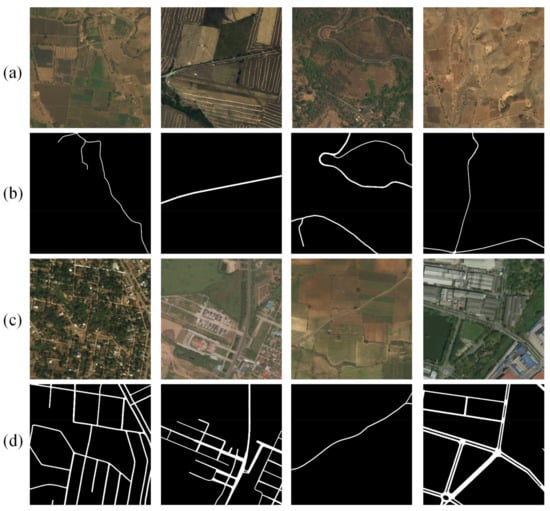

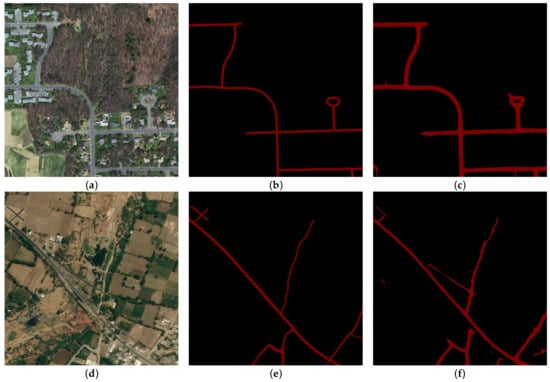

The DeepGlobe road dataset [39] is a set of high-resolution remote sensing images proposed by the DeepGlobe Road Extraction Challenge 2018; the images all have a size of 1024 × 1024 and a spatial resolution of 0.5 m, among which there are 6226 images with labeled data. The image sceneries are from three countries—Thailand, India, and Indonesia—including urban, rural, deserted suburban, seaside, and tropical rainforest scenes. To verify the effectiveness of the algorithm proposed in this study, 6226 remote sensing images with labeled data were randomly divided into a training set and a testing set. Among them, 4696 images were used for training, and 1530 images were used for testing. The training dataset was augmented by creating crops of 512 × 512 [11,14,40,41,42]; some of the images in the dataset are shown in Figure 1.

Figure 1.

Examples from the DeepGlobe dataset showing satellite images of the training set and testing set. ((a) shows satellite imagery in the training set, (b) shows the corresponding annotated images of (a), (c) shows satellite imagery in the testing set, and (d) shows the corresponding annotated images of (c)).

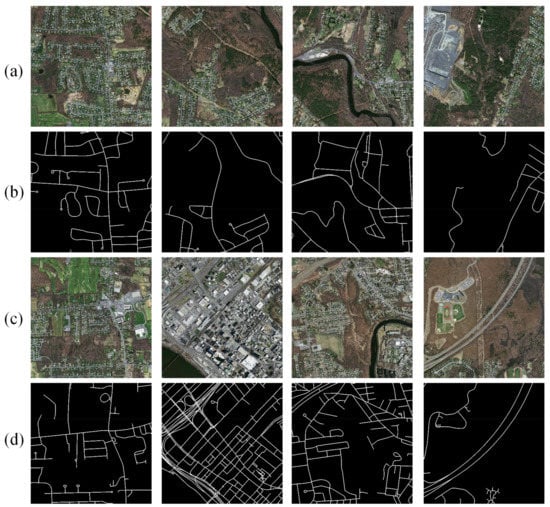

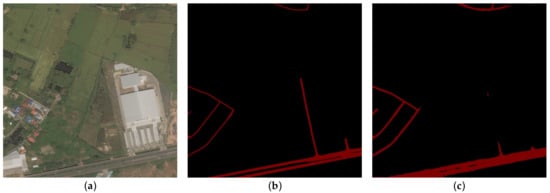

Further, the Massachusetts dataset is a high-quality aerial road imagery dataset that contains 1171 images (1108 for training, 14 for validation, and 49 for testing), which includes urban, suburban, rural, and other areas in Massachusetts. The images in the Massachusetts datasets all have a size of 1500 × 1500 and a spatial resolution of 1 m. In this study, the images were cropped to smaller sizes, of 512 × 512, with a stride of 512; some of the images in the dataset are shown in Figure 2.

Figure 2.

Examples from the Massachusetts dataset showing satellite images of the training set and testing set. ((a) shows satellite imagery in the training set, (b) shows the corresponding annotated images of (a), (c) shows satellite imagery in the testing set, and (d) shows the corresponding annotated images of (c)).
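The cropping described for the Massachusetts images can be sketched as follows. This is a minimal illustration, assuming that only tiles lying entirely inside the image are kept; how the remaining border strip (1500 is not a multiple of 512) was handled is not stated, and the function names `tile_origins` and `crop_boxes` are hypothetical.

```python
def tile_origins(size, tile=512, stride=512):
    """Top-left offsets of all tiles that fit entirely along one image axis."""
    if size < tile:
        return []
    return list(range(0, size - tile + 1, stride))


def crop_boxes(height, width, tile=512, stride=512):
    """Return (top, left, bottom, right) boxes for every full tile."""
    return [
        (top, left, top + tile, left + tile)
        for top in tile_origins(height, tile, stride)
        for left in tile_origins(width, tile, stride)
    ]


# A 1500 x 1500 Massachusetts image yields four full 512 x 512 tiles
# under this assumption; a border strip is left uncovered.
boxes = crop_boxes(1500, 1500)
print(len(boxes), boxes)
```

With a smaller stride the same function produces overlapping tiles, which is one common way to cover the border region.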

2.2. Seg-Road Model

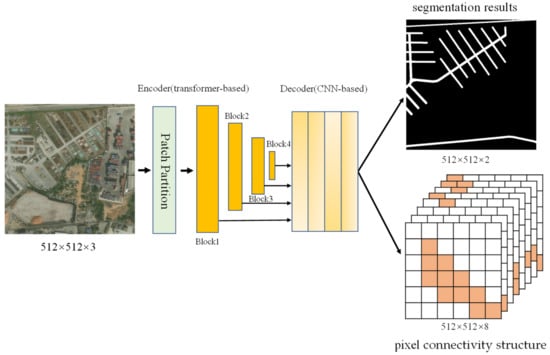

In this study, to solve the road segmentation problem in remote sensing images, an effective and efficient CNN- and transformer-based model named Seg-Road was proposed; its overall structure is schematically illustrated in Figure 3. It can be clearly observed that Seg-Road can be considered as a semantic segmentation model in the encoder–decoder form [43,44,45,46] and is mainly divided into two parts: (1) an encoder, consisting mainly of self-attention for extracting long-range dependency and global contextual information; (2) a decoder, consisting mainly of a CNN for enhancing local information perception, which contains the pixel connectivity structure (PCS) for predicting the road connectivity between neighboring pixels.

Figure 3.

Overall model structure of Seg-Road, including a backbone network, feature fusion, segmentation branch, and the PCS.

2.2.1. Encoder

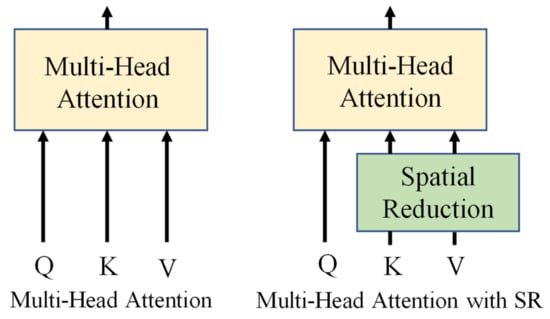

The hierarchical transformer encoder of Seg-Road differs from the Swin transformer and other transformer-based models that use patch partition to cut the input image and add position embedding information. Seg-Road instead uses a convolutional structure with a stride of 4 and a kernel size of 11 × 11, followed by LayerNorm (LN). The input is converted from $B \times C \times H \times W$ to $B \times C_1 \times \frac{H}{4} \times \frac{W}{4}$, where B refers to the batch size; C and $C_1$ are the numbers of channels, 3 and 32, respectively; and H and W are the height and width of the input image. In contrast to directly cutting the image, this convolutional patch partition extracts the features of the image in a single pass and can be understood as preserving, to a certain extent, the connection information between different regions of the image. Transformer-based models usually use vanilla transformers for image feature extraction, which differ from CNNs in that CNNs focus on local information. Vanilla transformers capture long-range dependencies and global contextual information through self-attention, which takes Q, K, and V of dimension N × C as the input, as shown in Equations (1) and (2), where N = H × W. To prevent the inner product of Q and $K^{\top}$ from being too large, the value of $d_{head}$ is set to C [47,48,49].
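For reference, the standard scaled dot-product attention that this description matches can be written as below. The symbol $d_{head}$ is an assumed name for the scaling dimension, which the text sets equal to C; the form is the generic one from the transformer literature and may differ in notation from the authors' equations.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_{head}}}\right) V,
\qquad Q, K, V \in \mathbb{R}^{N \times C}, \quad N = H \times W
```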

The role of Softmax is to normalize $QK^{\top}$, whose shape is N × N (HW × HW); this matrix captures the connection between every two pixels and introduces global attention into the overall model, which CNNs lack. Seg-Road uses an improved spatial reduction transformer (SRT) [23], which introduces a spatial reduction step to compress the dimensions of K and V and to reduce the number of calculations. Thus, the substantial computational cost incurred by directly using vanilla transformers is avoided, as shown in Equations (3) and (4). There, Reshape(x, r) converts the HW × C input into a shorter token sequence according to the reduction ratio r; Norm represents normalization and C represents the number of channels.

By introducing a scaling factor r, the time complexity of self-attention falls below the $O(N^2)$ of vanilla attention, and the saving grows as r increases. This lowers the computational cost of the overall Seg-Road model while still providing rich long-range dependency and global contextual information. The speed advantage of the improved self-attention is more pronounced when the input scale of the model is large, as shown in Figure 4.

Figure 4.

Vanilla transformer attention (left) and the spatial reduction attention (right) used in Seg-Road.
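The saving from spatial reduction can be illustrated by counting the entries of the attention score matrix $QK^{\top}$. The sketch below is an assumption-laden illustration: `attention_score_entries` and `kv_reduction` are hypothetical names, and `kv_reduction` stands for the factor by which the number of key/value tokens shrinks, whatever its exact relation to r in the model.

```python
def attention_score_entries(n_tokens, kv_reduction=1):
    """Entries in the Q.K^T score matrix.

    Queries keep all n_tokens; keys/values keep n_tokens // kv_reduction
    tokens after spatial reduction (kv_reduction=1 is vanilla attention).
    """
    kv_tokens = n_tokens // kv_reduction
    return n_tokens * kv_tokens


# Feature map at 1/4 resolution of a 512 x 512 input: 128 x 128 tokens.
n = 128 * 128
vanilla = attention_score_entries(n)
reduced = attention_score_entries(n, kv_reduction=64)  # assumed reduction
print(vanilla, reduced, vanilla // reduced)
```

Because the vanilla cost grows quadratically with the number of tokens, the absolute saving becomes larger as the input resolution increases, which is consistent with the observation about large inputs above.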

The road layout in remote sensing images has strong connectivity; therefore, this study uses a transformer, with its global perception, to enhance the connectivity of road segmentation. In the Seg-Road model, the encoder uses four blocks composed of SRT to extract the feature information of the input image, namely Block1, Block2, Block3, and Block4. Semantic segmentation must not only predict the category of each pixel but also determine the pixel position of the target. Therefore, to accurately segment roads in remote sensing images, Seg-Road fuses the outputs of the four encoder blocks, so as to improve the network's combined expression of high-level semantic information and low-level detailed information. To reduce the computational cost of the model, the output of each block was first upsampled to 1/4 of the resolution of the input image; then, the four outputs were concatenated along the channel dimension. Finally, a convolution with kernel size 1 and stride 1, followed by BatchNorm (BN), was applied to the concatenated result to obtain the final output of the model.

2.2.2. Decoder

A SegFormer-like decoder was used to avoid introducing a large amount of computation and a complex structure. To improve the segmentation of fine details, CNN layers were introduced in the decoder. First, a CNN layer was applied to the output of each encoder stage so that the channel dimensions were consistent; the outputs were then upsampled to 1/4 of the input resolution and concatenated along the channel dimension, which ensured that the output of the decoder contained both rich high-level semantic information and low-level feature information. Finally, a CNN layer was applied to the concatenated feature maps to obtain the final output.
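The upsample-and-concatenate step of the decoder can be sketched in plain Python. This is only an illustration under stated assumptions: feature maps are represented as lists of square 2D channels, nearest-neighbour upsampling stands in for whatever interpolation the model actually uses, the per-stage and final CNN layers are omitted, and the names `upsample_nearest` and `fuse` are hypothetical.

```python
def upsample_nearest(channel, factor):
    """Nearest-neighbour upsampling of one 2D channel (a list of rows)."""
    out = []
    for row in channel:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def fuse(stage_outputs, target_hw):
    """Upsample every stage to target_hw and concatenate along channels.

    stage_outputs: list of feature maps, each a list of square 2D channels
    whose side length divides target_hw.
    """
    fused = []
    for fmap in stage_outputs:
        factor = target_hw // len(fmap[0])
        for channel in fmap:
            fused.append(upsample_nearest(channel, factor) if factor > 1 else channel)
    return fused


# Toy stages: 1 channel at 4x4, 2 channels at 2x2, 1 channel at 1x1.
stage1 = [[[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]]
stage2 = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
stage3 = [[[9]]]
fused = fuse([stage1, stage2, stage3], target_hw=4)
print(len(fused), len(fused[0]), len(fused[0][0]))
```

After fusion every channel shares the highest (1/4-scale) resolution, so a single convolution can mix coarse semantic channels with fine detail channels.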

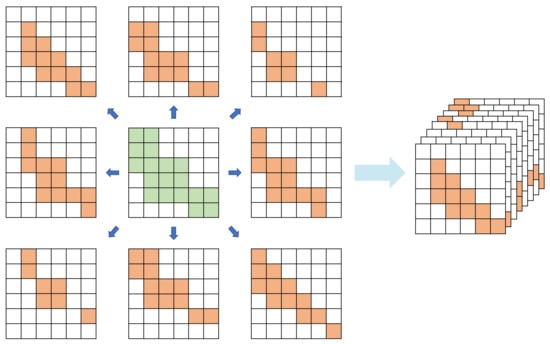

To improve the accuracy of road segmentation, this article proposes the use of a pixel connectivity structure (PCS) to mitigate the fragmentation issue of road segmentation. The general process is shown in Figure 5. The output of the PCS has the same resolution as the input image, with 8 channels. The PCS labels are generated as follows:

Figure 5.

Process for generating PCS labels.

- (1) Initialize the pixel spacing distance r and the connectivity label, whose element values are all 0 and whose shape is 8 × H × W, where 8 is the number of orientations around the current pixel.

- (2) Starting from the first pixel in the upper left corner and proceeding left-to-right and top-to-bottom, examine the current pixel and the pixel at distance r to its upper left; if both of them are road pixels, the label of the current pixel is recorded as 1.

- (3) Repeat step (2) for all 8 directions (top left, top, top right, left, right, bottom left, bottom, and bottom right) to obtain 8 × H × W feature labels, which represent the connectivity relationships in the 8 directions. This helps mitigate the fragmentation issue of road segmentation in remote sensing images.

It should be noted that the generated PCS labels correspond to pixel point labels containing only 0 and 1, with 1 indicating the presence of connectivity and 0 indicating the absence of connectivity.
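The label generation steps above can be sketched as follows. This is a minimal sketch, assuming a binary road mask stored as a nested list and assuming that neighbours falling outside the image count as non-road; the names `DIRECTIONS` and `pcs_labels` are hypothetical.

```python
# Offsets (dy, dx) for the 8 orientations, in the order listed in step (3).
DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1),
              (0, -1),           (0, 1),
              (1, -1),  (1, 0),  (1, 1)]


def pcs_labels(mask, r=2):
    """Build an 8 x H x W connectivity label from a binary road mask.

    labels[d][y][x] is 1 when pixel (y, x) and its neighbour at distance r
    in direction d are both road pixels, otherwise 0.
    """
    h, w = len(mask), len(mask[0])
    labels = [[[0] * w for _ in range(h)] for _ in DIRECTIONS]
    for d, (dy, dx) in enumerate(DIRECTIONS):
        for y in range(h):
            for x in range(w):
                ny, nx = y + dy * r, x + dx * r
                # Out-of-image neighbours are treated as non-road (assumption).
                if 0 <= ny < h and 0 <= nx < w and mask[y][x] and mask[ny][nx]:
                    labels[d][y][x] = 1
    return labels


# A 5 x 5 mask with one horizontal road along the middle row.
mask = [[0] * 5, [0] * 5, [1] * 5, [0] * 5, [0] * 5]
labels = pcs_labels(mask, r=2)
print(labels[4][2])  # "right" channel, road row
print(labels[3][2])  # "left" channel, road row
```

For a purely horizontal road only the left and right channels fire, which is the directional information the PCS branch is trained to predict.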

2.2.3. Loss

In this paper, the PCS structure provides connectivity information for the model; in practice, Seg-Road uses a pixel separation distance of r = 2 to assist in road segmentation in remote sensing images. During the training phase of the PCS, the corresponding label elements contain only 0 and 1, where 1 represents the existence of connectivity. Therefore, binary cross entropy (BCE) loss is used as the loss function, as shown in the following equation, where $y_i$ denotes the connectivity label, $\hat{y}_i$ denotes the prediction result, and C denotes the number of pixel points (H × W).
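In its standard form, the binary cross entropy over C pixel points can be written as below. The symbols $y_i$ and $\hat{y}_i$ are assumed names for the label and the prediction at pixel i, and averaging over the pixels is the conventional choice rather than a detail confirmed by the text.

```latex
L_{BCE} = -\frac{1}{C} \sum_{i=1}^{C} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]
```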

The segmentation branch of Seg-Road was trained without class-balanced loss, focal loss, or similar terms; it also used the binary cross entropy loss function for the final pixel-by-pixel classification. The BCE loss of the PCS was weighted by α to preserve the optimization priority of the segmentation branch; the overall loss function is shown below, where α takes the value of 0.2 during actual training.
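A minimal sketch of the combined objective follows, assuming that α scales the PCS term and that both branches use a mean-reduced BCE over flattened predictions. The function names `bce` and `seg_road_loss` are hypothetical, and the clamping constant `eps` is added only for numerical safety.

```python
import math


def bce(labels, preds, eps=1e-7):
    """Mean binary cross entropy over flattened labels and probabilities."""
    total = 0.0
    for y, p in zip(labels, preds):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def seg_road_loss(seg_labels, seg_preds, pcs_targets, pcs_preds, alpha=0.2):
    """Segmentation BCE plus alpha-weighted PCS BCE (assumed weighting)."""
    return bce(seg_labels, seg_preds) + alpha * bce(pcs_targets, pcs_preds)


# Toy example: every prediction is 0.5, so each BCE term equals ln 2.
loss = seg_road_loss([1, 0], [0.5, 0.5], [1, 0, 0, 1], [0.5] * 4)
print(loss)
```

With α below 1, gradients from the segmentation branch dominate, which matches the stated aim of keeping that branch the optimization priority.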

2.2.4. Seg-Road Inference

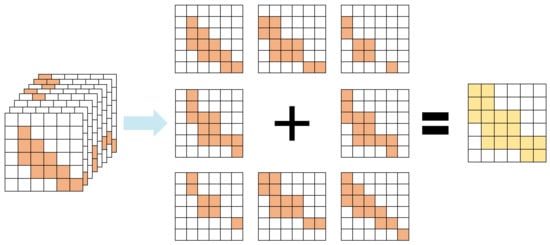

Seg-Road primarily comprises two branches: the regular semantic segmentation branch and the PCS, which implement segmentation and connectivity detection for roads, respectively. The PCS is mainly involved in multi-task training and provides connectivity information to the model. However, to make full use of the PCS prediction results, the final prediction should be generated by reverse derivation from the PCS output, which is the inverse of the process that generates the PCS labels, as shown in Figure 6.

Figure 6.

Post-processing of the PCS prediction results to improve the connectivity of the final segmentation results.

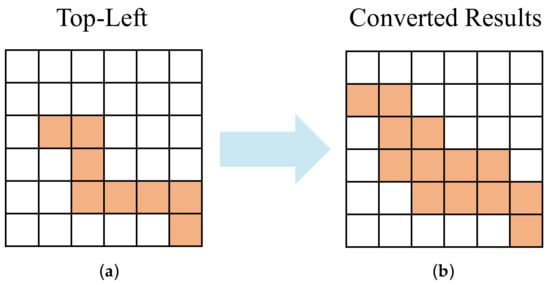

Using the PCS module, Seg-Road predicts 8 output channels (orientations) with the same resolution as the input image. Taking the output of the top-left orientation as an example, as shown in Figure 7a, the road segmentation result obtained by its reverse mapping is shown in Figure 7b. The outputs of the eight orientations are each reverse mapped, and their union is used as the connectivity output of the model.

Figure 7.

Reverse derivation of the PCS output to obtain segmentation results. ((a) is the output of the PCS representing the top-left connectivity and (b) is the result of the conversion of (a)).
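One way to read the reverse derivation is sketched below. It is an interpretation rather than the authors' exact procedure: it assumes the 8-channel PCS output has already been thresholded to 0/1, and that a value of 1 at pixel (y, x) in direction d marks both that pixel and its neighbour at distance r as road. The names `DIRECTIONS` and `pcs_to_mask` are hypothetical.

```python
# Offsets (dy, dx): top left, top, top right, left, right,
# bottom left, bottom, bottom right.
DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1),
              (0, -1),           (0, 1),
              (1, -1),  (1, 0),  (1, 1)]


def pcs_to_mask(pcs, r=2):
    """Union of the road pixels implied by the 8 binary connectivity channels."""
    h, w = len(pcs[0]), len(pcs[0][0])
    mask = [[0] * w for _ in range(h)]
    for (dy, dx), channel in zip(DIRECTIONS, pcs):
        for y in range(h):
            for x in range(w):
                if channel[y][x]:
                    mask[y][x] = 1  # the current pixel is road
                    ny, nx = y + dy * r, x + dx * r
                    if 0 <= ny < h and 0 <= nx < w:
                        mask[ny][nx] = 1  # so is its connected neighbour
    return mask


# Toy input: only the "right" channel fires, at row 1, column 0.
pcs = [[[0] * 5 for _ in range(3)] for _ in range(8)]
pcs[4][1][0] = 1
road = pcs_to_mask(pcs, r=2)
print(road[1])
```

Taking the union over all eight channels means a road pixel missed in one orientation can still be recovered from another, which is how the post-processing can reduce breaks.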

3. Experiments and Results Analysis

3.1. Experimental Parameters

This study utilized the Ubuntu 20.04 operating system, Python, the PyTorch 1.9 (Facebook AI Research, New York, NY, USA) deep-learning framework, and CUDA version 11.0. The CPU was a 12th Gen Intel(R) Core(TM) i9-12900KF, and the GPU was an NVIDIA GeForce RTX 4090 [50]. All models were trained for 100 epochs, divided into two stages. In the first stage (0–50), the batch size was 16, the initial learning rate was 0.001, and the learning rate was updated every training epoch using a decay rate of 0.98; in the second stage (50–100), the batch size was 8, the initial learning rate was 0.0001, and the learning rate was updated every training epoch using the same decay rate of 0.98. Detailed hyperparameters are shown in Table 1. The models were first pre-trained on the PASCAL VOC dataset [51], and the model parameters were initialized by transfer learning from the pre-trained model for training on the DeepGlobe and Massachusetts datasets.

Table 1.

Main hyperparameters used for Seg-Road training and testing.
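The two-stage schedule described above can be sketched as a function of the epoch index. This is a minimal illustration, assuming 0-based epochs, that epoch 50 is the first epoch of the second stage, and that the decay restarts from the stage's initial learning rate; `learning_rate` and `batch_size` are hypothetical helper names.

```python
def learning_rate(epoch, decay=0.98):
    """Learning rate at a 0-based epoch index under the two-stage schedule."""
    if epoch < 50:
        base, start = 1e-3, 0    # stage 1: initial learning rate 0.001
    else:
        base, start = 1e-4, 50   # stage 2: initial learning rate 0.0001
    return base * decay ** (epoch - start)


def batch_size(epoch):
    """Batch size for the stage that contains the given epoch."""
    return 16 if epoch < 50 else 8


for epoch in (0, 1, 49, 50, 99):
    print(epoch, batch_size(epoch), learning_rate(epoch))
```

In PyTorch, the per-epoch multiplication by 0.98 corresponds to an exponential learning rate scheduler with `gamma=0.98`, re-created at the start of the second stage.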

3.2. Experimental Evaluation Indexes

To comprehensively evaluate the effectiveness of road segmentation, this study selected the IoU, MIoU, Precision, Recall, and F1 as the main evaluation indexes.

In this paper, Precision refers to the ratio of the number of pixels correctly predicted as roads to the number of pixels predicted as roads, as shown in Equation (7). Recall refers to the ratio of the number of pixels that are correctly predicted as roads to the number of pixels that are real road labels, as shown in Equation (8). F1 is a comprehensive evaluation metric of Precision and Recall, as shown in Equation (9). TP refers to correct predictions as positive cases, FP refers to incorrect predictions as positive cases, and FN refers to incorrect predictions as negative cases.

MIoU is a classical measurement index of semantic segmentation, and its calculation method is as shown in Equation (10), where K represents the number of categories (in this study, K was 1 and K + 1 was the number of categories of the foreground and background) and $p_{ij}$ can be understood as the number of pixels in category $i$ predicted as category $j$. Further, IoU is the evaluation index for road segmentation only.
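The evaluation indexes can be computed from pixel-level confusion counts as sketched below. This uses the standard definitions for a two-class (road and background) problem; TN, the count of background pixels correctly predicted as background, is needed for the background IoU, and the function name `road_metrics` is hypothetical.

```python
def road_metrics(tp, fp, fn, tn):
    """Precision, recall, F1, road IoU and mean IoU from pixel counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou_road = tp / (tp + fp + fn)
    # For the background class the roles of FP and FN swap,
    # but their sum in the denominator is unchanged.
    iou_background = tn / (tn + fp + fn)
    miou = (iou_road + iou_background) / 2
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": iou_road,
        "miou": miou,
    }


# Toy counts for a 1000-pixel image (illustrative values only).
metrics = road_metrics(tp=80, fp=20, fn=20, tn=880)
print(metrics)
```

Because roads occupy a small share of the pixels, the background IoU is typically high, which is why MIoU sits well above the road-only IoU.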

3.3. Evaluation of Experimental Effects

The Seg-Road model includes three network sizes: s, m, and l, with increasing parameter counts and computational cost, as well as progressively increasing segmentation performance (Table 2). A network of appropriate size can be selected for road segmentation, according to the segmentation accuracy requirements and the capabilities of the computing equipment.

Table 2.

The parameters and frames per second (FPS) of the Seg-Road models.

To verify the effectiveness of the proposed Seg-Road model for the segmentation of roads in remote sensing images, the DeepGlobe remote sensing image dataset was used, and DeepRoadMapper, LinkNet34, D-LinkNet, PSPNet, RoadCNN, and CoANet (two versions: CoANet and CoANet-UB) were also used for comparison. The detailed evaluation index results are shown in Table 3.

Table 3.

Relevant evaluation indexes of Seg-Road and some SOTA models on DeepGlobe dataset.

It was found that Seg-Road achieved good performance on the Massachusetts dataset, and Seg-Road-l achieved the best road segmentation result, with an IoU of 68.38% and MIoU of 83.89%, as shown in Table 4.

Table 4.

Relevant evaluation indexes of Seg-Road and SOTA models on Massachusetts dataset.

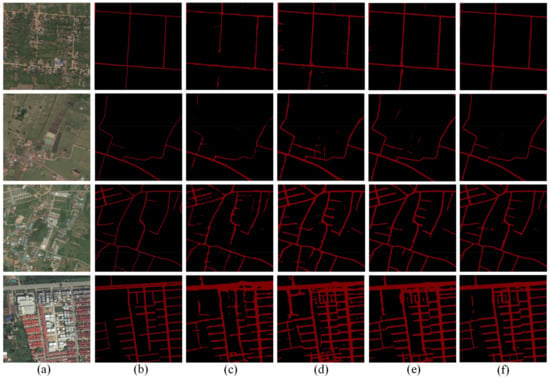

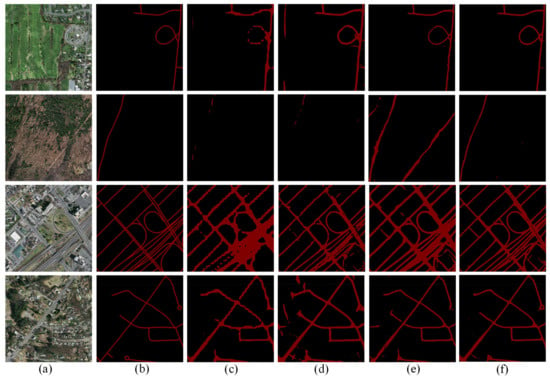

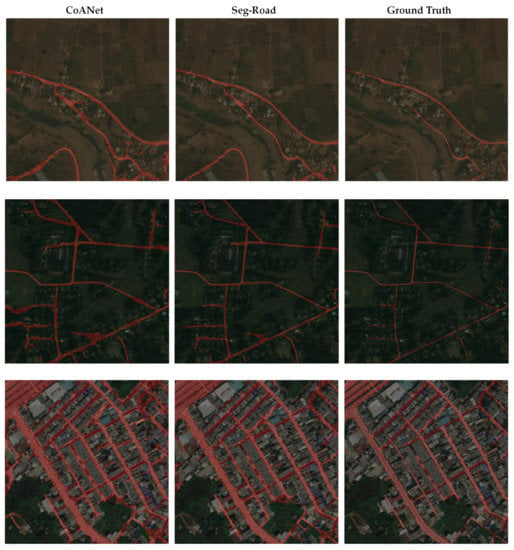

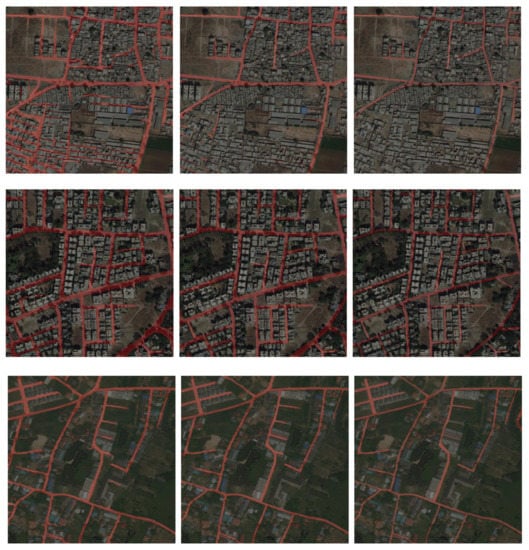

From Table 3 and Table 4, it can be clearly seen that Seg-Road-s, Seg-Road-m, and Seg-Road-l have significant improvements compared to the DeepRoadMapper, LinkNet34, D-LinkNet, PSPNet, RoadCNN, and CoANet models. In CoANet-UB, four different strip convolution structures are used, similar connectivity structures are adopted, and excellent road segmentation results are also obtained. However, both Seg-Road-m and Seg-Road-l, proposed in this study, achieve better segmentation results than CoANet-UB with fewer parameters. It can be clearly seen that, in comparison to CoANet-UB and Seg-Road, the results of PSPNet and LinkNet34, which have no connectivity branches, exhibit significant fragmentation and poor segmentation at the road edges. On the whole, the segmentation results of CoANet-UB are better connected, but there are more FPs compared to Seg-Road; therefore, the overall evaluation index is lower than that of Seg-Road. Seg-Road produces better-connected road segmentation in remote sensing images, with fewer FPs and the highest accuracy. Figure 8 shows the segmentation results of PSPNet, LinkNet34, CoANet-UB, and Seg-Road-l on the DeepGlobe dataset, and Figure 9 shows the corresponding results for the Massachusetts dataset.

Figure 8.

Results of remote sensing image segmentation in DeepGlobe dataset. ((a) shows the input remote sensing image, (b) shows the ground truth, (c) shows the calculation results of PSPNet, (d) shows the calculation results of LinkNet34, (e) shows the calculation results of CoANet-UB, and (f) shows the calculation results of Seg-Road-l).

Figure 9.

Results of remote sensing image segmentation in Massachusetts dataset. ((a) shows the input remote sensing image, (b) shows the ground truth, (c) shows the calculation results of PSPNet, (d) shows the calculation results of LinkNet34, (e) shows the calculation results of CoANet-UB, and (f) shows the calculation results of Seg-Road-l).

4. Discussion

The Seg-Road model proposed in this paper has three different versions: Seg-Road-s, Seg-Road-m, and Seg-Road-l. The overall structure of the models is approximately the same, differing only in the number of channels in the intermediate computation process and the number of spatial reduction transformers used in each block. Compared with Seg-Road-m and Seg-Road-l, Seg-Road-s has a faster computation speed and can meet application scenarios with higher real-time requirements. Seg-Road-l, in turn, is slower but achieves better segmentation results and is more suitable for segmentation scenarios with lower real-time requirements. To show the differences in the segmentation results of the three versions more clearly, a remote sensing image was randomly selected from the testing set for segmentation, and the result is shown in Figure 10.

Figure 10.

Segmentation results of Seg-Road ((a) shows the ground truth, (b) shows the result of Seg-Road-s, (c) shows the result of Seg-Road-m, and (d) shows the result of Seg-Road-l).

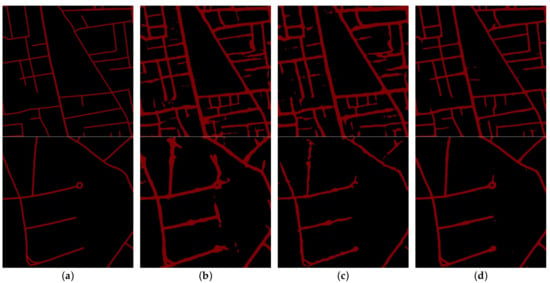

The use of PCS in Seg-Road enhances the network’s perception of connectivity between road pixels in remotely sensed images, in contrast to segmentation models such as LinkNet34 that make no connectivity predictions; it was therefore hypothesized that the PCS structure makes the segmentation of the model more accurate overall. To verify this hypothesis, Seg-Road-s was used as an example: the PCS was removed, and the model was retrained and tested using only the segmentation branch with the same hyperparameters, which gave an IoU of 60.03% for DeepGlobe and 62.86% for Massachusetts. This supports the hypothesis that the PCS structure proposed in this paper improves the segmentation of roads in remote sensing images, and it may also do so for targets of a similar style. Figure 11 shows the segmentation results of Seg-Road with and without PCS. It can be seen that Seg-Road without PCS produces more fragmented road segmentation in remote sensing images, while Seg-Road with PCS has stronger global connectivity and segments road details better.

Figure 11.

Images of the effect of PCS on the overall segmentation. ((a) shows the remote sensing image, (b) shows the ground truth, (c) shows the result of Seg-Road without PCS, and (d) shows the result of Seg-Road with PCS).

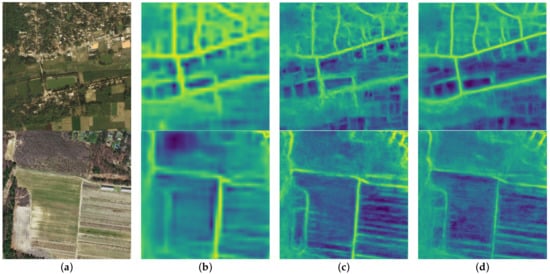

Convolutional neural networks are usually understood to have better local information extraction abilities, while transformer-based networks are usually understood to have better global information extraction abilities. The Seg-Road model proposed in this paper uses spatial reduction transformers in the encoder for the feature extraction of input images and CNNs in the decoder for the fusion of multi-scale features and the prediction of the final results. In most semantic segmentation models, using a CNN or a transformer alone biases the model toward either local or global information, which affects the overall segmentation of the image. To understand the advantages of combining transformer and CNN models, this study visualized the intermediate feature maps, as shown in Figure 12. It can be clearly seen that the global connectivity information and local detailed information of the intermediate feature maps are better utilized, and the features extracted by Seg-Road are more accurate and clearer than those from PSPNet and CoANet-UB. Therefore, the Seg-Road proposed in this paper achieves better segmentation results on the DeepGlobe and Massachusetts datasets, and it is believed that it can also achieve better prediction results for similar tasks [52,53].

Figure 12.

Feature maps obtained from network intermediate calculations. ((a) shows the input remote sensing image, (b) shows the feature map obtained from PSPNet intermediate calculations, (c) shows the feature map obtained from CoANet intermediate calculations, and (d) shows the feature map obtained from Seg-Road intermediate calculations).

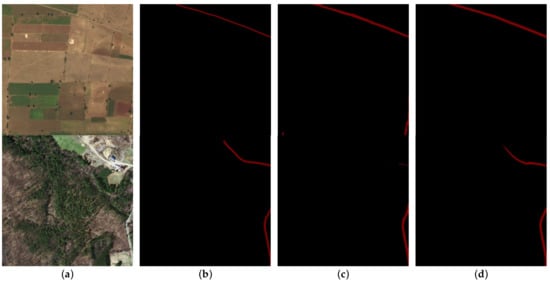

Further, it was found that Seg-Road trained using only the DeepGlobe dataset could achieve a good performance on remote sensing images of the Massachusetts dataset and vice versa, as shown in Figure 13.

Figure 13.

Segmentation results of Seg-Road on the DeepGlobe and Massachusetts datasets. ((a) shows the remote sensing image of the Massachusetts dataset, (b) shows the ground truth of (a), (c) shows the segmentation result of Seg-Road trained by the DeepGlobe dataset, (d) shows the remote sensing image of DeepGlobe dataset, (e) shows the ground truth of (d), and (f) shows the segmentation result of Seg-Road trained by the Massachusetts dataset).

Although Seg-Road has achieved good results in terms of road segmentation, there are still some issues, as follows: the two-way roads are merged in the final result, as shown in Figure 14, which affects the overall precision of the model. Further, the proposed encoder of Seg-Road uses a spatial reduction transformer, which is less computationally and parametrically complex than the vanilla transformer, but still suffers from the issue of insufficient computational speed compared to some CNN-based semantic segmentation models at the same scale. Therefore, in subsequent research, improving the segmentation speed of transformer-based semantic segmentation models will be focused on, in addition to improving the segmentation effect of adjacent roads, such that they have a more accurate segmentation effect, more convenient deployment capability, and higher practical application value. More comparison results can be found in Appendix A.

Figure 14.

Segmentation results of Seg-Road for adjacent roads ((a) shows the remote sensing image, (b) shows the ground truth, and (c) shows Seg-Road segmentation results).

5. Conclusions

This paper proposes a Seg-Road semantic segmentation model based on the transformer and CNN for road extraction in remote sensing images, which uses the transformer structure to enhance the global information extraction ability of the network and the CNN structure to improve the segmentation detail performance of roads. Further, Seg-Road uses PCS to enhance the perception of road pixel connectivity information and—to a certain extent—optimize the discontinuity and fragmentation issues of current semantic segmentation models for road extraction in remotely sensed images. Verification was conducted using the DeepGlobe and Massachusetts datasets, in addition to comparison experiments with D-LinkNet, PSPNet, CoANet, etc. The final IoU, MIoU, F1, precision, and recall of the Seg-Road model on the DeepGlobe dataset were 67.20%, 82.06%, 91.43%, 90.05%, and 92.85%, respectively. The final IoU, MIoU, F1, precision, and recall of the Seg-Road model on the Massachusetts dataset were 68.38%, 83.89%, 90.01%, 87.34%, and 92.86%, respectively. The experimental results show that the Seg-Road model achieves high accuracy and state-of-the-art results on both the DeepGlobe and Massachusetts datasets. In addition, three versions of Seg-Road, with different parametric quantities—namely Seg-Road-s, Seg-Road-m, and Seg-Road-l—were proposed in this paper, all of which achieve good extraction performance and allow the model to be used for real-time segmentation according to the requirements of different application scenarios. Finally, as future research, other improvements will be made, and their effects on the model will be tested. Further, higher-quality datasets will be constructed, and the network architecture will be explored.

Author Contributions

Methodology, writing—original draft preparation and editing, software, J.T.; writing—review and editing, Z.C.; validation, formal analysis, Z.S. and H.G.; investigation, data curation, B.L.; supervision, project administration, Z.Y.; resources, visualization, Y.W.; resources, visualization, Z.H.; funding acquisition, X.L. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Research and Development Program of Guangxi (Guike-AB22035060), the Innovative Research Program of the International Research Center of Big Data for Sustainable Development Goals (Grant No. CBAS2022IRP04), the National Natural Science Foundation of China (Grant No. 42171291), and the Chengdu University of Technology Post-graduate Innovative Cultivation Program: Tunnel Geothermal Disaster Susceptibility Evaluation in Sichuan-Tibet Railway Based on Deep Learning (CDUT2022BJCX015).

Data Availability Statement

Not applicable.

Acknowledgments

The authors are grateful for helpful comments from many researchers and colleagues.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A

References

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Dong, J.; Wang, N.; Fang, H.; Hu, Q.; Zhang, C.; Ma, B.; Ma, D.; Hu, H. Innovative Method for Pavement Multiple Damages Segmentation and Measurement by the Road-Seg-CapsNet of Feature Fusion. Constr. Build. Mater. 2022, 324, 126719.

- Wei, Y.; Wang, Z.; Xu, M. Road Structure Refined CNN for Road Extraction in Aerial Image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 709–713.

- Mattyus, G.; Wang, S.; Fidler, S.; Urtasun, R. Enhancing Road Maps by Parsing Aerial Images around the World. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Wang, J.; Song, J.; Chen, M.; Yang, Z. Road Network Extraction: A Neural-Dynamic Framework Based on Deep Learning and a Finite State Machine. Int. J. Remote Sens. 2015, 36, 3144–3169.

- Guo, M.; Liu, H.; Xu, Y.; Huang, Y. Building Extraction Based on U-Net with an Attention Block and Multiple Losses. Remote Sens. 2020, 12, 1400.

- Yu, Z.; Chang, R.; Chen, Z. Automatic Detection Method for Loess Landslides Based on GEE and an Improved YOLOX Algorithm. Remote Sens. 2022, 14, 4599.

- Yu, Z.; Chen, Z.; Sun, Z.; Guo, H.; Leng, B.; He, Z.; Yang, J.; Xing, S. SegDetector: A Deep Learning Model for Detecting Small and Overlapping Damaged Buildings in Satellite Images. Remote Sens. 2022, 14, 6136.

- Mosinska, A.; Marquez-Neila, P.; Kozinski, M.; Fua, P. Beyond the Pixel-Wise Loss for Topology-Aware Delineation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; Dewitt, D. RoadTracer: Automatic Extraction of Road Networks from Aerial Images. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Zhou, L.; Zhang, C.; Wu, M. D-Linknet: Linknet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018.

- Tan, Y.Q.; Gao, S.H.; Li, X.Y.; Cheng, M.M.; Ren, B. Vecroad: Point-Based Iterative Graph Exploration for Road Graphs Extraction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Vasu, S.; Kozinski, M.; Citraro, L.; Fua, P. TopoAL: An Adversarial Learning Approach for Topology-Aware Road Segmentation. In Proceedings of the Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Glasgow, UK, 23–28 August 2020.

- Mei, J.; Li, R.J.; Gao, W.; Cheng, M.M. CoANet: Connectivity Attention Network for Road Extraction from Satellite Imagery. IEEE Trans. Image Process. 2021, 30, 8540–8552.

- Cao, X.; Zhang, K.; Jiao, L. CSANet: Cross-Scale Axial Attention Network for Road Segmentation. Remote Sens. 2022, 15, 3.

- Liu, Z.; Yeoh, J.K.W.; Gu, X.; Dong, Q.; Chen, Y.; Wu, W.; Wang, L.; Wang, D. Automatic Pixel-Level Detection of Vertical Cracks in Asphalt Pavement Based on GPR Investigation and Improved Mask R-CNN. Autom. Constr. 2023, 146, 104689.

- Yuan, W.; Xu, W. GapLoss: A Loss Function for Semantic Segmentation of Roads in Remote Sensing Images. Remote Sens. 2022, 14, 2422.

- Sun, J.; Li, Y. Multi-Feature Fusion Network for Road Scene Semantic Segmentation. Comput. Electr. Eng. 2021, 92, 107155.

- Lian, R.; Huang, L. DeepWindow: Sliding Window Based on Deep Learning for Road Extraction from Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1905–1916.

- Wang, D.; Liu, Z.; Gu, X.; Wu, W.; Chen, Y.; Wang, L. Automatic Detection of Pothole Distress in Asphalt Pavement Using Improved Convolutional Neural Networks. Remote Sens. 2022, 14, 3892.

- Tardy, H.; Soilán, M.; Martín-Jiménez, J.A.; González-Aguilera, D. Automatic Road Inventory Using a Low-Cost Mobile Mapping System and Based on a Semantic Segmentation Deep Learning Model. Remote Sens. 2023, 15, 1351.

- Chen, Z.; Wang, C.; Li, J.; Xie, N.; Han, Y.; Du, J. Reconstruction Bias U-Net for Road Extraction from Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2284–2294.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

- Chen, Z.; Chang, R.; Pei, X.; Yu, Z.; Guo, H.; He, Z.; Zhao, W.; Zhang, Q.; Chen, Y. Tunnel Geothermal Disaster Susceptibility Evaluation Based on Interpretable Ensemble Learning: A Case Study in Ya’an–Changdu Section of the Sichuan–Tibet Traffic Corridor. Eng. Geol. 2023, 313, 106985.

- Singh, S.; Batra, A.; Pang, G.; Torresani, L.; Basu, S.; Paluri, M.; Jawahar, C.V. Self-Supervised Feature Learning for Semantic Segmentation of Overhead Imagery. In Proceedings of the British Machine Vision Conference 2018, BMVC 2018, Newcastle, UK, 3–6 September 2018.

- Chen, D.; Zhong, Y.; Zheng, Z.; Ma, A.; Lu, X. Urban Road Mapping Based on an End-to-End Road Vectorization Mapping Network Framework. ISPRS J. Photogramm. Remote Sens. 2021, 178, 345–365.

- Batra, A.; Singh, S.; Pang, G.; Basu, S.; Jawahar, C.V.; Paluri, M. Improved Road Connectivity by Joint Learning of Orientation and Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Xie, Y.; Zhang, J.; Shen, C.; Xia, Y. CoTr: Efficiently Bridging CNN and Transformer for 3D Medical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2021), Strasbourg, France, 27 September–1 October 2021. Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics).

- Fang, J.; Lin, H.; Chen, X.; Zeng, K. A Hybrid Network of CNN and Transformer for Lightweight Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1103–1112.

- Pinto, F.; Torr, P.H.; Dokania, P.K. An Impartial Take to the CNN vs Transformer Robustness Contest. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 466–480.

- Chen, J.; Chen, X.; Chen, S.; Liu, Y.; Rao, Y.; Yang, Y.; Wang, H.; Wu, D. Shape-Former: Bridging CNN and Transformer via ShapeConv for Multimodal Image Matching. Inf. Fusion 2023, 91, 445–457.

- Kitaev, N.; Kaiser, A.; Levskaya, A. Reformer: The Efficient Transformer. arXiv 2020, arXiv:2001.04451.

- Rao, R.M.; Liu, J.; Verkuil, R.; Meier, J.; Canny, J.; Abbeel, P.; Sercu, T.; Rives, A. MSA Transformer. In Proceedings of the International Conference on Machine Learning: PMLR, Online, 18–24 July 2021; pp. 8844–8856.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020.

- Weng, Y.; Zhou, T.; Li, Y.; Qiu, X. NAS-Unet: Neural Architecture Search for Medical Image Segmentation. IEEE Access 2019, 7, 44247–44257.

- Liu, C.; Chen, L.C.; Schroff, F.; Adam, H.; Hua, W.; Yuille, A.L.; Fei-Fei, L. Auto-Deeplab: Hierarchical Neural Architecture Search for Semantic Image Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Wang, H.; Zhu, Y.; Adam, H.; Yuille, A.; Chen, L.C. Max-DeepLab: End-to-End Panoptic Segmentation with Mask Transformers. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raska, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018.

- Yan, H.; Zhang, C.; Yang, J.; Wu, M.; Chen, J. Did-Linknet: Polishing D-Block with Dense Connection and Iterative Fusion for Road Extraction. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2186–2189.

- Wang, Y.; Seo, J.; Jeon, T. NL-LinkNet: Toward Lighter but More Accurate Road Extraction with Nonlocal Operations. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Mattyus, G.; Luo, W.; Urtasun, R. DeepRoadMapper: Extracting Road Topology from Aerial Images. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Yan, L.; Liu, D.; Xiang, Q.; Luo, Y.; Wang, T.; Wu, D.; Chen, H.; Zhang, Y.; Li, Q. PSP Net-Based Automatic Segmentation Network Model for Prostate Magnetic Resonance Imaging. Comput. Methods Programs Biomed. 2021, 207, 106211.

- Chen, Z.; Chang, R.; Zhao, W.; Li, S.; Guo, H.; Xiao, K.; Wu, L.; Hou, D.; Zou, L. Quantitative Prediction and Evaluation of Geothermal Resource Areas in the Southwest Section of the Mid-Spine Belt of Beautiful China. Int. J. Digit. Earth 2022, 15, 748–769.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

- Fang, H.; Lafarge, F. Pyramid Scene Parsing Network in 3D: Improving Semantic Segmentation of Point Clouds with Multi-Scale Contextual Information. ISPRS J. Photogramm. Remote Sens. 2019, 154, 246–258.

- Shaw, P.; Uszkoreit, J.; Vaswani, A. Self-Attention with Relative Position Representations. In Proceedings of the NAACL HLT 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, June 2018.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 10–15 June 2019.

- Gibbons, F.X. Self-Attention and Behavior: A Review and Theoretical Update. Adv. Exp. Soc. Psychol. 1990, 23, 249–303.

- Chen, Z.; Chang, R.; Guo, H.; Pei, X.; Zhao, W.; Yu, Z.; Zou, L. Prediction of Potential Geothermal Disaster Areas along the Yunnan–Tibet Railway Project. Remote Sens. 2022, 14, 3036.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as Deep: Spatial CNN for Traffic Scene Understanding. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, AAAI 2018, New Orleans, LA, USA, 2–7 February 2018.

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The ApolloScape Open Dataset for Autonomous Driving and Its Application. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2702–2719.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).