Double Branch Parallel Network for Segmentation of Buildings and Waters in Remote Sensing Images

Abstract

1. Introduction

2. Methods

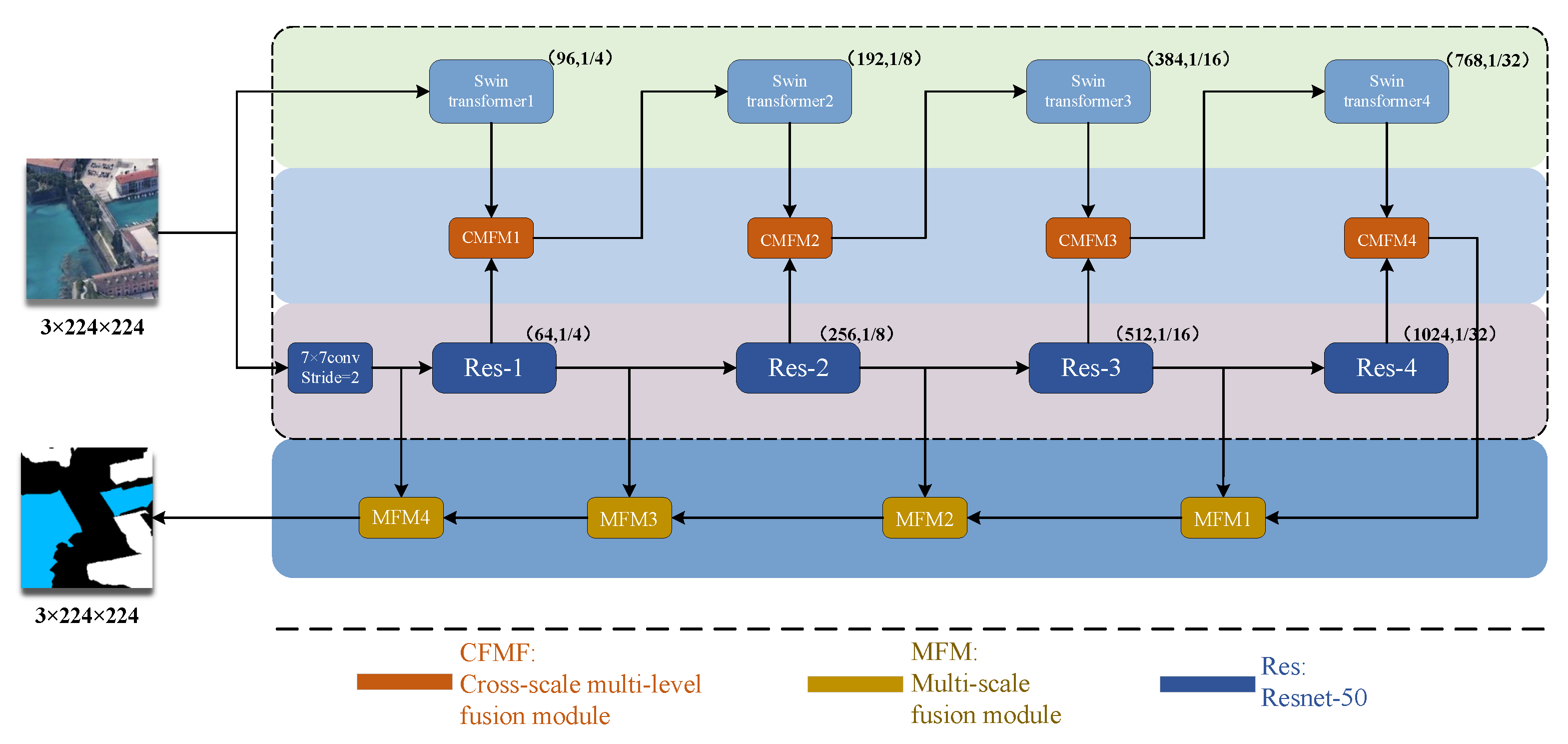

2.1. Overall Structure

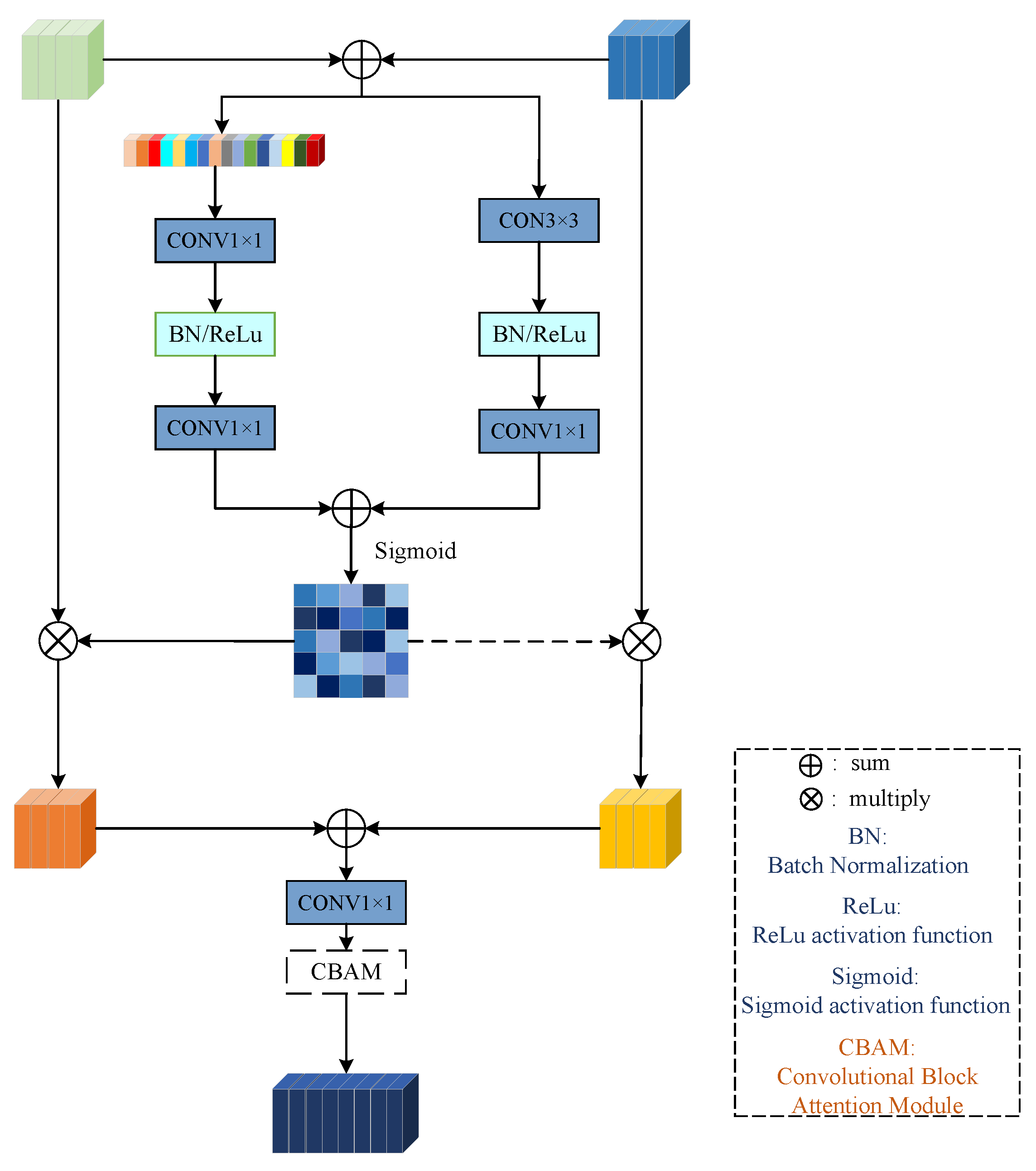

2.2. Cross-Scale Multi-Level Fusion Module

2.3. Multi-Scale Fusion Module

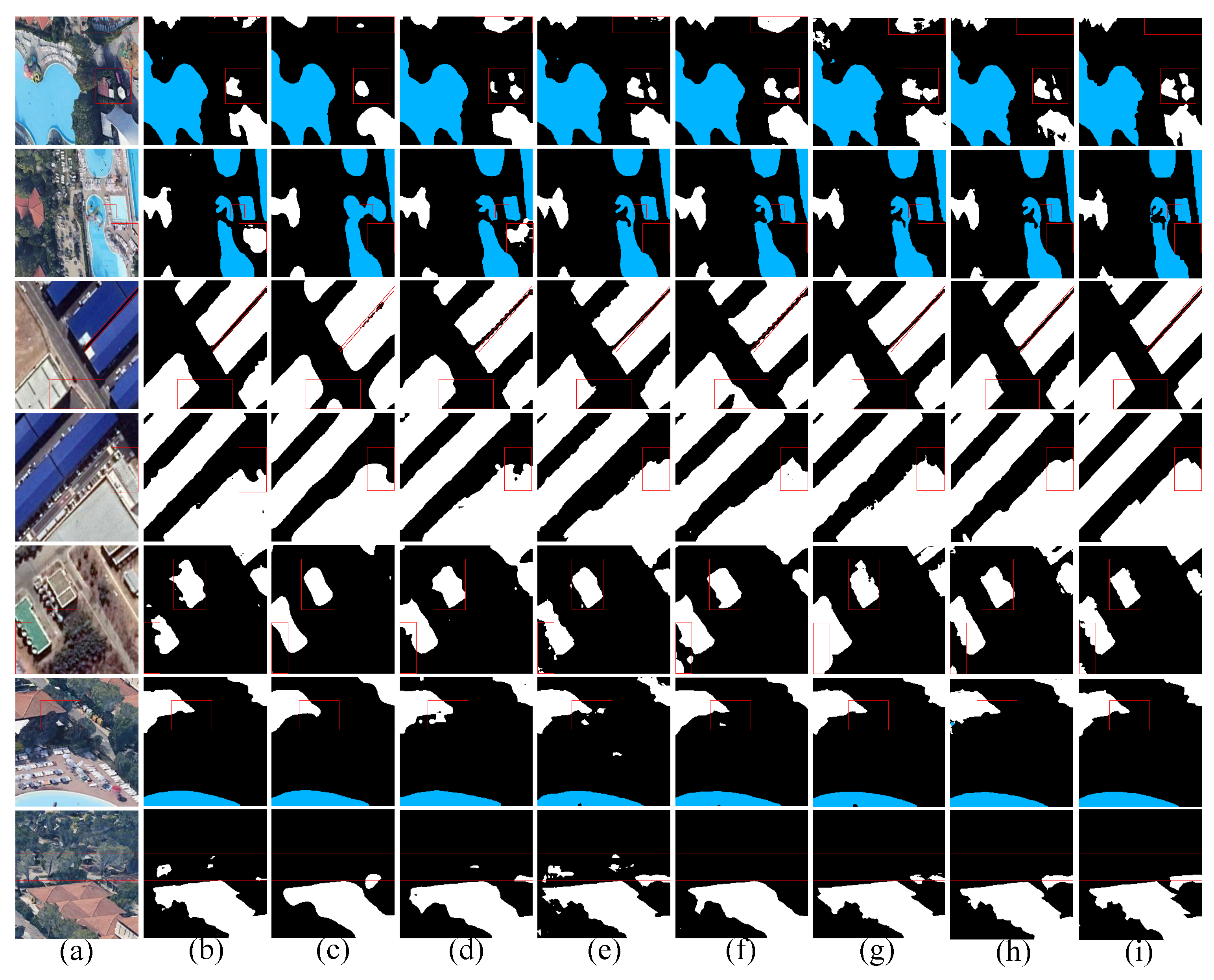

3. Results

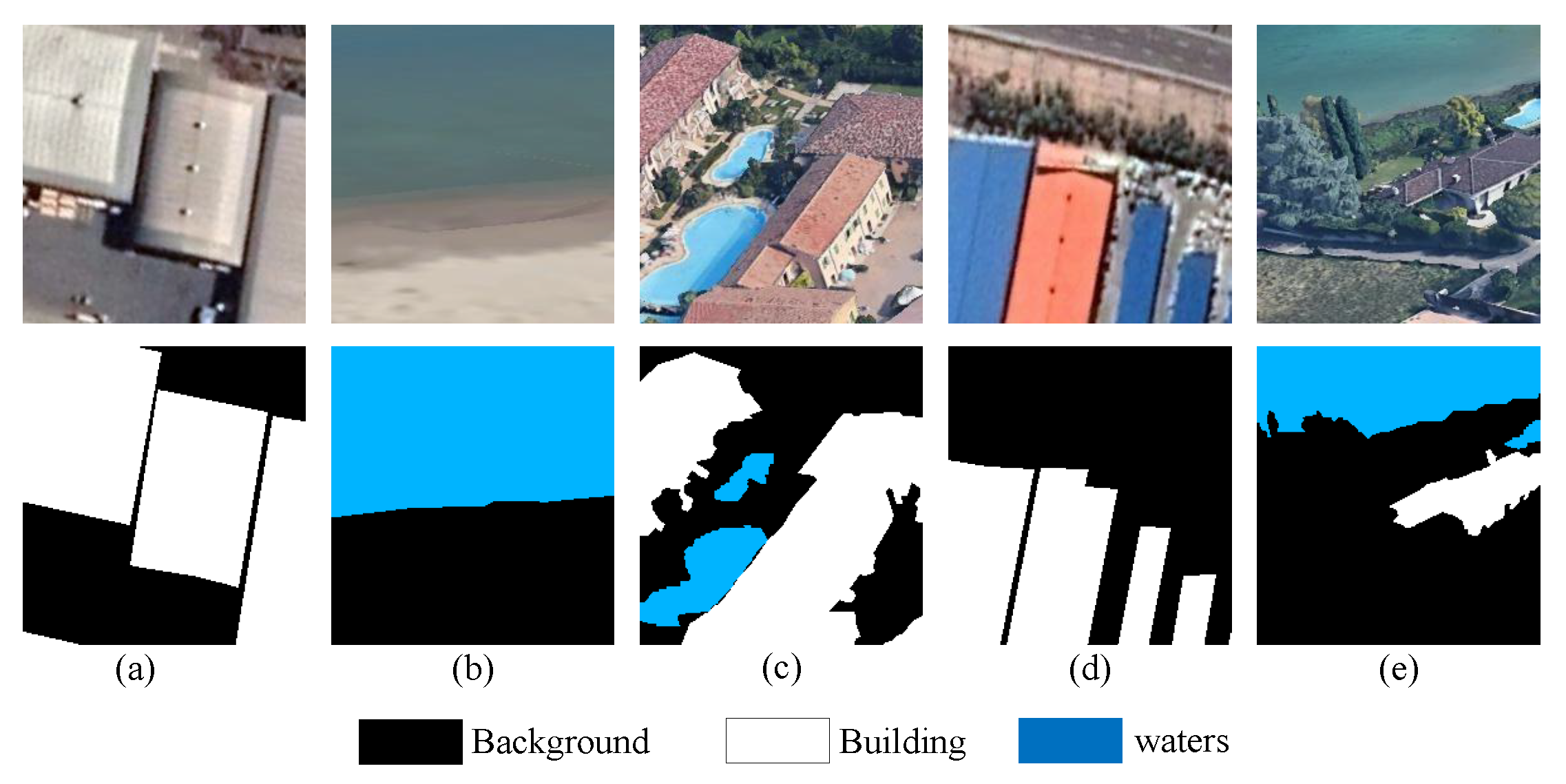

3.1. Building and Water Dataset

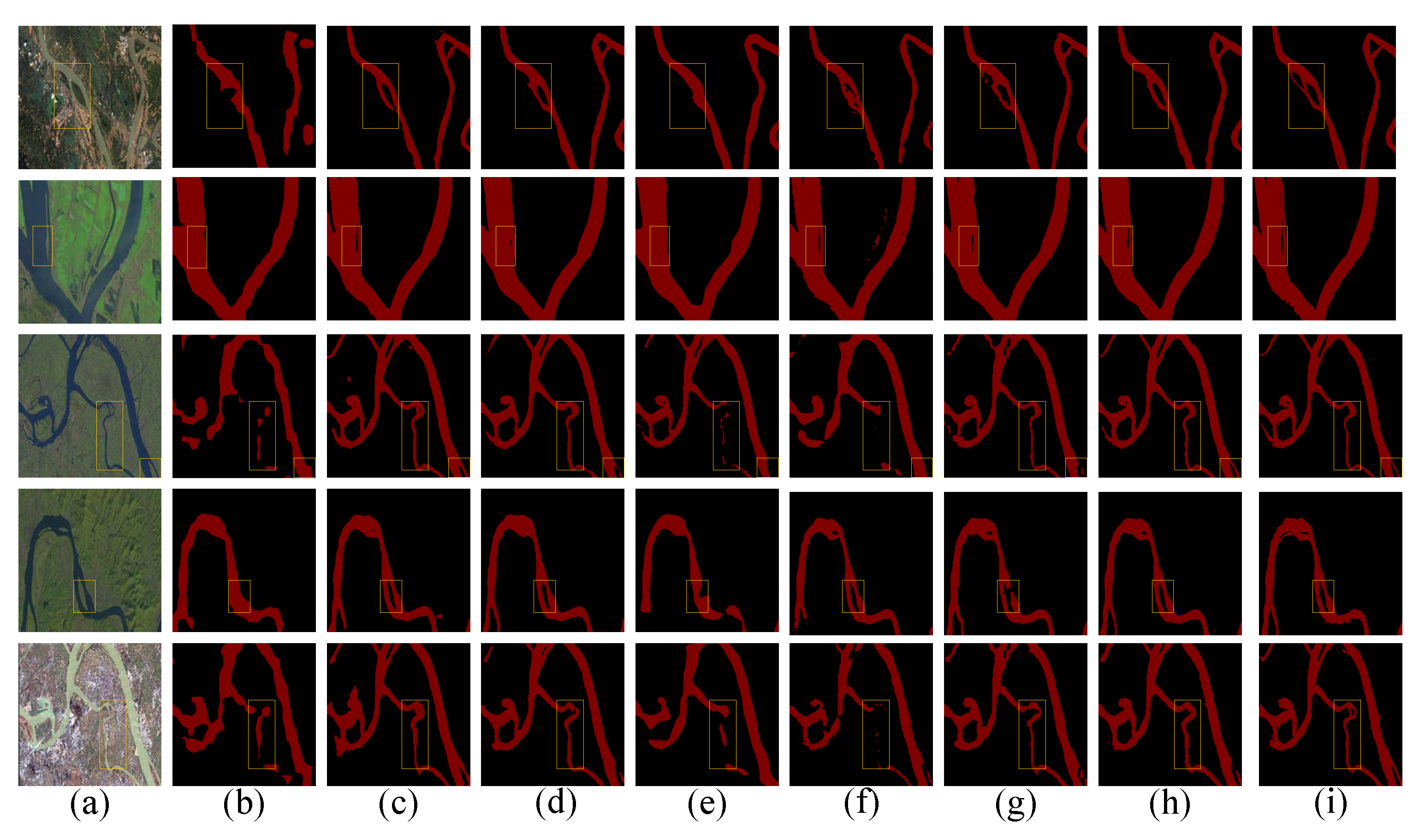

3.2. Waters Dataset

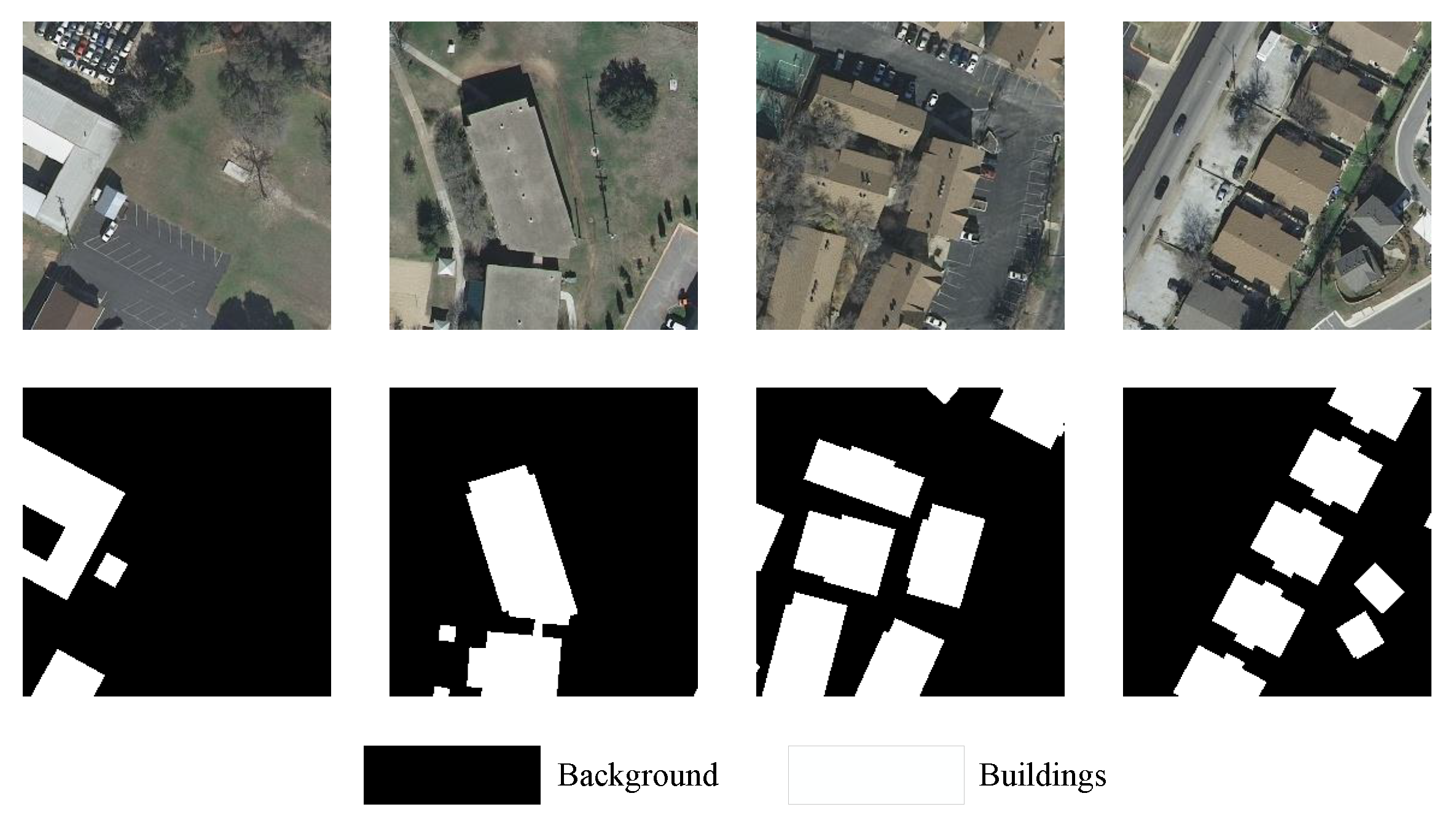

3.3. Inria Dataset

3.4. Ablation Experiment

3.5. Contrast Experiment

3.6. Generalization Experiment

3.6.1. Waters Dataset

3.6.2. Inria Dataset

4. Discussion

4.1. About the Model

- A double-branch parallel network combining Swin Transformer and a CNN is proposed. The two branches extract feature information separately, and the extracted features are then aggregated, which improves the accuracy and generalization of segmentation. Swin Transformer compensates for the limited receptive field of the convolutional neural network (CNN) and is better at global information interaction; in turn, the CNN compensates for the Transformer's lack of translation invariance.

- Because the two branches extract different feature information, a cross-scale bilateral feature aggregation module is proposed. It aggregates feature information from different levels and lets the two branches guide each other, so that more feature information interacts globally, which reduces misclassification. In the upsampling stage, an aggregation module is also proposed; it uses high-level semantic information to guide low-level semantic information, and it recovers high-resolution pixel-level feature information and edge feature information.
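The receptive-field argument above can be illustrated with a toy 1-D example. The sketch below is not the proposed network: `conv1d_same` and `self_attention` are hypothetical helpers, the attention uses identity projections on scalar tokens, and the element-wise sum at the end is only a placeholder for the aggregation modules. It shows that changing a distant input leaves a nearby convolution output untouched while the attention output at the same position changes.

```python
import math

def conv1d_same(x, kernel):
    """Zero-padded 1-D cross-correlation: each output sees only len(kernel) inputs."""
    radius = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < len(x):  # positions outside the signal count as zero
                acc += w * x[j]
        out.append(acc)
    return out

def self_attention(x):
    """Toy single-head self-attention on scalar tokens with identity projections."""
    out = []
    for q in x:
        scores = [q * k for k in x]          # every position attends to all others
        m = max(scores)                      # subtract max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, x)) / total)
    return out

signal = [0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0]
perturbed = signal[:-1] + [5.0]              # change only the last position

kernel = [0.25, 0.5, 0.25]                   # assumed smoothing kernel
conv_a, conv_b = conv1d_same(signal, kernel), conv1d_same(perturbed, kernel)
attn_a, attn_b = self_attention(signal), self_attention(perturbed)

# Local branch: position 1 only sees positions 0..2, so it is unaffected.
print("conv changed at position 1:", conv_a[1] != conv_b[1])
# Global branch: position 1 attends to position 7, so it is affected.
print("attention changed at position 1:", abs(attn_a[1] - attn_b[1]) > 1e-6)

# Placeholder aggregation (assumption): a plain element-wise sum of both branches.
fused = [c + a for c, a in zip(conv_a, attn_a)]
```

In a real two-branch model the sum would be replaced by a learned fusion step, but the contrast between local and global context is the same.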

4.2. About the Experiment

4.3. Limitations and Future Prospects of the Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shu, Q.; Pan, J.; Zhang, Z.; Wang, M. DPCC-Net: Dual-perspective change contextual network for change detection in high-resolution remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102940.

2. Song, L.; Xia, M.; Weng, L.; Lin, H.; Qian, M.; Chen, B. Axial Cross Attention Meets CNN: Bi-Branch Fusion Network for Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 32–43.

3. Yu, Y.; Huang, L.; Lu, W.; Guan, H.; Ma, L.; Jin, S.; Yu, C.; Zhang, Y.; Tang, P.; Liu, Z.; et al. WaterHRNet: A multibranch hierarchical attentive network for water body extraction with remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103103.

4. Qu, Y.; Xia, M.; Zhang, Y. Strip pooling channel spatial attention network for the segmentation of cloud and cloud shadow. Comput. Geosci. 2021, 157, 104940.

5. Lu, C.; Xia, M.; Lin, H. Multi-scale strip pooling feature aggregation network for cloud and cloud shadow segmentation. Neural Comput. Appl. 2022, 34, 6149–6162.

6. Hu, K.; Li, M.; Xia, M.; Lin, H. Multi-Scale Feature Aggregation Network for Water Area Segmentation. Remote Sens. 2022, 14, 206.

7. Wang, T.; Lan, J.; Han, Z.; Hu, Z.; Huang, Y.; Deng, Y.; Zhang, H.; Wang, J.; Chen, M.; Jiang, H.; et al. O-Net: A novel framework with deep fusion of CNN and transformer for simultaneous segmentation and classification. Front. Neurosci. 2022, 16, 876065.

8. Pang, K.; Weng, L.; Zhang, Y.; Liu, J.; Lin, H.; Xia, M. SGBNet: An Ultra Light-weight Network for Real-time Semantic Segmentation of Land Cover. Int. J. Remote Sens. 2022, 43, 5917–5939.

9. Chen, J.; Sun, B.; Wang, L.; Fang, B.; Chang, Y.; Li, Y.; Zhang, J.; Lyu, X.; Chen, G. Semi-supervised semantic segmentation framework with pseudo supervisions for land-use/land-cover mapping in coastal areas. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102881.

10. Miao, S.; Xia, M.; Qian, M.; Zhang, Y.; Liu, J.; Lin, H. Cloud/shadow segmentation based on multi-level feature enhanced network for remote sensing imagery. Int. J. Remote Sens. 2022, 43, 5940–5960.

11. Wang, Z.; Xia, M.; Lu, M.; Pan, L.; Liu, J. Parameter Identification in Power Transmission Systems Based on Graph Convolution Network. IEEE Trans. Power Deliv. 2022, 37, 3155–3163.

12. Chen, B.; Xia, M.; Huang, J. Mfanet: A multi-level feature aggregation network for semantic segmentation of land cover. Remote Sens. 2021, 13, 731.

13. Ma, Z.; Xia, M.; Weng, L.; Lin, H. Local Feature Search Network for Building and Water Segmentation of Remote Sensing Image. Sustainability 2023, 15, 3034.

14. Ding, Y.; Zhao, X.; Zhang, Z.; Cai, W.; Yang, N.; Zhan, Y. Semi-supervised locality preserving dense graph neural network with ARMA filters and context-aware learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

15. Zhang, Z.; Ding, Y.; Zhao, X.; Siye, L.; Yang, N.; Cai, Y.; Zhan, Y. Multireceptive field: An adaptive path aggregation graph neural framework for hyperspectral image classification. Expert Syst. Appl. 2023, 217, 119508.

16. Ding, Y.; Zhang, Z.; Zhao, X.; Hong, D.; Cai, W.; Yu, C.; Yang, N.; Cai, W. Multi-feature fusion: Graph neural network and CNN combining for hyperspectral image classification. Neurocomputing 2022, 501, 246–257.

17. Chen, B.; Xia, M.; Qian, M.; Huang, J. MANet: A multi-level aggregation network for semantic segmentation of high-resolution remote sensing images. Int. J. Remote Sens. 2022, 43, 5874–5894.

18. Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An Overview of Underwater Vision Enhancement: From Traditional Methods to Recent Deep Learning. J. Mar. Sci. Eng. 2022, 10, 241.

19. Hu, K.; Ding, Y.; Jin, J.; Weng, L.; Xia, M. Skeleton Motion Recognition Based on Multi-Scale Deep Spatio-Temporal Features. Appl. Sci. 2022, 12, 1028.

20. Ding, Y.; Zhang, Z.; Zhao, X.; Cai, Y.; Li, S.; Deng, B.; Cai, W. Self-supervised locality preserving low-pass graph convolutional embedding for large-scale hyperspectral image clustering. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

21. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

22. Lu, C.; Xia, M.; Qian, M.; Chen, B. Dual-branch Network for Cloud and Cloud Shadow Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

23. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

24. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

26. Lin, G.; Milan, A.; Shen, C.; Reid, I. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1925–1934.

27. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

28. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

29. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

30. Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 9355–9366.

31. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

32. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. arXiv 2021, arXiv:2105.05537.

33. Gao, J.; Weng, L.; Xia, M.; Lin, H. MLNet: Multichannel feature fusion lozenge network for land segmentation. J. Appl. Remote Sens. 2022, 16, 016513.

34. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

35. Li, G.; Yun, I.; Kim, J.; Kim, J. Dabnet: Depth-wise asymmetric bottleneck for real-time semantic segmentation. arXiv 2019, arXiv:1907.11357.

36. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

37. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

38. Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

39. Fang, L.; Liu, J.; Liu, J.; Mao, R. Automatic segmentation and 3d reconstruction of spine based on fcn and marching cubes in ct volumes. In Proceedings of the 2018 10th International Conference on Modelling, Identification and Control (ICMIC), Guiyang, China, 2–4 July 2018; pp. 1–5.

40. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

41. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

42. Jiang, W.; Wu, Y.; Guan, L.; Zhao, J. Dfnet: Semantic segmentation on panoramic images with dynamic loss weights and residual fusion block. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5887–5892.

43. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

44. Peng, Z.; Huang, W.; Gu, S.; Xie, L.; Wang, Y.; Jiao, J.; Ye, Q. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 367–376.

45. Mehta, S.; Rastegari, M.; Shapiro, L.; Hajishirzi, H. Espnetv2: A light-weight, power efficient, and general purpose convolutional neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9190–9200.

46. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

47. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Learning a discriminative feature network for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1857–1866.

48. Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. Cvt: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 22–31.

49. Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

50. Yuan, Y.; Chen, X.; Chen, X.; Wang, J. Segmentation transformer: Object-contextual representations for semantic segmentation. arXiv 2019, arXiv:1909.11065.

| Method | MIOU (%) |
|---|---|
| ResNet | 82.59 |
| ResNet + Swin Transformer | 84.40 |
| ResNet + Swin Transformer + CMFM | 85.53 |
| ResNet + Swin Transformer + CMFM + MFM | 87.86 |
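The contribution of each added component can be read off the MIOU column by differencing consecutive rows. A minimal sketch of that arithmetic, using only the values reported in the table above; the variable names are illustrative.

```python
# MIOU (%) per ablation stage, copied from the table above.
ablation = [
    ("ResNet", 82.59),
    ("+ Swin Transformer", 84.40),
    ("+ CMFM", 85.53),
    ("+ MFM", 87.86),
]

# Gain of each stage over the previous one, rounded to two decimals.
gains = [
    (name, round(score - ablation[i][1], 2))
    for i, (name, score) in enumerate(ablation[1:])
]
total_gain = round(ablation[-1][1] - ablation[0][1], 2)

for name, gain in gains:
    print(f"{name}: +{gain:.2f}")
print(f"total: +{total_gain:.2f}")
```

These are differences between single reported runs, so they indicate the direction and rough size of each effect rather than a statistically tested gain.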

| Method | PA (%) | MPA (%) | MIOU (%) |
|---|---|---|---|
| SegNet [40] | 89.88 | 89.06 | 80.06 |
| UNet [31] | 92.96 | 92.19 | 86.27 |
| PAN [41] | 92.78 | 93.22 | 85.78 |
| HRNet [27] | 92.37 | 92.68 | 84.78 |
| DFNet [42] | 91.74 | 90.31 | 84.42 |
| DeepLabV3+ [36] | 92.83 | 93.42 | 86.18 |
| BiSeNetV2 [38] | 91.19 | 91.13 | 82.81 |
| DANet [43] | 92.16 | 92.50 | 84.25 |
| DABNet [35] | 92.61 | 92.33 | 85.38 |
| ShuffleNetV2 [37] | 90.63 | 89.75 | 81.08 |
| PSPNet [24] | 93.40 | 92.88 | 86.61 |
| FCN8s [21] | 92.66 | 92.81 | 85.91 |
| SwinUNet [32] | 89.68 | 90.00 | 81.14 |
| Dual-branch [22] | 92.80 | 93.25 | 86.02 |
| MFANet [12] | 93.02 | 93.73 | 86.62 |
| Ours | 93.64 | 94.11 | 87.86 |

| Method | PA (%) | MPA (%) | MIOU (%) |
|---|---|---|---|
| Conformer [44] | 96.81 | 96.19 | 91.65 |
| SegNet [40] | 97.48 | 97.04 | 93.35 |
| ESPNetV2 [45] | 98.23 | 98.09 | 95.27 |
| GhostNet [46] | 96.80 | 96.32 | 91.61 |
| DeepLabV3+ [36] | 98.14 | 97.50 | 95.08 |
| DFNet [47] | 98.11 | 97.82 | 94.96 |
| CvT [48] | 97.34 | 96.61 | 93.03 |
| ShuffleNetV2 [37] | 98.17 | 98.02 | 95.10 |
| FCN8s [21] | 97.45 | 97.01 | 93.28 |
| Dual-branch [22] | 98.28 | 98.13 | 95.39 |
| PVT [49] | 97.88 | 97.75 | 94.34 |
| ViT [29] | 87.81 | 85.51 | 70.66 |
| MFANet [12] | 98.25 | 97.96 | 95.33 |
| Ours | 98.65 | 98.39 | 96.38 |

| Method | PA (%) | MPA (%) | MIOU (%) |
|---|---|---|---|
| DFNet [47] | 93.95 | 91.20 | 82.98 |
| CvT [48] | 90.91 | 86.34 | 75.72 |
| GhostNet [46] | 91.40 | 87.01 | 76.93 |
| MFANet [12] | 94.47 | 92.04 | 84.28 |
| SegNet [40] | 94.21 | 91.20 | 83.78 |
| HRNet [27] | 93.89 | 90.08 | 82.85 |
| DeepLabV3+ [36] | 94.33 | 91.37 | 84.09 |
| FCN8s [21] | 93.21 | 89.31 | 81.49 |
| SGBNet [8] | 94.12 | 91.52 | 83.36 |
| PVT [49] | 93.95 | 91.26 | 82.48 |
| Dual-branch [22] | 94.38 | 92.16 | 83.96 |
| ShuffleNetV2 [37] | 94.22 | 91.34 | 83.75 |
| OCRNet [50] | 94.77 | 92.27 | 85.13 |
| Ours | 95.27 | 92.66 | 86.19 |
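The tables report PA, MPA and MIOU without restating their definitions here. The sketch below assumes the usual confusion-matrix definitions: pixel accuracy is the fraction of correctly labelled pixels, mean pixel accuracy averages per-class recall, and mean intersection over union averages per-class IoU. The function name and the 3-class example matrix are illustrative and are not data from the paper.

```python
def segmentation_metrics(confusion):
    """Return (PA, MPA, MIoU) from a square confusion matrix.

    confusion[i][j] = number of pixels of true class i predicted as class j.
    Classes with no pixels are skipped when averaging (an assumption).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    pa = correct / total

    class_acc, class_iou = [], []
    for i in range(n):
        tp = confusion[i][i]
        true_i = sum(confusion[i])                        # pixels labelled i
        pred_i = sum(confusion[r][i] for r in range(n))   # pixels predicted i
        if true_i > 0:
            class_acc.append(tp / true_i)
        union = true_i + pred_i - tp
        if union > 0:
            class_iou.append(tp / union)

    mpa = sum(class_acc) / len(class_acc)
    miou = sum(class_iou) / len(class_iou)
    return pa, mpa, miou

# Made-up example: rows are true classes (background, building, water).
example = [
    [50, 2, 3],
    [4, 30, 1],
    [1, 2, 7],
]
pa, mpa, miou = segmentation_metrics(example)
print(f"PA={pa:.4f}  MPA={mpa:.4f}  MIoU={miou:.4f}")
```

Because MIoU penalizes both false positives and false negatives per class, it is typically the lowest of the three values, which matches the pattern in the tables.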


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Xia, M.; Wang, D.; Lin, H. Double Branch Parallel Network for Segmentation of Buildings and Waters in Remote Sensing Images. Remote Sens. 2023, 15, 1536. https://doi.org/10.3390/rs15061536