Abstract

Oblique photography technology based on unmanned aerial vehicles (UAVs) provides an effective means for the rapid, real-scene 3D reconstruction of geographical objects on a watershed scale. However, existing research cannot achieve automatic, high-precision reconstruction of water regions, because water surface patterns are sensitive to wind, waves, reflections of objects on the shore, etc. To solve this problem, a novel rapid reconstruction scheme for water regions in 3D models of oblique photography is proposed in this paper. It first extracts the boundaries of water regions using a designed eight-neighborhood traversal algorithm and then reconstructs the triangulated irregular network (TIN) of water regions. Afterwards, the corresponding texture images of water regions are intelligently selected and processed using a designed method based on coordinate matching, image stitching and clipping. Finally, the processed texture images are mapped to the obtained TIN, so that the real information about water regions can be reconstructed, visualized and integrated into the original real-scene 3D environment. Experimental results show that the proposed scheme can rapidly and accurately reconstruct water regions in 3D models of oblique photography. The outcome of this work can refine the current technical system of 3D modeling by UAV oblique photography and expand its application in the construction of twin watersheds, twin cities, etc.

1. Introduction

Digital watershed technology has been considered the most powerful means for modern watershed planning and management. It can collect, represent and manage all kinds of watershed information by integrating several modern technologies, such as geographic information systems (GIS), remote sensing (RS), virtual reality (VR) and high-performance computing (HPC) [1,2,3,4]. Plenty of research has indicated that people can obtain more knowledge in 3D simulation scenes than in traditional 2D scenes [5,6,7,8,9]. For example, the impact of extreme weather can be understood more intuitively in a virtual simulation scene than by reading newspapers or watching TV programs. Consequently, the construction of 3D virtual simulation scenes of watersheds has received considerable attention from relevant scholars in the past two decades [10,11,12].

Early research on 3D visualization of watershed objects focused mainly on the 3D representation of watershed terrain, using digital elevation models (DEMs) and high-resolution remote sensing images [10,13,14]. Although 3D terrains of large areas can be represented rapidly by this kind of methods, the data volume of the generated 3D models is usually large, putting considerable pressure on data representation. The multi-resolution tile pyramid technology was usually adopted to improve the rendering efficiency of 3D terrain data without affecting the visual effect [15,16]. Limited by scale, it is difficult for 3D terrain models generated based on DEMs to express the local detailed features of watersheds. As a result, detailed models of some key objects were commonly constructed manually and overlaid on the basis of a 3D terrain model [17,18]. To strike a balance between the fidelity and loading speed of 3D models, multiple models with various levels of detail were generally built for each entity and invoked on demand [19,20]. This problem can also be solved to a certain extent by classifying spatial entities according to their significance; the greater the importance of an entity, the higher the accuracy of its corresponding 3D models [21,22]. Desired effects of 3D visualization can be obtained with limited hardware conditions using the methods introduced in [19,20,21,22]. Nevertheless, high-precision models were mainly constructed manually, and the modeling processes were commonly inefficient. Moreover, traditional approaches to 3D visualization were mainly for man-made structures and objects, e.g., buildings, roads, etc., which are not suitable for modeling most categories of watershed factors, e.g., vegetation, farmlands and rivers.

In the past decade, 3D modeling technology based on UAV (unmanned aerial vehicle) oblique photography has developed rapidly, providing a new solution for rapid real-scene 3D reconstruction of watershed objects [23,24,25]. It collects the images of the target region from vertical and oblique angles simultaneously by configuring multiple sensors (cameras with five lenses are commonly used at present) on the same flight platform (UAV) [26]. Aerial triangulation is then implemented on the collected multi-view images to generate the total-factor 3D surface models of the target region, matching conjugate points in various multi-view images. Compared with traditional 3D modeling methods, oblique photography technology based on UAV has many advantages, e.g., high efficiency, low cost, strong authenticity [27,28,29], etc.

For the 3D modeling technology based on UAV oblique photography, the matching of the conjugate points in various multi-view images is a key step in reconstructing surface objects. Compared with other surface objects, such as buildings and water conservancy facilities, water regions have several unique features. Firstly, wind and waves are common phenomena in water regions, which usually result in various surface morphologies of the water region at different moments. Additionally, due to water reflection, the visual effects of water regions commonly differ from various angles of photography. As such, it is quite difficult to match conjugate points in various multi-view images when reconstructing water regions, and the obtained 3D models are usually irregular with many holes (Figure 1).

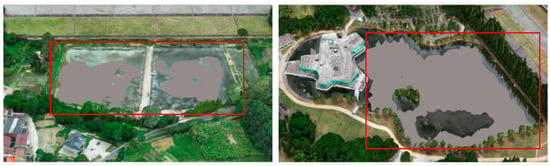

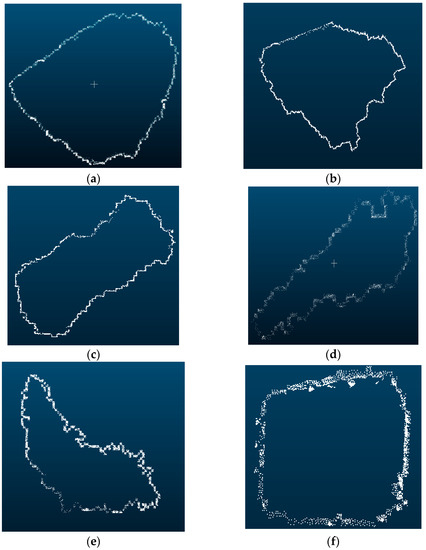

Figure 1. The effects of 3D reconstruction of water regions by UAV-based oblique photography technology. The content in the red boxes corresponds to water regions in the real world.

In normal application scenarios, real information about water regions is not important and thus seldom receives attention, and virtual digital water-surface models are usually adopted to represent water regions in real-scene 3D environments [30,31,32]. Although the overall visual effect of the virtual scene is guaranteed, the real information about water regions, e.g., water color, floating objects, surrounding environment, etc., cannot be visualized. In some specific applications, e.g., water pollution management, water environment monitoring, etc., real information about water regions is significant for decision-making, and traditional 3D watershed scenes cannot meet this demand. Based on high-resolution remote sensing images, the boundaries of water regions can be extracted using machine learning methods [33,34,35,36,37]. These methods, however, are only applicable to remote sensing images and cannot help in reconstructing water regions in 3D models of oblique photography. In addition, these methods can only extract the boundaries of water bodies and cannot obtain detailed texture information. In recent years, several commercial software programs, e.g., DP-Modeler [38], Meshmixer [39] and SVSModeler [40], have been developed to help repair the preliminary reconstruction results of water regions in 3D models of oblique photography. Nevertheless, a significant amount of human involvement is required when using these software programs, and the entire process of water region reconstruction is time-consuming. More significantly, in these methods, the texture of the water-surface model is usually assigned through sample grabbing or texture interpolation, so the rich texture information of water regions is still difficult to represent in 3D watershed scenes.

In summary, while UAV oblique photography technology has made it possible to rapidly and efficiently reconstruct multiple objects in a watershed, it cannot automatically and accurately obtain real information about water regions. As a result, information such as water color and floating objects is rarely represented in common 3D watershed scenes, which limits the practicality of current real-scene 3D watershed models. To make a breakthrough in this field, a rapid reconstruction scheme for water regions in 3D models of oblique photography is proposed in this paper, the novelty of which can be summarized as follows:

- (1) A novel eight-neighborhood traversal algorithm has been designed and implemented. This algorithm can accurately and rapidly extract the boundary points of water regions in 3D models of oblique photography.

- (2) A fully automatic algorithm for texture image selection, preprocessing and mapping has been developed. This algorithm can intelligently map the textures of water regions based on the multi-view images acquired by UAV.

- (3) An evaluation system has been constructed for the reconstruction results of water regions in 3D models of oblique photography. This system allows for both qualitative and quantitative evaluations of the reconstruction effect.

This paper is structured as follows: Section 2 describes the data acquisition and introduces the proposed rapid 3D reconstruction scheme for water regions in detail. Section 3 presents the experiments and results. Finally, the discussion and conclusions are presented in Section 4 and Section 5, respectively.

2. Materials and Methods

2.1. Data Acquisition

In this study, a DJI M200 UAV equipped with five lenses was used to acquire multi-view images of the study area, which is Lake Tianmuhu watershed, a typical small watershed in the low mountains and hills in China. The main flight parameters of the used UAV were set as follows: flight altitude of 90 m, flight speed of 5 m/s, longitudinal overlap of 80% and sidelap of 60%. The main parameters of the camera used were as follows: sensor type CMOS, equivalent focal length of 24 mm, image resolution of 5472 × 3078, effective pixels of 20 million, 3 bands (red, green and blue) and calibrated IMU status. The orientations of the five lenses equipped in the used UAV remained constant during the data acquisition process, i.e., the orientation of the middle lens was vertically downward and the orientations of the other four lenses were all 45 degrees tilted inward. During the data acquisition process, images captured by different cameras were stored in independent paths.

After the acquisition of multi-view images, the ContextCapture software was used to perform the aerial triangulation and generate the final 3D models of the research areas (in .obj format).

2.2. Boundary Point Extraction of Water Region

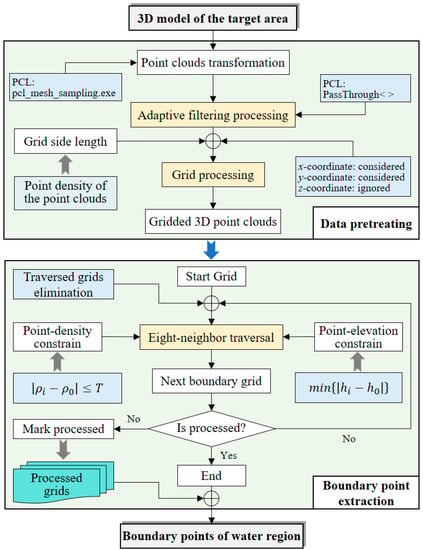

The definition of the spatial scope is the basis for the reconstruction of the water region. Considering that both the elevation and the density of point clouds of water regions in the 3D model of oblique photography are far lower than those of onshore areas, point density and point elevation are used as two constraints to identify the boundary points of the water regions in this section. The diagram of the boundary point extraction of the water region is shown in Figure 2. Given the original 3D model constructed using oblique photography technology, the process of the boundary point extraction of the water region can be demonstrated using the following seven steps:

Figure 2. The flowchart of the procedure of boundary point extraction of water region.

Step 1. Transform the original 3D model into 3D point clouds. In this step, Point Cloud Library (PCL) [41], an open-source library for 2D/3D image and point cloud processing, is used to transform the 3D models obtained from oblique photography (in .obj format) into point clouds (in .pcd format). This step is designed to improve the generality of the proposed method. However, if the original 3D point cloud data are available in specific applications, this step can be skipped.

Step 2. Eliminate interference points from the obtained 3D point clouds. Due to errors introduced during the 3D reconstruction process (specifically, the matching of conjugate points in various multi-view images, as mentioned in Section 1), there may be some erroneous points in the 3D point clouds with abnormal elevations (either too high or too low). To improve the efficiency of the proposed scheme, a pass-through filter on point elevation based on PCL is constructed in this step to eliminate interference points, with the threshold values being determined adaptively based on the point density of the obtained 3D point clouds.

The analysis results of the 3D point clouds show that the point density at the top and bottom of the 3D point cloud data is significantly lower than in other areas. In this step, the threshold values of the pass-through filter are the minimum elevation (denoted e1) and the maximum elevation (denoted e2) to be retained. They are chosen such that the density of the points whose elevations are larger than e2 or less than e1 is below a given value.
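The adaptive thresholding of Step 2 can be illustrated with a minimal Python sketch. It is not the PCL-based implementation used in this paper: the elevation bin width (`bin_width`) and the minimum point count per bin (`min_count`) are hypothetical parameters standing in for the "given value" of density, and the function names are illustrative only.

```python
from collections import Counter


def adaptive_elevation_bounds(z_values, bin_width=1.0, min_count=5):
    """Return (e1, e2) so that elevation bins below e1 or above e2 are sparse.

    Assumption: density is measured as the number of points per elevation bin.
    """
    z_min = min(z_values)
    # Count how many points fall into each elevation bin.
    counts = Counter(int((z - z_min) // bin_width) for z in z_values)
    dense_bins = [b for b, c in counts.items() if c >= min_count]
    if not dense_bins:
        raise ValueError("no elevation bin reaches min_count")
    e1 = z_min + min(dense_bins) * bin_width        # lower edge of lowest dense bin
    e2 = z_min + (max(dense_bins) + 1) * bin_width  # upper edge of highest dense bin
    return e1, e2


def pass_through_filter(points, e1, e2):
    """Keep only the (x, y, z) points whose elevation lies within [e1, e2]."""
    return [p for p in points if e1 <= p[2] <= e2]
```

With real data, `bin_width` and `min_count` would need to be tuned to the point density of the model at hand.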

Step 3. Divide the obtained 3D point clouds into independent grids. Construct a regular 2D grid and divide the obtained 3D point clouds into corresponding grids based on x and y coordinates of the 3D points. The side length of the constructed 2D grid is determined by the average point density of the obtained 3D point clouds. All the grids are initially marked as “unprocessed.”
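A minimal Python sketch of this gridding step is given below. The rule that derives the side length from the average point density is not specified in the text, so `cell_size_from_density` uses an assumed rule (each cell should hold roughly `points_per_cell` points on average); both function names and that parameter are hypothetical.

```python
import math
from collections import defaultdict


def cell_size_from_density(points, points_per_cell=10):
    """Assumed rule: pick the side length so a cell holds ~points_per_cell points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    area = (max(xs) - min(xs)) * (max(ys) - min(ys))
    if area <= 0:
        raise ValueError("points must span a non-zero area")
    density = len(points) / area  # average points per unit area
    return math.sqrt(points_per_cell / density)


def build_grid(points, cell_size):
    """Assign (x, y, z) points to square cells keyed by (row, col)."""
    x0 = min(p[0] for p in points)
    y0 = min(p[1] for p in points)
    cells = defaultdict(list)
    for p in points:
        col = int((p[0] - x0) // cell_size)
        row = int((p[1] - y0) // cell_size)
        cells[(row, col)].append(p)
    # Every occupied cell starts in the "unprocessed" state.
    status = {key: "unprocessed" for key in cells}
    return cells, status
```

Only occupied cells are stored, which keeps the structure small when the point cloud contains large holes over water.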

Step 4. Manually determine the starting grid for boundary extraction of the water region. Note that the starting grid must be located on the boundary of the water region. In theory, the choice of the starting grid affects the accuracy of water boundary extraction. However, according to simulation experiments, the tolerance of the proposed scheme is satisfactory, and there is almost no influence on the result of boundary point extraction if the selected starting grid is near the boundary of the water region (it does not need to be very precise).

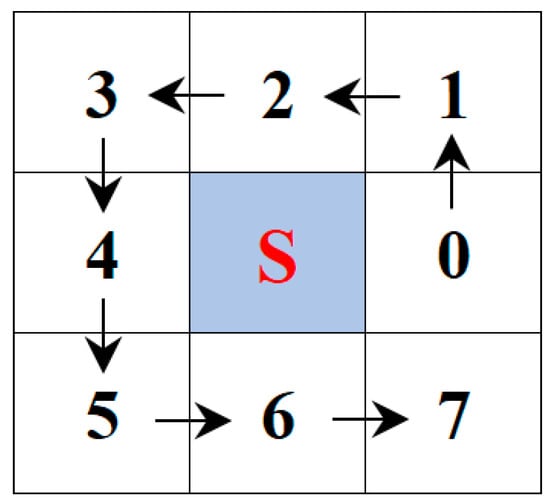

Step 5. Search the adjacent boundary grid of the starting grid using the eight-neighbor analytical method (Figure 3). Point density and point elevation are the two constraints used to identify the boundary grid among the eight neighboring grids. As shown in Figure 3, the grid marked as “S” is the starting grid, and its adjacent boundary grid will be searched from its eight adjacent grids (i.e., the grids marked as 0, 1, 2, 3, 4, 5, 6 and 7). The determined adjacent boundary grid must satisfy two conditions: (i) the difference between the point density of this grid and that of the starting grid is less than a given threshold, and (ii) the average value of point z-coordinates in this grid is the nearest to that of the starting grid among the adjacent grids that satisfy condition (i).

Figure 3. The flowchart of the eight-neighbor analytical method.

Figure 4.

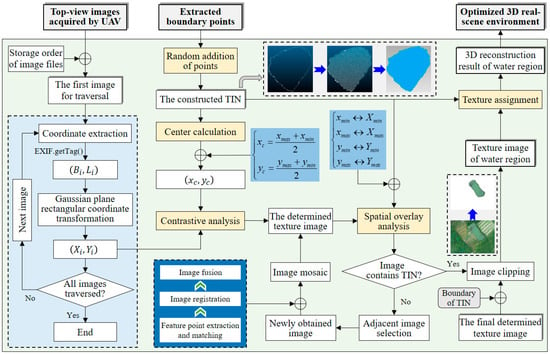

The flowchart of the procedure of TIN reconstruction and texture mapping of water region.

Step 6. If the identified boundary grid is marked as “processed”, then terminate the process of boundary point extraction. Otherwise, mark the identified boundary grid as “processed,” take it as the starting grid and repeat Step 5 (note that any grids that were traversed in the previous round of boundary grid searching will not be traversed again).

Step 7. Repeat Step 6 until the newly identified boundary grid is already marked “processed.”
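Steps 5 to 7 can be sketched in Python as follows. The text leaves some details open, so this sketch adopts one possible reading and labels it as such: the grid just left is never reconsidered, unprocessed candidates are preferred over processed ones, and the walk stops as soon as the best remaining candidate is already processed. The input `cell_stats` (a mapping from `(row, col)` to a `(point_density, mean_z)` pair), the neighbor ordering and the `max_steps` guard are all hypothetical.

```python
# Offsets of the eight neighbors of a grid cell, as (d_row, d_col).
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def trace_boundary(cell_stats, start, density_tol, max_steps=100000):
    """Walk from `start` along cells with similar density and closest mean z."""
    processed = {start}
    path = [start]
    previous, current = None, start
    for _step in range(max_steps):
        cur_density, cur_z = cell_stats[current]
        candidates = []
        for d_row, d_col in NEIGHBOR_OFFSETS:
            cell = (current[0] + d_row, current[1] + d_col)
            if cell == previous or cell not in cell_stats:
                continue  # assumed reading: never step straight back
            density, mean_z = cell_stats[cell]
            # Condition (i): similar point density to the current starting grid.
            if abs(density - cur_density) < density_tol:
                candidates.append((cell in processed, abs(mean_z - cur_z), cell))
        if not candidates:
            break  # dead end: no neighbor satisfies condition (i)
        # Condition (ii): closest mean elevation, preferring unprocessed cells.
        is_processed, _dz, best = min(candidates, key=lambda c: (c[0], c[1]))
        if is_processed:
            break  # Step 6: the identified grid is already processed
        processed.add(best)
        path.append(best)
        previous, current = current, best
    return path
```

All points inside the returned cells would then be taken as boundary points. On real data the result depends on the grid size and `density_tol`, so this should be read as an illustration of the traversal logic rather than a tuned implementation.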

Finally, all points within the processed grids will be taken as the extracted boundary points of the water region. Using these extracted boundary points, the triangulated irregular network (TIN) model of the water region will be reconstructed, and then the corresponding texture image(s) will be processed and mapped to the constructed TIN, which will be introduced in detail in Section 2.3.

2.3. Triangulated Irregular Network (TIN) Reconstruction and Texture Mapping of Water Region

The diagram of TIN reconstruction and texture mapping of the water region is shown in Figure 4. After extracting the boundary points of water region, the TIN of the water region will be reconstructed, and the corresponding texture image will be automatically selected and mapped based on the following six steps:

Step 1. Reconstruct the TIN of the water region. Firstly, randomly add some points within the coordinate range of the extracted boundary points, with the number of the added points determined according to the coordinate range of the extracted boundary points as described in this paper. Then, construct the TIN based on the added points and the extracted boundary points using the Delaunay algorithm [42].

Step 2. Intelligently select the texture image of the constructed TIN. Both the reflection theory of light and practical experience indicate that the top-view images of the water region are the least affected by reflections of objects on the shore. On this basis, a method of texture selection is designed and implemented in this paper, which can be summarized in the following sub-steps: (i) calculate the coordinates of the center of the extracted boundary points (denoted as $(x_c, y_c)$) using Equation (1), where $x_{max}$, $y_{max}$, $x_{min}$ and $y_{min}$ denote the maximum x-coordinate, the maximum y-coordinate, the minimum x-coordinate and the minimum y-coordinate of the extracted boundary points, respectively; and (ii) transform the geographical coordinates (represented by longitude and latitude) of each top-view image into rectangular coordinates (represented by x and y) by Equation (2), where B and L represent the latitude and the longitude of the image center, respectively; N denotes the radius of curvature in the prime vertical; L0 represents the longitude of the central meridian; a and b are the semi-major and semi-minor axes of the Earth’s ellipsoid, respectively; ρ is a constant with a value of 206,264.806247096355″ (the number of arcseconds in one radian); and X represents the ellipsoid arc length from the equator to the projection point of the image center on the reference ellipsoid [43]. Afterwards, all top-view images are traversed, and the image whose coordinates are the nearest to $(x_c, y_c)$ is selected as the texture image.
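The selection logic of this step can be sketched in Python as below. Two assumptions are made: the center in Equation (1) is taken to be the midpoint of the coordinate extremes, and the image centers are assumed to have already been projected into the same rectangular coordinate system by Equation (2). The function names and the image file names in the usage note are hypothetical.

```python
import math


def boundary_center(boundary_points):
    """Midpoint of the x and y extremes of the boundary points (assumed Eq. (1))."""
    xs = [p[0] for p in boundary_points]
    ys = [p[1] for p in boundary_points]
    return (max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0


def select_texture_image(boundary_points, image_centers):
    """Return the name of the top-view image whose center is nearest the water center.

    image_centers maps an image name to its projected (x, y) center.
    """
    cx, cy = boundary_center(boundary_points)
    return min(
        image_centers,
        key=lambda name: math.hypot(image_centers[name][0] - cx,
                                    image_centers[name][1] - cy),
    )
```

For example, with boundary points spanning x in [0, 10] and y in [0, 6], the center is (5, 3), and an image centered at (4, 3) would be preferred over one centered at (20, 20).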

Step 3. Determine whether the TIN reconstructed in Step 1 is completely contained in the selected texture image. The inclusive relationship between the TIN and the texture image will be considered tenable only if the conditions listed in Equation (3) are all satisfied. In Equation (3), $x^{T}_{min}$ (resp. $x^{T}_{max}$) and $y^{T}_{min}$ (resp. $y^{T}_{max}$) represent the minimum (resp. maximum) x-coordinate and y-coordinate of points in the reconstructed TIN, while $x^{I}_{min}$ (resp. $x^{I}_{max}$) and $y^{I}_{min}$ (resp. $y^{I}_{max}$) represent the minimum (resp. maximum) x-coordinate and y-coordinate of the pixels in the selected texture image, respectively. The calculation methods of $x^{I}_{min}$, $x^{I}_{max}$, $y^{I}_{min}$ and $y^{I}_{max}$ are shown in Equation (4), where $(x_0, y_0)$ represents the center coordinate of the selected image, W and H are the width and height of the image, respectively, Res represents the pixel resolution of the image and ⌊∙⌋ represents the rounding function.
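A minimal Python sketch of this containment test follows. It assumes that the image is north-up and axis-aligned, that Equation (4) offsets the image center by ⌊W/2⌋·Res and ⌊H/2⌋·Res, and that ⌊∙⌋ rounds down. These assumptions, the function names and the resolution value used in the usage note are not taken from the paper.

```python
import math


def image_extent(center, width_px, height_px, res):
    """Ground extent (x_min, x_max, y_min, y_max) of an axis-aligned image.

    center: projected (x, y) of the image center; res: ground size of one pixel.
    """
    x0, y0 = center
    half_w = math.floor(width_px / 2) * res
    half_h = math.floor(height_px / 2) * res
    return x0 - half_w, x0 + half_w, y0 - half_h, y0 + half_h


def tin_within_image(tin_points, extent):
    """True only if every TIN vertex lies inside the image extent."""
    x_min, x_max, y_min, y_max = extent
    xs = [p[0] for p in tin_points]
    ys = [p[1] for p in tin_points]
    return (min(xs) >= x_min and max(xs) <= x_max
            and min(ys) >= y_min and max(ys) <= y_max)
```

For a 5472 × 3078 image centered at (100, 200) with a hypothetical resolution of 0.02 m per pixel, the extent is roughly 109 m × 62 m; a TIN that extends beyond it would trigger the mosaicking described in Step 4.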

Step 4. Determine the final texture image to be mapped to the TIN. If the inclusive relationship between the TIN and the texture image is tenable, the currently selected image is taken as the final texture image to be mapped. Otherwise, the image nearest to the current image is first selected from the top-view images based on coordinate comparison, and the two images are merged to generate a mosaic image. The process of image mosaicking involves three main stages [44]: feature point extraction and matching, image registration, and image fusion. Afterwards, the obtained mosaic image is taken as the selected texture image, and Step 3 and Step 4 are repeated until the inclusive relationship between the TIN and the texture image is tenable.

Step 5. Map the final determined texture image to the reconstructed TIN of the water region. First, clip the selected texture image based on the vector boundary of the reconstructed TIN so that only the texture of the water region is retained. Then assign the clipped texture image to the reconstructed TIN, and the reconstructed 3D model of the water region can be obtained.

Step 6. Replace the original model data of the water region with the obtained 3D model. Delete all points within the boundary of the reconstructed TIN, and then place the reconstructed 3D model in the original model data.

After completing the steps described in Section 2.2 and Section 2.3, the water region can be fully reconstructed, and the original 3D real-scene environment of the watershed can be optimized.

2.4. Accuracy and Effect Evaluation of Water Region Reconstruction

The 3D reconstruction of water regions in 3D models of oblique photography is currently a rarely researched topic, and thus effective indexes for evaluating the reconstruction results are lacking. In this paper, the corresponding index system is constructed to evaluate the reconstruction results of the water region, both qualitatively and quantitatively.

To evaluate the reconstruction results, qualitative comparisons will be made between the scene photos of the water regions and the screenshots of reconstructed results. Moreover, to further assess the reconstruction accuracy, boundary points of water regions in 3D models of oblique photography will be manually selected, and then an error analysis will be performed between the selected boundary points and the boundary points extracted by the proposed algorithm. Four indexes are adopted in this paper to assess quantitatively the reconstruction accuracy: average error (AE), root mean square error (RMSE), standard deviation (SD) and error of area (EOA).
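The four indexes can be illustrated with a short Python sketch. Their exact definitions are not given in the text, so the following are assumptions: the error of each extracted boundary point is its planar distance to the nearest manually selected point, AE is the mean error, RMSE is the root of the mean squared error, SD is the population standard deviation of the errors, and EOA is the relative difference (in percent) between the areas enclosed by the two boundaries, computed with the shoelace formula. All function names are hypothetical.

```python
import math
import statistics


def nearest_distance_errors(extracted, reference):
    """Distance from each extracted (x, y) point to its nearest reference point."""
    return [min(math.hypot(ex - rx, ey - ry) for rx, ry in reference)
            for ex, ey in extracted]


def polygon_area(vertices):
    """Area of a simple polygon given as ordered (x, y) vertices (shoelace formula)."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def boundary_metrics(extracted, reference):
    """Return AE, RMSE, SD (same units as the input) and EOA (percent)."""
    errors = nearest_distance_errors(extracted, reference)
    ref_area = polygon_area(reference)
    return {
        "AE": statistics.fmean(errors),
        "RMSE": math.sqrt(statistics.fmean([e * e for e in errors])),
        "SD": statistics.pstdev(errors),
        "EOA": abs(polygon_area(extracted) - ref_area) / ref_area * 100.0,
    }
```

For instance, comparing an extracted 11 × 10 rectangle against a manually delineated 10 × 10 square under these definitions gives AE = 0.5, SD = 0.5 and EOA = 10%.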

3. Experiments and Results

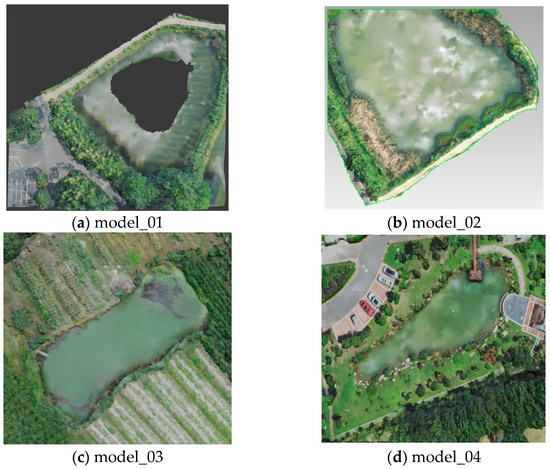

Ten 3D models (in .obj format) generated by UAV oblique photography technology, shown in Figure 5, are used in this section as experimental data to test the performance of the proposed 3D reconstruction scheme for water regions. Table 1 lists the basic properties of the ten experimental 3D models, i.e., number of points and coordinate ranges. In this section, the experiments were conducted on a PC with the following configuration: CPU Intel Core i7-8700 3.20 GHz, GPU Intel® UHD Graphics 630, RAM 64 GB and OS Windows 10 Education (×64).

Figure 5. The experimental 3D models of oblique photography.

Table 1. Properties of the experimental 3D models.

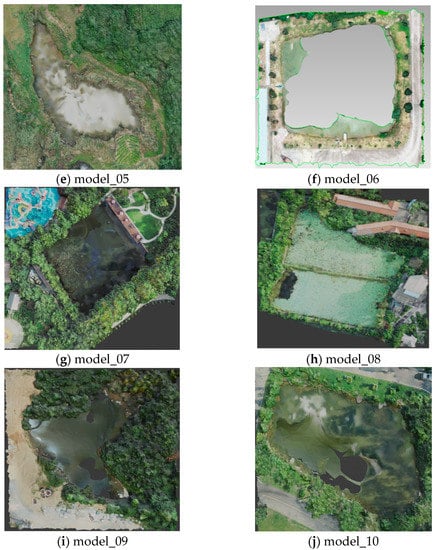

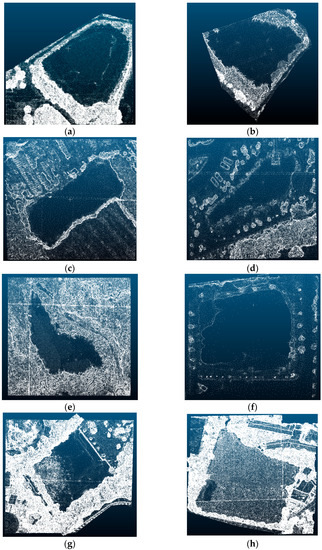

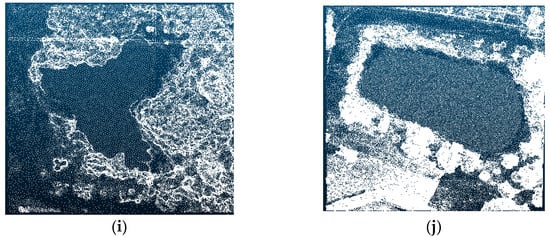

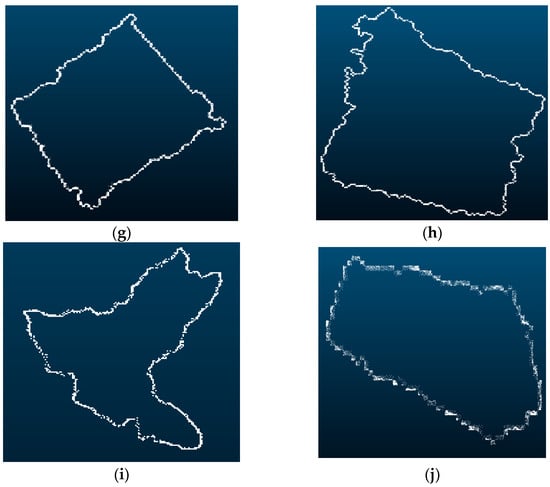

As described in Step 1 of Section 2.2, the ten experimental 3D models of oblique photography were first transformed into 3D point clouds (in .pcd format). The results of the transformation are shown in Figure 6. Afterwards, interference points were eliminated from the obtained 3D point clouds, and all 3D point clouds were then divided into independent grids, as described in Step 2 and Step 3 of Section 2.2. Then, the boundary points of water regions were extracted from the ten preprocessed 3D point clouds, using the method explained in Steps 4 to 7 of Section 2.2. The results of boundary point extraction of water regions are shown in Figure 7.

Figure 6. Results of 3D point cloud transformation of the ten experimental 3D models. (a–j) are the 3D point clouds of model_01, model_02, model_03, model_04, model_05, model_06, model_07, model_08, model_09 and model_10, respectively.

Figure 7. Results of boundary point extraction of the ten experimental 3D models. (a–j) are the results of boundary point extraction of water regions in model_01, model_02, model_03, model_04, model_05, model_06, model_07, model_08, model_09 and model_10, respectively.

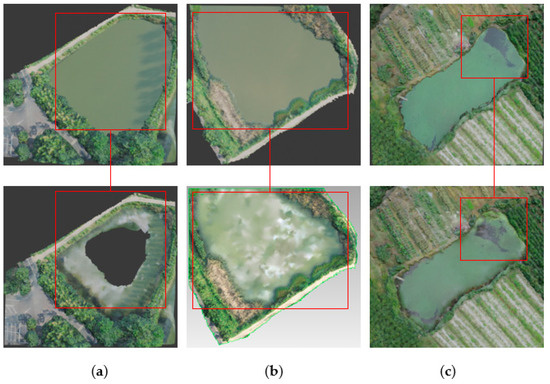

As mentioned in Section 2.3, after extracting the boundary points of water regions of the ten experimental 3D models, TINs of water regions were then constructed, and texture images were automatically selected and mapped. Afterwards, the original model data of water regions were replaced by the reconstructed 3D models. The final results of 3D reconstruction of water regions are shown in Figure 8.

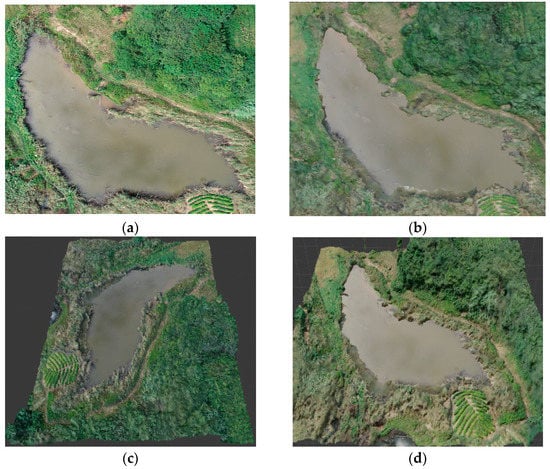

Figure 8. Results of water region reconstruction. (a–j) are the reconstruction results of water regions in model_01, model_02, model_03, model_04, model_05, model_06, model_07, model_08, model_09 and model_10, respectively. For each result, the upper image is the reconstruction result, the bottom image is the original 3D model of oblique photography, and the framed regions show the effects of local alignment.

3.1. Qualitative Evaluation

It can be seen from Figure 5 (the original 3D models of oblique photography) and Figure 8 (the final results of the water region reconstruction) that water regions in the original 3D models of oblique photography can be effectively reconstructed. Holes can be filled, and the real information of water regions can be represented in the real-scene 3D environments, thanks to the proposed scheme.

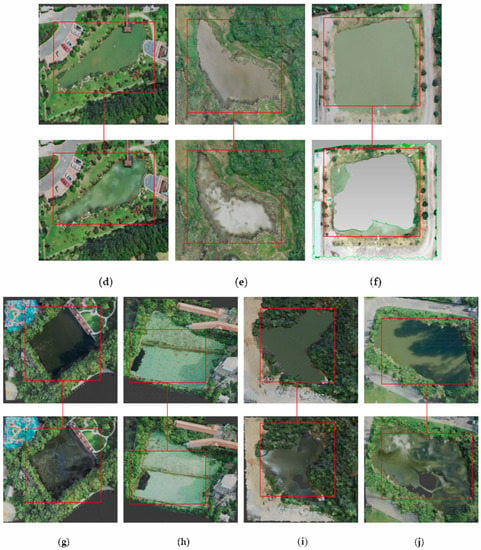

In order to further assess the reconstruction effect of water regions, visual comparisons were conducted between the final obtained 3D models of water regions and the scene photos captured by UAV. The comparison results indicate that the reconstruction effect of water regions is visually good. Taking one of the ten experimental 3D models as an example, the comparison results are shown in Figure 9.

Figure 9. Comparison results of reconstruction effect of water regions. (a) is the scene photo captured by UAV, and (b–d) are the screenshots of the reconstruction result from three different angles.

3.2. Quantitative Evaluation

In addition to qualitative assessments, quantitative evaluations have also been carried out in this paper. Firstly, the accuracy of water boundary extraction was compared between the proposed scheme and two state-of-the-art works, i.e., the schemes in [33,37]. The accuracy of each scheme was evaluated against the manually selected boundary points of water regions using the four indexes explained in Section 2.4. Moreover, tests were performed with a general hardware configuration to evaluate the efficiency of the proposed scheme.

(1) Accuracy Evaluation

For each experimental 3D model, the water boundary was first manually delineated and then extracted using the proposed scheme. The corresponding texture image generated in Step 4 of Section 2.3 was also adopted for the water boundary extraction of the schemes in [33,37]. Afterwards, the point coordinate errors of the proposed scheme and the schemes in [33,37] were calculated and analyzed against the manually delineated water boundary, using the four indexes explained in Section 2.4, i.e., AE, RMSE, SD and EOA. The experimental results are shown in Table 2. It can be seen from Table 2 that the average values of AE, RMSE and SD of the proposed scheme are all less than 0.6 m, and the average value of EOA is less than 4%. In addition, the accuracy of the water boundary extraction of the proposed scheme is acceptable compared with the state-of-the-art works.

Table 2. Comparison results of accuracy of water boundary extraction.

(2) Efficiency Evaluation

For each experimental 3D model, the consumed time of 3D reconstruction of the water region was recorded and is presented in Table 3 (the configuration of the used PC is described in the first paragraph of Section 3). The consumed time includes the entire process of water region reconstruction, except the manual determination of the start-grid for boundary extraction of water regions, i.e., Step 4 of Section 2.2. It can be concluded from Table 3 that the proposed scheme is quite efficient.

Table 3. Results of efficiency evaluation of this scheme.

4. Discussion

A novel, rapid and accurate reconstruction scheme for water regions in 3D models of oblique photography is proposed in this paper. Accurately reconstructing the water region in 3D models of oblique photography poses two main problems: (i) accurately modeling the water surface, and (ii) real-texture mapping of the reconstructed water surface model.

For the first problem, it is assumed that the surfaces of water regions are flat. In this case, the modeling of the water surface can be transformed into the boundary extraction of the water region. The distinct point cloud characteristics (i.e., elevation and density) of water regions and other objects provide the foundation for the accurate identification of the boundary between land and water regions. On this basis, an algorithm for the boundary point extraction of the water region in 3D models of oblique photography is designed in this paper (explained in Section 2.2). Experimental results indicate that the accuracy of boundary extraction results can reach the centimeter level, with the average values of AE, RMSE and SD all below 0.6 m (Table 2).

For the second problem, both the reflection theory of light and practical experience indicate that the top-view images of water regions are the least affected by reflections of objects on the shore. In this case, the real-texture mapping of the reconstructed water-surface model can be transformed into the intelligent selection and processing of top-view images. In fact, the metadata of each image captured by a digital camera are recorded and embedded in the image file itself (as EXIF information). The content of EXIF includes image coordinates, image resolution, shooting time, etc. On this basis, a method of texture selection is designed and implemented in this paper. Furthermore, in some cases the spatial range of the selected top-view image cannot completely contain the target water region. To solve this problem, another method was designed in this paper (described in Step 3 and Step 4 of Section 2.3). Experimental results have verified the effectiveness of the proposed methods.

In summary, the scheme presented in this paper can improve the current technical system of 3D modeling by UAV oblique photography. The outcomes of this paper can contribute to the construction of real-scene 3D environments in many application fields, e.g., twin watersheds, twin lakes, twin cities, etc. Meanwhile, four aspects of this work deserve further research in future studies. Firstly, in this work, the starting grid for boundary point extraction is determined manually, which is the only step requiring manual intervention in this scheme. Therefore, automatic determination methods for the starting grid should be studied further in the near future. Secondly, in the proposed scheme, both the TIN and the corresponding texture image are only processed and represented at the finest level, and the LOD (levels of detail) technology is not applied to improve the rendering efficiency of 3D scenes. In future work, more attention should be paid to the generation of TINs and texture images of water regions with various levels of fineness based on LOD technology to achieve efficient rendering. Thirdly, the texture information is obtained only from the top-view images of the UAV in this paper, and there are some interfering factors in the final generated texture images of water regions, especially shadows of objects on the shore. Thus, there is still much room for improvement in texture mapping. In future studies, it is worthwhile to further develop intelligent algorithms for detecting and eliminating interfering factors in the texture images of water regions. Finally, it is assumed in this paper that the surfaces of water regions are flat, and thus objects within water regions, such as islands, towers, etc., cannot be reconstructed in 3D, which is worthy of further study.

5. Conclusions

UAV oblique photography technology is providing a new and efficient solution for the 3D reconstruction of ground objects on a watershed scale. Although real-scene 3D environments can be established rapidly, water regions cannot be effectively reconstructed using this technology and real information about water regions is rarely visualized in 3D watershed scenes. As such, traditional 3D simulation environments of watersheds cannot meet the increasing demands of integrated watershed management.

To address this problem, this paper proposes a rapid 3D reconstruction scheme for water regions in 3D models of oblique photography. First, boundary points of the water region are extracted using a designed eight-neighborhood traversal algorithm. Next, the TIN of the water region is constructed using the Delaunay algorithm. Afterwards, the corresponding texture image is intelligently selected and automatically processed. Finally, the processed texture image is mapped to the TIN to obtain the reconstructed 3D model of the water region. Simulation experiments have shown that the proposed scheme is accurate, efficient and effective.
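The boundary-extraction step can be illustrated with a minimal sketch. It is not the algorithm designed in this paper; it is a generic breadth-first traversal over the eight neighbors of each grid cell, written under the assumption that the water region is available as a binary grid and that a starting cell inside the water is supplied manually. The names `extract_boundary`, `grid` and `start` are hypothetical.

```python
from collections import deque

# Offsets of the eight neighbors of a grid cell (row, col).
NEIGHBORS_8 = [(-1, -1), (-1, 0), (-1, 1),
               (0, -1),           (0, 1),
               (1, -1),  (1, 0),  (1, 1)]


def extract_boundary(grid, start):
    """Return the boundary cells of the water region containing `start`.

    grid  -- list of lists; truthy cells are water (assumed input format)
    start -- (row, col) of a manually chosen cell inside the water region
    """
    rows, cols = len(grid), len(grid[0])
    if not grid[start[0]][start[1]]:
        raise ValueError("start cell must lie inside the water region")

    visited = {start}
    boundary = set()
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS_8:
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if not inside or not grid[nr][nc]:
                # A neighbor is land or off-grid, so (r, c) lies on the boundary.
                boundary.add((r, c))
            elif (nr, nc) not in visited:
                visited.add((nr, nc))
                queue.append((nr, nc))
    return boundary


# Toy example: a 3 x 3 block of water cells surrounded by land.
grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
boundary = extract_boundary(grid, (2, 2))
```

In this toy grid every water cell except the central one touches land, so only the central cell is excluded from `boundary`. An ordered boundary polyline, as needed for constrained triangulation, would require an additional contour-following step that this sketch omits.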

Future research should address four topics: automatic determination of the starting grid; generation of TINs and texture images of water regions at varying levels of fineness based on LOD technology; detection and elimination of interfering factors in the texture images of water regions; and 3D reconstruction of objects within water regions.

Author Contributions

Conceptualization, Y.Q., H.D., J.L. and Y.J.; methodology, Y.Q., L.H. and J.Z.; software, Y.J.; validation, Y.J., Z.T. and Q.X.; formal analysis, Y.Q.; investigation, Y.J.; resources, H.D.; data curation, Y.J.; writing—original draft preparation, Y.Q.; writing—review and editing, H.D.; visualization, J.L.; supervision, Q.X.; project administration, Y.Q.; funding acquisition, Y.Q., H.D. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded jointly by the Natural Science Foundation of Jiangsu Province (Grant No. BK20201100), the National Natural Science Foundation of China (Grant Nos. 42101433, 41971309 and 41971314) and the Open Research Fund of National Engineering Research Center for Agro-Ecological Big Data Analysis & Application, Anhui University (Grant No. AE202107).

Data Availability Statement

The data and materials that support the findings of this study are freely available upon request from the corresponding author at the following e-mail address: ygqiu@niglas.ac.cn.

Acknowledgments

We would like to acknowledge the assistance of Jia Liu and Xiaokang Ding in the design and evaluation of simulation experiments.

Conflicts of Interest

The authors declare no conflict of interest.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).