Abstract

Access to real-time environmental sensing data is key to representing real-time environmental changes in the digital twin of lakes, and the visualization of these data is an important part of establishing such a digital twin. In the past, environmental sensing data were displayed either as charts or on two-dimensional maps. This paper aims to move beyond these traditional visualization methods and realize a multi-dimensional and multi-view display of environmental sensing data in a digital twin of lakes. This study proposes a visualization framework to integrate, manage, analyze, and visualize the environmental sensing data in the digital twin of lakes. In addition, this study seeks to realize the coupled expression of geospatial data and long-term monitoring sequence data. Different visualization methods are used for different types of environmental sensing data. Using vector and scalar visualization methods to display ambient wireless sensor monitoring data in a digital twin of lakes provides researchers with richer visualization methods and means for deeper analysis. Using video fusion technology to display environmental sensing video surveillance data strengthens the integration of the virtual environment and real space and saves time for position identification using video surveillance. These findings may also help realize the integration and management of real-time environmental sensing data in a digital twin of lakes. The visualization framework uses various visualization methods to express the monitoring data of environmental wireless sensors and broadens the means of visualizing environmental sensing data in a lake digital twin.
This visualization framework is also a general approach that can be applied to all similar lakes, or other geographical scenarios where environmental sensing devices are deployed. The establishment of a digital twin of Poyang Lake has certain practical significance for improving the digital management level of Poyang Lake and monitoring its ecological changes. Poyang Lake is used as an example to verify the proposed framework and method, which shows that the framework can be applied to the construction of a lake-oriented digital twin.

1. Introduction

The digital twin (DT) concept originated from NASA’s Apollo program in the 1960s. The project built two identical spacecraft: one was launched, and the other remained on the ground for simultaneous observation. Strictly speaking, a DT is not a new concept; it is rooted in existing technologies [1], such as Internet of Things monitoring, 3D modeling, system simulation, and digital prototyping (including geometric, functional, and behavioral prototyping). DT technology has proven to be indispensable, and Internet of Things sensors make the DT cost-effective, so DTs could become essential for Industry 5.0 and play a major role in understanding, analyzing, and optimizing physical objects [2,3]. Humans sense the natural environment through their sense organs, while DTs sense changes in the natural environment using sensors. Sensing data returned by sensors must be presented to humans in a user-friendly (i.e., visualized) form so that the human brain can analyze the sensor monitoring data. Our interest is in how sensor data can be used to better present a digital twin of lakes to humans and to yield information that the human brain can recognize, in order to help people make predictions, analyses, and decisions.

A DT represents the unification of virtual and physical assets in product lifecycle management [4]. As computer technology and DT technology have developed, the DT has been applied to various industries, including the manufacturing industry [5], the automobile industry [6], the energy sector, the agriculture sector [7,8], and the water conservancy industry [9,10,11,12]. Real-time sensing data visualization is an important component in the construction of the DT world; visualization helps the human brain to perform spatial reconstruction of abstract models, and helps experts and scholars to extract professional knowledge from the data [13]. The purpose of a DT is to build a virtual environment that simulates the actual physical environment. In addition, access to real-time environmental sensing data is key to reflecting real-time changes in the real physical world within a DT world.

At present, numerous researchers have attempted to manage and visualize real-time monitoring data or implement the construction of a watershed DT. In terms of DT basins, Huang et al. developed the Jingjiang Valley simulation system [14], which realized hydraulic model management and attribute information query functions. Yan et al. constructed a simulation watershed system for the Weihe River Basin, thus providing auxiliary decision support for watershed management departments [15]. Moreover, Qin et al. designed a web-based DT platform [16], applied in the Chaohu Lake Watershed, and provided decision support for integrated watershed management. Lastly, Eidson et al. designed the South Carolina Digital Watershed to manage real-time environmental and hydrological sensing data [17]. Environmental sensing data come from a wide range of sources [18,19,20], making it difficult to integrate, manage, analyze, and visualize them in the DT world. In terms of environmental sensing data visualization, Wan et al. constructed the Dongting Lake ecological environment information system based on two-dimensional maps [21]. This system obtained monitoring data from water, atmosphere, soil, and pollution monitoring. Moreover, Walker et al. proposed a web-based interactive visualization framework called the Interactive Catchment Explorer to manage and visualize environmental datasets based on two-dimensional maps and charts [22]. Brendel et al. developed the application Stream Hydrology and Rainfall Knowledge System to visualize hydrological and meteorological time series data [23]. Furthermore, Steed et al. proposed a desktop-based software application for the visualization of large-scale environmental datasets and model outputs termed the Exploratory Data Analysis Environment [24]. In their recent research, Chen et al. attempted to carry out the construction of a DT in the Three Gorges Reservoir area, and the data from sensors were presented in the form of data tables [25]. Lu et al. constructed a virtual scene of Poyang Lake, focusing on scene construction; their work lacks the integration and visualization of corresponding environmental sensing data [26].

Although the aforementioned studies visualized environmental sensing data, some of them displayed the data only as charts, which do not support visualization and analysis from multiple angles and make it difficult to integrate users’ prior knowledge and explore the patterns hidden in the environmental sensing data [27]. Some visualizations based on two-dimensional maps have certain limitations and cannot meet the needs of DT world building [28]. Furthermore, some visualization applications are stand-alone applications that are limited in their interactivity and expertise [29]. At present, it is still difficult to go beyond the traditional visualization methods of environmental sensing data and realize the multi-dimensional and multi-view display of environmental sensing data in the twin world; relevant visualization methods for presenting environmental sensing data in the DT of lakes are still lacking.

The main goals of this study are to use web development and design an environmental sensing data visualization framework that can be applied to a DT of lakes in order to perform a multi-dimensional and multi-angle visualization of environmental sensing data, and realize its integration, management, and visualization. This study uses the interpolation method, the particle method, and the video fusion method to visualize different types of environmental sensing data in the DT of lakes, and Poyang Lake is used as a case study to demonstrate the visualization framework. The main contributions of this study are as follows:

(1) This study proposes a framework for visualizing environmental sensing data in the DT world of lakes. The framework is then applied to the construction of Poyang Lake’s DT world and to the integration and management of real-time environmental sensing data. It can be used as a reference for constructing a lake-oriented DT world.

(2) The environmental sensing data were connected to the DT world of lakes. Moreover, different visualization methods have been used to express the monitoring data of ambient wireless sensors. The multi-dimensional temporal changes in monitoring data of ambient wireless sensors are represented by charts, while the spatial continuity of scalar data in the DT world of lakes is realized using the interpolation method. On the other hand, the vector field construction method is used to show the dynamic changes in vector data in the DT world of lakes. Together, these visualization methods enrich the representation of the environmental sensing data in the DT world of lakes.

(3) Real-time video surveillance pictures of the environment are fused with the virtual DT scene to facilitate the real-time observation and monitoring of birds and water in lakes. In addition, this practice also strengthens the integration of the virtual environment and real space, saves time for position identification using video surveillance, and improves the supervisors’ work efficiency.

The remainder of the paper is organized as follows. Section 2 introduces the visualization architecture of the environmental sensing data in the DT world of Poyang Lake, as well as the methods used to realize the visualization of the environmental sensing data. Next, Poyang Lake is used as a case study to demonstrate the visualization framework and present the results of the visualization of environmental sensing data in Section 3. Finally, Section 4 discusses the results and Section 5 concludes this paper.

2. Research Framework

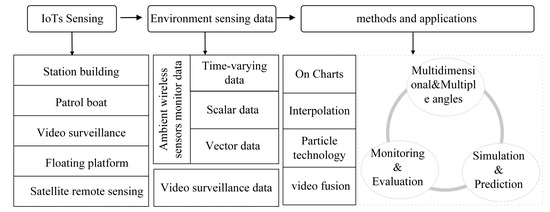

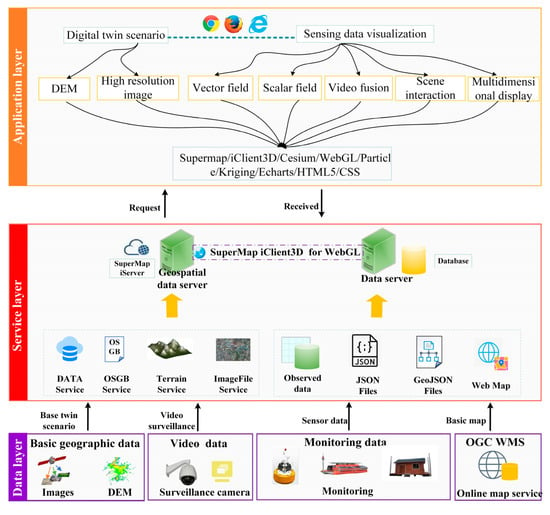

The visualization framework of environmental sensing data in the DT world is illustrated in Figure 1. The paper divides the visualization framework into three parts: Internet of Things (IoTs) sensing, environmental sensing data, and environmental sensing data visualization methods and applications.

Figure 1.

Visualization framework of environmental sensing data in DT world of lakes.

2.1. IoTs Sensing

IoTs technologies that realize real-time multi-source data acquisition are the core of the DT [30]. Lakes’ environmental sensing data come from sensing devices equipped with various ecological environmental sensors, and the data are transmitted via the IoTs. These data are multi-dimensional, multi-source, heterogeneous, and time-varying. Their multi-dimensional nature is reflected in the geometry and attributes of spatial elements, and the data vary markedly in both space and time.

In general, the environmental sensing device includes an ambient wireless sensor monitoring platform and an environmental sensing video surveillance platform. Furthermore, the ambient wireless sensor monitoring platform includes a station building, patrol boat, and floating body platform equipped with multiple sensors (ecological environment monitoring sensor, water quality sensor, air quality and meteorological automatic monitoring station, etc.) used to monitor the ecological environment of lakes in real-time. On the other hand, the video surveillance platform is equipped with high-definition cameras placed higher than the lake surface during the wet season to conduct real-time sensing video surveillance of lakes.

2.2. Environmental Sensing Data

According to different environmental sensing devices, this paper divides the environmental sensing data into ambient wireless sensor monitoring data and environmental sensing video surveillance data. The former is based on environmental sensing data from wireless sensors, while the latter is based on the video surveillance cameras. Environmental sensing data meet the multi-dimensional variable form $F = F(x_1, x_2, x_3, \ldots, x_n)$, and can be expressed as a single-valued five-dimensional function $Vis = f(x, y, z, t, D)$ in the DT world of lakes. The first three dimensions represent spatial dimensions, characterized by longitude, latitude, and height. The symbol “t” stands for time, while “D” represents the collection of monitoring indicators and monitoring values. Furthermore, “D” may be expressed as $D = \{[m_1, v_1], [m_2, v_2], [m_3, v_3], [m_4, v_4], \ldots\}$. It is defined as a collection of monitoring indicators and monitoring values, where “m” denotes the environmental monitoring indicator, and “v” represents the monitoring value of the indicator. Within the DT world, environmental sensing data are converted into vector form, raster form, or the integrated grid format. Moreover, “x” and “y” coordinates or cells are used to represent the spatial distribution characteristics of data. When there is a time variable “t”, the characteristics of the temporal dynamics are represented by the time axis or by the changes in shape, size, and color.
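As a rough illustration of this five-dimensional form (three spatial coordinates, time, and a set of indicator and value pairs), a single observation could be held in a small record. The sketch below is only an assumption about how such a record might look: the class name `SensingRecord`, the field names, and the sample values are hypothetical and are not taken from the system described in this paper.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SensingRecord:
    """One observation: position (x, y, z), time t, and indicator/value pairs.

    All names here are illustrative, not the paper's actual data schema.
    """
    x: float  # longitude in degrees
    y: float  # latitude in degrees
    z: float  # height in metres
    t: str    # observation time, here an ISO 8601 string
    # Maps a monitoring indicator m to its monitoring value v.
    indicators: Dict[str, float] = field(default_factory=dict)

    def value(self, indicator: str) -> float:
        """Return the monitoring value v recorded for indicator m."""
        return self.indicators[indicator]


# Made-up sample values, for illustration only.
record = SensingRecord(
    x=116.0, y=29.1, z=15.0, t="2022-08-30T08:00:00",
    indicators={"water_temperature": 29.4, "dissolved_oxygen": 7.1},
)
```

Keeping the indicator set as a mapping lets scalar indicators (such as water temperature) and the components of vector indicators (such as wind speed and direction) share one record type.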

2.2.1. Ambient Wireless Sensor Monitoring Data

Ambient wireless sensor monitoring data represent a four-dimensional array containing latitude and longitude coordinates (“x” and “y”), monitoring time (“t”), and a set of monitoring indicators and monitoring values (“D”). Ambient wireless sensor monitoring data of lakes contain air quality data (PM2.5, CO2, SO2, etc.), meteorological data (temperature, humidity, air pressure, wind direction, etc.), and hydrological and water quality data (water temperature, dissolved oxygen, chlorophyll, turbidity, etc.). Moreover, the monitoring indicators and values constitute the dataset “D”, which includes common scalar data such as water temperature and dissolved oxygen, as well as vector data such as wind direction and wind speed. Spatially, the monitoring stations are discretely distributed; each generates real-time values, which become historical data once recorded and thus constitute long-term continuous data. From the perspective of the attributes and patterns of the data, the sensing data include spatial and temporal dimensions and attribute dimensions. The monitoring stations distributed across the sensing area simultaneously produce their independent data. Furthermore, time series monitoring data are constantly generated, while data from the same monitoring site change over time, showing evident time series characteristics.

Environmental sensing and monitoring stations are discretely distributed in the sensing region. The visual environment based on the two-dimensional map cannot directly show the continuous spatial changes in a lake’s ecological environment. Spatial interpolation converts discrete values of monitoring stations into continuously distributed scalar fields, which can be used for spatial prediction and the visualization of lake environmental monitoring data. The wind field data are vector-type data with velocity and direction. The particle technology dynamically expresses the motion state of the vector data in real time and shows the changes in the real-time wind field in Poyang Lake. In addition, the vector field with continuous changes may be generated by combining changes in temporal data.

2.2.2. Environmental Sensing Video Surveillance Data

Through video surveillance technology, the video surveillance system detects, guards, displays, and records scenes in real time and plays back historical images. Video surveillance technology is a pivotal part of the Internet of Things. The real-time videos are parsed and stored as video streams. In addition, a scheduled task periodically saves the monitoring images of the lake in MP4 format. In other words, the environmental sensing video surveillance data represent the time series of changing video images. Based on the historical surveillance video data, the water level, biological activities, and other environmental changes within the research area are viewed according to the time series changes. The environmental sensing video surveillance data are gathered from the monitoring stations at the lakes. From the perspective of multi-dimensional attributes, video surveillance data contain the coordinate position (“x”, “y”) and the time “t”, where “t” represents the time change in the monitoring video. A video image with long time series changes is formed after saving, showing the characteristics of the time sequence.

2.3. Visualization Methods and Applications

According to the data type characteristics, different visualization methods process the coordinates of environmental sensing data and match the coordinates of virtual space, so that the data can be displayed from multiple angles in the DT world. For time-varying data, this study uses charts that better show the time-varying characteristics. For scalar data, a regular grid is generated by the interpolation method, while the scalar field is formed after matching with the coordinates of the virtual DT space. Furthermore, vector data have position and direction, and may be visualized in the DT world through a vector field. Lastly, real-time video surveillance data illustrate the actual spatial location of video surveillance and show its status in the actual physical space.

2.3.1. Visualization of Ambient Wireless Sensor Monitoring Data

Visualization of Scalar Data Using Spatial Interpolation

Spatial interpolation is the process of estimating values at unsampled locations in a region from existing sampling data. The density and distribution of wireless sensor monitoring stations in the Poyang Lake region are not uniform. Therefore, to accurately illustrate the spatial distribution of environmental monitoring factors in the DT world, it is necessary to form a regular grid data model using the interpolation method. The discrete environmental monitoring data are transformed into continuous data surfaces for visual display in the DT world of Poyang Lake.

The Kriging interpolation method is also known as the local estimation or local spatial interpolation method. This method considers the spatial distribution of spatial attributes and may be used to visualize the scalar data of Poyang Lake. In order to determine the weight of influence of each sampling point, all points within the influence range are fitted based on variograms. Afterward, the attribute values of the interpolation points are estimated via a sliding average. The Kriging interpolation formula is as follows:

$$\hat{Z}(s_0) = \sum_{i=1}^{n} \lambda_i Z(s_i)$$

In the formula above, $Z(s_i)$ represents the measured value of the point i, while $\lambda_i$ denotes the weight of the ith point, $s_0$ represents the position of the interpolation point, and $n$ is the number of sampling points.

The Kriging interpolation method employs semi-variograms to express the spatial autocorrelation and variability of its sampling points. Furthermore, its interpolation weight is based on the distance between monitoring points, the predicted location, the overall spatial arrangement of monitoring points and the fitting model, which achieves an unbiased estimation of regional variables in the interpolation region. The implementation steps are as follows:

(1) Prepare the data and initialize the canvas. First, convert the monitoring data into the JSON format as a 4D array Vt = [x, y, v, t]. Then, calculate and generate the regular grid and boundary from the GeoJSON file of the research area. Finally, load the scalar data from Vis.

(2) Select a model to create a variogram. Select a Gaussian, exponential, or spherical model, train it on the monitoring dataset, and return the variogram object.

(3) Use the variogram to spatially interpolate the generated grid elements. The returned variogram object is used to assign predicted values to the grid elements within the area described by the GeoJSON file.

(4) Data mapping. Lastly, map the resulting grid onto the initialized canvas so that it is displayed in the DT world.
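The steps above can be sketched in a few lines of Python. This is a minimal ordinary Kriging sketch under stated assumptions, not the implementation used in this study: the exponential variogram uses fixed, hypothetical parameters (`nugget`, `sill`, `range_`) instead of being fitted to data as in step (2), a rectangular bounding box stands in for the GeoJSON boundary of step (1), and all function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def variogram(h: float, nugget: float = 0.0, sill: float = 1.0,
              range_: float = 3.0) -> float:
    """Exponential semi-variogram with fixed (not fitted) parameters."""
    if h == 0.0:
        return 0.0
    return nugget + (sill - nugget) * (1.0 - math.exp(-3.0 * h / range_))


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def kriging_weights(stations: Sequence[Point], target: Point) -> List[float]:
    """Ordinary Kriging weights; the extra row/column forces them to sum to 1."""
    n = len(stations)

    def dist(p: Point, q: Point) -> float:
        return math.hypot(p[0] - q[0], p[1] - q[1])

    a = [[variogram(dist(p, q)) for q in stations] + [1.0] for p in stations]
    a.append([1.0] * n + [0.0])
    b = [variogram(dist(p, target)) for p in stations] + [1.0]
    return solve(a, b)[:n]  # drop the Lagrange multiplier


def krige(stations: Sequence[Point], values: Sequence[float],
          target: Point) -> float:
    """Predicted value at target: weighted sum of the station values."""
    weights = kriging_weights(stations, target)
    return sum(w * v for w, v in zip(weights, values))


def krige_grid(stations: Sequence[Point], values: Sequence[float],
               bbox: Tuple[float, float, float, float],
               nx: int, ny: int) -> List[List[float]]:
    """Predict on a regular nx-by-ny grid over bbox = (xmin, ymin, xmax, ymax).

    Requires nx >= 2 and ny >= 2; rows run from ymin to ymax.
    """
    xmin, ymin, xmax, ymax = bbox
    grid = []
    for j in range(ny):
        y = ymin + (ymax - ymin) * j / (ny - 1)
        grid.append([
            krige(stations, values, (xmin + (xmax - xmin) * i / (nx - 1), y))
            for i in range(nx)
        ])
    return grid
```

With a zero nugget, the prediction at a station location reproduces the measured value, which is a convenient sanity check before mapping the grid onto the canvas. Stations at identical coordinates would make the linear system singular, so duplicates need to be merged beforehand.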

Construction of a Vector Field Using Particle Technique

Web 3D dynamic visualization based on particle technology can construct a vector field model. It interacts with geospatial data to dynamically simulate the changing trend of the vector field in the lakes. Particle technology uses light intensity and color information to represent changes in the vector field. According to the speed and direction, vector data can be visualized through arrows, dynamic moving particles, or streamlines in the DT world. The basic unit of the particle system method is a particle. Each particle has physical and image attributes, which change dynamically over its life cycle. Vector field visualization relates particle properties to vector properties: when simulating the vector field, the visualization maps the velocity vector onto the dynamic properties of particle motion. The stretching and movement of particle trajectories are rendered by updating existing trajectories and drawing new segments, thus conveying the dynamic flow process of vector data.

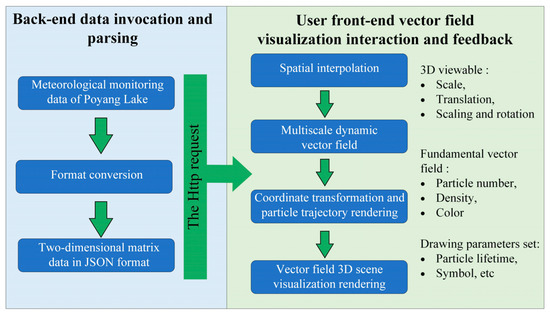

Furthermore, meteorology is an important indicator for the environmental monitoring of the lakes. Wind data are presented as vector data with direction and velocity. Particle-based technology allows meteorological data to be dynamically represented by particles in DT worlds according to velocity and direction, thus constructing a wind field that expresses the vector movement observed in the real world. The algorithm flow of the Poyang Lake vector field visualization is provided in Figure 2.

Figure 2.

Monitoring data vector field visualization algorithm flow.

First, the obtained vector data (wind direction and wind speed) are converted into a two-dimensional array in a fixed JSON file, from which the initial vector field is built. Then, a grid with a given spatial resolution is constructed to cover the entire study area, and the bilinear interpolation method is used to calculate the direction and magnitude of the vector at each grid node. The interpolation results are stored as properties of the grid nodes and are used to generate the particles placed on the canvas. Each particle moves in accordance with the direction and velocity of the interpolated surface in order to visualize the vector field data in the DT world.
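The interpolation step can be illustrated with a short Python sketch. It assumes that wind direction follows the meteorological convention (the direction the wind blows from, in degrees clockwise from north) and that the field is stored as a row-major grid of (u, v) components; both are assumptions, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = Tuple[float, float]  # (u, v): eastward and northward components


def wind_to_uv(speed: float, direction_deg: float) -> Vector:
    """Convert speed and meteorological direction to (u, v) components."""
    rad = math.radians(direction_deg)
    return (-speed * math.sin(rad), -speed * math.cos(rad))


def bilinear(grid: List[List[Vector]], gx: float, gy: float) -> Vector:
    """Interpolate the vector at fractional grid position (gx, gy).

    grid is indexed as grid[row][col]; gx is the column position and gy
    the row position, both assumed to lie inside the grid.
    """
    x0, y0 = int(math.floor(gx)), int(math.floor(gy))
    x1 = min(x0 + 1, len(grid[0]) - 1)  # clamp at the right edge
    y1 = min(y0 + 1, len(grid) - 1)     # clamp at the last row
    fx, fy = gx - x0, gy - y0

    def lerp(a: float, b: float, t: float) -> float:
        return a + (b - a) * t

    out = []
    for k in range(2):  # interpolate u, then v
        near = lerp(grid[y0][x0][k], grid[y0][x1][k], fx)
        far = lerp(grid[y1][x0][k], grid[y1][x1][k], fx)
        out.append(lerp(near, far, fy))
    return (out[0], out[1])
```

Interpolating the u and v components separately, and not speed and direction, avoids the wrap-around problem at 0°/360°.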

Representing the spatio-temporal variation in the vector fields via particle trajectories requires a dynamic simulation of the particle trajectories considering their life cycle. Before a particle trajectory is drawn, coordinate transformation, particle period design, and particle trajectory symbol setting are required.

(1) Convert geographic coordinates to screen coordinates. The data coordinates in the initial vector field are geographical coordinates, which need to be converted to screen coordinates when drawing on the screen. The projection used is the Mercator projection.

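(2) Setting the particle life cycle. The moving velocity and position of the particle are obtained via interpolation of the initial vector field. Suppose that the drawing boundary of the wind vector field is ($x_{\min}$, $y_{\min}$, $x_{\max}$, $y_{\max}$) and the initial coordinates of particles are (x, y), then the coordinate formula of the randomly generated particles is

$$x = x_{\min} + \mathrm{rand}() \cdot (x_{\max} - x_{\min}), \qquad y = y_{\min} + \mathrm{rand}() \cdot (y_{\max} - y_{\min})$$

where $\mathrm{rand}()$ denotes a uniformly distributed random number in [0, 1).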

The particle life value (L) is assumed to be a random value within a certain range. Every time a particle updates its position coordinates, its life value will be reduced by one. Then, according to the initial position and updated position of the particle, the particle movement trajectory within the life cycle can be drawn in a virtual scene.

(3) Set the particle symbol. Each vertex of the object to be rendered in the scene contains attribute information such as normal vector, color, texture, and coordinates. These data streams are first supplied by the web program and then processed by the vertex shader and the fragment shader in turn. Finally, the frame buffer is drawn and output to an HTML5 Canvas for visual display.
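The first two steps can be sketched in Python as follows. The sketch assumes a spherical (Web) Mercator projection onto a world map of a given pixel size, a uniform random draw of the starting position inside the drawing boundary, and a vector field that already returns per-frame displacements in screen pixels; the names `mercator_to_screen`, `Particle`, `spawn`, and `step` are hypothetical. The shader-based rendering of step (3) is not covered.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Bounds = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def mercator_to_screen(lon_deg: float, lat_deg: float,
                       width: float, height: float) -> Tuple[float, float]:
    """Project lon/lat in degrees to pixels on a width-by-height world map."""
    x = (lon_deg + 180.0) / 360.0 * width
    lat = math.radians(lat_deg)
    merc = math.log(math.tan(math.pi / 4.0 + lat / 2.0))
    y = (1.0 - merc / math.pi) / 2.0 * height  # screen y grows downwards
    return (x, y)


@dataclass
class Particle:
    x: float
    y: float
    life: int  # remaining number of position updates


def spawn(bounds: Bounds, max_life: int, rng: random.Random) -> Particle:
    """Create a particle at a random position with a random life value."""
    x_min, y_min, x_max, y_max = bounds
    return Particle(
        x=x_min + rng.random() * (x_max - x_min),
        y=y_min + rng.random() * (y_max - y_min),
        life=rng.randint(1, max_life),
    )


def step(p: Particle, field: Callable[[float, float], Tuple[float, float]],
         bounds: Bounds, max_life: int,
         rng: random.Random) -> Optional[Tuple[float, float, float, float]]:
    """Advance one frame.

    Returns the trajectory segment (x0, y0, x1, y1) to draw, or None when
    the particle had expired and was respawned instead.
    """
    if p.life <= 0:
        fresh = spawn(bounds, max_life, rng)
        p.x, p.y, p.life = fresh.x, fresh.y, fresh.life
        return None
    x0, y0 = p.x, p.y
    u, v = field(x0, y0)
    p.x, p.y = x0 + u, y0 + v
    p.life -= 1  # each position update costs one unit of life
    x_min, y_min, x_max, y_max = bounds
    if not (x_min <= p.x <= x_max and y_min <= p.y <= y_max):
        p.life = 0  # left the drawing area: respawn on the next frame
    return (x0, y0, p.x, p.y)
```

Calling `step` once per animation frame for every particle and drawing the returned segments gives the trail effect; fading the previously drawn frame slightly before each draw is a common way to make older segments disappear.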

2.3.2. Visualization of Environmental Sensing Video Surveillance Data


The purpose of video scene spatialization is to construct the relationship between video image space and geographic space, so that image space information and geographic space information become associated. Fusing real-time surveillance video with the model enhances the realism of the virtual scene. Video fusion technology can be considered either as a branch of virtual reality technology or as a development stage of virtual reality. It refers to the matching and fusion of one or more video sequences to create a new dynamic virtual scene or model and achieve the fusion of virtual scenes and real-time video, i.e., virtual–real fusion. The integration of DT scenes and video can be implemented in three modes: video-centered mode, video billboard mode, and projection video mode. This paper uses the latter two modes. In the video billboard mode, the billboard facing the user is used to display the video in the DT scene [31,32,33]. On the other hand, in the projection video mode, the video stream is projected into the virtual scene and thus allows the user to watch it from any position in the scene.

Video Surveillance Data Visualization in the Billboard Mode

Within the DT world, the visualization of video surveillance data in the billboard mode requires three coordinate references, namely the screen coordinate system, the Cartesian coordinate system, and the spherical coordinate system. To keep the video picture facing the user, the coordinate position of the video window needs to be continuously monitored. After that, the screen coordinates should be transformed to the Cartesian coordinates and further into the spherical coordinates, in order to make sure that the video window changes with the change in the virtual scene (movement, rotation, and other operations). The specific process of transforming the Cartesian spatial coordinate system into the spherical coordinate system is described by Po et al. [34].
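To make the second conversion concrete, the following Python sketch converts between an Earth-centred Cartesian frame and spherical coordinates (longitude, latitude, radius). It treats the globe as a perfect sphere, which is a simplification: it is not the procedure of [34], and a web globe library would normally use an ellipsoidal conversion. The function names are hypothetical.

```python
import math
from typing import Tuple


def cartesian_to_spherical(x: float, y: float,
                           z: float) -> Tuple[float, float, float]:
    """Return (longitude_deg, latitude_deg, radius) for a Cartesian point."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("the origin has no defined longitude or latitude")
    lon = math.degrees(math.atan2(y, x))
    lat = math.degrees(math.asin(z / r))
    return (lon, lat, r)


def spherical_to_cartesian(lon_deg: float, lat_deg: float,
                           r: float) -> Tuple[float, float, float]:
    """Inverse conversion, used to place the video window in the scene."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (
        r * math.cos(lat) * math.cos(lon),
        r * math.cos(lat) * math.sin(lon),
        r * math.sin(lat),
    )
```

In a billboard setting, the forward conversion would be re-run whenever the camera moves or rotates, so that the video window stays attached to the same geographic position.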

Video Projection in a Virtual Environment

Surveillance images are transmitted through the IoTs and then returned to the back end in the form of video streams. Real-time video streams are loaded using the representational state transfer (REST) interface. Then, a fast channel between the browser and the server is established using the WebSocket protocol, so that the two can directly transmit data to each other. Lastly, the code stream is decoded and re-encoded into a format that Web Real-Time Communications (WebRTC) can recognize, and it is sent to the client using the WebRTC protocol stack. The IP address and port number are configured for access. The video stream data are then processed to form a video texture, and the texture mapping method [35] is used to project the video frames onto the corresponding virtual geographic space, achieving a deep fusion of the video image and the virtual space.

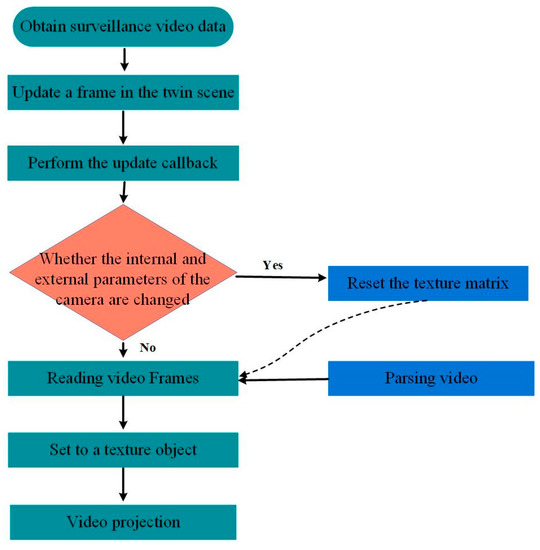

Video fusion simulates the position and attitude of a real network camera in the three-dimensional scene; the position can be obtained using a mapping method. The virtual camera attitude is defined by establishing a spatial Cartesian coordinate system at its center point and rotating it around the X, Y, and Z axes to generate the roll angle, pitch angle, and yaw angle. Generally, the roll angle is set to 0° in order to keep the video level. Scene and video fusion relies on feature matching between the surveillance video images obtained by a network camera and views of the three-dimensional scene taken from different perspectives at the same location. The best matched images are selected and the visual angle parameters of the virtual camera in the corresponding virtual scene are obtained. These parameters are then used to replace the attitude parameters of the real scene to achieve video projection. The flowchart of the fusion of the video image and DT scene is illustrated in Figure 3. The texture matrix is calculated according to the internal and external parameters of the camera, while the video image is fused into the virtual scene through the projection texture technology. Moreover, the texture data are constantly updated in a callback function to achieve the fusion of video and virtual environment.
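The attitude definition can be illustrated with a small Python sketch that builds a rotation matrix from yaw, pitch, and roll. The composition order (yaw about Z, then pitch about Y, then roll about X), the right-handed sign convention, and the choice of the local X axis as the viewing direction are assumptions made for illustration; 3D engines differ on these conventions, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def rotation_matrix(yaw_deg: float, pitch_deg: float,
                    roll_deg: float = 0.0) -> Matrix:
    """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) as a 3x3 matrix.

    roll defaults to 0 degrees, which keeps the projected video level.
    """
    yaw, pitch, roll = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def view_direction(yaw_deg: float, pitch_deg: float) -> Tuple[float, float, float]:
    """Direction of the rotated local X axis (assumed camera forward axis)."""
    r = rotation_matrix(yaw_deg, pitch_deg)
    return (r[0][0], r[1][0], r[2][0])
```

Once the best matched view has been found, its yaw and pitch would be fed into such a matrix to orient the virtual camera used for projecting the video texture.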

Figure 3.

Flowchart of video image and digital twin scene fusion.

3. Poyang Lake Case Study

Poyang Lake is the largest freshwater lake in China (115°47′E~116°45′E, and 28°22′N~29°45′N), situated in northern Jiangxi Province. It is vast, and its water area fluctuates seasonally throughout the year. The Poyang Lake water system is an independent unit, with a drainage area of 162,000 km². Furthermore, Songmen Mountain divides Poyang Lake into two parts. The lake is 173 km long, with an average width of 16.9 km; it is 74 km wide at its widest point and 3 km wide at its narrowest point. Moreover, Poyang Lake has a flat lakebed, higher in the southwest and lower in the northeast, with micro-topography and rich biodiversity. The lake is also the largest wintering ground for migratory birds in Asia, and human activity around it is frequent. The establishment of the Poyang Lake DT has practical significance for improving the digital management level of the lake, simulating its ecological evolution, and repairing its ecological environment. Through the construction of Poyang Lake’s DT world, its physical space can be described, monitored, forecasted, rehearsed, and pre-planned.

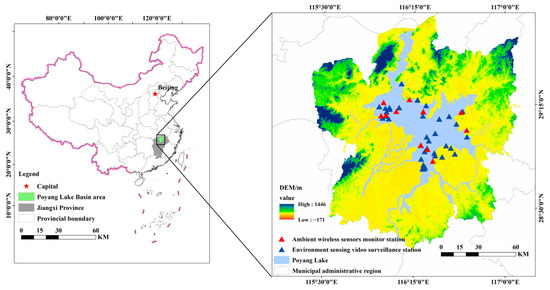

The DT world of Poyang Lake is an important part of the “monitoring and early warning platform of Poyang Lake” constructed by the Jiangxi Natural Reserve Center, a public institution affiliated with the Jiangxi Forestry Administration (http://drc.jiangxi.gov.cn/art/2022/3/4/art_14665_3877838.html?from=groupmessage&isappinstalled=0 (accessed on 21 September 2022)). During early preparatory work, the Jiangxi Natural Reserve Center deployed station-based platforms and floating platforms equipped with wireless sensors to monitor the environment and with surveillance cameras to monitor migratory birds and water changes in real time. Figure 4 illustrates the distribution of sensors, where the red dots represent the locations of the ambient wireless sensor monitoring stations, while the blue dots represent the locations of the environmental sensing video surveillance stations. These sensing devices constantly generate new real-time environmental sensing data, which are then used in the construction of the DT world of Poyang Lake. Realizing the management and visualization of environmental sensing data is pivotal for the construction of the Poyang Lake twin world.

Figure 4.

Distribution of environmental sensing sites.

3.1. Poyang Lake Digital Twin World System

The DT world is the basic virtual geographic space for the visualization of the environmental sensing data of Poyang Lake. In general, digital scenes are constructed using Unity3D and Unreal Engine [36,37,38]. Although DT scenes built with these tools have good lighting and rendering effects, they are mostly stand-alone applications, which imposes certain limitations. The basic scene within the Poyang Lake twin world is instead constructed based on web technology, which has high rendering speed and solid visual effects. Relevant studies show that Cesium (https://cesium.com/platform/cesiumjs (accessed on 20 September 2022)) is the most reliable method for environment sensing data visualization in the web environment [39,40].

The DT world of Poyang Lake is the basis of environmental sensing data visualization. By superimposing a high-precision digital elevation model (DEM) and high-resolution image data, the study constructs a digital landscape with continuous terrain features. The global satellite images are taken from the online map service of Tianditu (China’s state-sponsored web mapping service). For the Poyang Lake region, high-precision DEM data with a resolution of 5 m are used, while the region’s satellite images come from the 2 m high-resolution satellite image data of the Jiangxi High Resolution Center. After data processing, release, and development, the environmental sensing data visualization system of the Poyang Lake DT world is formed, as illustrated in Figure 5. The right panel is used to visualize the multi-dimensional temporal data of air, meteorology, and hydrology. The architecture of the Poyang Lake digital twin world system is shown in Appendix A (Figure A1).

Figure 5.

Digital twin world environmental sensing data visualization system of Poyang Lake.

3.2. Visualization of Ambient Wireless Sensor Monitoring Data in Poyang Lake

3.2.1. Multi-Dimensional Temporal Visualization of Ambient Wireless Sensor Monitoring Data

Wireless environmental sensor monitoring data have multiple attributes and are multi-dimensional. Furthermore, the temporal trends of monitoring data can be seen through the charts, and different curves or colors can show different environmental changes.

The multi-dimensional temporal visualization function is implemented with ECharts (https://echarts.apache.org/zh/index.html (accessed on 20 September 2022)), an open-source interactive charting and visualization library.

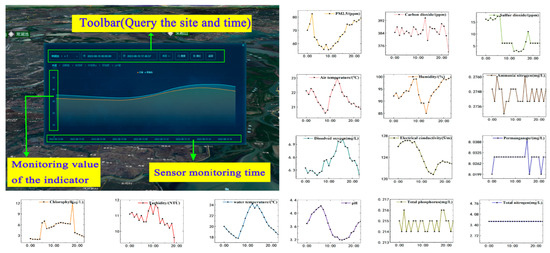

Monitoring stations are discretely distributed within each sub-lake area of Poyang Lake. Each monitoring station records a different set of monitoring attributes, and each attribute value has its own trend at each station. The results of wireless sensor monitoring data visualization based on multi-dimensional time series methods are shown in Figure 6. The figure illustrates the 24 h monitoring curves of the indicators recorded at the Changhuchi Monitoring Station on 30 August 2022. The upper left corner shows the results displayed in the DT world. The monitoring time is presented on the abscissa, while the physical quantity is presented on the ordinate. The toolbar above the chart is used to select the sites to compare, the monitoring type, and the historical time range; the chart then displays the time series curve for the selected range.

Figure 6.

Display of multi-dimensional temporal changes in monitoring data.
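As a minimal sketch of how per-station readings could be turned into such a multi-series time chart, the following Python builds a dictionary shaped like an ECharts option (a time `xAxis`, a value `yAxis`, and one `line` series per attribute) that could be serialized to JSON for the web client. The record layout, the function name `build_line_option`, and the sample values are hypothetical and are not taken from the Poyang Lake system.

```python
import json
from collections import defaultdict

def build_line_option(records, title):
    """Group (timestamp, attribute, value) records into one line series per attribute.

    `records` uses a hypothetical layout: an iterable of
    (iso_timestamp: str, attribute: str, value: float) tuples.
    """
    by_attr = defaultdict(list)
    for ts, attr, value in records:
        by_attr[attr].append([ts, value])

    series = []
    for attr in sorted(by_attr):
        # ISO 8601 timestamps in a uniform format sort chronologically as strings.
        points = sorted(by_attr[attr], key=lambda p: p[0])
        series.append({"name": attr, "type": "line", "data": points})

    return {
        "title": {"text": title},
        "tooltip": {"trigger": "axis"},
        "legend": {"data": [s["name"] for s in series]},
        "xAxis": {"type": "time"},    # monitoring time on the abscissa
        "yAxis": {"type": "value"},   # physical quantity on the ordinate
        "series": series,
    }

# Made-up sample readings, for illustration only.
sample = [
    ("2022-08-30T01:00:00", "water_temperature", 29.1),
    ("2022-08-30T00:00:00", "water_temperature", 29.4),
    ("2022-08-30T00:00:00", "air_temperature", 31.0),
]
option = build_line_option(sample, "Station readings (illustrative)")
option_json = json.dumps(option)  # a web client could pass this to chart.setOption(...)
```

Attributes with different units would normally be assigned to separate y-axes; a single axis is used here only to keep the sketch short.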

3.2.2. Scalar Data Visualization

Based on real-time monitoring data, interpolation can be used to visualize scalar fields and contours in the DT world. After interpolation, discretely distributed sampling data can be displayed in three-dimensional form as continuous distributions of monitored values, such as temperature fields. This process provides researchers with a more reliable means of analysis and helps reveal the internal spatial laws of environmental changes. At present, the environmental sensing data are mostly distributed discretely, with insufficient information to form a continuous expression. One direct way to express a 3D scalar field is the 3D iso-surface method, which directly reflects the 3D spatial distribution of environmental factors within the Poyang Lake region. Continuous spatial changes in temperature and rainfall can be interpolated using the Kriging interpolation method, resulting in the visualization of the temperature field, rainfall iso-surface, and other scalar fields within the DT world.

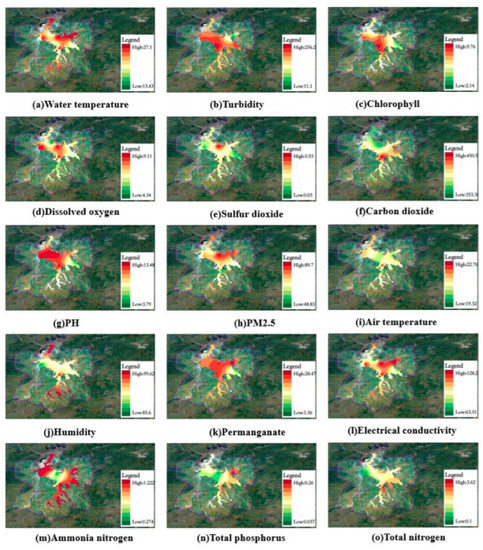

As shown in Figure 7, spatial interpolation yields a spatially continuous description of the scalar field monitoring results, presenting the spatial distribution of the predicted values of ecological environment monitoring indicators in the Poyang Lake region. We interpolated the monitored values of 15 ecological and environmental indicators in the DT world of Poyang Lake in order to obtain continuous scalar fields of these indicators across the Poyang Lake region. Red indicates high predicted values, while green indicates low predicted values.

Figure 7.

Visualization of the scalar fields of monitoring data.
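The interpolation step described above can be sketched as ordinary Kriging with an exponential variogram. This is an illustrative implementation under stated assumptions, not the system's actual code: the variogram model, its `sill` and `rng` parameters, the function names, and the sample values are all hypothetical, and in practice the variogram would be fitted to the monitoring data.

```python
import math

def exp_variogram(h, sill=1.0, rng=1.0):
    """Exponential variogram with zero nugget (an assumed model choice)."""
    return sill * (1.0 - math.exp(-h / rng))

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    No handling of singular systems (e.g. duplicate sample points).
    """
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def ordinary_kriging(points, values, target, sill=1.0, rng=1.0):
    """Predict the value at `target` from scattered (x, y) samples."""
    n = len(points)

    def gamma(p, q):
        return exp_variogram(math.dist(p, q), sill, rng)

    # Kriging system: variogram between samples plus the unbiasedness constraint
    # (weights sum to 1), enforced through a Lagrange multiplier.
    a = [[gamma(points[i], points[j]) for j in range(n)] + [1.0] for i in range(n)]
    a.append([1.0] * n + [0.0])
    b = [gamma(p, target) for p in points] + [1.0]
    weights = solve(a, b)[:n]  # the last unknown is the Lagrange multiplier
    return sum(w * z for w, z in zip(weights, values))

# Made-up station positions and readings, for illustration only.
stations = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
temps = [10.0, 20.0, 30.0]
estimate = ordinary_kriging(stations, temps, (1.0, 1.0), sill=1.0, rng=2.0)
```

Evaluating `ordinary_kriging` at every node of a regular grid would give the continuous field that is then colour-mapped; with a zero nugget, the prediction at a station location reproduces the station's own value.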

3.2.3. Vector Data Visualization

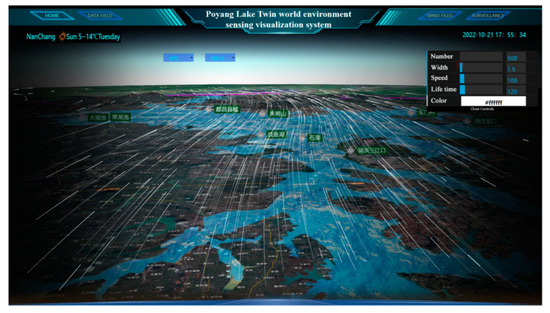

The results of vector field construction based on particle technology are provided in Figure 8. In this paper, wind direction and wind speed are used to generate the vector field, and texture mapping is carried out using Canvas in order to display the vector data in the Poyang Lake DT world. The vector monitoring data are linearly interpolated, and a regular grid is generated once this processing is complete. After color grading, the grid is rendered using Canvas and displayed in the DT world. The direction and speed of wind within the Poyang Lake region are represented by the vector field in the form of moving particles. To achieve a better display effect, the density of moving particles is automatically adjusted to different levels according to the scale of the map, and particles are regenerated as the scene changes.

Figure 8.

Visualization of the vector field of monitoring data.
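A minimal sketch of the particle approach described above is given below: wind speed and direction are converted to vector components, the components are linearly interpolated on a regular grid, and a particle is moved one step through the field. The meteorological direction convention, unit grid spacing, Euler time stepping, and all function names are assumptions made for illustration.

```python
import math

def wind_to_uv(speed, direction_deg):
    """Convert wind speed and direction to eastward (u) and northward (v) components.

    Assumes the meteorological convention: the direction is where the wind
    blows FROM, measured clockwise from north.
    """
    theta = math.radians(direction_deg)
    return -speed * math.sin(theta), -speed * math.cos(theta)

def bilinear(grid, x, y):
    """Linearly interpolate a regular grid (grid[row][col], unit spacing) at (x, y).

    Requires at least a 2 x 2 grid; positions outside the grid are clamped.
    """
    rows, cols = len(grid), len(grid[0])
    x = min(max(x, 0.0), cols - 1.0)
    y = min(max(y, 0.0), rows - 1.0)
    c0, r0 = min(int(x), cols - 2), min(int(y), rows - 2)
    fx, fy = x - c0, y - r0
    top = grid[r0][c0] * (1 - fx) + grid[r0][c0 + 1] * fx
    bottom = grid[r0 + 1][c0] * (1 - fx) + grid[r0 + 1][c0 + 1] * fx
    return top * (1 - fy) + bottom * fy

def advect(particle, u_grid, v_grid, dt):
    """Move one particle a single Euler step through the interpolated wind field."""
    x, y = particle
    return x + bilinear(u_grid, x, y) * dt, y + bilinear(v_grid, x, y) * dt
```

In a renderer, `advect` would be called for every particle on each animation frame, with the particle trail drawn to the canvas and coloured by the local wind speed.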

3.3. Visualization of Environmental Sensing Video Surveillance Data

Over 60 high-definition monitoring points are distributed in the Poyang Lake area, each equipped with high-definition cameras for real-time monitoring. Surveillance video is commonly displayed as an independent HTML element that is detached from its actual geographical location in the DT world. This paper instead matches the real-time monitoring video stream, in Flash Video (FLV) format, with its geographical location in the DT world. Traditional video surveillance display in the DT of lakes requires considerable experience and knowledge to locate the site of a video. Video fusion saves supervisors the time needed to locate the actual position of the video and improves the efficiency of lake supervision. The goal is to visualize the environmental sensing video surveillance data of Poyang Lake in the video billboard mode and the projection video mode, so as to fuse the video surveillance data with the scene of Poyang Lake’s DT world.

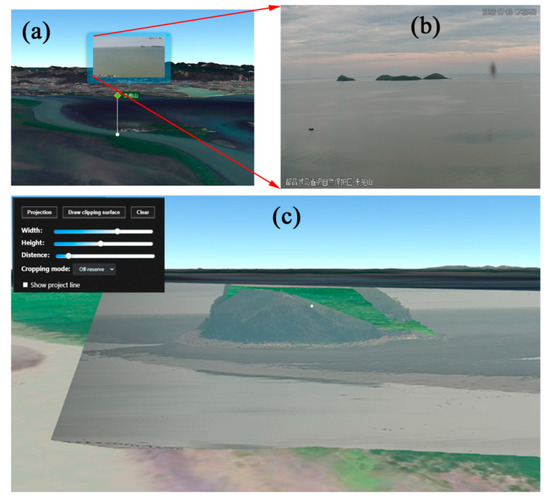

(1) Video billboard mode. In the video billboard mode, the video always faces the user and is displayed in the DT world at the coordinate position of the video. This paper displays the environmental sensing video surveillance data in the form of a popover. The video is bound to its virtual geospatial location, and the corresponding screen coordinates are constantly monitored; after this coordinate conversion, the billboard follows the camera movement of the scene. As illustrated in Figure 9a, regardless of the movement of the virtual environment, the real-time monitoring window always faces the user.

Figure 9.

Visualization of environmental sensing video surveillance data: (a) video visualization under the billboard mode in the DT world of Poyang Lake; (b) real-time monitoring picture of Poyang Lake; and (c) video visualization under the projection video mode in the DT world of Poyang Lake.

(2) Projection video mode. The projection video mode integrates the surveillance video data with the DT virtual scene. A surface entity is formed by selecting corresponding points (the same points identified in both the video and the virtual scene), and the video element is used as the material for texture mapping onto this surface. The main goal is to fuse the virtual geographic scene and the real-time surveillance video. Furthermore, blurring is applied to the edge of the video to improve the visual fusion of the video and the virtual scene. Figure 9c shows the visualization of the real-time video surveillance data in the projection video mode.
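Two small pieces of the modes above can be sketched in code. The first function shows the kind of world-to-screen conversion a billboard popover needs on every frame; the second softens the border of a projected video. Both are illustrations under stated assumptions rather than the system's implementation: the pinhole camera model, the camera-space axis convention, and the function names are hypothetical, and the edge treatment shown is an alpha feather (a linear opacity fade) swapped in for the blur mentioned in the text.

```python
import math

def project_to_screen(point_cam, fov_y_deg, width, height):
    """Project a point given in camera coordinates to pixel coordinates.

    Assumes a camera looking down -z with +x to the right and +y up.
    Returns None when the point is not in front of the camera.
    """
    x, y, z = point_cam
    if z >= 0:
        return None
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    aspect = width / height
    ndc_x = (f / aspect) * x / -z
    ndc_y = f * y / -z
    # Map normalized device coordinates in [-1, 1] to pixels; screen y grows downward.
    return (ndc_x + 1.0) * 0.5 * width, (1.0 - ndc_y) * 0.5 * height

def edge_alpha(u, v, feather=0.1):
    """Opacity for texture coordinate (u, v) in [0, 1]^2, fading to 0 at the border."""
    d = min(u, 1.0 - u, v, 1.0 - v)  # distance to the nearest edge
    return max(0.0, min(1.0, d / feather))
```

A popover would be repositioned to the pixel returned by `project_to_screen` whenever the scene camera moves, and hidden when the result is `None`.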

3.4. Comparison with Traditional Visualization of Environmental Sensing Data

At present, the methods for visualizing environmental sensor data in traditional lake-oriented DT worlds are relatively simple: wireless sensor monitoring data are mainly displayed through tables, line charts, pie charts, parallel coordinate charts, etc. This paper adopts different forms of visualization according to different data types. For example, charts are used to visualize multi-dimensional data and their sequential changes, while vector field visualization based on particle technology represents wind direction, wind speed, and other vector data. Furthermore, spatial interpolation is used to generate a continuous spatial scalar field representing scalar data. These methods enrich the visualization of wireless sensor monitoring data in the lake-oriented DT world and provide more detailed research tools for studies of the natural environment.

As for video surveillance data, video is usually displayed as a separate HTML window element in such systems. Locating where a surveillance video was taken then requires rich experience and a considerable understanding of how the monitoring points are deployed. Lake supervisors need additional training and experience to mentally map each surveillance image to the corresponding area in the real world. In the DT of lakes, real-time surveillance video is fused with virtual DT scenes, and virtual cameras are matched with surveillance video cameras, which can reduce the time required by lake supervisors, management departments, and researchers to locate the video surveillance points. Table 1 shows the difference between the traditional methods for visualizing the environmental sensing data in the DT of lakes and the visualization framework proposed in this paper.

Table 1.

Comparison between the traditional visualization of environmental sensing data and the method proposed in this study.

4. Discussion

4.1. Practical Application Value of Visualization Framework of Environmental Sensing Data

The visualization framework of the environmental sensing data in the DT world has practical application value. Namely, “The Poyang Lake Wetland ecosystem monitoring and early warning platform Phase II” construction project will begin in 2022. The project will be supervised by the Jiangxi Forestry Administration. Furthermore, as the construction unit, the Jiangxi Natural Reserve Construction Center will place multiple sensors and monitoring equipment in the Poyang Lake area to monitor its environment. As mentioned in Section 3 of this paper, the DT of Poyang Lake is a pivotal part of the project construction. Accessing and visualizing sensing data are prerequisites for the construction of Poyang Lake’s DT world.

The construction of the DT world requires environmental sensing data as a basis for representing changes in the real world. Accessing, integrating, and visualizing the environmental sensing data are necessary for the construction of the DT world of the lake’s environment. The visualization framework of environmental sensing data in the DT world of Poyang Lake provides multi-dimensional and dynamic access to, and visualization of, environmental sensing data. In addition, this framework provides a reference for the construction of DT scenes focused on the natural environment.

In summary, the visualization framework of environmental sensing data proposed in this paper has practical application value. It is significant for the construction of Poyang Lake’s DT world and the visualization of environmental sensing data within this DT world.

4.2. The Construction of Poyang Lake’s Digital Twin World Requires a Diversified Expression of Environmental Sensing Data

A DT world represents a three-dimensional space with “x”, “y”, and “z” coordinates. A DT world is not complete without access to real-time IoT sensing data. Ambient wireless sensor monitoring data are multi-dimensional dynamic data that combine coordinate positions, monitoring values, and time series changes. These data include “x” and “y” coordinate values of monitoring sites, as well as set “”. Moreover, since monitoring is conducted in real time, the data also contain the time parameter “t”. The actual picture of the environmental sensing video surveillance data contains relative coordinates, with the real-time monitoring picture being equivalent to the monitoring value “v”. Furthermore, the video surveillance data also contain a time attribute. Visualizing environmental sensing data in the DT world describes these data from multiple dimensions, thus intuitively showing their changes.
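One way to picture such a record is a small data structure holding a site position, a timestamp, and the monitored quantities. The sketch below is purely illustrative: the class name `Observation`, the `values` dictionary (an assumed stand-in for the monitored quantities), and the sample readings are hypothetical and do not describe the system's actual data model.

```python
from dataclasses import dataclass, field
from datetime import datetime

@dataclass
class Observation:
    """One reading from a monitoring site: position, time, and monitored values."""
    x: float                  # site coordinate "x"
    y: float                  # site coordinate "y"
    t: datetime               # time parameter "t"
    values: dict = field(default_factory=dict)  # attribute name -> monitored value

def series_for(observations, attribute):
    """Return the time-ordered (t, value) pairs of one attribute."""
    pairs = [(o.t, o.values[attribute]) for o in observations if attribute in o.values]
    return sorted(pairs, key=lambda p: p[0])

# Made-up readings, for illustration only.
obs = [
    Observation(116.0, 29.0, datetime(2022, 8, 30, 1), {"temperature": 29.1}),
    Observation(116.0, 29.0, datetime(2022, 8, 30, 0), {"temperature": 29.4, "ph": 7.8}),
]
temperature_series = series_for(obs, "temperature")
```

Grouping by attribute gives the time series shown in charts, while grouping by timestamp gives the scattered samples that spatial interpolation works from.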

At present, 3D visualization of environmental monitoring data is commonly applied in marine environmental monitoring. In general, marine monitoring data consist of multiple attributes and are characterized by high heterogeneity, high dimensionality, huge volume, and large spatial and temporal variability. The characteristics of the lake’s environmental sensing data are similar to those of marine monitoring data. Qin et al. represented ocean vector data through the particle method and scalar data through a heat map in a virtual Earth environment [29]. Moreover, He et al. proposed a web-based prototype system for vector and scalar data visualization [41]. In general, these studies visualize vector and scalar monitoring data through vector and scalar fields, which allows them to show continuous spatial changes in the monitoring data. In existing DT worlds, by contrast, multi-dimensional environmental sensing data are presented only through charts, a single form of display [42,43]. Within the DT world of Poyang Lake, the monitoring data are additionally displayed as scalar or vector fields, while the video data are integrated with the virtual environment, which provides a richer visualization of environmental sensing data. In terms of practical significance, the visualization framework proposed in this paper brings the environmental sensing data into the Poyang Lake twin world and provides richer visualization methods for these data, with good interaction, fast rendering speed, and good visual effect.

4.3. The Integration of Video Surveillance Data and Virtual Geographic Environment Saves Time for Supervisors

Traditional video surveillance systems have numerous problems in multi-point monitoring [44]. For one, supervisors need to follow multiple camera views simultaneously. Moreover, it is difficult to match scattered videos with their actual geographical locations, which makes global real-time monitoring of larger scenes impossible. When the scale of the surveillance system exceeds human surveillance capacity, further serious problems arise. Supervisors need to mentally map each monitoring picture to the corresponding area of the real world, which requires additional training and experience [45]. Video fusion technology projects real-time images onto a 3D model of the real scene, so that the images remain free of deformation and dislocation when the 3D model is tilted and rotated. Some scholars have suggested the concept of a video geographic information system (GIS), which combines video images with geographic information. The purpose of this concept is to establish a geographic index of video clips and generate hyper-videos that may be invoked within geographic environments [46,47,48].

To monitor the lake environment in real time, the lake management department has deployed a number of high-definition cameras at discrete locations around the lake. For example, in the Poyang Lake Monitoring and Warning Platform project, related departments have installed hundreds of cameras in the Poyang Lake region to observe the activities of migratory birds, humans, and aquatic life. These cameras are scattered across various areas, and it is difficult for non-professionals to estimate the physical location of monitored objects from video images. To monitor and protect the lake environment through video images, it is necessary to quickly locate the monitoring images in the real world and send feedback to the managers, who will design adequate responses. Therefore, within the digital twin world, monitoring images are fused with virtual scenes, combining real scenes with computer-generated virtual scenes. Because the real scene is enhanced with supplementary information and each monitoring picture corresponds to the virtual digital twin scene, the actual location of the surveillance camera can be found quickly, thus saving identification time and improving supervisors’ work efficiency.

5. Conclusions

This paper takes Poyang Lake as an example to construct a virtual DT world of the lake. It employs geospatial data and environmental sensing data to visualize the DT world of the research area. The paper proposes a visualization framework for the environmental sensing data in the DT world, and the DT world of Poyang Lake is constructed based on high-resolution remote sensing image data, high-precision DEM data, and the Tianditu network map service. The multi-dimensional dynamic changes in the monitoring data of Poyang Lake are presented through charts, which better represent their multi-dimensional characteristics. To predict values between monitoring sites and express spatial continuity, a continuous scalar field of the environmental monitoring space is generated via Kriging interpolation. Particle technology is used to visualize the vector data, dynamically displaying the motion of the Poyang Lake vector field and providing more visualization forms of monitoring data for ecological environment research. In addition, combining the real-time monitoring video with the virtual geographic environment merges the real scene with the virtual scene, thus enhancing the virtual reality [28]. However, although the current framework presents different types of lake environment sensing data in different ways, it has some limitations: dynamic monitoring data are shown only in charts, and there are few ways of visualizing dynamic changes over time. There are also some problems in the construction of the Poyang Lake twin world based on this framework. Video fusion is still not smooth, and the visual feature matching method needs to be optimized to better screen out effective matching points when the model texture differs from the real scene; in addition, the rendering of digital twin scenes on the web differs somewhat from that on the desktop side. In view of these limitations, further improvement is needed to achieve a better visualization effect.

In future work, additional effort is needed to enrich the visualization of multi-dimensional dynamic data in the framework and to show the multi-dimensional characteristics of the lake environment sensing data in the DT. At a later stage, the computing performance and efficiency of the framework need to be quantitatively evaluated, and the speed of data loading and image rendering should be tested. Furthermore, more consideration should be given to the authenticity of DT scene rendering in order to optimize the display effect of the various visualization methods. Dynamic time series data can be represented by animating the spatial field, with one frame for each time step of the field observation data; switching between spatial fields is then controlled by the time series animation [49]. Video processing still has certain limitations that need to be addressed in future work. Computer vision technology can extract real-time information from video sequences [50,51]. Even though this study contributes to the visualization of environmental sensing data in the DT of Poyang Lake, the DT is still incomplete. Namely, the scene construction needs to use high-precision model data (building information modeling data [52], oblique photography data [53], terrain data [54], etc.), and the mechanisms behind the movement process need to be more precise [16,17,55,56].

Author Contributions

Conceptualization, H.C. and C.F.; methodology, H.C.; validation, H.C., C.F. and X.X.; formal analysis, H.C.; data curation, C.F.; writing—original draft preparation, H.C.; writing—review and editing, X.X.; visualization, H.C.; supervision, X.X.; project administration, C.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 03 Special Project and 5G Program of Science and Technology Department of Jiangxi Province (grant no. 20212ABC03A09) and the Graduate Innovation Fund Project of the Education Department of Jiangxi Province (grant no. YC2022-B076).

Data Availability Statement

Not applicable.

Acknowledgments

Thanks to Jiangxi Protected Area Construction Center for data support. The authors would like to acknowledge all of the reviewers and editors.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

System architecture of the Poyang Lake digital twin world system.

References

- Shao, G.; Jain, S.; Laroque, C.; Lee, L.H.; Lendermann, P.; Rose, O. Digital Twin for Smart Manufacturing: The Simulation Aspect. In Proceedings of the 2019 Winter Simulation Conference (WSC), National Harbor, MD, USA, 8–11 December 2019; pp. 2085–2098. [Google Scholar]

- Lukač, L.; Fister, I.; Fister, I. Digital Twin in Sport: From an Idea to Realization. Appl. Sci. 2022, 12, 12741. [Google Scholar] [CrossRef]

- Guarino, A.; Malandrino, D.; Marzullo, F.; Torre, A.; Zaccagnino, R. Adaptive talent journey: Optimization of talents’ growth path within a company via Deep Q-Learning. Expert Syst. Appl. 2022, 209, 118302. [Google Scholar] [CrossRef]

- Pylianidis, C.; Osinga, S.; Athanasiadis, I.N. Introducing digital twins to agriculture. Comput. Electron. Agric. 2021, 184, 105942. [Google Scholar] [CrossRef]

- Kritzinger, W.; Karner, M.; Traar, G.; Henjes, J.; Sihn, W. Digital Twin in manufacturing: A categorical literature review and classification. IFAC-PapersOnLine 2018, 51, 1016–1022. [Google Scholar] [CrossRef]

- Caputo, F.; Greco, A.; Fera, M.; Macchiaroli, R. Digital twins to enhance the integration of ergonomics in the workplace design. Int. J. Ind. Ergon. 2019, 71, 20–31. [Google Scholar] [CrossRef]

- Verdouw, C.; Tekinerdogan, B.; Beulens, A.; Wolfert, S. Digital twins in smart farming. Agric. Syst. 2021, 189, 103046. [Google Scholar] [CrossRef]

- Wang, Z.K.; Jia, W.A.; Wang, K.N.; Wang, Y.C.; Hua, Q.Z. Digital twins supported equipment maintenance model in intelligent water conservancy. Comput. Electr. Eng. 2022, 101, 108033. [Google Scholar] [CrossRef]

- Smarsly, K.; Dragos, K.; Kolzer, T. Sensor-integrated digital twins for wireless structural health monitoring of civil infrastructure. Bautechnik 2022, 99, 471–476. [Google Scholar] [CrossRef]

- Fu, S.H.; Wan, Z.P.; Lu, W.F.; Liu, H.C.; Zhang, P.E.; Yu, B.; Tan, J.M.; Pan, F.; Liu, Z.G. High-accuracy virtual testing of air conditioner’s digital twin focusing on key material’s deformation and fracture behavior prediction. Sci. Rep. 2022, 12, 12432. [Google Scholar] [CrossRef]

- Ammar, A.; Nassereddine, H.; AbdulBaky, N.; AbouKansour, A.; Tannoury, J.; Urban, H.; Schranz, C. Digital Twins in the Construction Industry: A Perspective of Practitioners and Building Authority. Front. Built Environ. 2022, 8, 4671. [Google Scholar] [CrossRef]

- Diaz, R.G.; Yu, Q.T.; Ding, Y.Z.; Laamarti, F.; El Saddik, A. Digital Twin Coaching for Physical Activities: A Survey. Sensors 2020, 20, 5936. [Google Scholar] [CrossRef] [PubMed]

- Lettieri, N.; Guarino, A.; Malandrino, D.; Zaccagnino, R. The sight of Justice. Visual knowledge mining, legal data and computational crime analysis. In Proceedings of the 2021 25th International Conference Information Visualisation (IV), Sydney, Australia, 5–9 July 2021; pp. 267–272. [Google Scholar]

- Huang, J.; Mao, F.; Xu, W.B.; Li, J.; Lei, T.Z. Implementation of large area valley simulation system based on VegaPrime. J. Syst. Simul. 2006, 18, 2819–2823+2831. [Google Scholar]

- Yan, D.; Jiang, R.; Xie, J.; Wang, Y.; Xiaochun, L.I. Research on Water Resources Monitoring System of Weihe River Basin Based on Digital Globe. Comput. Eng. 2019, 4, 49–55. [Google Scholar]

- Qiu, Y.G.; Duan, H.T.; Xie, H.; Ding, X.K.; Jiao, Y.Q. Design and development of a web-based interactive twin platform for watershed management. Trans. Gis 2022, 26, 1299–1317. [Google Scholar] [CrossRef]

- Eidson, G.W.; Esswein, S.T.; Gemmill, J.B.; Hallstrom, J.O.; Howard, T.R.; Lawrence, J.K.; Post, C.J.; Sawyer, C.B.; Wang, K.C.; White, D.L. The South Carolina Digital Watershed: End-to-End Support for Real-Time Management of Water Resources. Int. J. Distrib. Sens. Netw. 2010, 26, 1–8. [Google Scholar] [CrossRef]

- Zhao, L.H. Prediction model of ecological environmental water demand based on big data analysis. Environ. Technol. Innov. 2021, 21, 101196. [Google Scholar] [CrossRef]

- Jiang, P.; Xia, H.B.; He, Z.Y.; Wang, Z.M. Design of a Water Environment Monitoring System Based on Wireless Sensor Networks. Sensors 2009, 9, 6411–6434. [Google Scholar] [CrossRef]

- Breunig, M.; Bradley, P.E.; Jahn, M.; Kuper, P.; Mazroob, N.; Rosch, N.; Al-Doori, M.; Stefanakis, E.; Jadidi, M. Geospatial Data Management Research: Progress and Future Directions. ISPRS Int. J. Geo-Inf. 2020, 9, 95. [Google Scholar] [CrossRef]

- Wan, D.; Yin, S. Construction of Ecological Environment Information System Based on Big Data: A Case Study on Dongting Lake Ecological Area. Mob. Inf. Syst. 2021, 2021, 3885949. [Google Scholar] [CrossRef]

- Walker, J.D.; Letcher, B.H.; Rodgers, K.D.; Muhlfeld, C.C.; D’Angelo, V.S. An Interactive Data Visualization Framework for Exploring Geospatial Environmental Datasets and Model Predictions. Water 2020, 12, 2928. [Google Scholar] [CrossRef]

- Brendel, C.E.; Dymond, R.L.; Aguilar, M.F. An interactive web app for retrieval, visualization, and analysis of hydrologic and meteorological time series data. Environ. Model. Softw. 2019, 117, 14–28. [Google Scholar] [CrossRef]

- Steed, C.A.; Ricciuto, D.M.; Shipman, G.; Smith, B.; Thornton, P.E.; Wang, D.L.; Shi, X.Y.; Williams, D.N. Big data visual analytics for exploratory earth system simulation analysis. Comput. Geosci. 2013, 61, 71–82. [Google Scholar] [CrossRef]

- Chen, Y.B.; Zhang, T.; N, W.J.; Q, H. Preliminary Study on Key Technologies of Digital Twin of Three Gorges Reservoir Region. Yangtze River 2023, 1–19. [Google Scholar]

- Lu, S.; Fang, C.; Xiao, X. Virtual Scene Construction of Wetlands: A Case Study of Poyang Lake, China. ISPRS Int. J. Geo-Inf. 2023, 12, 49. [Google Scholar] [CrossRef]

- Zhou, Z.; Hu, D.; Liu, Y.; Chen, W.; Su, W. Visual Analytics of the Spatio-temporal Multidimensional Air Monitoring Data. Jisuanji Fuzhu Sheji Yu Tuxingxue Xuebao/J. Comput.-Aided Des. Comput. Graph. 2017, 29, 1477–1487. [Google Scholar]

- Zhu, Q.; Zhang, L.G.; Ding, Y.L.; Hu, H.; Ge, X.M.; Liu, M.W.; Wang, W. From real 3D modeling to digital twin modeling. Acta Geod. Cartogr. Sin. 2022, 51, 1040–1049. [Google Scholar] [CrossRef]

- Qin, R.F.; Feng, B.; Xu, Z.N.; Zhou, Y.S.; Liu, L.X.; Li, Y.N. Web-based 3D visualization framework for time-varying and large-volume oceanic forecasting data using open-source technologies. Environ. Model. Softw. 2021, 135, 104908. [Google Scholar] [CrossRef]

- He, Y.; Guo, J.C.; Zheng, X.L. From Surveillance to Digital Twin Challenges and recent advances of signal processing for the industrial Internet of Things. Ieee Signal Process. Mag. 2018, 35, 120–129. [Google Scholar] [CrossRef]

- Haan, G.d.; Scheuer, J.; Vries, R.d.; Post, F.H. Egocentric navigation for video surveillance in 3D Virtual Environments. In Proceedings of the 2009 IEEE Symposium on 3D User Interfaces, Lafayette, LA, USA, 14–15 March 2009; pp. 103–110. [Google Scholar]

- Wang, Y.; Krum, D.M.; Coelho, E.M.; Bowman, D.A. Contextualized videos: Combining videos with environment models to support situational understanding. IEEE Trans. Vis. Comput. Graph. 2007, 13, 1568–1575. [Google Scholar] [CrossRef]

- Wang, Y.; Bowman, D.; Krum, D.; Coelho, E.; Smith-Jackson, T.; Bailey, D.; Peck, S.; Anand, S.; Kennedy, T.; Abdrazakov, Y. Effects of Video Placement and Spatial Context Presentation on Path Reconstruction Tasks with Contextualized Videos. IEEE Trans. Vis. Comput. Graph. 2008, 14, 1755–1762. [Google Scholar] [CrossRef]

- Liu, P.; Gong, J.H.; Yu, M. Visualizing and analyzing dynamic meteorological data with virtual globes: A case study of tropical cyclones. Environ. Model. Softw. 2015, 64, 80–93. [Google Scholar] [CrossRef]

- Segal, M.; Korobkin, C.; Widenfelt, R.v.; Foran, J.; Haeberli, P. Fast shadows and lighting effects using texture mapping. In Proceedings of the 19th Annual Conference on Computer Graphics and Interactive Techniques, Chicago, IL, USA, 26–31 July 1992; 1992; pp. 249–252. [Google Scholar]

- Lee, A.; Chang, Y.S.; Jang, I. Planetary-Scale Geospatial Open Platform Based on the Unity3D Environment. Sensors 2020, 20, 5967. [Google Scholar] [CrossRef] [PubMed]

- Ouyang, S.G.; Wang, G.; Yao, J.Y.; Zhu, G.H.W.; Liu, Z.Y.; Feng, C. A Unity3D-based interactive three-dimensional virtual practice platform for chemical engineering. Comput. Appl. Eng. Educ. 2018, 26, 91–100. [Google Scholar] [CrossRef]

- Scorpio, M.; Laffi, R.; Teimoorzadeh, A.; Ciampi, G.; Masullo, M.; Sibilio, S. A calibration methodology for light sources aimed at using immersive virtual reality game engine as a tool for lighting design in buildings. J. Build. Eng. 2022, 48, 103998. [Google Scholar] [CrossRef]

- Hunter, J.; Brooking, C.; Reading, L.; Vink, S. A Web-based system enabling the integration, analysis, and 3D sub-surface visualization of groundwater monitoring data and geological models. Int. J. Digit. Earth 2016, 9, 197–214. [Google Scholar] [CrossRef]

- Muller, R.D.; Qin, X.D.; Sandwell, D.T.; Dutkiewicz, A.; Williams, S.E.; Flament, N.; Maus, S.; Seton, M. The GPlates Portal: Cloud-Based Interactive 3D Visualization of Global Geophysical and Geological Data in a Web Browser. PLoS ONE 2016, 11, e0150883. [Google Scholar] [CrossRef]

- He, Y.W.; Su, F.Z.; Du, Y.Y.; Xiao, R.L. Web-based spatiotemporal visualization of marine environment data. Chin. J. Oceanol. Limnol. 2010, 28, 1086–1094. [Google Scholar] [CrossRef]

- Ham, Y.; Kim, J. Participatory Sensing and Digital Twin City: Updating Virtual City Models for Enhanced Risk-Informed Decision-Making. J. Manag. Eng. 2020, 36, 04020005. [Google Scholar] [CrossRef]

- Karamouz, M.; Nokhandan, A.K.; Kerachian, R.; Maksimovic, C. Design of on-line river water quality monitoring systems using the entropy theory: A case study. Environ. Monit. Assess. 2009, 155, 63–81. [Google Scholar] [CrossRef]

- Naoyuki, K.; Yoshiaki, T. Video Monitoring System for Security Surveillance Based on Augmented Reality. 2002. Available online: https://www.semanticscholar.org/paper/Video-Monitoring-System-for-Security-Surveillance-Naoyuki-Yoshiaki/fd3d926bc2254f1feaf663494888207449eb65f0 (accessed on 18 January 2023).

- Milosavljevic, A.; Rancic, D.; Dimitrijevic, A.; Predic, B.; Mihajlovic, V. Integration of GIS and video surveillance. Int. J. Geogr. Inf. Sci. 2016, 30, 2089–2107. [Google Scholar] [CrossRef]

- Neumann, U.; Suya, Y.; Jinhui, H.; Bolan, J.; JongWeon, L. Augmented virtual environments (AVE): Dynamic fusion of imagery and 3D models. In Proceedings of the IEEE Virtual Reality, Los Angeles, CA, USA, 22–26 March 2003; pp. 61–67. [Google Scholar]

- Sawhney, H.; Arpa, A.; Kumar, R.; Samarasekera, S.; Aggarwal, M.; Hsu, S.; Nistér, D.; Hanna, K. Video Flashlights: Real Time Rendering of Multiple Videos for Immersive Model Visualization. In Proceedings of the 13th Eurographics Workshop on Rendering Techniques, Pisa, Italy, 26–28 June 2002; pp. 157–168.

- Lewis, P.; Fotheringham, S.; Winstanley, A. Spatial video and GIS. Int. J. Geogr. Inf. Sci. 2011, 25, 697–716.

- Xie, C.; Li, M.K.; Wang, H.Y.; Dong, J.Y. A survey on visual analysis of ocean data. Vis. Inform. 2019, 3, 113–128.

- Xiao, X.; Fang, C.; Lin, H. Characterizing Tourism Destination Image Using Photos’ Visual Content. ISPRS Int. J. Geo-Inf. 2020, 9, 730.

- Xiao, X.; Fang, C.; Lin, H.; Chen, J. A framework for quantitative analysis and differentiated marketing of tourism destination image based on visual content of photos. Tour. Manag. 2022, 93, 104585.

- Sun, J.; Mi, S.Y.; Olsson, P.O.; Paulsson, J.; Harrie, L. Utilizing BIM and GIS for Representation and Visualization of 3D Cadastre. ISPRS Int. J. Geo-Inf. 2019, 8, 503.

- Tao, F.; Xiao, B.; Qi, Q.L.; Cheng, J.F.; Ji, P. Digital twin modeling. J. Manuf. Syst. 2022, 64, 372–389.

- Qi, Q.L.; Tao, F.; Hu, T.L.; Anwer, N.; Liu, A.; Wei, Y.L.; Wang, L.H.; Nee, A.Y.C. Enabling technologies and tools for digital twin. J. Manuf. Syst. 2021, 58, 3–21.

- Qiu, Y.G.; Xie, H.; Sun, J.Y.; Duan, H.T. A Novel Spatiotemporal Data Model for River Water Quality Visualization and Analysis. IEEE Access 2019, 7, 155455–155461.

- Abdallah, A.M.; Rosenberg, D.E. A data model to manage data for water resources systems modeling. Environ. Model. Softw. 2019, 115, 113–127.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).