Abstract

Hyperspectral images taken from aircraft or satellites contain information from hundreds of spectral bands, within which lie latent lower-dimensional structures that can be exploited for classifying vegetation and other materials. A disadvantage of working with hyperspectral images is that, due to an inherent trade-off between spectral and spatial resolution, they have a relatively coarse spatial scale, meaning that single pixels may correspond to spatial regions containing multiple materials. This article introduces the Diffusion and Volume maximization-based Image Clustering (D-VIC) algorithm for unsupervised material clustering to address this problem. By directly incorporating pixel purity into its labeling procedure, D-VIC gives greater weight to pixels corresponding to a spatial region containing just a single material. D-VIC is shown to outperform comparable state-of-the-art methods in extensive experiments on a range of hyperspectral images, including land-use maps and highly mixed forest health surveys (in the context of ash dieback disease), implying that it is well-equipped for unsupervised material clustering of spectrally-mixed hyperspectral datasets.

1. Introduction

Hyperspectral images (HSIs) are images of a scene or object that store spectral reflectance at a hundred or more spectral bands per pixel [1,2,3]. HSI remote sensing data, which is generated continuously by airborne and space-borne sensors, has been used successfully for signal processing problems in fields including forensic medicine (e.g., age estimation of forensic traces [4]), conservation (e.g., species mapping in wetlands [5,6]), and ecology (e.g., estimating water content in vegetation canopies [7]). The high-dimensional characterization of a scene provided in remote sensing HSI data has motivated its use in material classification problems [8], wherein machine learning is used to separate pixels based on the constituent materials (including vegetation types, tree species, and plant health) within spatial regions [2,3,9].

Though hyperspectral imagery has become an essential tool across many scientific domains, material classification using HSI data faces at least two key challenges. First, because of an inherent trade-off between spectral and spatial resolution, HSIs are generated at a coarse spatial resolution [10,11,12,13,14,15]. One would prefer an HSI with both a high spatial resolution (so that individual pixels correspond to spatial regions containing just one material) and a high spectral resolution (to enable capacity for material classification) [11]. However, an increase in the spatial resolution of an HSI often comes at the cost of reducing the effective detection energy entering the recording spectrometer across each spectral band [10]. While this effect may at least partially be mitigated by increasing the aperture of the optical system underlying the spectrometer used to generate HSI data [16], high-aperture instruments generally also have high volume and weight [10]. As such, HSI data is typically generated at a coarse spatial resolution (roughly 1 m from drone, 3–10 m from aircraft, 30 m from space). Thus, though some high-purity pixels in an HSI may correspond to spatial regions containing predominantly just one material, other pixels are mixed, corresponding to spatial regions with many distinct materials [12,14]. A second challenge is that the generation of expert labels—often used for training supervised machine learning models—is generally impractical due to the large quantities of HSI data continuously produced by remote sensors [1]. To efficiently analyze unlabeled HSIs, one may use HSI clustering algorithms, which partition HSI pixels into groups of points sharing key commonalities [17]. These algorithms are unsupervised; i.e., ground truth labels are not used to provide a partition of an HSI [17]. 
Though clustering has become an important tool in the field of hyperspectral imagery [18,19,20,21,22,23,24,25,26,27,28,29,30,31], HSI clustering algorithms that do not directly account for the fact that HSI pixels are often spectrally mixed may fail to extract meaningful latent cluster structure [32,33].

This article introduces the Diffusion and Volume maximization-based Image Clustering (D-VIC) algorithm for unsupervised material classification (i.e., material clustering) of HSIs. D-VIC is the first algorithm to simultaneously exploit the high-dimensional geometry [19,34] and abundance structure [12,32] observed in HSIs for the clustering problem. In its first stage, D-VIC locates cluster modes: high-purity, high-empirical density pixels that are far in diffusion distance (a data-dependent distance metric [35]) from other high-purity, high-density pixels. These pixels serve as exemplars for all underlying material structures in the HSI. In its mode selection, D-VIC downweights high-density pixels that correspond to commonly co-occurring groups of materials. As such, D-VIC’s exploitation of spectrally mixed structure in HSI data [10,11,12,13] enables the selection of modes that better represent the material structure in the scene. After detecting cluster modes, D-VIC propagates modal labels to non-modal pixels in order of decreasing density and pixel purity. Since pixel purity is also incorporated into D-VIC’s non-modal labeling, D-VIC accounts for material abundance structure in the HSI during its entire labeling procedure. D-VIC is compared against classical and related state-of-the-art HSI clustering algorithms on three benchmark real HSI datasets and applied to the problem of unsupervised detection of a forest pathogen—ash dieback disease (Hymenoscyphus fraxineus) [36,37,38,39]—using real remote sensing HSI data. On each dataset, D-VIC produces competitive unsupervised labelings and, moreover, enjoys robustness to hyperparameter selection. Computationally, D-VIC scales quasilinearly in the size of the HSI, and its empirical runtime is competitive, suggesting it is well-suited to cluster large HSIs.

The rest of this article is structured as follows. Section 2 provides background on HSI clustering, diffusion geometry, and spectral unmixing. Section 3 motivates incorporating spectral unmixing into a nonlinear graph-based clustering framework and introduces D-VIC. Section 4 demonstrates the efficacy of D-VIC through substantial experiments on three real HSI datasets. Additionally, it is shown in Section 4 that D-VIC may be used for an unsupervised ash dieback disease detection problem using remotely sensed HSI data collected over a forest in Great Britain [40]. We conclude and offer directions for future work in Section 5. Finally, in Appendix A, we detail hyperparameter optimization.

2. Background

2.1. Background on Unsupervised HSI Clustering

HSI clustering algorithms partition an HSI, denoted $X = \{x_i\}_{i=1}^n \subset \mathbb{R}^D$ (interpreted as a point cloud of HSI pixels’ spectral signatures, with n pixels and D spectral bands), into K clusters of pixels. The partition, which we call a clustering of X, may be encoded in a labeling vector $\mathcal{C} \in \{1, 2, \dots, K\}^n$ such that $\mathcal{C}_i$ is the label assigned to the pixel $x_i$. Ideally, pixels from any one cluster are in some sense “related,” and pixels from any two clusters are “unrelated” [17,28,41,42]. Clustering algorithms are unsupervised, meaning that data points are labeled without the aid of any expert annotations or ground truth labels. This has motivated the development of algorithms explicitly built for material clustering using HSIs [18,19,20,21,22,23,24,25,26,27,28,29,30,31,43].

Though classical clustering algorithms such as K-Means and the Gaussian Mixture Model (GMM) [17] remain widely used in practice, these algorithms tend to perform poorly on HSIs for a number of reasons [18,19]. First, algorithms that rely on Euclidean distances are prone to the “curse of dimensionality” on datasets like HSIs with a high ambient dimension (i.e., the number of spectral bands is large) [44]. Second, HSIs are often spectrally mixed [10,11,12,13], and overlap may exist between clusters in Euclidean space [18]. A final complication is that classical algorithms generally assume that latent clusters in a dataset are approximately ellipsoidal groups of points that are well-separated in Euclidean space [17], but clusters in HSIs often exhibit nonlinear structure [34]. A simple toy dataset, visualized in Figure 1 [41], serves as an example of a dataset with a nonlinear structure. This dataset lacks a linear decision boundary between its latent clusters, and classical algorithms (K-Means and GMM [17]) could not learn its latent nonlinear cluster structure. HSIs often contain clusters that can only be separated using a nonlinear decision boundary [18]; thus, algorithms that rely solely on Euclidean distances are expected to perform poorly at material clustering on HSIs.

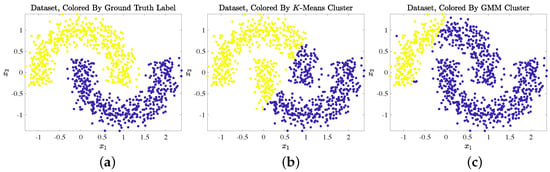

Figure 1.

Example of a toy dataset with nonlinear cluster structure: two interleaving half-circles. The idealized clustering, visualized in (a), separates each half-circle. Due to the lack of a linear decision boundary, however, both K-Means (b) and GMM (c) were unable to extract latent cluster structure from this simple nonlinear dataset. Both algorithms were run with 100 replicates.

The limitations outlined above have motivated the application and development of nonlinear graph-based algorithms for HSI clustering [18,19,20,21,22,23,24,31,41,43,45,46,47]. Graph-based algorithms rely on data-generated graphs; pixels are represented as nodes in the graph, and edges encode pairwise similarity between them. Highly connected regions in the graph may then be summarized using a nonlinear coordinate transformation [35,48,49,50], as is described in more detail in Section 2.2. Thus, a partition may be obtained by implementing a classical clustering algorithm on the dimension-reduced dataset. Due to their reliance on a graph representation of an HSI, these algorithms tend to be robust to small perturbations in the data and noise. Moreover, theoretical guarantees exist for the successful recovery of latent cluster structure, even if boundaries between latent clusters are nonlinear [42,51,52]. Despite their exhibited successes, algorithms that rely solely on graph structure tend to perform poorly on datasets containing multimodal cluster structure [42,52,53]; i.e., if a single cluster has multiple regions of high and low density. Importantly, this includes spectrally mixed HSIs, the classes of which often contain multiple co-occurring materials of varying abundances [10,11,12].

Deep neural networks and graph convolutional networks have recently become popular for material classification and clustering in HSIs because of their capacity to predict complex data sources [25,26,27,29,30,47,54,55]. While these algorithms tend to be highly accurate at material classification using real HSI data, many state-of-the-art deep models for HSI segmentation still rely in some part on training labels, whether via pre-training some or all of the network [54,55] or explicitly relying upon a small number of ground truth labels [25,26,47,56], and/or pseudo-labels [25,29]. Moreover, even fully unsupervised “deep clustering” algorithms [27,30] rely on deep neural networks, which have been shown to be prone to error from perturbations and noise [57,58] and whose success in unsupervised clustering is often due to data pre-processing steps rather than the unsupervised network learning meaningful features [59].

2.2. Background on Spectral Graph Theory

As overviewed in Section 2.1, graph-based clustering algorithms learn latent, possibly nonlinear cluster structure from HSIs by treating pixels as nodes in an undirected, weighted graph, where connections between pixels are encoded in a weight matrix $\mathbf{W} \in \mathbb{R}^{n \times n}$ [41,42,52,60]. In large datasets like HSIs, edges can be restricted to the first N $\ell^2$-nearest neighbors (i.e., Euclidean distance nearest neighbors) and given unit weight. In other words, $\mathbf{W}_{ij} = 1$ if $x_i$ is one of the N nearest neighbors of $x_j$ or vice versa, and $\mathbf{W}_{ij} = 0$ otherwise. Let $\mathbf{P} = \mathbf{D}^{-1}\mathbf{W}$, where $\mathbf{D}$ is the diagonal degree matrix defined by $\mathbf{D}_{ii} = \sum_{j=1}^n \mathbf{W}_{ij}$. The matrix $\mathbf{P}$ may be interpreted as the transition matrix for a Markov diffusion process on X and has a unique stationary distribution $\pi \in \mathbb{R}^{1 \times n}$ satisfying $\pi \mathbf{P} = \pi$ [35,42]. Define $\{(\lambda_k, \psi_k)\}_{k=1}^n$ to be the (right) eigenvalue-eigenvector pairs of $\mathbf{P}$, sorted in non-increasing order so that $1 = \lambda_1 > |\lambda_2| \geq \dots \geq |\lambda_n| \geq 0$. The first K eigenvectors of $\mathbf{P}$ often concentrate on the K most coherent subgraphs in the graph underlying $\mathbf{P}$, making these vectors useful for clustering [41].
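The graph construction described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `knn_transition_matrix` is hypothetical, the data are random stand-ins for pixel spectra, and the brute-force neighbor search is suitable only for small n (it is not the cover-tree search assumed later in the article).

```python
import numpy as np

def knn_transition_matrix(X, N):
    """Build the symmetric unit-weight kNN graph W and the Markov matrix P = D^{-1} W."""
    n = X.shape[0]
    # Pairwise squared Euclidean distances (brute force; fine for small n only).
    sq = np.sum(X**2, axis=1)
    dist2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(dist2, np.inf)          # a pixel is not its own neighbor
    nbrs = np.argsort(dist2, axis=1)[:, :N]  # indices of the N nearest neighbors
    W = np.zeros((n, n))
    rows = np.repeat(np.arange(n), N)
    W[rows, nbrs.ravel()] = 1.0
    W = np.maximum(W, W.T)                   # "or vice versa": symmetrize the graph
    deg = W.sum(axis=1)                      # diagonal of the degree matrix
    P = W / deg[:, None]                     # row-stochastic transition matrix
    pi = deg / deg.sum()                     # stationary distribution of the walk
    return W, P, pi

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 5))                 # synthetic stand-in for 60 pixels, 5 bands
W, P, pi = knn_transition_matrix(X, N=8)
```

Because the weight matrix is symmetric, the stationary distribution is proportional to node degree, which is why `pi` can be written down without an eigen-solver.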

2.2.1. Background on Diffusion Geometry

Diffusion distances are a family of data-dependent distance metrics which enable comparisons between points in the context of the Markov diffusion process encoded in $\mathbf{P}$ [35]. Diffusion distances have been successfully used in a number of applications (e.g., in gene expression profiling [61,62], data visualization [63,64], and molecular dynamics analysis [65,66,67]). Moreover, diffusion distances have been shown to efficiently capture low-dimensional structure in HSI data, resulting in excellent clustering performance [18,52].

Define $D_t(x_i, x_j) = \sqrt{\sum_{k=1}^n \frac{[(\mathbf{P}^t)_{ik} - (\mathbf{P}^t)_{jk}]^2}{\pi_k}}$ to be the diffusion distance at time $t \geq 0$ between pixels $x_i, x_j \in X$ [35,68,69]. Diffusion distances are nonlinear, data-dependent distance metrics that have a natural connection to the clustering problem [42,52]. To see this, note that $D_t(x_i, x_j)$ may be interpreted as the Euclidean distance between the ith and jth rows of $\mathbf{P}^t$, weighted according to $1/\pi$. If pixels from the same cluster share many high-weight paths of length t, but paths of length t between any two pixels from different clusters are relatively low weight, then the ith and jth rows of $\mathbf{P}^t$ are expected to be nearly equal for pixels $x_i$ and $x_j$ from the same cluster and very different if these pixels come from different clusters. So, the diffusion distance between points from the same cluster is expected to be small, and the diffusion distance between points from different clusters is expected to be large [42,52]. Diffusion distances can be efficiently computed using the eigendecomposition of $\mathbf{P}$: $D_t(x_i, x_j) = \sqrt{\sum_{k=1}^n |\lambda_k|^{2t} [(\psi_k)_i - (\psi_k)_j]^2}$ [35,68,69]. For t sufficiently large so that $|\lambda_k|^{2t} \approx 0$ for $k > \ell$, the sum in diffusion distances can be truncated past the ℓth term, yielding an accurate and efficient approximation of diffusion distances. Importantly, the relationship between diffusion distances and the eigendecomposition of $\mathbf{P}$ indicates that diffusion distances may be interpreted as Euclidean distances after nonlinear dimensionality reduction via the following dimension-reduced mapping of the ambient space into $\mathbb{R}^\ell$: $x_i \mapsto (|\lambda_1|^t (\psi_1)_i, |\lambda_2|^t (\psi_2)_i, \dots, |\lambda_\ell|^t (\psi_\ell)_i)$ [35,68,69].
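The equivalence between the row-wise definition of diffusion distances and their spectral form can be checked numerically on a small graph. The sketch below is illustrative only: the helper names `diffusion_map` and `pairwise_euclidean` and the toy ring graph are assumptions, and the right eigenvectors are normalized with respect to the stationary distribution (a normalization chosen here so that the two formulas agree exactly).

```python
import numpy as np

def diffusion_map(W, t, n_eigs=None):
    """Return coordinates whose Euclidean distances equal diffusion distances at time t."""
    deg = W.sum(axis=1)
    # S = D^{-1/2} W D^{-1/2} is symmetric and shares its eigenvalues with P = D^{-1} W.
    S = W / np.sqrt(np.outer(deg, deg))
    lam, V = np.linalg.eigh(S)
    order = np.argsort(-np.abs(lam))          # sort by |lambda|, largest first
    lam, V = lam[order], V[:, order]
    # Right eigenvectors of P, normalized so that sum_i psi_k(i)^2 pi_i = 1.
    Psi = np.sqrt(deg.sum()) * V / np.sqrt(deg)[:, None]
    if n_eigs is not None:                    # truncation: keep the leading terms only
        lam, Psi = lam[:n_eigs], Psi[:, :n_eigs]
    return Psi * np.abs(lam) ** t

def pairwise_euclidean(Y):
    diff = Y[:, None, :] - Y[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2))

# Small connected example graph: a ring of 12 nodes with one chord.
n = 12
W = np.zeros((n, n))
for i in range(n):
    W[i, (i + 1) % n] = W[(i + 1) % n, i] = 1.0
W[0, 6] = W[6, 0] = 1.0

t = 3
deg = W.sum(axis=1)
P = W / deg[:, None]
pi = deg / deg.sum()
Pt = np.linalg.matrix_power(P, t)
# Direct definition: rows of P^t compared in the 1/pi-weighted Euclidean norm.
D_direct = np.sqrt((((Pt[:, None, :] - Pt[None, :, :]) ** 2) / pi).sum(axis=2))
D_spectral = pairwise_euclidean(diffusion_map(W, t))
D_truncated = pairwise_euclidean(diffusion_map(W, t, n_eigs=4))
```

Truncating the expansion only drops nonnegative terms from the sum, so the truncated distances never exceed the exact ones; how close they are depends on how quickly the eigenvalue magnitudes decay at the chosen t.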

HSIs often encode well-defined latent multiscale cluster structures that can be learned by diffusion-based HSI clustering algorithms by varying the time parameter t in diffusion distances [52,60]. Indeed, smaller t values generally enable the detection of fine-scale local cluster structure, while larger t values enable the detection of coarse-scale global cluster structure. However, for algorithms that require K as an input, t must be tuned to correspond to the desired number of clusters. Thus, the choice of t must be carefully considered when clustering a dataset using an algorithm that relies on diffusion distances [52,60].

2.3. Background on Spectral Unmixing

Real-world HSI data is often generated at a coarse spatial resolution; thus, pixels may correspond to spatial regions containing multiple materials [70,71,72]. To learn the latent material structure from HSIs, spectral unmixing algorithms decompose each pixel’s spectrum into a linear combination of endmembers that encode the spectral signature of materials in the scene. The endmembers may be understood as “pure” material signatures. When representing a pixel as a linear combination of these endmembers, the coefficients of the linear combination indicate the relative abundance of materials within the spatial region corresponding to that pixel. Mathematically, a spectral unmixing algorithm learns $\mathbf{U} \in \mathbb{R}^{m \times D}$ (with rows $u_1, \dots, u_m$ encoding the spectral signatures of endmembers) and $\mathbf{A} \in \mathbb{R}^{n \times m}$ (with rows encoding abundances) such that $x_i \approx \sum_{j=1}^m \mathbf{A}_{ij} u_j$ for each $x_i \in X$ [72]. Usually, the entries of $\mathbf{A}$ are nonnegative and normalized so that $\sum_{j=1}^m \mathbf{A}_{ij} = 1$ for each i; hence, abundances are data-dependent features storing estimates for the relative frequency of materials in pixels. The purity of $x_i$, defined by $\eta(x_i) = \max_{1 \leq j \leq m} \mathbf{A}_{ij}$ [32], will be large if the spatial region corresponding to $x_i$ is highly homogeneous (i.e., containing predominantly just one material) and small otherwise. As such, pixel purity and spectral unmixing may be used to aid in the unsupervised clustering of HSIs [21,28,32].
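As a small worked example of the linear mixing model and of pixel purity, consider the following sketch. The abundance and endmember values are hypothetical numbers chosen purely for illustration; they are not taken from any dataset analyzed in this article.

```python
import numpy as np

# Hypothetical abundance matrix for 4 pixels and m = 3 endmembers (rows sum to one).
A = np.array([
    [0.90, 0.05, 0.05],   # nearly pure pixel
    [0.40, 0.35, 0.25],   # highly mixed pixel
    [0.10, 0.80, 0.10],
    [1/3, 1/3, 1/3],      # maximally mixed pixel
])
# Hypothetical endmember signatures over D = 5 bands (one row per endmember).
U = np.array([
    [0.1, 0.2, 0.6, 0.7, 0.7],
    [0.5, 0.5, 0.4, 0.3, 0.2],
    [0.9, 0.8, 0.3, 0.1, 0.1],
])

X = A @ U                 # linear mixing model: each pixel is a mixture of endmembers
purity = A.max(axis=1)    # purity of a pixel is its largest abundance
```

When abundances are nonnegative and sum to one, purity is bounded below by 1/m (attained by the maximally mixed pixel) and above by 1 (attained by a pixel containing a single material).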

Spectral unmixing has become an important tool in hyperspectral imagery, prompting its usage in a number of applications (e.g., image reconstruction [73,74,75], noise reduction [76,77,78], spatial resolution enhancement [79,80,81], supervised material classification [32,82,83], change detection [84,85,86,87], and anomaly detection [31,88,89]). The importance of spectral unmixing in remote sensing has motivated the development of many algorithms for this task, which we broadly summarize here; see surveys [12,90,91,92,93,94] for a more thorough overview. Geometric methods for spectral unmixing estimate endmembers by searching for points that form a simplex of minimal volume, subject to a constraint that at least some nearly pure pixels exist within the observed HSI pixels [12,14,19,70,95,96,97,98,99,100,101,102,103,104]. For highly mixed HSIs that lack pure pixels, statistical methods may be used [105,106,107,108,109]. These methods typically treat spectral unmixing as a blind source separation problem, and though they are often successful at this task, statistical algorithms are usually more computationally expensive [12]. Additionally, autoencoding methods learn latent spectral mixing structure by training neural networks that map pixel spectra to a lower-dimensional space that can be related to endmember and abundance matrices $\mathbf{U}$ and $\mathbf{A}$ [110,111,112,113,114,115,116,117,118]. Finally, while linear spectral unmixing is well-developed and widely used in practice, some nonlinear unmixing algorithms (including some relying on neural networks [111,115,119,120,121,122]) have been developed to account for nonlinear interactions between endmembers [111,115,119,120,122,123,124,125,126,127]. Nevertheless, many of these algorithms typically require training data or hyperparameter inputs, unlike many of the linear mixing models reviewed above [12].

Spectral unmixing is relevant to our paper as a way to determine cluster modes in an unsupervised setting. Below, we focus on two standard methods in unmixing but note that D-VIC is modular in this regard, and other unmixing algorithms could be used.

2.3.1. Background on the HySime Algorithm

Hyperspectral Signal Subspace Identification by Minimum Error (HySime) is a standard algorithm for estimating the number of materials m in X [128]. HySime assumes that each $x_i \in X$ is of the form $x_i = y_i + \xi_i$, where $y_i$ and $\xi_i$ model the signal and noise associated with $x_i$, respectively. If signal vectors are linear mixtures of m ground truth endmembers (i.e., $y_i = \sum_{j=1}^m \mathbf{A}_{ij} u_j$ for $i = 1, \dots, n$), then the set $\{y_i\}_{i=1}^n$ lies on an m-dimensional subspace of $\mathbb{R}^D$. With this motivation, HySime estimates the subspace dimension by balancing the error of projecting signal vectors onto their first m principal components with the amount of noise captured by those vectors’ orthogonal complement. Though other algorithms exist for estimating the number of materials in a scene using HSI data, many of these alternatives rely on hyperparameter inputs to estimate m or have large computational complexity [129]. For example, the ubiquitous virtual dimensionality, which relies on a Neyman–Pearson detection theory-based threshold to determine m [130], has been shown to be highly sensitive to small perturbations in pixel spectra and hyperparameter inputs [128]. In contrast, HySime is hyperparameter-free and can compute a high-quality, numerically stable estimate using only X in just $O(D^2 n)$ operations.

2.3.2. Background on the AVMAX Algorithm

Alternating Volume Maximization (AVMAX) is a spectral unmixing algorithm, requiring m as a parameter, that searches for vectors $u_1, \dots, u_m$ that produce an m-simplex of maximal volume, subject to the constraint that each $u_j$ lies in the convex hull of the dataset after Principal Component Analysis (PCA) dimensionality reduction: projecting pixel spectra onto the span of the first $m-1$ principal components [70]. This dimensionality reduction step is motivated by the fact that any vector in the affine hull of the m endmembers can always be expressed as $\mathbf{C}\alpha + \mathbf{d}$ for some $\alpha \in \mathbb{R}^{m-1}$, where $\mathbf{C}$ is related to the first $m-1$ principal components of X [70,131]; see Lemma 1 in [70] for details. Endmembers are optimized through multiple partial maximization procedures (i.e., keeping $m-1$ endmembers constant and optimizing for volume while varying the remaining endmember) until convergence [70]. AVMAX has become popular for spectral unmixing because of its strong performance guarantees and the rigor behind its optimization framework [12,70]. Indeed, in a noiseless, linearly mixed dataset containing the optimal endmember set, if each partial maximization problem in AVMAX converges to a unique solution, AVMAX is guaranteed to converge to the optimal endmember set [70]. Moreover, AVMAX can easily be modified to make it robust to random initialization; one can run multiple replicates of AVMAX in parallel and choose the endmember set with the largest volume. Once endmembers are learned, abundances may be computed using a nonnegative least squares solver: the ith row of $\mathbf{A}$ is $\arg\min_{a \in \mathbb{R}^m,\, a \geq 0} \| x_i - \mathbf{U}^\top a \|_2$ for each $x_i \in X$ [132].
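The objective that volume-maximization methods optimize can be made concrete with a short sketch. The code below is not AVMAX itself; it only evaluates the volume of a simplex with m vertices, assuming the candidate endmembers have already been reduced to m-1 dimensions. The function name `simplex_volume` and the example vertices are hypothetical.

```python
import math
import numpy as np

def simplex_volume(vertices):
    """Volume of the simplex spanned by m vertices in R^(m-1)."""
    V = np.asarray(vertices, dtype=float)
    m, dim = V.shape
    if dim != m - 1:
        raise ValueError("expected m vertices in R^(m-1)")
    edges = V[1:] - V[0]                       # (m-1) x (m-1) matrix of edge vectors
    return abs(np.linalg.det(edges)) / math.factorial(m - 1)

# Two candidate endmember sets for m = 3: the larger triangle has the larger volume.
unit_triangle = [[0, 0], [1, 0], [0, 1]]
shrunk_triangle = [[0, 0], [0.5, 0], [0, 0.5]]
# A candidate set for m = 4: the unit tetrahedron in R^3.
unit_tetrahedron = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Selecting among several replicates, as described above, then amounts to evaluating this volume for each returned endmember set and keeping the largest.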

3. Diffusion and Volume Maximization-Based Image Clustering

In spectrally mixed HSIs, any one pixel may correspond to a spatial region that contains many materials [12,14]. Thus, even state-of-the-art algorithms for unsupervised material clustering may perform poorly on mixed HSIs, failing to recover clusterings that can be linked to materials within the scene. Algorithms that do not directly incorporate a spectral unmixing step into their labeling may assign clusters corresponding to groups of materials rather than clusters corresponding to individual materials. Thus, additional improvements are needed to develop algorithms suitable for material clustering on mixed HSIs.

This section introduces the Diffusion and Volume maximization-based Image Clustering (D-VIC) algorithm (Algorithm 1) for unsupervised material clustering of HSIs. To learn material abundances, D-VIC first performs a spectral unmixing step: decomposing the HSI by learning the number of endmembers m using HySime [128], implementing AVMAX with that m-value to learn endmembers [70], and calculating abundances and purity through a nonnegative least squares solver [132]. As will become clear in Section 4, this estimate for pixel purity resulted in high-quality material clustering with D-VIC. Nevertheless, the choice of the algorithm used for spectral unmixing in D-VIC is quite modular, and future work may consider applying other endmember extraction algorithms [12,92,93,94,130] and/or abundance solvers that explicitly constrain estimates to sum to one [133,134]. D-VIC then estimates empirical density using a kernel density estimate (KDE) defined by $p(x) = \frac{1}{Z} \sum_{y \in NN_N(x)} \exp(-\|x - y\|_2^2 / \sigma_0^2)$, where $NN_N(x)$ is the set of N $\ell^2$-nearest neighbors of x in X, $\sigma_0 > 0$ is a KDE scale controlling the interaction radius between points, and Z is a constant normalizing $p(x)$ so that $\sum_{y \in X} p(y) = 1$. By construction, $p(x)$ will be large if the pixel x is close to its N $\ell^2$-nearest neighbors in X and small otherwise [18,42,135].
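A nearest-neighbor-restricted Gaussian KDE of this kind can be sketched as follows. The function name `knn_kde`, the synthetic two-group data, and the choice to exclude each point from its own neighbor set are assumptions made for illustration; the brute-force distance computation is suitable only for small n.

```python
import numpy as np

def knn_kde(X, N, sigma0):
    """Gaussian KDE restricted to each point's N nearest neighbors, normalized to sum to 1."""
    sq = np.sum(X**2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(dist2, np.inf)                 # assumption: exclude the point itself
    nn_dist2 = np.sort(dist2, axis=1)[:, :N]        # squared distances to the N nearest neighbors
    p = np.exp(-nn_dist2 / sigma0**2).sum(axis=1)
    return p / p.sum()                              # normalization constant Z

rng = np.random.default_rng(1)
dense = rng.normal(scale=0.1, size=(40, 3))         # tightly packed group of points
sparse = rng.normal(scale=2.0, size=(40, 3)) + 10.0 # diffuse group, far from the first
p = knn_kde(np.vstack([dense, sparse]), N=5, sigma0=0.5)
```

Points in the tightly packed group sit close to their nearest neighbors relative to the KDE scale and therefore receive most of the density mass, whereas points in the diffuse group receive very little.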

Algorithm 1: Diffusion and Volume maximization-based Image Clustering

Input: X (HSI), N (# nearest neighbors), $\sigma_0$ (KDE scale), t (diffusion time), K (# clusters)

Output: $\mathcal{C}$ (clustering)

To locate pixels that are both high-density and indicative of an underlying material, D-VIC calculates $\zeta(x) = \frac{2 \bar{p}(x) \bar{\eta}(x)}{\bar{p}(x) + \bar{\eta}(x)}$, where $\bar{p}(x) = p(x) / \max_{y \in X} p(y)$ and $\bar{\eta}(x) = \eta(x) / \max_{y \in X} \eta(y)$. Thus, $\zeta(x)$ returns the harmonic mean of $\bar{p}(x)$ and $\bar{\eta}(x)$, which are normalized so that density and purity are approximately at the same scale. By construction, $\zeta(x) \approx 1$ only at high-density, highly pure pixels x. In contrast, if a pixel x is either low-density or low-purity, then $\zeta(x)$ will be small. Importantly, $\zeta(x)$ downweights mixed pixels that, though high-density, correspond to a spatial region containing many materials. Thus, points with large $\zeta$-values will correspond to pixels that are modal (due to their high empirical density) and representative of just one material in the scene (due to their high pixel purity).
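The effect of combining density and purity through a harmonic mean can be seen in a four-pixel example. The function name `zeta` and the density and purity values below are hypothetical and chosen only to cover the four combinations of high or low density and high or low purity.

```python
import numpy as np

def zeta(p, eta):
    """Harmonic mean of max-normalized density p and max-normalized purity eta."""
    p_bar = np.asarray(p, dtype=float) / np.max(p)
    eta_bar = np.asarray(eta, dtype=float) / np.max(eta)
    return 2.0 * p_bar * eta_bar / (p_bar + eta_bar)

#                 dense+pure  dense+mixed  sparse+pure  sparse+mixed
density = np.array([0.40,       0.40,        0.04,        0.04])
purity  = np.array([0.95,       0.38,        0.95,        0.38])
z = zeta(density, purity)
```

Only the pixel that is both dense and pure attains a value of one. The dense but mixed pixel is penalized even though its density is maximal, which is the downweighting behavior described above.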

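D-VIC uses the following function to incorporate diffusion geometry into its procedure for selecting cluster modes:

$$d_t(x) = \begin{cases} \max_{y \in X} D_t(x, y) & \text{if } x = \arg\max_{y \in X} \zeta(y), \\ \min_{y \in X \setminus \{x\}} \{ D_t(x, y) \mid \zeta(y) \geq \zeta(x) \} & \text{otherwise.} \end{cases}$$

Thus, a pixel will have a large $d_t$-value if it is far in diffusion distance at time t from its $D_t$-nearest neighbor of higher density and pixel purity. D-VIC assigns modal labels to the K points maximizing $\mathcal{D}_t(x) = d_t(x) \zeta(x)$, which are high-density, high-purity pixels far in diffusion distance at time t from other high-density, high-purity pixels.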

After labeling cluster modes, D-VIC labels non-modal points according to their $D_t$-nearest neighbor of higher $\zeta$-value that is already labeled. Importantly, D-VIC downweights low-purity pixels through $\zeta(x)$ in its non-modal labeling. Thus, pixel purity is incorporated in all stages of the D-VIC algorithm through $\zeta(x)$. D-VIC is provided in Algorithm 1, and a schematic is provided in Figure 2.
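The mode selection and label propagation steps described in this section can be sketched as below. This is an illustrative reading of the prose rather than the authors' implementation: the function name `modes_and_labels` is hypothetical, the pairwise distance matrix is taken as given (here, plain Euclidean distances on a one-dimensional toy set stand in for diffusion distances), the combined density-purity scores are made-up values, and the convention of assigning the top-scoring pixel its largest pairwise distance is an assumption.

```python
import numpy as np

def modes_and_labels(Dt, zeta, K):
    """Pick K modes maximizing d_t * zeta, then label the rest in order of decreasing zeta."""
    n = len(zeta)
    order = np.argsort(-zeta, kind="stable")       # pixels sorted by decreasing zeta
    d = np.empty(n)
    d[order[0]] = Dt[order[0]].max()               # assumed convention for the top-scoring pixel
    for rank in range(1, n):
        i = order[rank]
        d[i] = Dt[i, order[:rank]].min()           # distance to nearest pixel of higher zeta
    modes = np.argsort(-(d * zeta), kind="stable")[:K]
    labels = np.zeros(n, dtype=int)                # 0 means "not yet labeled"
    labels[modes] = np.arange(1, K + 1)
    for rank, i in enumerate(order):
        if labels[i] == 0:
            cand = order[:rank]                    # higher-zeta pixels; all labeled by now
            if cand.size == 0:                     # only possible on exact ties at the top
                cand = modes
            labels[i] = labels[cand[np.argmin(Dt[i, cand])]]
    return modes, labels

# Toy data: two well-separated groups on a line, with hypothetical zeta scores.
points = np.array([0.0, 0.1, 0.2, 0.3, 10.0, 10.1, 10.2])
scores = np.array([0.5, 1.0, 0.6, 0.3, 0.4, 0.9, 0.35])
Dt = np.abs(points[:, None] - points[None, :])     # stand-in for diffusion distances
modes, labels = modes_and_labels(Dt, scores, K=2)
```

In this toy example the two selected modes are the highest-scoring point of each group, because every other point lies close to a point of higher score and is therefore suppressed by the distance factor.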

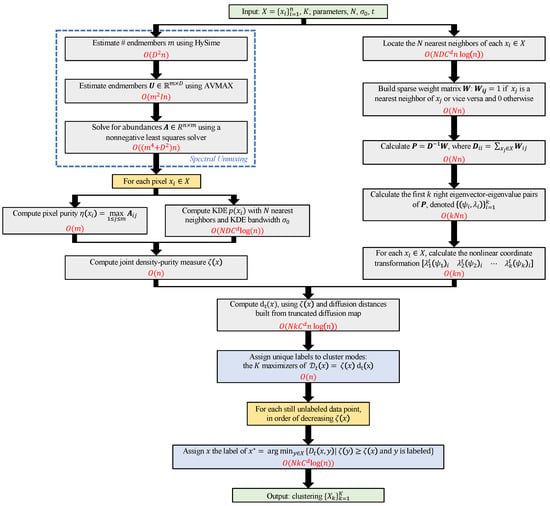

Figure 2.

Diagram of the D-VIC clustering algorithm. The computational complexity of each step is colored in red. The scaling of D-VIC depends on n (no. pixels), D (no. spectral bands), m (no. endmembers), I (no. AVMAX maximizations), N (no. nearest neighbors), d (doubling dimension of X [136]), and C: a constant independent of all other parameters [136]; see Section 3.1 for details. Note that all steps are quasilinear with respect to n, implying that D-VIC scales well to large HSI datasets. We remark that the spectral unmixing step (indicated with a blue box) is quite modular, and other approaches may be used in future work [12,90,93,94,130,133,134].

3.1. Computational Complexity

The computational complexity of the HySime algorithm is operations [128], whereas the computational complexity of spectral unmixing using AVMAX and a standard nonnegative least squares solver [132] is operations, where I is the number of AVMAX partial maximizations [70]. We assume that nearest neighbor searches are performed using cover trees: an indexing data structure that enables logarithmic nearest neighbor searches [136]. To see this, define the doubling dimension of X by , where is the smallest value for which any ball can be covered by c balls of radius . If the spectral signatures of pixels in have doubling dimension d, a search for the N -nearest neighbors of each HSI pixel using cover trees has computational complexity , where C is a constant independent of n, D, N, and d. Thus, if is constructed using cover trees [136] with N nearest neighbors, and eigenvectors of are used to calculate diffusion distances, then the computational complexity of D-VIC is [42,136].

So long as the spatial dimensions of the scene captured by an HSI are not changed, we expect that m (the expected number of materials within the scene) will be constant with respect to the number of samples n. Similarly, numerical simulations have shown that if m remains constant as the number of samples increases, then I tends to grow only slightly [70]. Under the assumption that $m = O(1)$ and $I = O(1)$ with respect to n, the complexity of D-VIC is quasilinear in the image size.

3.2. Comparison with Learning by Unsupervised Nonlinear Diffusion

An important point of comparison for D-VIC is the Learning by Unsupervised Nonlinear Diffusion (LUND) algorithm [18,42], which follows a similar procedure to D-VIC but crucially uses the KDE $p(x)$ in place of $\zeta(x)$. To give some motivation for why we advocate for $\zeta(x)$ instead of $p(x)$ for material clustering, we remark that for any one cluster, there may be multiple reasonable choices for cluster modes: pixels that are exemplary of the underlying cluster structure. In LUND, cluster modes are selected to be high-density pixels that are far in diffusion distance from other high-density pixels. However, not all high-density pixels necessarily correspond to the underlying material structure. A maximizer of $p(x)$ could, for example, correspond to a spatial region containing a group of commonly co-occurring materials (rather than a single material). By weighting pixel purity and density equally, D-VIC avoids selecting such a pixel as a cluster mode; thus, D-VIC modes will both indicate the underlying material structure and be modal, making these points better exemplars of underlying material structure than the modes selected by LUND.

We demonstrate this key difference between LUND and D-VIC by implementing both algorithms on a simple dataset (visualized in Figure 3) built to illustrate the idealized scenario where D-VIC outperforms LUND due to its incorporation of pixel purity. This dataset was generated by sampling points from an equilateral triangle in centered at the origin with edge length ; the vertices of this triangle served as ground truth endmembers. We sampled 1000 data points from a Gaussian distribution with a standard deviation of 0.175 centered at each endmember, keeping only the samples lying within the convex hull of the ground truth endmember set. In addition, 2000 data points were sampled from a Gaussian distribution with zero mean and a smaller standard deviation of 0.0175. As such, high-purity points indicative of latent material structure were also relatively low-density, and density maximizers were engineered not to be indicative of latent material structure. Each point was assigned a ground truth label corresponding to its highest-abundance ground truth endmember.

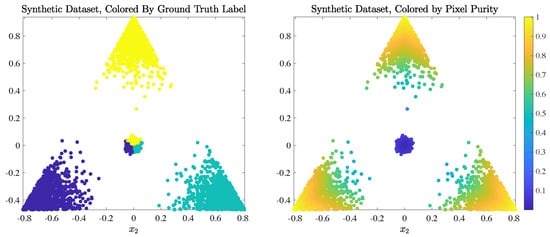

Figure 3.

Ground truth labels and pixel purity of synthetic dataset sampled from a triangle in ; the vertices of this triangle served as ground truth endmembers. Notice that empirical density maximizers near the origin are also the lowest-purity data points.

For both LUND and D-VIC, overall accuracy (OA), defined to be the fraction of correctly labeled pixels, was optimized for across the same grid of relevant hyperparameter values (see Appendix A). The optimal clusterings and their corresponding OA values are provided in Figure 4. These results illustrate a fundamental limitation of relying solely on empirical density to select cluster modes in spectrally mixed HSI data. Because empirical density maximizers are not representative of the underlying material structure in this synthetic dataset, LUND cannot accurately cluster data points within the high-density, low-purity region near the origin, resulting in poor performance and an OA of 0.739. In contrast, D-VIC downweights high-density points that are not also high-purity and, therefore, selects points that are more representative of the dataset’s underlying material structure as cluster modes. As a result, D-VIC correctly separates the high-density, low-purity region into three segments, yielding a substantially higher OA of 0.905, a difference of 0.166 when compared to LUND. We note that both LUND and D-VIC are related to classical spectral graph clustering methods [41,68,137] in their use of a diffusion process on the graph to learn the intrinsic geometry in the high-dimensional data, but differ in their use of data density (LUND) and data purity (D-VIC) in identifying cluster modes as well as in their use of an iterative labeling scheme.

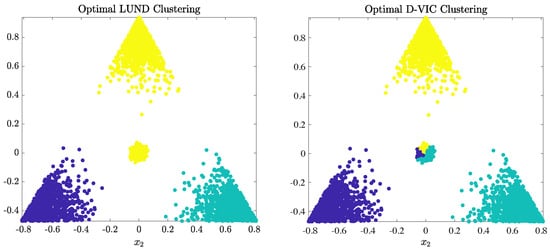

Figure 4.

Optimal LUND (OA = 0.739) and D-VIC (OA = 0.905) clusterings of the synthetic dataset (Figure 3). D-VIC explicitly incorporates data purity into its labeling procedure, resulting in better clustering performance than LUND in the high-density, low-purity region near the origin.

4. Experiments and Discussion

This section contains a series of experiments indicating the efficacy of D-VIC. First, in Section 4.1, classical and state-of-the-art clustering algorithms were implemented on three real benchmark HSIs. D-VIC was compared against classical algorithms [17]: K-Means, K-Means applied to the first principal components of the HSI (K-Means+PCA), and GMM applied to the first principal components of the HSI (GMM+PCA). D-VIC was also compared against several state-of-the-art HSI clustering algorithms: Density Peak Clustering (DPC) [135], Spectral Clustering (SC) [41,137], Symmetric Nonnegative Matrix Factorization (SymNMF) [21], K-Nearest Neighbors Sparse Subspace Clustering (KNN-SSC) [19,20], Fast Self-Supervised Clustering (FSSC) [46] and LUND [18,42]. Our second set of experiments appears in Section 4.2, where D-VIC and other clustering algorithms were implemented on a remote sensing HSI generated over deciduous forest containing both healthy and dieback-infected ash trees in Madingley Village near Cambridge, United Kingdom [40,138].

In all experiments, the number of clusters was set equal to the ground truth K. Comparisons were made using OA and Cohen’s $\kappa$ coefficient: $\kappa = \frac{p_o - p_e}{1 - p_e}$, where $p_o$ is the relative observed agreement between a clustering and the ground truth labels and $p_e$ is the probability that a clustering agrees with the ground truth labels by chance [139]. OA is a standard metric that, in some ways, captures the best sense of overall performance, as each pixel is considered of equal importance. However, it is biased in favor of correctly labeling large clusters at the expense of small clusters and can be misleading when a dataset has many small clusters of importance. To address this, we also consider $\kappa$; we note that in our experimental results, OA and $\kappa$ were highly correlated. OA was optimized over a grid of relevant hyperparameter values for each algorithm (see Appendix A). We report the median OA across 100 trials for K-Means, GMM, SymNMF, FSSC, and D-VIC to account for the stochasticity associated with random initial conditions. Diffusion distances were computed using only the first 10 eigenvectors of the diffusion transition matrix in LUND and D-VIC. For D-VIC, AVMAX was run 100 times in parallel, and the endmember set that formed the largest-volume simplex was selected for later cluster analysis.
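As a minimal sketch of the two metrics, the snippet below computes OA and Cohen's coefficient using the standard definition $\kappa = (p_o - p_e)/(1 - p_e)$. The function names and the toy labels are illustrative assumptions, and the predicted labels are assumed to be already aligned with the ground truth labels.

```python
from collections import Counter


def overall_accuracy(truth, pred):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)


def cohens_kappa(truth, pred):
    """Cohen's kappa: agreement corrected for chance (undefined if p_e == 1)."""
    n = len(truth)
    p_o = overall_accuracy(truth, pred)
    truth_counts = Counter(truth)
    pred_counts = Counter(pred)
    # Chance agreement: product of the marginal label frequencies, summed over classes.
    p_e = sum(truth_counts[c] * pred_counts[c] for c in truth_counts) / n**2
    return (p_o - p_e) / (1 - p_e)


# Toy example: one large class and one small class; the clustering ignores the small one.
truth = [0] * 8 + [1] * 2
pred = [0] * 10

oa = overall_accuracy(truth, pred)
kappa = cohens_kappa(truth, pred)
print(oa, kappa)
```

Here OA looks respectable because the large class dominates, while the chance-corrected coefficient is zero, which is the bias toward large clusters noted above.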

4.1. Analysis of Benchmark HSI Datasets

To illustrate the efficacy of D-VIC, we analyzed three publicly available, real HSIs often used as benchmarks for new HSI clustering algorithms (Figure 5). Water absorption bands were discarded, and pixel reflectance spectra were standardized before analysis [140]. We clustered entire images but discarded unlabeled pixels when comparing clusterings to the ground truth labels. Each benchmark HSI is described below; see Table 1 for summary statistics.

Table 1.

Summary of benchmark HSI datasets analyzed in Section 4.1.

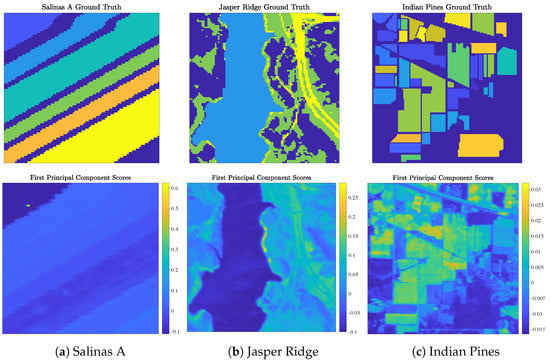

Figure 5.

Ground truth labels and first principal component scores for the real benchmark HSIs analyzed in this article: Salinas A (a), Jasper Ridge (b), and Indian Pines (c).

1. Salinas A (Figure 5a) was recorded by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over farmland in Salinas Valley, California, USA, in 1998 at a spatial resolution of 1.3 m. Spectral signatures, ranging in recorded wavelength from 380 nm to 2500 nm across 224 spectral bands, were recorded across $83 \times 86$ pixels ($n = 7138$). Gaussian noise (with mean 0 and a small standard deviation) was added to each pixel to differentiate pixels with identical spectral signatures. The Salinas A scene contains $K = 6$ ground truth classes corresponding to crop types.

2. Jasper Ridge (Figure 5b) was recorded by the AVIRIS sensor over the Jasper Ridge Biological Preserve, California, USA, in 1989 at a spatial resolution of 5 m. Spectral signatures, ranging in recorded wavelength from 380 nm to 2500 nm across 224 spectral bands, were recorded across spatial dimensions of $100 \times 100$ pixels ($n = 10{,}000$). The Jasper Ridge scene contains $K = 4$ ground truth endmembers: road, soil, water, and trees. Ground truth labels were recovered by selecting the material of the highest ground truth abundance for each pixel.

3. Indian Pines (Figure 5c) was recorded by the AVIRIS sensor over farmland in northwest Indiana, USA, in 1992 at a low spatial resolution of 20 m. Spectral signatures, ranging in recorded wavelength from 400 nm to 2500 nm across 224 spectral bands, were recorded across spatial dimensions of $145 \times 145$ pixels ($n = 21{,}025$). The Indian Pines scene contains $K = 16$ ground truth classes (e.g., crop types and manufactured structures) and many unlabeled pixels.
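The label-recovery rule used for Jasper Ridge (assign each pixel the material with the highest ground truth abundance) can be sketched as follows. The function name and the abundance values are made up for illustration; only the four material names come from the description above.

```python
def labels_from_abundances(abundances):
    """Return, for each pixel, the index of its highest-abundance material."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in abundances]


materials = ["road", "soil", "water", "trees"]

# Each row holds one pixel's abundances for the four materials (toy values).
abundances = [
    [0.10, 0.20, 0.00, 0.70],
    [0.60, 0.30, 0.10, 0.00],
    [0.00, 0.45, 0.55, 0.00],
]

labels = labels_from_abundances(abundances)
print([materials[k] for k in labels])
```

Note that the third pixel is labeled as water even though it is almost evenly split between soil and water, which is exactly the kind of mixed pixel that makes this benchmark challenging.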

4.1.1. Discussion of Benchmark HSI Experiments

This section compares clusterings produced by D-VIC against those of related algorithms (Table 2). On each of the three benchmark HSIs analyzed, D-VIC produces a clustering closer to the ground truth labels than those produced by related algorithms. In the Indian Pines (Figure 5c) scene, pixels from the same class exist in multiple segments of the image, and the size of ground truth clusters varies substantially across the classes. As such, though supervised and semi-supervised HSI classification algorithms may output highly accurate classifications of the Indian Pines HSI [141,142,143,144,145], this image is expected to be challenging for fully unsupervised clustering algorithms that rely on no ground truth labels. Nevertheless, D-VIC achieves higher performance than all other algorithms on this challenging dataset. Notably, though all other algorithms (including state-of-the-art algorithms, such as LUND) achieve $\kappa$-statistics in the same narrow range of 0.271 to 0.316, D-VIC achieves a substantially higher $\kappa$-statistic of 0.350. As such, incorporating pixel purity in D-VIC enables superior detection even in this difficult setting.

Table 2.

Performances of D-VIC and related algorithms on benchmark HSIs. The highest and second-highest performances are bolded and underlined, respectively. D-VIC offers substantially higher performance on all datasets evaluated.

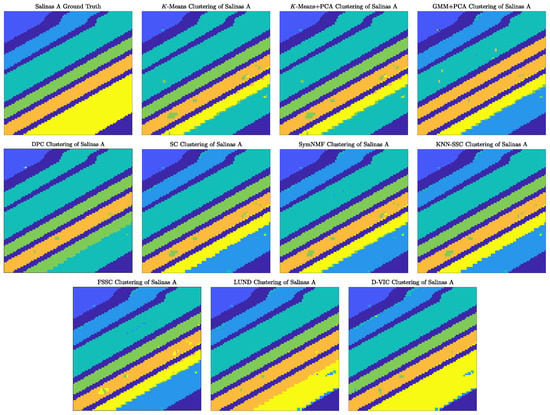

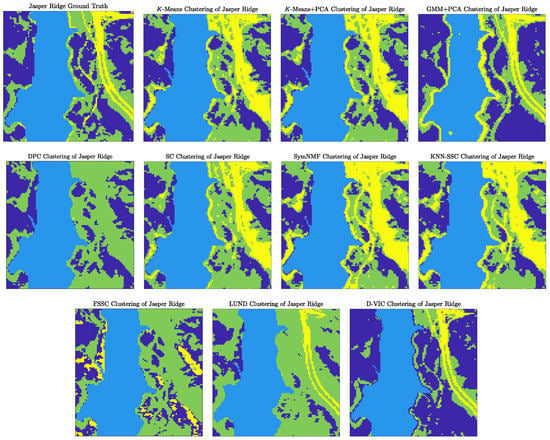

As visualized in Figure 6, D-VIC achieved nearly perfect recovery of the ground truth labels for Salinas A. Most notably, though all comparison methods erroneously separate the ground truth cluster indicated in yellow in Figure 5a (corresponding to 8-week maturity romaine), D-VIC correctly groups the pixels in this cluster, resulting in performance that was 0.089 higher in OA and 0.110 higher in $\kappa$ than that of LUND, its closest competitor in Table 2. As such, downweighting high-density points that are not also exemplary of the latent material structure improves not only modal but also non-modal labeling. Moreover, what error does exist in the D-VIC clustering of Salinas A could likely be remedied through spatial regularization or smoothing post-processing [146,147].

Figure 6.

Comparison of clusterings produced by D-VIC and related algorithms on the Salinas A HSI. Unlike any comparison method, D-VIC correctly groups pixels corresponding to 8-week maturity romaine (indicated in yellow), resulting in the near-perfect recovery of the ground truth labels.

D-VIC similarly achieved much higher performance than related state-of-the-art graph-based clustering algorithms on Jasper Ridge (as visualized in Figure 7). This difference in performance was substantially driven by the superior separation of the classes indicated in dark blue (corresponding to tree cover) and green (corresponding to soil) in Figure 5b. Indeed, though LUND groups most tree cover pixels with soil pixels in Figure 7, D-VIC correctly separates much of the latent structure for this class. The difference between LUND’s and D-VIC’s clusterings indicates that the pixels corresponding to the tree cover class, though lower in density than pixels corresponding to the soil class, have relatively high pixel purity.

Figure 7.

Comparison of clusterings produced by D-VIC and related algorithms on the Jasper Ridge HSI. D-VIC outperforms all other algorithms, largely due to superior performance among pixels corresponding to tree and soil classes (indicated in dark blue and green, respectively).

4.1.2. Runtime Analysis

This section compares runtimes of the algorithms implemented in Section 4.1.1, with hyperparameters set to those that produced the results in Table 2. All experiments were run in MATLAB in the same environment: a macOS Big Sur system with an 8-core Apple® M1™ Processor and 8 GB of RAM. Each core had a processor base frequency of 3.20 GHz. Runtimes are provided in Table 3. All classical algorithms have smaller runtimes than D-VIC, but the performances reported in Table 2 for these algorithms are substantially lower than those reported for D-VIC. On the other hand, though KNN-SSC and SymNMF achieve performances competitive with D-VIC, these algorithms, unlike D-VIC, appear to scale poorly to large datasets. DPC, which relies on Euclidean distances between high-dimensional pixel spectra, has lower runtimes on Salinas A than D-VIC but scales poorly to the larger Indian Pines image. In addition, D-VIC outperforms FSSC and operates at lower runtimes across HSI datasets. Finally, D-VIC outperforms LUND at the cost of only a small increase in runtime (associated with the spectral unmixing step).

Table 3.

Runtimes (seconds) of D-VIC and related algorithms. D-VIC achieves runtimes comparable to state-of-the-art algorithms and scales well to the larger Indian Pines dataset.

4.1.3. Robustness to Hyperparameter Selection

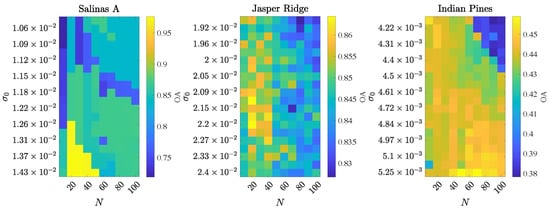

This section analyzes the robustness of D-VIC’s performance to hyperparameter selection. For each node in a grid of $(N, \sigma_0)$ values, D-VIC was implemented 50 times, and the median OA value across these 50 trials was stored. Performance degraded as N increased substantially past 100, and such a choice is not advised. In Figure 8, we visualize how the performance of D-VIC varies with N and $\sigma_0$. The relatively small range in nominal values of $\sigma_0$ in our grid reflects that pixels from the HSIs analyzed in this article are relatively close to their $\ell^2$-nearest neighbors on average. As is described in Appendix A, where our hyperparameter optimization is discussed in greater detail, the range of $\sigma_0$ used for each grid search is data-dependent, spanning the distribution of $\ell^2$-distances between pixel spectra and their 1000 $\ell^2$-nearest neighbors.

Figure 8.

Visualization of D-VIC’s median OA across 50 trials as hyperparameters N and $\sigma_0$ are varied. D-VIC achieves high performance across a large set of hyperparameters.

It is clear from Figure 8 that D-VIC can achieve high performance across a broad range of hyperparameters on each HSI. Thus, even with little hyperparameter tuning, D-VIC is likely to output a partition that is competitive with the clusterings reported in Table 2. Figure 8 also motivates recommendations for hyperparameter selection to optimize the OA of D-VIC. Larger datasets (e.g., Indian Pines) tend to require larger values of N for D-VIC to achieve high OA, consistent with recommendations in the literature that N should grow logarithmically with n [42]. Additionally, D-VIC achieves the highest OA for datasets with high-purity material classes (e.g., Salinas A) using large $\sigma_0$. This reflects that, as $\sigma_0$ increases, the KDE becomes more constant across the HSI, so that pixel purity plays a larger role in the selection of cluster modes. Since purity is an excellent indicator of material class structure for Salinas A, D-VIC becomes better able to recover the latent material structure with larger $\sigma_0$.
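The robustness analysis in this section follows a simple pattern: at each node of a two-parameter grid, run the stochastic algorithm several times and keep the median OA. A minimal sketch of that pattern is given below. The function `toy_evaluate` is a hypothetical stand-in that returns a made-up OA rather than running any clustering algorithm, and the grid values are illustrative only.

```python
import random
from statistics import median


def grid_search(evaluate, n_grid, sigma_grid, trials=50, seed=0):
    """Median score over repeated trials at every (N, sigma) grid node."""
    rng = random.Random(seed)
    results = {}
    for n_neighbors in n_grid:
        for sigma in sigma_grid:
            scores = [evaluate(n_neighbors, sigma, rng) for _ in range(trials)]
            results[(n_neighbors, sigma)] = median(scores)
    best = max(results, key=results.get)
    return best, results


def toy_evaluate(n_neighbors, sigma, rng):
    """Hypothetical stand-in for one stochastic clustering run; returns a fake OA."""
    base = 0.9 - 0.002 * abs(n_neighbors - 40) - 0.05 * abs(sigma - 1.0)
    return base + rng.uniform(-0.01, 0.01)


best, results = grid_search(toy_evaluate, [10, 20, 40, 80], [0.5, 1.0, 2.0])
print(best, round(results[best], 3))
```

Using the median rather than the mean keeps a single unlucky initialization from dominating the score recorded at a grid node.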

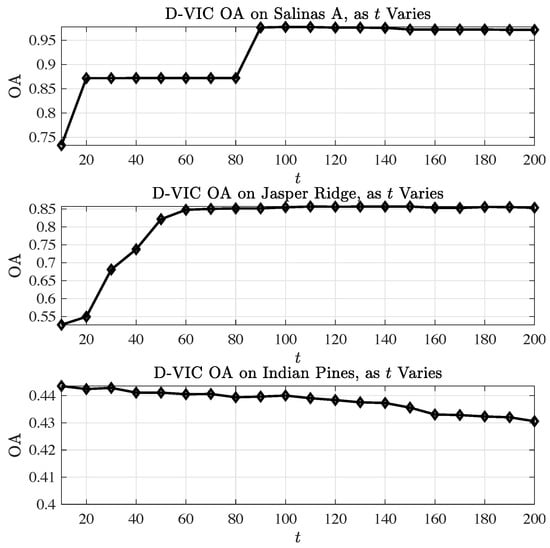

We also analyze the robustness of D-VIC’s performance to the selection of the diffusion time parameter t. Using the optimal values of N and $\sigma_0$, D-VIC was evaluated 100 times at each t in a range of diffusion times. Figure 9, which visualizes the results of this analysis, indicates that D-VIC achieves high OA values across a broad range of t; for each t considered, D-VIC outputs a clustering with OA equal to or very close to those reported in Table 2. These results indicate that D-VIC is well-equipped to provide high-quality clusterings given little or no tuning of t. Indeed, a single simple choice of t works exceptionally well across all datasets.

Figure 9.

Analysis of D-VIC’s performance as the diffusion time t varies. Values are the median OA across 100 implementations of D-VIC with the optimal N and $\sigma_0$ values. Generally, t appears to have little impact on the OA of D-VIC, and D-VIC achieves performances comparable to those reported in Table 2 across the range of t considered, uniformly across all datasets.
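For readers unfamiliar with how t enters the computation, the sketch below evaluates a truncated diffusion distance in its standard spectral form from the diffusion maps literature [35]: the squared distance between two points is a sum over eigenpairs of $|\lambda_k|^{2t}$ times the squared difference of the k-th eigenvector's values at those points. The function name, the truncation argument `n_terms`, and the toy spectrum are assumptions made for illustration, not the authors' implementation.

```python
import math


def diffusion_distance(eigenvalues, eigenvectors, i, j, t, n_terms=10):
    """Truncated diffusion distance between points i and j at time t.

    eigenvalues[k] pairs with eigenvectors[k], a list holding the k-th
    eigenvector's value at every point. Only the first n_terms pairs are used.
    """
    pairs = list(zip(eigenvalues, eigenvectors))[:n_terms]
    squared = sum(abs(lam) ** (2 * t) * (psi[i] - psi[j]) ** 2 for lam, psi in pairs)
    return math.sqrt(squared)


# Toy spectrum for three points (made-up values, not from any HSI).
eigenvalues = [1.0, 0.9, 0.5]
eigenvectors = [
    [1.0, 1.0, 1.0],   # constant eigenvector: contributes nothing to distances
    [1.0, -1.0, 1.0],
    [0.5, 0.5, -1.0],
]

d_t0 = diffusion_distance(eigenvalues, eigenvectors, 0, 1, t=0)
d_t1 = diffusion_distance(eigenvalues, eigenvectors, 0, 1, t=1)
d_t10 = diffusion_distance(eigenvalues, eigenvectors, 0, 1, t=10)
print(d_t0, d_t1, d_t10)
```

Because every nontrivial eigenvalue has modulus below one, contributions from the trailing eigenvectors decay quickly as t grows, which is one reason a short truncation of the spectrum can suffice.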

4.2. Analysis of the Madingley HSI Dataset

This section presents implementations of D-VIC and other clustering algorithms on real HSI data to illustrate that unsupervised clustering algorithms may be used to generate ash dieback disease mappings from remotely-sensed HSI data, even when no ground truth labels are available. Algorithms were evaluated on the Madingley HSI dataset, which was collected by a manned aircraft in August 2018 over a region of temperate deciduous forest in Madingley Village near Cambridge, United Kingdom [40]. This HSI was recorded by a Norsk Elektro Optikk hyperspectral camera (Hyspex VNIR 1800) at a high spatial resolution of 0.32 m. Spectral signatures, ranging in recorded wavelength from 410 nm to 1001 nm across 186 spectral bands, were recorded across $n = 1{,}816{,}835$ pixels. The Madingley HSI was preprocessed using QUick Atmospheric Correction [1] (to remove atmospheric effects on pixel spectra) and standardization of spectral signatures (to mitigate differences in illumination across pixels) [40].

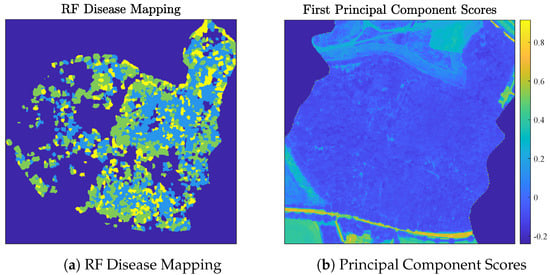

Healthy and dieback-infected ash trees were identified in the Madingley scene using a pair of supervised classifiers [40]. First, to isolate ash tree crowns in the scene, a supervised Partial Least Squares Discriminant Analysis (PLSDA) classifier was trained to predict tree species using manually-collected ground truth labels for 166 tree crowns in the Madingley scene and 256 tree crowns in three other forest regions near Cambridge [40]. Labeled tree crowns were split into training (70%) and validation (30%) sets. The trained PLSDA classifier generalized well to the validation set, achieving an OA of 85.3% on those data [40]. Next, a supervised ash dieback disease map was generated for trees in the Madingley scene classified as ash by the PLSDA [40]. Specifically, a supervised random forest (RF) classifier was trained to classify a tree crown as one of three disease classes—healthy, infected, and severely infected—using the average pixel spectra from pixels corresponding to that tree crown. The RF was trained using manually-labeled tree crowns across the four aforementioned scenes and evaluated on a validation set consisting of 16 tree crowns from each disease class. The trained RF classifier was highly successful at identifying ash dieback disease, with an OA of 77.1% on its validation set [40]. Visualizations of the Madingley HSI and the RF disease mapping are provided in Figure 10.

Figure 10.

Visualizations of the Madingley HSI. The RF disease mapping is visualized in (a) and the Madingley HSI’s first principal component scores are visualized in (b). In (a), yellow indicates severely-infected ash, green indicates infected ash, and light blue indicates healthy ash.

Dieback-infected ash trees tend to have a mosaic of healthy and dead branches, so bicubic interpolation [148] was implemented on the Madingley HSI before cluster analysis to downsample pixels to a 1.28 m spatial resolution. Thus, each pixel covered a spatial region containing multiple branches, leading to a more holistic characterization of tree health (rather than of individual branches) [138]. Unsupervised clustering algorithms were evaluated on the 72,775 pixels in the resulting scene corresponding to ash trees in the downsampled PLSDA species mapping [40]. For each clustering algorithm, we set $K = 2$ so that clusters of pixels corresponded to healthy and dieback-infected trees. Unsupervised clusterings were evaluated by comparing against the supervised RF disease mapping after combining the “infected” and “severely infected” classes and aligning labels using the Hungarian algorithm.
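The evaluation step described above (merge the two infected classes, then align cluster labels with the reference labels before scoring) can be sketched as follows. For brevity, this sketch replaces the Hungarian algorithm with an exhaustive search over relabelings; both return an optimal one-to-one matching, but exhaustive search is only practical when the number of clusters is very small, as it is here. The function name and the toy labels are illustrative assumptions.

```python
from itertools import permutations


def align_labels(reference, clustering, k):
    """Relabel `clustering` to maximize agreement with `reference`.

    Tries all k! one-to-one relabelings (a brute-force substitute for the
    Hungarian algorithm, suitable only for small k).
    """
    best_perm, best_hits = None, -1
    for perm in permutations(range(k)):
        hits = sum(perm[c] == r for r, c in zip(reference, clustering))
        if hits > best_hits:
            best_perm, best_hits = perm, hits
    return [best_perm[c] for c in clustering]


# Toy reference map with three disease classes, merged into two.
rf_map = ["healthy", "infected", "severely infected", "healthy", "infected"]
binary = [0 if label == "healthy" else 1 for label in rf_map]

# An unsupervised clustering uses arbitrary cluster indices.
clusters = [1, 0, 0, 1, 1]
aligned = align_labels(binary, clusters, k=2)
oa = sum(a == b for a, b in zip(aligned, binary)) / len(binary)
print(aligned, oa)
```

Without the alignment step, the raw cluster indices would agree with the reference on only one of the five toy pixels, even though the partition itself is mostly correct.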

Discussion of Madingley Experiments

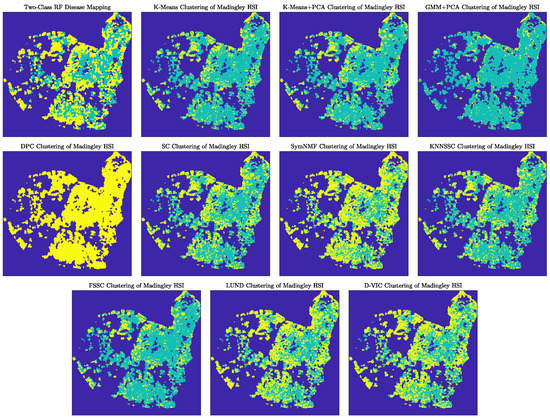

Table 4 summarizes the overlap of D-VIC’s and other algorithms’ clusterings of the Madingley HSI with the RF disease mapping. Notably, four algorithms (SC, KNN-SSC, LUND, and D-VIC) achieved comparably high OA and $\kappa$ values. We remark that the RF disease mapping used for validation results from a supervised learning algorithm trained on a small set of labels. Because it is an imperfect labeling of the Madingley HSI, small differences in OA or $\kappa$ values between SC, KNN-SSC, LUND, and D-VIC should not be taken as an indication that one of these clustering methods is better or worse than another. Nevertheless, these algorithms’ high levels of overlap with the RF disease mapping indicate that graph-based unsupervised clustering algorithms like D-VIC may be applied to remotely-sensed HSI data to assess forest health even when ground truth labels are unavailable.

Table 4.

Performances of D-VIC and related algorithms on the Madingley HSI. The highest and second-highest performances are bolded and underlined, respectively. Many graph-based algorithms—SC, KNN-SSC, LUND, and D-VIC—achieved approximately the same high performance on the Madingley HSI, indicating that graph-based HSI clustering algorithms may be used for unsupervised ash dieback disease mapping, even when no ground truth labels exist. KM denotes K-Means.

Though many graph-based HSI clustering algorithms exhibited similar levels of overlap with the supervised RF disease mapping, substantial differences exist between the unsupervised disease mappings obtained by different clustering algorithms, as can be observed in Figure 11. Indeed, LUND and D-VIC tended to predict ash dieback disease in regions considered healthy according to the RF disease map [40]. On the other hand, other similarly-performing graph-based clustering algorithms (SC and KNN-SSC) tended to label trees as healthy even in regions where the RF disease map indicates substantial dieback disease infection [40]. All clusterings exhibit salt-and-pepper error, indicating that spatial regularization [146,147] or majority voting within tree crowns [40,138] may improve overlap between unsupervised clusterings and the RF labeling even further.

Figure 11.

Comparison of clusterings produced by D-VIC and related algorithms on the Madingley HSI. Labels were aligned so that yellow indicates dieback-infected ash and teal indicates healthy ash. Though the performance of many graph-based algorithms (SC, KNN-SSC, LUND, and D-VIC) was similar in Table 4, qualitative differences exist between these algorithms’ clusterings.

5. Conclusions

This article introduces the D-VIC clustering algorithm for unsupervised material classification in HSIs. D-VIC assigns modal labels to high-density, high-purity pixels within the HSI that are far in diffusion distance from other high-density, high-purity pixels [32,42,70]. We have argued that these cluster modes are highly indicative of underlying material structure, leading to more interpretable and accurate clusterings than those produced by related algorithms [18,42]. Indeed, experiments presented in Section 4 show that incorporating pixel purity into D-VIC results in clusterings closer to the ground truth labels on three benchmark real HSI datasets of varying sizes and complexities and enables high-fidelity unsupervised ash dieback disease detection on remotely-sensed HSI data. As such, D-VIC is equipped to perform efficient material clustering on broad ranges of spectrally mixed HSI datasets.

Future work includes modifying the spectral unmixing step in D-VIC. To demonstrate the effect of including a pixel purity estimate in a diffusion-based HSI clustering algorithm, we have chosen a simple, standard linear unmixing procedure to generate the pixel purity estimate in D-VIC: using HySime to estimate the number of endmembers in an HSI [128], AVMAX to estimate those endmembers [70], and a nonnegative least squares solver [132] to calculate abundances. The spectral unmixing procedure in D-VIC is quite modular, however, and improvements to D-VIC’s clustering performance may be gained through improvements to this procedure; for example, by explicitly constraining abundances to sum to one [134] or accounting for nonlinear mixing of endmembers [123,124,125]. Linear endmember extraction is computationally inexpensive [70,128,132] and yields strong performance in D-VIC, but recent years have brought significant advances in algorithms for the nonlinear spectral unmixing of HSIs [123,124,125]. Modifying the spectral unmixing step in D-VIC may improve performance, especially for HSIs in which assumptions on linear mixing do not hold [123,124,125].
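To make the role of the abundance estimates concrete, the sketch below turns a pixel's nonnegative abundances into a purity score. Two assumptions are made purely for illustration: purity is taken to be the largest abundance share of a pixel, and the sum-to-one property is imposed by rescaling after the fact, which is a crude stand-in for solving the unmixing problem with that constraint built in. The function names and values are hypothetical.

```python
def sum_to_one(abundances):
    """Rescale nonnegative abundances so that they sum to one."""
    if any(a < 0 for a in abundances):
        raise ValueError("abundances must be nonnegative")
    total = sum(abundances)
    if total <= 0:
        raise ValueError("at least one abundance must be positive")
    return [a / total for a in abundances]


def purity(abundances):
    """Largest abundance share of a pixel (assumed definition of purity)."""
    return max(sum_to_one(abundances))


# A nearly pure pixel versus a heavily mixed pixel (toy values).
pure_pixel = [0.90, 0.05, 0.05]
mixed_pixel = [0.50, 0.50, 0.25]

print(purity(pure_pixel), purity(mixed_pixel))
```

Under these assumptions, a pixel dominated by one material scores close to one, while a pixel split among several materials scores much lower.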

Additionally, much of the error in D-VIC’s clusterings may be corrected by incorporating spatial information into its labeling. Such a modification of D-VIC may improve performance on datasets with spatially homogeneous clusters [60,146,149,150,151,152,153,154]. Moreover, it is likely that varying the diffusion time parameter t in D-VIC may enable the detection of multiple scales of latent cluster structure, a problem we would like to consider further in future work [28,52,60,155]. Additionally, we expect D-VIC may be modified for active learning, wherein ground truth labels for a small number of carefully selected pixels are queried and propagated across the image [147,156]. Finally, we expect that D-VIC (or one of the extensions described above) may be modified for change detection in remotely-sensed scenes [86].

Author Contributions

Conceptualization, S.L.P. and K.C.; methodology, S.L.P., A.H.Y.C. and J.M.M.; software, S.L.P., A.H.Y.C. and K.C.; validation, S.L.P., K.C. and J.M.M.; formal analysis, S.L.P. and K.C.; investigation, S.L.P., K.C., A.H.Y.C., D.A.C. and J.M.M.; resources, J.M.M. and D.A.C.; data curation, J.M.M., A.H.Y.C. and D.A.C.; writing—original draft preparation, S.L.P.; writing—review and editing, K.C., A.H.Y.C., D.A.C., R.J.P. and J.M.M.; visualization, S.L.P.; supervision, R.J.P., D.A.C. and J.M.M.; project administration, R.J.P.; funding acquisition, J.M.M. All authors have read and agreed to the published version of the manuscript.

Funding

The US National Science Foundation partially supported this research through grants NSF-DMS 1912737, NSF-DMS 1924513, and NSF-CCF 1934553.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Real benchmark hyperspectral image data used in this study can be found at the following links: http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes (accessed on 12 December 2021) and https://rslab.ut.ac.ir/data (accessed on 12 December 2021). Experiments for benchmark data can be replicated at https://github.com/sampolk/D-VIC. Software and data required to replicate experiments on the Madingley HSI shall be made available upon reasonable request.

Acknowledgments

We thank C. Schönlieb and M. S. Kotzagiannidis for conversations that aided in the development of D-VIC. We acknowledge C. Barnes and 2Excel Geo for collecting the Madingley HSI used in this study. We thank the University of Cambridge for access to the Madingley field site and the Wildlife Trust for Bedfordshire, Cambridgeshire & Northamptonshire for access to other forest field sites. Finally, we thank N. Gillis, D. Kuang, H. Park, C. Ding, M. Abdolali, D. Kun, I. Gerg, and J. Wang for making code for HySime [128], AVMAX [70,157], SymNMF [21], KNN-SSC [19], and FSSC [46] publicly available. We also thank the academic editor and the three reviewers for their helpful comments, which improved the presentation of this paper.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A. Hyperparameter Optimization

This appendix describes the hyperparameter optimization performed to generate numerical results. The parameter grids used for each algorithm are summarized in Table A1. For K-Means + PCA and GMM + PCA, we clustered the first z principal components of the HSI, where z was chosen so that 99% of the variation in the HSI was maintained after PCA dimensionality reduction. Thus, K-Means, K-Means + PCA, and GMM + PCA required no hyperparameter inputs. For stochastic algorithms with hyperparameter inputs (SC, SymNMF, FSSC, and D-VIC), we optimized for the median OA across 10 trials at each node in the hyperparameter grids described below.
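The rule for choosing z (keep the smallest number of principal components that retains 99% of the variation) can be sketched as follows. The function name and the eigenvalues are illustrative assumptions; in practice the eigenvalues would come from the covariance matrix of the pixel spectra.

```python
def components_for_variance(eigenvalues, target=0.99):
    """Smallest z such that the top z eigenvalues explain `target` of the variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for z, value in enumerate(ordered, start=1):
        running += value
        if running / total >= target:
            return z
    return len(ordered)


# Toy covariance eigenvalues (made-up values).
eigenvalues = [50.0, 30.0, 15.0, 4.5, 0.3, 0.2]
z = components_for_variance(eigenvalues)
print(z)
```

With these toy values, three components explain 95% of the variation and four explain 99.5%, so the rule selects four.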

Table A1.

Hyperparameter grids for algorithms. The number of nearest neighbors N took values in an exponentially sampled grid. FSSC regularization parameters took values in a grid ranging from 0 to 1. The KDE scale $\sigma_0$ took values among the $\ell^2$-distances between data points and their 1000 $\ell^2$-nearest neighbors. The diffusion time t took values in an exponential sampling of the diffusion process. A “—” indicates a lack of a hyperparameter input.


| Algorithm | Parameter 1 Grid | Parameter 2 Grid | Parameter 3 Grid |
|---|---|---|---|
| K-Means | — | — | — |
| K-Means + PCA | — | — | — |
| GMM + PCA | — | — | — |
| DPC [135] | — | | |
| SC [41] | — | — | |
| SymNMF [21] | — | — | |
| KNN-SSC [19,20] | — | | |
| FSSC [46] | | | |
| LUND [42] | | | |
| D-VIC | | | |

All graph-based algorithms relied on adjacency matrices built from sparse KNN graphs. The number of nearest neighbors was optimized for each algorithm across : an exponential sampling of the set . KNN-SSC’s regularization parameter was set to , motivated by prior work with this parameter [19]. FSSC was evaluated using regularization parameters , as was suggested in [46]. FSSC, as an anchor-based clustering algorithm, requires the number of anchor pixels m as input. We set , as this ℓ-value is greater than all [46]. We used the same KDE and hyperparameter ranges of for DPC, LUND, and D-VIC. In our grid searches, ranged : a sampling of the distribution of -distances between data points and their 1000 -nearest neighbors. Both LUND and D-VIC were implemented at time steps , where . Searches end at time because for [52,60]. For each dataset, we chose the resulting in maximal OA. As is described in Section 4.1.3, D-VIC is quite robust to this choice of parameter.

References

- Eismann, M.T. Hyperspectral Remote Sensing; SPIE: Philadelphia, PA, USA, 2012. [Google Scholar]

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32. [Google Scholar] [CrossRef]

- Plaza, A.; Martín, G.; Plaza, J.; Zortea, M.; Sánchez, S. Recent developments in endmember extraction and spectral unmixing. In Optical Remote Sensing: Advances in Signal Processing and Exploitation Techniques; Springer: Berlin/Heidelberg, Germany, 2011; pp. 235–267. [Google Scholar]

- Edelman, G.J.; Gaston, E.; Van Leeuwen, T.G.; Cullen, P.; Aalders, M.C. Hyperspectral imaging for non-contact analysis of forensic traces. Forensic Sci. Int. 2012, 223, 28–39. [Google Scholar] [CrossRef]

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296. [Google Scholar] [CrossRef]

- Hirano, A.; Madden, M.; Welch, R. Hyperspectral image data for mapping wetland vegetation. Wetlands 2003, 23, 436–448. [Google Scholar] [CrossRef]

- Clevers, J.G.P.W.; Kooistra, L.; Schaepman, M.E. Estimating canopy water content using hyperspectral remote sensing data. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 119–125. [Google Scholar]

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Fusion of hyperspectral and LIDAR remote sensing data for classification of complex forest areas. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1416–1427. [Google Scholar] [CrossRef]

- Wang, S.; Guan, K.; Zhang, C.; Lee, D.; Margenot, A.J.; Ge, Y.; Peng, J.; Zhou, W.; Zhou, Q.; Huang, Y. Using soil library hyperspectral reflectance and machine learning to predict soil organic carbon: Assessing potential of airborne and spaceborne optical soil sensing. Remote Sens. Environ. 2022, 271, 112914. [Google Scholar] [CrossRef]

- Jia, J.; Wang, Y.; Chen, J.; Guo, R.; Shu, R.; Wang, J. Status and application of advanced airborne hyperspectral imaging technology: A review. Infr. Phys. Technol. 2020, 104, 103115. [Google Scholar] [CrossRef]

- Price, J.C. Spectral band selection for visible-near infrared remote sensing: Spectral-Spatial resolution tradeoffs. IEEE Trans. Geosci. Remote Sens. 1997, 35, 1277–1285. [Google Scholar] [CrossRef]

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36. [Google Scholar] [CrossRef]

- Laparrcr, V.; Santos-Rodriguez, R. Spatial/spectral information trade-off in hyperspectral images. In Proceedings of the International Geosci Remote Sensing Symposium, Milan, Italy, 26–31 July 2015; IEEE: New York, NY, USA, 2015; pp. 1124–1127. [Google Scholar]

- Miao, L.; Qi, H. Endmember extraction from highly mixed data using minimum volume constrained nonnegative matrix factorization. IEEE Trans. Geosci. Remote Sens. 2007, 45, 765–777. [Google Scholar] [CrossRef]

- Pacheco-Labrador, J.; Migliavacca, M.; Ma, X.; Mahecha, M.; Carvalhais, N.; Weber, U.; Benavides, R.; Bouriaud, O.; Barnoaiea, I.; Coomes, D.A. Challenging the link between functional and spectral diversity with radiative transfer modeling and data. Remote Sens. Environ. 2022, 280, 113170. [Google Scholar] [CrossRef]

- Jia, J.; Wang, Y.; Zhuang, X.; Yao, Y.; Wang, S.; Zhao, D.; Shu, R.; Wang, J. High spatial resolution shortwave infrared imaging technology based on time delay and digital accumulation method. Inf. Phys. Technol. 2017, 81, 305–312. [Google Scholar] [CrossRef]

- Friedman, J.; Hastie, T.; Tibshirani, R. The Elements of Statistical Learning; Springer: Berlin/Heidelberg, Germany, 2001; Volume 1, Springer Series in Statistics. [Google Scholar]

- Murphy, J.M.; Maggioni, M. Unsupervised clustering and active learning of hyperspectral images with nonlinear diffusion. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1829–1845. [Google Scholar] [CrossRef]

- Abdolali, M.; Gillis, N. Beyond linear subspace clustering: A comparative study of nonlinear manifold clustering algorithms. Comput. Sci. Rev. 2021, 42, 100435. [Google Scholar] [CrossRef]

- Zhuang, L.; Wang, J.; Lin, Z.; Yang, A.Y.; Ma, Y.; Yu, N. Locality-preserving low-rank representation for graph construction from nonlinear manifolds. Neurocomputing 2016, 175, 715–722. [Google Scholar] [CrossRef]

- Kuang, D.; Ding, C.; Park, H. Symmetric nonnegative matrix factorization for graph clustering. In Proceedings of the SIAM International Conference Data Min, Anaheim, CA, USA, 26–28 April 2012; SIAM: Philadelphia, PA, USA, 2012; pp. 106–117. [Google Scholar]

- Wang, R.; Nie, N.; Wang, Z.; He, F.; Li, X. Scalable graph-based clustering with nonnegative relaxation for large hyperspectral image. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7352–7364. [Google Scholar] [CrossRef]

- Camps-Valls, G.; Marsheva, T.V.B.; Zhou, D. Semi-supervised graph-based hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3044–3054. [Google Scholar] [CrossRef]

- Gao, Y.; Ji, R.; Cui, P.; Dai, Q.; Hua, G. Hyperspectral image classification through bilayer graph-based learning. IEEE Trans. Image Process 2014, 23, 2769–2778. [Google Scholar] [CrossRef]

- Wu, H.; Prasad, S. Semi-supervised deep learning using pseudo labels for hyperspectral image classification. IEEE Trans. Image Process 2017, 27, 1259–1270. [Google Scholar] [CrossRef]

- Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.; Zhang, X.; Huang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423. [Google Scholar] [CrossRef]

- Nalepa, J.; Myller, M.; Imai, Y.; Honda, K.I.; Takeda, T.; Antoniak, M. Unsupervised segmentation of hyperspectral images using 3-D convolutional autoencoders. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1948–1952. [Google Scholar] [CrossRef]

- Gillis, N.; Kuang, D.; Park, H. Hierarchical clustering of hyperspectral images using rank-two nonnegative matrix factorization. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2066–2078. [Google Scholar] [CrossRef]

- Li, K.; Qin, Y.; Ling, Q.; Wang, Y.; Lin, Z.; An, W. Self-supervised deep subspace clustering for hyperspectral images with adaptive self-expressive coefficient matrix initialization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3215–3227.

- Sun, J.; Wang, W.; Wei, X.; Fang, L.; Tang, X.; Xu, Y.; Yu, H.; Yao, W. Deep clustering with intraclass distance constraint for hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4135–4149.

- Zhou, J.; Kwan, C.; Ayhan, B.; Eismann, M.T. A novel cluster kernel RX algorithm for anomaly and change detection using hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6497–6504.

- Cui, K.; Plemmons, R.J. Unsupervised classification of AVIRIS-NG hyperspectral images. In Proceedings of the Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing, Amsterdam, The Netherlands, 24–26 March 2021; IEEE: New York, NY, USA, 2021; pp. 1–5.

- Cui, K.; Li, R.; Polk, S.L.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Unsupervised spatial-spectral hyperspectral image reconstruction and clustering with diffusion geometry. In Proceedings of the Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing, Rome, Italy, 13–16 September 2022; IEEE: New York, NY, USA, 2022; pp. 1–5.

- Bachmann, C.M.; Ainsworth, T.L.; Fusina, R.A. Exploiting manifold geometry in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 441–454.

- Coifman, R.R.; Lafon, S. Diffusion maps. Appl. Comput. Harmon. Anal. 2006, 21, 5–30.

- Baral, H.; Queloz, V.; Hosoya, T. Hymenoscyphus fraxineus, the correct scientific name for the fungus causing ash dieback in Europe. IMA Fungus 2014, 5, 79–80.

- McKinney, L.; Nielsen, L.; Collinge, D.; Thomsen, I.; Hansen, J.; Kjær, E. The ash dieback crisis: Genetic variation in resistance can prove a long-term solution. Plant Pathol. 2014, 63, 485–499.

- Stone, C.; Mohammed, C. Application of remote sensing technologies for assessing planted forests damaged by insect pests and fungal pathogens: A review. Curr. For. Rep. 2017, 3, 75–92.

- Waser, L.T.; Küchler, M.; Jütte, K.; Stampfer, T. Evaluating the potential of WorldView-2 data to classify tree species and different levels of ash mortality. Remote Sens. 2014, 6, 4515–4545.

- Chan, A.H.Y.; Barnes, C.; Swinfield, T.; Coomes, D.A. Monitoring ash dieback (Hymenoscyphus fraxineus) in British forests using hyperspectral remote sensing. Remote Sens. Ecol. Conserv. 2021, 7, 306–320.

- Ng, A.Y.; Jordan, M.I.; Weiss, Y. On spectral clustering: Analysis and an algorithm. Adv. Neural Inf. Process. Syst. 2002, 14, 849–856.

- Maggioni, M.; Murphy, J.M. Learning by unsupervised nonlinear diffusion. J. Mach. Learn. Res. 2019, 20, 1–56.

- Cahill, N.D.; Czaja, W.; Messinger, D.W. Schroedinger Eigenmaps with Nondiagonal Potentials for Spatial-Spectral Clustering of Hyperspectral Imagery; SPIE: Philadelphia, PA, USA, 2014; Volume 9088, pp. 27–39.

- Theodoridis, S.; Koutroumbas, K. Pattern Recognition; Elsevier: Amsterdam, The Netherlands, 2006.

- Zhu, W.; Chayes, V.; Tiard, A.; Sanchez, S.; Dahlberg, D.; Bertozzi, A.L.; Osher, S.; Zosso, D.; Kuang, D. Unsupervised classification in hyperspectral imagery with nonlocal total variation and primal-dual hybrid gradient algorithm. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2786–2798.

- Wang, J.; Ma, Z.; Nie, F.; Li, X. Fast self-supervised clustering with anchor graph. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4199–4212.

- Bandyopadhyay, D.; Mukherjee, S. Tree species classification from hyperspectral data using graph-regularized neural networks. arXiv 2022, arXiv:2208.08675.

- Tenenbaum, J.B.; de Silva, V.; Langford, J.C. A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290, 2319–2323.

- Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

- Belkin, M.; Niyogi, P. Laplacian eigenmaps and spectral techniques for embedding and clustering. Adv. Neural Inf. Process. Syst. 2001, 585–591.

- Rohe, K.; Chatterjee, S.; Yu, B. Spectral clustering and the high-dimensional stochastic blockmodel. Ann. Stat. 2011, 39, 1878–1915.

- Murphy, J.M.; Polk, S.L. A multiscale environment for learning by diffusion. Appl. Comput. Harmon. Anal. 2022, 57, 58–100.

- Nadler, B.; Galun, M. Fundamental limitations of spectral clustering. Adv. Neural Inf. Process. Syst. 2007, 19, 1017–1024.

- Dilokthanakul, N.; Mediano, P.A.M.; Garnelo, M.; Lee, M.C.H.; Salimbeni, H.; Arulkumaran, K.; Shanahan, M. Deep unsupervised clustering with Gaussian mixture variational autoencoders. arXiv 2016, arXiv:1611.02648.

- Min, E.; Guo, X.; Liu, Q.; Zhang, G.; Cui, J.; Long, J. A survey of clustering with deep learning: From the perspective of network architecture. IEEE Access 2018, 6, 39501–39514.

- Tasissa, A.; Nguyen, D.; Murphy, J.M. Deep diffusion processes for active learning of hyperspectral images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: New York, NY, USA, 2021; pp. 3665–3668.

- Nguyen, A.; Yosinski, J.; Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 427–436.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Haeffele, B.D.; You, C.; Vidal, R. A Critique of Self-Expressive Deep Subspace Clustering. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

- Polk, S.L.; Murphy, J.M. Multiscale clustering of hyperspectral images through spectral-spatial diffusion geometry. In Proceedings of the International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4688–4691.

- Haghverdi, L.; Büttner, M.; Wolf, F.A.; Buettner, F.; Theis, F.J. Diffusion pseudotime robustly reconstructs lineage branching. Nat. Methods 2016, 13, 845–848.

- Van Dijk, D.; Sharma, R.; Nainys, J.; Yim, K.; Kathail, P.; Carr, A.J.; Burdziak, C.; Moon, K.R.; Chaffer, C.L.; Pattabiraman, D. Recovering gene interactions from single-cell data using data diffusion. Cell 2018, 174, 716–729.

- Zhao, Z.; Singer, A. Rotationally invariant image representation for viewing direction classification in cryo-EM. J. Struct. Biol. 2014, 186, 153–166.

- Moon, K.R.; van Dijk, D.; Wang, Z.; Gigante, S.; Burkhardt, D.B.; Chen, W.S.; Yim, K.; van den Elzen, A.; Hirn, M.J.; Coifman, R.R. Visualizing structure and transitions in high-dimensional biological data. Nat. Biotechnol. 2019, 37, 1482–1492.

- Rohrdanz, M.A.; Zheng, W.; Maggioni, M.; Clementi, C. Determination of reaction coordinates via locally scaled diffusion map. J. Chem. Phys. 2011, 134, 124116.

- Zheng, W.; Rohrdanz, M.A.; Maggioni, M.; Clementi, C. Polymer reversal rate calculated via locally scaled diffusion map. J. Chem. Phys. 2011, 134, 144109.

- Chen, W.; Ferguson, A.L. Molecular enhanced sampling with autoencoders: On-the-fly collective variable discovery and accelerated free energy landscape exploration. J. Comput. Chem. 2018, 39, 2079–2102.

- Coifman, R.R.; Lafon, S.; Lee, A.B.; Maggioni, M.; Nadler, B.; Warner, F.; Zucker, S.W. Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proc. Natl. Acad. Sci. USA 2005, 102, 7426–7431.

- Nadler, B.; Lafon, S.; Coifman, R.R.; Kevrekidis, I.G. Diffusion maps, spectral clustering and reaction coordinates of dynamical systems. Appl. Comput. Harmon. Anal. 2006, 21, 113–127.

- Chan, T.; Ma, W.; Ambikapathi, A.; Chi, C. A simplex volume maximization framework for hyperspectral endmember extraction. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4177–4193.

- Winter, M.E. N-FINDR: An algorithm for fast autonomous spectral end-member determination in hyperspectral data. In Imaging Spectrometry V; SPIE: Philadelphia, PA, USA, 1999; Volume 3753, pp. 266–275.

- Manolakis, D.; Siracusa, C.; Shaw, G. Hyperspectral subpixel target detection using the linear mixing model. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1392–1409.

- Zhao, X.L.; Wang, F.; Huang, T.Z.; Ng, M.K.; Plemmons, R.J. Deblurring and sparse unmixing for hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4045–4058.

- Berisha, S.; Nagy, J.G.; Plemmons, R.J. Deblurring and sparse unmixing of hyperspectral images using multiple point spread functions. SIAM J. Sci. Comput. 2015, 37, S389–S406.

- Wang, L.; Feng, Y.; Gao, Y.; Wang, Z.; He, M. Compressed sensing reconstruction of hyperspectral images based on spectral unmixing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1266–1284.

- Cerra, D.; Müller, R.; Reinartz, P. Noise reduction in hyperspectral images through spectral unmixing. IEEE Geosci. Remote Sens. Lett. 2013, 11, 109–113.

- Rasti, B.; Scheunders, P.; Ghamisi, P.; Licciardi, G.; Chanussot, J. Noise reduction in hyperspectral imagery: Overview and application. Remote Sens. 2018, 10, 482.

- Rasti, B.; Koirala, B.; Scheunders, P.; Ghamisi, P. How hyperspectral image unmixing and denoising can boost each other. Remote Sens. 2020, 12, 1728.

- Ertürk, A.; Güllü, M.K.; Çeşmeci, D.; Gerçek, D.; Ertürk, S. Spatial resolution enhancement of hyperspectral images using unmixing and binary particle swarm optimization. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2100–2104.

- Bendoumi, M.A.; He, M.; Mei, S. Hyperspectral image resolution enhancement using high-resolution multispectral image based on spectral unmixing. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6574–6583.

- Kordi Ghasrodashti, E.; Karami, A.; Heylen, R.; Scheunders, P. Spatial resolution enhancement of hyperspectral images using spectral unmixing and Bayesian sparse representation. Remote Sens. 2017, 9, 541.

- Villa, A.; Chanussot, J.; Benediktsson, J.A.; Jutten, C. Spectral unmixing for the classification of hyperspectral images at a finer spatial resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2010, 5, 521–533.

- Dópido, I.; Villa, A.; Plaza, A.; Gamba, P. A quantitative and comparative assessment of unmixing-based feature extraction techniques for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 421–435.

- Ertürk, A.; Plaza, A. Informative change detection by unmixing for hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1252–1256.

- Liu, S.; Bruzzone, L.; Bovolo, F.; Du, P. Unsupervised multitemporal spectral unmixing for detecting multiple changes in hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2733–2748.

- Camalan, S.; Cui, K.; Pauca, V.P.; Alqahtani, S.; Silman, M.; Chan, R.; Plemmons, R.J.; Dethier, E.N.; Fernandez, L.E.; Lutz, D.A. Change detection of Amazonian alluvial gold mining using deep learning and Sentinel-2 imagery. Remote Sens. 2022, 14, 1746.

- Li, H.; Wu, K.; Xu, Y. An Integrated Change Detection Method Based on Spectral Unmixing and the CNN for Hyperspectral Imagery. Remote Sens. 2022, 14, 2523.

- Qu, Y.; Wang, W.; Guo, R.; Ayhan, B.; Kwan, C.; Vance, S.; Qi, H. Hyperspectral anomaly detection through spectral unmixing and dictionary-based low-rank decomposition. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4391–4405.

- Ma, D.; Yuan, Y.; Wang, Q. Hyperspectral anomaly detection via discriminative feature learning with multiple-dictionary sparse representation. Remote Sens. 2018, 10, 745.

- Somers, B.; Asner, G.P.; Tits, L.; Coppin, P. Endmember variability in spectral mixture analysis: A review. Remote Sens. Environ. 2011, 115, 1603–1616.

- Quintano, C.; Fernández-Manso, A.; Shimabukuro, Y.E.; Pereira, G. Spectral unmixing. Int. J. Remote Sens. 2012, 33, 5307–5340.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Heylen, R.; Parente, M.; Gader, P. A review of nonlinear hyperspectral unmixing methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1844–1868.

- Borsoi, R.; Imbiriba, T.; Bermudez, J.C.; Richard, C.; Chanussot, J.; Drumetz, L.; Tourneret, J.Y.; Zare, A.; Jutten, C. Spectral Variability in Hyperspectral Data Unmixing: A Comprehensive Review. IEEE Geosci. Remote Sens. Mag. 2021, 9, 223–270.