1. Introduction

In recent years, image interpretation and classification have emerged as crucial research areas within the remote sensing community [1,2]. In particular, ship image classification has attracted substantial attention from researchers, driven by the need for efficient methods in applications such as maritime security, traffic monitoring, oil spill detection, and the prevention of illegal fishing [3]. However, traditional approaches to ship monitoring, such as the Automatic Identification System (AIS) and Constant False Alarm Rate (CFAR) detection, are limited by factors such as sea clutter and vessels disabling their transponders [4]. These limitations call for more effective techniques. Over the past decade, deep learning approaches based on Convolutional Neural Networks (CNNs) have made remarkable progress in image classification and object detection [5,6], and previous studies have shown the success of CNN-based solutions for ship classification using Synthetic Aperture Radar (SAR) and optical satellite imagery. Despite these advances, ship classification and detection remain challenging because of the spatial arrangement of geospatial objects, complex backgrounds, and the resolution limits of sensor platforms [7,8]. Moreover, existing works rely on homogeneous datasets, either SAR or optical, which limits data diversity. There is therefore a need for solutions that can exploit diverse data modalities and remain robust against complex maritime backgrounds. To address these challenges, this paper introduces a novel dataset, termed Heterogeneous Ship data, and a new technique called the Spatial–Channel Attention with Bilinear Pooling Network (SCABPNet).

The term “heterogeneous” refers to the combination of SAR and optical satellite imagery within a single dataset. By merging the two modalities, the dataset offers a broader range of data and richer, more diverse information for ship classification. The motivation behind Heterogeneous Ship data is as follows. First, combining SAR and optical data overcomes the limitations of relying on a single data type. SAR images cover a wide area of the surroundings regardless of time of day, weather conditions, or altitude [9,10], whereas optical images provide rich color and texture information. By merging these two data sources, we created a universal dataset that spans varied acquisition scenarios, making ship classification models more robust in real-world scenarios.

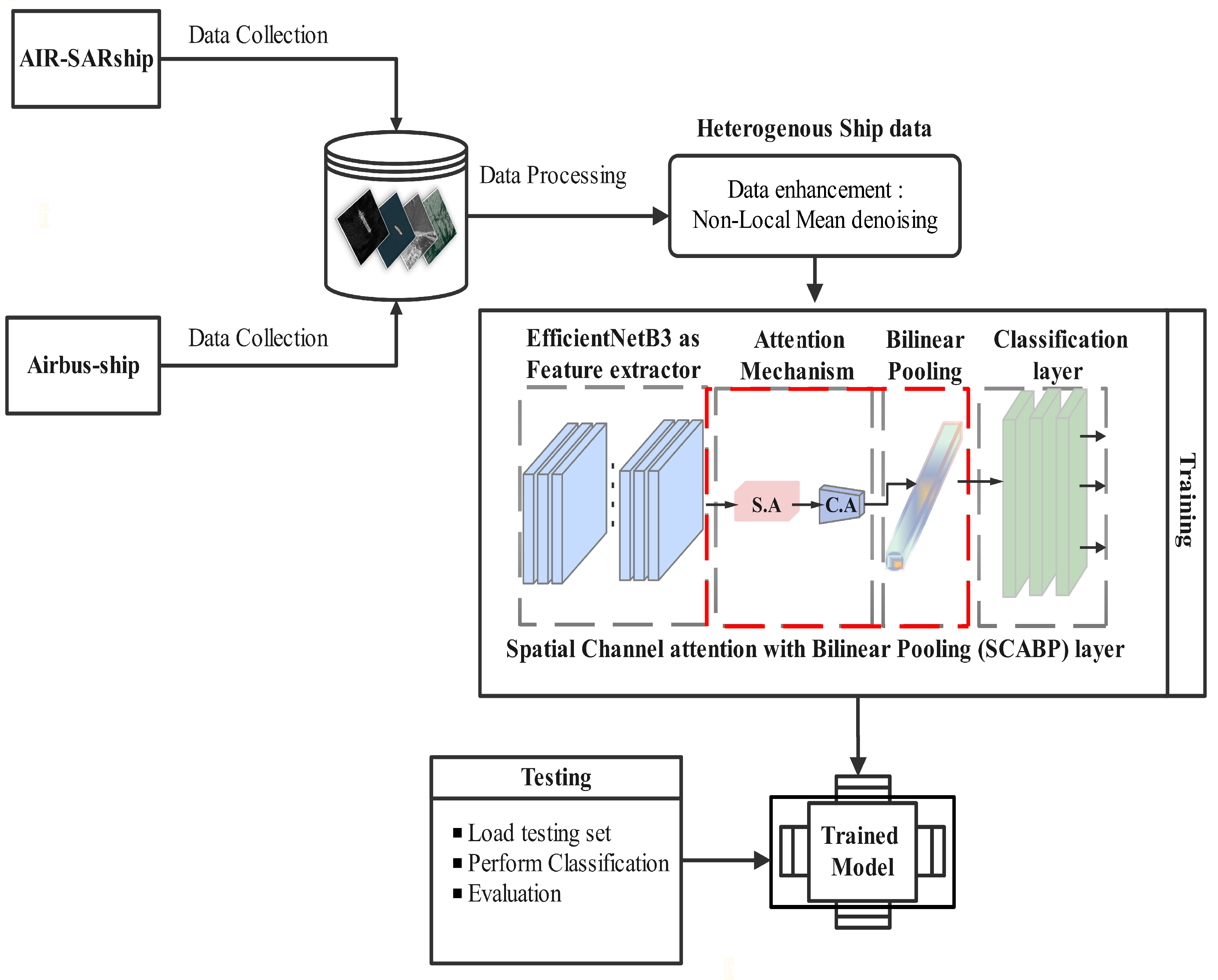

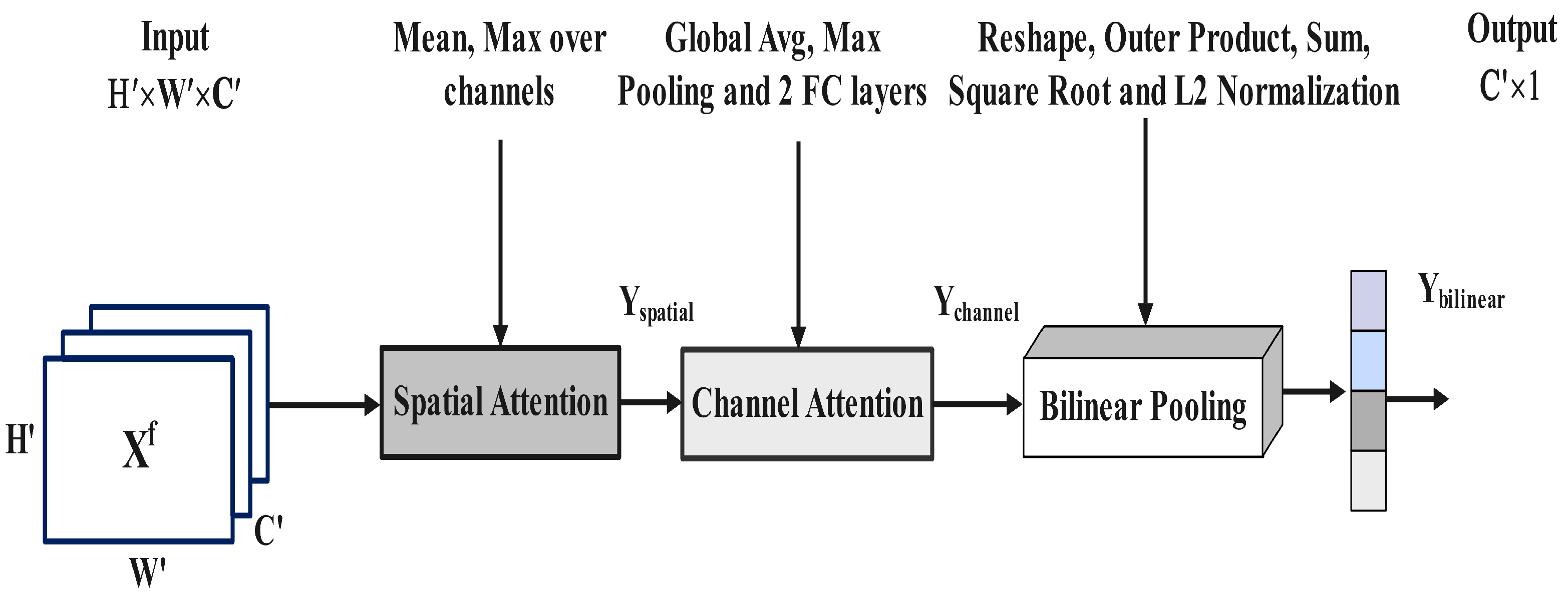

In addition, to overcome the challenge of complex backgrounds in ship image datasets, we propose an attention-based bilinear pooling approach called SCABPNet. This approach combines spatial and channelwise information to distinguish ships from complex backgrounds, thereby improving classification performance. The use of Heterogeneous Ship data and the SCABPNet approach offers several advantages. First, combining SAR and optical satellite imagery provides a more comprehensive representation of ships, incorporating complementary information from different modalities [11]. This fusion enhances the model’s ability to capture diverse ship characteristics, improving classification accuracy. Second, SCABPNet addresses the challenge of complex backgrounds in target classification and detection: it distinguishes ships from sea clutter, coastlines, and other irrelevant background elements. By incorporating spatial and channel attention mechanisms into a pooling technique, SCABPNet focuses on salient ship-specific features while suppressing background noise and feature redundancy, leading to more accurate ship classification. Finally, SCABPNet addresses the limitations of traditional ship monitoring techniques such as AIS and CFAR: by combining attention mechanisms with pooling, it maximizes the discriminative power of the Heterogeneous Ship data and improves classification performance. This represents a key advantage of our approach over conventional CNN models. The main contributions of our research are as follows:

Heterogeneous Ship dataset: We present a novel dataset that combines SAR and optical satellite imagery, offering a richer and more diverse set of features for ship classification and addressing the limitations of existing homogeneous datasets.

SCABPNet model: A novel model that integrates spatial–channel attention with bilinear pooling to learn discriminative, ship-specific features for classification. Detailed ablation studies further demonstrate the efficacy of the model.

Comprehensive analysis: We conducted extensive experiments with the proposed SCABPNet model on both the Heterogeneous Ship data and the MSTAR dataset and compared its performance against existing state-of-the-art models, demonstrating the superiority of SCABPNet.

Through this research, we aimed to provide a holistic solution to the challenges of ship image classification in remote sensing, thereby contributing to the advancement of this important field. The rest of this paper is arranged as follows:

Section 2 reviews related work.

Section 3 introduces the proposed Heterogeneous Ship data.

Section 4 details the methodology, including the different components of SCABPNet.

Section 5 presents the experiments and discusses their results, including the potential implications of our research in real-world scenarios.

Section 6 concludes the paper and suggests topics for future research.

2. Related Work

Ship image classification has seen significant advancements in recent years, with researchers exploring various approaches to improve classification accuracy. These approaches can be broadly categorized into three areas: using both Synthetic Aperture Radar (SAR) and optical datasets, incorporating attention mechanisms, and integrating improved pooling techniques.

Integration of SAR and optical datasets: The motivation for integrating SAR and optical datasets lies in their complementary nature: SAR images excel at capturing the structural details of ships, while optical images provide rich color and texture information. In [12], Kanjir et al. surveyed the fusion of SAR and optical data, reviewing several studies that have capitalized on this integration over the past decade. A notable development in this field is the unified vessel detection algorithm of Jubelin and Khenchaf (2014), which works effectively on both SAR and optical imagery. They reported that a single detection algorithm can streamline development and operation, a view that contrasts with Kanjir et al.’s survey, which argues that dedicated algorithms tailored to the unique attributes of each sensor type might deliver superior results. Our SCABPNet model contributes to this discussion with a novel approach that aims to leverage the strengths of both modalities within a unified framework. It challenges the view presented by Kanjir et al. by demonstrating that a well-designed integrated system can effectively bridge the diverse outputs of SAR and optical sensors, thereby enhancing ship detection and classification performance. Additionally, Rostami et al. [13] proposed a few-shot learning approach that transfers cross-domain knowledge from an optical dataset (the source domain) to address a task in the SAR domain (the target domain). Other studies have also used both modalities for classification tasks beyond maritime applications, such as land cover and agriculture. Shakya et al. [14] and Sreedhar et al. [15] demonstrated the broader utility of SAR–optical data fusion in these areas: Shakya et al. emphasized gradient-based data fusion for classification, while Sreedhar et al. combined SAR’s all-weather imaging with the multispectral capabilities of optical data for time series analysis in crop classification. Further contributions in this evolving field include Prabhakar et al.’s method for refining noisy ground truth labels in SAR and optical image fusion [16] and He et al.’s oriented ship detector for remote sensing imagery [17], underscoring the continuous innovation in and application of these integrated techniques.

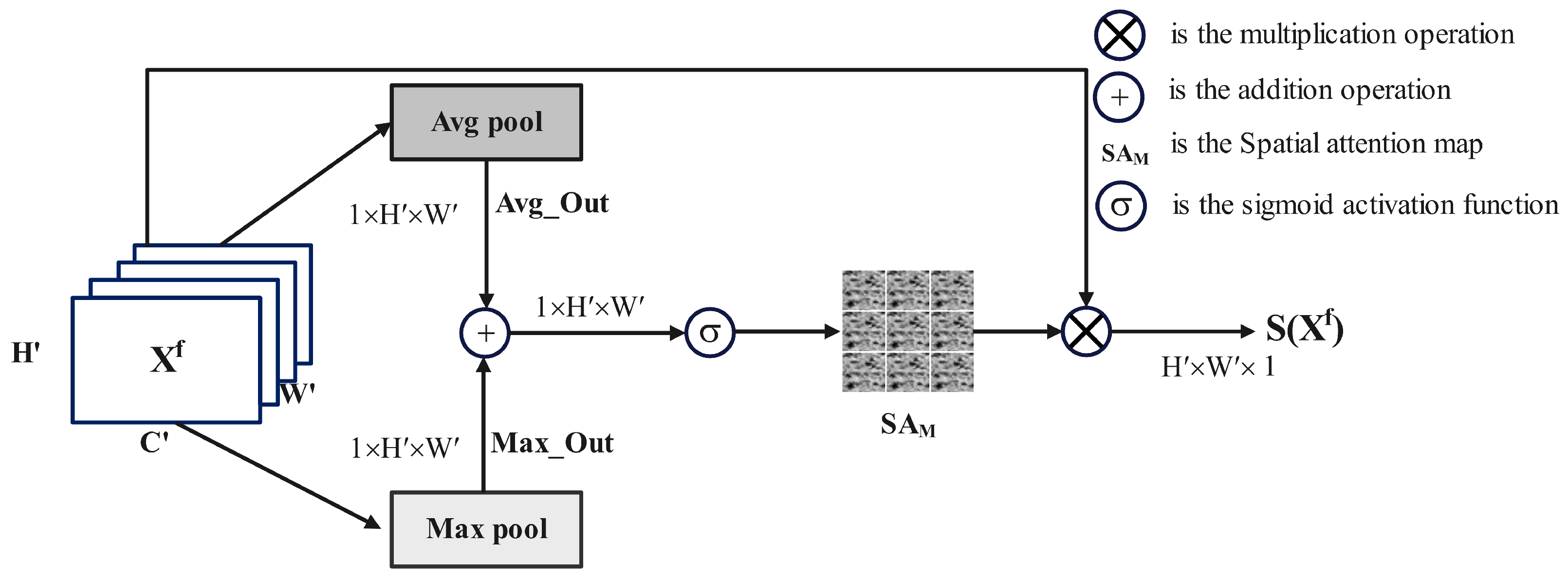

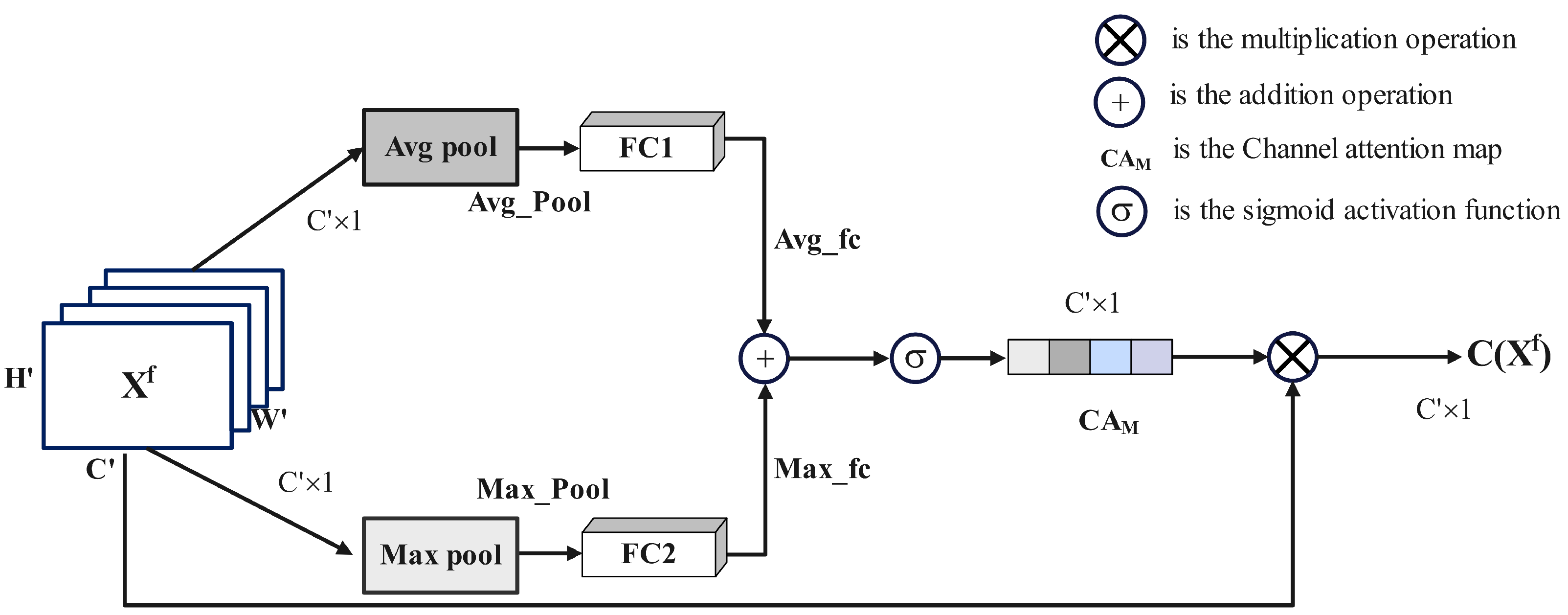

Advent of attention mechanisms: Attention mechanisms have emerged as powerful tools in ship image classification. They enable models to focus selectively on relevant target-specific features while suppressing background noise [18]. By capturing spatial and channelwise dependencies, they allow a model to attend to the most discriminative regions and features. Several studies have explored attention-based approaches for ship image classification. For instance, Cui et al. [10] proposed MAP-Net, an image-based convolutional network with Spatial Pyramid Aggregated Pooling (SPAP) and an attention mechanism, which learns features that are invariant, distinguishable, repeatable, and suitable for cross-modal image matching. Evaluated on five sets of multisource, multiresolution SAR and optical images, it outperformed state-of-the-art methods. Additionally, Zhao et al. [19] proposed a spatial attention mechanism to highlight informative regions in ship images, while Hu et al. [20] introduced a channel attention mechanism to emphasize relevant features. Both studies showed promising improvements in ship classification accuracy by capturing fine-grained details and enhancing the model’s discriminative capacity. Further, Sun et al. [21] implemented a Strong-Scattering-Point-Aware Network (SPAN), which recognizes ship categories based on the distribution of strong scattering points, underscoring the potential of attention mechanisms for ship detection and classification in SAR images. In [22], Zhao et al. presented a multitask learning framework for object recognition and detection in SAR images, highlighting the potential of attention mechanisms for recognizing small, weak, and dense targets.
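To make the spatial and channel attention described above concrete, the following is a minimal NumPy sketch of CBAM-style attention: channel attention followed by spatial attention, as in CBAM, the module used by the Baseline + CBAM model later in this paper. It is not the SCABP layer or the exact formulation of any cited study. The reduction ratio r = 8, the 7 × 7 spatial kernel, and the random, untrained weights are illustrative assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """CBAM-style channel attention on a feature map x of shape (H, W, C).

    w1 (C, C // r) and w2 (C // r, C) form a shared two-layer MLP.
    """
    def mlp(v):
        return np.maximum(v @ w1, 0.0) @ w2          # ReLU between the two layers

    avg = x.mean(axis=(0, 1))                        # (C,) average-pooled descriptor
    mx = x.max(axis=(0, 1))                          # (C,) max-pooled descriptor
    weights = sigmoid(mlp(avg) + mlp(mx))            # (C,) channel weights in (0, 1)
    return x * weights                               # broadcast over H and W


def spatial_attention(x, kernel):
    """CBAM-style spatial attention; kernel has shape (k, k, 2) with k odd."""
    desc = np.stack([x.mean(axis=2), x.max(axis=2)], axis=-1)   # (H, W, 2)
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(desc, ((pad, pad), (pad, pad), (0, 0)))     # zero padding
    h, w = x.shape[:2]
    logits = np.empty((h, w))
    for i in range(h):                               # naive "same" 2-D convolution
        for j in range(w):
            logits[i, j] = np.sum(padded[i:i + k, j:j + k, :] * kernel)
    return x * sigmoid(logits)[..., None]            # broadcast over channels


rng = np.random.default_rng(0)
H, W, C, r = 14, 14, 32, 8
features = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C, C // r)) * 0.1
w2 = rng.standard_normal((C // r, C)) * 0.1
kernel = rng.standard_normal((7, 7, 2)) * 0.1

refined = spatial_attention(channel_attention(features, w1, w2), kernel)
print(refined.shape)  # (14, 14, 32)
```

Because both attention maps lie in (0, 1), the refined features can only be attenuated, never amplified: attention here acts as a learned soft mask that down-weights background channels and locations.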

Improved pooling techniques: Bilinear techniques have gained attention in ship image classification and detection because they can capture complex interactions between feature channels across spatial locations. Bilinear pooling, in particular, models pairwise feature interactions by computing the outer product of the channel feature vectors at each spatial location and then summing (pooling) these products over all locations. This technique has proven effective in ship image classification tasks. For example, Li et al. [23] introduced an improved bilinear pooling technique to construct a compact bilinear CNN model, using a joint pooling approach to reduce the dimensionality of bilinear features so that they could be integrated into a bilinear CNN framework for end-to-end optimization. Furthermore, He et al. [24] developed a Group Bilinear Convolutional Neural Network (GBCNN) to extract discriminative second-order representations of ship targets from pairwise Vertical–Horizontal (VH) and Vertical–Vertical (VV) polarization SAR images, achieving state-of-the-art performance. Similarly, Lin et al. [25,26,27] applied bilinear pooling to ship classification and demonstrated its ability to capture rich interactions and enhance discriminative power. Additionally, Li et al. [28] introduced a Multimodal Bilinear Fusion Network (MBFNet) for hyperspectral and SAR image classification, achieving effective land cover classification. These studies highlight the effectiveness of bilinear techniques in image classification.
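The bilinear pooling operation described above can be sketched in NumPy as follows. The sketch assumes the classic bilinear CNN formulation: the pooled matrix is the sum over locations of per-location outer products, followed by the signed square root and L2 normalization that commonly accompany it. It illustrates the general technique, not the SCABP layer or the compact variant of [23], and the feature map sizes are arbitrary.

```python
import numpy as np


def bilinear_pool(feat_a, feat_b):
    """Bilinear pooling of feature maps with shapes (H, W, Ca) and (H, W, Cb).

    Sums the outer products of the per-location feature vectors over all
    H * W locations, then applies the signed square root and L2
    normalization commonly used with bilinear CNNs.
    """
    h, w, ca = feat_a.shape
    cb = feat_b.shape[2]
    a = feat_a.reshape(h * w, ca)            # one row per spatial location
    b = feat_b.reshape(h * w, cb)
    pooled = a.T @ b                         # (Ca, Cb) = sum_l outer(a_l, b_l)
    z = pooled.reshape(-1)                   # flatten to a Ca * Cb vector
    z = np.sign(z) * np.sqrt(np.abs(z))      # signed square root
    return z / (np.linalg.norm(z) + 1e-12)   # L2 normalization


rng = np.random.default_rng(0)
x = rng.standard_normal((7, 7, 16))
descriptor = bilinear_pool(x, x)             # symmetric case: same features twice
print(descriptor.shape)  # (256,)
```

The descriptor grows quadratically with the channel count: with 512-channel features it would already have 512 × 512 = 262,144 entries, which is why compact variants such as [23] reduce its dimensionality.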

Despite these advances, challenges in ship image classification remain, including the need for more effective integration of SAR and optical datasets, more sophisticated attention mechanisms, and more powerful bilinear techniques. Motivated by these challenges and inspired by previous work, we propose the SCABPNet model, which combines these techniques to further enhance classification performance.

3. Proposed Heterogeneous Ship Data

Remote sensing is inherently complicated by the need to handle diverse datasets. In ship image classification, this complexity is greatly amplified by Heterogeneous Ship data. The proposed dataset is intended to serve as a robust, diverse platform that captures the complexities and distinct features of real-world scenarios. However, its richness and diversity also introduce several challenges that require further investigation.

Variability of ship types: Our dataset encompasses a range of ship types, from transport vessels to oil tankers and fishing boats. Each category has its own features and structural complexities, making the classification task more challenging than it may first appear. Differences in size, structural design, and function inevitably introduce a high degree of intra-class variability [29,30].

Geographical and environmental differences: The images in our dataset were sourced from diverse geographical regions, each with its own challenges. Variations in coastlines, water turbidity, lighting conditions, and even man-made buildings can drastically alter the appearance of ships in the imagery [31]. Further, changes in weather conditions, sea state, and seasonal effects may introduce inconsistencies across images, complicating the classification task [3].

Interference and clutter: The inclusion of both offshore and, notably, inshore ship images introduces the substantial challenge of land interference. Ships near the coast may be obscured by coastal infrastructure, or their features may blend with reflections from adjacent terrain, making them harder to distinguish [32].

Heterogeneity of sensor data: With SAR data sourced from satellites such as Gaofen-3 and Sentinel-1 [33] and optical images from the Airbus Ship Detection dataset [34,35], the imaging mechanisms differ sharply. These sources capture imagery at different resolutions, frequencies, and imaging modes. While SAR provides all-weather, day-and-night imaging, it also introduces speckle noise. Conversely, optical images, although rich in color information, can be obscured by cloud cover and affected by varying lighting conditions [36,37].

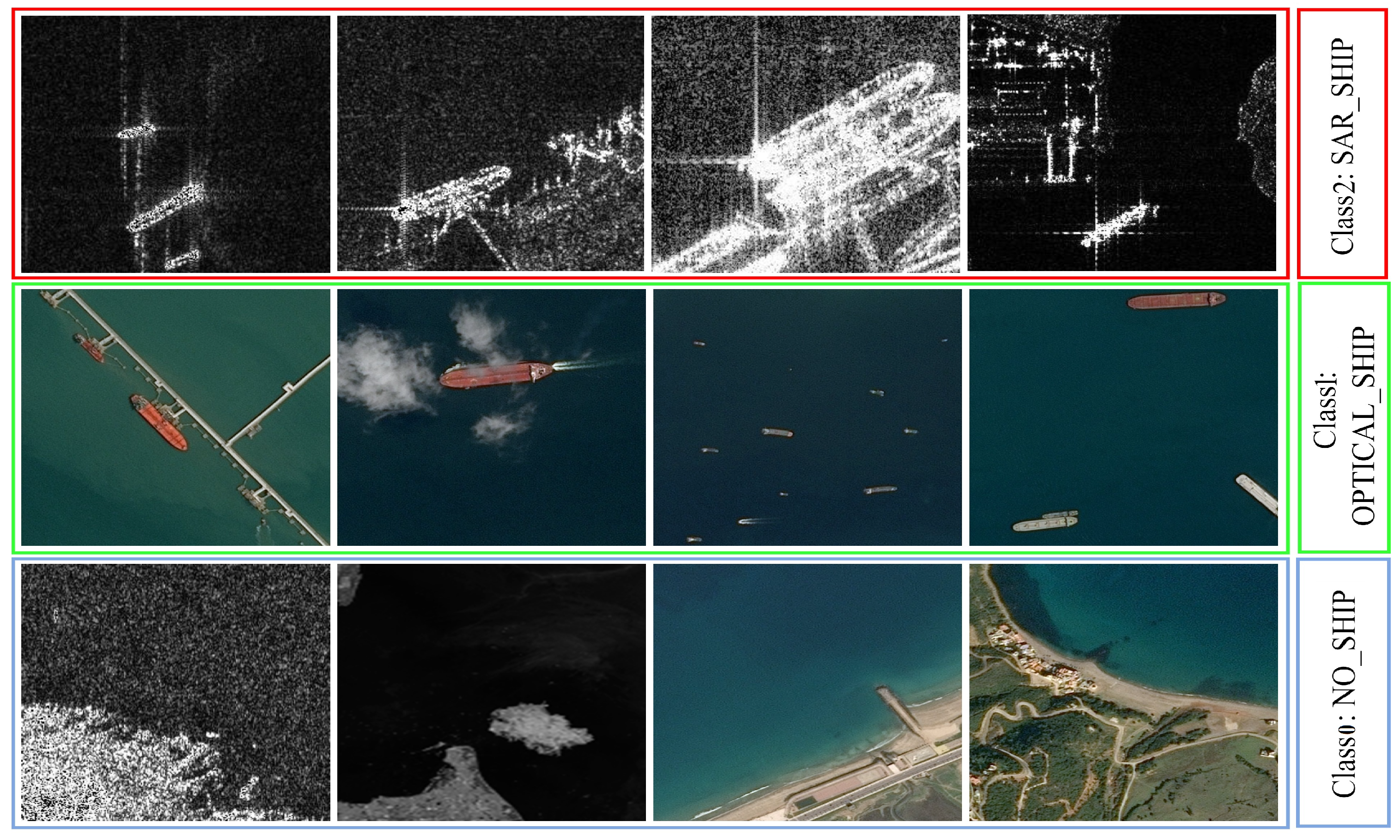

To sum up, the proposed dataset is made up of three classes: no ship (Class 0), optical ship (Class 1), and SAR ship (Class 2). It contains 4962 images, with 20% set aside for validation and the rest for training.

Table 1 shows the partition of the dataset, with a balanced distribution of images across the three categories.

Figure 1 provides a visualization of the proposed Heterogeneous Ship data, with different colors representing different classes.

To address the above challenges and improve image quality, we applied image pre-processing and denoising. For denoising, we used the Non-Local Means Algorithm, chosen for its effectiveness in reducing noise while preserving important image details. The algorithm has limits, however: while it reduces noise, preserving important ship features in the imagery remains a challenge, and because the inherent noise characteristics of SAR (speckle) differ from those of optical images, its settings must be tuned separately for each modality. The Non-Local Means Algorithm replaces the value of each pixel with a weighted average of the pixels within a search window, where each weight reflects how similar the neighborhood (patch) around that pixel is to the neighborhood around the pixel being denoised [38]. The Non-Local Means Algorithm used during the experiment is described in Algorithm 1. Its mathematical formulation is as follows:

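Given a noisy image $v = \{v(i) \mid i \in I\}$, the estimated value of pixel $i$ is computed as

$$NL[v](i) = \sum_{j \in I} w(i,j)\, v(j),$$

where $I$ is the image domain, $i$ and $j$ are pixel locations in the noisy image, and $w(i,j)$ is the similarity weight between pixels $i$ and $j$, with $0 \le w(i,j) \le 1$ and $\sum_{j} w(i,j) = 1$. The weight $w(i,j)$ is computed as

$$w(i,j) = \frac{1}{Z(i)} \exp\!\left(-\frac{\lVert v(\mathcal{N}_i) - v(\mathcal{N}_j) \rVert_{2,a}^{2}}{h^{2}}\right), \qquad Z(i) = \sum_{j} \exp\!\left(-\frac{\lVert v(\mathcal{N}_i) - v(\mathcal{N}_j) \rVert_{2,a}^{2}}{h^{2}}\right),$$

where $Z(i)$ is a normalization factor, $a > 0$ is the standard deviation of the Gaussian kernel, $h$ is the smoothing parameter, and $\lVert v(\mathcal{N}_i) - v(\mathcal{N}_j) \rVert_{2,a}^{2}$ is the Gaussian-weighted Euclidean distance between the pixel intensities of the local neighborhoods $\mathcal{N}_i$ and $\mathcal{N}_j$.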

Algorithm 1. Non-Local Means Algorithm.
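A minimal, unoptimized NumPy sketch of the pixelwise Non-Local Means estimate follows. It restricts the sum over the image domain to a search window, which is common in practice. The patch radius, search radius, Gaussian standard deviation a, and smoothing parameter h are illustrative assumptions, not the settings used in the experiments.

```python
import numpy as np


def gaussian_kernel(patch_radius, a):
    """Normalized 2-D Gaussian weights over a (2r + 1) x (2r + 1) patch."""
    ax = np.arange(-patch_radius, patch_radius + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * a ** 2))
    return g / g.sum()


def nl_means(v, h, patch_radius=1, search_radius=5, a=1.0):
    """Pixelwise Non-Local Means on a 2-D grayscale float image v.

    The sum over the image domain I is restricted to a search window of
    size (2 * search_radius + 1)^2 around each pixel.
    """
    kernel = gaussian_kernel(patch_radius, a)
    pad = patch_radius + search_radius
    p = np.pad(v, pad, mode="reflect")
    rows, cols = v.shape
    out = np.empty_like(v, dtype=float)
    for i in range(rows):
        for j in range(cols):
            ci, cj = i + pad, j + pad                        # centre in padded image
            ref = p[ci - patch_radius:ci + patch_radius + 1,
                    cj - patch_radius:cj + patch_radius + 1]
            weights, values = [], []
            for di in range(-search_radius, search_radius + 1):
                for dj in range(-search_radius, search_radius + 1):
                    ni, nj = ci + di, cj + dj
                    cand = p[ni - patch_radius:ni + patch_radius + 1,
                             nj - patch_radius:nj + patch_radius + 1]
                    d2 = np.sum(kernel * (ref - cand) ** 2)  # ||.||^2_{2,a}
                    weights.append(np.exp(-d2 / h ** 2))
                    values.append(p[ni, nj])
            weights = np.asarray(weights)
            out[i, j] = np.dot(weights, values) / weights.sum()  # divide by Z(i)
    return out


rng = np.random.default_rng(0)
clean = np.zeros((24, 24))
clean[6:18, 5:19] = 1.0                       # bright rectangle standing in for a ship
noisy = clean + 0.2 * rng.standard_normal(clean.shape)
denoised = nl_means(noisy, h=0.3)
print("MSE noisy:   ", np.mean((noisy - clean) ** 2))
print("MSE denoised:", np.mean((denoised - clean) ** 2))
```

Because SAR speckle behaves differently from the noise in optical images, h and the window sizes would be tuned separately per modality. For real image sizes, an optimized implementation such as OpenCV's `cv2.fastNlMeansDenoising` is far faster than this loop-based sketch.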

The Heterogeneous Ship data were substantially updated in this study: the dataset, which originally comprised 4630 images, was expanded to 5952 images. This expansion makes the dataset more robust and allows more comprehensive training and evaluation of the SCABPNet model. The updated dataset also revises the no ship category, which now contains equal proportions of SAR (50%) and optical (50%) images. Furthermore, we used Augmentor, a Python package for data augmentation, to balance the number of images in each class. These updates represent a significant enhancement over our previous dataset.
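Balancing classes by augmentation amounts to topping up each class to a common target count. The sketch below uses a hypothetical helper with made-up counts (see Table 1 for the actual split) to compute how many augmented images each class would need to match the largest class. The generation step with Augmentor is only indicated in comments, via its `Pipeline` class and `sample(n)` method; the folder names and operations shown there are assumptions.

```python
def augmentation_targets(class_counts):
    """Number of augmented images needed per class to match the largest class.

    class_counts: mapping from class name to current image count.
    """
    target = max(class_counts.values())
    return {name: target - count for name, count in class_counts.items()}


# Hypothetical counts for illustration only, not the paper's numbers.
counts = {"no_ship": 1500, "optical_ship": 1800, "sar_ship": 1400}
needed = augmentation_targets(counts)
print(needed)  # {'no_ship': 300, 'optical_ship': 0, 'sar_ship': 400}

# With Augmentor, each under-represented class folder (hypothetical paths)
# could then be topped up, for example:
#   p = Augmentor.Pipeline("data/sar_ship")
#   p.rotate(probability=0.7, max_left_rotation=10, max_right_rotation=10)
#   p.flip_left_right(probability=0.5)
#   p.sample(needed["sar_ship"])
```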

5. Experiments and Discussion

In this section, we provide an overview of the experiments conducted on the proposed model using the Heterogeneous Ship data. Additionally, we evaluated the effectiveness of SCABPNet by conducting the same experiments on the MSTAR dataset. We present the details of the experimental setup, the evaluation metrics, and the results. Furthermore, we conducted ablation studies to analyze the contributions of the SCABP layer. We also provide comparisons with the CBAM approach and conclude with a discussion.

5.1. Experimental Settings

Our SCABPNet model was implemented with the TensorFlow library, and its hyperparameters were carefully tuned to improve classification performance. The model takes inputs of size 224 × 224. Training used the Adam optimizer with 500 epochs and a batch size of 16; these values were chosen in preliminary experiments as the best balance between computational efficiency and model performance. The initial learning rate was set to 0.001 and decayed exponentially, with an optimizer clip value of 0.2. To mitigate overfitting, dropout rates of 0.55 and 0.25 were used. The image data generator applied rescaling (1/255), rotation (range = 10), width shift (range = 0.2), height shift (range = 0.2), shear (range = 0.2), zoom (range = 0.2), and horizontal flipping. Learning-rate adjustment used the ReduceLROnPlateau callback, monitoring ‘val_loss’ with a factor of 0.1, an epsilon of

, a patience of 10, a verbose of 1, and the mode set to ‘min’. A custom focal loss was utilized as the loss function during training.
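The exact form of the custom focal loss is not given here. The sketch below therefore assumes the standard multi-class focal loss, FL(p_t) = −α(1 − p_t)^γ log(p_t), where p_t is the predicted probability of the true class. The values γ = 2 and α = 0.25 are assumed common defaults, not the paper's settings, and NumPy is used for clarity instead of TensorFlow ops.

```python
import numpy as np


def categorical_focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean multi-class focal loss.

    y_true: one-hot labels, shape (N, K); y_pred: softmax probabilities, (N, K).
    gamma down-weights well-classified examples; alpha is a constant weight.
    """
    p = np.clip(y_pred, eps, 1.0 - eps)               # avoid log(0)
    p_t = np.sum(y_true * p, axis=1)                  # probability of the true class
    loss = -alpha * (1.0 - p_t) ** gamma * np.log(p_t)
    return loss.mean()


labels = np.array([[1, 0, 0], [0, 1, 0]], dtype=float)  # e.g., no ship, optical ship
probs = np.array([[0.9, 0.05, 0.05], [0.2, 0.7, 0.1]])
print(categorical_focal_loss(labels, probs))
```

With γ = 0 and α = 1 the expression reduces to ordinary cross-entropy. Increasing γ shrinks the contribution of confidently correct samples, so training concentrates on hard examples such as ships blending into coastal clutter.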

Table 3 summarizes the hyperparameters used in this experiment.

5.2. Evaluation Metrics

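The model’s performance was evaluated quantitatively using the classification report, the confusion matrix, Receiver Operating Characteristic (ROC) curves, and the Area Under the Curve (AUC). Precision (Pre), Recall (Rec), F1-score (F1), Accuracy (Acc), and ROC/AUC were calculated using the following equations:

$$\mathrm{Pre} = \frac{TP}{TP + FP}, \qquad \mathrm{Rec} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times \mathrm{Pre} \times \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}}, \qquad \mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{TPR}(t) = \frac{TP(t)}{TP(t) + FN(t)}, \qquad \mathrm{FPR}(t) = \frac{FP(t)}{FP(t) + TN(t)}, \qquad \mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}\; d(\mathrm{FPR})$$

The ROC curve is obtained by plotting the TPR against the FPR at different classification threshold settings.

The AUC provides an aggregate measure of model performance across all possible classification thresholds. Here, TP denotes True Positives, TN True Negatives, FP False Positives, and FN False Negatives; FPR is the False Positive Rate, TPR is the True Positive Rate, and t is the classification threshold.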
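The following NumPy sketch computes these metrics from a confusion matrix and a one-vs-rest AUC by sweeping the threshold t. It assumes support-weighted averaging across classes, as in the "weighted avg" row of scikit-learn's classification report, which the text does not state explicitly. The assumption is consistent with the reported recall equaling the accuracy for both models (97.67% and 95.45%): the support-weighted recall reduces to Σ_k TP_k / N, which is exactly the accuracy. The confusion matrix below is hypothetical.

```python
import numpy as np


def weighted_metrics(cm):
    """Support-weighted precision, recall, F1 and accuracy from a K x K
    confusion matrix cm, where cm[i, j] counts true class i predicted as j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    support = cm.sum(axis=1)                  # true instances per class
    predicted = cm.sum(axis=0)                # predictions per class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    w = support / support.sum()               # class weights = relative support
    return {
        "precision": float(w @ precision),
        "recall": float(w @ recall),
        "f1": float(w @ f1),
        "accuracy": float(tp.sum() / cm.sum()),
    }


def roc_auc(scores, labels):
    """Binary (one-vs-rest) AUC: sweep the threshold t over the distinct
    scores, then integrate TPR over FPR with the trapezoidal rule."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(-scores, kind="mergesort")
    scores, labels = scores[order], labels[order]
    # Last index of each run of equal scores: one ROC point per threshold t.
    distinct = np.r_[np.nonzero(np.diff(scores))[0], scores.size - 1]
    tps = np.cumsum(labels)[distinct]
    fps = np.cumsum(~labels)[distinct]
    tpr = np.r_[0.0, tps / labels.sum()]
    fpr = np.r_[0.0, fps / (~labels).sum()]
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


cm = np.array([[50, 2, 1], [3, 45, 2], [0, 1, 48]])  # hypothetical counts
m = weighted_metrics(cm)
print(m)
print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

For the three-class setting, roc_auc would be applied once per class, with that class as positive and the predicted probability for that class as the score.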

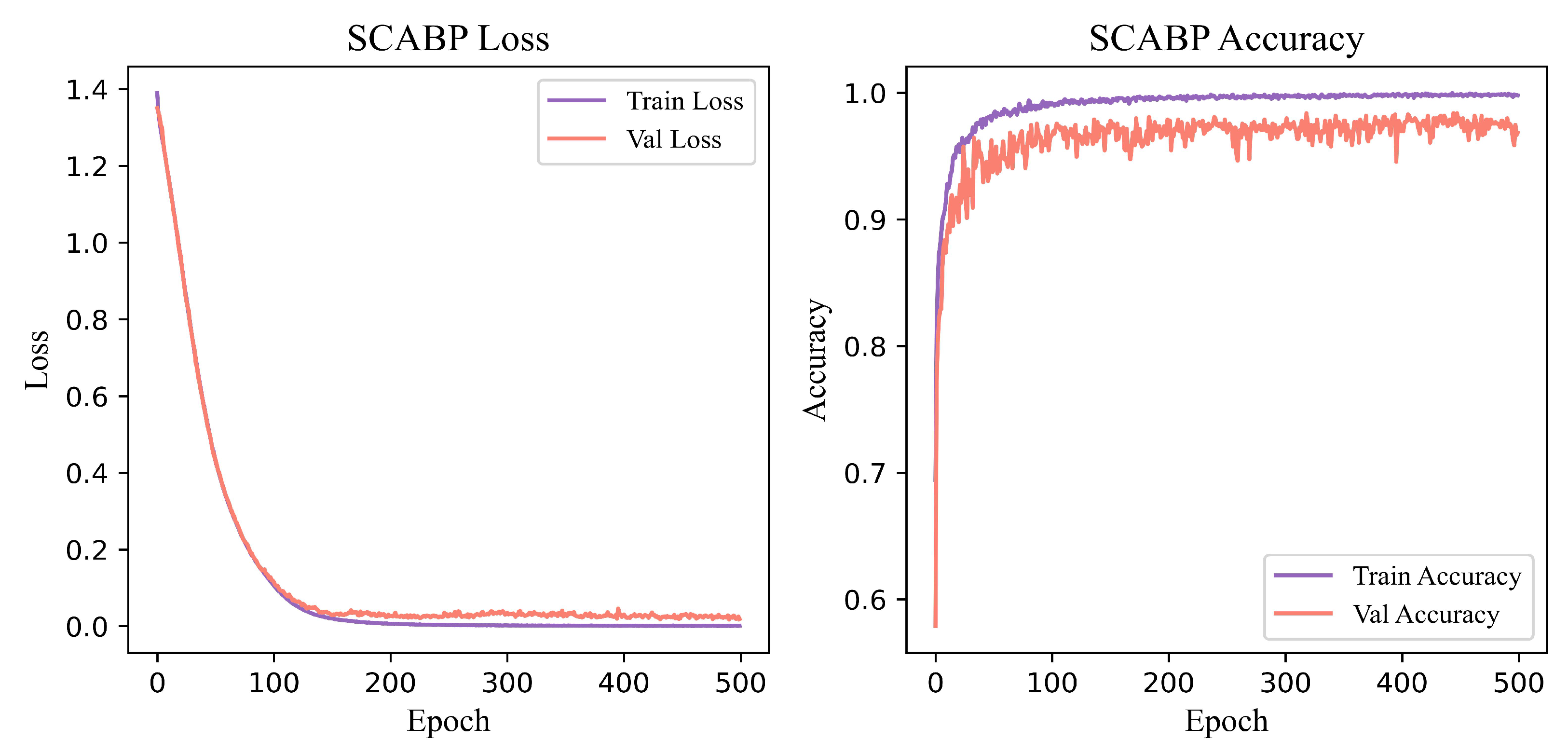

5.3. Experimental Results of Proposed Solution and Baseline + CBAM

This section highlights the results of SCABPNet’s performance on the proposed Heterogeneous Ship data and the Baseline + CBAM model. First, our SCABPNet achieved an accuracy of 97.67%, a precision of 97.78%, a recall of 97.67%, and an F1-score of 97.68%, as indicated in

Table 4, on the testing set. In

Figure 6, SCABPNet’s training curves show how the model converged during training. Second,the Baseline + CBAM model recorded an accuracy of 95.45%, a precision of 95.77%, a recall of 95.45%, and an F1-score of 95.46%, as indicated in

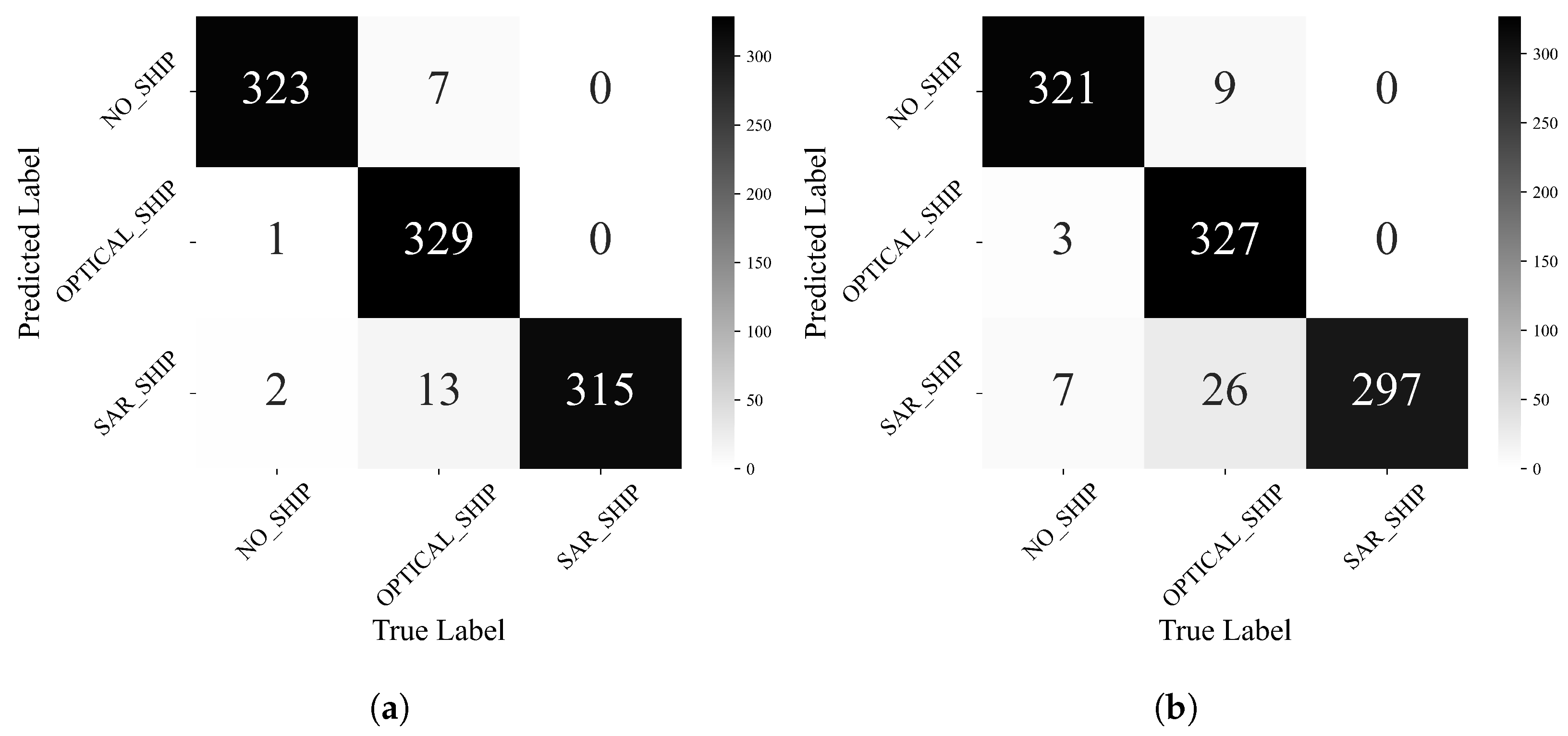

Table 5, on the same testing set. The confusion matrices in

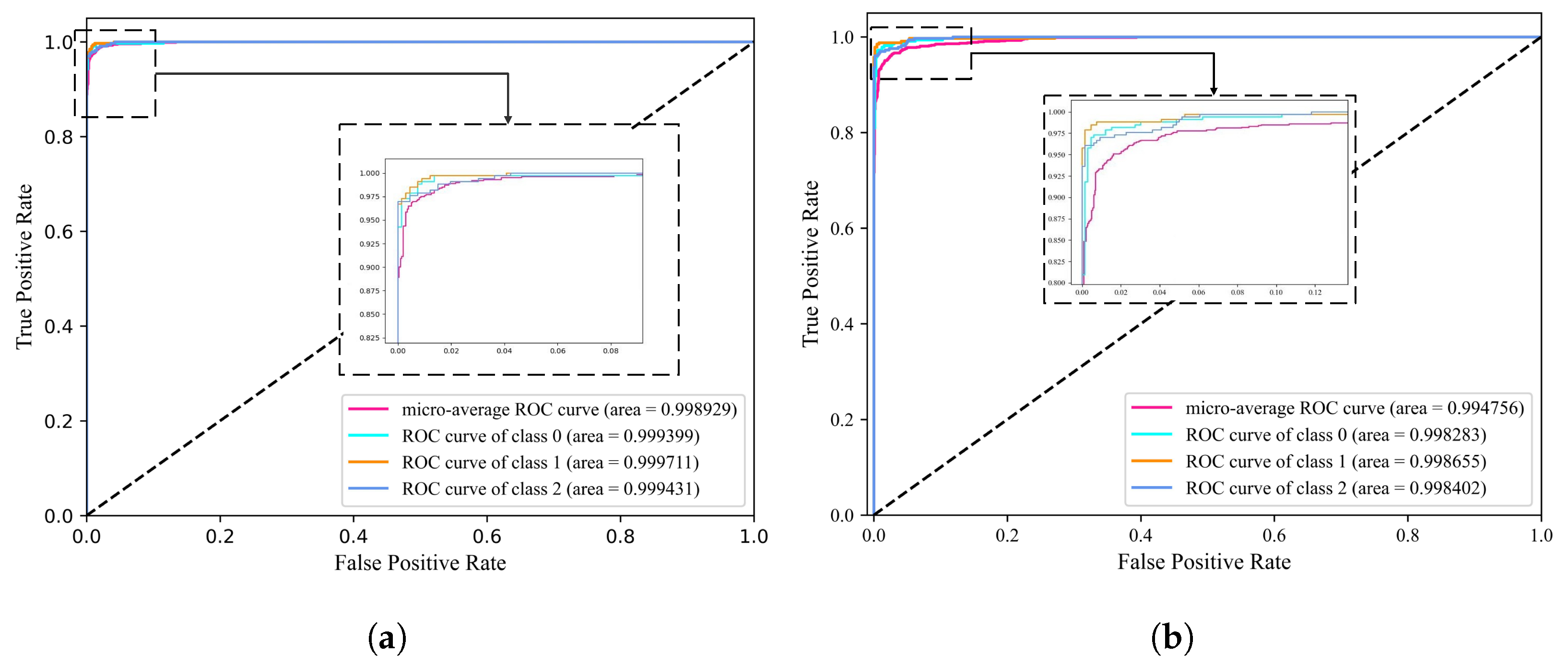

Figure 7 demonstrate reliable classification across all classes. The individual class performance under the Receiver Operating Characteristic (ROC) curve is graphically represented. The ROC curves in

Figure 8 ((a) for SCABPNet and (b) for the Baseline + CBAM model) indicate robust discrimination ability.

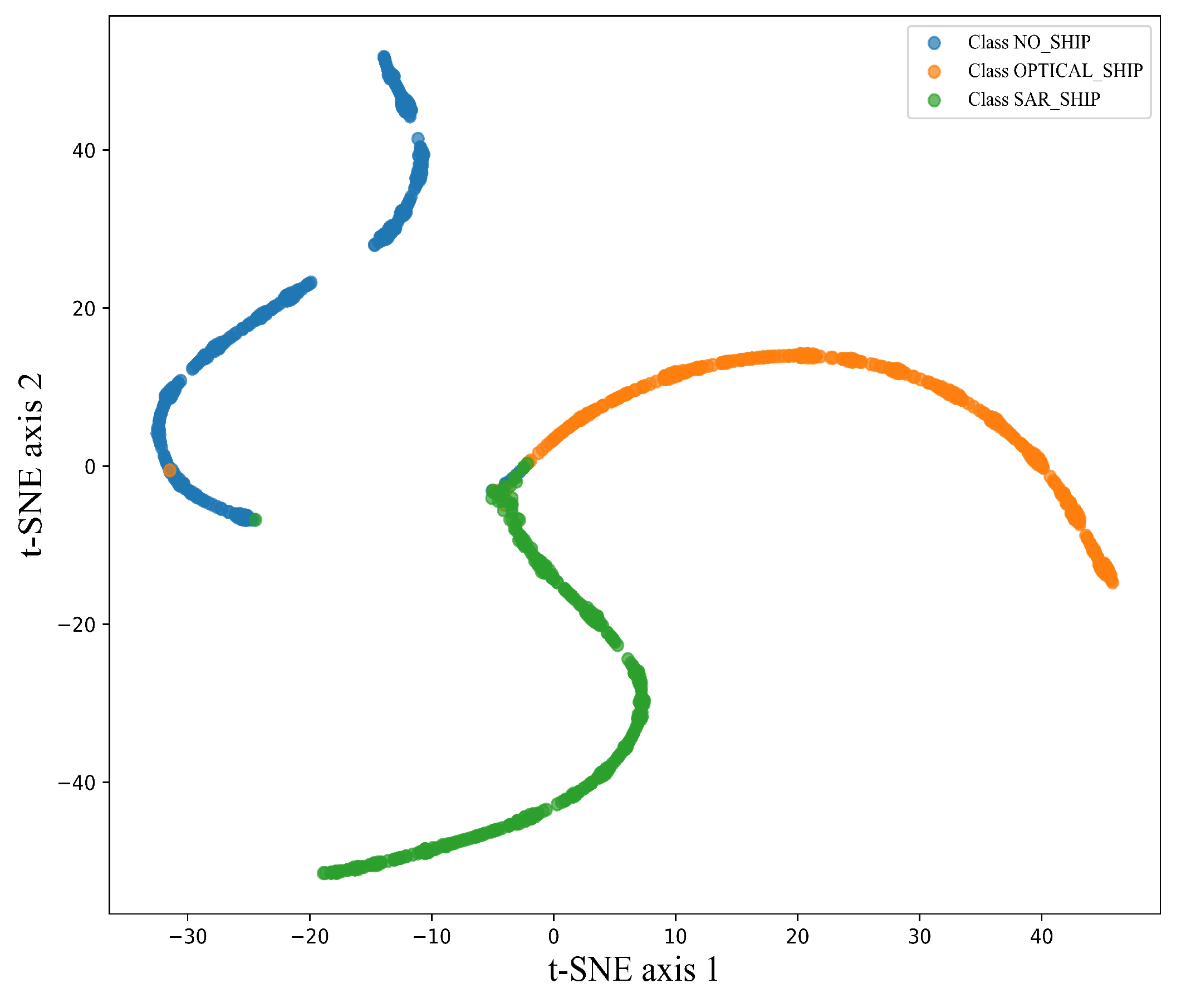

Figure 9 shows a t-SNE plot in which SCABPNet separates the three classes into distinct feature clusters, illustrating the strength of its feature representation.

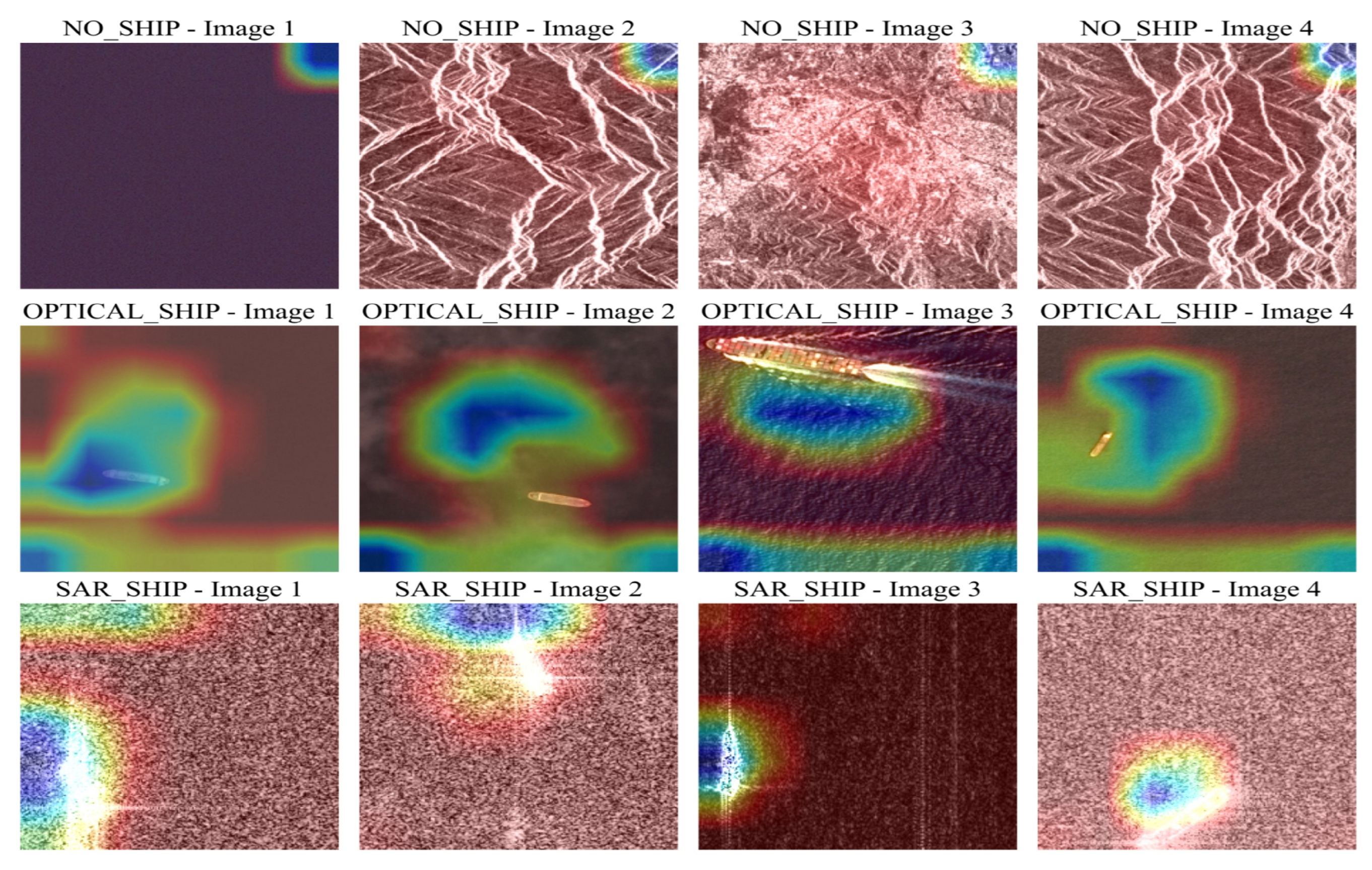

Figure 10 displays SCABPNet’s attention heat maps on test images; they concentrate on the ships and suppress background distractions, supporting the contribution of the spatial–channel attention to the model.

5.4. Experimental Results of SCABPNet under MSTAR Dataset

This section presents the performance of the proposed SCABPNet model on the well-known MSTAR dataset, a benchmark in the remote sensing field comprising ten classes of military vehicles. MSTAR provides two configurations: Standard Operating Conditions (SOCs) and Extended Operating Conditions (EOCs). In this investigation, we used the SOC configuration to evaluate SCABPNet in a 10-class classification scenario; its data partitioning is presented in Table 6. The primary objective of this experiment was to test SCABPNet on a dataset with more than three classes, which made the ten-class MSTAR dataset suitable. The experiment also assessed how SCABPNet performs on homogeneous data and on data without ship-specific features.

The evaluation of SCABPNet under MSTAR’s SOCs yielded highly impressive results, with accuracy, precision, recall, and F1-score metrics nearing the 98% mark, detailed in

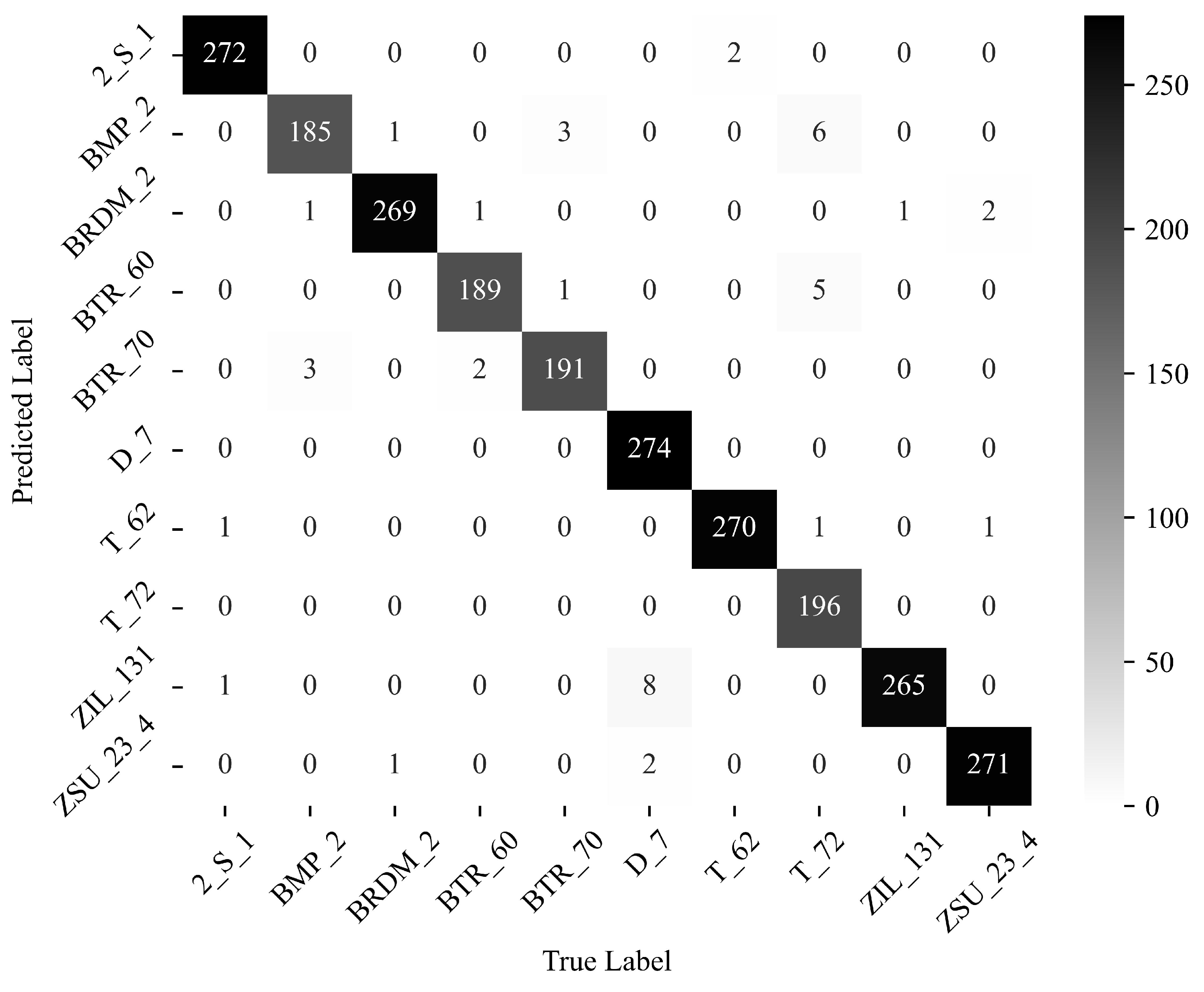

Table 7. The confusion matrix (

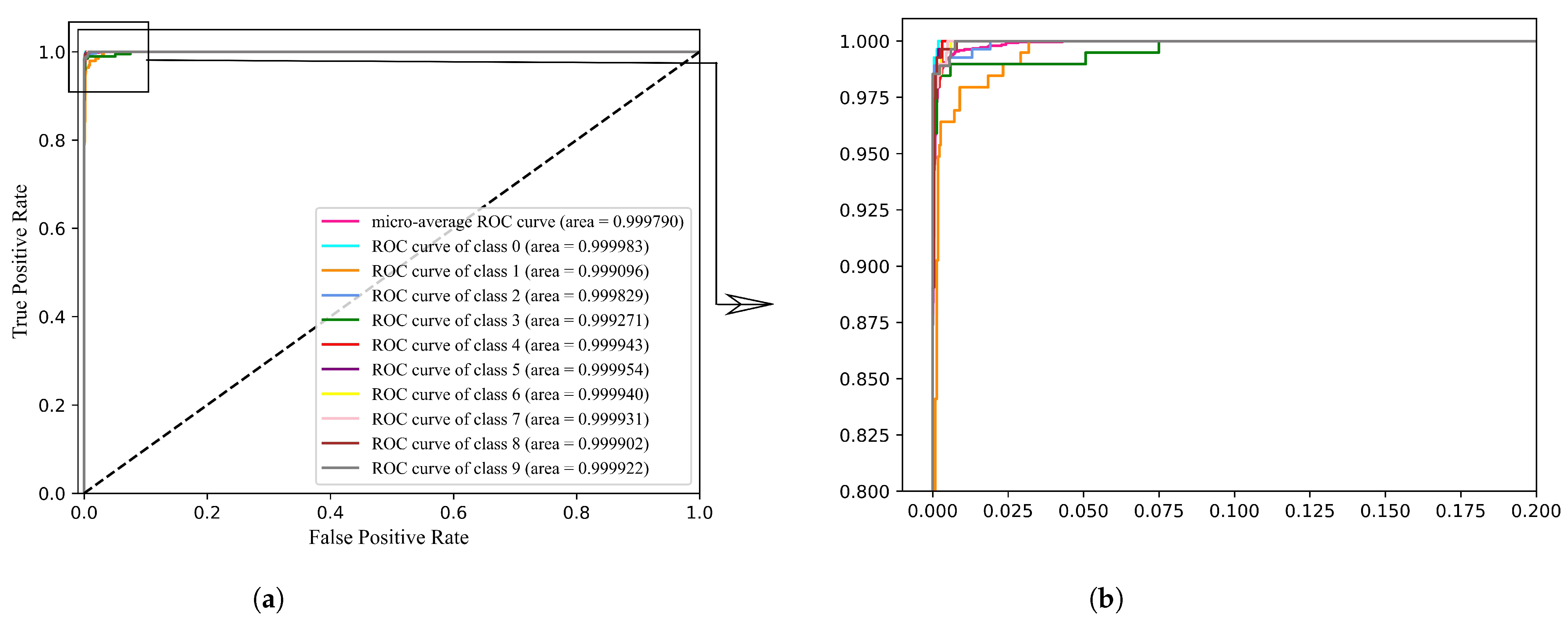

Figure 11) showcases the model’s consistent performance, accurately classifying instances across a dataset of ten classes. Moreover, the class-specific ROC analysis, as presented in

Figure 12, exhibited AUC scores surpassing 99.90%, underscoring SCABPNet’s exceptional capability to discern between classes with minimal error, thereby reinforcing its efficacy in handling varied and complex recognition scenarios.

5.5. Ablation Study

A comprehensive ablation study was conducted to validate the key component of the proposed SCABPNet model, with the primary objective of evaluating the SCABP layer’s influence on overall performance. To this end, we compared SCABPNet with the Baseline + CBAM model. As presented in Table 4 and Table 5, integrating the SCABP layer consistently improved the classification metrics across all classes (SAR ship, optical ship, no ship) within the Heterogeneous Ship dataset. The ‘Baseline + CBAM’ column in Table 8 reports the performance of the model using the CBAM approach: for the SAR ship class, it recorded an accuracy of 90%. The ‘Baseline + SCABP layer’ column reports the results when the SCABP layer was incorporated, which were substantially better: for the SAR ship class, accuracy rose to 95.45%, with improvements in all performance metrics. A similar trend was observed for the optical ship and no ship classes, reinforcing the effectiveness of the SCABP layer. Consequently, the overall performance of the model also improved markedly with the SCABP layer: precision increased from 95.77% to 97.78%, recall from 95.45% to 97.67%, F1-score from 95.46% to 97.68%, and accuracy from 95.45% to 97.67%. The ROC/AUC takes values between 0 and 1, with 1 indicating a perfect classifier; incorporating the SCABP layer increased the ROC/AUC of the model from 99.47% to 99.89%, very close to 1, indicating excellent discrimination between the classes.

Additionally, the confusion matrices of both models reveal that the SAR ship class had only 15 misclassifications under SCABPNet, compared with 33 under the Baseline + CBAM model. SCABPNet was also better at discriminating between the presence and absence of a ship. Within the SAR ship class, SCABPNet misclassified 13 SAR ship images as optical ship and only 2 as no ship, whereas the Baseline + CBAM model misclassified 26 as optical ship and 7 as no ship. Overall, the confusion matrix of SCABPNet shows a total of 3 images misclassified as no ship, compared with 10 for the Baseline + CBAM model. These findings suggest that SCABPNet accurately discriminates between ship and no ship images. Together, the results underscore the important role of the SCABP layer: by enabling more effective feature extraction, it substantially improved the model’s ability to classify ship images accurately.

5.6. Discussion

Handling heterogeneous data in classification tasks poses a significant challenge in remote sensing. Our study addressed this issue, and the results in the previous sections provide a comprehensive evaluation of the proposed SCABPNet model for this task. Its high precision, recall, F1-score, and accuracy demonstrate its effectiveness in classifying ship imagery from both SAR and optical sources. This section discusses the implications of these results, the strengths and limitations of the study, and potential directions for future research.

The SCABPNet model, with its novel Spatial–Channel Attention with Bilinear Pooling (SCABP) layer, showed a substantial improvement in performance metrics over the Baseline + CBAM model. The SCABP layer, which applies spatial and channel attention mechanisms to the input data followed by bilinear pooling, proved instrumental in this improvement. This was particularly evident in the ablation study, where including the SCABP layer led to substantial improvements in all performance metrics across all classes of the Heterogeneous Ship dataset, underscoring its role in enabling more effective feature extraction and, consequently, more accurate ship classification.

When compared with other state-of-the-art methods (Table 9), the SCABPNet model demonstrated competitive performance, outperforming most of them in accuracy, precision, recall, and F1-score. Notably, it surpassed well-established models such as ResNet-50, ResNet-101, VGG-16, and Inception-V3, which are widely used in remote sensing applications. This indicates that SCABPNet, with its SCABP layer, is a promising tool for ship classification, particularly on Heterogeneous Ship data.

Additionally, we discuss the advantages and limitations of the proposed solution:

Advantages of SCABPNet:

Attention mechanism: SCABPNet’s dual attention mechanism (spatial and channel) allows it to concentrate on the most salient features in both the spatial and channel dimensions. This helps suppress redundant information, leading to more accurate classifications.

Bilinear pooling: By employing bilinear pooling, the SCABP layer can capture complex features and relationships between features, enhancing the model’s discriminative power.

Robust performance: As demonstrated in the results, SCABPNet showed consistently superior performance across various performance metrics when compared to other state-of-the-art models. This indicates its effectiveness and potential applicability in real-world scenarios.

Scalability: Given its modular design, SCABPNet could be scaled up or integrated with other network architectures to further enhance performance or adapt to specific tasks.

Limitations of SCABPNet:

Generalizability: While SCABPNet demonstrated robust performance on the Heterogeneous Ship data, its performance on other maritime datasets or scenarios needs to be thoroughly evaluated.

Computational complexity: The dual attention mechanism and bilinear pooling increase the computational demands, making SCABPNet more resource-intensive than simpler architectures.

The results in Table 10 highlight the nuances of balancing computational cost and performance in ship data classification. SCABPNet achieved a favorable balance between computational complexity and classification accuracy: with just 0.25 GFLOPs (giga floating-point operations, i.e., billions of operations per inference), it outperformed notable architectures such as VGG16_bn and ResNet-50, achieving an accuracy of 97.67%. This indicates the efficacy of the spatial–channel attention mechanism coupled with bilinear pooling for classifying the Heterogeneous Ship data. The comparison with other models also shows that higher computational complexity does not necessarily guarantee better performance. For instance, FUSAR-CNN, despite having the highest computational complexity at 10.82 GFLOPs, lagged behind with an accuracy of just 66.10% [53]. This contrast underscores that model design matters beyond simply adding computational layers or nodes. Among lightweight models, MobileNetV3-Large stood out for its efficiency, with 0.16 GFLOPs and an accuracy of 95.93%.
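GFLOPs in such comparisons count operations per inference rather than a rate. As a hedged illustration of how such counts arise, the sketch below counts multiply-accumulate operations (MACs) for a convolution and for the bilinear pooling product. The layer sizes are hypothetical, and because papers differ on whether one MAC is reported as one or two FLOPs, both figures are printed.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulate operations of a k x k convolution (bias ignored)."""
    return h_out * w_out * c_out * c_in * k * k


def bilinear_pool_macs(h, w, c_a, c_b):
    """MACs of sum_l outer(a_l, b_l) over h * w locations (the A^T B product)."""
    return h * w * c_a * c_b


# Hypothetical layer sizes, for illustration only.
conv = conv2d_macs(56, 56, 64, 64, 3)
bilin = bilinear_pool_macs(14, 14, 256, 256)
for name, macs in [("3x3 conv, 56x56x64 -> 64", conv),
                   ("bilinear pooling, 14x14x256", bilin)]:
    print(f"{name}: {macs / 1e9:.3f} GMACs, {2 * macs / 1e9:.3f} GFLOPs (2 FLOPs per MAC)")
```

The bilinear term grows with the square of the channel count, so the cost of the pooling step depends mainly on how many channels reach it.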

To further evaluate the efficacy and robustness of SCABPNet, an additional experiment was conducted on the MSTAR dataset, with results shown in Table 7, Figure 11, and Figure 12. SCABPNet maintained good performance on this different dataset, which notably lacks ship features, and achieved high classification accuracy despite the inherent complexities of MSTAR data. The objective of this experiment was to show that SCABPNet can handle both homogeneous (MSTAR) and heterogeneous datasets. Nevertheless, a more extensive evaluation of SCABPNet on other datasets and in real-world scenarios is essential to ensure optimal recognition accuracy. Future work could test the model on different datasets and in various real-world maritime scenarios to further validate its effectiveness and robustness. Another potential direction is to investigate different attention mechanisms or pooling strategies within the SCABP layer; although the current implementation has proven effective, alternative strategies could further enhance the model’s performance.

6. Conclusions

The field of remote sensing has made significant advancements in the past decade due to increasing demands for improved performance. This study contributes to this ongoing progress by introducing SCABPNet, a novel spatial–channel attention with an improved bilinear pooling model specifically designed for the classification of Heterogeneous Ship data. Unlike previous studies that focused on homogeneous data, this work leveraged the complementary aspects of Synthetic Aperture Radar (SAR) and optical images, thereby enhancing the model’s performance. The SCABPNet model, with its unique SCABP layer, demonstrated superior performance in our experiments, surpassing the results of several deep learning models. The SCABP layer’s ability to effectively apply spatial and channel attention mechanisms followed by bilinear pooling proved instrumental in achieving these results. However, despite the promising results, it is important to acknowledge the challenges inherent in deep learning research, particularly in the context of radar and optical image analysis. The complexity of the parameters and dataset can lead to overfitting and long training times, requiring extensive training data. In light of these challenges, future work will focus on further improving the SCABPNet model and exploring new strategies to manage these variables. This includes extending the number of classes in the proposed ship dataset, investigating the ensemble learning of the proposed model for ship classification tasks, and exploring the use of other types of attention mechanisms and pooling methods. In conclusion, the SCABPNet model represents a significant step forward in ship classification, demonstrating the potential of combining SAR and optical images for enhanced performance. While challenges remain, this study provides a solid foundation for future research in this area, contributing to the ongoing advancement of maritime surveillance applications.