Abstract

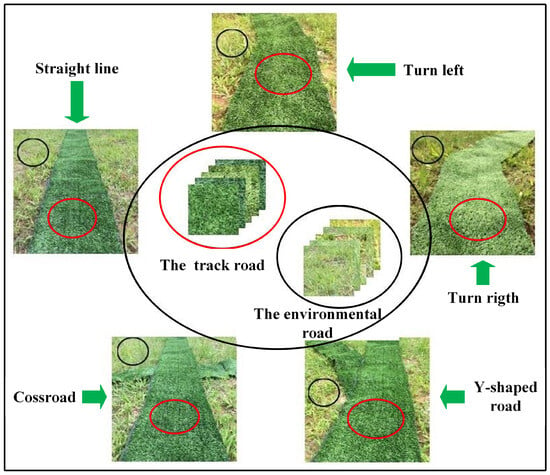

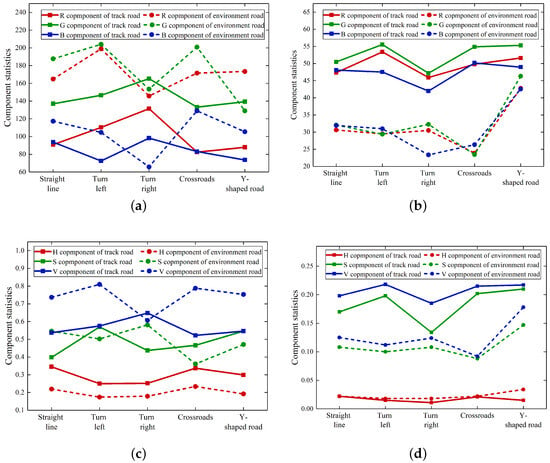

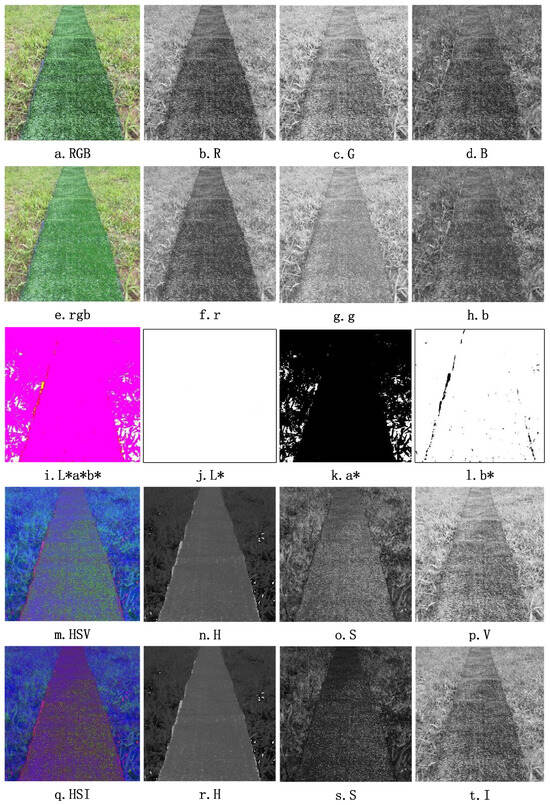

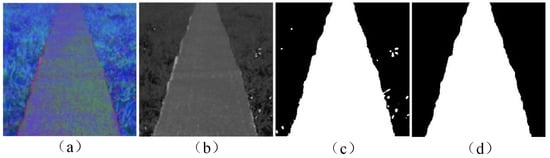

Obstacle avoidance control and navigation in unstructured agricultural environments are key to the safe operation of autonomous robots, especially agricultural machinery, where cost and stability must be taken into account. In this paper, we design a navigation and obstacle avoidance system for agricultural robots based on LiDAR and a vision camera. An improved clustering algorithm is used to analyze the obstacle information collected by LiDAR quickly and accurately in real time. The convex hull algorithm is combined with the rotating calipers algorithm to obtain the maximum diameter of the convex polygon of the clustered data. Obstacle avoidance paths and heading control methods are developed based on the danger zones of obstacles. Moreover, by performing color space analysis and feature analysis on complex orchard environment images, the H component of the HSV color space is selected to obtain the ideal vision-guided trajectory images through mean filtering and erosion. Finally, the proposed algorithm is integrated into the Three-Wheeled Mobile Differential Robot (TWMDR) platform to carry out obstacle avoidance experiments, and the results show the effectiveness and robustness of the proposed algorithm. The proposed approach achieves satisfactory results in precise obstacle avoidance and intelligent navigation control of agricultural robots.

1. Introduction

Agricultural robots have been acknowledged as a highly promising frontier technology in agriculture. With the rapid development of modern electronic computers, sensors, the Internet of Things and other technologies, the application of agricultural robots is the best way to improve the efficiency and quality of agricultural production [1,2,3]. As a new type of intelligent agricultural equipment, agricultural robots integrate various advanced technologies such as artificial intelligence, automatic control and algorithmic development, and are widely used in the fields of agricultural harvesting, picking, transportation, and sorting, especially in the field of farm mobile inspection [4]. The key to the safe and efficient operation of agricultural mobile robots is to have obstacle avoidance and navigation capabilities, which require practicality and stability and, more importantly, simple and low-cost implementation [5]. However, other industrial mobile robots are not fully applicable in agricultural settings owing to technical limitations and differences in application scenarios. Similarly, unstructured agricultural scenarios and random and changeable environments pose huge challenges for agricultural robots. Therefore, the navigation and obstacle avoidance systems of agricultural robots must adopt stable and low-cost algorithms and control methods [6,7,8].

Obstacle avoidance is the most important functional module of an agricultural robot: it improves working efficiency, reduces working errors, and ensures that the robot completes its tasks and delivers greater benefits. The obstacle avoidance process of an agricultural robot integrates agricultural information perception, intelligent control, and communication technology. The robot obtains information about the surrounding environment through its sensors, including the status, size, and position of obstacles, and then formulates an obstacle avoidance strategy. LiDAR [9], ultrasonic sensors, and vision sensors have therefore been widely used in navigation and obstacle avoidance because of their high precision and fast speed. Rouveure et al. described millimeter-wave radar for mobile robots in detail, including obstacle detection, mapping, and general situational awareness; they explained the choice of Frequency Modulated Continuous Wave (FMCW) radar and described the theoretical elements of the solution [10]. Ball et al. described a vision-based obstacle detection and navigation system that uses an expensive combination of Global Positioning System (GPS) and inertial navigation systems for obstacle detection and visual guidance [11]. Santos et al. proposed a real-time obstacle avoidance method for steep-slope farmland based on local observation, which has been successfully tested in vineyards; LiDAR combined with depth cameras is to be considered in future work to improve the perception of the elevation map [12]. However, high sensor costs have impeded researchers. To address this, Monaca et al. explored the “SMARTGRID” initiative. This project uses an integrated wireless safety network infrastructure, incorporating Bluetooth Low Energy (BLE) devices and passive Radio Frequency Identification (RFID) tags, designed to identify obstacles, workers, and nearby vehicles, among other things [13]. Advanced sensors make obstacle avoidance possible in intricate agricultural surroundings, but their cost remains high relative to their accuracy, and they must be integrated with mature and stable navigation and algorithmic technologies.

Similarly, robot navigation in environments with obstacles remains a challenging problem, especially in agriculture, where accurate navigation in complex environments is a prerequisite for completing various tasks [14]. Gao et al. discussed solutions to the navigation problem of Wheeled Mobile Robots (WMRs) in agricultural engineering and analyzed the navigation mechanism of WMRs in detail, but did not delve into their cost [15]. Wang et al. reviewed the advantages, disadvantages, and roles of different vision sensors and Machine Vision (MV) algorithms in agricultural robot navigation [16]. Durand et al. built a vision-based navigation system for autonomous robots, which they noted is low-cost because trees in an orchard can be quickly and accurately identified using four stereo cameras that provide point cloud maps of the environment [17]. Higuti et al. described an autonomous field navigation system based on LiDAR that uses only two-dimensional LiDAR sensors as a single sensing source, reducing costs while enabling robots to travel in a straight line without damaging plantation plants [18]. Guyonneau et al. proposed an autonomous agricultural robot navigation method based on LiDAR data, including a line-finding algorithm and a control algorithm, which performed well in tests with the ROS middleware and the Gazebo simulator. However, they focused on the sophistication of the algorithm at the expense of its complexity and the stability of the simulation results [19]. LiDAR has become prevalent in agricultural navigation, including in vineyards [20] and cornfields [21]. Nevertheless, the balance between cost and practical outcomes is frequently overlooked.

Currently, low cost is vital for the development of agricultural robots. Inexpensive robotic hardware and software systems are always welcome, as the users are farmers working in the agricultural industry. Global Navigation Satellite System (GNSS) technology provides autonomous navigation solutions with centimeter-level accuracy for today’s commercial robotic platforms. Researchers are trying to make agricultural navigation systems more reliable by fusing GNSS and GPS technology with other sensor technologies [22]. Winterhalter et al. proposed a GNSS reference map based approach for field localization and autonomous navigation via crop rows [23]. However, GNSS-based solutions are expensive and require a long preparation phase. In addition, GNSS signals can fail or be interrupted in complex agricultural environments, which is hard for farmers to accept.

In this paper, we develop a low-cost agricultural mobile robot platform based on LiDAR and vision-assisted navigation. The LiDAR is used to acquire information about obstacles in the surrounding environment. An improved density-based fast clustering algorithm is used to find the cluster centers of obstacles, and the maximum diameters of the convex polygons of the clustered data are obtained by combining the convex hull algorithm and the rotating calipers algorithm. In addition, an effective orchard path tracking and control method is proposed: distinctive feature components are selected as the color space components used by the robot in real-time tracking, which provides an initial solution to the orchard path feature recognition task and realizes vision-aided navigation. Finally, the feasibility of the proposed method for obstacle avoidance and navigation of agricultural robots is verified by experiments.

The main contributions of this paper are summarized as follows.

- An improved density-based fast clustering algorithm is proposed and combined with the convex hull algorithm and the rotating calipers algorithm to analyze obstacle information, and an obstacle avoidance path and heading control method based on the danger zones of obstacles is developed. This demonstrates the feasibility of low-cost LiDAR-based obstacle avoidance for agricultural robots.

- By analyzing the color space information of orchard road and track images, a track navigation route and control method based on image features is proposed. A vision camera is used to assist agricultural robots in navigation, and the orchard path feature recognition task is preliminarily solved.

- An agricultural robot navigation and obstacle avoidance system based on a vision camera and LiDAR is designed. It compensates for the poor robustness and susceptibility to environmental disturbance of a single sensor, and enables agricultural robots to work stably in GNSS-denied environments.

The rest of this article is organized as follows. Section 2 introduces the calibration of the LiDAR and the vision camera. Section 3 presents the robot obstacle avoidance system and the proposed method. Section 4 presents the robot vision system. Section 5 reports the obstacle avoidance and navigation experiments. Finally, Section 6 concludes the paper.

2. Preliminaries

2.1. LiDAR Calibration and Filtering Processing

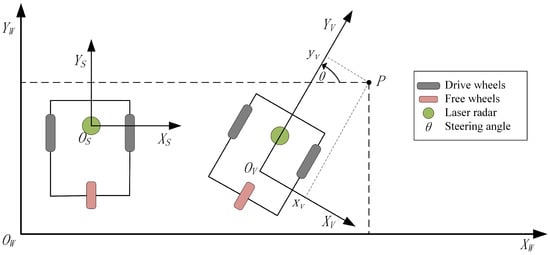

LiDAR collects environmental data in its own polar coordinate system [24], whereas a LiDAR installed on the robot platform must report obstacles relative to the robot. It is therefore necessary to calibrate the relationship between the LiDAR coordinate system and the robot coordinate system. Three coordinate systems are defined in the robot positioning system: the global coordinate system, the robot coordinate system, and the LiDAR coordinate system. The relationship between the robot and LiDAR coordinates is determined by the LiDAR’s position relative to the robot, so the global coordinate system and the robot coordinate system are the main coordinate systems studied here. The typical relationship among the coordinate systems is shown in Figure 1. The pose of the robot in the global coordinate system consists of the robot position and the heading angle [25]. The relationship between any point P in the robot coordinate system and the global coordinate system can be obtained by Equation (1).

Figure 1.

The relationship between robot, LiDAR, and global coordinate system.

LiDAR measures in polar coordinates. The LiDAR installed on the robot must first be calibrated to obtain the relationship between the LiDAR and the robot [26,27]. As shown in Figure 1, each measurement in the LiDAR coordinate system has both a Cartesian form and a polar form. The conversion between the Cartesian and polar coordinates of the LiDAR is shown in Equation (2).

The pose of the LiDAR in the robot coordinate system consists of its position and a rotation angle measured relative to the axes of the robot coordinate system. The coordinates of a LiDAR point s in the robot coordinate system are then obtained by the rotation and translation shown in Equation (3) [28].
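The chain of conversions described in this subsection (polar to Cartesian in the LiDAR frame, then LiDAR frame to robot frame, then robot frame to global frame) can be sketched as follows. This is a minimal illustration under stated assumptions, not the calibration code used in the experiments: the names `polar_to_cartesian`, `transform_2d`, `lidar_pose`, and `robot_pose` are hypothetical, angles are assumed to be in radians, and each pose is assumed to be a planar `(x, y, angle)` triple expressed in its parent frame.

```python
import math

def polar_to_cartesian(rho, phi):
    """Convert a LiDAR range/bearing measurement to Cartesian LiDAR coordinates."""
    return (rho * math.cos(phi), rho * math.sin(phi))

def transform_2d(point, pose):
    """Rotate and translate a point from a child frame into its parent frame.

    pose = (tx, ty, angle): origin and orientation of the child frame,
    expressed in the parent frame (assumed convention).
    """
    x, y = point
    tx, ty, angle = pose
    c, s = math.cos(angle), math.sin(angle)
    return (tx + c * x - s * y, ty + s * x + c * y)

# Hypothetical example values, for illustration only.
lidar_pose = (0.1, 0.0, 0.0)          # LiDAR mounted 0.1 m ahead of the robot origin
robot_pose = (1.0, 1.0, math.pi / 2)  # robot at (1, 1), heading along global +y

p_lidar = polar_to_cartesian(2.0, 0.0)        # obstacle 2 m straight ahead of the LiDAR
p_robot = transform_2d(p_lidar, lidar_pose)   # LiDAR frame -> robot frame
p_global = transform_2d(p_robot, robot_pose)  # robot frame -> global frame
```

The same rotation-plus-translation routine serves both frame changes, which is why a single calibrated LiDAR pose is enough to place every scan point in the global frame once the robot pose is known.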

A calibration test is carried out according to the above method. First, the pose of the robot in global coordinates is fixed, and an auxiliary calibration object is placed at a known position in the global coordinate system. According to the coordinate system relations in Figure 1, the relationship between the global coordinate system and the LiDAR coordinate system can then be calculated.

The center of the auxiliary calibration object in the LiDAR coordinate system is calculated by the least-squares method. The LiDAR pose is then obtained by the M-estimation method in Equation (4).

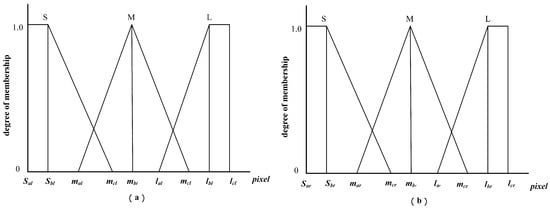

The data collected by LiDAR contain interfering noise points, which destabilize obstacle detection [29]. Because the result of median filtering LiDAR data depends on the choice of filter window, this paper adopts an improved median filtering method that first checks whether a data point is an extreme value within the filtering window. If it is, the point is filtered; otherwise, it is left unchanged [30]. Figure 2b shows the effect of the improved median filtering algorithm, which is given in Equation (5).
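The extreme-value check described above can be sketched in Python as follows. This is a minimal sketch under assumptions, not the authors' implementation: the function name `extremum_median_filter`, the default window size, and the choice to leave the first and last few samples untouched are all hypothetical, and the scan is assumed to be a flat list of range readings.

```python
from statistics import median

def extremum_median_filter(ranges, window=5):
    """Replace a sample by the window median only if it is the window's extreme value."""
    half = window // 2
    filtered = list(ranges)
    # Border samples without a full window are left unchanged (assumption).
    for i in range(half, len(ranges) - half):
        win = ranges[i - half:i + half + 1]
        if ranges[i] == max(win) or ranges[i] == min(win):
            filtered[i] = median(win)
    return filtered

# Hypothetical range readings in metres with one spike at index 2.
scan = [1.0, 1.1, 9.0, 1.2, 1.3, 1.2, 1.1]
smoothed = extremum_median_filter(scan, window=3)
```

Samples that are not extreme within their window pass through unchanged, so the filter modifies fewer points than a plain median filter with the same window.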

Figure 2.

Comparison of median filtering effect before (a) and after (b).

2.2. Camera Visualization and Parameter Calibration

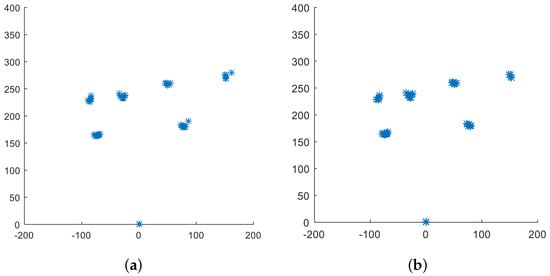

The camera is calibrated using the checkerboard method, from which key points are acquired. The MATLAB camera calibration function is called, and the camera parameters are calculated by the Zhang Zhengyou calibration algorithm. The external parameters of the camera can be checked visually in a 3D view showing the positions of the checkerboard calibration board and the camera [31,32]. Figure 3a,b show the 3D views based on the checkerboard calibration board reference frame and the camera reference frame, respectively.

Figure 3.

Visual 3D view of different reference frame modes. (a) Reference frame based on checkerboard calibration board; (b) camera reference system.

Finally, the values of the internal and external parameters of the camera are calculated by the MATLAB camera calibration algorithm. The intrinsic matrix A of the camera is as follows:

The radial distortion coefficient K of the camera lens is as follows:

The tangential distortion coefficient P of the camera lens is as follows:

3. Obstacle Avoidance System and Algorithm Implementation

In this section, we describe the obstacle avoidance system designed for the autonomous robot. LiDAR is used to detect static obstacles in the direction of motion in real time, and the obstacle information is analyzed by an improved clustering algorithm combined with the convex hull algorithm and the rotating calipers algorithm. Finally, an obstacle avoidance path and heading control method based on the danger zones of obstacles is developed.

3.1. Improved Clustering Algorithm of Obstacle Point Cloud Information

This work uses an improved algorithm to cluster obstacle point cloud data rapidly and accurately and then determines the cluster centers, which locate the centers of the obstacle point clouds [33]. Compared with the traditional K-means algorithm, this clustering algorithm can produce non-spherical clusters, describes the data distribution well, and has lower algorithmic complexity. We first define the quantities used by the algorithm. Two quantities are calculated for each data point i: its local density and its minimum distance to any point of higher density.

Local density is defined as follows:

where

In this paper, the truncation distance is chosen by sorting the distances between all pairs of points in ascending order and taking the value at the two-percent position. The distance is defined as

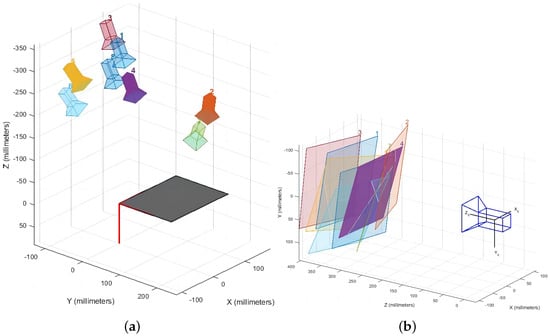

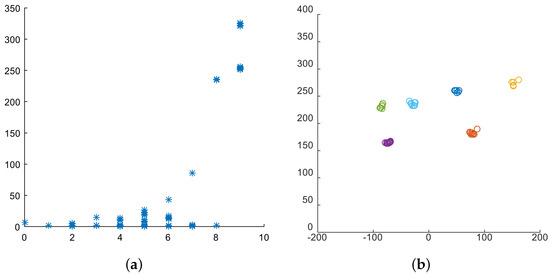

The distance of point i is the smallest distance from point i to any point whose density exceeds that of point i. A higher value indicates that point i lies farther from higher-density points, making it more likely to be a cluster center. For the point i with the highest global density, the distance is set to the maximum distance between point i and all other points [34]. The obstacle point distribution generated by the improved clustering algorithm is presented in Figure 4.
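The two per-point quantities described above can be computed as in the following sketch. It assumes the standard density-peaks formulation with a hard cutoff kernel (a neighbor counts toward the density if it lies closer than the truncation distance); the paper's improved variant may differ. The names `cutoff_distance`, `density_peaks`, and `pick_centers`, and the use of the product of density and distance to rank candidate centers, are assumptions for illustration.

```python
import math

def cutoff_distance(points, fraction=0.02):
    """Truncation distance: value at the given fraction of the sorted pairwise distances."""
    d = sorted(math.dist(p, q) for i, p in enumerate(points) for q in points[i + 1:])
    return d[min(len(d) - 1, int(fraction * len(d)))]

def density_peaks(points, d_c):
    """Return (rho, delta): local density and distance to the nearest denser point."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < d_c) for i in range(n)]
    delta = []
    for i in range(n):
        denser = [dist[i][j] for j in range(n) if rho[j] > rho[i]]
        # The densest point has no denser neighbor: use its largest distance instead.
        delta.append(min(denser) if denser else max(dist[i]))
    return rho, delta

def pick_centers(rho, delta, k):
    """Rank points by rho * delta and keep the top k as cluster centers (assumed heuristic)."""
    order = sorted(range(len(rho)), key=lambda i: rho[i] * delta[i], reverse=True)
    return sorted(order[:k])

# Two hypothetical obstacle blobs (units arbitrary).
pts = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1), (0.05, 0.05),
       (5, 5), (5.1, 5), (5, 5.1)]
rho, delta = density_peaks(pts, d_c=0.12)
centers = pick_centers(rho, delta, k=2)
```

Points that combine a high density with a large distance stand out in the decision graph, which is what Figure 4a visualizes; the remaining points are then assigned to the cluster of their nearest denser neighbor.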

Figure 4.

(a) Decision graph of the data; (b) the result of the clustering algorithm.

3.2. The Fusion of Convex Hull Algorithm and Rotating Calipers Algorithm

The convex hull is a concept in computational geometry. In a real vector space V, for a given set X, the intersection S of all convex sets containing X is called the convex hull of X. Equivalently, the convex hull of X consists of all convex combinations of points in X. We use Graham’s Scan algorithm to find the convex polygon corresponding to the obstacle clustering data collected by LiDAR.

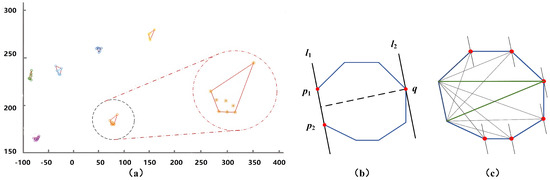

The basic idea of Graham’s Scan algorithm is as follows: the convex hull problem is solved by maintaining a stack S of candidate points. Each point in the input set X is pushed once, and points that are not vertices of CH(X) (the convex hull of X) are eventually popped off the stack. At the end of the calculation, stack S contains only the vertices of CH(X), in the counterclockwise order in which they appear on the boundary. Graham’s Scan algorithm is shown in Table 1. If more than one point qualifies as the starting point, the leftmost one is taken. The function TOP(S) returns the point at the top of stack S, and the function NEXT-TO-TOP(S) returns the point just below the top of the stack without changing the stack [35]. The layout of obstacle cloud points processed by Graham’s Scan algorithm is shown in Figure 5a.
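The stack-based procedure described above can be sketched in Python as follows. This is an illustrative version of the textbook Graham scan, assuming the usual choice of starting point (lowest y-coordinate, leftmost on ties) and 2D points given as tuples; the function names are hypothetical, and `stack[-1]` and `stack[-2]` play the roles of TOP(S) and NEXT-TO-TOP(S).

```python
import math

def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive for a counterclockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def graham_scan(points):
    """Return the convex hull vertices in counterclockwise order."""
    pts = sorted(set(points), key=lambda p: (p[1], p[0]))
    if len(pts) < 3:
        return pts
    p0 = pts[0]  # lowest point, leftmost on ties
    # Sort the remaining points by polar angle around p0, nearer points first on ties.
    rest = sorted(pts[1:], key=lambda p: (math.atan2(p[1] - p0[1], p[0] - p0[0]),
                                          math.dist(p0, p)))
    stack = [p0]
    for p in rest:
        # Pop while the top two stack points and p do not make a strict left turn.
        while len(stack) >= 2 and cross(stack[-2], stack[-1], p) <= 0:
            stack.pop()
        stack.append(p)
    return stack

# Hypothetical cluster: four corners plus one interior and one edge point.
cluster = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)]
hull = graham_scan(cluster)
```

Each point is pushed once and popped at most once, so after the initial sort the scan itself takes time linear in the number of points.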

Table 1.

Graham’s Scan (X) algorithm.

Figure 5.

(a) The layout of obstacle cloud points processed by Graham’s Scan algorithm; (b) diagram of a “point–edge” antipodal pair; (c) the distance between convex hull points and the corresponding edge.

To determine the maximum diameter of the convex hull of the obstacle point cloud, some scholars have used enumeration. Although easy to implement, this method is inefficient, with a time complexity of O(n²). Consequently, this paper uses an alternative, the rotating calipers algorithm, which computes the convex hull diameter in O(n) time. Before introducing the rotating calipers algorithm, we define the term “antipodal pair”: if two points p and q of a convex polygon Q lie on two parallel tangent lines of Q, they form an antipodal pair. The case that appears in this paper is the “point–edge” antipodal pair, shown in Figure 5b, which occurs when one tangent line meets the polygon along an edge and the other touches it at a single vertex. Such a configuration necessarily contains two distinct “point–point” antipodal pairs: each endpoint of the edge, together with the vertex q, forms an antipodal pair of the convex polygon. The rotating calipers algorithm is shown in Table 2.

Table 2.

Rotating Calipers algorithm.

The two farthest points of a convex hull admit a pair of parallel lines passing through them. Rotating this pair of parallel lines until one of them coincides with an edge of the convex hull, such as the edge in Figure 5b, shows that q is the convex hull point farthest from that edge. Taken in order, the distances from the convex hull points to a given edge form a unimodal function, as shown in Figure 5c. Because of this property, enumerating the edges counterclockwise avoids recomputing the farthest point from scratch and yields the pair of points that defines the convex hull diameter. Processed by the rotating calipers algorithm, the maximum diameters of the six convex polygons in Figure 5a are 5.65 cm, 15.55 cm, 12.65 cm, 9.43 cm, 8.94 cm, and 12.04 cm, respectively. Finally, the circle whose diameter equals the maximum diameter of the convex polygon is taken as the danger range.
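The edge-by-edge enumeration described above can be sketched as follows. This is a minimal illustration of the rotating calipers idea, assuming the hull is given as a list of vertices in counterclockwise order with no three consecutive collinear points; `hull_diameter` is a hypothetical name. The cross product serves as a stand-in for the point-to-edge distance, since both are maximized by the same vertex for a fixed edge.

```python
import math

def cross(o, a, b):
    """Z-component of (a - o) x (b - o); proportional to the distance of b from line o-a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def hull_diameter(hull):
    """Largest distance between two vertices of a convex polygon (counterclockwise order)."""
    n = len(hull)
    if n < 2:
        return 0.0
    if n == 2:
        return math.dist(hull[0], hull[1])
    best = 0.0
    j = 1  # index of the vertex currently farthest from the active edge
    for i in range(n):
        ni = (i + 1) % n
        # Advance j while the next vertex lies farther from edge (i, ni).
        while cross(hull[i], hull[ni], hull[(j + 1) % n]) > cross(hull[i], hull[ni], hull[j]):
            j = (j + 1) % n
        # A point-edge antipodal pair yields two point-point candidates.
        best = max(best, math.dist(hull[i], hull[j]), math.dist(hull[ni], hull[j]))
    return best

# Hypothetical 4 x 3 rectangle: the diameter is its diagonal.
diameter = hull_diameter([(0, 0), (4, 0), (4, 3), (0, 3)])
```

Because the farthest-vertex index only ever moves forward around the hull, the total work is linear in the number of hull vertices, in contrast to checking every pair of vertices.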

3.3. Path Planning and Heading Control of the Robot

3.3.1. Obstacle Avoidance Path Planning

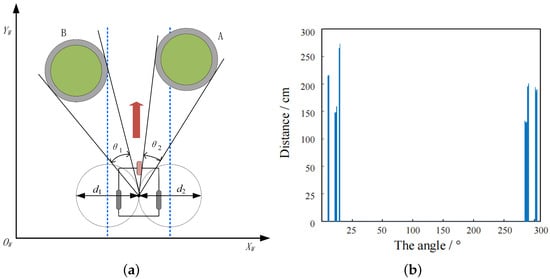

This paper uses an improved VFH+ path planning method to meet the basic obstacle avoidance requirements of the robot [36]. Based on the distribution of obstacles around the robot’s guiding track, the passable direction intervals are determined using an angle threshold, and the passage direction and speed are then calculated. A coordinate system centered on the robot is established, as shown in Figure 6a. The steps are as follows.

Figure 6.

(a) Schematic diagram of obstacle angle determination; (b) the result diagram of obstacle angle determination.

(1) The path design in this paper treats the robot as a particle while accounting for its size: based on the obstacle identification and positioning in the previous section, the circle around each cluster center is expanded by half the width of the robot. Dynamic constraints limit the robot during turning through restricted areas on both sides of the robot [37,38], together with a dynamic constraint angle. As shown in Figure 6a, A and B are obstacles, and the expanded part is the gray region on the edge of each obstacle. The distance from each obstacle to the robot is known from the LiDAR polar coordinates. Assuming a LiDAR scanning range of [0°, 360°], the angle and the distance l are expressed as a bar graph, as shown in Figure 6b.

(2) Determine the threshold and obtain the passage interval. The passability of each direction is defined according to the threshold as follows:

A value of 0 indicates that direction i is passable; otherwise, the direction is blocked. Considering the robot specifications and the safe distance, the threshold is set at 20 cm. The angle and the distance l are expressed as a bar chart, as shown in Figure 6b.
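The two steps above can be sketched in Python as follows. This is a simplified illustration rather than the improved VFH+ method itself: it assumes that a direction is blocked when the (already inflated) obstacle distance in that direction falls below the threshold, that distances are given per angular sector in centimetres, and that wrap-around between the last and first sector is ignored. The names `binarize` and `free_intervals` are hypothetical.

```python
def binarize(distances, threshold):
    """Return 0 for passable sectors and 1 for blocked ones (assumed rule: blocked if closer)."""
    return [1 if d < threshold else 0 for d in distances]

def free_intervals(blocked):
    """Group consecutive passable sectors into (first_index, last_index) intervals."""
    intervals, start = [], None
    for i, b in enumerate(blocked):
        if b == 0 and start is None:
            start = i
        elif b == 1 and start is not None:
            intervals.append((start, i - 1))
            start = None
    if start is not None:
        intervals.append((start, len(blocked) - 1))
    return intervals

# Hypothetical obstacle distances (cm) for eight angular sectors.
sector_distances = [100, 100, 15, 10, 100, 100, 18, 100]
blocked = binarize(sector_distances, threshold=20)
passages = free_intervals(blocked)
```

A planner would then choose among the passable intervals, for example the one closest to the guiding track direction, subject to the turning constraints described in step (1).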

3.3.2. Heading Control of the Robot

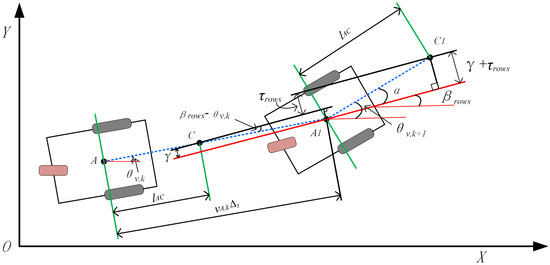

The movement of the robot is described by the motion process and motion model through state vectors in different time periods. The input control of the robot is shown in Equation (13) [39].

The inputs are the linear velocity (m/s) obtained from the wheel radius, the angular velocity (rad/s) measured as the rotational yaw rate, the offset (m) of the previous frame tracked along the trajectory, and a tracking mark (m).

The movement of the robot can be approximated by a small displacement with a suitable rotation adjustment in each cycle. The forward motion of the robot is determined by its linear speed, and its deflection is determined by a combination of visual guidance and LiDAR. The robot prioritizes visual tracking until the obstacle distance reaches the safety threshold, which triggers the LiDAR obstacle avoidance program [40,41]. The steering angle of the robot is given by Equation (14).
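The per-cycle behavior described above can be sketched as follows. This is a generic differential-drive illustration under assumptions and does not reproduce Equations (13) and (14): the planar unicycle update, the mode names, and the parameters `safe_distance`, `dt`, and `track_width` are all hypothetical.

```python
import math

def select_mode(obstacle_distance, safe_distance):
    """Use vision by default; switch to LiDAR avoidance once an obstacle is within range."""
    return "lidar_avoidance" if obstacle_distance <= safe_distance else "visual_tracking"

def step_pose(pose, v, omega, dt):
    """Advance a planar pose (x, y, heading) by one control cycle of length dt."""
    x, y, theta = pose
    return (x + v * math.cos(theta) * dt,
            y + v * math.sin(theta) * dt,
            theta + omega * dt)

def wheel_speeds(v, omega, track_width):
    """Split body velocities into (left, right) wheel linear speeds for a differential drive."""
    return (v - omega * track_width / 2.0, v + omega * track_width / 2.0)

# Hypothetical cycle: obstacle 0.5 m away, safety threshold 0.3 m, driving straight.
mode = select_mode(0.5, 0.3)
pose = step_pose((0.0, 0.0, 0.0), v=0.5, omega=0.0, dt=1.0)
left, right = wheel_speeds(1.0, 0.0, track_width=0.4)
```

With a short cycle time, each update is a small straight displacement followed by a small heading correction, which matches the incremental motion approximation in the text.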

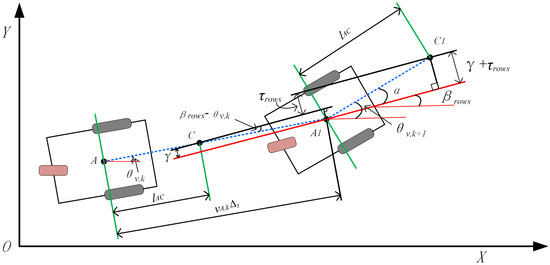

The tracker refreshes output through the robot’s heading, which is defined as the lateral offset of a navigation point (point C in Figure 7) that is perpendicular to the track and heading . On each refresh, navigation point C is perpendicular to the robot heading of the track distance, and then output. It can be seen from Figure 7 that the robot heading changes at frame k + 1 as follows [42,43]:

: the angle of the robot with respect to the track angle , which can be seen in Figure 7.

where is described as follows:

is the distance from point A to point C.

By combining the above equations, the angle of robot can be obtained as shown in Equation (18).

Figure 7.

Heading change of the robot during obstacle avoidance.

5. Experimental Test Results of Autonomous Robot

5.1. System Composition

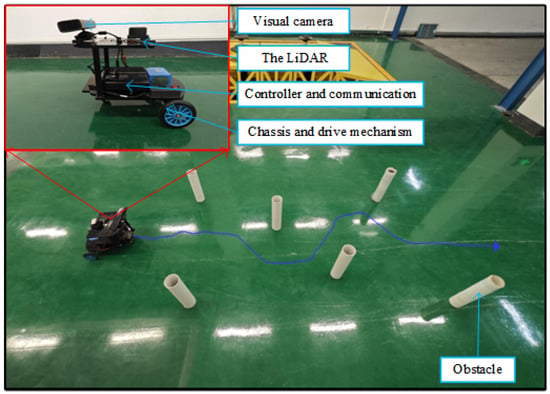

To verify the performance of the robot visual navigation and obstacle avoidance control system described in Sections 3 and 4, a Three-Wheeled Differential Mobile Robot (TWDMR) was used [50]. It is mainly composed of a vision camera, a LiDAR (RPLIDAR S2, measuring radius 30 m, sampling frequency 32 kHz), a wheeled robot chassis, and a ROS software control system. The vision camera is used for visual navigation and collects environmental image information; together with the LiDAR, it supports data acquisition and environment modeling for the obstacle avoidance system. The wheeled robot chassis includes stepper motors on both sides and universal wheels, and uses differential control to change or maintain course. The ROS control system was used to verify the feasibility of the algorithm; it integrates the visual navigation control algorithm based on image features and the obstacle avoidance control algorithm based on LiDAR data.

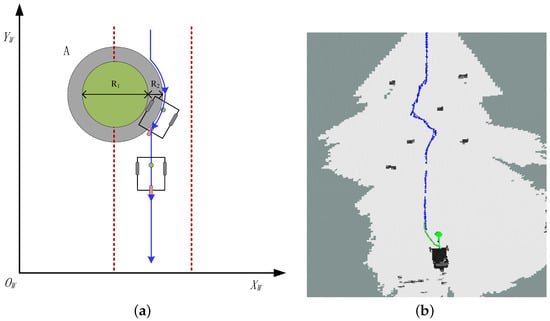

5.2. Test of Obstacle Avoidance System

Figure 13 shows the obstacle avoidance test scenario for the autonomous robot, which integrates the obstacle avoidance control algorithm developed in this paper. To increase the complexity of the test, six plastic tubes with a diameter of 20 cm were placed at different positions and angles as obstacles. The robot was driven through the six obstacles at speeds of 0.25 m/s, 0.5 m/s, and 1 m/s. Following the theoretical analysis in Section 3, the circle around each obstacle’s cluster center, obtained with the improved clustering algorithm, is enlarged. The expanded part is shown in gray, and the theoretical obstacle avoidance trajectory of the robot as it passes the obstacles is shown in Figure 14a. LiDAR scans the surrounding environment in real time, and the robot’s trajectory is located and measured with the SLAM mapping method running on the computer’s Ubuntu system, which gives the robot’s position and pose in real time. The results show that the robot successfully passes through the obstacles and avoids them at all three speeds. The actual obstacle avoidance trajectory of the robot is shown in Figure 14b. This test preliminarily shows that the autonomous robot (TWDMR) designed in this paper, equipped only with a LiDAR and a vision camera (detailed parameters are given in Section 5.1), achieves obstacle avoidance with the developed algorithm. It does not require sophisticated GPS navigation or RTK localization modules, which reflects its convenience and low cost.

Figure 13.

A diagram of the field test of the Three-Wheeled Mobile Differential Robot (TWMDR).

Figure 14.

(a) The theoretical obstacle avoidance analysis diagram of robot; (b) the SLAM obstacle avoidance trajectory diagram of robot.

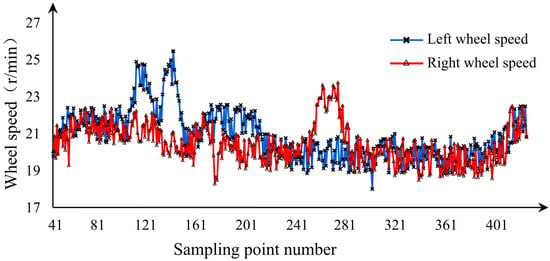

5.3. Test of Visual Guidance System

The experimental test is carried out on the robot (TWDMR), which uses the vision system to control steering. The visual guidance trajectory and the operational specifications of the robot platform are shown in Table 4. The test follows a guided trajectory that first runs straight, then turns, and finally runs straight again. Figure 15 depicts the real-time variation in the left and right wheel speeds of the robot. Specifically, the robot starts to turn right at sample 100 and finishes the right turn at sample 150; it starts to turn left at sample 250 and finishes the left turn at sample 290. In the case of linear guidance trajectory, the deviation between the rotation speed of the left and right wheels of the robot platform is within r/min.

Table 4.

The operating parameters of the robot platform.

Figure 15.

Real-time change in robot left and right wheel speed.

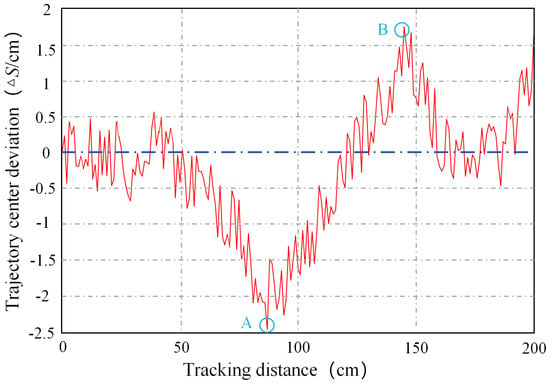

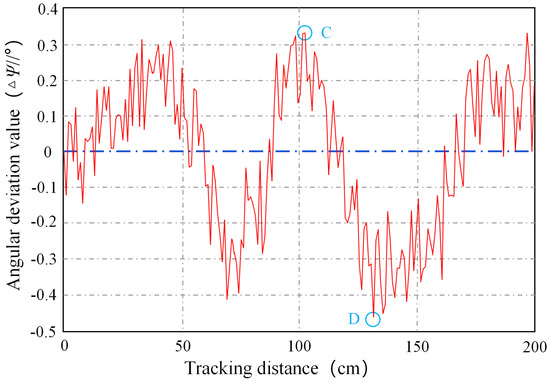

Figure 16 shows the test results of the robot trajectory tracking, including the transverse center deviation and angular deviation , where the negative value represents the left deviation and the positive value is the right deviation. The test results show that the center deviation of the navigation track is within the range of −2.5 cm to 2 cm, the maximum deviation to the left of the track center is shown at point A in Figure 16, and the maximum deviation to the right is shown at point B in Figure 16. Meanwhile, the angle deviation is within the range of to , the maximum deviation to the left is shown at point D in Figure 17, and the maximum deviation to the right is shown at point C in Figure 17.

Figure 16.

The deviation of the lateral center of the trajectory.

Figure 17.

The deviation of trajectory angle.

6. Conclusions and Future Works

In this paper, we design and develop an agricultural mobile robot platform with visual navigation and obstacle avoidance capabilities to address the problem of low-cost obstacle detection for agricultural unmanned mobile platforms. As an obstacle detection sensor, LiDAR suffers from several drawbacks, such as a small amount of data, incomplete information about the detected environment, and redundant obstacle information during clustering. We therefore propose an improved clustering algorithm for the obstacle avoidance system to extract dynamic and static obstacle information and obtain the centers of obstacle scan points. The maximum diameter of the convex polygon of the cluster data is then obtained by combining the rotating calipers algorithm with the convex hull algorithm, the obstacle danger zone is established around the cluster center, and a VFH+ obstacle avoidance path planning and heading control method is proposed. Compared with the field linear navigation system built by Higuti et al. [18] in the same context using LiDAR only, our autonomous mobile robot is capable of turning and avoiding obstacles. In addition, color space analysis and feature analysis of complex orchard environment images were performed to select the H component of the HSV color space, which was used to obtain the ideal visual guidance trajectory images by means of mean filtering and erosion. Finally, the effectiveness and robustness of the proposed algorithm are verified on the three-wheeled mobile differential robot platform. Compared with the LiDAR–vision–inertial odometer fusion navigation method for agricultural vehicles proposed by Zhao et al. [51], our approach achieves obstacle avoidance and navigation for agricultural robots using only a LiDAR and a camera. It offers simplicity, low cost, and efficiency, and more readily meets the operational needs of the agricultural environment and farmers.

In this paper, the visual navigation and obstacle avoidance functions of agricultural mobile robots are realized on the three-wheeled mobile differential robot platform. In contrast to the GNSS- and GPS-based agricultural navigation methods proposed by Ball et al. and Winterhalter et al. [11,23], the proposed system keeps agricultural robots operating stably in GNSS-denied environments. It is expected to shorten the development cycle of agricultural robot navigation systems for situations in which GNSS is unavailable, and has the advantages of low cost, simple operation, and good stability. In future work, we will transfer the proposed algorithm and hardware platform to driverless agricultural tractors for field trials and add environmental map construction for agricultural tractors.

Author Contributions

Conceptualization, C.H. and W.W.; Data curation, C.H.; Formal analysis, W.W. and X.L.; Investigation, X.L.; Project administration, J.L.; Resources, W.W.; Software, W.W.; Validation, X.L.; Writing—original draft, C.H.; Writing—review and editing, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 62203176, the 2024 Basic and Applied Research Project of Guangzhou Science and Technology Plan under Grant SL2024A04j01318, and the China Scholarship Council under Grant 202308440524.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Albiero, D.; Garcia, A.P.; Umezu, C.K.; de Paulo, R.L. Swarm robots in mechanized agricultural operations: A review about challenges for research. Comput. Electron. Agric. 2022, 193, 106608.

2. Jin, Y.; Liu, J.; Xu, Z.; Yuan, S.; Li, P.; Wang, J. Development status and trend of agricultural robot technology. Int. J. Agric. Biol. Eng. 2021, 14, 1–19.

3. Li, J.; Li, J.; Zhao, X.; Su, X.; Wu, W. Lightweight detection networks for tea bud on complex agricultural environment via improved YOLO v4. Comput. Electron. Agric. 2023, 211, 107955.

4. Sparrow, R.; Howard, M. Robots in agriculture: Prospects, impacts, ethics, and policy. Precis. Agric. 2021, 22, 818–833.

5. Ju, C.; Kim, J.; Seol, J.; Son, H.I. A review on multirobot systems in agriculture. Comput. Electron. Agric. 2022, 202, 107336.

6. Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

7. Liu, X.; Wang, J.; Li, J. URTSegNet: A real-time segmentation network of unstructured road at night based on thermal infrared images for autonomous robot system. Control. Eng. Pract. 2023, 137, 105560.

8. Dutta, A.; Roy, S.; Kreidl, O.P.; Bölöni, L. Multi-robot information gathering for precision agriculture: Current state, scope, and challenges. IEEE Access 2021, 9, 161416–161430.

9. Malavazi, F.B.; Guyonneau, R.; Fasquel, J.B.; Lagrange, S.; Mercier, F. LiDAR-only based navigation algorithm for an autonomous agricultural robot. Comput. Electron. Agric. 2018, 154, 71–79.

10. Rouveure, R.; Faure, P.; Monod, M.O. PELICAN: Panoramic millimeter-wave radar for perception in mobile robotics applications, Part 1: Principles of FMCW radar and of 2D image construction. Robot. Auton. Syst. 2016, 81, 1–16.

11. Ball, D.; Upcroft, B.; Wyeth, G.; Corke, P.; English, A.; Ross, P.; Patten, T.; Fitch, R.; Sukkarieh, S.; Bate, A. Vision-based obstacle detection and navigation for an agricultural robot. J. Field Robot. 2016, 33, 1107–1130.

12. Santos, L.C.; Santos, F.N.; Valente, A.; Sobreira, H.; Sarmento, J.; Petry, M. Collision avoidance considering iterative Bézier based approach for steep slope terrains. IEEE Access 2022, 10, 25005–25015.

13. Monarca, D.; Rossi, P.; Alemanno, R.; Cossio, F.; Nepa, P.; Motroni, A.; Gabbrielli, R.; Pirozzi, M.; Console, C.; Cecchini, M. Autonomous Vehicles Management in Agriculture with Bluetooth Low Energy (BLE) and Passive Radio Frequency Identification (RFID) for Obstacle Avoidance. Sustainability 2022, 14, 9393.

14. Blok, P.; Suh, H.; van Boheemen, K.; Kim, H.J.; Gookhwan, K. Autonomous in-row navigation of an orchard robot with a 2D LIDAR scanner and particle filter with a laser-beam model. J. Inst. Control. Robot. Syst. 2018, 24, 726–735.

15. Gao, X.; Li, J.; Fan, L.; Zhou, Q.; Yin, K.; Wang, J.; Song, C.; Huang, L.; Wang, Z. Review of wheeled mobile robots’ navigation problems and application prospects in agriculture. IEEE Access 2018, 6, 49248–49268.

16. Wang, T.; Chen, B.; Zhang, Z.; Li, H.; Zhang, M. Applications of machine vision in agricultural robot navigation: A review. Comput. Electron. Agric. 2022, 198, 107085.

17. Durand-Petiteville, A.; Le Flecher, E.; Cadenat, V.; Sentenac, T.; Vougioukas, S. Tree detection with low-cost three-dimensional sensors for autonomous navigation in orchards. IEEE Robot. Autom. Lett. 2018, 3, 3876–3883.

18. Higuti, V.A.; Velasquez, A.E.; Magalhaes, D.V.; Becker, M.; Chowdhary, G. Under canopy light detection and ranging-based autonomous navigation. J. Field Robot. 2019, 36, 547–567.

19. Guyonneau, R.; Mercier, F.; Oliveira, G.F. LiDAR-Only Crop Navigation for Symmetrical Robot. Sensors 2022, 22, 8918.

20. Nehme, H.; Aubry, C.; Solatges, T.; Savatier, X.; Rossi, R.; Boutteau, R. Lidar-based structure tracking for agricultural robots: Application to autonomous navigation in vineyards. J. Intell. Robot. Syst. 2021, 103, 61.

21. Hiremath, S.A.; Van Der Heijden, G.W.; Van Evert, F.K.; Stein, A.; Ter Braak, C.J. Laser range finder model for autonomous navigation of a robot in a maize field using a particle filter. Comput. Electron. Agric. 2014, 100, 41–50.

22. Zhao, R.M.; Zhu, Z.; Chen, J.N.; Yu, T.J.; Ma, J.J.; Fan, G.S.; Wu, M.; Huang, P.C. Rapid development methodology of agricultural robot navigation system working in GNSS-denied environment. Adv. Manuf. 2023, 11, 601–617.

23. Winterhalter, W.; Fleckenstein, F.; Dornhege, C.; Burgard, W. Localization for precision navigation in agricultural fields—Beyond crop row following. J. Field Robot. 2021, 38, 429–451.

24. Chen, W.; Sun, J.; Li, W.; Zhao, D. A real-time multi-constraints obstacle avoidance method using LiDAR. J. Intell. Fuzzy Syst. 2020, 39, 119–131.

25. Lenac, K.; Kitanov, A.; Cupec, R.; Petrović, I. Fast planar surface 3D SLAM using LIDAR. Robot. Auton. Syst. 2017, 92, 197–220.

26. Park, J.; Cho, N. Collision Avoidance of Hexacopter UAV Based on LiDAR Data in Dynamic Environment. Remote Sens. 2020, 12, 975.

27. Li, J.; Wang, J.; Peng, H.; Hu, Y.; Su, H. Fuzzy-Torque Approximation-Enhanced Sliding Mode Control for Lateral Stability of Mobile Robot. IEEE Trans. Syst. Man Cybern. 2022, 52, 2491–2500.

28. Wang, D.; Chen, X.; Liu, J.; Liu, Z.; Zheng, F.; Zhao, L.; Li, J.; Mi, X. Fast Positioning Model and Systematic Error Calibration of Chang’E-3 Obstacle Avoidance Lidar for Soft Landing. Sensors 2022, 22, 7366.

29. Kim, J.S.; Lee, D.H.; Kim, D.W.; Park, H.; Paik, K.J.; Kim, S. A numerical and experimental study on the obstacle collision avoidance system using a 2D LiDAR sensor for an autonomous surface vehicle. Ocean Eng. 2022, 257, 111508.

30. Asvadi, A.; Premebida, C.; Peixoto, P.; Nunes, U. 3D Lidar-based static and moving obstacle detection in driving environments: An approach based on voxels and multi-region ground planes. Robot. Auton. Syst. 2016, 83, 299–311.

31. Baek, J.; Noh, G.; Seo, J. Robotic Camera Calibration to Maintain Consistent Percision of 3D Trackers. Int. J. Precis. Eng. Manuf. 2021, 22, 1853–1860.

32. Li, J.; Dai, Y.; Su, X.; Wu, W. Efficient Dual-Branch Bottleneck Networks of Semantic Segmentation Based on CCD Camera. Remote Sens. 2022, 14, 3925.

33. Dong, H.; Weng, C.Y.; Guo, C.; Yu, H.; Chen, I.M. Real-time avoidance strategy of dynamic obstacles via half model-free detection and tracking with 2d lidar for mobile robots. IEEE/ASME Trans. Mechatron. 2020, 26, 2215–2225.

34. Choi, Y.; Jimenez, H.; Mavris, D.N. Two-layer obstacle collision avoidance with machine learning for more energy-efficient unmanned aircraft trajectories. Robot. Auton. Syst. 2017, 98, 158–173.

35. Gao, F.; Li, C.; Zhang, B. A dynamic clustering algorithm for LiDAR obstacle detection of autonomous driving system. IEEE Sens. J. 2021, 21, 25922–25930.

36. Jin, X.Z.; Zhao, Y.X.; Wang, H.; Zhao, Z.; Dong, X.P. Adaptive fault-tolerant control of mobile robots with actuator faults and unknown parameters. IET Control. Theory Appl. 2019, 13, 1665–1672.

37. Zhang, H.D.; Liu, S.B.; Lei, Q.J.; He, Y.; Yang, Y.; Bai, Y. Robot programming by demonstration: A novel system for robot trajectory programming based on robot operating system. Adv. Manuf. 2020, 8, 216–229.

38. Raikwar, S.; Fehrmann, J.; Herlitzius, T. Navigation and control development for a four-wheel-steered mobile orchard robot using model-based design. Comput. Electron. Agric. 2022, 202, 107410.

39. Gheisarnejad, M.; Khooban, M.H. Supervised Control Strategy in Trajectory Tracking for a Wheeled Mobile Robot. IET Collab. Intell. Manuf. 2019, 1, 3–9.

40. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

41. Yang, C.; Chen, C.; Wang, N.; Ju, Z.; Fu, J.; Wang, M. Biologically inspired motion modeling and neural control for robot learning from demonstrations. IEEE Trans. Cogn. Dev. Syst. 2018, 11, 281–291.

42. Wu, J.; Wang, H.; Zhang, M.; Yu, Y. On obstacle avoidance path planning in unknown 3D environments: A fluid-based framework. ISA Trans. 2021, 111, 249–264.

43. Liu, C.; Tomizuka, M. Real time trajectory optimization for nonlinear robotic systems: Relaxation and convexification. Syst. Control. Lett. 2017, 108, 56–63.

44. Zhang, Q.; Chen, M.S.; Li, B. A visual navigation algorithm for paddy field weeding robot based on image understanding. Comput. Electron. Agric. 2017, 143, 66–78.

45. Liu, Q.; Yang, F.; Pu, Y.; Zhang, M.; Pan, G. Segmentation of farmland obstacle images based on intuitionistic fuzzy divergence. J. Intell. Fuzzy Syst. 2016, 31, 163–172.

46. Liu, M.; Ren, D.; Sun, H.; Yang, S.X.; Shao, P. Orchard Areas Segmentation in Remote Sensing Images via Class Feature Aggregate Discriminator. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

47. Mills, L.; Flemmer, R.; Flemmer, C.; Bakker, H. Prediction of kiwifruit orchard characteristics from satellite images. Precis. Agric. 2019, 20, 911–925.

48. Liu, S.; Wang, X.; Li, S.; Chen, X.; Zhang, X. Obstacle avoidance for orchard vehicle trinocular vision system based on coupling of geometric constraint and virtual force field method. Expert Syst. Appl. 2022, 190, 116216.

49. Begnini, M.; Bertol, D.W.; Martins, N.A. A robust adaptive fuzzy variable structure tracking control for the wheeled mobile robot: Simulation and experimental results. Control. Eng. Pract. 2017, 64, 27–43.

50. Zhang, F.; Zhang, W.; Luo, X.; Zhang, Z.; Lu, Y.; Wang, B. Developing an IoT-enabled cloud management platform for agricultural machinery equipped with automatic navigation systems. Agriculture 2022, 12, 310.

51. Zhao, Z.; Zhang, Y.; Long, L.; Lu, Z.; Shi, J. Efficient and adaptive lidar visual inertial odometry for agricultural unmanned ground vehicle. Int. J. Adv. Robot. Syst. 2022, 19, 17298806221094925.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).