Abstract

Deep learning (DL) models are gaining popularity in forest variable prediction using Earth observation (EO) images. However, in practical forest inventories, reference datasets are often represented by plot- or stand-level measurements, while high-quality representative wall-to-wall reference data for end-to-end training of DL models are rarely available. Transfer learning facilitates expansion of the use of deep learning models into areas with sub-optimal training data by allowing pretraining of the model in areas where high-quality teaching data are available. In this study, we perform a “model transfer” (or domain adaptation) of a pretrained DL model into a target area using plot-level measurements and compare performance versus other machine learning models. We use an earlier developed UNet based model (SeUNet) to demonstrate the approach on two distinct taiga sites with varying forest structure and composition. The examined SeUNet model uses multi-source EO data to predict forest height. Here, EO data are represented by a combination of Copernicus Sentinel-1 C-band SAR and Sentinel-2 multispectral images, ALOS-2 PALSAR-2 SAR mosaics and TanDEM-X bistatic interferometric radar data. The training study site is located in Finnish Lapland, while the target site is located in Southern Finland. By leveraging transfer learning, the SeUNet prediction achieved root mean squared error (RMSE) of m and R2 of 0.882, considerably more accurate than traditional benchmark methods. We expect such forest-specific DL model transfer can be suitable also for other forest variables and other EO data sources that are sensitive to forest structure.

1. Introduction

Forests cover approximately one-third of Earth’s landmass and play a key role in mitigating the effects of climate change by reducing the concentration of carbon dioxide in the atmosphere. Forests are fundamental for preserving biodiversity as they are the natural habitat for a myriad of plant and animal species. Several international initiatives, such as the Framework Convention on Climate Change and the Convention on Biological Diversity by the United Nations, as well as the European Union’s recent strategies like Forest 2021 and Biodiversity 2020, have heightened the need for enhanced forest monitoring at national and local levels by increasing the reporting requirements. Interest in voluntary certification schemes and diversification of forest uses (e.g., carbon or other ecosystem services) is also growing among forestry stakeholders, which typically requires improved forest monitoring approaches for verification purposes, further increasing the need for reliable high frequency information on forests.

The use of Earth observation (EO) data has become an integral part of forest monitoring due to numerous satellite sensor missions designed for environmental monitoring during the last decades [1,2]. However, as satellite sensors typically cannot directly measure many forest attributes, indirect modeling approaches have been developed that combine EO data with ground based observations to provide a set of predictions presented in the form of a map. In wide-area forest mapping, statistical, physics-based, and machine learning (ML) methods have been used for modeling and prediction purposes [3]. Satellite remotely sensed data combined with in situ forest measurements can be considered a cost-effective means for producing forest attribute maps and forest estimates on various areal levels, but such predictions and estimates can have considerable uncertainty [2].

EO datasets used for mapping of boreal forest resources include both optical and SAR datasets [3,4]. Optical datasets were widely used historically and are particularly suitable for mapping forest cover and tree species [5]. The SAR signal, especially at lower frequencies, is sensitive to forest biomass and structure [3]. Multitemporal and polarimetric features provide improved accuracies [6,7]. Interferometric SAR datasets have particular sensitivity to the vertical structure of forest that makes them very suitable predictors for mapping forest structure and height [8,9].

Deep learning (DL) methods are widely adopted for various image classification and semantic segmentation tasks [10,11,12]. To date, several fully convolutional and recurrent neural networks have been demonstrated in forest remote sensing [13,14,15,16,17,18,19,20,21,22]. These models often provide improved accuracy in forest classification or prediction of forest variables, as well as in forest change mapping. However, training of DL models often requires a fully segmented reference label, such as airborne laser scanner (ALS)-based forest attribute maps that are costly and not available over wide areas.

Instead, reference data from forests are typically available as field sample plot measurements. Sample plots have traditionally been used to produce sample-based areal estimates of forest resources, but in recent decades they have also served as in situ data for training and evaluating remote sensing based models.

Within the DL context, such reference data can be considered weak labels [18] and they are not optimal for training a DL model. In addition to the typically insufficient number of sample plots, they lack information on the spatial context.

Although there is an increasing amount of ALS data available over boreal forests, current airborne campaigns do not provide the spatial and temporal coverage required to meet the needs of modern forest inventories. For example, annual information would be needed to support forest management decisions and monitoring requirements in an operational context. Therefore, the lack of appropriate ALS data greatly reduces the utility of DL models for operational forest monitoring. An effective way to reduce reference data requirements is to use transfer learning approaches as successfully adopted earlier into such remote sensing tasks as land-use classification, SAR target recognition and forest change detection [23,24,25,26]. Transfer learning is a model training strategy that leverages pre-existing knowledge learned from the source task instead of training the model “from scratch” in the target domain only. The basic idea behind transfer learning is that the features learned by the pretrained model can be relevant and generalizable in the target domain, especially when the source and target domains share common underlying patterns or characteristics [27]. With transfer learning techniques, it may be possible to fine-tune DL models to the area of interest using data for field sample plots that are generally more widely available in managed forest areas. This approach would open possibilities for both geographic (i.e., from an area that has ALS data to an area that does not) and temporal (i.e., from a year that has ALS data to a year that does not) transfer of DL models, facilitating wider usability of DL-based approaches in operational forest monitoring.

In this study, we aim to demonstrate the potential of transfer learning in the context of forest monitoring with deep learning models. With this purpose, we focus on one of the key forest attributes, forest height, and demonstrate the improvement in prediction accuracy brought by transfer learning. We pretrain an earlier developed SeUNet deep learning model [15] with ALS-based data in a training site situated in Lapland in the northern part of Finland. Further, we apply the pretrained model in the target site in the southern part of Finland, with and without fine-tuning the model with field sample plots from the target area. We highlight the effects of the model fine-tuning with field plot data and compare the resulting accuracy to the accuracies of traditional machine learning methods trained in the target area using the same field plots. We also investigate scenarios where reference forest plots in the target site are very scarce or measurements for certain forest strata are completely missing. Additionally, we compare various EO sensors in terms of prediction accuracies.

2. Materials and Methods

2.1. UNet Model and Its Derivative/Improved Models

One of the most frequently used convolutional neural network (CNN) architectures for semantic segmentation of satellite images, including forest mask segmentation, is UNet. UNet is a variant of a convolutional network originally introduced for biomedical image segmentation [28] and is now often used in various semantic segmentation and regression tasks [15,19,29]. The basic UNet (also known as Vanilla UNet) uses a convolutional network to extract image features. The model consists of an encoder and a decoder, which are connected by skip connections. The encoder is responsible for feature extraction, and the decoder restores the feature map to its original size. The model is symmetrical in structure and has a double-convolution structure at its core, which is made up of a 2-D convolution, batch normalization, and ReLU activation. This structure allows UNet to extract deeper features of the input data and maintain a good fusion ability at all levels while keeping the feature map size unchanged. The overall architecture of UNet makes it well suited for pixel-level classification and regression tasks.

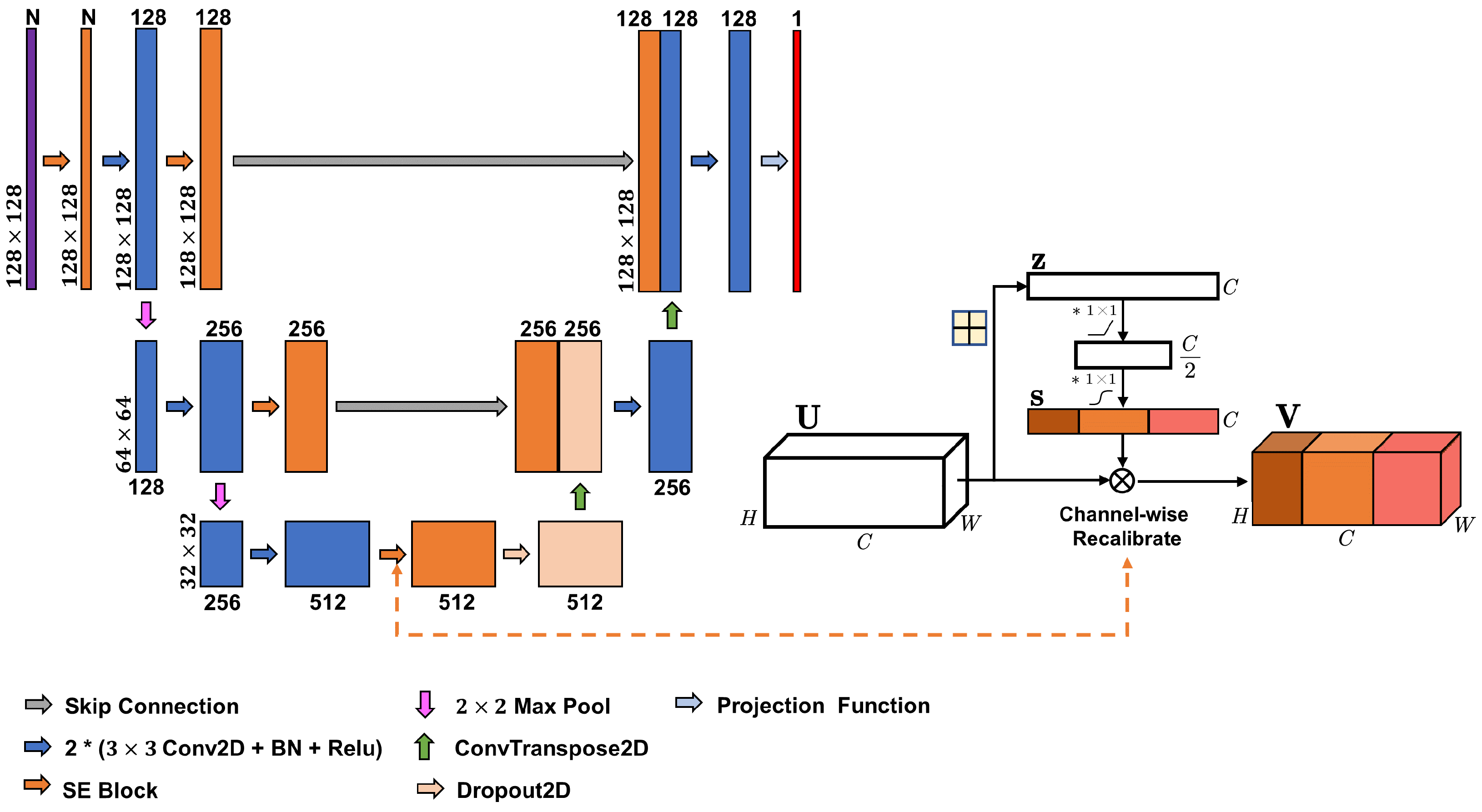

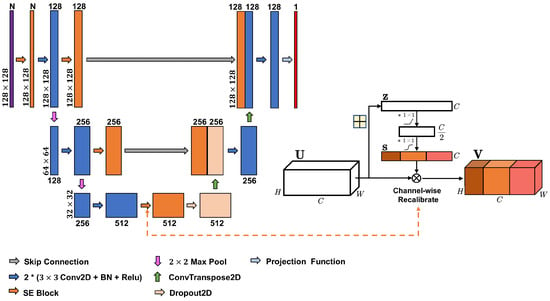

Here, we use an improved version called SeUNet, which is suitable for producing spatially explicit pixel-level forest inventory using EO data [15] when trained with fully segmented (spatially explicit) image patches, such as ALS-based forest inventory data. Within SeUNet, a squeeze-and-excitation attention module recalibrates the multi-source features using channel self-attention to improve the accuracy of predictions with limited reference data. SeUNet was superior to the basic UNet model and was shown to be particularly effective in boreal forest height mapping using Sentinel-1 time series and Sentinel-2 datasets [15]. It was also found superior to several other enhanced UNet-based models in predicting forest height, cover and density [30]. The structure of the model is shown in Figure 1.

Figure 1.

SeUNet model for regression task with embedded squeeze-excitation blocks.
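To make the channel recalibration idea concrete, the following is a minimal pure-Python sketch of a squeeze-and-excitation step. It assumes the standard formulation (global average pooling, a two-layer bottleneck with ReLU and sigmoid, then channel-wise rescaling); the function name and the weight matrices `w_reduce` and `w_expand` are illustrative and are not taken from the SeUNet implementation in [15].

```python
import math

def squeeze_excite(feature_maps, w_reduce, w_expand):
    """Rescale each channel of `feature_maps` by a gate in (0, 1).

    feature_maps: list of C channels, each a list of rows (H x W floats).
    w_reduce: R x C weights of the first fully connected layer (hypothetical).
    w_expand: C x R weights of the second fully connected layer (hypothetical).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_maps
    ]
    # Excitation: bottleneck layer with ReLU, then expansion with sigmoid.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce
    ]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Recalibration: multiply every pixel of a channel by that channel's gate.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_maps, gates)
    ]
    return scaled, gates
```

In a multi-source setting, each gate can be read as a learned weight on one feature channel, which is how such a block can down-weight less informative input layers.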

2.2. Logic of Transfer Learning

The concept of transfer learning in deep learning involves using a pretrained model on a large dataset as a starting point for training on a new, smaller dataset [27,31,32]. Since both study areas (initial and target sites) are represented by boreal forests, we can safely assume their latent representations strongly overlap in EO feature space, although the specific forest characteristics may be different (considerably more sparse forest in Lapland). This means the prior knowledge learned from the source site can mitigate the negative influence brought by limited reference, e.g., plot-level forest reference that limits inferring spatial context (neighbourhood features). Our hypothesis is that by leveraging the knowledge gained from the pretraining on a spatially explicit dataset, we can achieve better results compared to the end-to-end training from scratch using conventional statistical and machine learning approaches with limited reference forest plot data collected at the new target site.

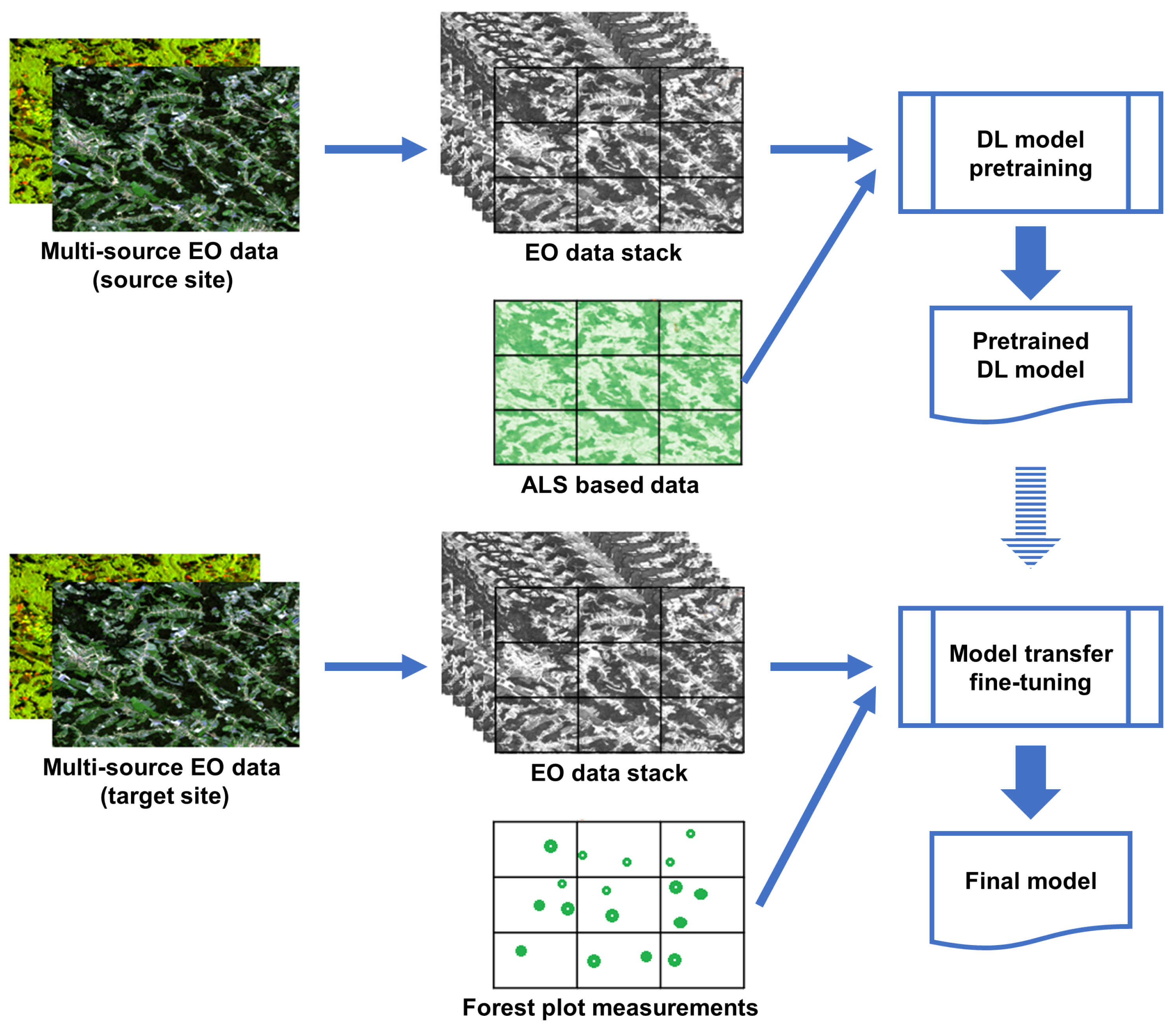

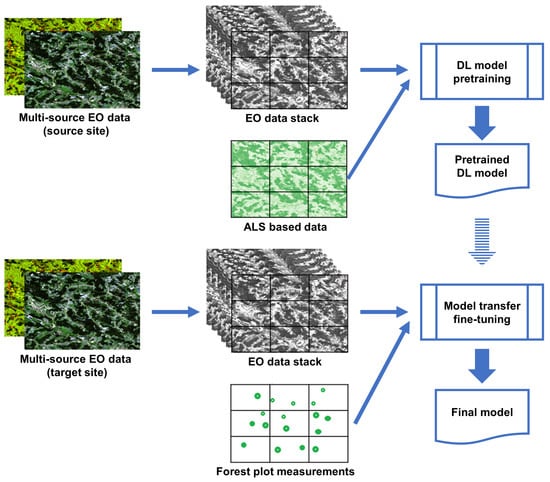

2.3. Our Approach

Our suggested approach follows the study logic shown in Figure 2. Firstly, a SeUNet model is pretrained using a multi-source EO-dataset and spatially explicit reference based on ALS data. This is done over the pretraining site where such reference data are available. Both spectral and spatial forest signatures are learned as parameters of the pretrained UNet model. Various combinations of input satellite EO data are tested, resulting in a set of DL models.

Figure 2.

Model transfer logic.

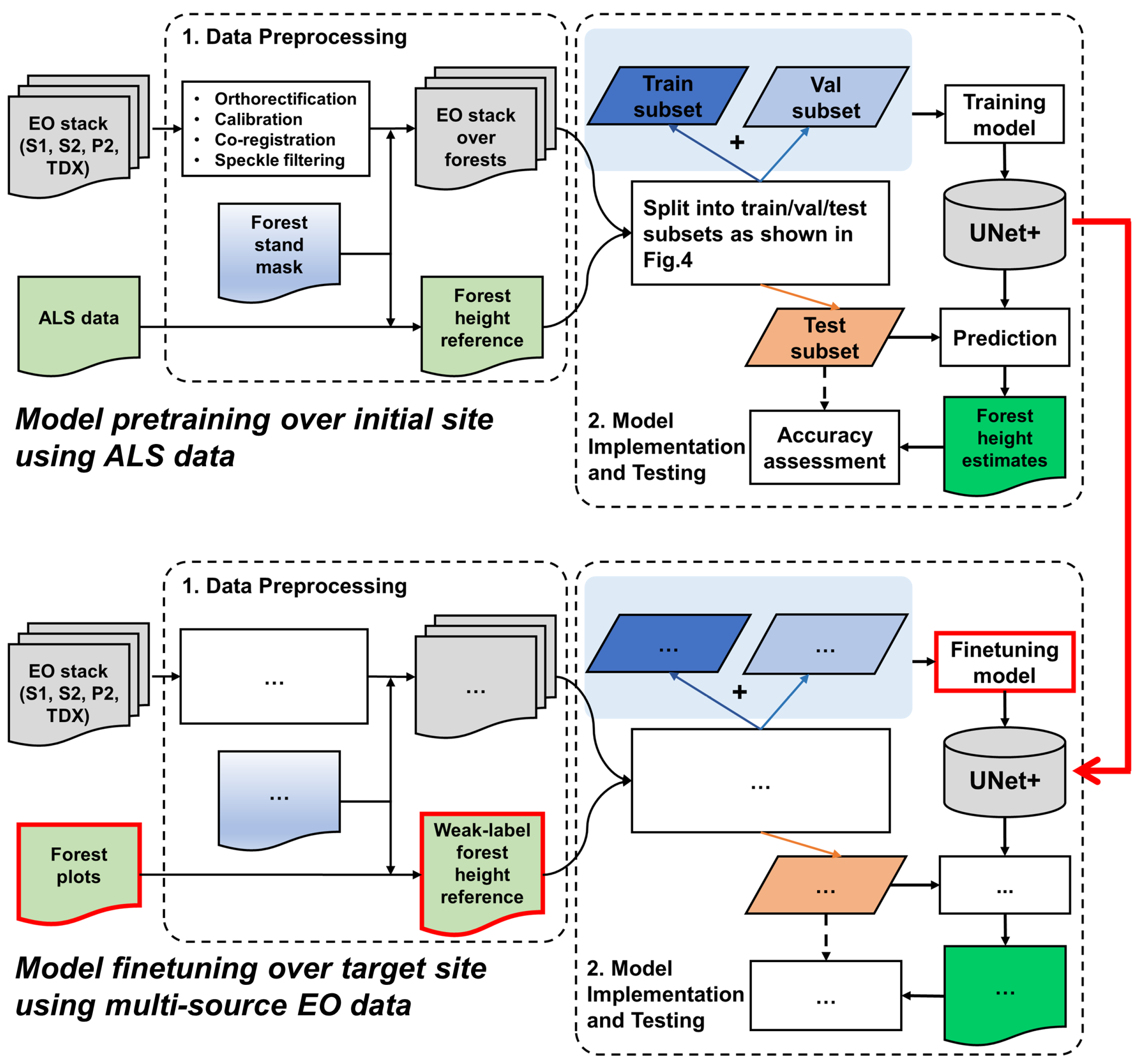

In the second stage, the learned SeUNet parameters are used as the initial weights for model training over the target site. In contrast to the pretraining site, only a very sparse reference dataset is available from the target site, represented by forest plots. The model is fine-tuned by including only pixels that have known reference value in the loss computation. Both the pretraining phase and DL model training over the target site are illustrated in Figure 3. We also investigate scenarios for which only a fraction (5–10%) of the originally available forest plots are used, and when several forest strata are underrepresented.

Figure 3.

Overall processing approach: ellipses in the model fine-tuning section over target site (bottom part) denote the repeated steps from the model pretraining phase (upper part).
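The fine-tuning step restricts the loss to pixels with a known reference value. A minimal sketch of such a masked loss is given below. It assumes a squared-error loss, which the text does not specify, and a hypothetical 0/1 mask encoding of plot-covered pixels; the function name is illustrative.

```python
def masked_mse(pred, target, mask):
    """Mean squared error over pixels where `mask` is truthy.

    pred, target, mask: 2-D lists (rows of pixels) with identical shape.
    mask marks pixels covered by a field plot (assumed encoding: 1 or 0).
    """
    squared_errors = [
        (p - t) ** 2
        for pred_row, target_row, mask_row in zip(pred, target, mask)
        for p, t, m in zip(pred_row, target_row, mask_row)
        if m
    ]
    if not squared_errors:
        raise ValueError("patch contains no labeled pixels")
    return sum(squared_errors) / len(squared_errors)
```

Unlabeled pixels contribute nothing to the loss, so the gradient in each patch is driven only by the few plot locations, while the convolutional layers still see the full spatial context of the patch.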

Lastly, our predictions are compared to predictions obtained using traditional EO-based forest inventory methods, including multiple linear regression (MLR) and the popular k-nearest neighbors (k-NN) technique [7,33,34,35]. MLR is a basic regression approach often used for modeling the relationship between response variables such as growing stock volume (GSV) or forest biomass and SAR and optical image features [4,34,36]. k-NN is an established nonparametric and distribution-free method widely used for forest variable prediction [37]. Predictions are obtained as weighted linear combinations of attribute values for a set of k nearest units selected from a reference set of units with known values. The choice of these units is determined by a distance metric defined in the auxiliary variable space.
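The k-NN prediction rule described above can be sketched as follows. The Euclidean distance, the inverse-distance weights and the default `k=5` are assumptions made for illustration; the text does not state which distance metric, weighting or k were used.

```python
import math

def knn_predict(query, ref_features, ref_values, k=5):
    """Predict a value as the inverse-distance-weighted mean of k neighbors.

    query: feature vector of the unit to predict.
    ref_features, ref_values: features and known values of reference units.
    """
    distances = sorted(
        (math.dist(query, features), value)
        for features, value in zip(ref_features, ref_values)
    )
    nearest = distances[:k]
    # Small constant avoids division by zero for exact feature matches.
    weights = [1.0 / (d + 1e-9) for d, _ in nearest]
    return sum(w * v for w, (_, v) in zip(weights, nearest)) / sum(weights)
```

Because the prediction is a weighted average of observed reference values with non-negative weights, it can never fall outside the range of those values.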

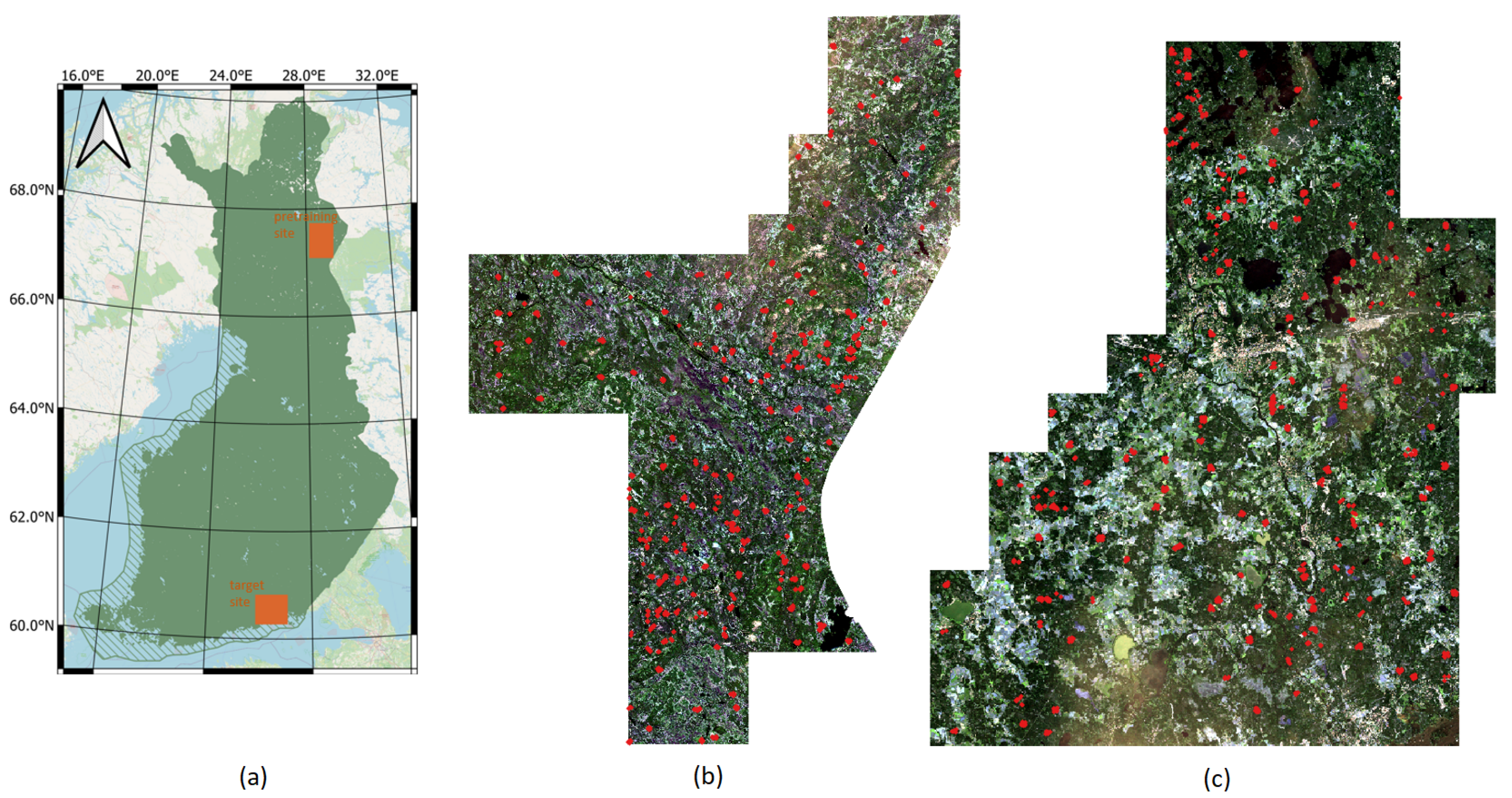

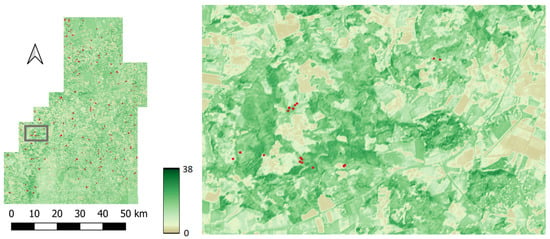

2.4. Study Sites, Satellite SAR and Optical Data

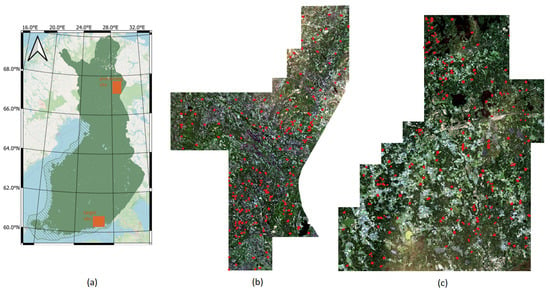

The study is performed in Finland over two geographically distinct areas, spatially separated by around 700 km (Figure 4). Both sites feature boreal forests, one in the northern boreal zone and the other in the southern boreal zone. The forests in northern Finland differ in many respects from the forests in southern Finland, which makes this pair of study sites particularly suitable for demonstrating the potential of DL model transfer. The northern site in Lapland, in the Salla municipality (hereafter called the Salla site), features a varying landscape of forests and open peatlands. The elevation above sea level ranges from less than 200 m to around 500 m. The forests are dominated by pine (60%) with some spruce (20%) and broadleaf forest (20%). The average volume in the study site is 95.3 m3/ha, with an average height of 10.8 m. The forests in Lapland are typically sparser than in the south, with an average stem density of around 1600 stems/ha in the Salla site. The southern site between the towns of Kouvola and Kotka (hereafter called the Kouvola site) features a typical southeastern Finnish landscape with a mixture of forests and agricultural areas. The site has only minor elevation differences, with the highest points generally less than 100 m above sea level. The forest has around 40% pine, more than 30% spruce and the rest broadleaf trees. The average volume in the study site is 156.4 m3/ha, with an average height of 15.0 m, both substantially greater than in the Salla site. Similarly, the stem density of around 2200 stems/ha in the Kouvola site is clearly greater than on the Salla site (1600 stems/ha).

Figure 4.

Study site locations in Finland (a), Sentinel-2 natural color composite image over the Salla (pretraining) site (b), and over the Kouvola (target) site (c). Red dots designate forest plot locations.

2.4.1. Satellite Image Data

The EO dataset consists of a 14-channel tensor with Sentinel-2 image bands, Sentinel-1 yearly composite bands (VV and VH bands) as well as ALOS-2 PALSAR-2 and TanDEM-X image features. The model training uses image patches of size 256 px × 256 px, with all input images preprocessed and further resampled to 10 m × 10 m pixel size using bilinear interpolation.

- Satellite optical data were represented by an ESA Sentinel-2 image acquired on 12 July 2018 over the Salla site and 11 August 2018 over the Kouvola site. The Level 2A surface reflectance product systematically generated by ESA and distributed in tiles of 110 × 110 km2 was used. The multi-spectral instrument on board Sentinel-2 satellites has 13 spectral bands with 10 m (four bands), 20 m (six bands) and 60 m (three bands) spatial resolutions. We used bands 2, 3, 4, 5, 8, 11, 12 in our analysis, which were found most useful for monitoring boreal forest in prior studies [38].

- SAR data are represented by an annual composite of 39 Sentinel-1 images acquired during 2018 in the same geometry. The original dual-polarization Sentinel-1A images available as GRD (ground range detected) products were radiometrically terrain-flattened and orthorectified with VTT in-house software using local digital elevation model available from National Land Survey of Finland [39]. Final preprocessed images were in gamma-naught format [40].

- L-band SAR imagery was represented by JAXA mosaic produced from dual-pol ALOS-2 PALSAR-2 images acquired during 2018.

- Interferometric SAR layers were represented by TanDEM-X images collected during summer 2018. The TanDEM-X canopy height model was calculated by subtracting the topographic phase (calculated from a local topographic map) from the TanDEM-X interferometric phase in slant range, followed by phase-to-height conversion and geocoding of the resulting height product. It is hereafter referred to as the interferometric canopy height model (ICHM). Additionally, TanDEM-X coherence magnitude was used as an image feature layer. ESA SNAP software was used for calculating the TanDEM-X image layers.

2.4.2. Reference Data

Over the initial pretraining site, ALS-based heights were used. ALS data were collected by National Land Survey of Finland during summer of 2018. Forest heights were estimated from ALS point clouds as average elevation of forest classified points over ground layer within 20 m × 20 m pixel cells. In this way, a wall-to-wall coverage of the pretraining study site with the reference height information was obtained.
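The gridding step can be illustrated with a short sketch that averages height above ground per square cell. It assumes the input points have already been classified as forest returns and normalized to height above ground (neither step is shown), and the function name and the dictionary output format are illustrative choices.

```python
from collections import defaultdict

def grid_mean_height(points, cell_size=20.0):
    """Average height above ground per square cell.

    points: iterable of (x, y, height_above_ground) in metres for returns
    already classified as forest (classification is not shown here).
    Returns {(col, row): mean_height} keyed by integer cell indices.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, h in points:
        cell = (int(x // cell_size), int(y // cell_size))
        sums[cell] += h
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}
```

Cells without any forest-classified points are simply absent from the result, which corresponds to pixels without a reference value.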

Reference data over the target Kouvola site were represented by data for a sample of plots measured by the Finnish Forest Centre in 2018. The plots were circular with three different radii: 9 m in young and advanced managed forests with a relatively large tree density; 12.62 m in forest with a small stem density but usually large volume due to the mature development stage and 5.64 m in seedling stands. Altogether 1064 field plots were used. Two thirds of the plots, 709 of them, were used for model training (model transfer), while the remaining 355 (selected as every third plot after arranging the plots in the order by volume) were used for the uncertainty assessment.
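The systematic split can be sketched as below. Starting the count at the first plot of the ordered list is an assumption (the text does not say where counting starts), but with 1064 plots this choice reproduces the reported sizes of 709 training and 355 evaluation plots. The `volume` key is a hypothetical field name.

```python
def split_every_third(plots, key):
    """Order plots by `key` and hold out every third one for evaluation."""
    ordered = sorted(plots, key=key)
    # Offset 0 is an assumption; the text does not say where counting starts.
    test = ordered[0::3]
    train = [p for i, p in enumerate(ordered) if i % 3 != 0]
    return train, test

# Usage with synthetic plots carrying a hypothetical "volume" attribute.
plots = [{"volume": float(v)} for v in range(1064)]
train_plots, test_plots = split_every_third(plots, key=lambda p: p["volume"])
```

Ordering by volume before the systematic selection keeps the evaluation set spread over the whole volume range rather than concentrated in one stratum.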

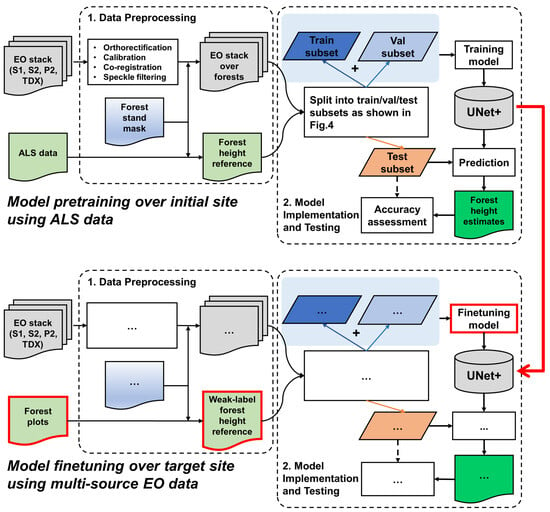

2.5. Implementation Details

In model pretraining, the wall-to-wall reference and EO data were first cropped into 256 px × 256 px non-overlapping image patches in spatial dimension. The non-forested regions were removed by masking out corresponding areas on both EO data and reference data. Additionally, several patches with forest cover proportion less than 20% were removed. In total, 614 image patches were prepared, half of which (307 patches) were randomly assigned to the testing subset, 10% were assigned to the validation subset and the remaining patches were used in the model training. Later, after the data augmentations that included in situ spatial shifting and rotations, 1433 augmented training patches were generated.
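The patch preparation can be sketched as follows: one helper lists the origins of non-overlapping patches, and another applies the 20% forest cover threshold. The handling of incomplete edge patches (dropped here) and the 0/1 forest mask encoding are assumptions, and all function names are illustrative.

```python
def patch_origins(height, width, patch=256):
    """Top-left (row, col) of every full non-overlapping patch."""
    return [
        (r, c)
        for r in range(0, height - patch + 1, patch)
        for c in range(0, width - patch + 1, patch)
    ]

def forest_fraction(mask_patch):
    """Share of forest pixels in a 2-D 0/1 mask patch."""
    total = sum(len(row) for row in mask_patch)
    return sum(sum(row) for row in mask_patch) / total

def keep_patch(mask_patch, min_fraction=0.2):
    """True when the patch has at least `min_fraction` forest cover."""
    return forest_fraction(mask_patch) >= min_fraction
```

For example, a 600 × 520 pixel raster yields four full patches under these assumptions, with the remaining edge strips discarded.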

In model transfer, forest field plots were converted to rasters. We used a similar image patch cropping approach to that described above, keeping only patches that had at least one plot-level reference within the patch. In total, there were 138 testing patches, 32 validation patches and 524 augmented training patches. We used the weakly labeled training and validation patches to fine-tune the pretrained model.

In both the pretraining and fine-tuning processes, we used Adam as the optimizer and OneCycleLR as the learning rate scheduler. The maximum learning rate was set to 1 × 10. We pretrained the model for 100 epochs with a weight decay factor of 1 × 10 to avoid overfitting. The best checkpoint was determined based on the validation loss, and the corresponding weights were saved for later fine-tuning of the transferred model over the target site. In contrast to the pretraining process, we chose not to fine-tune the transferred model for a large number of epochs, in order to prevent overfitting and maintain its generalization ability. After preliminary testing, we determined that five epochs were sufficient, given the selected learning rate and our specific EO and forest reference data situation.

For conventional methods, plot-level EO features were calculated using described datasets and data splits over the Kouvola target site and used in the model training.

The experiments were performed using Windows 11 Enterprise with an Intel(R) Core(TM) i9-10900X CPU and an NVIDIA RTX A5000 GPU accelerated by the CUDA 11.7 toolkit. The SeUNet model was built with the PyTorch 1.11.0 neural network library. MLR and RF were implemented with the scikit-learn machine learning toolbox.

2.6. Accuracy Metrics

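The prediction accuracies for the various regression models were calculated using the following accuracy metrics: root mean squared error (RMSE), relative root mean squared error (rRMSE) and coefficient of determination (R2):

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{rRMSE} = \frac{\mathrm{RMSE}}{\bar{y}} \times 100\%$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $y_i$ and $\hat{y}_i$ are the reference and predicted values of forest height for the i-th plot, $\bar{y}$ is the mean reference value of all plots and n is the total number of plots.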
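As a cross-check of these metrics, a minimal sketch using their standard definitions is given below. It assumes rRMSE is RMSE divided by the mean reference value and expressed in percent; the function name is illustrative.

```python
import math

def accuracy_metrics(reference, predicted):
    """Return (RMSE, rRMSE in percent, R2) for paired plot-level values."""
    n = len(reference)
    mean_ref = sum(reference) / n
    # Residual and total sums of squares around the reference mean.
    ss_res = sum((y - p) ** 2 for y, p in zip(reference, predicted))
    ss_tot = sum((y - mean_ref) ** 2 for y in reference)
    rmse = math.sqrt(ss_res / n)
    return rmse, 100.0 * rmse / mean_ref, 1.0 - ss_res / ss_tot
```

Note that R2 computed this way can become negative when predictions are worse than simply predicting the mean reference value.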

3. Results

3.1. Prediction Performance over Pretraining (Salla) Site

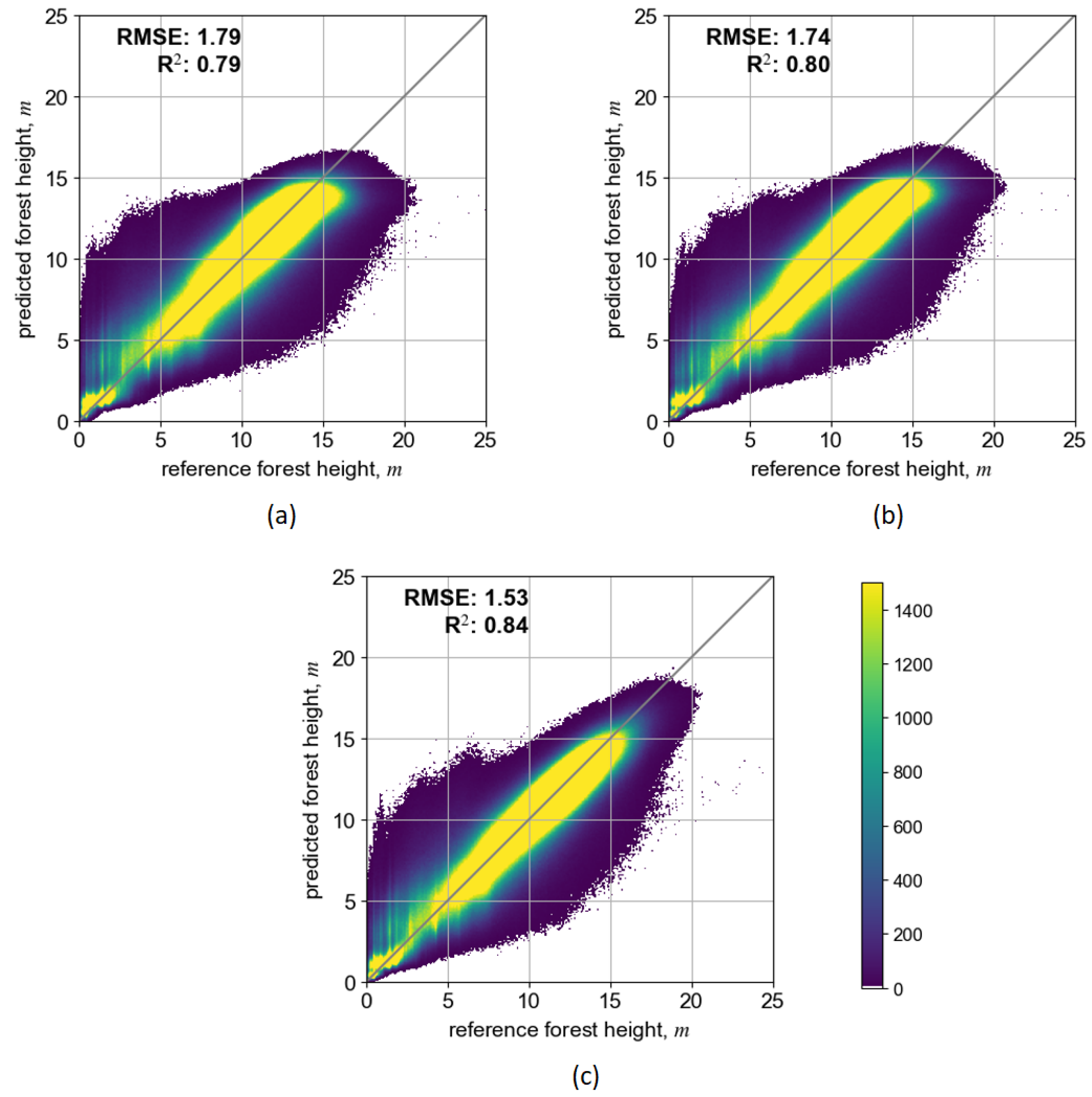

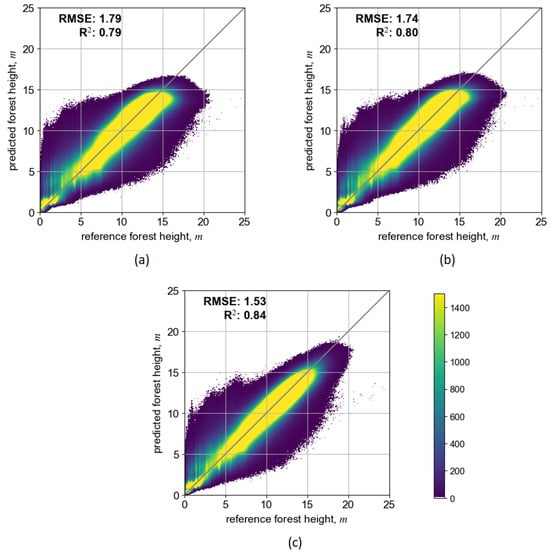

Figure 5 shows scatterplots after the model pretraining on the original/initial dataset over the Salla site in Lapland. Three combinations of input EO datasets resulted in three distinct DL models: (1) S2-Lapland, with only Sentinel-2 data; (2) S1S2-Lapland, where both Sentinel-2 and Sentinel-1 data were included; and (3) MS-Lapland, where multi-source data additionally included ALOS-2 PALSAR-2 and TanDEM-X data layers.

Figure 5.

Scatterplots illustrating prediction performance of pretrained SeUNet model using ALS reference data: (a) S2-Lapland model, (b) S1S2-Lapland model, (c) MS-Lapland model.

Depending on the input EO dataset, the prediction accuracy of the SeUNet model (calculated on testing image patches not involved in the training process) varied. The scenario that included only Sentinel-2 bands had somewhat lower prediction accuracy (RMSE = 1.79 m, rRMSE = 18.8%, R2 = 0.79), while adding the Sentinel-1 layer slightly increased the prediction performance (RMSE = 1.74 m, rRMSE = 18.2%, R2 = 0.80). Importantly, these results can be achieved using freely available Copernicus datasets. When adding the ALOS-2 PALSAR-2 mosaic (with well-known L-band sensitivity to forest growing stock volume) and TanDEM-X data (with well-known sensitivity to vertical forest structure), the prediction accuracies increased to RMSE = 1.53 m, rRMSE = 16.1% and R2 = 0.84. Note that these predictions are made at 10 m pixel resolution, and accuracy estimates increase when aggregating to coarser mapping units.

3.2. Prediction Performance over Target (Kouvola) Site

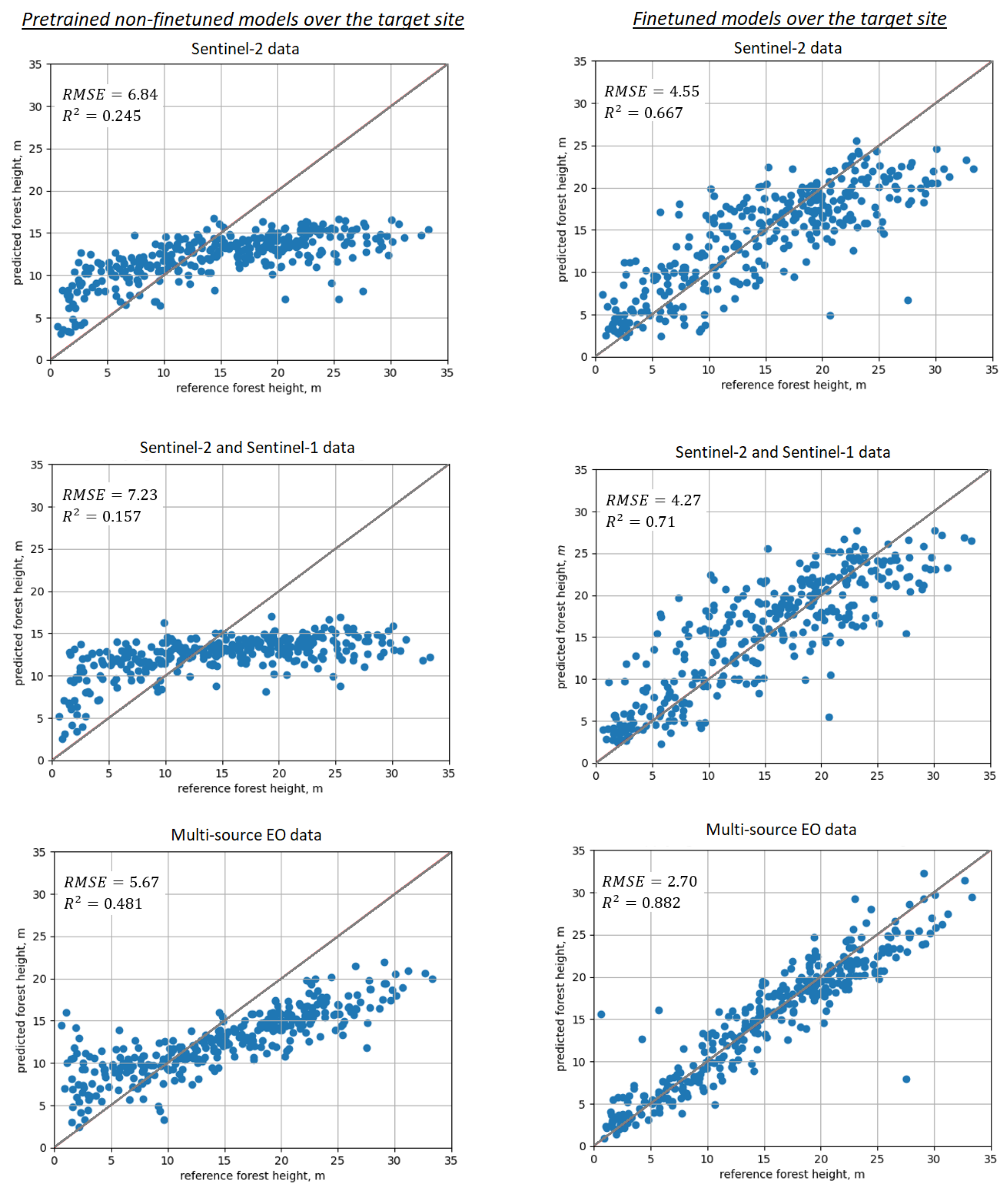

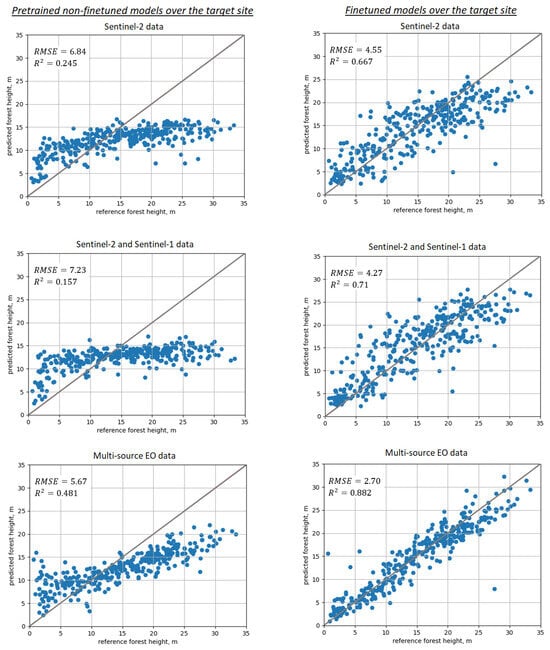

Results of “blindly” applying pretrained models (without fine-tuning with in situ forest plots) over the target Kouvola site are shown in the upper row of Figure 6. Prediction performance is not satisfactory, with RMSE in the range 5–7 m (40–50% rRMSE), strong negative systematic prediction error of 2.3–2.8 m present in all model predictions and apparent signal-saturation effects clearly visible for taller trees. Such performance can be attributed to differences in both forest structure and EO images (spectral, calibration, seasonal changes). Similar effects could be expected if the target site was the same as pretraining, and only EO images acquired at another time were used in the prediction (i.e., at least the forest structure would be the same). Prediction performance was problematic for all non-fine-tuned DL models and EO data inputs, with slightly more accurate predictions for the MS-Lapland model.

Figure 6.

Scatterplots illustrating prediction performance for SeUNet model: 1st column—use of pretrained non-fine-tuned models; 2nd column—using fine-tuned models; 1st row—Sentinel-2 data; 2nd row—combined Sentinel-2 and Sentinel-1 images; 3rd row—all available images (Sentinel-2, Sentinel-1, ALOS-2 PALSAR-2, TanDEM-X).

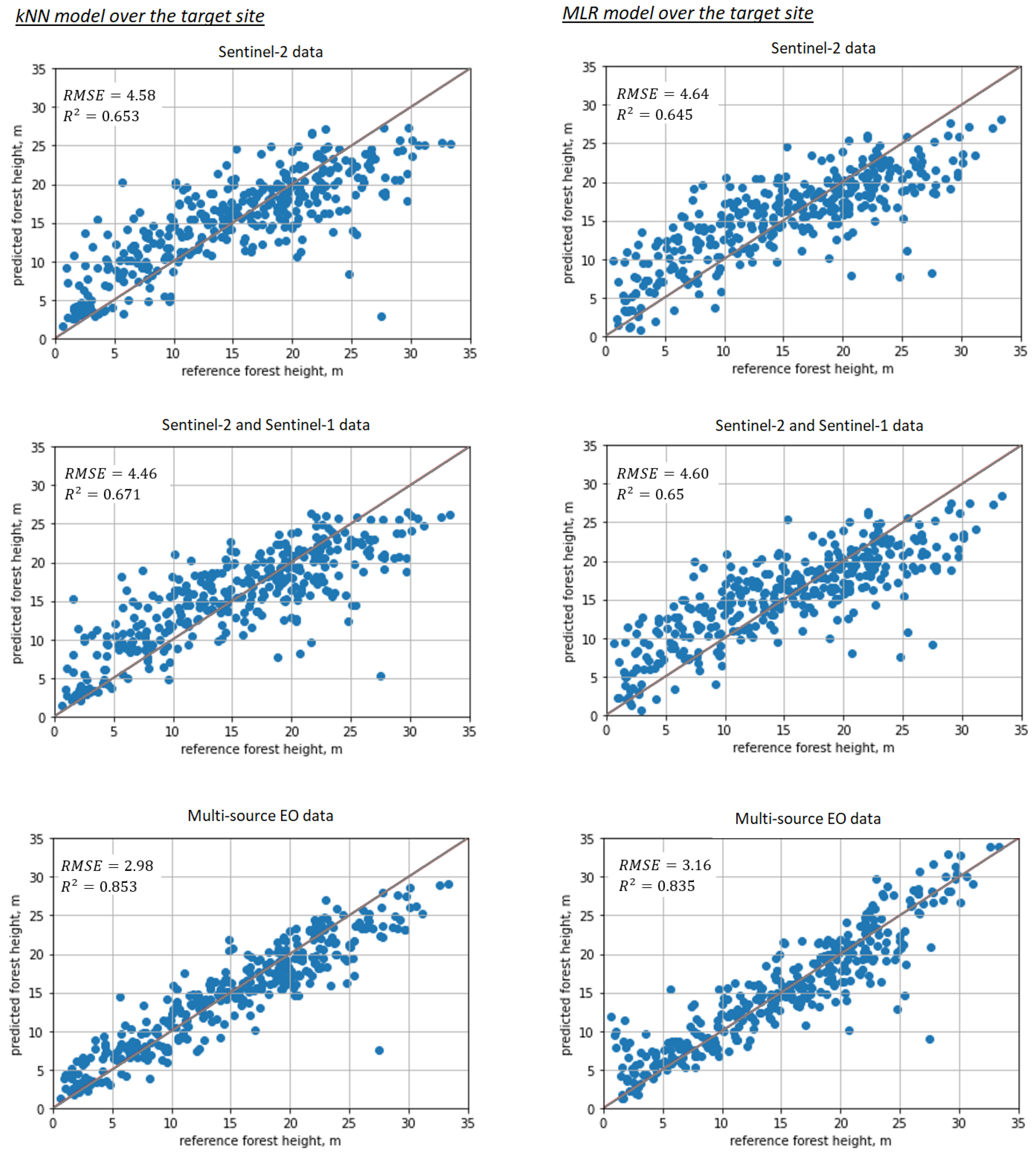

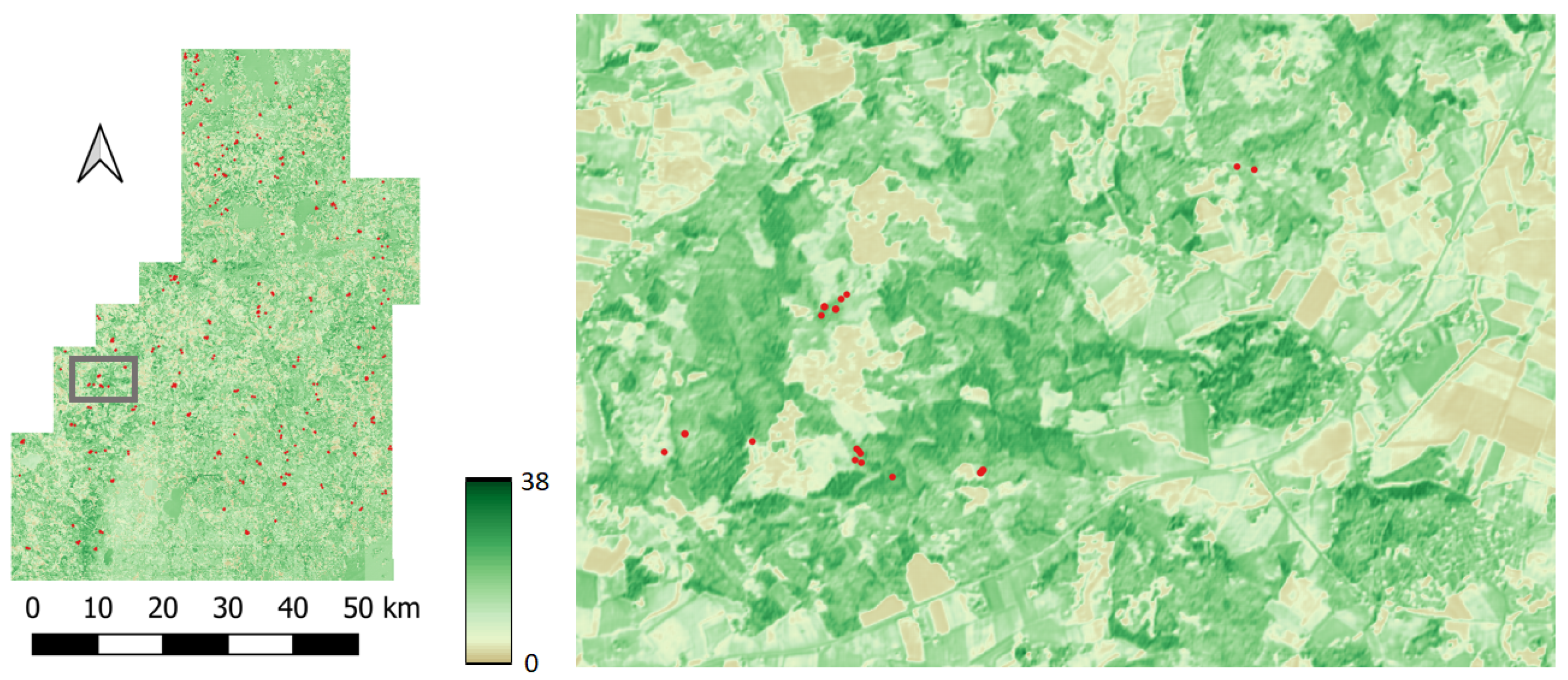

After fine-tuning the SeUNet model, accuracies increased strongly. Scatterplots for the corresponding models are shown in Figure 6, bottom row. Detailed results are collected in Table 1 for all models as well as for the benchmark MLR and kNN methods. Corresponding scatterplots for the benchmark models are shown in Figure 7. The forest height map produced using the fine-tuned SeUNet model is shown in Figure 8.

Table 1.

Prediction accuracy statistics for Kouvola (target) site.

Figure 7.

Scatterplots illustrating prediction performance for baseline methods: 1st column—kNN, 2nd column—MLR; 1st row—Sentinel-2 data; 2nd row—combined Sentinel-2 and Sentinel-1 images; 3rd row—all available images (Sentinel-2, Sentinel-1, ALOS-2 PALSAR-2, TanDEM-X).

Figure 8.

Predicted forest height map over the Kouvola (target) site and a zoomed-in fragment. The SeUNet model was used for the prediction.

Using additional explanatory EO variables improved prediction accuracy in all cases for both baseline methods and for developed models. The multi-source dataset demonstrated the most accurate predictions for all methods, including benchmark methods, similar to model pretraining with ALS data.

Achieved prediction accuracies are considerably increased compared to applying non-fine-tuned methods and compared to traditional machine learning approaches.

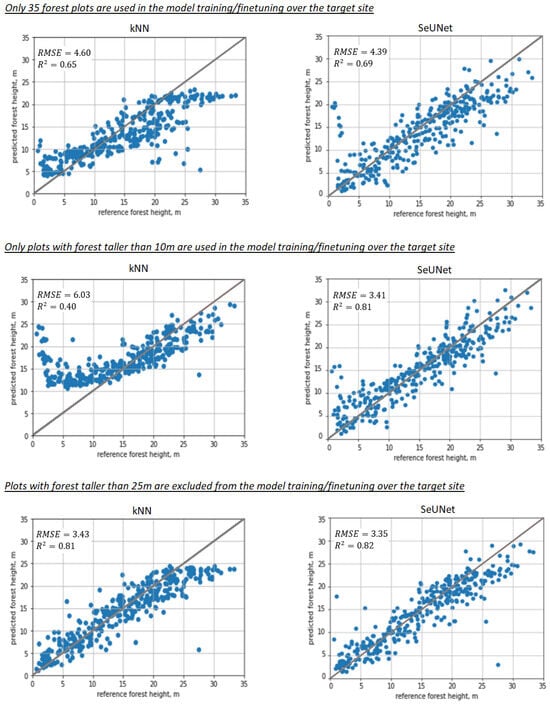

3.3. Model Stability with Scarce or Missing Reference Data in the Kouvola Site

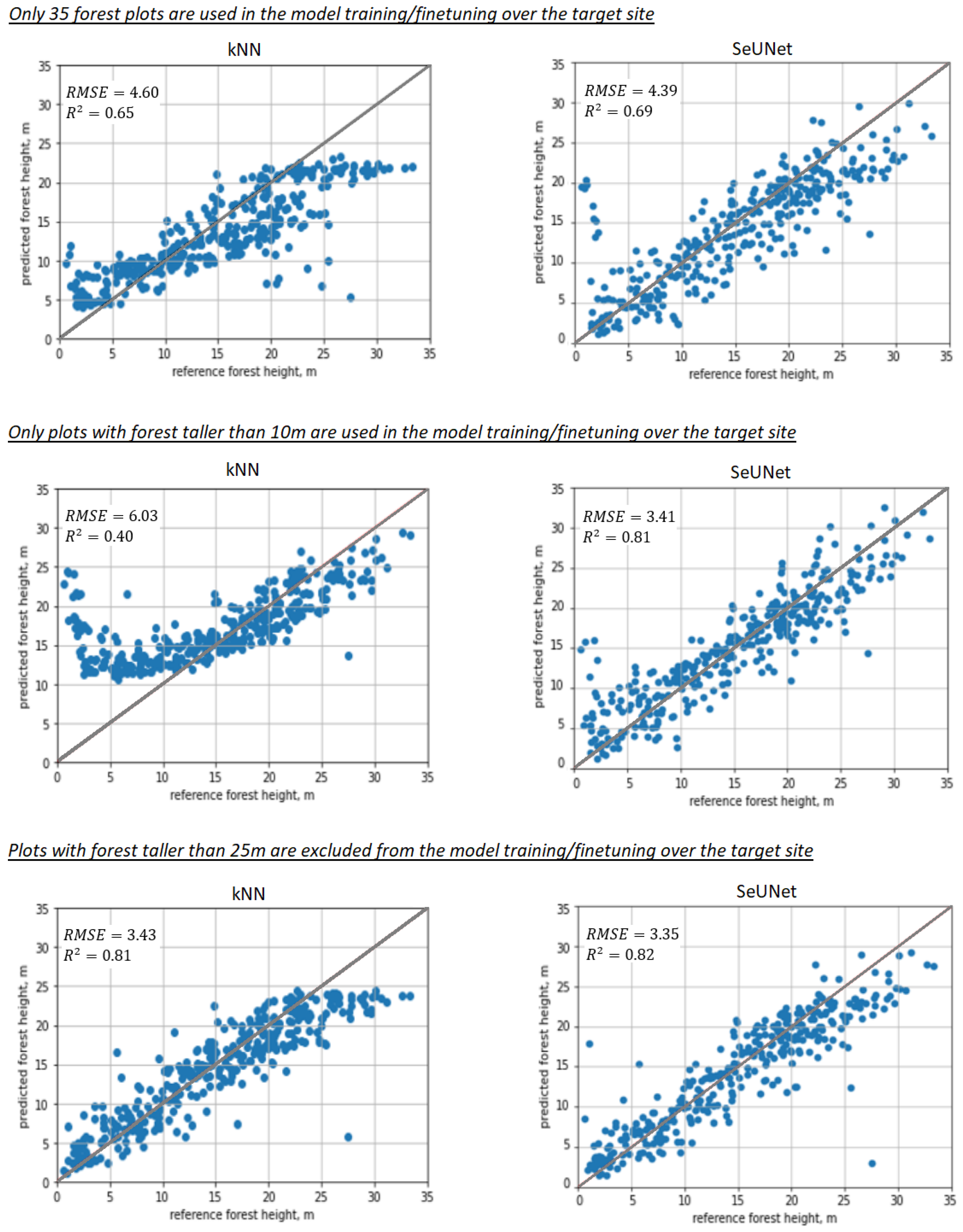

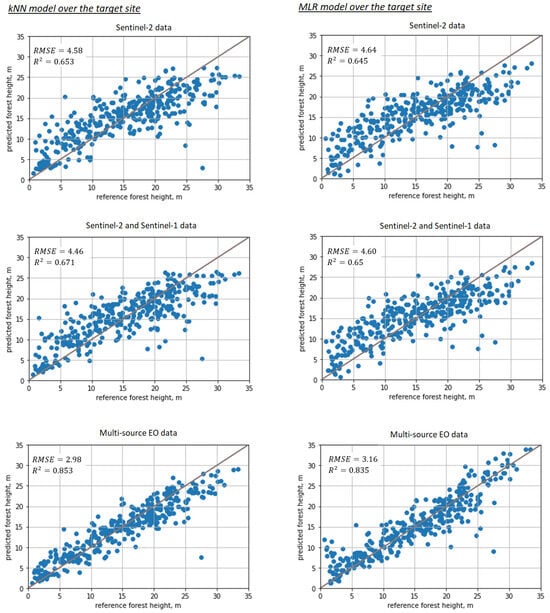

Additionally, we checked the stability of the suggested model and the baseline approach in two situations: when reference data are scarce (only 5–10% of all plots are available over the target site for SeUNet model transfer or for training the traditional baseline model), and when part of the data is completely missing (e.g., forest plots with tall trees or forest plots with short trees are absent from the target site). The baseline method for comparison was kNN, which is widely used in forest inventory mapping [2] and which outperformed MLR in our experiments. Using multi-source EO data, the specific cases considered, which simulate realistic scenarios of sparse measurements or missing forest strata over the target site, were:

- Only 35 plots (5% of original training sample) are used in model training (model transfer).

- Small-biomass plots with forest heights less than 10 m are completely removed from the training dataset.

- Tall forest plots with heights exceeding 25 m are completely removed from the training dataset.
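The three scenarios above can be expressed as simple filters on the training plots, as in the sketch below. Random selection of the 35 plots is an assumption (the text does not state how they were chosen), and the `height` key, the seed and the function names are hypothetical.

```python
import random

def scarce_sample(plots, n=35, seed=0):
    """Random subset of `n` training plots (seed is an arbitrary choice)."""
    return random.Random(seed).sample(plots, n)

def drop_short(plots, min_height=10.0):
    """Remove plots with forest height below `min_height` metres."""
    return [p for p in plots if p["height"] >= min_height]

def drop_tall(plots, max_height=25.0):
    """Remove plots with forest height above `max_height` metres."""
    return [p for p in plots if p["height"] <= max_height]
```

Each filter is applied to the training plots only; the evaluation plots stay unchanged so that predictions can be assessed over the full height range.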

It can be seen that in all the “extreme” cases, illustrated in Figure 9, classical nonparametric approaches such as kNN start to fail when reference data are scarce or non-representative (e.g., missing the smaller or larger end of the height range).

Figure 9.

Scatterplots illustrating prediction performance for nonparametric models: 1st column—kNN, 2nd column—SeUNet, 1st row—scarce training sample (35 plots used), 2nd row—plots with forest shorter than 10 m were removed during model training/fine-tuning in the target site, 3rd row—plots with forest taller than 25 m were absent in model training/fine-tuning over the target site.

The difference in R2 and RMSE between the fine-tuned SeUNet and kNN is substantial. Most importantly, the range of heights predicted by kNN is distorted: understandably, its predictions cannot reach (small or large) values that fall outside the range of the forest plot measurements. For example, when young forest plots are missing from the training set (Figure 9, 2nd row), kNN fails to produce any estimates less than 10 m. The same effect can be observed for forest plots with tall trees, with the tallest predictions of ∼25 m for kNN, while the SeUNet predictions reach 30 m. When a small number of plots is used, kNN predictions start to approach the average height. The effect would become even more pronounced if the number of plots were decreased further, e.g., to 10 reference plots. The model transfer is still expected to work in that situation, provided that the reference dataset for the target site includes sample units with short and tall forests.

4. Discussion

4.1. Overall Discussion on Performance across Various Models and EO Datasets

Figure 6 clearly demonstrates increased accuracy for SeUNet model prediction when using transfer learning mechanism. As visible from scatterplots in the upper row, the prediction without transfer learning does not follow the 1:1 line very well, showing strong systematic negative prediction error and saturation effects. The heights of taller forests greater than 15 m are underestimated, while height predictions of forests shorter than 10 m tend to be overestimated. In the best case when multisource satellite data are used, the mean prediction error exceeds 2 m.

Within the transfer learning, the finetuning process compensates for the disadvantage of insufficient or erroneous (because of different forest structure and EO data) representation information. Representation features themselves, particularly spatial context learned from the source site, are transferred to the target site. As shown in the bottom row of Figure 6, when using multisource EO data, the rRMSE is reduced by 20% units compared to the scenario for which the non-fine-tuned model is applied, and the mean prediction error is accordingly reduced to less than m.

Additionally, we compare the performance of SeUNet to other traditional machine learning methods, such as kNN, RF and MLR. The deep learning model generated more precise forest height predictions, especially when multi-satellite data are used. Achieved RMSE of m with rRMSE = 18.07% is several percentage units smaller than obtained with kNN, RF and MLR. From the scatterplots, we can clearly observe a closer linear relationship between predictions and reference. The improvement can be attributed to learned spatial representations from the pretraining site. This is in line with common observation that use of textural information can help improve predictions of forest variables [6].

Regarding the role of different EO datasets, combining SAR and optical datasets provided more accurate predictions than the use of optical or SAR datasets alone, even though the gain from adding Sentinel-1 to Sentinel-2 was limited compared to using Sentinel-2 data only. In Figure 6 and Figure 7, the systematic error is slightly reduced when adding Sentinel-1 data. One possible reason for the limited improvement is that Sentinel-1 data are not the optimal dataset for forest height prediction. In contrast, adding L-band ALOS-2 PALSAR-2 and especially interferometric TanDEM-X image layers strongly increased the accuracies; such effects were observed over both the pretraining site with ALS data and the target site. With multi-source data, a considerable reduction in rRMSE was observed not only for SeUNet but also for kNN and MLR, which can be explained by the high sensitivity of TanDEM-X to the vertical structure of forests [8,9].

4.2. Comparison with Prior Studies

To date, numerous methods and remotely sensed data combinations have been used for forest height prediction in boreal and temperate forests [5,38,41,42,43]. Reported accuracies for boreal forest height mapping are typically on the order of 30–40% rRMSE in these studies. The results achieved in the present study can therefore be considered quite comparable to earlier methods, although in this study a model transfer between two very different boreal study sites was performed.

In the boreal region, reported forest height accuracies with Sentinel-2 and Landsat data have been 35–60% rRMSE at forest plot level [5,13,38], while the proposed model transfer approach reached 30% rRMSE at plot level with Sentinel-2 data only, and 18% rRMSE with the multi-source EO dataset. Predictions obtained with ML models and Sentinel-2 data are within the same accuracy range as in recently published studies using Sentinel-2 and Landsat [5], while our predictions using DL models are more accurate for similar EO data combinations. The literature on using SAR data for forest height prediction is limited, with most studies conducted at forest-stand level, but our tree height predictions are as accurate as, or even more accurate than, those reported for retrievals with TanDEM-X interferometric SAR data, which are considered very suitable for vertical forest structure retrieval [8,9,44]. Regarding coupling of ALS data with satellite EO data in our pretraining in Lapland, our results are in line with other similar studies [45]. Use of recurrent and fully convolutional neural networks with fully segmented labels and Sentinel-1 time series or combined SAR and optical data provided accuracies on the order of 17–30% rRMSE, which are similar to the results in our work over the pretraining site in Lapland [15,16,20,46]. Inversion of TanDEM-X images acquired over Estonian hemiboreal and Canadian boreal forests provided accuracies with RMSE in the range of 3–4 m and correlation coefficients R2 larger than 0.5 [44,47,48,49,50]. Such results were achieved using various sets of TanDEM-X data and simplified semi-empirical parametric models. Other reports indicate typical error levels around 4.8 m RMSE for TanDEM-X datasets in comprehensive studies using those models [51].

Our results with the MS-Lapland SeUNet model fine-tuned using forest plots in Kouvola are more precise, indicating the useful synergy of SAR and optical datasets in boreal forest parameter mapping and the benefits of using combined multi-source datasets.

4.3. Outlook

The first demonstration of the model transfer of a deep learning model for forest variable estimation performed in this study indicates substantial potential of such approaches for operational forest monitoring tasks. The demonstrated study can be considered a “geographic transfer”, while also “temporal transfer” is possible when a model is pretrained on one year’s EO data and then later used over the same site but with EO data collected in another year. Obviously, a combination of those is possible as well. Both types of model transfer hold great potential for forest monitoring.

The geographic model transfer, such as the one used in this study, extends the utility of deep learning models into areas that do not have suitable training data. Given the sporadic availability of ALS data over boreal and temperate forests, this would greatly expand the usability of deep learning models. It must be remembered that in this study, the transfer was performed within the same boreal zone, albeit between two forest areas with different structural characteristics. Further studies are needed to investigate the limitations of transferability of models between different biomes with greater structural differences (e.g., in species composition). Similarly, transferability of models between combinations of remotely sensed data from different sensors should be investigated. This would further broaden the benefit of model transfer by allowing flexible use of the best possible dataset combinations in the target area, regardless of the dataset used in the model development.

Temporal model transfers, which were not tested in this study, would improve the efficiency of high frequency forest monitoring even in areas that have ALS data coverage for some years. With a limited number of field plots measured in the following (or preceding) years, availability of pretrained DL model and model transfer technique would allow use of the fine-tuned model in the years that do not have any ALS data available from the area. This would improve the frequency and consistency of forest monitoring in the area, which is something that is in great demand as the monitoring and reporting requirements for forest owners are constantly increasing.

The results of this study also indicate that model transfer can be performed with sub-optimal non-representative field plot reference data (e.g., with very few plots or a limited range of observations). Limitations of existing field datasets can be overcome by transferring a model that is trained with optimal datasets into the target area (or year) by fine-tuning it with existing sub-optimal field plots. Alternatively, limited field campaigns can be conducted to collect sufficient data for fine-tuning. This increases the efficiency and reduces the costs of forest inventories, thereby enabling increases in temporal frequency in an economical manner. Thus, the approach presented in this study has the potential to support forest owners in meeting the increasing forest monitoring and reporting requirements.
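One way to see how a dense-output model such as a UNet can be fine-tuned with only a handful of plots is a masked loss, in which only pixels that carry a plot measurement contribute to the error. The sketch below illustrates that idea under stated assumptions: it is not taken from the SeUNet implementation, the function name `masked_mse` is hypothetical, and the small patches are made-up values used only to show the arithmetic.

```python
def masked_mse(pred_map, plot_heights):
    """Mean squared error over pixels that carry a plot measurement.

    pred_map     -- 2-D list of predicted heights (m)
    plot_heights -- 2-D list of the same shape; None marks unlabeled pixels
    """
    total, count = 0.0, 0
    for pred_row, ref_row in zip(pred_map, plot_heights):
        for pred, ref in zip(pred_row, ref_row):
            if ref is not None:  # skip pixels without a field plot
                total += (pred - ref) ** 2
                count += 1
    if count == 0:
        raise ValueError("patch contains no labeled plot pixels")
    return total / count


# Hypothetical 2 x 3 image patch: two pixels coincide with field plots
pred_patch = [[12.0, 14.0, 9.0],
              [18.0, 20.0, 11.0]]
plot_patch = [[None, 15.0, None],
              [None, None, 13.0]]
loss = masked_mse(pred_patch, plot_patch)
print(loss)
```

In a deep learning framework the same masking would typically be applied to the loss tensor before averaging, so that unlabeled pixels produce no gradient while the pretrained weights are adjusted.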

In our opinion, further improvement from an operational viewpoint, and also in terms of prediction accuracy, can be gained by improving an “initial” pretrained deep learning model that is further fine-tuned. The Lapland model in this regard was limited as it did not feature the whole range of forest height and biomass values. Reference data from a whole country, such as Finland, can be used to establish such baseline deep learning models for taiga forests. Our further work will focus on scaling the demonstrated approach and establishing such baseline multi-source EO models for various forest biomes. Another direction is incorporating other UNet+ models as well as other semi-supervised convolutional and recurrent models to form an extended set of deep learning modeling approaches.

5. Conclusions

This is the first demonstration of deep learning model transfer in the context of EO-based forest inventory using multi-source optical and SAR data, for which ALS data were used in the model pretraining, and only a limited sample of forest plots was used in the target area to fine-tune the model. This approach facilitated production of more accurate predictions compared to more traditional modeling and machine learning approaches, particularly when reference data were incomplete, very sparse or underrepresented.

The proposed approach offers new perspectives in multi-source EO-based forest mapping using pretrained deep learning models and a sparse set of forest plots. We demonstrated that such an approach can deliver greater accuracies compared to traditional machine learning methods, and, importantly, it is also quite robust to underrepresented or scarce forest plot data that are used in fine-tuning—when other machine learning models completely fail. This opens new perspectives in operational forest management and producing timely and updated forest inventories using EO datasets.

Author Contributions

Conceptualisation: S.G. and O.A.; methodology: S.G. and O.A.; validation: O.A. and R.E.M.; data curation: O.A. and J.M.; writing—original draft preparation: S.G. and O.A.; writing—review and editing: R.E.M., T.H. and J.M.; supervision: R.E.M. and T.H.; funding acquisition: O.A., T.H. and J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Space Agency within the project on Forest Carbon Monitoring, grant agreement number 4000135015/21/I-NB. The processing costs were covered by the ESA Network of Resources (NoR) initiative. S.G. was supported by the National Natural Science Foundation of China under Grant 62001229, Grant 62101264 and Grant 62101260.

Data Availability Statement

Preprocessed EO images and reference data are available from the corresponding author upon reasonable request. The plot-level ground reference data were provided by the Finnish Forest Centre. TanDEM-X data were provided by the German Aerospace Center (DLR) within the framework of TanDEM-X CoSSC project XTI_VEGE7568.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ALS | Airborne Laser Scanning |
| ALOS | Advanced Land-Observing Satellite |
| CNN | Convolutional Neural Network |
| CPR | Cross Pseudo Regression |
| DL | Deep Learning |
| EO | Earth Observation |
| ESA | European Space Agency |
| GRD | Ground Range Detected |
| ML | Machine Learning |
| MLR | Multiple Linear Regression |
| PALSAR | Phased-Array L-band Synthetic-Aperture Radar |
| PCA | Principal Component Analysis |
| RF | Random Forest |
| RMSE | Root Mean Squared Error |
| RNN | Recurrent Neural Network |
| SAR | Synthetic Aperture Radar |
| TanDEM-X | TerraSAR-X add-on for Digital Elevation Measurement |

References

1. Herold, M.; Carter, S.; Avitabile, V.; Espejo, A.B.; Jonckheere, I.; Lucas, R.; McRoberts, R.E.; Næsset, E.; Nightingale, J.; Petersen, R.; et al. The role and need for space-based forest biomass-related measurements in environmental management and policy. Surv. Geophys. 2019, 40, 757–778.

2. McRoberts, R.E.; Tomppo, E.O. Remote sensing support for national forest inventories. Remote Sens. Environ. 2007, 110, 412–419.

3. GFOI. Integrating Remote-Sensing and Ground-Based Observations for Estimation of Emissions and Removals of Greenhouse Gases in Forests: Methods and Guidance from the Global Forest Observations Initiative; Group on Earth Observations: Geneva, Switzerland, 2014.

4. Rodríguez-Veiga, P.; Quegan, S.; Carreiras, J.; Persson, H.; Fransson, J.; Hoscilo, A.; Ziółkowski, D.; Stereńczak, K.; Lohberger, S.; Stängel, M.; et al. Forest biomass retrieval approaches from Earth observation in different biomes. Int. J. Appl. Earth Obs. Geoinf. 2019, 77, 53–68.

5. Astola, H.; Häme, T.; Sirro, L.; Molinier, M.; Kilpi, J. Comparison of Sentinel-2 and Landsat 8 imagery for forest variable prediction in boreal region. Remote Sens. Environ. 2019, 223, 257–273.

6. Schmullius, C.; Thiel, C.; Pathe, C.; Santoro, M. Radar time series for land cover and forest mapping. In Remote Sensing Time Series: Revealing Land Surface Dynamics; Kuenzer, C., Dech, S., Wagner, W., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 323–356.

7. Antropov, O.; Rauste, Y.; Häme, T.; Praks, J. Polarimetric ALOS PALSAR time series in mapping biomass of boreal forests. Remote Sens. 2017, 9, 999.

8. Olesk, A.; Praks, J.; Antropov, O.; Zalite, K.; Arumäe, T.; Voormansik, K. Interferometric SAR coherence models for characterization of hemiboreal forests using TanDEM-X data. Remote Sens. 2016, 8, 700.

9. Kugler, F.; Lee, S.K.; Hajnsek, I.; Papathanassiou, K.P. Forest height estimation by means of Pol-InSAR data inversion: The role of the vertical wavenumber. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5294–5311.

10. Persello, C.; Wegner, J.D.; Hänsch, R.; Tuia, D.; Ghamisi, P.; Koeva, M.; Camps-Valls, G. Deep learning and Earth Observation to support the sustainable development goals: Current approaches, open challenges, and future opportunities. IEEE Geosci. Remote Sens. Mag. 2022, 10, 172–200.

11. Zhu, X.X.; Montazeri, S.; Ali, M.; Hua, Y.; Wang, Y.; Mou, L.; Shi, Y.; Xu, F.; Bamler, R. Deep learning meets SAR: Concepts, models, pitfalls, and perspectives. IEEE Geosci. Remote Sens. Mag. 2021, 9, 143–172.

12. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

13. Astola, H.; Seitsonen, L.; Halme, E.; Molinier, M.; Lönnqvist, A. Deep neural networks with transfer learning for forest variable estimation using Sentinel-2 imagery in boreal forest. Remote Sens. 2021, 13, 2392.

14. Illarionova, S.; Trekin, A.; Ignatiev, V.; Oseledets, I. Tree species mapping on Sentinel-2 satellite imagery with weakly supervised classification and object-wise sampling. Forests 2021, 12, 1413.

15. Ge, S.; Gu, H.; Su, W.; Praks, J.; Antropov, O. Improved semisupervised UNet deep learning model for forest height mapping with satellite SAR and optical Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5776–5787.

16. Ge, S.; Su, W.; Gu, H.; Rauste, Y.; Praks, J.; Antropov, O. Improved LSTM model for boreal forest height mapping using Sentinel-1 time series. Remote Sens. 2022, 14, 5560.

17. Bolyn, C.; Lejeune, P.; Michez, A.; Latte, N. Mapping tree species proportions from satellite imagery using spectral–spatial deep learning. Remote Sens. Environ. 2022, 280, 113205.

18. Wang, S.; Chen, W.; Xie, S.M.; Azzari, G.; Lobell, D.B. Weakly supervised deep learning for segmentation of remote sensing imagery. Remote Sens. 2020, 12, 207.

19. Illarionova, S.; Shadrin, D.; Ignatiev, V.; Shayakhmetov, S.; Trekin, A.; Oseledets, I. Estimation of the canopy height model from multispectral satellite imagery with convolutional neural networks. IEEE Access 2022, 10, 34116–34132.

20. Lang, N.; Schindler, K.; Wegner, J.D. Country-wide high-resolution vegetation height mapping with Sentinel-2. Remote Sens. Environ. 2019, 233, 111347.

21. Bueso-Bello, J.-L.; Carcereri, D.; Martone, M.; González, C.; Posovszky, P.; Rizzoli, P. Deep learning for mapping tropical forests with TanDEM-X bistatic InSAR data. Remote Sens. 2022, 14, 3981.

22. Bjork, S.; Anfinsen, S.N.; Næsset, E.; Gobakken, T.; Zahabu, E. On the potential of sequential and nonsequential regression models for Sentinel-1-based biomass prediction in Tanzanian Miombo forests. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4612–4639.

23. Zhao, X.; Hu, J.; Mou, L.; Xiong, Z.; Zhu, X.X. Cross-city Landuse classification of remote sensing images via deep transfer learning. Int. J. Appl. Earth Obs. Geoinf. 2023, 122, 103358.

24. Zhang, Y.; Guo, X.; Leung, H.; Li, L. Cross-task and cross-domain SAR target recognition: A meta-transfer learning approach. Pattern Recognit. 2023, 138, 109402.

25. Javed, A.; Kim, T.; Lee, C.; Oh, J.; Han, Y. Deep learning-based detection of urban forest cover change along with overall urban changes using very-high-resolution satellite images. Remote Sens. 2023, 15, 4285.

26. Reis, H.C.; Turk, V. Detection of forest fire using deep convolutional neural networks with transfer learning approach. Appl. Soft Comput. 2023, 143, 110362.

27. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

28. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin, Germany, 2015; pp. 234–241.

29. Šćepanović, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-area land cover mapping with Sentinel-1 imagery using deep learning semantic segmentation models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374.

30. Gazzea, M.; Solheim, A.; Arghandeh, R. High-resolution mapping of forest structure from integrated SAR and optical images using an enhanced U-net method. Sci. Remote Sens. 2023, 8, 100093.

31. Huang, Z.; Pan, Z.; Lei, B. Transfer learning with deep convolutional neural network for SAR target classification with limited labeled data. Remote Sens. 2017, 9, 907.

32. Wurm, M.; Stark, T.; Zhu, X.X.; Weigand, M.; Taubenböck, H. Semantic segmentation of slums in satellite images using transfer learning on fully convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2019, 150, 59–69.

33. Englhart, S.; Keuck, V.; Siegert, F. Modeling aboveground biomass in tropical forests using multi-frequency SAR data—A comparison of methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 298–306.

34. Ge, S.; Tomppo, E.; Rauste, Y.; McRoberts, R.E.; Praks, J.; Gu, H.; Su, W.; Antropov, O. Sentinel-1 time series for predicting growing stock volume of boreal forest: Multitemporal analysis and feature selection. Remote Sens. 2023, 15, 3489.

35. Antropov, O.; Miettinen, J.; Häme, T.; Yrjö, R.; Seitsonen, L.; McRoberts, R.E.; Santoro, M.; Cartus, O.; Duran, N.M.; Herold, M.; et al. Intercomparison of Earth Observation data and methods for forest mapping in the context of forest carbon monitoring. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 5777–5780.

36. Berninger, A.; Lohberger, S.; Stängel, M.; Siegert, F. SAR-based estimation of above-ground biomass and its changes in tropical forests of Kalimantan using L- and C-band. Remote Sens. 2018, 10, 831.

37. Tomppo, E.; Olsson, H.; Ståhl, G.; Nilsson, M.; Hagner, O.; Katila, M. Combining national forest inventory field plots and remote sensing data for forest databases. Remote Sens. Environ. 2008, 112, 1982–1999.

38. Miettinen, J.; Carlier, S.; Häme, L.; Mäkelä, A.; Minunno, F.; Penttilä, J.; Pisl, J.; Rasinmäki, J.; Rauste, Y.; Seitsonen, L.; et al. Demonstration of large area forest volume and primary production estimation approach based on Sentinel-2 imagery and process based ecosystem modelling. Int. J. Remote Sens. 2021, 42, 9467–9489.

39. Rauste, Y.; Lonnqvist, A.; Molinier, M.; Henry, J.B.; Hame, T. Ortho-rectification and terrain correction of polarimetric SAR data applied in the ALOS/Palsar context. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 1618–1621.

40. Small, D. Flattening gamma: Radiometric terrain correction for SAR imagery. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3081–3093.

41. Huang, W.; Min, W.; Ding, J.; Liu, Y.; Hu, Y.; Ni, W.; Shen, H. Forest height mapping using inventory and multi-source satellite data over Hunan Province in southern China. For. Ecosyst. 2022, 9, 100006.

42. Luo, Y.; Qi, S.; Liao, K.; Zhang, S.; Hu, B.; Tian, Y. Mapping the forest height by fusion of ICESat-2 and multi-source remote sensing imagery and topographic information: A case study in Jiangxi province, China. Forests 2023, 14, 454.

43. Zhang, N.; Chen, M.; Yang, F.; Yang, C.; Yang, P.; Gao, Y.; Shang, Y.; Peng, D. Forest height mapping using feature selection and machine learning by integrating multi-source satellite data in Baoding city, North China. Remote Sens. 2022, 14, 4434.

44. Praks, J.; Hallikainen, M.; Antropov, O.; Molina, D. Boreal forest tree height estimation from interferometric TanDEM-X images. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 1262–1265.

45. Li, W.; Niu, Z.; Shang, R.; Qin, Y.; Wang, L.; Chen, H. High-resolution mapping of forest canopy height using machine learning by coupling ICESat-2 LiDAR with Sentinel-1, Sentinel-2 and Landsat-8 data. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102163.

46. Becker, A.; Russo, S.; Puliti, S.; Lang, N.; Schindler, K.; Wegner, J.D. Country-wide retrieval of forest structure from optical and SAR satellite imagery with deep ensembles. ISPRS J. Photogramm. Remote Sens. 2023, 195, 269–286.

47. Chen, H.; Cloude, S.R.; Goodenough, D.G. Forest canopy height estimation using Tandem-X coherence data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3177–3188.

48. Olesk, A.; Voormansik, K.; Vain, A.; Noorma, M.; Praks, J. Seasonal differences in forest height estimation from interferometric TanDEM-X coherence data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 5565–5572.

49. Praks, J.; Antropov, O.; Olesk, A.; Voormansik, K. Forest height estimation from TanDEM-X images with semi-empirical coherence models. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 8805–8808.

50. Chen, H.; Cloude, S.R.; Goodenough, D.G.; Hill, D.A.; Nesdoly, A. Radar forest height estimation in mountainous terrain using Tandem-X coherence data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3443–3452.

51. Schlund, M.; Magdon, P.; Eaton, B.; Aumann, C.; Erasmi, S. Canopy height estimation with TanDEM-X in temperate and boreal forests. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101904.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).