Abstract

As the habitats of Tibetan antelopes are subject to poaching and other unpredictable risks, combining target recognition and tracking with intelligent Unmanned Aerial Vehicle (UAV) technology is necessary to obtain the real-time location of injured Tibetan antelopes so that they can be better protected and rescued. (1) Background: The most common way to track an object is to run a detector on every frame. However, the object tracker and the classifier do not need to run at the same rate, because an object’s class changes far more slowly than its position. In edge reasoning scenarios in particular, real-time UAV monitoring requires a balance between frame rate, latency, and accuracy. (2) Methods: A backtracking tracker is proposed to recognize Tibetan antelopes; it generates motion vectors from stored optical flow, achieving faster target detection. The lightweight You Only Look Once X (YOLOX) is selected as the baseline model to reduce the dependence on hardware configuration and the calculation cost while ensuring detection accuracy. Region-of-Interest (ROI)-to-centroid tracking is employed to reduce the processing cost of motion interpolation, and the overall processing frame rate is smoothed by pre-calculating the motions of the different recognized objects. An On-Line Object Tracking (OLOT) system with adaptive search area selection is adopted to dynamically adjust the frame rate and reduce energy waste. (3) Results: Using YOLOX with backtracking in native Darknet reduces latency by a factor of 3.75, and the latency is only 2.82 ms after about 10 frame hops, with accuracy higher than that of YOLOv3. Compared with traditional algorithms, the proposed algorithm reduces the tracking latency of UAVs by 50%. When run on the onboard computer, the proposed tracker is inferior to KCF in FPS, but its FPS is significantly higher than that of the other trackers and its accuracy is clearly superior to that of KCF. (4) Conclusion: A UAV equipped with the proposed tracker effectively reduces reasoning latency when monitoring Tibetan antelopes while achieving high recognition accuracy. It is therefore expected to contribute to better protection of Tibetan antelopes.

1. Introduction

There has been much talk about the Fourth Industrial Revolution (4IR), which in its simplest definition refers to the continued digitization of traditional manufacturing and industrial practices using Artificial Intelligence (AI), the Internet of Things (IoT), Unmanned Aerial Vehicles (UAVs), robotics, and other modern technologies. Integrating these technologies aims to make smart devices and computers think and analyze like the human brain, and to ensure a secure, decentralized, and transparent way of recording and exchanging data that integrates the digital and physical worlds [1]. In this context, how to make full use of the scientific and technological achievements of 4IR to provide more effective and more humane protection for wildlife, especially rare wildlife, has become a widely discussed topic among scholars in recent years.

The Tibetan antelope is a species unique to China and a typical representative of plateau animals. It is mainly distributed in Tibet, Xinjiang, and Qinghai. It is known as the “plateau genie” and is listed as a “national first-class protected animal”. According to incomplete statistics, about 20,000 Tibetan antelopes are killed by poachers every year, driven by the species’ high economic value [2]. In addition, the total number of Tibetan antelopes has fallen sharply and their habitat has shrunk dramatically owing to disturbance from human activities. Tibetan antelopes once faced the danger of extinction in China. To this end, the Chinese government has established special nature reserves to protect Tibetan antelopes and has organized regular monitoring of their population distribution and range of activity. As Tibetan antelopes are easily frightened and the natural conditions in the plateau area are increasingly harsh, manual inspection is inefficient. In recent years, the rapid development of intelligent Unmanned Aerial Vehicle (UAV) technology has partially solved these problems [3,4]. Because of poaching and other unpredictable risks in plateau areas, Tibetan antelopes are often injured. Once an injured Tibetan antelope is found, the UAV informs the rescue team of its exact location. However, by the time the rescue team arrives at the reported location, the injured Tibetan antelope may have moved to a place it considers safer. Therefore, combining target identification and tracking technologies to obtain the real-time location of injured Tibetan antelopes is more conducive to effective rescue.

With the rapid development of deep learning technology, convolutional neural networks (CNNs) have been extensively applied in ecology, including as tools for species identification [5,6]. For instance, Norouzzadeh et al. [7] identified 48 animal species in the Snapshot Serengeti dataset, which contains 3.2 million images, with an accuracy of 93.8%, similar to that of crowdsourced identification. This saved a great deal of human labeling effort (approximately 8.4 years). Gray et al. [8] used a CNN to detect and count olive ridley turtles in the nearshore waters of Ostional, Costa Rica; the total number of identified turtles was 8% higher than with manual detection, and the analysis time was reduced 66-fold.

The most common way to achieve single-object tracking (SOT) is simply to detect the object in every frame, which is called tracking-by-detection (TBD). However, it is not necessary to run the tracker and the classifier at the same rate, because an object’s class changes far more slowly than its position. When a traditional object tracker is refreshed with deep-learning key frames, the best of both worlds can be obtained: the classifier runs slowly, and its scale and shift invariance re-anchors the traditional tracker at every key frame. Such a combination can balance frame rate, latency, and accuracy, which is essential for new edge applications. On this basis, this work aims to reduce tracker latency while maintaining high detection accuracy by modifying CNN- and motion-based trackers.

This work proposes a lightweight look-back (LB) tracker with adjustable low latency that builds on a CNN-based object detector and enhanced optical flow (OF) motion vectors, which is believed to be the major contribution of this work. Using the LB technique, the proposed algorithm advances previous, “stale” CNN inferences through the motion vectors generated from stored OF. Being independent of hardware-specific video encoding/decoding functions, the algorithm can easily be ported to edge computing devices such as a graphics processing unit (GPU) or field-programmable gate array (FPGA). Compared with similar works, this work employs OF-based ROI-to-centroid tracking to lower the processing cost of motion interpolation, and smooths the overall processing frame rate by pre-calculating the motions of the recognized objects. An On-Line Object Tracking (OLOT) system is adopted to adaptively select the search area based on the speed of the target and the frame rate. Compared with the traditional OLOT system, the optimized system dynamically adjusts the frame rate by incorporating an energy tradeoff based on the behavior of the tracked object, thus reducing energy waste. Tibetan antelopes are tracked using UAVs equipped with NX edge reasoning units to verify the effectiveness of the proposed algorithm. We found that the proposed algorithm reduces the tracking latency of UAVs by about 50%.

2. Related Works

2.1. Optical Flow in Object Detection

Pooja Agrawal et al. [9] proposed a reverse optical flow method for autonomous UAV navigation, including optical flow-based obstacle detection. Yugui Zhang et al. [10] proposed an effective moving-object detection method based on optical flow estimation, in which the complete boundary of the moving object is extracted by combining the optimized horizontal flow with the optimized vertical flow. Sandeep Singh Sensor et al. [11] proposed a moving-target detection algorithm based on optical flow, which uses a bidirectional optical flow field for motion estimation and detection. Tao Yang et al. [12] designed a single-object tracker combining optical flow and compressive tracking, and applied it to multi-object tracking. These methods demonstrate the reliability of object detection based on optical flow. Barisic et al. [13] applied YOLO trained on a custom dataset to real-time UAV detection.

2.2. SOT Algorithm Based on Filter

Numerous tracking approaches have been proposed in previous decades. Among these methods, correlation filter-based trackers have drawn extensive attention owing to their high efficiency. The seminal work MOSSE [14] runs at an impressive speed of several hundred frames per second. Kernelized correlation filters (KCF) [15] extend this method using improved feature representation, achieving remarkable performance. Many later extensions of [14,15] have been proposed. To handle the problem of scale variation, the approaches of [16,17] leverage an additional scale filter to perform pyramid scale search. The methods in [18,19] enhance correlation filter trackers with complex regularization techniques. Background contextual information is exploited in [20,21] to improve robustness. A part-based strategy is used in [22] to handle the problem of occlusion in correlation filter tracking. The method in [23] incorporates structural information in local target part variations using a global constraint in correlation filter tracking.

2.3. SOT Combined with Deep Learning

Inspired by success in various vision tasks [24,25,26], deep features have been adopted for object tracking and demonstrated state-of-the-art results. The approaches in [27,28,29] replaced hand-crafted features with deep features in correlation filter tracking and achieved significant performance gains. To further explore the power of deep features in tracking, end-to-end deep tracking algorithms have been proposed. MDNet [30] introduces an end-to-end classification for tracking. This approach is extended by SANet [31] by exploring structure of target object. VITAL [32] improves tracking performance by exploiting richer representation for classification. Despite excellent performance, these trackers are inefficient due to extensive deep-feature extraction or network fine-tuning. To solve this problem, a deep Siamese network has been proposed for tracking [33,34] and achieved a good balance between accuracy and speed. Many extensions based on Siamese tracking have been proposed. Among them, Siamese tracking with a regional proposal network (RPN) [35,36,37,38,39] exhibits impressive results and fast speed owing to the effectiveness of an RPN in dealing with scale changes. In addition to these methods, another trend is to combine a discriminative localization model with a separate scale-estimation model for tracking. ATOM [40] utilizes the correlation filter method for target localization and an IoU Net [41] for scale estimation. Based on ATOM [40], DiMP [42] incorporates contextual background information for improvement.

2.4. Tracker Based on Edge Reasoning

Zhu et al. [43] and Ujiie et al. [44] detected edge objects based on interpolation but suffered from large bulk latencies, which may be caused by CNN inference time. Nicolai et al. [45] adopted motion-based interpolation for latency reduction to further adjust three-dimensional point cloud data, and Liu et al. [46] and Chen et al. [47] adopted a similar method to address the network latency of remote reasoning. Look-back (LB) motion marching has been almost completely implemented in Glimpse [47], but some tracking problems remain, which may result from the properties of the feature selection and the constraints of the devices.

Cintas et al. proposed vision-based tracking of a UAV by another UAV using YOLOv3 and KCF [48]. They recognized the need for deep-learning key frames to generate new correlation templates that can be used between successive, computation-heavy inference-based key frames. The problem with the KCF approach for target tracking is that it is very robust to translation of the target template within an image but is not immune to rotation or scale changes. Opromolla [49] uses YOLOv2 together with bounding-box refinement to better home in on the centroid of another UAV flying in front of the tracking UAV. Opromolla, however, scans only the middle portion of the full image for targets to track, which is not ideal in scenarios where a UAV must sense and avoid objects entering the scene from the top or bottom of the field of view.

In recent years, a related research field has emerged that combines video-based motion tracking with CNNs [50]. These trackers use many traditional tracking technologies, such as optical flow, motion vectors, and simple macroblocks, in combination with object detectors such as YOLO. In this paper, we combine YOLO with an optical flow-based target detection method to detect Tibetan antelopes and improve detection accuracy.

3. Methods

3.1. Research Area and Study Objects

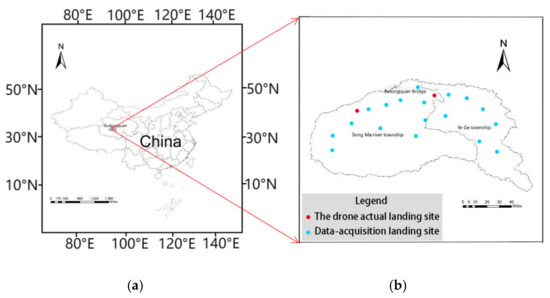

This work takes Budongquan as the research area, which is in Qumalai County, Qinghai Province, at the junction of Sanjiangyuan Nature Reserve and Hoh Xili Nature Reserve (see Figure 1a). Its altitude is 4543.3 m, and the annual average temperature is −4.5–5.6 °C. Owing to its unique geographical location and ecological environment, wild Tibetan antelopes are commonly seen there.

Figure 1.

The research area in this work. (a) Geographical location of Budongquan; (b) distribution of UAV landing sites in July.

In July 2022, we went to the research area to carry out UAV data acquisition and actual flight verification. There were 20 flights in three days, and each flight lasted about half an hour. The average flight height was about 100 m, and the aerial photography coverage area reached 2100 km². Figure 1b shows the flight landing sites. The videos in the research area were acquired using Prometheus 600 (P600) intelligent UAVs (Chengdu Bobei Technology Co., Ltd., Chengdu, China) and a photoelectric pod [4]. Based on the captured videos, we built an object-tracking database. The main task was to identify the moving Tibetan antelopes in a given image sequence, match them across frames one by one, and track their motions.

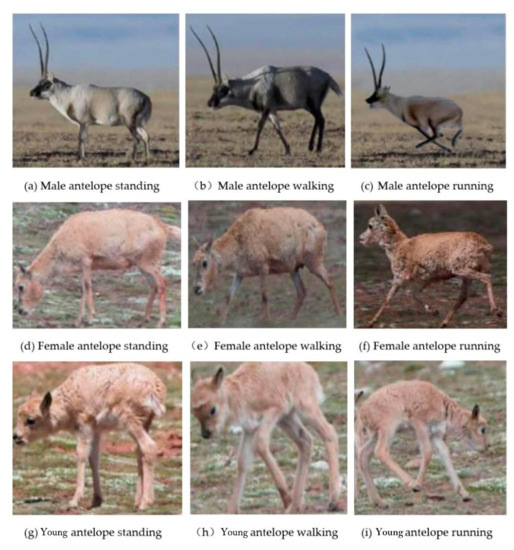

Six video sequences were labeled, including three training databases with labeled information and three test databases. There are three major motions for Tibetan antelopes, namely, standing, walking, and running, as shown in Figure 2a–c (male), Figure 2d–f (female), and Figure 2g–i (young).

Figure 2.

Male, female, and young antelopes with different motions.

In addition, a unified data format was established for the six sequences of the object-tracking database, and all images were converted into JPEG format. The detection file and annotation file were saved in the format of simple comma-separated value (CSV). Each line represents the annotation or detection information of an instance object, including 7 values as given in Table 1.

Table 1.

Format of input or output data.

We labeled six video sequence databases (three training databases and three test databases with labeled information). The data were divided into a training set, a test set, and a verification set at a ratio of 3:2:1. The constructed YOLOX model was trained on the dataset while adjusting parameters (weight ratio, confidence threshold, NMS IoU threshold, and activation function), and a stable model with high prediction accuracy was obtained. At present, the dataset does not contain enough samples of Tibetan antelope behavior (standing, walking, running), which has a certain impact on the experimental results of tracking and identifying Tibetan antelopes. To this end, we extracted 2400 samples of Tibetan antelope behavior from the six video sequences, divided them according to the 3:2:1 ratio, and used them to train the model.
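The 3:2:1 division can be illustrated with a minimal sketch. The file names, the fixed random seed, and the helper `split_by_ratio` are hypothetical and only serve to show the arithmetic of the split; they are not taken from the original pipeline.

```python
import random


def split_by_ratio(items, ratios=(3, 2, 1), seed=0):
    """Shuffle items and cut them into consecutive parts sized by ratios."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    parts, start = [], 0
    for index, ratio in enumerate(ratios):
        if index == len(ratios) - 1:
            end = len(shuffled)  # last part takes the remainder, nothing is dropped
        else:
            end = start + len(shuffled) * ratio // total
        parts.append(shuffled[start:end])
        start = end
    return parts


# Hypothetical sample names standing in for the 2400 extracted samples.
samples = [f"sample_{i:04d}.jpg" for i in range(2400)]
train_set, test_set, val_set = split_by_ratio(samples)
print(len(train_set), len(test_set), len(val_set))  # 1200 800 400
```

With 2400 samples, the ratio yields 1200 training, 800 test, and 400 verification samples.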

3.2. Overall Technical Route

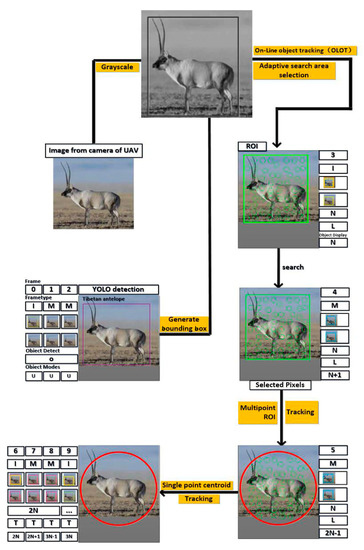

This section describes how Tibetan antelopes are detected and tracked using the YOLO and OF phases. As mentioned above, images of Tibetan antelopes were captured by a camera and displayed on an onboard computer. First, the motion direction of the Tibetan antelope has to be estimated so that it can be followed better. YOLO provides the center of the bounding box (BB) automatically, so the target can be tracked directly. However, how often YOLO can be re-run depends on the specific configuration of the computer, which places demands on the physical hardware constraints of the UAV. For this reason, an OF-based method was employed to track the target and broaden the applicability of the approach.

Cameras mounted on the UAV capture the position of the Tibetan antelope relative to the camera frame (ego-frame). With the ego-frame, the Tibetan antelope can be tracked in two directions instead of the single direction calibrated by a traditional camera [51]. OF describes the apparent motion of pixels between two frames. The UAV hovers stably above the Tibetan antelope once it reaches it, so the UAV is relatively static and the OF results primarily from the motion of the Tibetan antelope. In this work, the Lucas-Kanade (LK) method [52] with optimized local energy is employed to calculate the OF of the pixels that have been determined and selected in the first frame.
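The core of the LK method can be sketched in a few lines. The following is a minimal, pure-Python illustration of a single-window, single-scale LK estimate on a synthetic image pair; it is not the pipeline used in this work, which relies on OpenCV (where `cv2.goodFeaturesToTrack` and `cv2.calcOpticalFlowPyrLK` provide corner selection and pyramidal LK). The function names and the synthetic frames are assumptions made for illustration.

```python
def lucas_kanade_flow(prev, curr, cx, cy, half=2):
    """Estimate the flow (u, v) of the window centred at (cx, cy).

    prev and curr are 2-D lists of grey levels indexed as image[y][x].
    Returns None when the window has too little texture (singular system).
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # spatial gradient in x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # spatial gradient in y
            it = curr[y][x] - prev[y][x]                  # temporal gradient
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None
    # Least-squares solution of [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T.
    u = (sxy * syt - syy * sxt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


def make_frame(dx, dy, size=11):
    """Synthetic smooth image shifted by (dx, dy) pixels."""
    return [[(x - dx) ** 2 + (y - dy) ** 2 for x in range(size)]
            for y in range(size)]


prev_frame = make_frame(0.0, 0.0)
curr_frame = make_frame(0.2, 0.1)
u, v = lucas_kanade_flow(prev_frame, curr_frame, cx=5, cy=5)
print(round(u, 2), round(v, 2))  # close to the true shift (0.2, 0.1)
```

The estimate is only accurate for small displacements, which is why practical implementations add image pyramids and iterate.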

Once YOLO has found and localized the Tibetan antelope, it returns the size of the BB. With the LK method, the pixels selected by Shi and Tomasi’s corner finder [53] inside the BB can be processed and treated as the only tracking target. If the Tibetan antelope leaves the camera frame, the UAV adjusts its tracking direction according to the estimated motion, as introduced above, until the antelope is in the frame again. Then, the algorithm is activated again, and the procedure illustrated in Figure 3 is repeated, for which Redmon’s Darknet framework [54] is selected. The Darknet framework supports several YOLO models and makes the selected models [55,56,57] accessible from the actively maintained Darknet fork [58]. In addition, we used the Python wrapper around the OpenCV-based Darknet DLL for inference, image processing, image reading, and image display. OF was brought into the inference loop through OpenCV to interpolate between frames, following the scheme described in [59].

Figure 3.

The algorithm framework proposed in this work.

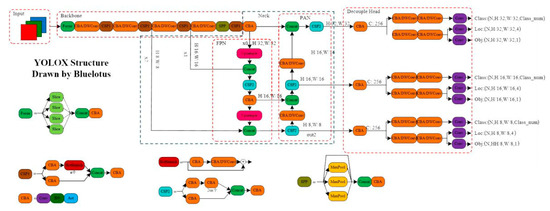

3.3. YOLOX Detector

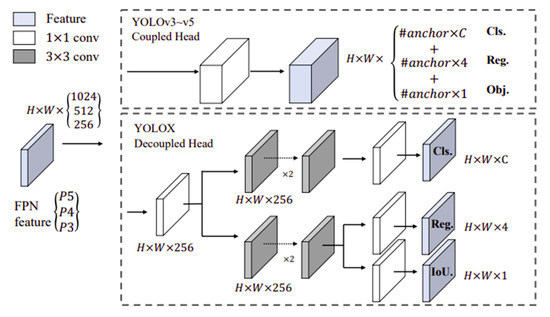

Detection is very challenging because Tibetan antelopes run fast, training data are scarce, object-detection accuracy is low, and positions change frequently as the animals keep moving. Therefore, the YOLOX algorithm was selected as the baseline model in this study to detect and track Tibetan antelopes with high accuracy. Figure 4 shows the structural framework of the YOLOX algorithm.

Figure 4.

Structural framework of the YOLOX algorithm.

YOLOX takes YOLOv3 with Darknet53 as the baseline and adopts the architecture and SPP layer of the Darknet53 backbone network. In addition, it is equipped with high-performance detection techniques such as a decoupled head, anchor-free prediction, and an advanced label assignment strategy, realizing a better trade-off between speed and accuracy.

In this work, the coupled detection head of the original YOLO series was replaced with a decoupled head, which greatly improved the training convergence speed. Two convolution layers were added to the YOLOX network to make YOLO end-to-end. For the FPN features of each level, the decoupled head first applies a 1 × 1 convolution layer to reduce the number of feature channels to 256, followed by two parallel branches with two 3 × 3 convolution layers each, which perform classification and regression, respectively (see Figure 5).

Figure 5.

Comparison between YOLOX decoupling head and YOLO series.

Furthermore, an anchor-free design is incorporated into YOLOX, which reduces the number of predictions at each location from 3 to 1 and predicts 4 values directly (i.e., the two offsets relative to the upper left corner of the grid cell, and the height and width of the prediction box). See Formula (1) for the specific calculation.

In Formula (1), xcenter and ycenter are the x and y coordinates on the feature map, tx, ty, th, and tw are the parameters of the target bounding box, and cx and cy are the offsets. w and h are the width and height of the feature map. At the same time, the center location of each object was defined as the positive sample, and a scale range was set to specify the FPN level for each object. In this way, the number of detector parameters could be reduced, making the detector faster and better performing, and thus detecting the Tibetan antelope more accurately.
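As an illustration of anchor-free decoding, the sketch below assumes the stride-based form used in the public YOLOX implementation: the predicted offsets are added to the grid-cell indices and scaled by the stride, and the width and height are exponentiated and scaled by the stride. The function name and the `stride` parameter are illustrative assumptions, and the exact form of Formula (1) may differ.

```python
import math


def decode_anchor_free(tx, ty, tw, th, cx, cy, stride):
    """Map one raw prediction to a box in input-image pixels.

    (cx, cy) is the index of the grid cell and stride is the down-sampling
    factor of the FPN level (an assumption of this sketch).
    """
    x_center = (cx + tx) * stride
    y_center = (cy + ty) * stride
    width = math.exp(tw) * stride
    height = math.exp(th) * stride
    return x_center, y_center, width, height


box = decode_anchor_free(tx=0.5, ty=0.25, tw=0.0, th=math.log(2.0),
                         cx=10, cy=4, stride=8)
print(box)  # approximately (84.0, 34.0, 8.0, 16.0)
```

Because only one prediction is made per grid cell, no anchor sizes have to be tuned for the dataset.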

When the loss of the deep learning model is calculated, the detection head of the YOLOX network contains Cls. branches, Reg. branches, and IoU branches, whose losses together comprise the loss function. See Formula (2) for the specific formula.

In Formula (2), Lcls represents classification loss, Lreg represents positioning loss, Lobj represents obj loss, λ represents the balance coefficient of positioning loss, and Npos represents the number of Anchor points classified as positive.
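Based on the symbols defined above and on the usual YOLOX formulation, the loss can plausibly be written as follows. This form is an assumption inferred from the definitions (in particular, the normalization by Npos and the placement of λ on the positioning term), not a verbatim copy of Formula (2).

```latex
L = \frac{1}{N_{pos}} \left( L_{cls} + \lambda L_{reg} + L_{obj} \right)
```

Under this form, λ > 1 gives the positioning term more weight than the classification and objectness terms.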

3.4. Improved Backtracking Tracker

We used a backtracking (look-back, LB) tracker [47], in which the backtracking technique advances previous, “old” CNN inferences through the motion vectors generated from stored OF. Because it does not rely on hardware-specific video encoding/decoding capabilities, this technique is easy to migrate to GPU or FPGA edge computing devices. Backtracking is essentially motion interpolation based on object detection in video sequences. It uses motion-based interpolation to raise the frame rate and eliminates the batch latency of competing algorithms. Backtracking reduces the tracking latency by recording outdated detection information and adjusting it for motion, similarly to Glimpse [47] and related methods. This look-back step is vital, because it allows the algorithm to output the tracking result before the CNN detection is completed.

3.4.1. ROI-to-Centroid Tracking

To reduce the computational overhead, ROI-to-centroid tracking and motion vector precomputation were added to the backtracking algorithm applied here.

Step 1: As shown in Figure 4, when the inference on frame 0 was completed and a solid yellow BB was generated in the third frame, the object transitioned to L mode, in which the backtracking algorithm’s knowledge of the location of the Tibetan antelope is outdated. Backtracking enlarged the old BB by about 20% and used the enlarged box (the orange one in the third frame) as the ROI of the OF motion algorithm. In this way, 15 key points could be tracked.

Step 2: After the inference on the third frame was completed, yielding the solid yellow BB in the sixth frame (frame 2N), the algorithm determined which of the 15 points tracked from the third frame initially lay inside the dotted yellow BB of that frame. The points marked with blue stars in the third, fourth, and fifth frames are those that were in fact on the tracked object. This filtering reduced the number of OF motion vectors and ensured that points outside the object were not included when interpolating its motion.

Step 3: After the 15 tracking points were reduced to this subset of travel points, all travel motion vectors (those related to the blue stars) were averaged into one motion vector, which was used to move the BB forward to the real-time pink “travel” bounding box.

Step 4: From the sixth frame on, T mode was activated, in which the centroid of the pink bounding box, represented by the pink star, is moved forward in each frame through single-point OF.

ROI-to-centroid tracking refers to the transition from ROI-based tracking with multiple points to centroid tracking with a single point, that is, from L mode to T mode. It accelerates backtracking more than the per-frame method favored by mobile trackers such as Glimpse. In T mode, after the next frame is read in, the BB lies on the actual target. The centroid derived from the BB position can then be used to track the object, which reduces the critical travel latency of the previous frame by distributing it over the past N frames.
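The geometric part of Steps 1 to 4 can be sketched as follows. This is a simplified illustration under stated assumptions: boxes are (x, y, w, h) tuples with a top-left origin, the tracked points and their displaced positions are given as plain lists rather than produced by an OF routine, and all function names are hypothetical.

```python
def expand_box(box, scale=1.2):
    """Enlarge (x, y, w, h) about its centre; 1.2 mirrors the ~20% growth."""
    x, y, w, h = box
    new_w, new_h = w * scale, h * scale
    return (x - (new_w - w) / 2.0, y - (new_h - h) / 2.0, new_w, new_h)


def inside(point, box):
    """True if the point lies within the box (borders included)."""
    px, py = point
    x, y, w, h = box
    return x <= px <= x + w and y <= py <= y + h


def march_box(stale_box, start_points, end_points):
    """Shift a stale box by the mean motion of the points that started on it."""
    vectors = [(ex - sx, ey - sy)
               for (sx, sy), (ex, ey) in zip(start_points, end_points)
               if inside((sx, sy), stale_box)]
    if not vectors:
        return stale_box  # no reliable point, keep the stale box
    dx = sum(vec[0] for vec in vectors) / len(vectors)
    dy = sum(vec[1] for vec in vectors) / len(vectors)
    x, y, w, h = stale_box
    return (x + dx, y + dy, w, h)


def centroid(box):
    """Centre of the box, the single point followed in T mode."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


stale = (100.0, 100.0, 40.0, 20.0)
roi = expand_box(stale)  # ROI in which key points are tracked (L mode)
starts = [(110.0, 105.0), (130.0, 115.0), (97.0, 99.0)]  # last point is in the ROI but off the object
ends = [(116.0, 108.0), (136.0, 118.0), (97.0, 99.0)]
marched = march_box(stale, starts, ends)
print(marched, centroid(marched))  # (106.0, 103.0, 40.0, 20.0) (126.0, 113.0)
```

Only the two points that started inside the stale box contribute to the averaged motion vector; afterwards a single centroid is enough to follow the object, which is what makes T mode cheap.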

3.4.2. Improvement Based on OLOT

When an object changes its moving speed rapidly, mispredictions will occur if the frame rate is reduced. To alleviate this problem, the frame rate was adjusted dynamically by integrating an energy tradeoff based on the behavior of the tracked object. The ROI BB expansion mentioned in the previous section can likewise be adjusted according to the speed of the tracked object: the faster the object moves, the larger the ROI.

We selected an OLOT [60] system with an adaptive search region, in which image frames are captured as a stream. Once the target Tibetan antelope has been detected in the i-th frame, a search area can be defined, and the OLOT system automatically predicts the antelope’s location in the (i + 1)-th frame. Searching the whole frame may yield higher accuracy, but its energy efficiency is very low because pattern matching requires a large number of operations.

To avoid this cost, a search area much smaller than the full frame can be defined. If the Tibetan antelope is walking or standing, the search area is narrowed as much as possible to reduce the number of operations for pattern matching. Conversely, when the object moves at a high speed, the search area is expanded. In addition, the search area was assumed to be square in this study, and its size in pixels can be expressed as Formula (3):

$$S = (2r + 1)^2$$

In Formula (3), r is the predicted moving distance of the object, which can be calculated from the speed v and the frame rate, as expressed in Formula (4), where v is given in pixels per second and the frame rate in frames per second.
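A short worked example of the search-area size follows. It assumes that Formula (4) has the form r = v / f, which is consistent with the stated units (pixels per second divided by frames per second gives pixels per frame); the rounding up to whole pixels and the example speeds are additional assumptions of this sketch.

```python
import math


def search_radius(speed_px_per_s, frame_rate_fps):
    """Predicted per-frame displacement r in pixels, rounded up (assumed r = v / f)."""
    return math.ceil(speed_px_per_s / frame_rate_fps)


def search_area(speed_px_per_s, frame_rate_fps):
    """Number of pixels S = (2r + 1)^2 of the square search area, Formula (3)."""
    r = search_radius(speed_px_per_s, frame_rate_fps)
    return (2 * r + 1) ** 2


# Hypothetical speeds: a slow and a fast target at two frame rates.
print(search_area(30.0, 30.0))   # 9     (r = 1)
print(search_area(300.0, 30.0))  # 441   (r = 10)
print(search_area(300.0, 15.0))  # 1681  (r = 20)
```

Halving the frame rate doubles r and roughly quadruples the search area, which is the tradeoff the dynamic frame rate adjustment has to balance against the energy saved per skipped frame.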

3.4.3. Keep Alive

One way to enhance a tracker, including the core backtracking tracker, is to keep tracks alive based on the IoU, which has proved effective in many state-of-the-art trackers. In this way, the tracker proposed in this study keeps the BB of an object alive for several frames after it fails to carry over from one tracklet to the next. This mitigates missed detections when an object fails to be detected in the i-th frame. If an object is at risk of being lost from the sequence because of occlusion, a smaller number of objects, poor training of the network, or exceeding the number of keep-alive frames, it is set to F mode.

The tracker generates several tracklets over N frames, which are connected to each other using IoU-based matching. If a previously tracked object is matched successfully, it keeps its unique ID, whereas a new ID is assigned to each unmatched object. If an object disappears, it can still be kept alive for a configurable number of frames. With “keep alive”, the result of the last detection is taken from the previous tracklet and applied again when the CNN cannot predict a BB with sufficient confidence for the object in the next tracklet. References [44,61] explain this process briefly, and reference [62] describes it in detail.
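The keep-alive logic can be sketched as a small IoU-based matcher. This is a simplified illustration, not the tracker used in this work: matching is greedy, boxes are (x1, y1, x2, y2) tuples, and the class name, the IoU threshold of 0.5, and the keep-alive length are assumptions.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class KeepAliveTracker:
    """Greedy IoU matcher that keeps unmatched tracks alive for a few frames."""

    def __init__(self, iou_threshold=0.5, keep_alive_frames=3):
        self.iou_threshold = iou_threshold
        self.keep_alive_frames = keep_alive_frames
        self.tracks = {}  # track id -> {"box": box, "missed": int}
        self.next_id = 0

    def update(self, detections):
        unmatched = list(detections)
        for track_id, track in list(self.tracks.items()):
            best = max(unmatched, key=lambda d: iou(track["box"], d), default=None)
            if best is not None and iou(track["box"], best) >= self.iou_threshold:
                track["box"], track["missed"] = best, 0  # matched: the ID is kept
                unmatched.remove(best)
            else:
                track["missed"] += 1  # kept alive with the last known box
                if track["missed"] > self.keep_alive_frames:
                    del self.tracks[track_id]  # given up, comparable to F mode
        for detection in unmatched:
            self.tracks[self.next_id] = {"box": detection, "missed": 0}
            self.next_id += 1
        return {tid: t["box"] for tid, t in self.tracks.items()}


tracker = KeepAliveTracker(keep_alive_frames=2)
first = tracker.update([(0, 0, 10, 10)])   # new object, ID 0
second = tracker.update([(1, 0, 11, 10)])  # IoU about 0.82, ID 0 is kept
third = tracker.update([])                 # missed once, box kept alive
tracker.update([])                         # missed twice, still alive
fifth = tracker.update([])                 # missed three times, track dropped
```

A production tracker would typically replace the greedy loop with a global assignment, but the keep-alive counter works the same way.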

4. Results

4.1. Evaluating Indicator

We employed the Object-Tracking Benchmark (OTB) proposed by Wu et al. [60] to evaluate the performance of the tracking model. There were several tracking results generated by the model, and we selected the tracking set of a single object for the testing. The experimental results were compared to those obtained in a best scenario without motion tracking but with an object detector.

The tracking accuracy in this work refers to the percentage of correctly detected positive frames relative to CNN-based inference on every frame. Accordingly, we set aside the mAP metric, concentrated on the IoU true-positive (TP) metric only, and analyzed how it degrades with the number of skipped frames. An IoU of one-half is usually taken as the threshold for a correctly detected positive frame, so the share of frames with an IoU above 50% serves as a comprehensive summary of performance. On this basis, the models can be compared with each other across skip numbers rather than against other OTB models.

4.1.1. Normalized Centroid Drift (NCD) Metric

In addition, the normalized centroid drift (NCD) metric was included in this work. It is the magnitude of the distance vector from the predicted centroid to the centroid of the ground-truth BB, with each component normalized by the width and height of the ground-truth BB, respectively. The NCD metric was selected because a UAV tracking an object always focuses on the object’s center. When LB is used, the predicted boxes come from previous frames; the object may have moved toward the foreground or the background in the meantime, so its scale changes, but an accurate centroid can still be guaranteed. We also averaged the numbers of correctly detected positive frames under IoU and NCD over all sequences and report the result as a single value for each metric. The purpose is to show how the number of TP frames degrades with the number of skips, thus relating the performance loss to the latency benefit.
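The NCD definition above translates directly into code. The sketch below assumes boxes given as (x, y, w, h) with (x, y) the top-left corner; the function name and the example boxes are illustrative.

```python
import math


def normalized_centroid_drift(pred, truth):
    """NCD between a predicted and a ground-truth box, both (x, y, w, h)."""
    pred_cx, pred_cy = pred[0] + pred[2] / 2.0, pred[1] + pred[3] / 2.0
    true_cx, true_cy = truth[0] + truth[2] / 2.0, truth[1] + truth[3] / 2.0
    dx = (pred_cx - true_cx) / truth[2]  # x drift in units of ground-truth width
    dy = (pred_cy - true_cy) / truth[3]  # y drift in units of ground-truth height
    return math.hypot(dx, dy)


truth = (100.0, 100.0, 40.0, 20.0)
pred = (102.0, 103.0, 60.0, 30.0)  # larger box whose centre is shifted by (12, 8)
ncd = normalized_centroid_drift(pred, truth)
print(round(ncd, 3))  # 0.5
```

The predicted box is 50% larger than the ground truth, yet the NCD depends only on the centroid offset, which is why the metric is insensitive to the scale changes that penalize the IoU.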

4.1.2. Calculation of Latency

Latency is a crucial feature to consider when designing a tracker. It refers to the time needed to process a frame, covering the entire process from capturing the photons to outputting the results of the algorithm. In the plots, the X-axis represents the number of frame skips, the left Y-axis shows the latency, that is, the processing time per frame, at each skip value, and the right Y-axis shows the IoU result (>50%), that is, the accuracy, at each skip value. To determine the baselines for accuracy, latency, and frame rate, YOLO was run on every frame of various OTB videos without using any motion vector or interpolation. The accuracy and timing of the per-frame YOLO baseline can be read from the Y-axis values at an X-axis skip value of 0.

4.2. Performance Comparison

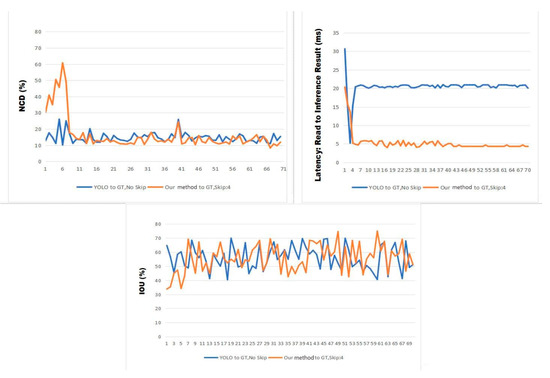

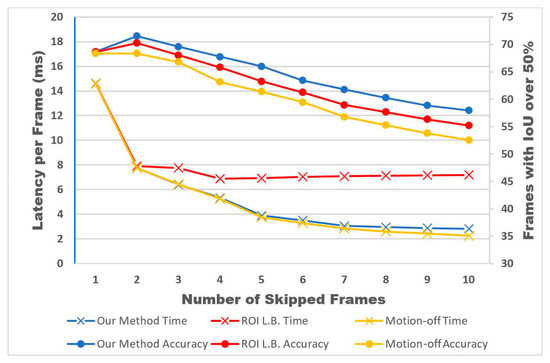

YOLOv3, YOLOv4, YOLOv4-Tiny, and YOLOX were all evaluated with our method. The evaluation data were analyzed, and the frame-skip experiments were compared with experiments running inference on every frame. In Figure 6, YOLOX with our method (frame skip: 4) is compared with YOLOX running on each frame (frame skip: 0) to observe the differences in NCD, frame-read-to-output delay, and IoU. For this comparison, a smoothing delay was introduced at frame 40 so that the latency could be held at 5 ms per frame, the minimal value. The per-frame latency can be reduced 5× when 4 frames are skipped. In addition, we tracked and recorded the latency, NCD, and IoU data for all sequences and models.

Figure 6.

Metrics for walking OTB sequence with basic spin-lock latency smoothing enabled at frame 40.

In Figure 7, the yellow polyline represents motion off, the red polyline represents ROI per-frame OF, and the blue polyline represents our method. The motion-off curve shows the case in which stale detections are not matched and no motion is recorded; it gives the fastest processing time and the worst accuracy per frame. The ROI per-frame OF method shows timing and IoU results similar to those of Glimpse as the skip number changes, although this does not hold for the other Glimpse techniques. ROI-based OF can maintain accuracy when skipping, but it spends more time than our method processing the OF points when traveling. Thanks to LB, our method achieves the fastest processing time and the best-case accuracy per frame.

Figure 7.

Consolidated performance of LB-based YOLOX.

Figure 7 shows the average latency and accuracy of the LB-based tracker integrated with YOLOX at each frame skip on the PC, and it also illustrates the benefit of LB for frame latency. With a skip of 1, LB allows the inference latency to be distributed over 2 frames, so the latency of LB-based tracking is no higher than half of the maximum value. With a frame skip of 3, the inference time is distributed over 3 frames by the LB prediction, and the maximum latency is lower than one-third of the inference time. This trend continues as long as the remaining computing resources can keep up with the number of skipped frames.
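The amortization argument above reduces to a simple division. The sketch below takes the number of frames over which one inference is spread as an explicit parameter rather than deriving it from the skip value; the function name and the 30 ms inference time are hypothetical.

```python
def amortized_latency_ms(inference_ms: float, frames_shared: int) -> float:
    """Upper bound on per-frame latency when one inference is spread over several frames."""
    if frames_shared < 1:
        raise ValueError("frames_shared must be at least 1")
    return inference_ms / frames_shared


half = amortized_latency_ms(30.0, 2)   # inference shared by 2 frames
third = amortized_latency_ms(30.0, 3)  # inference shared by 3 frames
```

This bound ignores the cost of the motion update itself, so measured latencies sit slightly above it unless that cost is negligible.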

With no frame skipping, the NCD TP threshold yielded essentially the same number of TP frames as the IoU threshold for every model. IoU and NCD therefore start from the same number of TP samples but degrade at different rates. This shows the influence of BB scale variation on IoU, and it indicates that NCD tolerates more frame skips, because differences in scale between the target and the ground truth do not affect this accuracy metric. The same assessment was conducted on the desktop; the average values for YOLOv3 are given in Table 2 and those for YOLOv4 in Table 3. The results for YOLOv4-Tiny are shown in Table 4 and are compared with those for YOLOX. The results suggest that YOLOX achieved greater acceleration at a 4-frame skip for a broadly comparable decrease in accuracy. Table 5 lists the results for YOLOX with Look Back.
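The different sensitivity of the two metrics to box scale can be seen in a small example. It assumes that NCD is a distance between box centroids normalized by the ground-truth box diagonal; that normalization, the box layout, and the function names are assumptions made for illustration and may differ from the definition used in this work.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), an assumed layout


def centroid(b: Box) -> Tuple[float, float]:
    """Centre point of an axis-aligned box."""
    return ((b[0] + b[2]) / 2.0, (b[1] + b[3]) / 2.0)


def normalized_centroid_distance(pred: Box, truth: Box) -> float:
    """Centroid distance divided by the ground-truth diagonal (an assumed normalization)."""
    (px, py), (tx, ty) = centroid(pred), centroid(truth)
    diag = math.hypot(truth[2] - truth[0], truth[3] - truth[1])
    return math.hypot(px - tx, py - ty) / diag if diag > 0 else float("inf")


truth = (40.0, 40.0, 60.0, 60.0)  # 20 x 20 box
pred = (35.0, 35.0, 65.0, 65.0)   # 30 x 30 box with the same centre
ncd = normalized_centroid_distance(pred, truth)
# For concentric boxes the IoU is simply the area ratio, here 400 / 900.
iou_concentric = (20.0 * 20.0) / (30.0 * 30.0)
```

Here the predicted box is perfectly centred, so the centroid distance is zero, yet the IoU of about 0.44 would fail a 50% threshold purely because of the scale mismatch.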

Table 2.

YOLOv3 with Look Back Results.

Table 3.

YOLOv4 with Look Back Results.

Table 4.

YOLOv4-Tiny with Look Back Results.

Table 5.

YOLOX with Look Back Results.

To investigate the performance of the proposed tracker in the edge reasoning scenario, we chose the NVIDIA Jetson AGX Xavier (NX) [4] as the verification platform, and compared it with some common trackers. The comparison results are shown in Table 6.

Table 6.

Performance comparison results between our tracker and common trackers.

Table 6 shows the IoU TP (success = IoU TP (>50%)), precision, and FPS of these methods. Our method reaches 58.4 FPS. Although this is lower than the FPS of KCF, it is clearly higher than that of the other trackers, and our method is clearly better than KCF in precision. Overall, our tracker offers the best performance when precision and speed are considered together.

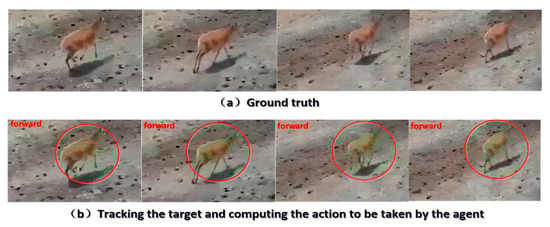

4.3. Actual Tracking Effect

The UAV is able to process images at about 30 FPS, that is, one new frame every 33 ms, which is an advantage of the proposed algorithm. With the traditional algorithm, it takes 2 frames (66 ms) after receiving the visual information to update [20] and properly adjust the flight control surface. Consequently, after the visual information is captured, the UAV flies 2.4 m before it can make and execute the corresponding action decision, given that its flight speed in this work is about 20 m/s (the maximum running speed of Tibetan antelopes). According to the experimental results shown in Figure 8 and Figure 9, the proposed algorithm halves the tracking latency, so that the UAV acts after a delay of only 1.2 m.
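The link between latency and the distance flown before the UAV can react is distance = speed × latency. The sketch below assumes constant speed and models only the frame-update delay; the function name is hypothetical.

```python
def distance_during_latency_m(speed_m_s: float, latency_ms: float) -> float:
    """Distance covered at constant speed while waiting latency_ms milliseconds."""
    return speed_m_s * latency_ms / 1000.0


two_frames = distance_during_latency_m(20.0, 66.0)  # two-frame update delay
one_frame = distance_during_latency_m(20.0, 33.0)   # one-frame update delay
```

With 20 m/s and 66 ms this product gives about 1.32 m, and about 0.66 m at 33 ms. The 2.4 m and 1.2 m quoted in the text are larger, so they may include delays beyond the frame update that this sketch does not model; the halving of the distance holds in either case.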

Figure 8.

Comparison between ground truth and output of the algorithm for verification.

Figure 9.

Computation for the motion direction of Tibetan antelopes in eight directions by the proposed algorithm.

Figure 8 compares the ground truth with the output of the algorithm. The visual representations produced by the algorithm are marked by red circles, and the actions for the UAV to follow the Tibetan antelope are marked in red text. The experiment confirmed that the proposed algorithm tracks the Tibetan antelope accurately and correctly estimates the actions the UAV should take to follow it.

As shown in Figure 9, the algorithm can track bidirectionally because it can compute the motion of the Tibetan antelope in eight directions.
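One common way to obtain an eight-direction motion estimate is to quantize the motion vector's angle into 45-degree sectors. The sketch below shows that approach under stated assumptions: image coordinates with y pointing down, and compass-style labels that are hypothetical and not taken from Figure 9.

```python
import math

# Compass-style labels are hypothetical; image coordinates with y pointing down are assumed.
DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]


def direction_of(dx: float, dy: float) -> str:
    """Quantize a motion vector into one of eight 45-degree sectors."""
    if dx == 0 and dy == 0:
        return "STILL"
    # Flip y so that "N" means up in the image.
    angle = math.degrees(math.atan2(-dy, dx)) % 360.0
    return DIRECTIONS[int((angle + 22.5) // 45) % 8]
```

For example, a centroid that moves one pixel left and one pixel down between frames, `direction_of(-1, 1)`, falls in the "SW" sector, which a follower could map to a corresponding UAV action.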

5. Discussion

Visualizations such as Figure 8 are important for deciding how many frames the tracker should skip. The decline in accuracy is mainly linear, whereas the reduction in the reasoning time of the model saturates, with diminishing returns. The ideal skip achieves the required performance gain without skipping more frames than necessary. The test-time settings of the model include 10 live frames, an ROI obtained by extending the BB with a 1.2 scale factor, and IoU-based association. The ROI BB can be adjusted adaptively according to the speed of the tracked object and the frame rate: the faster the object moves, the farther it travels between frames and the larger the ROI must be, whereas slower objects need only a small ROI. In this work, a factor of 1.2× worked well for capturing objects, and it can be adjusted to actual demands. For this analysis, frame display, object drawing, and all other non-essential operations were turned off.
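The ROI extension can be sketched as scaling the bounding box about its centre and clipping the result to the frame. The box layout and the name `expand_roi` are illustrative assumptions; only the 1.2 scale factor comes from the text.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), an assumed layout


def expand_roi(box: Box, frame_w: float, frame_h: float, scale: float = 1.2) -> Box:
    """Scale a bounding box about its centre and clip it to the frame."""
    cx, cy = (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0
    half_w = (box[2] - box[0]) * scale / 2.0
    half_h = (box[3] - box[1]) * scale / 2.0
    return (
        max(0.0, cx - half_w),
        max(0.0, cy - half_h),
        min(frame_w, cx + half_w),
        min(frame_h, cy + half_h),
    )


roi = expand_roi((100.0, 100.0, 200.0, 200.0), frame_w=640.0, frame_h=480.0)
```

An adaptive variant would pass a larger `scale` for fast-moving objects or low frame rates and a smaller one for slow objects; how that value is chosen is left open here.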

This work demonstrates that the LB tracking shows no effect on the edge devices. Building on many previous tracking paradigms, it is applicable to a wide range of use cases and hardware topologies. Equipped with YOLOX under Darknet, LB tracking reduces the latency by 3.75× for an IoU accuracy drop of 6.18%. From the perspective of NCD from the ground truth, the corresponding drop is only 2.67%. On the NX, the latency is reduced by 4.97× and 4.68× with YOLOv3 and YOLOv4, with IoU accuracy drops of 6.42% and 6.22% and increases in NCD error of 1.4% and 6.04%, respectively. With YOLOv4-Tiny on the NX, the latency reduction, IoU accuracy drop, and increase in NCD error are 1.69×, 4.96%, and 1.22%, respectively.

These benefits also translate into power benefits, because LB tracking makes it feasible to apply power-hungry computing resources on edge devices. When the NX GPU frequency is reduced, LB saves power in exchange for an accuracy drop at a specific skip (10), while still outputting results at a consistent frame rate. Because centroid tracking is less cumbersome, the GPU frequency can be reduced while the frame rate is largely maintained, which is critical for the throughput, power usage, and response time of lightweight trackers on UAV edge devices.

A sharp increase in the skip size reduces the accuracy returns of OF processing and motion vector marching. Because LB is designed to minimize latency, its accuracy degrades earlier than that of a detector with normal OF interpolation between detection frames. This is because, before the second detection, the last frame of the previous detection is marched 2×N-1 times instead of the N-1 times of standard motion interpolation schemes, which is a consequence of how LB minimizes latency. However, this limitation can be mitigated by weighing the skip number and the latency carefully when the accuracy ratio is calculated.

6. Conclusions

In this work, an LB tracker is proposed to identify Tibetan antelopes, and it offers the following advantages. Firstly, the proposed LB tracker generates motion vectors from the stored OF, which makes target detection faster. Integrated with YOLOX in native Darknet, it reduces latency by 3.75× on a desktop personal computer. Secondly, the lightweight YOLOX is adopted as the baseline model to reduce dependence on hardware configuration and to lower computing costs while ensuring high detection accuracy. After about 10 frames of skipping, the latency is only 2.82 ms, and the accuracy is higher than that of YOLOv3 and other models. Thirdly, the proposed algorithm processes images at 30 frames per second on the UAV, that is, one frame every 33 ms. Compared with the traditional algorithm, it reduces the tracking latency of the UAV by 50%. Fourthly, on the NX verification platform, the proposed tracker reaches a precision of 76.8 and 58.4 FPS. Although it is slower than KCF in terms of FPS, it offers the best overall performance among the common trackers compared when precision and FPS are considered together.

A deep learning-based intelligent UAV can help prevent poaching through real-time monitoring, and tracking technology with high accuracy and low latency can support more effective rescue of Tibetan antelopes. These results have scientific significance and practical value for ecological research on, and population protection of, Tibetan antelopes.

Author Contributions

W.L. took charge of the conceptualization and of writing and preparing the original draft; X.L. (Xiaofeng Li) was responsible for methodology; G.Z. took charge of the software; X.L. (Xuqing Li) took charge of the validation. The formal analysis was conducted by Y.Z. (Yunfeng Zhao), and the investigation was performed by X.L. (Xiaoliang Li). Y.L. took charge of data curation. Y.Z. (Yongxiang Zhao) was responsible for review and editing of the manuscript. Visualization was carried out by Z.Z. Supervision was conducted by Q.S., and D.L. took charge of funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (no. 42071289); the Open Fund of Key Laboratory of Agricultural Monitoring and Early Warning Technology, Ministry of Agriculture and Rural Affairs (JCYJKFKT2204); the Open Fund of Key Laboratory of Spectroscopy Sensing, Ministry of Agriculture and Rural Affairs, P.R. China (2022ZJUGP004); the Fund project of central government-guided local science and technology development (no. 216Z0303G); the National Key R&D Program of China “Research on establishing medium spatial resolution spectrum earth and its application” (2019YFE0127300); Full-time introduced top talent scientific research projects in Hebei Province (2020HBQZYC002); Application and Demonstration of High-resolution Remote Sensing Monitoring Platform for Ecological Environment in Xiongan New Area (67-Y50G04-9001-22/23), National Science and Technology Major Project of High Resolution Earth Observation System; the Innovation Fund of Production, Study and Research in Chinese Universities (2021ZYA08001); the National Basic Research Program of China (grant number 2019YFE0126600); and the Doctoral Research Startup Fund Project (BKY-2021-32; BKY-2021-35).

Data Availability Statement

Data for this research can be found at the following data link (https://pan.baidu.com/s/1HhwT-AcjVh8EihSf-7KSYg?pwd=f19h, accessed on 5 June 2022).

Acknowledgments

This research was completed with the support of Chengdu Bobei Technology Co., Ltd., Chengdu, China.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Hassan, J.; Alhartomi, M.A.; Shvetsova, S.V.; Sahal, R.; Hawbani, A. Computing in the Sky: A Survey on Intelligent Ubiquitous Computing for UAV-Assisted 6G Networks and Industry 4.0/5.0. Drones 2022, 6, 177. [Google Scholar] [CrossRef]

- Huang, Y. Tibetan Antelope Detection and Tracking Based on Deep Learning; Xidian University: Xi’an, China, 2020. [Google Scholar]

- Luo, W.; Jin, Y.; Li, X.; Liu, K. Application of Deep Learning in Remote Sensing Monitoring of Large Herbivores—A Case Study in Qinghai Tibet Plateau. Pak. J. Zool. 2022, 54, 413. [Google Scholar]

- Luo, W.; Zhang, Z.; Fu, P.; Wei, G.; Wang, D.; Li, X.; Shao, Q.; He, Y.; Wang, H.; Zhao, Z.; et al. Intelligent Grazing UAV Based on Airborne Depth Reasoning. Remote Sens. 2022, 14, 4188. [Google Scholar] [CrossRef]

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Wäldchen, J.; Mäder, P. Machine learning for image based species identification. Methods Ecol. Evol. 2018, 9, 2216–2225. [Google Scholar] [CrossRef]

- Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725. [Google Scholar] [CrossRef]

- Gray, P.C.; Fleishman, A.B.; Klein, D.J.; McKown, M.W.; Bézy, V.S.; Lohmann, K.J.; Johnston, D.W. A convolutional neural network for detecting sea turtles in drone imagery. Methods Ecol. Evol. 2018, 10, 345–355. [Google Scholar] [CrossRef]

- Agrawal, P.; Ratnoo, A.; Ghose, D. Inverse optical flow based guidance for UAV navigation through urban canyons. Aerosp. Sci. Technol. 2017, 68, 163–178. [Google Scholar] [CrossRef]

- Zhang, Y.; Zheng, J.; Zhang, C.; Li, B. An effective motion object detection method using optical flow estimation under a moving camera. J. Vis. Commun. Image Represent. 2018, 55, 215–228. [Google Scholar] [CrossRef]

- Sengar, S.S.; Mukhopadhyay, S. Motion detection using block based bi-directional optical flow method. J. Vis. Commun. Image Represent. 2017, 49, 89–103. [Google Scholar] [CrossRef]

- Yang, T.; Cappelle, C.; Ruichek, Y.; El Bagdouri, M. Online multi-object tracking combining optical flow and compressive tracking in Markov decision process. J. Vis. Commun. Image Represent. 2019, 58, 178–186. [Google Scholar] [CrossRef]

- Barisic, A.; Car, M.; Bogdan, S. Vision-based system for a real-time detection and following of UAV. In Workshop on Research, Education and Development of Unmanned Aerial Systems (RED UAS); IEEE: Piscataway, NJ, USA, 2019; pp. 156–159. [Google Scholar] [CrossRef]

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010. [Google Scholar]

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596. [Google Scholar] [CrossRef]

- Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014. [Google Scholar]

- Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Volume 8926, pp. 254–265. [Google Scholar]

- Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar] [CrossRef]

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017. [Google Scholar] [CrossRef]

- Mueller, M.; Smith, N.; Ghanem, B. Context-Aware Correlation Filter Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Liu, T.; Wang, G.; Yang, Q. Real-time part-based visual tracking via adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar] [CrossRef]

- Du, D.; Wen, L.; Qi, H.; Huang, Q.; Tian, Q.; Lyu, S. Iterative Graph Seeking for Object Tracking. IEEE Trans. Image Process. 2017, 27, 1809–1821. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Volume 9909, pp. 472–488. [Google Scholar] [CrossRef]

- Fan, H.; Ling, H. Parallel Tracking and Verifying: A Framework for Real-Time and High Accuracy Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017. [Google Scholar] [CrossRef]

- Ma, C.; Huang, J.-B.; Yang, X.; Yang, M.-H. Hierarchical convolutional features for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Fan, H.; Ling, H. SANet: Structure-Aware Network for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Song, Y.; Ma, C.; Wu, X.; Gong, L.; Bao, L.; Zuo, W.; Shen, C.; Lau, R.W.; Yang, M.-H. VITAL: VIsual Tracking via Adversarial Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar] [CrossRef]

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Volume 9914, pp. 850–865. [Google Scholar]

- Tao, R.; Gavves, E.; Smeulders, A.W.M. Siamese Instance Search for Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Fan, H.; Ling, H. Siamese Cascaded Region Proposal Networks for Real-Time Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar] [CrossRef]

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar] [CrossRef]

- Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. SPM-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar] [CrossRef]

- Zhou, W.; Wen, L.; Zhang, L.; Du, D.; Luo, T.; Wu, Y. SiamMan: Siamese motion-aware network for visual tracking. arXiv 2019. [Google Scholar] [CrossRef]

- Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-Aware Siamese Networks for Visual Object Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Volume 11213, pp. 103–119. [Google Scholar] [CrossRef]

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate Tracking by Overlap Maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019. [Google Scholar] [CrossRef]

- Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of Localization Confidence for Accurate Object Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Volume 11218, pp. 816–832. [Google Scholar]

- Bhat, G.; Danelljan, M.; Van Gool, L.; Timofte, R. Learning Discriminative Model Prediction for Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar] [CrossRef]

- Zhu, Y.; Samajdar, A.; Mattina, M.; Whatmough, P. Euphrates: Algorithm-SoC co-design for low-power mobile continuous vision. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; Available online: https://ieeexplore.ieee.org/document/8416854/ (accessed on 5 June 2018).

- Ujiie, T.; Hiromoto, M.; Sato, T. Interpolation-Based Object Detection Using Motion Vectors for Embedded Real-time Tracking Systems. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 616–624. Available online: https://ieeexplore.ieee.org/document/8575254/ (accessed on 5 June 2018).

- Nicolai, P.; Raczkowsky, J.; Wörn, H. Continuous Pre-Calculation of Human Tracking with Time-delayed Ground-truth—A Hybrid Approach to Minimizing Tracking Latency by Combination of Different 3D Cameras. In Proceedings of the 2015 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Colmar, France, 21–23 July 2015; pp. 121–130. [Google Scholar] [CrossRef]

- Liu, L.; Li, H.; Gruteser, M. Edge Assisted Real-time Object Detection for Mobile Augmented Reality. In Proceedings of the 25th Annual International Conference on Mobile Computing and Networking, Los Cabos, Mexico, 21–25 October 2019; pp. 1–16. [Google Scholar] [CrossRef]

- Chen, T.Y.-H.; Ravindranath, L.; Deng, S.; Bahl, P.; Balakrishnan, H. Glimpse: Continuous, real-time object recognition on mobile devices. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Republic of Korea, 1–4 November 2015; pp. 155–168. [Google Scholar] [CrossRef]

- Cintas, E.; Ozyer, B.; Simsek, E. Vision-Based Moving UAV Tracking by Another UAV on Low-Cost Hardware and a New Ground Control Station. IEEE Access 2020, 8, 194601–194611. [Google Scholar] [CrossRef]

- Opromolla, R.; Inchingolo, G.; Fasano, G. Airborne Visual Detection and Tracking of Cooperative UAVs Exploiting Deep Learning. Sensors 2019, 19, 4332. [Google Scholar] [CrossRef]

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Detect to track and track to detect. In Proceedings of the IEEE international conference on computer vision. arXiv 2017. [Google Scholar] [CrossRef]

- Mishra, R.; Ajmera, Y.; Mishra, N.; Javed, A. Ego-Centric framework for a three-wheel omni-drive Telepresence robot. In Proceedings of the 2019 IEEE International Conference on Advanced Robotics and its Social Impacts (ARSO), Beijing, China, 31 October–2 November 2019; pp. 281–286. [Google Scholar] [CrossRef]

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, Canada, 24–28 August 1981; pp. 674–679. [Google Scholar]

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600. [Google Scholar]

- Redmon, J. DarkNet: Open Source Neural Networks in C. 2013. Available online: https://pjreddie.com/darknet/ (accessed on 15 March 2013).

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-J.M. YOLOv4 Optimal Speed and Accuracy of Object Detection. In Proceedings of the Computer Vision and Pattern Recognition. arXiv 2020. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Wang, Z.; Xu, K.; Wu, S.; Liu, L.; Liu, L.; Wang, D. Sparse-YOLO: Hardware/software co-design of an FPGA accelerator for YOLOv2. IEEE Access 2020, 8, 116569–116585. [Google Scholar] [CrossRef]

- Bochkovskiy, A. AlexeyAB/Darknet. Available online: https://github.com/AlexeyAB/darknet (accessed on 6 January 2021).

- Lin, C.-E. Introduction to Motion Estimation with Optical Flow. Available online: https://nanonets.com/blog/optical-flow/ (accessed on 5 April 2019).

- Wu, Y.; Lim, J.; Yang, M.-H. Adaptive Frame-Rate Optimization for Energy-Efficient Object Tracking. In Computer Vision and Pattern Recognition (CVPR); 2013; pp. 2411–2418. [Google Scholar]

- Murray, S. Real-time multiple object tracking—A study on the importance of speed. arXiv 2017. [Google Scholar] [CrossRef]

- Bochinski, E.; Senst, T.; Sikora, T. Extending IOU Based Multi-Object Tracking by Visual Information. In Proceedings of the 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; Volume 15, pp. 1–6. [Google Scholar] [CrossRef]

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).