1. Introduction

Peach trees are widely planted in Japan as a fruit crop of high economic value. According to 2021 survey reports in Japan, the total area planted with peach trees is 9300 ha and the harvest volume is 107,000 tons. However, the average age of orchard managers rose rapidly over ten years, from 61 in 2005 to 77 in 2015 [1]. Because the number of professional growers is decreasing and the workforce is aging rapidly, the area that each person must manage is bound to increase significantly if orchard productivity is to be maintained. An orchard takes a long time from planting to harvest, and tree training and cultivation management during this period must be performed precisely. Compared with other crops, the initial barrier to fruit cultivation is high, and professional cultivation is not easy. Although smart agriculture and tree improvement have raised efficiency in recent years, the overall rate of mechanization and automation from cultivation to harvest for important cash crops such as fruit trees remains quite low [2,3]. Therefore, it is important to develop fruit tree cultivation and management technology that non-specialists can use.

Winter pruning of peach trees is the third most time-consuming task after bud picking and harvesting, accounting for approximately 25% of the total time spent on cultivation management [1]. The amount of pruning greatly influences the future growth of the tree and the harvest. The shape of perennial branches, such as the major and sub-major branches of peach trees, is difficult to change because they have no new growth points from which side buds or flower buds can develop. Therefore, from the viewpoint of cultivation management, it is crucial to quickly grasp the status of the major and sub-major branches during the winter pruning of deciduous fruit trees such as peach [4,5,6]. In current practice, a cultivation management expert first assesses the size of the entire tree and then determines the distribution of branches within each small area. By observing the overall tree vigor and predicting future growth trends, the expert establishes a pruning strategy that achieves sufficient branch density in each space. Quickly grasping the tree vigor and the distribution of the major branches is therefore indispensable for effectively reducing the overall workload.

Nondestructive three-dimensional (3D) measurement techniques can measure plant growth parameters such as plant shape and biomass. Depending on the sensing principle, they fall into two major categories: passive unmanned aerial vehicle structure from motion (UAV-SfM) and active lidar [7]. UAV-SfM constructs a 3D model from continuous, overlapping images taken by a camera mounted on a UAV. Because this method preserves the color information of the images in the 3D model, it can be applied to imagery at different wavelengths, such as visible, infrared, and thermal images [8,9,10,11,12,13,14]. However, UAV-SfM requires more hardware resources and computing time to complete 3D matching and modeling. When the target has a complex structure and low reflectivity, as plants do, more shooting angles and finer spatial resolution are required [15,16]. Given the time constraints of a cultivation management schedule, it is often necessary to obtain and analyze the 3D information of a target in a short time or in real time; UAV-SfM, which requires long modeling computations, may therefore not be an appropriate method. Developing a fast and highly accurate 3D plant measurement method is thus an urgent issue in agriculture.

A representative active nondestructive 3D measurement technique is terrestrial laser scanning (TLS). Based on the time-of-flight principle, TLS constructs a centimeter-level, high-precision 3D point cloud model by irradiating the target and measuring the return time of the laser [7,17]. Over the last two decades, TLS has been widely used to observe trees, including fruit trees and forests, and can measure three-dimensional structural elements such as plant height, crown structure, leaf inclination angle, and branch distribution [17,18,19]. Despite these advantages, TLS requires the scanner to remain completely stationary during each measurement. In addition, the far side of the target cannot be scanned from a single position, so the scanner must be moved to another viewpoint and the new scan merged with the previous point cloud [17,19,20]. A large observation area must therefore be scanned from multiple locations to obtain a complete model. Maintaining the high precision of lidar observation while reducing observation time has thus long been an important research topic.

Thrun et al. [21] proposed a sensor-based technique, simultaneous localization and mapping (SLAM), by which a moving platform estimates its own position while simultaneously constructing a map of its surroundings [21,22]. With a 2D lidar as the SLAM sensor, the sensor's own position can be tracked effectively and a map of the surroundings constructed; with a 3D lidar, a point cloud of the surroundings can be built during movement. 3D lidar SLAM methods can be broadly divided into traditional 3D direct-matching methods and image-based methods [23]. Among them, the lidar odometry and mapping (LOAM) method proposed by Zhang and Singh in 2014 is the pioneering image-based method: it converts the raw 3D point cloud into two-dimensional (2D) depth images and divides the features into edges and surfaces for fast matching [24]. Image-based methods have shorter computation times and smaller cumulative errors than 3D direct-matching methods. LeGO LOAM SLAM (lightweight and ground-optimized lidar odometry and mapping on variable terrain), developed by Shan et al. [25] in 2018, improves on LOAM by first extracting ground features with the lower laser beams, which reduces the number of points that must be matched. As a result, 3D point cloud models of the immediate surroundings can be created at high speed without advanced hardware such as GPUs, which is a major practical advantage. LeGO LOAM SLAM also records odometry and poses, which helps address loop closure and self-localization failure, the most troublesome problems in SLAM. Because of its high stability, LeGO LOAM SLAM was presented as a standard image-based method in a review by Huang [23].

Past lidar SLAM studies have focused on environments such as autonomous driving and construction sites [23,26,27]. The detected objects were usually man-made, with simple 3D structures, and plants, with their complex structures, have been discussed relatively little [23,28,29,30,31,32,33,34,35]. Among these studies, the iterative closest point (ICP) method, a 3D direct-matching approach, has been used to study tree crown structure, diameter at breast height, and plant parameters such as tree height. However, reviews of SLAM have indicated that ICP suffers from serious cumulative error and loop-closure problems, and its localization failures in large-scale surveys and its model stability have been called into question [23,26,27,36]. Image-based methods have been confirmed to measure tree height and diameter at breast height with high accuracy, but branch distribution, methods for extracting small branches, and comparisons of tree vigor for agricultural applications involving fruit trees have received little attention [37,38,39,40,41]. Few studies have addressed the 3D reconstruction of fruit trees or model stability during the winter pruning period [42]. Moreover, whether 3D lidar SLAM outperforms UAV-SfM, which has been widely used in recent years, in terms of modeling, detection accuracy, and computation time has not been sufficiently discussed [15,16,42,43,44].

Therefore, the main objective of this study was to evaluate the accuracy of backpack 3D lidar SLAM and UAV-SfM for 3D modeling and major branch detection in peach trees during the winter pruning period. The model accuracy results, obtained from measurements before and after winter pruning, were compared with the actual fresh weights of the pruned branches. In addition, we proposed using the spatial density of the point cloud as the basis for detecting branches of different thicknesses in 3D point cloud data without color information (Figure 1).

2. Materials and Methods

2.1. Experiments

This experiment was conducted in a peach orchard (36.04892°N, 140.0775°E; area: 80 m × 40 m) at the Institute of Fruit Tree and Tea Science, National Agriculture and Food Research Organization (NARO), in Tsukuba, Ibaraki, Japan. The orchard is surrounded by windbreak trees, and iron posts and support frames are set up around the peach trees to support bird-protection nets. The peach trees were 12 years old and were cultivated and managed normally. There were two cultivars, Akatsuki and Kawanakajima Hakuto, and the average tree height was approximately 3 m. Growers perform winter pruning of the peach trees every winter.

Pruning began in January 2022; the pruning of columns B, C, and D (shown in Figure 2) was completed by 4 February, and most of the pruning was completed by 25 February. In addition, experimental pruning was conducted from 25 February to 4 March on the following trees: No. 1, A4; No. 2, B2; No. 3, B3; Nos. 4 and 5, C4. After pruning, the axial width and fresh weight of the pruned branches were measured. In the experimental area, branch diameters were measured near the major branch, at the fork, and at the end of the major branch. The diameter at the center of the branch was recorded for a random sample of 25% of the cut branches in test area B3.

The 3D lidar SLAM measurements were performed on 24 December 2021, 4 and 24 February 2022, and 4 March 2022. The UAV-SfM measurements were performed on 4 February 2022 and 4 March 2022. A white pesticide was sprayed on the farm on 2 March to prevent disease; after spraying, the branches and buds turned white, and scattered white spots were visible.

2.2. The Measurement of 3D Lidar SLAM

The 3D lidar sensor used in this study was the Velodyne VLP-16 omnidirectional 3D lidar sensor (Velodyne, San Jose, CA, USA), combined with LeGO LOAM SLAM (lightweight and ground-optimized lidar odometry and mapping), developed by Shan et al. [25]. The VLP-16 has a built-in IMU that records the inclination and acceleration of the device, and 16 laser transceivers spanning ±15° vertically, which rotate around the vertical axis to scan the surroundings. When a laser beam hits an obstacle, it is reflected back to the receiver, and the system calculates the distance to the obstacle from the travel time of the beam. The lidar uses Class 1 eye-safe laser beams at a wavelength of 903 nm, which are considered to have a low impact on the human eye.
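The time-of-flight ranging described above reduces to r = c·t/2, where t is the round-trip time of the pulse. The minimal Python sketch below computes a range from a round-trip time and converts one return into Cartesian coordinates. The beam indexing (lowest to highest, evenly spaced 2° apart, which is not the sensor's firing order) and the x-forward, counterclockwise-azimuth, z-up frame are illustrative assumptions; the VLP-16 manual defines the actual channel order and axes.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s)

def tof_range(round_trip_s):
    """Distance to the target from the round-trip time of a laser pulse: r = c * t / 2."""
    return C * round_trip_s / 2.0

def beam_elevation_deg(beam):
    """Elevation of beam 0..15, counted from the lowest beam upward.
    Assumes 16 beams evenly spaced 2 degrees apart over -15..+15 degrees
    (this is NOT the sensor's firing/channel order)."""
    if not 0 <= beam < 16:
        raise ValueError("beam index must be in 0..15")
    return -15.0 + 2.0 * beam

def to_xyz(r, azimuth_deg, elevation_deg):
    """Range + beam angles -> Cartesian point in an assumed x-forward,
    counterclockwise-azimuth, z-up sensor frame."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))

# A return from a target about 4 m away
t = 2 * 4.0 / C                      # round-trip time, roughly 26.7 ns
print(tof_range(t), to_xyz(tof_range(t), 30.0, beam_elevation_deg(8)))
```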

LeGO LOAM SLAM is a modification of the 3D lidar-based LOAM SLAM technique. Taking the 16-beam VLP-16 as an example, the seven lowest beams were used for ground detection: points for which the angle between vertically adjacent points was less than 10° were labeled as ground points, and the rest as nonground points. The point cloud was then projected onto a range (distance) image in which each point P has a unique pixel whose value is the distance from P to the sensor. Because ground points are usually the main source of noise, a column-wise evaluation of the range image was performed to extract the ground points; the remaining range image was then clustered, clusters of fewer than 30 points were filtered out, and the retained clusters were assigned different labels. The ground points were then clustered. Processing the ground points separately, owing to their particular characteristics, improved computational efficiency and yielded more stable features. Features were then extracted separately from the ground points and the segmented points, mainly using the LOAM method. The 360° scan was divided into six equal sectors of 300 points each (1800 points per laser per rotation for the VLP-16), so each rotation of the 16 lasers produced a 16 × 1800 range image. The curvature (smoothness) of each point was calculated and sorted within each sector, and the points were then divided into edge features and surface features (Figure 3). Lidar odometry was used to estimate the relative pose of the sensor between two consecutive range images, and features in the two images were matched to improve the accuracy and efficiency of matching. Unlike LOAM, LeGO LOAM uses a two-step Levenberg–Marquardt (LM) optimization: planar features from the ground first estimate the vertical translation, roll, and pitch, and edge features then estimate the remaining horizontal translation and yaw, each step finding the pose that minimizes the distances between matched features. This approach reduces computation time by approximately 35% while achieving accuracy equal to that of LOAM. In addition, to reduce the amount of stored data, LeGO LOAM does not store the point cloud map derived from each range image; instead, it records feature sets bound to the estimated lidar poses and finally stitches the feature sets within 100 m of the current position into the point cloud map. This method has a significantly shorter processing time than other lidar SLAM methods and can display the current 3D point cloud while measuring.
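The range-image projection and LOAM-style edge/surface selection described above can be sketched as follows. This is a simplified illustration, not the LeGO LOAM SLAM source code. The evenly spaced beam angles, the smoothness formula (the LOAM definition: summed range differences to the neighbors, normalized by neighbor count and range), the five-neighbor window, and the thresholds and per-sector counts are all assumptions chosen for readability; occlusion handling and neighbor suppression are omitted.

```python
import numpy as np

N_SCAN, HORIZON_SCAN = 16, 1800      # range-image size given in the text
ANG_BOTTOM, ANG_RES_Y = -15.0, 2.0   # assumption: 16 beams evenly spaced over -15..+15 deg
ANG_RES_X = 360.0 / HORIZON_SCAN     # 0.2 deg per column

def project_to_range_image(points):
    """Project (N, 3) xyz points into a 16 x 1800 range image; np.inf marks empty pixels."""
    img = np.full((N_SCAN, HORIZON_SCAN), np.inf)
    x, y, z = points.T
    rng = np.sqrt(x**2 + y**2 + z**2)
    elev = np.degrees(np.arctan2(z, np.hypot(x, y)))
    row = np.round((elev - ANG_BOTTOM) / ANG_RES_Y).astype(int)
    azim = np.degrees(np.arctan2(y, x)) % 360.0
    col = (azim / ANG_RES_X).astype(int) % HORIZON_SCAN
    ok = (row >= 0) & (row < N_SCAN)
    img[row[ok], col[ok]] = rng[ok]   # if two points share a pixel, only one is kept
    return img

def smoothness(row_ranges, half_window=5):
    """LOAM-style smoothness per pixel of one scan row:
    |sum_j (r_j - r_i)| / (n * r_i) over n = 2 * half_window neighbours.
    Pixels without a full window (or with empty neighbours) get nan/inf."""
    r = np.asarray(row_ranges, dtype=float)
    c = np.full(r.shape, np.nan)
    n = 2 * half_window
    for i in range(half_window, len(r) - half_window):
        nb = np.r_[r[i - half_window:i], r[i + 1:i + 1 + half_window]]
        c[i] = abs(np.sum(nb - r[i])) / (n * r[i])
    return c

def classify_row(row_ranges, n_sectors=6, edge_thr=0.05, surf_thr=0.01,
                 n_edge=2, n_surf=4):
    """Split one row into equal sectors (6 x 300 columns for a 1800-column row)
    and pick the sharpest points as edges and the smoothest as surfaces.
    Thresholds and per-sector counts are illustrative assumptions."""
    c = smoothness(row_ranges)
    edges, surfs = [], []
    for sector in np.array_split(np.arange(len(c)), n_sectors):
        valid = sector[np.isfinite(c[sector])]
        order = valid[np.argsort(c[valid])]          # ascending smoothness value
        surfs += [int(i) for i in order if c[i] < surf_thr][:n_surf]
        edges += [int(i) for i in order[::-1] if c[i] > edge_thr][:n_edge]
    return sorted(edges), sorted(surfs)
```

For example, a row of ranges that jumps from 5 m to 8 m (a wall behind a branch edge) yields edge points on both sides of the jump and surface points in the flat stretches of every sector.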

In the field test, the controller, power supply, and other equipment were carried in a backpack by the operator, and the lidar was mounted at approximately head height, about 170 cm above the ground (Figure 1C). The lidar was connected to a laptop computer via Ethernet, and the operator walked through the farm while checking the status of the 3D point cloud being created. The walking speed was slightly slower than normal, with steps of approximately 0.5 m, and turns were made on the spot at a slow rate of about 30° per second. The 100 V AC power required by the system was supplied by a portable power station (Anker PowerHouse 200; Changsha, China), which provided stable output even at low currents. Including the power supply, the total weight of the equipment was 4.3 kg.

2.3. UAV-SfM

The aerial images were captured with a DJI Inspire 2 UAV (DJI, Shenzhen, China) equipped with its dedicated camera (Zenmuse X3). The flight speed was set to 0.5 m/s and the altitude to 15 m. The maximum flight time was 15 min, and for safety, all flight plans were set to be completed within 12.5 min. The flight path was planned in advance so that photo overlap on the ground exceeded 80%, and three flights were planned to cover the entire farm. The wind speed during the flights was below 1.0 m/s, and the flights took place between 10:30 and 13:00.

The camera resolution was set to 4000 × 3000 pixels and the shutter speed to 1/2000 s, with automatic interval shooting of one image every 3 s. Because the camera was integrated with the UAV, the aircraft's GPS coordinates at the time of each shot were recorded automatically in the photos. The ground sample distance of the photos was 7 mm/pixel.
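As a quick consistency check on the flight parameters, the forward overlap between consecutive photos can be estimated from the flight speed, the shooting interval, and the image footprint. The Python sketch below assumes nadir images over flat ground and that the 3000-pixel side of each image lies along the flight direction; neither assumption is stated in the text.

```python
def forward_overlap(speed_m_s, interval_s, gsd_m, pixels_along_track):
    """Fraction of each photo's along-track footprint shared with the next photo."""
    footprint_m = gsd_m * pixels_along_track   # ground length covered by one image
    spacing_m = speed_m_s * interval_s         # distance flown between exposures
    return 1.0 - spacing_m / footprint_m

# 0.5 m/s, one image every 3 s, 7 mm/pixel, 3000 pixels along track (assumed orientation)
overlap = forward_overlap(0.5, 3.0, 0.007, 3000)
print(f"forward overlap = {overlap:.1%}")
```

With these values the footprint is about 21 m and the exposure spacing 1.5 m, giving roughly 93% forward overlap, which is consistent with the stated requirement of more than 80%.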

The automatic flight software was designed by the Robotics Research Institute of NARO using the API provided by DJI. Before each flight, the data, including the latitude and longitude of the planned path and the flight altitude, were uploaded to the aircraft, and the flight was then performed. The aerial images were sorted by date and imported into Agisoft Metashape version 1.72 (formerly Agisoft PhotoScan) to produce a 3D point cloud model and a dense 3D point cloud model. The number of points obtained and the time required to construct each model were recorded. No ground markers were used as references for UAV-SfM in this study. The error in the GNSS coordinates of the UAV was 0.5 m.

2.4. Overlapping of Point Clouds

The point clouds were collected using different methods and at different times over more than two months, and they lacked distinguishing features, such as color or markers, for overlapping them. Therefore, we used CloudCompare software (http://www.cloudcompare.org/, accessed on 20 July 2021), which has excellent point cloud editing and display functions, to fit pairs of point cloud models using the iterative closest point (ICP) algorithm. One point cloud model is set as the reference cloud and the other as the matching cloud. Each point of the matching cloud is first paired with the nearest point of the reference cloud; the rotation, translation, and scale of the matching cloud are then adjusted to minimize the root mean square (RMS) of the point-to-point distances. This process is repeated on the transformed data until the change in RMS falls below a threshold. In this study, the number of randomly sampled points was set to 500,000 and the RMS change threshold to 1 × 10⁻⁷ to achieve high-accuracy results (Figure 4).
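The registration loop described above can be sketched as a minimal point-to-point ICP in Python with NumPy and SciPy. This illustrates the technique and is not CloudCompare's implementation: it estimates only rotation and translation (the scale adjustment is omitted), uses a closed-form SVD (Kabsch) fit at each step, and draws the random subsample once rather than per iteration. The 500,000-point sample limit mirrors the text, and the stopping threshold on the RMS change is taken here as 1e-7.

```python
import numpy as np
from scipy.spatial import cKDTree

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~ dst_i (Kabsch/SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))          # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_d - R @ mu_s

def icp(reference, moving, max_iter=100, rms_tol=1e-7, sample=500_000, seed=0):
    """Rigid point-to-point ICP aligning `moving` to `reference`.
    Returns (R, t, rms) such that moving @ R.T + t approximates reference."""
    if len(moving) > sample:                        # random subsample, drawn once
        idx = np.random.default_rng(seed).choice(len(moving), sample, replace=False)
        moving = moving[idx]
    tree = cKDTree(reference)
    R_total, t_total = np.eye(3), np.zeros(3)
    current = moving.copy()
    prev_rms = np.inf
    for _ in range(max_iter):
        dist, nn = tree.query(current)              # nearest reference point per moving point
        rms = np.sqrt(np.mean(dist**2))
        if abs(prev_rms - rms) < rms_tol:           # stop when the RMS no longer changes
            break
        prev_rms = rms
        R, t = best_rigid_transform(current, reference[nn])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    dist, _ = tree.query(current)
    return R_total, t_total, float(np.sqrt(np.mean(dist**2)))
```

Like any ICP variant, this converges only from a reasonable initial alignment, which is why coarse pre-alignment of the two clouds matters in practice.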

After the iteration converged, the ground and surrounding wire-mesh points were removed manually, and ICP fitting was performed again with the same settings as above. After this fitting, the point clouds were cross-fitted, and the final RMS values were recorded. The differences between the 3D lidar SLAM and UAV-SfM models were compared and analyzed using profile plots taken through the middle trunk section of the orange block in Figure 2.

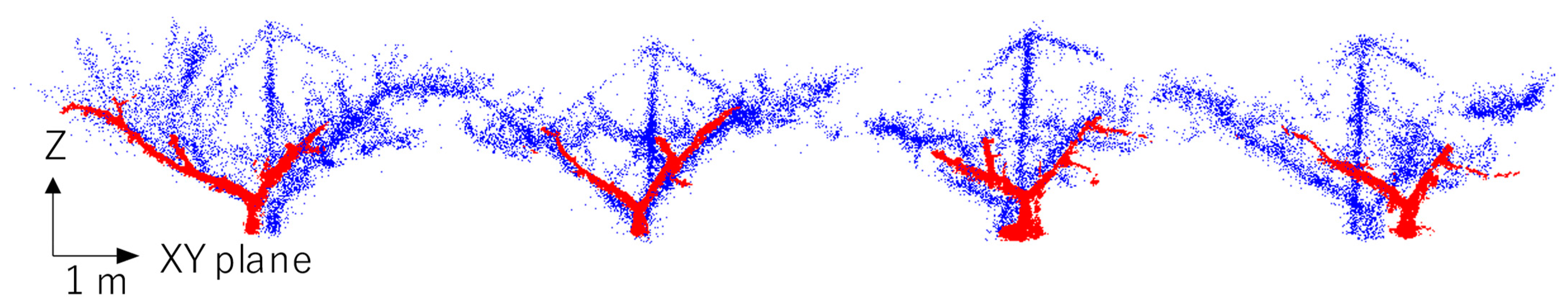

2.5. Classification of Branches and Density Calculation

Because pruning targets the lateral branches, such as small branches growing from the major and sub-major branches, it is necessary to distinguish the major and sub-major branches from the rest. To date, mainstream research has classified branches by color, using photographs captured simultaneously, whereas point clouds produced by lidar alone are mostly labeled visually and then separated. In the point cloud models obtained by both UAV-SfM and 3D lidar SLAM, branches less than 5 cm in diameter were difficult to model compared with the major branches, and their point clouds were sparse and scattered. In contrast, thicker branches with diameters of 5 cm or more were surrounded by dense point clouds, and their 3D contours could be seen with the naked eye. Therefore, this study proposes classifying major and small branches by the number of points in the space around each point, using a spherical neighborhood.

The number of points within a specified radius around each point was calculated (sphere space density; Equation (1)) and stored as an attribute of that point.

$$D = \frac{N}{\frac{4}{3}\pi R^{3}} \qquad (1)$$

where D is the sphere space density, N is the number of points within the sphere, and R is the radius.
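The per-point neighbor count can be computed efficiently with a k-d tree. The sketch below uses SciPy's `cKDTree.query_ball_point` with `return_length=True`; the count includes the query point itself, and the conversion to a volume density N/((4/3)πR³) assumes the density is normalized by the sphere volume. The radius in the usage lines is a hypothetical placeholder, since the text does not state the value used.

```python
import numpy as np
from scipy.spatial import cKDTree

def sphere_neighbor_counts(points, radius):
    """Number of points within `radius` of each point (the point itself is counted)."""
    tree = cKDTree(points)
    return tree.query_ball_point(points, r=radius, return_length=True)

def sphere_volume_density(counts, radius):
    """Neighbour counts normalised by the sphere volume: N / (4/3 * pi * R^3)."""
    return np.asarray(counts, dtype=float) / (4.0 / 3.0 * np.pi * radius**3)

# Hypothetical radius; the study's radius is not given in this section.
pts = np.random.default_rng(0).uniform(0.0, 1.0, size=(1000, 3))
counts = sphere_neighbor_counts(pts, radius=0.05)
density = sphere_volume_density(counts, radius=0.05)
```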

In addition, to evaluate the actual performance of the branch classification, the number of points inside a cylindrical block was counted in the 3D point cloud model generated by 3D lidar SLAM for each measured branch; the base of the cylinder was a circle with the measured diameter of the branch, and its height was 20.
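The cylinder-based check can be sketched as a point-in-cylinder test: project each point onto the branch axis and keep those whose axial position lies within the cylinder height and whose distance from the axis is at most half the measured diameter. The axis direction, the placement of the base center, and the units are assumptions for illustration; the text specifies only the diameter-based circular base and a height of 20.

```python
import numpy as np

def count_in_cylinder(points, base_center, axis, diameter, height):
    """Count points inside a cylinder whose base circle (given diameter) is centred
    at `base_center` and which extends `height` along `axis`."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    v = np.asarray(points, dtype=float) - np.asarray(base_center, dtype=float)
    along = v @ axis                                             # position along the branch axis
    radial = np.linalg.norm(v - np.outer(along, axis), axis=1)   # distance from the axis
    inside = (along >= 0.0) & (along <= height) & (radial <= diameter / 2.0)
    return int(inside.sum())
```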

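Thereafter, the spatial density values of all points were represented as a histogram. If the distribution was approximately normal, points with a density above the arithmetic mean μ (Equation (2)) plus one standard deviation σ (Equation (3)) were defined as thick branches, such as major and sub-major branches, whereas the remaining points were regarded as small branches:

$$\mu = \frac{1}{n}\sum_{i=1}^{n} D_i \qquad (2)$$

$$\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(D_i - \mu\right)^{2}} \qquad (3)$$

where n is the number of points and D_i is the sphere space density of point i.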
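The mean-plus-one-standard-deviation rule can be expressed in a few lines of NumPy. This sketch uses the population standard deviation (`ddof=0`) and a strict "above" comparison; both are assumptions where the text is not explicit, and the histogram-based normality check is left out.

```python
import numpy as np

def classify_by_density(density):
    """True = thick branch (density above mean + 1 SD), False = small branch."""
    d = np.asarray(density, dtype=float)
    mu = d.mean()                 # arithmetic mean
    sigma = d.std(ddof=0)         # population standard deviation
    return d > mu + sigma
```

For densities [1, 1, 1, 1, 10], μ = 2.8 and σ = 3.6, so only the last point (10 > 6.4) is labeled a thick branch.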

2.6. Converting the Point Cloud Model to a Voxel Model

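The distribution of points in space is not uniform, so point clouds with different numbers of points cannot be compared directly with the measured values. Following Hosoi and Omasa [19], we therefore converted each point cloud into a voxel model to homogenize the point cloud values, using the following coordinate conversion formula:

$$(i, j, k) = \left(\left\lfloor \frac{x}{\Delta x'} \right\rfloor, \left\lfloor \frac{y}{\Delta y'} \right\rfloor, \left\lfloor \frac{z}{\Delta z'} \right\rfloor\right)$$

where (x, y, z) are the coordinates of a point and (i, j, k) are the indices of the voxel containing it. The voxel size was set to 10 cm × 10 cm × 10 cm, so Δx′, Δy′, and Δz′ were all 10 cm (Figure 5).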

Thereafter, the number of occupied voxels in each model was counted as the volume information of the point cloud. For each method, voxel models of the trees in columns B–E built before pruning (4 February) and after pruning (4 March) were compared. The numbers of voxels in the two periods were subtracted to obtain the volume change, and the changes for trees A4, B2, B3, and C4 were taken as the estimated volumes of pruned branches Nos. 1–5. Finally, the estimated volumes of the pruned branches were compared with their actual fresh weights.
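The voxel conversion and the before/after subtraction can be sketched as follows. The floor-based voxel indexing and the conversion of the voxel-count difference into cubic meters (each 10 cm voxel being 0.001 m³) are illustrative assumptions; the study itself compares the voxel-count change directly with fresh weight.

```python
import numpy as np

VOXEL_M = 0.10  # voxel edge length: 10 cm, as stated in the text

def voxel_indices(points, size=VOXEL_M):
    """Integer voxel index of each point: (floor(x/size), floor(y/size), floor(z/size))."""
    return np.floor(np.asarray(points, dtype=float) / size).astype(np.int64)

def occupied_voxels(points, size=VOXEL_M):
    """Number of distinct voxels containing at least one point."""
    return len(np.unique(voxel_indices(points, size), axis=0))

def pruned_volume_m3(before_pts, after_pts, size=VOXEL_M):
    """(voxels before pruning - voxels after pruning) x volume of one voxel."""
    return (occupied_voxels(before_pts, size) - occupied_voxels(after_pts, size)) * size**3
```

Because only the occupied-voxel count matters, many points falling in the same voxel contribute once, which is what removes the dependence on raw point density.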

4. Discussion

In terms of resolution, the spatial resolution of the UAV-SfM images was 7 mm/pixel. The closest distance between the 3D lidar walking path and the center of a peach tree was approximately 4 m. Each laser produced 1800 points per rotation, that is, 5 points per degree, so ideally the 16 lasers together produce 16 × 5 = 80 points per degree. Projected onto a wall 4 m away, this corresponds to about 1.64 points per square centimeter, slightly lower than the spatial resolution of the UAV-SfM. Nevertheless, the results of this study show that ground-based (walking) 3D lidar SLAM produced better point cloud models than UAV-SfM for leafless peach tree branches in winter (Figure 9). Although UAV-SfM produced better models of the ground, it had more difficulty modeling the key plant structures, particularly the fine branches (Figure 2). It should be noted that although the total number of points was several times that of the original point cloud, only a very small proportion of the voxels (approximately 3%) was constructed in the branch part of the voxel model, which indicates that this model is not appropriate for branch detection. The overall experimental results also show that 3D lidar SLAM has a large advantage over UAV-SfM in point cloud production time.
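The resolution comparison above can be reproduced with a short calculation. The 1.64 points/cm² figure is recovered if the 80 points per degree of azimuth are treated as falling within a 1° × 1° patch at 4 m (about 6.98 cm on a side); this interpretation, and the use of the 7 mm ground sample distance as the UAV-SfM resolution, are assumptions made to reproduce the stated numbers. The idealization ignores the roughly 2° vertical spacing between VLP-16 beams.

```python
import math

def lidar_points_per_cm2(distance_m, points_per_rev=1800, n_lasers=16):
    """Ideal lidar density used in the text: all lasers' points per degree of
    azimuth, treated as falling in a 1 deg x 1 deg patch at `distance_m`."""
    pts_per_deg = n_lasers * points_per_rev / 360.0             # 16 * 5 = 80
    side_cm = distance_m * 100.0 * math.tan(math.radians(1.0))  # patch side, ~6.98 cm at 4 m
    return pts_per_deg / side_cm**2

def camera_pixels_per_cm2(gsd_mm):
    """Pixels per square centimetre for a given ground sample distance."""
    side_cm = gsd_mm / 10.0
    return 1.0 / side_cm**2

lidar = lidar_points_per_cm2(4.0)     # about 1.64
camera = camera_pixels_per_cm2(7.0)   # about 2.04
```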

The LeGO LOAM SLAM method used in this study builds 3D point clouds almost in real time, allowing users to see the point cloud being constructed while they move. If an error occurs, the measurement can be corrected or restarted immediately. Compared with the complex operation and long computation time of UAV-SfM, 3D lidar SLAM is highly competitive. The whole system can be operated by one person, and unlike UAV flights, which may require special permits or licenses in some countries, the backpack 3D lidar can be easily carried and moved, making it more broadly usable in general agricultural fields. In terms of price, a high-stability UAV currently costs approximately JPY 50,000–150,000, and SfM analysis software costs more than JPY 300,000, not including a high-performance computer and computing costs. The 3D lidar used in this study was a lightweight 16-beam model costing approximately JPY 600,000; it ran on Ubuntu with ROS and used the publicly available LeGO LOAM SLAM and other software at no extra cost, so it clearly has a greater competitive advantage in terms of price. In addition, major Japanese agricultural machinery manufacturers, such as Kubota and Yanmar, have been using lidar SLAM in their autonomous driving agricultural machinery, which should make it easier to bring this technology to market in the future.

Other studies on 3D lidar SLAM have tilted the lidar body to obtain a denser 3D point cloud model [13,38,39,40,41]. We also tried tilting the lidar body, but the point cloud could not be modeled smoothly, resulting in large errors. Because LeGO LOAM SLAM uses several downward-facing laser beams for ground detection when constructing the 3D point cloud, tilting may have prevented the ground points from being matched. According to the original report on LeGO LOAM SLAM, the lidar body should not be tilted during movement [25]. However, we know of no papers that use LeGO LOAM SLAM to examine modeling issues such as tilt, so a comparison is difficult, and this topic must be explored in the future.

In this study, the operator simply walked through the peach orchard carrying the equipment on their back. Although the operator's head might block some laser beams, this had no effect on point cloud modeling because the minimum modeling distance was set to more than 1 m. Moreover, the branches were mostly distributed 100–350 cm above the ground, and the lidar, mounted at approximately 170 cm, scanned 15° above and below the horizontal at each position, allowing the distribution of fruit tree branches to be captured effectively. The effect of different lidar scanning heights on the overall point cloud requires further study.
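The vertical band covered by the ±15° field of view at a given horizontal distance follows from simple trigonometry. The sketch below assumes a level sensor at 1.70 m over level ground; at the 4 m closest approach mentioned earlier in the Discussion it gives a band of roughly 0.63–2.77 m above the ground, so the upper part of the 100–350 cm branch zone falls within the beams only from farther positions along the walking path.

```python
import math

def vertical_coverage(sensor_height_m, distance_m, half_fov_deg=15.0):
    """Lowest and highest heights (m) hit by the +/-15 deg beams at a horizontal distance,
    assuming a level sensor over level ground."""
    dz = distance_m * math.tan(math.radians(half_fov_deg))
    return sensor_height_m - dz, sensor_height_m + dz

low, high = vertical_coverage(1.70, 4.0)   # roughly 0.63 m to 2.77 m
```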

Owing to the limitations of the VLP-16, each rotation yields only a 16 × 1800 range image for matching, so there is still much room to improve spatial resolution. A higher laser emission frequency and more laser beams should yield better resolution in point cloud modeling. In the LeGO LOAM SLAM matching algorithm, clusters of fewer than 30 points are treated as errors and deleted directly. Consequently, a constantly moving 3D lidar may not scan tiny branches often enough for them to survive the matching step, so factors such as moving speed and the distance to small targets in 3D lidar SLAM modeling should be examined in more detail. This also explains why the point cloud density of the major branches was much higher than that of thin branches. However, it also shows that the difficulty of detecting fine branches persists even with LeGO LOAM SLAM. Finally, the loop-closure problem, which is common in SLAM, was not observed in this study. This may be because leafless trees in winter leave relatively open space and the surrounding windbreak forest provided corresponding feature points. It may also reflect the improvements LeGO LOAM SLAM makes to loop closure.
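The cluster-size filter described above can be illustrated with a breadth-first segmentation of the range image. The sketch connects neighboring pixels using the angle criterion from the LeGO LOAM paper, β = atan2(d₂ sin α, d₁ − d₂ cos α), where d₁ ≥ d₂ are the two ranges and α is the angular step between them, with a 60° threshold, and then discards clusters with fewer than 30 points. It implements only the 30-point rule stated in the text, and the angular resolutions and threshold are assumed defaults rather than values taken from this study.

```python
from collections import deque
import numpy as np

def segment_range_image(rng, h_res_deg=0.2, v_res_deg=2.0, beta_deg=60.0, min_size=30):
    """Label connected clusters in a range image (np.inf = empty); clusters with fewer
    than `min_size` points keep label 0, i.e. they are discarded."""
    rows, cols = rng.shape
    valid = np.isfinite(rng) & (rng > 0)
    visited = np.zeros((rows, cols), dtype=bool)
    labels = np.zeros((rows, cols), dtype=int)
    beta_thr = np.radians(beta_deg)
    next_label = 1
    for r0 in range(rows):
        for c0 in range(cols):
            if not valid[r0, c0] or visited[r0, c0]:
                continue
            visited[r0, c0] = True
            queue, members = deque([(r0, c0)]), []
            while queue:
                r, c = queue.popleft()
                members.append((r, c))
                for dr, dc, res in ((1, 0, v_res_deg), (-1, 0, v_res_deg),
                                    (0, 1, h_res_deg), (0, -1, h_res_deg)):
                    rn, cn = r + dr, (c + dc) % cols        # columns wrap around 360 deg
                    if not 0 <= rn < rows or not valid[rn, cn] or visited[rn, cn]:
                        continue
                    d1 = max(rng[r, c], rng[rn, cn])
                    d2 = min(rng[r, c], rng[rn, cn])
                    alpha = np.radians(res)
                    beta = np.arctan2(d2 * np.sin(alpha), d1 - d2 * np.cos(alpha))
                    if beta > beta_thr:                      # same surface: grow the cluster
                        visited[rn, cn] = True
                        queue.append((rn, cn))
            if len(members) >= min_size:                     # keep only large clusters
                for r, c in members:
                    labels[r, c] = next_label
                next_label += 1
    return labels
```

A thin branch that appears in only a handful of pixels forms a cluster below the size limit and receives label 0, which is consistent with the loss of fine branches discussed above.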

The review papers on lidar SLAM by Xu et al. [26] and Huang [23] note that a variety of 2D lidar-based SLAM techniques have been developed and are more mature than 3D lidar SLAM. They also describe the range-image-based approach, starting with LOAM, as having better error recovery and shorter modeling times. In this study, 3D lidar SLAM also showed a clear advantage in modeling stability: although branches were removed by pruning between measurements, the error between the two models was relatively small (RMSE < 0.25 m) and can be attributed to changes such as pruning (Table 2). For UAV-SfM, the largest error, 0.22 m, occurred between the point cloud and the dense point cloud. This may be because the dense point cloud method uses more photo information to generate many more points from the point cloud model, rather than from three-dimensional matching. Another likely source of error between the UAV-SfM point clouds is differences in lighting and the white spots, which act as easily matched feature points. In particular, the white pesticide sprayed on 2 March left randomly distributed spots on every branch. Previous studies by Nguyen et al. [45], Paulus et al. [46], and Kochi et al. [47] showed that actively projected structured light or irregular speckle patterns can significantly increase the number of feature points in SfM calculations, which increases the number of points in the point cloud model and improves overall modeling accuracy; the pesticide spots may have had a similar effect. We consider this the most likely reason why the UAV-SfM model of 4 March, acquired after the spraying, had more points and higher quality than the 4 February model.

For branches, the core observation target, the modeling rate of 3D lidar SLAM (RMSE = 3084) was much higher than that of the UAV-SfM models (point cloud RMSE = 4496; dense point cloud RMSE = 4554). Although 3D lidar SLAM cannot model fine branches as accurately as TLS, the contrast between sparse and dense regions of the point cloud was clearly observable. Moreover, the spatial density branch detection method proposed in this paper correctly classified the major branch parts with diameters over 3 cm. In general, a larger branch diameter means a stronger structure, so this method might also be used to detect rigid obstacles in agricultural environments. Finally, because the structure of the major branches can be obtained, growth models of leaf density and growth status might in future be constructed from 3D point cloud data of fruit trees acquired at different times.