Segmentation and Connectivity Reconstruction of Urban Rivers from Sentinel-2 Multi-Spectral Imagery by the WaterSCNet Deep Learning Model

Abstract

1. Introduction

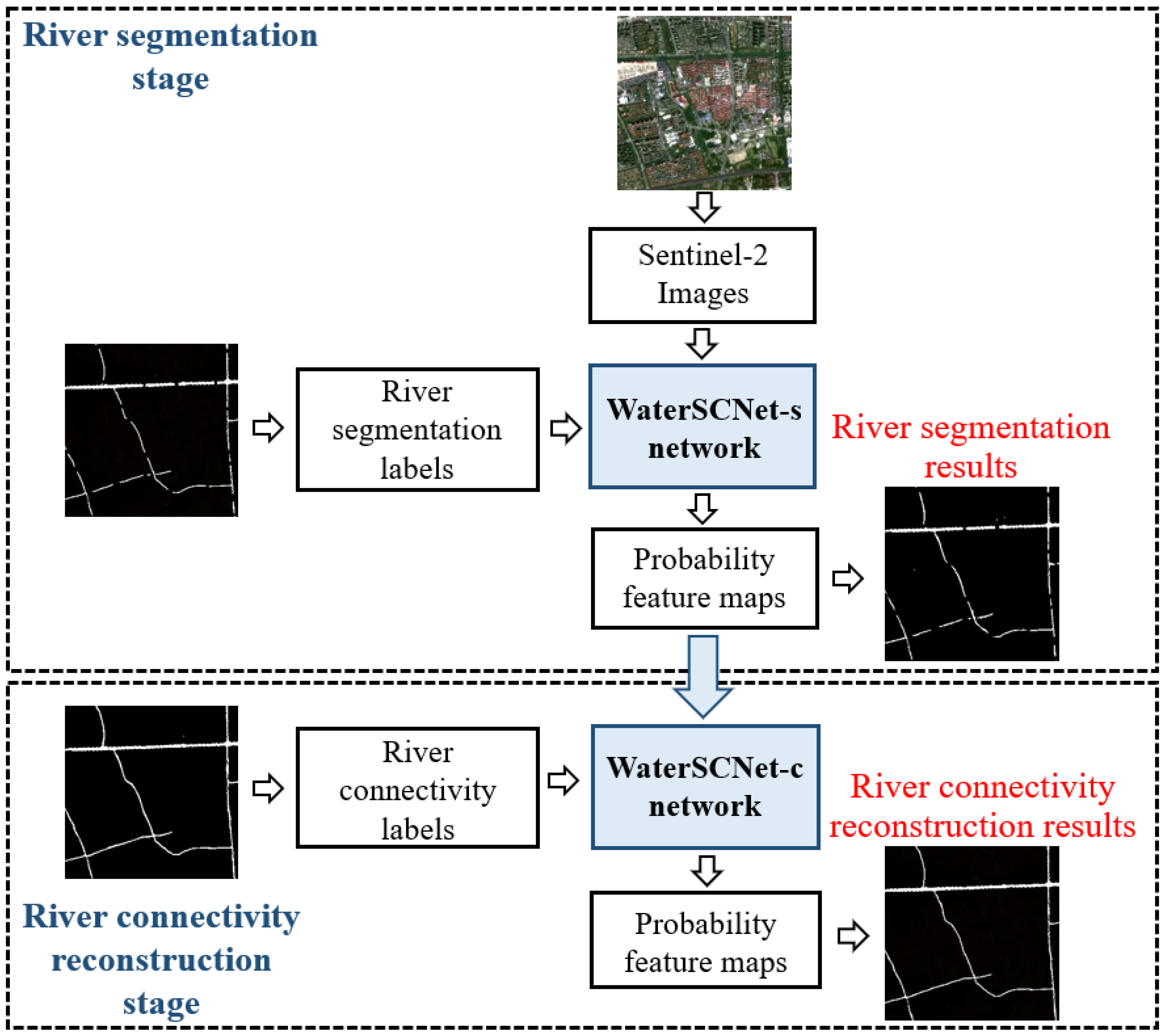

2. Methods

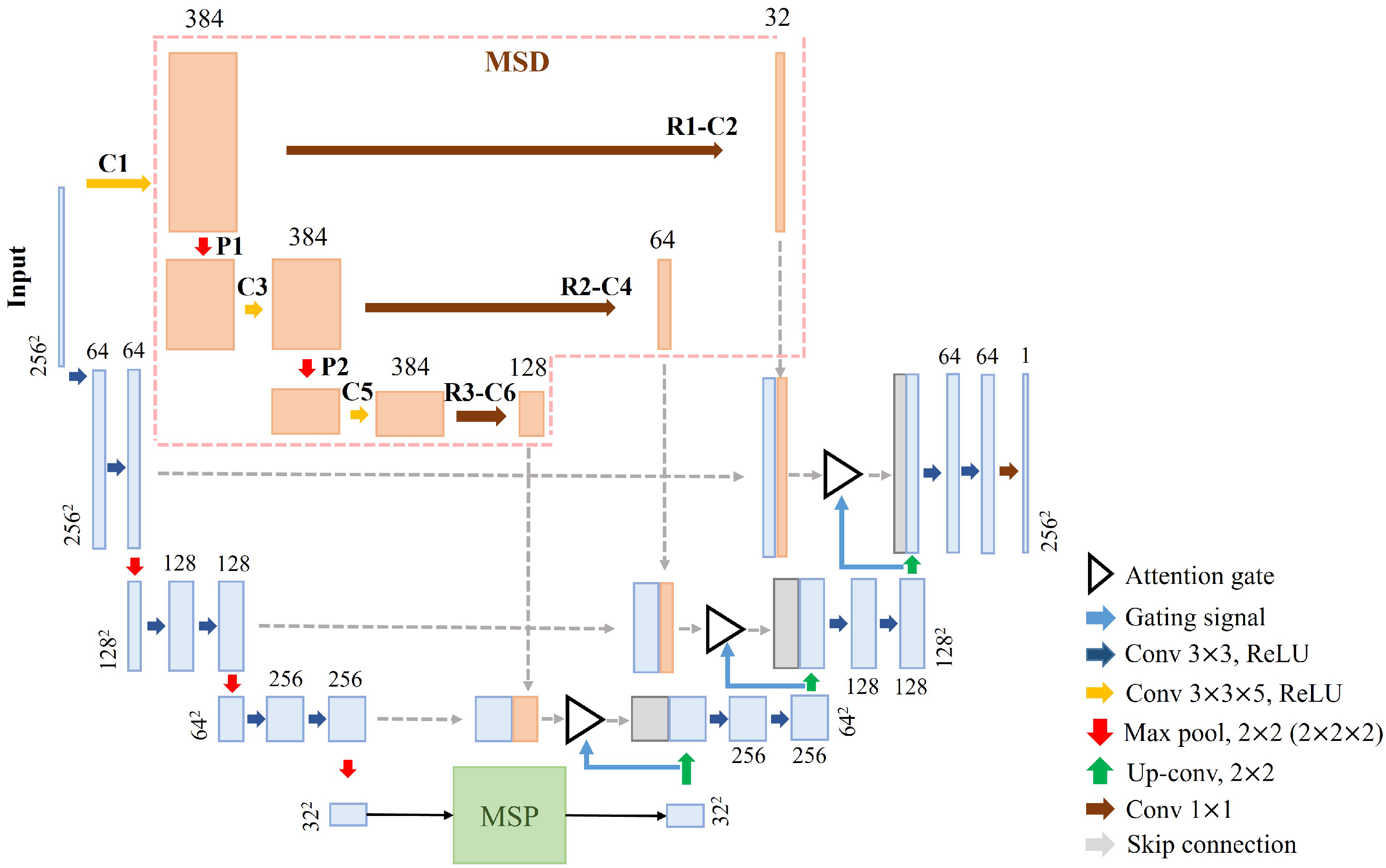

2.1. The WaterSCNet Model

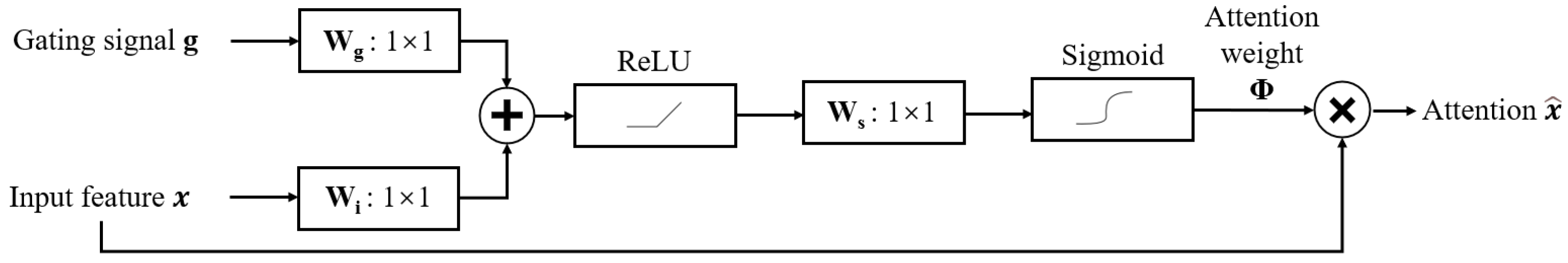

2.2. River Segmentation Subnetwork: WaterSCNet-s

2.2.1. The U-Shaped Encoder and Decoder Structure

2.2.2. The MSD Path Module

2.2.3. The MSP Block

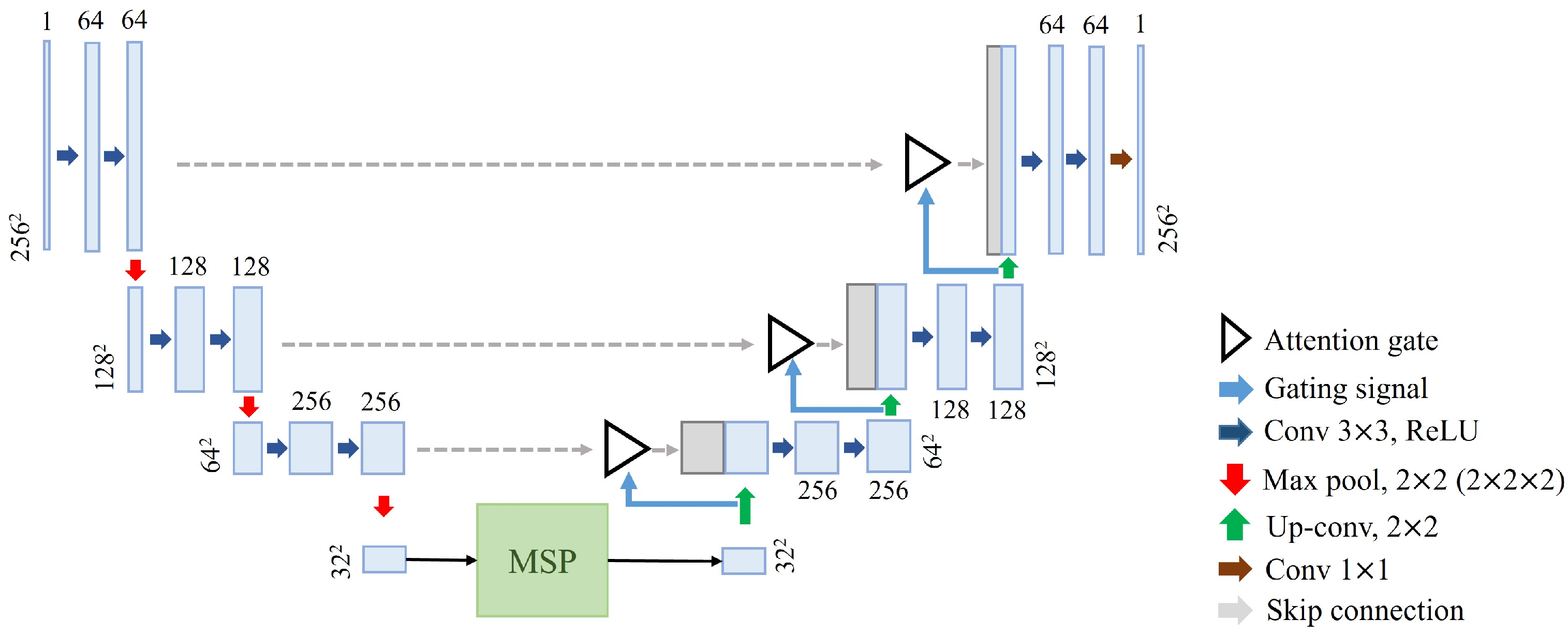

2.3. River Connectivity Reconstruction Subnetwork: WaterSCNet-c

3. Experiments

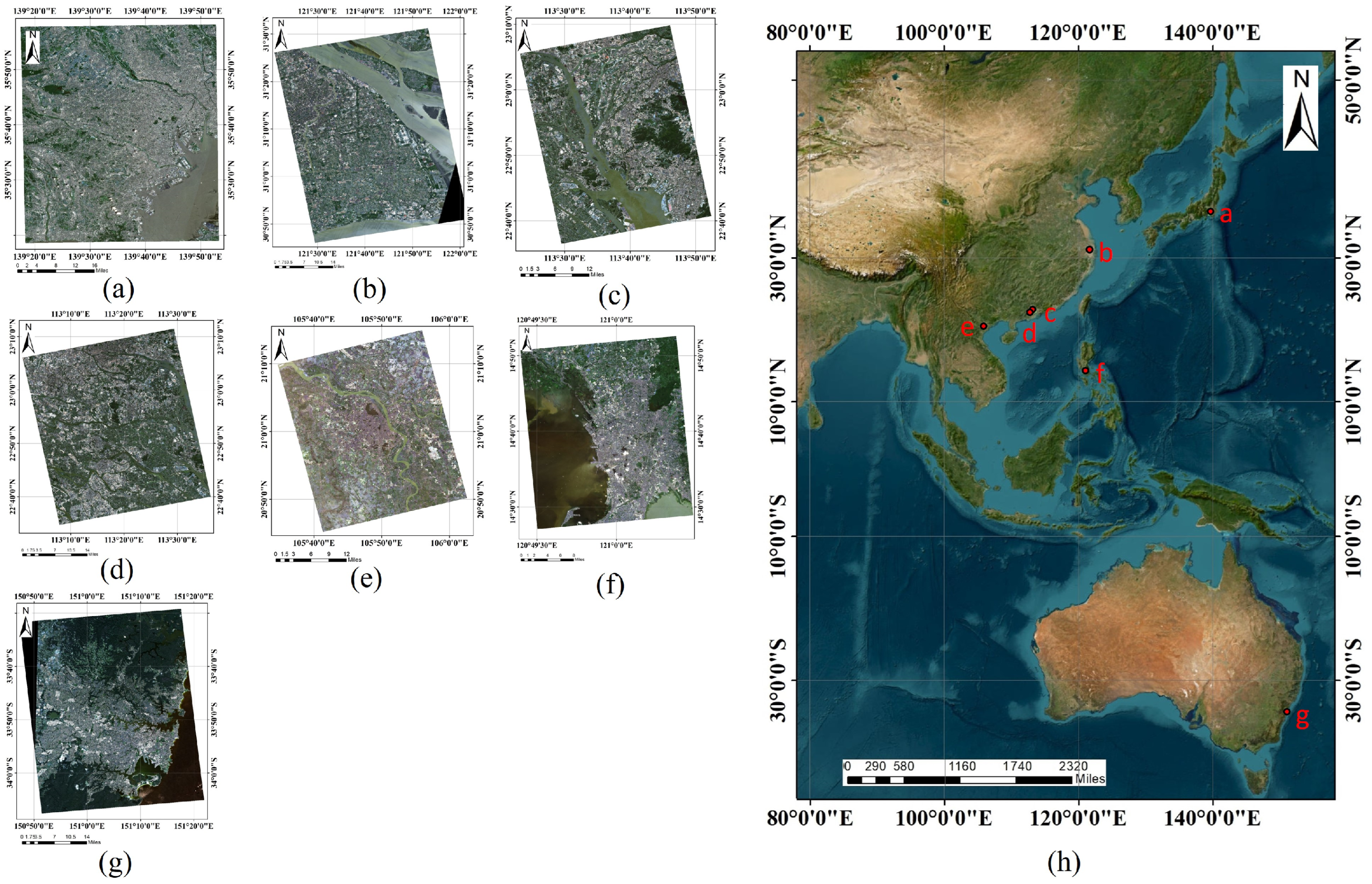

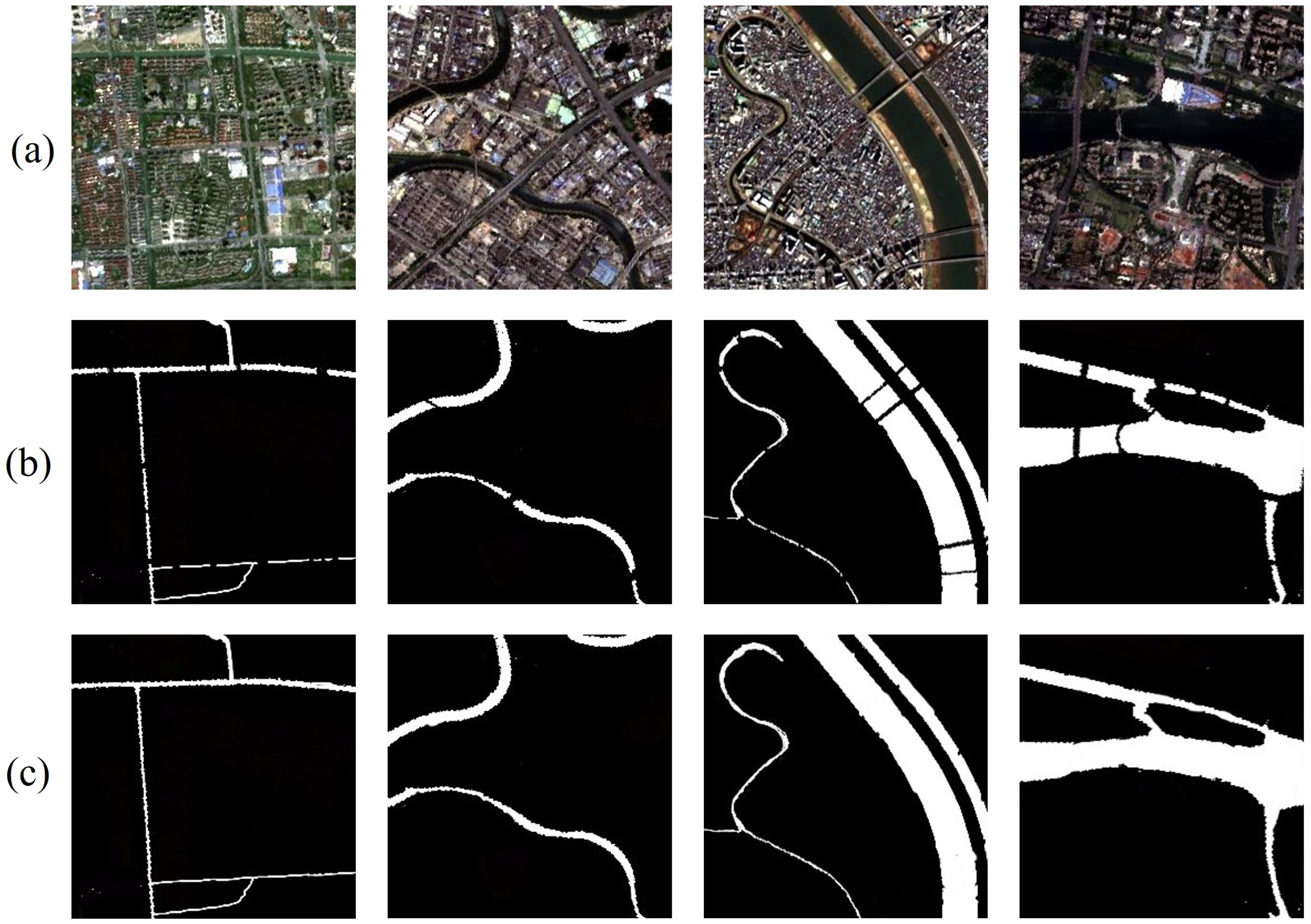

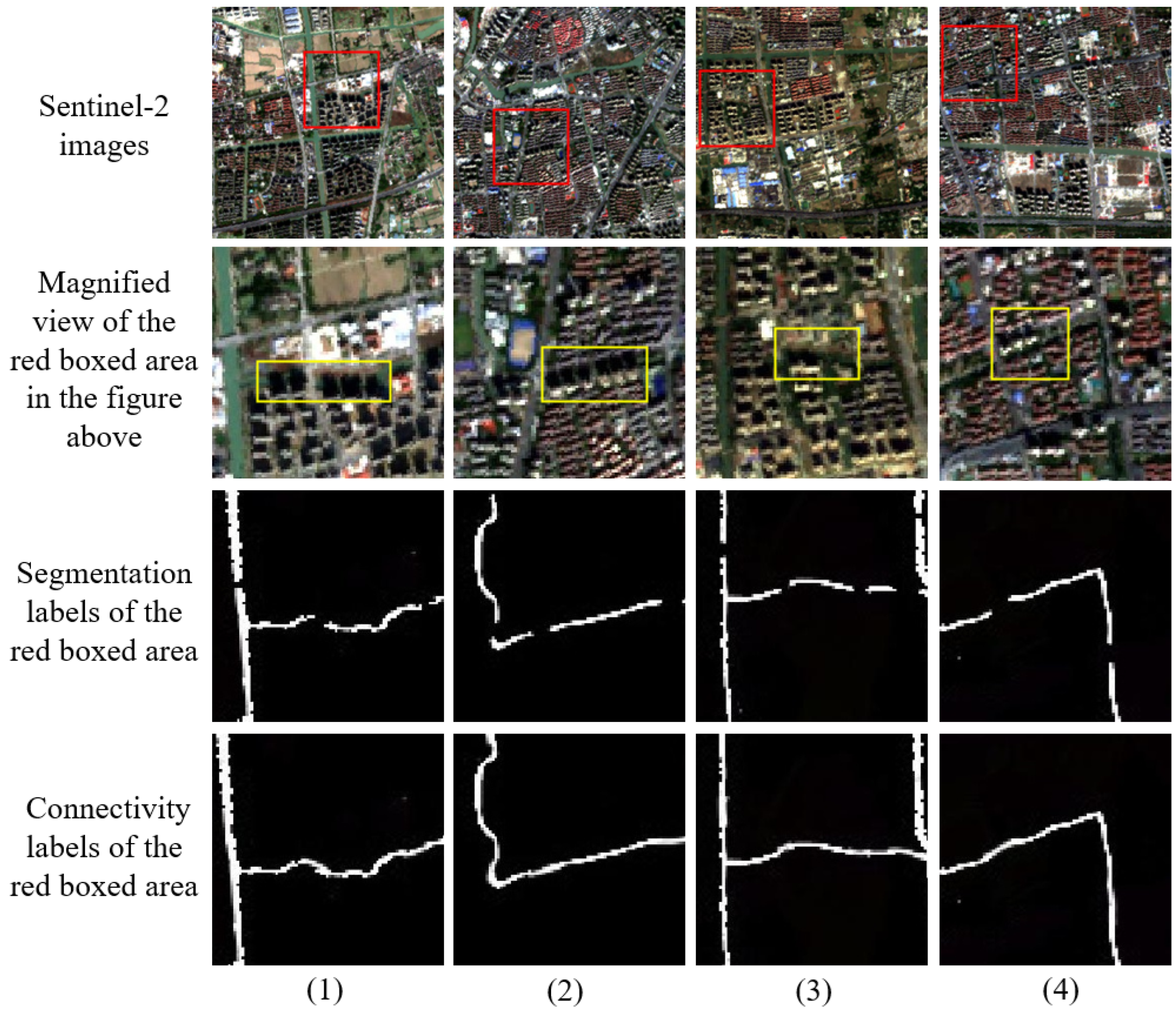

3.1. Experimental Data

3.2. Model Training

3.3. Experimental Design

3.4. Evaluation Metrics

4. Experimental Results and Discussion

4.1. Results of the Training Strategy Comparison Experiments

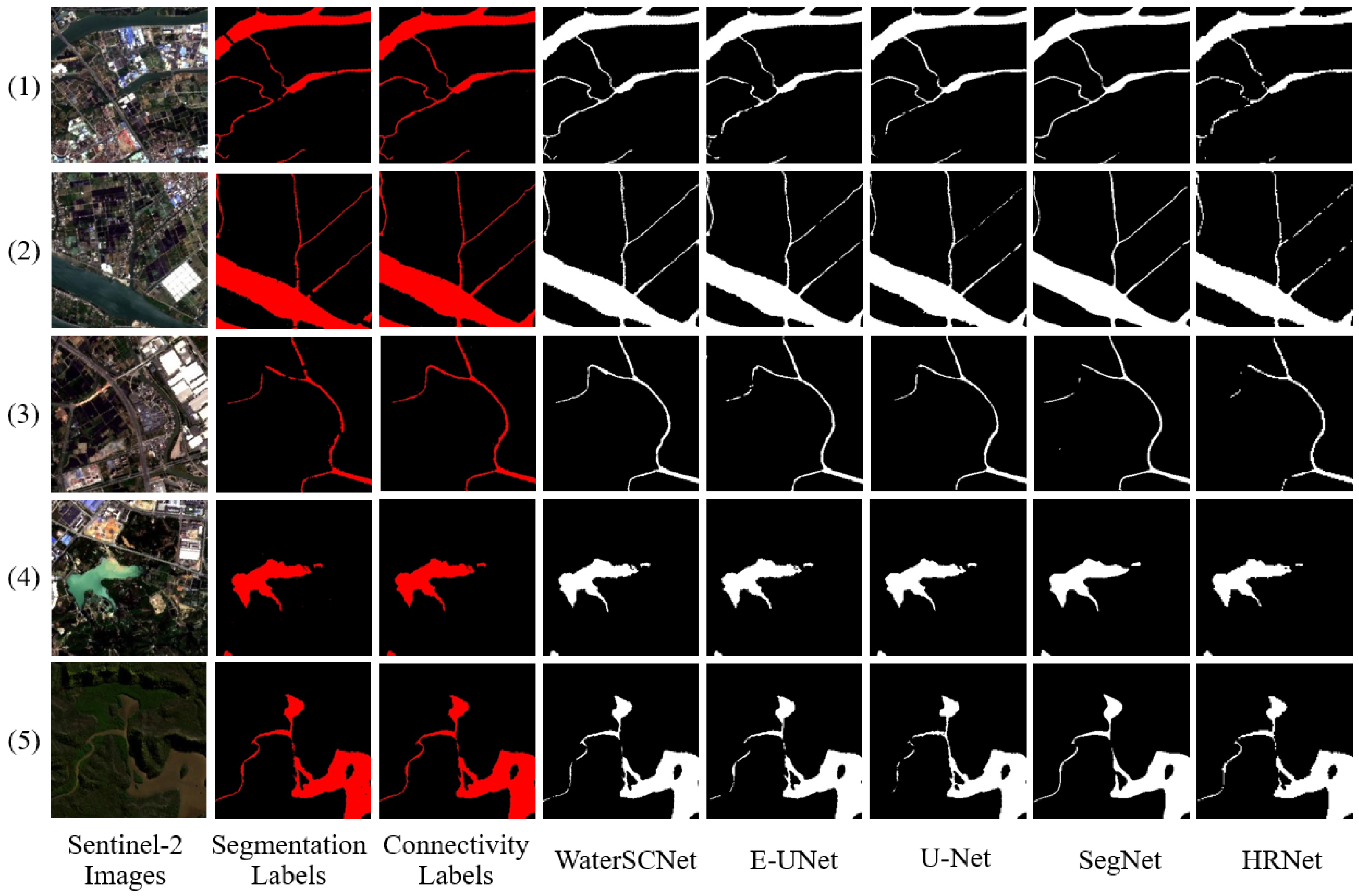

4.2. Results of the Performance Comparison Experiments

4.3. Comparison of Computational Costs for Model Training

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Layer Name | Layer Input | (Filter Size) × Number | Output Size |
|---|---|---|---|
| C1 | Input | (5 × 5 × 5) × 32 | (256 × 256 × 12) × 32 |
| R1 | C1 | - | 256 × 256 × 384 |
| C2 | R1 | (1 × 1) × 16 | 256 × 256 × 16 |
| P1 | C1 | 2 × 2 × 2 | (128 × 128 × 6) × 32 |
| C3 | P1 | (5 × 5 × 5) × 64 | (128 × 128 × 6) × 64 |
| R2 | C3 | - | 128 × 128 × 384 |
| C4 | R2 | (1 × 1) × 32 | 128 × 128 × 32 |
| P2 | C3 | 2 × 2 × 2 | (64 × 64 × 3) × 64 |
| C5 | P2 | (5 × 5 × 5) × 128 | (64 × 64 × 3) × 128 |
| R3 | C5 | - | 64 × 64 × 384 |
| C6 | R3 | (1 × 1) × 64 | 64 × 64 × 64 |
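
The output sizes in the layer table above can be checked with a small shape trace. This is only a sketch under stated assumptions, not the authors' implementation: it assumes the input is a 256 × 256 patch with 12 spectral bands (inferred from the C1 output size), that the 3D convolutions preserve the spatial and band dimensions ("same" padding, inferred from the table), and that each R layer folds the band axis into the filter axis. The function names are hypothetical.

```python
# Shape bookkeeping only: no deep learning framework is needed.
# A 3D feature map is described as (height, width, bands, filters).

def conv3d_same(shape, filters):
    """3D convolution, assumed 'same' padding: only the filter count changes."""
    h, w, bands, _ = shape
    return (h, w, bands, filters)

def reshape_bands(shape):
    """R layer (assumed): merge the band axis into the filter axis."""
    h, w, bands, filters = shape
    return (h, w, bands * filters)

def conv1x1(shape, filters):
    """1 x 1 convolution on a 2D feature map: only the channel count changes."""
    h, w, _ = shape
    return (h, w, filters)

def pool3d(shape, size=2):
    """2 x 2 x 2 pooling: halves height, width and band count."""
    h, w, bands, filters = shape
    return (h // size, w // size, bands // size, filters)

def trace(input_shape=(256, 256, 12, 1)):
    """Return (layer name, output shape) pairs in the order of the table."""
    rows = []
    x = input_shape
    # (3D conv filters, 1x1 conv filters) for the three resolution levels
    for level, (conv_f, out_f) in enumerate([(32, 16), (64, 32), (128, 64)], start=1):
        if level > 1:
            x = pool3d(x)  # pooling is applied to the previous 3D conv output
            rows.append((f"P{level - 1}", x))
        x = conv3d_same(x, conv_f)
        rows.append((f"C{2 * level - 1}", x))
        r = reshape_bands(x)
        rows.append((f"R{level}", r))
        rows.append((f"C{2 * level}", conv1x1(r, out_f)))
    return rows

if __name__ == "__main__":
    for name, shape in trace():
        print(name, shape)
```

Under these assumptions every R layer has 384 channels, because the band count halves (12, 6, 3) at each level while the filter count doubles (32, 64, 128).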

| Evaluation Subject | Experiment | MCC | F1 | Kappa | Recall |
|---|---|---|---|---|---|
| River segmentation | Exp_Syn | 0.925 ± 0.005 | 0.930 ± 0.005 | 0.924 ± 0.005 | 0.926 ± 0.005 |
| River segmentation | Exp_Asyn | 0.926 ± 0.004 | 0.931 ± 0.003 | 0.925 ± 0.004 | 0.928 ± 0.005 |
| River connectivity reconstruction | Exp_Syn | 0.932 ± 0.003 | 0.937 ± 0.003 | 0.931 ± 0.003 | 0.933 ± 0.005 |
| River connectivity reconstruction | Exp_Asyn | 0.928 ± 0.004 | 0.933 ± 0.003 | 0.927 ± 0.004 | 0.929 ± 0.002 |
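
The four metrics reported in the results tables (MCC, F1, Kappa, Recall) can all be derived from a binary confusion matrix. The following is a minimal sketch using the standard textbook definitions; it is an assumption that the paper uses exactly these forms, and the function name and the example counts are illustrative, not taken from the experiments.

```python
import math

def binary_metrics(tp, fp, fn, tn):
    """Compute Recall, F1, MCC and Cohen's Kappa from pixel counts.

    tp/fp/fn/tn are true-positive, false-positive, false-negative and
    true-negative counts for the water class. No guard against empty
    classes is included, so all four marginals must be non-zero.
    """
    n = tp + fp + fn + tn
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)

    # Matthews correlation coefficient
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )

    # Cohen's kappa: observed agreement corrected for chance agreement
    p_observed = (tp + tn) / n
    p_expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    kappa = (p_observed - p_expected) / (1 - p_expected)

    return {"recall": recall, "f1": f1, "mcc": mcc, "kappa": kappa}

if __name__ == "__main__":
    # Illustrative counts only (not experimental data)
    print(binary_metrics(tp=90, fp=10, fn=10, tn=890))
```

MCC and Kappa both account for the large number of non-water pixels, which is why they are commonly reported alongside F1 and Recall for imbalanced segmentation tasks.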

| Experiment | Model | MCC | F1 | Kappa | Recall |
|---|---|---|---|---|---|
| Exp_Seg | WaterSCNet | 0.925 ± 0.005 | 0.930 ± 0.005 | 0.924 ± 0.005 | 0.926 ± 0.005 |
| Exp_Seg | E-UNet | 0.915 ± 0.009 | 0.921 ± 0.009 | 0.914 ± 0.009 | 0.916 ± 0.013 |
| Exp_Seg | U-Net | 0.896 ± 0.014 | 0.902 ± 0.014 | 0.894 ± 0.015 | 0.891 ± 0.020 |
| Exp_Seg | SegNet | 0.879 ± 0.012 | 0.886 ± 0.012 | 0.876 ± 0.013 | 0.869 ± 0.018 |
| Exp_Seg | HRNet | 0.874 ± 0.004 | 0.882 ± 0.004 | 0.872 ± 0.005 | 0.869 ± 0.007 |
| Exp_Con | WaterSCNet | 0.932 ± 0.003 | 0.937 ± 0.003 | 0.931 ± 0.003 | 0.933 ± 0.005 |
| Exp_Con | E-UNet | 0.906 ± 0.010 | 0.912 ± 0.010 | 0.904 ± 0.011 | 0.905 ± 0.011 |
| Exp_Con | U-Net | 0.894 ± 0.010 | 0.900 ± 0.010 | 0.892 ± 0.011 | 0.890 ± 0.014 |
| Exp_Con | SegNet | 0.883 ± 0.006 | 0.891 ± 0.005 | 0.881 ± 0.006 | 0.878 ± 0.003 |
| Exp_Con | HRNet | 0.879 ± 0.006 | 0.887 ± 0.006 | 0.877 ± 0.006 | 0.876 ± 0.009 |

Model Training Time (Hours of CPU Time)

| Experiment | WaterSCNet | E-UNet | U-Net | SegNet | HRNet |
|---|---|---|---|---|---|
| Exp_Seg | 16.42 ¹ | 22.16 | 15.07 | 2.96 | 12.56 |
| Exp_Con | | 21.32 | 12.72 | 2.41 | 9.37 |
| Total | 16.42 | 43.48 | 27.79 | 5.37 | 21.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dui, Z.; Huang, Y.; Wang, M.; Jin, J.; Gu, Q. Segmentation and Connectivity Reconstruction of Urban Rivers from Sentinel-2 Multi-Spectral Imagery by the WaterSCNet Deep Learning Model. Remote Sens. 2023, 15, 4875. https://doi.org/10.3390/rs15194875

Dui Z, Huang Y, Wang M, Jin J, Gu Q. Segmentation and Connectivity Reconstruction of Urban Rivers from Sentinel-2 Multi-Spectral Imagery by the WaterSCNet Deep Learning Model. Remote Sensing. 2023; 15(19):4875. https://doi.org/10.3390/rs15194875

Chicago/Turabian Style: Dui, Zixuan, Yongjian Huang, Mingquan Wang, Jiuping Jin, and Qianrong Gu. 2023. "Segmentation and Connectivity Reconstruction of Urban Rivers from Sentinel-2 Multi-Spectral Imagery by the WaterSCNet Deep Learning Model" Remote Sensing 15, no. 19: 4875. https://doi.org/10.3390/rs15194875

APA Style: Dui, Z., Huang, Y., Wang, M., Jin, J., & Gu, Q. (2023). Segmentation and Connectivity Reconstruction of Urban Rivers from Sentinel-2 Multi-Spectral Imagery by the WaterSCNet Deep Learning Model. Remote Sensing, 15(19), 4875. https://doi.org/10.3390/rs15194875