SEL-Net: A Self-Supervised Learning-Based Network for PolSAR Image Runway Region Detection

Abstract

1. Introduction

- (1)

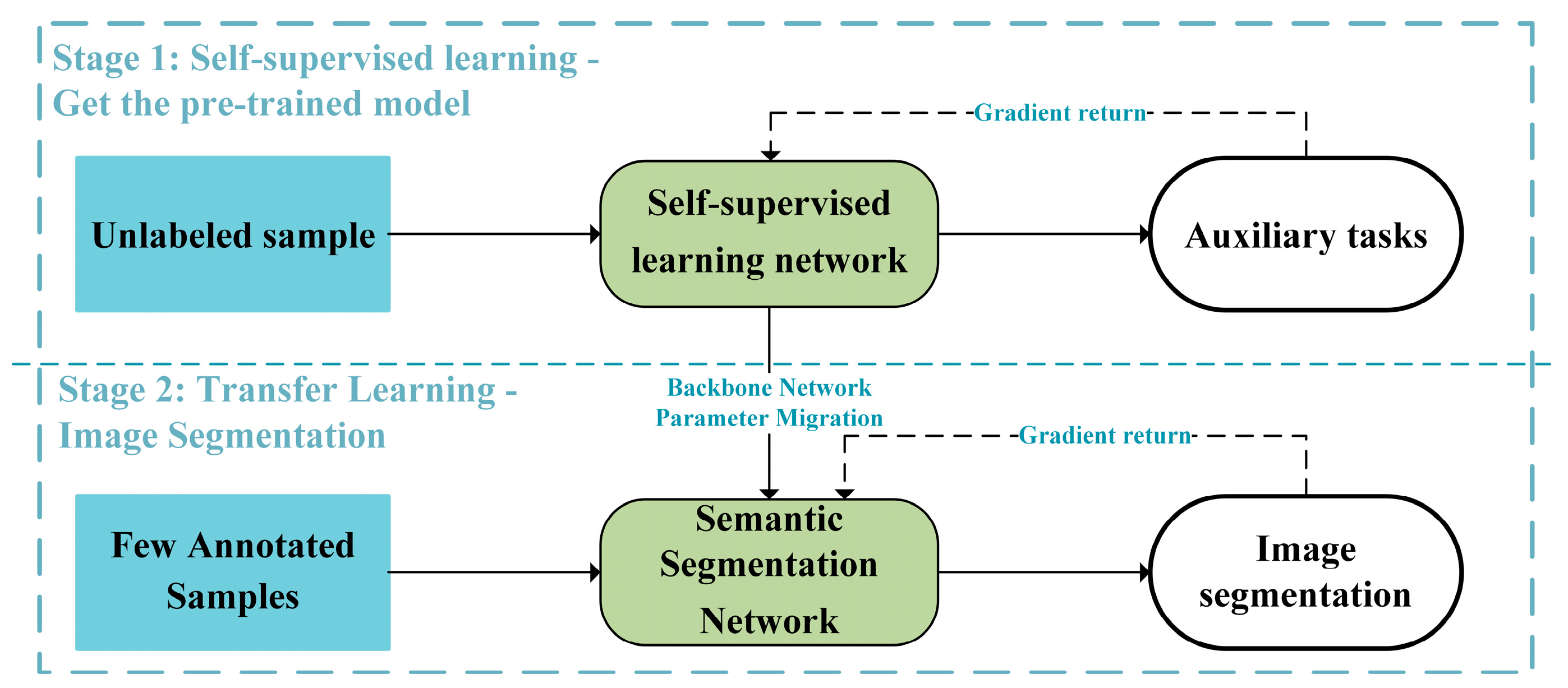

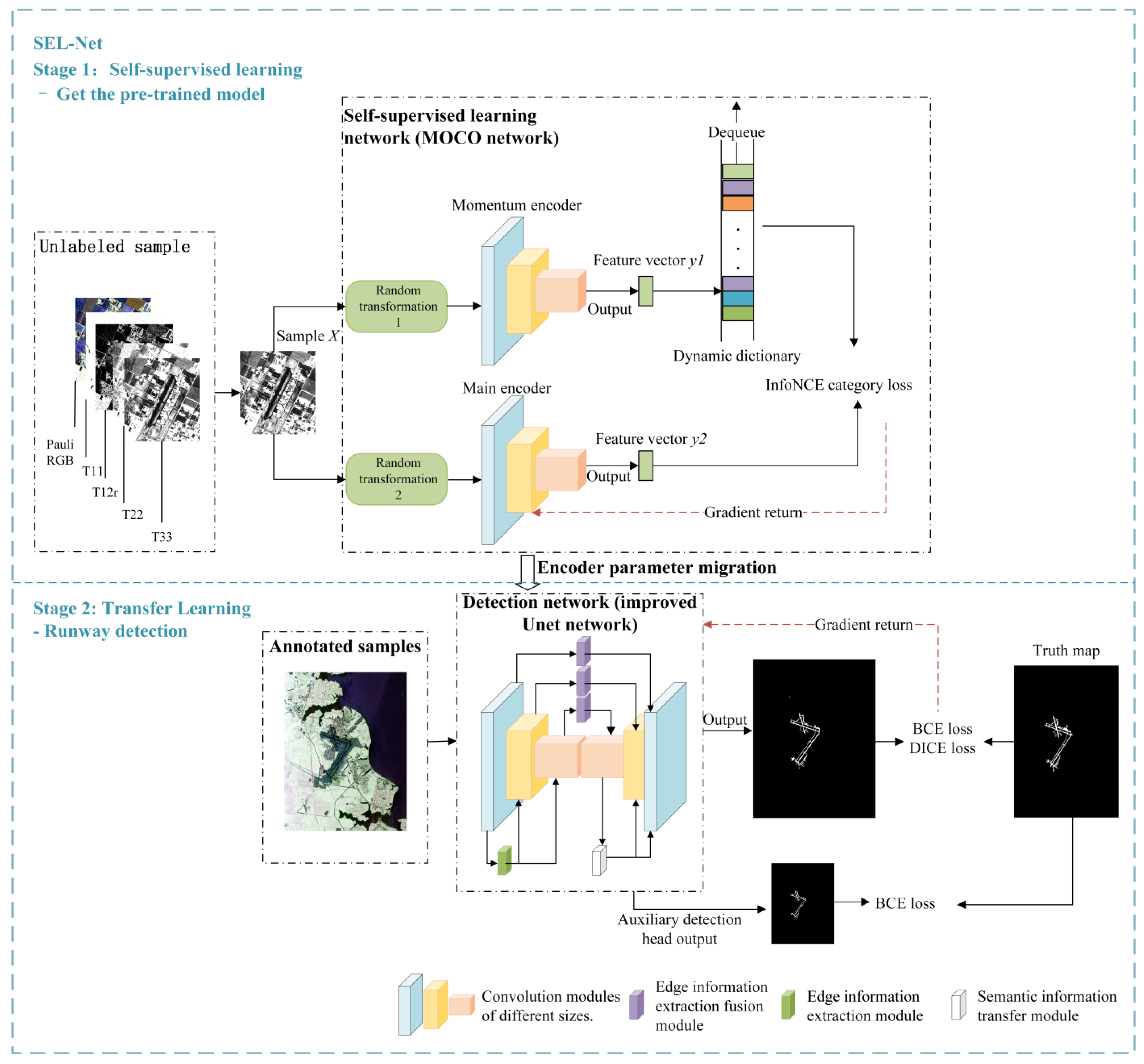

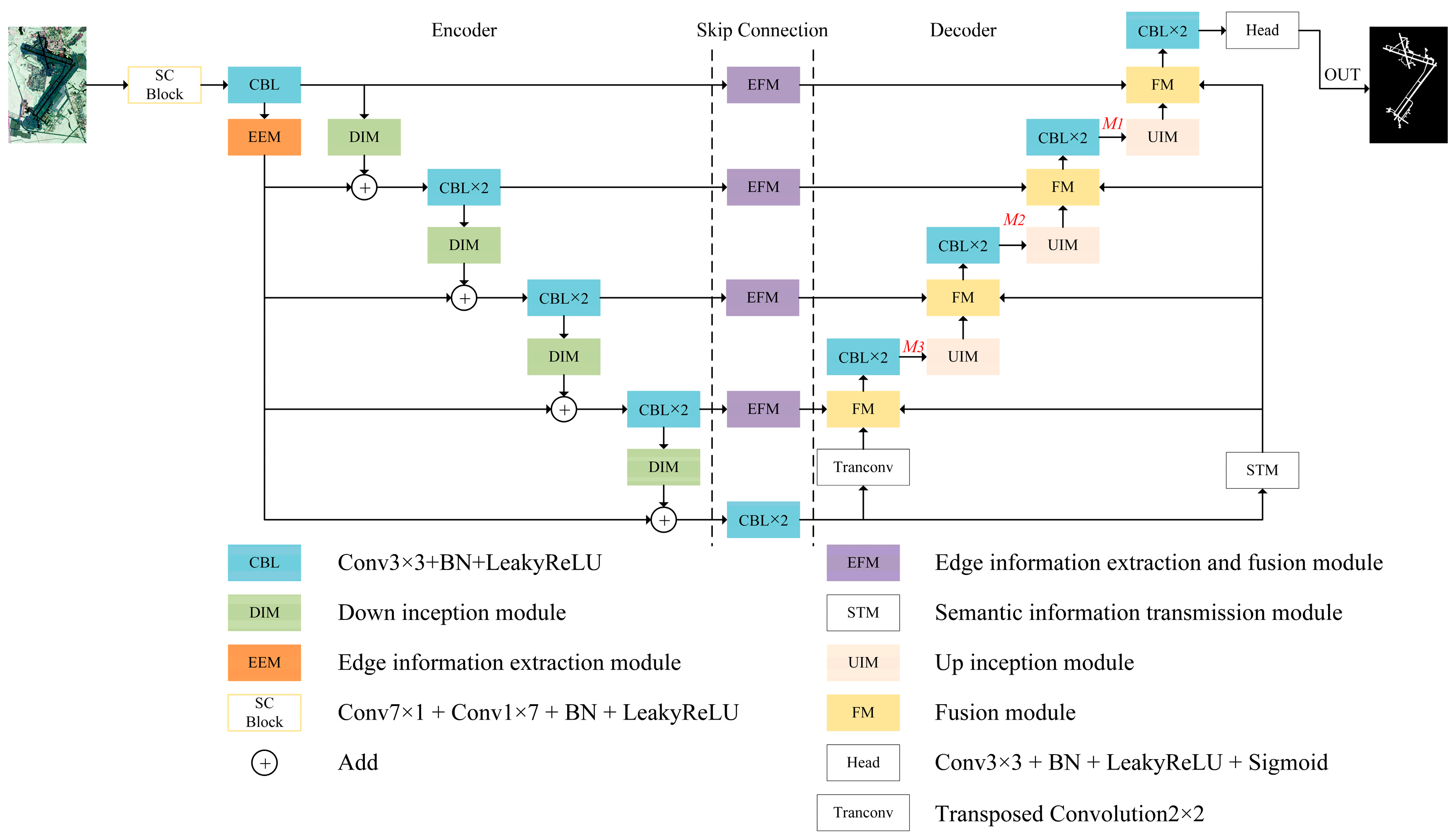

- A self-supervised learning-based PolSAR image runway area detection network, SEL-Net, is designed. By introducing self-supervised learning and improving the detection network, the effectiveness of runway area detection in PolSAR images has been significantly improved under conditions of insufficient annotated data, resulting in a reduction in both false positive and false negative rates.

- (2) By capitalizing on the distinctive traits of PolSAR data and employing the MOCO network, we obtain a pre-trained model that prioritizes the recognition of runway region features. Transferring this pre-trained model to the downstream segmentation task addresses the insufficient extraction of deep semantic features from the runway region, which was previously constrained by the scarcity of annotated PolSAR data.

- (3)

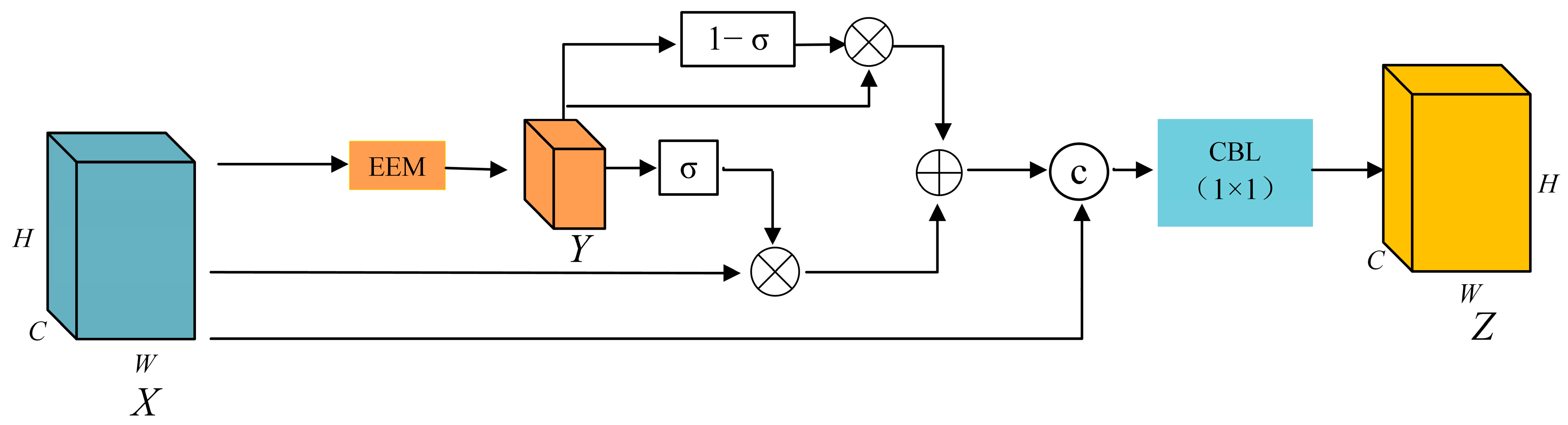

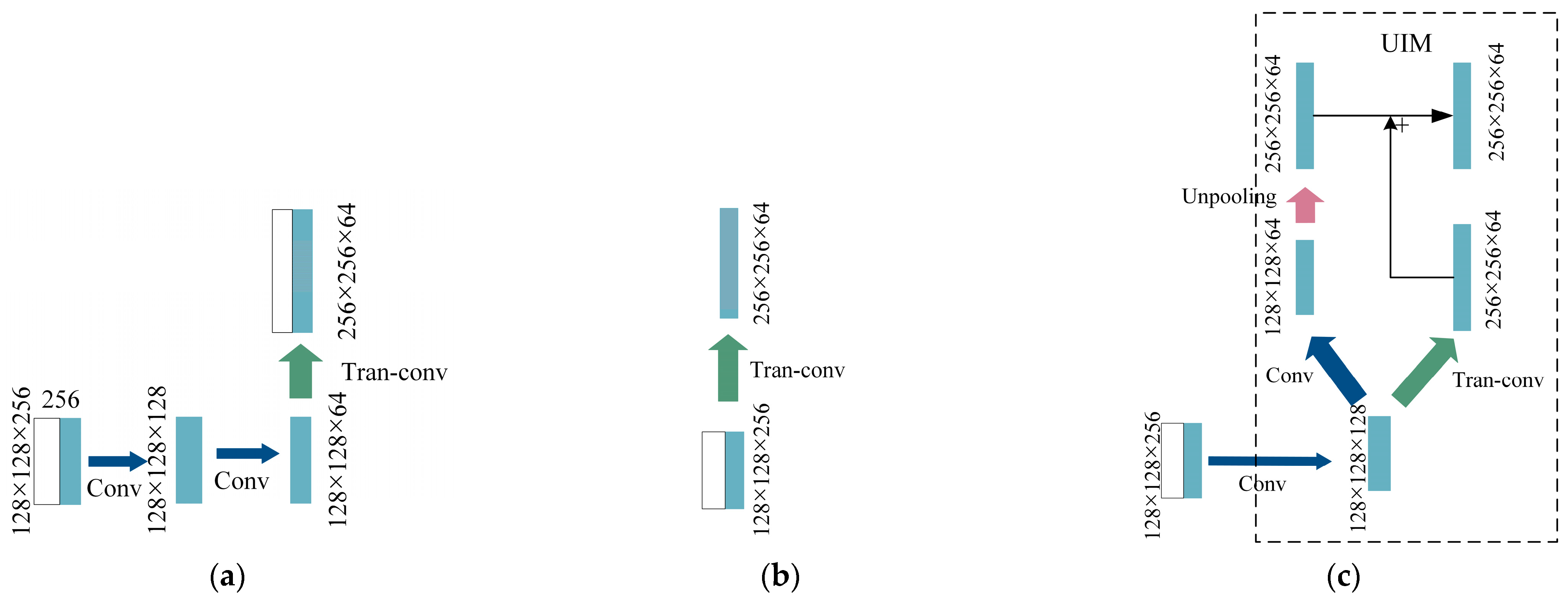

- To enhance the U-Net network’s ability to extract edge information, we introduce EEM and EFM. Furthermore, we design a STM, and implement improvements to the up- and down-sampling processes to minimize the loss of semantic information during network propagation.

2. Related Work

2.1. Self-Supervised Learning

2.2. Semantic Segmentation Network

2.3. Representation of PolSAR Image Data

- : Scattering power caused by the symmetry of the target;

- : Scattering power resulting from the overall asymmetry of the target;

- : Scattering power caused by the irregularity of the target;

- : Linear factor;

- : Measure of local curvature difference;

- : Local distortion of the object;

- : Overall distortion of the target;

- : Coupling between symmetric and asymmetric parts;

- : Directionality of the target.
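The nine quantities listed above match, in number and description, the real parameters of the standard Huynen parameterization of the 3×3 polarimetric coherency matrix. The symbols themselves did not survive in this text, so the sketch below assumes the usual textbook layout (A0, B0+B, B0−B, C, D, E, F, G, H) and does not claim which symbol belongs to which bullet. The function name `huynen_parameters` is hypothetical and chosen only for illustration.

```python
import numpy as np


def huynen_parameters(T):
    """Read nine real Huynen parameters off a 3x3 Hermitian coherency matrix.

    Assumed layout (standard textbook convention, not taken from this paper):
        T = [[2*A0,     C - 1j*D,  H + 1j*G],
             [C + 1j*D, B0 + B,    E + 1j*F],
             [H - 1j*G, E - 1j*F,  B0 - B  ]]
    """
    T = np.asarray(T, dtype=complex)
    if T.shape != (3, 3) or not np.allclose(T, T.conj().T):
        raise ValueError("expected a 3x3 Hermitian matrix")
    return {
        "A0": T[0, 0].real / 2.0,   # diagonal entry T11 = 2*A0
        "B0+B": T[1, 1].real,       # diagonal entry T22
        "B0-B": T[2, 2].real,       # diagonal entry T33
        "C": T[0, 1].real,          # T12 = C - jD
        "D": -T[0, 1].imag,
        "E": T[1, 2].real,          # T23 = E + jF
        "F": T[1, 2].imag,
        "G": T[0, 2].imag,          # T13 = H + jG
        "H": T[0, 2].real,
    }
```

Applied per pixel, the nine values could be stacked into a nine-channel real-valued image, which is one plausible way to feed such parameters to a convolutional network.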

3. Methodology

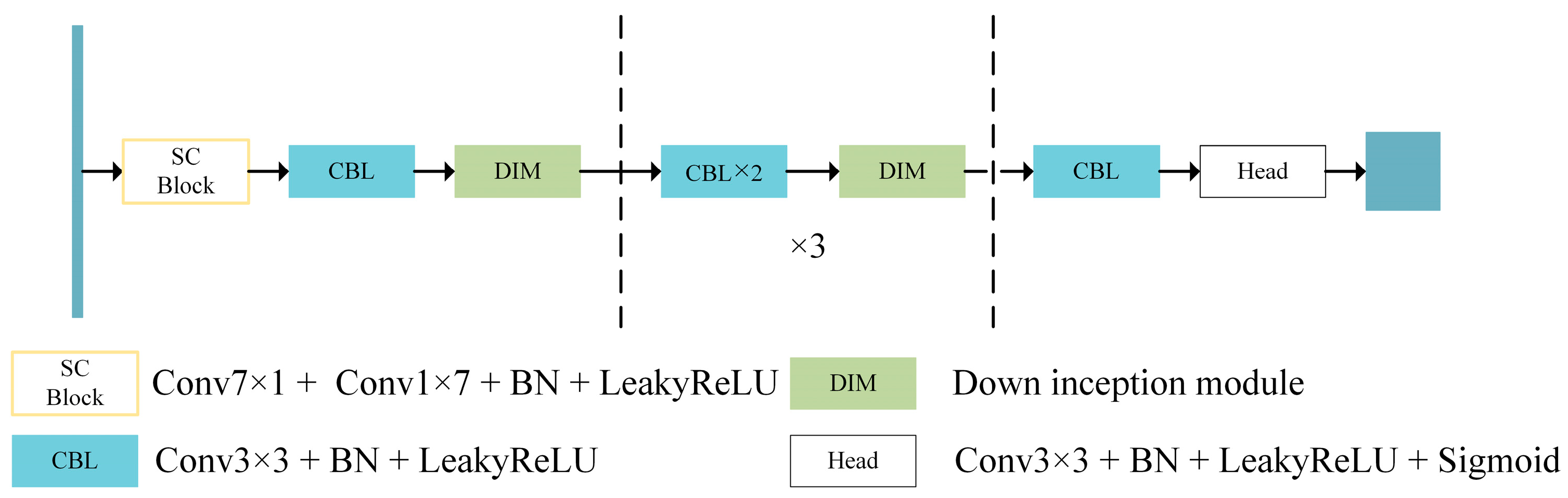

3.1. Self-Supervised Learning Network

3.1.1. Encoder for MOCO

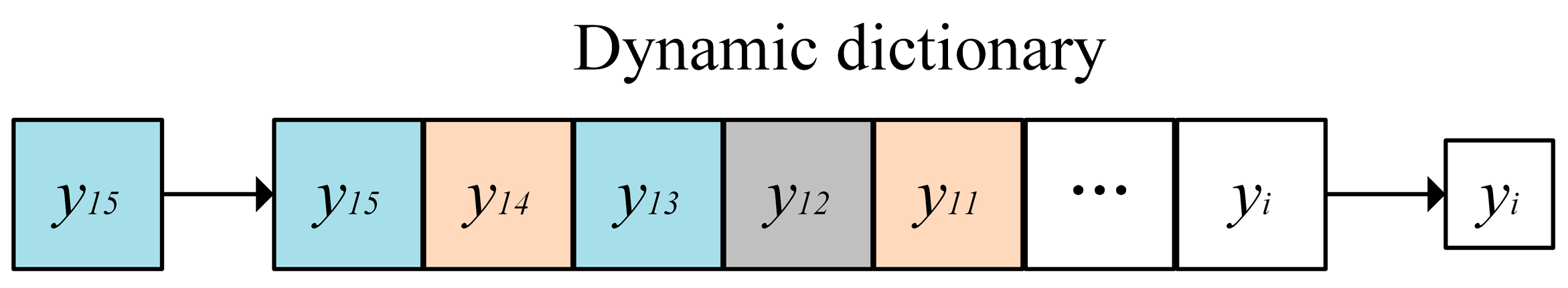

3.1.2. Dynamic Dictionary

3.1.3. Loss Function

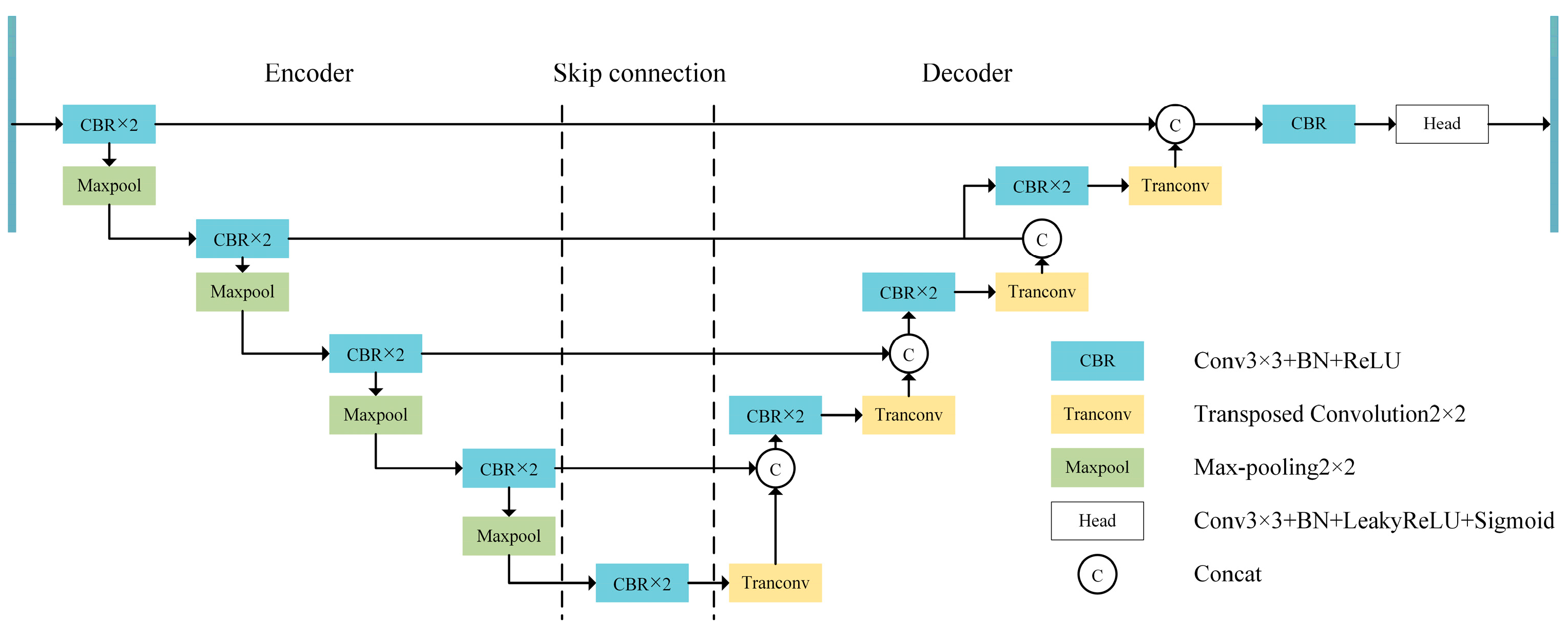

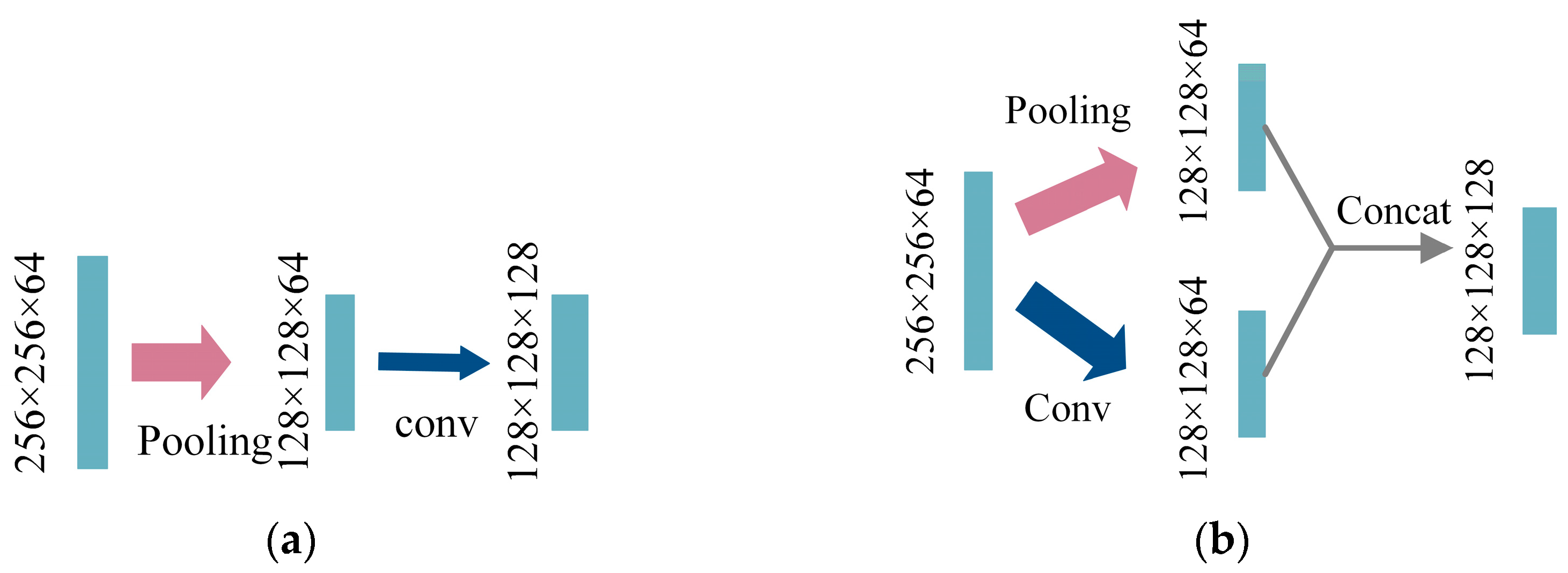
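Sections 3.1.1 to 3.1.3 name the three ingredients of MOCO: a pair of encoders, a dynamic dictionary, and a contrastive loss. The sketch below illustrates the standard momentum-contrast formulation with NumPy arrays in place of a real network. It is not the authors' implementation; the function names, the temperature of 0.07, and the momentum of 0.999 are illustrative assumptions.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)


def info_nce_loss(q, k_pos, queue, temperature=0.07):
    """Contrastive (InfoNCE) loss for a batch of queries.

    q:      (N, D) query features
    k_pos:  (N, D) positive key features (another view of the same image)
    queue:  (K, D) negative keys held in the dynamic dictionary
    """
    q, k_pos, queue = l2_normalize(q), l2_normalize(k_pos), l2_normalize(queue)
    l_pos = np.sum(q * k_pos, axis=1, keepdims=True)    # (N, 1)
    l_neg = q @ queue.T                                 # (N, K)
    logits = np.concatenate([l_pos, l_neg], axis=1) / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob_pos = logits[:, 0] - np.log(np.exp(logits).sum(axis=1))
    return float(-log_prob_pos.mean())


def momentum_update(key_params, query_params, m=0.999):
    """Key encoder follows the query encoder: theta_k <- m*theta_k + (1-m)*theta_q."""
    return [m * pk + (1.0 - m) * pq for pk, pq in zip(key_params, query_params)]


def enqueue_dequeue(queue, new_keys):
    """FIFO dictionary update: append the newest keys, drop the oldest."""
    size = queue.shape[0]
    return np.concatenate([queue, l2_normalize(new_keys)], axis=0)[-size:]
```

In a training loop, only the query encoder would receive gradients from `info_nce_loss`; the key encoder would change solely through `momentum_update`, which keeps the keys stored in the queue approximately consistent across iterations.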

3.2. Detection Network

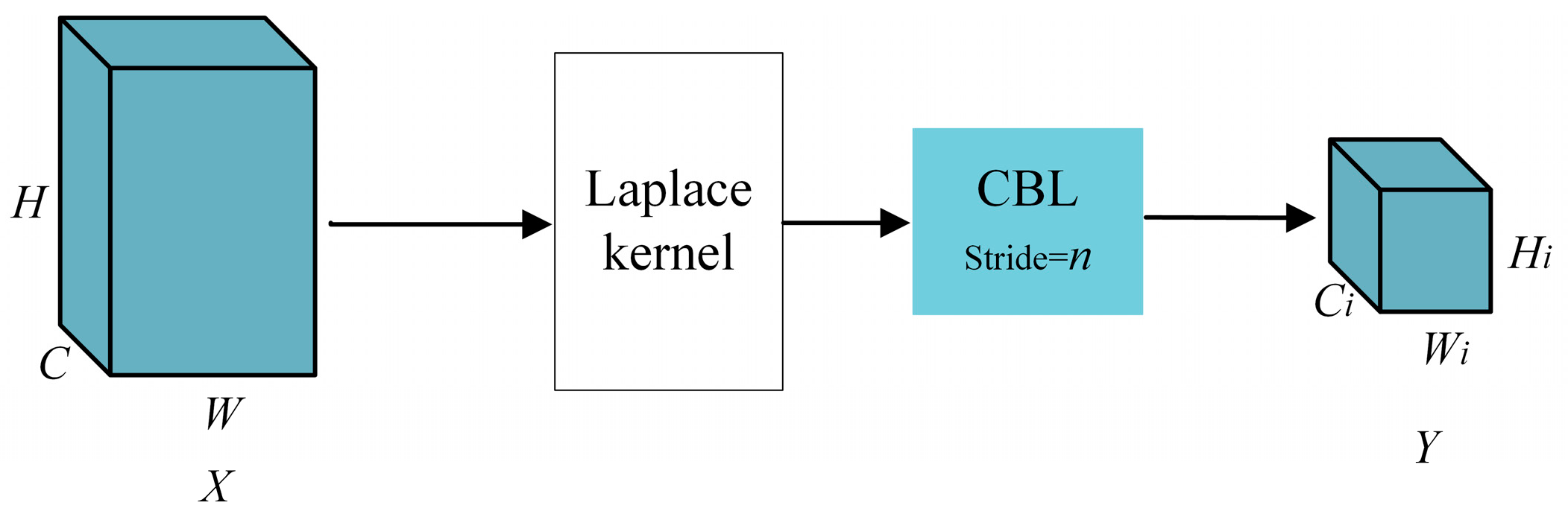

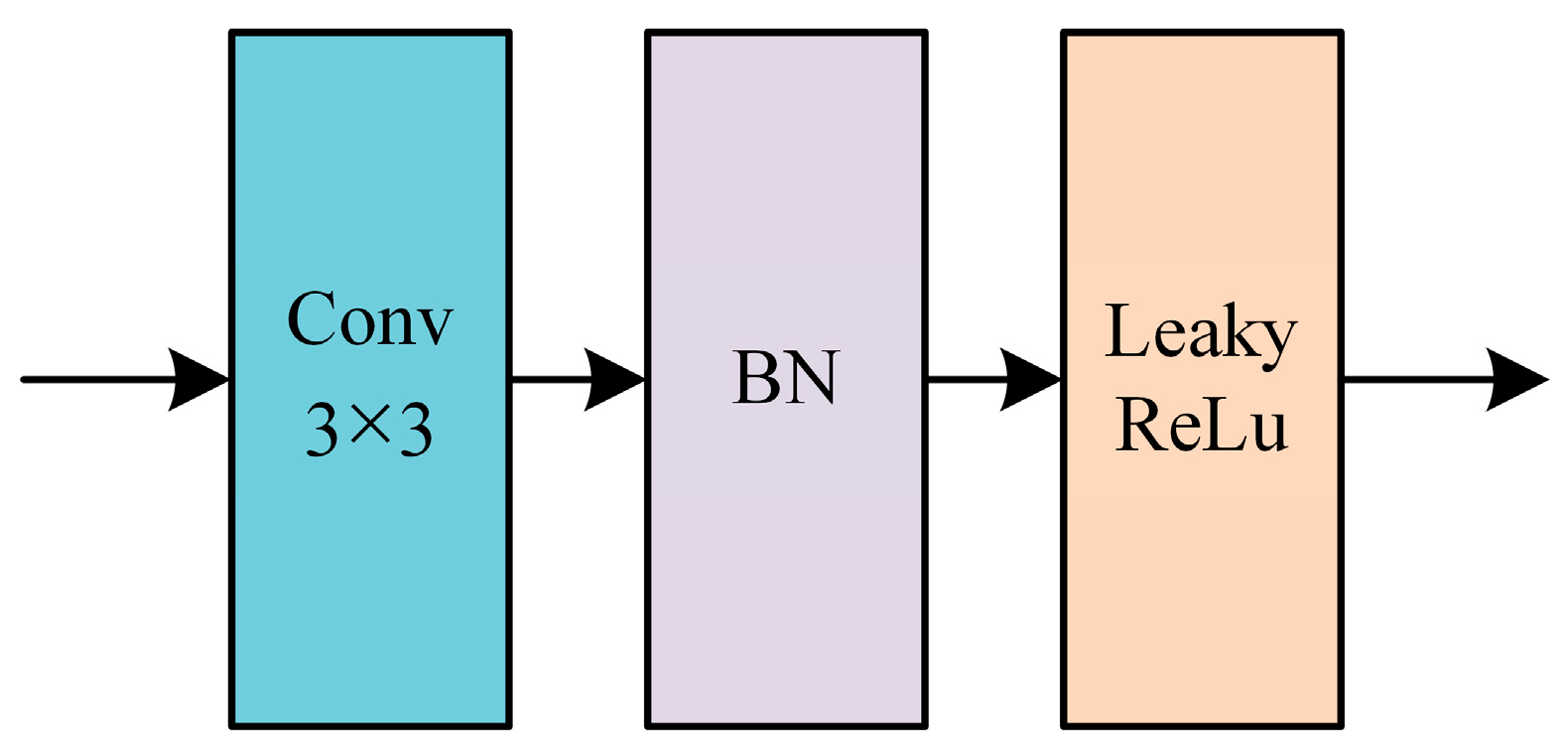

3.2.1. Encoder

3.2.2. Skip Connection

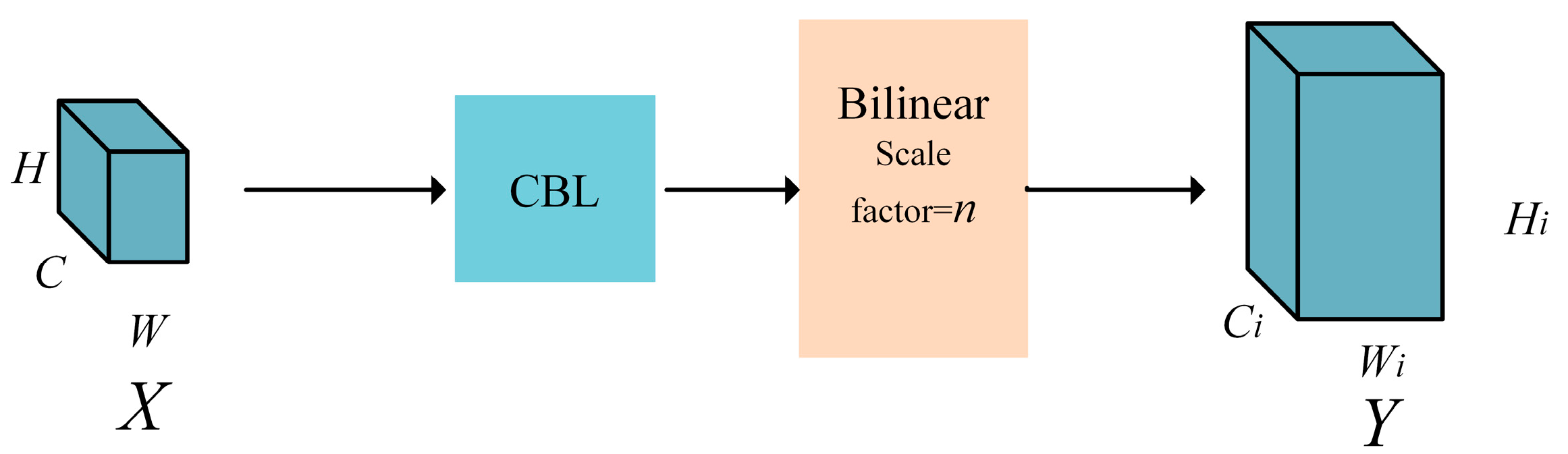

3.2.3. Decoder

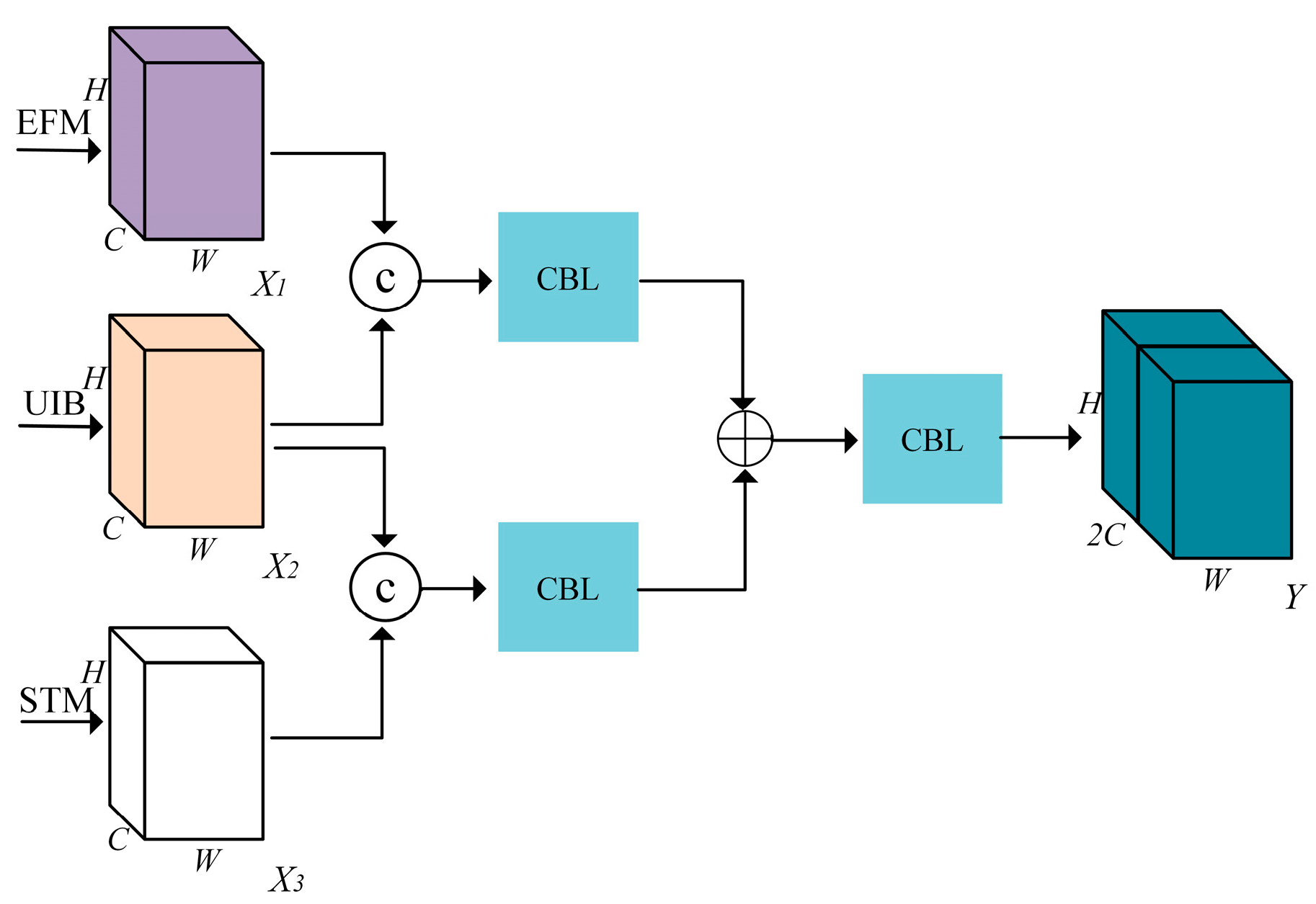

3.2.4. Feature Fusion Module (FM)

3.2.5. The Loss Function of the Detection Network

4. Experiments and Analysis

4.1. Data Introduction

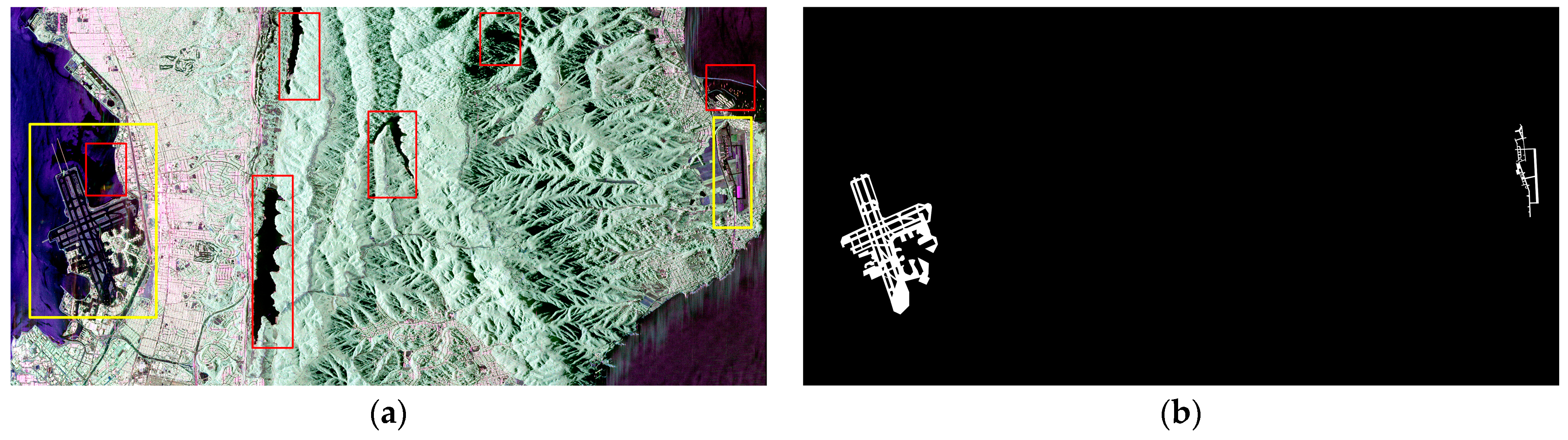

4.1.1. Introduction to the Self-Supervised Learning Phase Dataset

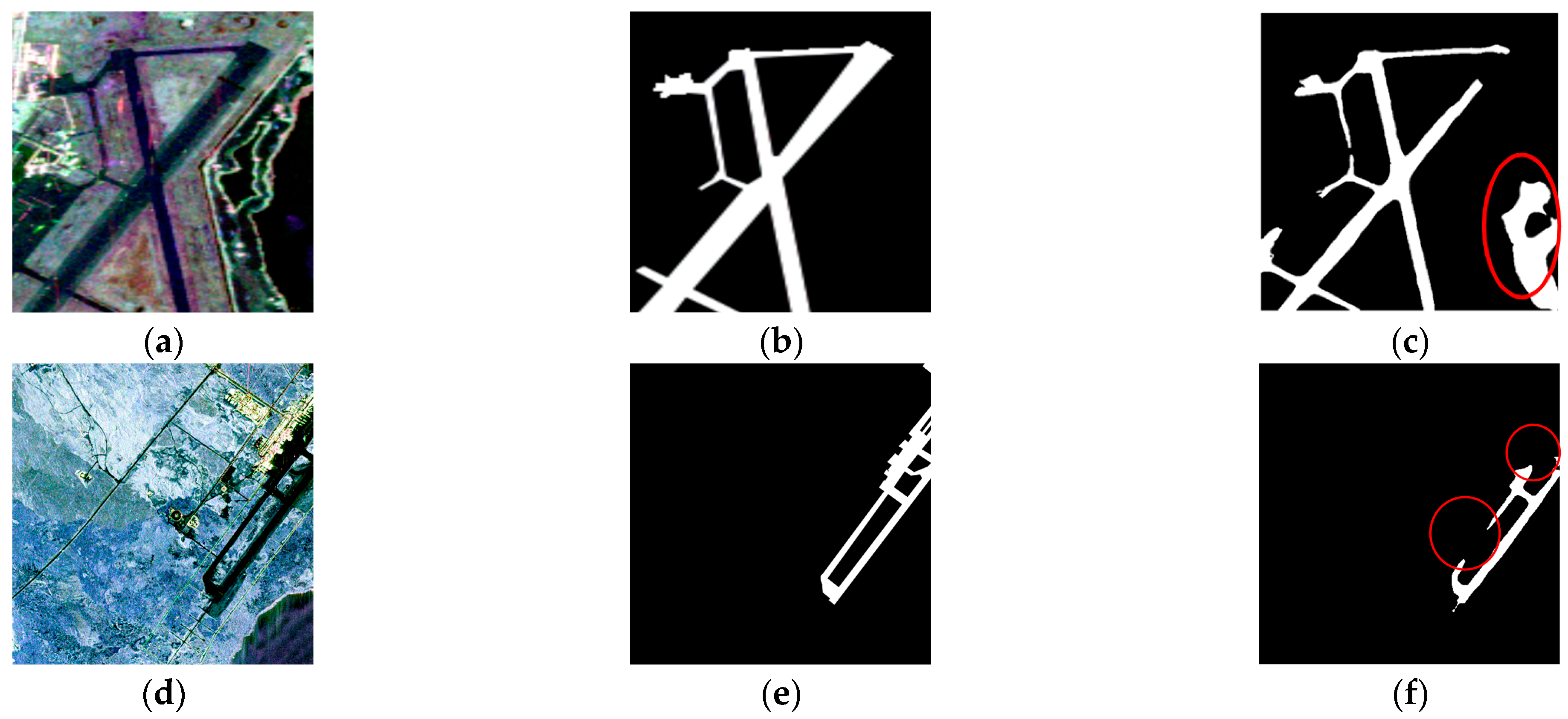

4.1.2. Introduction to the Detection Phase Dataset

4.2. Experimental Parameter Settings

4.3. Evaluation Metrics
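The result tables report PA, Recall, F1, and MIoU, but the metric formulas are not preserved in this text. The sketch below computes the standard definitions for a binary runway mask from the confusion-matrix counts; whether the paper's PA column denotes pixel accuracy or precision is not stated here, so both are returned. The function name `binary_segmentation_metrics` is hypothetical.

```python
import numpy as np


def binary_segmentation_metrics(pred, target):
    """Standard metrics for a binary mask (True/1 = runway, False/0 = background)."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)

    tp = int(np.sum(pred & target))      # runway predicted as runway
    fp = int(np.sum(pred & ~target))     # background predicted as runway
    fn = int(np.sum(~pred & target))     # runway predicted as background
    tn = int(np.sum(~pred & ~target))    # background predicted as background

    def safe_div(num, den):
        # Return 0.0 instead of raising when a class is absent.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    iou_runway = safe_div(tp, tp + fp + fn)
    iou_background = safe_div(tn, tn + fp + fn)

    return {
        "pixel_accuracy": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "miou": (iou_runway + iou_background) / 2.0,  # mean over the two classes
    }
```

Because runway pixels are a small fraction of a scene, pixel accuracy can stay high even when recall is low, which is why recall, F1, and MIoU are the more informative columns for this task.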

4.4. Experimental Results and Analysis

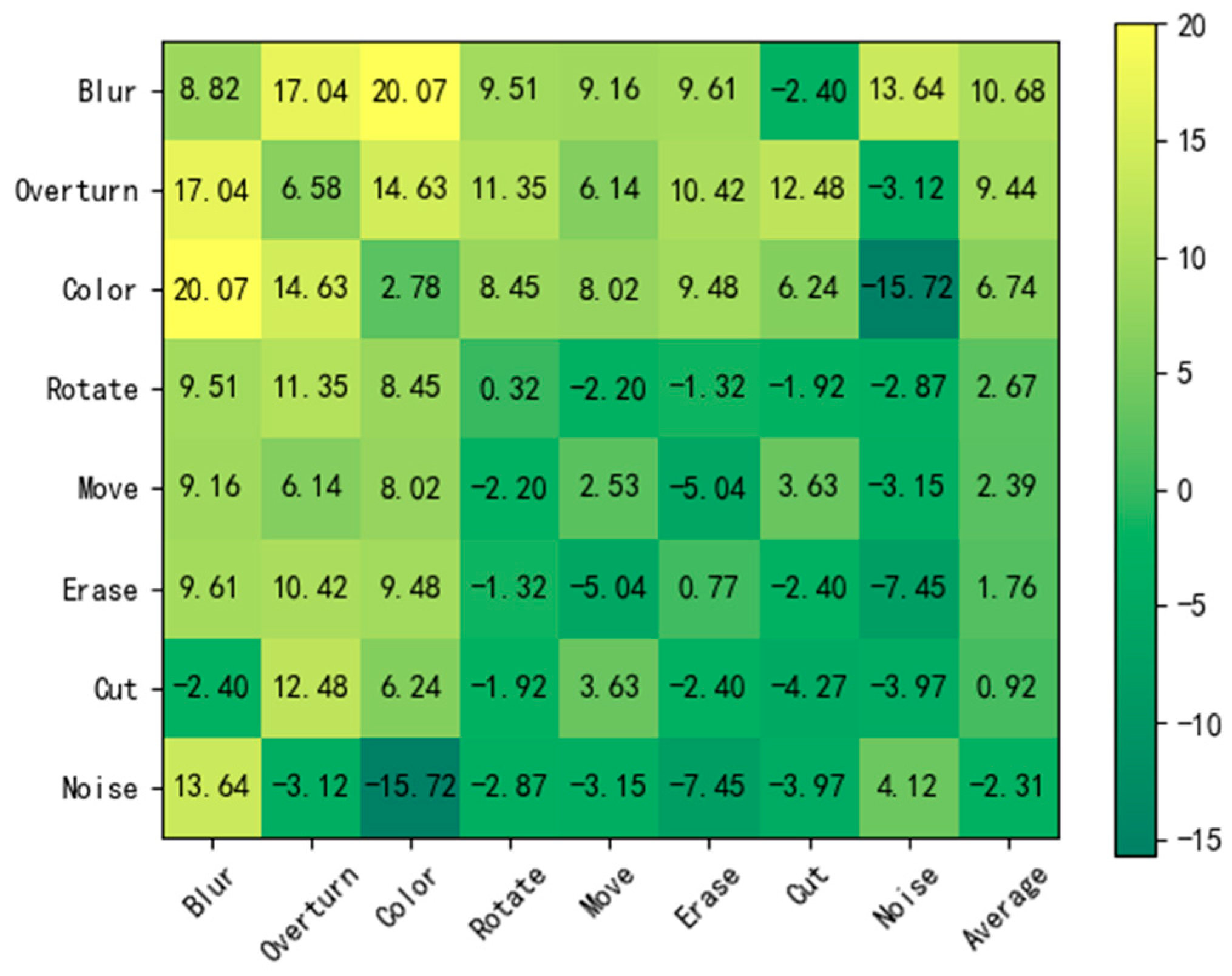

4.4.1. Selection of Data Transformations for the Self-Supervised Learning Stage

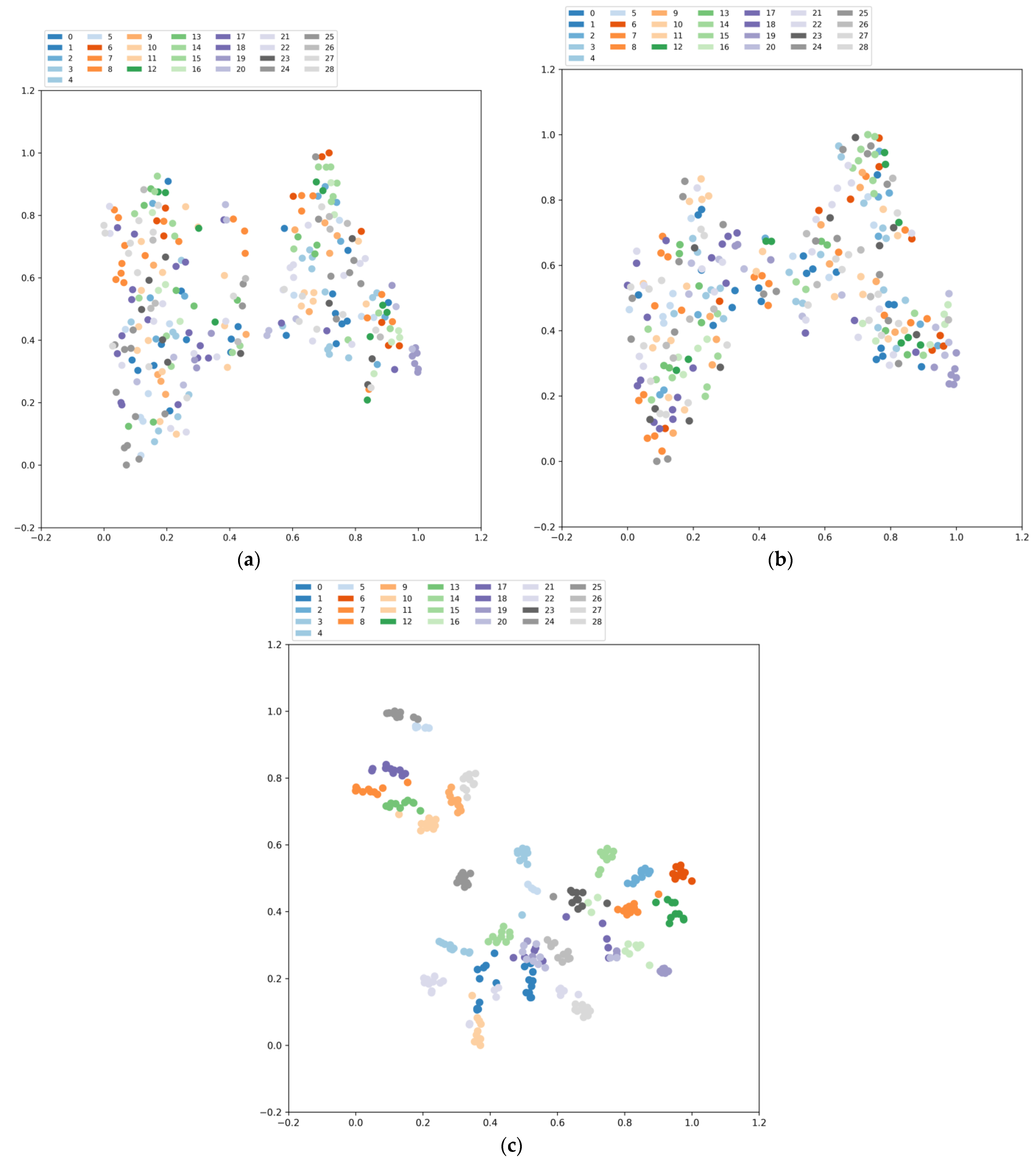

4.4.2. Experiments for Self-Supervised Learning

4.4.3. Ablation Experiment of SEL-Net

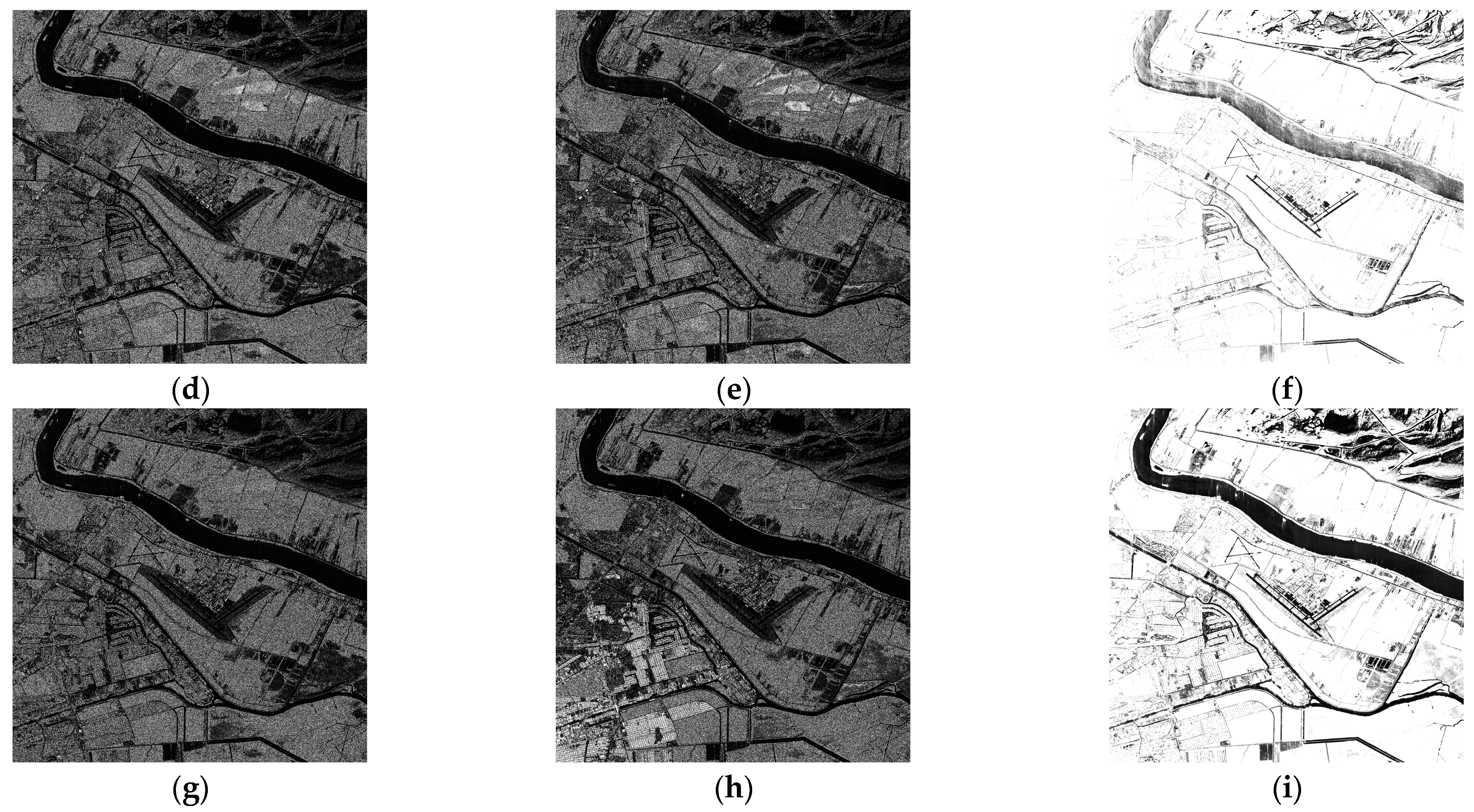

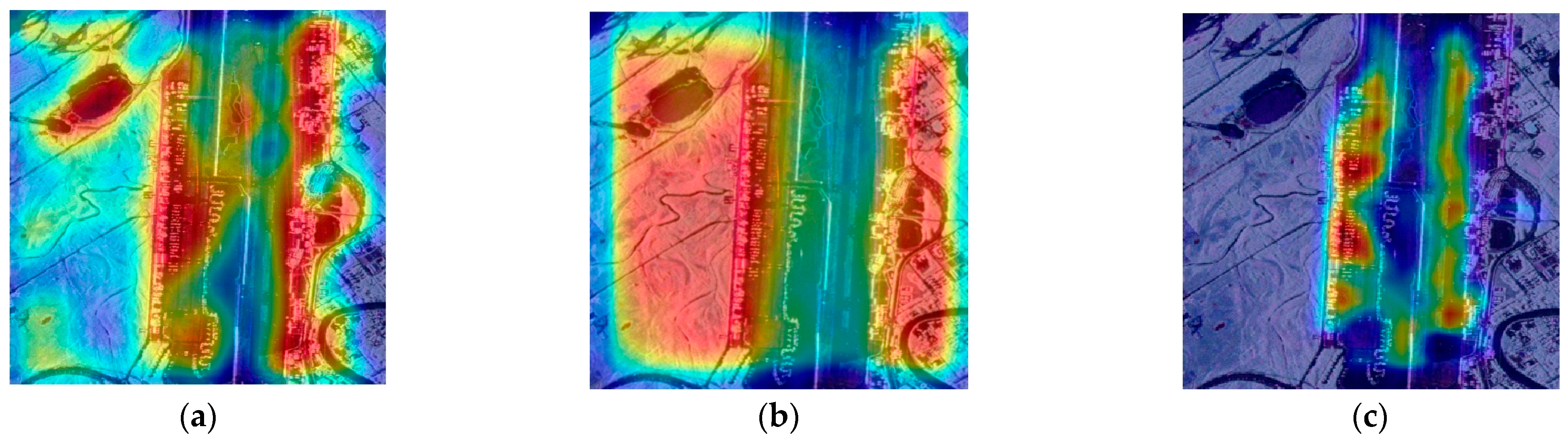

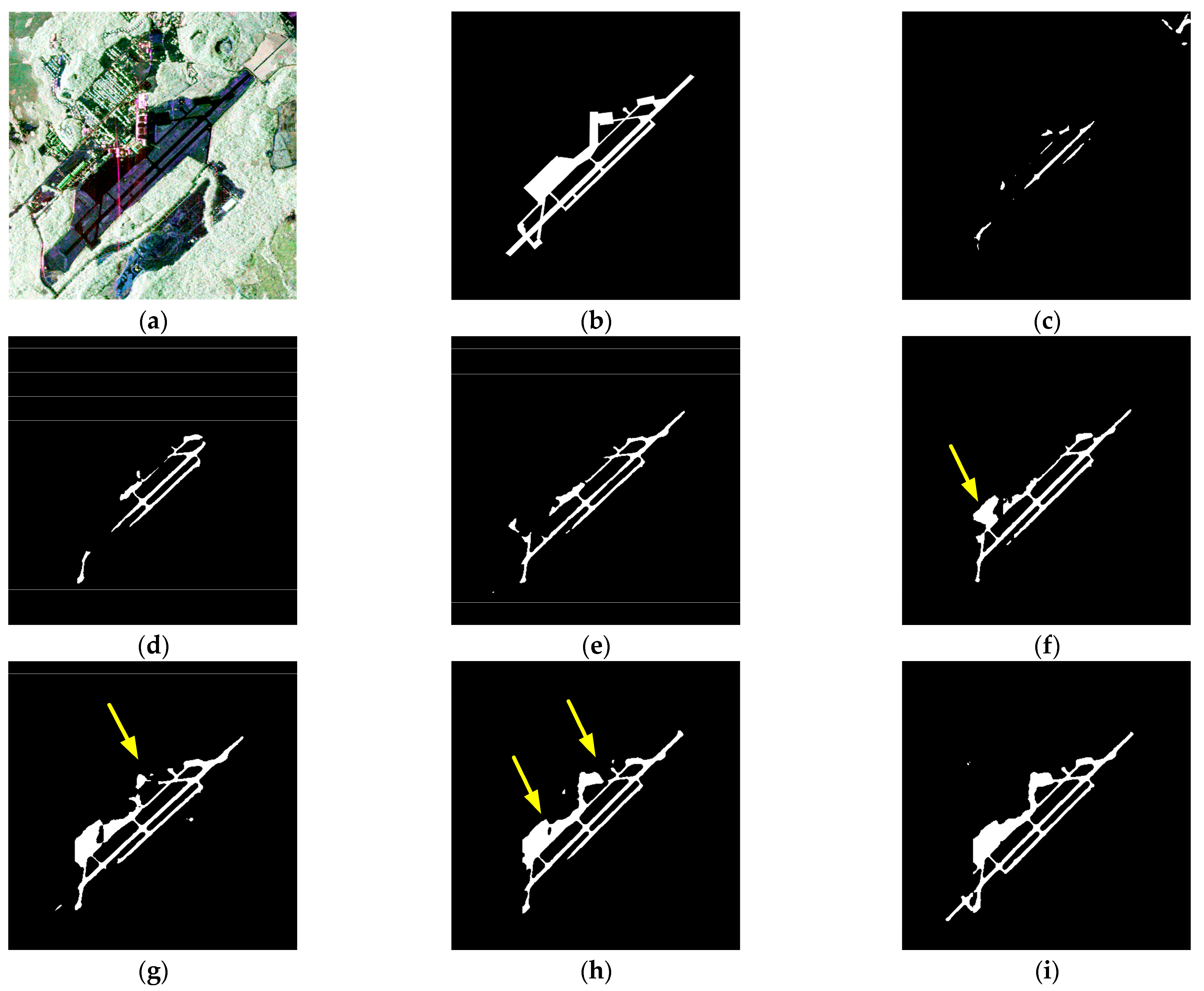

- Figure 21d,e visualizes the channels of the last down-sampling layer before and after edge information is incorporated, together with the channels of the last up-sampling layer. Adding the EEM helps extract edge information during the down-sampling and skip-connection stages, making the outline of the runway area more distinct.

- Figure 21f visualizes the channel map before the last down-sampling of the improved down-sampling structure. The increased brightness indicates that the down-sampling retains more semantic information of the image while suppressing information from non-runway objects.

- Figure 21g visualizes the channel map after the last up-sampling once the STM is added. The runway lines become clear and continuous.

- Figure 21h visualizes the channel map after the last up-sampling of the improved up-sampling structure. The improved structure reduces the loss incurred in feature map processing, resulting in clearer runway lines.

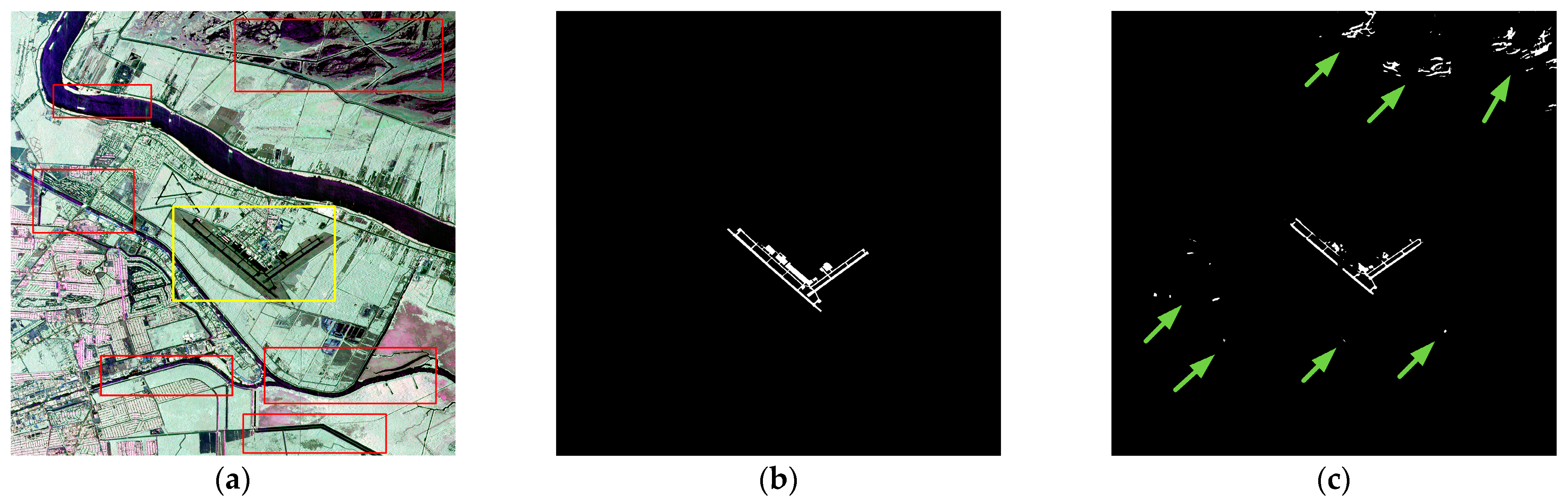

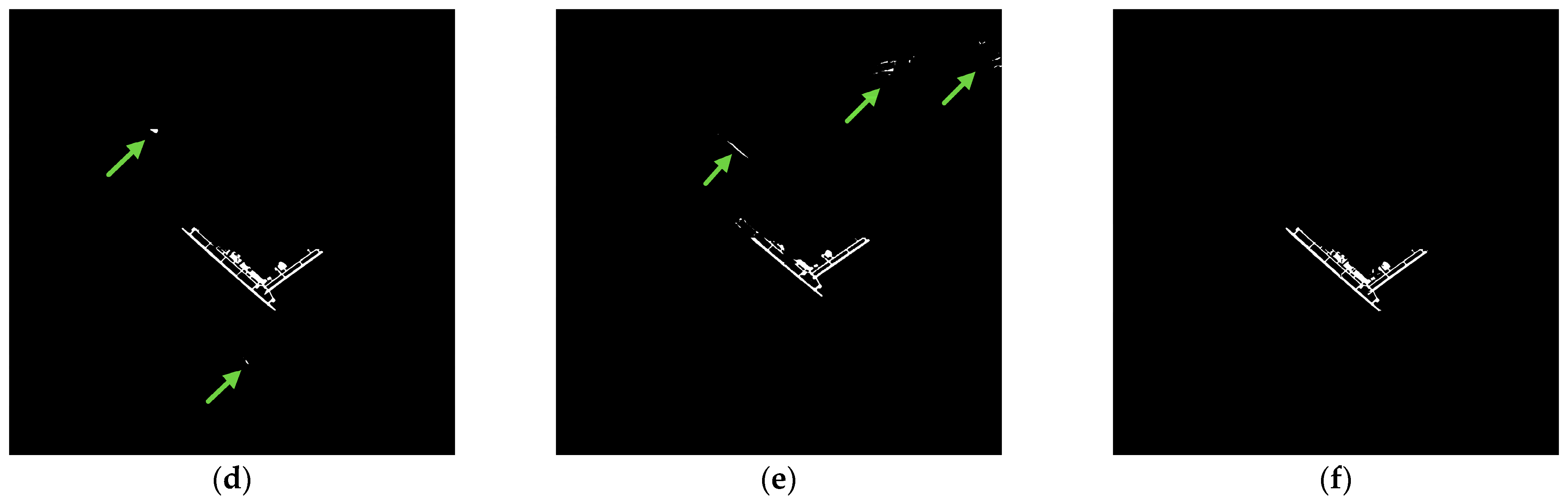

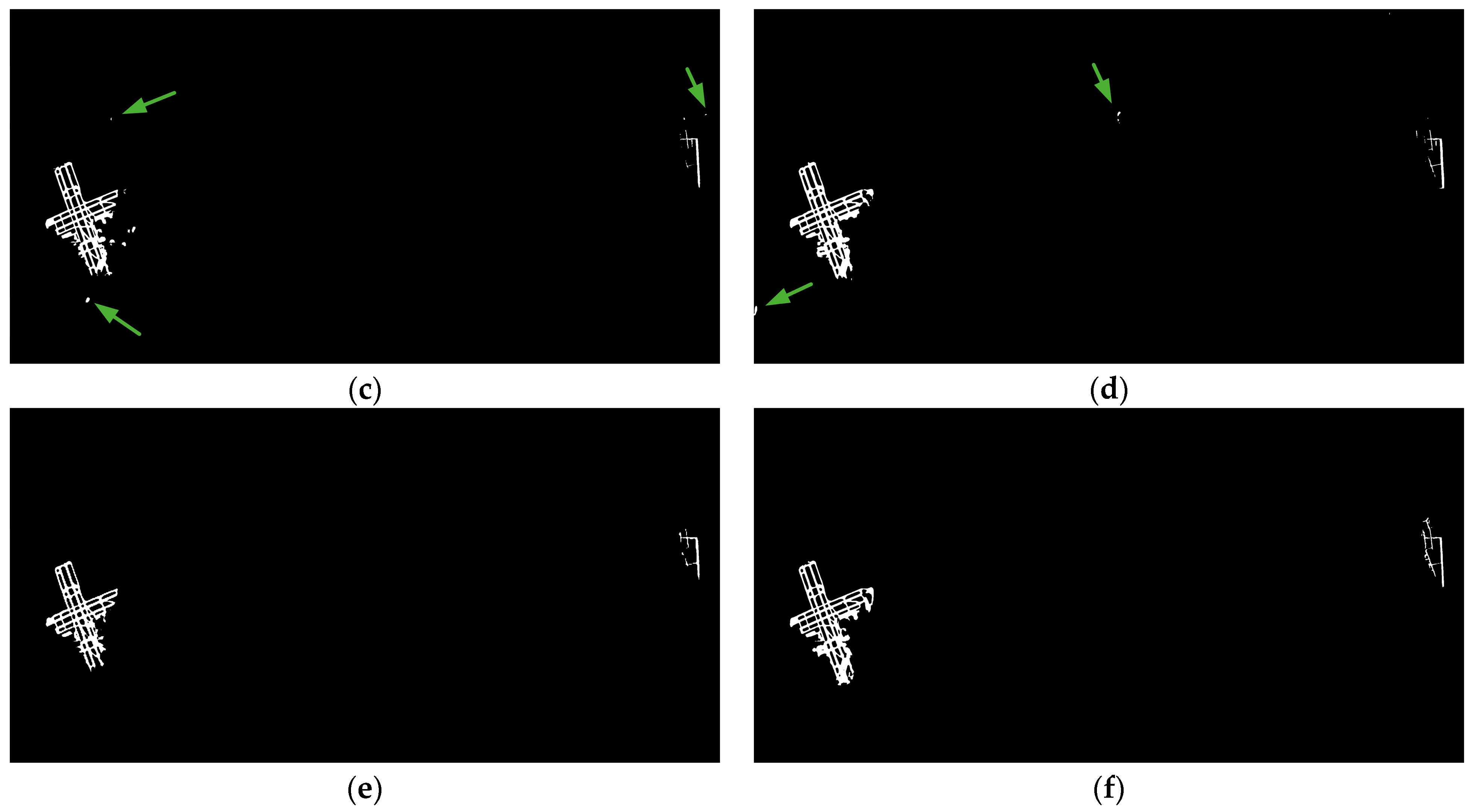

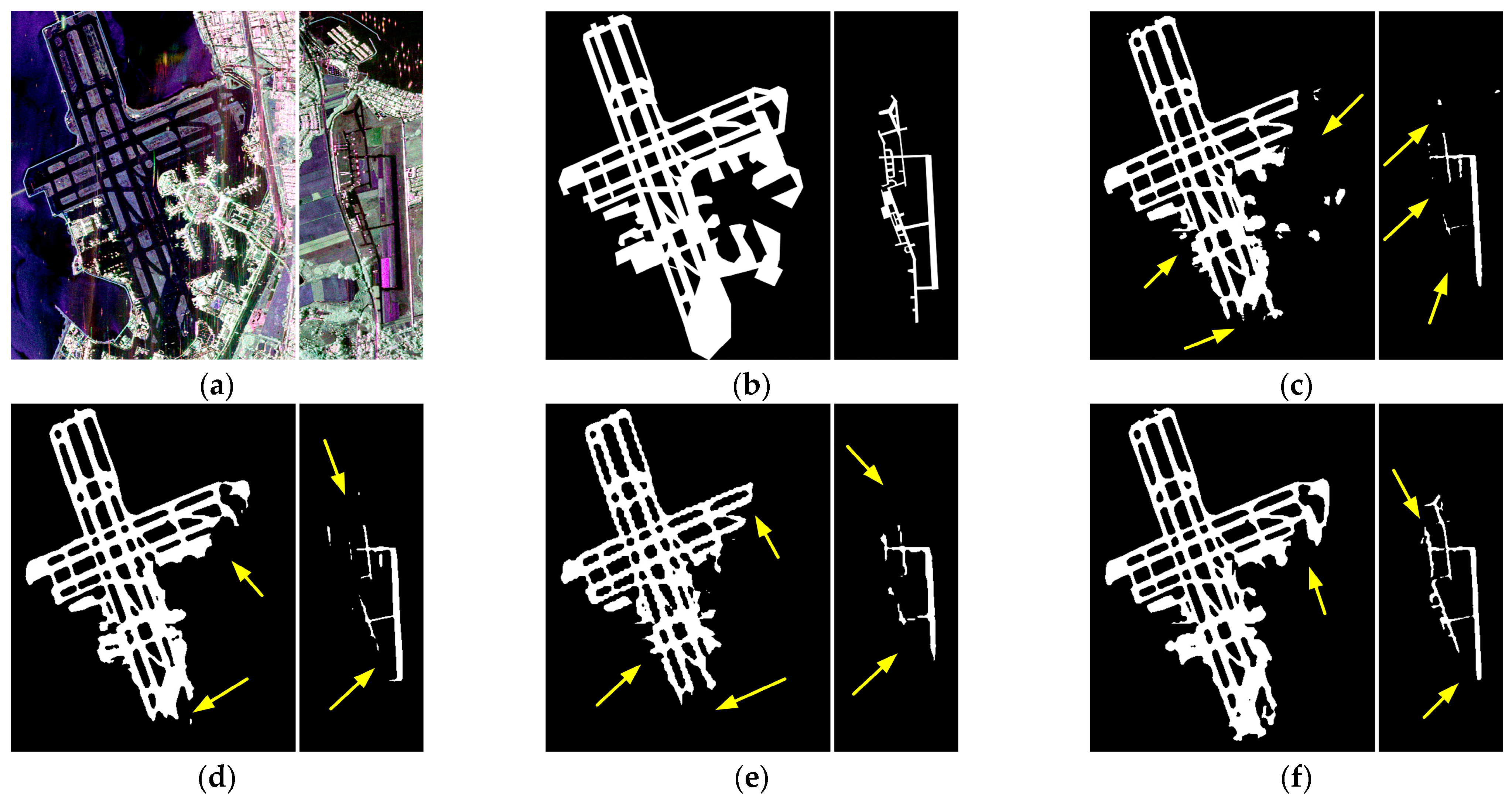

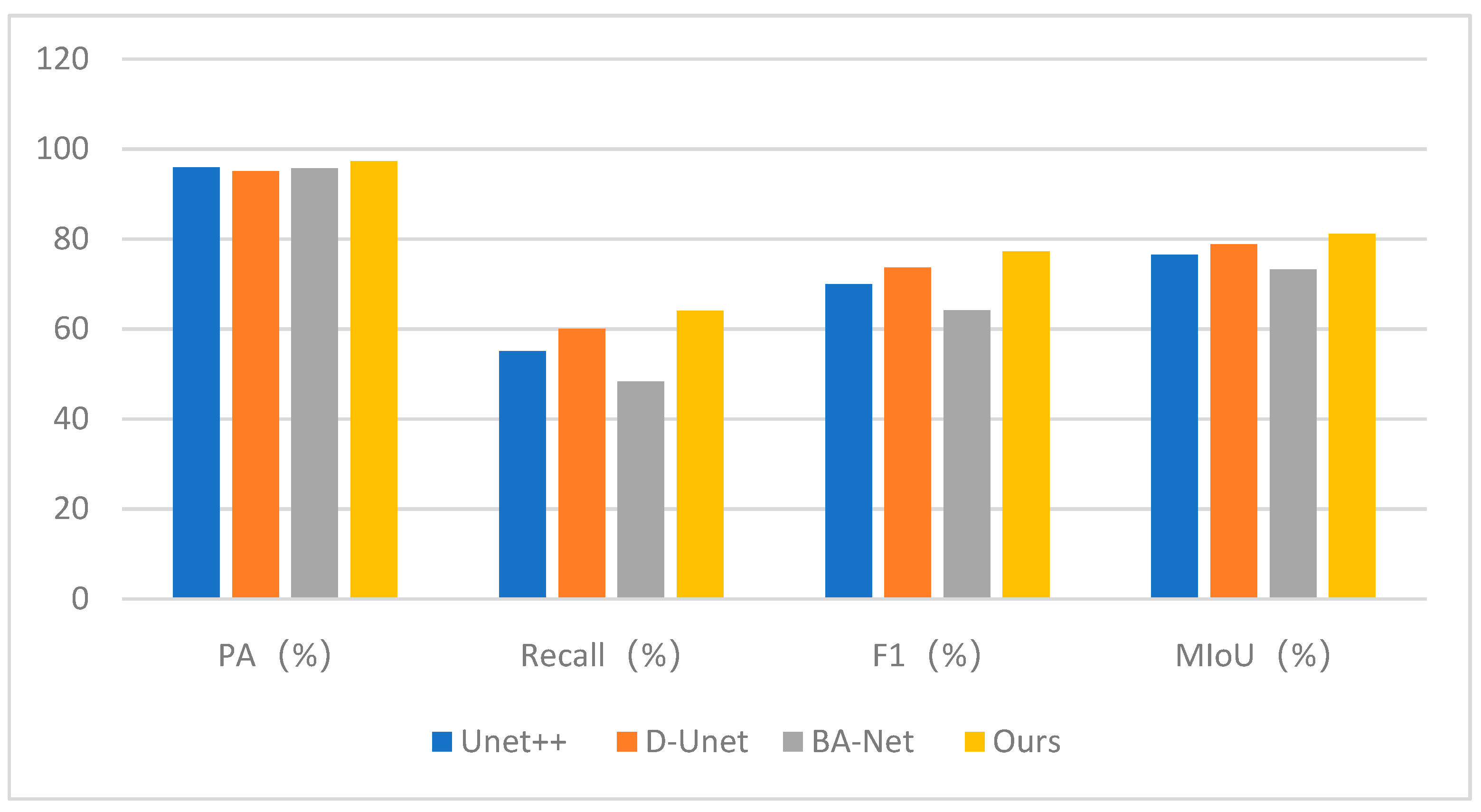

4.4.4. Comparative Experiment with SEL-Net

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Method | PA (%) | Recall (%) | F1 (%) | MIoU (%) |
|---|---|---|---|---|
| SimCLR | 93.25 | 21.35 | 34.64 | 57.87 |
| MOCO | 92.70 | 22.22 | 35.79 | 56.56 |
| SEL-Net (the feature images removed) | 90.26 | 7.60 | 14.01 | 54.20 |
| SEL-Net (ours) | 94.14 | 24.82 | 39.31 | 62.12 |

| Method | PA (%) | Recall (%) | F1 (%) | MioU (%) |

|---|---|---|---|---|

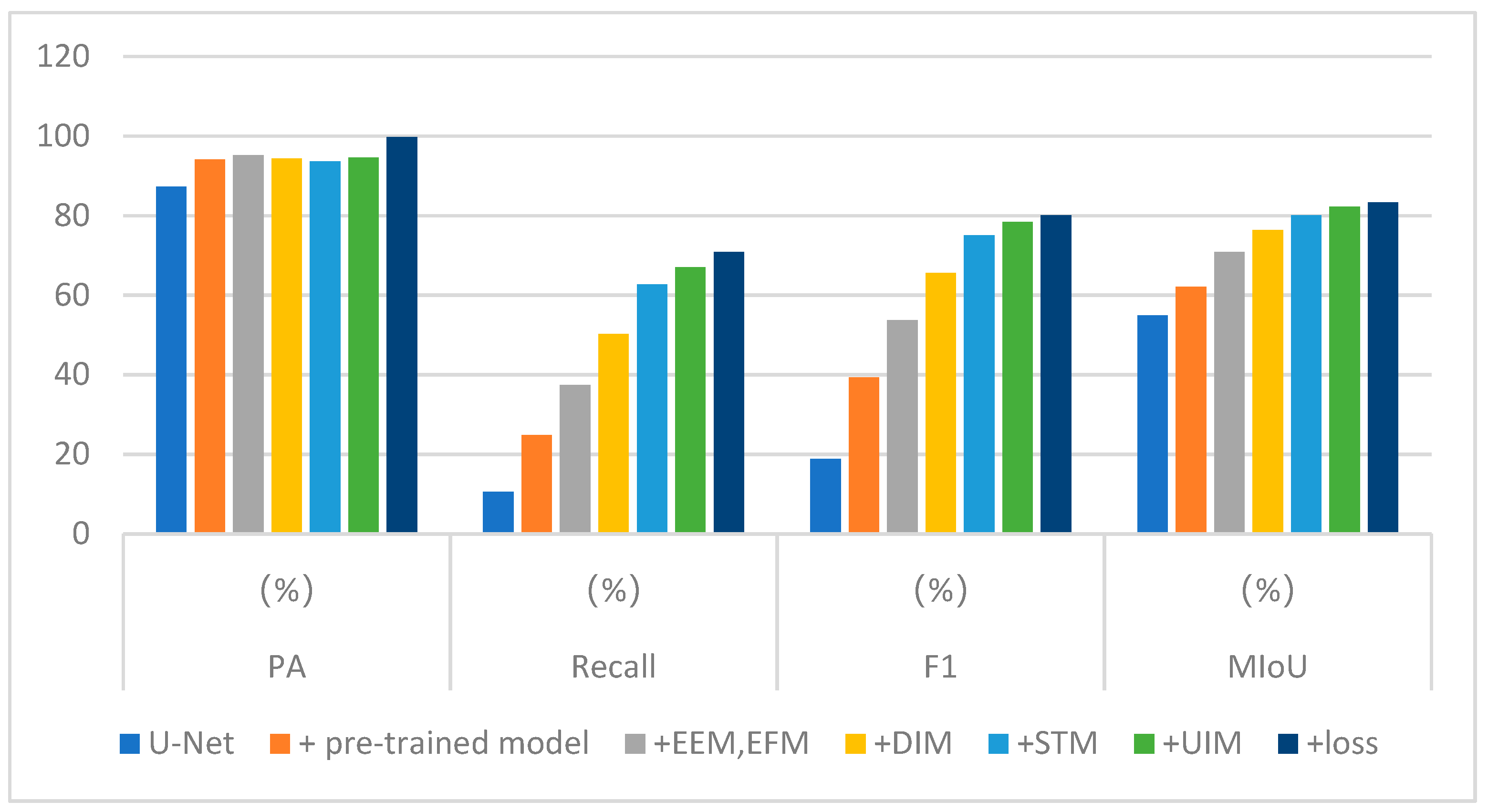

| U-Net | 87.26 | 10.56 | 18.80 | 54.88 |

| +pre-trained model | 94.14 | 24.82 | 39.31 | 62.12 |

| +EEM,EFM | 95.18 | 37.40 | 53.70 | 70.81 |

| +DIM | 94.32 | 50.24 | 65.55 | 76.32 |

| +STM | 93.64 | 62.67 | 75.09 | 80.02 |

| +UIM | 94.58 | 66.95 | 78.40 | 82.21 |

| +loss | 99.68 | 70.78 | 80.10 | 83.26 |

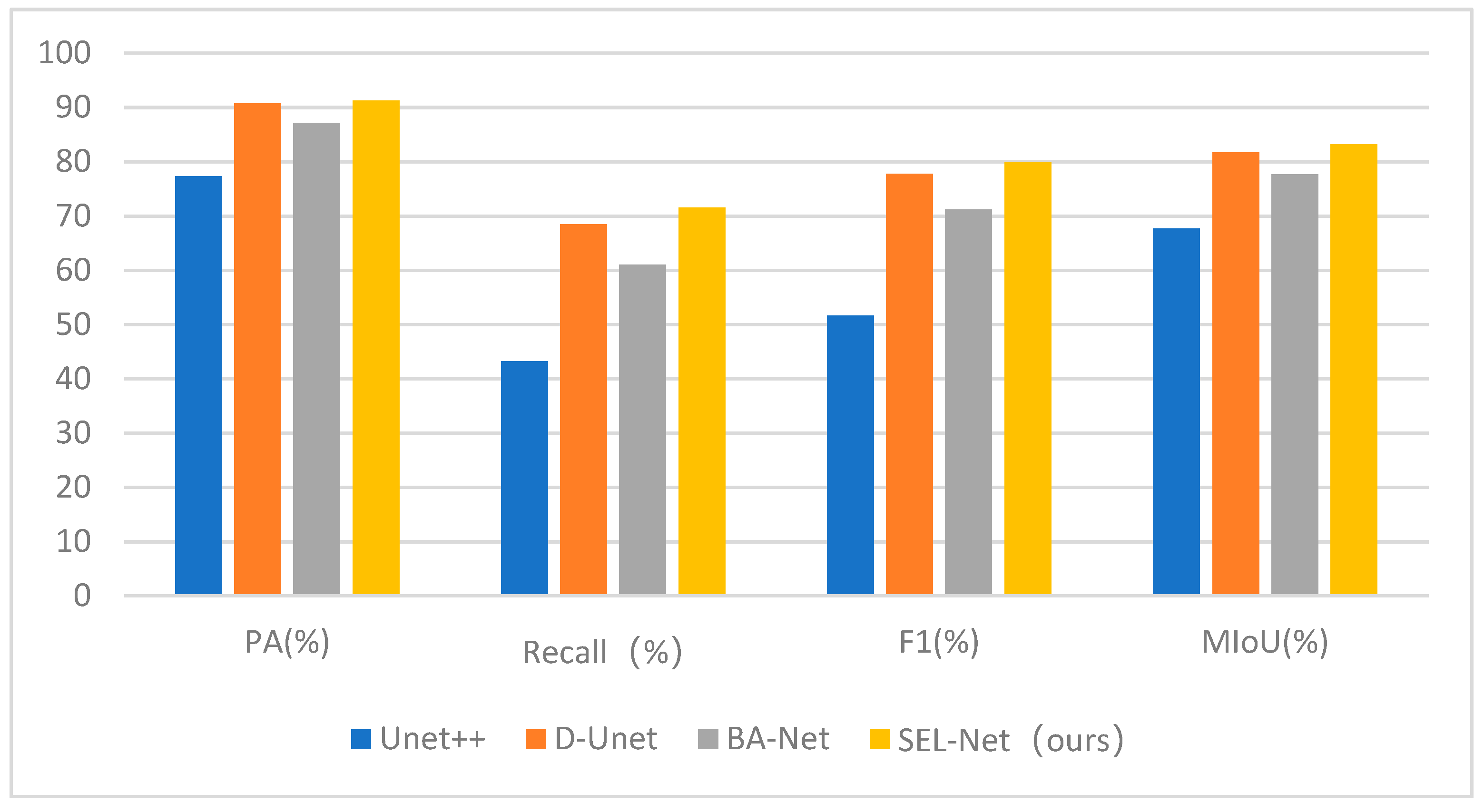

| Method | PA (%) | Recall (%) | F1 (%) | MioU (%) |

|---|---|---|---|---|

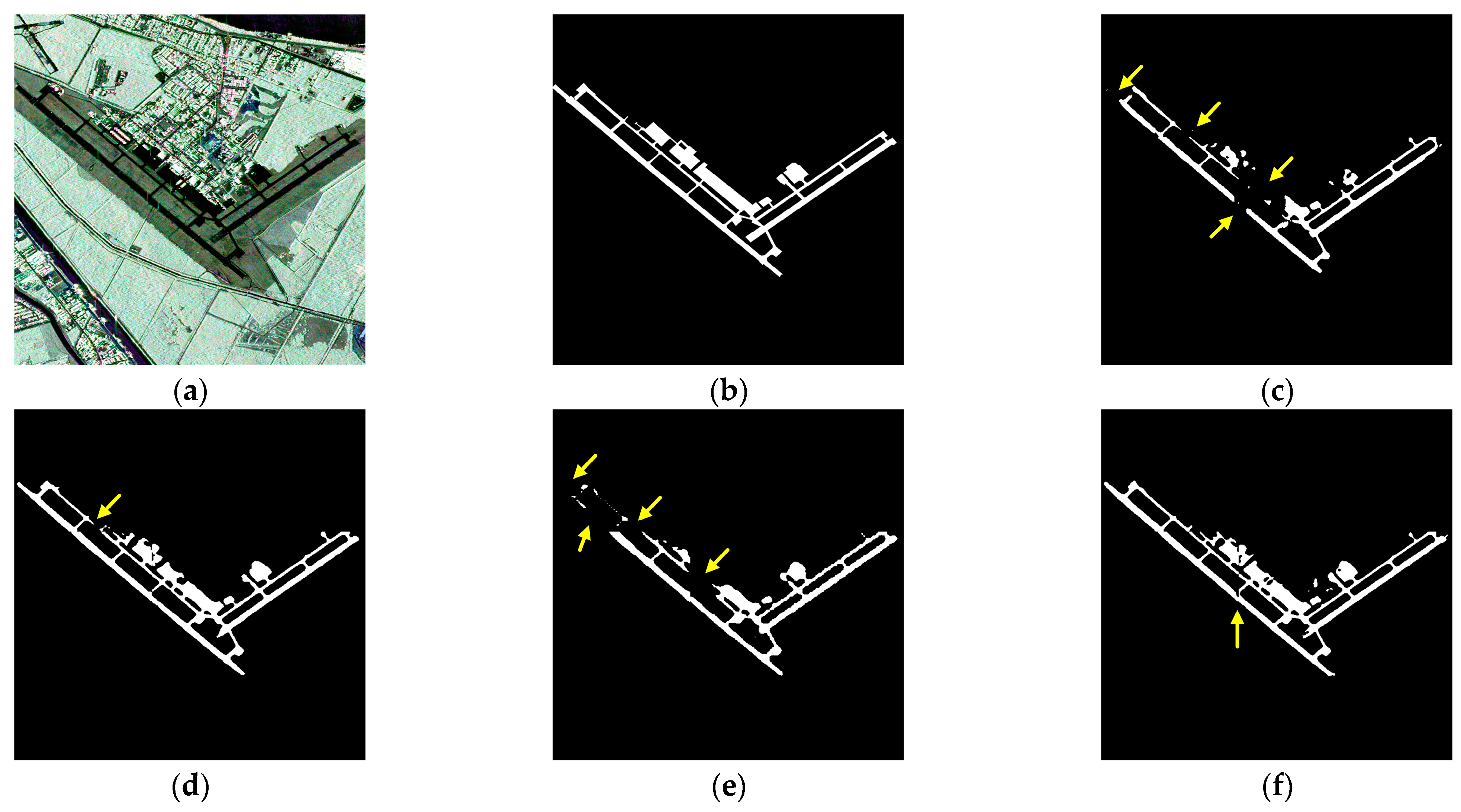

| Unet++ | 36.22 | 53.11 | 43.07 | 63.32 |

| D-Unet | 80.36 | 74.76 | 77.46 | 81.50 |

| BA-Net | 73.19 | 57.18 | 64.20 | 73.47 |

| SEL-Net (ours) | 81.95 | 74.65 | 78.12 | 81.95 |

| Method | PA (%) | Recall (%) | F1 (%) | MIoU (%) |
|---|---|---|---|---|
| Unet++ | 95.95 | 55.09 | 70.00 | 76.52 |
| D-Unet | 95.12 | 60.00 | 73.62 | 78.77 |
| BA-Net | 95.67 | 48.28 | 64.17 | 73.17 |
| SEL-Net (ours) | 97.25 | 64.07 | 77.25 | 81.15 |

| Method | PA (%) | Recall (%) | F1 (%) | MIoU (%) |
|---|---|---|---|---|
| Unet++ | 77.29 | 43.25 | 51.69 | 67.68 |
| D-Unet | 90.74 | 68.5 | 77.71 | 81.65 |
| BA-Net | 87.11 | 61 | 71.18 | 77.66 |
| SEL-Net (ours) | 91.26 | 71.53 | 79.89 | 83.17 |

| Method | Params (M) | FLOPs (G) | FPS |
|---|---|---|---|
| Unet++ | 47 | 798 | 1.33 |
| D-Unet | 16.84 | 272.80 | 1.77 |
| BA-Net | 37.22 | 208.52 | 1.67 |
| SEL-Net (ours) | 27.72 | 247.40 | 1.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, P.; Peng, Y.; Cheng, Z.; Liao, D.; Han, B. SEL-Net: A Self-Supervised Learning-Based Network for PolSAR Image Runway Region Detection. Remote Sens. 2023, 15, 4708. https://doi.org/10.3390/rs15194708