Abstract

Because remote sensing satellites cannot monitor ice avalanches in real time, this study focuses on the glacier at the rear edge of Jialongcuo, Tibet, and uses infrasound sensors to monitor ice avalanches in real time. The following conclusions are drawn: (1) In terms of waveform, compared with background noise, ice avalanche events show a slight left skew and a slightly steeper shape; compared with wind, rain, and flood events, ice avalanche events have less pronounced kurtosis and skewness. (2) In terms of frequency distribution, the infrasound generated by ice avalanche events is mainly distributed in the range of 1.5 Hz to 9.5 Hz, and its frequency characteristics differ somewhat from those of other events. (3) Models based on information entropy and on the marginal spectral frequency distribution of infrasound achieve higher accuracy in signal classification and recognition, as these features represent the differences between infrasound signals of different events better than other features. (4) Compared with the K-nearest neighbor and classification tree algorithms, the support vector machine and BP (back propagation) neural network algorithms are more suitable for identifying infrasound signals of the Jialongcuo ice avalanches. The results can provide theoretical support for the application of infrasound-based ice avalanche monitoring technology.

1. Introduction

Glaciers are widely distributed on the Qinghai–Tibet Plateau. The plateau is rich in fresh water resources that can meet the survival needs of about one third of the world's population, so it is also known as the “Asian Water Tower”. The Qinghai–Tibet Plateau serves as a regulator of climate change in the Northern Hemisphere and provides important ecosystem services for the Asian region. Affected by global warming, glaciers on the Qinghai–Tibet Plateau are melting and retreating. At the same time, topography, earthquakes, precipitation, and other internal and external forces further increase the probability of ice avalanche disasters [1,2,3]. An ice avalanche is the sudden collapse of the ice body at the front of a glacier under the action of gravity; it often occurs at the glacier terminus and sometimes in the middle and upper parts of the glacier, associated with ice falls [4]. Ice avalanches easily trigger a series of glacier disasters, such as ice lake collapse, debris flow, and plateau landslides [2,3,5,6], which not only affect the ecological environment of the plateau but also seriously threaten the lives and property of its people and reduce the service function of the ecosystem.

Records of ice avalanches date back to 1767, when Saussure, a Swiss geologist, meteorologist, and mountaineer, first recorded in detail the movement and ice avalanche phenomena of Alpine glaciers during mountaineering [7]. D.R. Crandell divided avalanches into four types in 1968, with ice avalanches as one of them [2]. In the same year, Pinchak [8] made a preliminary study on the ice avalanche probability of Vaughan Lewis Glacier. Hans [9] classified ice avalanches in terms of volume and distance of movement. Margreth et al. [10] conducted an ice avalanche hazard assessment based on the Gutzgletscher ice avalanches northwest of the Wetterhorn above Grindelwald (Bernese Alps, Switzerland) in September 1996 and January 1997, and analyzed the formation mechanism and research difficulties of ice avalanches. An ice avalanche is considered to be the process in which an ice body breaks from the glacier, falls onto the slope, and breaks into small pieces of ice under the action of gravity. As the understanding of ice avalanches deepened, researchers began to study and monitor ice avalanche activity. Salzmann et al. [11] developed a method to detect potentially hazardous areas based on statistical parameters, geographic information system (GIS) modeling technology, and remote sensing technology. Vilajosana et al. [12] used seismographs to observe and estimate the energy transferred to the ground by ice avalanches, which was used to verify the model and evaluate the size and classification of ice avalanches. Herwijnen et al. [13] used seismic sensors combined with automatic cameras to monitor ice avalanches and predict ice avalanche activity. Murayama et al. [14] monitored ice shocks through an infrasound array deployed in a bay of eastern Antarctica and found that ice shocks produce special infrasound signals, and that ice avalanches are one of the causes of ice shocks and special infrasound. Asming et al. [15] also carried out infrasound monitoring of glaciers in the Arctic and Svalbard, which again showed that infrasound can be used to monitor glacier damage. Mayer et al. [16] also monitored ice avalanche activity through different infrasound detection systems installed in the Swiss Alps. Herwijnen et al. [13] monitored ice avalanches with an infrasound array and evaluated the avalanche activity near the avalanche area by machine learning. Graveline et al. [17] indicated that there may be interaction between ice avalanches and snowslides. Compared with the research on ice avalanche disasters worldwide, there are fewer studies on individual ice avalanche disasters in China, and more on secondary disasters, such as glacial lake collapse and glacier debris flow caused by ice avalanches. Scholars [18,19,20,21] analyzed a large number of ice lake collapse events in Tibet and showed that ice avalanches were one of the main factors leading to ice lake collapse.

At present, research methods for ice avalanche disasters mainly include site investigation, remote sensing, seismograph, and numerical simulation [12,13]. As ice avalanche disasters often occur in remote mountainous areas with rugged terrain, site investigation is very difficult and dangerous, so the actual data collected are very limited. The development of remote sensing technology has promoted the research on ice avalanche disasters. Remote sensing images can monitor glaciers in all directions, but remote sensing technology relies on the temporal and spatial resolution of satellites and cannot monitor glaciers in real time. Seismographs are mainly used to record the frequency of avalanche events currently, but do not record the whole dynamic process of avalanches. Numerical simulation can simulate the main factors influencing the avalanche, but it is still difficult to simulate the mechanism of an avalanche. In view of this situation, taking the trailing edge glacier of Jialongcuo in Tibet as the research object, infrasound with the characteristics of low frequency, low attenuation, and long propagation distance [22,23,24,25] is used to monitor ice avalanches in this work. On this basis, combined with other monitoring data, features of avalanche infrasound signals were extracted, and avalanche infrasound signals were sorted out from signals of other events to provide technical support for follow-up monitoring and early warning of secondary disasters (ice lake collapse and glacier debris flow, etc.). The infrasound monitoring of ice avalanches can make up for the deficiency of remote sensing technology and realize real-time monitoring of ice avalanche disasters.

2. Methods

2.1. Study Area

2.1.1. Geographical Location

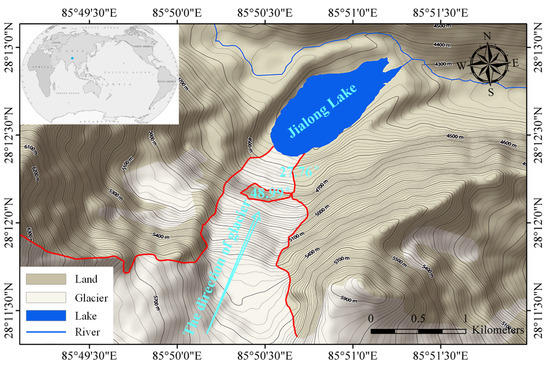

The glacier at the trailing edge of Jialongcuo lies in the middle part of the Himalayas, and its watershed code is 50191B. According to the second round of glacier statistics, this glacier is about 4.52 square kilometers in area and about 0.30 cubic kilometers in volume (based on the average estimated volume of the two rounds). The glacier has a maximum elevation of 6660.7 m, a minimum elevation of 4384.2 m, an average elevation of 5593.6 m, an average aspect (slope direction) of 56.0 degrees, and an average slope of 27.6 degrees (the above data are from the second catalogue of glaciers in Tibet), as shown in Figure 1.

Figure 1.

Geographical location of the study area.

According to the classification of Chinese glaciers by the Chinese glaciologist and academician Shi Yafeng, the upper glacier of Jialongcuo is a continental (cold) glacier. The area has low temperatures, a dry climate, little precipitation, and a weaker water vapor cycle than the southern slope of the Himalayas, so the glacier moves slowly and is weakly active.

2.1.2. Climate

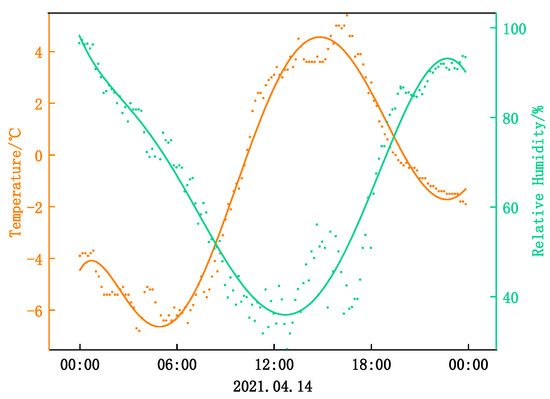

A set of weather stations has been set up in the study area, and meteorological data from 5 November 2020 to 19 January 2022 have been collected. Temperature, air humidity, and wind direction data were processed and analyzed in this work, as shown in Figure 2.

Figure 2.

Variation trend of daily temperature and humidity.

The monitoring results show that the temperature in the study area stays below 15 degrees Celsius throughout the year and varies widely between day and night. The daily temperature peaks between 12:00 and 14:00. The relative humidity of the air moves opposite to the temperature: it falls as the temperature rises, because the water in the air decreases due to evaporation, while sporadic light rain in the evening and at night increases the moisture content of the air.

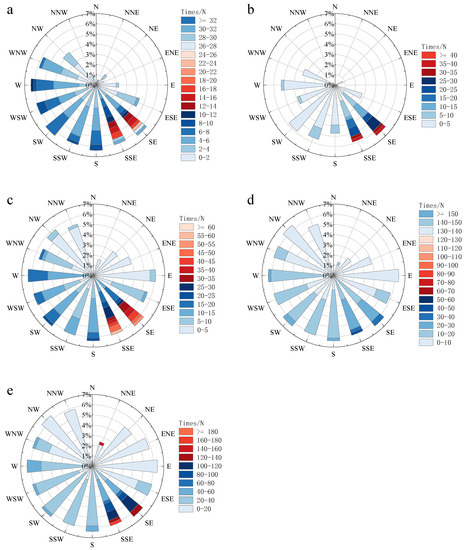

It can be seen from Figure 3 that southeasterly winds prevail in the study area, which is closely related to the geographical location of the monitoring point. Except for a channel in the southeast, the monitoring point is surrounded by high mountains in the other directions, and thus has weak air circulation overall. Affected by the Indian Ocean monsoon in summer, a small amount of warm and wet air rises along the Zhangmu channel to the southeast of the monitoring point, so it mainly has southeasterly winds in the summer.

Figure 3.

Distributions of wind directions in different seasons: wind rose charts for (a) spring, (b) summer, (c) autumn, (d) winter, and (e) the whole year.

2.1.3. Earthquake

According to statistics on the earthquakes that have occurred within 200 km of the monitoring site since 2021 (Table 1), there were no earthquakes of magnitude ≥ 5.0, 4 earthquakes of magnitude ≥ 4.0, and 10 earthquakes of magnitude < 4.0 over the whole year. The epicenter closest to the monitoring point (the Nie Lamu County earthquake of magnitude 4.2, on 21 August 2023 at 16:51:59) was approximately 118 km away. Therefore, earthquakes may have some impact on potentially unstable glaciers.

Table 1.

Statistics on earthquakes from January 2021 to February 2022.

2.1.4. Topographic Factor

As shown in Figure 1, the part circled by the red line in the picture is the monitored glacier, which moves toward the glacial lake. The ice tongue of the glacier is about 4880 m in elevation, about 430 m vertically to the ice lake surface, and about 230 m vertically to the position of the steps (surrounded by orange thin strips in the picture). The corresponding angle of the ice tongue at the steps is about 48.99°, exceeding the limit slope of a cold glacier (45°). The steps are 200 m vertically from the lake surface, and the slope of the ice tongue in this section is about 27.76°. The slope of the ice tongue is steep on the whole, which is not conducive to the stability of the glacier.

2.1.5. Disaster Form

Due to the steep slope at the first step of the glacier, if large amounts of ice fall, they will directly gush into the ice lake and cause surges, which may cause the spillway to burst. The spillway has a height difference of about 40 m with the river and is nearly perpendicular to the other side of the river bank. When a surge instantly rushes into the river and hits the slope of the opposite bank, this part of the bank with loose soil may experience landslides or collapses (Figure 4), causing slope instability and providing large amounts of material sources for debris flow. That is to say, the form of the disaster chain is ice avalanche–ice lake collapse–debris flow. The debris flow formed by the collapse of the ice lake is sudden, which is often accompanied by erosion on both sides of the channel, resulting in the collapse of soil on both sides of the channel, the destruction of vegetation, and the flooding of pastures in areas with flat terrain, causing damage to the ecological environment and posing a threat to the lives and property of local residents. According to the literature records, in May and June 2002, there were two ice avalanches into the Jialongcuo lake, which led to collapses of the ice lake and debris flow disasters.

Figure 4.

The slope on the opposite side of the spillway.

2.2. Data Collection

2.2.1. Overview of Equipment Layout

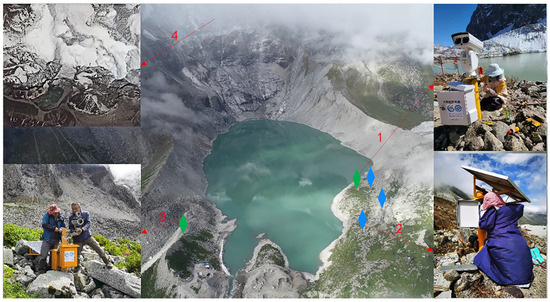

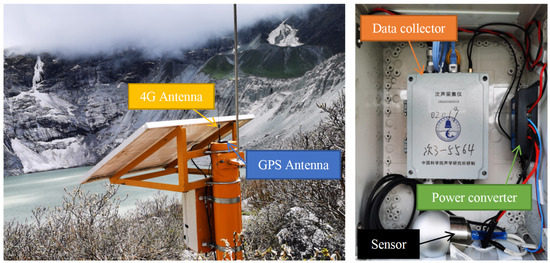

The monitoring equipment is installed in Jialong Cuo, Nyalamu County, Rikaze City, Tibet Autonomous region, at the latitude 28°12′24.55″–28°12′54.13″N, longitude 85°51′00.63″–85°51′11.48″E, and about 4376 m above sea level.

As shown in Figure 5, the blue marks are the positions of infrasound monitoring equipment (corresponding to 2 in the figure). Three sets of infrasound monitoring equipment are installed to form an infrasound array, and the sensors are all facing the glacier (corresponding to 4 in the figure) in the rear edge of Jialongcuo. The infrasound array can effectively reduce the interference of background noise and improve the signal-to-noise ratio of the signals. The green marks are the position where the cameras are; the camera on the right side of the lake (corresponding to 1 in the figure) is used to cooperate with infrasound monitoring equipment to monitor the glacier dynamics, and the camera on the left side of the lake (corresponding to 3 in the figure) is used to monitor whether there are surges and bursts at the overflow mouth of the ice lake.

Figure 5.

Schematic diagram of the location of monitoring equipment. The green marks are the positions of the cameras (1 and 3 in the figure), the blue marks are the positions of the infrasound monitoring equipment (2 in the figure), and the number 4 marks the glacier.

2.2.2. Infrasound Signal Acquisition

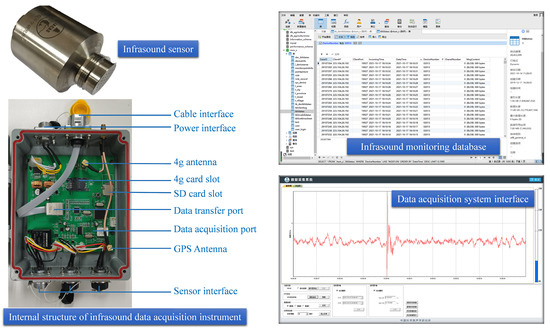

The infrasound monitoring equipment used in this study includes an infrasound sensor, infrasound data acquisition instrument, and supporting infrasound data acquisition system developed by the Institute of Acoustics, Chinese Academy of Sciences. The detectable range of the infrasound sensor is from 0.5 to 200 Hz. Its 50 mV/Pa sensitivity enables it to collect weak signals. Other indicators of the sensor are shown in Table 2. The collected data are sent to the original infrasound database through a 4G network online (Figure 6).

Table 2.

Index parameters of infrasound sensor.

Figure 6.

Infrasound signal acquisition equipment and software platform.

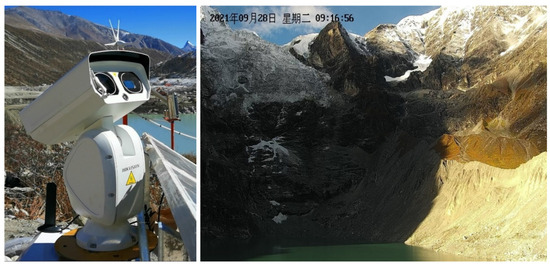

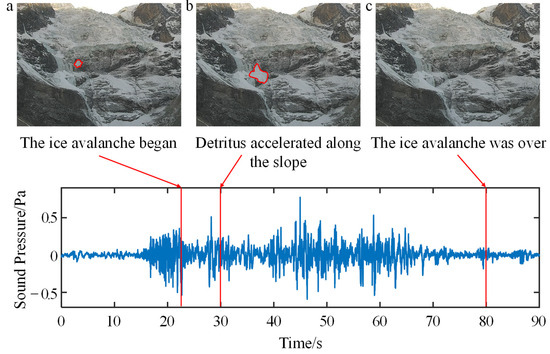

Since the infrasound record alone cannot confirm that a monitored abnormal event is an ice avalanche, a video surveillance device is installed near the infrasound array, providing online video transmission and local storage at the same time (see Figure 7 and Figure 8). In this study, infrasound signals occurring 2–3 min before and after a possible ice avalanche were collected and compared with the surveillance video to determine whether the event was an ice avalanche (see Figure 9).

Figure 7.

Schematic diagram of infrasound installation.

Figure 8.

Camera and surveillance video.

Figure 9.

An ice avalanche on 24 November 2021. According to the video, the ice avalanche occurred at 17:15:20, and the infrasound signal was extracted for the time range 17:15:00–17:16:30; (a) corresponds to 17:15:23, (b) to 17:15:30, and (c) to 17:16:20.

2.3. Analysis Method

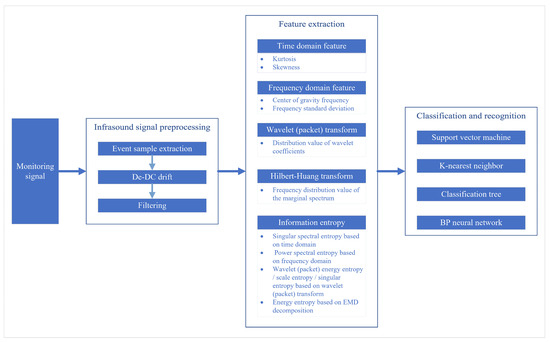

The collected signal needs to be processed before it can be analyzed. Figure 10 shows the signal processing work flow.

Figure 10.

Technical work flow chart.

2.3.1. Infrasound Signal Preprocessing

The collected infrasound signals are discrete nonlinear data. In addition, because of insufficient power supply to the equipment and interruptions of the network signal at the monitoring site, the collected infrasound signals may contain gaps, high-frequency noise interference, and a large amount of useless information. It is therefore necessary to preprocess the original signals before extracting the features of the infrasound signals of ice avalanche, flood, wind, and rain events. The preprocessing aims to improve the signal-to-noise ratio through event sample extraction, removal of DC (direct current) drift (de-DC), and filtering.

(1) Event sample extraction

Because the data volume is large and traditional manual identification of samples is time-consuming, the ratio of the short-time average (STA) to the long-time average (LTA), namely the time window ratio method, was used to obtain the first arrival time of events. Whether an event was an ice avalanche was then judged from the first arrival time and the video surveillance, and the ice avalanche event samples were extracted.

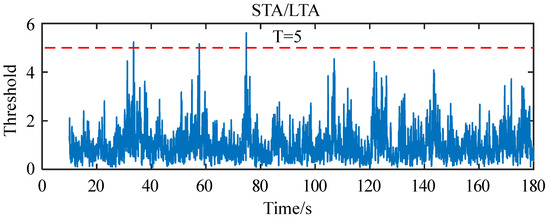

The time window ratio method sets a long time window and a short time window that move along the time series, and the signal amplitudes within each window are averaged. When the ratio R of the short-window average to the long-window average exceeds the set threshold T, an event is declared [26,27].

$$R = \frac{\mathrm{STA}}{\mathrm{LTA}} = \frac{\frac{1}{N_S}\sum_{i=n-N_S+1}^{n}\left|x_i\right|}{\frac{1}{N_L}\sum_{i=n-N_L+1}^{n}\left|x_i\right|} \quad (1)$$

In Equation (1), $N_L$ and $N_S$ are the lengths of the long time window and short time window, respectively, and $\left|x_i\right|$ is the amplitude of the signal at sample $i$. In this study, the lengths of the long time window and short time window were 10 s and 0.1 s, respectively, and the threshold was set at 5. Figure 11 shows the identified avalanche events.

Figure 11.

Ice avalanches on 10 June 2021.
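The time window ratio method can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `sta_lta_triggers`, the use of absolute sample values as the amplitude, and the re-arming rule (a new trigger is only allowed after the ratio has dropped back to the threshold or below) are assumptions made for illustration. The window lengths and threshold default to the values stated in the text (0.1 s, 10 s, and 5).

```python
def sta_lta_triggers(samples, fs, sta_s=0.1, lta_s=10.0, threshold=5.0):
    """Return sample indices where the STA/LTA ratio rises above the threshold.

    samples: sequence of signal values; fs: sampling frequency in Hz (assumed known).
    Both windows end at the same sample, so the ratio is causal.
    """
    n_sta = max(1, int(round(sta_s * fs)))
    n_lta = max(1, int(round(lta_s * fs)))
    amp = [abs(v) for v in samples]

    # Prefix sums turn every window mean into a constant-time lookup.
    csum = [0.0]
    for a in amp:
        csum.append(csum[-1] + a)

    triggers = []
    armed = True  # hypothetical re-arming rule: one trigger per excursion
    for i in range(n_lta, len(amp) + 1):  # i is one past the last sample of both windows
        sta = (csum[i] - csum[i - n_sta]) / n_sta
        lta = (csum[i] - csum[i - n_lta]) / n_lta
        ratio = sta / lta if lta > 0 else 0.0
        if ratio > threshold:
            if armed:
                triggers.append(i - 1)
                armed = False
        else:
            armed = True
    return triggers
```

Each returned index is a candidate first arrival; in the workflow described above it would still have to be checked against the surveillance video before the sample is labelled as an ice avalanche.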

- (2)

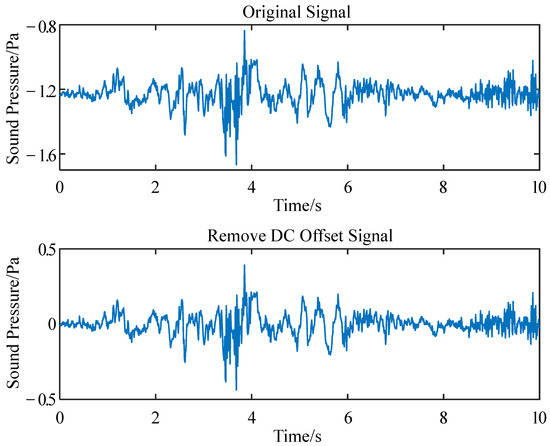

- De-DC drift

The data acquisition equipment can produce a constant interference signal during operation, namely, the DC component. This part of the signal carries no information but does carry energy, which would introduce an energy error into the subsequent data processing; so, it is necessary to remove it before signal processing.

$$x'(t) = x(t) - \bar{x}$$

where $x(t)$ is the original signal fragment containing the ice avalanche event for about 3 min, $\bar{x}$ is its mean value, and $x'(t)$ is the signal after DC removal. As shown in Figure 12, the original signal has a DC drift of about −1.2/Pa.

Figure 12.

Original signal and de-DC signal.
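A minimal sketch of the DC removal follows. It assumes that the DC component is estimated as the arithmetic mean of the extracted fragment; the function name `remove_dc` is hypothetical and the estimator actually used in the study may differ.

```python
def remove_dc(samples):
    """Subtract the mean of the fragment so that the result is centred on zero."""
    mean = sum(samples) / len(samples)
    return [v - mean for v in samples]
```

After this step the fragment has zero mean, so a constant offset no longer contributes to energy-based features computed later.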

- (3)

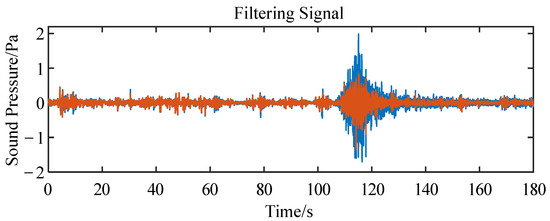

- Filtering

The response frequency range of the infrasound sensor is 0.1–1000 Hz, that is, in the actual signal acquisition, part of the signals collected by the sensor will be greater than 20 Hz in frequency. As only the signals no more than 20 Hz in frequency were analyzed in this study, signals larger than 20 Hz in frequency need to be removed through filtering. The filter adopted the window function design method and, specifically, the Kaiser window with high adaptability. The filtering effect is shown in Figure 13.

Figure 13.

Raw data filtering. The blue line indicates the original signal and the orange line represents the filtered signal.
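As an illustration of the window function design method, the sketch below builds a low-pass FIR filter from an ideal sinc response tapered by a Kaiser window. The number of taps, the shape parameter `beta`, and the sampling frequency are not given in the text and are assumptions here; only the 20 Hz cutoff comes from the study. The function names are hypothetical.

```python
import math


def bessel_i0(x, terms=30):
    """Zeroth-order modified Bessel function of the first kind (power series)."""
    total, term = 1.0, 1.0
    for k in range(1, terms):
        term *= (x / (2.0 * k)) ** 2
        total += term
    return total


def kaiser_lowpass(num_taps, cutoff_hz, fs, beta=8.6):
    """Low-pass FIR coefficients: ideal sinc response times a Kaiser window."""
    m = num_taps - 1                      # filter order (num_taps must exceed 1)
    fc = cutoff_hz / fs                   # cutoff in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - m / 2.0
        ideal = 2.0 * fc if k == 0 else math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        r = 2.0 * n / m - 1.0             # position in the window, from -1 to 1
        window = bessel_i0(beta * math.sqrt(max(0.0, 1.0 - r * r))) / bessel_i0(beta)
        taps.append(ideal * window)
    gain = sum(taps)
    return [t / gain for t in taps]       # normalise to unity gain at 0 Hz


def fir_filter(samples, taps):
    """Causal FIR filtering by direct convolution; output length equals input length."""
    out = []
    for i in range(len(samples)):
        acc = 0.0
        for j, t in enumerate(taps):
            if i - j >= 0:
                acc += t * samples[i - j]
        out.append(acc)
    return out
```

For example, `kaiser_lowpass(101, 20.0, 100.0)` would give a 101-tap filter for a signal assumed to be sampled at 100 Hz. A larger `beta` increases stopband attenuation at the cost of a wider transition band, which is the trade-off that makes the Kaiser window adaptable.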

2.3.2. Extraction Methods of Avalanche Infrasound Features

(1) Extraction of time domain features

In this study, the kurtosis and skewness of the signal are extracted to characterize its waveform in the time domain. Kurtosis reflects how peaked the waveform is: the higher the kurtosis, the steeper the waveform. Skewness reflects the asymmetry of the waveform: positive skewness indicates a right skew, and negative skewness indicates a left skew.

Kurtosis is expressed as:

$$K = \frac{E\left[(x-\mu)^4\right]}{\sigma^4}$$

Skewness is expressed as:

$$S = \frac{E\left[(x-\mu)^3\right]}{\sigma^3}$$

In the equations above, $x$ represents the input signal, $\mu$ represents the mean value of the input signal, $\sigma$ represents the standard deviation of the input signal, and $E[\cdot]$ represents the expected value.
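The two time domain features can be computed as standardized central moments. The sketch below assumes population (not bias-corrected) moments and non-excess kurtosis, for which a Gaussian signal gives a value of about 3; whether the study applied any correction is not stated. The function name is hypothetical.

```python
import math


def skewness_kurtosis(samples):
    """Return (skewness, kurtosis) as the third and fourth standardized moments."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in samples) / n)
    skew = sum((v - mean) ** 3 for v in samples) / n / std ** 3
    kurt = sum((v - mean) ** 4 for v in samples) / n / std ** 4
    return skew, kurt
```

A symmetric waveform gives a skewness of zero, a negative value corresponds to the left skew reported for ice avalanche events, and a larger kurtosis corresponds to a steeper waveform.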

(2) Extraction of features in frequency domain

In this study, the frequency spectrum and power spectrum of the signal are obtained by Fast Fourier Transform (FFT), and the center of gravity frequency [28] and frequency standard deviation [29] of the signal are extracted. The center of gravity frequency represents the frequency distribution of the signal on the frequency spectrum. The frequency standard deviation characterizes the dispersion of the power spectrum energy of the signal.

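$$X(f) = \int_{-\infty}^{+\infty} x(t)\, e^{-j 2\pi f t}\, dt$$

$X(f)$ represents the Fourier transform of the signal, $f$ is the frequency, and $x(t)$ represents the input signal [30].

$$P(k) = \frac{\left|X(k)\right|^2}{N}$$

$P(k)$ is the power spectrum, $X(k)$ represents the fast Fourier transform of the signal, and $N$ represents the length of the signal.

$$f_c = \frac{\sum_{k} f_k P(k)}{\sum_{k} P(k)}$$

$f_c$ is the center of gravity frequency, and $f_k$ is the frequency of the $k$-th spectral line.

$$\sigma_f = \sqrt{\frac{\sum_{k} \left(f_k - f_c\right)^2 P(k)}{\sum_{k} P(k)}}$$

$\sigma_f$ is the standard deviation of the frequency.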
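The center of gravity frequency and the frequency standard deviation can be sketched as the power-weighted mean and spread of the spectral lines. The sketch below uses a direct discrete Fourier transform instead of an FFT to stay dependency-free, keeps only the one-sided spectrum, and normalizes the power by the signal length; these choices and the function names are assumptions for illustration.

```python
import cmath
import math


def power_spectrum(samples, fs):
    """One-sided power spectrum from a direct DFT (O(n^2), fine for short fragments)."""
    n = len(samples)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def centroid_and_spread(freqs, power):
    """Power-weighted mean frequency and power-weighted frequency standard deviation."""
    total = sum(power)
    fc = sum(f * p for f, p in zip(freqs, power)) / total
    var = sum((f - fc) ** 2 * p for f, p in zip(freqs, power)) / total
    return fc, math.sqrt(var)
```

For a pure tone the centroid coincides with the tone frequency and the spread is close to zero, whereas a broadband event yields a larger spread.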

(3) Joint extraction of features in time and frequency domains

The time domain analysis and frequency domain analysis of the signal can only characterize the characteristics of the signal from one aspect. In this paper, wavelet and Hilbert–Huang transform analyses were selected to obtain wavelet coefficients and the Hilbert marginal spectrum distribution, respectively, which were used to characterize the features of the signal in both the time domain and the frequency domain.

① Wavelet transform

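The discrete wavelet transform depends on the selection of the wavelet function and is mainly divided into the following five steps [31]:

Step 1: suppose that the function $\psi(t)$ satisfies $\psi(t) \in L^2(\mathbb{R})$, and its Fourier transform $\hat{\psi}(\omega)$ satisfies the following condition; then $\psi(t)$ is a mother wavelet:

$$C_\psi = \int_{-\infty}^{+\infty} \frac{\left|\hat{\psi}(\omega)\right|^2}{|\omega|}\, d\omega < \infty$$

Step 2: by stretching and translating the mother wavelet, a set of wavelet basis functions is obtained, as in the following formula, where $a, b \in \mathbb{R}$ and $a \neq 0$, $a$ is the scale factor, and $b$ is the translation factor, all of which are continuously changing values:

$$\psi_{a,b}(t) = \frac{1}{\sqrt{|a|}}\, \psi\!\left(\frac{t-b}{a}\right)$$

Step 3: the scale parameter $a$ and the shift parameter $b$ are discretized, that is, $a = a_0^{\,j}$, $b = k a_0^{\,j} b_0$, $j, k \in \mathbb{Z}$, and the discrete wavelet basis function $\psi_{j,k}(t)$, from which the wavelet coefficients are computed, is obtained:

$$\psi_{j,k}(t) = a_0^{-j/2}\, \psi\!\left(a_0^{-j} t - k b_0\right)$$

Step 4: discrete wavelet transform of signal $x(t)$:

$$W_x(j,k) = \int_{-\infty}^{+\infty} x(t)\, \psi_{j,k}^{*}(t)\, dt$$

Step 5: calculate the energy and energy proportion on different scales:

$$E_j = \sum_{k} \left|W_x(j,k)\right|^2, \qquad P_j = \frac{E_j}{\sum_{j} E_j}$$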

The main eigenvalue extracted based on the wavelet transform is the distribution value of wavelet coefficients, and the extraction includes four main steps:

Step 1: wavelet packet decomposition: the pre-processed signal is decomposed by three layers of wavelet packets, which gives the waveforms of 8 nodes, and each node waveform corresponds to a set of wavelet packet coefficients $d_i(k)$.

Step 2: take the absolute values of the wavelet coefficients of the waveform at each node and sum them.

$$S_i = \sum_{k=1}^{K} \left|d_i(k)\right|$$

where $k$ is a natural number that does not exceed the finite length $K$ of the coefficient sequence.

Step 3: the ratio of the sum of wavelet packet coefficients of each node to the sum of all node coefficients is calculated:

$$p_i = \frac{S_i}{\sum_{j=1}^{N} S_j}$$

where $N$ is the number of nodes corresponding to the number of decomposition layers, and in this study, the decomposition layers are three, that is, $N = 2^3 = 8$.

Step 4: finally, the ratio between the proportion calculated in the above step and the proportion of the adjacent node is determined, and that is the eigenvalue of the wavelet coefficient distribution.

Through the above steps, the eigenvalues of the wavelet coefficient distribution corresponding to the signal can be extracted. In this study, the signal was decomposed by three layers of wavelet packets into 8 nodes, and the corresponding eigenvalue has 7 dimensions.
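The four extraction steps can be sketched end to end under several assumptions that the text does not fix: a Haar wavelet is used as the basis, the nodes are kept in natural (not frequency) order, each adjacent-node ratio is taken as node i over node i+1, and a small constant `eps` guards against division by zero. All function names are hypothetical.

```python
import math


def haar_split(x):
    """One Haar analysis step: return (approximation, detail) coefficient lists."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    return approx, detail


def wavelet_packet_nodes(x, levels=3):
    """Full wavelet packet tree: every node is split again, giving 2**levels nodes."""
    nodes = [list(x)]
    for _ in range(levels):
        next_nodes = []
        for node in nodes:
            approx, detail = haar_split(node)
            next_nodes.extend([approx, detail])
        nodes = next_nodes
    return nodes


def coefficient_distribution_features(x, levels=3, eps=1e-12):
    """Return (node shares, adjacent-node ratios) of summed absolute coefficients."""
    nodes = wavelet_packet_nodes(x, levels)
    sums = [sum(abs(c) for c in node) for node in nodes]                   # step 2
    total = sum(sums)
    shares = [s / total for s in sums]                                     # step 3
    ratios = [shares[i] / (shares[i + 1] + eps) for i in range(len(shares) - 1)]  # step 4
    return shares, ratios
```

With three levels the function returns 8 shares and 7 ratios, so each event sample is described by a feature vector of length 7.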

② Hilbert–Huang transform

The main eigenvalues extracted based on the Hilbert–Huang transform are the Hilbert spectrum and Hilbert marginal spectrum. The marginal spectrum can reflect the distribution of signals at different frequencies, especially local characteristics of signals at lower frequencies. In this study, the proportions of different frequency segments corresponding to the Hilbert marginal spectrum were taken as the characteristics of different sample signals, that is, the frequency distribution value of the marginal spectrum [32,33].

The Hilbert–Huang transform is mainly divided into the following nine steps:

Step 1: find all the maximum points, minimum points, and endpoints of signal $x(t)$.

Step 2: the extreme points marked in step 1 are interpolated to obtain the upper and lower envelope sequences $e_{\max}(t)$ and $e_{\min}(t)$ of the signal, and the mean value $m_1(t)$ of the upper and lower envelopes of signal $x(t)$ is calculated:

$$m_1(t) = \frac{e_{\max}(t) + e_{\min}(t)}{2}$$

Step 3: calculate the difference signal $h_1(t)$ of signal $x(t)$:

$$h_1(t) = x(t) - m_1(t)$$

Step 4: judge whether $h_1(t)$ satisfies the two conditions of an IMF (Intrinsic Mode Function): (1) the difference between the total number of zero crossings of the signal waveform and the number of all extreme points is less than or equal to 1; and (2) the local mean of the upper and lower envelopes of the signal is zero. If both conditions are satisfied, then $c_1(t) = h_1(t)$, and the first IMF component is obtained; if not, steps 2 to 4 are repeated with $h_1(t)$ as the signal until the two IMF conditions are met and $c_1(t)$ is obtained.

Step 5: the component $c_1(t)$ is removed to obtain the residual component $r_1(t)$:

$$r_1(t) = x(t) - c_1(t)$$

Step 6: judge whether $r_1(t)$ conforms to the three conditions of EMD (Empirical Mode Decomposition): (1) if the number of extreme points of the signal is greater than or equal to 2, it means that there is at least one maximum and one minimum point; (2) the time scale of the extreme points can only determine the local time domain characteristics of the original signal; and (3) if a signal has no extreme point, there must be a singularity or inflection point that can be obtained by several differentiations. If the above three conditions are satisfied, the EMD ends. If not, steps 2 to 5 above are repeated with $r_1(t)$ as the new original signal to obtain the other IMF components.

Step 7: when $r_n(t)$ satisfies the termination condition, the EMD ends, and the signal can be expressed as the sum of multiple IMF components and the residual:

$$x(t) = \sum_{i=1}^{n} c_i(t) + r_n(t)$$

Step 8: the Hilbert transform is performed on each IMF component to obtain the Hilbert spectrum $H(f,t)$, where $\mathrm{Re}$ represents the real part, and $a_i(t)$ and $f_i(t)$ are the instantaneous amplitude and instantaneous frequency of the $i$-th component:

$$H(f,t) = \mathrm{Re} \sum_{i=1}^{n} a_i(t)\, e^{\,j 2\pi \int f_i(t)\, dt}$$

Step 9: the Hilbert spectrum is integrated over the signal duration $T$, and the marginal spectrum $h(f)$ is obtained:

$$h(f) = \int_{0}^{T} H(f,t)\, dt$$

In this study, the proportion of different frequency segments corresponding to the Hilbert marginal spectrum is taken as the characteristic of different sample signals, that is, the marginal spectrum frequency distribution value. The extraction process mainly has the following four steps:

Step 1: the marginal spectrum of the signal is obtained by the Hilbert–Huang transform, and the marginal spectrum is divided into bands of 1 Hz. In this study, after resampling the original data, the corresponding sampling frequency is 25 Hz, so the marginal spectrum is divided into 25 frequency bands, each band corresponding to 1 Hz.

$$h_i(f) = h(f), \quad f \in [\,i-1,\ i)\ \mathrm{Hz}, \quad i = 1, 2, \ldots, f_s$$

$h(f)$ is the marginal spectrum, and $f_s$ is the sampling frequency.

Step 2: the sum of the marginal spectrum within each frequency band, that is, the sum of the amplitudes corresponding to the marginal spectrum, is determined as follows:

$$A_i = \sum_{k=1}^{K_i} h_i(k)$$

In the equation, $k$ is a natural number that does not exceed the finite number $K_i$ of spectral lines in band $i$, and $h_i(k)$ is the amplitude corresponding to the marginal spectrum.

Step 3: the ratio of the sum of the marginal spectrum in each frequency band to the sum over all frequency bands is determined as follows:

$$p_i = \frac{A_i}{\sum_{j=1}^{f_s} A_j}$$

Step 4: finally, the ratio between the proportion calculated in step 3 and the proportion of the adjacent frequency band is calculated, and that is the eigenvalue of the marginal spectral frequency distribution.

Through the above steps, the eigenvalues of the marginal spectrum frequency distribution corresponding to the signal can be extracted. In this study, 25 frequency segments are obtained after the marginal spectrum is segmented, and the corresponding eigenvalue has 24 dimensions.
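Given a marginal spectrum that has already been computed, the banding and ratio steps can be sketched as follows. The input format (parallel lists of frequencies and amplitudes), the band edges at whole hertz, the direction of the adjacent-band ratio (band i over band i+1), and the guard constant `eps` are assumptions; the function name is hypothetical.

```python
def band_distribution_features(freqs, amps, band_hz=1.0, n_bands=25, eps=1e-12):
    """Return (band shares, adjacent-band ratios) of a marginal spectrum.

    freqs and amps are parallel sequences: amps[k] is the marginal spectrum
    amplitude at frequency freqs[k] in Hz.
    """
    sums = [0.0] * n_bands
    for f, a in zip(freqs, amps):
        idx = int(f // band_hz)          # band 0 covers [0, 1) Hz, band 1 covers [1, 2) Hz, ...
        if 0 <= idx < n_bands:
            sums[idx] += a               # step 2: amplitude sum per band
    total = sum(sums)
    shares = [s / total for s in sums]   # step 3: share of each band
    ratios = [shares[i] / (shares[i + 1] + eps) for i in range(n_bands - 1)]  # step 4
    return shares, ratios
```

With 25 bands the function returns 24 ratios, which matches the 24-dimensional eigenvalue described above.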

(4) Extraction of information entropy feature

Information entropy is used to measure the uncertainty of random variables [34], which is expressed as

$$H = -\sum_{i=1}^{N} p_i \log p_i$$

where $p_i$ represents the probability of occurrence of each of the $N$ values of the random variable.
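All of the entropy features listed in this section share one computation: a set of non-negative quantities (singular values, power spectrum values, or energies) is normalized into proportions, and the Shannon entropy of those proportions is taken. A minimal sketch, assuming the natural logarithm (the base is not stated in the text) and a hypothetical function name:

```python
import math


def shannon_entropy(weights):
    """Shannon entropy of non-negative weights after normalizing them to sum to 1."""
    total = sum(weights)
    probs = [w / total for w in weights if w > 0]   # zero weights contribute nothing
    return -sum(p * math.log(p) for p in probs)
```

Passing the singular values of a signal matrix would give a singular spectrum entropy, and passing power spectrum values would give a power spectrum entropy. The entropy is zero when one component carries everything and is largest when all components are equal.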

In this study, eigenvalues extracted based on information entropy included singular spectrum entropy based on time domain analysis, power spectrum entropy based on frequency domain analysis, wavelet energy entropy based on the wavelet transform, wavelet packet energy entropy, wavelet packet scale entropy and wavelet packet singular entropy based on the wavelet packet transform, and energy entropy based on EMD(Empirical Mode Decomposition) decomposition.

① Singular spectral entropy based on time domain analysis

Before the singular spectrum entropy is solved, singular spectrum decomposition is performed, in which the signal is decomposed and reconstructed according to its time series to extract its different components [35].

$$A = U \Sigma V^{T} \quad (29)$$

where $A$ denotes the $m \times n$ matrix of signals to be decomposed; $U$ is a unitary matrix of size $m \times m$; $V$ is a unitary matrix of size $n \times n$, that is, $U^{T}U = I$, $V^{T}V = I$; and $\Sigma$ is a matrix of size $m \times n$.

It can be obtained from Equation (29) that

$$A = \sum_{i=1}^{r} \sigma_i u_i v_i^{T}$$

where $u_i$ is the eigenvector of $AA^{T}$, $v_i$ is the eigenvector of $A^{T}A$, and $\sigma_i$ are the singular values.

The corresponding singular spectrum can be obtained by singular value decomposition of . By calculating the proportion of every singular value in the singular spectrum, a set of probability density functions can be obtained. By substituting the probability density function into the classical entropy formula, the singular spectrum entropy of the input signal can be obtained.

② Power spectral entropy based on frequency domain analysis

In the extraction of features in the frequency domain, the power spectrum of the input signal, $P(k)$, has already been obtained; it consists of a finite number of band values. By calculating the proportion of each band value in the whole power spectrum, a set of probability density functions can be obtained. The power spectrum entropy of the input signal can be obtained by substituting the probability density function into the classical entropy formula.

③ Wavelet energy entropy and wavelet packet energy entropy based on the wavelet and wavelet packet transforms

In the part of the extraction of features through the wavelet and wavelet packet transforms, the energy and energy proportion of each layer after wavelet decomposition have been calculated, and then the energy entropy can be determined.

④ Wavelet packet scale entropy and wavelet packet singular entropy based on the wavelet packet transform

Similarly, the wavelet scale entropy is the Shannon entropy of the probability density of the wavelet coefficients of all nodes in the wavelet packet decomposition.

The calculation method of singular entropy of the wavelet packet is consistent with that of singular spectral entropy of the input signal, that is, the singular value of each node is obtained by singular spectral decomposition of the wavelet packet coefficients of each node, and then the singular entropy of wavelet packet is obtained by the same method.

⑤ Energy entropy based on EMD decomposition

In the extraction of features through the Hilbert–Huang transform, a set of IMF (Intrinsic Mode Function) components of the input signal is obtained by EMD, and the energy entropy of the probability density function corresponding to each group of IMF components can be obtained.

The eigenvalues of ice avalanche events, flood events, and wind-and-rain events extracted by using the above feature extraction methods constitute feature vectors of different features.

2.3.3. Class Recognition Method

In this study, supervised pattern recognition was used to classify infrasound signals. Support vector machine (SVM), K-nearest neighbor (KNN), classification tree, and BP neural network (BPNN) were used. After preprocessing of the original infrasound signals, based on feature vectors extracted by time and frequency domain analysis, the wavelet transform and the Hilbert–Huang transform, classification models were constructed, and the model parameters were improved through continuous training to achieve the best classification effect. The trained classification models have been applied to the recognition of unknown signals to judge classes of the signals, with the classification results output [36,37].

To characterize the recognition effect of the models more accurately, five indexes were used to evaluate the classification results: recall, precision, accuracy, F1 score, and area under the curve (AUC).

The recall ratio, also known as recall, is the ratio of the samples of a class that are identified during class recognition to the actual total number of samples of that class. In general, the higher the value, the better the classifier performs, but a higher recall also means a greater possibility of misjudgment.

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where $TP$ is the number of samples of the class concerned that are true both in the actual situation and in the prediction, and $FN$ is the number of samples that are actually true but predicted as false.

Precision is the ratio of the samples correctly predicted as the class concerned to all samples predicted as that class. The higher the value, the better the classifier performs.

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where $FP$ is the number of samples of the class concerned that are actually false but predicted as true.

Accuracy is the ratio of the correctly predicted events to the total number of events, and it characterizes the overall performance of the classifier. The higher the value, the better the classifier.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TN$ is the number of samples that are false both in the actual situation and in the prediction.

The F1 score characterizes recall and precision at the same time. It is the harmonic mean of the two indexes, so it is high only when both are high.

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
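The four count-based indexes above can be sketched as follows. The function name `binary_metrics` and the example counts are hypothetical and serve only to illustrate the definitions for one class treated as the positive class.

```python
def binary_metrics(tp, fn, fp, tn):
    """Recall, precision, accuracy, and F1 score from confusion-matrix counts."""
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"recall": recall, "precision": precision,
            "accuracy": accuracy, "f1": f1}

# Hypothetical counts for one class, for illustration only.
m = binary_metrics(tp=40, fn=10, fp=5, tn=45)
```

For a three-class problem such as the one studied here, the same function can be applied once per class by treating that class as positive and the other two as negative.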

The area under the curve (AUC) refers to the area under the receiver operating characteristic (ROC) curve. The abscissa of the ROC curve is the false positive rate and its ordinate is the true positive rate, which is equal to the recall; the closer the curve is to the upper left corner, the better the recognition effect. To characterize the performance of the classifier quantitatively, the AUC, which ranges from 0 to 1, is used. The closer the AUC value is to 1, the better the performance of the model.
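One way to compute the AUC without drawing the ROC curve uses the fact that the area equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below assumes a binary (one-versus-rest) setting; `roc_auc` and the example scores are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of positive-negative pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))

# Hypothetical classifier scores, for illustration only.
auc = roc_auc([0.9, 0.8, 0.35, 0.6, 0.2], [1, 1, 1, 0, 0])
perfect = roc_auc([0.9, 0.8, 0.1], [1, 1, 0])
```

The pairwise loop is quadratic in the number of samples, which is acceptable for a few hundred samples but would call for a rank-based implementation on larger sets.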

As the main purpose of class identification is to identify disaster events, and this study focuses on ice avalanche disasters, the three types of events were given different weights (0.5 for ice avalanche events, 0.4 for flood events, and 0.1 for wind-and-rain events), and a comprehensive index was calculated for each classification model.
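The text gives the class weights but not the exact formula of the comprehensive index. A minimal sketch, assuming the index is the weighted sum of the per-class values of a given metric, is shown below; `comprehensive_index`, the dictionary keys, and the example scores are hypothetical.

```python
# Class weights stated in the text.
CLASS_WEIGHTS = {"ice_avalanche": 0.5, "flood": 0.4, "wind_and_rain": 0.1}

def comprehensive_index(per_class_scores, weights=CLASS_WEIGHTS):
    """Weighted sum of per-class metric values (assumed form of the index)."""
    if set(per_class_scores) != set(weights):
        raise ValueError("scores and weights must cover the same classes")
    return sum(weights[c] * per_class_scores[c] for c in weights)

# Hypothetical per-class recall values, for illustration only.
example = comprehensive_index(
    {"ice_avalanche": 0.90, "flood": 0.80, "wind_and_rain": 1.00}
)
```

Because the weights sum to 1, the index stays on the same 0 to 1 scale as the underlying metric, and errors on ice avalanche events are penalized five times as heavily as errors on wind-and-rain events.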

3. Results and Discussion

3.1. Characteristic Response Analysis of Avalanche Infrasound

As only a small number of ice avalanche events have been monitored so far, the training samples are insufficient, and the later class recognition of ice avalanche signals might not be ideal. Therefore, the six complete ice avalanche events that were captured (about 3 min each) were divided into 151 short-time-series ice avalanche samples and combined with 386 samples of wind-and-rain events and 146 samples of flood events to train the classifiers and improve the class identification of ice avalanche events. The infrasound signal data of the three types of events used for subsequent class identification in this paper all come from the infrasound monitoring equipment that we installed. By using the above feature extraction methods, the characteristic responses of different events in waveform, sound pressure, power spectrum, and frequency distribution were obtained, as shown in Table 3 and Table 4.
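The division of a long recording into short-time-series samples can be sketched as a sliding window. The window length and hop actually used are not stated here, so `segment_length`, `hop`, and the toy signal below are assumptions for illustration only.

```python
def split_into_segments(samples, segment_length, hop=None):
    """Cut a recording into fixed-length segments; an incomplete tail is dropped."""
    hop = segment_length if hop is None else hop
    if segment_length <= 0 or hop <= 0:
        raise ValueError("segment_length and hop must be positive")
    last_start = len(samples) - segment_length
    return [samples[start:start + segment_length]
            for start in range(0, last_start + 1, hop)]

# Toy signal of 20 samples, for illustration only.
signal = list(range(20))
non_overlapping = split_into_segments(signal, segment_length=6)
overlapping = split_into_segments(signal, segment_length=6, hop=3)
```

Overlapping windows yield more training samples from the same recording, but neighboring segments are then correlated, which should be kept in mind when splitting data into training and test sets.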

Table 3.

Characteristics of different events in time domain and frequency domain.

Table 4.

Wavelet frequency distribution characteristics of different events.

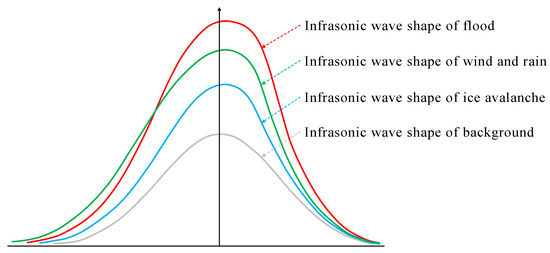

Different kinds of events differ somewhat in waveform. Figure 14 is a schematic diagram of the waveforms based on the kurtosis and skewness in Table 3. In Figure 14, the gray curve is the waveform feature of background noise, which basically shows a standard normal distribution. The waveform of the flood event (red curve) has the largest kurtosis, 8.33, and is therefore steeper than those of the other events; its skewness of −0.13 indicates a left tail that is slightly less pronounced than that of wind-and-rain events (green curve). The waveform of wind-and-rain events has a skewness of −0.16 and shows the most obvious left tail among the three kinds of events. In comparison, the kurtosis and skewness of the ice avalanche waveform (blue curve) are less pronounced than those of the other two kinds of events. In addition, the three kinds of events overlap with background noise in frequency range, which makes the background noise difficult to remove. However, the sound pressure of the background noise is very small and has little impact on the events. Therefore, no specific processing was applied to remove the background noise in this study.

Figure 14.

Waveform characteristics of different events.
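The skewness and kurtosis used to describe these waveforms can be sketched from the central moments of the signal. The sketch assumes the non-excess kurtosis convention, under which a normal distribution has a kurtosis of 3; the function name and the example data are hypothetical.

```python
def skewness_kurtosis(x):
    """Moment-based skewness and (non-excess) kurtosis of a sequence."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n  # variance
    if m2 == 0:
        raise ValueError("signal has zero variance")
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2

# Hypothetical data, for illustration only.
sym_skew, sym_kurt = skewness_kurtosis([-2, -1, 0, 1, 2])           # symmetric
left_skew, left_kurt = skewness_kurtosis([-10, 0, 1, 1, 2, 2, 2])   # left tail
```

A negative skewness corresponds to the left-tailed shape described above, and a larger kurtosis corresponds to a steeper, more impulsive waveform.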

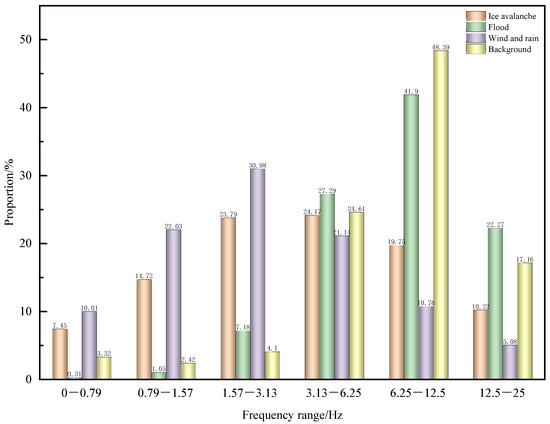

In terms of frequency distribution characteristics, ice avalanche events, flood events, and wind-and-rain events are mainly distributed in the frequency ranges of 1.5–9.5 Hz, 3–12.5 Hz, and 0.5–6.5 Hz, respectively, while background noise is mainly concentrated in the frequency range of 3–12.5 Hz. As shown in Figure 15, these events overlap to some extent with each other and with background noise, but they also differ somewhat in frequency characteristics.

Figure 15.

Frequency band distribution of different events.

3.2. Class Recognition of Avalanche Infrasound

Through pre-processing and feature extraction of the three kinds of events, the features of different events were extracted in a total of 63 dimensions, including 4 dimensions in the time domain and the frequency domain, 7 dimensions extracted by the wavelet transform (distribution values of wavelet coefficients), 24 dimensions extracted by the Hilbert–Huang transform (frequency distribution values of the marginal spectrum), and 14 dimensions of information entropy. The information entropy features are subdivided into seven dimensions of total entropy and seven dimensions of partial entropy (the information entropy of only part of the components). As shown in Table 5, the extracted eigenvalues differ greatly in magnitude, so each type of extracted feature should be standardized to obtain a consistent input data type for class recognition.

Table 5.

Eigenvalue categories and corresponding dimensions.
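The standardization method is not specified in the text. A minimal sketch, assuming z-score standardization of each feature dimension (zero mean and unit standard deviation per column), is given below; `standardize_columns` and the example feature matrix are hypothetical.

```python
import math

def standardize_columns(rows):
    """Z-score each feature column; constant columns are mapped to 0."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / n)
            for c, m in zip(cols, means)]
    return [[(v - m) / s if s > 0 else 0.0
             for v, m, s in zip(row, means, stds)]
            for row in rows]

# Two hypothetical features on very different scales, for illustration only.
features = [[1.0, 1000.0], [2.0, 2000.0], [3.0, 3000.0]]
z = standardize_columns(features)
```

After standardization, the two columns become identical even though their raw magnitudes differ by a factor of 1000, so distance-based classifiers such as K-nearest neighbor are no longer dominated by the larger-valued feature.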

Table 6, Table 7, Table 8, Table 9 and Table 10 list the recall rate, accuracy values, F1 score, AUC, and precision of the four kinds of algorithm models.

Table 6.

Comparison of recall rates of four kinds of models.

Table 7.

Comparison of accuracy values of the four kinds of models.

Table 8.

Comparison of F1 scores of the four kinds of models.

Table 9.

Comparison of AUC indicators of the four kinds of models.

Table 10.

Comparison of precision of the four kinds of models.

Table 6 shows that, in terms of recall, the support vector machine, K-nearest neighbor, and classification tree models based on time–frequency features have a poor class recognition effect, while the BP neural network model based on these features yields average results. The four algorithm models based on information entropy and on the marginal spectral frequency distribution all have a very good classification effect. For partial information entropy, the support vector machine and K-nearest neighbor models have a very good classification effect, while the classification tree and BP neural network models have a fairly good classification effect. For total information entropy, the support vector machine and BP neural network models have a very good classification effect, while the K-nearest neighbor and classification tree models have a fairly good classification effect. For the wavelet coefficient distribution, the BP neural network model has a very good classification effect, while the other three algorithm models have a fairly good classification effect.

It can be seen from Table 7 that the classification tree algorithm model based on the time–frequency feature has an average class recognition effect, and the other three algorithm models based on this feature have a fairly good class recognition effect. The four algorithm models based on information entropy, the wavelet coefficient distribution, and the marginal spectral frequency distribution have a very good classification effect. The classification tree models based on partial information entropy and total information entropy have a fairly good classification effect, and the other three algorithms based on these features have a very good classification effect. Generally speaking, the classification tree algorithm has a poorer classification effect than the other three classification algorithms in terms of accuracy.

It can be seen from Table 8 that the four algorithm models based on time–frequency features are average in the class recognition effect; the four algorithm models based on information entropy and marginal spectral frequency distribution features all have very good class recognition results; the support vector machine models based on the other features, except time–frequency features, have the best class recognition effect; and although the classification tree algorithm model has a fairly good class recognition effect, it still ranks last among the four models.

It can be seen from Table 9 that, except for the classification tree algorithm model (with a fairly good class recognition effect), all the other three kinds of algorithm models have AUC indexes higher than 0.95. That is to say, they have a very good class recognition effect. Similarly, the models based on time–frequency features have a poorer class recognition effect; except for the classification tree model with an average class recognition effect, the other three kinds of models based on time–frequency features have a fairly good class recognition effect.

It can be seen from Table 10 that the precision of the BP neural network model is the best, while classification tree is the worst, and the precision based on time–frequency features is still the lowest. To sum up, all four kinds of models based on time–frequency features have a poorer class recognition effect (average) than those based on other features; the four kinds of algorithm models based on marginal spectral frequency distribution features all have a very good class recognition effect; the classification tree model based on information entropy has a fairly good class recognition effect; and the other models based on information entropy have a fairly good class recognition effect. The support vector machine model based on partial information entropy has a very good class recognition effect, while the other models based on this feature have a fairly good class recognition effect. The four kinds of models based on the total information entropy and distribution characteristics of wavelet coefficients all have a fairly good class recognition effect. The class recognition effects of the models are closely related to the differences between the selected features, and the models based on information entropy and marginal spectral frequency distribution features with the best classification effect characterize more details of the original signals. Among the four kinds of models, the support vector machine and BP neural network models are roughly the same in class recognition effect. BP neural network models are slightly better than support vector machine models, and K-nearest neighbor algorithm models take the third place. The classification tree models have the poorest effect among the four kinds of models, but they still have a fairly good effect in recognizing events by class.

Therefore, in practical application, it is not recommended to train models to recognize events based on time–frequency features alone; however, time–frequency features can be combined with other features to form recognition features of higher dimensions. Information entropy features and marginal spectral frequency distribution features better reflect the subtle differences among infrasound signals, so they can be used as recognition features for subsequent class recognition training. All four class recognition algorithms gave fairly good recognition results in this study, but infrasound samples of disasters are often few in number and unevenly distributed, so the K-nearest neighbor and classification tree algorithms are likely to have large errors under such circumstances.

4. Conclusions and Future Prospects

The main results are as follows:

(1) The waveform of background noise basically shows a standard normal distribution; ice avalanche events are steeper than background noise and show slight left skewness in the waveform; wind-and-rain events have more left skewness in the waveform than ice avalanche events and flood events; and flood events are steeper than the other events in the waveform, showing obvious impact characteristics.

(2) In terms of frequency distribution, ice avalanche events, flood events, wind-and-rain events, and background noise are mainly distributed in the frequency ranges of 1.5–9.5 Hz, 3–12.5 Hz, 0.5–6.5 Hz, and 3–12.5 Hz, respectively. These events overlap to some extent in frequency but have different characteristics. Background noise and flood events share the same frequency range, but within it the frequency distribution of flood events decreases gradually with increasing frequency, while that of background noise first increases and then decreases, reaching a peak between 6.0 and 9.5 Hz.

(3) The BP neural network is similar to the support vector machine algorithm in class recognition effect. The BP neural network is slightly better than the support vector machine algorithm, followed by the K-nearest neighbor algorithm, and the classification tree algorithm ranks last in class recognition effect. It is therefore suggested that the support vector machine or BP neural network algorithm be used to recognize ice avalanche infrasound events.

Some research prospects are as follows:

(1) Carry out indoor ice avalanche tests. As there are few ice avalanches actually monitored, the conclusions of this study may not be universal. Therefore, the indoor test of ice avalanches needs to be performed. By monitoring the infrasound signal of a glacier fracture under different variables, the early warning model of ice avalanches will be established to further improve the accuracy of field monitoring.

(2) Construct more ice avalanche infrasound monitoring arrays. Glaciers are widely distributed in the Qinghai–Tibet Plateau, and global warming has reduced glacier stability, which poses serious safety risks. Infrasound propagates over long distances with low attenuation. As the research progresses, we will deploy more infrasound arrays and a video surveillance system in the research area, which will allow more numerous and more reliable ice avalanche infrasound samples to be collected for training the infrasound signal recognition models used in this study. At the same time, increasing the number of infrasound sensors in the array will lay the foundation for improving the accuracy of infrasound-based location of ice avalanche events. By strengthening the construction of infrasound monitoring arrays, ice avalanche events can be monitored and located remotely, which lays the foundation for subsequent early warning of ice avalanche disasters and disaster chains.

Author Contributions

Conceptualization, Y.Z., Q.C. and F.W.; methodology, Y.Z.; software, D.L.; validation, D.L., P.S. and J.C. (Jilong Chen); formal analysis, Y.Z. and Q.C.; investigation, Y.Z., Q.C. and J.C. (Jianzhao Cui); resources, J.C. (Jilong Chen); data curation, D.L. and J.C. (Jilong Chen); writing—original draft preparation, Y.Z. and Q.C.; writing—review and editing, J.M., F.X. and F.W.; project administration, Q.C. and P.S.; funding acquisition, Q.X. and Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (42005130), the Xigaze City Science and Technology Plan Project (RKZ2020KJ01), the Chongqing Natural Science Foundation project (no.cstc2021jcyj-msxmX0187), Western Scholar of Chinese Academy of Sciences Category A (E2296201), and the Ecological environment survey and ecological restoration technology demonstration project in the water-level-fluctuating of the Three Gorges Reservoir (no. 5000002021BF40001).

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hu, W.; Yao, T.; Yu, W.; Yang, W.; Gao, Y. Advances in the study of glacier avalanches in High Asia. J. Glaciol. Geocryol. 2018, 40, 1141–1152.

- Pei, L. The Preliminary Study of Characteristics and Types of Ice Avalanche Disaster in the Tibetan Plateau. Master’s Thesis, China University of Geosciences, Beijing, China, 2019.

- Tong, L.; Pei, L.; Tu, J.; Guo, Z.; Yu, J.; Fan, J.; Li, D. A preliminary study of definition and classification of ice avalanche in the Tibetan Plateau region. Remote Sens. Land Resour. 2020, 32, 11–18.

- Wang, S.; Wen, J. Characteristics, influence of cryosphere disaster and prospect of discipline development. Bull. Chin. Acad. Sci. 2020, 35, 523–530.

- Cui, P.; Guo, X.; Jiang, T.; Zhang, G.; Jin, W. Disaster effect induced by Asian Water Tower change and mitigation strategies. Bull. Chin. Acad. Sci. 2019, 34, 1313–1321.

- Zhong, D.; Xie, H.; Wei, F.; Liu, H.; Tang, J. Discussion on mountain hazards chain. Mt. Res. 2013, 31, 314–326.

- Huang, T. Spatial-Temporal Changes of Glacier Thickness and Lake Level on the Qinghai-Tibetan Plateau. Master’s Thesis, University of Chinese Academy of Sciences (Institute of Remote Sensing and Digital Earth Chinese Academy Sciences), Beijing, China, 2017.

- Pinchak, A.C. Avalanche Activity on the Vaughan Lewis Icefall, Alaska. J. Glaciol. 1968, 7, 441–448.

- Hans, R. Ice Avalanches. J. Glaciol. 1977, 19, 669–671.

- Margreth, S.; Funk, M. Hazard mapping for ice and combined snow/ice avalanches—Two case studies from the Swiss and Italian Alps. Cold Reg. Sci. Technol. 1999, 30, 159–173.

- Salzmann, N.; Kääb, A.; Huggel, C.; Allgöwer, B.; Haeberli, W. Assessment of the hazard potential of ice avalanches using remote sensing and GIS-modelling. Nor. Geogr. Tidsskr.-Nor. J. Geogr. 2004, 58, 74–84.

- Vilajosana, I.; Suriñach, E.; Khazaradze, G.; Gauer, P. Snow avalanche energy estimation from seismic signal analysis. Cold Reg. Sci. Technol. 2007, 49, 72–85.

- Herwijnen, A.V.; Schweizer, J. Monitoring avalanche activity using a seismic sensor. Cold Reg. Sci. Technol. 2011, 69, 165–176.

- Murayama, T.; Kanao, M.; Yamamoto, M.; Ishihara, Y. Infrasound Signals and Their Source Location Inferred from Array Deployment in the Lützow-Holm Bay Region, East Antarctica: January–June 2015. Int. J. Geosci. 2017, 8, 181–188.

- Asming, V.E.; Baranov, S.V.; Vinogradov, A.N.; Vinogradov, Y.A.; Fedorov, A.V. Using an infrasonic method to monitor the destruction of glaciers in Arctic conditions. Acoust. Phys. 2016, 62, 583–592.

- Mayer, S.; van Herwijnen, A.; Ulivieri, G.; Schweizer, J. Evaluating the performance of an operational infrasound avalanche detection system at three locations in the Swiss Alps during two winter seasons. Cold Reg. Sci. Technol. 2020, 173, 102962.

- Graveline, M.H.; Germain, D. Ice-block fall and snow avalanche hazards in northern Gaspésie (eastern Canada): Triggering weather scenarios and process interactions. Cold Reg. Sci. Technol. 2016, 123, 81–90.

- Lv, R.; Li, D. Debris flow induced by ice lake burst in the Tangbulang Gully, Gongbujiangda, Xizang (Tibet). J. Glaciol. Geocryol. 1986, 8, 61–71.

- Liu, J.; Cheng, Z.; Li, Y.; Su, P. Characteristics of Glacier-Lake Breaks in Tibet. J. Catastrophol. 2008, 23, 55–60.

- Cheng, Z.; Zhu, P.; Dang, C.; Liu, J. Hazards of debris flow due to glacier-lake outburst in southeastern Tibet. J. Glaciol. Geocryol. 2008, 30, 954–959.

- Yao, X.; Liu, S.; Sun, M.; Zhang, X. Study on the glacial lake outburst flood events in Tibet since the 20th century. J. Nat. Resour. 2014, 29, 1377–1390.

- Zhang, J.; Pang, J.; Li, C. Physics properties of infrasonic waves and its applications. Phys. Bull. 2018, 123–126.

- Tong, N. Features and applications of infrasound. Tech. Acoust. 2003, 22, 199–202.

- Zheng, F.; Chen, W.; Lin, C. Research status and progress of infrasound in disaster monitoring. Sci. Technol. Eng. 2015, 15, 129–137.

- Shang, Y. Study on the Analysis Method of Infrasonic Signal. Master’s Thesis, Kunming University of Science and Technology, Kunming, China, 2013.

- Hu, Z. Characteristics and Type Identification of Debris Flow Acoustic Signal. Master’s Thesis, University of Chinese Academy of Science (Institution of Mountain Hazards and Environment, Chinese Academy of Sciences), Chengdu, China, 2020.

- Duan, J.; Cheng, J.; Wang, Y.; Lu, B.; Zhu, H. Automatic identification technology of microseismic event based on STA/LTA algorithm. Coal Geol. Explor. 2015, 43, 76–80+85.

- Yang, J.; Zhang, D.; Lu, J.; Shuai, X. Gravity frequency and its monitoring application of EEG spectrum in the vigilance operation. J. Biomed. Eng. 2014, 31, 257–261.

- Xing, N.; Liu, F.; Zhou, C.; Xu, L. Cutting chatter feature extraction method based on mean square frequency and empirical mode decomposition. Manuf. Technol. Mach. Tool 2021, 35–40.

- Zheng, J.; Ying, Q.; Yang, W. Signals and Systems, 3rd ed.; Higher Education Press: Beijing, China, 2011.

- Liu, X. The Research on Feature Extraction and Classification of Infrasound Signals. Master’s Thesis, China University of Geosciences, Beijing, China, 2015.

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. Math. Phys. Eng. Sci. 1998, 454, 903–995.

- Huang, N.E.; Wu, Z. A review on Hilbert-Huang transform: Method and its applications to geophysical studies. Rev. Geophys. 2008, 46, RG2006.

- Zhu, X. Fundamentals of Applied Information Theory; Tsinghua University Press: Beijing, China, 2001.

- Zhao, X.; Ye, B.; Chen, T. Theory of multi-resolution singular value decomposition and its application to signal processing and fault diagnosis. J. Mech. Eng. 2010, 46, 64–75.

- Zhou, Z. Machine Learning; Tsinghua University Press: Beijing, China, 2016.

- Liu, M. Pattern Recognition; Publishing House of Electronics Industry: Beijing, China, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).