Abstract

With the development of remote sensing earth observation technology, object tracking has gained attention for its broad application prospects in computer vision. However, object tracking is challenging owing to the background clutter, occlusion, and scale variation that often appear in remote sensing videos. Many existing trackers fail to track objects accurately in remote sensing videos with complex backgrounds, and some methods can handle only a single challenge, such as occlusion. In this article, we propose a Siamese multi-scale adaptive search (SiamMAS) network framework for object tracking in remote sensing videos. First, a multi-scale cross correlation is presented to obtain a more discriminative model and a more comprehensive feature representation, improving the model's ability to handle complex backgrounds in remote sensing videos. Second, an adaptive search module is employed that augments the Kalman filter with a partition search strategy for object motion estimation. The Kalman filter is used to re-detect the object when the network cannot track it in the current frame, and the partition search strategy helps the Kalman filter select region proposals more accurately. Finally, extensive experiments on remote sensing videos taken by the Jilin-1 commercial remote sensing satellites show that the proposed tracking algorithm achieves strong tracking performance, with 0.913 precision at 37.528 frames per second (FPS), demonstrating its effectiveness and efficiency.

1. Introduction

Object tracking, an active research topic in computer vision, can be used in a wide range of applications, such as military monitoring, battlefield analysis, traffic management, and public security. Its goal is to obtain an object’s exact position in subsequent video frames given the object’s coordinates in the first frame. Although some research has been done on object tracking, the task remains challenging owing to various uncertainties in tracking.

Over the past few decades, research on object tracking has evolved considerably [1], from classical methods such as mean shift, particle filters, and Kalman filters to mainstream methods based on correlation filters and deep learning (DL). Mainstream methods adapt better to complex changes during tracking and are more robust and accurate than classical methods [2]. Correlation filters were first used in signal processing to describe the correlation between two signals. Correlation filter-based tracking methods [3,4,5,6,7,8,9,10,11,12,13,14,15] learn a filter template and apply it to the next frame; the region with the largest response is taken as the predicted location of the tracked object. Their main advantage is speed, and their main drawback is the boundary effect [16].
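To make the correlation filter idea concrete, here is a minimal single-channel sketch in the style of MOSSE, one specific correlation filter chosen only for illustration (it is not claimed to be any of the cited trackers). The filter is learned in closed form in the Fourier domain from one grayscale patch and a Gaussian target response; `sigma` and `lam` are hypothetical settings. Because the FFT treats the patch as periodic, the filter implicitly trains on circularly shifted copies of the patch, which is where the boundary effect mentioned above comes from.

```python
import numpy as np

def gaussian_response(h, w, sigma=2.0):
    """Desired correlation output: a Gaussian peak at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2.0 * sigma ** 2))

def train_filter(patch, sigma=2.0, lam=1e-2):
    """Closed-form MOSSE-style filter (conjugate form) learned in the Fourier domain."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(gaussian_response(*patch.shape, sigma=sigma))
    # lam regularises frequencies where the training patch carries little energy
    return G * np.conj(F) / (F * np.conj(F) + lam)

def detect(filter_conj, patch):
    """Correlate the filter with a new patch and return the (row, col) of the peak."""
    response = np.real(np.fft.ifft2(filter_conj * np.fft.fft2(patch)))
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

# Usage: a target that moves by (3, 5) pixels moves the response peak by the same amount.
rng = np.random.default_rng(0)
patch = rng.standard_normal((64, 64))
H = train_filter(patch)
print(detect(H, np.roll(patch, (3, 5), axis=(0, 1))))  # (35, 37)
```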

Currently, DL-based object tracking methods have become the mainstream approach because of their powerful feature representation capabilities [17,18,19,20,21,22,23,24,25,26,27]. The ability to learn features offline is key to deep learning models, because it enables them to learn complex, rich relationships from large amounts of labeled data. In particular, Siamese network-based approaches are a popular research topic in object tracking owing to their high precision and end-to-end training. Siamese networks treat object tracking as a similarity learning problem and learn the similarity between the object and the search region through end-to-end offline training [28]. As a result, a series of Siamese network-based tracking algorithms have emerged. In this paper, we also adopt the Siamese network structure for object tracking.

General object tracking algorithms do not perform well on remote sensing videos with complex backgrounds. Compared with natural images, remote sensing images contain many small objects and diverse backgrounds, which makes locating objects of interest accurately and quickly difficult [29]. There is currently no uniform definition of small objects in object detection and tracking. Existing definitions fall into two categories: relative scale-based and absolute scale-based. The first category defines small objects by the proportion of the image they occupy; for example, Chen et al. [30] consider an object small when the median ratio of its bounding-box area to the image area falls within a small, fixed range. The second category defines small objects by their absolute pixel size. The most common definition comes from the MS COCO dataset, a general object detection dataset, which defines a small object as one with an area of less than 32 × 32 px [31]. Other researchers adopt different absolute pixel-size limits for small objects [32,33,34]. Real-life tracking scenarios also present challenges such as background clutter, occlusion, and scale variation, as shown in Figure 1. In Figure 1a, the background near the object has a color or texture similar to that of the object, which weakens the classifier's ability to discriminate between the object and the background. In Figure 1b, a change in camera angle during filming of the remote sensing video causes complete occlusion, so object information is lost during tracking. Figure 1c,d shows the scale of the object changing across frames because of a cloud. It is therefore crucial to build a tracking model that is robust to complex backgrounds in remote sensing videos.

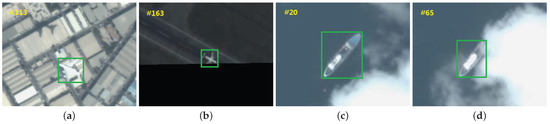

Figure 1.

Some moving objects in remote sensing videos. The number in the upper left corner indicates the frame index in the video. The green box represents the labeled ground truth, which contains the position and size of the tracked object. (a) Background clutter appears during object tracking; (b) the object is occluded; (c) the object is fully visible at frame 20; (d) the object size changes owing to cloud occlusion at frame 65.

To address these issues, this paper proposes a new Siamese network-based single-object tracking algorithm that improves tracking performance in remote sensing videos with complex backgrounds. The tracker builds on SiamBAN [35], which handles changes in object scale effectively in an anchor-free manner, and extends it with a multi-scale cross correlation and an adaptive search module. The multi-scale cross correlation exploits the complementary strengths of multiple features to obtain a more robust, discriminative model. When the network cannot track the object accurately, the adaptive search module, which combines the Kalman filter with a partition search strategy, relocates the tracked object. The object position obtained by the adaptive search module is then used to update the search region of the Siamese network for the next frame. With the resulting Siamese multi-scale adaptive search (SiamMAS) network, we obtain more accurate bounding boxes for remote sensing single-object tracking under complex backgrounds. The main contributions of this work can be summarized as follows:

- First, the multi-scale cross correlation is proposed, making use of several image features to achieve the complementary advantages of multiple features and obtain a discriminative model and comprehensive feature representation. The performance of the model is improved, and better tracking results are achieved;

- Second, an adaptive search module is introduced into the network for object tracking. The adaptive search module uses a partition search strategy to assist the Kalman filter in object motion estimation. It can correct the coordinate position of the object when the network is unable to accurately track the object;

- Finally, experiments on remote sensing videos have verified the superiority of the proposed SiamMAS tracker when compared with state-of-the-art tracking methods. This tracker can effectively handle complex backgrounds in remote sensing videos, such as background clutter, occlusion, and scale variation.

The remainder of this article is organized as follows. Section 2 briefly reviews the previous literature that is most relevant to this work. Section 3 provides the methodology proposed for this study. Then, implementation details of the proposed model and the experimental results and discussions are described in Section 4. Finally, conclusions are drawn in Section 5.

2. Related Work

Over the past decade, many studies on object tracking have revealed important insights. In this section, we introduce the main research streams in object tracking that are closely related to our work.

2.1. Trackers with Siamese Architecture

A Siamese network-based tracker has two inputs: the template image and the search region image, and its main task is to measure the similarity of these inputs. The two inputs are fed into two neural networks that map them into a new representation space, and the network is trained with a loss function defined on the similarity between the two inputs. Bertinetto et al. [22] proposed SiamFC, a classic tracker built on fully-convolutional Siamese networks that achieves high tracking speed and provides the foundation for subsequent Siamese trackers. Shen et al. [36] introduced an attention mechanism into the Siamese network to improve tracking performance and fused the multi-scale response maps of each middle layer to estimate the position more accurately. Furthermore, Jiang et al. [37] built a comprehensive Siamese network composed of a mutual learning subnetwork (M-net) and a feature fusion subnetwork (F-net) to mine more meaningful latent relationships between feature channels. Inspired by Siamese networks, Dong et al. [38] introduced a quadruplet deep network for one-shot learning that examines the inherent connections between training instances to obtain more robust representations. In addition, Shao et al. [36] designed a lightweight parallel network with high spatial resolution to obtain fine-grained appearance features for small objects in satellite videos. Most recently, Bi et al. [39] proposed a variable-angle-adaptive Siamese network for remote sensing object tracking in satellite videos. The method introduces octave convolution to adapt to the new feature representation, uses TextBoxes++ to extract angle information, and then performs angle-consistency updates on the detection boxes. Zhang et al. [40] presented an RGB-T Siamese tracker that improves tracking performance and robustness. It mainly consists of a complementarity-aware multimodal feature fusion module and a distractor-aware region-proposal selection module, and the proposed architecture achieves real-time, precise localization when applied to Siamese trackers.

2.2. Trackers for Remote Sensing Videos

Compared with conventional video object tracking, remote sensing video object tracking is more challenging [41]. The object of interest typically occupies a much smaller area in remote sensing videos, possibly only a few pixels. Low spatial resolution and extensive interference from similar-looking background can also occur. As a result, general object tracking methods often fail to track the object correctly because their features are not sufficiently representative [42]. Several studies have addressed remote sensing video object tracking. For example, Shao et al. [43] proposed a velocity correlation filter algorithm for remote sensing video object tracking that uses both a velocity feature and an inertia mechanism to construct a specific kernel feature space. Xuan et al. [44] developed a correlation filter-based tracking algorithm with embedded motion estimation for remote sensing videos; it improves the kernelized correlation filter by combining the Kalman filter with motion trajectory averaging and also mitigates the boundary effect. However, these methods are all based on correlation filters. More recently, Song et al. [45] introduced a joint Siamese attention-aware network with a feature combination strategy for vehicle tracking in satellite videos; its Siamese attention mechanism contains both self-attention and cross-attention modules. Cui et al. [46] proposed a deep reinforcement learning method to solve the occlusion problem in remote sensing object tracking. In this paper, we adopt the SiamMAS network to track objects in remote sensing videos under complex backgrounds.

2.3. Trackers under Complex Backgrounds

A variety of challenges are encountered in a real remote sensing tracking task, such as background clutter, scale variation, and occlusion. If the dataset contains multiple challenging video sequences, we consider them to have complex backgrounds for object tracking. Some research has been done to address these challenges and improve the tracking performance.

Background clutter means that similar objects near the tracked object interfere with tracking. To handle background clutter and appearance change, Li et al. [47] proposed a multi-stream deep similarity learning network consisting of a template stream, a search stream, and a background stream that share parameters. The network minimizes a relative distance that accounts for both background patches and the object template, so its loss function encourages learning a feature space in which more robust object representations can be built. Xiong et al. [48] explored how material information can be used to tackle challenging scenarios such as background clutter in object tracking. In addition, a template update mechanism [49] was proposed to improve visual tracking accuracy on massive video data under background clutter.

For scale variation, Danelljan et al. [50] presented an accurate scale estimation approach in a tracking-by-detection framework that learns discriminative correlation filters from a scale pyramid representation. An effective scale-adaptive scheme was presented in [51] to overcome the fixed template size of the kernelized correlation filter tracker. More recently, SiamBAN [35] was designed to exploit the expressive power of fully convolutional networks to estimate the scale and aspect ratio of the object accurately. In our tracker, the multi-scale cross correlation is designed to address the challenges posed by background clutter and scale variation.

Occlusion, a common problem in object tracking, is generally divided into partial, severe, and complete occlusion, and several algorithms have been proposed to handle it. For partial occlusion, Ruan et al. [52] incorporated a part-based strategy into the correlation filter framework, designing a multipart correlation tracker with triangle-structure constraints that integrates the correlation responses of the holistic candidate and its local parts. To reduce the impact of partial occlusion and unavoidable background information, He et al. [53] presented a robust tracker based on key patch sparse representation, which treats each sampled patch differently according to the occlusion prediction and the patch location. For severe occlusion, Dong et al. [54] introduced a two-stage classifier with a circulant structure kernel and applied it to translation estimation and scale estimation; in addition, a classifier pool re-detects the object with an entropy minimization criterion when it is occluded. Guo et al. [55] proposed a selective spatial regularization scheme for correlation filter tracking that selectively learns target-context-regularized filters; the tracker performs well even under severe occlusion. For complete occlusion, Zhao et al. [56] proposed a single-object tracker with background estimation for scenes captured by moving cameras, relaxing the usual restriction of background estimation to stationary cameras. The method can distinguish occlusion from genuine appearance change and update the appearance model accordingly. Recently, an action decision-occlusion handling network [46] based on deep reinforcement learning was built for object tracking. It uses the temporal and spatial context, the object appearance model, and the motion vector to provide occlusion information, which substantially improves the robustness and precision of the algorithm. Our proposed adaptive search module can effectively handle complete occlusion.

3. Methods

In this section, we describe the proposed SiamMAS tracking method with a multi-scale cross correlation and a region-proposal adaptive selection for remote sensing video in detail. The overall architecture is presented in Figure 2.

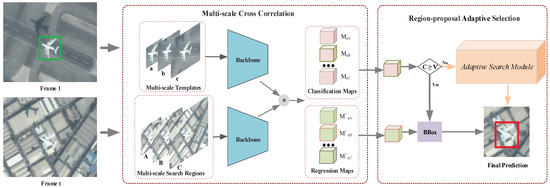

Figure 2.

The architecture of the proposed tracker. The green and red boxes represent the labeled ground truth and the bounding box predicted by the tracking algorithm, respectively. After the cropped multi-scale templates and multi-scale search regions are fed into the backbone network, the optimal classification map and the corresponding regression map are selected. These maps indicate whether the selected region contains the tracked object and where the object is located. ∗ denotes the depth-wise cross correlation operation. When the confidence score of the object falls below the detection threshold, the adaptive search module, which combines the Kalman filter with the partition search strategy, corrects the coordinate position of the object in the current frame.

3.1. Multi-Scale Cross Correlation

As introduced before, Siamese network-based tracking methods have been proven to be effective owing to their excellent tracking performance. We also adopt the Siamese network tracking framework and take ResNet-50 [57] as the backbone network in this paper. The network deconstructs the object tracking task into two subproblems, classification and bounding-box regression, solving the visual tracking problem end-to-end during training. The classification predicts the label of each position, and the regression determines the relative position of the bounding boxes in the tracking phase.

Inspired by the success of multi-scale residual neural networks on satellite images [58], we propose the multi-scale cross correlation to improve the discriminative power of the model in the complex backgrounds of remote sensing videos. As shown in Figure 2, the network consists of two identical branches that share parameters: the template branch, which takes the multi-scale templates as input, and the search branch, which takes the multi-scale search regions. The multi-scale templates and search regions are cropped around the normal size using three scale variation factors [59]. The normal sizes of the template and the search region are generally 127 × 127 px and 255 × 255 px, respectively [35]. We denote the template patches and search patches by $z_i$ and $x_j$ ($i, j \in \{1, 2, 3\}$), respectively. Features are extracted from these multi-scale inputs by a shared convolutional neural network (CNN) $\varphi(\cdot)$. The branch outputs $\varphi(z_i)$ and $\varphi(x_j)$ are each split into a classification part and a regression part, giving $[\varphi(z_i)]_{cls}$, $[\varphi(z_i)]_{reg}$, $[\varphi(x_j)]_{cls}$, and $[\varphi(x_j)]_{reg}$.

Moreover, multilayer features of the backbone are fused so that the feature maps of higher and lower layers are considered simultaneously, yielding a more accurate object representation and location information. The outputs of the third, fourth, and fifth convolution blocks of the search branch and the template branch are taken separately, and each corresponding pair is combined by a depth-wise cross correlation [25]:

$$P^{cls}_{ij,l} = [\varphi_l(x_j)]_{cls} \star [\varphi_l(z_i)]_{cls}$$

$$P^{reg}_{ij,l} = [\varphi_l(x_j)]_{reg} \star [\varphi_l(z_i)]_{reg}$$

where $l \in \{3, 4, 5\}$ indexes the convolution block, $\varphi_l(\cdot)$ is the output of block $l$, $P^{cls}$ and $P^{reg}$ denote the classification map and the regression map, respectively, and $\star$ denotes the convolution operation. The depth-wise cross correlation greatly reduces computational cost and memory usage. In addition, it balances the number of parameters between the template and search branches, which makes training more stable. The maps of the three blocks are then averaged into a single cross-correlation map. In this way, features from several layers complement each other, yielding a more discriminative and robust model.
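The depth-wise cross correlation and the averaging step can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: features are plain (C, H, W) arrays, "convolution" is taken in the deep-learning sense (no kernel flipping), and the per-block maps are assumed to share the same spatial size so that they can be averaged.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def depthwise_xcorr(search_feat, template_feat):
    """Channel-by-channel cross correlation.

    search_feat: (C, Hx, Wx); template_feat: (C, Hz, Wz).
    Returns (C, Hx - Hz + 1, Wx - Wz + 1): channel c of the template is slid
    over channel c of the search features only, so channels never mix.
    """
    _, hz, wz = template_feat.shape
    # windows has shape (C, Ho, Wo, Hz, Wz): every template-sized window per channel
    windows = sliding_window_view(search_feat, (hz, wz), axis=(1, 2))
    return np.einsum("chwuv,cuv->chw", windows, template_feat)

def fused_xcorr(search_feats, template_feats):
    """Average the depth-wise correlation maps of several backbone blocks (e.g. blocks 3-5)."""
    maps = [depthwise_xcorr(x, z) for x, z in zip(search_feats, template_feats)]
    return np.mean(maps, axis=0)
```

Because each channel is correlated independently, the output keeps C channels, which is what lets separate heads predict the classification and regression maps from it.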

After feature extraction, the network outputs nine classification maps and nine regression maps, one for each combination of template scale and search-region scale. The classification map containing the maximum value is selected as the optimal classification map, since it best distinguishes the object (foreground) from the background, and the corresponding regression map determines the precise location of the object. At the same time, the scale variation factor of the corresponding template and search region is adaptively selected as the final scale factor.
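Selecting the optimal map among the nine scale pairs reduces to an argmax over the peaks of the classification maps. The sketch below assumes each classification map is a 2-D foreground-score array; `select_optimal_maps` is a hypothetical helper, and `scale_factors` stands in for the three factors of [59].

```python
import numpy as np

def select_optimal_maps(cls_maps, reg_maps, scale_factors):
    """Pick the template/search scale pair whose classification map has the highest peak.

    cls_maps[i][j] and reg_maps[i][j] belong to template scale i and search scale j,
    so three scale factors give 3 x 3 = 9 candidate pairs.
    """
    best_score, best_i, best_j = -np.inf, 0, 0
    for i in range(len(scale_factors)):
        for j in range(len(scale_factors)):
            peak = float(np.max(cls_maps[i][j]))
            if peak > best_score:
                best_score, best_i, best_j = peak, i, j
    cls = cls_maps[best_i][best_j]
    row, col = np.unravel_index(np.argmax(cls), cls.shape)
    return {
        "score": best_score,                  # confidence later compared with the detection threshold
        "cls_map": cls,
        "reg_map": reg_maps[best_i][best_j],  # regression map paired with the chosen scales
        "peak": (int(row), int(col)),         # location of the maximum response
        "scale_pair": (scale_factors[best_i], scale_factors[best_j]),
    }
```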

3.2. Region-Proposal Adaptive Selection

Owing to the presence of background clutter and occlusion in the remote sensing video, the general Siamese network model cannot perform the region-proposal selection well. Therefore, we add an adaptive search module with the Kalman filter aided by a partition search strategy to overcome this dilemma.

3.2.1. Kalman Filter

When facing complex backgrounds, some tracking algorithms improve precision at the cost of tracking speed. Because the Kalman filter requires little computation, adding it barely reduces tracking speed, and the model retains its end-to-end property. The Kalman filter has become a useful tool in object tracking [60,61,62]. It is also an effective means of correcting localization errors, smoothing trajectories, and optimizing the estimated object state. Moreover, it helps handle complex backgrounds in object tracking, such as background clutter, occlusion, illumination variation, and out-of-plane rotation [63]. We therefore use the Kalman filter to estimate the motion of the moving object.

The Kalman filter is a recursive estimator: the estimate of the current state is computed only from the estimate of the previous state and the observation of the current state, so there is no need to store the history of observations or estimates.

The operation of the Kalman filter includes two stages: prediction and update.

In the prediction phase, the filter uses the previous state to estimate the current state. The predicted state $\hat{x}_{k|k-1}$ and the predicted estimate covariance $P_{k|k-1}$ are

$$\hat{x}_{k|k-1} = F_k \hat{x}_{k-1|k-1} + B_k u_k$$

$$P_{k|k-1} = F_k P_{k-1|k-1} F_k^{\top} + Q_k$$

where $u_k$ is the control input, and $F_k$, $B_k$, and $Q_k$ are the state transition matrix, control matrix, and process covariance at time $k$, respectively. The state transition matrix can be written as follows:

is a null matrix, and and are the diagonal matrices in our experiments, which can be expressed as

In the update phase, the filter refines the predicted value from the prediction phase using the observation of the current state to obtain a more accurate new estimate. The measurement residual $y_k$, the measurement residual covariance $S_k$, and the optimal Kalman gain $K_k$ are defined by

$$y_k = z_k - H_k \hat{x}_{k|k-1}$$

$$S_k = H_k P_{k|k-1} H_k^{\top} + R_k$$

$$K_k = P_{k|k-1} H_k^{\top} S_k^{-1}$$

where $z_k$ is the measurement, and $H_k$ and $R_k$ are the measurement matrix and the measurement noise covariance at time $k$, respectively. In our experiments, these are diagonal matrices.

Then, the updated state estimate $\hat{x}_{k|k}$ and the updated covariance estimate $P_{k|k}$ are

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k y_k$$

$$P_{k|k} = (I - K_k H_k) P_{k|k-1}$$

where $I$ is the identity matrix.

The Kalman filter thus iteratively estimates the state at the next time step from the previous state estimate and the observed data. Its parameters must be tuned to the data at hand; the values above were chosen empirically over several trials on our data. For object tracking, the Kalman filter models the motion of the object and can therefore estimate the position of the object in the next frame.
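The prediction and update equations can be illustrated with a small constant-velocity Kalman filter on the box position. This sketch rests on assumptions the text does not state: the state is [x, y, vx, vy], only the position is measured, the control term $B_k u_k$ is dropped, and the noise levels `q` and `r` are hypothetical. Width and height are left out because they are held constant when the filter takes over.

```python
import numpy as np

class ConstantVelocityKalman:
    """Kalman filter on the box position with a constant-velocity motion model.

    State: [x, y, vx, vy]; measurement: [x, y]. The control term is omitted.
    q and r are hypothetical noise levels, not the values used in the paper.
    """

    def __init__(self, x0, y0, q=1e-2, r=1e-1, dt=1.0):
        self.x = np.array([x0, y0, 0.0, 0.0], dtype=float)  # initial state, zero velocity
        self.P = np.eye(4)                                  # initial estimate covariance
        self.F = np.array([[1.0, 0.0, dt, 0.0],
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])           # state transition matrix
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])           # measurement matrix
        self.Q = q * np.eye(4)                              # process noise covariance
        self.R = r * np.eye(2)                              # measurement noise covariance

    def predict(self):
        """Prediction phase: propagate state and covariance one step ahead."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z):
        """Update phase: correct the prediction with a measured position z = (x, y)."""
        y = np.asarray(z, dtype=float) - self.H @ self.x    # measurement residual
        S = self.H @ self.P @ self.H.T + self.R             # residual covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)            # optimal Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()
```

During an occlusion there is no reliable measurement, so one would call `predict()` alone; the filter then extrapolates the last estimated velocity, which is what makes it useful as a fallback while the network's confidence is low.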

3.2.2. Partition Search Strategy

When the tracked object is completely occluded in the remote sensing video, the Kalman filter also yields an incorrect bounding box, so the partition search strategy is employed to correct it.

The partition search strategy proceeds as follows. First, we select a small region around the position where the object was lost, as shown in Figure 3b, and use random Gaussian noise to generate $b$ candidate boxes of the same size as the object in the previous frame. Let $w$ and $h$ be the width and height of the tracked object. The area of the first partition can then be expressed as

where $\tilde{w}_1$ and $\tilde{h}_1$ denote the width and height of the first partition, respectively. If, in the first partition, the maximum intersection over union (IoU) between the candidate boxes and the ground truth exceeds the threshold, the corresponding candidate box is taken as the object bounding box for the current frame. Otherwise, the search range is expanded, and candidate boxes are generated again with random Gaussian noise. The area of the second partition is then

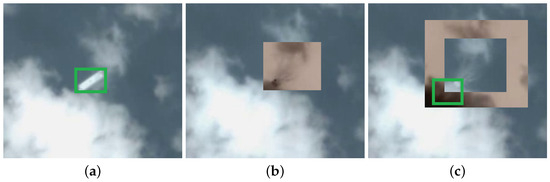

Figure 3.

The partition search strategy in our method. (a) The object is not occluded; (b) A small area search with the brown part of the image is performed when the object is completely occluded; (c) We expand the search area to re-detect the object. The green box indicates the actual position of the object.

The maximum IoU is then compared again with the threshold, which is empirically set to 0.7 [46] in our experiments. As Figure 3c shows, the object can be re-detected after the search area is expanded once. For other videos, the expansion can be repeated until the tracked object is re-detected. However, if the search range has been expanded to the full frame and no candidate box exceeds the threshold, the bounding box $(x_t, y_t, w_t, h_t)$ of the current frame is set to [0, 0, 0, 0], meaning that no object has been found in the current frame.
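A sketch of the expanding partition search follows. The first-partition size (twice the object size), the growth factor, the Gaussian spread, and the candidate count are hypothetical choices. The text scores candidates against the ground truth; the sketch takes a generic `reference_box` argument instead, so the caller decides what to score against. The 0.7 IoU threshold and the [0, 0, 0, 0] fallback follow the description above.

```python
import numpy as np

def iou(a, b):
    """IoU of two (x, y, w, h) boxes, with (x, y) the upper-left corner."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def partition_search(last_box, reference_box, frame_w, frame_h,
                     num_candidates=200, iou_thresh=0.7, growth=2.0, seed=0):
    """Expanding-region Gaussian candidate search (hypothetical parameters; growth must be > 1).

    Returns the best candidate once its IoU with reference_box exceeds iou_thresh,
    or (0, 0, 0, 0) if the partition has grown to the whole frame without success.
    """
    rng = np.random.default_rng(seed)
    x, y, w, h = last_box
    cx, cy = x + w / 2.0, y + h / 2.0       # centre of the last known box
    region_w, region_h = 2.0 * w, 2.0 * h    # hypothetical first-partition size
    while True:
        # Candidate centres are drawn from a Gaussian spread over the current partition;
        # every candidate keeps the object size from the previous frame.
        us = rng.normal(cx, region_w / 4.0, num_candidates)
        vs = rng.normal(cy, region_h / 4.0, num_candidates)
        cands = [(float(u - w / 2.0), float(v - h / 2.0), w, h) for u, v in zip(us, vs)]
        scores = [iou(c, reference_box) for c in cands]
        best = int(np.argmax(scores))
        if scores[best] > iou_thresh:
            return cands[best]
        if region_w >= frame_w and region_h >= frame_h:
            return (0.0, 0.0, 0.0, 0.0)    # no object found in this frame
        # Expand the partition, capped at the frame size.
        region_w = min(region_w * growth, frame_w)
        region_h = min(region_h * growth, frame_h)
```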

3.3. Tracking Algorithm

After training the SiamMAS network, we use the network to achieve the tracking of the object in the test videos. Details for the proposed tracker are described in Algorithm 1.

For each test video, the object position in the given first frame is represented by the bounding box $(x_1, y_1, w_1, h_1)$, where $(x_1, y_1)$ is the upper-left corner of the bounding box and $w_1$ and $h_1$ are its width and height, respectively. From this box we extract the multi-scale templates $z_i$ and multi-scale search regions $x_j$. Feeding them into the network yields classification maps and regression maps, from which the optimal classification map and regression map are derived to determine the category and location of the object. If the frame is not affected by a complex background, the multi-scale search regions for the next frame are updated based on the bounding box obtained in the current frame. This process is repeated until the object has been tracked through all frames.

| Algorithm 1. Proposed SiamMAS tracking algorithm. |
|---|
| Input: video frames; initial bounding box of the object $(x_1, y_1, w_1, h_1)$ |
| Output: predicted bounding boxes of the object $(x_t, y_t, w_t, h_t)$ |

The network is trained on two subtasks, classification and regression, and the loss function is defined as

$$L = \lambda_1 L_{cls} + \lambda_2 L_{reg}$$

where $L_{cls}$ and $L_{reg}$ denote the classification loss and the regression loss, respectively. We simply set $\lambda_1 = \lambda_2 = 1$ [24,35,36] in our experiments. For classification, positive and negative samples are defined as in [35], and the loss is the standard cross-entropy loss. For regression, the loss is the IoU loss:

$$L_{reg} = 1 - \mathrm{IoU}$$

where IoU is the overlap ratio between the predicted bounding box and the ground truth.
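The combined training loss can be sketched in plain Python. Binary cross-entropy stands in for the classification loss, the regression term is 1 − IoU, and both weights default to 1. The helper names and the (x, y, w, h) box format are assumptions of this sketch, and the regression inputs are assumed to be positive samples only.

```python
import math

def box_iou(a, b):
    """IoU of two (x, y, w, h) boxes, with (x, y) the upper-left corner."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def iou_loss(pred_box, gt_box):
    """Regression loss: 1 - IoU, which is 0 for a perfect box."""
    return 1.0 - box_iou(pred_box, gt_box)

def bce(prob, label, eps=1e-7):
    """Binary cross-entropy for one foreground probability and a 0/1 label."""
    p = min(max(prob, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

def total_loss(cls_probs, cls_labels, pred_boxes, gt_boxes, lam_cls=1.0, lam_reg=1.0):
    """Weighted sum of the mean classification loss and the mean regression loss."""
    l_cls = sum(bce(p, y) for p, y in zip(cls_probs, cls_labels)) / len(cls_probs)
    l_reg = sum(iou_loss(p, g) for p, g in zip(pred_boxes, gt_boxes)) / len(pred_boxes)
    return lam_cls * l_cls + lam_reg * l_reg
```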

However, complex backgrounds, especially background clutter and occlusion, often occur in remote sensing videos and can easily cause tracking to fail because reliable features are hard to extract. The adaptive search module is used to address this challenge. First, a detection threshold is set to decide whether the object is being tracked in the current frame: if the confidence score output by the network is below this threshold, the object has not been tracked correctly in the current frame. Second, the position $(x_{t-1}, y_{t-1})$ of the bounding box obtained in the previous frame is taken as the initial state of the Kalman filter in the adaptive search module, while the width and height of the object are kept constant. The prediction and update steps of the Kalman filter, (3)–(11), then give the bounding box $(x_t, y_t, w_t, h_t)$ of the object in the current frame. This bounding box is used to update the search region that is fed to the network to detect the object in the next frame. When the Kalman filter also fails to yield an accurate result, the partition search strategy is applied as an auxiliary method to re-detect the object, improving tracking performance.
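The control flow of this tracking loop can be summarized in a short sketch. `siamese_step`, `kalman_step`, and `partition_step` are hypothetical callables standing in for the network, the Kalman filter, and the partition search. In particular, `kalman_step` returning `None` is an assumed way of signalling that the Kalman estimate is unreliable, since the text does not specify how that failure is detected. Keeping the last valid box as the search centre after a [0, 0, 0, 0] result is likewise an assumption.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h), (x, y) = upper-left corner
EMPTY: Box = (0.0, 0.0, 0.0, 0.0)

def track_sequence(
    frames: Sequence,
    init_box: Box,
    siamese_step: Callable[[object, Box], Tuple[Box, float]],
    kalman_step: Callable[[object, Box], Optional[Box]],
    partition_step: Callable[[object, Box], Box],
    score_thresh: float = 0.93,
) -> List[Box]:
    """Run the network on every frame, falling back to Kalman, then partition search."""
    boxes: List[Box] = [init_box]
    search_box = init_box  # box that centres the search region of the next frame
    for frame in frames[1:]:
        box, score = siamese_step(frame, search_box)
        if score < score_thresh:
            # Low confidence: try the Kalman estimate first, then the partition search.
            kf_box = kalman_step(frame, search_box)
            box = kf_box if kf_box is not None else partition_step(frame, search_box)
        boxes.append(box)
        if box != EMPTY:  # assumption: keep the last valid box when nothing was found
            search_box = box
    return boxes
```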

4. Experiments

In this section, we verify the effectiveness of the proposed SiamMAS method through a series of experiments on remote sensing video datasets.

4.1. Experimental Setup

4.1.1. Datasets

To train the tracking network, we combine ImageNet VID [64], LaSOT [65], YouTube-BoundingBoxes [66], COCO [67], GOT10k [68], and ImageNet DET [64].

For testing, we use remote sensing videos from Jilin-1, China's first commercial remote sensing satellite. It was developed by Changchun-based ChangGuang Satellite Technology and provides very high-resolution satellite videos at a spatial resolution of 0.92 m. The moving objects are mainly airplanes, ships, and vehicles. The ground area covered by the remote sensing videos is roughly 54.6 km². Each raw video is played back at 30 frames per second (FPS), and each frame measures 12,000 × 5000 px. Because the frames are so large, areas of interest (AOIs) are cropped and manually annotated for moving object tracking. The 36 AOI videos used for testing contain between 100 and 320 frames each and come in three different resolutions. Our tracking algorithm accurately tracks moving objects in these videos even under complex backgrounds involving background clutter, occlusion, and scale variation.

4.1.2. Evaluation Metrics

To characterize tracker robustness, OTB-13 [69] proposes three evaluation protocols: one-pass evaluation (OPE), spatial robustness evaluation (SRE), and temporal robustness evaluation (TRE). In OPE, each tracker is run once on each selected AOI from the remote sensing videos, initialized with the ground truth in the first frame. SRE perturbs the first-frame annotation of each AOI, and TRE starts the trackers from different frames of each AOI.

We evaluate tracker performance under OPE, SRE, or TRE using common metrics: mean overlap precision, the area under the curve (AUC), and FPS [70]. The mean overlap precision is based on the center location error, the Euclidean distance between the predicted and the true object centers: it is the percentage of frames whose center location error is below a given threshold (usually 20 pixels [9,71]). The mean overlap precision and the AUC are reported in the precision plot and the success plot, respectively. The success plot gives, for each overlap threshold between 0 and 1, the fraction of frames whose IoU with the ground truth exceeds that threshold, and the AUC is the area under this curve. FPS measures the speed of the tracking algorithm.
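The two accuracy metrics can be computed as in this NumPy sketch of the OTB-style definitions: the center-error score at a 20-pixel threshold, and the AUC approximated as the mean success rate over 21 evenly spaced overlap thresholds from 0 to 1. Function names are illustrative, and boxes are (x, y, w, h) with (x, y) the upper-left corner.

```python
import numpy as np

def center_errors(pred, gt):
    """Per-frame Euclidean distance between predicted and ground-truth box centres."""
    p, g = np.asarray(pred, dtype=float), np.asarray(gt, dtype=float)
    pc = p[:, :2] + p[:, 2:] / 2.0
    gc = g[:, :2] + g[:, 2:] / 2.0
    return np.linalg.norm(pc - gc, axis=1)

def overlaps(pred, gt):
    """Per-frame IoU between predicted and ground-truth boxes."""
    p, g = np.asarray(pred, dtype=float), np.asarray(gt, dtype=float)
    iw = np.clip(np.minimum(p[:, 0] + p[:, 2], g[:, 0] + g[:, 2]) - np.maximum(p[:, 0], g[:, 0]), 0.0, None)
    ih = np.clip(np.minimum(p[:, 1] + p[:, 3], g[:, 1] + g[:, 3]) - np.maximum(p[:, 1], g[:, 1]), 0.0, None)
    inter = iw * ih
    union = p[:, 2] * p[:, 3] + g[:, 2] * g[:, 3] - inter
    return np.divide(inter, union, out=np.zeros_like(inter), where=union > 0)

def precision(pred, gt, thresh=20.0):
    """Fraction of frames whose centre location error is below thresh pixels."""
    return float(np.mean(center_errors(pred, gt) < thresh))

def success_auc(pred, gt, thresholds=None):
    """Area under the success plot, approximated by the mean success rate over thresholds."""
    if thresholds is None:
        thresholds = np.linspace(0.0, 1.0, 21)
    ious = overlaps(pred, gt)
    return float(np.mean([np.mean(ious > t) for t in thresholds]))
```

With a strict "greater than" comparison, even a perfect tracker scores 0 at the threshold 1.0, so its AUC under this approximation is 20/21 rather than 1.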

4.1.3. Implementation Details

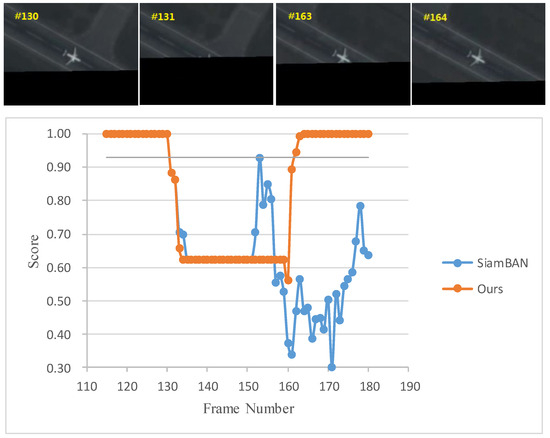

We use templates and search regions at three scales, which are fed into the ResNet-50 backbone network. The backbone is initialized with weights pre-trained on ImageNet. We train the model with stochastic gradient descent (SGD) for 20 epochs with a batch size of 128, a learning rate of 0.005, and a weight decay of 0.0001 [35,38,72]. During training, 16 positive and 16 negative samples are used [40]. The two parameters in (3) are empirically set to 0.01 and 0.003, respectively. The detection threshold is set to 0.93 in our experiments. As shown in Figure 4, the score output by the network is close to 1 when the object is detected accurately. However, our tracker operates on remote sensing videos with complex backgrounds, where a failed track can still produce a fairly high score. Statistics over our experiments show that the maximum score output by the network when tracking fails is 0.927, so we choose 0.93 as the detection threshold. When the confidence score output by the network is below this threshold, the object is considered not accurately tracked, and the adaptive search module is used to correct the object's coordinates.
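The threshold choice and the resulting switch can be sketched as below. The margin and rounding used to turn the failure statistic 0.927 into 0.93 are our assumption, and `pick_threshold`, `select_box`, and the `fallback` callable are hypothetical names; `fallback` merely stands in for the adaptive search module and does not reproduce it.

```python
from typing import Callable, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def pick_threshold(failure_scores: Sequence[float], margin: float = 0.003) -> float:
    """Place the detection threshold just above the highest score seen on failed frames."""
    return round(max(failure_scores) + margin, 2)


def select_box(net_box: Box, score: float, fallback: Callable[[], Box],
               threshold: float = 0.93) -> Tuple[Box, bool]:
    """Return (box, used_fallback); the network's box is trusted only if score >= threshold."""
    if score >= threshold:
        return net_box, False
    # Low confidence: treat the object as lost and let the motion-based search supply a box.
    return fallback(), True


threshold = pick_threshold([0.41, 0.927, 0.88])  # 0.93
box, corrected = select_box((120.0, 80.0, 10.0, 6.0), 0.95, lambda: (0.0, 0.0, 0.0, 0.0), threshold)
```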

Figure 4.

An example of object tracking with occlusion. The object is completely occluded between frames 131 and 162 of the video. SiamBAN fails to detect the object correctly from frame 163 onward, whereas our tracker accurately locates the object when it reappears.

In the experiments, the model is implemented in PyTorch 1.9.0 and run on a PC equipped with an Intel(R) Xeon(R) Silver 4216 2.10 GHz CPU and an NVIDIA GeForce RTX 3090 GPU. The PyTorch implementation runs at 37.528 FPS, faster than the 30 FPS playback rate of the raw videos, so the proposed tracker runs in real time.
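A minimal timing harness for an FPS figure of this kind is sketched below, assuming a hypothetical per-frame callable `track_frame`. When timing a PyTorch model on the GPU, `torch.cuda.synchronize()` should be called before reading the clock, because CUDA kernels run asynchronously; that step is omitted here to keep the sketch dependency-free.

```python
import time
from typing import Callable, Iterable


def measure_fps(track_frame: Callable[[object], object], frames: Iterable[object]) -> float:
    """Average frames per second of `track_frame` over all frames (wall-clock time)."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        track_frame(frame)  # one tracking step per frame
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


def is_real_time(fps: float, source_fps: float = 30.0) -> bool:
    """A tracker keeps up with the video if it processes frames at least as fast as they play."""
    return fps >= source_fps


print(is_real_time(37.528))  # True: 37.528 FPS exceeds the 30 FPS playback rate
```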

4.2. Comparison with Existing Techniques

4.2.1. Comparison for Complex Backgrounds

To demonstrate the effectiveness of our tracker, we compare it with our previous work, AD-OHNet [46]. AD-OHNet uses a deep reinforcement learning framework to track objects in remote sensing videos. To handle complete occlusion, it exploits the temporal and spatial contexts between consecutive frames and uses an object appearance model and motion vectors obtained by reinforcement learning to provide occlusion information. In contrast, this paper adopts a SiamMAS network to track objects in remote sensing videos with complex backgrounds. In our test dataset, complete occlusions last 30, 20, and 10 frames. Our tracker generally re-detects the object by the third frame after it reappears.

Table 1 shows the comparison results for complex backgrounds. Both methods effectively handle occlusion in object tracking. Although the precision of our tracker is lower than that of AD-OHNet, the gap is only 5.3 percentage points (p.p.). In the experiments, we find that AD-OHNet can handle complete occlusion only when the object travels along a straight line, whereas our method also tracks well when the object travels along a curve. In addition, our method handles background clutter and scale variation better than AD-OHNet. Moreover, the proposed method tracks substantially faster than AD-OHNet, running in real time at 37.528 FPS. These experimental results indicate that the proposed tracker is effective and performs well.

Table 1.

Comparison of our tracker with AD-OHNet.

4.2.2. Comparison with State-of-the-Art Trackers

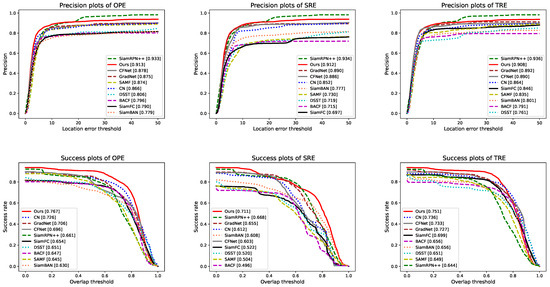

In this section, we compare our method with 20 state-of-the-art trackers: GradNet [23], CFNet [17], Staple [10], DSST [9], CN [5], SAMF [6], KCF_CN [8], BACF [11], CSK [4], CSR-DCF [12], DAT [73], KCF_HOG [7], STRCF [14], MCCT-H [13], MOSSE [3], AD-OHNet [46], SiamFC [22], SiamBAN [35], SiamMask [24], and SiamRPN++ [25]. The precision plots and success plots are shown in Figure 5. From these trackers, we select nine for further comparison with our tracker, covering both correlation filter-based [5,6,9,11] and DL-based [17,22,23,25,35] tracking methods. Extensive experiments on remote sensing videos verify the effectiveness of the proposed tracker relative to the other tracking methods in both the precision plots and the success plots, for OPE, SRE, and TRE alike. Overall, these results indicate that our tracker is highly robust.

Figure 5.

Precision plots and success plots for OPE, SRE, and TRE on the AOIs from remote sensing videos. The legend values in the precision plots and success plots are each tracker's precision at 20 px and AUC, respectively.

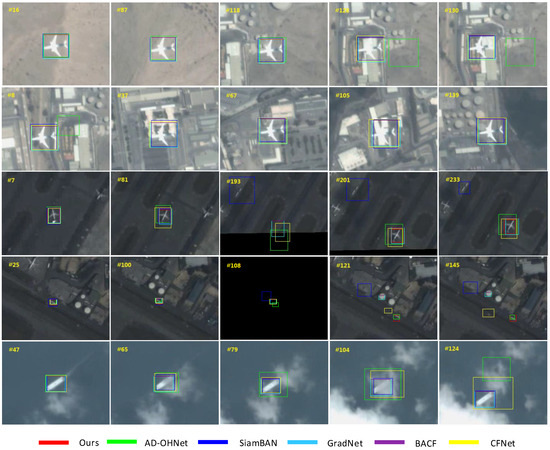

Figure 6 shows qualitative results comparing the proposed tracker with five other trackers on remote sensing videos with complex backgrounds. As shown in Figure 7, each frame of this remote sensing video sequence has a resolution of px; for convenience of presentation, a region of px is cropped from each frame. The tested AOIs in Figure 6 contain airplanes, vehicles, and ships. The first two rows show tracking results under background clutter: our tracker accurately tracks the object even when highly similar objects (e.g., buildings) surround it. Rows 3 and 4 show tracking results under occlusion. When the object is completely occluded in frames 193 and 108, the other trackers output wrong bounding boxes, whereas our tracker, owing to its partition search strategy, outputs the correct empty box [0, 0, 0, 0]. General tracking methods cannot continue tracking once occlusion occurs, especially complete occlusion, whereas our tracker resumes tracking the object once it reappears. Note that the occlusions in frames 193 and 201 of the third row and frame 108 of the fourth row may be caused by deviations during image acquisition on the remote sensing platform; the videos in Figure 6 are shown as purchased, and we did not modify them to simulate occlusion. The scene in the third row contains other, similar airplanes; while SiamBAN drifts to another airplane, our tracker still tracks the object accurately. In addition, our tracker remains effective even when the object is so small that its features are difficult to extract: the object in the fourth row occupies only px in the remote sensing video.
In this video, only AD-OHNet and our SiamMAS correctly track the object when it reappears after complete occlusion; however, AD-OHNet handles background clutter and scale variation poorly. The last row shows tracking results under scale variation: our tracker accurately tracks the ship as its apparent scale changes due to cloud occlusion. In sum, our method achieves strong tracking results on remote sensing videos.

Figure 6.

Qualitative results comparing our approach with other trackers on the AOIs from remote sensing videos with complex backgrounds. The number in the upper-left corner is the frame number within the video. The first two rows show tracking results under background clutter, rows 3 and 4 under occlusion, and the last row under scale variation.

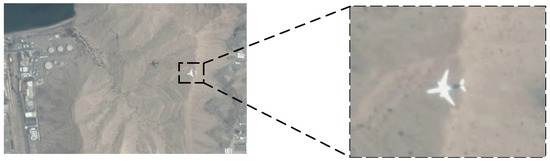

Figure 7.

Each frame in this remote sensing video experiment has a resolution of px; a region of is cropped to facilitate the demonstration.

The precision and AUC are shown in Table 2. On the tested AOIs, our tracker achieves strong results in both precision and AUC, and Table 2 shows that its performance is comparable to that of the other trackers. Siamese network-based trackers generally perform better than the other methods. Among them, SiamFC was the first to apply Siamese networks to object tracking, and SiamBAN, SiamMask, and SiamRPN++ all improve on it; consequently, SiamFC performs slightly worse. Because our tracker builds on SiamBAN and adds multi-scale cross correlation and an adaptive search module for remote sensing videos with complex backgrounds, it is better suited than SiamBAN to the Jilin-1 dataset. SiamMask and SiamRPN++ also perform well in the experiment; compared with these two methods, our SiamMAS generally achieves a higher AUC but lower precision. We will focus on improving tracking precision in future work. In sum, the experimental results indicate that our method is suitable for tracking in remote sensing videos with complex backgrounds.

Table 2.

Comparison of our tracker with state-of-the-art trackers on remote sensing videos under complex backgrounds. The two best results are shown in red and blue text, respectively.

In addition, we test our tracker on other datasets. Because remote sensing tracking datasets are scarce, UAV123 [74] and LaSOT [65] are chosen to verify the effectiveness of the method. UAV123 is a dataset of 123 video sequences captured by an unmanned aerial vehicle for object detection and object tracking. LaSOT is a high-quality benchmark for large-scale single-object tracking that is widely used to evaluate tracking algorithms. OPE experiments on these two datasets yield precision values of 0.802 and 0.604, respectively. We also compare our method with several state-of-the-art trackers; the results are shown in Tables 3 and 4. These results show that our method also achieves good tracking performance on non-remote sensing datasets.

Table 3.

Comparison with the state-of-the-art trackers on UAV123. The two best results are shown in red and blue text, respectively.

Table 4.

Comparison with the state-of-the-art trackers on LaSOT. The two best results are shown in red and blue text, respectively.

4.3. Ablation Study

4.3.1. Discussion on Multi-Scale Cross Correlation

To validate the effectiveness of the proposed multi-scale cross correlation, we perform an ablation study on the remote sensing datasets; quantitative results are shown in Table 5. The multi-scale cross correlation enhances the discriminative power of the model, enabling it to better handle complex backgrounds in remote sensing videos. Adopting the multi-scale cross correlation improves precision by 4.6 p.p.

Table 5.

Ablation study results of our tracker.

4.3.2. Discussion on Adaptive Search Module

The adaptive search module plays a key role in our tracker. By combining the Kalman filter with the partition search strategy, it estimates the motion of the tracked object and performs re-detection when the video contains severe occlusion or background clutter. Table 5 shows that introducing the adaptive search module improves the precision of the tracker by 8.4 p.p., which verifies its effectiveness.
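The motion-estimation half of this module can be illustrated with a generic constant-velocity Kalman filter over the box center. This is a sketch under stated assumptions, not the authors' filter: the state layout, the initial covariance, and the noise settings `q` and `r` are hypothetical, and the partition search strategy is not modeled. During low-confidence frames (for example, under complete occlusion), only `predict` is called, so the filter extrapolates the last estimated motion.

```python
import numpy as np


class ConstantVelocityKF:
    """Kalman filter over the state [cx, cy, vx, vy] with a constant-velocity model (dt = 1 frame)."""

    def __init__(self, cx: float, cy: float, q: float = 0.01, r: float = 1.0):
        self.x = np.array([cx, cy, 0.0, 0.0])      # state estimate
        self.P = np.eye(4) * 10.0                   # state covariance (uncertain start)
        self.F = np.array([[1.0, 0.0, 1.0, 0.0],    # position += velocity each frame
                           [0.0, 1.0, 0.0, 1.0],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],    # only the center is observed
                           [0.0, 1.0, 0.0, 0.0]])
        self.Q = np.eye(4) * q                      # process noise (hypothetical)
        self.R = np.eye(2) * r                      # measurement noise (hypothetical)

    def predict(self) -> np.ndarray:
        """Propagate the state one frame ahead; used alone when the network is not confident."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z) -> np.ndarray:
        """Correct the prediction with a confident network detection z = (cx, cy)."""
        y = np.asarray(z, dtype=float) - self.H @ self.x    # innovation
        S = self.H @ self.P @ self.H.T + self.R              # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)             # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()


# Usage: an object moving 2 px/frame along x is confidently detected for 20 frames,
# then fully occluded for 5 frames (predict only, no update).
kf = ConstantVelocityKF(10.0, 5.0)
for t in range(1, 20):
    kf.predict()
    kf.update((10.0 + 2.0 * t, 5.0))
for _ in range(5):
    pred = kf.predict()  # close to (58, 5), the true position after the occlusion
```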

Table 5 shows that the multi-scale cross correlation and the adaptive search module each improve performance on their own and complement each other when combined: when the multi-scale cross correlation cannot resolve difficult tracking cases, the adaptive search module serves as an auxiliary means, so the model performs well on the challenging Jilin-1 remote sensing dataset. When both modules are used together, the precision of the tracking algorithm reaches its highest value of 0.913. We conclude that both the adaptive search module and the multi-scale cross correlation effectively improve the tracking performance of the model.

However, our method does not perform well when the tracked object is small. Small objects, sometimes only a few pixels in size, often appear in remote sensing videos and make feature extraction difficult. For example, in frame 121 of the fourth row of Figure 6, our tracker still follows the object, but the bounding box is inaccurate. Because precision depends only on the center location error whereas the AUC depends on box overlap, this yields high precision but a low AUC. We intend to address this in future work.
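A small worked example, with hypothetical box sizes, shows why an inaccurate box on a small object can still count as precise while scoring poorly on overlap: a box centered exactly on a 4 × 4 px object but three times too wide and tall has zero center error yet an intersection over union of only 1/9.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between box centers (the quantity precision thresholds)."""
    dx = (a[0] + a[2] / 2) - (b[0] + b[2] / 2)
    dy = (a[1] + a[3] / 2) - (b[1] + b[3] / 2)
    return (dx * dx + dy * dy) ** 0.5


def iou(a: Box, b: Box) -> float:
    """Intersection over union (the quantity the success plot and AUC threshold)."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


gt = (100.0, 100.0, 4.0, 4.0)    # hypothetical 4 x 4 px object
pred = (96.0, 96.0, 12.0, 12.0)  # same center, box three times too wide and tall
print(center_error(pred, gt))    # 0.0: a hit at the 20 px precision threshold
print(round(iou(pred, gt), 3))   # 0.111: fails every overlap threshold above 0.111
```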

5. Conclusions

In this paper, we propose a single-object tracking method for remote sensing videos with complex backgrounds. The tracking algorithm introduces a multi-scale cross correlation and an adaptive search module. The multi-scale cross correlation makes the model more discriminative, so that it better handles complex backgrounds and scale variation in remote sensing videos. When the network fails to detect the object reliably in the current frame, the Kalman filter of the adaptive search module relocates the tracked object, and the object position obtained by the Kalman filter is used to update the network input for the next frame. The partition search strategy of the adaptive search module helps the Kalman filter achieve more accurate region-proposal selection. The experimental results demonstrate that the proposed tracking algorithm effectively handles background clutter, occlusion, and scale variation in remote sensing videos, and our tracker achieves superior performance against these complex backgrounds. In the future, we will try to build a network model that is more accurate and better adapted to small objects in remote sensing videos, so that the tracking algorithm achieves high precision and AUC even for objects only a few pixels in size.

Author Contributions

Conceptualization, B.H. and Y.C.; methodology, Y.C.; software, Y.C.; validation, B.H.; formal analysis, S.W. and L.J.; investigation, Z.L.; resources, Z.R.; writing—original draft preparation, Y.C.; writing—review and editing, Y.C.; visualization, Y.C.; supervision, B.H.; funding acquisition, B.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Key Scientific Technological Innovation Research Project by the Ministry of Education; the National Natural Science Foundation of China under Grants 62171347, 61877066, 62276199, 61771379, 62001355, and 62101405; the Science and Technology Program in Xi’an of China under Grant XA2020-RGZNTJ-0021; and the 111 Project.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

2. Marvasti-Zadeh, S.M.; Cheng, L.; Ghanei-Yakhdan, H.; Kasaei, S. Deep Learning for Visual Tracking: A Comprehensive Survey. IEEE Trans. Intell. Transport. Syst. 2021, 23, 3943–3968.

3. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

4. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 702–715.

5. Danelljan, M.; Shahbaz Khan, F.; Felsberg, M.; Van de Weijer, J. Adaptive color attributes for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

6. Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

7. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

8. Asha, C.S.; Narasimhadhan, A.V. Adaptive Learning Rate for Visual Tracking Using Correlation Filters. Procedia Comput. Sci. 2016, 89, 614–622.

9. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1561–1575.

10. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

11. Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

12. Lukežič, A.; Vojíř, T.; Čehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. Int. J. Comput. Vis. 2018, 126, 671–688.

13. Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Li, H. Multi-Cue Correlation Filters for Robust Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

14. Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

15. Jain, M.; Tyagi, A.; Subramanyam, A.V.; Denman, S.; Sridharan, S.; Fookes, C. Channel Graph Regularized Correlation Filters for Visual Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 715–729.

16. Moorthy, S.; Joo, Y.H. Adaptive Spatial-Temporal Surrounding-Aware Correlation Filter Tracking via Ensemble Learning. Pattern Recognit. 2023, 139, 109457.

17. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

18. Danelljan, M.; Robinson, A.; Shahbaz Khan, F.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9909, pp. 472–488.

19. Nam, H.; Han, B. Learning Multi-domain Convolutional Neural Networks for Visual Tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

20. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6931–6939.

21. Nam, H.; Baek, M.; Han, B. Modeling and Propagating CNNs in a Tree Structure for Visual Tracking. arXiv 2016, arXiv:1608.07242.

22. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Computer Vision—ECCV 2016 Workshops; Hua, G., Jégou, H., Eds.; Springer: Cham, Switzerland, 2016; pp. 850–865.

23. Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-Guided Network for Visual Object Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

24. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast Online Object Tracking and Segmentation: A Unifying Approach. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

25. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4277–4286.

26. Zhang, Y.; Hittawe, M.M.; Katterbauer, K.; Marsala, A.F.; Knio, O.M.; Hoteit, I. Joint seismic and electromagnetic inversion for reservoir mapping using a deep learning aided feature-oriented approach. In Proceedings of the SEG Technical Program Expanded Abstracts, Houston, TX, USA, 13 October 2020; pp. 2186–2190.

27. Fang, J.; Liu, G. Visual Object Tracking Based on Mutual Learning Between Cohort Multiscale Feature-Fusion Networks with Weighted Loss. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1055–1065.

28. Dong, X.; Shen, J.; Porikli, F.; Luo, J.; Shao, L. Adaptive Siamese Tracking With a Compact Latent Network. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 8049–8062.

29. Li, X.; Jiao, L.; Zhu, H.; Liu, F.; Yang, S.; Zhang, X.; Wang, S.; Qu, R. A Collaborative Learning Tracking Network for Remote Sensing Videos. IEEE Trans. Cybern. 2023, 53, 1954–1967.

30. Chen, C.; Liu, M.Y.; Tuzel, O.; Xiao, J. R-CNN for Small Object Detection. In Computer Vision—ACCV 2016; Lai, S.H., Lepetit, V., Nishino, K., Sato, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10115, pp. 214–230.

31. Kisantal, M.; Wojna, Z.; Murawski, J.; Naruniec, J.; Cho, K. Augmentation for Small Object Detection. arXiv 2019, arXiv:1902.07296.

32. Long, M.; Cong, S.; Shanshan, H.; Zoujian, W.; Xuhao, W.; Yanxi, W. SDDNet: Infrared small and dim target detection network. CAAI Trans. Intell. Technol. 2023, in press.

33. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric contextual modulation for infrared small target detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2021; pp. 950–959.

34. Pham, M.T.; Courtrai, L.; Friguet, C.; Lefèvre, S.; Baussard, A. YOLO-Fine: One-stage detector of small objects under various backgrounds in remote sensing images. Remote Sens. 2020, 12, 2501.

35. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6667–6676.

36. Shao, J.; Du, B.; Wu, C.; Gong, M.; Liu, T. HRSiam: High-Resolution Siamese Network, Towards Space-Borne Satellite Video Tracking. IEEE Trans. Image Process. 2021, 30, 3056–3068.

37. Jiang, M.; Zhao, Y.; Kong, J. Mutual Learning and Feature Fusion Siamese Networks for Visual Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3154–3167.

38. Dong, X.; Shen, J.; Wu, D.; Guo, K.; Jin, X.; Porikli, F. Quadruplet Network With One-Shot Learning for Fast Visual Object Tracking. IEEE Trans. Image Process. 2019, 28, 3516–3527.

39. Bi, F.; Sun, J.; Han, J.; Wang, Y.; Bian, M. Remote Sensing Target Tracking in Satellite Videos Based on a Variable-angle-adaptive Siamese Network. IET Image Proc. 2021, 15, 1987–1997.

40. Zhang, T.; Liu, X.; Zhang, Q.; Han, J. SiamCDA: Complementarity- and Distractor-Aware RGB-T Tracking Based on Siamese Network. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1403–1417.

41. Shi, F.; Qiu, F.; Li, X.; Tang, Y.; Zhong, R.; Yang, C. A Method to Detect and Track Moving Airplanes from a Satellite Video. Remote Sens. 2020, 12, 2390.

42. Zhang, Z.; Wang, C.; Song, J.; Xu, Y. Object Tracking Based on Satellite Videos: A Literature Review. Remote Sens. 2022, 14, 3674.

43. Shao, J.; Du, B.; Wu, C.; Zhang, L. Tracking Objects From Satellite Videos: A Velocity Feature Based Correlation Filter. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7860–7871.

44. Xuan, S.; Li, S.; Han, M.; Wan, X.; Xia, G.S. Object Tracking in Satellite Videos by Improved Correlation Filters With Motion Estimations. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1074–1086.

45. Song, W.; Jiao, L.; Liu, F.; Liu, X.; Li, L.; Yang, S.; Hou, B.; Zhang, W. A Joint Siamese Attention-Aware Network for Vehicle Object Tracking in Satellite Videos. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

46. Cui, Y.; Hou, B.; Wu, Q.; Ren, B.; Wang, S.; Jiao, L. Remote Sensing Object Tracking with Deep Reinforcement Learning Under Occlusion. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

47. Li, K.; Kong, Y.; Fu, Y. Visual Object Tracking Via Multi-Stream Deep Similarity Learning Networks. IEEE Trans. Image Process. 2020, 29, 3311–3320.

48. Xiong, F.; Zhou, J.; Qian, Y. Material Based Object Tracking in Hyperspectral Videos. IEEE Trans. Image Process. 2020, 29, 3719–3733.

49. Liu, S.; Liu, D.; Muhammad, K.; Ding, W. Effective Template Update Mechanism in Visual Tracking with Background Clutter. Neurocomputing 2021, 458, 615–625.

50. Danelljan, M.; Häger, G.; Shahbaz Khan, F.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference 2014, Nottingham, UK, 1–5 September 2014; pp. 1–11.

51. Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Computer Vision—ECCV 2014 Workshops; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Springer International Publishing: Cham, Switzerland, 2015; Volume 8926, pp. 254–265.

52. Ruan, W.; Chen, J.; Wu, Y.; Wang, J.; Liang, C.; Hu, R.; Jiang, J. Multi-Correlation Filters with Triangle-Structure Constraints for Object Tracking. IEEE Trans. Multimed. 2019, 21, 1122–1134.

53. He, Z.; Yi, S.; Cheung, Y.M.; You, X.; Tang, Y.Y. Robust Object Tracking via Key Patch Sparse Representation. IEEE Trans. Cybern. 2016, 47, 354–364.

54. Dong, X.; Shen, J.; Yu, D.; Wang, W.; Liu, J.; Huang, H. Occlusion-Aware Real-Time Object Tracking. IEEE Trans. Multimed. 2017, 19, 763–771.

55. Guo, Q.; Han, R.; Feng, W.; Chen, Z.; Wan, L. Selective Spatial Regularization by Reinforcement Learned Decision Making for Object Tracking. IEEE Trans. Image Process. 2020, 29, 2999–3013.

56. Zhao, S.; Zhang, S.; Zhang, L. Towards Occlusion Handling: Object Tracking With Background Estimation. IEEE Trans. Cybern. 2018, 48, 2086–2100.

57. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

58. Lu, T.; Wang, J.; Zhang, Y.; Wang, Z.; Jiang, J. Satellite Image Super-Resolution via Multi-Scale Residual Deep Neural Network. Remote Sens. 2019, 11, 1588.

59. Shen, J.; Tang, X.; Dong, X.; Shao, L. Visual Object Tracking by Hierarchical Attention Siamese Network. IEEE Trans. Cybern. 2020, 50, 3068–3080.

60. Funk, N. A Study of the Kalman Filter Applied to Visual Tracking; University of Alberta, Project for CMPUT; University of Alberta: Edmonton, AB, Canada, 2003; Volume 652, pp. 1–26.

61. Weng, S.K.; Kuo, C.M.; Tu, S.K. Video Object Tracking Using Adaptive Kalman Filter. J. Vis. Commun. Image Represent. 2006, 17, 1190–1208.

62. Gunjal, P.R.; Gunjal, B.R.; Shinde, H.A.; Vanam, S.M.; Aher, S.S. Moving Object Tracking Using Kalman Filter. In Proceedings of the 2018 International Conference On Advances in Communication and Computing Technology (ICACCT), Sangamner, India, 8–9 February 2018; pp. 544–547.

63. Feng, S.; Hu, K.; Fan, E.; Zhao, L.; Wu, C. Kalman Filter for Spatial-Temporal Regularized Correlation Filters. IEEE Trans. Image Process. 2021, 30, 3263–3278.

64. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

65. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A High-Quality Benchmark for Large-Scale Single Object Tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5369–5378.

66. Real, E.; Shlens, J.; Mazzocchi, S.; Pan, X.; Vanhoucke, V. YouTube-BoundingBoxes: A Large High-Precision Human-Annotated Data Set for Object Detection in Video. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7464–7473.

67. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

68. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

69. Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

70. Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Choi, J.Y. Action-Driven Visual Object Tracking with Deep Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2239–2252.

71. Babenko, B.; Yang, M.H.; Belongie, S. Robust Object Tracking with Online Multiple Instance Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1619–1632.

72. Wang, Y.; Mu, X. Dynamic Siamese Network with Adaptive Kalman Filter for Object Tracking in Complex Scenes. IEEE Access 2020, 8, 222918–222930.

73. Possegger, H.; Mauthner, T.; Bischof, H. In defense of color-based model-free tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2113–2120.

74. Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 445–461.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).