Abstract

Recently, methods that reconstruct the background with an autoencoder (AE) have been applied to hyperspectral image (HSI) anomaly detection (HSI-AD). However, the encoding mechanism of the AE may treat anomalies and background indiscriminately during reconstruction, so a small number of anomalous pixels can still end up in the reconstructed background. In addition, the redundant information in HSIs also carries over into the reconstruction errors. To address these problems, a fully convolutional AE hyperspectral anomaly detection (AD) network with attention gate (AG) connections is proposed. First, the low-dimensional feature map produced by the encoder and the fine feature map produced by the corresponding decoding stage are fed together into the AG module. The AG uses the network's context information to suppress irrelevant regions of the input image and obtain a salient feature map. Then, in the decoder, the AG features and the deep upsampled features are combined through skip connections to gradually estimate the reconstructed background image. Finally, guided filtering (GF) is applied to the reconstruction error as a post-processing step. This removes falsely detected anomalous pixels from the reconstruction error image and increases the contrast between anomalies and background.

1. Introduction

As an important branch of target detection, HSI-AD aims to find a small set of anomalous targets that contrast with the background. It does so by analyzing the spectral characteristics of the background and the anomalies when no prior information about the targets is available [1]. With advances in hyperspectral imaging technology, HSI-AD has become a frontier technology in fields such as military reconnaissance, submarine detection, and crop identification [2]. The statistical-model-based RX algorithm proposed by Reed and Yu [3] still attracts attention because of its simplicity and efficiency. It assumes that the HSI background follows a multivariate normal distribution. It then measures the degree of anomaly of the pixel under test by its Mahalanobis distance to the background. Real HSI backgrounds are often complex and do not follow a normal distribution, and anomalous pixels contaminate the covariance estimate. For these reasons, many improved RX algorithms have been proposed. The cluster kernel RX (CKRX) algorithm [4] first clusters the background pixels and then represents them by the cluster centers, which reduces the computational burden. The improved RX algorithm with a CNN framework [5] uses a CNN to estimate the similarity between the pixel under test and the background or target pixels, so as to suppress prominent anomalies in the background. The similarity score is then fed into the improved RX algorithm to obtain the detection result.
The superpixel-based dual-window RX (SPDWRX) algorithm [6] uses superpixel segmentation to determine the local detection window adaptively. It applies the same background suppression to all pixels within a superpixel, which improves detection efficiency to some extent.
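The following minimal NumPy sketch illustrates the RX statistic described above in its global variant, where the background mean and covariance are estimated from all pixels of the image. The function name `global_rx`, the small ridge term added to the covariance, and the synthetic test cube are illustrative assumptions, not details taken from [3].

```python
import numpy as np


def global_rx(X, ridge=1e-6):
    """Global RX anomaly scores for an HSI cube X of shape (H, W, B).

    Each pixel's score is its squared Mahalanobis distance to the
    background mean, with mean and covariance estimated from all pixels.
    """
    H, W, B = X.shape
    pixels = X.reshape(-1, B).astype(float)
    mu = pixels.mean(axis=0)                     # background mean vector
    centered = pixels - mu
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    cov += ridge * np.eye(B)                     # ridge keeps the inverse well conditioned
    cov_inv = np.linalg.inv(cov)
    # (x - mu)^T C^{-1} (x - mu) for every pixel in one call
    scores = np.einsum("nb,bc,nc->n", centered, cov_inv, centered)
    return scores.reshape(H, W)


rng = np.random.default_rng(0)
cube = rng.normal(size=(20, 20, 5))
cube[10, 10] += 8.0                              # implant one spectral outlier
rx_map = global_rx(cube)
print(np.unravel_index(rx_map.argmax(), rx_map.shape))
```

Local variants estimate the same statistics inside a window around each pixel instead. The dual-window and superpixel methods mentioned above refine this local idea.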

HSI-AD algorithms based on sparse representation (SR) and collaborative representation (CR) have also been proposed in recent years. SR-based methods first construct a dictionary. They assume that every background pixel in the scene can be represented by as few dictionary atoms as possible, whereas anomalous pixels cannot. Examples include the low-rank representation and adaptive weighting algorithm [7], the enhanced tensor RPCA-based Mahalanobis distance method [8], and the low-rank and sparse matrix decomposition-based dictionary reconstruction and anomaly extraction framework [9]. However, SR algorithms can hardly guarantee that the features covered by the dictionary are free of contamination by anomalous pixels. In addition, they usually consider only the spectral features of the pixel under test and ignore spatial features. CR-based algorithms obtain dictionary atoms from a pair of windows (a dual window) constructed around the pixel under test. Like SR, they usually represent the spectrum of the pixel under test as a linear combination of background atom spectra. Representative algorithms include the self-weighted collaborative representation algorithm [10], the relaxed collaborative representation (RCRD) algorithm [11], and the collaborative representation with background purification and saliency weight (CRDBPSW) algorithm [12].
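The short NumPy sketch below makes the collaborative-representation idea concrete with a CRD-style detector, in the form commonly attributed to Li and Du. Each pixel y is approximated by the background atoms $X_s$ taken from a dual-window ring, with a distance-weighted Tikhonov penalty. This gives $\alpha = (X_s^T X_s + \lambda \Gamma_y^T \Gamma_y)^{-1} X_s^T y$ with $\Gamma_y = \mathrm{diag}(\lVert y - x_1 \rVert, \dots, \lVert y - x_s \rVert)$, and the residual $\lVert y - X_s\alpha \rVert$ serves as the anomaly score. The window sizes, $\lambda$, the reflect padding, and the tiny extra ridge term are assumptions chosen for illustration.

```python
import numpy as np


def crd_score(y, Xs, lam=1e-2):
    """CRD-style residual for one pixel.

    y  : (B,) spectrum of the pixel under test
    Xs : (B, s) background atoms from the dual-window ring
    """
    gamma = np.linalg.norm(Xs - y[:, None], axis=0)   # distance of each atom to y
    A = Xs.T @ Xs + lam * np.diag(gamma ** 2)         # X_s^T X_s + lam * Gamma^T Gamma
    A += 1e-6 * np.eye(Xs.shape[1])                   # tiny ridge, only for numerical safety
    alpha = np.linalg.solve(A, Xs.T @ y)              # closed-form representation weights
    return float(np.linalg.norm(y - Xs @ alpha))      # residual = anomaly score


def crd_map(X, w_in=3, w_out=7, lam=1e-2):
    """Apply crd_score to every pixel of an (H, W, B) cube."""
    H, W, B = X.shape
    ro, ri = w_out // 2, w_in // 2
    Xp = np.pad(X, ((ro, ro), (ro, ro), (0, 0)), mode="reflect")
    ring = np.ones((w_out, w_out), dtype=bool)
    ring[ro - ri:ro + ri + 1, ro - ri:ro + ri + 1] = False  # drop the inner window
    scores = np.zeros((H, W))
    for i in range(H):
        for j in range(W):
            window = Xp[i:i + w_out, j:j + w_out, :]
            Xs = window[ring].T                       # (B, s) background dictionary
            scores[i, j] = crd_score(X[i, j], Xs, lam)
    return scores


rng = np.random.default_rng(1)
cube = 1.0 + 0.05 * rng.normal(size=(15, 15, 6))
cube[7, 7] += np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])  # spectral-shape anomaly
crd = crd_map(cube)
```

Atoms that are spectrally far from y are penalized more strongly. A background pixel is therefore rebuilt from similar neighbors with a small residual, while an anomaly cannot be reproduced cheaply and keeps a large residual.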

As a frontier field in recent years, deep learning has offered viable solutions to many problems in pattern recognition [13,14,15]. Deep learning methods are usually divided into supervised and unsupervised ones, depending on whether labeled training data are required to build the model. HSI-AD is an unsupervised detection task that requires no prior knowledge of the targets, so the autoencoder (AE) [16], a commonly used unsupervised feature extraction model, is well suited to it. The basic idea is that most pixels in an HSI belong to the background, whereas anomalous pixels account for only a small fraction. When an HSI is fed to an AE, the model can therefore be assumed to learn mainly background features. The model reconstructs the background better than it reconstructs anomalies, so anomalies have larger reconstruction errors than the background. Each pixel can therefore be classified as anomalous or not according to its reconstruction error. Since Bati et al. proposed the AE-based AD algorithm [17], numerous enhanced algorithms have been put forth. Zhao C. et al. proposed an AD algorithm based on stacked denoising autoencoders (SDA) [18]. It processes the original image with principal component analysis (PCA) and whitening and then extracts latent features with the SDA. W. Xie et al. proposed an algorithm based on spectral constraint adversarial autoencoders (SC_AAE) [19]. It learns a latent representation of the HSI by adding spectral constraints to the adversarial autoencoder (AAE) network and finally uses a two-layer structure for anomaly detection. X. Lu et al. proposed the manifold-constrained AE (MC-AE) algorithm [20].
It adds manifold-learning constraints to preserve the intrinsic structure of hyperspectral data. It then combines the global reconstruction error of MC-AE with a learned local reconstruction error to better detect anomalies. These AE-based algorithms improve detection performance to some extent. However, during actual AE training, anomalous pixels and background pixels are treated indiscriminately in the reconstruction process, so anomalous pixels are also reconstructed well. Even though anomalous pixels occupy only a small proportion of the image, this significantly degrades anomaly detection performance.

This paper proposes an HSI-AD network based on an AG-connected fully convolutional AE. The HSI is regarded as consisting of two types of pixels, background and anomaly, and the model is built on the principle of separating the two. AE training and reconstruction aim to remove anomalies and purify the background, which increases the reconstruction error of the anomalies. In real training, the background and anomalies tend to be reconstructed indiscriminately. To prevent this, an AG mechanism is introduced at the skip connections of the fully convolutional AE to assign higher weights to background pixels. In addition, the reconstruction capability of the AE is unstable, so the reconstruction tends to produce irregular background pixels that appear as false anomalies in the results. To address this, the reconstruction error image is processed with GF to obtain the final detection map.

The main contributions of this paper are summarized as follows:

- (1)

- A fully convolutional AE network reconstructs the background at the same dimensionality and spatial scale as the original image, avoiding loss of spectral information.

- (2)

- The AG module is introduced into the AE skip connections to assign higher weights to background reconstruction. The background is therefore rebuilt better than the anomalies, which increases the separability of the two types of pixels.

- (3)

- GF-based processing of the error map eliminates the irregular background pixels generated during image reconstruction and reduces the false alarms caused by false anomalies.

2. Related Works

2.1. Autoencoders

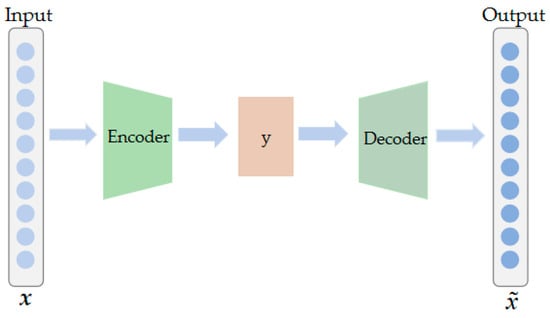

As a typical unsupervised deep neural network [21], the AE network consists of two parts, an encoder and a decoder. Its structure is shown schematically in Figure 1.

Figure 1.

AE network.

The encoder maps the input HSI to low-dimensional data, and the decoder restores it to the original data as closely as possible. The network parameters are the weights M and the biases b. The encoding process from the input layer x to the hidden layer y is given by (1), where $f_e(\cdot)$ denotes the activation function of the encoder:

$$y = f_e(Mx + b) \tag{1}$$

The decoding process from the hidden layer y to the output layer $\hat{x}$ is given by (2). Here $f_d(\cdot)$ denotes the activation function of the decoder, and $M'$ and $b'$ are the decoder weight and bias, corresponding to M and b:

$$\hat{x} = f_d(M'y + b') \tag{2}$$

In the training stage, the reconstruction error is used as the loss function. Gradient descent with back-propagation updates the weights and biases until the iteration termination condition is satisfied. The loss function is given by (3), where L is the reconstruction error over the N training samples:

$$L = \frac{1}{N}\sum_{i=1}^{N} \left\lVert x_i - \hat{x}_i \right\rVert_2^2 \tag{3}$$
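The sketch below illustrates Eqs. (1)–(3) with a one-hidden-layer AE in NumPy. It assumes sigmoid activations in both the encoder and the decoder and trains with full-batch gradient descent, using back-propagation derived by hand, on synthetic linearly mixed spectra. `M_dec` and `b_dec` stand for the decoder weight and bias. The layer sizes, learning rate, and data are hypothetical. The per-pixel reconstruction error computed at the end is the quantity that AE-based detectors use as an anomaly score.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def ae_forward(x, M, b, M_dec, b_dec):
    """x: (N, B) spectra -> hidden y: (N, k) -> reconstruction x_hat: (N, B)."""
    y = sigmoid(x @ M.T + b)              # encoder, cf. Eq. (1)
    x_hat = sigmoid(y @ M_dec.T + b_dec)  # decoder, cf. Eq. (2)
    return y, x_hat


def train_step(x, params, lr=0.5):
    """One full-batch gradient-descent step on the loss of Eq. (3)."""
    M, b, M_dec, b_dec = params
    n = x.shape[0]
    y, x_hat = ae_forward(x, M, b, M_dec, b_dec)
    loss = float(np.sum((x - x_hat) ** 2) / n)
    # Back-propagation through the two sigmoid layers.
    d_z2 = (2.0 / n) * (x_hat - x) * x_hat * (1.0 - x_hat)  # (N, B)
    d_M_dec, d_b_dec = d_z2.T @ y, d_z2.sum(axis=0)
    d_z1 = (d_z2 @ M_dec) * y * (1.0 - y)                    # (N, k)
    d_M, d_b = d_z1.T @ x, d_z1.sum(axis=0)
    new_params = (M - lr * d_M, b - lr * d_b,
                  M_dec - lr * d_M_dec, b_dec - lr * d_b_dec)
    return new_params, loss


# Synthetic, linearly mixed "spectra" (hypothetical data).
rng = np.random.default_rng(0)
n_pixels, n_bands, n_hidden = 200, 10, 3
endmembers = rng.uniform(0.0, 1.0, size=(3, n_bands))
abundances = rng.dirichlet(np.ones(3), size=n_pixels)
x = abundances @ endmembers
params = (0.1 * rng.normal(size=(n_hidden, n_bands)), np.zeros(n_hidden),
          0.1 * rng.normal(size=(n_bands, n_hidden)), np.zeros(n_bands))
losses = []
for _ in range(300):
    params, loss = train_step(x, params)
    losses.append(loss)

_, x_hat = ae_forward(x, *params)
pixel_error = np.sum((x - x_hat) ** 2, axis=1)  # per-pixel reconstruction error
```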

2.2. Guided Filter

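Guided filtering (GF), an efficient edge-preserving filter [22], has been widely used in remote sensing for image fusion [23], feature enhancement [24], and image classification [25]. It transfers structural information from a guide image to the filtered output and is computationally efficient. Its principle is as follows. Within a local window $\omega_j$ centered on pixel j, the output image q is assumed to be a linear transformation of the guide image g, as shown in (4):

$$q_i = a_j g_i + b_j, \quad \forall i \in \omega_j \tag{4}$$

where $|\omega|$ is the number of pixels in the window $\omega_j$, and $a_j$ and $b_j$ are linear coefficients that are constant within the window. They are calculated by minimizing the difference between the input image p and the output image q within the window, as shown in (5):

$$a_j = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_j} g_i p_i - \mu_j \bar{p}_j}{\sigma_j^2 + \varepsilon}, \qquad b_j = \bar{p}_j - a_j \mu_j \tag{5}$$

where $\mu_j$ and $\sigma_j^2$ are the mean and variance of g in $\omega_j$, and $\bar{p}_j$ is the mean of p in $\omega_j$. The window parameter r and the regularization parameter $\varepsilon$ are set manually.

Further, let

$$\bar{a}_i = \frac{1}{|\omega|}\sum_{j \in \omega_i} a_j, \qquad \bar{b}_i = \frac{1}{|\omega|}\sum_{j \in \omega_i} b_j \tag{6}$$

where $\bar{a}_i$ and $\bar{b}_i$ are, respectively, the averages of $a_j$ and $b_j$ over all windows covering pixel i. Then, the final image after GF is:

$$q_i = \bar{a}_i g_i + \bar{b}_i \tag{7}$$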
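The guided filter equations translate directly into box-filter operations. The NumPy sketch below follows the standard formulation of He et al. It computes window means with an integral image and, at image borders, averages only over the pixels actually inside each window. The function names and the test image are assumptions for illustration.

```python
import numpy as np


def box_mean(img, r):
    """Mean over the (2r+1) x (2r+1) window around each pixel, clipped at borders."""
    H, W = img.shape
    S = np.zeros((H + 1, W + 1))
    S[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)   # integral image
    i0 = np.clip(np.arange(H) - r, 0, H)
    i1 = np.clip(np.arange(H) + r + 1, 0, H)
    j0 = np.clip(np.arange(W) - r, 0, W)
    j1 = np.clip(np.arange(W) + r + 1, 0, W)
    total = S[i1][:, j1] - S[i0][:, j1] - S[i1][:, j0] + S[i0][:, j0]
    count = (i1 - i0)[:, None] * (j1 - j0)[None, :]
    return total / count


def guided_filter(g, p, r=1, eps=0.01):
    """Filter input p under guidance of g with window radius r and regularizer eps."""
    g = g.astype(float)
    p = p.astype(float)
    mean_g, mean_p = box_mean(g, r), box_mean(p, r)
    cov_gp = box_mean(g * p, r) - mean_g * mean_p
    var_g = box_mean(g * g, r) - mean_g ** 2
    a = cov_gp / (var_g + eps)                      # a_j, cf. Eq. (5)
    b = mean_p - a * mean_g                         # b_j, cf. Eq. (5)
    a_bar, b_bar = box_mean(a, r), box_mean(b, r)   # window averages, cf. Eq. (6)
    return a_bar * g + b_bar                        # final output q_i


rng = np.random.default_rng(0)
p = rng.uniform(size=(32, 32))
q = guided_filter(p, p, r=2, eps=1e-8)       # guide = input, tiny eps: edges kept
smooth = guided_filter(p, p, r=2, eps=1.0)   # large eps: strong smoothing
```

In the post-processing of Section 3.2, the same routine would be called with the guide image D as `g` and the reconstruction error map E as `p`. A larger `eps` trades edge preservation for stronger smoothing.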

2.3. Attention Gates

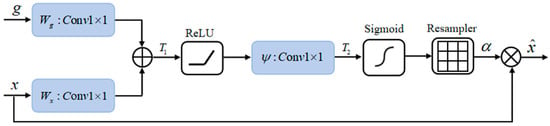

The AG module consists of several functions [26], which are defined as follows:

$$q_{att} = \psi^{T}\sigma_1\left(W_x^{T}x + W_g^{T}g + b_g\right) + b_\psi$$

$$\alpha = \sigma_2\left(q_{att}\right)$$

where x and g are the input feature maps; $\sigma_1$ and $\sigma_2$ are the ReLU and Sigmoid activation functions, respectively; $W_x$, $W_g$, and $\psi$ are the parameter matrices of the linear transforms, which are implemented as convolutions; $b_g$ and $b_\psi$ are the bias terms of the convolutions; and $\alpha$ is the output attention coefficient. The specific architecture of the AG is shown in Figure 2.

Figure 2.

AG model diagram [26].

The AG does not require explicit feature localization. It automatically responds to salient feature regions while suppressing the response of irrelevant regions, so that the output feature map focuses on the regions of interest.
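The attention-gate equations can be sketched with 1×1 convolutions written as per-pixel matrix products. The sketch follows the additive attention gate of Oktay et al., in which the attention map α multiplies the skip features x. Several details are illustrative choices rather than the configuration used in this paper: g is assumed to be already resampled to the spatial size of x, and the channel sizes and random weights are arbitrary.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1x1(feat, weight, bias=None):
    """1x1 convolution of an (H, W, C_in) map with weight of shape (C_in, C_out)."""
    out = feat @ weight
    return out if bias is None else out + bias


def attention_gate(x, g, Wx, Wg, psi, b_g, b_psi):
    """Additive attention gate.

    x : (H, W, Cx) skip features from the encoder
    g : (H, W, Cg) gating features, assumed already resampled to x's size
    Returns the gated features alpha * x and the attention map alpha.
    """
    q = relu(conv1x1(x, Wx) + conv1x1(g, Wg, b_g))   # sigma_1(Wx^T x + Wg^T g + b_g)
    q_att = conv1x1(q, psi, b_psi)                    # psi^T (.) + b_psi, shape (H, W, 1)
    alpha = sigmoid(q_att)                            # sigma_2, values in (0, 1)
    return alpha * x, alpha[..., 0]


rng = np.random.default_rng(0)
H, W, Cx, Cg, Cint = 8, 8, 16, 16, 8
x = rng.normal(size=(H, W, Cx))
g = rng.normal(size=(H, W, Cg))
Wx = 0.1 * rng.normal(size=(Cx, Cint))
Wg = 0.1 * rng.normal(size=(Cg, Cint))
psi = 0.1 * rng.normal(size=(Cint, 1))
gated, alpha = attention_gate(x, g, Wx, Wg, psi, np.zeros(Cint), np.zeros(1))
```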

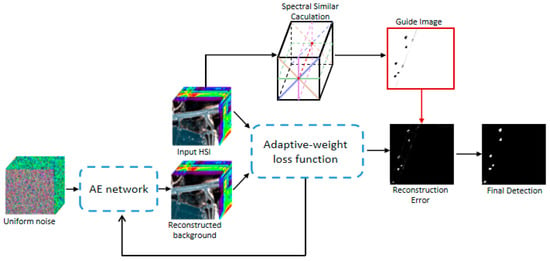

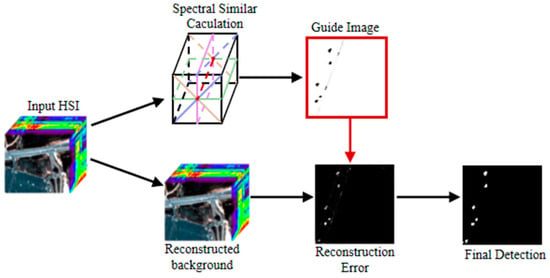

3. Methodology

This paper proposes FCAE-AD, an HSI-AD architecture based on a fully convolutional AE with AG skip connections. The overall framework, shown in Figure 3, consists of two steps. First, at the skip connections of the fully convolutional AE, the AG combines the coarse feature maps of the encoder with the fine feature maps of the decoder to reconstruct the HSI background. Next, GF is applied to the initial reconstruction error map to obtain the final detection map. Sections 3.1 and 3.2 describe these two steps, and Section 3.3 summarizes the complete algorithm. To better explain the proposed method, a summary of abbreviations is given in Table 1.

Figure 3.

Overall framework of FCAE-AD.

Table 1.

Parameters of different AD algorithms.

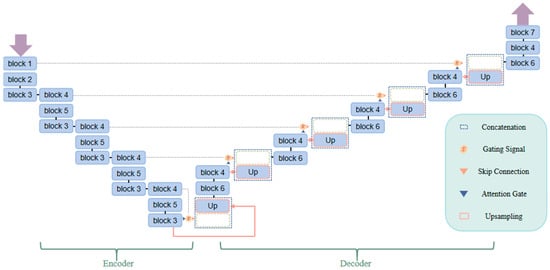

3.1. Fully Convolutional AE Network Connected by AG

In HSI-AD, an HSI can be regarded as composed of background and anomalies, so anomalies can be detected by separating out the background. As a result, the accuracy of anomaly detection depends directly on the quality of background separation. To better reconstruct the background, this paper adopts a fully convolutional AE network [27], in which the background is obtained by network reconstruction and the reconstruction error represents the anomaly. A grid-based AG module is added to the network's skip connections to give background pixels more weight. This inhibits the reconstruction of anomalies and improves background reconstruction. The proposed AG-connected fully convolutional AE network is shown in Figure 4.

Figure 4.

AE network framework.

Consider an HSI $X \in \mathbb{R}^{H \times W \times B}$, where H, W, and B denote the height, width, and number of bands of X, respectively. The input to the network is a uniform noise map of the same size as X, whose pixel values are drawn uniformly from a fixed range.

- (1)

- Encoder: It contains 15 convolutional modules, each consisting of a convolution layer, a batch normalization (BatchNorm) layer, and a leaky rectified linear unit (LeakyReLU) activation function. Block 1 and Block 4 use convolutional layers with a stride of 1. The feature maps they produce are linked through the AG to the feature maps produced by the corresponding decoder layers. Block 2 and Block 5 use convolutional layers with a stride of 2, mainly for downsampling. Block 3, which follows Block 2 or Block 5, uses a convolutional layer with a stride of 1. The number of feature channels is kept at 128 throughout this stage.

- (2)

- Converter: It contains 5 AGs with skip connections. The AG model is shown in Figure 2, and it is implemented as follows:
  - (a) Convolutions are applied to the input feature maps g and x, and the two results are added to obtain a new feature map that enhances the feature regions.
  - (b) This feature map is passed through the ReLU activation function and then a convolution to produce the feature map $q_{att}$.
  - (c) The sigmoid activation function is applied to $q_{att}$ to obtain the weight map $\alpha$ (with values in [0, 1]), which tends to 1 in background feature regions and to 0 in anomalous feature regions.
  - (d) $\alpha$ is resampled and added to the feature map x to obtain the gated feature map, which enhances background features and weakens anomalous features [28].

  The coarse feature map generated by Block 1 or Block 4 and the fine feature map extracted at multiple scales are resampled and fed into the AG. The AG extracts and fuses their complementary information, and the result is finally merged into the next Block 6 through the skip connection.

- (3)

- Decoder: It contains 11 convolution modules, each consisting of a convolution layer, a BatchNorm layer, and a LeakyReLU layer. The last module also contains a sigmoid activation function. Block 6 uses a convolution layer with a stride of 1. Its input is a 256-channel feature map that combines the AG feature map with the encoding-stage feature map, and its output is a 128-channel feature map. Block 4, which follows Block 6, uses a convolution layer with a stride of 1 and keeps 128 channels. Block 7 uses a convolution layer with a stride of 1 to convert the 128-channel feature map into a map with as many channels as the HSI has bands.

The reconstructed map, with the same size as the original input HSI, is the network’s output.
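A hypothetical shape trace helps show where the 256-channel input of Block 6 comes from. The strides and the 128/256 channel counts come from the description above. The remaining details are assumptions: five encoder levels (inferred from the five AGs), 3 × 3 kernels with padding 1, and resizing back to each skip map's spatial size.

```python
def conv_out(n, k=3, s=1, p=1):
    """Output length along one spatial axis for kernel k, stride s, padding p."""
    return (n + 2 * p - k) // s + 1


def trace_fcae(height, width, bands, levels=5, channels=128):
    """Hypothetical (name, H, W, C) trace through the encoder-decoder."""
    trace = [("input noise", height, width, bands)]
    h, w = height, width
    skips = []
    for lvl in range(levels):
        skips.append((h, w))                              # Block 1/4: stride 1, feeds an AG
        trace.append((f"enc{lvl} skip", h, w, channels))
        h, w = conv_out(h, s=2), conv_out(w, s=2)         # Block 2/5: stride-2 downsampling
        trace.append((f"enc{lvl} down", h, w, channels))  # Block 3 keeps this size
    for lvl in reversed(range(levels)):
        h, w = skips[lvl]                                 # resize to the skip size (assumed)
        trace.append((f"dec{lvl} concat", h, w, 2 * channels))  # 128 AG + 128 other = 256
        trace.append((f"dec{lvl} out", h, w, channels))         # Block 6, then Block 4
    trace.append(("reconstruction", h, w, bands))         # Block 7 maps back to B bands
    return trace


trace = trace_fcae(100, 100, 191)   # Gulfport Airport-sized input
for name, h, w, c in trace:
    print(f"{name:15s} {h:4d} x {w:<4d} {c:4d} channels")
```

With a 100 × 100 input, the deepest feature map is 4 × 4. Stride-2 convolutions round odd sizes up, so upsampled maps generally need resizing or cropping to match the skip features. For this reason, the sketch resets the size explicitly at each decoder level.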

In actual training, once the number of iterations exceeds a certain point, the network overfits and begins to reconstruct anomalous pixels as well. To counter this, an adaptive weight is added to the loss function. The reconstruction error is used to construct a weight map, which is then incorporated into a weighted loss used to train the model [27]. The specific calculation steps are as follows:

where $x_i$ and $w_i$ denote the i-th pixel vectors of the original image X and the reconstructed image W, respectively. $e_i$ is the reconstruction error between them, and the errors of all pixels form the reconstruction error map E. According to (12), each reconstruction error is subtracted from the maximum reconstruction error to construct the weight map, from which the weighted loss (14) is obtained.
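The weight-map construction can be sketched as follows. Only the subtraction from the maximum error is taken from the text. The squared Euclidean error, the rescaling to [0, 1], and the weighted mean used as the loss are assumptions standing in for Eqs. (11)–(14), and `adaptive_weighted_loss` is a hypothetical name.

```python
import numpy as np


def adaptive_weighted_loss(X, W_rec, eps=1e-12):
    """X, W_rec: (H, W, B) original HSI and reconstructed background."""
    E = np.sum((X - W_rec) ** 2, axis=-1)   # per-pixel error map (assumed squared L2)
    weight = E.max() - E                    # subtract each error from the maximum
    weight = weight / (weight.max() + eps)  # assumed rescaling to [0, 1]
    loss = float(np.mean(weight * E))       # assumed weighted-mean loss
    return loss, weight, E


rng = np.random.default_rng(0)
X = rng.uniform(size=(10, 10, 4))
W_rec = X + 0.01 * rng.normal(size=X.shape)
W_rec[5, 5] += 1.0                          # one poorly reconstructed (anomaly-like) pixel
loss, weight, E = adaptive_weighted_loss(X, W_rec)
```

In a training loop, the weight map would typically be recomputed from the current reconstruction and treated as a constant during back-propagation. Pixels with large errors, which are likely anomalies, then contribute little to the gradient.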

Finally, the weighted loss is used in the iterative training of the network. After each iteration, the loss value is back-propagated to update the network parameters. These steps are repeated until the average change of the loss over the last 50 training iterations falls below a preset threshold.
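The stopping rule can be expressed as a small helper. The window of 50 iterations comes from the text. The tolerance `tol` is a placeholder because the threshold value is not given here, and the decreasing loss sequence is synthetic.

```python
import numpy as np


def should_stop(loss_history, window=50, tol=1e-4):
    """True once the mean absolute change of the loss over the last
    `window` iterations is below `tol` (tol is a placeholder value)."""
    if len(loss_history) <= window:
        return False                        # not enough iterations yet
    recent = np.asarray(loss_history[-(window + 1):], dtype=float)
    return bool(np.mean(np.abs(np.diff(recent))) < tol)


history = []
for it in range(10000):
    history.append(1.0 / (1.0 + it))        # stand-in for a decreasing training loss
    if should_stop(history):
        break
```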

Algorithm 1 details how the proposed FCAE-AE network obtains the reconstructed background image.

| Algorithm 1 FCAE-AE network. |
|---|
| Input: HSI $X \in \mathbb{R}^{H \times W \times B}$. |
| Initialization: random noise map of the same size as X; |
| Do |
| 1: Network forward pass; |
| 2: Compute the difference map between X and the reconstructed background map W, and construct the weight map by Formulas (11)–(13); |
| 3: Compute the adaptive weighted loss L by Formula (14); |
| 4: Network backward pass; |
| Until the average change of the weighted loss over the last 50 iterations is below the threshold; |
| Output: Reconstruction error E. |

3.2. Post-Processing Optimization Based on GF

The reconstruction process usually generates irregular background pixels. To reduce the interference of false anomalies with the detection results, this paper uses GF to process the reconstruction error map E obtained in Section 3.1.

3.2.1. Guide Image Construction

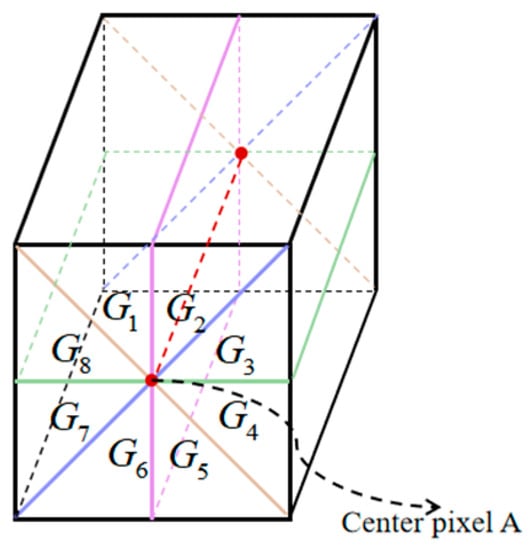

In this paper, the guide image D is constructed based on the single-window model [29]. The pixel value of D at each location is the similarity score between the pixel under test and the sub-volumes around it. First, X is divided into eight sub-volumes around the pixel under test, as shown in Figure 5; the central pixel A is the pixel under test. The difference between each sub-volume and A is calculated separately, as shown in (15).

Figure 5.

Eight-sub-volume segmentation method.

In (15), the difference is computed band by band between the value of the pixel under test A in each band of X and the corresponding band of the average vector of each sub-volume. The similarity score between each sub-volume and A is then obtained from this difference, as shown in (16).

The highest of the eight similarity scores is selected as the pixel value of the guide image D, as shown in (17), where max(·) denotes the maximum function.

For this single-window model, four cases are possible:

- (a)

- Both the pixel under test and the window pixels are background. The similarity scores between the pixel under test A and all sub-volumes are high.

- (b)

- The pixel under test is background, but the window contains anomalies. The similarity scores between A and the sub-volumes containing anomalies are lower than those of the sub-volumes without anomalous pixels.

- (c)

- The pixel under test is anomalous, but the window pixels are background. All similarity scores are very low, so A is easily identified as anomalous.

- (d)

- The pixel under test is anomalous, and the window also contains anomalous pixels. The similarity scores between A and the sub-volumes containing anomalies are higher than those of the sub-volumes without anomalies. However, anomalies occupy only a small fraction of a real HSI, so this case rarely occurs.
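The NumPy sketch below illustrates the single-window guide image. The exact forms of Eqs. (15)–(17) and the layout of Figure 5 are not reproduced here, so it relies on several explicit assumptions:

- The c × c window around each pixel is split into a 3 × 3 grid of blocks, and the center block, which contains A, is dropped to give the eight sub-volumes.
- The difference is the Euclidean distance between A and each sub-volume mean.
- The similarity is exp(−difference).

Only the rule of taking the maximum score as the value of D follows the text directly, and `guide_image` is a hypothetical name.

```python
import numpy as np


def guide_image(X, c=9):
    """Hypothetical single-window guide image D for an (H, W, B) cube."""
    H, W, B = X.shape
    h = c // 2
    Xp = np.pad(X, ((h, h), (h, h), (0, 0)), mode="reflect")
    parts = np.array_split(np.arange(c), 3)          # 3 x 3 grid of blocks over the window
    D = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            window = Xp[i:i + c, j:j + c, :]         # c x c window centred on pixel A
            a = X[i, j, :]
            scores = []
            for bi, rows in enumerate(parts):
                for bj, cols in enumerate(parts):
                    if bi == 1 and bj == 1:
                        continue                     # skip the centre block that holds A
                    mean_vec = window[np.ix_(rows, cols)].reshape(-1, B).mean(axis=0)
                    diff = np.linalg.norm(a - mean_vec)   # assumed form of the difference
                    scores.append(np.exp(-diff))          # assumed similarity in (0, 1]
            D[i, j] = max(scores)                    # keep the highest of the 8 scores
    return D


cube = np.ones((15, 15, 5))
cube[7, 7] = 5.0                                     # single anomalous pixel
D = guide_image(cube, c=9)
```

The test cube reproduces cases (a)–(c). A clean background pixel and a background pixel with the anomaly in its window both receive the maximum score of 1, while the anomalous pixel receives a very low score.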

3.2.2. Guided Filtering Process

The reconstruction error map E is taken as the input image of GF, and the final detection map is obtained under the guidance of the guide image D constructed above. As shown in (18), the value of the detection map at pixel i is $\bar{a}_i D_i + \bar{b}_i$. The process is illustrated in Figure 6.

Here, $\bar{a}_i$ and $\bar{b}_i$ are the averages of the linear coefficients over all overlapping windows covering pixel i. They are computed as in (6), with E as the input image and D as the guide image.

Figure 6.

Guided filtering process diagram.

3.3. Algorithm Implementation

Based on the above analysis, a fully convolutional HSI-AD algorithm with AG connections is proposed; its steps are given in Algorithm 2.

| Algorithm 2 Overall framework. |
|---|
| Input: HSI $X \in \mathbb{R}^{H \times W \times B}$. |
| Initialization: random noise map; window size c; guide filter size r; guide filter regularization parameter $\varepsilon$. |
| 1: Input the random noise image into the network and obtain the background reconstruction image W by Algorithm 1, using X as the reconstruction target; |
| 2: Obtain the reconstruction error E from X and W; |
| 3: Divide X into eight sub-volumes with the single-window model and calculate the difference between each sub-volume and the pixel under test A by Formula (15); |
| 4: Calculate the similarity score between each sub-volume and A by Formula (16); |
| 5: According to Formula (17), select the highest score among the eight sub-volumes as the pixel value of the guide image D; |
| 6: Obtain the final detection image by guided filtering of the residual map E with the guide image D; |
| Output: Anomaly detection map. |

4. Analysis and Experiment

In this paper, the experimental environment is the Windows 10 operating system, the experimental computer configuration is an Intel Core i7-7700 CPU and 16 GB RAM, and the experimental platform is Python 3.7.

4.1. Experimental Datasets

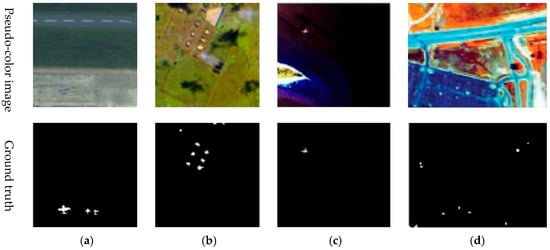

Four real public datasets are used in the experiments: the Gulfport Airport, Texas Coast, Cat Island, and HYDICE Urban datasets [30]. The details are as follows:

- (1)

- Gulfport Airport: The dataset was acquired by the AVIRIS sensor over Gulfport, Mississippi, USA, with a spatial resolution of 3.5 m. The image size is 100 × 100 pixels with 191 spectral bands. The anomalies to be detected are the aircraft in the image. The sample image and the ground truth map for this dataset are displayed in Figure 7a.

Figure 7. Pseudo-color images and Ground truth images of the experimental datasets (a) Gulfport Airport; (b) Texas Coast; (c) Cat Island; (d) HYDICE Urban.

- (2)

- Texas Coast: The dataset was acquired by the AVIRIS sensor over the Texas coast. It has a size of 100 × 100 pixels, a spatial resolution of 17.2 m, and 204 bands. Residential houses of various shapes are labeled as anomalies, comprising 155 anomalous pixels. The sample image and the ground truth map for this dataset are displayed in Figure 7b.

- (3)

- Cat Island: The dataset was acquired by the AVIRIS sensor over the beaches and sea areas surrounding Cat Island and contains 188 spectral bands in an image of size 150 × 150. It includes 19 anomalous pixels belonging to airplanes in the scene. The sample image and the ground truth map for this dataset are displayed in Figure 7c.

- (4)

- HYDICE Urban: The dataset was acquired by the HYDICE sensor over an urban area in California, USA. The test image used in the experiments is 80 × 100 pixels. After the noisy bands are removed, 175 spectral bands remain, and the spatial resolution is 3 m. It contains 17 anomalous pixels, including cars and roofs. The example image and ground truth map for this dataset are shown in Figure 7d.

4.2. Parameter Setting

Several classical AD algorithms are selected for comparison in this paper: the RX algorithm [3], the LRX algorithm [4], the Auto-AD algorithm [27], the guided autoencoder algorithm (GAED) [29], the low-rank representation incorporating a spatial constraint (MLW_LRRSTO) algorithm [31], the feature extraction and background purification for anomaly detection (FEBPAD) algorithm [32], and the CRD algorithm [33].

To obtain the best possible detection result from each algorithm, its parameters are tuned accordingly. Table 1 shows the parameter settings of the relevant algorithms.

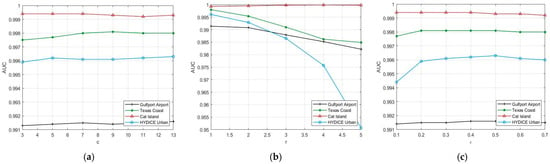

The FCAE-AD algorithm has three parameters: the window size c, the guided filter size r, and the guided filter regularization parameter $\varepsilon$. The effects of different parameter settings on the FCAE-AD results are evaluated in terms of AUC on the four datasets described above.

When one parameter is analyzed, the others are kept fixed at their default values: c = 9 and r = 1, with $\varepsilon$ likewise fixed at its default. The resulting AUC curves with respect to each parameter are shown in Figure 8.

Figure 8.

Influence of different parameters on the AD results of the FCAE-AD algorithm. (a) The window size c; (b) the guide filter size r; (c) the guide filter regularization parameter $\varepsilon$.

- (1)

- Window size c: Figure 8a plots the AUC values against c. For Gulfport Airport, the AUC fluctuates and reaches its peak at c = 13, so c is set to 13. For Texas Coast, the AUC first increases and then decreases, peaking at c = 9, so c is set to 9. For Cat Island, the AUC remains constant for window sizes from 3 to 9 and decreases beyond c = 9, so c is set to 9. For HYDICE Urban, the AUC keeps increasing, so c is set to 13. In summary, c is set to 13, 9, 9, and 13 for the four datasets, in the order given in Section 4.1.

- (2)

- Guide filter size r: Figure 8b plots the AUC values against r. For Cat Island, the AUC generally increases from r = 1 up to r = 4 and then decreases. For Gulfport Airport, Texas Coast, and HYDICE Urban, the AUC decreases from r = 1 onward. Therefore, r is set to 4 for Cat Island and to 1 for the other datasets.

- (3)

- Guide filter regularization parameter $\varepsilon$: Figure 8c plots the AUC values against $\varepsilon$. For Gulfport Airport and HYDICE Urban, the AUC generally decreases from to , so $\varepsilon$ is set to 0.5 for both datasets. For Texas Coast, the AUC first increases up to , remains stable up to , and then decreases, so $\varepsilon$ is set to 0.2. For Cat Island, the AUC first stays the same at and then decreases, so $\varepsilon$ is set to 0.4. In summary, $\varepsilon$ is set to 0.5, 0.2, 0.4, and 0.5 for the four datasets, in the order given in Section 4.1.

4.3. Analysis and Comparison of Detection Results

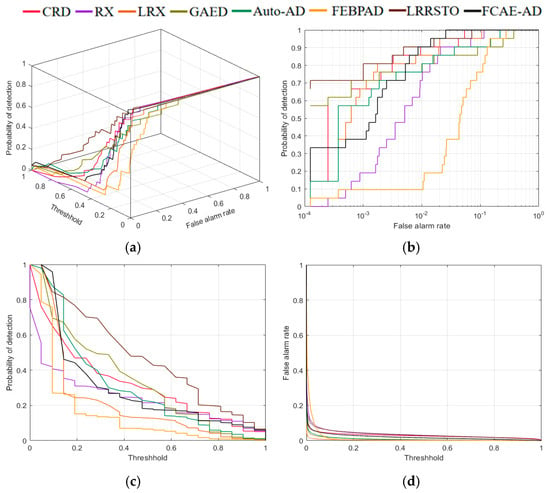

To analyze the detection results quantitatively, this paper adopts the three-dimensional receiver operating characteristic (3-D ROC) curve and its three corresponding two-dimensional ROC (2-D ROC) curves, $(P_D, P_F)$, $(P_D, \tau)$, and $(P_F, \tau)$ [34], together with several evaluation indicators. Here $P_D$ is the detection probability, $P_F$ is the false alarm probability, and $\tau$ is the detection threshold. The areas under the three 2-D ROC curves, $AUC_{(D,F)}$, $AUC_{(D,\tau)}$, and $AUC_{(F,\tau)}$, are computed first, and the remaining indicators are derived from these three values.

Among the indicators, three represent overall performance, three represent the target detection rate, and two represent the background suppression rate. Smaller values of $AUC_{(F,\tau)}$ are better, whereas larger values are better for all the remaining indicators [29].
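The three base AUC values can be computed from a detection map and a ground-truth mask by sweeping the threshold τ over normalized scores. The sketch below does this with a fixed τ grid and trapezoidal integration; `roc3d_aucs` is a hypothetical name. The derived indicators at the end follow the definitions commonly associated with Chang's 3-D ROC analysis. Treating them as the combinations meant above is an assumption.

```python
import numpy as np


def _area(y, x):
    """Trapezoidal area under y(x); x must be sorted in increasing order."""
    return float(np.sum((x[1:] - x[:-1]) * (y[1:] + y[:-1]) / 2.0))


def roc3d_aucs(score, gt, n_tau=1001):
    """3-D ROC AUC values for a detection map `score` and binary mask `gt`."""
    s = score.ravel().astype(float)
    span = s.max() - s.min()
    s = (s - s.min()) / span if span > 0 else np.zeros_like(s)  # tau spans [0, 1]
    y = gt.ravel().astype(bool)
    taus = np.linspace(0.0, 1.0, n_tau)
    pd = np.array([(s[y] >= t).mean() for t in taus])    # detection probability P_D(tau)
    pf = np.array([(s[~y] >= t).mean() for t in taus])   # false-alarm probability P_F(tau)
    # (P_D, P_F) curve: reverse so P_F increases, and start it at the origin.
    auc_df = _area(np.r_[0.0, pd[::-1]], np.r_[0.0, pf[::-1]])
    auc_dt = _area(pd, taus)
    auc_ft = _area(pf, taus)
    return {
        "AUC(D,F)": auc_df,
        "AUC(D,tau)": auc_dt,
        "AUC(F,tau)": auc_ft,
        # Derived indicators in their commonly used (assumed) form.
        "AUC_TD": auc_df + auc_dt,
        "AUC_BS": auc_df - auc_ft,
        "AUC_TDBS": auc_dt - auc_ft,
        "AUC_ODP": auc_dt + 1.0 - auc_ft,
        "AUC_SNPR": auc_dt / auc_ft if auc_ft > 0 else float("inf"),
    }


gt = np.zeros((10, 10), dtype=bool)
gt[4:6, 4:6] = True
perfect = roc3d_aucs(gt.astype(float), gt)          # anomalies score 1, background 0
inverted = roc3d_aucs(1.0 - gt.astype(float), gt)   # the opposite ranking
```

A perfect detector gives AUC(D,F) = AUC(D,tau) = 1 and an AUC(F,tau) close to 0. The small nonzero value comes from the first step of the τ grid.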

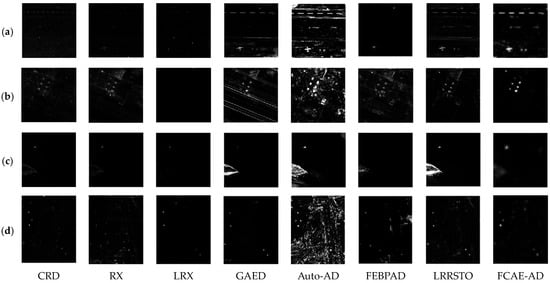

To analyze the detection performance of the proposed FCAE-AD algorithm, the detection maps of the different algorithms are compared in Figure 9. The CRD, RX, LRX, and FEBPAD algorithms suppress the background well, but in most cases they fail to separate the anomalies from the background. The Auto-AD algorithm can detect the anomalies, but its overall background suppression is insufficient. The results of the GAED and LRRSTO algorithms are close to those of FCAE-AD. However, the background in the GAED result for the second dataset remains relatively visible, and the anomaly separation of LRRSTO is not as good as that of FCAE-AD. Visually, the proposed FCAE-AD algorithm achieves relatively good results on all datasets.

Figure 9.

Detection results of different algorithms. (a) Gulfport Airport; (b) Texas Coast; (c) Cat Island; (d) HYDICE Urban.

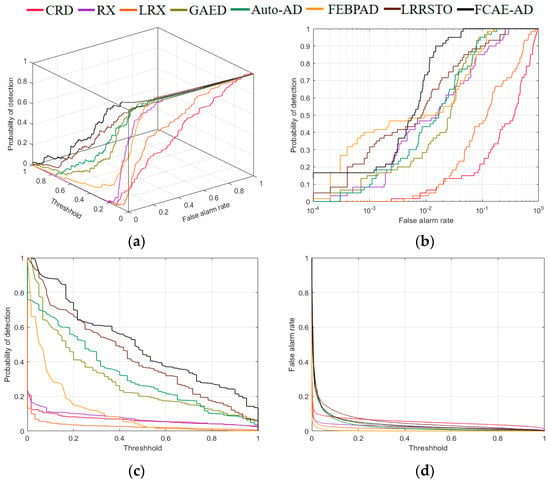

For the Gulfport Airport dataset, the FCAE-AD algorithm achieves very significant results. Table 2 shows that most of the other compared algorithms score around or below 0.96, whereas the AUC score of FCAE-AD is above 0.99. In addition, five other indicator values of FCAE-AD are much larger than those of the compared algorithms; the best values are shown in bold. FCAE-AD is not the best on one indicator, but it still achieves competitive results there. As shown in Figure 10, the 3-D ROC curve and the 2-D ROC curves $(P_D, P_F)$ and $(P_D, \tau)$ of the proposed algorithm are higher than those of the other algorithms, and its 2-D ROC curve $(P_F, \tau)$ also achieves relatively good results. In particular, Figure 10b shows that when $P_D$ reaches 100%, the $P_F$ of FCAE-AD is lower than that of the other algorithms. This lower false positive rate indicates that the anomalies and background are well separated. In conclusion, FCAE-AD has advantages in most indicators.

Table 2.

Comparison of AUC performance of different algorithms on Gulfport Airport.

Figure 10.

Performance comparison of different algorithms on Gulfport Airport. (a) 3-D ROC curves; (b) 2-D ROC curves $(P_D, P_F)$; (c) 2-D ROC curves $(P_D, \tau)$; (d) 2-D ROC curves $(P_F, \tau)$.

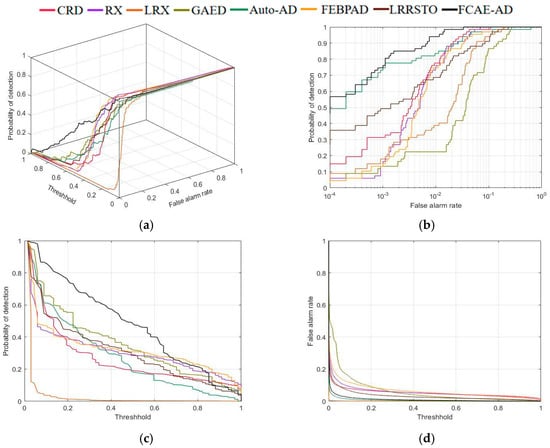

According to Figure 11, the 3-D ROC curve and the 2-D ROC curves $(P_D, P_F)$ and $(P_D, \tau)$ of the FCAE-AD algorithm are significantly higher than those of the other compared algorithms. The 2-D ROC curve $(P_F, \tau)$ is shown in Figure 11d, where FCAE-AD also obtains relatively good results. Table 3 shows that FCAE-AD attains the highest AUC score, 0.9981. In addition, five other indicator values of FCAE-AD are much higher than those of the other compared algorithms; the best values are shown in bold. FCAE-AD is not the best on one indicator but still achieves competitive results there, and on another indicator it is second only to the Auto-AD algorithm. Taken together, FCAE-AD has the advantage in most indicators.

Figure 11.

Performance comparison of different algorithms on Texas Coast. (a) 3-D ROC curves; (b) 2-D ROC curves $(P_D, P_F)$; (c) 2-D ROC curves $(P_D, \tau)$; (d) 2-D ROC curves $(P_F, \tau)$.

Table 3.

Comparison of AUC performance of different algorithms on Texas Coast.

The 3D-ROC curves, 2D-ROC curves, and corresponding AUC values of the algorithms on the Cat Island dataset are displayed in Figure 12 and Table 4, respectively. As the figure shows, the FCAE-AD curve is substantially higher than those of the other algorithms. According to Figure 12b, the 2D-ROC curve of the FCAE-AD algorithm is significantly higher than those of the other algorithms. When reaches 100%, the value of the FCAE-AD algorithm is much lower than those of the others, so the proposed algorithm has a lower false positive rate, which indicates that the anomaly and the background are well separated. As the table shows, the score of the proposed algorithm is 0.9998, very close to 1. In addition, the values of , , and of the FCAE-AD algorithm are much higher than those of the other algorithms. The best values are shown in bold. Although the performance of the FCAE-AD algorithm on , and is not the best, it remains competitive. Although the result of is not optimal, it is second only to that of the Auto-AD algorithm. Taken together, these results show that FCAE-AD has the advantage in most indexes.
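The observation about Figure 12b, that the false positive rate stays low once every anomaly is detected, comes down to a single operating point. Assuming that panel (b) plots P_D against P_F and that a pixel is flagged when its score is at or above the threshold, the hypothetical helper below returns the fraction of background pixels still flagged at the highest threshold that detects every ground-truth anomaly.

```python
import numpy as np

def pf_at_full_detection(det_map, gt):
    """False-alarm rate at the highest threshold that still detects all anomalies.

    det_map: anomaly scores (any shape); gt: boolean mask of the same shape.
    """
    s = np.asarray(det_map, float).ravel()
    y = np.asarray(gt, bool).ravel()
    t = s[y].min()  # threshold at the weakest anomaly, so P_D = 1
    return float(np.mean(s[~y] >= t))  # share of background pixels also flagged
```

A value of 0.0 means that at least one threshold separates the anomalies from the background completely. Larger values mean that some background pixels score at least as high as the weakest anomaly.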

Figure 12.

Performance comparison of different algorithms on Cat Island. (a) 3-D ROC curves; (b) 2-D ROC curves ; (c) 2-D ROC curves ; (d) 2-D ROC curves .

Table 4.

Comparison of the AUC performance of different algorithms on Cat Island.

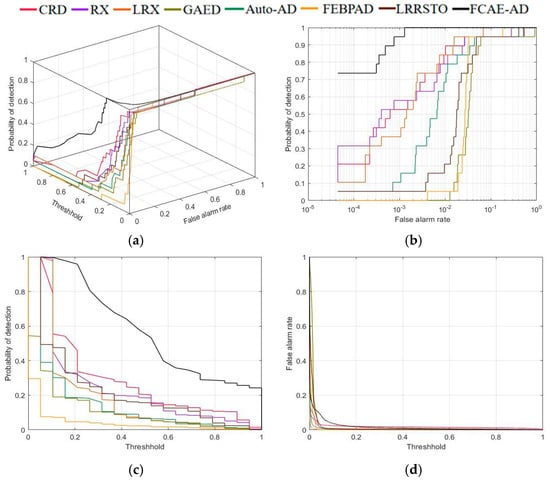

The 3D-ROC curves, 2D-ROC curves, and corresponding AUC values of the algorithms on the HYDICE Urban dataset are displayed in Figure 13 and Table 5, respectively. As Figure 13a,b shows, the 3D-ROC curve and the 2D-ROC curve of the FCAE-AD algorithm are superior to those of the other algorithms. The 2D-ROC curves of and are shown in Figure 13c,d. As Table 5 shows, the value of the proposed algorithm is the highest, at 0.9963. The best values are shown in bold. Although the other , , , , , , and values of the proposed algorithm are not optimal, they remain competitive.

Figure 13.

Performance comparison of different algorithms on HYDICE Urban. (a) 3-D ROC curves; (b) 2-D ROC curves ; (c) 2-D ROC curves ; (d) 2-D ROC curves .

Table 5.

Comparison of the AUC performance of different algorithms on HYDICE Urban.

4.4. Ablation Study

To further explore the effectiveness of the proposed algorithm, this section presents detailed ablation experiments on the FCAE-AD algorithm.

The FCAE-AD algorithm consists of three components: the AE model, the AG module, and the GF post-processing. These components are evaluated through ablation experiments to test their AD efficiency and thereby assess the performance of the proposed algorithm. The AUCs of the baseline AE model, AE + AG, and AE + AG + GF are calculated. As shown in Table 6, the AUC values improve successively as the AG and GF modules are added, and the final detection results on all experimental datasets exceed 0.99 without image preprocessing. This indicates that adding the AG to the AE model helps suppress the reconstruction of anomalies during background reconstruction. Building on these stages, the GF post-processing also largely eliminates irregular background pixels and mitigates the impact of false anomalies on the results.
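The AG evaluated in this ablation is assumed here to follow the additive attention gate of Attention U-Net (Oktay et al., cited in the reference list). The minimal NumPy sketch below rests on three further assumptions: both projections are 1×1 convolutions, the gating features have already been upsampled to the resolution of the skip features, and the weights are random rather than trained. Names such as `init_gate_params` are hypothetical and do not come from the authors' code.

```python
import numpy as np

def conv1x1(feat, weight, bias=None):
    """1x1 convolution as channel mixing: feat (C_in, H, W), weight (C_out, C_in)."""
    out = np.einsum("oc,chw->ohw", weight, feat)
    if bias is not None:
        out = out + bias[:, None, None]
    return out

def sigmoid(z):
    # clip so np.exp cannot overflow for very negative inputs
    return 1.0 / (1.0 + np.exp(-np.clip(z, -50.0, 50.0)))

def init_gate_params(c_x, c_g, c_int, rng):
    """Hypothetical random weights; a trained network would learn these."""
    return {
        "W_x": rng.standard_normal((c_int, c_x)) * 0.1,
        "W_g": rng.standard_normal((c_int, c_g)) * 0.1,
        "b_g": np.zeros(c_int),
        "psi": rng.standard_normal((1, c_int)) * 0.1,
        "b_psi": np.zeros(1),
    }

def attention_gate(x, g, p):
    """Additive attention gate.

    x: encoder skip features (C_x, H, W)
    g: decoder gating features, assumed already upsampled to (C_g, H, W)
    Returns the gated skip features (C_x, H, W) and the attention map (H, W).
    """
    # ReLU over the sum of the two linear projections
    q = np.maximum(conv1x1(x, p["W_x"]) + conv1x1(g, p["W_g"], p["b_g"]), 0.0)
    # psi collapses the intermediate channels to one map; sigmoid maps it to (0, 1)
    alpha = sigmoid(conv1x1(q, p["psi"], p["b_psi"]))[0]
    # every channel of x is rescaled by the same per-pixel coefficient
    return x * alpha[None, :, :], alpha

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 6, 6))
g = rng.standard_normal((16, 6, 6))
params = init_gate_params(8, 16, 4, rng)
gated, alpha = attention_gate(x, g, params)
```

With untrained weights the attention map carries no meaning. The sketch only shows the data flow: both inputs are projected to a common intermediate width and summed, then reduced to one coefficient per pixel. That coefficient rescales the skip features before they are merged with the upsampled decoder features, so pixels the gate down-weights contribute less to the reconstruction.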

Table 6.

Ablation experiments at different stages.

5. Conclusions

This paper proposes an HSI-AD algorithm based on a fully convolutional AE with AG connections. After upsampling, the coarse-scale and deep features are input into the AG simultaneously to extract salient feature information. In the decoder stage, these features are combined through skip connections so that background pixels receive higher weights, which effectively addresses the undifferentiated reconstruction of background and anomaly during AE-based background reconstruction. In addition, to further eliminate anomalous pixels and redundant interference in the reconstruction error map, the HSI is divided into eight sub-volumes using a single-window segmentation model. The spectral similarities of all pixels in the eight sub-volumes to the center pixel are calculated, and the largest value is selected as the pixel value of the guide image. Using this guide image to guide-filter the reconstruction error map greatly improves the accuracy of HSI-AD.
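The guide-image step described above can be sketched as follows. This text does not specify the single-window segmentation model, so the guide below uses one hypothetical reading: the eight neighbors of a 3×3 window serve as the eight sub-volumes, and cosine similarity serves as the spectral similarity measure. The filtering step is the standard gray-scale guided filter of He et al. (cited in the reference list). Unlike the original formulation, which normalizes means at the image border, this sketch computes box means on an edge-padded image. The error map in the usage lines is a stand-in (distance to the mean spectrum) rather than an AE reconstruction error, and all function names are illustrative.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1)x(2r+1) window via an integral image, with edge padding."""
    k = 2 * r + 1
    p = np.pad(img, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)

def guided_filter(guide, src, r=2, eps=1e-3):
    """Gray-scale guided filter: local linear model q = a * I + b."""
    I = np.asarray(guide, float)
    p = np.asarray(src, float)
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    var_I = box_mean(I * I, r) - mean_I ** 2
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)

def neighbour_similarity_guide(hsi):
    """HYPOTHETICAL guide: per pixel, the maximum cosine similarity between its
    spectrum and the spectra of its eight 3x3 neighbors. hsi: (rows, cols, bands)."""
    h, w, _ = hsi.shape
    unit = hsi / (np.linalg.norm(hsi, axis=2, keepdims=True) + 1e-12)
    pad = np.pad(unit, ((1, 1), (1, 1), (0, 0)), mode="reflect")
    best = np.full((h, w), -np.inf)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue  # skip the center pixel itself
            nb = pad[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
            best = np.maximum(best, np.sum(unit * nb, axis=2))
    return best

rng = np.random.default_rng(1)
hsi = rng.random((20, 20, 30))  # toy cube: rows x cols x bands
err = np.linalg.norm(hsi - hsi.mean(axis=(0, 1)), axis=2)  # stand-in error map
guide = neighbour_similarity_guide(hsi)
refined = guided_filter(guide, err, r=2, eps=1e-3)
```

Where the guide is locally flat (var_I much smaller than eps), the filter tends toward a plain local mean of the error map. Where the guide has structure, the output follows the guide's edges, which is what lets isolated false responses in the error map be smoothed away. Reflect padding keeps border pixels from being compared with copies of themselves.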

Experiments on four datasets validate the effectiveness of the proposed algorithm: the results show good anomaly detection performance with a low false alarm rate. The experiments also demonstrate that the attention mechanism can be applied effectively to hyperspectral anomaly detection. However, some indicators show that the background suppression rate of the proposed algorithm still has room for improvement, which will be the focus of our future work. The source code of the proposed method will be made publicly available at https://github.com/WYH-yihan/FCAE-AD.

Author Contributions

Conceptualization, methodology, and writing (review), X.W.; formal analysis and writing (original draft preparation), Y.W.; investigation, validation, and supervision, Z.M.; data curation, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 41971388).

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editors and the reviewers for their valuable suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ben Salem, M.; Ettabaa, K.S.; Hamdi, M.A. Anomaly Detection in Hyperspectral Imagery: An Overview. In Proceedings of the International Image Processing, Applications and Systems Conference, Sfax, Tunisia, 5–7 November 2014; pp. 1–6.

- Racetin, I.; Krtalić, A. Systematic review of anomaly detection in hyperspectral remote sensing applications. Appl. Sci. 2021, 11, 4878.

- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Chun-Tong, L.; Shi-Xin, M.; Hao, W.; Yang, W.; Hong-Cai, L. A Density-Based Cluster Kernel RX Algorithm for Hyperspectral Anomaly Detection. Spectrosc. Spectr. Anal. 2019, 39, 1878–1884.

- Li, Z.; Zhang, Y. Hyperspectral Anomaly Detection Based on Improved RX with CNN Framework. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 2244–2247.

- Ren, L.; Zhao, L.; Wang, Y. A superpixel-based dual window RX for hyperspectral anomaly detection. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1233–1237.

- Yang, Y.; Zhang, J.; Song, S.; Liu, D. Hyperspectral anomaly detection via dictionary construction-based low-rank representation and adaptive weighting. Remote Sens. 2019, 11, 192.

- Ruhan, A.; Mu, X.; He, J. Enhance Tensor RPCA-Based Mahalanobis Distance Method for Hyperspectral Anomaly Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6008305.

- Xu, Y.; Du, B.; Zhang, L.; Chang, S. A low-rank and sparse matrix decomposition-based dictionary reconstruction and anomaly extraction framework for hyperspectral anomaly detection. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1248–1252.

- Wang, R.; Hu, H.; He, F.; Nie, F.; Cai, S.; Ming, Z. Self-weighted collaborative representation for hyperspectral anomaly detection. Signal Process. 2020, 177, 107718.

- Wu, Z.; Su, H.; Tao, X.; Han, L.; Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Hyperspectral anomaly detection with relaxed collaborative representation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5533417.

- Hou, Z.; Li, W.; Tao, R.; Ma, P.; Shi, W. Collaborative representation with background purification and saliency weight for hyperspectral anomaly detection. Sci. China Inf. Sci. 2022, 65, 112305.

- Kuswidiyanto, L.W.; Noh, H.H.; Han, X. Plant Disease Diagnosis Using Deep Learning Based on Aerial Hyperspectral Images: A Review. Remote Sens. 2022, 14, 6031.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M. Deep learning-based text classification: A comprehensive review. ACM Comput. Surv. CSUR 2021, 54, 1–40.

- Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.

- Bati, E.; Çalışkan, A.; Koz, A.; Aydin, A. Hyperspectral anomaly detection method based on auto-encoder. In Image and Signal Processing for Remote Sensing XXI; SPIE: Bellingham, WA, USA, 2015; Volume 9643, pp. 220–226.

- Zhao, C.; Li, X.; Zhu, H. Hyperspectral anomaly detection based on stacked denoising autoencoders. J. Appl. Remote Sens. 2017, 11, 042605.

- Xie, W.; Lei, J.; Liu, B.; Li, Y.; Jia, X. Spectral constraint adversarial autoencoders approach to feature representation in hyperspectral anomaly detection. Neural Netw. 2019, 119, 222–234.

- Lu, X.; Zhang, W.; Huang, J. Exploiting embedding manifold of autoencoders for hyperspectral anomaly detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 1527–1537.

- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I.; Frey, B. Adversarial autoencoders. arXiv 2015, arXiv:1511.05644.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875.

- Xie, W.; Li, Y.; Zhou, W.; Zheng, Y. Efficient coarse-to-fine spectral rectification for hyperspectral image. Neurocomputing 2018, 275, 2490–2504.

- Kang, X.; Li, S.; Benediktsson, J.A. Spectral–spatial hyperspectral image classification with edge-preserving filtering. IEEE Trans. Geosci. Remote Sens. 2013, 52, 2666–2677.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Wang, S.; Wang, X.; Zhang, L.; Zhong, Y. Auto-AD: Autonomous hyperspectral anomaly detection network based on fully convolutional autoencoder. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5503314.

- Yu, M.; Chen, X.; Zhang, W.; Liu, Y. AGs-Unet: Building Extraction Model for High Resolution Remote Sensing Images Based on Attention Gates U Network. Sensors 2022, 22, 2932.

- Xiang, P.; Ali, S.; Jung, S.K.; Zhou, H. Hyperspectral anomaly detection with guided autoencoder. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5538818.

- Mu, Z.; Wang, M.; Wang, Y.; Song, R.; Wang, X. SI2FM: SID Isolation Double Forest Model for Hyperspectral Anomaly Detection. Remote Sens. 2023, 15, 612.

- Tan, K.; Hou, Z.; Ma, D.; Chen, Y.; Du, Q. Anomaly detection in hyperspectral imagery based on low-rank representation incorporating a spatial constraint. Remote Sens. 2019, 11, 1578.

- Ma, Y.; Fan, G.; Jin, Q.; Huang, J.; Mei, X.; Ma, J. Hyperspectral anomaly detection via integration of feature extraction and background purification. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1436–1440.

- Li, W.; Du, Q. Collaborative representation for hyperspectral anomaly detection. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1463–1474.

- Shang, W.; Jouni, M.; Wu, Z.; Xu, Y. Hyperspectral Anomaly Detection Based on Regularized Background Abundance Tensor Decomposition. Remote Sens. 2023, 15, 1679.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).