1. Introduction

Target detection can efficiently identify and extract information about various objects in images, such as buildings, roads, and crops. It is an essential method for Earth observation and has a wide range of applications in environmental monitoring, ocean monitoring, urban planning, and forestry engineering. However, object detection from large amounts of remote sensing data faces several challenges. First, images with high spatial resolution contain hundreds of millions of pixels, so detecting objects in such a huge volume of data is computationally demanding. Moreover, objects in remote sensing images vary widely in scale, which makes it difficult to apply models trained on natural images to remote sensing object detection and results in suboptimal detection performance.

Traditional object recognition techniques rely on hand-crafted features and prior knowledge, whereas deep-learning-based object detection techniques have become the main approach to recognition. Traditional target identification techniques based on the sliding-window template approach use features such as the Histogram of Oriented Gradients (HOG) [1] and the Scale-Invariant Feature Transform (SIFT) [2]. This approach is complex and relies heavily on manually aggregating and summarizing prior knowledge of colors, textures, edges, and other properties. Deep-learning-based object detection algorithms instead use convolutional neural networks to learn features automatically rather than relying on manually designed templates; they have stronger feature extraction capabilities, higher adaptability to complex scenes, and clear advantages in detection accuracy and speed. Deep learning has made significant advances in computer-vision-related fields in recent years. The 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC 2012), which was won by AlexNet, proposed by A. Krizhevsky et al. [3], considerably advanced the study of deep learning in image processing [4]. Since then, a wide range of excellent deep learning algorithms have been developed; most of them not only increase detection accuracy but also add a large number of model parameters [5]. Currently, central processing units (CPUs) and graphics processing units (GPUs) are mostly used for deep learning training and inference. CPUs are flexible general-purpose processors, while GPUs are more commonly employed because of their superior parallel processing capabilities. However, because of their high power consumption, GPUs cannot achieve an ideal performance-to-power ratio. Some specific applications impose extra-stringent power consumption constraints [6]; general-purpose CPUs and GPUs, for example, do not easily meet the requirements of embedded applications.

The large number of parameters in deep convolutional neural networks makes it challenging to move them from large servers to embedded devices with constrained memory and processing capabilities. It is therefore necessary to find more efficient models and hardware implementations of object detection algorithms for remote sensing images. Emerging communication technologies such as 5G allow almost any device to be interconnected. In remote sensing observation scenarios such as real-time dynamic UAV monitoring, on-board intelligent information processing systems can not only significantly reduce transmission bandwidth, processing time, and resource consumption, but also perform monitoring tasks more efficiently, adaptively, and quickly [7,8]. Consequently, developing accurate and lightweight algorithmic models that integrate seamlessly with edge computing systems, such as airborne information processing systems, has become an increasingly pressing challenge. In this context, researchers have begun to develop on-board real-time information processing systems using field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) [9]. FPGAs and ASICs offer higher performance and lower power consumption than CPUs and GPUs. Compared with ASICs, FPGAs have shorter development cycles and can be reconfigured, which makes them well suited for deploying CNNs. Chen et al. [10] proposed an FPGA-based YOLOv4 road surface detection system that used the Vitis AI framework to quantize and deploy YOLOv4. However, their design did not modify or lighten the YOLOv4 network structure, which resulted in relatively low average detection accuracy and detection speed for the final system.

The Zynq UltraScale+ MPSoC is a system-on-chip (SoC) that integrates a processing system and programmable logic. Its deep learning processing unit (DPU) is co-designed with the ARM processors and the FPGA programmable logic: the DPU resides in the FPGA fabric of the chip, while the accompanying ARM Cortex-A series processors handle system-level control and management. The DPU is a hardware acceleration unit designed specifically for deep learning inference; it uses the programmable logic resources of the FPGA to accelerate neural network inference through efficient matrix operations and hardware optimizations. The ARM processors are responsible for system-level control, management, and processing tasks, including communication, data transfer, and neural network control in cooperation with the FPGA section. The accompanying software support and interfaces enable the FPGA and ARM processors to collaborate, achieving high-performance acceleration of deep learning inference.

In this work, we present an optimized real-time object recognition model for optical remote sensing images specifically designed for the Xilinx DPU. Our approach compresses the model with minimal accuracy loss while leveraging the Xilinx Zynq UltraScale+ architecture to implement a hardware acceleration system. The result is a solution that effectively balances detection speed, accuracy, and power usage. Our primary contributions are summarized as follows:

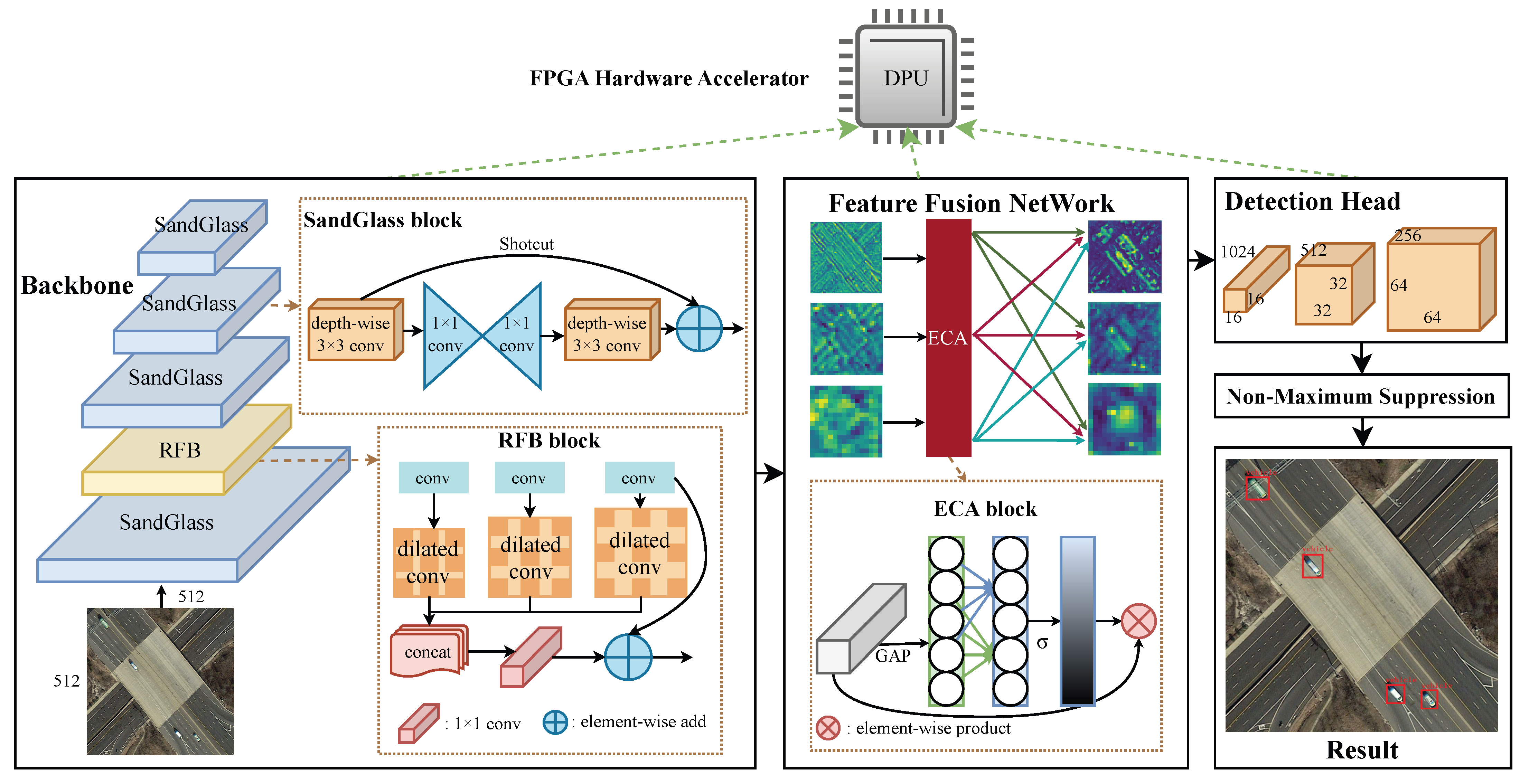

We propose RFA-YOLO, a YOLO model for optical remote sensing images that combines a lightweight backbone network and a lightweight feature fusion neck network with an attention mechanism and receptive field enhancement. Depthwise separable convolutions and SandGlass blocks are used to reduce the model parameters, and the RFB and ECA modules are introduced to improve the detection accuracy for small objects;

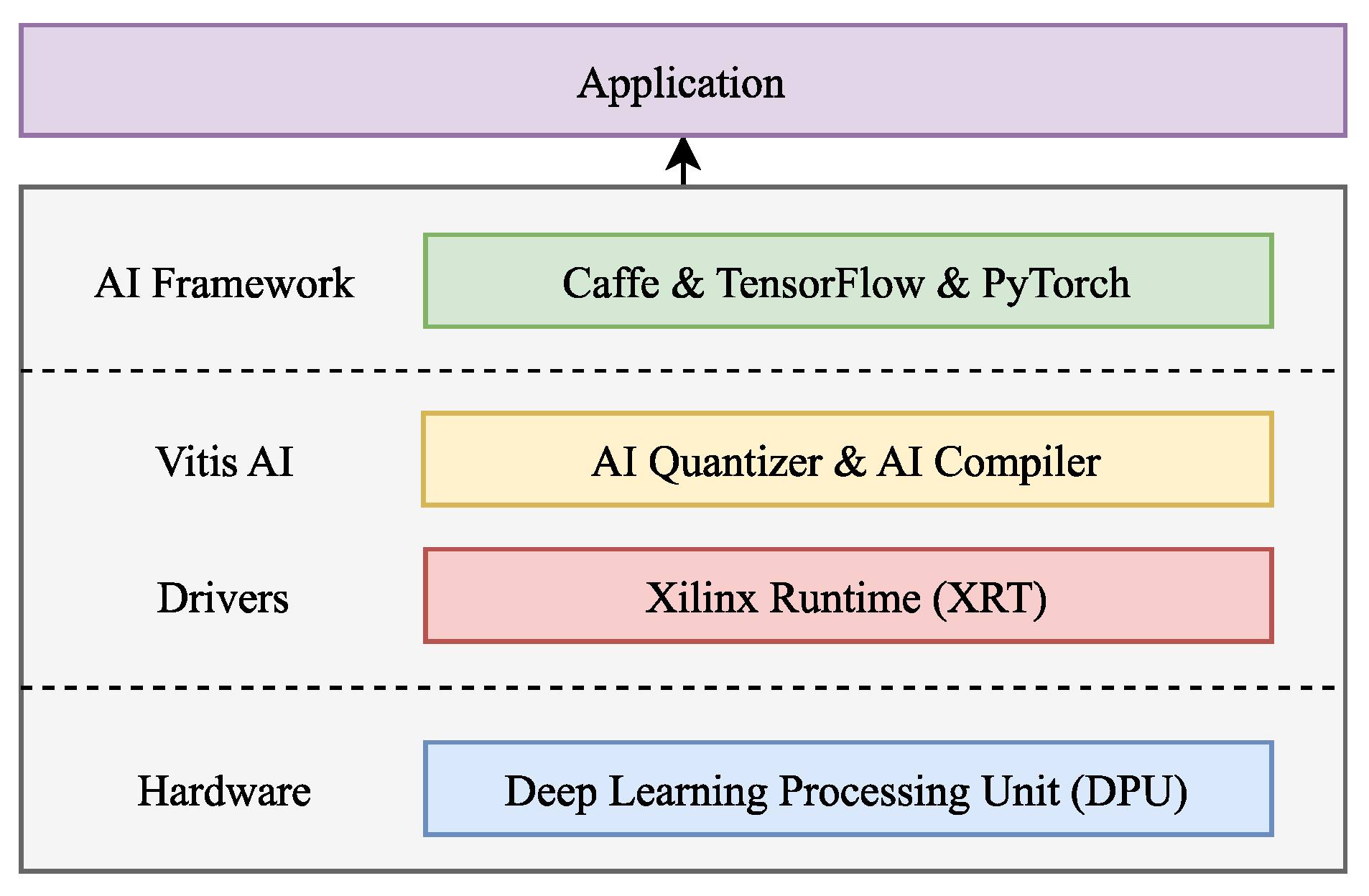

We adopt the Vitis AI platform and the Deep learning Processing Unit (DPU) on a Field Programmable Gate Array (FPGA) to achieve parallel acceleration and low-power deployment, thereby implementing airborne real-time object detection on aerial remote sensing images;

We evaluate the efficiency of the proposed RFA-YOLO model and its hardware implementation through experiments and simulation. On the DIOR dataset, the RFA-YOLO model outperforms several other popular algorithmic models.

3. Methods

In this section, we first select YOLO and the DPU as the baselines for the object detection algorithm and the hardware acceleration architecture, respectively.

We then introduce a novel YOLO model, called RFA-YOLO, which incorporates an attention mechanism and receptive field enhancement for improved performance on optical remote sensing images. The model comprises a lightweight MobileNeXt-RFB backbone network for multi-scale feature extraction, followed by a lightweight PAN-lite feature fusion network and an ECA module that enhance and fuse semantic information from feature maps of different scales. The fused features are then used for regression to predict the detection results. Furthermore, we adapt the object detection model to the DPU accelerator, enabling low-power embedded deployment. The flowchart of the design framework is illustrated in Figure 1.

3.1. MobileNeXt-RFB Backbone

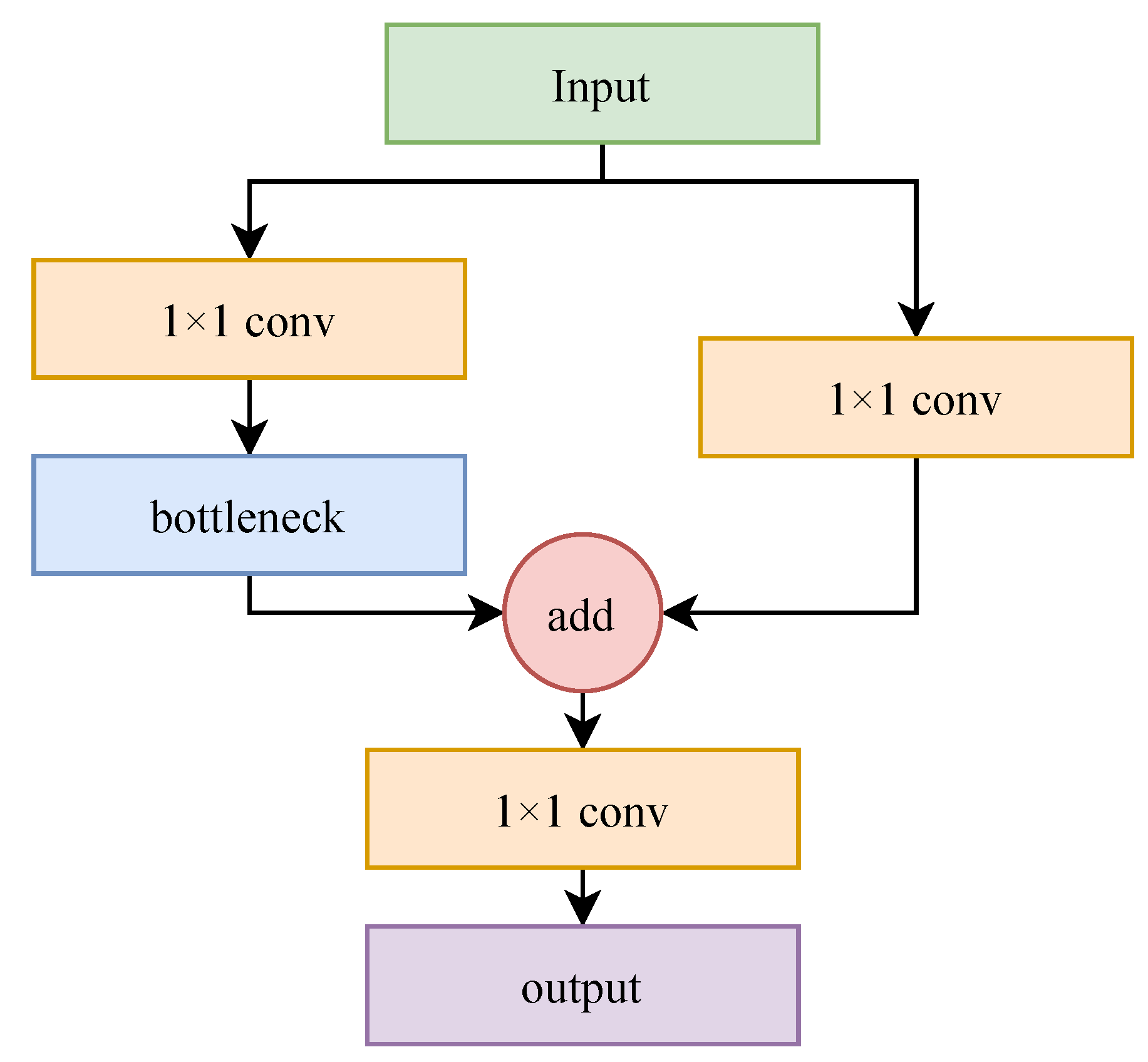

YOLOv4 uses CSPDarknet53, a backbone network based on cross-stage partial (CSP) connections. As shown in Figure 2, CSP divides a feature map into two parts, passes one part through directly while the other undergoes more complex operations, and then merges the two; this structure reduces redundancy in the feature maps and improves the efficiency of the network. Within each CSP stage, CSPDarknet53 stacks residual blocks that add each block's input to its output, which increases the diversity and complexity of the feature maps and improves the expressiveness of the model. CSPDarknet53 also uses the Mish activation function, which helps mitigate vanishing gradients and improves the stability and accuracy of the model.

Although the CSP module structure and the Mish activation function in the CSPDarknet53 backbone enhance feature extraction quality, they come with certain drawbacks. The complex structure and heavy computation make the network deep, with a large number of layers and parameters. Moreover, computing the Mish activation function is complex and not well suited to hardware implementation. The model also requires substantial memory and computing resources, which makes it difficult to compress, accelerate, and deploy in resource-constrained edge environments. Therefore, we use the SandGlass [32] module and the RFB [15] module to build a lightweight MobileNeXt-RFB network to replace the original YOLOv4 backbone.

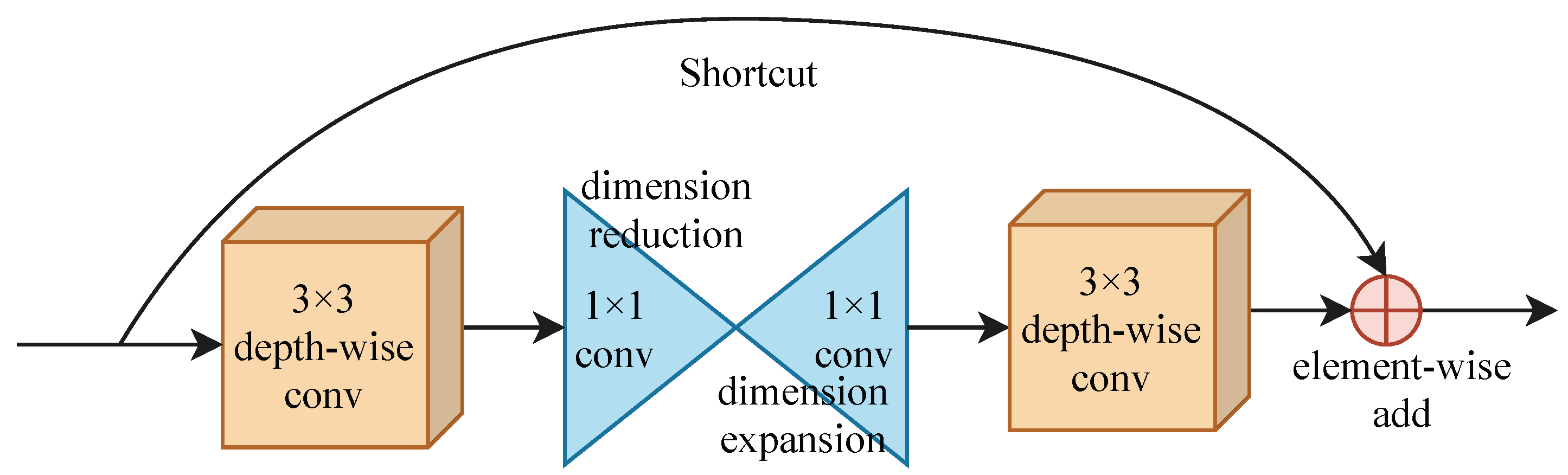

SandGlass Block

Instead of the CSP module, the SandGlass module is used to construct the MobileNeXt network that serves as the backbone of the target detection model. SandGlass mitigates the feature information loss and vanishing gradients of the inverted residual block in the MobileNetV2 network.

The SandGlass module first performs spatial information transformation on the input features through a convolutional layer, and then performs channel information transformation through two convolutions for dimensionality reduction and expansion, before finally extracting features through another convolutional layer.
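The four-layer ordering described above can be made concrete with a small layer table. The sketch below is a minimal illustration, not the RFA-YOLO implementation: it assumes the layout of the original MobileNeXt SandGlass block (3 × 3 depthwise, 1 × 1 reduction, 1 × 1 expansion, 3 × 3 depthwise), a hypothetical reduction ratio of 6 and width of 96 channels, and it counts only convolution weights (no biases, batch normalization, or residual shortcut). The `act` argument lets the activated layers use either ReLU6 or the LeakyReLU substitution described in this section.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    kernel: int       # spatial kernel size (k x k)
    c_in: int
    c_out: int
    depthwise: bool   # True: one k x k filter per channel
    activation: str   # "relu6", "leaky_relu" or "linear"

    def params(self) -> int:
        # Depthwise: k*k weights per channel; standard conv: k*k*c_in*c_out.
        if self.depthwise:
            return self.kernel * self.kernel * self.c_in
        return self.kernel * self.kernel * self.c_in * self.c_out


def sandglass_layers(channels: int, reduction: int = 6, act: str = "relu6") -> list[Layer]:
    """Hypothetical SandGlass block: dw3x3 -> pw1x1 reduce -> pw1x1 expand -> dw3x3.

    Assumption: layout and reduction ratio follow the original MobileNeXt design.
    Biases, batch normalization and the residual shortcut are not modeled.
    """
    hidden = channels // reduction
    return [
        Layer("dw3x3_spatial", 3, channels, channels, True, act),       # spatial, activated
        Layer("pw1x1_reduce", 1, channels, hidden, False, "linear"),    # linear bottleneck
        Layer("pw1x1_expand", 1, hidden, channels, False, act),         # activated
        Layer("dw3x3_spatial2", 3, channels, channels, True, "linear"), # spatial, linear
    ]


if __name__ == "__main__":
    block = sandglass_layers(96)
    for layer in block:
        print(f"{layer.name:16s} {layer.c_in:4d}->{layer.c_out:<4d} "
              f"{layer.activation:10s} {layer.params()}")
    print("total weights:", sum(layer.params() for layer in block))
```

Most of the weights sit in the two 1 × 1 layers, while the depthwise layers, which carry the spatial filtering, are cheap.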

The SandGlass block first reduces and then expands the dimensions of the input features, giving it a shape that resembles an hourglass, as shown in Figure 3. The module adopts a linear bottleneck design: only the first 3 × 3 convolution and the second 1 × 1 convolution use the ReLU6 activation function, while the other layers use linear activation. Additionally, the 3 × 3 convolutions in the module are implemented as depthwise convolutions to reduce model computation. The ReLU6 activation function used in the module is defined in Equation (1):

$$f(x) = \min\left(\max(0, x),\ 6\right) \tag{1}$$

ReLU6 leads to fast convergence, but because it sets all negative values to zero, it can cause vanishing gradients. To avoid this issue, we replace the original ReLU6 activation function in the SandGlass module with the LeakyReLU activation function, defined in Equation (2), where $\alpha$ is a small positive slope:

$$f(x) = \begin{cases} x, & x \ge 0 \\ \alpha x, & x < 0 \end{cases} \tag{2}$$
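The practical difference between the two activations is easiest to see in their gradients for negative inputs. The minimal sketch below evaluates both functions and their derivatives; the negative slope α = 0.1 is an assumed example value, since the slope used in RFA-YOLO is not stated here.

```python
def relu6(x: float) -> float:
    """ReLU6: clamp x to the range [0, 6]."""
    return min(max(0.0, x), 6.0)


def leaky_relu(x: float, alpha: float = 0.1) -> float:
    """LeakyReLU: identity for x >= 0, slope alpha for x < 0 (alpha is an assumed value)."""
    return x if x >= 0 else alpha * x


def relu6_grad(x: float) -> float:
    # Zero gradient for x < 0 (and for x > 6): such units receive no update.
    return 1.0 if 0.0 < x < 6.0 else 0.0


def leaky_relu_grad(x: float, alpha: float = 0.1) -> float:
    # The gradient stays non-zero for negative inputs.
    return 1.0 if x >= 0 else alpha


for x in (-2.0, 0.5, 8.0):
    print(x, relu6(x), relu6_grad(x), leaky_relu(x), leaky_relu_grad(x))
```

For x = -2, ReLU6 outputs 0 with zero gradient, whereas LeakyReLU outputs -0.2 and still passes a gradient of 0.1 back to earlier layers.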

3.2. Receptive Field Block

The receptive field is the region of the input image that a pixel on a feature map corresponds to, i.e., the extent of the input image that the pixel “sees”. The size of the receptive field affects network performance: in general, a larger receptive field allows the network to capture more image information, thereby enhancing its recognition ability. The receptive field is determined by the network structure, including the kernel size and stride of each convolution or pooling layer, and can be calculated recursively. The receptive field of layer $m$ equals the receptive field of layer $m-1$ plus the additional range covered by the convolution (or pooling) kernel of layer $m$, measured in input pixels through the product of the strides of all preceding layers; the receptive field is therefore computed layer by layer, starting from the input. Assuming that the receptive field of the input feature is $RF_0 = 1$, the receptive field after $m$ convolution layers, $RF_m$, is given by Equation (3):

$$RF_m = RF_{m-1} + (k_m - 1)\prod_{n=1}^{m-1} s_n \tag{3}$$

where $k_m$ is the convolution kernel size (or pooling kernel size) of layer $m$, and $s_n$ is the convolution stride (or pooling stride) of layer $n$.
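Equation (3) can be evaluated with a short loop. The sketch below is a minimal illustration, assuming each layer is described only by its kernel size, stride, and dilation rate, with a dilated kernel treated as having effective size $d(k-1)+1$; the layer lists are hypothetical examples, not the RFA-YOLO backbone.

```python
def receptive_field(layers: list[tuple[int, int, int]]) -> int:
    """Apply RF_m = RF_{m-1} + (k_m - 1) * prod(s_1..s_{m-1}) layer by layer.

    Each layer is (kernel, stride, dilation); dilation 1 is an ordinary convolution.
    """
    rf = 1      # RF_0: a single input pixel
    jump = 1    # product of the strides of all preceding layers
    for kernel, stride, dilation in layers:
        k_eff = dilation * (kernel - 1) + 1   # effective kernel size of a dilated conv
        rf += (k_eff - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(3, 1, 1)] * 3))          # 7: three 3x3 convs, stride 1
print(receptive_field([(3, 2, 1), (3, 1, 1)]))   # 7: stride-2 conv, then a 3x3 conv
print(receptive_field([(3, 1, 2)]))              # 5: one 3x3 conv with dilation 2
```

The second case shows why downsampling matters: after a stride-2 layer, each later 3 × 3 kernel adds four input pixels to the receptive field instead of two.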

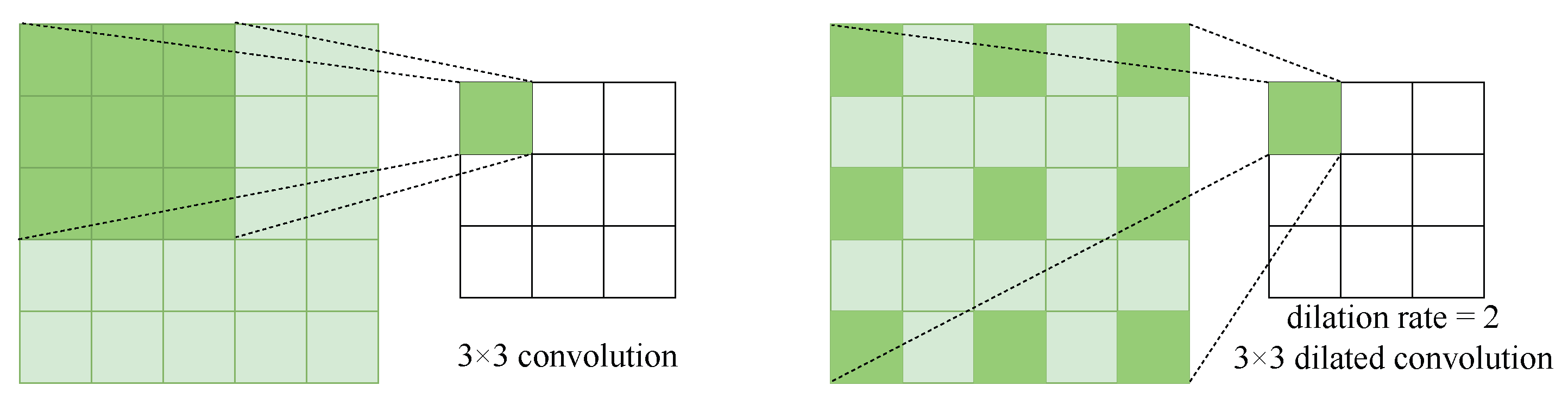

The receptive field of a convolution is primarily determined by the size of its kernel. Increasing the kernel size expands the receptive field, but it also substantially increases the number of model parameters. Dilated convolution, also known as atrous convolution, overcomes this problem by introducing holes in the convolution kernel. It enlarges the receptive field without changing the number of parameters or, with appropriate padding, the size of the output feature map. This allows the network to capture a broader range of spatial information and better understand larger contextual patterns.
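As a quick check of the claim that dilation enlarges the receptive field without adding parameters or shrinking the output, the sketch below compares a 3 × 3 kernel at several dilation rates with a dense kernel of the same span. The 64-channel layer and 52 × 52 feature map are arbitrary example values, and biases are ignored.

```python
def effective_kernel(kernel: int, dilation: int) -> int:
    # Inserting (dilation - 1) holes between taps stretches the kernel span.
    return dilation * (kernel - 1) + 1


def conv_weights(kernel: int, c_in: int, c_out: int) -> int:
    # The weight count depends only on the number of taps, not on their spacing.
    return kernel * kernel * c_in * c_out


def output_size(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    # Standard convolution output-size formula, including dilation.
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


C_IN, C_OUT, SIZE = 64, 64, 52
for d in (1, 2, 3):
    span = effective_kernel(3, d)
    print(f"3x3, dilation {d}: span {span}x{span}, "
          f"weights {conv_weights(3, C_IN, C_OUT)}, "
          f"output {output_size(SIZE, 3, 1, padding=d, dilation=d)} "
          f"(a dense {span}x{span} kernel needs {conv_weights(span, C_IN, C_OUT)} weights)")
```

Setting the padding equal to the dilation rate keeps a stride-1, 3 × 3 dilated convolution at the input resolution.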

Figure 4 compares regular convolution with dilated convolution. The key parameter of dilated convolution is the dilation rate, which sets the spacing between kernel taps; convolutions with different dilation rates therefore have receptive fields of different sizes.

However, stacking dilated convolutions that share the same dilation rate has a drawback commonly referred to as the gridding effect: not all pixels on the feature map are covered, which breaks the continuity of contextual information. To address this issue, Hybrid Dilated Convolution (HDC) was introduced. HDC stacks a series of dilated convolutions whose dilation rates vary from layer to layer in a sawtooth-like pattern, so that the combined receptive field covers the image features completely. This avoids the gridding effect and better preserves contextual information.
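The gridding effect can be demonstrated in one dimension by tracking which input offsets contribute to a single output position after a stack of dilated 3-tap convolutions. The sketch below is illustrative only: the rate sequences [2, 2, 2] and [1, 2, 3] are example choices, the latter following the HDC idea of varying the rate from layer to layer.

```python
def reachable_offsets(rates: list[int], kernel: int = 3) -> set[int]:
    """Input offsets (relative to one output pixel) touched by stacked 1-D dilated convs."""
    half = kernel // 2
    offsets = {0}
    for d in rates:
        # Each tap of a dilated kernel sits at a multiple of the dilation rate.
        taps = [d * t for t in range(-half, half + 1)]
        offsets = {o + t for o in offsets for t in taps}
    return offsets


def coverage(rates: list[int]) -> tuple[int, int]:
    """Return (number of offsets actually used, width of the receptive field)."""
    used = reachable_offsets(rates)
    return len(used), max(used) - min(used) + 1


print(coverage([2, 2, 2]))   # (7, 13): same rate repeated, only even offsets are used
print(coverage([1, 2, 3]))   # (13, 13): HDC-style rates, every offset is covered
```

Both stacks have the same 13-pixel receptive field, but the repeated rate uses only 7 of those positions; in two dimensions the same stack would use 49 of 169.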

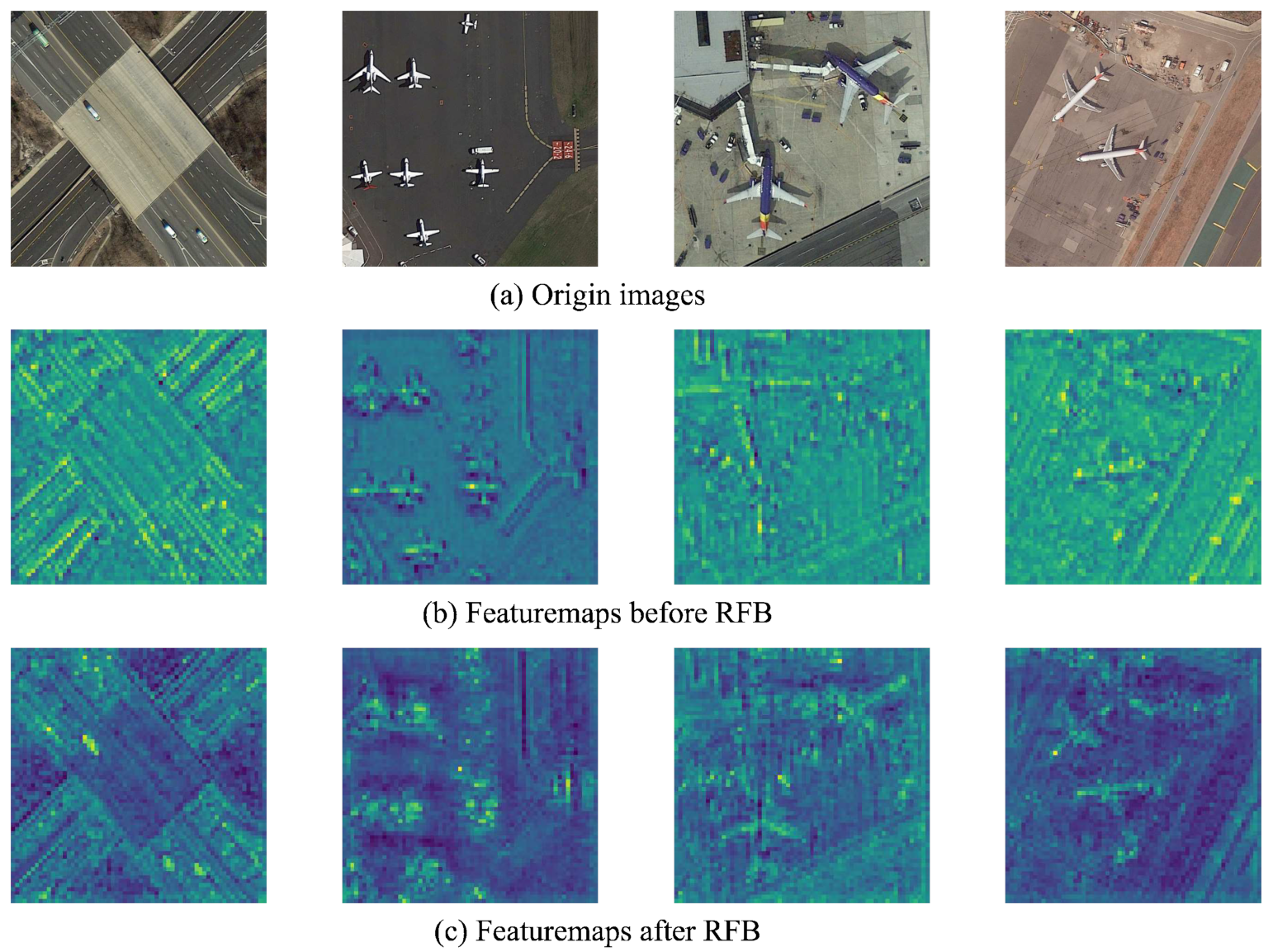

The RFB module uses multiple dilated convolutions with different dilation rates to sample the input features independently. The sampled features are then combined and fused to enlarge the model's receptive field. With a larger receptive field, the network can extract a broader range of spatial information, which improves its understanding of long-distance spatial relationships and implicit spatial structures and enables it to adapt to targets of different scales. It also strengthens the extraction and fusion of multi-scale features, facilitating better representation of complex spatial patterns.
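To see how the branches of an RFB-style module cover different scales at the same spatial resolution, the sketch below applies the recursive receptive-field rule to each branch separately. The branch layout (a 1 × 1 convolution, an optional k × k convolution, then a 3 × 3 convolution with dilation rate 1, 3, or 5) is an assumption modeled on the original RFB design; the configuration used in this work may differ.

```python
def receptive_field(layers):
    """RF_m = RF_{m-1} + (k_eff - 1) * prod(previous strides); layers are (k, stride, dilation)."""
    rf, jump = 1, 1
    for k, s, d in layers:
        rf += d * (k - 1) * jump   # d * (k - 1) equals k_eff - 1 for a dilated kernel
        jump *= s
    return rf


# Hypothetical RFB-style branches, all stride 1: (kernel, stride, dilation) per layer.
BRANCHES = {
    "rate_1": [(1, 1, 1), (3, 1, 1)],
    "rate_3": [(1, 1, 1), (3, 1, 1), (3, 1, 3)],
    "rate_5": [(1, 1, 1), (5, 1, 1), (3, 1, 5)],
}

for name, layers in BRANCHES.items():
    print(name, receptive_field(layers))
```

Under these assumptions the branches see 3, 9, and 15 input pixels per side, so concatenating them gives each output position a mixture of fine and coarse context at no loss of resolution.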

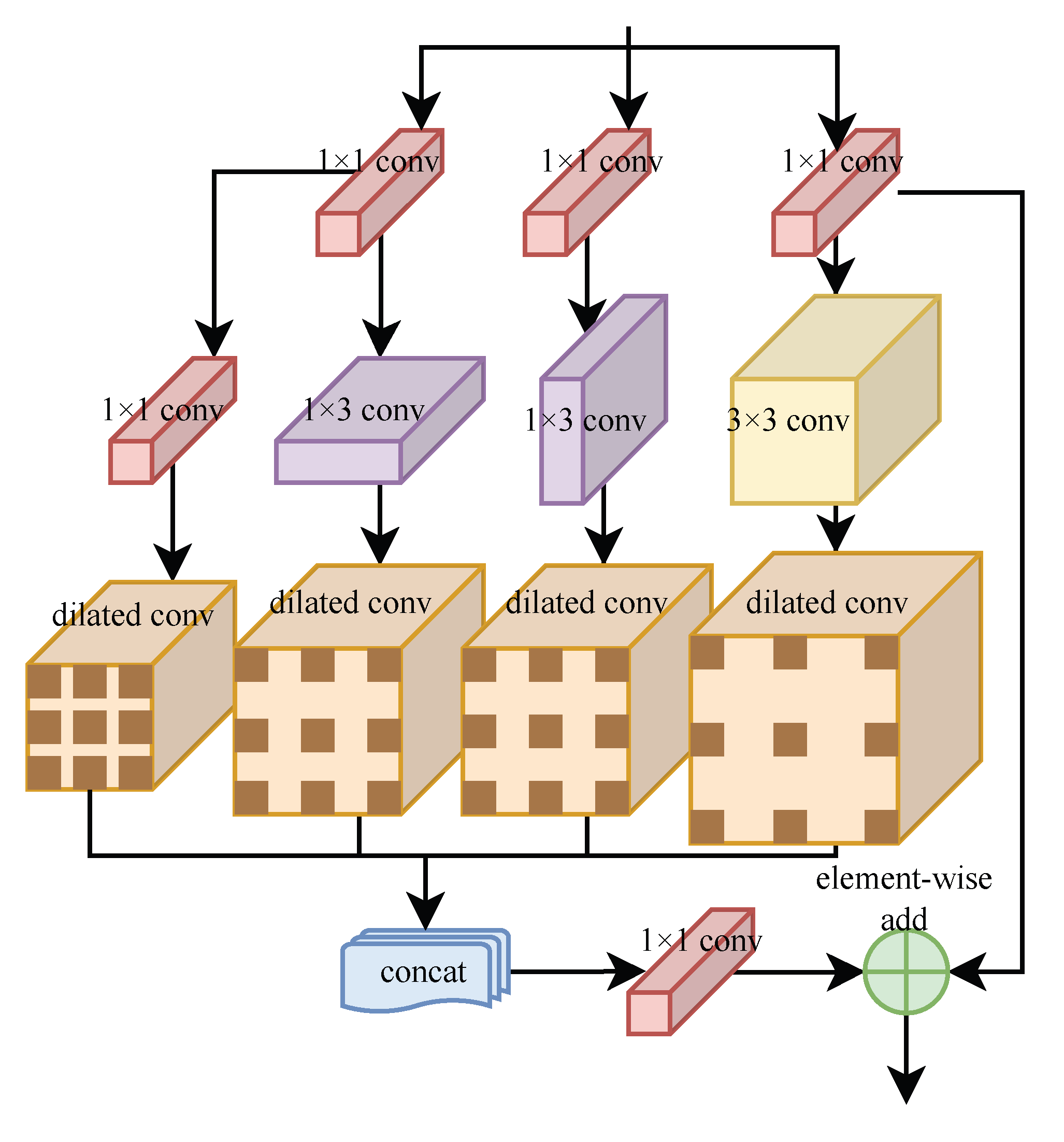

To improve the multi-scale detection accuracy of the model, we introduce the RFB module into the backbone network; its structure is shown in Figure 5.

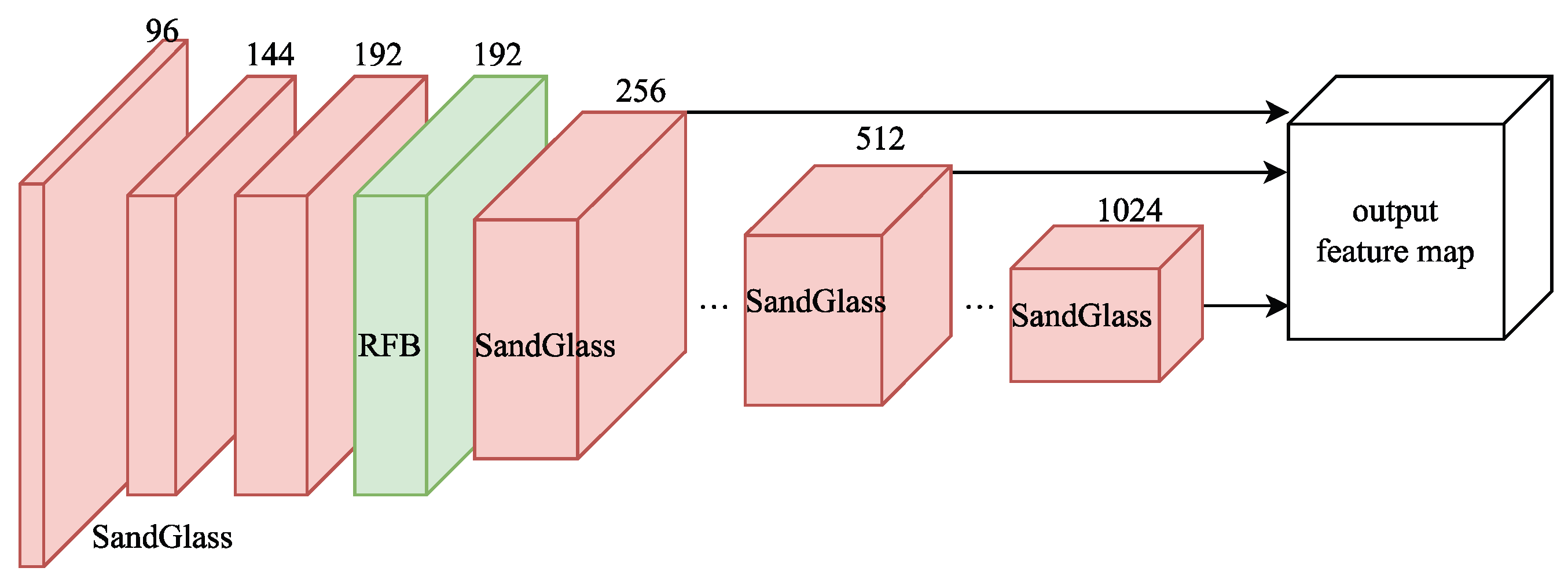

RFB samples the input features with a series of dilated convolutions with different dilation rates and then superimposes and fuses the sampled features. Because convolutions with different dilation rates have different receptive fields, the fused features contain both large-scale and small-scale receptive field features, which provides more contextual information, reduces the loss of detail during downsampling, and helps improve detection accuracy. The backbone network is a continuous downsampling process, and the deeper the layer, the more small-object information is lost; therefore, we add the RFB module to the shallow part of the backbone network to maximize small-object detection accuracy. The structure of the resulting MobileNeXt-RFB network is shown in Figure 6.

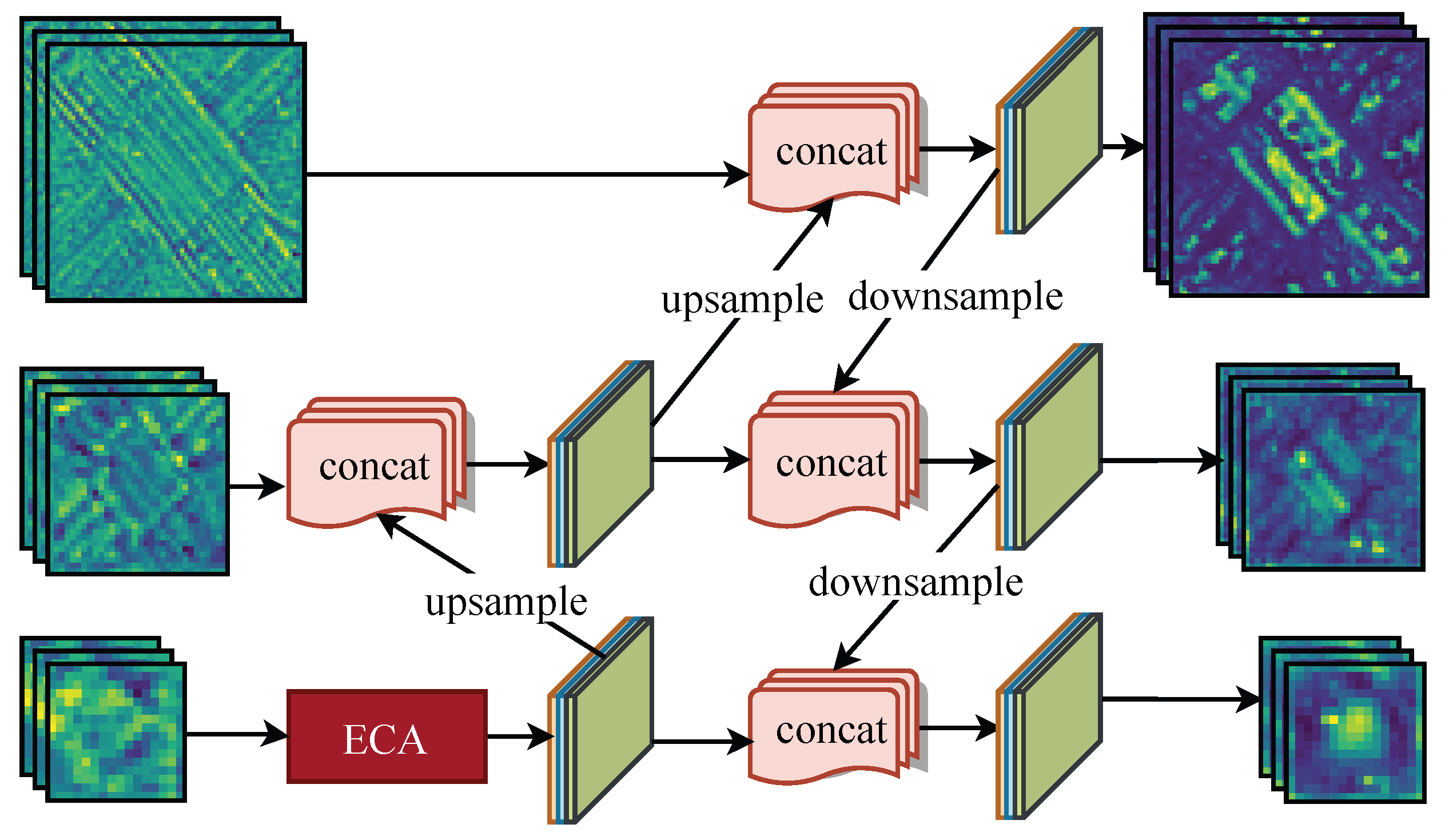

3.3. PAN-Lite Feature Fusion Network

After the backbone network downsamples the input image, it outputs feature maps at three different scales. The deeper, lower-resolution feature map contains richer semantic information, including more complete global features of the target, while the shallower, higher-resolution feature map contains strong positional information that better reflects the local texture and coordinate position of objects. To make better use of the target information in these feature maps while maintaining detection speed, we use the PAN-lite network, which is based on the PAN network, for multi-scale feature fusion. First, the number of channels of each input feature map is adjusted; then bidirectional fusion with the other scales is performed through top-down upsampling and bottom-up downsampling. The PAN-lite network structure is shown in Figure 7.
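The order of the bidirectional fusion can be traced on tensor shapes alone. The sketch below assumes a 416 × 416 input, backbone outputs at strides 8, 16, and 32, a hypothetical common width of 128 channels after the channel-adjustment step, 2× upsampling in the top-down path, stride-2 downsampling in the bottom-up path, and concatenation followed by a projection back to the common width; none of these values are taken from the paper, and the function names are illustrative.

```python
Shape = tuple[int, int, int]  # (channels, height, width)


def upsample(x: Shape) -> Shape:
    c, h, w = x
    return (c, h * 2, w * 2)               # e.g. 2x nearest-neighbour upsampling


def downsample(x: Shape) -> Shape:
    c, h, w = x
    return (c, (h + 1) // 2, (w + 1) // 2)  # stride-2 conv with "same" padding


def fuse(a: Shape, b: Shape, width: int) -> Shape:
    # Concatenate along channels, then project back to `width` channels (e.g. a 1x1 conv).
    assert a[1:] == b[1:], f"spatial mismatch {a} vs {b}"
    return (width, a[1], a[2])


def pan_lite(features: list[Shape], width: int = 128) -> list[Shape]:
    """features: backbone outputs ordered from high to low resolution."""
    # 1) Channel adjustment: project every input to the same width.
    p = [(width, h, w) for _, h, w in features]
    # 2) Top-down path: upsample deeper maps and fuse them into shallower ones.
    for i in range(len(p) - 2, -1, -1):
        p[i] = fuse(p[i], upsample(p[i + 1]), width)
    # 3) Bottom-up path: downsample shallower maps and fuse them into deeper ones.
    for i in range(1, len(p)):
        p[i] = fuse(p[i], downsample(p[i - 1]), width)
    return p


backbone = [(128, 52, 52), (256, 26, 26), (512, 13, 13)]  # strides 8, 16, 32 of 416x416
print(pan_lite(backbone))
```

The top-down pass finishes before the bottom-up pass starts, so the coarsest output already carries information that has travelled down to the finest scale and back.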

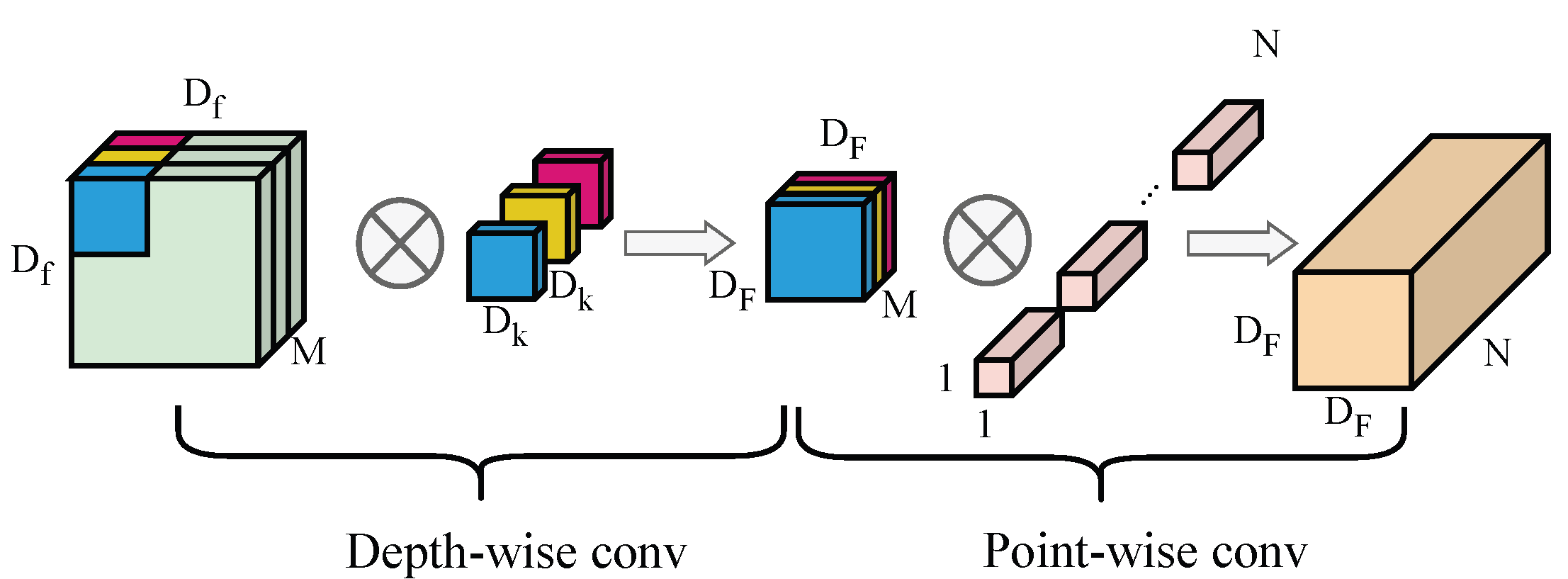

3.4. Depthwise Separable Convolution

To counteract the stacking effect caused by superimposing feature maps from different levels, the feature fusion network must transform the spatial and channel information of the stacked features. YOLOv4 enhances features with a convolution group of three 1 × 1 convolutions and two 3 × 3 convolutions. However, this design significantly increases the model size, which hinders detection speed. Therefore, we replace these five convolutions with two 1 × 1 convolutions and one depthwise separable convolution.

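The depthwise separable convolution [33] consists of a depthwise convolution followed by a pointwise convolution, as shown in Figure 8. Let the input feature map size be $D_F \times D_F \times M$, the output feature map size be $D_G \times D_G \times N$, and the kernel size be $D_K \times D_K$. The computation and parameter sizes of standard convolution are given by Equations (4) and (5), respectively:

$$Q_{\mathrm{std}} = D_K \cdot D_K \cdot M \cdot N \cdot D_G \cdot D_G \tag{4}$$

$$P_{\mathrm{std}} = D_K \cdot D_K \cdot M \cdot N \tag{5}$$

The computation and parameter sizes of depthwise separable convolution are the sums of those of the depthwise and pointwise convolutions, as given by Equations (6) and (7), respectively:

$$Q_{\mathrm{dws}} = D_K \cdot D_K \cdot M \cdot D_G \cdot D_G + M \cdot N \cdot D_G \cdot D_G \tag{6}$$

$$P_{\mathrm{dws}} = D_K \cdot D_K \cdot M + M \cdot N \tag{7}$$

Compared with standard convolution, depthwise separable convolution has a much lower parameter count and computational cost: dividing Equation (7) by Equation (5), or Equation (6) by Equation (4), gives a ratio of $1/N + 1/D_K^2$, so with a 3 × 3 kernel and many output channels the cost drops by a factor of roughly 8 to 9.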
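The savings can be checked numerically. The minimal sketch below evaluates the standard and depthwise separable cost formulas (multiply–accumulate counts, biases ignored) for an example layer, and then compares a YOLOv4-style five-convolution group with the lighter two-1 × 1-plus-separable group described in this section. The 52 × 52 × 256 layer, the 512-channel group input, and the 13 × 13 map are illustrative assumptions.

```python
def standard_conv(dk: int, m: int, n: int, dg: int) -> tuple[int, int]:
    """(MACs, weights) of a dk x dk convolution from m to n channels on a dg x dg output."""
    return dk * dk * m * n * dg * dg, dk * dk * m * n


def depthwise_separable(dk: int, m: int, n: int, dg: int) -> tuple[int, int]:
    """Depthwise dk x dk conv on m channels followed by a 1x1 pointwise conv to n channels."""
    macs = dk * dk * m * dg * dg + m * n * dg * dg
    weights = dk * dk * m + m * n
    return macs, weights


DK, M, N, DG = 3, 256, 256, 52
std = standard_conv(DK, M, N, DG)
dws = depthwise_separable(DK, M, N, DG)
ratio = dws[1] / std[1]
print("standard (MACs, weights):", std)
print("separable (MACs, weights):", dws)
print("weight ratio:", ratio, "vs 1/N + 1/DK^2 =", 1 / N + 1 / DK**2)

# Hypothetical neck groups with C input channels on a 13x13 map (widths are illustrative).
C = 512
yolov4_group = [standard_conv(1, C, C // 2, 13), standard_conv(3, C // 2, C, 13),
                standard_conv(1, C, C // 2, 13), standard_conv(3, C // 2, C, 13),
                standard_conv(1, C, C // 2, 13)]
lite_group = [standard_conv(1, C, C // 2, 13), depthwise_separable(3, C // 2, C, 13),
              standard_conv(1, C, C // 2, 13)]
print("5-conv group weights:", sum(w for _, w in yolov4_group))
print("lite group weights:  ", sum(w for _, w in lite_group))
```

Under these assumptions the lighter group needs about one seventh of the weights of the five-convolution group.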

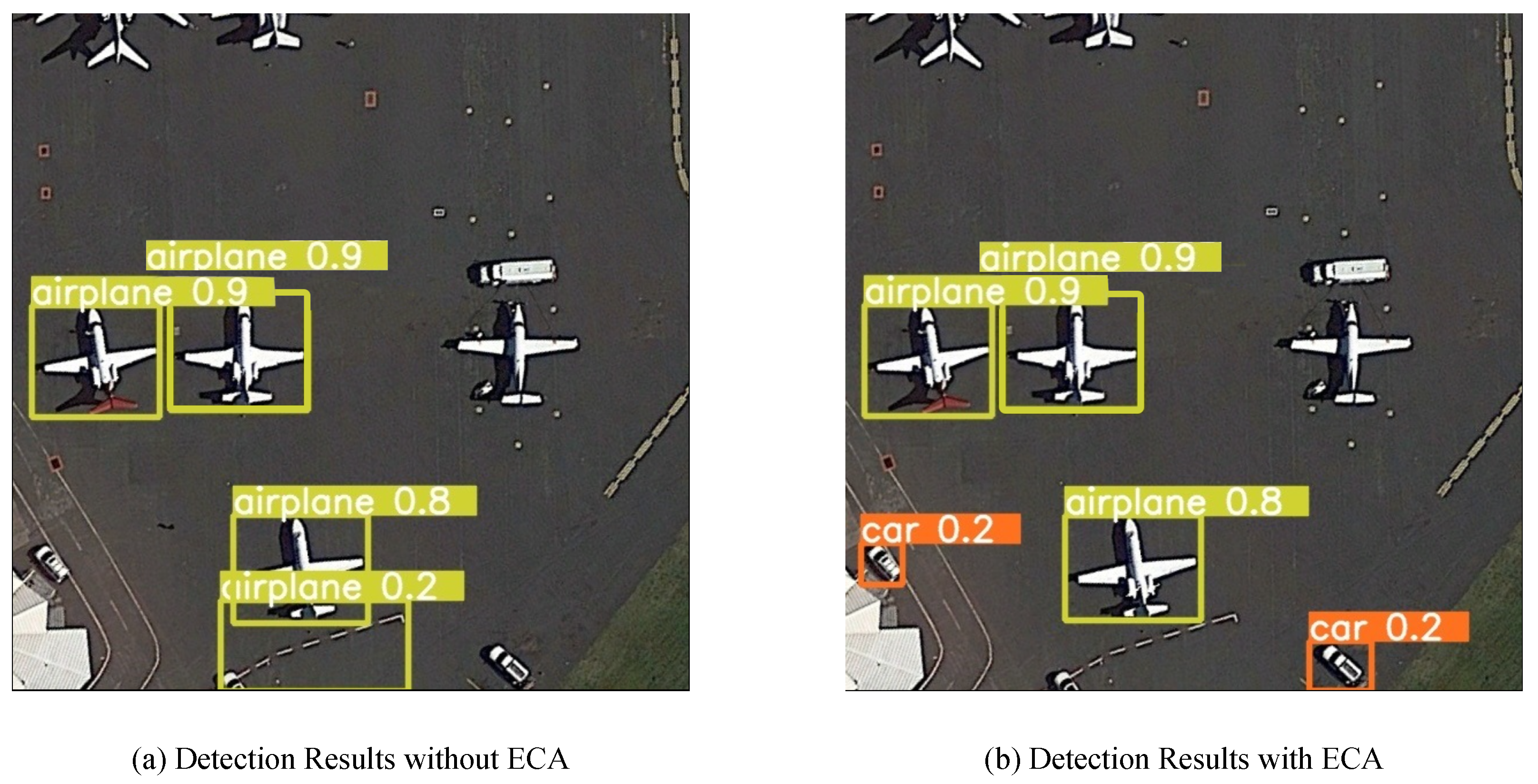

3.5. An Efficient Channel-Attention Mechanism

Many soft attention modules do not change the output size, so they can be flexibly inserted into various parts of a convolutional network; however, they add training parameters and increase computational cost. As a result, a growing number of works focus on the trade-off between the number of parameters and accuracy, and various lightweight attention modules have been proposed [34,35].

The ECA module is a cross-channel interaction mechanism that does not require dimensionality reduction. It uses an adaptively sized 1D convolution kernel to implement local cross-channel interaction. The input feature map is first reduced to a channel descriptor by global average pooling; this descriptor is passed through a 1D convolution and the sigmoid function to produce a weight vector, and the input features are then multiplied channel-wise by these weights. Suppose the input feature map of the ECA module is $X \in \mathbb{R}^{H \times W \times C}$; global average pooling (GAP) converts it into a $1 \times 1 \times C$ vector $y$, as shown in Equation (8):

$$y_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j) \tag{8}$$

where $C$ is the number of channels, $x_c(i, j)$ is the local feature of channel $c$ at position $(i, j)$, $H$ is the number of rows, and $W$ is the number of columns of the feature map. The weight of channel $i$ is calculated by Equation (9):

$$\omega_i = \sigma\left(\sum_{j=1}^{k} w^j y_i^j\right), \quad y_i^j \in \Omega_i^k \tag{9}$$

where $\sigma$ is the sigmoid function, $w^j$ are the 1D convolution weights shared across channels, and $\Omega_i^k$ represents the set of $k$ adjacent channels of $y_i$. The resulting weights are reshaped and multiplied with the original feature map.
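A plain-Python sketch of the ECA forward pass follows. It assumes a feature map stored as nested lists indexed `[channel][row][column]`, zero padding in the 1-D convolution, and the adaptive kernel-size rule from the original ECA-Net paper, $k = |\log_2(C)/\gamma + b/\gamma|_{odd}$ with $\gamma = 2$ and $b = 1$. The weights passed in are placeholders rather than trained values, and the `gate` argument allows the sigmoid to be swapped for an approximation such as a Hard Sigmoid.

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1-D kernel size: (log2(C) + b) / gamma, truncated and forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def eca(x, weights, gate=sigmoid):
    """x: feature map as x[c][i][j]; weights: k shared 1-D conv weights (k odd)."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    k = len(weights)
    # Global average pooling, Equation (8): one scalar per channel.
    y = [sum(sum(row) for row in x[c]) / (H * W) for c in range(C)]
    # 1-D convolution over the channel axis with zero padding, then the gate, Equation (9).
    pad = k // 2
    w = []
    for c in range(C):
        acc = 0.0
        for j in range(k):
            n = c + j - pad              # index of a neighbouring channel
            if 0 <= n < C:
                acc += weights[j] * y[n]
        w.append(gate(acc))
    # Rescale every channel of the input by its weight.
    return [[[w[c] * v for v in row] for row in x[c]] for c in range(C)], w


if __name__ == "__main__":
    fmap = [[[float(c + 1)] * 4 for _ in range(4)] for c in range(4)]  # 4 channels of 4x4
    out, channel_weights = eca(fmap, [0.0, 1.0, 0.0])  # identity kernel: weight = gate(mean)
    print(eca_kernel_size(256), [round(v, 3) for v in channel_weights])
```

Because the kernel is shared across channels, the module adds only $k$ weights regardless of $C$, which is what makes it attractive for a lightweight detector.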

Because complex, high-precision floating-point computation is difficult to perform on FPGA hardware, we approximate the Sigmoid function with the Hard Sigmoid function. The Hard Sigmoid and Sigmoid functions are given by Equations (10) and (11), respectively.
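Hard Sigmoid is defined slightly differently across frameworks, so the sketch below assumes the common piecewise-linear form $\mathrm{ReLU6}(x + 3)/6$ (the definition used by PyTorch's `torch.nn.Hardsigmoid`), which may differ from Equation (10), and measures how far it deviates from the true sigmoid over a range of inputs. The approximation needs only an addition, a clamp, and a constant scaling, which map well to fixed-point hardware.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def hard_sigmoid(x: float) -> float:
    """Assumed form: 0 below -3, 1 above 3, x/6 + 1/2 in between."""
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


xs = [i / 100 for i in range(-800, 801)]   # inputs from -8 to 8 in steps of 0.01
max_err = max(abs(hard_sigmoid(x) - sigmoid(x)) for x in xs)
print(f"max |hard_sigmoid - sigmoid| on [-8, 8]: {max_err:.4f}")
print(hard_sigmoid(0.0), sigmoid(0.0))     # both 0.5 at the origin
```

The largest deviation occurs near |x| ≈ 1.3 and stays below 0.07, which is small relative to the 0 to 1 range of the channel weights.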

3.6. Data Augmentation

Due to environmental factors and the influence of the viewing angle of the aerial platform (such as airplanes and drones), the images collected by remote sensing platforms have complex backgrounds, lighting, viewing angles, and occlusion conditions that cannot be fully covered by the existing dataset.

To mitigate overfitting during training and enhance the model's generalization ability and robustness, we apply data augmentation, i.e., various transformations and modifications of the images, to the existing dataset. The specific data augmentation methods are illustrated in Figure 9. These techniques introduce diversity into the training data and expose the model to a wider range of variations, thereby improving its performance on unseen data.

To simulate real-world conditions, the augmentations include random scaling, rotation, brightness adjustment, occlusion, and motion blur, which introduce variability and better prepare the model for diverse and challenging scenes. Additionally, because accurately detecting small objects is important, we incorporate the Copy–Paste data augmentation method, which copies the image regions inside small-object bounding boxes and pastes them at random positions. This provides the network with more examples of small objects, helping it learn effective feature representations for detecting and localizing them accurately and improving its performance on small object detection.
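The Copy–Paste step for small objects can be sketched on a toy single-channel image held as nested lists. The sketch assumes an area threshold of 32 × 32 pixels for "small" objects (a COCO-style convention, not a value from the paper), pastes each small object once at a random position, and rejects positions that overlap any existing box; blending, rescaling, and multi-channel images are omitted, and the function names are hypothetical.

```python
import random

Box = tuple[int, int, int, int]  # (x1, y1, x2, y2) with exclusive right/bottom edges


def overlaps(a: Box, b: Box) -> bool:
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def copy_paste_small(image, boxes, max_area=32 * 32, tries=20, seed=0):
    """Return a new image and box list in which each small object is pasted once more.

    Assumption: "small" means area <= max_area; positions overlapping any box are rejected.
    """
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]          # never modify the input image
    all_boxes = list(boxes)
    for x1, y1, x2, y2 in boxes:
        bw, bh = x2 - x1, y2 - y1
        if bw * bh > max_area or bw > w or bh > h:
            continue                         # only small objects are duplicated
        for _ in range(tries):
            nx, ny = rng.randint(0, w - bw), rng.randint(0, h - bh)
            new = (nx, ny, nx + bw, ny + bh)
            if any(overlaps(new, b) for b in all_boxes):
                continue                     # avoid covering existing objects
            for dy in range(bh):
                out[ny + dy][nx:nx + bw] = image[y1 + dy][x1:x2]
            all_boxes.append(new)
            break
    return out, all_boxes


if __name__ == "__main__":
    img = [[0] * 64 for _ in range(64)]
    for y in range(4, 12):
        for x in range(4, 12):
            img[y][x] = 255                  # an 8x8 "small object"
    new_img, new_boxes = copy_paste_small(img, [(4, 4, 12, 12)])
    print(new_boxes)
```

Each pasted copy comes with its own bounding box, so the detector receives an extra labeled small object without any manual annotation.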

3.7. K-Means Anchor Box Clustering

In the YOLOv4 model, the initial anchor box sizes are calculated based on the COCO image dataset. However, these anchor box sizes may not be suitable for the optical remote sensing image dataset used in this work due to the significant differences in object scale distribution. To improve the accuracy of the detection model, we employ the k-means clustering algorithm to determine appropriate anchor box sizes specifically for the DIOR optical remote sensing dataset. The k-means clustering algorithm starts by randomly selecting k samples as the initial centroids. Then, it calculates the distance between each sample in the dataset and the selected initial centroids. Based on the distance, each sample is assigned to the cluster represented by the nearest centroid. This process is repeated iteratively until the results stabilize, ensuring that the assignment of samples to clusters becomes consistent. By using the k-means clustering algorithm, we can calculate anchor box sizes that better suit the characteristics of the DIOR optical remote sensing dataset. This approach improves the accuracy of the detection model by providing anchor boxes that are more appropriate for the specific objects and scale distribution present in the dataset.

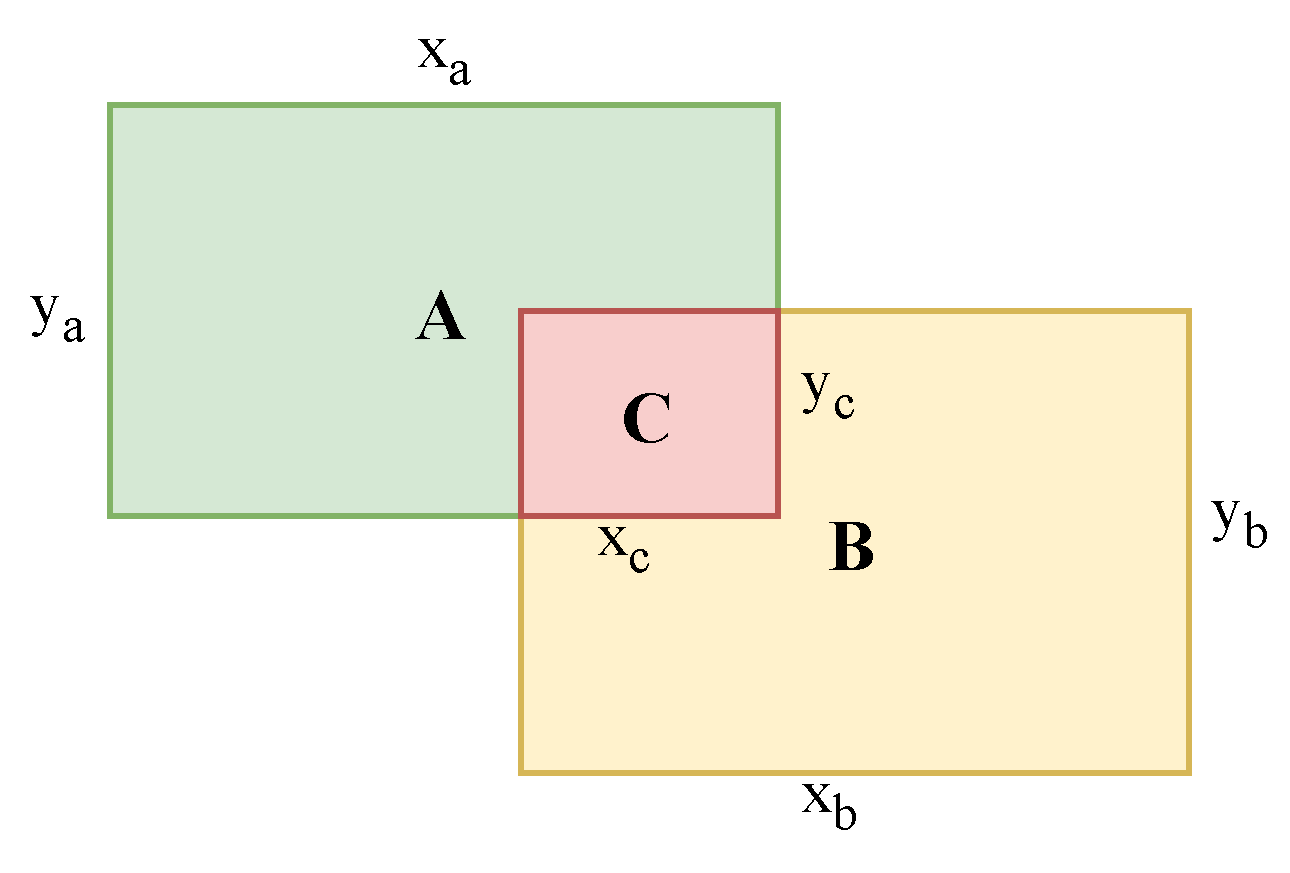

To prevent the clustering results for large objects from being distorted by a distance measure that ignores box area, we use the intersection over union (IoU) of box areas to measure the distance between samples. IoU is a commonly used evaluation metric in computer vision and object detection that measures the overlap between a predicted bounding box and the ground-truth bounding box of an object. It is calculated by dividing the intersection area of the two bounding boxes by their union area, as illustrated in Figure 10. Equation (12) shows the IoU calculation formula:

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|} \tag{12}$$

where $A$ and $B$ are the two bounding boxes.
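The clustering can be sketched with boxes reduced to (width, height) pairs that share a common centre, so that IoU depends only on box sizes, using the distance $d = 1 - \mathrm{IoU}$ (the usual choice for anchor clustering, assumed here). Two details deliberately differ from the description above and are assumptions of this sketch: for a reproducible result, centroids are initialized at evenly spaced area quantiles instead of random samples, and each centroid is updated to the mean width and height of its cluster.

```python
def iou_wh(a: tuple[float, float], b: tuple[float, float]) -> float:
    """IoU of two boxes given as (w, h) and aligned at the same centre."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=100):
    # Assumption: deterministic initialization at evenly spaced area quantiles.
    by_area = sorted(boxes, key=lambda wh: wh[0] * wh[1])
    centroids = [by_area[int((i + 0.5) * len(boxes) / k)] for i in range(k)]
    for _ in range(iters):
        # Assign each box to the centroid with the smallest 1 - IoU distance.
        clusters = [[] for _ in range(k)]
        for wh in boxes:
            best = min(range(k), key=lambda c: 1.0 - iou_wh(wh, centroids[c]))
            clusters[best].append(wh)
        # Move each centroid to the mean (w, h) of its members; keep empty clusters as-is.
        new = [
            (sum(w for w, _ in cl) / len(cl), sum(h for _, h in cl) / len(cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new == centroids:
            break                            # assignments have stabilized
        centroids = new
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


def mean_best_iou(boxes, anchors):
    """Average IoU between each box and its best-matching anchor."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)


if __name__ == "__main__":
    boxes = [(10, 10), (12, 9), (9, 11), (100, 50), (110, 55), (95, 48)]
    anchors = kmeans_anchors(boxes, 2)
    print(anchors, round(mean_best_iou(boxes, anchors), 3))
```

With the 1 − IoU distance, a 2-pixel error on a 10-pixel box counts as much as a 20-pixel error on a 100-pixel box, which is the size balance the area-based metric is meant to provide.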

3.8. FPGA Hardware Accelerator

We propose a high-performance, low-power ARM + FPGA neural network hardware acceleration system based on the Xilinx Zynq UltraScale+ MPSoC chip and the Xilinx DPU IP core for power-constrained airborne information processing systems. The architecture of the hardware acceleration system is shown in Figure 11.

The computationally dense parts of the neural network, including feature extraction, feature fusion, and prediction regression, are deployed to the neural network accelerator on the FPGA for parallel acceleration, while pre-processing and post-processing operations, which have relatively low computational complexity, run on the CPU; this reduces FPGA resource usage and balances the system's computational load.
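This division of labor can be illustrated with a three-stage pipeline in which CPU pre-processing, accelerator inference, and CPU post-processing run concurrently on successive frames, so that throughput is set by the slowest stage rather than by the sum of all three. The stage functions below are hypothetical placeholders that do not call Vitis AI, VART, or XRT, and thread-based overlap is only one possible way to organize the stages.

```python
import queue
import threading

SENTINEL = None  # marks the end of the frame stream


def stage(fn, inbox: queue.Queue, outbox: queue.Queue) -> threading.Thread:
    """Run fn on every item from inbox and forward the results; one worker keeps FIFO order."""
    def worker():
        while (item := inbox.get()) is not SENTINEL:
            outbox.put(fn(item))
        outbox.put(SENTINEL)
    t = threading.Thread(target=worker, daemon=True)
    t.start()
    return t


# Hypothetical stand-ins for the real work on each device.
def preprocess(frame_id):   # CPU: resize / normalize / quantize the input
    return ("tensor", frame_id)


def infer(tensor):          # FPGA: backbone, neck and head on the accelerator
    return ("raw_output", tensor[1])


def postprocess(raw):       # CPU: decode boxes and run non-maximum suppression
    return f"detections for frame {raw[1]}"


def run_pipeline(frame_ids):
    q_in, q_pre, q_inf, q_out = (queue.Queue(maxsize=4) for _ in range(4))
    workers = [stage(preprocess, q_in, q_pre), stage(infer, q_pre, q_inf),
               stage(postprocess, q_inf, q_out)]

    def feed():
        for f in frame_ids:
            q_in.put(f)
        q_in.put(SENTINEL)

    feeder = threading.Thread(target=feed, daemon=True)
    feeder.start()
    results = []
    while (r := q_out.get()) is not SENTINEL:
        results.append(r)
    for t in workers + [feeder]:
        t.join()
    return results


print(run_pipeline(range(3)))
```

The bounded queues act as back-pressure: if the accelerator stage is the bottleneck, the CPU stages simply wait instead of accumulating frames in memory.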

3.8.1. Configurations of DPU

The DPU offers an architectural parameter that determines its scale, specifically the degree of parallelism. This parameter determines the number of logical resources allocated to the DPU. Depending on the FPGA chip or computing task at hand, the DPU can be customized by adjusting these architectural parameters.

Table 1 provides an overview of different configurations. In this work, the ZCU104 FPGA board and the complexity of the model are considered when selecting the configuration parameter for the DPU architecture. After evaluating the available hardware logic resources and model requirements, a configuration parameter of B4096 is chosen for the DPU. This specific choice ensures compatibility between the DPU and the ZCU104 board while accommodating the model’s complexity effectively.
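The number in a configuration name such as B4096 is the DPU's peak number of operations per clock cycle, which follows from its pixel, input-channel, and output-channel parallelism, with each multiply–accumulate counted as two operations. The sketch below assumes the parallelism values listed in Xilinx's DPUCZDX8G product guide (PG338) for a few configurations and an example clock of 300 MHz; both should be checked against the guide for the IP version in use, and the clock is not the frequency used in this work.

```python
# Assumed (pixel, input-channel, output-channel) parallelism per configuration,
# taken from the DPUCZDX8G product guide; verify against the IP version in use.
CONFIGS = {
    "B512": (4, 8, 8),
    "B1024": (8, 8, 8),
    "B2304": (8, 12, 12),
    "B4096": (8, 16, 16),
}


def peak_ops_per_cycle(pp: int, icp: int, ocp: int) -> int:
    # pp * icp * ocp multipliers, each doing one MAC (= 2 operations) per cycle.
    return 2 * pp * icp * ocp


def peak_gops(name: str, clock_mhz: float = 300.0) -> float:
    # Assumption: 300 MHz is an example clock, not the frequency used in this work.
    return peak_ops_per_cycle(*CONFIGS[name]) * clock_mhz / 1e3


for name, dims in CONFIGS.items():
    print(name, peak_ops_per_cycle(*dims), f"{peak_gops(name):.1f} GOPS at 300 MHz (assumed)")
```

Peak figures are upper bounds; the throughput actually achieved depends on how well each layer's shape matches the parallelism dimensions and on memory bandwidth.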

Deep learning models on FPGA platforms are generally loaded with model structure and parameters in a one-time initialization stage through dedicated data reading interfaces. This approach requires loading a large amount of data during initialization, which occupies a significant amount of on-chip resources and affects the efficiency of model inference. Therefore, we propose a software–hardware co-design model deployment method based on Xilinx Runtime (XRT) to dynamically load the model structure and parameters.

Firstly, the network model is quantized into an 8-bit fixed-point type to adapt to the FPGA hardware structure and reduce computational complexity and memory bandwidth usage. Then, the quantized model is compiled, and the model structure is compiled into a binary file adapted to the DPU hardware structure based on the hardware design. Finally, the implemented DPU hardware structure is loaded onto the target hardware platform, and the construction of the model acceleration application on the target hardware platform is completed.

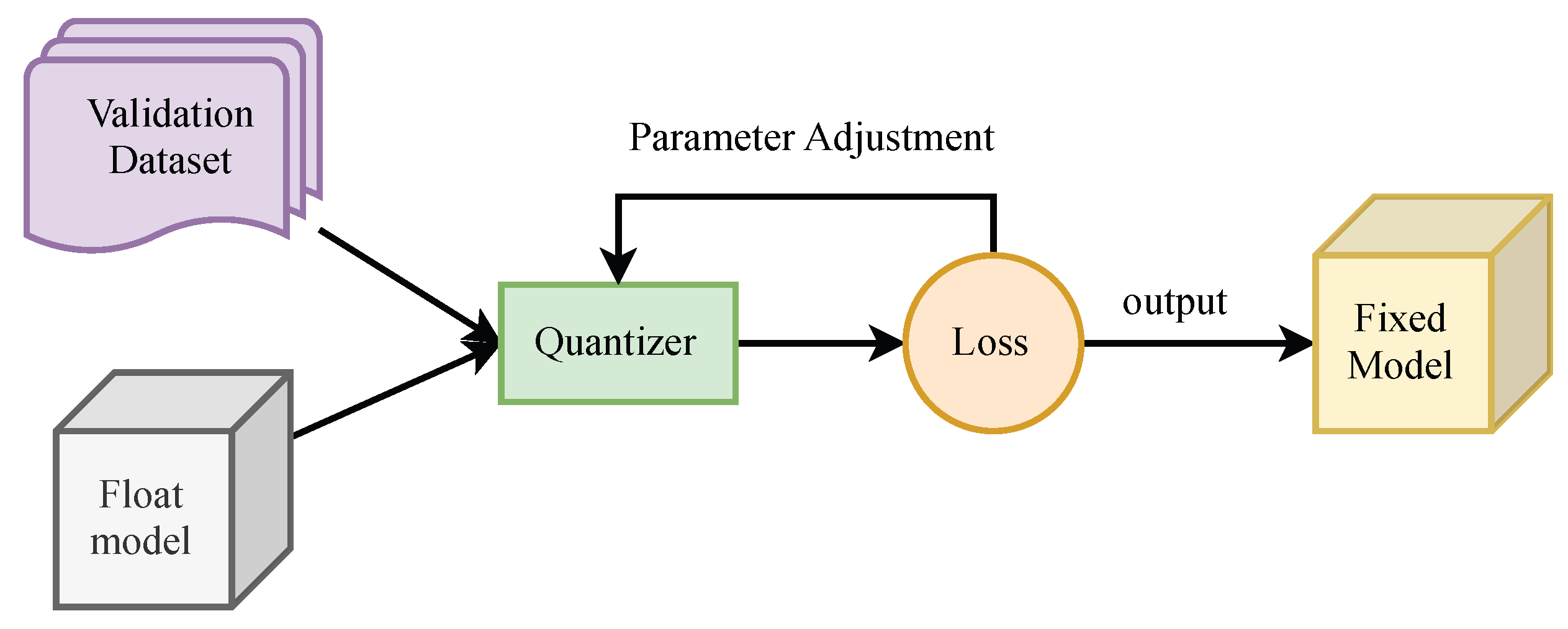

3.8.2. 8-Bit Fixed-Point Quantization

The computational units in the Zynq UltraScale+ FPGA chip used in this work are DSP48E2 slices, each of which can perform two parallel INT8 multiply–accumulate (MACC) operations when the two operations share the same kernel weight. We therefore quantize both weights and activations to 8-bit fixed-point numbers to compress the model data. Mapping floating-point numbers to 8-bit fixed-point numbers enables higher parallelism and more efficient resource utilization, and greatly reduces the required memory and computational resources.
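The dual-INT8 capability rests on a packing trick: two 8-bit activations that share one weight are placed in a single wide operand, separated by enough bits that their two products do not overlap, so one wide multiplication yields both results. The sketch below demonstrates the arithmetic with Python integers; the 18-bit field offset follows the packing scheme Xilinx has described for DSP48E2 INT8 optimization, but this illustrates the principle only, is not a model of the DPU datapath, and omits the guard bits real hardware needs when products are accumulated.

```python
def packed_dual_mul(a: int, b: int, w: int, shift: int = 18) -> tuple[int, int]:
    """Compute a*w and b*w with one multiplication of a packed operand.

    a, b, w are signed 8-bit integers in [-128, 127].
    """
    for v in (a, b, w):
        assert -128 <= v <= 127, "operands must be INT8"
    packed = (a << shift) + b          # both activations in one wide word
    product = packed * w               # equals (a*w << shift) + b*w
    # Lower field: sign-extend the low `shift` bits to recover b*w.
    low = product & ((1 << shift) - 1)
    if low >= 1 << (shift - 1):
        low -= 1 << shift
    # Upper field: remove b*w, then shift down to recover a*w exactly.
    high = (product - low) >> shift
    return high, low


print(packed_dual_mul(-100, 57, -128))   # (12800, -7296)
```

Because every INT8 product lies within ±16384, it fits comfortably in the 18-bit lower field, so the two results never interfere.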

The model is quantized using an iterative refinement method. The 32-bit floating-point model trained with TensorFlow and a validation dataset composed of a subset of the training data (about 1000 images) are input to the quantizer together. In each quantization iteration, the forward inference of the model is checked, the accuracy loss is calculated, and the quantization parameters are adjusted. Over multiple iterations, the 32-bit floating-point model is gradually converted into an 8-bit fixed-point model with minimal accuracy loss, as shown in Figure 12.
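The quantize, evaluate, and adjust loop can be sketched for a single tensor. The sketch assumes symmetric INT8 quantization with a power-of-two scale (a fixed-point position), the form DPU-oriented quantizers typically use, and substitutes the mean squared error between original and dequantized values for the accuracy check; the procedure described above instead checks the model's forward-inference accuracy on the validation subset and adjusts many tensors jointly. The example weights are made up.

```python
def quantize(values, frac_bits: int):
    """Symmetric INT8 fixed point: q = clip(round(x * 2^frac_bits), -128, 127).

    Note: Python's round() rounds halves to even; hardware rounding modes may differ.
    """
    scale = 2.0 ** frac_bits
    return [max(-128, min(127, round(v * scale))) for v in values]


def dequantize(q, frac_bits: int):
    return [x / 2.0 ** frac_bits for x in q]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def search_fix_position(values, candidates=range(-4, 12)):
    """Try each fixed-point position and keep the one with the lowest error (a stand-in metric)."""
    best = None
    for fb in candidates:
        err = mse(values, dequantize(quantize(values, fb), fb))
        if best is None or err < best[1]:
            best = (fb, err)
    return best


if __name__ == "__main__":
    weights = [0.31, -0.07, 0.52, -0.98, 0.003, 0.76]   # made-up example tensor
    fb, err = search_fix_position(weights)
    print(fb, err, quantize(weights, fb))
```

More fractional bits give finer resolution until the largest value starts to clip, so the search settles on the finest position that still represents the tensor's range (7 fractional bits for the example values).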

3.8.3. Model Compilation

The DPU hardware architecture description and the quantized 8-bit fixed-point model are input to the compiler, which generates a binary file that can run on the DPU. The DPU reads this file through the Xilinx Runtime (XRT) and performs forward inference of the model.

6. Conclusions

Building on existing object detection algorithms, we have proposed RFA-YOLO, a YOLO model for optical remote sensing images enhanced with an attention mechanism and receptive field blocks. The key improvements are a lightweight backbone and a lightweight feature fusion neck, which aim to improve both accuracy and detection speed. To reduce the number of parameters without compromising performance, we employ depthwise separable convolutions and SandGlass blocks. To improve multi-scale detection precision and the detection of small targets, we incorporate the Receptive Field Block (RFB), built on dilated convolutions, and the Efficient Channel Attention (ECA) module, which enable the model to capture more contextual information. For detection speed, we adopt the lightweight MobileNeXt and PAN-lite networks, which improve computational efficiency without sacrificing accuracy. Together, these techniques give RFA-YOLO improved accuracy, faster detection, and better handling of diverse detection scenarios in optical remote sensing applications. The model is then deployed on an FPGA with low power consumption, achieving a better compromise between detection speed, accuracy, and power consumption. To demonstrate the effectiveness of the proposed object detection model and FPGA hardware acceleration scheme, we conducted a series of ablation experiments on different datasets, as well as comparative experiments on three hardware platforms: CPU, GPU, and FPGA.
The experimental results show that the improved model achieves a 4.57% mAP improvement over the baseline model and reaches a detection speed of 27.97 FPS at an average power consumption of 15.82 W on the FPGA, reducing power consumption by 89.72% compared with the GPU and increasing detection speed by 189.84% compared with the CPU, which meets the requirements of on-board real-time detection applications. However, some aspects of the proposed approach can still be improved; for example, the complex decoding and non-maximum suppression post-processing operations can easily become performance bottlenecks. Future research will therefore focus on further optimizing the network model to achieve end-to-end detection, by refining the architecture, exploring advanced techniques, and integrating additional components to improve the overall performance and efficiency of the detection process, with the aim of achieving more accurate and robust end-to-end detection for various applications.