1. Introduction

Ship detection in synthetic aperture radar (SAR) images has significant implications for various military and commercial domains, such as maritime surveillance and military reconnaissance [1]. Despite the considerable advances in ship detection techniques over the years, most existing methods are tailored to offshore ship detection, i.e., ships in a marine area, and have limited applicability to inshore ship detection [2]. Compared to offshore ship detection, inshore ship detection poses several challenges. First, the background consists of both ocean and harbor/land regions, which complicates the estimation of background clutter. Second, ships and harbor facilities have similar scattering characteristics in terms of intensity and spatial distribution, resulting in a high false alarm rate. Third, land/dock areas and ships may be connected, leading to low detection accuracy. Last, the contour and edge features are difficult to extract accurately due to the intrinsic multiplicative speckle noise [3]. Therefore, SAR ship detection for inshore scenes still represents the most challenging scenario in SAR target detection.

The most fundamental feature in SAR target detection is the amplitude; as a result, the constant false alarm rate (CFAR) detection method, which places particular emphasis on the amplitude feature, has become the most widely used algorithm for SAR ship detection [4]. CFAR detection methods model the statistical distribution of the background clutter to obtain an adaptive threshold, and then search for pixels that are unusually bright compared to the surrounding background clutter [5]. They typically achieve good performance in offshore scenes, since ships tend to appear as small bright targets with discernible shape information [6]. However, for inshore scenes, the conditions are totally different, and several problems appear for traditional CFAR detection. First, CFAR methods operate at the pixel level and cannot identify whether adjacent pixels belong to a target or to clutter regions. Therefore, in most cases, they require further morphological procedures to cluster pixels [7]. For inshore ship detection, this morphological clustering will connect ship pixels with strong land clutter, resulting in false negative detections. A second problem mainly arises in scenes with large targets or large areas of high-intensity land clutter. The weight of the high intensities in the distribution is so large that the statistical fit to the background clutter tends to exhibit excess kurtosis and a long tail. This inaccurate fit usually gives a higher intensity threshold, resulting in the loss of detected ship structures, which appears as holes and fractures [2,8,9,10].

Motivated by the target pixel clustering requirements, some researchers employ the super-pixel (SP) method to overcome the inshore ship detection challenge. SP methods typically return a set of locally coherent pixels, which benefits the extraction of region-based features [11,12]. The simple linear iterative clustering (SLIC) segmentation method [13] and the maximally stable extremal regions (MSER) method [14] are the two most widely used SP methods in SAR target detection. SLIC adopts k-means clustering to generate SPs, which cover the whole image region, whether it is the ship target or background clutter. Therefore, the extracted SP features have to be labeled by a CFAR detector or some prior knowledge. The main problem of SLIC is its inherent over-segmentation, which leads to excessive computational resources being allocated to large numbers of background clutter SPs (BCSPs), while the ship target SPs (STSPs) are much fewer [8]. In addition, the total number of SPs is normally predetermined in SLIC, so when strong noise is present, the target region cannot be outlined accurately, and the resulting SPs will include both the true target region and the surrounding speckle noise [2,8].

The MSER method is another category of SP methods, which defines the feature blobs by the extremal property of the intensity function in the blob region and on its outer boundary. The MSER method can return a more accurate contour of the target region, even with strong speckle noise, and typically finds only the bright regions, which avoids over-segmentation. However, a batch of nested MSER regions may be generated in a specific area, since real SAR images contain noise and many local extrema [15]. In addition, the extracted regions of harbor facilities and ship targets are quite similar, and the absence of background clutter patches makes it impossible to exclude false alarms by comparison. Therefore, such approaches often also require accurate and robust sea–land segmentation. However, current sea–land segmentation approaches are generally built on an amplitude segmentation method, such as the OTSU method, and are quite sensitive to dense speckle noise and strong sea clutter [2,16,17], resulting in inaccurate sea–land boundaries.

Motivated by the successful application of the human attention theory in optical remote sensing images [18], saliency analysis has been introduced to SAR target detection [19,20]. The saliency commonly used in SAR detection is a perceptual feature that describes the prominence of an object or location compared to its contextual surroundings, which easily attracts visual attention [21]; it is mainly applied to small and weak target detection. For inshore ship detection, the key challenges are false alarms in the harbor region and inaccurate sea–land segmentation rather than small and weak targets. As a result, the common saliency is not suitable for our problem. In this paper, a broader saliency is defined to alleviate the complexity caused by dense speckle noise, harbor facilities, and strong sea–land clutter, for more accurate sea–land segmentation and a lower false alarm rate.

With the rapid development of deep learning, convolutional neural network (CNN) based methods are increasingly used in SAR ship detection [22,23,24,25,26,27]. Data-driven deep learning approaches can build an end-to-end system that learns hierarchical features automatically and detects ship targets simultaneously without human intervention, and they have achieved state-of-the-art detection performance on widely used benchmark datasets. However, such performance usually requires a large amount of data and relies on the fundamental assumption that training and test data are identically and independently distributed (a.k.a. the i.i.d. assumption) [28]. In most datasets, the inshore scenario is relatively scarce compared to the offshore scenario, making it harder to satisfy the i.i.d. assumption. Some studies found that CNN based detectors tend to be weaker on inshore ship detection because the features extracted by convolution are easily affected by noise. In some extreme cases, changing one pixel can lead to totally different detection results [29]. Additional constraints are usually required to achieve reliable predictions when the i.i.d. assumption does not hold, but the predictions of most current deep models lack a physical explanation. In consideration of the special physical characteristics underlying SAR images, it is promising to combine the data-driven CNN methods with traditional model-driven methods to enhance interpretability and generalization in the future [30]. Therefore, a reliable model-driven method can still boost and supplement the future development of CNN based models.

In this paper, we propose a multi-modality saliency (MMS) method for ship detection in SAR images of complex inshore scenes with similar port facilities. The method extracts four types of modality information from the images: the MSER feature, the scale feature, the spatial distribution feature, and the intensity distribution feature. Each type of feature is converted into a saliency map: the super-pixel saliency map (SPSM), the ocean-buffer saliency map (OBSM), the local stability saliency map (LSSM), and the intensity saliency map (ISM). The method combines these saliency maps to detect ships with accurate contours and exclude false alarms with low stability, weak intensity, or located in land regions. The main contributions of this paper are as follows:

This paper provides a novel interdisciplinary perspective of surface metrology for SAR image segmentation by addressing the scale difference of the main elements of SAR images with the suitable usage of a second-order Gaussian regression filter.

This paper defines the spatial characteristics of SAR image elements as the local stability calculated by the moving standard deviation. It discovers distinct differences in the local stability between ships, sea regions, land regions, and connections between the sea and other elements, which demonstrates the detection effectiveness of the proposed local stability.

This paper proposes a novel robust constant false alarm rate (CFAR) procedure that can eliminate noise and large ships’ effects on background clutters’ statistical fitting. This procedure can also be widely used for fitting other distributions.

3. Materials and Methods

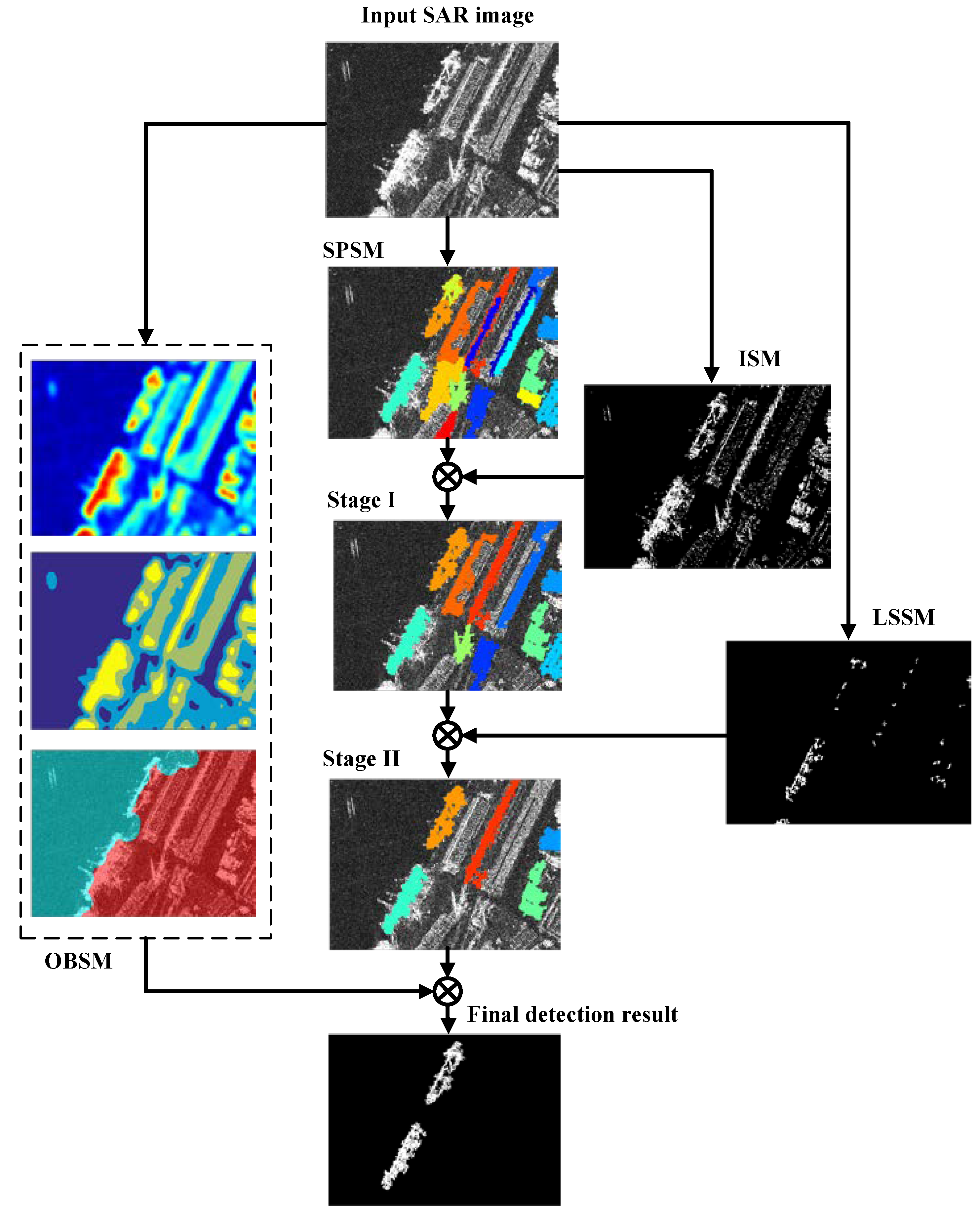

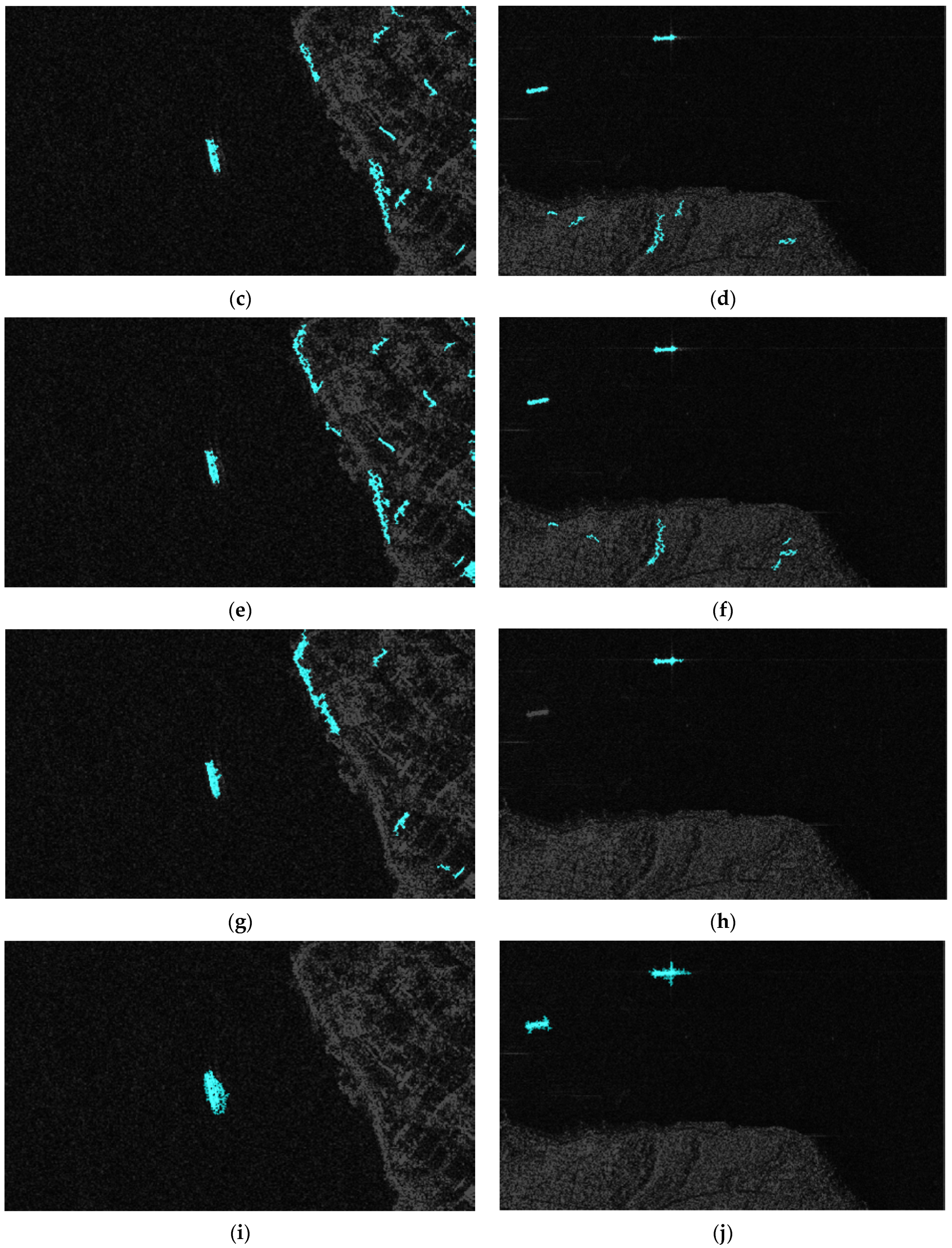

Figure 1 shows the workflow of our method. We use four modalities to detect ships in SAR images. First, we extract non-overlapping regions with a non-nested MSER procedure and form a super-pixel saliency map (SPSM), where the region features are not labeled and usually contain many false alarms. Second, we apply an improved robust K-CFAR detector to obtain an intensity saliency map (ISM) and use it to filter out false alarms from SPSM. This gives us the stage I results. Third, we calculate the local stability of each region with a moving standard deviation (σ) and create a local stability saliency map (LSSM). We use LSSM to refine the stage I results to obtain the stage II results. Fourth, we filter out low-frequency components with a second-order Gaussian regression filter and segment the image with a four-level OTSU method. We then dilate the lowest level to get an ocean-buffer saliency map (OBSM), which excludes targets near the coast from the stage II results. This gives us the final ship detection results. In real-time applications, these four saliency processes can be implemented in parallel. We arranged the workflow in Figure 1 for a better illustration.
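The cascade described above can be illustrated with a minimal sketch. It assumes that the SPSM is available as a list of candidate regions (each a list of `(row, col)` pixels) and that the ISM, LSSM, and OBSM are binary masks of the image size. The function names and the overlap criterion `min_overlap` are hypothetical choices for illustration; the text does not specify how a region is tested against each map.

```python
def overlap_fraction(region, mask):
    """Fraction of a region's (row, col) pixels that are set in a binary mask."""
    return sum(1 for r, c in region if mask[r][c]) / len(region)


def fuse_saliency(spsm_regions, ism, lssm, obsm, min_overlap=0.5):
    """Cascade three binary saliency maps over the SPSM candidate regions.

    The overlap test (min_overlap) is an assumption made for this sketch.
    """
    # Stage I: keep candidates supported by the intensity saliency map.
    stage1 = [reg for reg in spsm_regions
              if overlap_fraction(reg, ism) >= min_overlap]
    # Stage II: keep candidates supported by the local stability saliency map.
    stage2 = [reg for reg in stage1
              if overlap_fraction(reg, lssm) >= min_overlap]
    # Final: keep candidates that lie inside the ocean buffer.
    final = [reg for reg in stage2
             if overlap_fraction(reg, obsm) >= min_overlap]
    return stage1, stage2, final
```

Because each map is computed independently of the others, the three masks can be produced in parallel and fused only at the end, which is consistent with the remark on real-time application.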

The proposed SPSM, OBSM, LSSM, and ISM are introduced in detail below.

3.1. Super-Pixel Saliency Map (SPSM)

To reduce the computational cost of over-segmentation by the SLIC method, we employ the MSER method for extracting super-pixel region features [14]. The MSER method scans the intensity range [0, 255] of the input SAR image incrementally, with a constant step size, and detects region features whose areas remain relatively stable across different intensity levels. To do so, it constructs a series of binary cross-section images, one per intensity level. For each intensity level, the image pixels are assigned ‘1’ if their intensity is lower than or equal to that level, and ‘0’ otherwise. Each connected region of ‘1’ pixels is called an extremal region. As the intensity level increases, new extremal regions appear, and the existing ones expand and merge with each other. When the level reaches the maximum intensity of 255, there is only one extremal region, which covers the whole image. The MSER method tracks both the area and the area growth rate of the nested extremal regions. When two regions merge, the tracking of the smaller one terminates. For a given region, the MSER method measures the variation of its area between adjacent intensity thresholds. If this variation is below a threshold value, the region is regarded as a maximally stable extremal region (MSER). In our application, the intensity step is set to 2, and the growth rate threshold is set to 0.2.

Real SAR images contain many local intensity peaks and valleys. Therefore, for a given region, the MSER detector will produce a set of nested MSER region features due to its intrinsic extremal property.

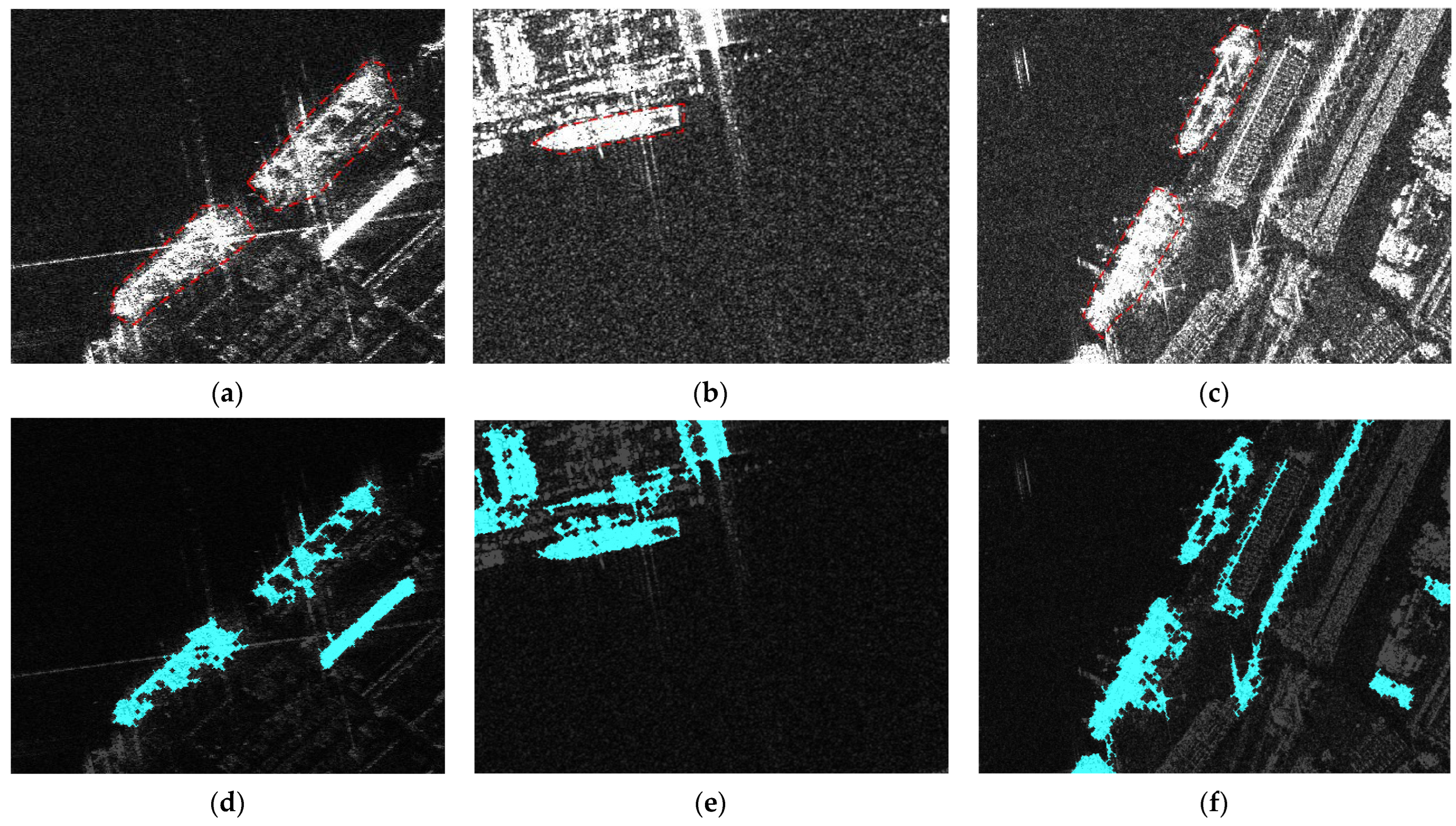

Figure 2 illustrates the nested feature removal process. Figure 2a displays an original SAR image, and Figure 2b shows the MSER extraction result of Figure 2a. Figure 2c presents the non-nested MSER regions of Figure 2a, which are collectively regarded as our SPSM. The nested feature removal method is proposed as follows:

- (a) Get the gravity center, $c_i$, of each MSER region, $R_i$, where $i = 1, 2, \ldots, N$, and $N$ is the total number of the extracted MSER features.

- (b) For any two gravity centers, $c_i$ and $c_j$, if $\lVert c_i - c_j \rVert \le 20$ pixels, the smaller one of $R_i$ and $R_j$ is eliminated.

- (c) Repeat the above procedures until all the remaining gravity centers are more than 20 pixels apart from each other.
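The removal steps above can be sketched as follows. This is only one possible realization: it assumes each MSER region is given as a list of `(row, col)` pixels and visits the regions from largest to smallest, so that a region is kept only if its gravity center is more than 20 pixels from every larger region already kept. The function name and the processing order are assumptions made for illustration, not details stated in the text.

```python
from math import hypot


def remove_nested_regions(regions, min_dist=20.0):
    """Keep only regions whose gravity centers are more than min_dist apart.

    regions: list of regions, each a list of (row, col) pixel coordinates.
    When two centers are too close, the smaller region is discarded.
    """
    def gravity_center(pixels):
        n = len(pixels)
        return (sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n)

    # Visit larger regions first so that a smaller region never displaces
    # a larger one (an assumed ordering for this sketch).
    ordered = sorted(regions, key=len, reverse=True)
    kept, centers = [], []
    for region in ordered:
        cy, cx = gravity_center(region)
        if all(hypot(cy - ky, cx - kx) > min_dist for ky, kx in centers):
            kept.append(region)
            centers.append((cy, cx))
    return kept
```

By construction, all gravity centers of the returned regions are more than `min_dist` pixels apart, which is the stopping condition of step (c).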

3.2. Intensity Saliency Map (ISM)

Pixel intensity is the fundamental characteristic of SAR images, and CFAR detection methods model the statistical distribution of the background clutter to obtain an adaptive intensity threshold. However, the threshold relies heavily on the accuracy of the statistical fit to the background clutter. In inhomogeneous scenes, the intensity histogram tends to exhibit excess kurtosis and a long tail [2], which requires an asymmetric (or non-Gaussian) distribution for optimal fitting. In the existing literature, some parametric models have been proposed to fit the long, heavy tail of heterogeneous clutter, such as the K distribution [40]. However, the modeling ability of a single parametric model is doubtful for the probability density function (PDF) of inshore scenes, and the computational cost of mixture parametric models is too high [9]. As a result, we chose the nonparametric kernel density estimator (KDE) to model the PDF of inshore scene clutter. The KDE is given by [41]:

$$\hat{f}_h(x) = \frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right), \qquad (1)$$

where $x_1, x_2, \ldots, x_n$ are $n$ identically and independently distributed samples drawn from the unknown PDF of inshore scene clutter, $h$ is the bandwidth that indicates the width of the kernel function, and $K(\cdot)$ is a kernel function satisfying $\int K(x)\,dx = 1$. In our work, a Gaussian kernel function is employed:

$$K(x) = \frac{1}{\sqrt{2\pi}}\exp\left(-\frac{x^2}{2}\right). \qquad (2)$$

When Gaussian basis functions are used to approximate univariate data, the optimal choice for the bandwidth $h$, i.e., the bandwidth that minimizes the mean integrated squared error [42], is:

$$h = \left(\frac{4\hat{\sigma}^5}{3n}\right)^{1/5} \approx 1.06\,\hat{\sigma}\,n^{-1/5}, \qquad (3)$$

where $\hat{\sigma}$ is the estimated standard deviation of the samples. Then, the corresponding CDF is given by:

$$\hat{F}_h(x) = \frac{1}{n}\sum_{i=1}^{n} \Phi\left(\frac{x - x_i}{h}\right), \qquad (4)$$

where $\Phi(\cdot)$ is the CDF of the standard normal distribution. Given the value of the global false alarm rate, Pfa, the threshold, $T$, can be calculated from:

$$P_{fa} = 1 - \hat{F}_h(T). \qquad (5)$$
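As a minimal sketch of the plain (non-robust) KDE CFAR threshold described above, the code below estimates the bandwidth with the standard rule for Gaussian kernels, evaluates the KDE CDF as an average of normal CDFs, and solves for the threshold by bisection. The function names, the bisection solver, and its tolerance are assumptions made for illustration; the text does not state how the threshold equation is solved.

```python
import math


def silverman_bandwidth(samples):
    """MISE-optimal bandwidth for a Gaussian kernel on univariate data."""
    n = len(samples)
    mean = sum(samples) / n
    sigma = math.sqrt(sum((x - mean) ** 2 for x in samples) / (n - 1))
    return (4.0 * sigma ** 5 / (3.0 * n)) ** 0.2


def kde_cdf(t, samples, h):
    """CDF of the Gaussian KDE: the mean of normal CDFs centered on the samples."""
    root2 = math.sqrt(2.0)
    total = sum(0.5 * (1.0 + math.erf((t - x) / (h * root2))) for x in samples)
    return total / len(samples)


def cfar_threshold(samples, pfa, tol=1e-6):
    """Find T such that 1 - F(T) = pfa by bisection (F is monotone in T)."""
    h = silverman_bandwidth(samples)
    lo = min(samples) - 10.0 * h   # here 1 - F is close to 1
    hi = max(samples) + 10.0 * h   # here 1 - F is close to 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if 1.0 - kde_cdf(mid, samples, h) > pfa:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Pixels brighter than the returned threshold would be marked as detections. The sketch assumes the samples are not all identical, since a zero standard deviation gives a zero bandwidth.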

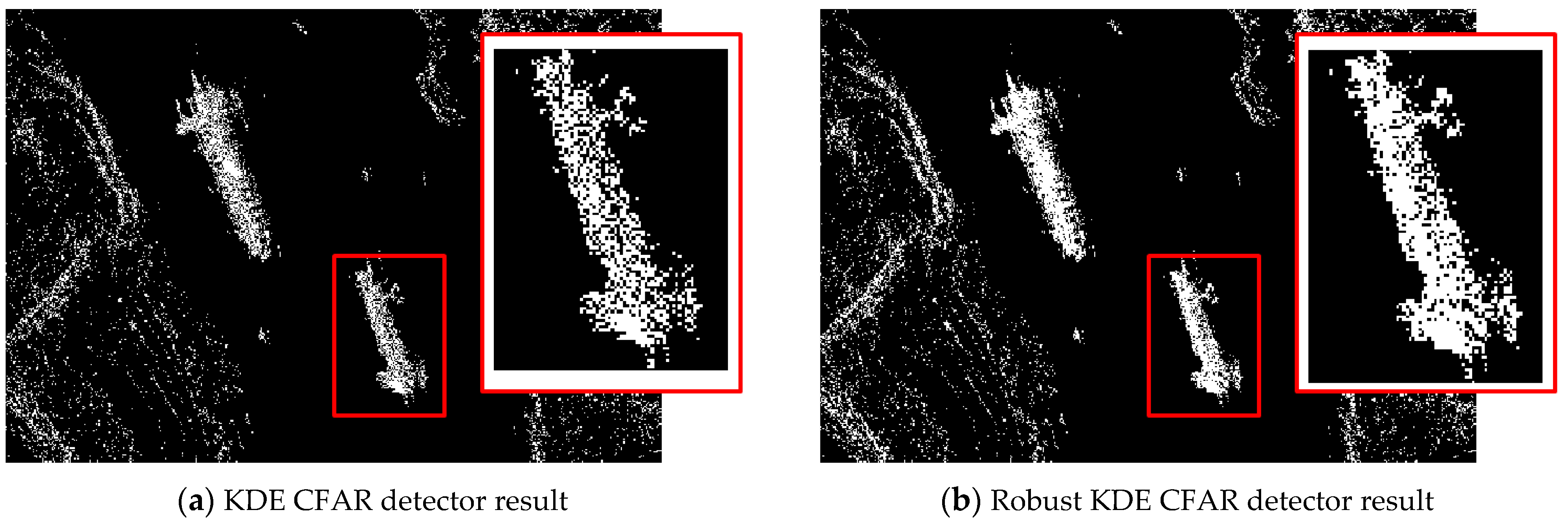

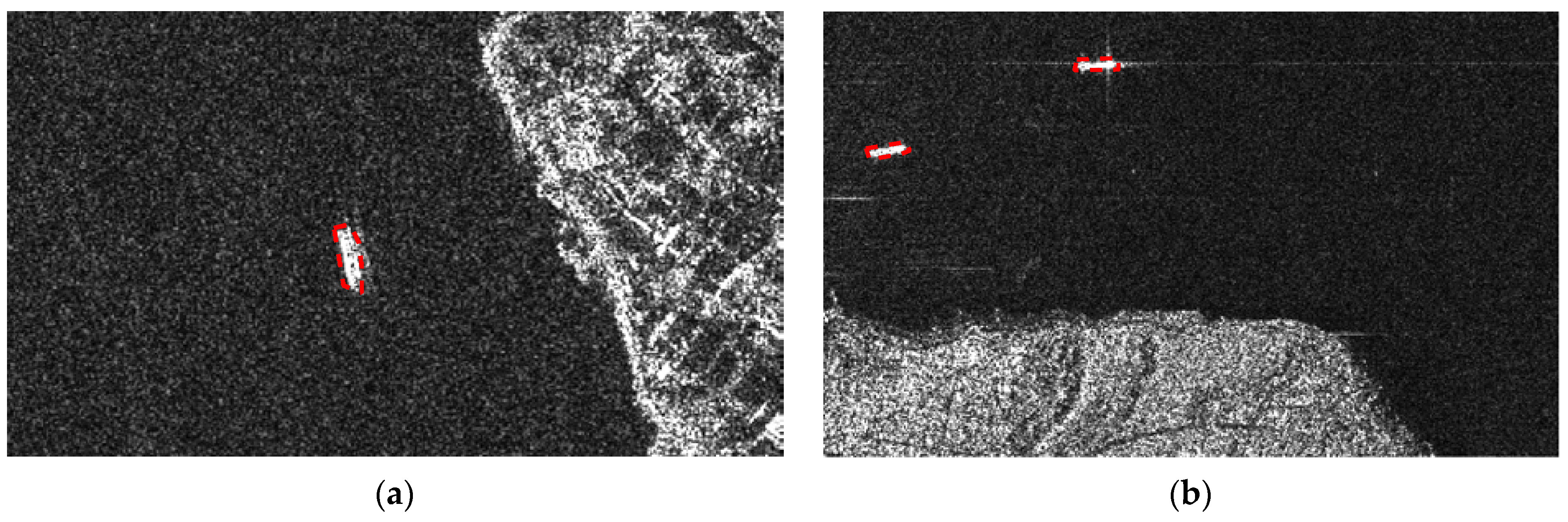

However, when applying the KDE to SAR images with big targets and large-area land clutter, as shown in Figure 3a, the PDF generated by the KDE (green line in Figure 3b) overfits the outliers at the high-intensity end, resulting in a higher threshold and increasing the risk of missed detections (as shown in Figure 4a) under the same global false alarm rate Pfa (in this case, we set Pfa to 0.005). We found in Figure 3b that, although the KDE overfits the high-intensity end, the curve does not follow the histogram well; there are outliers in the residual between the fitted curve and the histogram (yellow line in Figure 3b). Inspired by this phenomenon, we added a weighting function to the samples to reduce the effect of large ships and land clutter. Considering both the convergence property and calculation speed, the Tukey estimator is introduced as the weighting function:

where

,

is the histogram of

, and

is the PDF of KDE.

, where

is the current number of iterations. If

, and

, the iteration ends, and

is the robust PDF of KDE fitting. The red line in Figure 3b shows the robust KDE PDF; it is clear that there is no overfitting to ship targets and strong land clutter. Figure 4b shows the detection result of the robust KDE CFAR detector; as can be seen, more details of ship targets are retained in Figure 4b, and the result is termed our ISM. No matter how well the model fits the background clutter, false alarms are still detected in land regions, so intensity-based saliency alone may not be enough for inshore scene target detection.

3.3. Local Stability Saliency Map (LSSM)

Ships are distinct from the ocean or land not only in the intensity domain, but also in the spatial domain. Bright pixels of ships are often contiguous and concentrated in a small area, while those of the background are relatively discrete and unstable. Elements of a SAR image typically exhibit the following differences in spatial characteristics: (a) ships appear as bright, concentrated pixels, with the highest local stability; (b) bright pixels are distributed uniformly in the marine region, whose local stability is slightly lower than that of ships; (c) due to the large-scale ridges and landscapes, the local stability of the land region is lower than that of the marine region; and (d) the connections between the sea and other elements have the lowest local stability. Local stability can be measured by the standard deviation of the pixel intensities in a local region, where a lower standard deviation indicates a higher local stability. For a vector $\mathbf{x}$ with $N$ dimensions, its standard deviation, $\sigma$, is defined as follows:

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \bar{x}\right)^2}, \qquad (7)$$

where $\bar{x}$ is the mean of the elements of $\mathbf{x}$. The calculation of the local standard deviation can be accelerated in the frequency domain: we construct a template image, $I$, with the same size as the SAR image, $S$, and set all its values to ‘1’. We also create a convolution kernel, $K$. Then, we compute the local mean matrix of pixel intensity, $M_1$, by $M_1 = (S \otimes K)/(I \otimes K)$, and the local mean matrix of pixel intensity squared, $M_2$, by $M_2 = (S^2 \otimes K)/(I \otimes K)$, where $\otimes$ denotes convolution and the division is element-wise. The local standard deviation matrix is then obtained by:

$$\sigma_S = \sqrt{M_2 - M_1^2}. \qquad (8)$$
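A minimal sketch of the moving standard deviation follows. It computes the local mean and the local mean of squares with a uniform square window and normalizes by the window sum of an all-ones template, so that border pixels use only the pixels inside the image, and then takes the square root of the mean of squares minus the squared mean. Note that it replaces the frequency-domain convolution with a summed-area table, which gives the same box sums; the window radius and function names are assumptions made for illustration.

```python
import math


def box_sum(img, radius):
    """Sum of img over a (2*radius+1)^2 window, clipped at the image border."""
    rows, cols = len(img), len(img[0])
    # Summed-area table with an extra zero row and column.
    sat = [[0.0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            sat[r + 1][c + 1] = (img[r][c] + sat[r][c + 1]
                                 + sat[r + 1][c] - sat[r][c])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        r0, r1 = max(r - radius, 0), min(r + radius + 1, rows)
        for c in range(cols):
            c0, c1 = max(c - radius, 0), min(c + radius + 1, cols)
            out[r][c] = sat[r1][c1] - sat[r0][c1] - sat[r1][c0] + sat[r0][c0]
    return out


def local_std(img, radius=1):
    """Moving standard deviation: sqrt(local mean of squares - local mean^2)."""
    ones = [[1.0] * len(img[0]) for _ in img]        # all-ones template image
    squared = [[v * v for v in row] for row in img]
    count = box_sum(ones, radius)                     # valid pixels per window
    s1, s2 = box_sum(img, radius), box_sum(squared, radius)
    result = []
    for r in range(len(img)):
        row = []
        for c in range(len(img[0])):
            m1 = s1[r][c] / count[r][c]
            m2 = s2[r][c] / count[r][c]
            # Clamp tiny negative values caused by floating-point rounding.
            row.append(math.sqrt(max(m2 - m1 * m1, 0.0)))
        result.append(row)
    return result
```

Thresholding the returned matrix from below (keeping the smallest values) would then mark the most locally stable pixels.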

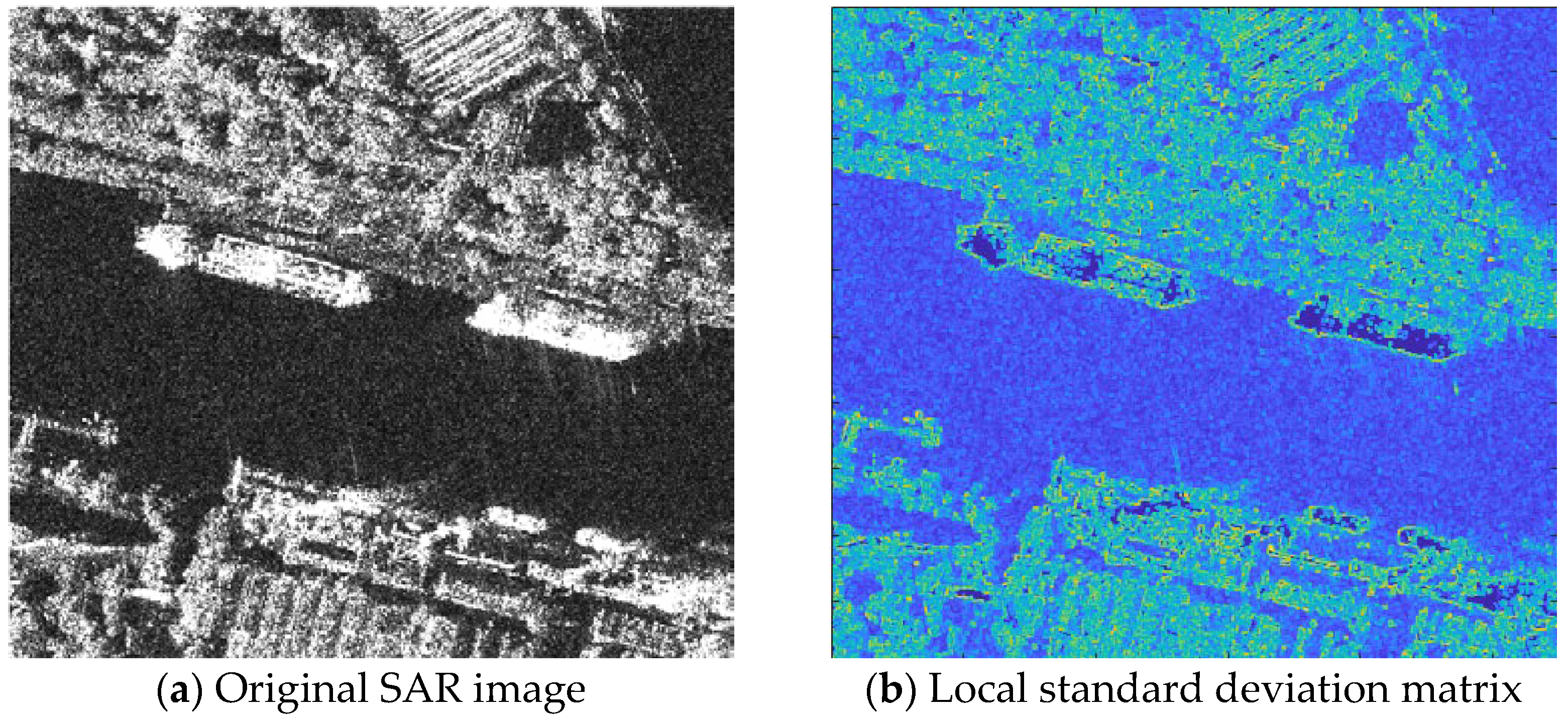

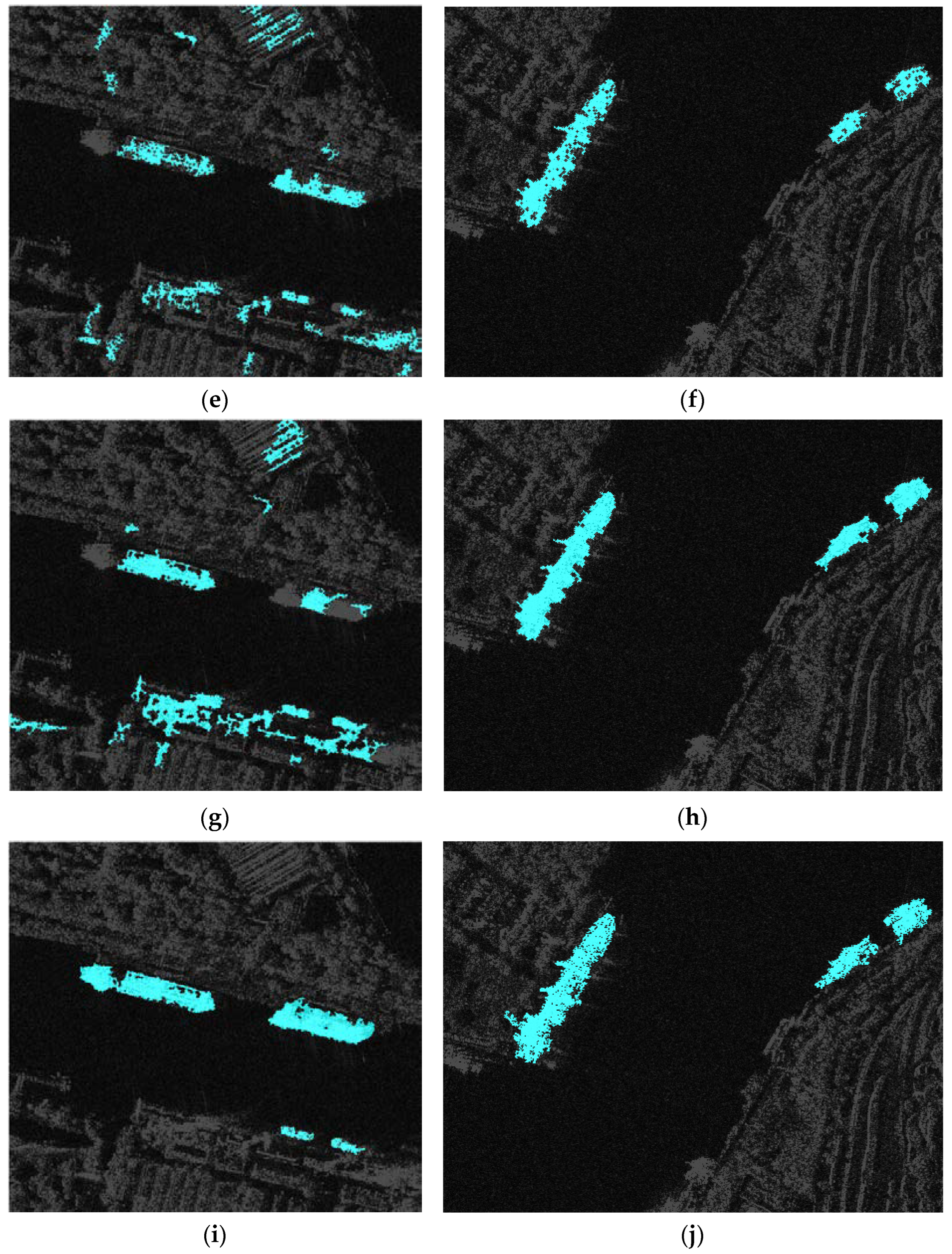

The process of local stability is illustrated in Figure 5. Figure 5a displays an original SAR image, and Figure 5b shows its corresponding local standard deviation matrix.

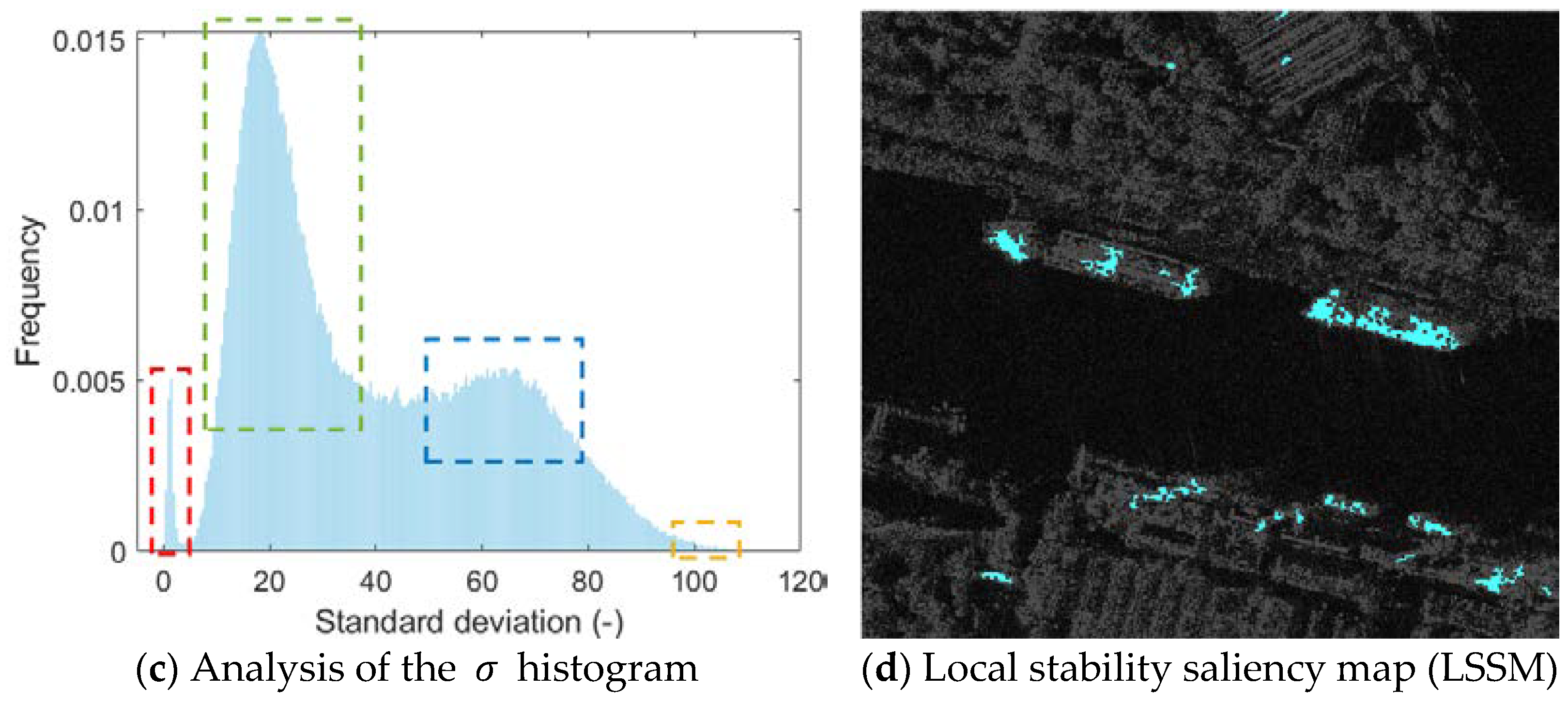

Figure 5c presents the histogram of Figure 5b, where the σ value of the red rectangle indicates the central area of ships, the σ value of the green rectangle represents the sea region, the σ value of the blue rectangle signifies the land region, and the σ value of the yellow rectangle marks the boundaries between the sea and other elements. We define an adaptive threshold by detecting the breaking point between the red and green rectangles, which is usually less than 10. It can be seen in Figure 5d that not only ship targets but also metallic harbor facilities are identified by the LSSM, so the LSSM alone is not enough for inshore scene detection.

3.4. Ocean-Buffer Saliency Map (OBSM)

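In harbor regions, ships can only appear in marine areas. Therefore, we first need to segment the sea from the land. Previous studies often used intensity-based segmentation methods, such as Otsu’s method, with morphological operations to connect isolated land regions and eliminate small holes. However, these methods are sensitive to strong noise and sea clutter. We consider three main elements in SAR images: noise, ship targets, and landforms. These elements have different scales. We address this scale difference by using surface metrology methods [43], filtering the image to extract the features at the scales of interest for further analysis. We use the second-order Gaussian regression filter [44] to avoid boundary effects and reduce noise, which can be defined by the following minimization problem, where $(\xi, \eta)$ runs over the image pixels:

$$\min_{w,\beta_1,\beta_2,\gamma_1,\gamma_2,\gamma_3} \sum_{\xi}\sum_{\eta} \rho\Big(S(\xi,\eta) - w(x,y) - \beta_1(\xi - x) - \beta_2(\eta - y) - \gamma_1(\xi - x)^2 - \gamma_2(\xi - x)(\eta - y) - \gamma_3(\eta - y)^2\Big)\, s(\xi - x, \eta - y). \qquad (9)$$

We obtain the resulting filtration surface, $w(x,y)$, by zeroing the partial derivatives in the directions of $w$, $\beta_1$, $\beta_2$, $\gamma_1$, $\gamma_2$, and $\gamma_3$. We define the terms in Equation (9) as follows:

$w(x,y)$: the filtration result of the input SAR image $S$;

$x$ and $y$: spatial coordinates in two orthogonal directions;

$s(x,y) = \frac{1}{\alpha^2\lambda_{cx}\lambda_{cy}}\exp\left(-\pi\left[\left(\frac{x}{\alpha\lambda_{cx}}\right)^2 + \left(\frac{y}{\alpha\lambda_{cy}}\right)^2\right]\right)$: Gaussian weighting function, where $\alpha = \sqrt{\ln 2/\pi}$;

$\lambda_{cx}$ and $\lambda_{cy}$: the cutoff wavelengths in the $x$ and $y$ directions;

$\beta_1$ and $\beta_2$: first-order coefficients;

$\gamma_1$, $\gamma_2$, and $\gamma_3$: second-order coefficients;

$\rho(\cdot)$: error metric function of the estimated residual.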

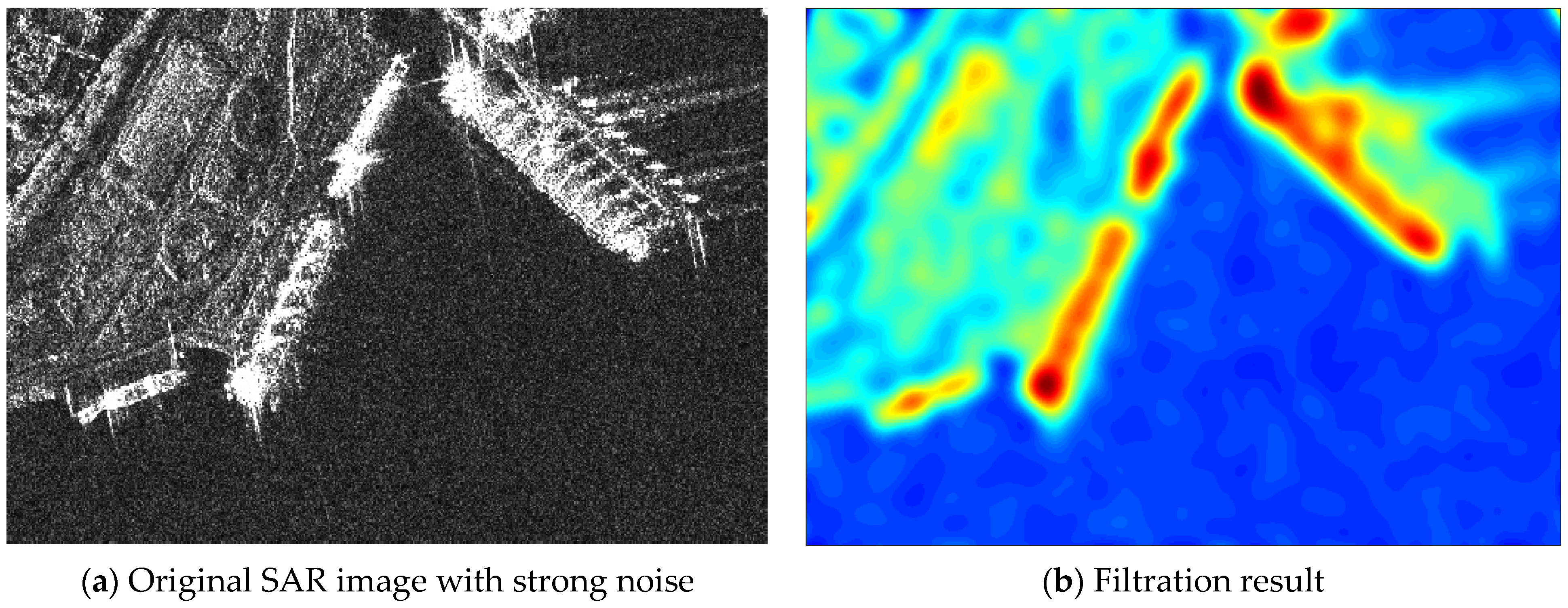

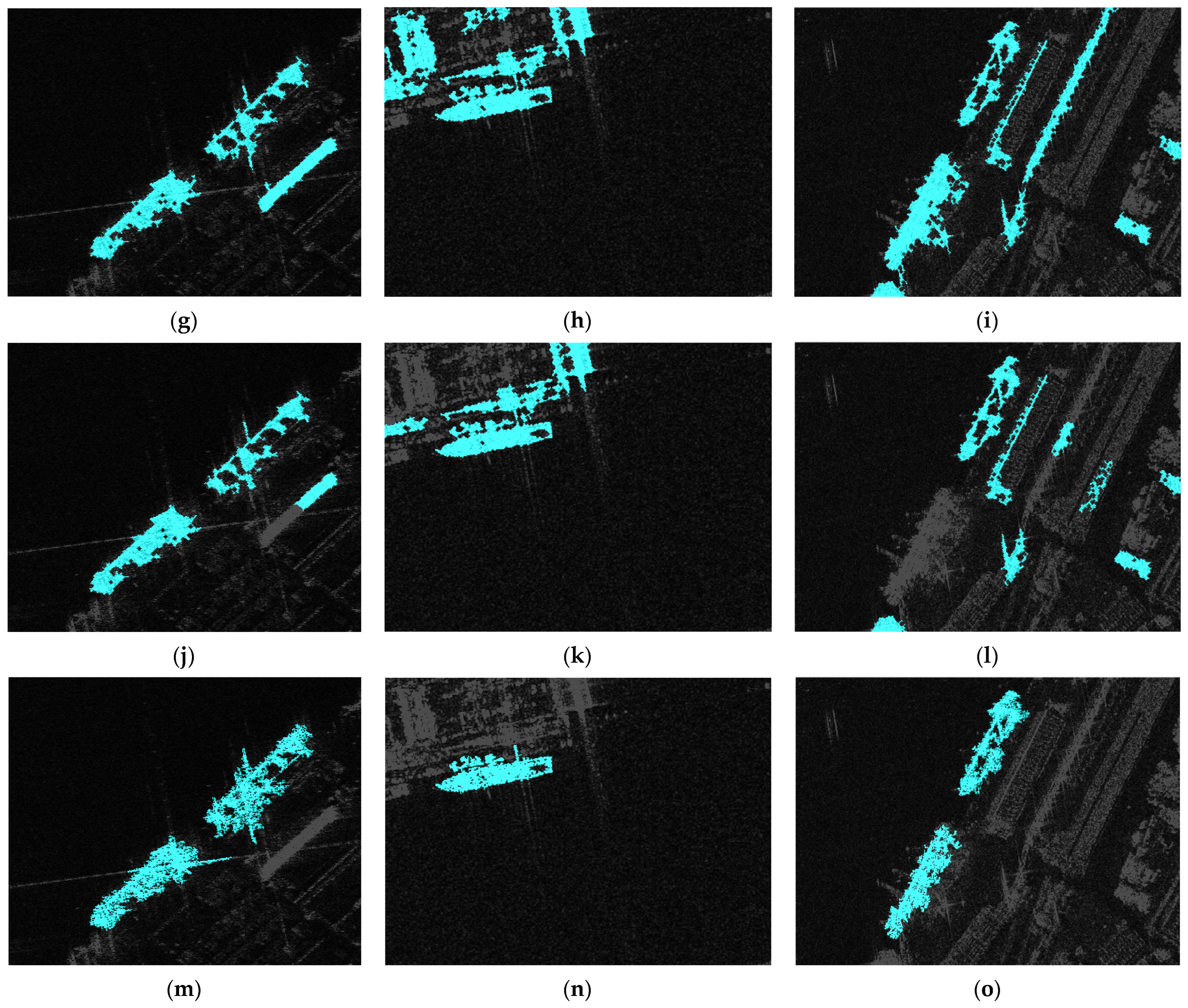

Figure 6 and Figure 7 show the filtration results of SAR images in an inshore scene with different levels of speckle noise. We use the structural similarity index [45] to assess their difference. The structural similarity index between Figure 6a and Figure 7a is 56.7%. The structural similarity index between their filtration results is 76.3%. The increase indicates that using a second-order Gaussian regression filter can reduce noise sensitivity.

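To further identify the marine area, the four-level Otsu’s method is employed for adaptive thresholding based on the maximization of the inter-class variance [46]. The filtered image, $w$, is first rescaled to the gray level range of 0~255 using the following formula:

$$w' = 255 \times \frac{w - \min(w)}{\max(w) - \min(w)}. \qquad (10)$$

The intensity level of the filtered image is now [0, 255], and $n_i$ denotes the number of pixels with intensity $i$, where $i \in \{0, 1, \ldots, 255\}$. The probability of occurrence of a gray level $i$ is $p_i = n_i / \sum_{j=0}^{255} n_j$. For a four-level segmentation with three thresholds $t_1 < t_2 < t_3$, the total inter-class variance, $\sigma_B^2$, can be expressed as:

$$\sigma_B^2(t_1, t_2, t_3) = \sum_{k=1}^{4} \omega_k\left(\mu_k - \mu_T\right)^2. \qquad (11)$$

In this equation, $\omega_k$ represents the cumulative probability of occurrence of the gray levels in the $k$-th class, and $\omega_k = \sum_{i=t_{k-1}}^{t_k - 1} p_i$, with $t_0 = 0$ and $t_4 = 256$. $\mu_k$ denotes the mean gray level of the $k$-th class, and $\mu_k = \frac{1}{\omega_k}\sum_{i=t_{k-1}}^{t_k - 1} i\,p_i$, while $\mu_T = \sum_{i=0}^{255} i\,p_i$ is the global mean. The optimal thresholds $(t_1^*, t_2^*, t_3^*)$ that maximize Equation (11) can be obtained using the Nelder–Mead simplex method [47]. The lowest level segment, with intensity values lower than $t_1^*$, corresponds to the marine area.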
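The multi-level thresholding step can be sketched as below. For simplicity, this sketch finds the thresholds by exhaustive search over all threshold combinations instead of the Nelder–Mead simplex method used in the text, and it evaluates the inter-class variance from prefix sums of the histogram. Class $k$ is taken to contain the gray levels from one threshold (inclusive) up to the next (exclusive). The function name and this class convention are assumptions made for illustration.

```python
from itertools import combinations


def multi_otsu(hist, n_classes=4):
    """Thresholds maximizing the inter-class variance of a gray-level histogram.

    hist[i] is the number of pixels with gray level i. Returns the tuple of
    n_classes - 1 thresholds and the corresponding inter-class variance.
    """
    total = float(sum(hist))
    levels = len(hist)
    # Prefix sums: cum_p[j] = sum of p_i for i < j, cum_m[j] = sum of i * p_i.
    cum_p, cum_m = [0.0], [0.0]
    for i, n in enumerate(hist):
        cum_p.append(cum_p[-1] + n / total)
        cum_m.append(cum_m[-1] + i * n / total)
    mu_total = cum_m[-1]

    best_var, best_t = -1.0, None
    for ts in combinations(range(1, levels), n_classes - 1):
        edges = (0,) + ts + (levels,)
        var = 0.0
        for a, b in zip(edges, edges[1:]):
            weight = cum_p[b] - cum_p[a]          # class probability
            if weight > 0.0:
                mean = (cum_m[b] - cum_m[a]) / weight
                var += weight * (mean - mu_total) ** 2
        if var > best_var:
            best_var, best_t = var, ts
    return best_t, best_var
```

With 256 gray levels and three thresholds, the exhaustive search visits a few million combinations, which is slow in pure Python; this is one practical reason to prefer a direct-search optimizer such as the one cited in the text. Pixels with a gray level below the first returned threshold would form the marine area.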

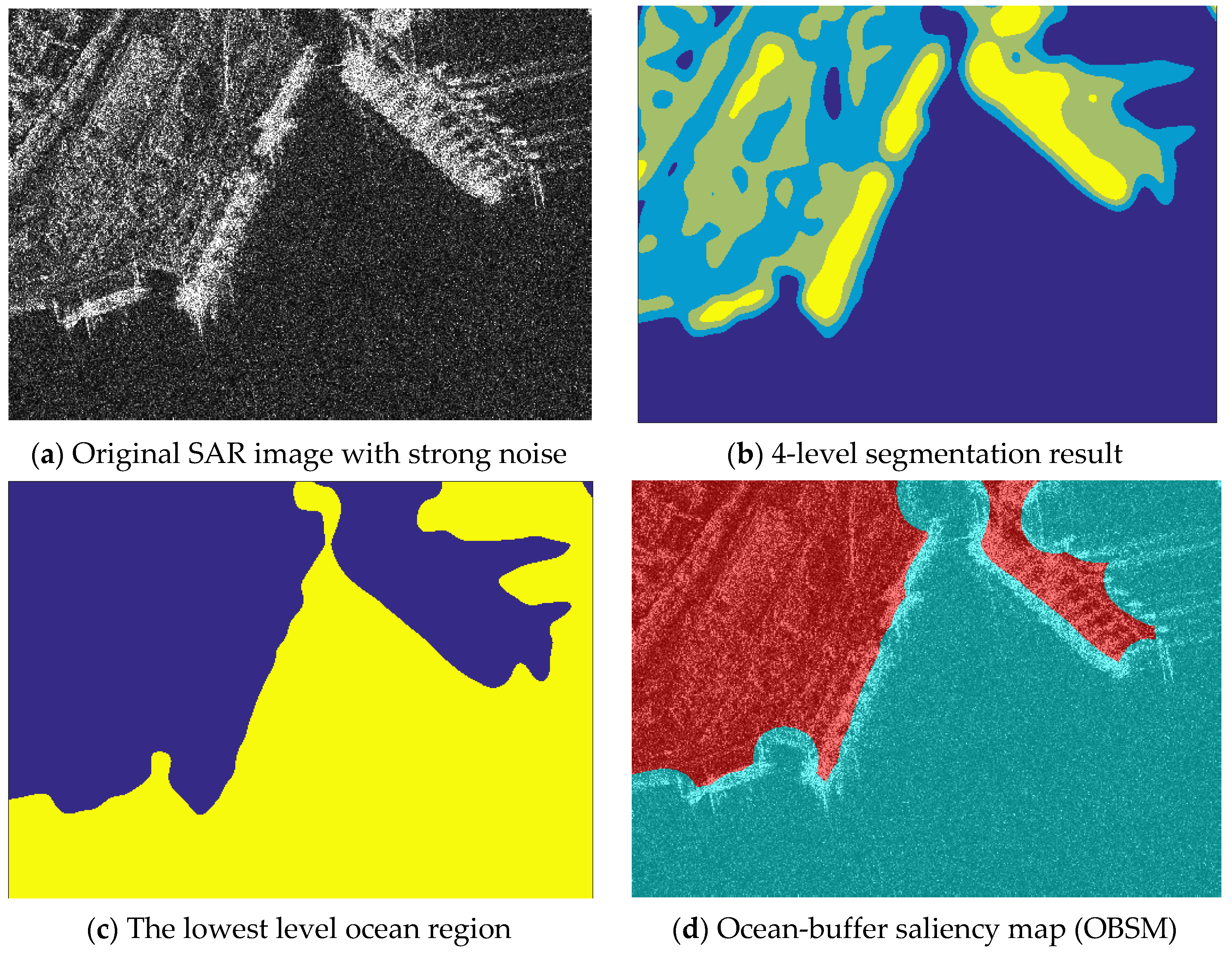

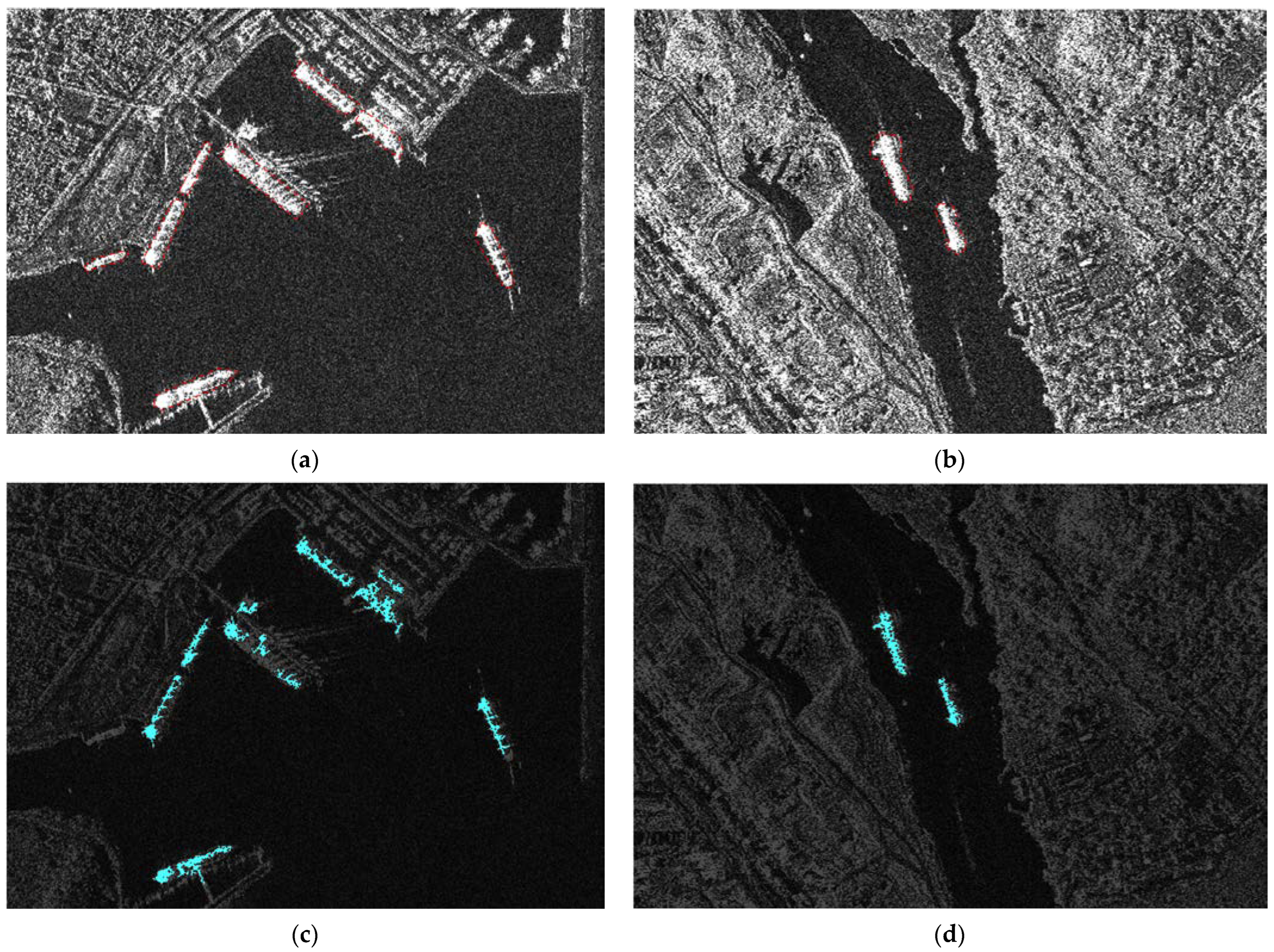

The process of sea–land segmentation is illustrated in Figure 8. Figure 8a displays the original SAR image, Figure 8b shows the segmentation result obtained by using the second-order Gaussian regression filter and the four-level Otsu’s method, and Figure 8c depicts the extracted marine area. Since ships only appear in or near the ocean, a buffer area is created by performing a morphological dilation operation with a disk-shaped structuring element whose radius is approximately equal to the width of ships. Figure 8d presents the resulting OBSM in blue, where ships are located, while the red area indicates land.
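The buffer creation can be sketched as a binary dilation with a disk-shaped structuring element. The sketch assumes the marine area is a binary mask stored as nested lists and that the radius is given in pixels; the function name is hypothetical, and the radius value would be chosen from the expected ship width.

```python
def dilate_disk(mask, radius):
    """Binary dilation of a 2-D mask with a disk of the given pixel radius."""
    rows, cols = len(mask), len(mask[0])
    # Offsets covered by the disk-shaped structuring element.
    offsets = [(dr, dc)
               for dr in range(-radius, radius + 1)
               for dc in range(-radius, radius + 1)
               if dr * dr + dc * dc <= radius * radius]
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                # Stamp the disk around every marine pixel.
                for dr, dc in offsets:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        out[rr][cc] = True
    return out
```

Applied to the extracted marine area, the dilated mask extends the sea region landward by roughly one ship width, so that ships berthed next to the dock remain inside the buffer.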

5. Discussion and Conclusions

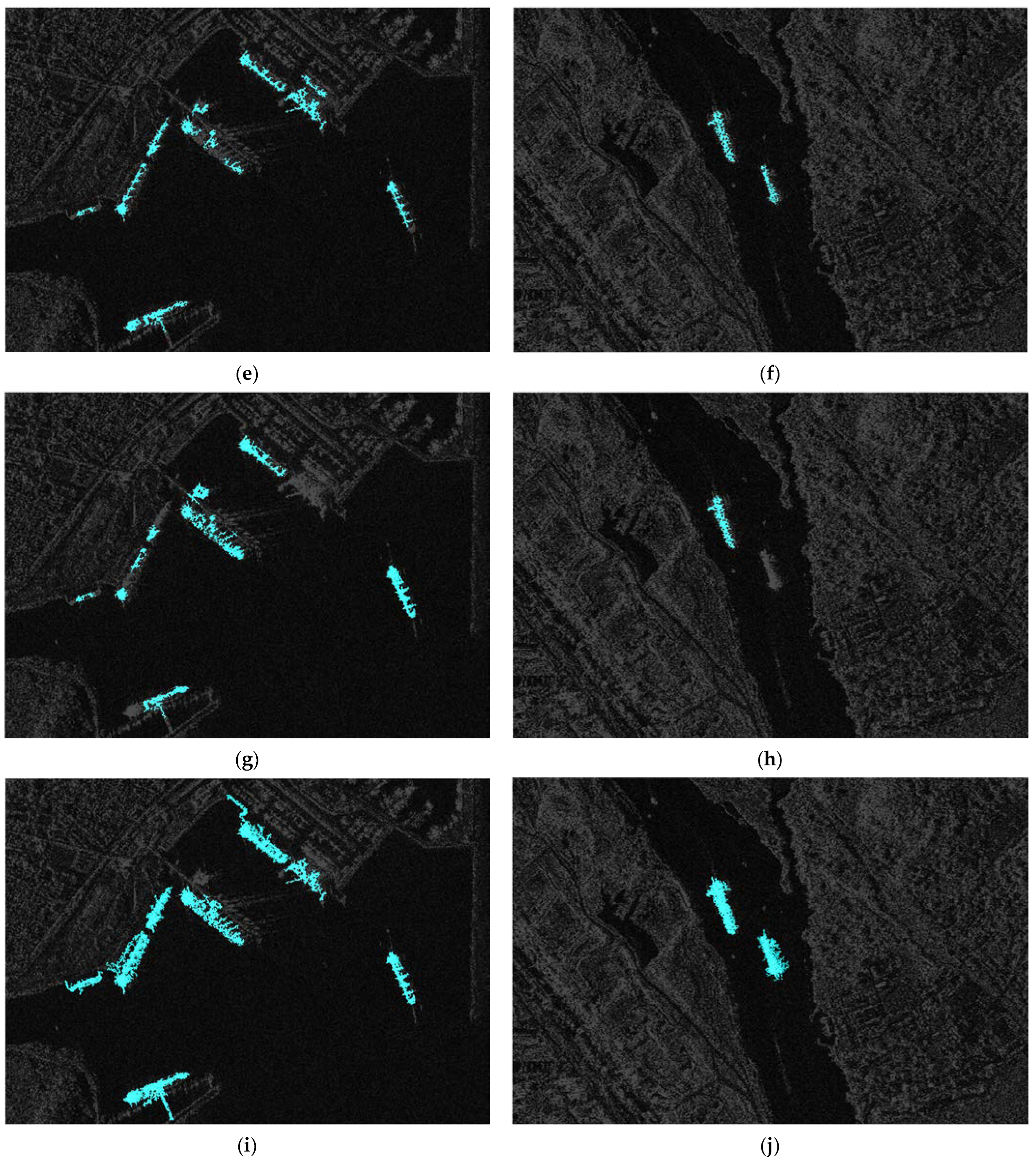

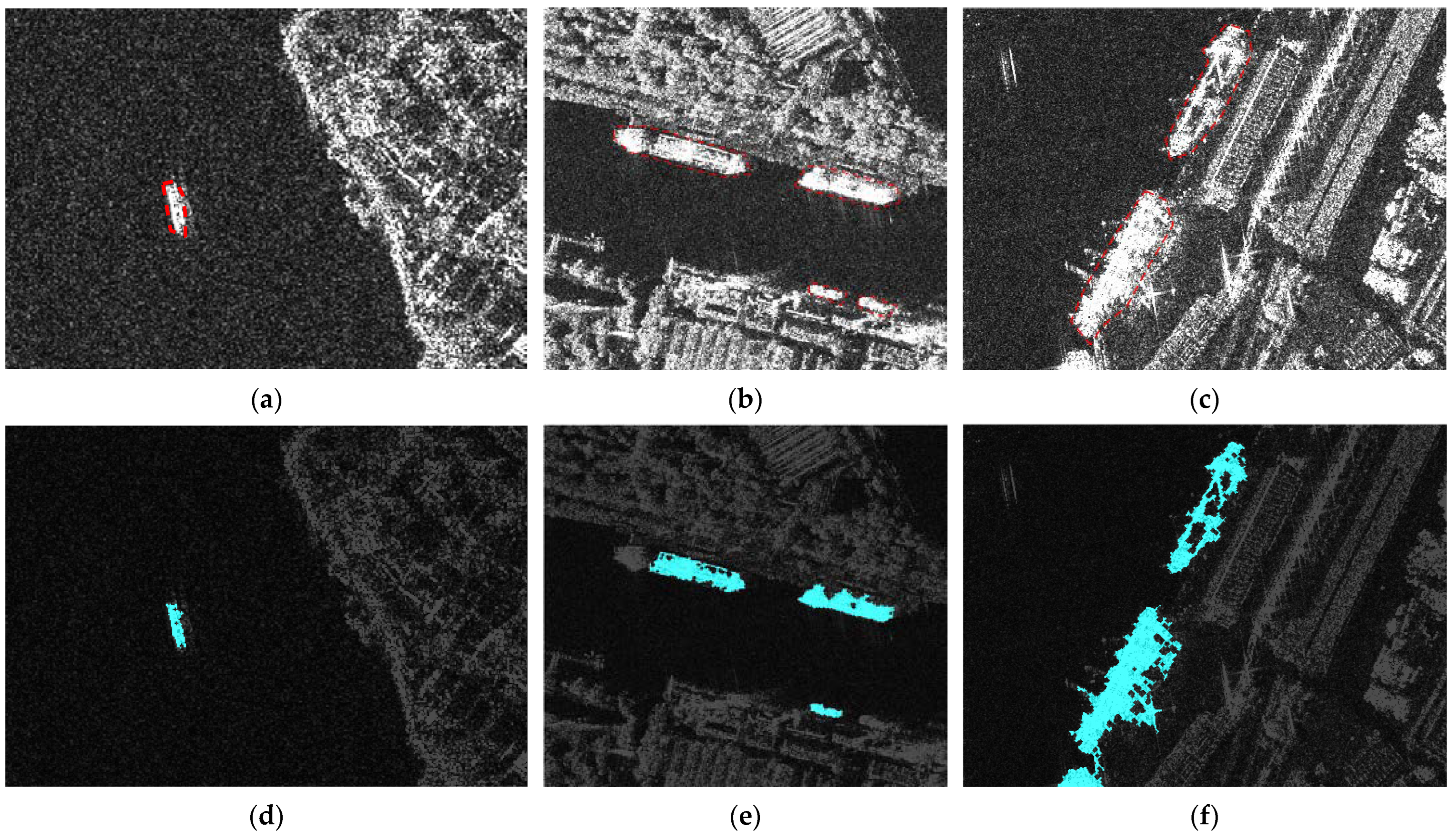

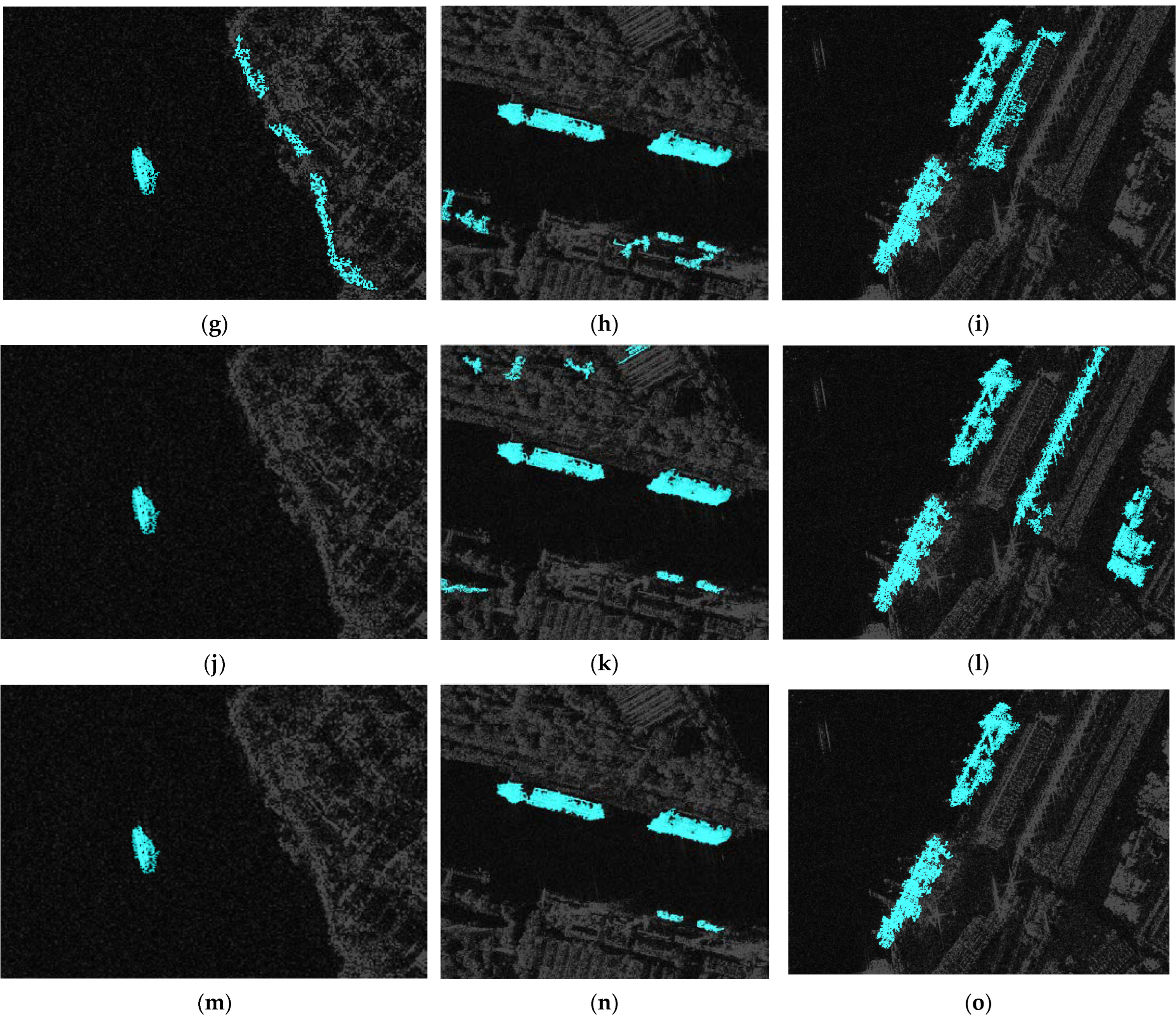

This paper proposed a novel multi-modality saliency (MMS) method for ship detection in SAR images with complex inshore scenes. The MMS method was validated on images with offshore scenes, inshore scenes without port facilities, inshore scenes with confusing port facilities, and large-scale scenes. Its performance was compared with that of several existing methods to demonstrate its effectiveness and superiority. For all three scene types, the MMS method successfully detected the ship targets without false alarms, while either missed detections or false alarms exist in the detection results of the other methods. However, side lobes and some small land structures were found connected with the detected ships when using the MMS method, because the method relies heavily on the performance of the MSER method for ship contour detection. A future research direction is to develop a clustering and pruning process for the MMS method to obtain more accurate ship contours and to avoid excluding ships connected with port facilities. Ablation tests were also conducted on three scene types to evaluate the concrete contributions of the saliency maps in our MMS method. Without SPSM, ships are falsely connected with land clutter. Without LSSM, asymmetric land clutter clusters are detected along coastlines. Without OBSM, concentrated land elements and facilities are falsely detected in the land regions.

We can further draw the following conclusions from this paper: (1) It shows that the main elements of SAR images in complex scenes (noise, ship targets, and landforms) can be well separated by their scale difference from the perspective of surface metrology. This segmentation method is hardly affected by speckle noise, which surpasses most existing methods, such as Otsu’s method. This provides a novel interdisciplinary perspective for SAR image segmentation. (2) It utilizes the spatial characteristics of SAR image elements to mark the potential ships. For now, we only employ pixels with the most local stability, but further research can employ pixels with other extents of local stability for better ship locating. (3) It proposes a novel robust CFAR procedure that can eliminate the effects of noise and large ships on the background clutters’ statistical fitting. The robust KDE CFAR detection is just one application, and this procedure is also available for other statistical models. In summary, the proposed MMS method exploits multi-modality information of SAR images and transforms them into saliency maps. The fusion of these saliency maps enables effective and accurate ship detection in various scenes of SAR images. The experimental results demonstrate that the MMS method can achieve high detection performance in both inshore and offshore scenes, regardless of confusing port facilities, and outperforms the widely used methods, such as CFAR-based methods and super-pixel methods.