Abstract

Accurate and rapid monitoring of maize seedling growth is critical for early breeding decisions, field management, and yield improvement. However, the number and uniformity of seedlings are conventionally determined by manual evaluation, which is inefficient and unreliable. In this study, we propose an automatic method for assessing maize seedling growth using unmanned aerial vehicle (UAV) RGB imagery. Firstly, high-resolution images of maize at the early and late seedling stages (before and after the third leaf) were acquired using the UAV RGB system. Secondly, the maize seedling center detection index (MCDI) was constructed, which significantly enhanced the color contrast between young and old leaves and facilitated the segmentation of maize seedling centers. Furthermore, weed noise was removed by morphological processing and a dual-threshold method. Then, maize seedlings were extracted using the connected component labeling algorithm. Finally, the emergence rate, canopy coverage, and seedling uniformity in the field at the seedling stage were calculated and analyzed in combination with the number of seedlings. The results showed that our approach performed well for maize seedling counting, with an average R² greater than 0.99 and an F1-score greater than 98.5%. At the third leaf stage (V3), the estimated mean emergence rate and mean seedling uniformity were 66.98% and 15.89%, respectively. At the sixth leaf stage (V6), the estimated mean seedling canopy coverage and mean seedling uniformity were 32.21% and 8.20%, respectively. Our approach provides automatic monitoring of maize growth per plot during early growth stages and demonstrates promising performance for precision agriculture in seedling management.

1. Introduction

Precision agriculture is an approach that provides crop growth monitoring with rapid acquisition and quantitative analysis of crop location and growth rate under real conditions in fields [1,2]. With current rates of population growth and ongoing climate change, improvements in crop yields through advanced developments in precision agriculture are crucial [3]. Maize is the largest crop grown globally, with a total planting area of 197 million hectares and a total yield of 1.15 billion tons [4]. The maize seedling stage is the critical period to determine high-yield corn, and a strong and neat seedling is the basis of the field harvest.

In seedling management, the quantitative assessment of the number and uniformity of seedlings plays a pivotal role in achieving consistent maize growth monitoring [5,6]. The traditional method for evaluating maize seedling conditions relies on field sampling and manual estimation by plant protection personnel. This approach is inherently subjective, demands extensive time and labor, and yields low accuracy, so it cannot meet current agricultural requirements. With the rapid development of precision agriculture, the accurate and fast acquisition of crop information across large areas has become essential for precision farmland management and has drawn considerable attention in modern agricultural research [7,8].

Several researchers have carried out studies to promptly monitor and quantify crop emergence, such as plant counting [5,9], emergence rate [7,10,11], canopy coverage [12,13,14], and crop growth uniformity [7,15]. The number of crops reflects the emergence rate and is the primary prerequisite for measuring crop yield [16]. The maize sowing is influenced by fluctuations in the external environment, seeder performance, and seed quality across different plots. This has impacts on the planting density of maize, thus leading to reduced maize yields. In addition to the directly quantifiable number of seedlings per plot, crop emergence also involves seedling uniformity, which cannot be measured manually [5]. The uniformity of crop emergence can be characterized by the coefficient of variation of the emergence rate. The uniformity represents more accurately the distribution of crop seedlings in a plot than an average condition [17] and is crucial to maximizing yield [18].

Significant efforts have been dedicated to the research of accurate crop plant detection and counting [19,20,21,22,23]. The use of low-altitude UAV remote sensing platforms in obtaining field information offers the advantages of diversification and flexible activation [8,24,25,26]. Shuai et al. [27] used UAV maize images to compare the variation in plant spacing intervals and extracted the number of maize seedlings with an accuracy greater than 95%. Yu et al. [28] combined the hue intensity (HI) lookup table and affinity propagation (AP) clustering algorithm to develop a novel crop segmentation algorithm and attained an accuracy of 96.68% for plant detection. However, these methods are only applicable where seedlings do not overlap and canopy coverage is low. All the aforementioned extraction algorithms have achieved high accuracy in detecting maize plants, but are limited to maize growth stages V1 (the first leaf) to V3 (the third leaf). At V1, the first leaf is fully unfolded, which marks the emergence of the maize seedling. At V3, the third leaf is fully unfolded, while the growing point of the plant is still underground. The growth stages V1 to V3 represent the leaf growth conditions during the early seedling stages and can indicate the survival of the maize seedling.

During the late stages of seedling emergence, challenges in plant counting arise due to factors such as increased random leaf direction and leaf overlap among maize seedlings in the field. Bai et al. [29] devised a plant counting method based on a peak detection algorithm capable of rapidly and accurately determining maize plant numbers at the V3 (the third leaf) stage. Zhou et al. [30] used a threshold segmentation method to separate maize seedlings from the soil background and adopted the Harris corner extraction algorithm to identify the number of maize plants. The overall detection rate was high, but the accuracy was limited due to the difficulty of extracting a single skeleton from the image when there is a significant overlap of leaves at the late stage of seedlings. Gnadinger et al. [31] utilized the morphological and spectral characteristics of crops to develop a decorrelation stretch contrast method. The method enhances the color contrast between old and young leaves and effectively counted maize seedlings at the V5 (the fifth leaf) stage. However, after the V5 stage, the precision loss resulting from leaf overlap remains a challenge in maize plant counting. Further advancements are required to accurately extract maize seedling plants with uneven planting and overlapping leaves.

Furthermore, other critical characteristics such as emergence rate, canopy coverage, and emergence uniformity are primary indicators for obtaining seedling conditions and measuring crop growth. The scientific and quantitative characterization of seedling conditions is instrumental in understanding the growth dynamics of crop seedlings and serves as a reference for the timely and accurate adjustment of relevant field management measures. It ensures the quality and quantity of crop production. Lin et al. [32] conducted peanut seedling counting using UAV videos and effectively monitored the emergence rate of peanuts. Jin et al. [33] proposed a method for estimating wheat emergence rate based on UAV high-resolution images and conducted a phenotype analysis under field conditions using this method. Zhou et al. [34] presented an image segmentation method leveraging machine learning techniques to accurately extract maize canopy coverage at the field scale. Feng et al. [7] counted the number of cotton seedlings using UAV hyperspectral images, and acquired information regarding cotton emergence rate, canopy coverage, and cotton growth uniformity. The characteristics extracted at the seedling stage were used for quantitative assessment of cotton seedling conditions. Despite the early start of related research efforts, practical methods for obtaining maize seedling conditions and related growth assessment models have yet to be systematically and deeply investigated. As established above, automatic monitoring methods are needed for the rapid and accurate acquisition of maize seedling conditions.

This study proposes a novel approach for maize seedling detection and counting based on UAV RGB imagery. The UAV RGB system was used to collect high-resolution maize seedling images. By leveraging the spectral differences between old and young leaves, a maize seedling center detection index (MCDI) was constructed to identify maize seedling centers. Image automatic processing technology was used for segmentation and extraction, thereby obtaining the number of maize seedlings. Furthermore, the growth characteristics of emergence rate, canopy coverage, and seedling uniformity were quantitatively assessed per plot in the field.

2. Materials and Methods

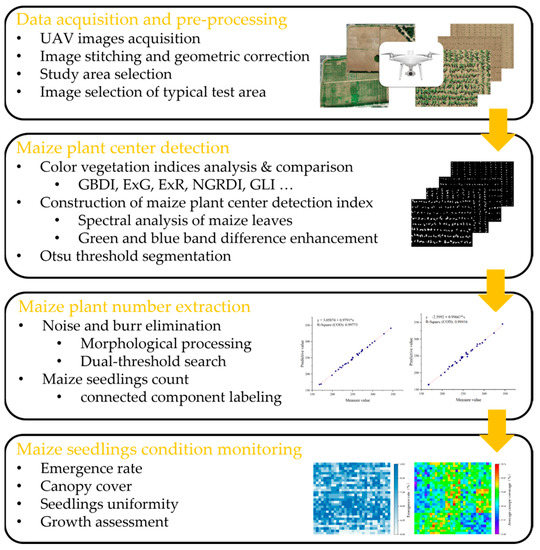

The flowchart of the proposed methodology is shown in Figure 1, which includes four main steps. (1) Pre-processing of UAV RGB images and selection of typical test area images for experiments. (2) Analysis of the spectral characteristics of UAV RGB images, which involves (i) distinguishing old and young leaves, enhanced by green and blue band calculations, (ii) constructing the maize center detection index for maize center recognition, and (iii) applying the Otsu algorithm for maize seedling center segmentation to generate binarized images. (3) Morphological processing for noise removal. The noise from large weed coverage is removed using a dual-threshold method, and the number of maize seedlings is extracted utilizing a connected component labeling algorithm. (4) Computation of the number of maize seedlings in the study area, along with the emergence rate, canopy coverage, and emergence uniformity of maize. The growth of maize at the seedling stage is then analyzed.

Figure 1.

The flowchart of the proposed methodology.

2.1. Study Site and Data Acquisition

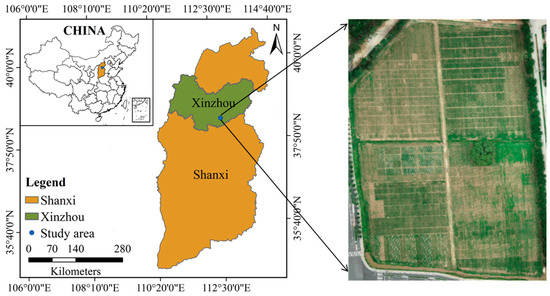

Field experiments were conducted in 2021 at the National Maize Industrial Technology System Experimental Demonstration Base, Shanxi, China (38°27′N, 112°43′E, altitude 776 m), as shown in Figure 2. The UAV image data of maize at the V3 and V6 stages were collected on 21 May 2021 and 18 June 2021, respectively. The DJI Phantom 4 Pro was equipped with a CMOS camera of 20 effective megapixels. The UAV flew at 25 m above ground level, with the forward and side overlaps set as 80%, and its ground sampling distance (GSD) was 0.94 cm. Experimental data from ROI1 to ROI4 in the study area were selected, as shown in Figure 3, with an image size of 1030 × 725 pixels. The experimental data include the two most representative growth stages for early seedling management, V3 and V6. V3 is the most critical growth stage for seedling survival, and V6 is the critical growth stage for seedling vigor monitoring.

Figure 2.

Geographical position of the study area.

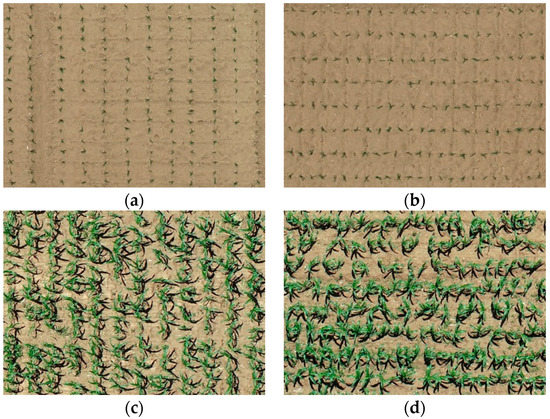

Figure 3.

Maize images in typical test area: (a) ROI1 (V3 stage), (b) ROI2 (V3 stage), (c) ROI3 (V6 stage), (d) ROI4 (V6 stage).

2.2. Maize Seedling Center Detection

2.2.1. Construction of Maize Seedling Center Detection Index (MCDI)

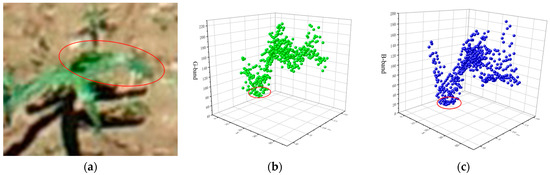

The image of a maize field is mainly composed of soil, shadow, and vegetation. The soil is mainly yellow-brown, the shadow is mainly dark, and the vegetation (maize plants and weeds) is mainly green. Therefore, the linear combination of red, green, and blue color components in RGB color space can maximize the difference between crops and soil and shadows to realize the separation of crops and background. According to the optical principle, the absorption band of a vegetation area was located in the blue and red bands, and the reflection peak was located in the green band. Therefore, [35] could effectively detect the plant area and realize the separation of maize plants from the background. The growth morphology of seedling maize plants was characterized by dark green leaves, tender green leaf bases, broad lance-shaped leaves, broad and long leaves, and wavy folded leaf margins. As shown in Figure 4, the color of young leaves of maize seedlings at the seedling stage is different from that of old leaves. Young leaves in the center of the seedling showed light green, while old leaves at the end showed dark green. This feature could be used to extract the center area of maize seedlings. According to statistical analysis, the difference between young and old leaves in the visible spectrum was mainly concentrated in the blue and green bands. The subtraction operation could be used to increase the spectral reflectance and contrast between different ground objects to increase the difference in spectral reflectance between young leaves and old leaves of vegetation. As shown in Figure 4a, the area within the red circle represents the center of the maize seedling and an adjacent intact maize leaf blade. As shown in Figure 4b,c, the first trough represents the center of the maize seedling, where the blue component is close to zero and significantly lower than the green component. 
The wave crest represents the leaf pile height, i.e., the region with the strongest reflectivity. The key region of interest extracted by our algorithm is the region from the first trough to the crest. Comparing the blue and green bands of the leaves in the center and edge regions of the seedlings shows that the blue component of the leaf tails near the edge region is higher, so these leaves appear dark green.

Figure 4.

Spectral analysis of maize leaves: (a) Original image, (b) leaf G-band scatter plot, (c) leaf B-band scatter plot.

Based on the above analysis, we proposed a maize center detection index (MCDI) based on the red, green, and blue bands of a UAV RGB image, which was defined as follows:

where R, G, and B represent the red, green, and blue bands of the UAV RGB image, and the value ranges from 0 to 255; r, g, and b are normalized color components with values ranging from 0 to 1.

2.2.2. Otsu Threshold Segmentation

The traditional image segmentation algorithms mainly include edge detection, thresholding, and region-based segmentation. The Otsu method [36], also known as the maximum inter-class variance method, has the advantages of broad applicability, robust adaptability, and simple implementation, and it plays an important role in image segmentation. According to the gray characteristics of the image, the image was divided into two parts: background and target. A threshold was selected statistically so that it separates the target and background as much as possible. Firstly, the pixel proportions $\omega_0$ and $\omega_1$ (bounded by threshold T) and the average grays $\mu_0$ and $\mu_1$ of the target and background were obtained, respectively. Then, the total average gray of the image, $\mu = \omega_0 \mu_0 + \omega_1 \mu_1$, was calculated. Finally, the variance between classes, $g$, was calculated. The higher the variance, the more distinct the difference between the target and the background, and the better the image segmentation effect. The optimal threshold was the threshold at which the variance between classes was maximum, and the formula was as follows:

$$g = \omega_0 (\mu_0 - \mu)^2 + \omega_1 (\mu_1 - \mu)^2 \tag{4}$$

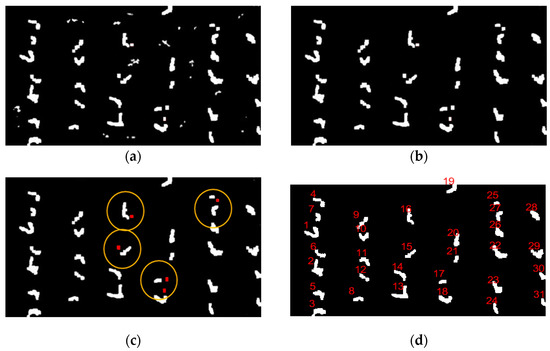

After the spectral differences between old and young leaves were enhanced by MCDI, the Otsu algorithm was used for maize seedling center segmentation to generate binarized images. In concrete terms, $\omega_0$, $\omega_1$, $\mu_0$, $\mu_1$, and $\mu$ refer to the ratio of the maize seedling pixels, the ratio of the non-maize seedling pixels, the average gray of the maize seedling pixels, the average gray of the non-maize seedling pixels, and the total average gray of the image, respectively. Equation (4) was used to calculate the interclass variance between the gray values of the maize seedling and the non-maize seedling pixels. The threshold at which the interclass variance was maximum was selected for maize seedling segmentation. As shown in Figure 5a, the Otsu algorithm was applied to segment the center of the maize seedling to generate a binarized image.
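The threshold search described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the index image has already been rescaled to integer gray levels 0 to 255, and the function name `otsu_threshold` is hypothetical.

```python
def otsu_threshold(gray_values, levels=256):
    """Return the gray level T that maximizes the between-class variance.

    gray_values: iterable of integer gray levels in [0, levels).
    Pixels with gray <= T form one class, pixels with gray > T the other.
    """
    gray_values = list(gray_values)
    hist = [0] * levels
    for v in gray_values:
        hist[v] += 1

    total = len(gray_values)
    total_sum = sum(level * count for level, count in enumerate(hist))
    mu = total_sum / total  # total average gray of the image

    best_t, best_var = 0, -1.0
    count0 = 0  # number of pixels with gray <= t
    sum0 = 0    # gray sum of those pixels
    for t in range(levels - 1):
        count0 += hist[t]
        sum0 += t * hist[t]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue  # one class is empty, variance undefined
        w0, w1 = count0 / total, count1 / total
        mu0 = sum0 / count0
        mu1 = (total_sum - sum0) / count1
        # Between-class variance for this candidate threshold
        var_between = w0 * (mu0 - mu) ** 2 + w1 * (mu1 - mu) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Toy example: two well-separated gray populations
pixels = [10, 11, 12, 200, 205]
T = otsu_threshold(pixels)
binary = [1 if v > T else 0 for v in pixels]  # assumption: bright = target
```

Treating the brighter class as the target is an assumption of this sketch; whether seedling centers are the bright or the dark class depends on how the index is scaled.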

Figure 5.

Algorithm result diagram: (a) Binarized images based on Otsu algorithm, (b) morphological processing for noise removal, (c) weed noise elimination schematic, (d) maize seedling counting based on connected component labeling.

2.3. Maize Seedling Counting

2.3.1. Morphological Processing

The color of weeds in the maize fields was similar to that of maize plants. As a result, the binary image obtained after Otsu threshold segmentation contained a certain amount of weed noise and had to be processed further before the maize seedlings could be counted. In this study, minor noise was first removed by morphological processing, and large-area weed noise was then removed by a dual-threshold search method.

Suitable structuring elements were selected to perform erosion on the image to eliminate small-area weeds, and the eroded maize morphological features were then restored as far as possible through dilation. Erosion eliminates boundary points so that the boundary shrinks inward, whereas dilation is the reverse operation, which causes the boundary to expand outward. The morphological opening operation eliminates isolated minor points and burrs. Morphological closing fills holes and closes small cracks, while the overall position and shape remain unchanged. Specifically, a 3 × 3 square structuring element was used in the opening operation to eliminate minor noise. The morphological opening operation is shown in Equation (5). After morphological optimization, the final results of maize seedling center detection were obtained, as shown in Figure 5b.

$$A \circ B = (A \ominus B) \oplus B \tag{5}$$

where A is the image after threshold segmentation, B is the morphological structuring element, $\ominus$ and $\oplus$ denote erosion and dilation, and $A \circ B$ is the result of opening A with the structuring element B.
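As an illustration of the opening operation with a 3 × 3 square structuring element, the following sketch erodes and then dilates a small binary image stored as nested lists. The function names are hypothetical, and treating pixels outside the image as background is an assumption of this sketch rather than something stated in the text.

```python
OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]  # 3 x 3 square


def erode(img):
    """A pixel stays 1 only if every pixel under the 3 x 3 element is 1."""
    h, w = len(img), len(img[0])
    return [[int(all(0 <= y + dy < h and 0 <= x + dx < w and img[y + dy][x + dx]
                     for dy, dx in OFFSETS))
             for x in range(w)] for y in range(h)]


def dilate(img):
    """A pixel becomes 1 if any pixel under the 3 x 3 element is 1."""
    h, w = len(img), len(img[0])
    return [[int(any(0 <= y + dy < h and 0 <= x + dx < w and img[y + dy][x + dx]
                     for dy, dx in OFFSETS))
             for x in range(w)] for y in range(h)]


def opening(img):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(img))


# Toy example: a 3 x 3 blob (kept) plus one isolated noise pixel (removed)
image = [[0] * 6 for _ in range(6)]
for y in range(1, 4):
    for x in range(1, 4):
        image[y][x] = 1
clean = [row[:] for row in image]
image[5][5] = 1  # isolated noise pixel
opened = opening(image)
```

In the toy example the isolated pixel disappears while the 3 × 3 blob is restored to its original shape, which is the behavior the opening step relies on for minor noise.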

For large-area weed noise that cannot be removed by morphological processing, a dual-threshold method was used, as shown in Figure 5c. The area threshold and the plant distance threshold for noise points were set by calculating the area of each connected region and the distance between the centroids of the connected regions. A connected region that satisfies Equation (6) is treated as a noise point and removed. In this experiment, s was set to an area of 50 pixels and d to a distance of 80 pixels.

where Area is the area of the connected region, r is the distance between the centroid points in each connected region, s is the noise point area threshold, and d is the plant distance threshold.

2.3.2. Connected Component Labeling

The connected component labeling of the binary image refers to the process of setting unique labels for pixels of different connected components in the image [37]. The commonly used adjacency judgment methods are 8-adjacency and 4-adjacency. Eight-adjacency compares the upper, lower, left, right, and diagonal pixels of the target pixel with the target pixel to determine whether they possess the same properties. If they do, the target pixel is connected to that pixel. Similarly, 4-adjacency compares only the upper, lower, left, and right pixels of the target pixel with the target pixel, and connects them if they have the same properties. As shown in Figure 5d, the maize seedling count is obtained using connected component labeling. The detailed steps of the connected component labeling method in this study were as follows.

- (1) The binary image was scanned line by line from top to bottom. The line number, starting point, and ending point of each line-connected component were recorded.

- (2) The connected components were marked line by line. Each component was checked for overlap with the connected components in the previous line. If such overlap existed, the label of the overlapping component was assigned to the connected component. If the component overlapped with multiple connected components, the minimum label was assigned to these connected components, and the labels of the connected components in the previous line were written into an equivalence pair and given the minimum label. If there was no overlap with any connected component in the previous line, a new label was assigned to the connected component and the scanning continued.

- (3) Following the initial scan, minimum labeling of equivalence pairs was conducted: the minimum label of each equivalence pair was assigned to all connected components in that pair until no unresolved equivalence pairs remained.
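The two-pass idea in the steps above can be sketched as follows. Note the simplification: the text describes a run-based scan (one record per line-connected component), whereas this sketch labels pixel by pixel with 8-adjacency and resolves equivalences with a small union-find table that always keeps the minimum label. The function name `label_components` is hypothetical.

```python
def label_components(img):
    """Two-pass connected component labeling with 8-adjacency.

    Returns (number_of_components, label_image). Label 0 is background.
    """
    h, w = len(img), len(img[0])
    labels = [[0] * w for _ in range(h)]
    parent = [0]  # parent[i] = representative of label i; index 0 unused

    def find(i):
        # Follow equivalences down to the minimum (root) label
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # First pass: provisional labels and equivalence recording
    for y in range(h):
        for x in range(w):
            if not img[y][x]:
                continue
            roots = []
            # Already-scanned neighbours: left, upper-left, upper, upper-right
            for dy, dx in ((0, -1), (-1, -1), (-1, 0), (-1, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and labels[ny][nx]:
                    roots.append(find(labels[ny][nx]))
            if not roots:
                parent.append(len(parent))  # create a new label
                labels[y][x] = len(parent) - 1
            else:
                smallest = min(roots)
                labels[y][x] = smallest
                for r in roots:
                    parent[r] = smallest  # merge into the minimum label

    # Second pass: replace each provisional label by its minimum equivalent
    final = set()
    for y in range(h):
        for x in range(w):
            if labels[y][x]:
                labels[y][x] = find(labels[y][x])
                final.add(labels[y][x])
    return len(final), labels


# Toy example: a U-shaped blob whose two arms only meet on the last row
u_shape = [[1, 0, 1],
           [1, 0, 1],
           [1, 1, 1]]
count, _ = label_components(u_shape)
```

The U-shaped example is the case that needs the equivalence step: the two arms receive different provisional labels and are merged only when the bottom row is scanned.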

2.4. Calculation of Emergence Rate, Canopy Coverage, and Seedling Uniformity

When testing the seedling emergence rate, the calculation was realized in units of seeding holes, and one seedling per hole was considered as emerged. The emergence rate of maize was calculated by the number of maize seeding holes in the image and the number of maize seedlings extracted by the algorithm, as shown in Equation (7).

$$E = \frac{N_s}{N_h} \times 100\% \tag{7}$$

where $E$ is the emergence rate of maize in the image, $N_s$ is the number of maize seedlings, and $N_h$ is the number of seeding holes.

The separation of vegetation from the background was a prerequisite for canopy coverage calculation. After the vegetation information was extracted, canopy coverage was calculated in terms of the binarized image to characterize the maize growth in the area. The canopy coverage formula was defined as follows:

$$C = \frac{P_c}{P_t} \times 100\% \tag{8}$$

where $C$ is the coverage of maize canopy, $P_c$ is the sum of maize canopy pixels, and $P_t$ is the sum of total image pixels.

At the seedling stage, uniformity was mainly evaluated from two aspects: emergence rate and emergence quality. The coefficient of variation CV1 was calculated based on the standard deviation and mean of maize emergence rate to characterize seedling emergence uniformity at the V3 stage. The coefficient of variation CV2 was calculated based on the standard deviation and mean of canopy coverage to characterize the seedling growth uniformity at the V6 stage. The formulas of CV1 and CV2 are defined as follows:

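$$CV_1 = \frac{\sigma_E}{\bar{E}} \times 100\% \tag{9}$$

$$CV_2 = \frac{\sigma_C}{\bar{C}} \times 100\% \tag{10}$$

where $\sigma_E$ is the standard deviation of the emergence rate, $\bar{E}$ is the mean of the emergence rate, $\sigma_C$ is the standard deviation of the maize canopy coverage, and $\bar{C}$ is the mean value of the canopy coverage.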
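The three plot-level quantities defined in this section can be computed with a few lines of code. The sketch below is illustrative only: the function names are hypothetical, and the use of the population standard deviation (`statistics.pstdev`) is an assumption, since the text does not say whether the population or the sample form was used.

```python
from statistics import mean, pstdev


def emergence_rate(n_seedlings, n_holes):
    """Emergence rate in percent: detected seedlings per seeding hole."""
    return 100.0 * n_seedlings / n_holes


def canopy_coverage(binary_img):
    """Canopy coverage in percent: canopy pixels over all image pixels."""
    total = sum(len(row) for row in binary_img)
    canopy = sum(1 for row in binary_img for p in row if p)
    return 100.0 * canopy / total


def coefficient_of_variation(values):
    """CV in percent: standard deviation divided by mean.

    Assumption: population standard deviation; swap in statistics.stdev
    if the sample form is wanted.
    """
    return 100.0 * pstdev(values) / mean(values)


# Toy example with made-up per-plot values (not data from the study)
rates = [emergence_rate(n, 100) for n in (60, 70, 80)]
cv1 = coefficient_of_variation(rates)
cover = canopy_coverage([[1, 0], [0, 0]])
```

A lower coefficient of variation means the plots are more alike, so it serves as the uniformity measure for the emergence rate (CV1) or the canopy coverage (CV2).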

2.5. Evaluation Metrics

In order to evaluate the recognition effect of maize seedling center detection index (MCDI) on maize center of UAV remote sensing images, the extraction results of MCDI and the most commonly used color index for seedling detection were compared and analyzed. The formulas of each color index are shown in Table 1.

Table 1.

Common color vegetation indices based on RGB images.
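For orientation, three of the comparison indices can be written out using their commonly cited definitions: ExG = 2g − r − b, NGRDI = (G − R)/(G + R), and GLI = (2G − R − B)/(2G + R + B). This is a sketch under assumptions: the formulas are the widely used forms rather than a transcription of Table 1, the chromatic normalization r = R/(R + G + B) is assumed, and the function name `color_indices` is hypothetical. MCDI itself is not included.

```python
def color_indices(R, G, B):
    """Compute ExG, NGRDI and GLI for one pixel with 0-255 band values."""
    total = R + G + B
    if total == 0:
        # Pure black pixel: define all indices as 0 to avoid division by zero
        return {"ExG": 0.0, "NGRDI": 0.0, "GLI": 0.0}

    # Assumed normalization: chromatic coordinates that sum to 1
    r, g, b = R / total, G / total, B / total

    exg = 2 * g - r - b
    ngrdi = (G - R) / (G + R) if (G + R) else 0.0
    gli = (2 * G - R - B) / (2 * G + R + B)
    return {"ExG": exg, "NGRDI": ngrdi, "GLI": gli}


# Toy example: a strongly green pixel versus a neutral gray pixel
green = color_indices(50, 200, 50)
gray = color_indices(100, 100, 100)
```

All three indices respond to how much the green band exceeds the others, which is why they separate vegetation from soil but do not by themselves distinguish young from old leaves.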

The performance of the maize seedling counting method was quantitatively evaluated by using True Positive (TP), False Negative (FN), False Positive (FP), the recall rate (R), the precision rate (P), the overall accuracy (OA), the commission error (CE), the omission error (OE), and the F1-score (F1), as shown in Equations (11)–(16), respectively. The F1-score takes into account the accurate detection as well as the missed detection. The F1-score has a range of 0 to 1, with higher values representing higher detection accuracy.

where TP is the number of detected maize seedlings that are real maize seedlings, FN is the number of real maize seedlings missed by the detection, and FP is the number of non-maize objects incorrectly detected as maize seedlings.
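As a brief illustration, precision, recall, and the F1-score can be computed from the three counts using their standard definitions: P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R). The sketch deliberately leaves out the overall accuracy, commission error, and omission error, because their exact formulas are not recoverable from the text; the function name is hypothetical and the counts in the example are made up.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp)  # share of detections that are real seedlings
    recall = tp / (tp + fn)     # share of real seedlings that were detected
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy example: 99 correct detections, no false detections, 1 missed seedling
p, r, f1 = precision_recall_f1(tp=99, fp=0, fn=1)
```

With no false detections the precision is 1, so the F1-score lies between the recall and 1; missed seedlings alone therefore lower F1 less than they lower recall.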

3. Results

3.1. Detection of Maize Seedlings

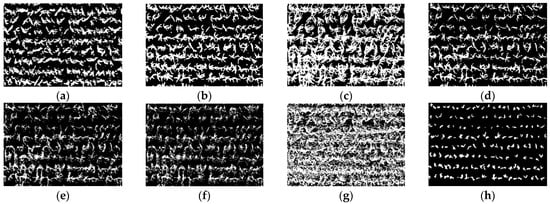

As described in Section 2.2, the maize center detection index (MCDI) was used to enhance the spectral differences between older and younger leaves, and then the Otsu algorithm was used for seedling center segmentation. The images of ROI1 to ROI4 in the study area were selected to detect the maize seedlings, which included two plant densities, as shown in Figure 3. Maize seedlings show weak color characteristics at the V3 stage and ambiguous color characteristics due to leaf overlapping at the V6 stage. The algorithm therefore needs to compromise between the weak characteristics at the early seedling stage and the ambiguous characteristics at the late seedling stage (before and after the third leaf). The results of Otsu threshold segmentation of the ROI1, ROI2, ROI3, and ROI4 images based on GBDI, ExG, ExR, ExG − ExR, NGRDI, GLI, Cg, and MCDI are shown in Figure 6, Figure 7, Figure 8 and Figure 9, respectively.

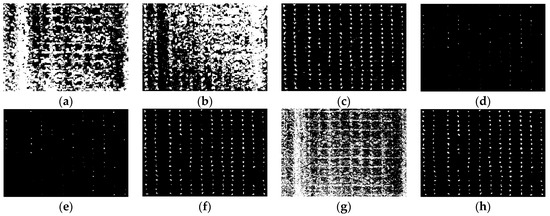

Figure 6.

The binary images of Otsu segmentation using different vegetation indices in ROI1 (V3 stage): (a) GBDI, (b) ExG, (c) ExR, (d) ExG − ExR, (e) NGRDI, (f) GLI, (g) Cg, (h) MCDI.

Figure 7.

The binary images of Otsu segmentation using different vegetation indices in ROI2 (V3 stage): (a) GBDI, (b) ExG, (c) ExR, (d) ExG − ExR, (e) NGRDI, (f) GLI, (g) Cg, (h) MCDI.

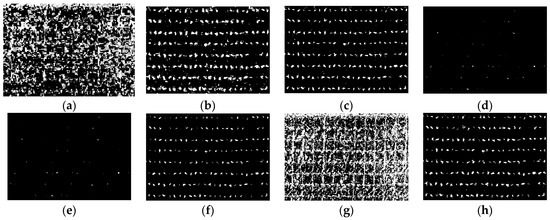

Figure 8.

The binary images of Otsu segmentation using different vegetation indices in ROI3 (V6 stage): (a) GBDI, (b) ExG, (c) ExR, (d) ExG − ExR, (e) NGRDI, (f) GLI, (g) Cg, (h) MCDI.

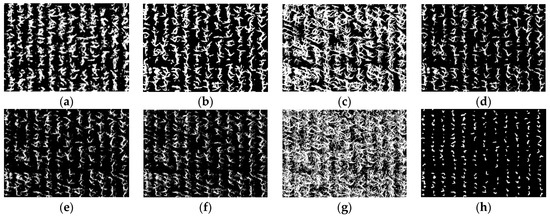

Figure 9.

The binary images of Otsu segmentation using different vegetation indices in ROI4 (V6 stage): (a) GBDI, (b) ExG, (c) ExR, (d) ExG − ExR, (e) NGRDI, (f) GLI, (g) Cg, (h) MCDI.

As shown in Figure 6a,g and Figure 7a,g, the seedling detection based on GBDI and Cg shows large patches and poor segmentation results. As shown in Figure 6d,e and Figure 7d,e, the maize seedlings are barely extracted. The extraction based on the ExG component was unstable, as shown in Figure 6b and Figure 7b. As shown in Figure 6c,f and Figure 7c,f, the interference from noise is obvious. As shown in Figure 8a–f and Figure 9a–f, the extracted results still showed adhesion between maize seedling leaves. The maize seedlings could not be extracted, as shown in Figure 8c,g and Figure 9c,g. As shown in Figure 6h, Figure 7h, Figure 8h and Figure 9h, the MCDI component can effectively extract the maize seedling center, and the results are significantly better than the other experimental results.

3.2. Quantitative Analysis of Seedling Counting Algorithm

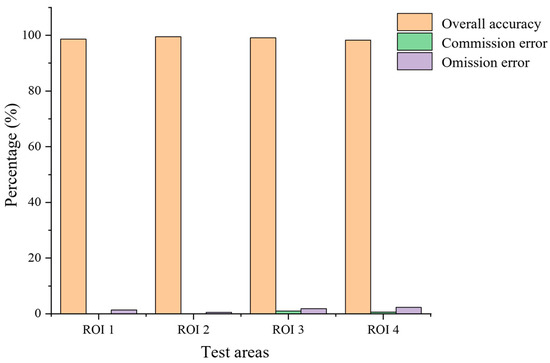

The number and accuracy of maize seedlings extracted from the above four typical test areas are shown in Table 2 and Table 3, respectively. The comparison of the overall accuracy, the commission error, and the omission error is shown in Figure 10.

Table 2.

Extraction results of maize seedling number in test areas.

Table 3.

Extraction accuracy of maize seedling number in test areas.

Figure 10.

Histogram of extraction accuracy of maize seedling number.

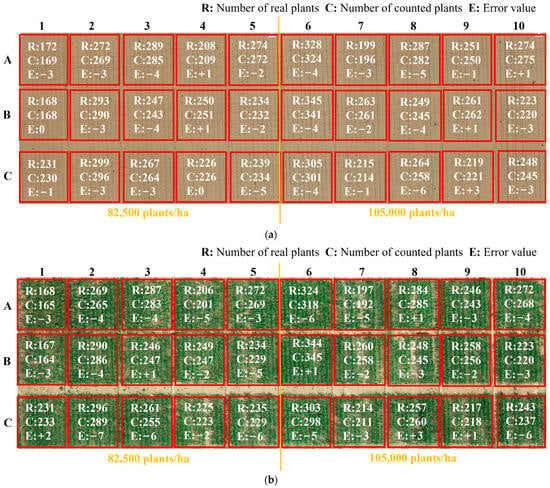

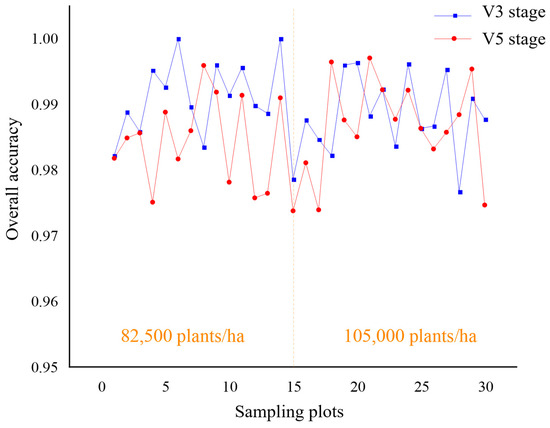

To further evaluate the algorithm performance, 30 sampling plots were selected from the experimental field and counted. The planting density of plots 1–5 was 82,500 plants/ha (approximately 8 plants/m²). The planting density of plots 6–10 was 105,000 plants/ha (approximately 10 plants/m²). The statistics of the number of seedlings extracted from the 30 sampling plots based on the proposed algorithm are shown in Figure 11. The numbers of real and detected seedlings are compared in Figure 12. The overall accuracy of seedling number extraction by the proposed algorithm at the V3 and V6 stages of maize is compared in Figure 13.

Figure 11.

Accuracy statistics of sampling plots at different seedling stages: (a) V3 stage, (b) V6 stage.

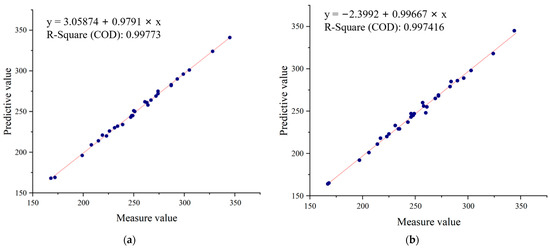

Figure 12.

Comparative analysis of real and detected seedlings: (a) V3 stage, (b) V6 stage.

Figure 13.

Comparative analysis of overall accuracy of sampling plots at different seedling stages.

3.3. Evaluation of Seedling Growth

The characteristics extracted at the seedling stage were used for the quantitative assessment of maize seedling conditions. Emergence rate, canopy coverage, and seedling uniformity were calculated per plot to monitor maize growth automatically. The calculations of the emergence rate and seedling uniformity of the 30 sampling plots are shown in Table 4 and Table 5.

Table 4.

Calculations of emergence rate and seedling uniformity in the sampling plots.

Table 5.

Calculations of canopy cover and seedling uniformity in the sampling plots.

4. Discussion

Maize seedlings show smaller morphology and weaker color characteristics at the V3 stage, as shown in Figure 6 and Figure 7. GBDI and Cg components were easily disturbed by the intensity of soil pixels and appeared as large patches, resulting in poor segmentation. The ExG − ExR and NGRDI components have limited ability to enhance vegetation pixels, thereby preventing vegetation extraction. The extraction effect of the ExG component is unstable. By comparison, ExR, GLI, and MCDI components can separate vegetation from the soil. However, the segmentation results of ExR and GLI components are still prone to noise, such as soil pixels. Therefore, the MCDI component exhibits a greater advantage in identifying maize seedlings during the seedling stage.

As shown in Figure 8 and Figure 9, maize seedlings have more extensive morphology and severe leaf overlapping at the V6 stage. GBDI component significantly enhances the intensity of vegetation and soil pixels, while there is a significant difference between shadows and vegetation. This leads to the results after Otsu threshold segmentation being severely disturbed by shadows. ExG and ExG-ExR components could effectively enhance vegetation. The application of the Otsu threshold segmentation algorithm adequately preserves the morphology of maize seedlings. However, the extensive overlapping of maize leaves leads to significant adhesion. ExR and Cg components stretch the shadows and the vegetation to the same pixel intensity, making vegetation extraction impossible. From the segmentation results, maize seedling center pixels can be recognized by NGRDI and GLI components, but there is still leaf overlapping leading to plant adhesion, and there is more noise present, such as bumps and burrs. The MCDI component can effectively extract the tender green maize seedling center. The end dark green leaves can be accurately filtered out in the Otsu threshold segmentation, and soil and shadow pixel doping are almost absent. Consequently, the segmentation accuracy is improved. Based on the above analysis, the MCDI component segmentation has fewer impurities and a more complete extraction of the maize seedling center, because it mitigates the interference in counting caused by the overlap of maize leaves. Therefore, the proposed MCDI component can effectively detect maize seedlings at the early or late seedling stages, which is helpful in improving the extraction accuracy.

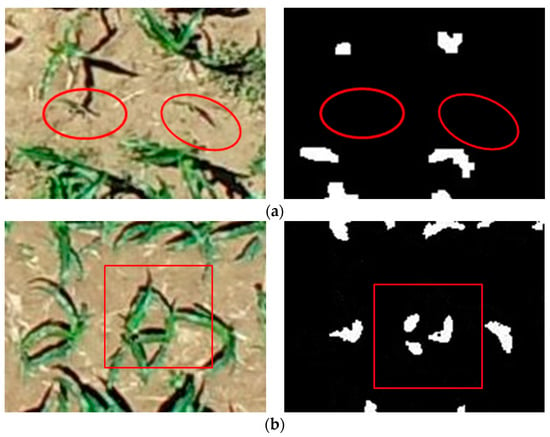

Moreover, the results show that the algorithm achieves high estimation accuracy. At the V3 stage, the average overall accuracy and the average F1 score of the seedling counting algorithm are 99.06% and 99.53%, respectively, with zero plant misidentifications and a few missed identifications. At the V6 stage, the average overall accuracy of the proposed method is 98.67%, and the average F1 score is 98.57%, with more missed and misidentified plants than at the V3 stage. The causes of the errors are shown in Figure 14 and can be attributed to two main factors. One is the presence of weak seedlings, as indicated by the red oval marks in Figure 14a, which are easily overlooked because of their small size and extremely weak color characteristics. The other is the presence of maize seedling centers obscured by leaves of neighboring plants in the late emergence stage, as marked by red rectangles in Figure 14b, which can easily lead to misidentification. From Figure 10, it can be observed that the extraction accuracy of seedling number at the maize seedling stage is above 98%, and the error is controlled below 3%, indicating reliable accuracy.

Figure 14. Error analysis: (a) omission error, (b) commission error.
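The relationship between omission errors, commission errors, and the reported scores can be made concrete with a short sketch. It uses the standard definitions of precision, recall, and F1-score; whether the paper aggregates them per plot in exactly this way is an assumption, and the function name `counting_scores` and the counts in the example are hypothetical.

```python
def counting_scores(true_positive, false_positive, false_negative):
    """Return (precision, recall, F1) from seedling detection counts.

    true_positive  -- seedlings detected correctly
    false_positive -- detections that are not seedlings (commission errors)
    false_negative -- seedlings that were missed (omission errors)
    """
    detected = true_positive + false_positive
    actual = true_positive + false_negative
    precision = true_positive / detected if detected else 0.0
    recall = true_positive / actual if actual else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Hypothetical plot: 98 seedlings found, none misidentified, 2 missed.
precision, recall, f1 = counting_scores(98, 0, 2)
print(f"P = {precision:.4f}, R = {recall:.4f}, F1 = {f1:.4f}")
```

With no commission errors the precision is 1, so the F1-score is driven entirely by the missed seedlings. Weak seedlings (Figure 14a) lower recall, whereas obscured seedling centers (Figure 14b) lower precision.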

As shown in Figure 11, the proposed method achieved high plant extraction accuracy in all sampling plots. Although the planting density varied between sampling plots, the automatic extraction results remained accurate, with a statistical error ranging from 0.4% to 2.7%. Linear regression analysis was performed to compare the number of plants extracted by the proposed method with the number counted by manual visual inspection. As shown in Figure 12, the coefficients of determination (R²) between the automatically and manually counted maize seedlings are all higher than 0.99, which demonstrates the high accuracy and reliability of the seedling counting method. As shown in Figure 13, when the overall accuracy is compared across planting densities, the accuracy of seedling extraction in the sampling plots with a planting density of 105,000 plants/ha is slightly lower than that in the sampling plots with a planting density of 82,500 plants/ha. In addition, the extraction accuracy at the V6 stage is slightly lower than that at the V3 stage because of leaf overlapping. Overall, the proposed method extracts the number of maize seedlings with low error and is robust across planting densities and growth stages.
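The comparison between automatic and manual counts can be sketched as an ordinary least-squares fit with its coefficient of determination, plus a per-plot relative error. This is a minimal illustration under stated assumptions: the function names `linear_fit_r2` and `relative_count_error` are hypothetical, the relative error is assumed to be the absolute count difference divided by the manual count, and the plot counts are invented for the example.

```python
def linear_fit_r2(manual, automatic):
    """Fit automatic = slope * manual + intercept and return (slope, intercept, R^2)."""
    n = len(manual)
    if n != len(automatic) or n < 2:
        raise ValueError("need two equally long series with at least two plots")
    mean_x = sum(manual) / n
    mean_y = sum(automatic) / n
    sxx = sum((x - mean_x) ** 2 for x in manual)
    ss_tot = sum((y - mean_y) ** 2 for y in automatic)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("counts must vary between plots")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(manual, automatic))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(manual, automatic))
    return slope, intercept, 1 - ss_res / ss_tot


def relative_count_error(manual_count, automatic_count):
    """Absolute counting error as a percentage of the manual count."""
    return abs(automatic_count - manual_count) / manual_count * 100


# Hypothetical per-plot seedling counts (manual reference vs. automatic extraction).
manual_counts = [210, 245, 198, 260, 232]
automatic_counts = [208, 243, 197, 255, 230]
slope, intercept, r2 = linear_fit_r2(manual_counts, automatic_counts)
errors = [relative_count_error(m, a) for m, a in zip(manual_counts, automatic_counts)]
print(f"R^2 = {r2:.4f}, max relative error = {max(errors):.2f}%")
```

A high R² alone only shows that the two series vary together, so the per-plot relative error is reported alongside it to confirm that the counts also agree in magnitude.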

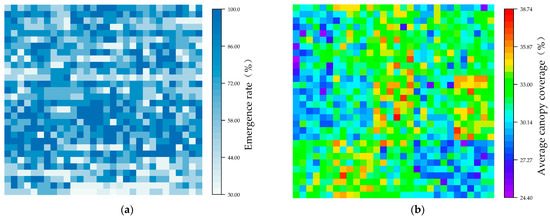

The maize seedling emergence rate, canopy coverage, and coefficient of variation of the 30 sampling plots were calculated to provide a comprehensive monitoring of the maize seedling condition in the study area. As shown in Table 4, the average emergence rate of maize at the V3 stage in the 30 sampling plots is 66.98% with a coefficient of variation CV1 of 15.89%. The average maize emergence rate is 72.14% with CV1 of 16.19% for the density of 82,500 plants/ha and 61.82% with CV1 of 15.59% for the density of 105,000 plants/ha. The maize emergence rate in the 30 sampling plots is 66.11% at the V6 stage with a coefficient of variation CV1 of 16.23%. The average emergence rate is 71.05% with a CV1 of 16.34% for the density of 82,500 plants/ha and 61.17% with a CV1 of 16.13% for the density of 105,000 plants/ha. As shown in Table 5, the maize canopy coverage at the V3 stage in the 30 sampling plots is 2.29% with a coefficient of variation CV2 of 10.97%. The average maize canopy coverage is 2.22% with CV2 of 10.85% for the density of 82,500 plants/ha and 2.36% with CV2 of 11.09% for the density of 105,000 plants/ha. The average canopy coverage at the V6 stage in the 30 sampling plots is 32.21% with a coefficient of variation CV2 of 8.20%. The average maize canopy coverage is 31.49% with a CV2 of 8.22% for the density of 82,500 plants/ha and 32.93% with a CV2 of 8.18% for the density of 105,000 plants/ha.
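The three plot-level indicators reported in Tables 4 and 5 can be sketched as follows. The formulas are assumptions, since the definitions are given earlier in the paper and are not restated here: emergence rate is taken as counted seedlings divided by seeds sown, canopy coverage as the share of vegetation pixels in a binary mask, and the coefficient of variation as the sample standard deviation divided by the mean. All function names and input values are hypothetical.

```python
from statistics import mean, stdev


def emergence_rate(seedlings_counted, seeds_sown):
    """Emerged seedlings as a percentage of the seeds sown in a plot."""
    return seedlings_counted / seeds_sown * 100


def canopy_coverage(mask):
    """Percentage of vegetation pixels in a binary mask (list of rows of booleans)."""
    total = sum(len(row) for row in mask)
    vegetation = sum(sum(1 for pixel in row if pixel) for row in mask)
    return vegetation / total * 100


def coefficient_of_variation(values):
    """Sample standard deviation as a percentage of the mean (lower = more uniform)."""
    return stdev(values) / mean(values) * 100


# Hypothetical plots: (seedlings counted, seeds sown).
plots = [(150, 220), (162, 220), (131, 220), (170, 220)]
rates = [emergence_rate(counted, sown) for counted, sown in plots]
print(f"mean emergence rate = {mean(rates):.2f}%")
print(f"CV of emergence rate = {coefficient_of_variation(rates):.2f}%")

# Hypothetical 3 x 4 vegetation mask with three vegetation pixels.
toy_mask = [
    [False, True, False, False],
    [False, True, True, False],
    [False, False, False, False],
]
print(f"canopy coverage = {canopy_coverage(toy_mask):.2f}%")
```

Under this reading, the CV summarizes how evenly an indicator is distributed across plots, which is why it serves as the uniformity measure for both emergence rate (CV1) and canopy coverage (CV2).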

To visualize maize emergence, a heatmap was generated from the V3-stage estimates of the proposed method for the plots planted at a density of 82,500 plants/ha, as shown in Figure 15a. Likewise, the distribution of maize canopy coverage was plotted from the average canopy coverage at the V6 stage in the respective plots, as shown in Figure 15b. Based on the above analysis, maize emergence in the study area is inadequate, and the uniformity of growth is slightly poor.

Figure 15. Spatial distribution of emergence rate and canopy coverage for maize: (a) emergence rate, (b) canopy coverage.

5. Conclusions

In this study, we proposed an automatic monitoring method of maize seedling growth based on UAV RGB imagery, which realized accurate maize seedling counting and rapid monitoring of seedling conditions in the field. The approach offers technical support for precision agriculture in seedling management. A comparative analysis of the seedling extraction results of the 30 sampling plots at the early and late growth stages for two different planting densities led to the following conclusions.

- (1) The maize seedling center detection index (MCDI) was constructed to clearly separate the maize seedling center from the background, allowing accurate identification and extraction of the maize seedling center.

- (2) The proposed seedling counting method effectively solves the problem of leaf adhesion, caused by severe leaf crossing at the late seedling stage, which otherwise affects seedling extraction. This significantly improves the applicability and robustness of the maize seedling monitoring algorithm.

- (3) Based on the quantitative evaluation of maize seedling number, emergence rate, canopy coverage, and uniformity, the overall growth of maize at the seedling stage is effectively monitored. This provides timely and accurate data support for precision crop management and helps growers take timely measures to ensure sufficient, complete, and vigorous seedlings and thus achieve high yields.

Author Contributions

Conceptualization, F.Y., M.G. and H.W.; methodology, M.G. and X.L.; software, M.G.; validation, M.G. and F.Y.; formal analysis, M.G.; investigation, M.G.; resources, F.Y. and M.G.; data curation, F.Y., M.G. and X.L.; writing—original draft preparation, M.G. and F.Y.; writing—review and editing, H.W.; supervision, F.Y. and H.W.; project administration, F.Y.; funding acquisition, F.Y. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of China (grant number 61972363), the Central Government Leading Local Science and Technology Development Fund Project (grant number YDZJSX2021C008), and the Postgraduate Science and Technology Project of North University of China (grant number 20221832).

Data Availability Statement

The original dataset used in this research is available on request from gaomindm@163.com.

Acknowledgments

The authors would like to acknowledge the support from the Cultivation and Physiology Research Unit of the Maize Research Institute of Shanxi Agricultural University; the plant trait surveys were completed by Zhengyu Guo, Zhongdong Zhang, Shuai Gong, Li Chen, and Haoyu Wang. In addition, the authors would like to acknowledge the assistance with field data acquisition provided by Fan Xie, Zeliang Ma, Danjing Zhao, and Rui Wang.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| UAV | unmanned aerial vehicle |
| V(n) stage | nth leaf stage |
| MCDI | maize seedling center detection index |
| GBDI | green–blue difference index |
| ExG | excess green index |
| ExR | excess red index |
| NGRDI | normalized green minus red difference index |
| GLI | green leaf index |
| Cg | YCrCb–green difference index |
| R | recall rate |
| P | precision rate |
| OA | overall accuracy |
| CE | commission error |
| OE | omission error |
| F1 | F1-score |

References

- Pierce, F.J.; Nowak, P. Aspects of Precision Agriculture. In Advances in Agronomy; Sparks, D.L., Ed.; Elsevier: Amsterdam, The Netherlands, 1999; pp. 1–85.

- Gao, M.; Yang, F.; Wei, H.; Liu, X. Individual Maize Location and Height Estimation in Field from UAV-Borne LiDAR and RGB Images. Remote Sens. 2022, 14, 2292.

- Sweet, D.; Tirado, S.; Springer, N.; Hirsch, C. Opportunities and Challenges in Phenotyping Row Crops Using Drone-based RGB Imaging. Plant Phenome J. 2022, 5, e20044.

- Tao, F.; Zhang, S.; Zhang, Z.; Rotter, R. Temporal and Spatial Changes of Maize Yield Potentials and Yield Gaps in the Past Three Decades in China. Agric. Ecosyst. Environ. 2015, 208, 12–20.

- Liu, M.; Su, W.; Wang, X. Quantitative Evaluation of Maize Emergence Using UAV Imagery and Deep Learning. Remote Sens. 2023, 15, 1979.

- Shirzadifar, A.; Maharlooei, M.; Bajwa, S.; Oduor, P.; Nowatzki, J. Mapping Crop Stand Count and Planting Uniformity Using High Resolution Imagery in a Maize Crop. Biosyst. Eng. 2020, 200, 377–390.

- Feng, A.; Zhou, J.; Vories, E.; Sudduth, K. Evaluation of Cotton Emergence Using UAV-Based Imagery and Deep Learning. Comput. Electron. Agric. 2020, 177, 105711.

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

- Liu, S.; Yin, D.; Feng, H.; Li, Z.; Xu, X.; Shi, L.; Jin, X. Estimating Maize Seedling Number with UAV RGB Images and Advanced Image Processing Methods. Precis. Agric. 2022, 23, 1604–1632.

- Zhao, L.; Han, Z.; Yang, J.; Qi, H. Single Seed Precise Sowing of Maize Using Computer Simulation. PLoS ONE 2018, 13, e0193750.

- Zhao, J.; Lu, Y.; Tian, H.; Jia, H.; Guo, M. Effects of Straw Returning and Residue Cleaner on the Soil Moisture Content, Soil Temperature, and Maize Emergence Rate in China’s Three Major Maize Producing Areas. Sustainability 2019, 11, 5796.

- Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving Estimation of LAI Dynamic by Fusion of Morphological and Vegetation Indices Based on UAV Imagery. Comput. Electron. Agric. 2022, 192, 106603.

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating Fractional Vegetation Cover of Maize under Water Stress from UAV Multispectral Imagery Using Machine Learning Algorithms. Comput. Electron. Agric. 2021, 189, 106414.

- Niu, Y.; Zhang, H.; Han, W.; Zhang, L.; Chen, H. A Fixed-Threshold Method for Estimating Fractional Vegetation Cover of Maize under Different Levels of Water Stress. Remote Sens. 2021, 13, 1009.

- Pereyra, V.; Bastos, L.; de Borja Reis, A.; Melchiori, R.J.; Maltese, N.E.; Appelhans, S.C.; Vara Prasad, P.V.; Wright, Y.; Brokesh, E.; Sharda, A.; et al. Early-Season Plant-to-Plant Spatial Uniformity Can Affect Soybean Yields. Sci. Rep. 2022, 12, 17128.

- Zhao, B.; Zhang, J.; Yang, C.; Zhou, G.; Ding, Y.; Shi, Y.; Zhang, D.; Xie, J.; Liao, Q. Rapeseed Seedling Stand Counting and Seeding Performance Evaluation at Two Early Growth Stages Based on Unmanned Aerial Vehicle Imagery. Front. Plant Sci. 2018, 9, 1362.

- Liu, T.; Li, R.; Jin, X.; Ding, J.; Zhu, X.; Sun, C.; Guo, W. Evaluation of Seed Emergence Uniformity of Mechanically Sown Wheat with UAV RGB Imagery. Remote Sens. 2017, 9, 1241.

- Karayel, D.; Özmerzi, A. Evaluation of Three Depth-Control Components on Seed Placement Accuracy and Emergence for a Precision Planter. Appl. Eng. Agric. 2008, 24, 271–276.

- Vong, C.; Conway, L.; Zhou, J.; Kitchen, N.; Sudduth, K. Early Corn Stand Count of Different Cropping Systems Using UAV-Imagery and Deep Learning. Comput. Electron. Agric. 2021, 186, 106214.

- García-Martínez, H.; Flores-Magdaleno, H.; Khalil-Gardezi, A.; Ascencio-Hernández, R.; Tijerina-Chávez, L.; Vázquez-Peña, M.A.; Mancilla-Villa, O.R. Digital Count of Corn Plants Using Images Taken by Unmanned Aerial Vehicles and Cross Correlation of Templates. Agronomy 2020, 10, 469.

- Liu, W.; Zhou, J.; Wang, B.; Costa, M.; Kaeppler, S.; Zhang, Z. IntegrateNet: A Deep Learning Network for Maize Stand Counting from UAV Imagery by Integrating Density and Local Count Maps. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6512605.

- Che, Y.; Wang, Q.; Zhou, L.; Wang, X.; Li, B.; Ma, Y. The Effect of Growth Stage and Plant Counting Accuracy of Maize Inbred Lines on LAI and Biomass Prediction. Precis. Agric. 2022, 23, 2159–2185.

- Barreto, A.; Lottes, P.; Ispizua Yamati, F.; Baumgarten, S.; Wolf, N.; Stachniss, C.; Mahlein, A.; Paulus, S. Automatic UAV-Based Counting of Seedlings in Sugar-Beet Field and Extension to Maize and Strawberry. Comput. Electron. Agric. 2021, 191, 106493.

- Floreano, D.; Wood, R.J. Science, Technology and the Future of Small Autonomous Drones. Nature 2015, 521, 460–466.

- Sun, Y.; Bi, F.; Gao, Y.; Chen, L.; Feng, S. A Multi-Attention UNet for Semantic Segmentation in Remote Sensing Images. Symmetry 2022, 14, 906.

- Maes, W.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

- Shuai, G.; Martinez-Feria, R.; Zhang, J.; Li, S.; Basso, B. Capturing Maize Stand Heterogeneity across Yield-Stability Zones Using Unmanned Aerial Vehicles (UAV). Sensors 2019, 19, 4446.

- Yu, Z.; Cao, Z.; Wu, X.; Bai, X.; Qin, Y.; Zhuo, W.; Xiao, Y.; Zhang, X.; Xue, H. Automatic Image-Based Detection Technology for Two Critical Growth Stages of Maize: Emergence and Three-Leaf Stage. Agric. For. Meteorol. 2013, 174–175, 65–84.

- Bai, Y.; Nie, C.; Wang, H.; Cheng, M.; Liu, S.; Yu, X.; Shao, M.; Wang, Z.; Wang, S.; Tuohuti, N.; et al. A Fast and Robust Method for Plant Count in Sunflower and Maize at Different Seedling Stages Using High-Resolution UAV RGB Imagery. Precis. Agric. 2022, 23, 1720–1742.

- Zhou, C.; Yang, G.; Dong, L.; Yang, X.; Xu, B. An Integrated Skeleton Extraction and Pruning Method for Spatial Recognition of Maize Seedlings in MGV and UAV Remote Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4618–4632.

- Gnädinger, F.; Schmidhalter, U. Digital Counts of Maize Plants by Unmanned Aerial Vehicles (UAVs). Remote Sens. 2017, 9, 544.

- Lin, Y.; Chen, T.; Liu, S.; Cai, Y.; Shi, H.; Zheng, D.; Lan, Y.; Yue, X.; Zhang, L. Quick and Accurate Monitoring Peanut Seedlings Emergence Rate through UAV Video and Deep Learning. Comput. Electron. Agric. 2022, 197, 106938.

- Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of Plant Density of Wheat Crops at Emergence from Very Low Altitude UAV Imagery. Remote Sens. Environ. 2017, 198, 105–114.

- Zhou, C.; Ye, H.; Xu, Z.; Hu, J.; Yang, G. Estimating Maize-Leaf Coverage in Field Conditions by Applying a Machine Learning Algorithm to UAV Remote Sensing Images. Appl. Sci. 2019, 9, 2389.

- Woebbecke, D.; Meyer, G.; Bargen, K.; Mortensen, D. Color Indices for Weed Identification under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Asad, P.; Marroquim, R.; Souza, A. On GPU Connected Components and Properties: A Systematic Evaluation of Connected Component Labeling Algorithms and Their Extension for Property Extraction. IEEE Trans. Image Process. 2019, 28, 17–31.

- Turhal, U. Vegetation Detection Using Vegetation Indices Algorithm Supported by Statistical Machine Learning. Environ. Monit. Assess. 2022, 194, 826.

- Joao, V.; Bilal, S.; Lammert, K.; Henk, K.; Sander, M. Automated crop plant counting from very high-resolution aerial imagery. Precis. Agric. 2020, 21, 106938.

- Zheng, Y.; Zhu, Q.; Huang, M.; Guo, Y.; Qin, J. Maize and Weed Classification Using Color Indices with Support Vector Data Description in Outdoor Fields. Comput. Electron. Agric. 2017, 141, 215–222.

- Gitelson, A.; Kaufman, Y.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Yang, G.; He, Y.; Zhou, Z.; Huang, L.; Li, X.; Yu, Z.; Yang, Y.; Li, Y.; Ye, L.; Feng, X. Field Monitoring of Fractional Vegetation Cover Based on UAV Low-altitude Remote Sensing and Machine Learning. In Proceedings of the 2022 10th International Conference on Agro-Geoinformatics, Quebec City, QC, Canada, 11–14 July 2022.

- Prasetyo, E.; Adityo, R.; Suciati, N.; Fatichah, C. Mango Leaf Classification with Boundary Moments of Centroid Contour Distances as Shape Features. In Proceedings of the 2018 International Seminar on Intelligent Technology and Its Applications, Bali, Indonesia, 30–31 August 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).